Abstract

Motion intention detection is fundamental in the implementation of human-machine interfaces applied to assistive robots. In this paper, multiple machine learning techniques have been explored for creating upper limb motion prediction models, which generally depend on three factors: the signals collected from the user (such as kinematic or physiological), the extracted features and the selected algorithm. We explore the use of different features extracted from various signals when used to train multiple algorithms for the prediction of elbow flexion angle trajectories. The accuracy of the prediction was evaluated based on the mean velocity and peak amplitude of the trajectory, which are sufficient to fully define it. Results show that prediction accuracy when using solely physiological signals is low, however, when kinematic signals are included, it is largely improved. This suggests kinematic signals provide a reliable source of information for predicting elbow trajectories. Different models were trained using 10 algorithms. Regularization algorithms performed well in all conditions, whereas neural networks performed better when the most important features are selected. The extensive analysis provided in this study can be consulted to aid in the development of accurate upper limb motion intention detection models.

Keywords: motion intention detection, assistive robotics, rehabilitation robotics, human-machine interface, machine learning

1. Introduction

Human-machine interfaces (HMIs) are widely used in a range of applications: control of assistive robots, such as robotic prostheses [1,2] and orthoses [3,4,5,6], or teleoperation [7,8]. Motion intention detection (MID) is fundamental for implementing HMIs, as it allows for an efficient, hands-free interaction between the user and the device [9]. Predicting motion intention in the case of upper limb movements, when compared to lower limb, is of particular interest, given that they are the main facilitators of the execution of high-skill and dexterous tasks within the user’s peripersonal space. However, unlike the lower limb, the upper limb typically exhibits far more varied motions during the performance of Activities of Daily Living (ADLs) due to the redundancy embedded in the arm’s kinematics [10] and the kinematics couplings between joint axes [11]. This, coupled with the non-cyclical nature of the upper extremity’s movements, makes MID for the upper limb a challenging problem to solve.

The multiple ways to detect motion intention can be broadly categorised according to the signals collected from the user, the features extracted from the data and the algorithms using said feature data to form predictions.

Physiological signals are one of the most commonly used ones. They are mainly obtained by measuring the electrical activity of the muscles, known as electromyographic (EMG) signals, or the brain, known as electroencefalographic (EEG) signals. Myographic signals are measured using surface electrodes and are typically used in classification systems (also known as discrete control systems), implemented for example as state machines [2], or in continuous control systems (also known as proportional control), where the delivered forces/torques are continuously modulated according to a biological model [12] or using machine learning techniques [13]. Using EMG signals is one of the most common ways of detecting user intention due to the signal being detectable before the actual motion is executed and direct correlation to motion intention [14,15]. However, there are some limitations such as change in skin conductivity due to artefacts or the difference in EMG patterns activation between healthy and pathological subjects [16]. EEG signals can be extracted using an array of electrodes placed on the scalp, which can control robots through processing bioelectric signals [17,18]. Like EMG signals, they are favoured for MID due to their intrinsic relation to human motion and are widely used in brain-computer interfaces as well [3,19,20,21,22]. Their main limitations are the long training periods, poor signal-to-noise ratio and the bulkiness of the sensing system [14]. In some applications, it is helpful to use a combination system of physiological sensors rather than using a single one. Wöhrle et al. [23] proposed a hybrid Field Programmable Gate Array (FPGA)-based system for EMG and EEG-based motion prediction, which can provide high computational speed and low power consumption. Another, less popular, type of physiological signal is the change in the muscles dimensions that occurs due to contraction. Kim et al. [24] developed a sensor that measures the Biceps Brachii (BB) muscle circumference and a model that estimates the elbow torques from the sensor data. Most of the literature that employs this physiological signal uses ultrasound as an acquisition system, which is cumbersome to use due to the associated hardware [25,26]. Nonetheless, these studies highlight the potential of using muscle dimensions variations as a feasible input signal.

Kinematic signals measure changes in joint or segment position (or its derivatives, i.e., velocity, acceleration, etc.). They can be used in ON/OFF systems, where a certain movement triggers a control routine to follow a pre-defined trajectory [27], or use a state-space model to decode the angular velocity of shoulder and motion of elbow [28]. One can also use position data from the initial portion of a movement to classify motion intention [29]. Kinematic data can be useful in motion intention detection if the user still has healthy function of their limbs. However, that is not always the case when considering impaired users, who compose a large part of the intended user pool for most applications. For such users, muscle activity is often decreased and they have difficulty in performing motions. Therefore, kinematic signals can prove unreliable. A common method of measuring kinematic data is using Inertial Measurement Units (IMUs), as they provide global position as well as rotation and orientation data, and they are easy to attach to the body segments. However, IMU signals suffer from bias and noises when integrating the measurements of the accelerometer and gyroscope, which can generate a drift issue [30]. Another method is using adaptive oscillators, which can predict the torque during the elbow flexion [31].

In order to use the signals mentioned above, they must be converted into a form readable by a model/algorithm, i.e., features must be extracted. In the case of kinematic signals, this is a straightforward procedure that requires little processing other than filtering, as typical features include position, velocity or acceleration (or their angular equivalent in case of joint data). However, in the case of EMG or EEG, more complex procedures are required, as explained in [32]. In the case of EMG, one can extract time domain features, such as mean absolute value and zero crossing, which are simple to extract and have low-dimensionality but are less effective; frequency domain features, such as power spectrum ratio, considered poor for accurate classification; or time-frequency domain features, such as the continuous wavelet transform, which have high-dimensionality, requiring dimensionality reduction techniques, but are very effective in EMG classification.

To address the limitations presented by the use of the different types of signals, the use of multimodal techniques has seen a growth in popularity. In these techniques, multiple signals are used together so that the shortcomings associated to each signal are compensated by complementing the intention prediction. Park et al. [33] implemented a multimodal interface that uses EMG, bend and pressure sensor data to classify intention to open the hand. They propose adapting the selected signals according to the impairment of the subject, where one mode uses bend sensor data for opening the hand and EMG signals for closing it (for users who can initiate opening motion but have difficulty in maintaining an open hand), and another mode uses EMG data for opening the hand and pressure sensors to close it (for users who have clear EMG pattern for opening the hand but difficulty in closing it). Geng et al. [34] used accelerometers to measure mechanomyographic (MMG) data and predict limb position, whereas EMG data was used to classify upper limb motion. Leeb et al. [35] showed that fusing EMG and EEG data provided a reliable source of data for creating a brain-computer interface able to perform well even under conditions of muscular fatigue.

Once the signals have been collected and their features extracted, the obtained data is input to a predictive algorithm. Generally, one can use either classification or regression algorithms [36]. Classification algorithms try to recognise the pattern of the motion and to classify the intention according to predefined groups of possible outcomes. For example, Gardner et al. [37] used a multimodal approach combining MMG sensors, camera-based visual information and kinematic data to classify hand motion intention according to the type of grasp and the object to be grasped. Classification-based algorithms are useful when the goal is to label the motion, and can have high accuracy due to the discrete nature of the prediction. However, they do not allow for generalisation of motion intention prediction, being limited to a finite number of classes. Regression algorithms can be used to directly predict continuous variables such as position or velocity, which can be a more suitable approach to solve the fundamental problem of prediction of a trajectory of the upper limb.

The aim of this paper is to perform an analysis of kinematic and physiological features for predicting elbow motion intention. We investigate different strategies in terms of used sensors, extracted features and selected algorithms. We employed a multimodal sensing system using EMG, IMU and stretch sensors to extract a large number of features. The features were organised into different groups and their correlation with the motion data was calculated to understand which were more important. Different combinations of features were fed into multiple regression-based models in order to compare the performance of multiple types of regression algorithms.

The rest of this paper is organised as follows. Section 2 outlines the experimental setup for data acquisition, data processing and extracted features, and the selected algorithms to investigate. Section 3 presents the motion prediction results. Section 4 presents discussions and concluding remarks.

2. Methods

2.1. Experimental Protocol

Three healthy participants (2M, 1F, age: y.o., weight: kg, height: cm), with no previous history of musculoskeletal problems, were asked to perform a series of elbow flexion motions by following an animation shown on a computer monitor (Figure 1). The motions were limited to the sagittal plane and were performed with forearm pronated 90 (i.e., palm facing centrally), 0 shoulder abduction and 0 shoulder internal/external rotation. All subjects were right-handed, and they all used their dominant side. The subjects were not physically constrained, but instructed to perform the motions according to the above description.

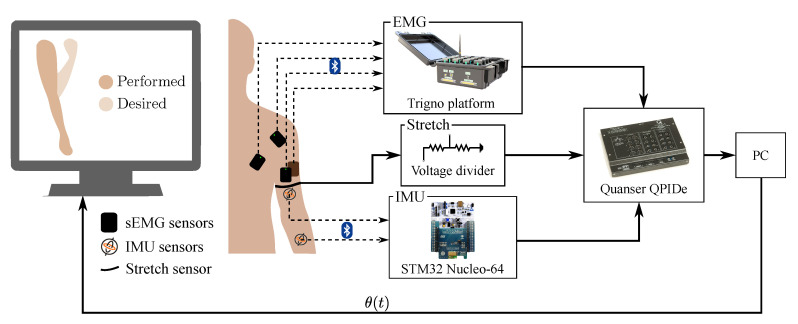

Figure 1.

Experimental setup. The subject follows a reference trajectory shown on a computer monitor. The subject’s forearm is simultaneously shown on the screen by reconstructing the elbow angle from the Inertial Measurement Units (IMU) data. The electromyographic (EMG) and IMU data are captured wirelessly and stretch sensor data are captured via a wire. The data are read by the DAQ Quanser QPIDe, which allows the data to be stored on a PC.

Firstly, IMU, sEMG and stretch sensors were mounted on different locations of the subjects’ upper limb (Figure 2). Then, the subject was shown a target elbow flexion amplitude on a screen and was instructed to flex their elbow to match this position. The elbow angle measured by the IMUs was displayed to give visual feedback to the subject. Each subject completed 300 elbow flexions over 2 sessions with a 10 minute break between sessions to avoid the onset of fatigue. The trajectories varied within a range of for the elbow flexion angle and with velocities within the range /s.

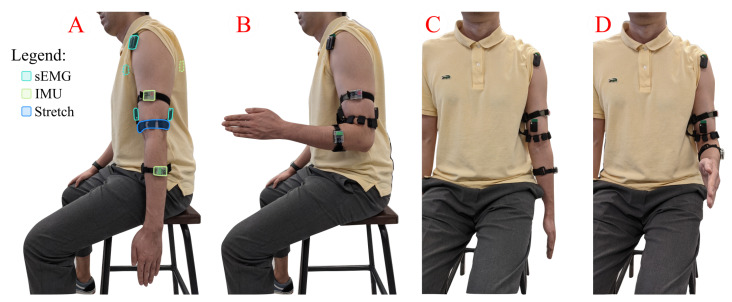

Figure 2.

Placement of EMG, IMU and stretch sensors on the user. (A,B), lateral views of the subject at resting position and when matching the desired elbow angle, respectively; (C,D) frontal views of the subject at resting position and when matching the desired elbow angle, respectively. The dashed lines represent a sensor that is not visible due to being under the clothes or placed on a side opposite to the current view.

The trajectory of the arm during elbow flexion can be approximated using a minimum-jerk trajectory (MJT). We assumed therefore that the trajectory of the elbow rotation when performing flexion movements is of the following form:

| (1) |

where is the peak amplitude of the elbow angle trajectory, is the mean angular velocity and is the duration of the movement to reach from a resting position of . From (1), one can see that only two parameters, peak amplitude and mean angular velocity, are needed to fully define the MJT. These two have been used as inference parameters for the predictive model.

2.2. Sensors and Data Processing

Three different types of sensors were used to acquire upper limb data: sEMG to measure muscle activity, IMUs to measure elbow flexion angle, and a custom-made stretch sensor to measure changes in muscle volume. All sensors were connected as analog inputs to a real-time data acquisition board (Quanser- QPIDe). Data sampling was performed at a frequency of 167 Hz. All the control scheme was built in Matlab 2014a Simulink.

2.2.1. EMG Sensors

The sEMG sensors (Delsys Trigno) were placed on the muscle belly of the Biceps Brachii (BB), Triceps Brachii (TB), Anteriod Deltoid (AD) and Pectoralis Major (PM) muscles following the SENIAM guidelines [38]. The signals were processed through full-wave rectification followed by low-pass filtering (Butterworth, 4th order, 30 Hz cutoff frequency).

2.2.2. IMU Sensors

The wireless IMU sensors (ST Microelectronics STEVAL-STLKT01V1) are attached on the dorsal side of the forearm and on the dorsal side of the upper arm. The sensors communicate via bluetooth with a development board (STM32 Nucleo-64) equipped with an expansion board (X-NUCLEO-IDB05A1) to allow bluetooth communication, and this board streams the data to the Quanser board. The sensors are then calibrated to a specific body configuration of the user, which is with the shoulder adducted to the trunk and the elbow fully extended. After this calibration is complete, the elbow angle is measured by finding the rotation of the forearm IMU with respect to the upper arm IMU (where a elbow angle corresponds to the IMUs being aligned). This measurement requires the following assumptions: (1) the IMUs are placed such that the x axis of each sensor is aligned with the axis of the segment to which it is attached and (2) the IMUs do not slide on the skin or move during motion. The raw data from the IMUs were low-pass filtered (Butterworth, 2nd order, 2 Hz cutoff frequency), then differentiated to obtain velocity values and low-pass filtered (Butterworth, 2nd order, 7 Hz cutoff frequency) and finally differentiated again to obtain acceleration data and low-pass filtered again (Butterworth, 2nd order, 9 Hz cutoff frequency).

2.2.3. Stretch Sensor

The stretch sensor (Conductive Rubber Cord Stretch Sensor, Adafruit) was wrapped around the upper arm. This sensor behaves as a variable resistor whose resistance changes according to the extension of the cord. The sensor was connected to a voltage divider circuit where a low-current, low-voltage signal was fed. Changes in the length of the sensor due to changing muscle volume resulted in variations of the read voltage. The data were low-pass filtered (Butterworth, 2th order, 4 Hz cutoff frequency).

The data was segmented in order to isolate the elbow movements, where each segment represents an MJT performed by the subjects.

2.3. Feature Extraction

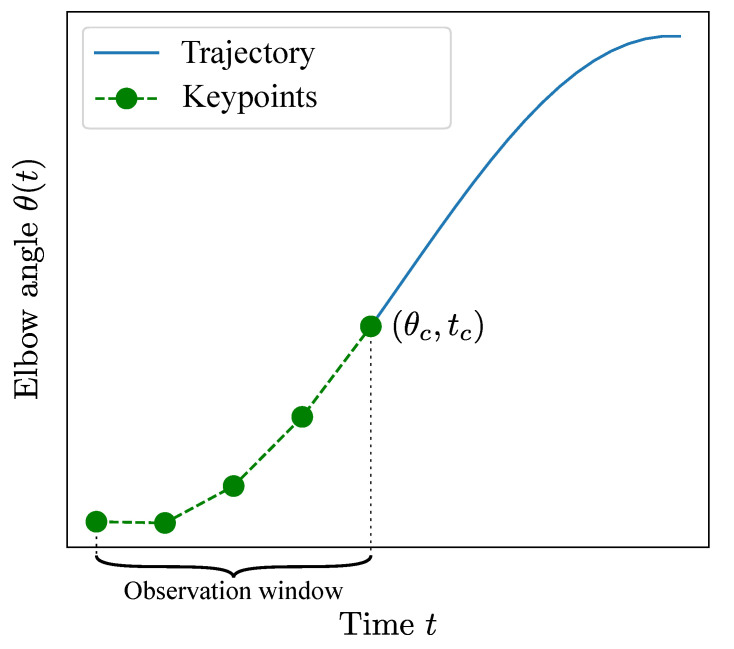

The features used for model training were extracted from the processed and segmented data. Instead of selecting the whole segment for feature extraction, the used data was limited according to 2 factors (Figure 3):

Observation window (OW). The model is intended to predict motion intention. Therefore, the used data should be limited to a certain time interval at the beginning of the movement. This window is defined between the beginning of the motion () and a certain cutoff angle , which is reached at time . The observation window is the time interval , where different define different OW.

Keypoints. A certain number of equally distant points was sampled from the selected OW. This was done to avoid overfitting.

Figure 3.

Example of a typical minimum jerk trajectory (MJT) followed by the elbow. The data used for feature extraction is downsampled to a certain number of keypoints which are chosen from within an observation window defined by cutoff angle .

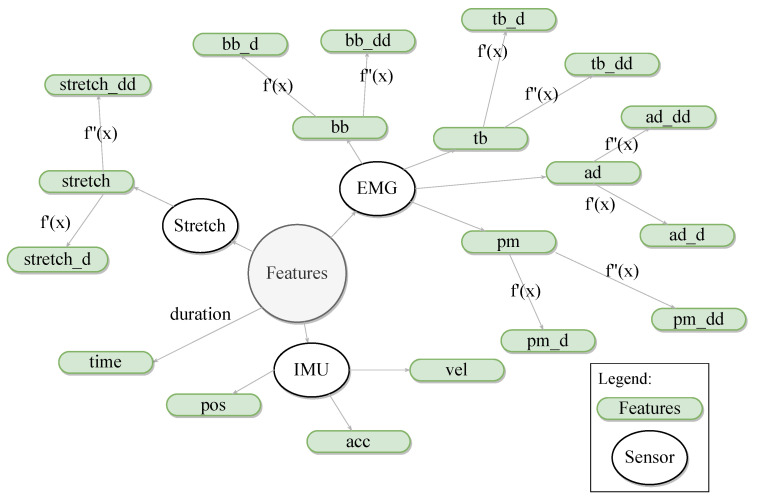

The choice of and is explained in Section 2.5. The extracted features (Figure 4) consist of the 4 EMG signals, the elbow angle, the stretch sensor signal and the time at each keypoint. Furthermore, the first and second time derivative of the EMG signals, elbow angle and stretch sensor signal are computed at each keypoint and added as features as well, amounting to a total of features.

Figure 4.

Features extracted from the windowed data, at each keypoint. Features include the EMG, stretch sensor and IMU data and the timestamp at each keypoint, as well as the first and the second derivative of the same data (excluding the timestamp). The features are named as follows: %feature_%derivative, where %feature is the feature name in lowercase letters, and %derivative is the first or second order derivative. For example, “bb” represents the biceps bracchi EMG activity, whereas “bb_d” represents the first derivative of the EMG signal of the BB muscle. bb: biceps bracchi; tb: triceps bracchi; ad: anterior deltoid; pm: pectoralis major; pos: position; vel: velocity; acc: acceleration.

2.4. Cross-Validation

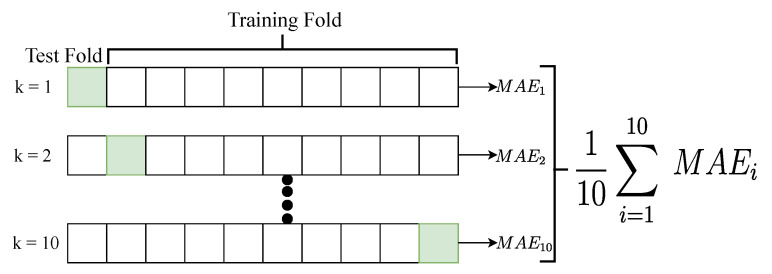

For evaluating model prediction, k- cross validation method is used with k value set at 10. Dataset is split into k-, were sets are used for training and the remaining set is used for testing. This process is performed k times and the average Mean Absolute Error (MAE) is used to quantify model prediction effectiveness (Figure 5):

| (2) |

Figure 5.

k- cross validation.

2.5. Evaluation of Model Performance

The performance of the predictive model was analysed by comparing the predicted values of mean velocity and peak amplitude with the real ones, and computing the MAE according to (2). Performance is affected by four independent factors: , OW, selected features and the selected regression algorithms.

: we investigated in the range from 1 to 200.

OW: the cut-off angle took the values of and to explore different window lengths. We limited the values to so that the model prediction is performed for the as close as possible to the onset of the movement.

Selected features: the features were organised according to their importance, which was computed using a statistical index known as Mutual Information (MI) [39]. Mutual information quantifies the redundancy between two distributions X,Y whose joint probability distribution is .

| (3) |

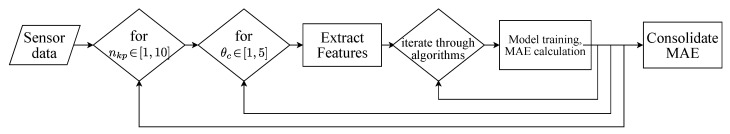

The MI was calculated between the feature data and the inference parameters, being therefore independent from the used algorithm. MAE for mean velocity and peak amplitude was computed when different sets of features were used: all features, Physiological features only, Kinematic features only, EMG only, Stretch only, IMU+EMG and IMU+stretch. For each set of features the process flow of MAE calculation can be understood from the flowchart in Figure 6.

Figure 6.

Process flow of Mean Absolute Error () calculation. This flow is adopted for each set of features and values for each combination is recorded individually.

Regression algorithms: Machine learning algorithms are broadly classified as regression and classification. Since the two inference parameters we are interested in are continuous variables and not categorical, the analysis performed in this study focuses on the use of regression algorithms. Ten algorithms based on four different working principles were selected for performance comparison: Ridge, Lasso and Elastic net (based on regularized Least Squares method), Decision Trees, Random Forest, Extra Trees, AdaBoost and Gradient Boosting (based on Decision Trees method), Support Vector Regression (SVR) (based on support vector machines) and Multiple Layer Perceptron (MLP) (based on neural networks).

We are interested to find the best combination of features and algorithms to maximise the accuracy of our inference parameters. The first step was to reduce the complexity of the analysis by selecting the and OW to optimise the performance.

3. Results

3.1. and Variation

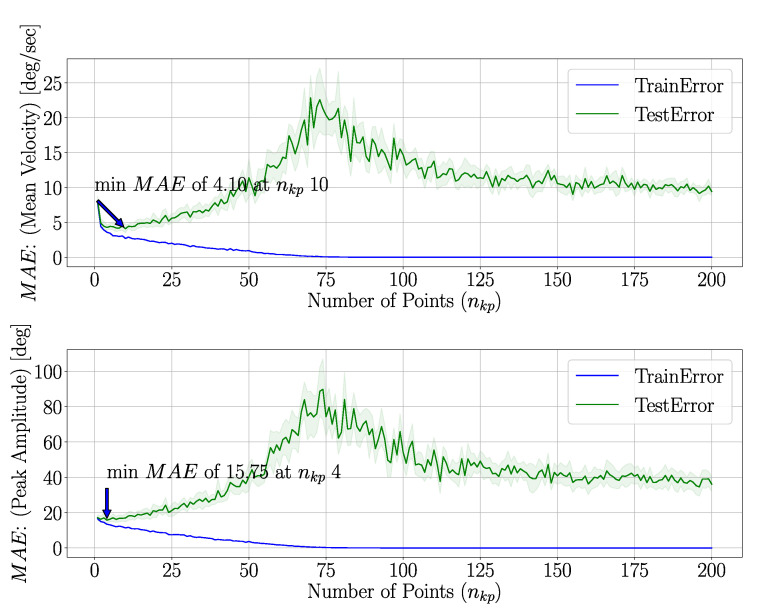

The intention detection model was trained using a wide range of different to identify the optimum number of samples that can be used to represent a trajectory. As seen in Figure 7, the MAE values are lowest when the number of points is lower for smaller . With increasing, the training error plateaus after point number 75. This characteristic shows that the model is prone to over fitting when is larger. Therefore, subsequent analysis is performed with limited to a maximum of 10 points.

Figure 7.

MAE of mean velocity (top) and peak amplitude (bottom) with changing . Training error converges at approximately and testing error is lowest below 20 points. All features are used here for model training and MAE calculation.

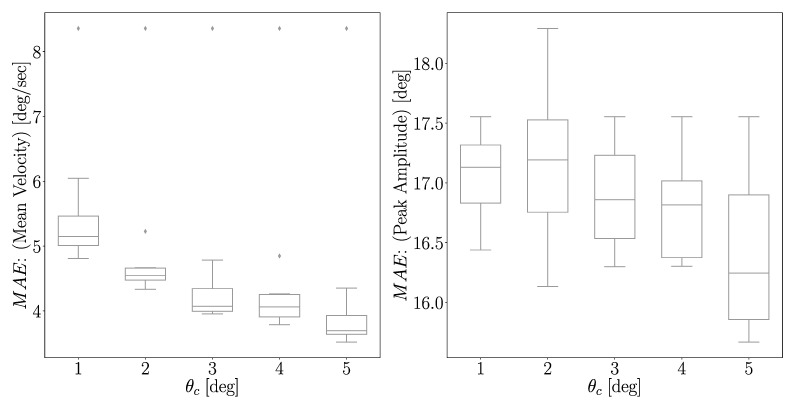

It can be observed that lower MAE values can be attributed to higher OW (Figure 8). In all experiments performed, it was observed that this trend of lower MAE with higher OW persisted. For the mean velocity, the highest observed error was 5.15 deg/s for and the lowest was 3.70 deg/s for . The errors for were approximately the same, taking the values of 4.07 and 4.06 deg/s, respectively. In the case of peak amplitude, the largest error was 17.19 for and the lowest was 16.24 for .

Figure 8.

Distribution of MAE of mean velocity and peak amplitude for different and . For a given , the MAE values are calculated when , and their distribution is plotted. It is clear that MAE values are lower when increases.

3.2. Feature Selection

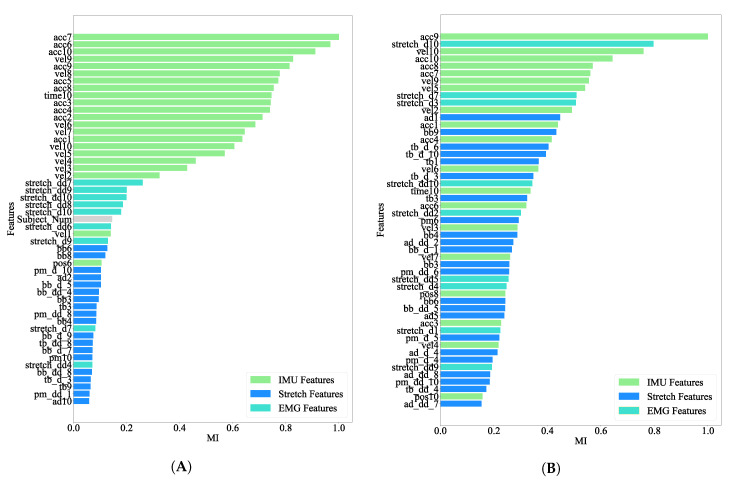

Model parameter tuning using a high-dimensional feature space can render model coefficients to sub-optimal characteristics. To avoid this, sound theoretical considerations deduced from ground truth or other statistical variance analysis methods are generally used for dimensionality reduction (e.g., PCA, LDA). The MI score helps to identify the relevance of the model training inputs in the larger contextual feature space. MI scores that relate our selected features to the mean velocity and peak amplitude are shown in Figure 9A,B, respectively. As observed from the MI bar-plots, kinematic features generated from the IMU sensor have higher relevance compared with other features while predicting both the mean velocity and peak amplitude. The MAE for peak velocity and mean amplitude when considering all features or only the top 20% most important features (according to the MI score) was calculated (Table 1). One can see that the MAE decreases when only the top 20% most important features are used. A variance analysis of inference parameters between each of the subjects showed results as in Figure 10. Significant change in variance is not observed which indicates that the data is not biased with respect to any of the subjects involved in the experiment.

Figure 9.

Ranked Mutual Information (MI) of features with respect to the inferred parameters (A) mean velocity and (B) peak amplitude. For MI calculation the data used is of nkp at 10, θc at 5 and using all available features.Features are color coded as green, blue and turquoise to denote IMU, Stretch and EMG features respectively.

Table 1.

MAE for all features and top 20% of most important features according to the MI scores. Values are represented as mean value ± standard deviation.

| Parameter | MAE | |

|---|---|---|

| All Features | Top 20% | |

| Mean Velocity | 4.78 ± 0.47 | 4.34 ± 0.40 |

| Peak Amplitude | 17.56 ± 1.62 | 16.87 ± 1.50 |

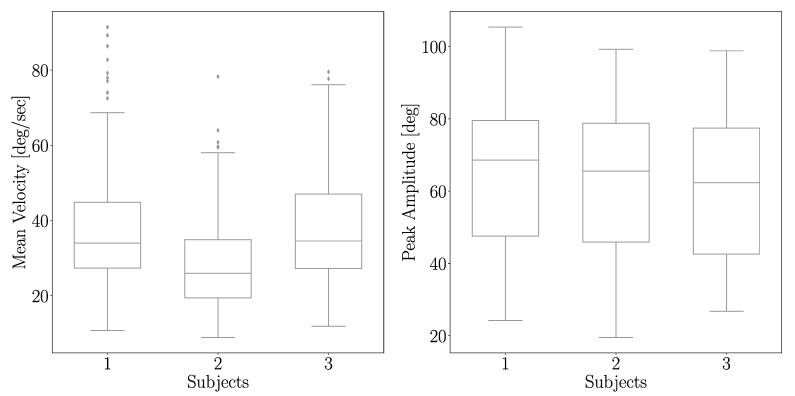

Figure 10.

Distribution of inference parameters with respect to subjects. Mean velocity of subject 2 is comparatively lower. In the case of peak amplitude, this trend is not seen.

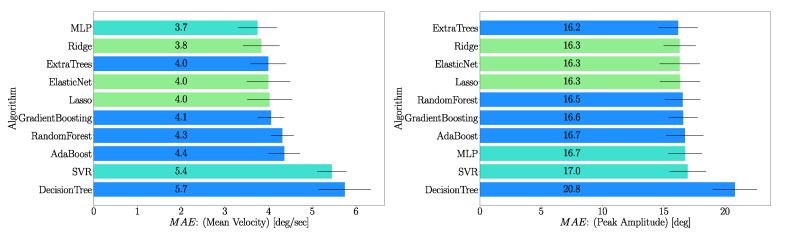

3.3. Comparison of Algorithms

A comparison between regularization algorithms selected for this research is shown in Figure 11. This comparison was done for . We can see that the MLP algorithm is the best performing algorithm when predicting mean velocity, whereas Extra Trees is the best one for predicting the peak amplitude. However, for the prediction of the peak amplitude, all algorithms seem to perform similarly with the exception of Decision Tree, which is the worst performing one for both inference parameters.

Figure 11.

Comparison of MAE for mean velocity and peak amplitude using all features (). Features with top 20% of MI scores are used here for model training. Algorithms based on decision trees technique are color coded in blue, least squares in green and turquoise for Support Vector Regression (SVR) and Multiple Layer Perceptron (MLP).

3.4. Model Prediction Performance

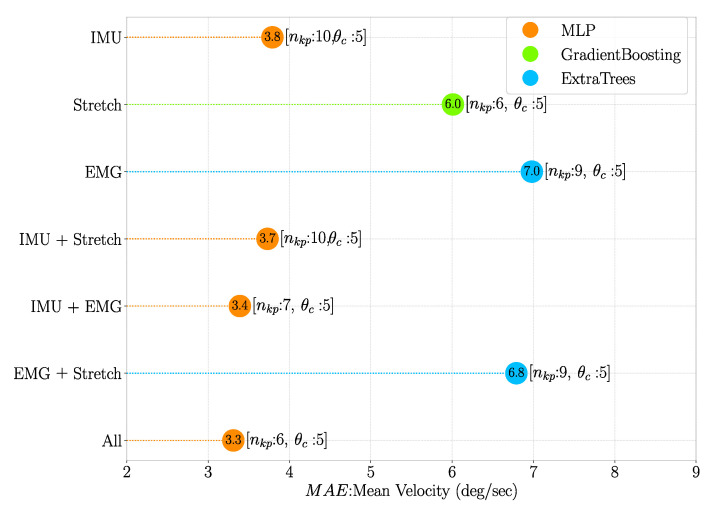

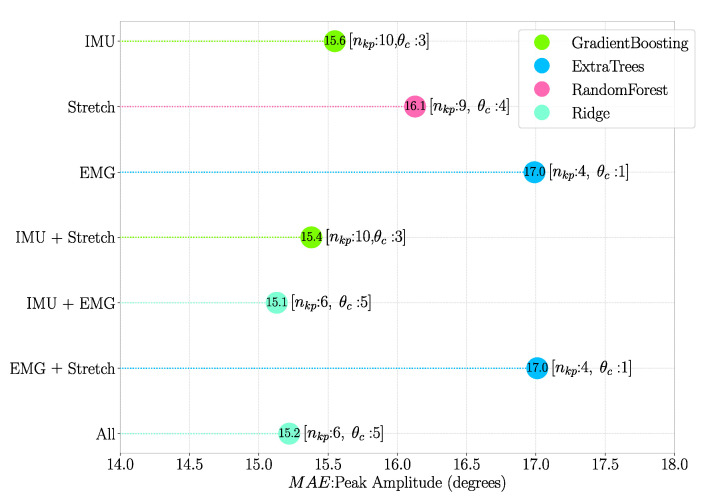

Model prediction performance was evaluated iteratively for all possible combinations of the 10 selected algorithms, and , where and . The combination for which MAE values were minimum is shown in Figure 12 and Figure 13 for mean velocity and peak amplitude, respectively.

Figure 12.

Mean Velocity for the different features combinations, where the top 20% most important features according to their scores were used. The and values at which minimum was obtained are shown within square brackets.

Figure 13.

Peak amplitude for the different features combinations, where the top 20% most important features according to their scores were used. The and values at which minimum was obtained are shown within square brackets.

4. Discussion

In this paper, we used a multi-modal wearable sensor system to predict the elbow flexion trajectories of the upper limb. Through evaluation and comparison of MI and MAE values, the suitable features and algorithms were investigated to achieve accurate prediction of elbow trajectories.

It can be seen from Figure 7 that as increases, the MAE of the predictions also increases, peaking for both mean velocity and peak amplitude at . For , MAE decreases and plateaus for MAE values larger than the values observed for approximately . The reason behind the occurrence of a peak in the MAE for a certain is unclear. However, the predictions with the lowest errors occur for lower values, therefore we concluded such occurrence to be of minor importance. Using a small number of data points for making predictions can contribute to faster computation times, which is a fundamental requirement in real-time predictive systems. Therefore, it is recommended to use a low number of data points.

Regarding the length of the OW, the prediction error of MAE for mean velocity decreases as increases. This is not surprising, as a larger OW corresponds to a longer duration for extracting features and hence more information regarding intention of the subject is embedded in the signal. However, such a result is not so evident when considering peak amplitude, as the largest difference in MAE for the different possible was approximately 1. Considering that the inference error for peak velocity only increases about 0.5 deg/s when using rather than , the choice of could be a beneficial one, depending on the priority of the model. If the priority lies in achieving the best possible performance, then it is clear that an OW with larger than is preferable. In contrast, if the priority is in predicting the elbow trajectory as early as possible, without having a significant loss of accuracy, then using is a viable option.

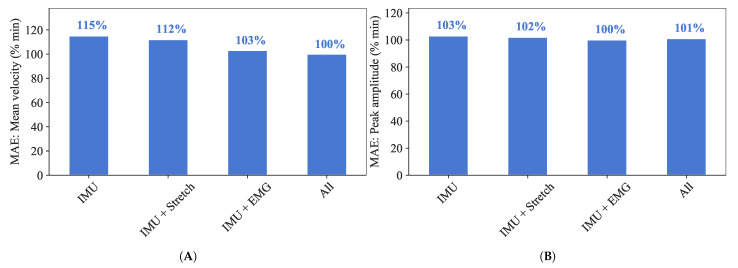

Features obtained from IMU sensor data seem to be the most important ones for predicting the defined inference parameters. This is extremely evident when considering the mean velocity, with the top 20% most important features being all obtained through IMU sensors (Figure 9A). Although not as dominant in the case of peak amplitude (Figure 9B), IMU features are still overall the ones most correlated with this inference parameter. This is likely because the inference parameters represent kinematic variables, and IMUs provide kinematic features only. Stretch sensor features also seem to be important, especially for the prediction of peak amplitude, but far less correlated than IMU based features. This suggests that kinematic signals are more relevant than physiological ones when it comes to predicting defined inference parameters, and, in truth, EMG-based features play little to no role. This can also be seen in Figure 12 and Figure 13, where there is a minor increase in the error of prediction when only kinematic features (Only IMU) are considered vs. IMU + stretch, IMU + EMG or all types of features considered (Figure 14). Such results lead us to think that for this particular type of elbow trajectories, using a single type of sensors—kinematic—should suffice to achieve satisfactory motion intention prediction. The slight improvement that would come from considering physiological sensors as well is outweighed by the decreased complexity that results from using only kinematic sensors: there are fewer used sensors, making the sensing system overall simpler to equip, and the computations performed by the model simpler as well.

Figure 14.

Best four combinations of sensors, features, , and algorithm in terms of percentage of the minimum observed MAE value for (A) mean velocity and (B) peak amplitude.

The algorithm that seems to perform the best in predicting mean velocity is MLP, as it was the one which resulted in the lowest errors using all features for a certain (Figure 11) and also when investigating all different combinations of algorithms, and (Figure 12). Although MLP was not present in the best combinations when predicting peak amplitude with different sets of features (Figure 13), one can see from Figure 11 that the difference in performance between different algorithms seems to be negligible. One of the reasons why MLP seems to perform well is probably due to the fact it is based on neural networks, which are known for having good performance when there is a small number of features with high correlation with the predicted variables (note that we chose the 20% top performing features) [40]. Another algorithm that seems to have performed well is Ridge, which could be an equally valid final choice. However, even though computation times were not investigated in this research, the authors are aware that such a characteristic plays an important role in the choice of algorithm. If the model is to be implemented with the aim of real-time prediction, MLP could prove to be a less optimal choice, as neural network-based approaches are often associated with longer computation times. On the other hand, regularisation-based algorithms are not computationally intensive, so they could prove to be the best option in that case.

This comparative analysis can provide several useful suggestions. First, this study provides a strategy for properly choosing OW and . Second, features from IMU sensor data achieved better performance of prediction comparing with other sensory features. In general, EMG signals are more widely used for human MID [41], but our results suggest that models using solely EMG data do not perform as well has ones using IMU sensor data for MID of elbow flexion. Third, the MLP algorithm was observed to result in good performance when inferring mean velocity, and the Ridge or Gradient Boosting algorithms for inferring peak amplitude. It is important to emphasize that the framework for developing MID algorithms mainly include three aforementioned guidelines. However, the strategy taken should vary depending on different application scenarios. For example, in the field of prosthetic engineering, subjects can appropriately eliminate the number of EMGs and decrease OW and keypoints under the condition of ensuring the control accuracy. A less complicated wearable sensor system can enhance the comfort and convenience of the subject.

There are still some limitations and challenges that need to be addressed. This study discusses single joint movement only, which does not encompass all movements performed by the upper limb during daily life activities. In future studies, we intend to explore the performance of our models by collecting data for more complex motions, such as planar reach and grasp. As a next step, we intend to implement an HMI with an upper limb assistive robot. The MID capability of this HMI will be adjusted according to the findings of the study here presented, namely in the choice of sensors and algorithm to be used. The performance of the HMI will then be evaluated in a scenario where the user’s upper extremity is being assisted. The findings also can be explored in the prostheses technology. The multimodal wearable system can detect and fuse user’s motion intention as input to control the prostheses devices.

5. Conclusions

In this study, a multimodal wearable sensor system was used to extract features from kinematic and physiological signals acquired during the performance of elbow flexion movements. We analysed the impact of selecting different groups of features and various algorithms. To the best of the authors’ knowledge, this is the first multimodal wearable upper limb sensing system that uses signals acquired from EMG, IMU and stretch sensors. We have concluded that, depending on the priority of the developer of the system, there are different combinations of sensors that should be used. If one prefers to have a system that can quickly detect motion intention at the cost of a slight decrease in accuracy, using kinematic signals only and with a small observation window is a preferred option. If instead, the priority is on having the best possible prediction, a longer observation window and using both physiological and kinematic features is necessary. However, such a decision implies the use of extra sensors for detecting muscle activity, which can increase the complexity of the system.

Abbreviations

The following abbreviations are used in this manuscript:

| AD | Anterior Deltoid |

| BB | Biceps Brachii |

| EEG | Electroencefalography |

| sEMG | Surface Electromyography |

| HMI | Human-machine interface |

| IMU | Inertial measurement unit |

| MAE | Mean absolute error |

| MI | Mutual information |

| MID | Motion intention detection |

| MJT | Minimum jerk trajectory |

| MLP | Multiple Layer Perceptron |

| MMG | Mechanomyographic |

| OW | Observation window |

| PM | Pectoralis Major |

| SVR | Support Vector Regression |

| TB | Triceps Brachii |

Author Contributions

Conceptualization, K.L., B.K.P., S.Y., B.N. and D.A.; experiment and analysis, K.L., B.K.P.; writing—original draft preparation, B.K.P., S.Y., B.N.; review and editing, K.L., B.K.P., S.Y., B.N., D.C. and D.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the grant “Intelligent Human-Robot interface for upper limb wearable robots” (Award Number SERC1922500046, A*STAR, Singapore).

Institutional Review Board Statement

Ethical review and approval were waived for this study, due to experiments being carried out using commercial sensors following the manufacturers’ instructions, and no energy/forces were applied to the subjects.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data available on request.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Geethanjali P. Myoelectric control of prosthetic hands: State-of-the-art review. Med. Devices Evid. Res. 2016;9:247–255. doi: 10.2147/MDER.S91102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Cipriani C., Zaccone F., Micera S., Carrozza M.C. On the shared control of an EMG-controlled prosthetic hand: Analysis of user-prosthesis interaction. IEEE Trans. Robot. 2008;24:170–184. doi: 10.1109/TRO.2007.910708. [DOI] [Google Scholar]

- 3.Looned R., Webb J., Xiao Z.G., Menon C. Assisting drinking with an affordable BCI-controlled wearable robot and electrical stimulation: A preliminary investigation. J. Neuroeng. Rehabil. 2014;11:1–13. doi: 10.1186/1743-0003-11-51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Perry J.C., Rosen J., Burns S. Upper-limb powered exoskeleton design. IEEE/ASME Trans. Mechatronics. 2007;12:408–417. doi: 10.1109/TMECH.2007.901934. [DOI] [Google Scholar]

- 5.Kim Y.G., Xiloyannis M., Accoto D., Masia L. Development of a Soft Exosuit for Industrial Applications; Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (Biorob); Enschede, The Netherlands. 26–29 August 2018; pp. 324–329. [Google Scholar]

- 6.Little K., Antuvan C.W., Xiloyannis M., De Noronha B.A., Kim Y.G., Masia L., Accoto D. IMU-based assistance modulation in upper limb soft wearable exosuits; Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR); Toronto, ON, Canada. 24–28 June 2019; pp. 1197–1202. [DOI] [PubMed] [Google Scholar]

- 7.Aarno D., Kragic D. Motion intention recognition in robot assisted applications. Robot. Auton. Syst. 2008;56:692–705. doi: 10.1016/j.robot.2007.11.005. [DOI] [Google Scholar]

- 8.Tanwani A.K., Calinon S. A generative model for intention recognition and manipulation assistance in teleoperation; Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Vancouver, BC, Canada. 24–28 September 2017; pp. 43–50. [Google Scholar]

- 9.Noronha B., Dziemian S., Zito G.A., Konnaris C., Faisal A.A. “Wink to grasp” — comparing eye, voice & EMG gesture control of grasp with soft-robotic gloves; Proceedings of the International Conference on Rehabilitation Robotics (ICORR); London, UK. 17–20 July 2017; pp. 1043–1048. [DOI] [PubMed] [Google Scholar]

- 10.Shen Y., Hsiao B.P.Y., Ma J., Rosen J. Upper limb redundancy resolution under gravitational loading conditions: Arm postural stability index based on dynamic manipulability analysis; Proceedings of the 2017 IEEE-RAS 17th International Conference on Humanoid Robotics (Humanoids); Birmingham, UK. 15–17 November 2017; pp. 332–338. [Google Scholar]

- 11.Georgarakis A.M., Wolf P., Riener R. Simplifying Exosuits: Kinematic Couplings in the Upper Extremity during Daily Living Tasks; Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR); Toronto, ON, Canada. 24–28 June 2019; pp. 423–428. [DOI] [PubMed] [Google Scholar]

- 12.Clancy E.A., Liu L., Liu P., Moyer D.V. Identification of constant-posture EMG-torque relationship about the elbow using nonlinear dynamic models. IEEE Trans. Biomed. Eng. 2012;59:205–212. doi: 10.1109/TBME.2011.2170423. [DOI] [PubMed] [Google Scholar]

- 13.Nielsen J.L., Holmgaard S., Jiang N., Englehart K.B., Farina D., Parker P.A. Simultaneous and proportional force estimation for multifunction myoelectric prostheses using mirrored bilateral training. IEEE Trans. Biomed. Eng. 2011;58:681–688. doi: 10.1109/TBME.2010.2068298. [DOI] [PubMed] [Google Scholar]

- 14.Lobo-Prat J., Kooren P.N., Stienen A.H., Herder J.L., Koopman B.F., Veltink P.H. Non-invasive control interfaces for intention detection in active movement-assistive devices. J. Neuroeng. Rehabil. 2014;11:168. doi: 10.1186/1743-0003-11-168. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Triwiyanto T., Wahyunggoro O., Nugroho H.A., Herianto H. Evaluating the performance of Kalman filter on elbow joint angle prediction based on electromyography. Int. J. Precis. Eng. Manuf. 2017;18:1739–1748. doi: 10.1007/s12541-017-0202-5. [DOI] [Google Scholar]

- 16.Cesqui B., Tropea P., Micera S., Krebs H.I. EMG-based pattern recognition approach in post stroke robot-aided rehabilitation: A feasibility study. J. Neuroeng. Rehabil. 2013;10:75. doi: 10.1186/1743-0003-10-75. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Katona J., Ujbanyi T., Sziladi G., Kovari A. Speed control of Festo Robotino mobile robot using NeuroSky MindWave EEG headset based brain-computer interface; Proceedings of the 2016 7th IEEE international conference on cognitive infocommunications (CogInfoCom); Wroclaw, Poland. 16–18 October 2016; pp. 000251–000256. [Google Scholar]

- 18.Katona J., Ujbanyi T., Sziladi G., Kovari A. Cognitive Infocommunications, Theory and Applications. Springer; Berlin/Heidelberg, Germany: 2019. Electroencephalogram-based brain-computer interface for internet of robotic things; pp. 253–275. [Google Scholar]

- 19.Randazzo L., Iturrate I., Perdikis S., Millán J.D.R. Mano: A Wearable Hand Exoskeleton for Activities of Daily Living and Neurorehabilitation. IEEE Robot. Autom. Lett. 2018;3:500–507. doi: 10.1109/LRA.2017.2771329. [DOI] [Google Scholar]

- 20.Katona J., Kovari A. Examining the learning efficiency by a brain-computer interface system. Acta Polytech. Hung. 2018;15:251–280. [Google Scholar]

- 21.Katona J., Kovari A. A Brain–Computer Interface Project Applied in Computer Engineering. IEEE Trans. Educ. 2016;59:319–326. doi: 10.1109/TE.2016.2558163. [DOI] [Google Scholar]

- 22.Katona J. Examination and comparison of the EEG based Attention Test with CPT and TOVA; Proceedings of the 2014 IEEE 15th International Symposium on Computational Intelligence and Informatics (CINTI); Budapest, Hungary. 19–21 November 2014; pp. 117–120. [Google Scholar]

- 23.Wöhrle H., Tabie M., Kim S.K., Kirchner F., Kirchner E.A. A hybrid FPGA-based system for EEG-and EMG-based online movement prediction. Sensors. 2017;17:1552. doi: 10.3390/s17071552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Kim W.S., Lee H.D., Lim D.H., Han J.S., Shin K.S., Han C.S. Development of a muscle circumference sensor to estimate torque of the human elbow joint. Sens. Actuators A Phys. 2014;208:95–103. doi: 10.1016/j.sna.2013.12.036. [DOI] [Google Scholar]

- 25.González D.S., Castellini C. A realistic implementation of ultrasound imaging as a human-machine interface for upper-limb amputees. Front. Neurorobotics. 2013;7:1–11. doi: 10.3389/fnbot.2013.00017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Shi J., Chang Q., Zheng Y.P. Feasibility of controlling prosthetic hand using sonomyography signal in real time: Preliminary study. J. Rehabil. Res. Dev. 2010;47:87–98. doi: 10.1682/JRRD.2009.03.0031. [DOI] [PubMed] [Google Scholar]

- 27.In H., Kang B., Sin M., Cho K.J. Exo-Glove: A wearable robot for the hand with a soft tendon routing system. IEEE Robot. Autom. Mag. 2015;22:97–105. doi: 10.1109/MRA.2014.2362863. [DOI] [Google Scholar]

- 28.Artemiadis P.K., Kyriakopoulos K.J. Estimating arm motion and force using EMG signals: On the control of exoskeletons; Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems; Nice, France. 22–26 September 2008; pp. 279–284. [Google Scholar]

- 29.Zunino A., Cavazza J., Volpi R., Morerio P., Cavallo A., Becchio C., Murino V. Predicting Intentions from Motion: The Subject-Adversarial Adaptation Approach. Int. J. Comput. Vis. 2020;128:220–239. doi: 10.1007/s11263-019-01234-9. [DOI] [Google Scholar]

- 30.Filippeschi A., Schmitz N., Miezal M., Bleser G., Ruffaldi E., Stricker D. Survey of motion tracking methods based on inertial sensors: A focus on upper limb human motion. Sensors. 2017;17:1257. doi: 10.3390/s17061257. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Ronsse R., Vitiello N., Lenzi T., Van Den Kieboom J., Carrozza M.C., Ijspeert A.J. Human–robot synchrony: Flexible assistance using adaptive oscillators. IEEE Trans. Biomed. Eng. 2010;58:1001–1012. doi: 10.1109/TBME.2010.2089629. [DOI] [PubMed] [Google Scholar]

- 32.Chowdhury R.H., Reaz M.B., Bin Mohd Ali M.A., Bakar A.A., Chellappan K., Chang T.G. Surface electromyography signal processing and classification techniques. Sensors. 2013;13:12431–12466. doi: 10.3390/s130912431. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Park S., Meeker C., Weber L.M., Bishop L., Stein J., Ciocarlie M. Multimodal sensing and interaction for a robotic hand orthosis. IEEE Robot. Autom. Lett. 2018;4:315–322. doi: 10.1109/LRA.2018.2890199. [DOI] [Google Scholar]

- 34.Geng Y., Zhou P., Li G. Toward attenuating the impact of arm positions on electromyography pattern-recognition based motion classification in transradial amputees. J. Neuroeng. Rehabil. 2012;9:1. doi: 10.1186/1743-0003-9-74. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Leeb R., Sagha H., Chavarriaga R., Millán J.D.R. A hybrid brain-computer interface based on the fusion of electroencephalographic and electromyographic activities. J. Neural Eng. 2011;8:025011. doi: 10.1088/1741-2560/8/2/025011. [DOI] [PubMed] [Google Scholar]

- 36.Novak D., Riener R. A survey of sensor fusion methods in wearable robotics. Robot. Auton. Syst. 2015;73:155–170. doi: 10.1016/j.robot.2014.08.012. [DOI] [Google Scholar]

- 37.Gardner M., Castillo C.S.M., Wilson S., Farina D., Burdet E., Khoo B.C., Atashzar S.F., Vaidyanathan R. A multimodal intention detection sensor suite for shared autonomy of upper-limb robotic prostheses. Sensors. 2020;20:6097. doi: 10.3390/s20216097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Hermens H.J., Freriks B., Disselhorst-Klug C., Rau G. Development of recommendations for SEMG sensors and sensor placement procedures. J. Electromyogr. Kinesiol. 2000;10:361–374. doi: 10.1016/S1050-6411(00)00027-4. [DOI] [PubMed] [Google Scholar]

- 39.Peng H., Long F., Ding C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005;27:1226–1238. doi: 10.1109/TPAMI.2005.159. [DOI] [PubMed] [Google Scholar]

- 40.Zhang C., Pan X., Li H., Gardiner A., Sargent I., Hare J., Atkinson P.M. A hybrid MLP-CNN classifier for very fine resolution remotely sensed image classification. ISPRS J. Photogramm. Remote. Sens. 2018;140:133–144. doi: 10.1016/j.isprsjprs.2017.07.014. [DOI] [Google Scholar]

- 41.Bi L., Guan C. A review on EMG-based motor intention prediction of continuous human upper limb motion for human-robot collaboration. Biomed. Signal Process. Control. 2019;51:113–127. doi: 10.1016/j.bspc.2019.02.011. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Data available on request.