Abstract

Background:

Language is a valuable source of clinical information in Alzheimer’s disease, as it declines concurrently with neurodegeneration. Consequently, speech and language data have been extensively studied in connection with its diagnosis.

Objective:

Firstly, to summarize the existing findings on the use of artificial intelligence, speech, and language processing to predict cognitive decline in the context of Alzheimer’s disease. Secondly, to detail current research procedures, highlight their limitations, and suggest strategies to address them.

Methods:

Systematic review of original research between 2000 and 2019, registered in PROSPERO (reference CRD42018116606). An interdisciplinary search covered six databases on engineering (ACM and IEEE), psychology (PsycINFO), medicine (PubMed and Embase), and Web of Science. Bibliographies of relevant papers were screened until December 2019.

Results:

From 3,654 search results, 51 articles were selected against the eligibility criteria. Four tables summarize their findings: study details (aim, population, interventions, comparisons, methods, and outcomes), data details (size, type, modalities, annotation, balance, availability, and language of study), methodology (pre-processing, feature generation, machine learning, evaluation, and results), and clinical applicability (research implications, clinical potential, risk of bias, and strengths/limitations).

Conclusion:

Promising results are reported across nearly all 51 studies, but very few have been implemented in clinical research or practice. The main limitations of the field are poor standardization, limited comparability of results, and a degree of disconnect between study aims and clinical applications. Active attempts to close these gaps will support translation of future research into clinical practice.

Keywords: Alzheimer’s disease, artificial intelligence, cognitive decline, computational linguistics, dementia, machine learning, screening, speech processing

INTRODUCTION

Alzheimer’s disease (AD) is a neurodegenerative disease that involves decline of cognitive and functional abilities as the illness progresses [1]. It is the most common etiology of dementia. Given its prevalence, it has effects beyond just patients and carers as it also has a severe societal and economic impact worldwide [2]. Although memory loss is often considered the signature symptom of AD, language impairment may also appear in its early stages [3]. Consequently, and due to the ubiquitous nature of speech and language, multiple studies rely on these modalities as sources of clinical information for AD, from foundational qualitative research (e.g., [4, 5]) to more recent work on computational speech technology (e.g., [6–8]). The potential for using speech as a biomarker for AD is based on several prospective values, including: 1) the ease with which speech can be recorded and tracked over time, 2) its non-invasiveness, 3) the fact that technologies for speech analysis have improved markedly in the past decade, boosted by advances in artificial intelligence (AI) and machine learning, and 4) the fact that speech problems may be manifest at different stages of the disease, making it a life-course assessment that has value unlimited by disease stage.

Recent studies on the use of AI in AD research entail using language and speech data collected in different ways and applying computational speech processing for diagnosis, prognosis, or progression modelling. This technology encompasses methods for recognizing, analyzing, and understanding spoken discourse. It implies that at least part of the AD detection process could be automated (passive). Machine learning methods have been central to this research program. Machine learning is a field of AI that concerns itself with the induction of predictive models “learned” directly from data, where the learner improves its own performance through “experience” (i.e., exposure to greater amounts of data). Research on automatic processing of speech and language with AI and machine learning methods have yielded encouraging results and attracted increasing interest. Different approaches have been studied, including computational linguistics (e.g., [9]), computational paralinguistics (e.g., [10]), signal processing (e.g., [11]), and human-robot interaction (e.g., [12]).

However, investigations of the use of language and speech technology in AD research are heterogeneous, which makes consensus, conclusions, and translation into larger studies or clinical practice problematic. The range of goals pursued in such studies is also broad, including automated screening for early AD, tools for early detection of disease in clinical practice, monitoring of disease progression, and signalling potential mechanistic underpinnings to speech problems at a biological level thereby improving disease models. Despite progress in research, the small, inconsistent, single-laboratory and non-standardized nature of most studies has yielded results that are not robust enough to be aggregated and thereafter implemented toward those goals. This has resulted in gaps between research contexts, clinical potential, and actual clinical applications of this new technology.

We sought to summarize the current state of the evidence regarding AI approaches in speech analysis for AD with a view to setting a foundation for future research in this area and potential development of guidelines for research and implementation. The review has three main objectives: Firstly, to present the main aims and findings of this research, secondly to outline the main methodological approaches, and finally surmise the potential for each technique to be ready for further evaluation toward clinical use. In doing so, we hope to contribute to the development of these novel, exciting, and yet under-utilized approaches, toward clinical practice.

METHODS

The procedures adopted in this review were specified in a protocol registered with the international prospective register of systematic reviews PROSPERO (reference: CRD42018116606). In the following sections we describe the elegibility criteria, information sources, search strategy, study records management, study records selection, data collection process, data items (extraction tool), risk of bias in individual studies, data synthesis, meta-bias(es), and confidence in cumulative evidence.

Elegibility criteria

We aimed to summarize all available scientific studies where an interactive AI approach was adopted for neuropsychological monitoring. Interaction-based technology entails data obtained through a form of communication, and AI entails some automation of the process. Therefore, we included articles where automatic machine learning methods were used for AD screening, detection, and prediction, by means of computational linguistics and/or speech technology.

Articles were deemed eligible if they described studies of neurodegeneration in the context of AD. That is, subjective cognitive impairment (SCI), mild cognitive impairment (MCI), AD or other dementia-related terminology if indicated as AD-related in the full text (e.g., if a paper title reads unspecified “dementia” but the research field is AD). The included studies examined behavioral patterns that may precede overt cognitive decline as well as observable cognitive impairment in these neurodegenerative diseases. Related conditions such as semantic dementia (a form of aphasia) or Parkinson’s disease (a different neurodegenerative disease) formed part of the exclusion criteria (except when in comorbidity with AD). Language was not an exclusion criterion, and translation resources were used as appropriate.

Another exclusion criterion is the exclusive use of traditional statistics in the analysis. The inclusion criteria require at least one component of AI, machine learning, or big data, even if the study encompasses traditional statistical analysis. Further exclusion criteria apply to related studies relying exclusively on neuroimaging techniques such as magnetic resonance imaging (MRI), with no relation to language or speech, even if they do implement AI methods. The same applies to biomarker studies (e.g., APOE genotyping). This review also excluded purely epidemiological studies, that is, studies aimed at analyzing the distribution of the condition rather than assessing the potential of AI tools for monitoring its progress.

In terms of publication status, we considered peer-reviewed journal and conference articles only. Records that were not original research papers were excluded (i.e., conference abstracts and systematic reviews). In order to avoid redundancy, we assessed research by the same group and excluded overlapping publications. This was assessed by reading the text in full and selecting only the most relevant article for review (i.e., most comprehensive and up to date). Due to limited resources, we also excluded papers when full-texts were found unavailable in all our alternative sources.

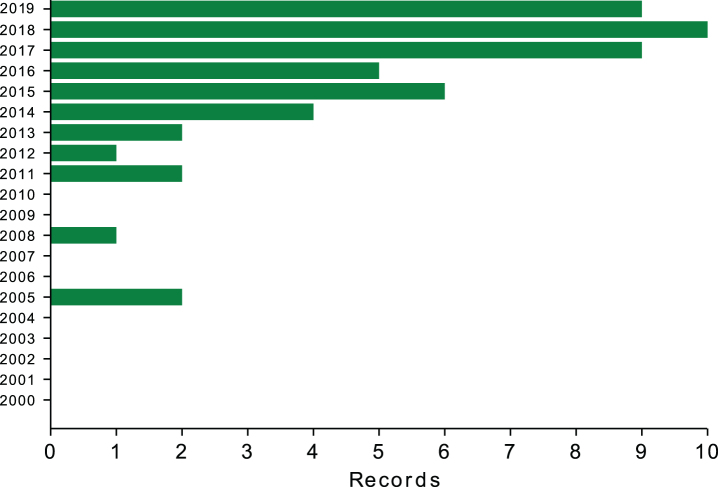

Lastly, we considered papers from a twenty-year span, from the beginning of 2000 to the end of 2019, anticipating that the closer to the end of this time-frame, the larger the number of results, as shown in Fig. 1.

Fig.1.

Number of relevant records per year (2000–2019).

Information sources

Between October and December 2019, we searched the following electronic databases: ACM, Embase, IEEE, PsycINFO, PubMed, and Web of Science. We contacted study authors by email when full-text versions of relevant papers where not available through the university library, with varying degrees of success.

We also included relevant titles found through “forward citation tracking” with Google Scholar, screening articles references and research portal suggestions suggestions.

Search strategy

Given the heterogeneity of the field, a broad search needed to be conducted. For the health condition of interest, AD, we included terms such as dementia, cognitive decline, and Alzheimer. For the methodology, we included speech, technology, analysis and natural language processing, AI, machine learning, and big data.

The search strategy was developed collaboratively between the authors, and with the help of the University of Edinburgh’s academic support librarian. After a few iterations and trials, we decided not to include the AI terms, since this seemed to constrain the search too much, yielding fewer results. Therefore, the search queries were specified as follows (example for PubMed):

-

•

(speech AND (dementia OR “cognitive decline” OR (cognit* AND impair*) OR Alzheimer) AND (technology OR analysis)) OR (“natural language processing” AND (dementia OR “cognitive decline” OR (cognit* AND impair*) OR Alzheimer))

-

•

Filters applied: 01/01/2000 - 31/12/2019.

Then, we applied the exclusion criteria, starting from the lack of AI, machine learning, and big data methods, usually detected in the abstract.

We used EndNote X8 [13] for study records management.

Study records selection

Screening for record selection happened in two phases, independently undertaken by two reviewers and following pre-established eligibility criteria. In the first phase, the two independent authors screened titles and abstracts against exclusion criteria using EndNote. The second phase consisted of a full-text screening for those papers that could not be absolutely included or excluded based on title and abstract information only. Any emerging titles that were deemed relevant were added to the screening process. Disagreements at any of the stages were discussed and, when necessary, a third author convened to find a resolution. Some records reported results that were redundant with a later paper of the same research group, mainly because the earlier record was a conference paper or because an extended version of the research paper had been published elsewhere at a later date. When this happened, earlier and shorter reports were excluded.

Data collection process

Our original intention was to rely on the PICO framework [14] for data collection. However, given the relative youth and heterogeneity of the research field reviewed, and the lack of existing reviews on the topic, we adapted a data extraction tool specifically for our purposes. This tool took the form of four comprehensive tables which were used to extract the relevant information from each paper. Those tables summarize general study information, data details, methodology, and clinical applicability.

The tables were initially “piloted” with a few studies, in order to ensure they were fit to purpose. Information extraction was performed independently by two reviewers and consistency was compared. When differences about extracted items was not resolved by discussion, the third author was available to mediate with the paper’s full text as reference.

Data items (extraction tool)

As stated in the data collection process, data items will be extracted through the elaboration of four tables. These tables are:

-

•

SPICMO: inspired in the PICO framework, it contains information on Study, Population, Interventions, Comparison groups, Methodology, and Outcomes. More details can be found just before Supplementary Table 4.

-

•

Data details: dataset/subset size, data type, other data modalities, data annotation, data availability, and language. More details can be found just before Supplementary Table 5.

-

•

Methodology details: pre-processing, features generated, machine learning task/method, evaluation technique, and results. More details can be found just before Supplementary Table 6.

-

•

Clinical applicability: research implications, clinical potential, risk of bias, and strengths/limitations. More details can be found just before Supplementary Table 7.

Risk of bias in individual studies

Many issues, such as bias, do not apply straightforwardly to this review because it focuses on diagnostic and prognostic test accuracy, rather than interventions. Therefore, if there were to be significance tests they would be for comparisons between the results of the different methods. Besides, the scope of the review is machine learning technology, where the evaluation through significance testing is rare. Papers that rely exclusively on traditional statistics will be excluded, and therefore we expect the review to suffer from a negligible risk of bias in terms of significance testing.

The risk of bias in machine learning studies often comes from how the data is prepared in order to train your models. In a brief example, if a dataset is not split in a training and a testing subset, the model will be trained and tested on the same data. Such model is likely to achieve very good results, but chances are that its performance will drop dramatically when tested on unseen data. This risk is called “overfitting”, and is assessed in Supplementary Table 7. Other risks accounted for in this table are data balance, the use of suitable metrics, the contextualization of results, and the sample size. Data balance reports whether the dataset has comparable numbers of AD and healthy participants, as well as in terms of gender or age. Suitable metrics is an assessment of whether the metric chosen to evaluate a model is appropriate, in conjunction with data balance and sample size (e.g., accuracy is not a robust metric when a dataset is imbalanced). Contextualization refers to whether their study results are compared to a suitable baseline (i.e., a measure without a target variable or comparable research results). Finally, sample size is particularly relevant because machine learning methodology was developed for large datasets, but data scarcity is a distinctive feature of this field.

The poor reporting of results and subsequent interpretation difficulties is a longstanding challenge of diagnostic test accuracy research [15]. Initially, we considered two tools for risk of bias assessment, namely the “QUADAS-2: Quality Assessment of Diagnosis Studies checklist - 2” [16] and the “PROBAST: Prediction model Risk Of Bias ASsessment Tool” [17]. However, our search covers an emerging interdisciplinary field where papers are neither diagnostic studies nor predictive ones. Additionally, the Cochrane Collaboration recently emphasized a preference for systematic reviews to focus on the performance of individual papers’ on the different risk of bias criteria [18]. Consequently, we decided to assess risk of bias as part of Supplementary Table 7, according to criteria that are suitable to the heterogeneity currently inherent to the field. These criteria include the risks of bias described above, as well as an assessment of generalizability, replicability, and validity, which are standard indicators of the quality of a study. Risk of bias was independently assessed by two reviewers and disagreements were resolved by discussion.

Data synthesis

Given the discussed characteristics of the field, as well as the broad range of details covered by the tables, we anticipate a thorough discussion of all the deficiencies and inconsistencies that future research should address. Therefore, we summarize the data in narrative form, following the structure provided by the features summarized in each table. Although a meta-analysis is beyond scope at the current stage of the field, we do report outcome measures in a comparative manner when possible.

Confidence in cumulative evidence

We will assess accuracy of prognostic and diagnostic tools, rather than confidence in an intervention. Hence, we will not be drawing any conclusions related to treatment implementation.

Background on AI, cognitive tests, and databases

This section briefly defines key terminology and abbreviations referring and offers a taxonomy of features, adapted from Voleti et al. [20], to enhance the readability of the systematic review tables. This section also briefly describes the most commonly used databases and neuropsychological assessments, with the intention of making these accessible for the reader.

AI, machine learning, and speech technologies

AI can be loosely defined as a field of research that studies artificial computational systems that are capable of exhibiting human-like abilities or human level performance in complex tasks. While the field encompasses a variety of symbol manipulation systems and manual encoding of expert knowledge, the majority of methods and techniques employed by the studies reviewed here concern machine learning methods. While machine learning dates back to the 1950s, the term “machine learning” as it is used today, originated within the AI community in the late 1970s to designate a number of techniques designed to automate the process of knowledge acquisition. Theoretical developments in computational learning theory and the resurgence of connectionism in the 1980s helped consolidate the field, which incorporated elements of signal processing, information theory, statistics, and probabilistic inference, as well as inspiration from a number of disciplines.

The general architecture of a machine learning system as used in AD prediction based on speech and language can be described in terms of the learning task, data representation, learning algorithm, nature of the “training data”, and performance measures. The learning task concerns the specification of the function to be learned by the system. In this review, such functions include classification (for instance, the mapping of a voice or textual sample from a patient to a target category such as “probable AD”, “MCI”, or “healthy control”) and regression tasks (such as mapping the same kind of input to a numerical score, such as a neuropsychological test score). The data representation defines which features of the vocal or linguistic input will be used in the mapping of that input to the target category or value, and how these features will be formally encoded. Much research in machine learning applied to this and other areas focuses on data representation. A taxonomy of features used in the papers reviewed here is presented in Table 1. There is a large variety of learning algorithms available to the practitioner, and a number of them have been employed in AD research. These range from connectionist systems, of which most “deep learning” architectures are examples, to relatively simple linear classifiers such as naïve Bayes and logistic regression, to algorithms that produce interpretable outputs in the form of decision trees or logical expressions, to ensembles of classifiers and boosting methods. The nature of the training data affects both its representation and the choice of algorithm. Usually, in AD research, patient data are annotated with labels for the target category (e.g., “AD”, “control”) or numerical scores. Machine learning algorithms that make use of such annotated data for induction of models are said to perform supervised learning, while learning that seeks to structure unannotated data is called unsupervised learning. Performance measures, and by extension the loss function with respect to which the learning algorithm attempts to optimize, usually depend on the application. Commonly used performance measures are accuracy, sensitivity (also known as recall), specificity, positive predictive value (also known as precision), and summary measures of trade-offs between these measures, such as area under the receiver operating characteristic curve and F scores. These methods and metrics are further detailed below.

Table 1.

Feature taxonomy, adapted from Voleti et al. [20]

| Category | Subcategory | Feature type | Feature name, abbreviation, reference |

| Text-based (NLP) | Lexical features | Bag of words, vocabulary analysis | BoW, Vocab. |

| Linguistic Inquiry and Word Count | LIWC [21] | ||

| Lexical diversity | Type-Token Ratio (TTR), Moving Average TTR (MATTR), Simpson’s Diversity Index (SDI) Brunét’s Index (BI), Honoré’s Statistic (HS). | ||

| Lexical Density | Content density (CD), Idea Density (ID), P-Density (PD). | ||

| Part-of-Speech tagging | PoS. | ||

| Syntactical features | Constituency-based parse tree scores | Yngve [22], Frazier [23]. | |

| Dependency-based parse tree scores | |||

| Speech graph | Speech Graph Attributes (SGA). | ||

| Semantic features | Matrix decomposition methods | Latent Semantic Analysis (LSA), Principal Component Analysys (PCA). | |

| (Word and sentence embeddings) | Neural word/sentence embeddings | word2vec [24] | |

| Topic modelling | Latent Dirichlet Allocation [25]. | ||

| Psycholinguistics | Reliance on familiar words (PsyLing). | ||

| Pragmatics | Sentiment analysis | Sent. | |

| Use of language UoL | Pronouns, paraphrasing, filler words (FW). | ||

| Coherence | Coh. | ||

| Acoustic | Prosodic features | Temporal | Pause rate (PR), Phonation rate (PhR), Speech rate (SR), Articulation rate (AR). Vocalization events. |

| Fundamental Frequency | F0 and trajectory. | ||

| Loudness and energy | loud, E. | ||

| Emotional content | emo. | ||

| Spectral features | Formant trajectories | F1, F2, F3. | |

| Mel Frequency Cepstral Coefficients | MFCCs [26]. | ||

| Vocal quality | Jitter, Shimmer, harmonic-to-noise ratio | jitt, shimm, HNR. | |

| ASR-related | Filled pauses, repetitions, dysfluencies, hesitations. fractal dimension, entropy. | FP, rep, dys, hes, FD, entr. | |

| Dialogue features (i.e., Turn-Taking) | TT:avg turn length, inter-turn silences. |

Cognitive tests

This is a brief description of the traditional cognitive tests (as opposed to speech-based cognitive tasks) most commonly applied in this field, with two main purposes. On the one hand, neuropsychological assessments are one of the several factors on which clinicians rely in order to make a clinical diagnosis, which in turn results on participants being assigned to an experimental group (i.e., healthy control, SCI, MCI, or AD). On the other hand, some of these tests are recurrently used as part of the speech elicitation protocols.

Batteries used for diagnostic purposes consist of reliable and systematically validated assessment tools that evaluate a range of cognitive abilities. They are specifically designed for dementia and aimed to be time-efficient, as well as able to highlight preserved and impaired abilities. The most commonly used batteries are the Mini-Mental State Examination (MMSE) [27], the Montreal Cognitive Assessment (MoCA) [28], the Hierarchical Dementia Scale-Revised (HDS-R) [29], the Clinical Dementia Rating (CDR) [30], the Clock Drawing Test (CDT) [31], the Alzheimer’s disease Assessment Scale, Cognitive part (ADAS-Cog) [32], the Protocol for an Optimal Neurpsychological Evaluation (PENO, in French) [33], or the General Practitioner Assessment of Cognition (GPCog) [34]. Most of these tests have been translated into different languages, such as the Spanish version of the MMSE (MEC) [35], which is used in a few reviewed papers.

Tools measuring general functioning, such as the General Deterioration Scale (GDS) [36] or Activities of Daily Living, such as the Katz Index [37] and the Lawton Scale [38], are also commonly used. Based on the results of these tests, clinicians usually proceed to diagnose MCI, following Petersen’s criteria [39], or AD, following NINCDS-ADRDA criteria [40]. Alternative diagnoses appear in some texts, such as Functional Memory Disorder (FMD), following [41]’s criteria.

Speech elicitation protocols often include tasks extracted from examinations that were originally designed for aphasia, such as fluency tasks. Semantic verbal fluency tasks (SVF, in COWAT) [42] and are often known as “animal naming” because they require the participant generating a list of nouns from a certain category (e.g., animals) while being recorded. Another tool recycled from aphasia examinations is the Cookie Theft Picture task [43], which requires participants to describe a picture depicting a dynamic scene, and hence to also elaborate a short story. Although that is by far the most common picture used in such tests, other pictures have also been designed to elicit speech in a similar way (e.g., [44]).

Another group of tests consists, essentially, of language sub-tests (i.e., vocabulary) and immediate/delayed recall tests, extracted from batteries to measure intelligence and cognitive abilities, such as the Wechsler Adult Intelligence Scale (WAIS-III) [45] or the Wechsler Memory Scale (WMS-III) [46], respectively. Besides, the National Adult Reading Test (NART) [47], the Arizona Battery for Communication Disorders of Dementia ABCD battery (ABCD) [48], the Grandfather Passage [49] and a passage of The Little Prince [50] are also used to elicit speech in some articles.

Databases

Although types of data will be further discussed later, we hereby give an overview of the main datasets described. For space reasons, we only mention here those datasets which have been used in more than one study, and for which a requesting procedure might be available. For monologue data:

-

•

Pitt Corpus: By far the most commonly used. It consists of picture descriptions elicited by the Cookie Theft Picture, generated by healthy participants and patients with probable AD, and linked to their neuropsychological data (i.e., MMSE). It was collected by the University of Pittsburgh [51] and distributed through DementiaBank [52].

-

•

BEA Hungarian Dataset: This is a phonetic database, containing over 250 hours of multipurpose Hungarian spontaneous speech. It was collected by the Research Institute for Linguistics at the Hungarian Academy of Sciences [53] and distributed through META-SHARE.

-

•

Gothenburgh MCI database: This includes comprehensive assessments of young elderly participants during their Memory Clinic appointments and senior citizens that were recruited as their healthy counterparts [54]. Speech research undertaken with this dataset uses the Cookie Theft picture description and reading tasks subsets, all recorded in Swedish.

For dialogue data, the Carolina Conversations Collection (CCC) is the only available database. It consists of conversations between healthcare professionals and patients suffering from a chronic disease, including AD. For dementia research, participants are assigned to an AD group or a non-AD group, if their chronic condition is unrelated to dementia (i.e., diabetes, heart disease). Conversations are prompted by questions about their health condition and experience in healthcare. It is collected and distributed by the Medical University of South Carolina [55].

In addition, some of the reviewed articles refer to the IVA dataset, which consists of structured interviews undertaken and recorded simultaneously by an Intelligent Virtual Agent (a computer “avatar”) [56]. However, the potential availability of this dataset is unknown.

RESULTS

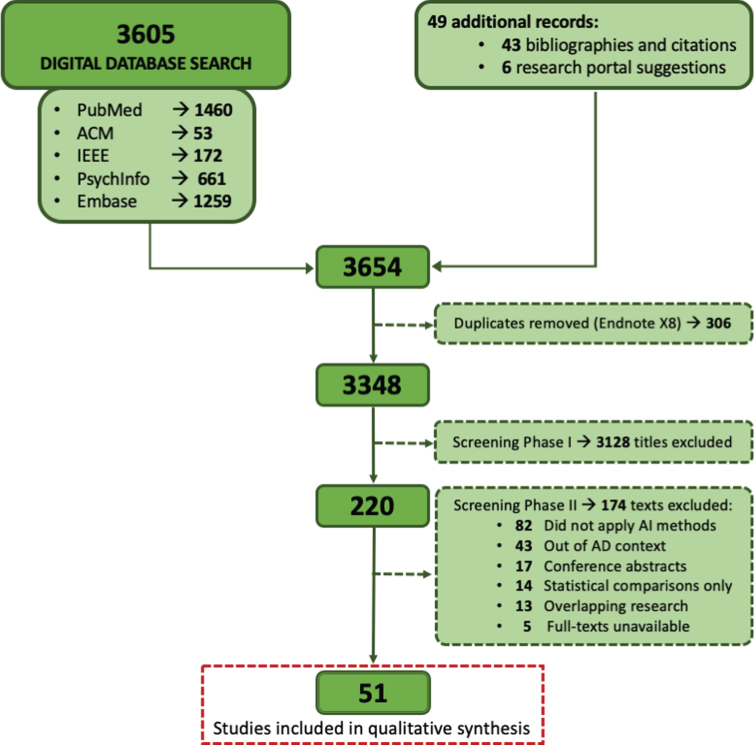

Adding up all digital databases, the searches resulted in 3,605 records. Another 43 papers were identified by searching through bibliographies and citations and 6 through research portal suggestions, adding up to 3,654 papers in total. Of those, 306 duplicates were removed using EndNote X8, leaving 3,348 for the first screening phase. In this first phase, 3,128 papers were excluded based on title and abstract, and therefore 220 reached the second phased of screening. Five of these papers did not have a full-text available, and therefore 215 papers where fully screened. Finally, 51 papers were included in the review (Fig. 2).

Fig.2.

Screening and selection procedure, following guidelines provided by PRISMA [19].

Existing literature

The review by [20] is, to our knowledge, the only published work with a comparable aim to the the present review, although there are important scope differences. First of all, the review by Voleti et al. differs from ours in terms of methodological scopes. While their focus was to create a taxonomy for speech and language features, ours was to survey diagnosis and cognitive assessment methods that are used in this field and to assess the extent to which they are successful. In this sense, our search was intentionally broad. There are also differences in the scope of medical applications. Their review studies a much broader range of disorders, from schizophrenia to depression and cognitive decline. Our search, however, targeted cognitive decline in the context of dementia and AD. It is our belief that these reviews complement each other in providing systematic accounts of these emerging fields.

Data extraction

Tables with information extracted from the papers are available as Supplementary Material. There are four different tables: a general table concerning usual clinical features of interest (after the PICOS framework), and three more specific tables concerning data details, methodology details, and implications for clinicians and researchers. Certain conventions and acronyms were adopted when extracting article information, and should be considered when interpreting the information contained on those tables. These conventions are available in the Supplementary Material, prior to the tables.

DISCUSSION

In this section, the data and outcomes of the different tables are synthesized in different subsections and put into perspective. Consistent patterns and exceptions are outlined. Descriptive aspects are organized by column names, following table order and referencing their corresponding table in brackets.

Study aim and design (Supplementary Table 4: SPICMO)

Most of the reviewed articles aim to use acoustic and/or linguistic features in order to distinguish the speech produced by healthy participants from the one produced by participants with a certain degree of cognitive impairment. The majority of studies attempt binary models to detecting AD and, less often, MCI, in comparison to HC. A few studies also attempt to distinguish between MCI an AD. Even when the dataset contains three or four groups (e.g., HC, SCI, MCI, AD), most studies only report pairwise group comparisons [57–61]. Out of 51 reviewed papers, only seven did attempt three-way [50, 62–64] or four-way [12, 65, 66] classification. Their results are inconclusive and present potential biases related to the quality of the datasets (i.e., low accuracy on balanced datasets, or high accuracy on imbalanced datasets).

Slightly different objectives are described by [67], the only study predicting conversion from MCI to AD, and by [68], the only study predicting progression from HC to any form of cognitive impairment. While these studies also learned classifiers to detect differences between groups, they differ from other studies in that they use longitudinal data. There is only one article with a different aim than classification. This is the study by Duong et al. [69], who attempt to describe AD and HC discourse patterns through cluster analysis.

Despite many titles mentioning cognitive monitoring, most research addresses only the presence or absence of cognitive impairments (41, out of 51 papers). Outside of those, seven papers are concerned with three or four disease stages [12, 50, 62–66], two explore longitudinal cognitive changes (although still through binary classification) [67, 68], and one describes discourse patterns [69]. We note that future research could take further advantage of this longitudinal aspect to build models able to generate a score reflecting risk of developing an impairment.

Population (Supplementary Table 4: SPICMO)

The target population are elderly people who are healthy or exhibit certain signs of cognitive decline related to AD (i.e., SCI, MCI, AD). Demographic information is frequently reported, most commonly age, followed by gender and years of education.

Cognitive scores such as MMSE are often part of the descriptive information provided for study participants as well. This serves group assignment purposes and allows quantitative comparisons of participants’ degree of cognitive decline. In certain studies, MMSE is used to calculate the baseline against which classifier performance will be measured [44, 70, 71]. However, despite being widely used in clinical and epidemiological investigations, MMSE has been criticized for having ceiling effects, especially when used to assess pre-clinical AD [72].

Some studies report no demographics [6, 66, 68, 73, 74], only age [10, 75], only age and gender [61, 76, 77], or only age and education [70, 78]. An exception is the dataset AZTIAHORE [79, 80], which contains the youngest healthy group (20–90 years old) and a typical AD group (68–98 years old), introducing potential biases due to this imbalance. Demographic variables are established risk factors for AD [81], therefore demographics reporting is essential for this type of study.

Interventions (Supplementary Table 4: SPICMO)

Study interventions almost invariably consist of a speech generation task preceded by a health assessment. This varies between general clinical assessments, including medical and neurological examinations, and specific cognitive testing. The comparison groups are based on diagnosis groups, which in turn are established with the results of such assessments. Therefore, papers lacking that information do not specify their criteria for group assignment [60, 61, 75, 79, 80, 82–85]. This could be problematic, since the field currently revolves around diagnostic categories, trying to identify such categories through speech data. Consequently, one should ensure that standard criteria have been used and that models are accurately tuned to these categories.

Speech tasks are sometimes part of the health assessment. For instance, speech data are often recorded during the language sub-test of a neuropsychological battery (e.g., verbal fluency, story recall, or picture description tasks). Another example of speech generated within clinical assessment is the recording of patient-doctor consultations [8, 85, 86] of cognitive examinations (e.g., MMSE [83]). There are also studies where participants are required to perform language tests outwith the health assessment, for speech elicitation purposes only. Exceptionally, two of these studies work with written rather than spoken language [87, 88]. Alternative tasks for this purpose are reading text passages aloud (e.g., [89]), recalling short films (e.g., [63]), retelling a story (e.g., [90]), retelling a day or a dream (e.g., [91]), or taking part in a semi-standardized (e.g., [68]) or conversational (e.g., [10]) interview.

Most of these are examples of constrained, laboratory-based interventions, which seldom include spontaneously generated language. There are advantages to collecting speech under these conditions, such as ease of standardization, better control over potential confunding factors, and focus on high cognitive load tasks that may be more likely to elicit cognitive deficits. However, analysis of spontaneous speech production and natural conversations also has advantages. Spontaneous and conversational data can be captured in natural settings over time, thus mitigating problems that might affect performance in controlled, cross-sectional data, such as a participant having an “off day” or having slept poorly the night before the test.

Comparison groups (Supplementary Table 4: SPICMO)

This review targets cognitive decline in the context of AD. For its purpose, nomenclature heterogeneity has been homogenized into four consistent groups: HC, SCI, MCI, and AD; with an additional group, CI, to account for unspecified impairment (see Supplementary Table 1). As an exception to this nomenclature are Mirheidari et al. [8, 12, 86], who compare participants with an impairment caused by neurodegenerative disease (ND group, including AD) to an impairment caused by functional memory disoders (FMD); and Weiner and Schultz [68] and Weiner et al. [74], who introduce a category called age-associated cognitive decline (AACD).

Furthermore, some studies add subdivisions to these categories. For instance, there are two studies that classify different stages within the AD group [79, 80]. Another study divides the MCI group between amnesic single domain (aMCI) and amnesic multiple domain (a+mdMCI), although classification results for two groups are not very promising [57]. Within-subject comparisons have also been attempted, comparing participants who remained in a certain cognitive status to those who changed [67, 74].

Most studies target populations where a cohort has already been diagnosed with AD or a related condition, looking for speech differences between those and healthy cohorts. Therefore, little insight is offered into pre-clinical stages of the disease.

Outcomes of interest (Supplementary Table 4: SPICMO)

Given the variety of diagnostic categories and types of data and features used, it is not easy to establish state-of-the-art performance. For binary classification, the most commonly attempted task, the reported performance ranges widely depending in the data use, the recording conditions, and the variables used in modelling. For instance Lopez-de Ipiña et al. [80] reported an accuracy that varied between 60% and 93.79% using only acoustic features that were generated ad hoc. Although the second figure is very promising, their dataset is small, 40 participants, and remarkably imbalanced in terms of both diagnostic class and age. In terms of class, even though they initially report 20 AD and 20 HC, the AD group is divided in three different severity stages, with 4, 10, and 6 participants, respectively, whereas the control group remains unchanged (20). In terms of age, 25% percent of their healthy controls fall within a 20–60 years old age range, while 100% of the AD group are over 60 years old. In contrast, Haider et al. [11] reported 78.7% accuracy, using also acoustic features only, but generated from standard feature sets that had been developed for computational paralinguistics. Besides, this figure appears as more robust because the dataset is much larger (164 participants) and it is balanced for class, age and gender, as well as audio enhanced. Guo et al. [92] obtained 85.4% accuracy on the same dataset as [11], but using text-based features only and without establishing class, age, or gender balance. All the figures quoted so far refer to monologue studies. The state-of-the-art accuracy for dialogue data is 86.6%, obtained by Luz et al. [10] using acoustic features only.

Regarding other classification experiments, we see that Mirzaei et al. [50] reports 62% for a 3-way classification, discriminating HC, MCI, and AD. They are also among the few to appropriately report accuracy, since they work with a class-balanced dataset, while many other studies report overall accuracy in class-imbalanced datasets. Accuracy figures can be very misleading in the presence of class imbalance. A trivial rejector (i.e., a classifier that trivially classifiers all instances as negative with respect to a class of interest), would achieve very high accuracy on a dataset that contained, say, 90% negative instances. For example, Nasrolahzadeh et al. [65] report really high accuracy with a 4-way classifier, 97.71%, but in a highly imbalanced dataset. However, Mirheidari et al. [12] reported 62% accuracy and 0.815 AUC for a 4-way classifier in a slightly more balanced dataset and Thomas et al. [66], also 4-way, only 50%, on four groups of MMSE scores. Other studies attempting 3-way classification experiments in balanced datasets are Egas López et al. [62], 56% and Gosztolya et al. [63] with 66.7%. Kato et al. [64], however, reports 85.4% 3-way accuracy in an imbalanced dataset.

These results are diverse, and it stands clear that some will lead to more robust conclusions than others. Notwithstanding, numerical outcomes are always subject to the science behind them, the quality of the datasets and the rigor of the method. This disparity of results therefore highlights the need for improved standards of reporting in this kind of study. Reported results should include metrics that allow the reader to assess the trade-off between false positives and false negatives in classification, such as specificity, sensitivity, fallout, and F scores, as well measures that are less sensitive to class imbalance, widely used in other applications of computational paralinguistics, such as unweighted average recall. Contingency tables and ROC curves should also be provided whenever possible. Given the difficulties in reporting, comparing and differentiating the results for the 51 reviewed studies on an equal footing, we refer the reader to Supplementary Tables 4 and 6.

Size of dataset or subset (Supplementary Table 5: Data Details)

Within a machine learning context, all the reviewed studies use relatively small datasets. About 31% train their models with less than 50 participants [8, 10, 50, 64, 68, 71, 79, 80, 82, 83, 85, 86, 89, 91, 93, 94], while only 27% have 100 or more participants [9, 11, 57, 60, 67, 70, 73, 75–77, 92, 95–97]. In fact, 5 report samples with less than 30 participants [68, 79, 83, 89, 94].

It is worth noting that those figures represent the dataset size in full, which is then divided in two, three or four groups, most of the times unevenly. There are only 6 studies where not only the dataset, but also each experimental group contains 100 or more participants/speech samples [6, 9, 11, 85, 92, 95]. All of these studies used the Pitt Corpus.

The Pitt Corpus is the largest dataset available. It is used in full by Ben Ammar and Ben Ayed [95], and contains 484 speech samples, although it is not clear to how many unique participants these samples belong. With the same dataset, Luz [6] reports 398 speech samples, but again, no number of unique participants. However, another study working with the Pitt Corpus does report 473 speech samples from 264 participants [9]. It is important for studies to report numbers of unique participants in order to allow the reader to assess the risk that the machine learning models might actually be simply learning to recognize participants rather than their underlying cognitive status. This risk can be mitigated, for example, by ensuring that all samples from each participant are in either the training set or the test set, but not both.

Data type (Supplementary Table 5: Data Details)

This column refers to the data used in each reviewed study, indicating if these data consist of monologues or dialogues, purposefully elicited narratives or speech obtained through a cognitive test. It also includes whether data was recorded or recorded and transcribed, and how this transcription was done (i.e., manual or automatic).

Of the reviewed studies, 82% used monologue data, and most of them (36) obtained speech through a picture description task (e.g., Pitt Corpus). These are considered relatively spontaneous speech samples, since participants may describe the picture in whichever way they want, although the speech content is always constrained. Among other monologue studies, eight work with speech obtained through cognitive tests, frequently verbal fluency tasks. Only two papers rely on truly spontaneous and natural monologues, prompted with an open question instead of a picture description [60, 65].

Dialogue data are present less frequently, in 27% of the studies, and elicited more heterogeneously. For instance, in structured dialogues (4 studies), both speakers (i.e., patient and professional) are often recorded while taking a cognitive test [8, 12, 83, 94]. Semi-structured dialogues (5 studies) are interview-type conversations where questions are roughly even across participants. From our point of view, the most desirable data type are conversational dialogues (5 studies), where interactive speech is prompted with the least possible constraints [10, 66, 79, 80, 98]. A few studies have collected dialogue data through an intelligent virtual agent (IVA) [8, 12, 94] showing the potential for data to be collected remotely, led by an automated computer system.

In terms of data modalities (e.g., audio, text, or both), two studies are the exception where data was directly collected as written text [87, 88]. A few studies (6) work with audio files and associated ASR transcriptions [12, 44, 62, 63, 77, 99]. Another group of studies (14) use solely voice recordings [50, 57, 60, 61, 64, 65, 71, 79, 80, 82, 84, 89, 97, 100]. More than half of the studies (55%) rely, at least partially, on manually transcribed data. This is positive for data sharing purposes, since manual transcriptions are usually considered golden standard quality data. However, methods that rely on transcribed speech may have limited practical applicability, as they are costly and time-consuming, and often (as when ASR is used) require error prone (see section on pre-processing below) intermediate steps compared to working directly with the audio recordings.

Other modalities (Supplementary Table 5: Data Details)

The most frequently encountered data modality, apart from speech and language, is structured data related to cognitive examinations, largely dominated by MMSE and verbal fluency scores. Another modality is video, which is available in some datasets such as CCC [10, 98], AZTITXIKI [79], AZTIAHORE [60, 80], IVA [12, 85], or the one in Tanaka et al. [94], although it is not included in their analysis. Other analyzed modalities include neuroimaging data, such as MRI [67] and fNIRS [64], eye-tracking [7, 94], or gait information [71].

In order to develop successful prediction models for pre-clinical populations, it is likely that future interactive AI studies will begin to include demographic information, biomarker data, and lifestyle risk factors [100].

Data annotation (Supplementary Table 5: Data Details)

Group labels and sizes are presented in this section of the Data Details table, the aim of which is to give information about the available speech datasets. Accordingly, labels remain as they are reported in each study, as opposed to the way in which we homogenized them to describe Comparison Groups in Supplementary Table 4. In other words, even though the majority of studies annotate their groups as HC, SCI, MCI, and AD, some do not. For example, the HC group is labelled as CON (control) [91], NC (normal cognition) [56, 64, 88, 99], CH (cognitively healthy) [82], and CN (cognitively normal) [67]. SCI can also be named SMC [96], and there is a similar but different category (AACD) reported in two other studies [68, 74]. MCI and AD are more homogeneous due to being diagnostic categories that need to meet certain clinical criteria to be assigned, although some studies do refer to AD as dementia [62, 95]. Another heterogeneous category is CI (i.e., unspecified cognitive impairment), which is annotated as low or high MMSE scores [93], or as mild dementia [89]. Mild dementia may sound similar to MCI, however the study did not report diagnostic criteria for MCI to be considered.

This section offers insight into another aspect in which lack of consensus and uniformity is obvious. Using accurate terminology (i.e., abiding by diagnosis categories) when referring to each of these groups could help establish the relevance of this kind of research to clinical audiences.

Data balance (Supplementary Table 5: Data Details)

Only 39% (20) of the reviewed studies present class balance, that is, the number of participants is evenly distributed across the two, three, or four diagnostic categories [7, 8, 11, 50, 60, 62–64, 75, 78, 82, 84, 86, 88–91, 94, 95, 98]. Among these 20 studies, one reports only between-class age and gender balance [94]; another one reports class balance, within-class gender balance, and between-class age and gender balance [11]. A few report balance for all features except for within-class gender balance, which is not specified [62, 63, 88]. Lastly, there is only one study that, apart from class balance, also reports gender balance within and between classes, as well as age and education balance between classes [87]. Surprisingly, nine other studies fail to report one or more demographic aspects.

Sometimes gender is reported per dataset, but not per class (e.g., [92]), and therefore not accounted for in the analysis, even though is one of the main risk factors [80]. Often, p-values are appropriately presented to indicate that demographics are balanced between groups (e.g., [62]). Unfortunately, almost as often, no statistical values are reported to argue for balance between groups (e.g., [83]). There are also cases where where the text reports demographic balance but neither group distributions nor statistical tests are presented (e.g., [91]). Another aspect to take into account is the differences between raw and pre-processed data. For instance, Lopez-de Ipiña et al. [79, 80] describe a dataset where 20% of the HC speech data, but 80% of the AD speech data, is removed during pre-processing. Hence, even if these datasets had been balanced before (they were not) they will definitely not be balanced after pre-processing has taken place.

It is also worth discussing the reasons behind participant class imbalance when the same groups are class balanced in terms of samples. Fraser et al. [9], for example, work with a subset of the Pitt Corpus of 97 HC participants and 176 AD participants; however, the number of samples is 233 and 240, respectively. Similar patterns apply to other studies where the number of participants and samples are reported [92, 98]. Did HC come for more visits, or did perhaps AD participants fail to come to later visits or drop out of the study? These incongruities could be hiding systematic group biases.

Conclusions drawn from imbalanced data are subject to a greater probability of bias, especially in small datasets. For example, certain performance metrics to evaluate classifiers are more robust (e.g., F1) than others (e.g., acc) against this imbalance. Accordingly, in this table, the smaller the dataset, the more strict we have been when evaluating the balance of its features. Moving forward, it is desirable that more emphasis is placed on data balance, not only in terms of group distribution, but also in terms of those demographic features established risk factors (i.e., age, gender, and years of education).

Data availability (Supplementary Table 5: Data Details)

Strikingly, very few studies make their data available, or even report on its (un)availability, even when using available data hosted by a different institution (e.g., studies using the Pitt Corpus). The majority (77%, 39 studies) fail to report on data availability. From the remaining 12 studies, nine use data from DementiaBank (Pitt Corpus or Mandarin_Lu) and do report data origin and availability. However, only [75, 90] share the exact specification of the subset of Pitt Corpus used for their analysis, in order for other researchers to be able to replicate their findings, taking advantage of the availability of the corpus. The same applies to Luz et al. [10], who made available their identifiers for the CCC dataset. One other study, Fraser et al. [7], mentions that data are available upon request to authors.

Haider et al. [11], one of the studies working on the Pitt Corpus, has released their subset as part of a challenge for INTERSPEECH 2020, providing the research community with a dataset matched for age and gender and with enhanced audio. In such an emerging and heterogeneous field, shared tasks and data availability are important progression avenues.

Language (Supplementary Table 5: Data Details)

As expected, a number of studies (41%) were conducted through English. However, there is a fair amount of papers using data in a variety of languages, including: Italian [91], Portuguese [57, 90], Chinese and Taiwanese [82], French [50, 69, 77, 96, 102], Hungarian [62, 63, 99], Spanish [83, 89, 100], Swedish [7, 59, 87], Japanese [64, 71, 94], Turkish [84], Persian [65], Greek [61, 88], German [68, 74], or reported as multilingual [60, 79, 80].

This is essential if screening methodologies for AD are to be implemented worldwide [102]. The main caveat, however, is not the number of studies conducted in a particular language, but the fact that most of the studies conducted in languages other than English do not report on data availability. As mentioned, only Dos Santos et al. [90] and Fraser et al. [7] report their data being accessible upon request, and Chien et al. [82] works with data available from DementiaBank. For speech-based methodology aimed at AD detection, it would be a helpful practice to make these data available, so that other groups are able to increase the amount of research done in any given language.

Pre-processing (Supplementary Table 6: Methodology)

Pre-processing includes the steps for data preparation prior to data analysis. It is essential to determine in which shape any given data is introduced in the analysis pipeline, and therefore, the outcome of it. However, surprisingly little detail is reported in the reviewed studies.

Regarding text data, the main pre-processing procedure is transcription. Transcription may happen manually or through ASR. The Kaldi speech recognition toolkit [103], for instance, was used in several recent papers (e.g., [12, 62]). Where not specified, manual transcription is assumed. Although many ASR approaches do extract information on word content (e.g., [8, 44, 71, 85, 86, 96]), some focus on temporal features, which are content-independent (e.g., [63, 82]). Some studies report their transcription unit, that is, word-level transcription (e.g., [9]), phone-level transcription (e.g., [63]) or utterance-level transcription (e.g., [91]). Further text pre-processing involves tokenization [73, 82, 90, 94], lemmatization [87], and removal of stopwords and punctuation [87, 90]. Depending on the research question, dysfluencies are also removed (e.g., [87, 90]), or annotated as relevant for subsequent analysis (e.g., [59]).

Currently, commercial ASRs are optimized to minimize errors at word level, and therefore not ideal for generating non-verbal acoustic features. Besides, it seems that AD patients are more likely to generate ungrammatical sentences, incorrect inflections and other subtleties that are not well handled by such ASR systems. In spite of this, only a few papers, by the same research group, rely on ASR and report WER (word error rate), DER (diarisation eror rate), or WDER (word diarisation error rate) [8, 12, 85]. It is becoming increasingly obvious that off-the-shelf ASR tools are not readily prepared for dementia research, and therefore some reviewed studies developed their own custom ASR systems [44, 63].

Regarding acoustic data, pre-processing is rarely reported outside the audio files being put through an ASR. When reported, it mainly involves speech-silence segmentation with voice activity deteciton algorithms (VAD), including segment length and the acoustic criterion chosen for segmentation thresholds (i.e., intensity) [6, 11, 44, 50, 60, 61, 64, 65, 68, 76, 79, 80, 96, 102]. It should also include any audio enhancement procedures, such as volume normalization or removal of background noise, only reported in Haider et al. [11] and Sadeghian et al. [44].

We concluded from the reviewed papers that it is not common practice for authors in this field to give a complete account of the data pre-processing procedures they followed. As these procedures are crucial to reliability and replicability of results, we recommend that further research specify these procedures more thoroughly.

Feature generation (Supplementary Table 6: Methodology)

Generated speech features are divided into two main groups, text-based and acoustic features, and follow the taxonomy presented in Table 1. Some studies work with multimodal feature sets, including images [94] and gait [71] measurements.

Text-based features comprise a range of NLP elements, commonly a subset consisting of lexical and syntactical indices such as type-token ratio (TTR), idea density or Yngve and Frazier indices. TTR is a measure of lexical complexity, calculated by taking the total number of unique words, also called lexical items (i.e., types) and dividing by the total number of words (i.e., tokens) in a given language instance [105]. Idea density is the number of ideas expressed in a given language instance, with ’ideas’ understood as new information and adequate use of complex propositions. High early idea density seems to be a lower risk predictor for developing AD later in life, whereas lower idea density appears associated with brain atrophy [106]. Yngve [22] and Frazier [23] scores indicate syntactical complexity by calculating the depth of the parse tree that results from the grammatical analysis of a given language instance. Both indices have been associated with working memory [107] and showed a declining pattern in the longitudinal analysis of the written work by Iris Murdoch, a novelist who was diagnosed with AD [108].

In some studies, the research question targets a specific aspect of language, such as syntactical complexity [59], or a particular way of representing it, such as speech graph attributes [57]. Fraser et al. [9] present a more comprehensive feature set, including some acoustic features. Similar to Fraser et al. [9], although less comprehensive, a few other studies combine text-based and acoustic features [8, 44, 71, 78, 86, 89, 91, 92, 94, 96]. However, most published research is specific to one type of data or another.

The most commonly studied acoustic features are prosodic temporal features, which are almost invariably reported, followed by ASR-related features, specifically pause patterns. There is also focus on spectral features (features of the frequency domain representation of the speech signal obtained through application of the Fourier transform), which include MFCCs [62]. The most comprehensive studies include spectral, ASR-related, prosodic temporal, voice quality features [8, 50, 60, 79, 84, 92, 100], as well as features derived from the Higuchi Fractal Dimension [80] or from higher order spectral analysis [65]. It is worth noting here that Tanaka et al. [94] extract F0’s coefficient of variation per utterance. The decision to not extract F0’s mean and SD was due to their association with individual differences and sex. Similarly, Gonzalez-Moreira et al. [89] report F0 and functionals in semitones, because research argues that using semitones to express F0 reduces gender differences [109], which is corroborated by the choice of semitones in the standardized eGeMAPS [11].

Studies using spoken dialogue recordings extract turn-taking patterns, vocalization instances, and speech rate [10, 94]. Those focusing on transcribed dialogues also extract turn-taking patterns, as well as dysfluencies [8, 12, 86]. Guinn et al. [98] work with longitudinal dialogue data but do not extract specific dialogue or longitudinal features.

With regards to feature selection, 30% of the studies do not report feature selection procedures. Among those that do, the majority (another 30%) report using a filter approach based on a statistical index of feature differences between classes, such as p-values, Cohen’s d, AUC, or Pearson’s correlation. Others rely on wrapper methods [50], RFE [8, 86], filter methods based on information gain [65, 95], PCA [64], best first greedy algorithm [44], and cross-validation, seeking through the iterations for which feature type contributes more to the classification model [80].

Despite certain similarities and a few features being common to most acoustic works (i.e., prosodic temporal), there is striking heterogeneity among studies. Since they usually obtain features using ad hoc procedures, these studies are seldom comparable, making it difficult to ascertain the state-of-the-art in terms of performance, as pointed out before, and assess further research avenues. However, this state of affairs may be starting to change as the field matures. Haider et al. [11], for instance, chose to employ standardized feature sets (i.e., ComPare, eGeMAPS, emobase) obtained through formalized procedures [110] which are extensively documented and can be easily replicated. Furthermore, one of these feature sets, eGeMAPS, was developed specifically to target affective speech and underlying physiological processes. Utilizing theoretically informed, standardized feature sets increases the reliability of a study, since the same features have been previously applied (and can continue to be applied) to other engineering tasks, always extracted in the exact same way. Likewise, we argue that creating and utilizing standardized feature sets will improve this field by allowing cross-study comparisons. Additionally, we recommend that the approach to feature generation should be more consistently reported to enhance study replicability and generalizability.

Machine learning task/method (Supplementary Table 6: Methodology)

Most reviewed papers employ supervised learning, except for a study that uses cluster analysis to investigate distinctive discourse patterns among participants [69].

As regards choice of machine learning methods, very few papers report the use of artificial neural networks [91, 95], recurrent neural networks [82], multi-layer perceptron [44, 67, 79, 80, 86], or convolutional neural networks [60, 85]. This is probably due to the fact that most datasets are relatively small, and these methods require large amounts of data. Rather, most studies use several conventional machine learning classifiers, most commonly SVM, NB, RF, and k-NN and then compare their performance. Although these comparisons must be assessed cautiously, a clear pattern seems to emerge with SVM consistently outperforming other classifiers.

Cognitive scores, particularly MMSE, are available with many datasets, including the most commonly used, Pitt Corpus. However, these scores mostly remain unused except for diagnostic group assignments, or more rarely, as baseline performance [44, 70, 71], in studies that conclude that MMSE is not more informative than speech based features. All supervised learning approaches work toward classification and no regression over cognitive scores is attempted. We regard this as a gap that could be explored in future research.

It is worth noting, however, that some attempts at prediction of MMSE score have been presented in workshops and computer science conferences that are not indexed in the larger biobliography databases. These approaches achieved some degree of success. Linz et al. [111], for instance, trained a regression model that used the SVF to predict MMSE scores and obtained a mean absolute error of 2.2. A few other works used the Pitt Corpus for similar purposes, such as Al-Hameed et al. [112], who extracted 811 acoustic features to build a regression model able to predict MMSE scores with an average mean absolute error of 3.1; or Pou-Prom and Rudzicz [113], who used a multiview embedding to capture different levels of cognitive impairment and achieved a mean absolute error of 3.42 in the regression task. Another publication with the Pitt Corpus is authored by Yancheva et al. [114], who extracted a more comprehensive feature set, including lexicosyntactic, acoustic, and semantic measures, and used them to predict MMSE scores. They trained a dynamic Bayes network that modeled the longitudinal progression observed on these features and MMSE over time, reporting a mean absolute error of 3.83. This is, actually, one of the very few works attempting a progression analysis over longitudinal data.

Evaluation techniques (Supplementary Table 6: Methodology)

A substantial proportion of studies (43%) do not present a baseline against which study results can be compared. Among the remaining papers, a few set specific results from a comparable work as their baseline [6, 65] or from their own previous work [75]. Others calculate their baseline by training a classifier with all the generated features, that is, before attempting to reduce the feature set with either selection or extraction methods [83, 95, 99], with cognitive scores only [7, 44, 70, 71] or by training a classifier with demographic scores only [63]. Some baseline classifiers are also trained with a set of speech-based features that excludes the feature targeted by the research question. Some examples are studies investigating the potential of topic model features [87], emotional features [79], fractal dimension features [80], higher order spectral features [65], or feature extracted automatically, as opposed to manually [73, 85, 96]. Some studies choose random guess or naive estimations (ZeroR) [10, 11, 66, 74, 88] as their baseline performance.

While several performance metrics are often reported, accuracy is the most common one. While it seems straightforward to understand a classifier’s performance by knowing its accuracy, it is not always appropriately informed. Since accuracy is not robust against dataset imbalances, it is only appropriate when diagnostic groups are balanced, such as when reported in Roark et al. [78] and Khodabakhsh and Demiroğlu [84]. This is especially problematic for works on imbalanced datasets where accuracy is the only metric reported [9, 12, 44, 60, 66, 77, 83, 92, 93, 100]. Clinically relevant metrics such as AUC and EER (e.g., [61, 102]), which summarize the rates of false alarms and false negatives, are reported in less than half of the reviewed studies.

Cross-validation (CV) is probably the most established practice for classifier evaluation. It is reported in all papers but five, of which two are not very recent [66, 93], another two do not report CV but report using a hold-out set [82, 91], and only one reports using neither CV nor a hold-out set procedure [95]. There is a fair amount of variation within the CV procedures reported, since datasets are limited and heterogeneous. For example, leave-one-out CV is used in one third of the reviewed papers, as an attempt to mitigate the potential bias caused by using a small dataset. Several other studies choose leave-pair-out CV instead [7, 70, 73, 75, 78, 97], since it produces unbiased estimates for AUC and also reduces potential size bias. There is also another research group who attempted to reduce the effects of their imbalance dataset by using stratified CV [68, 74]. Lastly, no studies report hold-out set procedures, except for the two mentioned above, with training/test sets divided at 80/20% and 85/15%, respectively, and another study where the partition percentages are not detailed [97].

There is a potential reporting problem in that many studies do not clearly indicate whether their models’ hyper-parameters were optimized on the test set within or outside each fold of the CV. However, CV is generally considered the best method of evaluation when working with small datasets, where held-out set procedures would be even less reliable, since they would involve testing the system on only a few samples. CV is therefore an appropriate choice for the articles reviewed. The lack of systematic model validation on entirely separate datasets, and the poor practice of using accuracy as the single metric in imbalanced datasets, could compromise the generalizability of results in this field. While it is worth noting that the former issue is due to data scarcity, and therefore more difficult to address, a more appropriate selection of performance metrics could be implemented straight away to enhance the robustness of current findings.

Results overview (Supplementary Table 6: Methodology)

Performance varies depending on the metric chosen, the type of data and the classification algorithm used. Hence, it is very difficult to summarize these results. The evaluated classifiers range between 50% or even lower in some cases, up to over 90% accuracy. However, as we have pointed out, performance figures must be interpreted with caution due to the potential biases introduced by dataset size, dataset imbalances and non standardized ad hoc feature generation. Since these biases cannot be fully accounted for and models are hardly comparable to one another, we do not think it is meaningful to further highlight the best performing models. Such comparisons will become more meaningful when all conditions for evaluation can be aligned, such as in the ADReSS challenge [115], which provides a benchmark dataset (balanced and enhanced) and commits to a reliable study comparison.

Further research on the methodology and how different algorithms behave with certain types of data will shed light on why some classifiers perform even worse than random while others are close to perfect. This could simply be because the high performing algorithms were coincidentally tested on ’easy’ data (e.g., better quality, simpler structures, very clear diagnoses), but the problem could also be classifier specific and therefore differences would be associated with the choice of algorithm. Understanding this would influence the future viability of this sort of technology.

Research implications (Supplementary Table 7: Clinical applicability)

This section reviews the papers in terms of novelty, replicability, and generalizability, three aspects key to future research.

As regards novelty, the newest aspect of each research paper is succinctly presented in the tables. This is often conveyed by the title of an article, although caution must be exercised with regards to how this information is presented. For example, Tröger et al.’s title (2018) reads “Telephone-based Dementia Screening I: Automated Semantic Verbal Fluency Assessment”, but only when you read the full text does it become clear that such telephone screening has been simulated.

There is often novelty in pilot studies, especially those presenting preliminary results for a new project, hence involving brand new data [80, 91] or tests for a newly developed system or device [60]. Outside of those, assessing novelty in a systematic review over a 20-year span can be complicated what was novel 10 years ago might not be novel since today. For example, 3-way classification entailed novelty in Bertola et al. (2014) [57], as well as 4-way classification did in Thomas et al. (2005) [66] with text data and little success, and later in Nasrolahzadeh et al. (2018) [65] with acoustic data and an improved performance. Given its low frequency and its naturalness, we have chosen to present the use of dialogue data [10, 68, 84, 85, 94, 98] as a novelty relevant for future research. Other examples of novelty consist of automated neuropsychological scoring, either by automating traditional scoring [57] or by generating a new type of score [67, 70].

Methodological novelty is also present. Even though most studies apply standard machine learning classifiers to distinguish between experimental groups, two approaches do stand out: Duong et al.’s (2005) unique use of cluster analysis (a form of unsupervised learning) with some success, and the use of ensemble [67, 90] and cascaded [7] classifiers, with much better results. Some studies present relevant novelty for pre-processing, generating their own custom ASR systems [45, 61, 63, 99], which offers relevant insight about off-the-shelf ASR. While this is based on word accuracy, some of the customized ASR systems are phone-based [63, 99] and seem to work better with speech generated by participants with AD. Another pre-processing novelty is the use of dynamic threshold for pause behavior [76], which could be essential for personalized screening. With regards to feature generation, “active data representation” is a novel method utilized in conjunction with standardized feature sets by Haider et al. [11], who confirmed the feasibility of a useful tool that is open software and readily available (i.e., ComParE, emobase and eGeMAPS). A particularity of certain papers is their focus on emotional response, analyzed from the speech signal [79, 80]. This could be an avenue for future research, since there are other works presenting interesting findings on emotional prosody and AD [116, 117]. Last, but not least, despite the mentioned importance of early detection, most papers do not target early diagnosis, or do it in conjunction with severe AD only (i.e., if the dataset contains participants at different stages). Consequently, Lundholm Fors et al. (2018) [59] introduced a crucial novelty by not only assessing, but actively recruiting and focusing on participants at the pre-clinical stage of the disease (SCI).

Another essential novelty is related to longitudinal aspects of data [68, 77, 97]. The vast majority of studies work on monologue cross-sectional data, although some datasets do include longitudinal information (i.e., each participant has produced several speech samples). This is sometimes discarded, either by treating each sample as a different participant, which generates subject dependence across samples [74]; or by cross-observation averaging, which misses longitudinal information but does not generate this dependence [75, 97]. Other studies successfully used this information to predict change of cognitive status within-subject [68, 118]. Guinn et al. [98] work with longitudinal dialogue data that becomes cross-sectional after pre-processing (i.e., they conglomerate samples by the same participant) and they do not extract specific dialogue features.

The novelty with most clinical potential is, in our view, the inclusion of different types of data, since something as complex as AD is likely to require a comprehensive model for successful screening. However, only a few studies combine different sources of data, such as MRI data [67], eye-tracking [7], and gait [71]. Similarly, papers where human-robot interaction [8, 12, 85, 94] or telephone-based systems [96, 97] are implemented also offer novel insight and avenues for future research. These approaches offer a picture of what automatic, cost-effective screening could look like in a perhaps not so distant future.

On a different front, replicability is assessed based on whether the authors report complete and accurate procedures of their research. Replicability has research implications because, before translating any method into clinical practice, its performance needs to be confirmed by other researchers being able to reproduce similar results. In this review, replicabilility is labelled as low, partial, and full. When we labelled an article as full with regards to replicability, we meant that their methods section was considered to be thorough enough to be reproduced by an independent researcher, from the specification of participants demographics and group size to the description of pre-processing, feature generation, classification, and evaluation procedures. Only three articles were labeled as low replicability [66, 74, 85], as they lacked detail in at least two of those sections (frequently data information and feature generation procedures). Twenty-two and twenty-five studies were labelled as partial and full, respectively. The elements most commonly missing in the partial papers are pre-processing (e.g., [83]) and feature generation procedures (e.g., [78]), which are essential steps in shaping the input to the machine learning classifiers. It must be highlighted that all low replicability papers are conference proceedings, where text space is particularly restricted. Hence, it does not stand out as one of the key problems of the field, even though it is clear that the description of pre-processing and feature generation must be improved.

The last research implication is generalizability, which is the degree to which a research approach may be attempted with different data, different settings, or real practice. Since generalizability is essentially about how translatable research is, most aspects in this last table are actually related to it:

-

•

Whether external validation has been attempted is directly linked to generalizability;

-

•

feature balance: results obtained in imbalanced datasets are less reliable and therefore less generalizable to other datasets;

-

•

contextualization of results: for something to be generalizable is essential to know where it comes from and how does it compare to similar research;

-

•

spontaneous speech: speech spontaneity is one aspect of naturalness, and the more natural the speech data, the more representative of “real” speech and the more generalizable;

-

•

conversational speech: we propose that conversational speech is more representative of “real” speech;

-

•

content-independence: if the classifier input includes features that are tied up with task content (e.g., lexical, syntactic, semantic, pragmatics), some degree of generalizability is lost;

-

•