Highlights

- Cognitive bias is due to systematic thinking errors caused by human processing limitations or inappropriate mental models.

- Cognitive bias in medicine results in diagnostic errors and a delay in the acceptance of new scientific findings.

- A delay in the acceptance of AMR and the role of HLA antibodies in transplantation resulted in a delay in the development of better treatments.

- Strategies emphasizing analytic thinking can speed scientific progress and should be implemented to avoid bias.

Key Words: analytic thinking, antibody-mediated rejection, cardiac transplantation, cognitive bias, debiasing strategies, human leucocyte antigen antibodies, intuitive thinking, publication bias

Abbreviations and Acronyms: AMR, antibody-mediated rejection; COVID-19, coronavirus disease-2019; HLA, human leucocyte antigen

Summary

Cognitive bias consists of systematic errors in thinking due to human processing limitations or inappropriate mental models. Cognitive bias occurs when intuitive thinking is used to reach conclusions about information rather than analytic (mindful) thinking. Scientific progress is delayed when bias influences the dissemination of new scientific knowledge, as it has with the role of human leucocyte antigen antibodies and antibody-mediated rejection in cardiac transplantation. Mitigating strategies can be successful but involve concerted action by investigators, peer reviewers, and editors to consider how we think as well as what we think.

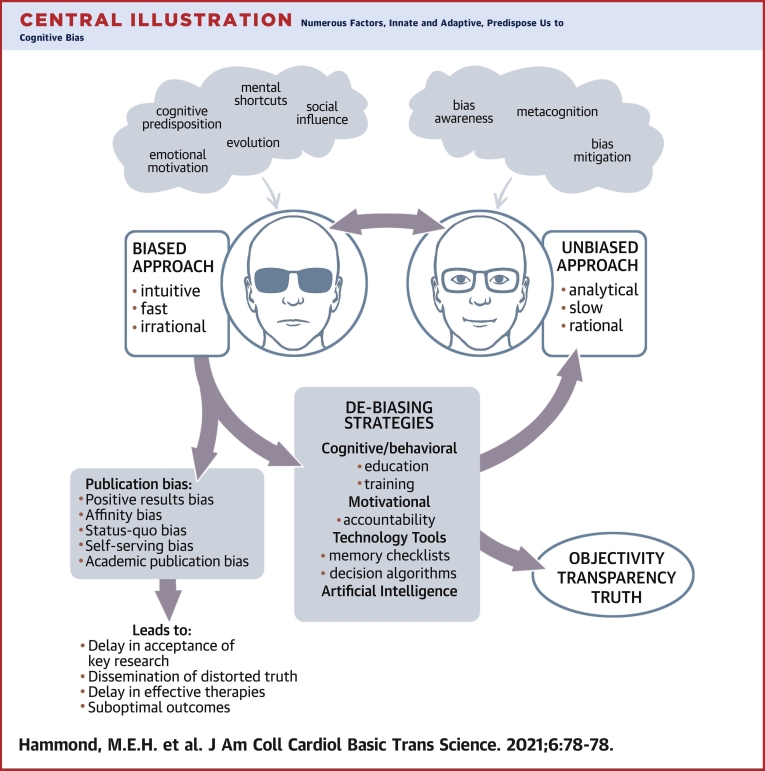

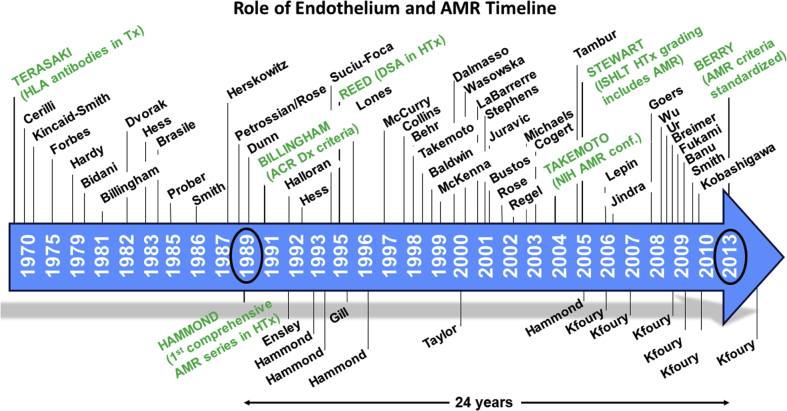

Central Illustration

Clinical decisions and scientific decisions about choosing which articles to publish in scientific journals are both subject to biases in thought processes. In this article, we review the types of bias that can lead to flawed clinical decisions, as well as the delays in publishing scientific articles that do not fit into currently accepted scientific norms.

Cognitive bias consists of systematic errors in thinking due to human processing limitations or inappropriate mental models (1). Cognitive bias occurs when intuitive thinking is used to reach conclusions about information. Intuitive thinking, or “fast thinking” in modern parlance, is the preferred route of decision making because it is practical and efficient. It is hardwired, subconscious, or gained by repeated experience. It is largely autonomous. As part of this intuitive thinking process, humans use heuristics, mental shortcuts learned or inculcated by evolutionary processes, to make decisions using a few relevant predictors. The counterpoint to intuitive thinking is analytic (mindful) thinking. In analytic thinking, logic and self-examination of attitudes about data inputs are also included. Analytic thinking is conscious, deliberate, and generally reliable (2). Because it is a slower mental process, it is infrequently used in daily decision making.

Because we all mostly operate in the mode of intuitive thinking, we all are subject to various cognitive biases in variable degrees. These biases are important because they affect human interactions with any presented information, including our processing of scientific information in publications and our evaluation of responses to the current pandemic. There are at least 100 described types of cognitive bias; the common ones of relevance to this topic are shown in Table 1.

Table 1.

| Type of Bias | Description |
|---|---|
| Anchoring bias | Implicit reference point of first data |
| Attribution bias | Attempts to discover reason for observations |
| Search-satisficing bias | Tendency to believe that our current knowledge is sufficient and complete |
| Confirmation bias | Favor of information confirming previous belief |
| Framing bias | Favor based on presentation of information in negative or positive context |
| Status quo bias | Favor of options supporting current scientific dogma |
| False consensus bias | Tendency to overestimate how much others agree with us |
| Blind spot bias | Tendency to believe one is less biased than others |
| Not-invented-here bias | Bias against external knowledge |

The coronavirus disease-2019 (COVID-19) pandemic has become an international obsession by exposing us to disease, death, economic consequences, and arguments about best options for disease control. Our biases strongly influence how we perceive this threat. The framing of information (framing bias) strongly influences our acceptance of information. When COVID-19 is framed as being a danger only to older adults, such a frame may appeal to younger adults who see their risk of infection and possibly death as being much less. When living among others who feel that the danger of COVID-19 is much less than is reported by scientists or the press, we can be affected by false consensus bias, presuming that everyone in our state or community agrees with our assessment of low risk. Such attitudes have encouraged large gatherings of people on beaches in Florida or during Mardi Gras in New Orleans in defiance of recommended social distancing practices. We promote our confirmation biases when we choose to restrict our sources of information to only those sources that agree with our social and political opinions. As Thomas Davenport recently wrote, “Emotion-driven beliefs and intuition are powerful at guiding people toward less-than-optimal decisions. By understanding our biases, we have a better chance of quieting them and moving toward better choices” (3) (Central Illustration).

Central Illustration.

Numerous Factors, Innate and Adaptive, Predispose Us to Cognitive Bias

A biased approach to decision making, although practical, may result in errors. Publication bias in medicine delays the acceptance of novel key ideas, distorts truth, and may negatively impact outcomes by hindering the development and testing of candidate therapies. Debiasing strategies, although underused, can effectively enhance self-awareness of one’s thought processes away from bias and closer to objectivity and truth.

Although we are constantly exposed to the influence of bias in our daily lives, as physicians and scientists, we are unlikely to consider its influence in medical decision making or research. Bias has been extensively studied in the social sciences but has often been ignored in medicine (2). There has been no systematic training of medical professionals in how bias affects decision making in either medical schools or research training programs (other than financial conflict of interest bias). However, there are recent efforts directed at increasing bias awareness in medical training programs, particularly in emergency medicine (4). Training about bias and debiasing strategies could condition medical professionals to consciously consider how they make decisions using scientific information so that analytic thinking can become routine. Both medical school and postgraduate training emphasize team discussions as part of case presentations. Part of those discussions could include questions to address why team members prefer specific diagnoses or treatments and how they might develop a more systematic and analytic approach to the problem. We know that diagnostic failure rates can be as high as 10% to 15%; however, cognitive bias is rarely considered as a significant factor in these failures. In a systematic review of the contribution of cognitive bias to medical decision making, Saposnik et al. (5) found that cognitive bias contributed to diagnostic errors in 36% to 77% of specific case scenarios described in 20 publications involving 6,810 physicians. Five of 7 studies showed an association between cognitive errors and therapeutic or management errors (5). By necessity, clinical thinking often relies on intuitive thinking and heuristic shortcuts that leave little room to stop and consider how we are approaching a clinical problem, as well as what the clinical problem is.
The clinical gamble of trusting intuitive thinking usually carries good odds but may also fail some patients. Although clinicians rarely have all the information necessary to make a truly rational decision, exclusive use of intuitive thinking invites automatic reactions that may be primed by bias. Ironically, the most valuable technological tools to overcome common biases in clinical medicine provide heuristic shortcuts as additions to diagnostic algorithms: first, search for the diagnosis using weighted predictors; then stop searching when a predictor specifies a diagnosis; and then use specific criteria for the various diagnoses under consideration (6).
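The search-then-stop shortcut described above can be sketched in a few lines of code. The following is a minimal illustration only: the cue names, their ordering, and the decision labels are hypothetical placeholders rather than validated clinical criteria, and the structure is one simple reading of a heuristic decision tree of the kind discussed in reference 6.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Cue:
    name: str
    # Returns a decision label if this cue is decisive, otherwise None.
    decide: Callable[[dict], Optional[str]]


def fast_and_frugal(patient: dict, cues: list, default: str) -> tuple:
    """Check cues in order of assumed weight and stop at the first decisive one."""
    for cue in cues:
        decision = cue.decide(patient)
        if decision is not None:
            return decision, cue.name  # stop searching: this cue decided
    return default, "default"  # no cue was decisive


# Hypothetical cues, ordered from most to least heavily weighted.
CUES = [
    Cue("st_elevation", lambda p: "high risk" if p.get("st_elevation") else None),
    Cue("chest_pain_chief_complaint", lambda p: "low risk" if not p.get("chest_pain") else None),
    Cue("other_risk_factor", lambda p: "high risk" if p.get("other_risk_factor") else None),
]

if __name__ == "__main__":
    print(fast_and_frugal({"chest_pain": True, "other_risk_factor": True}, CUES, "low risk"))
```

Returning the name of the deciding cue alongside the decision makes the shortcut auditable: a reviewer can see which single predictor ended the search, which is where diagnosis-specific criteria would then be applied.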

There are many examples of the delay in scientific progress due to cognitive bias. We are most familiar with cognitive bias affecting cardiac transplantation. An early example concerns the role of serum HLA antibodies and their relationship to transplant outcomes. HLA antibodies were first described to affect transplant outcomes in the 1970s by Terasaki et al. (7), who showed that kidney transplant recipients with preformed HLA antibodies had a significantly lower graft survival compared with recipients without such preformed antibodies (i.e., 40% vs. 60% at 12 months after transplant, respectively). The risk of graft failure for a second renal transplant in recipients who had HLA antibodies was even more pronounced, even after excluding hyperacute rejection due to HLA mismatching (7). Despite these and other reports starting in the 1970s, routine inclusion of HLA antibody testing as part of post-transplant monitoring was not a consensus recommendation for kidney and heart transplant recipients until the mid-2000s (8,9). Furthermore, responses to the finding of HLA antibodies in the serum continued to vary, and a consensus recommendation for routine treatment was not agreed on until 2013 (10). This delay in the acceptance of the role of HLA antibodies in transplant rejection was potentiated by confirmation bias, which also led to a delay in a wider study and understanding of other potentially damaging antibodies including those against major histocompatibility complex class I–related chain A, vimentin, angiotensin II type 1 receptor, tubulin, myosin, and collagen (11). These alternative antibodies bind to endothelial cells rather than to lymphocytes. Routine screening methods and protocols are still not readily available (10).

Another serious delay in transplantation biology concerned careful consideration of the role of the vascular endothelium in the health of a transplanted organ. Beginning in the 1960s, studies of the vascular endothelium during inflammation demonstrated that endothelial cell activation was crucial in the inflammatory responses to diverse injuries including autoimmune processes. Endothelial cells were not just passive lining cells but rather active participants in immune processes. Vascular biologists showed persuasive evidence that HLA and non-HLA antibody binding to the vascular endothelium resulted in endothelial activation, complement activation, and binding and augmentation of downstream inflammatory responses (12, 13, 14).

Studies beginning in 1994 have shown that this innate immune system, anciently developed to respond to pathogens, is also important in activating the allograft immune response (13,15). The role of antibodies in alloimmune reactions was first demonstrated in experimental animals and in human kidney transplant rejection in the 1970s (16,17). The first series of patients demonstrating the adverse role of endothelial injury and antibody binding in heart transplant patients was published in 1989 (18). Studies from several institutions published in the 1990s to 2000 highlighted the impact of antibody-mediated rejection (AMR) on outcomes after heart transplant, establishing that AMR increases the risk of cardiovascular mortality (19, 20, 21, 22, 23) (Figure 1).

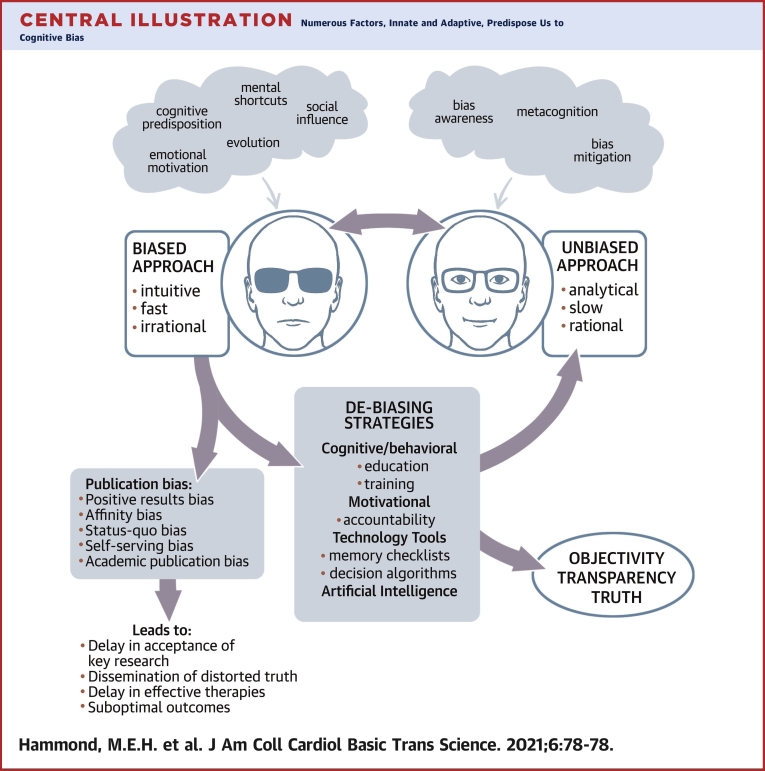

Figure 1.

Timeline of Significant Publications Documenting the Relationship of Endothelial Activation, Inflammation, and AMR

Authors' last names are indicated. Twenty-four years elapsed from the first publication of AMR in heart transplantation to the emergence of standardized criteria for diagnosis. AMR = antibody-mediated rejection; DSA = donor-specific antibodies; HTx = heart transplantation; ISHLT = International Society for Heart and Lung Transplantation.

Reports documented that asymptomatic AMR was often present early in the post-transplant period (first 3 months), and AMR episodes in the first post-transplant year would often recur. Patients with multiple episodes of AMR (>3) were highly likely to die of cardiovascular-related causes. (The incremental risk of death for patients was 8% per episode.) Although this evidence was published and widely available beginning in the 1990s, confirmation bias and affinity bias delayed its acceptance. Framing of the information in a skeptical light by experts emphasized controversy rather than the scientific facts in meetings to address this topic. As a result, 24 years elapsed between the first description of AMR in heart transplantation and the inclusion of AMR diagnostic criteria in consensus guideline documents (24). Publication bias and affinity bias delayed the development and adoption of AMR guidelines. In the meantime, the design of clinical trials and the development of innovative therapies to address this serious form of rejection were also delayed and, unfortunately, such therapies are still not available.

As the previously described examples illustrate, cognitive biases promote a delay in the acceptance of important scientific ideas principally through a delay in publication and dissemination of those ideas.

Publication bias refers to the predilection of editors or reviewers to select publications based on their personal cognitive biases (25). The major types of publication bias are shown in Table 2. A frequent type of publication bias is the tendency to publish results that are positive. There are numerous investigations of this “positive outcome” bias. A recent report examining 4,656 published studies indicated that the prevalence of studies showing positive statistical association with the stated hypothesis increased by 22% from 1990 to 2007 (26). Editors of scientific journals are under pressure to publish papers with significant relevance to their readers to justify continued publication. Journals are continuously measured by their impact factor, a scientometric measure of the yearly average of article citations from that journal used as a proxy for journal importance among its peers. Many editors perceive that the publication of studies with positive outcomes will raise their journal’s impact factor. This bias can have unintended serious consequences for the scientific community. Impact factor ratings have been recently shown to correlate with the effect size of the results reported in the publication (R² = 0.13; p < 0.001) (26). If, because of publication bias, the positive effect of a treatment is inflated, there is potential for patient harm because the benefit of the treatment is exaggerated and its adoption may be hastened. Such inflated treatment effects lower the certainty of evidence (27). Another significant enhancer of impact factor ratings is the preferred publication of guideline documents and meta-analyses. Although these types of publications are very important for editors to select because of their value to the scientific audience, the inclusion of these categories of articles in calculation of the impact factor may limit editors in the allocation of space to original scientific publications.
These examples illustrate how difficult it is for journal editors to withstand these pressures through more careful consideration of potential biases. Another type of publishing bias is status quo bias, which refers to publishing articles that support current dogmas. This has 2 negative consequences. The first is that articles that conform to current dogmas are often not held to the same rigorous scientific review standards because the findings are assumed to be true. The second is that studies that do not conform to current dogmas are often rejected because it is assumed that they cannot be true.

Table 2.

Common Publication Bias Types (25)

| Type of Publication Bias | Definition |
|---|---|
| Affinity bias | Preference for studies from highly ranked institutions or investigators |
| Positive outcome bias | Preference for studies with positive results |
| Status quo bias | Favor of options supporting current dogma |
| Self-serving bias | Favor of opinions matching those of reviewer or colleagues |
| Academic publication bias | Favor of studies benefiting personal institution, peers, or promoting promotion in rank |

Substantial improvements in the adoption of scientific advancements may be possible if bias could be systematically confronted, ideally starting during scientific training. Biased thinking is hardwired, automatic, and efficient in daily decision making. It is impossible to remove our reliance on these thought processes; however, we could choose to deliberately use intuitive thinking more appropriately, more frequently substituting it with analytic thinking, or using other mitigating strategies when we become aware that our intuitive thinking about a scientific or clinical problem is flawed by bias. Recent publications have proposed ways to enhance awareness and lessen the impact of cognitive and publication biases and have described successful results. Ludolph and Schulz (28) conducted a systematic review of debiasing strategies in health care, reporting on 87 relevant studies of debiasing strategies, of which most were at least partially successful. Strategies involving technological interventions appeared most promising, with a success rate of 88% (28). A summary of published debiasing strategies, mostly involving computerized logic, is provided in Table 3.

Table 3.

| Strategy Type | Strategy | Tactic | Tactic Type | Example |
|---|---|---|---|---|
| Collective personal | Add medical school training | Team discussions | Education | Case presentations inquiring about bias |
| Personal | Develop personal insight/awareness | Consider opposite of first impression of data | Cognitive | What if hypothesis was false? |
| Personal | Specific training | In services with staff, peer reviewers, editors | Cognitive | Brainstorm methods to mitigate bias among editors and peer reviewers |
| Personal | Reduce reliance on memory | Require review of literature and use of checklists that confront bias | Technological | Digital scientific review and evaluation of skewness |
| Personal | Feedback | Editor communication with peer reviewers about decisions | Technological | Digital metrics |
| Editorial | Monitor performance | Editors review metrics | Technological | Digital report of % positive outcome over time |
| Editorial | Confront affinity bias | Double-blind versus single-blind review | Technological | Increase publication from lesser known institutions |
| Collective editorial | Confront impact factor influence | Editorial policy consensus to modify impact factor | Political | Report with/without guidelines and meta-analyses included |
| Editorial | Make task easier | Provision of checklists and templates for editors/reviewers | Technological | Digital application |
| Editorial | Monitoring of performance | Metrics review | Technological | Artificial Intelligence |
| Editorial | Improve scientific reliability | Editorial review of potentially important ideas | Cognitive | Publish validation studies |
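One of the editorial tactics in Table 3, a digital report of the percentage of positive outcomes over time, is straightforward to prototype. The sketch below assumes a hypothetical record format of `(year, is_positive)` pairs for published papers; how a journal would actually label a result as "positive" is not specified in the source and would need an agreed definition.

```python
from collections import defaultdict


def positive_outcome_share(records):
    """Return {year: percent of published papers reporting a positive result}.

    `records` is an iterable of (year, is_positive) pairs. This record
    format is an assumption made for illustration only.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for year, is_positive in records:
        totals[year] += 1
        if is_positive:
            positives[year] += 1
    # Years are reported in ascending order so that trends are easy to read.
    return {year: 100.0 * positives[year] / totals[year] for year in sorted(totals)}


if __name__ == "__main__":
    sample = [(2019, True), (2019, False), (2020, True), (2020, True), (2020, True), (2020, False)]
    for year, share in positive_outcome_share(sample).items():
        print(year, f"{share:.1f}%")
```

A rising share over several years would not prove bias by itself, but it could prompt editors to review how negative and validation studies are being handled.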

Technological tools hold great promise in providing analytic thinking prompts in the evaluation of scientific data. Such tools often use computerized algorithms based on probabilities or artificial intelligence to guide the desired analytic thinking. Tools that are readily available during review reduce reviewers' reliance on memory as they consider how to think about their review decision as well as what decision to make. The checklist provided in Table 4 prompts reviewers to consider their biases as they consider their decision (29, 30, 31, 32). The routine use of statistical tests for article bias by journal editors and the development of editorial policy changes by a consensus of scientific journal editors are other highly recommended initiatives. Routine inclusion of statistical reviewers as part of every peer review process would help to mitigate bias because such reviewers could reject studies with weak statistical arguments or flawed conclusions, obviating the need for further peer review of those studies (33). Editors could use technology to combat affinity bias by instituting double-blind review of scientific papers, in which peer reviewers are unknown to authors and also do not know the names or institutions of the research submitters. In a recent report, Tomkins et al. (34) assessed double-blind versus single-blind review of computer science research. Single-blind reviewers were significantly more likely than their double-blind counterparts to recommend the acceptance of papers from top universities (1.58×) or famous authors (1.63×).
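The text does not name a specific statistical test for article bias. One widely used option, chosen here as an assumption, is Egger's regression for funnel-plot asymmetry: each study's standardized effect (effect divided by its standard error) is regressed on its precision (1 divided by the standard error), and an intercept far from zero suggests small-study effects that may reflect publication bias. A minimal sketch using only the standard library:

```python
import math


def egger_intercept(effects, std_errors):
    """Egger's regression: ordinary least squares of effect/SE on 1/SE.

    Returns (intercept, standard_error_of_intercept, t_statistic).
    The t statistic is NaN when the fit is exact (zero residual variance).
    """
    if len(effects) != len(std_errors) or len(effects) < 3:
        raise ValueError("need at least 3 studies with matching effects and SEs")
    x = [1.0 / se for se in std_errors]                  # precision
    y = [e / se for e, se in zip(effects, std_errors)]   # standardized effect
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all studies have the same standard error")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = residual_ss / (n - 2)                       # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / n + mean_x ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else math.nan
    return intercept, se_intercept, t_stat
```

In practice the t statistic would be compared with a t distribution on n − 2 degrees of freedom, and the test has low power when only a few studies are available, so a result of this kind is a prompt for closer review rather than a verdict.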

Table 4.

Reviewer Checklist Example


Although it is imperative that individual editors and peer reviewers acknowledge and address bias, collective action by scientific journal editors could also have a major impact on this problem by creating consensus editorial policy recommendations to deal with the most serious issues. There is precedent for collective action by scientific journal editors. Consensus recommendations for medical journal editors have been published through the International Committee of Medical Journal Editors and were updated in December 2019 (35). This international committee could be a forum for the discussion and adoption of policies related to personal cognitive and publication bias. Positive publication bias is perceived to be the most serious issue. Various strategies have been proposed to address this problem, including creating journals for negative results. To be effective, any strategy will need widespread adoption by most scientific journals. Similarly, studies validating positive reports need to receive priority in publication (29, 30, 31, 32, 33).

Review publications and guideline documents are popular with editors because they improve the impact factor of the journal. If impact factors were calculated with and without such publications included, a more representative ranking based on original science could be promulgated. A discussion among scientific journal editors could be held to assess the feasibility of this modification of journal assessment.
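The with-and-without comparison proposed above amounts to simple arithmetic. The sketch below is a deliberate simplification: it averages citations per article and ignores the citation window and the definition of citable items that an official impact factor calculation would use. The article-type labels and the sample numbers are hypothetical.

```python
def mean_citations(articles, exclude_types=()):
    """Average citations per article, optionally skipping some article types.

    `articles` is a list of dicts with "type" and "citations" keys; this
    structure is an assumption made for illustration.
    """
    kept = [a for a in articles if a["type"] not in exclude_types]
    if not kept:
        raise ValueError("no articles left after exclusion")
    return sum(a["citations"] for a in kept) / len(kept)


if __name__ == "__main__":
    # Hypothetical journal output for one year.
    articles = [
        {"type": "original", "citations": 4},
        {"type": "original", "citations": 6},
        {"type": "guideline", "citations": 50},
        {"type": "meta-analysis", "citations": 20},
    ]
    print("all articles:", mean_citations(articles))
    print("original science only:", mean_citations(articles, ("guideline", "meta-analysis")))
```

Reporting both figures side by side would show how much of a journal's ranking rests on guidelines and meta-analyses rather than on original research.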

Finally, editorial policy could influence scientific advancement by advocating for the publication of perspective articles or opinion pieces that emphasize important priorities or serious scientific gaps. If such publications had been forthcoming about AMR, the delay in acceptance could have been mitigated. It took the determination of various investigators, worried about their own patient populations who were dying of AMR or its consequences, to call for serious consensus discussions of this topic (8).

In summary, by working together, educators, editors, reviewers, and investigators could establish principles and policies that might influence the problem of cognitive and publication bias, which would mitigate the delay in the acceptance of important new scientific evidence and protect our patients from harm. By considering how we think as well as what we think, we can trigger the use of debiasing methods to make scientific progress more efficient.

Author Disclosures

Supported by A Lee Christensen Fund, Intermountain Healthcare Foundation, Salt Lake City, Utah. All authors have reported that they have no relationships relevant to the contents of this paper to disclose.

Footnotes

The authors attest they are in compliance with human studies committees and animal welfare regulations of the authors’ institutions and Food and Drug Administration guidelines, including patient consent where appropriate. For more information, visit the Author Center.

References

- 1. Croskerry P. From mindless to mindful practice--cognitive bias and clinical decision making. N Engl J Med. 2013;368:2445–2448. doi: 10.1056/NEJMp1303712.

- 2. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775–780. doi: 10.1097/00001888-200308000-00003.

- 3. Davenport T.H. How to Make Better Decisions about Coronavirus. MIT Sloan Management Review [serial online]. April 2020. Available at: https://sloanreview.mit.edu/article/how-to-make-better-decisions-about-coronavirus/

- 4. Daniel M., Khandelwal S., Santen S.A., Malone M. Cognitive debiasing strategies for the emergency department. AEM Educ Train. 2017;1:41–42. doi: 10.1002/aet2.10010.

- 5. Saposnik G., Redelmeier D., Ruff C.D., Tobler P.N. Cognitive biases associated with medical decisions: a systematic review. BMC Med Inform Decis Mak. 2016;16:138–152. doi: 10.1186/s12911-016-0377-1.

- 6. Marewski J.N., Gigerenzer G. Heuristic decision making in medicine. Dialogues Clin Neurosci. 2012;14:77–89. doi: 10.31887/DCNS.2012.14.1/jmarewski.

- 7. Terasaki P.I., Kreisler M., Mickey R.M. Presensitization and kidney transplant failures. Postgrad Med J. 1971;47:89–100.

- 8. Takemoto S.K., Zeevi A., Feng S. National conference to assess antibody-mediated rejection in solid organ transplantation. Am J Transplant. 2004;4:1033–1041. doi: 10.1111/j.1600-6143.2004.00500.x.

- 9. Reed E.F., Demetris A.J., Hammond E. Acute antibody-mediated rejection of cardiac transplants. J Heart Lung Transplant. 2006;25:153–159. doi: 10.1016/j.healun.2005.09.003.

- 10. Tait B.D., Susal C., Gebel H.M. Consensus guidelines on the testing and clinical management issues associated with HLA and Non-HLA antibodies in transplantation. Transplantation. 2013;95:19–47. doi: 10.1097/TP.0b013e31827a19cc.

- 11. Zhang Q., Reed E.F. The importance of non-HLA antibodies in transplantation. Nat Rev Nephrol. 2016;12:484–495. doi: 10.1038/nrneph.2016.88.

- 12. Pober J.S. Cytokine-mediated activation of vascular endothelium, physiology and pathology. Am J Pathol. 1988;133:426–433.

- 13. Pober J.S., Tellides G. Participation of blood vessel cells in human adaptive immune responses. Trends Immunol. 2012;33:49–57. doi: 10.1016/j.it.2011.09.006.

- 14. Pober J.S., Sessa W.P. Inflammation and the blood vascular system. Cold Spring Harb Perspect Biol. 2015;7:a016345. doi: 10.1101/cshperspect.a016345.

- 15. Land W.G. Innate immunity-mediated allograft rejection and strategies to prevent it. Transplant Proc. 2007;39:667–672. doi: 10.1016/j.transproceed.2007.01.052.

- 16. Forbes R.D., Guttmann R.D., Pinto-Blonde M. A passive transfer model of hyperacute rat cardiac allograft rejection. Lab Invest. 1979;41:348–355.

- 17. Gailiunas P., Jr., Suthanthiran M., Busch G.J., Carpenter C.B., Garovoy M.R. Role of humoral presensitization in human renal transplant rejection. Kidney Int. 1980;17:638–646. doi: 10.1038/ki.1980.75.

- 18. Hammond E.H., Yowell R.L., Nunoda S. Vascular (humoral) rejection in heart transplantation: pathologic observations and clinical implications. J Heart Transplant. 1989;8:430–443.

- 19. Fishbein M.C., Kobashigawa J. Biopsy-negative cardiac transplant rejection: etiology, diagnosis, and therapy. Curr Opin Cardiol. 2004;19:166–169. doi: 10.1097/00001573-200403000-00018.

- 20. Crespo-Leiro M.G., Veiga-Barreiro A., Domenech N. Humoral heart rejection (severe allograft dysfunction with no signs of cellular rejection or ischemia): incidence, management, and the value of C4d for diagnosis. Am J Transplant. 2005;5:2560–2564. doi: 10.1111/j.1600-6143.2005.01039.x.

- 21. Wu G.W., Kobashigawa J.A., Fishbein M.C. Asymptomatic antibody-mediated rejection after heart transplantation predicts poor outcomes. J Heart Lung Transplant. 2009;28:417–422. doi: 10.1016/j.healun.2009.01.015.

- 22. Kfoury A.G., Snow G.L., Budge D. A longitudinal study of the course of asymptomatic antibody-mediated rejection in heart transplantation. J Heart Lung Transplant. 2012;31:46–51. doi: 10.1016/j.healun.2011.10.009.

- 23. Hammond M.E., Kfoury A.G. Antibody-mediated rejection in the cardiac allograft: diagnosis, treatment and future considerations. Curr Opin Cardiol. 2017;32:326–335. doi: 10.1097/HCO.0000000000000390.

- 24. Berry G.J., Burke M.M., Andersen C. The 2013 International Society for Heart and Lung Transplantation Working Formulation for the standardization of nomenclature in the pathologic diagnosis of antibody-mediated rejection in heart transplantation. J Heart Lung Transplant. 2013;32:1147–1162. doi: 10.1016/j.healun.2013.08.011.

- 25. Joober R., Schmitz N., Annable L., Boksa P. Publication bias: what are the challenges and can they be overcome? J Psychiatry Neurosci. 2012;37:149–152. doi: 10.1503/jpn.120065.

- 26. Lin L., Chu H. Quantifying publication bias in meta-analysis. Biometrics. 2018;74:785–794. doi: 10.1111/biom.12817.

- 27. Murad M.H., Chu H., Lin L., Wang Z. The effect of publication bias magnitude and direction on the certainty in evidence. BMJ Evid Based Med. 2018;23:84–86. doi: 10.1136/bmjebm-2018-110891.

- 28. Ludolph R., Schulz P.J. Debiasing health-related judgments and decision making: a systematic review. Med Decis Making. 2018;38:3–13. doi: 10.1177/0272989X17716672.

- 29. Emerson G.B., Warme W.J., Wolf F.M. Testing for the presence of positive-outcome bias in peer review: a randomized controlled trial. Arch Intern Med. 2010;170:1934–1939. doi: 10.1001/archinternmed.2010.406.

- 30. Heim A., Ravaud P., Baron G., Boutron I. Designs of trials assessing interventions to improve the peer review process: a vignette-based survey. BMC Med. 2018;16:191. doi: 10.1186/s12916-018-1167-7.

- 31. Thaler K., Kien C., Nussbaumer B. Inadequate use and regulation of interventions against publication bias decreases their effectiveness: a systematic review. J Clin Epidemiol. 2015;68:792–802. doi: 10.1016/j.jclinepi.2015.01.008.

- 32. Baddeley M. Herding. Social influences and behavioural bias in scientific research: simple awareness of the hidden pressures and beliefs that influence our thinking can help to preserve objectivity. EMBO Rep. 2015;16:902–905. doi: 10.15252/embr.201540637.

- 33. Cobo E., Selva-O'Callagham A., Ribera J.M., Cardellach F., Dominguez R., Vilardell M. Statistical reviewers improve reporting in biomedical articles: a randomized trial. PLoS One. 2007;28:e332. doi: 10.1371/journal.pone.0000332.

- 34. Tomkins A., Zhang M., Heavlin W.D. Single- vs. double-blind reviewing at WSDM 2017. Proc Natl Acad Sci U S A. 2017;114:12708–12713. doi: 10.1073/pnas.1707323114.

- 35. International Committee of Medical Journal Editors. Recommendations for the Conduct, Reporting, Editing, and Publication of Scholarly Work in Medical Journals. Updated December 2019. Available at: http://www.icmje.org/recommendations/