Abstract

Purpose

This study sought to determine the feasibility of using phonetic complexity manipulations as a way to systematically assess articulatory deficits in talkers with progressive dysarthria due to Parkinson's disease (PD).

Method

Articulatory kinematics were recorded using three-dimensional electromagnetic articulography from 15 talkers with PD (58–84 years old) and 15 healthy controls (55–80 years old) while they produced target words embedded in a carrier phrase. The majority of the talkers with PD exhibited relatively mild dysarthria. For stimulus selection, phonetic complexity was calculated for a variety of words using the framework proposed by Kent (1992), and six words representative of low, medium, and high phonetic complexity were selected as targets. The jaw, posterior tongue, and anterior tongue kinematic measures used to test for phonetic complexity effects were movement speed, cumulative path distance, movement range, movement duration, and spatiotemporal variability.

Results

Talkers with PD showed significantly smaller movements and slower movement speeds than controls, predominantly for words with high phonetic complexity. The effect sizes of between-groups differences were larger for several jaw kinematic measures than for the corresponding tongue measures.

Discussion and Conclusion

Findings suggest that systematic manipulations of phonetic complexity can support the detection of articulatory deficits in talkers with PD. Phonetic complexity should therefore be leveraged for the assessment of articulatory performance in talkers with progressive dysarthria. Future work will be directed toward linking speech kinematic and auditory–perceptual measures to determine the clinical significance of the current findings.

Parkinson's disease (PD) is a progressive neurodegenerative disease typically associated with basal ganglia pathology and reduced dopamine output to the striatum (Sapir, 2014). More recently, however, nondopaminergic neuromodulators and extrastriatal networks are also thought to be affected by the disease (Ferrer, 2011; Wolters, 2008). About 90% of individuals with PD will develop dysarthria during the course of the disease (Sauvageau et al., 2015), but the deviant speech characteristics may vary and could involve one or more speech subsystems (Darley et al., 1969a). Of note, an estimated 50% of these individuals will develop impaired articulation that manifests perceptually as imprecise consonants and speech rate abnormalities (Duffy, 2013). Consequently, there is a growing body of literature focused on articulatory performance in PD (e.g., Connor et al., 1989; Forrest et al., 1989; Kearney et al., 2017; Mefferd, 2015; Svensson et al., 1993; Walsh & Smith, 2011; Weismer et al., 2012; Yunusova et al., 2008). Some of these studies report reduced acoustic vowel space even during the preclinical stages of dysarthria, suggesting that reduced range of tongue motion may manifest before perceptible changes are evident (Rusz et al., 2013; Skodda et al., 2011). Associations have also been reported between dysarthria severity and reductions in articulatory movement amplitude (Forrest et al., 1989; Kearney et al., 2017), average tongue speed (Weismer et al., 2012), lip peak velocity (Bandini et al., 2016; Walsh & Smith, 2011), lip peak acceleration (Bandini et al., 2016), and lip peak deceleration (Forrest et al., 1989). Additionally, studies on spatiotemporal variability show that there is considerable trial-to-trial variability in articulatory movement patterns in PD (Anderson et al., 2008; Darling & Huber, 2011).

Although existing studies have offered valuable insights into the articulatory mechanisms contributing to dysarthria in PD, our understanding of articulatory deficits in PD remains limited because of the lack of systematic research examining articulatory motor performance across utterances of varying phonetic complexity. It is presumed that articulatory impairment characteristics will vary with stimuli that challenge the articulatory system (Forrest et al., 1989), but substantive research evidence is needed to support this idea. From a clinical standpoint, systematic manipulation of phonetic complexity may help detect incremental changes in speech motor function, which could in turn be used to monitor behavioral, pharmacological, and/or surgical (i.e., deep brain stimulation) treatment effects (Bang et al., 2013; Ho et al., 2008; Sapir, 2014).

Stimulus-Specific Effects on Speech Performance

Stimulus-specific effects have been reported at the perceptual (Kent, 1992; Weismer et al., 2001), acoustic (Flint et al., 1992; Y. Kim et al., 2009; Weismer et al., 2001), and kinematic levels (Kearney et al., 2017; Yunusova et al., 2008). Perceptually, words distinguished by certain phonetic features, such as a high–low vowel contrast, were affected to a greater extent than words distinguished by other contrasts, such as vowel tongue advancement (i.e., front vs. back), which remained relatively unaffected even in severely impaired talkers with amyotrophic lateral sclerosis (ALS; Kent, 1992). At the acoustic level, monosyllabic words such as wax and hail showed significantly lower F2 slopes than words such as sigh, coat, and shoot in speakers with ALS and PD. Interestingly, the words most sensitive to dysarthria (i.e., wax and hail) were presumed to require more rapid and larger changes in vocal tract geometry than the remaining words (Y. Kim et al., 2009). At the kinematic level, changes in movement distance, speed, and duration were also found to be stimulus specific (Yunusova et al., 2008). Specifically, words with vowels requiring larger and faster movements (e.g., bad, cat, and dog) were affected to a greater extent across talkers with dysarthria. Similarly, in a PD study, disease-related effects were greatest for complex movements such as the transition from /aɪ/ to /b/ in the sentence Buy Bobby a puppy, which requires movement from a maximally open position for /a/, to a more closed position for /ɪ/, to a completely closed position for /b/ (Forrest et al., 1989). At the sentence level, bradykinesia of the jaw was observed for Buy Bobby a puppy (Walsh & Smith, 2012) but not for Sally sells seven spices (Kearney et al., 2017), presumably because the first sentence requires larger jaw movements than the second.

In these prior studies, conclusions about stimulus-related effects, particularly phonetic complexity effects, remained speculative because the studies were not designed to systematically assess complexity effects on speech performance. So far, only one kinematic study and one acoustic study have focused on how phonetic structure affects dysarthric speech (Hermes et al., 2019; Rosen et al., 2008). The acoustic study confirmed what researchers had long speculated about phonetic complexity effects on speech performance: phonetic contexts that require quick, large movements, as indexed by the magnitude of the F2 slope, are sensitive detectors of dysarthria (Rosen et al., 2008). In the kinematic study, inefficient articulatory timing, which was evident only during complex syllable productions, was deemed a sensitive indicator of speech impairment in individuals with essential tremor (Hermes et al., 2019).

Leveraging Phonetic Complexity for Improved Detection of Articulatory Impairments

Although phonetic complexity is known to influence speech production, there is no universal framework for phonetic complexity, and researchers have conceptualized it in different ways (Jakielski, 1998; MacNeilage & Davis, 1990; Stoel-Gammon, 2010). For the current study, phonetic complexity was characterized by the articulatory motor adjustments required to produce vowels and consonants based on the framework introduced by Kent (1992). This framework is based on well-established biological and phonological principles related to speech motor development, wherein phonemes acquired at an earlier age (e.g., /p, m/) are considered to be less complex because they require basic articulatory movements. Conversely, phonemes acquired later in development (e.g., /tʃ, dʒ/) are considered more complex because they require more refined articulatory adjustments and interarticulator coordination. This framework has been applied to document phonetic complexity effects on speech intelligibility and articulatory precision in dysarthria due to cerebral palsy (Allison & Hustad, 2014; H. Kim et al., 2010) and ALS (Kuruvilla-Dugdale et al., 2018). Overall, both studies on cerebral palsy showed that later acquired sounds, which are motorically more complex targets, were misarticulated more frequently and had a greater negative impact on speech intelligibility than earlier acquired, less complex targets (Allison & Hustad, 2014; H. Kim et al., 2010). For talkers with ALS, complexity-based effects on word intelligibility and articulatory precision varied depending on dysarthria severity (Kuruvilla-Dugdale et al., 2018). As expected, word intelligibility and articulatory precision were significantly lower in the severe dysarthria group compared to controls, regardless of stimulus complexity. For the mild group, intelligibility was reduced relative to controls only for complex words (Kuruvilla-Dugdale et al., 2018).

Existing studies support the notion that the motor control deficit in dysarthria may be best observed during movements that are relatively complex. Regardless of how phonetic complexity is conceptualized, it needs to be taken into account when selecting stimuli that will challenge the speech motor system and will help capture slight changes in speech that may otherwise go undetected. Yet, for progressive dysarthria, kinematic investigations of phonetic complexity effects on articulatory performance are limited.

Differential Involvement of Articulators in PD

In general, it has been argued that speech deterioration in PD follows a forward progression affecting the larynx, pharynx, posterior tongue, anterior tongue, and, finally, the lips (Critchley, 1981; Logemann et al., 1978). However, kinematic studies have yielded mixed findings about articulatory involvement in PD. In some studies, for example, hypokinesia and bradykinesia were observed to a greater extent in the jaw than in the lower lip and tongue (e.g., Connor et al., 1989; Forrest et al., 1989; Kearney et al., 2017). In contrast, other studies reported disproportionately greater impairment of the tongue relative to the jaw (Mefferd & Dietrich, 2019; Yunusova et al., 2008). These inconsistent findings may be explained by methodological differences between studies, such as the dysarthria severity of the talkers with PD and the nature of the speech stimuli (simple phrases vs. long sentences). Moreover, several studies have analyzed coupled tongue–jaw and/or lip–jaw movements, whereas very few have attempted to decouple jaw movements from tongue and/or lower lip movements (Mefferd & Dietrich, 2019; Yunusova et al., 2008) to examine articulator-specific involvement in PD. Although linear subtraction can be used to decouple the jaw from the lower lip (e.g., Grigos & Patel, 2007; Mefferd et al., 2014), decoupling tongue and jaw movements requires several additional steps. First, the rotational movement of the jaw during speech needs to be considered. Because of this rotation, the relative contribution of the jaw is greater at the anterior tongue segment than at the posterior tongue segment (Westbury et al., 2002). Second, the anterior tongue segment is not fully attached to the jaw and therefore can move more independently of the jaw than the posterior tongue. For these reasons, it is difficult to estimate the relative contribution of the jaw to tongue movement, and a linear subtraction of jaw movements from tongue movements cannot be used because it can introduce large errors (Westbury et al., 2002).

As an alternative to implementing a relatively complex tongue–jaw decoupling algorithm, researchers have investigated tongue movements independent of the jaw using a bite block (Mefferd & Bissmeyer, 2016). However, bite block speech elicits a reorganization of speech movements and will not allow insights into typical speech motor performance because the tongue needs to adapt to the altered, atypical jaw position. Given these methodological challenges and constraints, in the current study, tongue movement trajectories were examined without decoupling them from the jaw. These movements are referred to as tongue movements despite the fact that they also include the contribution of the jaw. Reporting findings of coupled tongue–jaw and independent jaw motor performance is an important starting point to improve our understanding of articulator-specific performance changes due to phonetic complexity manipulations in PD.

Study Aims and Hypotheses

The aim of the current study was to systematically investigate the effect of phonetic complexity on kinematic performance measures, namely, movement speed, cumulative path distance, movement range, duration, and spatiotemporal variability of the jaw and tongue (anterior and posterior tongue) in talkers with PD and controls. Based on the existing literature (Forrest et al., 1989; Rosen et al., 2008), the effect sizes of between-groups differences in articulatory motor performance were expected to increase with phonetic complexity and significant between-groups findings were expected to be constrained to stimuli with high phonetic complexity.

Method

Participants

The study was approved by the Vanderbilt University Medical Center (VUMC) and the University of Missouri (MU) Institutional Review Boards. All participants provided written consent and were compensated for their participation. Participants included 15 individuals with a definite diagnosis of idiopathic PD (nine men, six women) and 15 age- and sex-matched healthy controls (nine men, six women). The mean age of participants with PD was 67.7 years (SD = 6.79, range: 58–84 years), and the mean age of participants in the control group was 66.79 years (SD = 7.42, range: 55–80 years). In terms of dialect, six participants with PD and 12 controls spoke Standard American English, six participants with PD and three controls spoke with a subtle regional dialect, and three participants with PD spoke with a pronounced regional dialect. The following inclusion criteria were applied to all participants: (a) no prior history of speech, language, or hearing impairments; (b) monolingual speaker of American English; (c) no hearing aids or prescription for hearing aids; and (d) no self-reported diagnosis of cognitive impairment. Additional inclusion criteria for participants with PD were (a) no prior neurosurgical treatment (e.g., deep brain stimulation), (b) no other comorbid neurological disorders, and (c) no metal, including pacemakers and deep brain stimulators, in the head and/or neck region. Participants with PD were taking anti-Parkinson's medications and were scheduled for data collection approximately 1–2 hr after taking their medication. One participant with PD, however, had a dopamine pump. Four participants with PD had received speech therapy within the previous 3 years: two had completed the LSVT treatment program, and the other two had completed speech exercises to reduce speech rate and/or increase vocal loudness.

All PD data and data from the other eight control participants were collected at VUMC; data from seven control participants were collected at MU. Participants at VUMC completed the Mini-Mental State Examination (Folstein et al., 1975), whereas participants at MU completed the Montréal Cognitive Assessment (MoCA; Nasreddine et al., 2005). The mean Mini-Mental State Examination score was 27.73 (SD = 1.7, range: 25–30) for participants with PD and 28.71 (SD = 1.6, range: 26–30) for the VUMC control participants. The mean MoCA score for the MU control participants was 24.83 (SD = 1.7, range: 23–27); two individuals in the control group did not complete the MoCA because of time constraints. None of the controls reported a prior history of cognitive impairment, and none had difficulty following task instructions.

Although the results of a hearing screening were not used as an inclusionary criterion, all VUMC participants completed a pure-tone hearing screening at 500, 1000, 2000, and 4000 Hz. The hearing screening revealed that all the participants with PD were able to detect pure tones at 35 dB HL in both ears at 500 Hz, 1 kHz, and 2 kHz. At 4 kHz, five participants with PD were able to detect pure tones at 35 dB HL, and for 10 of the 15 participants with PD, the hearing threshold was at least 40 dB HL in one ear. Similarly, all but one of the eight VUMC control participants were able to detect pure tones at 30 dB HL in both ears at 500 Hz, 1 kHz, and 2 kHz. At 4 kHz, one of the eight control participants was able to detect pure tones at 25 dB HL, and for seven of the eight control participants, the hearing threshold was at least 40 dB HL in one ear. For the MU controls, no formal hearing screening was completed; however, these participants were able to follow instructions and conversation at normal loudness levels with no signs of hearing problems.

The Speech Intelligibility Test (SIT; Yorkston et al., 2007) was used to determine percent sentence intelligibility and speaking rate in words per minute to characterize speech function and impairment severity. For the SIT, participants read aloud a list of 11 randomly generated sentences ranging in length from five to 15 words. The sentences were recorded with a lavalier condenser microphone (Audiotechnica, Model AT899) to a digital recorder (Tascam, Model DR-100KMII) at VUMC and with a high-quality condenser microphone (Shure, Model PG42) to a solid state recorder (Marantz, Model PMD670) at MU. Five trained undergraduate research assistants listened to each participant's SIT recordings and orthographically transcribed exactly what they heard using the SIT software. Sentence intelligibility was calculated as the number of words correctly understood by the listener divided by the total number of words spoken, multiplied by 100. Speaking rate in words per minute was estimated with the SIT software by dividing the total number of words by the total duration of all 11 sentences in minutes. Averaged across the five raters, percent intelligibility was 99% (SD = 2.02, range: 93–100) for the control group and 98% (SD = 2.3, range: 93–100) for the PD group, and speaking rate was 183 words per minute (SD = 25.93, range: 146–225) for the control group and 199 words per minute (SD = 24.64, range: 134–242) for the PD group. A speech-language pathologist with expertise in motor speech disorders also used the SIT recordings to determine the deviant perceptual speech characteristics of the participants with PD based on the Darley et al. (1969b) rating scale. Finally, the SIT-based sentence intelligibility and speaking rate scores were used to determine the dysarthria severity of the talkers with PD (see Table 1).
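Both SIT-derived measures are simple ratios. The Python sketch below restates them; the function names, word counts, and durations are hypothetical rather than study data, and the SIT software's own implementation (e.g., how sentence durations are timed) may differ. If each sentence length from five to 15 words occurs once, the 11 sentences total 110 words.

```python
def sentence_intelligibility(words_correct: int, words_spoken: int) -> float:
    """Percent intelligibility: words correctly transcribed / words spoken x 100."""
    if words_spoken <= 0:
        raise ValueError("words_spoken must be positive")
    return 100.0 * words_correct / words_spoken


def speaking_rate_wpm(total_words: int, total_duration_s: float) -> float:
    """Speaking rate: total words divided by total sentence duration in minutes."""
    if total_duration_s <= 0:
        raise ValueError("total_duration_s must be positive")
    return total_words / (total_duration_s / 60.0)


def mean_across_raters(scores: list[float]) -> float:
    """Average a per-rater measure (e.g., percent intelligibility) across raters."""
    return sum(scores) / len(scores)


# Hypothetical example: one sentence of each length from 5 to 15 words.
total_words = sum(range(5, 16))  # 110 words
print(sentence_intelligibility(107, total_words))  # about 97.3
print(speaking_rate_wpm(total_words, 33.0))        # about 200
```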

Table 1.

Demographics of participants with Parkinson's disease.

| Participant | Age (years) | Disease duration (years) | MMSE (out of 30) | Sentence intelligibility (%) | Speaking rate (WPM) | Dysarthria severity | Deviant speech characteristics |
|---|---|---|---|---|---|---|---|
| PDM14 | 84 | 4 | 27 | 93 | 224 | Mild | Monopitch and monoloudness, imprecise consonants, harsh voice, reduced stress |
| PDM15 | 75 | 4 | 29 | 96 | 206 | Mild | Monopitch, imprecise consonants, fast rate, reduced stress, low pitch, harsh voice |
| PDM17 | 72 | 12 | 25 | 93 | 204 | Mild | Monopitch, short rushes of speech, reduced stress, irregular articulatory breakdowns |
| PDM19 | 66 | 7 | 29 | 97 | 201 | Mild | Monopitch, imprecise consonants, harsh voice, irregular articulatory breakdowns, reduced stress |
| PDM21 | 73 | 5 | 27 | 97 | 188 | Mild–moderate | Monopitch, reduced stress, breathy voice, low pitch |
| PDM22 | 58 | 10 | 28 | 98 | 215 | Mild | Monopitch |
| PDM23 | 63 | 15 | 28 | 100 | 134 | Mild–moderate | Festinations, low volume, monoloudness, low pitch, reduced stress, breathy voice |
| PDM24 | 73 | 6 | 26 | 99 | 177 | Mild–moderate | Harsh voice, breathy voice |
| PDM25 | 66 | 9 | 29 | 99 | 179 | Mild–moderate | Harsh voice, breathy voice, reduced stress |
| PDF15 | 74 | 1 | 29 | 100 | 197 | Mild | Harsh voice, breathy voice, irregular articulatory breakdowns, low pitch |
| PDF16 | 61 | 4 | 30 | 100 | 242 | Mild | Harsh voice, strained-strangled voice, monopitch, reduced stress |
| PDF17 | 60 | 4 | 26 | 99 | 199 | Mild | Harsh voice, breathy voice, hyponasality, low pitch, monopitch, monoloudness |
| PDF18 | 66 | 7 | 25 | 99 | 196 | Mild | Low pitch, harsh voice, vocal tremor, monoloudness, reduced stress |
| PDF19 | 70 | 2 | 30 | 99 | 202 | Mild | Monopitch, monoloudness, harsh voice, low pitch, reduced stress |
| PDF20 | 66 | 5 | 28 | 96 | 220 | Mild | Harsh voice, low volume, low pitch, strained-strangled voice, monopitch, imprecise consonants, irregular breakdowns, fast rate, short rushes of speech |

Note. MMSE = Mini-Mental State Examination; WPM = words per minute; PDM = male participant with Parkinson's disease; PDF = female participant with Parkinson's disease.

Experimental Stimuli

Fifteen words were initially chosen for the study. All selected words began with a labial (bilabial or labiodental) consonant, but the place of articulation of the final phoneme varied. To select target stimuli from among the 15 words, phonetic complexity was calculated for each word based on the framework proposed by Kent (1992). As seen in Table 2, consonants, consonant clusters, and vowels were assigned complexity levels ranging from 1 to 7 based on the articulatory motor adjustments required to produce them. The overall phonetic complexity of each word was the sum of the complexity scores of its constituent consonants and vowels; for example, the overall complexity score for brittle was 22. Once complexity scores had been calculated, words that could be grouped into low-, medium-, and high-complexity categories were chosen as target words: brittle and music (low complexity), frequency and physical (medium complexity), and parenthesis and particular (high complexity). Low-complexity words had an average complexity score of 22.5, whereas the average scores of the medium- and high-complexity categories were 28 and 36.5, respectively. With regard to word length, low-complexity words had two syllables, medium-complexity words had three syllables, and high-complexity words had four syllables.
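The scoring rule can be illustrated with a short Python sketch that sums the Table 2 levels over a word's segments. The segmentations below are our assumptions, as is the choice to score the onset clusters /br/, /mj/, /fr/, and /kw/ as single level-7 units while scoring the heterosyllabic /n/ and /s/ in frequency as two segments; these choices are shown because they reproduce the reported score for brittle and the reported category means for the low- and medium-complexity targets. The high-complexity words are omitted because their segmentation under this scheme is less certain.

```python
# Complexity levels transcribed from Table 2.
VOWEL_LEVELS = {
    "ʌ": 1, "ə": 1,
    "a": 2, "i": 2, "u": 2, "o": 2,
    "ɜ": 3, "aɪ": 3, "aʊ": 3, "ɔɪ": 3, "ɔ": 3,
    "ɪ": 4, "e": 4, "æ": 4, "ʊ": 4,
    "ɝ": 5, "ɚ": 5,
}
CONSONANT_LEVELS = {
    **dict.fromkeys(["p", "m", "h", "n", "w"], 3),
    **dict.fromkeys(["b", "k", "g", "d", "f", "j"], 4),
    **dict.fromkeys(["t", "ŋ", "r", "l"], 5),
    **dict.fromkeys(["tʃ", "dʒ", "s", "z", "v", "ʒ", "ʃ", "ð", "θ"], 6),
}
CLUSTER_LEVEL = 7  # two-consonant clusters


def segment_level(segment: str) -> int:
    if segment in VOWEL_LEVELS:
        return VOWEL_LEVELS[segment]
    if segment in CONSONANT_LEVELS:
        return CONSONANT_LEVELS[segment]
    # Assumption: a token made of two single consonants is a cluster scored as one unit.
    if len(segment) == 2 and all(c in CONSONANT_LEVELS for c in segment):
        return CLUSTER_LEVEL
    raise KeyError(f"no complexity level for segment {segment!r}")


def word_complexity(segments: list[str]) -> int:
    """Overall phonetic complexity = sum of the levels of the word's segments."""
    return sum(segment_level(s) for s in segments)


# Hypothetical segmentations; onset clusters are written as single tokens.
TRANSCRIPTIONS = {
    "brittle": ["br", "ɪ", "t", "ə", "l"],
    "music": ["mj", "u", "z", "ɪ", "k"],
    "frequency": ["fr", "i", "kw", "ə", "n", "s", "i"],
    "physical": ["f", "ɪ", "z", "ɪ", "k", "ə", "l"],
}

for word, segs in TRANSCRIPTIONS.items():
    print(word, word_complexity(segs))
# brittle 22, music 23, frequency 28, physical 28
```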

Table 2.

Classification of phonemes by complexity levels based on articulatory motor demands.

| Complexity levelᵃ | Phoneme | Articulatory motor adjustments |
|---|---|---|
| Vowels | | |
| 1 | /ʌ, ə/ | Anterior–posterior tongue movement with low elevation. |
| 2 | /a, i, u, o/ | Maximally contrasted vowels based on acoustic and articulatory properties. |
| 3 | /ɜ, aɪ, aʊ, ɔɪ, ɔ/ | Diphthongs require precise movement of the tongue body. The introduction of /ɜ/ gives the vowel a truncated, quadrilateral shape. /ɔ/ and /a/ are distinct. |
| 4 | /ɪ, e, æ, ʊ/ | Front vowels require precise tongue–jaw configuration. |
| 5 | /ɝ, ɚ/ | Retroflex vowels require bunching of the tongue. |
| Consonants | | |
| 3 | /p, m, h, n, w/ | Quick, ballistic movements for /p, m, n/ with opening of the velopharyngeal port for /m, n/. Consistent, slow movements for /w, h/. |
| 4 | /b, k, g, d, f, j/ | Introduction of velars. Fast, ballistic movements for /b, k, g, d/. Consistent, slow movements for /j/. Control of the fricative /f/. |
| 5 | /t, ŋ, r, l/ | Additional fast, ballistic movements for /t, ŋ/. Complex movement and bending of the tongue for /r, l/. |
| 6 | /tʃ, dʒ, s, z, v, ʒ, ʃ, ð, θ/ | Precise movements of the tongue for dental, alveolar, and palatal placements with frication. |
| 7 | Two-consonant clusters | Transition of articulatory placements requiring precision and efficiency. |

ᵃ Age at which phonemes are mastered is also considered for each complexity level. Thus, vowels start at complexity level 1 and consonants start at complexity level 3, based on the age at which the earliest vowels and consonants are mastered.

Lexical and Phonological Properties of Experimental Stimuli

Because other lexical and linguistic factors are also known to influence speech production, neighborhood density, phonotactic probability, word frequency, and syllable stress were examined for the stimuli used in the current study. Neighborhood density, defined as the number of words that are phonologically similar to the target word, was determined using the Irvine Phonotactic Online Dictionary (Vaden et al., 2009; available from http://www.iphod.com). Average neighborhood density was 6 for the low-complexity category (both words had a density of 6), 1.5 (SD = 0.71) for the medium-complexity category, and 0.5 (SD = 0.71) for the high-complexity category. Because neighborhood density differed notably across complexity categories, it was included as a covariate in the statistical analysis.
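As a rough illustration of what the density count captures, the sketch below uses the common definition of a phonological neighbor: a word that differs from the target by a single phoneme substitution, addition, or deletion. The toy lexicon and function names are hypothetical; IPhOD computes its values from its own lexicon and transcriptions, and this sketch does not reproduce its exact counting options.

```python
def is_neighbor(a: tuple[str, ...], b: tuple[str, ...]) -> bool:
    """True if b differs from a by exactly one phoneme substitution, addition, or deletion."""
    if a == b:
        return False
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    # Deleting one phoneme from the longer form must yield the shorter form.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def neighborhood_density(target: tuple[str, ...], lexicon: list[tuple[str, ...]]) -> int:
    """Number of lexicon entries that are one-phoneme neighbors of the target."""
    return sum(is_neighbor(target, word) for word in lexicon)


# Toy lexicon of phoneme tuples (hypothetical, not IPhOD data).
LEXICON = [
    ("b", "æ", "t"),       # bat: substitution
    ("k", "ʌ", "t"),       # cut: substitution
    ("æ", "t"),            # at: deletion
    ("k", "æ", "s", "t"),  # cast: addition
    ("s", "k", "æ", "t"),  # scat: addition
    ("d", "ɔ", "g"),       # dog: not a neighbor
    ("k", "æ", "t"),       # cat itself is not counted
]
print(neighborhood_density(("k", "æ", "t"), LEXICON))  # 5
```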

Phonotactic probability, an estimate of the likelihood that a phonological segment will occur in a given position within a word, was calculated using the University of Kansas' phonotactic probability calculator (Vitevitch & Luce, 2004; available from https://calculator.ku.edu). Phonotactic probability was .006 (SD = .002) for the low-complexity category, .004 (SD = .0002) for the medium-complexity category, and .003 (SD = .001) for the high-complexity category. Like density, phonotactic probability was included as a covariate in the statistical models.

Word frequency values were obtained from SUBTLEXUS (Brysbaert & New, 2009; available from http://subtlexus.lexique.org/). Word frequency was 2.87 (SD = 1.44) for the low-complexity category, 2.84 (SD = 0.43) for the medium-complexity category, and 1.88 (SD = 1.80) for the high-complexity category. Lastly, syllable stress was obtained using the Irvine Phonotactic Online Dictionary (Vaden et al., 2009; available from http://www.iphod.com). Low- and medium-complexity words had initial syllable stress, and high-complexity words had medial syllable stress. Lexicosemantic variables (e.g., word familiarity and predictability) were not carefully controlled in this study; however, these properties are considered subjective and have been shown to correlate with objective word frequency counts (Gordon, 1985).

Experimental Task

Participants were instructed to read aloud sentences containing each target word within the carrier phrase “Say ____ again” (e.g., Say frequency again) at a normal rate and loudness level. Lists of five sentences were displayed one at a time on a television monitor, and readability was confirmed before data collection began. Participants produced at least five repetitions of each word in a pseudorandomized order: the order of the lists was randomized, but the words within each list remained the same. Repetitions of each target word were not elicited in a blocked fashion. Four participants with PD and two control participants produced fewer than five repetitions because of technical issues (e.g., sensors detaching prematurely from the tongue surface) or time constraints. The audio signal was recorded via a high-quality condenser microphone (Audiotechnica, Model AT899 or Shure, Model PG42) placed approximately 20 cm from each participant's mouth.

Data Acquisition and Segmentation

Articulatory kinematic data were collected using the Wave Speech Research System (NDI) for seven control participants and with the AG501 (Carstens Medizinelektronik, GmbH) for the remaining participants. Using two different devices to record kinematic data was not a concern because the precision of both devices has been formally examined and found comparable for speech kinematic recordings (Savariaux et al., 2017). In addition, the control data collected with the two devices were compared in this study, and no statistically significant differences were observed, although the small size of the comparison groups limits the strength of this check. Because both devices are known to produce reliable data within an acceptable measurement error range (Savariaux et al., 2017), the use of two devices is unlikely to have confounded the current findings.

As in previous studies (Kuruvilla-Dugdale & Chuquilin-Arista, 2017; Kuruvilla-Dugdale & Mefferd, 2017; Mefferd, 2017), orofacial sensors were placed along the midsagittal plane of the anterior and posterior tongue segments at approximately 1 and 4 cm from the tongue tip, respectively, using nontoxic glue (Periacryl 90, Glustitch, Inc.). A sensor was also glued to the vermilion border of the lower lip along the midsagittal plane, and jaw sensors were attached to the mandibular gum line using small amounts of putty (Stomahesive, ConvaTec). For most participants, the jaw sensor was placed along the sagittal midline (jaw center sensor); however, for some MU participants, jaw sensors were placed near the gum line of the right and left lower canines, and only the left jaw sensor was used for analysis in these cases. A reference sensor was placed on the central part of the forehead; for the AG501, additional reference sensors were placed lateral to the forehead (see Figure 1). Movement of the orofacial sensors was expressed in the x (lateral), y (ventral–dorsal), and z (anterior–posterior) dimensions relative to the reference head sensor(s). For recordings with the AG501, participants were also asked to hold a bite plate with three additional sensors in their mouth. This recording was later used to transpose the kinematic data into a head-based coordinate system with the origin located just anterior to the jaw center sensor (Mefferd, 2017). The Wave system automatically transposes all kinematic data into a head-based coordinate system after recording, whereas for the AG501 system, this transposition has to be executed as a separate step; the bite-plate correction is therefore necessary only for the AG501 data. The bite-plate correction creates a head-based coordinate system comparable to that of the Wave system, so the orientation of the axes is the same across both systems. This aspect of kinematic postprocessing is particularly important for the movement range and variability measures because they were obtained along a specific axis (i.e., the anterior–posterior or ventral–dorsal dimension). Kinematic data were sampled at 1250 Hz and downsampled to 250 Hz for the AG501, and sampled at 400 Hz for the Wave system. The audio signal was synchronized with the kinematic data and sampled at 48,000 Hz for the AG501 and 22,000 Hz for the Wave system.
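The study relied on NormPos for the AG501 bite-plate correction. For readers unfamiliar with the idea, the sketch below shows one generic way to build a head-based frame from three bite-plate sensors and re-express sensor positions in it. It is not the NormPos algorithm; the sensor roles (one front, two rear), the choice of the front sensor as origin, and the axis conventions are hypothetical.

```python
import numpy as np


def _unit(v: np.ndarray) -> np.ndarray:
    return v / np.linalg.norm(v)


def bite_plate_frame(front, left, right):
    """Build a head-based frame from three bite-plate sensor positions.

    Hypothetical convention: origin at the front sensor; z axis from the
    midpoint of the two rear sensors toward the front sensor (anterior-posterior);
    x axis toward the `right` sensor (lateral); y axis completing a right-handed
    system (ventral-dorsal; its sign follows from the other two axes).
    """
    front, left, right = (np.asarray(p, dtype=float) for p in (front, left, right))
    z = _unit(front - (left + right) / 2.0)
    y = _unit(np.cross(z, right - left))
    x = np.cross(y, z)  # unit length already, because y and z are orthonormal
    rotation = np.vstack([x, y, z])  # rows are the new axes
    return front, rotation


def to_head_coordinates(points, origin, rotation):
    """Express (n, 3) sensor positions in the bite-plate frame."""
    return (np.asarray(points, dtype=float) - origin) @ rotation.T
```

Applying the same rigid rotation and translation to the bite-plate sensors and to the data leaves the transformed coordinates unchanged, which is why expressing every recording in such a frame gives axis-specific measures a common orientation.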

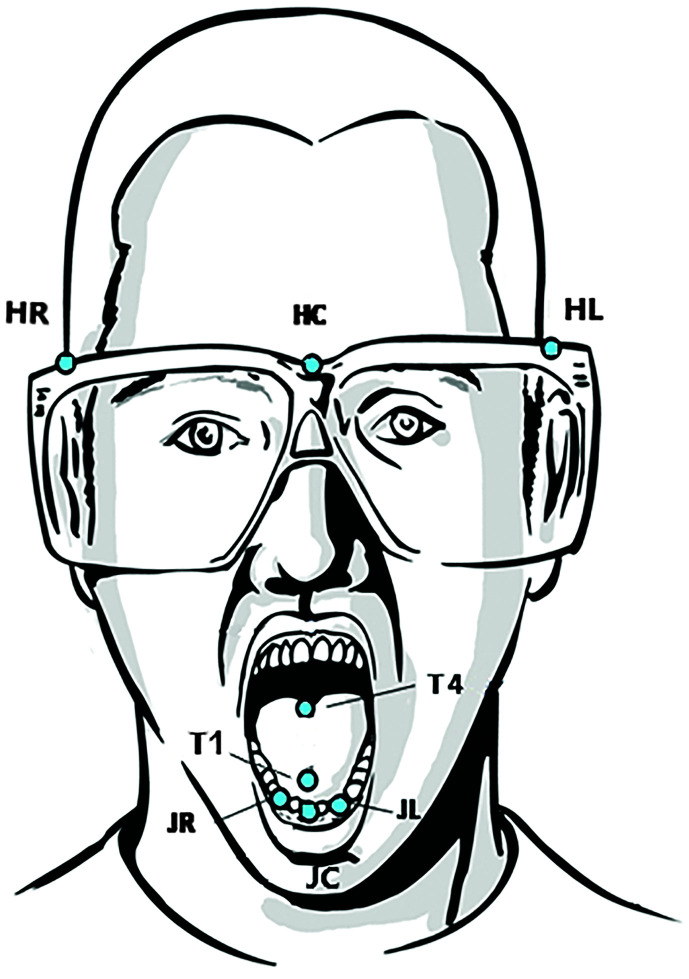

Figure 1.

Sensor placement for the Vanderbilt University Medical Center (HR, HC, HL, T1, T4, JC) and the University of Missouri (HC, T1, T4, JR, JL, or JC) kinematic data collection sessions. HR, HC, and HL = head sensors; T1 = anterior tongue sensor; T4 = posterior tongue sensor; JC, JL, and JR = jaw sensors.

To parse the target words from the carrier phrase, audio and kinematic files were loaded into SMASH, which is a custom-written MATLAB tool used to view and analyze kinematic data (Green et al., 2013). For the AG501, the recorded kinematic data were corrected for head movements and transposed into a head-based coordinate system using NormPos (Carstens Medizinelektronik, GmbH) prior to processing the data in SMASH. For recordings with the Wave system, the kinematic data were corrected for head movements automatically using WaveFront (NDI). Trained research assistants parsed target words using movement onset and offset defined by the peak vertical displacement of word-initial and -final phonemes. Specifically, the lower lip peak in the y-dimension was used as the onset marker, and the anterior tongue peak in the y-dimension was used as the offset marker for all words with the exception of frequency and music, for which the posterior tongue peak in the y-dimension was used as the offset marker. All kinematic data were low-pass filtered at 15 Hz using SMASH.
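The filter type and order used by SMASH are not stated here. As an assumption for illustration only, the sketch below applies a second-order Butterworth low-pass filter forward and then backward, a common zero-phase choice that avoids shifting movement peaks in time, which matters when movement onsets and offsets are defined by displacement peaks. The function names are hypothetical; `fs_hz` should match the kinematic sampling rate (250 Hz for the downsampled AG501 data, 400 Hz for the Wave data). In practice, `scipy.signal.butter` combined with `scipy.signal.filtfilt` provides the same kind of filtering with built-in edge padding.

```python
import math

import numpy as np


def butterworth2_lowpass(cutoff_hz: float, fs_hz: float):
    """Second-order Butterworth low-pass coefficients via the bilinear transform
    (RBJ audio-EQ-cookbook form with Q = 1/sqrt(2)); returns normalized (b, a)."""
    if not 0.0 < cutoff_hz < fs_hz / 2.0:
        raise ValueError("cutoff must lie between 0 and the Nyquist frequency")
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    alpha = math.sin(w0) / math.sqrt(2.0)  # sin(w0) / (2Q) with Q = 1/sqrt(2)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = np.array([(1.0 - cos_w0) / 2.0, 1.0 - cos_w0, (1.0 - cos_w0) / 2.0]) / a0
    a = np.array([1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0])
    return b, a


def _filter_pass(x: np.ndarray, b: np.ndarray, a: np.ndarray) -> np.ndarray:
    """One causal pass of the biquad, started in steady state at x[0]."""
    y = np.empty(len(x))
    x1 = x2 = y1 = y2 = float(x[0])  # unit DC gain, so a constant input stays constant
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y


def zero_phase_lowpass(x, cutoff_hz: float = 15.0, fs_hz: float = 250.0) -> np.ndarray:
    """Filter forward, then backward, so the phase shifts cancel (no time lag)."""
    x = np.asarray(x, dtype=float)
    b, a = butterworth2_lowpass(cutoff_hz, fs_hz)
    forward = _filter_pass(x, b, a)
    return _filter_pass(forward[::-1], b, a)[::-1]
```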

The tongue was not decoupled from the jaw in this study because the primary goal was to examine naturally occurring coupled tongue–jaw movements during speech to better understand the effect of phonetic complexity on articulatory motor performance. Furthermore, comparing kinematic performance between coupled tongue–jaw and independent jaw movements is a first step toward understanding articulator-specific performance changes due to phonetic complexity manipulations in PD.

Data Analysis

Across the low-, medium-, and high-complexity categories, 2,880 trials were analyzed (i.e., 240 trials per group per word). Convex hull analysis was used to obtain kinematic data from the parsed words. For this analysis, a convex hull, which represents the tightest polygon that contains all the data points for each sensor, was fitted around each sensor's three-dimensional (3D) movement path. After the convex hull fitting, 3D movement speed, cumulative path distance, and movement duration were extracted for all three sensors (i.e., jaw, posterior tongue, anterior tongue) for each word. In addition, movement range was derived from data in the horizontal (anterior–posterior) and vertical (ventral–dorsal) dimensions, whereas spatiotemporal variability of jaw and tongue movement patterns was assessed only in the vertical dimension.

Average Movement Speed (mm/s)

Speed time histories were derived for each word by computing the first-order derivative of each marker's 3D distance time history. The 3D distance time history is based on each marker's Euclidean distance from the head-based origin.
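A literal reading of this definition can be sketched as below. The differentiation scheme (central differences), the use of the absolute value, and averaging over all samples of the word are assumptions made for illustration; the function name is hypothetical, and samples are assumed to be (x, y, z) tuples in millimetres in the head-based coordinate system.

```python
import math


def average_speed(xyz, fs):
    """Mean absolute rate of change (mm/s) of a marker's Euclidean distance from the origin."""
    # Euclidean distance of each sample from the head-based origin (mm)
    r = [math.sqrt(x * x + y * y + z * z) for x, y, z in xyz]
    if len(r) < 2:
        raise ValueError("need at least two samples")
    dt = 1.0 / fs
    # First-order derivative: one-sided at the edges, central differences inside
    dr = [(r[1] - r[0]) / dt]
    dr += [(r[i + 1] - r[i - 1]) / (2.0 * dt) for i in range(1, len(r) - 1)]
    dr.append((r[-1] - r[-2]) / dt)
    # Speed is taken as the magnitude of the derivative, then averaged over the word
    return sum(abs(v) for v in dr) / len(dr)
```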

Cumulative Path Distance (mm)

3D cumulative distance was calculated as the distance traveled by each sensor during the production of the target word.
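One straightforward way to compute this measure is to sum the straight-line distances between successive 3D samples. This is a minimal sketch under that assumption (the exact SMASH computation is not described here); the function name is hypothetical.

```python
import math


def cumulative_path_distance(xyz):
    """Total 3D distance (mm) traveled by a sensor across consecutive (x, y, z) samples."""
    return sum(math.dist(p, q) for p, q in zip(xyz, xyz[1:]))
```

For example, a sensor moving from (0, 0, 0) to (3, 4, 0) and then to (3, 4, 12) travels 5 + 12 = 17 mm.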

Movement Range (mm)

For each sensor and word, movement range was calculated as the distance from the minimum to the maximum position in the z (anterior–posterior) and y (ventral–dorsal) dimensions.
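The range measure reduces to a per-axis maximum minus minimum. A minimal sketch, assuming samples are (x, y, z) tuples in millimetres in the head-based coordinate system; the function name is hypothetical.

```python
def movement_range(xyz):
    """Per-axis movement range (mm) in the ventral-dorsal (y) and anterior-posterior (z) dimensions."""
    ys = [p[1] for p in xyz]
    zs = [p[2] for p in xyz]
    return {"y": max(ys) - min(ys), "z": max(zs) - min(zs)}
```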

Movement Duration (s)

Movement duration was calculated as the total time of sensor movement between the onset and offset of the target word. Note that this durational measure does not include time during which the sensor was stationary. Thus, movement duration can differ between sensors depending on how much each sensor moved during the target word.
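Because only the time during which the sensor moves is counted, duration depends on a criterion for "stationary." That criterion is not specified here, so the sketch below assumes a hypothetical `speed_threshold` (mm/s) applied to sample-to-sample speed; both the threshold and the function name are illustrative assumptions, not the study's settings.

```python
import math


def movement_duration(xyz, fs, speed_threshold):
    """Time (s) during which sample-to-sample speed exceeds a hypothetical threshold (mm/s)."""
    dt = 1.0 / fs
    # Count inter-sample intervals in which the sensor is considered to be moving
    moving = sum(1 for p, q in zip(xyz, xyz[1:]) if math.dist(p, q) / dt > speed_threshold)
    return moving * dt
```

With this rule, two sensors tracked over the same word can yield different durations, which mirrors the note above that movement duration can differ between sensors.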

Spatiotemporal Variability Index

The spatiotemporal variability index (STI) was used to estimate the trial-to-trial variability in vertical movement patterns for words within each complexity category (Smith et al., 1995). To calculate the STI, vertical displacement signals were time- and amplitude-normalized. For time normalization, a cubic spline procedure was used to map each movement time history onto a constant axis length of 1,000 points. Each time series was also amplitude-normalized by subtracting the mean of the displacement signal and dividing by its standard deviation. The standard deviation across the normalized time series was then calculated at fixed 2% intervals in relative time, and these 50 SDs were summed to obtain the STI.

Statistical Analysis

The effects of phonetic complexity (low, medium, high), articulator (jaw, posterior tongue, anterior tongue), and group (PD, healthy controls) on articulatory motor performance (speed, distance, range, duration, STI) were analyzed using three-way analysis of covariance (ANCOVA) with repeated measures on words within each complexity category. For each participant, the mean value of each measure across word repetitions was computed before the data were submitted to the ANCOVA. In addition, the correlation structure for different words from each participant was taken into account by the repeated-measures model. Log transformations were applied to all dependent measures to meet the assumption of normality. To control for possible confounding variables, neighborhood density, phonotactic probability, and word length were included as covariates in the analysis. The significance level was set at α = .05. The analyses were carried out with the MIXED procedure (PROC MIXED) in SAS version 9.4.
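The data preparation described above (one mean per participant and word, log-transformed) can be sketched as follows. The order of the two steps is an assumption, since the text does not state whether averaging preceded the log transform. The input layout and function name are hypothetical, and the mixed-model fit itself (SAS PROC MIXED with covariates) is not reproduced.

```python
import math
from collections import defaultdict
from statistics import mean


def participant_word_means(trials):
    """trials: iterable of (participant_id, word, value), one tuple per repetition.

    Returns {(participant_id, word): log(mean value across repetitions)}.
    """
    groups = defaultdict(list)
    for pid, word, value in trials:
        groups[(pid, word)].append(value)
    # Average the repetitions first, then log-transform (assumed order)
    return {key: math.log(mean(vals)) for key, vals in groups.items()}
```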

Results

Main and interaction effects are listed in Table 3. For the significant three-way interactions, the between-groups difference for each articulator and complexity combination was analyzed using post hoc tests, with the significance level set at α = .05. Results of the post hoc analyses and effect sizes of between-groups differences calculated using Cohen's d are shown in Figures 2–4. Effect sizes may be interpreted as small (0.2–0.4), medium (0.5–0.7), and large (> 0.8; Cohen, 1992). Effect sizes, along with their 95% confidence intervals, were used to interpret the significant post hoc comparisons (Nakagawa, 2004).
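For reference, Cohen's d with a pooled standard deviation and an approximate 95% confidence interval can be computed as sketched below. The method used to obtain the reported intervals is not stated, so the normal-approximation standard error here is one common choice, not necessarily the authors' procedure, and it is not claimed to reproduce the values reported below. The function name is hypothetical.

```python
import math
import statistics


def cohens_d_with_ci(group_a, group_b, confidence=0.95):
    """Cohen's d (pooled SD) with a normal-approximation confidence interval."""
    n1, n2 = len(group_a), len(group_b)
    s1, s2 = statistics.stdev(group_a), statistics.stdev(group_b)
    pooled = math.sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    d = (statistics.fmean(group_a) - statistics.fmean(group_b)) / pooled
    # Approximate standard error of d for two independent groups
    se = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return d, (d - z * se, d + z * se)
```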

Table 3.

Repeated-measures analysis of covariance for articulatory kinematic measures.

| Source | df | Average speed (mm/s) F | Average speed p | Cumulative distance (mm) F | Cumulative distance p | Horizontal (z) movement range (mm) F | Horizontal (z) range p | Vertical (y) movement range (mm) F | Vertical (y) range p | Movement duration (s) F | Movement duration p | STI F | STI p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Grpᵃ | 1 | 1.97 | .17 | 1.16 | .292 | 4.15 | .05 | 0.21 | .650 | 2.26 | .145 | 0.01 | .915 |
| Art | 2 | 640.7 | **< .0001** | 775.24 | **< .0001** | 424.07 | **< .0001** | 290.37 | **< .0001** | 78.64 | **< .0001** | 4.87 | **.012** |
| Complex | 2 | 14.4 | **< .0001** | 39.47 | **< .0001** | 32.83 | **< .0001** | 3.71 | **.031** | 3.91 | **.026** | 6.15 | **.004** |
| Grp × Art | 2 | 2.21 | .119 | 2.49 | .092 | 4.7 | **.013** | 4.26 | **.019** | 11.01 | **.0001** | 7.23 | **.002** |
| Grp × Complex | 2 | 2.35 | .105 | 2.32 | .108 | 0.94 | .398 | 3.25 | **.046** | 0.14 | .870 | 0.4 | .672 |
| Grp × Art × Complex | 8 | 2.74 | **.009** | 3.35 | **.002** | 4.38 | **.0001** | 2.2 | **.034** | 0.99 | .448 | 1.47 | .179 |

Note. Bolded text indicates p < .05. STI = spatiotemporal variability index; Grp = group; Art = articulator; Complex = complexity levels.

ᵃn = 15 for the PD group and n = 15 for the healthy control group.

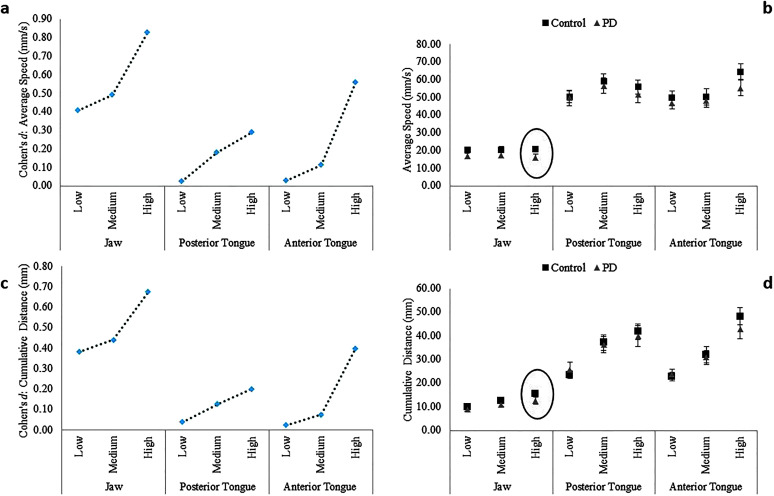

Figure 2.

Between-groups effect sizes (Cohen's d) by articulator and complexity level for average speed (a) and cumulative distance (c). Mean (standard error) of average speed (b) and cumulative distance (d) for each participant group, articulator, and complexity level. Statistically significant between-groups differences are indicated by circles on the right. PD = Parkinson's disease.

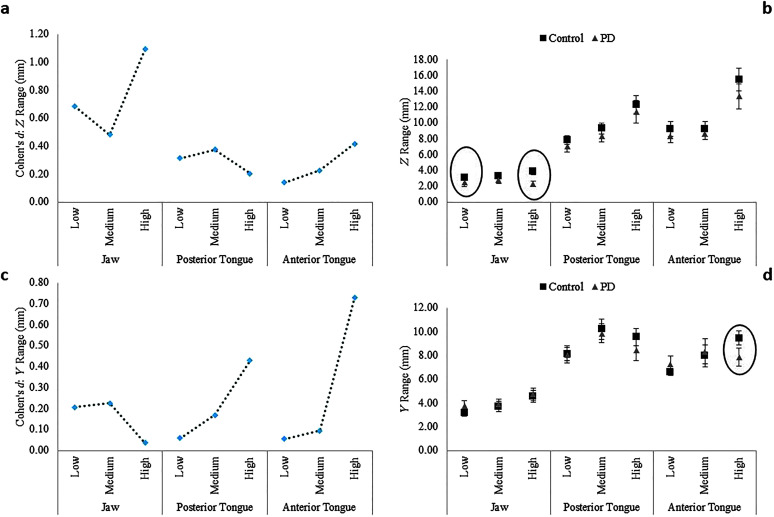

Figure 3.

Between-groups effect sizes (Cohen's d) by articulator and complexity level for z range (anterior–posterior; a) and y range (ventral–dorsal; c). Mean (standard error) of z range (b) and y range (d) for each participant group, articulator, and complexity level. Statistically significant between-groups differences are indicated by circles on the right. PD = Parkinson's disease.

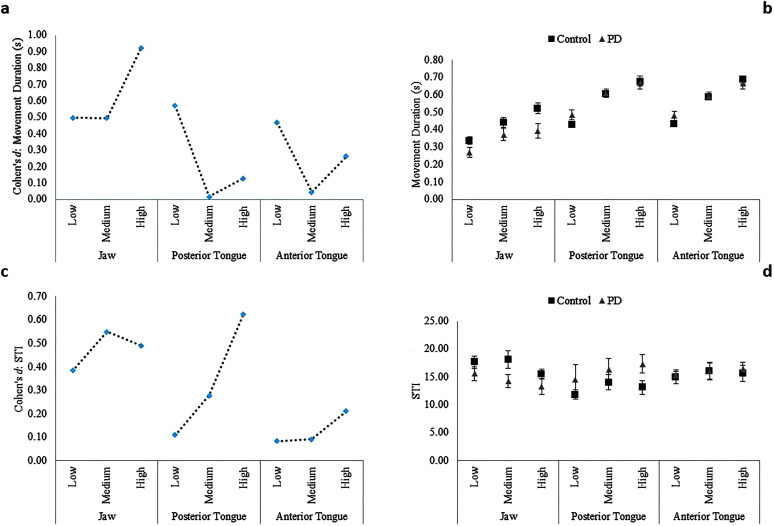

Figure 4.

Between-groups effect sizes (Cohen's d) by articulator and complexity level for movement duration (a) and spatiotemporal variability index (STI; c). Mean (standard error) of movement duration (b) and STI (d) for each participant group, articulator, and complexity level. PD = Parkinson's disease.

Average Movement Speed

Statistical tests revealed significant main effects of articulator and phonetic complexity on movement speed. The Group × Articulator and Group × Complexity interactions were not significant, but the Group × Articulator × Complexity interaction was significant. Post hoc analysis of the significant three-way interaction revealed a between-groups difference in jaw movement speed only for words with high phonetic complexity (p = .02, d = 0.83, 95% CI [0.30, 1.36]). Specifically, jaw speed was significantly lower in talkers with PD than in healthy controls for high-complexity words (see Figures 2a and 2b).

Cumulative Path Distance

The main effects of articulator and phonetic complexity on cumulative distance were significant. The Group × Articulator and Group × Complexity interactions were not significant, but the Group × Articulator × Complexity interaction was significant. Post hoc analysis of the significant three-way interaction revealed a between-groups difference in the cumulative path distance traveled by the jaw only for words with high phonetic complexity (p = .04, d = 0.68, 95% CI [0.16, 1.20]). Specifically, the PD group had significantly shorter cumulative distance for the jaw than controls (see Figures 2c and 2d).

Movement Range

The main effects of articulator and phonetic complexity on range of movement in the anterior–posterior (z) dimension were significant. The Group × Complexity interaction was not significant, but the Group × Articulator and Group × Articulator × Complexity interactions were significant. Post hoc analysis of the significant three-way interaction revealed between-groups differences in jaw movement range in the z-dimension for words with low (p = .03, d = 0.69, 95% CI [0.16, 1.21]) and high phonetic complexity (p = .0003, d = 1.09, 95% CI [0.55, 1.64]). Range of jaw movement in the z-dimension was significantly smaller in talkers with PD than in healthy controls (see Figures 3a and 3b). Post hoc analysis of the significant two-way Group × Articulator interaction revealed a between-groups difference only for the jaw (p = .003, d = 0.75, 95% CI [0.74, 0.90]), where talkers with PD had significantly smaller jaw movement range than the control group.

For range of movement in the ventral–dorsal (y) dimension, the main effects of articulator and phonetic complexity were significant. Furthermore, the Group × Articulator, Group × Complexity, and Group × Articulator × Complexity interactions were significant. Post hoc analysis of the significant three-way interaction revealed a between-groups difference in anterior tongue movement range (p = .04, d = 0.73, 95% CI [0.21, 1.25]) only for high-complexity words, where talkers with PD displayed significantly reduced y range of movement for the anterior tongue compared to controls (see Figures 3c and 3d).

Movement Duration

The main effects of articulator and phonetic complexity on movement duration were significant. In addition, the Group × Articulator interaction was significant, but the Group × Complexity and Group × Articulator × Complexity interactions were not significant. Post hoc analysis of the significant two-way interaction revealed a between-groups difference only for jaw movement duration (p = .001, d = 0.48, 95% CI [0.48, 0.63]), where the PD group had significantly shorter movement durations compared to the controls (see Figures 4a and 4b).

STI

The main effects of articulator and phonetic complexity on STI were significant. Furthermore, the Group × Articulator interaction was significant; however, the Group × Complexity and Group × Articulator × Complexity interactions were not significant. Post hoc analysis of the significant two-way interaction revealed a significant between-groups difference in STI only for the jaw (p = .03, d = 0.44, 95% CI [0.43, 0.59]), where jaw STI was significantly higher in the PD group compared to controls (see Figures 4c and 4d).

Discussion

The overall goal of the current study was to determine the feasibility of using phonetic complexity manipulations as a way to systematically assess articulatory deficits in talkers with predominantly mild dysarthria due to PD. Overall, the findings support our hypothesis about the effect of phonetic complexity on articulatory motor performance because between-groups differences in movement speed, cumulative distance, and movement range were significant only when words were sufficiently complex. Although no specific predictions were made regarding differential involvement of the tongue versus the jaw, effect sizes for between-groups differences were larger for the jaw compared to the tongue for several kinematic measures. These main findings are discussed in detail below.

Complex Phonetic Contexts May Improve Detection of Articulatory Deficits in PD

Although very few acoustic and kinematic studies have investigated phonetic complexity effects systematically, the existing literature, including the current study, supports the notion that complex phonetic contexts are better suited than relatively simple ones to detect incremental changes in articulatory performance (Flint et al., 1992; Forrest et al., 1989; Hermes et al., 2019; Kearney et al., 2017; Y. Kim et al., 2009; Rosen et al., 2008; Yunusova et al., 2008). Perceptual studies that have systematically manipulated phonetic complexity and utterance length to assess speech performance in dysarthria also support this idea (Allison & Hustad, 2014; H. Kim et al., 2010; Kuruvilla-Dugdale et al., 2018). In this study, the PD group displayed hypokinesia of the jaw and anterior tongue for words with high phonetic complexity, as indexed by smaller movement range (jaw and anterior tongue) and shorter cumulative distance (jaw) relative to controls. Because the anterior tongue is actively involved in consonant production, reduced anterior tongue movements, specifically during closing movements, may be linked to consonant imprecision. Importantly, subtle articulatory deficits may become perceptible only when the phonetic complexity of the stimuli places sufficiently high demands on the speech motor system. Therefore, future studies need to investigate the links between complexity-based articulatory performance and auditory–perceptual speech features in PD.

Our kinematic data also show that the movement range of the posterior tongue in talkers with PD was comparable to that of controls, even for words with high phonetic complexity. This finding is surprising given that previous studies showed a reduced acoustic vowel space, even in the preclinical stages of speech decline in those with PD (Rusz et al., 2013; Skodda et al., 2011). Furthermore, this finding is unexpected given the fact that tongue movements were not decoupled from the jaw and therefore also included contributions of the jaw, which by contrast showed a significantly smaller movement range in PD than controls. Although speculative, it is possible that talkers with PD held the jaw in a relatively fixed position to permit greater flexibility for posterior tongue movements during more challenging phonetic contexts. As explained by Forrest et al. (1989), in clinical populations with impaired sensorimotor integration, the jaw may be used as a fixed reference to permit greater accuracy of tongue and lip movements to be produced. Currently, the mechanisms that underlie reduced articulatory movement range in talkers with PD are still poorly understood; however, an interplay between disease- and compensation-related factors as well as trade-offs between efficiency and intelligibility are likely contributing factors.

Because speed and displacement typically covary (Munhall et al., 1985; Ostry & Munhall, 1985) and the relative scaling of these two parameters determines changes in movement duration, findings of reduced speed are best interpreted alongside between-groups differences in displacement and duration. In the current study, for words with high phonetic complexity, jaw movement speed was significantly lower in the PD group than in the control group. Jaw displacement was likewise shorter in talkers with PD than in controls in the high phonetic complexity condition. As a result, jaw movement durations did not differ significantly between talkers with PD and controls. Because a durational difference arises only when displacement is downscaled disproportionately more than speed or vice versa, the large between-groups effect size for jaw movement duration in the high-complexity condition suggests that one of the two parameters was downscaled disproportionately more than the other. If jaw speed was disproportionately more reduced than jaw displacement, it would indicate mandibular bradykinesia, that is, an inability to generate sufficient speed. Prior research suggests that mandibular bradykinesia is more likely to occur at advanced stages of the disease and that very few individuals with mild-to-moderate dysarthria exhibit slow mandibular movements (Umemoto et al., 2011).
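The scaling argument above can be made explicit with a simple worked relation. As a simplifying assumption that is not part of the original analysis, treat average speed as displacement D divided by movement duration T; because the speed measure defined in the Methods is derived from each marker's distance-from-origin time history, this identity holds only approximately. If, relative to controls (C), talkers with PD scale displacement by a factor a and average speed by a factor b, then

$$
\bar{v} = \frac{D}{T} \;\Longrightarrow\; T = \frac{D}{\bar{v}},
\qquad
\frac{T_{\mathrm{PD}}}{T_{\mathrm{C}}}
= \frac{a\,D_{\mathrm{C}} / \left(b\,\bar{v}_{\mathrm{C}}\right)}{D_{\mathrm{C}} / \bar{v}_{\mathrm{C}}}
= \frac{a}{b}.
$$

Proportional downscaling (a = b) leaves duration unchanged; downscaling displacement more than speed (a < b) shortens duration; and downscaling speed more than displacement (a > b) lengthens it, the pattern expected with bradykinesia. For example, with hypothetical values a = 0.8 and b = 0.9, the duration ratio is 0.8/0.9 ≈ 0.89, that is, movements about 11% shorter.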

Articulator-Specific Performance May Vary Depending on Phonetic Demands of the Stimuli

According to the forward progression framework proposed by Critchley (1981), the tongue is assumed to be affected earlier than the lips in PD; however, progression to the jaw is not specified within this framework. Interestingly, in the current study, the effect sizes of between-groups differences were greater for jaw kinematic measures than for anterior tongue and posterior tongue kinematic measures. This finding is congruent with prior studies that showed greater changes in jaw motor performance than in tongue motor performance (Kearney et al., 2017) and lip motor performance (Connor et al., 1989; Forrest et al., 1989) in talkers with PD. Specifically, at the sentence level, smaller movements were observed for the jaw but not the posterior tongue segments (Kearney et al., 2017). Similarly, at the word level, the jaw demonstrated greater impairments than the lips in PD (Connor et al., 1989). In fact, bradykinesia was observed only in the jaw (Connor et al., 1989), and jaw movements of talkers with severe dysarthria due to PD failed to reach the movement amplitude or velocities produced by healthy controls (Forrest et al., 1989).

In contrast, the study by Mefferd and Dietrich (2019), which included most of the talkers with PD in the current study, showed that jaw displacements during the diphthong “ai” in “kite” were comparable between the two groups, whereas decoupled tongue displacements were significantly smaller in the PD group than in healthy controls. Thus, contrasting findings in the literature may not necessarily be due to differences in dysarthria severity or heterogeneity of dysarthria symptoms within a specific severity range. Rather, the articulator that is being challenged the most within the specific epoch (i.e., sentence, word, phoneme segment) may show the greatest articulatory decrement. Specifically, words with high phonetic complexity in the current study may have placed great demands on the jaw. By contrast, the production of the diphthong “ai” may be particularly challenging for the posterior tongue. Therefore, future dysarthria studies need to compare articulatory performance across utterances that explicitly engage specific articulators. Ultimately, the auditory–perceptual consequences of such articulator-specific breakdowns need to be explored in order to optimize clinical dysarthria assessment.

Limitations and Future Directions

One limitation of the current study was that some talkers with PD and some healthy controls spoke with a regional (Southern) dialect, and the number of talkers with this dialect was not balanced between groups. However, this imbalance is unlikely to have confounded the between-groups findings because the Southern dialect is typically associated with a slow speaking rate (Jacewicz et al., 2010), whereas the speaking rate of our PD group tended to be faster than that of our controls (i.e., 199 and 183 words per minute, respectively).

A history of speech therapy may also have affected the articulatory performance of talkers with PD; we therefore compared the control and PD kinematic data with and without the participants who had undergone treatment. The three-way Group (control, PD) × Articulator (jaw, posterior tongue, anterior tongue) × Complexity (low, medium, high) interaction effects, as well as the two-way Group × Complexity and Group × Articulator interaction effects, were the same for all kinematic measures regardless of whether the treated participants (N = 4) were included in or excluded from the PD group.

As in other research studies, participants with PD in this study were tested while on medication, so our kinematic measurements may have been affected by medication. Future studies should consider examining articulatory motor performance in both the “on” and “off” medication states to determine the contribution of medication to complexity effects (Mücke et al., 2018).

Regarding phonetic complexity, the framework used in the current study is just one way to manipulate phonetic complexity; other approaches include the Index of Phonetic Complexity (Jakielski, 1998) and the Word Complexity Measure (Stoel-Gammon, 2010). The ability to detect impaired articulatory motor performance by leveraging phonetic complexity may be further improved with one of these alternative approaches. Another consideration for future studies is to include more stimuli at each phonetic complexity level that are well controlled for neighborhood density, phonotactic probability, and word length, in order to identify the stimuli that provide the most robust findings. Finally, kinematic findings will need to be linked to auditory–perceptual ratings to determine whether deficits at the kinematic level are also detectable at the perceptual level, particularly in individuals who have a medical diagnosis of PD but are nonsymptomatic for dysarthria. It could be argued that a complexity-based approach gives little consideration to impairments in other speech subsystems (i.e., respiration, phonation, resonance) that may also affect speech performance. However, a systematic manipulation of phonetic complexity should predominantly vary articulatory demands and minimally affect the other speech subsystems.

Conclusions

Smaller articulatory movements and slower movement speed were observed in talkers with predominantly mild dysarthria due to PD, mostly during words with high phonetic complexity. Additionally, jaw motor performance was affected to a greater extent than tongue motor performance in talkers with PD, at least for the stimuli used in the current study. These results support the feasibility of using phonetic complexity manipulations for the improved detection of articulatory deficits in talkers with PD. Ultimately, this line of research seeks to improve the sensitivity of dysarthria assessments by designing speech stimuli that systematically vary in their articulatory motor demands.

Acknowledgments

The article is based on research from the master's thesis by Mary Salazar who consented to the authorship order. This research was supported by the National Institutes of Health (R15DC016383, PI: Kuruvilla-Dugdale, and R03DC015075, PI: Mefferd). Furthermore, support was provided by Vanderbilt University VICTR Studio and ResearchMatch, which are resources funded by UL1 TR002243 from the National Center for Advancing Translational Sciences. The content of this article is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Advancing Translational Sciences or the National Institutes of Health. We would like to thank David Charles, Fenna Phibbs, Charles Davis, and Daniel Claassen from the Vanderbilt University Medical Center Movement Disorders Clinic as well as Keely McMahan from the Vanderbilt Pi Beta Phi Rehabilitation Institute for their support with participant recruitment. Finally, we would like to acknowledge the contributions of research assistants Lori Bharadwaj, Morgan Hakenewerth, Jerrica Horn, Megan Kane, Alexandra Linderer, Lyndsey Rector, Maggie Schneider, Molly Sifford, Reilly Strong, and Obada Zaidany for their help with data collection and analysis. We are particularly grateful to all those who participated in the study.

Funding Statement

The article is based on research from the master's thesis by Mary Salazar who consented to the authorship order. This research was supported by the National Institutes of Health (R15DC016383, PI: Kuruvilla-Dugdale, and R03DC015075, PI: Mefferd). Furthermore, support was provided by Vanderbilt University VICTR Studio and ResearchMatch, which are resources funded by UL1 TR002243 from the National Center for Advancing Translational Sciences.

References

- Allison K. M., & Hustad K. C. (2014). Impact of sentence length and phonetic complexity on intelligibility of 5-year-old children with cerebral palsy. International Journal of Speech-Language Pathology, 16(4), 396–407. https://doi.org/10.3109/17549507.2013.876667

- Anderson A., Lowit A., & Howell P. (2008). Temporal and spatial variability in speakers with Parkinson's disease and Friedreich's ataxia. Journal of Medical Speech-Language Pathology, 16(4), 173–180.

- Bandini A., Orlandi S., Giovannelli F., Felici A., Cincotta M., Clemente D., Vanni P., Zaccara G., & Manfredi C. (2016). Markerless analysis of articulatory movements in patients with Parkinson's disease. Journal of Voice, 30(6), 766.e1–766.e11. https://doi.org/10.1016/j.jvoice.2015.10.014

- Bang Y.-M., Min K., Sohn Y. H., & Cho S.-R. (2013). Acoustic characteristics of vowel sounds in patients with Parkinson disease. Neurorehabilitation, 32(3), 649–654. https://doi.org/10.3233/NRE-130887

- Brysbaert M., & New B. (2009). Moving beyond Kučera and Francis: A critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods, 41(4), 977–990. https://doi.org/10.3758/BRM.41.4.977

- Cohen J. (1992). A power primer. Psychological Bulletin, 112(1), 155–159. https://doi.org/10.1037/0033-2909.112.1.155

- Connor N. P., Abbs J. H., Cole K. J., & Gracco V. L. (1989). Parkinsonian deficits in serial multiarticulate movements for speech. Brain, 112(4), 997–1009. https://doi.org/10.1093/brain/112.4.997

- Critchley E. M. (1981). Speech disorders of Parkinsonism: A review. Journal of Neurology, Neurosurgery & Psychiatry, 44(9), 751–758. https://doi.org/10.1136/jnnp.44.9.751

- Darley F. L., Aronson A. E., & Brown J. R. (1969a). Clusters of deviant speech dimensions in the dysarthrias. Journal of Speech and Hearing Research, 12(3), 462–496. https://doi.org/10.1044/jshr.1203.462

- Darley F. L., Aronson A. E., & Brown J. R. (1969b). Differential diagnostic patterns of dysarthria. Journal of Speech and Hearing Research, 12(2), 246–269. https://doi.org/10.1044/jshr.1202.246

- Darling M., & Huber J. E. (2011). Changes to articulatory kinematics in response to loudness cues in individuals with Parkinson's disease. Journal of Speech, Language, and Hearing Research, 54(5), 1247–1259. https://doi.org/10.1044/1092-4388(2011/10-0024)

- Duffy J. R. (2013). Motor speech disorders: Substrates, differential diagnosis, and management. Elsevier Mosby.

- Ferrer I. (2011). Neuropathology and neurochemistry of nonmotor symptoms in Parkinson's disease. Parkinson's Disease, 2011, Article 708404. https://doi.org/10.4061/2011/708404

- Flint A. J., Black S. E., Campbell-Taylor I., Gailey G. F., & Levinton C. (1992). Acoustic analysis in the differentiation of Parkinson's disease and major depression. Journal of Psycholinguistic Research, 21(5), 383–399. https://doi.org/10.1007/BF01067922

- Folstein M. F., Folstein S. E., & McHugh P. R. (1975). “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12(3), 189–198. https://doi.org/10.1016/0022-3956(75)90026-6

- Forrest K., Weismer G., & Turner G. S. (1989). Kinematic, acoustic, and perceptual analyses of connected speech produced by Parkinsonian and normal geriatric adults. The Journal of the Acoustical Society of America, 85(6), 2608–2622. https://doi.org/10.1121/1.397755

- Gordon B. (1985). Subjective frequency and the lexical decision latency function: Implications for mechanisms of lexical access. Journal of Memory and Language, 24(6), 631–645. https://doi.org/10.1016/0749-596X(85)90050-6

- Green J. R., Wang J., & Wilson D. L. (2013, August). SMASH: A tool for articulatory data processing and analysis (Conference session). 14th Annual Conference of the International Speech Communication Association (INTERSPEECH 2013), Lyon, France.

- Grigos M. I., & Patel R. (2007). Articulator movement associated with the development of prosodic control in children. Journal of Speech, Language, and Hearing Research, 50(1), 119–130. https://doi.org/10.1044/1092-4388(2007/010)

- Hermes A., Mücke D., Thies T., & Barbe M. T. (2019). Coordination patterns in essential tremor patients with deep brain stimulation: Syllables with low and high complexity. Laboratory Phonology: Journal of the Association for Laboratory Phonology, 10(1). https://doi.org/10.5334/labphon.141

- Ho A. K., Bradshaw J. L., & Iansek R. (2008). For better or worse: The effect of levodopa on speech in Parkinson's disease. Movement Disorders: Official Journal of the Movement Disorder Society, 23(4), 574–580. https://doi.org/10.1002/mds.21899

- Jacewicz E., Fox R., & Wei L. (2010). Between-speaker and within-speaker variation in speech tempo of American English. The Journal of the Acoustical Society of America, 128(2), 839–850. https://doi.org/10.1121/1.3459842

- Jakielski K. J. (1998). Motor organization in the acquisition of consonant clusters (Doctoral dissertation). University of Texas at Austin.

- Kearney E., Giles R., Haworth B., Faloutsos P., Baljko M., & Yunusova Y. (2017). Sentence-level movements in Parkinson's disease: Loud, clear, and slow speech. Journal of Speech, Language, and Hearing Research, 60(12), 3426–3440. https://doi.org/10.1044/2017_JSLHR-S-17-0075

- Kent R. D. (1992). The biology of phonological development. In Ferguson C. A., Menn L., & Stoel-Gammon C. (Eds.), Phonological Development: Models, Research, Implications (pp. 65–90). York Press.

- Kim H., Martin K., Hasegawa-Johnson M., & Perlman A. (2010). Frequency of consonant articulation errors in dysarthric speech. Clinical Linguistics & Phonetics, 24(10), 759–770. https://doi.org/10.3109/02699206.2010.497238

- Kim Y., Weismer G., Kent R. D., & Duffy J. R. (2009). Statistical models of F2 slope in relation to severity of dysarthria. Folia Phoniatrica et Logopaedica, 61(6), 329–335. https://doi.org/10.1159/000252849

- Kuruvilla-Dugdale M., & Chuquilin-Arista M. (2017). An investigation of clear speech effects on articulatory kinematics in talkers with ALS. Clinical Linguistics & Phonetics, 31(10), 725–742. https://doi.org/10.1080/02699206.2017.1318173

- Kuruvilla-Dugdale M., Custer C., Heidrick L., Barohn R., & Govindarajan R. (2018). A phonetic complexity-based approach for intelligibility and articulatory precision testing: A preliminary study on talkers with amyotrophic lateral sclerosis. Journal of Speech, Language, and Hearing Research, 61(9), 2205–2214. https://doi.org/10.1044/2018_JSLHR-S-17-0462

- Kuruvilla-Dugdale M., & Mefferd A. (2017). Spatiotemporal movement variability in ALS: Speaking rate effects on tongue, lower lip, and jaw motor control. Journal of Communication Disorders, 67, 22–34. https://doi.org/10.1016/j.jcomdis.2017.05.002

- Logemann J. A., Fisher H. B., Boshes B., & Blonsky E. R. (1978). Frequency and cooccurrence of vocal tract dysfunctions in the speech of a large sample of Parkinson patients. Journal of Speech and Hearing Disorders, 43(1), 47–57. https://doi.org/10.1044/jshd.4301.47

- MacNeilage P. F., & Davis B. (1990). Acquisition of speech production: Frames, then content. In Attention and performance XIII: Motor representation and control (pp. 453–476). Erlbaum. https://doi.org/10.4324/9780203772010-15

- Mefferd A. S. (2015). Articulatory-to-acoustic relations in talkers with dysarthria: A first analysis. Journal of Speech, Language, and Hearing Research, 58(3), 576–589. https://doi.org/10.1044/2015_JSLHR-S-14-0188

- Mefferd A. S. (2017). Tongue- and jaw-specific contributions to acoustic vowel contrast changes in the diphthong /ai/ in response to slow, loud, and clear speech. Journal of Speech, Language, and Hearing Research, 60(11), 3144–3158. https://doi.org/10.1044/2017_JSLHR-S-17-0114

- Mefferd A. S., & Bissmeyer M. (2016). Bite block effects on vowel acoustics in talkers with amyotrophic lateral sclerosis and Parkinson's disease. The Journal of the Acoustical Society of America, 140(4), 3442. https://doi.org/10.1121/1.4971098

- Mefferd A. S., & Dietrich M. (2019). Tongue- and jaw-specific underpinnings of reduced and enhanced acoustic vowel contrast in talkers with Parkinson's disease. Journal of Speech, Language, and Hearing Research, 62(7), 2118–2132. https://doi.org/10.1044/2019_JSLHR-S-MSC18-18-0192

- Mefferd A. S., Pattee G. L., & Green J. R. (2014). Speaking rate effects on articulatory pattern consistency in talkers with mild ALS. Clinical Linguistics & Phonetics, 28(11), 799–811. https://doi.org/10.3109/02699206.2014.908239

- Mücke D., Hermes A., Roettger T. B., Becker J., Niemann H., Dembek T. A., Timmermann L., Visser-Vandewalle V., Fink G. R., Grice M., & Barbe M. T. (2018). The effects of thalamic deep brain stimulation on speech dynamics in patients with essential tremor: An articulographic study. PLOS ONE, 13(1), Article e0191359. https://doi.org/10.1371/journal.pone.0191359

- Munhall K. G., Ostry D. J., & Parush A. (1985). Characteristics of velocity profiles of speech movements. Journal of Experimental Psychology: Human Perception and Performance, 11(4), 457–474. https://doi.org/10.1037/0096-1523.11.4.457

- Nakagawa S. (2004). A farewell to Bonferroni: The problems of low statistical power and publication bias. Behavioral Ecology, 15(6), 1044–1045. https://doi.org/10.1093/beheco/arh107

- Nasreddine Z. S., Phillips N. A., Bédirian V., Charbonneau S., Whitehead V., Collin I., Cummings J. L., & Chertkow H. (2005). The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society, 53(4), 695–699. https://doi.org/10.1111/j.1532-5415.2005.53221.x

- Ostry D. J., & Munhall K. G. (1985). Control of rate and duration of speech movements. The Journal of the Acoustical Society of America, 77(2), 640–648. https://doi.org/10.1121/1.391882

- Rosen K. M., Goozée J. V., & Murdoch B. E. (2008). Examining the effects of multiple sclerosis on speech production: Does phonetic structure matter? Journal of Communication Disorders, 41(1), 49–69. https://doi.org/10.1016/j.jcomdis.2007.03.009

- Rusz J., Cmejla R., Tykalova T., Ruzickova H., Klempir J., Majerova V., Picmausova J., Roth J., & Ruzicka E. (2013). Imprecise vowel articulation as a potential early marker of Parkinson's disease: Effect of speaking task. The Journal of the Acoustical Society of America, 134(3), 2171–2181. https://doi.org/10.1121/1.4816541

- Sapir S. (2014). Multiple factors are involved in the dysarthria associated with Parkinson's disease: A review with implications for clinical practice and research. Journal of Speech, Language, and Hearing Research, 57(4), 1330–1343. https://doi.org/10.1044/2014_JSLHR-S-13-0039

- Sauvageau V., Roy J.-P., Langlois M., & Macoir J. (2015). Impact of the LSVT on vowel articulation and coarticulation in Parkinson's disease. Clinical Linguistics & Phonetics, 29(6), 424–440. https://doi.org/10.3109/02699206.2015.1012301

- Savariaux C., Badin P., Samson A., & Gerber S. (2017). A comparative study of the precision of Carstens and Northern Digital Instruments electromagnetic articulographs. Journal of Speech, Language, and Hearing Research, 60(2), 322–340. https://doi.org/10.1044/2016_JSLHR-S-15-0223

- Skodda S., Visser W., & Schlegel U. (2011). Vowel articulation in Parkinson's disease. Journal of Voice, 25(4), 467–472. https://doi.org/10.1016/j.jvoice.2010.01.009

- Smith A., Goffman L., Zelaznik H. N., Ying G., & McGillem C. (1995). Spatiotemporal stability and patterning of speech movement sequences. Experimental Brain Research, 104(3), 493–501. https://doi.org/10.1007/BF00231983

- Stoel-Gammon C. (2010). The word complexity measure: Description and application to developmental phonology and disorders. Clinical Linguistics & Phonetics, 24(4–5), 271–282. https://doi.org/10.3109/02699200903581059

- Svensson P., Henningson C., & Karlsson S. (1993). Speech motor control in Parkinson's disease: A comparison between a clinical assessment protocol and a quantitative analysis of mandibular movements. Folia Phoniatrica et Logopaedica, 45(4), 157–164. https://doi.org/10.1159/000266243

- Umemoto G., Tsuboi Y., Kitashima A., Furuya H., & Kikuta T. (2011). Impaired food transportation in Parkinson's disease related to lingual bradykinesia. Dysphagia, 26(3), 250–255. https://doi.org/10.1007/s00455-010-9296-y

- Vaden K. I., Halpin H. R., & Hickok G. S. (2009). Irvine phonotactic online dictionary, Version 2.0 [Data file].

- Vitevitch M. S., & Luce P. A. (2004). A web-based interface to calculate phonotactic probability for words and nonwords in English. Behavior Research Methods, Instruments, & Computers, 36(3), 481–487. https://doi.org/10.3758/BF03195594

- Walsh B., & Smith A. (2011). Linguistic complexity, speech production, and comprehension in Parkinson's disease: Behavioral and physiological indices. Journal of Speech, Language, and Hearing Research, 54(3), 787–802. https://doi.org/10.1044/1092-4388(2010/09-0085)

- Walsh B., & Smith A. (2012). Basic parameters of articulatory movements and acoustics in individuals with Parkinson's disease. Movement Disorders, 27(7), 843–850. https://doi.org/10.1002/mds.24888

- Weismer G., Jeng J.-Y., Laures J. S., Kent R. D., & Kent J. F. (2001). Acoustic and intelligibility characteristics of sentence production in neurogenic speech disorders. Folia Phoniatrica et Logopaedica, 53(1), 1–18. https://doi.org/10.1159/000052649

- Weismer G., Yunusova Y., & Bunton K. (2012). Measures to evaluate the effects of DBS on speech production. Journal of Neurolinguistics, 25(2), 74–94. https://doi.org/10.1016/j.jneuroling.2011.08.006

- Westbury J. R., Lindstrom M. J., & McClean M. D. (2002). Tongues and lips without jaws: A comparison of methods for decoupling speech movements. Journal of Speech, Language, and Hearing Research, 45(4), 651–662. https://doi.org/10.1044/1092-4388(2002/052)

- Wolters E. C. (2008). Variability in the clinical expression of Parkinson's disease. Journal of the Neurological Sciences, 266(1–2), 197–203. https://doi.org/10.1016/j.jns.2007.08.016

- Yorkston K., Beukelman D., Hakel M., & Dorsey M. (2007). Speech Intelligibility Test [Computer software]. Institute for Rehabilitation Science and Engineering at the Madonna Rehabilitation Hospital.

- Yunusova Y., Weismer G., Westbury J. R., & Lindstrom M. J. (2008). Articulatory movements during vowels in speakers with dysarthria and healthy controls. Journal of Speech, Language, and Hearing Research, 51(3), 596–611. https://doi.org/10.1044/1092-4388(2008/043)