Abstract

Motor-learning literature focuses on simple laboratory tasks because they are highly controlled and make it easy to apply manipulations that induce learning and adaptation. Recently, we introduced a billiards paradigm and demonstrated the feasibility of real-world neuroscience using wearables for naturalistic full-body motion tracking and mobile brain imaging. Here we developed an embodied virtual-reality (VR) environment for our real-world billiards paradigm, which allows us to control the visual feedback in this complex real-world task while maintaining the sense of embodiment. The setup was validated by comparing real-world ball trajectories with the trajectories of the virtual balls calculated by the physics engine. We then ran our short-term motor learning protocol in the embodied VR. Subjects played billiards shots holding the physical cue and hitting a physical ball on the table, while seeing it all in VR. We found short-term motor learning trends in the embodied VR comparable to those we previously reported in the physical real-world task. Embodied VR can be used for learning real-world tasks in a highly controlled environment, which enables applying visual manipulations, common in laboratory tasks and rehabilitation, to a real-world full-body task. Embodied VR makes it possible to manipulate feedback and apply perturbations to isolate and assess interactions between specific motor-learning components, and thus to address current questions of motor learning in real-world tasks. Such a setup could potentially be used for rehabilitation, where VR is gaining popularity but transfer to the real world is currently limited, presumably due to the lack of embodiment.

Introduction

Motor learning is a key feature of our development and our daily lives, from a baby learning to crawl, to an adult learning crafts or sports, or undergoing rehabilitation after an injury or a stroke. It is a complex process, which involves movement in many degrees of freedom (DoF) and multiple learning mechanisms. Yet the majority of motor learning literature focuses on simple lab-based tasks with limited DoF. The key advantage of these tasks (which made them so popular) is the ability to apply highly controlled manipulations. These manipulations can be haptic perturbations, in which a robotic manipulandum pushes subjects in a direction different from their intended movement, as in force-field adaptation [e.g. 1–4]. Alternatively, they can be visual manipulations, in which the object representing the subject’s end effector moves in a different direction, at a different speed, or by a different magnitude than the end effector itself, as in visuomotor rotation adaptation [e.g. 5–9]. Visual manipulations can also be applied to the feedback, by adding delays [10–13], or by showing online feedback of the full movement trajectory versus only the end point for knowledge of results [e.g. 14–16]. These manipulations allow researchers to isolate specific movement and learning components and to establish causality.

In contrast to lab-based tasks, real-world neuroscience approaches study neurobehavioural processes in natural behavioural settings [17–20]. We recently presented a naturalistic real-world motor learning paradigm that uses wearables for full-body motion tracking and EEG for mobile brain imaging while people perform actual real-world tasks, such as playing the competitive sport of pool-table billiards [21, 22]. We showed that short-term motor learning is a full-body process that involves multiple learning mechanisms, and that different subjects might prefer one mechanism over another. While studying real-world tasks takes us closer to understanding real-world motor learning, it lacks the key advantage of lab-based toy tasks: highly controlled manipulations of known variables.

To study the human brain and cognition mechanistically in real-world tasks, we must be able to introduce causal manipulations. This motivated us to develop and evaluate a real-world motor learning paradigm using a novel experimental framework: using Virtual Reality (VR [23, see review 24]) to apply controlled visual manipulations in a real-world task. VR has clear benefits, such as ease of controlling repetition, feedback, and motivation, as well as overall advantages in safety, time, space, equipment, cost efficiency, and ease of documentation [25, 26]. It is therefore commonly used in rehabilitation after stroke [27, 28] or brain injury [29, 30], and for Parkinson’s disease [31, 32]. In simple sensorimotor lab-based motor learning paradigms, VR training has been shown to yield results equivalent to those of real training [33–35], though adaptation in VR appears to rely more on explicit/cognitive strategies [35].

While VR is good for visual immersion, it often lacks the Sense of Embodiment: the senses associated with being inside, having, and controlling a body [36]. Sense of embodiment requires a sense of self-location, agency, and body ownership [37–39]. This study aims to set up and validate an Embodied Virtual Reality (EVR) for real-world motor learning, i.e. a VR environment in which all the objects the subjects see and interact with are physical objects that they can physically sense. This follows the operational definition of embodiment through behaviour: the ability to process sensorimotor information through technology in the same way as the properties of one’s own body parts [40]. Such an EVR setup would enable us to apply highly controlled manipulations in a real-world task. Here we develop an EVR for our billiards paradigm [21] by synchronizing the positions of the real-world billiards objects (table, cue stick, balls) into the VR environment using optical marker-based motion capture. To be clear, our virtual reality environment was presented simultaneously with, and veridically matched to, the real-world environment: a user looking at the pool table while holding a cue would see the pool table and the cue at the same location in the visual field whether their VR headset is on or off. Thus, the participant can play with a physical cue and a physical ball on the physical pool table while seeing the world from the same perspective in VR (see Supplemental Video or https://youtu.be/m68_UYkMbSk). We ran our real-world billiards experimental protocol in this novel EVR to explore the similarities and differences in short-term motor learning between the real-world paradigm and its EVR mock-up.

Methods

Experimental setup

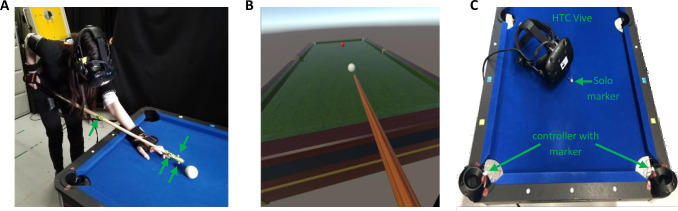

Our EVR experimental setup (Fig 1A) merges a virtual environment and a real-world environment, which are presented simultaneously and veridically aligned. It is composed of a real-world environment of a physical pool table, a VR environment of the same pool table (Fig 1B), and optical motion capture systems that link the two environments (Fig 1C). The positions of the virtual billiards table and balls that the subjects saw in VR were matched with their respective real-world positions during calibration, and the cue stick trajectory was streamed into the VR. This allowed subjects to physically engage in the VR task and to see their real-world actions and interactions with the ball and pool cue stick veridically aligned in VR. This setup enables us to apply experimental trickery that is common in lab-based tasks but usually impossible in the real world (e.g. scaling the ball velocity or the directional error to target up or down, adding delay, or hiding the ball’s trajectory) to a real-world task.

Fig 1. Experimental setup and calibration.

(A) 10 right-handed healthy subjects performed 300 repeated trials of billiards shots in Embodied Virtual Reality (EVR). Green arrows mark the motion capture markers used to track and stream the cue stick movement into the EVR environment. (B) Scene view in the EVR. Subjects were instructed to hit the cue ball (white), which was a physical ball on the table (in A), in an attempt to shoot the virtual target ball (red) towards the far-left corner. (C) For calibration between the environments, MoCap markers were attached to the HTC Vive controllers, which were placed in the pool table’s pockets, with an additional single marker at the cue ball position.

Real-world objects included the same billiards table, cue ball, target ball, and cue stick, used in our real-world billiard study [21]. Subjects were unable to see anything in the real-world environment; they could only see a virtual projection of the game objects. They were, however, able to receive tactile feedback from the objects by interacting with them.

The Optitrack system, with 4 motion capture cameras (Prime 13W) and Motive software (all made by Natural Point Inc., Corvallis, OR), was used to stream the position of the real-world cue stick into the VR using 4 markers on the pool cue stick (Fig 1A). The position of each marker was streamed to Unity3d using the NATNET Optitrack Unity3d Client plugin and an associated Optitrack Streaming Client script edited for the application. The positions were transformed from the Optitrack environment to the Unity3d environment with a transformation matrix derived during calibration. The cue stick asset was then reconstructed in VR using known geometric quantities of the cue stick and the marker locations (Fig 1B). The placement of markers on the cue stick, as well as the position and orientation of the cameras, were key to providing consistent marker tracking and accurate control in VR without significantly constraining the subject’s movement. The rotation of the cue stick or the position of the subject can block the line of sight between the markers on the cue stick and the cameras. Thus, to prevent errors in cue tracking, the cue stick disappears from the visual scene whenever markers become untracked, until proper tracking resumes.
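The cue reconstruction and the hide-on-tracking-loss rule can be illustrated with a short Python sketch. It is not the Unity3d/C# implementation used in the study; it assumes, hypothetically, that the four markers lie roughly along the shaft, that the last marker row is nearest the tip, and that `tip_offset_m` (the distance from the marker centroid to the tip) is known from the cue geometry. Untracked markers are represented as NaN rows.

```python
import numpy as np


def reconstruct_cue(markers, tip_offset_m):
    """Estimate cue-tip position and shaft direction from tracked markers.

    markers: (n, 3) marker positions; any NaN marks an untracked marker.
    tip_offset_m: hypothetical centroid-to-tip distance along the shaft (m).
    Returns (tip, direction), or None when tracking is incomplete, in which
    case the renderer would hide the cue until tracking resumes.
    """
    markers = np.asarray(markers, dtype=float)
    if np.isnan(markers).any():
        return None  # hide the cue rather than draw it in a wrong pose
    centroid = markers.mean(axis=0)
    # Principal axis of the (approximately collinear) markers = shaft direction
    _, _, vt = np.linalg.svd(markers - centroid)
    direction = vt[0]
    # Assumption: first row is nearest the butt, last row nearest the tip
    if np.dot(markers[-1] - markers[0], direction) < 0:
        direction = -direction
    tip = centroid + tip_offset_m * direction
    return tip, direction
```

Using the principal axis rather than any two markers averages out small per-marker noise; a rigid-body pose from the tracking software would be an equally valid alternative.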

The head-mounted display used was the HTC Vive Pro (HTC, Xindian, Taiwan). The HTC Vive system includes two base stations, which form a lighthouse tracking system emitting timed infrared pulses that are picked up by the headset and controllers. Here, the controllers were used only for environment calibration (see below). The frame rate of the VR display was 90 Hz. The VR billiards environment was built with the Unity3d physics engine (Unity Technologies, San Francisco, CA). The Unity3d assets (billiards table, cue stick, balls) were taken from an open-source Unity3d project [41] and scaled to match the dimensions of the real-world objects. Scripts were developed in C# to manage game object interactions, apply physics, and record data. Unity3d was used to develop custom physics for game collisions. The cue stick–cue ball collision force in Unity3d is computed from the median velocity and direction of the cue stick over the 10 frames (~0.11 seconds) before contact. Tactile and auditory feedback for this initial collision comes from the real-world objects. The cue ball–target ball collision is hardcoded as a perfectly inelastic collision, and a billiard-ball sound effect is played through the Vive headphones during this collision. The default Unity3d engine was used for ball dynamics, with specific mass and friction parameters tuned to match real-world ball behaviour as closely as possible. For the game physics validation, the physical cue ball on the pool table was tracked with a high-speed camera (Dalsa Genie Nano, Teledyne DALSA, Waterloo, Ontario) and its trajectories were compared with those of the VR ball.
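The cue stick–cue ball rule (median velocity and direction over the 10 frames before contact) can be sketched in Python as follows. This is an illustration, not the study’s C# script: it assumes the median is taken component-wise over frame-to-frame velocities of the cue tip, and it stops at the velocity vector because how that vector was mapped to a force in Unity3d is not specified here.

```python
import numpy as np

FRAME_RATE_HZ = 90.0  # VR display / physics frame rate
N_FRAMES = 10         # ~0.11 s at 90 Hz


def cue_velocity_at_contact(tip_positions, frame_rate_hz=FRAME_RATE_HZ,
                            n_frames=N_FRAMES):
    """Median cue-tip velocity over the last n_frames before contact.

    tip_positions: (T, 3) cue-tip positions; the last row is the contact frame.
    Returns (speed in m/s, unit direction vector).
    """
    p = np.asarray(tip_positions, dtype=float)[-(n_frames + 1):]
    v = np.diff(p, axis=0) * frame_rate_hz  # per-frame velocity vectors, m/s
    v_med = np.median(v, axis=0)            # component-wise median (assumption)
    speed = float(np.linalg.norm(v_med))
    if speed == 0.0:
        return 0.0, np.zeros(3)
    return speed, v_med / speed
```

The median makes the estimate robust to a single dropped or jittery frame just before contact, which a mean over the same window would not be.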

For environment calibration, the ‘y-axis’ was set directly upwards (orthogonal to the ground plane) in both the Unity3d and Optitrack environments during their respective initial calibrations. This means that only a 2D (x-z) transformation is required between the environments, with a linear ratio used to scale the height. The transformation matrix was determined by matching the positions of known coordinates in both the Unity3d and Optitrack environments. We attached markers to the Vive controllers, placed them in the corner pockets of the table during calibration mode, and placed a single marker at the cue ball location (Fig 1C), to compute the transformation matrix as well as the position and scale of the real-world table. This transformation matrix was then used to transform points from the Optitrack environment into Unity3d space.
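A minimal Python sketch of this calibration, assuming the 2D transformation is estimated as a least-squares affine map on homogeneous (x, z) coordinates from corresponding points (e.g. the four corner pockets plus the cue-ball spot). The affine model, the function names and the `height_ratio` parameter are illustrative assumptions; the text does not specify how the matrix or the height ratio was solved for.

```python
import numpy as np


def fit_xz_transform(src_xz, dst_xz):
    """Least-squares 2D affine map from Optitrack (x, z) to Unity3d (x, z).

    src_xz, dst_xz: (n, 2) corresponding points, n >= 3, not all collinear.
    Returns a 3x3 homogeneous matrix M with [x', z', 1] = M @ [x, z, 1].
    """
    src = np.asarray(src_xz, dtype=float)
    dst = np.asarray(dst_xz, dtype=float)
    A = np.hstack([src, np.ones((len(src), 1))])      # (n, 3)
    params, *_ = np.linalg.lstsq(A, dst, rcond=None)  # (3, 2): dst ~ A @ params
    M = np.eye(3)
    M[:2, :] = params.T
    return M


def optitrack_to_unity(p_xyz, M, height_ratio):
    """2D transform on (x, z); y (up) is scaled by a linear ratio."""
    x, y, z = p_xyz
    xp, zp, _ = M @ np.array([x, z, 1.0])
    return np.array([xp, height_ratio * y, zp])
```

With five reference points and six affine parameters the fit is overdetermined, so a single badly placed marker shows up as a non-zero residual rather than silently distorting the table.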

Billiard ball tracking

We validated the reliability of the virtual ball trajectories in the EVR by comparing them with the trajectories of the real balls during the same shots. The movement of the real balls on the pool table was tracked with a ceiling-mounted computer vision system (Genie Nano C1280 Color Camera, Teledyne Dalsa, Waterloo, Canada) at a resolution of 752x444 pixels and a frequency of 200 Hz. Videos were recorded and analysed with our custom software written for the real-world paradigm [21, 22]. Ball tracking was used only for the offline validation of the virtual ball trajectories in the EVR. During the experiment, the ball was placed on a marking on the table. The marked location was slightly sunken into the cloth, so positioning was highly accurate. Note also that the accuracy of the physical ball position mattered only for the tactile feedback of the ball and not for the ball trajectory, which was calculated by the game’s physics engine from the position of the virtual ball.

Experimental design

10 right-handed healthy human volunteers with normal or corrected-to-normal visual acuity (4 women and 6 men, aged 24±2) participated in the study following the experimental protocol from Haar et al [21]. All experimental procedures were approved by the Imperial College Research Ethics Committee and performed in accordance with the declaration of Helsinki. All volunteers gave informed consent prior to participating in the study. The volunteers, who had little to no previous experience with playing billiards, performed 300 repeated trials in the EVR setup, in which the cue ball (white) and the target ball (red) were placed in the same locations and the subject was asked to shoot the target ball towards the pocket of the far-left corner (Fig 1B). VR trials ended when ball velocities fell below a threshold value, and the next trial began when the subject moved the cue stick tip a set distance away from the cue ball start position. The trials were split into 6 sets of 50 trials with a short break in between. For the data analysis, we further split each set into two blocks of 25 trials each, resulting in 12 blocks. Throughout the short-term motor learning process, we recorded the subjects' full-body movements with a motion-tracking ‘suit’ of 17 wireless inertial measurement units (IMUs). The movement of all game objects in Unity3d (most notably ball and cue stick trajectories relative to the table) was captured in every frame at 90 Hz.

Full-body motion tracking

Kinematic data were recorded at 60 Hz using a wearable motion tracking ‘suit’ of 17 wireless IMUs (Xsens MVN Awinda, Xsens Technologies BV, Enschede, The Netherlands). Data acquisition was done via a graphical interface (MVN Analyze, Xsens Technologies BV, Enschede, The Netherlands). The Xsens joint angles and position data were exported as XML files and analyzed using custom software written in MATLAB (R2017a, The MathWorks, Inc., MA, USA). The Xsens full-body kinematics were extracted as joint angles in 3 degrees of freedom for each joint, following the International Society of Biomechanics (ISB) recommendations for Euler angle extraction: Z (flexion/extension), X (abduction/adduction), and Y (internal/external rotation).

Analysis of movement velocity profiles

From the sensor data we extracted the joint angular velocity profiles of all joints in all trials. We analysed the joint angular velocity profiles instead of the probability distributions of absolute joint angles, as the latter are more sensitive to sensor drift. We previously showed that joint angular velocity probability distributions are more subject-invariant than joint angle distributions, suggesting that these are the reproducible features across subjects in natural behaviour [42]. In the current task, this robustness is quite intuitive: all subjects stood in front of the same pool table and used the same cue stick, so the subjects’ body size influenced their joint angle distributions (taller subjects with longer arms had to bend more towards the table and flex their elbow less than shorter subjects with shorter limbs) but not their joint angular velocity probability distributions [21]. We defined the peak of the trial as the peak of the average absolute velocity across the DoFs of the right shoulder and the right elbow. We aligned all trials around the peak of the trial and cropped a window of 1 s around the peak for the analysis of joint angles and velocity profiles.
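The peak definition and 1 s crop can be sketched in Python (the original analysis used custom MATLAB code). Assumptions: velocities are obtained by numerical differentiation of the 60 Hz joint angles with `np.gradient`, `peak_dof_idx` lists the column indices of the right-shoulder and right-elbow DoFs, and trials whose window would run past the recording are skipped (returned as `None`).

```python
import numpy as np

FS_HZ = 60  # Xsens sampling rate


def align_trial(joint_angles_deg, peak_dof_idx, fs_hz=FS_HZ, window_s=1.0):
    """Crop joint angular velocities in a window centred on the trial peak.

    joint_angles_deg: (T, D) joint angles for one trial (D DoFs).
    peak_dof_idx: column indices of the right-shoulder and right-elbow DoFs.
    Returns (D, W) velocity profiles (W = window_s * fs_hz samples), or None
    if the window does not fit inside the recording.
    """
    vel = np.gradient(np.asarray(joint_angles_deg, dtype=float),
                      1.0 / fs_hz, axis=0)  # deg/s
    # Peak = maximum of the mean absolute velocity over the selected DoFs
    peak_signal = np.abs(vel[:, peak_dof_idx]).mean(axis=1)
    peak = int(np.argmax(peak_signal))
    half = int(round(window_s * fs_hz / 2))
    if peak - half < 0 or peak + half > len(vel):
        return None
    return vel[peak - half: peak + half].T
```

Aligning on a kinematic peak rather than on, say, the cue–ball contact time keeps the analysis purely in body space, which matches the idea of comparing velocity profiles across trials.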

Task performance & learning measures

Task performance was measured by the trial error, defined as the absolute angular difference between the direction of the target ball’s movement vector and the desired direction that would land the target ball in the centre of the pocket. The decay of error over trials is the clearest signature of learning in the task. To calculate success rates and intertrial variability, the trials were divided into blocks of 25 trials each (each experimental set of 50 trials was divided into two blocks to increase resolution). The success rate in each block was defined as the proportion of successful trials (those in which the ball fell into the pocket). To improve robustness and account for outliers, we fitted the errors in each block with a t-distribution and used the location and scale parameters (μ and σ) as the block’s centre and variability measures. To correct for learning occurring within a block, we also calculated a corrected intertrial variability [21]: the intertrial variability of the residuals from a regression line fitted to the ball direction in each block.
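The block-level measures can be sketched in Python with `scipy.stats.t.fit`, which returns the degrees of freedom, location (μ) and scale (σ). This is an illustrative sketch, not the study’s MATLAB code. Assumptions: directions are 2D vectors in table coordinates, and the corrected intertrial variability is taken as the t-distribution scale of the residuals around a linear fit of ball direction against trial number within the block.

```python
import numpy as np
from scipy import stats


def directional_error_deg(ball_vec, desired_vec):
    """Absolute angle (deg) between target-ball motion and desired direction (2D)."""
    a = np.arctan2(ball_vec[1], ball_vec[0])
    b = np.arctan2(desired_vec[1], desired_vec[0])
    d = np.angle(np.exp(1j * (a - b)))  # wrap difference to (-pi, pi]
    return abs(np.degrees(d))


def block_stats(errors_deg, directions_deg, success):
    """Centre, variability, learning-corrected variability and success rate."""
    errors = np.asarray(errors_deg, dtype=float)
    directions = np.asarray(directions_deg, dtype=float)
    _, mu, sigma = stats.t.fit(errors)  # robust centre and spread of errors
    # Remove within-block learning: residuals around a linear trend over trials
    trials = np.arange(len(directions))
    slope, intercept = np.polyfit(trials, directions, 1)
    resid = directions - (slope * trials + intercept)
    _, _, sigma_corr = stats.t.fit(resid)
    return {"centre": mu, "itv": sigma, "itv_corrected": sigma_corr,
            "success_rate": float(np.mean(success))}
```

The heavy tails of the t-distribution down-weight occasional wild shots, so a single miscue inflates σ far less than it would inflate a standard deviation.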

To quantify the within-trial variability structure of the body movement, we used the generalised variance, which is the determinant of the covariance matrix [43] and is intuitively related to the multidimensional scatter of data points around their mean. We measured the generalised variance over the velocity profiles of all joints in each trial to see how it changes with learning in the EVR task relative to the real-world task [21]. To study the complexity of the body movement, defined by the number of degrees of freedom used by the subject, we applied principal component analysis (PCA) across joints to the velocity profiles of each trial for each subject and used the number of PCs that explain more than 1% of the variance to quantify the degrees of freedom in each trial’s movement [21]. We also calculated the manipulative complexity, suggested by Belić and Faisal [44] as a way to quantify complexity for a given number of PCs on a fixed scale (C = 1 if all PCs contribute equally, and C = 0 if one PC explains all data variability).
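The three per-trial measures can be sketched in Python on a (W, D) matrix of velocity profiles (time samples by joint DoFs). Two assumptions: the generalised variance is returned as a log-determinant via `np.linalg.slogdet` for numerical stability with many DoFs, and the manipulative complexity uses the formulation C = 1 − 2/(N−1) · Σₖ (Σᵢ≤ₖ σᵢ − k/N), where σᵢ is the variance fraction of the i-th PC. That formula reproduces the endpoints stated above, but it should be checked against [44] before use.

```python
import numpy as np


def log_generalised_variance(vel):
    """log det of the covariance of a (W, D) velocity-profile matrix."""
    sign, logdet = np.linalg.slogdet(np.cov(vel, rowvar=False))
    return logdet if sign > 0 else -np.inf  # singular covariance -> -inf


def pca_variance_fractions(vel):
    """Fraction of variance explained by each PC, in descending order."""
    eig = np.linalg.eigvalsh(np.cov(vel, rowvar=False))[::-1]
    eig = np.clip(eig, 0.0, None)  # remove tiny negative round-off values
    return eig / eig.sum()


def n_pcs_above(fracs, threshold=0.01):
    """Number of PCs explaining more than `threshold` of the variance."""
    return int(np.sum(np.asarray(fracs) > threshold))


def manipulative_complexity(fracs):
    """1 when all PCs contribute equally, 0 when one PC explains everything."""
    fracs = np.asarray(fracs, dtype=float)
    n = len(fracs)
    k = np.arange(1, n + 1)
    return 1.0 - 2.0 / (n - 1) * np.sum(np.cumsum(fracs) - k / n)
```

The complexity measure compares the cumulative explained-variance curve with the diagonal of a perfectly uniform spectrum, which is why it lives on a fixed [0, 1] scale regardless of how many PCs are retained.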

As a measure of task performance in body space, correlation distances (one minus the Pearson correlation coefficient) were calculated between the velocity profile of each joint in each trial and the velocity profiles of that joint in all successful trials. The minimum of these correlation distances gives a single Velocity Profile Error (VPE) for each joint in each trial [21]. While multiple combinations of body variables can all lead to successful task performance, this measure looks for the distance from the nearest successful solution used by the subject, and thus provides a metric that accounts for the redundancy of the body.
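A Python sketch of the VPE for a single joint DoF, assuming the profiles have already been peak-aligned to equal length. One choice is an assumption not stated in the text: a successful trial is not compared with itself (otherwise its VPE would trivially be zero), so a trial whose only successful match is itself gets an infinite VPE.

```python
import numpy as np


def velocity_profile_error(profiles, success):
    """Minimal correlation distance to any (other) successful trial.

    profiles: (n_trials, W) aligned velocity profiles of one joint DoF.
    success: boolean array of length n_trials.
    Returns an (n_trials,) array of VPE values in [0, 2].
    """
    X = np.asarray(profiles, dtype=float)
    succ = np.flatnonzero(success)
    r = np.corrcoef(X)              # Pearson r between all pairs of trials
    dist = 1.0 - r[:, succ]         # correlation distance to successful trials
    for j, s in enumerate(succ):    # assumption: exclude self-comparison
        dist[s, j] = np.inf
    return dist.min(axis=1)
```

Taking the minimum, rather than the distance to a single mean successful profile, is what lets the metric respect the body’s redundancy: any successful movement pattern the subject has produced counts as a valid template.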

Results

Our embodied Virtual Reality (EVR) framework is presented simultaneously with the real-world environment and tracks it accurately and veridically. We found that players were reliably able to shoot (and aim) the physical pool ball with the physical cue while their head and eyes were covered by the headset and they saw the physical scene rendered in virtual reality. In the following, we first describe how we validated virtual versus physical reality in terms of pool-playing task performance, and then report the results of the short-term motor learning experiments.

Ball trajectories validation

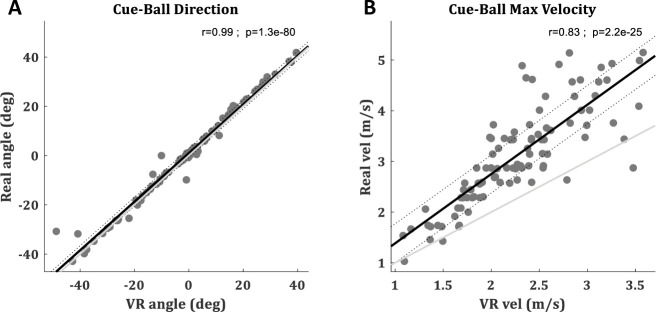

To validate how well a billiards shot in the EVR resembles the same shot in real life, the cue ball trajectories of 100 shots in various directions (-50˚ < ø < 50˚, where 0 is straight forward) were compared between the two environments. The cue ball angles were almost perfectly correlated (Pearson correlation r = 0.99) and the root mean squared error (RMSE) was below 3 degrees (RMSE = 2.85˚). Thus, the angle of the virtual ball in the EVR, which defines performance in this billiards task, was highly consistent with the angle of the real-world ball (Fig 2A). The velocities were also highly correlated between the environments (Pearson correlation r = 0.83), but ball velocities in VR were slightly slower than on the real pool table (Fig 2B), leading to an RMSE of 1.03 m/s.
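The agreement statistics can be sketched in Python. The example is illustrative (synthetic numbers, not the study data) and shows why both statistics are reported: Pearson r is insensitive to a systematic scaling, whereas the RMSE captures it, which is how the velocities can correlate well and still differ by about 1 m/s.

```python
import numpy as np


def compare_environments(real, virtual):
    """Pearson r, RMSE and regression line between paired real/virtual values."""
    real = np.asarray(real, dtype=float)
    virtual = np.asarray(virtual, dtype=float)
    r = np.corrcoef(real, virtual)[0, 1]
    rmse = np.sqrt(np.mean((virtual - real) ** 2))
    slope, intercept = np.polyfit(real, virtual, 1)  # virtual ~ slope*real + b
    return {"r": r, "rmse": rmse, "slope": slope, "intercept": intercept}


# Synthetic example: virtual speeds uniformly 20% slower than real ones
stats_ = compare_environments([1.0, 2.0, 3.0, 4.0], [0.8, 1.6, 2.4, 3.2])
# r is 1 (perfect correlation) while rmse is about 0.55 and slope is 0.8
```

A regression slope below 1 together with a high r, as in this toy case, is the signature of a scaling difference rather than of noisy or unreliable physics.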

Fig 2. Ball trajectories validation.

Within-shot comparison between the real cue ball trajectory (measured with a high-speed camera) and the virtual ball trajectory (calculated by the VR physics engine). Each point is a shot. A total of 100 billiard shots at various directions (-50˚ < ø < 50˚, where 0 is straight forward) are presented. (A) Cue ball angles. (B) Maximum velocity of the cue ball during each trial. The regression line is in black, with its 95% CI in dotted lines. The identity line is in light gray.

Short-term motor learning experiment

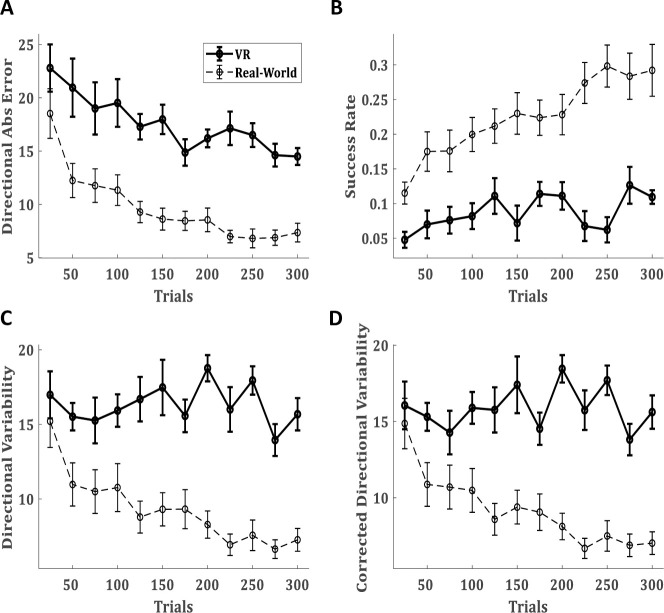

To compare learning of the billiards shot in our EVR with learning in real life, we ran the same experimental protocol as in [21] and compared the subjects' mean performance (Fig 3). In Fig 3, dashed lines are taken from our non-VR physical real-world study [21], which used the identical behavioural protocol, lab environment, table, tracking methods, etc., and solid lines are the EVR data from this work. The physical real-world data are presented only to ease a qualitative comparison between the environments, so we avoided statistical comparison between the two. Following [21], the trials were divided into blocks of 25 trials each (each experimental set of 50 trials was divided into two blocks to increase resolution) to assess performance. Over blocks, there is a gradual decay in the mean absolute directional error (Fig 3A). While this decay is slower and smaller in the EVR than in the real-world task, over the session subjects reduced their error by 8.3±2.47 degrees (mean±SD), and this decay was significant (paired t-test p = 0.008). Accordingly, success rates increased over blocks (Fig 3B). The increase in success rates was also smaller in the EVR than in the real-world task. Over the session, subjects improved their success rates by 6.2%±1.78% (mean±SEM), and this increase was significant (paired t-test p = 0.007).
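A minimal Python sketch of a within-subject session test with `scipy.stats.ttest_rel`. The text does not state which blocks were compared; the sketch assumes, hypothetically, the first versus the last block of each subject. It also returns both the SD and the SEM of the per-subject change, since the two summaries reported above differ by a factor of √n (SEM = SD/√10 for 10 subjects).

```python
import numpy as np
from scipy import stats


def first_vs_last_block(block_means):
    """Paired t-test of per-subject block means (assumed: first vs last block).

    block_means: (n_subjects, n_blocks) array, e.g. (10, 12).
    Returns (mean change, SD of change, SEM of change, p-value).
    """
    block_means = np.asarray(block_means, dtype=float)
    first, last = block_means[:, 0], block_means[:, -1]
    diff = first - last
    _, p = stats.ttest_rel(first, last)
    sd = diff.std(ddof=1)
    sem = sd / np.sqrt(len(diff))
    return diff.mean(), sd, sem, p
```

Stating SD or SEM consistently for both error and success-rate changes would make the two effect sizes directly comparable.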

Fig 3. Task performance in EVR vs real-world.

(A) The mean absolute directional error of the target ball, (B) the success rate, (C) directional variability, and (D) directional variability corrected for learning (see text). (A-D) are presented over blocks of 25 trials. Solid lines present the performance of 10 subjects in the novel EVR environment. For comparison, dashed lines present the performance of a group of 30 subjects in the same pool paradigm in the real world (with no VR) from our previous study [21].

We also see a decay in inter-subject variability over learning, reflected in the shrinking error bars of the directional error over time (Fig 3A). These short-term motor learning trends in directional error and success rates are similar to those reported in the real world. Nevertheless, there are clear differences in the learning curves. In the EVR, short-term motor learning is slower than in the real-world task, and subjects’ performance is worse. The most striking difference between the environments is in intertrial variability (Fig 3C). In the real-world task, there was a clear decay in intertrial variability throughout the experiment, whereas in the EVR we see no clear trend. Corrected intertrial variability (Fig 3D), calculated to correct for learning within the block [21], also showed no short-term motor learning trend.

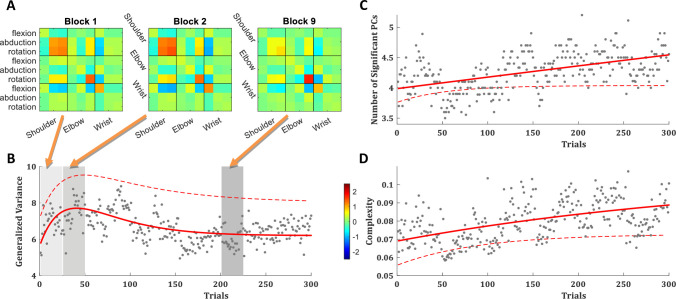

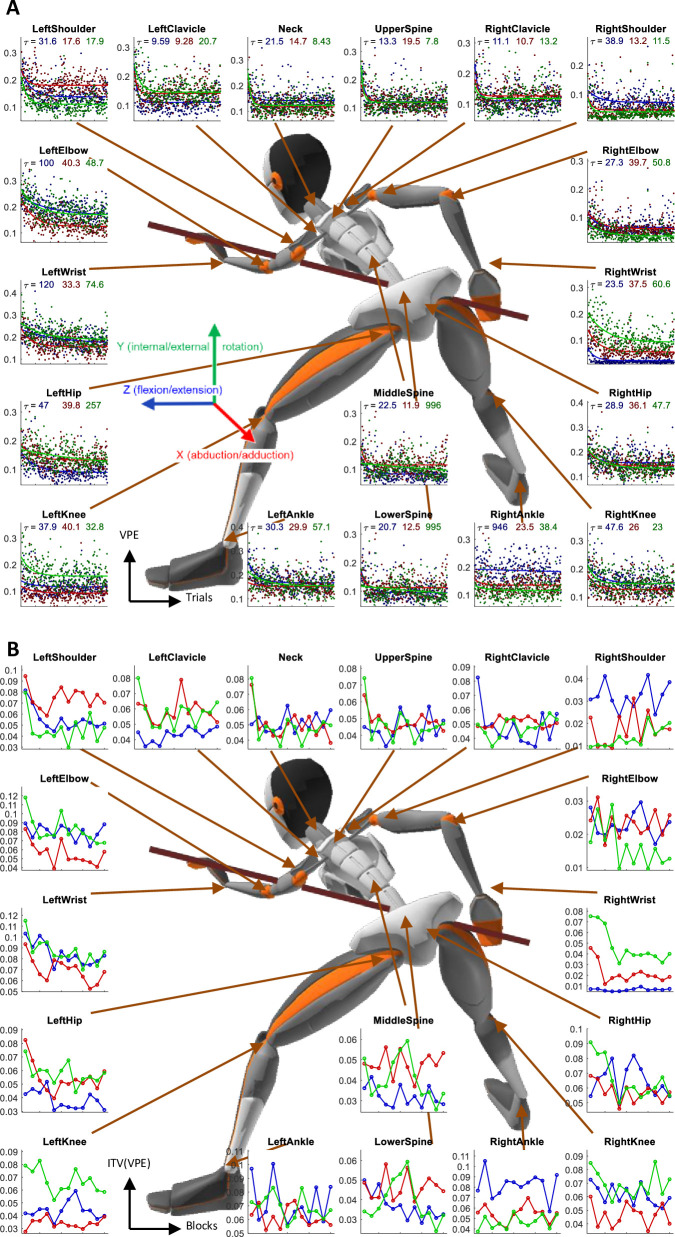

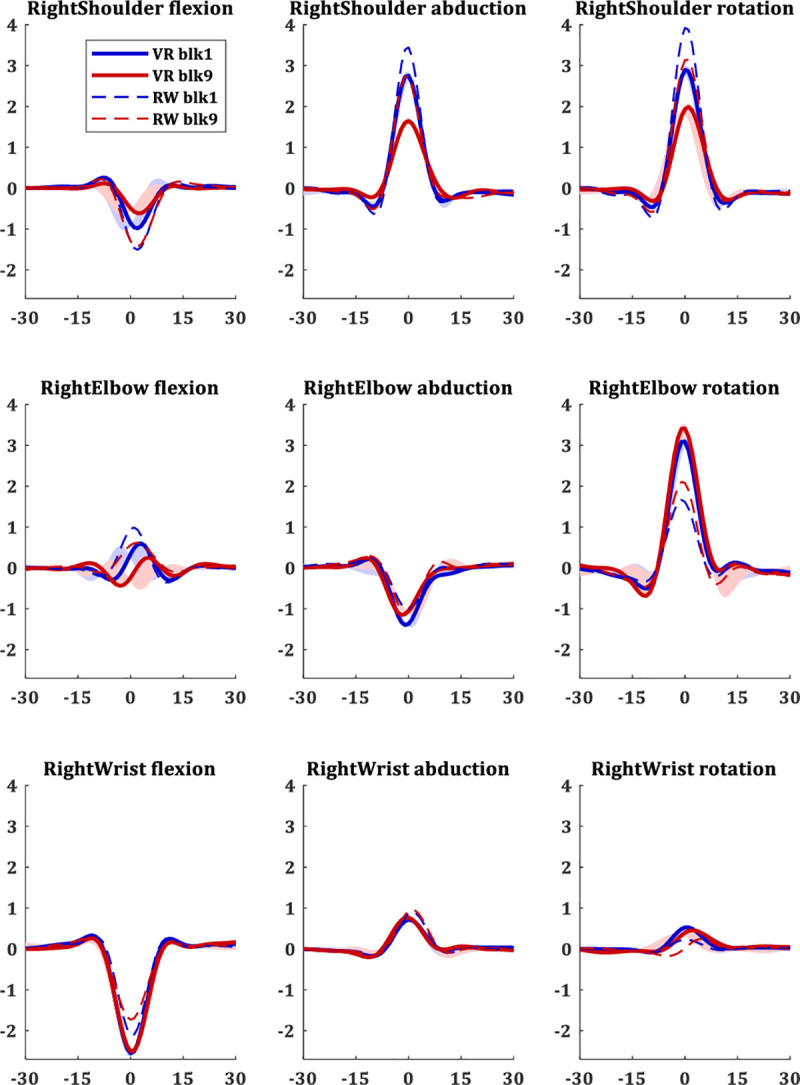

The full-body movements were analysed through the velocity profiles of all joints, as these are less sensitive to potential drift in the IMUs and more robust and reproducible across subjects and trials in natural behaviour [21, 42]. The velocity profiles of the different joints in the EVR showed that the movement is concentrated in the right arm, as expected. The velocity profiles of the right arm showed the same changes following short-term motor learning as in the real-world task. Shoulder velocities decreased from the initial trials to the trials of the learning plateau, suggesting less shoulder movement, while elbow rotation velocity increased over learning (Fig 4). The covariance matrix of the velocity profiles of the different joints, averaged across blocks of trials of all subjects, emphasizes this trend. In the first block, most of the variance in the movement is in the right shoulder, while in the 9th block (trials 201–225, the beginning of the learning plateau) the covariance matrix has an overall similar structure, but with a strong decrease in shoulder variance and a strong increase in the variance of right elbow rotation (Fig 5A). This trend is similar to the one observed in the real-world task, and even more robust.

Fig 4. Velocity profiles in EVR vs real-world.

Velocity profiles in 3 degrees of freedom (DoF) for each of the right arm joints. Blue lines are the velocity profiles during the first block (trials 1–25), and red lines are the velocity profiles after learning plateaus, during the ninth block (trials 201–225). Solid lines present the velocity profiles of 10 subjects in the novel EVR environment. For comparison, dashed lines present the velocity profiles of a group of 30 subjects in the same pool paradigm in the real world (with no VR) from our previous study [21].

Fig 5. Variance and complexity comparison.

(A) The variance-covariance matrix of the right arm joints’ velocity profiles in EVR, averaged across subjects and trials over the first block, second block and ninth block (after learning plateaus). (B) The trial-by-trial generalized variance (GV), with a double-exponential fit (red curve). (C) The number of principal components (PCs) that explain more than 1% of the variance in the velocity profiles of all joints in a single trial, with an exponential fit (red curve). (D) The manipulative complexity (Belić and Faisal, 2015), with an exponential fit (red curve). (B-D) Averaged across all 10 subjects in the novel EVR environment, over all trials. Grey dots are the trial averages for the EVR data. Solid red lines are curve fits for the EVR data. For comparison, dashed lines present the curve fits to a group of 30 subjects in the same pool paradigm in the real world (with no VR) from our previous study [21].

The generalized variance (GV; the determinant of the covariance matrix [43]) over the velocity profiles of all joints was lower in the EVR than in the real world but showed the same trend: it increased rapidly over the first ~30 trials and then decreased slowly (Fig 5B), suggesting active control of the exploration-exploitation trade-off. The covariance (Fig 5A) shows that the changes in the GV were driven by an initial increase followed by a decrease in the variance of the right shoulder. As in the real world, the internal/external rotation of the right elbow in the EVR showed a continuous increase in its variance, which did not follow the trend of the GV.

Principal component analysis (PCA) across joints of the velocity profiles per trial for each subject showed that subjects used more degrees of freedom in their movement in the EVR than in the real-world task (Fig 5C and 5D). While in both environments ~90% of the variance in every trial can be explained by the first PC, there is a slow but consistent rise in the number of PCs that explain more than 1% of the variance in the joint velocity profiles (Fig 5C). The manipulative complexity, suggested by Belić and Faisal [44] as a way to quantify complexity for a given number of PCs on a fixed scale (C = 1 if all PCs contribute equally, and C = 0 if one PC explains all data variability), showed the same trend (Fig 5D). This suggests that, in both environments, subjects use more degrees of freedom in their movement over trials, and that in the EVR they used slightly more DoF than in the real-world task.

As a measure of task performance in body space, we use the Velocity Profile Error (VPE), as in Haar et al [21]. VPE is defined as the minimal correlation distance (one minus the Pearson correlation coefficient) between the velocity profile of each joint in each trial and the velocity profiles of that joint in all successful trials. As in the real world, in the EVR environment we found that VPE decays over trials along an exponential learning curve for all joints (Fig 6A).
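The exponential learning-curve fit, and the time constant it yields, can be sketched in Python with `scipy.optimize.curve_fit`. The model form a·exp(−trial/τ) + c, the starting values and the positivity bound on τ are assumptions for illustration; the exact fitting procedure is not specified here. The GV curve in Fig 5B would need a second exponential term instead.

```python
import numpy as np
from scipy.optimize import curve_fit


def exp_decay(trial, a, tau, c):
    """Exponential learning curve: amplitude a, time constant tau, asymptote c."""
    return a * np.exp(-trial / tau) + c


def fit_learning_curve(values):
    """Fit exp_decay to a trial-by-trial series; returns (a, tau, c)."""
    v = np.asarray(values, dtype=float)
    trials = np.arange(1, len(v) + 1, dtype=float)
    p0 = (v[0] - v[-1], len(v) / 5.0, v[-1])  # rough starting guesses
    bounds = ([-np.inf, 1e-6, -np.inf], [np.inf, np.inf, np.inf])  # tau > 0
    params, _ = curve_fit(exp_decay, trials, v, p0=p0, bounds=bounds)
    return params
```

Bounding τ to positive values keeps the optimiser away from exploding exponentials; the fitted τ is then directly comparable across joints, as in the time constants reported in Fig 6A.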

Fig 6. Learning over joints.

Velocity Profile Error (VPE) and intertrial variability reduction across all joints in the EVR task. (A) The trial-by-trial VPE for all 3 DoF of all joints, averaged across all subjects, with an exponential fit. The time constants of the fits are reported under the title. Color coded by DoF: blue, flexion/extension; red, abduction/adduction; green, internal/external rotation. (B) VPE intertrial variability (ITV) over blocks of 25 trials, averaged across all subjects. Data are averaged over the performance of 10 subjects in the novel EVR environment.

Intertrial variability in joint movement was also measured over the VPEs in each block. Unlike the real-world task, where learning was evident in the decay of VPE intertrial variability, in the EVR there was no such decay in most joints (Fig 6B). This is in line with the lack of decay in the intertrial variability of the directional error (Fig 3C and 3D).

Discussion

In this paper, we present a novel embodied VR framework capable of providing controlled manipulations to better study naturalistic motor learning in a complex real-world setting. By veridically aligning real-world objects and displaying them in the VR environment, we were able to provide real-world proprioceptive feedback (which is missing in most VR environments), which should enhance the sense of immersion and thus embodiment. We demonstrate the similarities and differences between short-term motor learning in the EVR environment and in the real-world environment. Thus, we can now directly compare full-body motor learning in a real-world task between VR and the real world. Before discussing our findings in detail, we reiterate the motivation for this work, namely, to develop a framework that allows neuroscience researchers to use our experimental techniques and protocols to perform causal interventions in real-world tasks by manipulating visual feedback. Examples include changing the velocity of the virtual ball, manipulating the force perception of the shot, changing the ball directional error feedback to the subject by modulating it at will, or hiding the ball completely once it has been touched [e.g. 45]. In the context of rehabilitation, manipulations can purposefully increase task difficulty to enhance transfer [46].

There is considerable evidence that humans can learn motor skills in VR and transfer that learning from VR to the real world. Yet most of this evidence comes from simplified movements [e.g. 47–50], while successful transfer of complex skill learning remains a challenge. Accordingly, there is a real need to enhance transfer to make VR useful for rehabilitation and assistive technology applications [51, for review see 52]. Current thinking suggests that transfer should improve the more closely VR replicates the real world [33, 52]. The physical interactions in our EVR generate accurate force, touch and proprioceptive perceptions, which are lacking in conventional VR setups. This suggests that our EVR framework could enhance learning and transfer between VR and the real world. A recent review on learning and transfer in VR [52] also highlights the fidelity and dimensionality of the virtual environment as key components that determine learning and transfer, which are also addressed in our setup. In that sense, EVR mock-ups of real-life tasks, like the one presented here, should be the way forward, as they address all the key components that determine learning and transfer in real-world tasks. Since both VR and motion tracking technologies are becoming affordable, EVR setups like the one presented here could potentially be deployed in clinical rehabilitation settings in the foreseeable future. Much work remains to validate these setups and to make them accessible for deployment (without programming expertise), but the use of affordable off-the-shelf hardware and an open-source gaming engine makes it a relatively low-cost yet high-performance system.

Limitations

The comparison of the ball trajectories between the EVR and the real-world environments highlights the similarity in ball direction, which is the main parameter that determines task error and success. Nevertheless, there were significant velocity differences between the environments. The virtual ball velocities were set to optimize the subjects’ experience, accounting for deviations in ball physics due to friction, spin, and follow-through, which were not modelled in the VR. Because of these unmodelled effects, the cue ball in VR reaches its maximum velocity almost instantaneously, whereas in the real world there is an acceleration phase. For the current version of the setup, we neglected these differences, assuming they would not affect the sense of embodiment of completely naïve pool players. Future studies testing expert players on the setup will require more accurate game physics in the EVR.
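The distinction drawn above, between ball direction (which determines task error) and ball velocity (where the environments differ), can be sketched as follows. This is a minimal illustration, not the validation code used in the study: it assumes each ball trajectory is a list of (x, y) table positions sampled at a fixed interval `dt`, it takes the direction from the start-to-end displacement (a simplification that ignores curved paths), and all function names and example trajectories are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def ball_direction_deg(trajectory: Sequence[Point]) -> float:
    """Direction of travel in degrees, from the first to the last sampled position."""
    (x0, y0), (x1, y1) = trajectory[0], trajectory[-1]
    return math.degrees(math.atan2(y1 - y0, x1 - x0))


def angular_difference_deg(a: float, b: float) -> float:
    """Signed difference a - b in degrees, wrapped to [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def segment_speeds(trajectory: Sequence[Point], dt: float) -> List[float]:
    """Speed over each sampling interval (distance units per second)."""
    return [math.dist(p, q) / dt for p, q in zip(trajectory, trajectory[1:])]


def time_to_peak_speed(trajectory: Sequence[Point], dt: float) -> float:
    """Time (s) from the first sample to the end of the fastest interval."""
    speeds = segment_speeds(trajectory, dt)
    return dt * (speeds.index(max(speeds)) + 1)


# Hypothetical cue-ball trajectories sampled every 0.1 s: the real ball accelerates,
# the virtual ball starts at its maximum speed and then slows down.
real = [(0.0, 0.0), (0.05, 0.05), (0.2, 0.2), (0.4, 0.4)]
virtual = [(0.0, 0.0), (0.3, 0.3), (0.55, 0.55), (0.75, 0.75)]

direction_error = angular_difference_deg(ball_direction_deg(virtual), ball_direction_deg(real))
print(f"direction difference: {direction_error:.2f} deg")
print("time to peak speed (real, virtual):",
      time_to_peak_speed(real, 0.1), time_to_peak_speed(virtual, 0.1))
```

In this toy example the two balls travel in the same direction, so the direction-based task error is identical, while the timing of peak speed differs; this mirrors, in simplified form, the pattern described in the paragraph above.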

Another limitation of the current EVR setup is that subjects cannot see their own limbs in the environment, whereas in the real world the positions of the subject’s own limbs may influence how the task is learned. This difference can probably be neglected, given the extensive literature suggesting that learning is optimized by an external focus of attention [for review see 53]; thus, the lack of body vision should not significantly affect learning. The lack of an avatar (a veridical rendering of the person’s body) could also potentially affect embodiment in VR. During a pool shot, however, most of the body is out of sight and only the hands holding the cue stick would be visible. While the hands and arms are not visible in our setup, the cue stick is, so there is a multisensory correlation between the haptic and proprioceptive feedback of handling the pool cue as a physical tool and the visual feedback from VR. This multisensory correspondence mirrors the strategy used to induce embodiment in, e.g., the rubber hand illusion, as well as in other experiments that measure embodiment through self-location [54, 55].

Operating in VR requires acclimatisation and potentially learning a slightly altered visuomotor mapping (i.e. motor adaptation). While stereoscopic VR technology seeks to present a true three-dimensional view of a scene to the user, distortions such as minification, shear distortion, or pincushion distortion can occur [56]. These distortions depend on the specific hardware setup, and estimates of egocentric distance in VR may be off by up to 25% [56]. Moreover, stereoscopic displays induce a conflict between the different cues our visual system uses to infer depth and distance. Specifically, accommodation, the mechanism that brings an object into focus, is combined with other cues such as vergence (the rotation of both eyes in opposite directions to maintain single binocular vision) to estimate an object’s distance. This is an issue in VR because the eyes must focus on the display, whose optical distance is fixed, regardless of how far away a virtual object is simulated to be, while vergence follows the simulated distance. This results in the accommodation-vergence conflict [57, 58], which alters the visuomotor mapping and is a known cause of visual discomfort in VR [56, 59, 60]. Latest-generation head-mounted displays (like the HTC Vive Pro used here) address some of these issues, enable more accurate space perception than other head-mounted displays [61], and have been validated and recommended for use in navigation and even motor rehabilitation [62]. Yet performing a real-world visuomotor task, such as a pool shot, probably still requires some visuomotor learning of the VR environment in addition to motor learning of the task.
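The size of the accommodation-vergence conflict can be made concrete with a short worked example. The sketch below uses standard geometric relations: for symmetric fixation of a point at distance d, the vergence angle is 2·atan(IPD / 2d), and the accommodative demand is 1/d diopters (d in metres). The 63 mm interpupillary distance and the 2 m focal distance of the headset optics are illustrative assumptions, not specifications of the HTC Vive Pro, and the function names are hypothetical.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Vergence angle (degrees) for symmetric fixation of a point at distance_m.

    ipd_m is the interpupillary distance; 63 mm is an assumed typical adult value.
    """
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def accommodation_demand_d(distance_m: float) -> float:
    """Accommodative demand in diopters (1 / distance in metres)."""
    return 1.0 / distance_m


def va_conflict_d(simulated_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch (diopters) between where vergence points and where the eyes must focus.

    In a stereoscopic head-mounted display, vergence follows the simulated distance
    of the object, whereas accommodation is fixed at the focal distance of the optics.
    """
    return abs(accommodation_demand_d(simulated_distance_m)
               - accommodation_demand_d(focal_distance_m))


FOCAL_DISTANCE_M = 2.0  # hypothetical focal distance of the headset optics

for d in (0.5, 1.0, 2.0):  # e.g. cue ball near the player, mid-table, far cushion
    print(f"{d:.1f} m: vergence {vergence_angle_deg(d):.2f} deg, "
          f"conflict {va_conflict_d(d, FOCAL_DISTANCE_M):.2f} D")
```

Under these assumptions, the conflict is zero only for objects simulated at the focal distance and grows as virtual objects come closer, for example as the player leans in over the cue ball.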

Short-term motor learning in the EVR task and in the physical real-world task [21] showed many similarities but also intriguing differences. The main trends over learning found in the physical real-world task were decreases in directional error and in directional intertrial variability, decreased shoulder movement and increased elbow rotation, and decreases in the joints’ VPE and in its intertrial variability [21]. In the EVR environment, we found the same general trends for all these metrics except the intertrial variability measures. We do, however, see a systematic difference in learning rates between VR and the real world in both the directional error and the VPE trends: across the board, there is less short-term motor learning in VR than in the physical real world. This is presumably due to the additional learning of the altered visuomotor mapping required in VR.
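One simple way to quantify the learning-rate difference described above is to fit an exponential decay to block-averaged absolute directional error and compare the fitted time constants between groups. The sketch below is illustrative and is not necessarily the analysis used here or in [21]: it assumes a zero asymptote, so the fit reduces to linear least squares on the logarithm of the error, and the function names, block size and synthetic data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def block_mean_abs(errors: Sequence[float], block_size: int) -> List[float]:
    """Mean absolute error within consecutive blocks of trials (last block may be shorter)."""
    blocks = [errors[i:i + block_size] for i in range(0, len(errors), block_size)]
    return [sum(abs(e) for e in block) / len(block) for block in blocks]


def fit_exponential_decay(values: Sequence[float]) -> Tuple[float, float]:
    """Fit values[t] ~ a * exp(-t / tau) by least squares on log(values).

    Assumes strictly positive values and a zero asymptote. Returns (a, tau), with tau
    in units of the index t (e.g. blocks); tau is inf if the values do not decay.
    """
    n = len(values)
    t = range(n)
    y = [math.log(v) for v in values]
    t_mean = sum(t) / n
    y_mean = sum(y) / n
    sxy = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, y))
    sxx = sum((ti - t_mean) ** 2 for ti in t)
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    tau = -1.0 / slope if slope < 0 else math.inf
    return math.exp(intercept), tau


# Synthetic, noise-free block errors for a faster and a slower learner.
fast = [10.0 * math.exp(-t / 5.0) for t in range(20)]
slow = [10.0 * math.exp(-t / 10.0) for t in range(20)]
print(fit_exponential_decay(fast)[1], fit_exponential_decay(slow)[1])  # tau close to 5 and 10
```

A larger fitted time constant means slower learning. With real, noisy data, averaging absolute errors within blocks (as `block_mean_abs` does) before taking logarithms keeps near-zero single-trial errors from dominating the fit.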

The decay of intertrial variability over trials is a prominent feature of skill learning [63–70] but is not found in motor adaptation experiments. In the physical pool paradigm, we found two types of subjects who differed in their intertrial variability decay as well as in other behavioural and neural markers [22]. Together, these findings suggested that two different learning mechanisms contribute to the task, error-based adaptation and reward-based reinforcement learning, and that the predominant mechanism differs between subjects. Here, the lack of intertrial variability decay, together with the overall differences in the learning curve between the group that learned the task in the EVR setting and the group that learned it in the physical real-world setting, suggests that the subjects who learned the task in the EVR may have relied on different learning mechanisms. Presumably, the predominant learning mechanism in the EVR environment was error-based adaptation. This may be attributed to the fact that all subjects were completely naïve to the EVR environment and had to learn not just the billiards task but also how to operate in EVR.
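Intertrial variability can be operationalized in several ways. The minimal sketch below takes the sample standard deviation of directional error within consecutive blocks of trials and summarizes its decay as the least-squares slope across blocks, where a negative slope indicates decay. The 25-trial default block size, the function names and the synthetic data are illustrative assumptions rather than the exact definitions used in [21, 22].

```python
import statistics
from typing import List, Sequence


def blockwise_sd(directional_errors: Sequence[float], block_size: int = 25) -> List[float]:
    """Sample SD of directional error within consecutive full blocks of trials.

    Trials that do not fill a final block are dropped; block_size must be >= 2.
    """
    n_blocks = len(directional_errors) // block_size
    return [statistics.stdev(directional_errors[k * block_size:(k + 1) * block_size])
            for k in range(n_blocks)]


def decay_slope(block_values: Sequence[float]) -> float:
    """Least-squares slope of block values against block index (negative = decay)."""
    n = len(block_values)
    x_mean = (n - 1) / 2.0
    y_mean = sum(block_values) / n
    sxy = sum((k - x_mean) * (v - y_mean) for k, v in enumerate(block_values))
    sxx = sum((k - x_mean) ** 2 for k in range(n))
    return sxy / sxx


# Synthetic subject whose shot-to-shot scatter shrinks from +/-4 to +/-1 degrees.
errors = [4.0, -4.0] * 2 + [2.0, -2.0] * 2 + [1.0, -1.0] * 2
sds = blockwise_sd(errors, block_size=4)
print(decay_slope(sds) < 0)  # True: variability decays across blocks
```

Comparing this slope between groups (or between subject types within a group) is one way to test for the presence or absence of variability decay discussed above.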

As in the physical task, in the EVR setting we also found short-term motor learning to be a holistic process: all body joints are affected as a whole by learning the task. This was evident in the decrease in the velocity profile error (VPE) and the intertrial variability over learning (Fig 6). As we highlighted in [21], this holistic aspect of motor learning is known in sport science [e.g. 71–73] but is rarely studied in motor neuroscience and motor rehabilitation, which usually use simplified artificial tasks and measure the movement of only one or two arm joints. This congruence between the physical and EVR settings suggests that while there are clear differences between the environments in learning and performance at the task level (as discussed above), there are strong similarities at the body level [74], which can potentially enhance transfer.
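To make the body-level measure concrete, the sketch below computes a velocity profile error as the correlation distance (1 minus the Pearson correlation) between a joint’s angular-velocity profile on one trial and a reference profile, here the mean profile over a set of late trials. This definition is an assumption for illustration and may differ in detail from the VPE used in [21]. Profiles are assumed to be time-normalized to a common number of samples, and all function names and example values are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_profile(profiles: Sequence[Sequence[float]]) -> List[float]:
    """Sample-by-sample mean of several time-normalized profiles."""
    return [sum(samples) / len(samples) for samples in zip(*profiles)]


def velocity_profile_error(trial: Sequence[float], reference: Sequence[float]) -> float:
    """Correlation distance between a trial profile and the reference (0 = same shape)."""
    return 1.0 - pearson_r(trial, reference)


def vpe_per_joint(trial: Dict[str, Sequence[float]],
                  reference: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """VPE for every joint recorded in both the trial and the reference."""
    return {joint: velocity_profile_error(trial[joint], reference[joint])
            for joint in trial if joint in reference}


# Hypothetical elbow angular-velocity profiles (arbitrary units, 5 samples each).
late_elbow = [[0.0, 1.0, 3.0, 1.0, 0.0], [0.0, 2.0, 4.0, 2.0, 0.0]]
reference = {"elbow": mean_profile(late_elbow)}
early_trial = {"elbow": [0.0, 3.0, 1.0, 1.0, 0.0]}
print(vpe_per_joint(early_trial, reference))
```

Because the Pearson correlation is insensitive to overall scale and offset, this measure captures differences in the shape and timing of a joint’s velocity profile rather than in its amplitude; computing it for every tracked joint gives the whole-body view described above.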

Conclusions

In this study, we have developed an embodied VR framework capable of applying visual feedback manipulations to a naturalistic, free-moving, real-world skill task. Using a short-term motor learning paradigm, we have also demonstrated the similarities in learning progression for a pool billiards shot between the EVR and the real world, and have confirmed the real-world finding that motor learning is a holistic process involving the entire body from head to toe. By manipulating the visual feedback in the EVR, we can now further investigate the relationships between the distinct learning strategies humans employ for this real-world motor skill. Our approach could be useful for VR rehabilitation, as it overcomes some of the limits of VR immersion. Our work highlights the potential of using real-world tasks in VR, not only for rehabilitation but also for VR-based skill training such as biosurgery.

Acknowledgments

We thank our participants for taking part in the study.

Data Availability

All data files have been deposited in figshare. Data DOI: 10.6084/m9.figshare.13553606.

Funding Statement

The study was enabled by financial support to a Royal Society-Kohn International Fellowship (https://royalsociety.org/grants-schemes-awards/grants/newton-international/; NF170650; SH & AAF). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Shadmehr R, Mussa-Ivaldi FA. Adaptive representation of dynamics during learning of a motor task. J Neurosci. 1994;14: 3208–24. doi: 10.1523/JNEUROSCI.14-05-03208.1994

- 2. Smith MA, Ghazizadeh A, Shadmehr R. Interacting adaptive processes with different timescales underlie short-term motor learning. PLoS Biol. 2006;4: e179. doi: 10.1371/journal.pbio.0040179

- 3. Diedrichsen J, Hashambhoy Y, Rane T, Shadmehr R. Neural correlates of reach errors. J Neurosci. 2005;25: 9919–9931. doi: 10.1523/JNEUROSCI.1874-05.2005

- 4. Howard IS, Wolpert DM, Franklin DW. The Value of the Follow-Through Derives from Motor Learning Depending on Future Actions. Curr Biol. 2015;25: 397–401. doi: 10.1016/j.cub.2014.12.037

- 5. Krakauer JW, Pine Z, Ghilardi M, Ghez C. Learning of visuomotor transformations for vectorial planning of reaching trajectories. J Neurosci. 2000;20: 8916–8924. doi: 10.1523/JNEUROSCI.20-23-08916.2000. Available: http://www.jneurosci.org/content/20/23/8916.short

- 6. Mazzoni P, Krakauer J. An Implicit Plan Overrides an Explicit Strategy during Visuomotor Adaptation. J Neurosci. 2006;26: 3642–3645. doi: 10.1523/JNEUROSCI.5317-05.2006

- 7. Taylor JA, Krakauer JW, Ivry RB. Explicit and implicit contributions to learning in a sensorimotor adaptation task. J Neurosci. 2014;34: 3023–32. doi: 10.1523/JNEUROSCI.3619-13.2014

- 8. Haar S, Donchin O, Dinstein I. Dissociating Visual and Motor Directional Selectivity Using Visuomotor Adaptation. J Neurosci. 2015;35: 6813–6821. doi: 10.1523/JNEUROSCI.0182-15.2015

- 9. Bromberg Z, Donchin O, Haar S. Eye movements during visuomotor adaptation represent only part of the explicit learning. eNeuro. 2019;6: 1–12. doi: 10.1523/ENEURO.0308-19.2019

- 10. Honda T, Hirashima M, Nozaki D. Adaptation to Visual Feedback Delay Influences Visuomotor Learning. Brembs B, editor. PLoS One. 2012;7: e37900. doi: 10.1371/journal.pone.0037900

- 11. Botzer L, Karniel A. Feedback and feedforward adaptation to visuomotor delay during reaching and slicing movements. Eur J Neurosci. 2013;38: 2108–2123. doi: 10.1111/ejn.12211

- 12. Brudner SN, Kethidi N, Graeupner D, Ivry RB, Taylor JA. Delayed feedback during sensorimotor learning selectively disrupts adaptation but not strategy use. J Neurophysiol. 2016;115: 1499–1511. doi: 10.1152/jn.00066.2015

- 13. Avraham G, Leib R, Pressman A, Simo LS, Karniel A, Shmuelof L, et al. State-based delay representation and its transfer from a game of pong to reaching and tracking. eNeuro. 2017;4. doi: 10.1523/ENEURO.0179-17.2017

- 14. Shabbott BA, Sainburg RL. Learning a visuomotor rotation: simultaneous visual and proprioceptive information is crucial for visuomotor remapping. Exp Brain Res. 2010;203: 75–87. doi: 10.1007/s00221-010-2209-3

- 15. Peled A, Karniel A. Knowledge of Performance is Insufficient for Implicit Visuomotor Rotation Adaptation. J Mot Behav. 2012;44: 185–194. doi: 10.1080/00222895.2012.672349

- 16. Taylor JA, Hieber LL, Ivry RB. Feedback-dependent generalization. J Neurophysiol. 2013; 202–215. doi: 10.1152/jn.00247.2012

- 17. Faisal A, Stout D, Apel J, Bradley B. The Manipulative Complexity of Lower Paleolithic Stone Toolmaking. Petraglia MD, editor. PLoS One. 2010;5: e13718. doi: 10.1371/journal.pone.0013718

- 18. Hecht EE, Gutman DA, Khreisheh N, Taylor SV, Kilner J, Faisal AA, et al. Acquisition of Paleolithic toolmaking abilities involves structural remodeling to inferior frontoparietal regions. Brain Struct Funct. 2014;220: 2315–2331. doi: 10.1007/s00429-014-0789-6

- 19. Xiloyannis M, Gavriel C, Thomik AAC, Faisal AA. Gaussian Process Autoregression for Simultaneous Proportional Multi-Modal Prosthetic Control with Natural Hand Kinematics. IEEE Trans Neural Syst Rehabil Eng. 2017;25: 1785–1801. doi: 10.1109/TNSRE.2017.2699598

- 20. Rito Lima I, Haar S, Di Grassi L, Faisal AA. Neurobehavioural signatures in race car driving: a case study. Sci Rep. 2020;10: 1–9. doi: 10.1038/s41598-019-56847-4

- 21. Haar S, van Assel CM, Faisal AA. Motor learning in real-world pool billiards. Sci Rep. 2020;10: 1–13. doi: 10.1038/s41598-019-56847-4

- 22. Haar S, Faisal AA. Brain activity reveals multiple motor-learning mechanisms in a real-world task. Front Hum Neurosci. 2020;14. doi: 10.3389/FNHUM.2020.00354

- 23. Lanier J. Dawn of the new everything: A journey through virtual reality. Random House; 2017.

- 24. Faisal A. Computer science: Visionary of virtual reality. Nature. 2017;551: 298–299. doi: 10.1038/551298a

- 25. Holden MK, Todorov E. Use of Virtual Environments in Motor Learning and Rehabilitation. Handb Virtual Environ Des Implementation, Appl. 2002;44: 1–35. Available: http://web.mit.edu/bcs/bizzilab/publications/holden2002b.pdf

- 26. Rizzo A, Buckwalter JG, van der Zaag C, Neumann U, Thiebaux M, Chua C, et al. Virtual Environment applications in clinical neuropsychology. Proc - Virtual Real Annu Int Symp. 2000; 63–70.

- 27. Holden MK. Virtual environments for motor rehabilitation: Review. Cyberpsychology and Behavior. 2005. pp. 187–211. doi: 10.1089/cpb.2005.8.187

- 28. Jack D, Boian R, Merians AS, Tremaine M, Burdea GC, Adamovich SV, et al. Virtual reality-enhanced stroke rehabilitation. IEEE Trans Neural Syst Rehabil Eng. 2001;9: 308–318. doi: 10.1109/7333.948460

- 29. Zhang L, Abreu BC, Seale GS, Masel B, Christiansen CH, Ottenbacher KJ. A virtual reality environment for evaluation of a daily living skill in brain injury rehabilitation: Reliability and validity. Arch Phys Med Rehabil. 2003;84: 1118–1124. doi: 10.1016/s0003-9993(03)00203-x

- 30. Levin MF, Weiss PL, Keshner EA. Emergence of Virtual Reality as a Tool for Upper Limb Rehabilitation: Incorporation of Motor Control and Motor Learning Principles. Phys Ther. 2015;95: 415–425. doi: 10.2522/ptj.20130579

- 31. Mendes FA dos S, Pompeu JE, Lobo AM, da Silva KG, Oliveira T de P, Zomignani AP, et al. Motor learning, retention and transfer after virtual-reality-based training in Parkinson’s disease - effect of motor and cognitive demands of games: a longitudinal, controlled clinical study. Physiotherapy. 2012;98: 217–223. doi: 10.1016/j.physio.2012.06.001

- 32. Mirelman A, Maidan I, Herman T, Deutsch JE, Giladi N, Hausdorff JM. Virtual Reality for Gait Training: Can It Induce Motor Learning to Enhance Complex Walking and Reduce Fall Risk in Patients With Parkinson’s Disease? Journals Gerontol Ser A Biol Sci Med Sci. 2011;66A: 234–240. doi: 10.1093/gerona/glq201

- 33. Rose FD, Attree EA, Brooks BM, Parslow DM, Penn PR. Training in virtual environments: transfer to real world tasks and equivalence to real task training. Ergonomics. 2000;43: 494–511. doi: 10.1080/001401300184378

- 34. Carter AR, Foreman MH, Martin C, Fitterer S, Pioppo A, Connor LT, et al. Inducing visuomotor adaptation using virtual reality gaming with a virtual shift as a treatment for unilateral spatial neglect. J Intellect Disabil - Diagnosis Treat. 2016;4: 170–184. doi: 10.6000/2292-2598.2016.04.03.4

- 35. Anglin JM, Sugiyama T, Liew SL. Visuomotor adaptation in head-mounted virtual reality versus conventional training. Sci Rep. 2017;7. doi: 10.1038/srep45469

- 36. Lindgren R, Johnson-Glenberg M. Emboldened by Embodiment: Six Precepts for Research on Embodied Learning and Mixed Reality. Educ Res. 2013;42: 445–452. doi: 10.3102/0013189X13511661

- 37. Arzy S, Thut G, Mohr C, Michel CM, Blanke O. Neural basis of embodiment: Distinct contributions of temporoparietal junction and extrastriate body area. J Neurosci. 2006;26: 8074–8081. doi: 10.1523/JNEUROSCI.0745-06.2006

- 38. Longo MR, Schüür F, Kammers MPM, Tsakiris M, Haggard P. What is embodiment? A psychometric approach. Cognition. 2008;107: 978–998. doi: 10.1016/j.cognition.2007.12.004

- 39. Kilteni K, Groten R, Slater M. The Sense of Embodiment in Virtual Reality. Presence Teleoperators Virtual Environ. 2012;21: 373–387. doi: 10.1162/PRES_a_00124

- 40. Makin TR, de Vignemont F, Faisal AA. Neurocognitive barriers to the embodiment of technology. Nat Biomed Eng. 2017;1: 0014. doi: 10.1038/s41551-016-0014

- 41. Rehm F. Unity 3D Pool. In: GitHub repository [Internet]. 2015. Available: https://github.com/fgrehm/pucrs-unity3d-pool

- 42. Thomik AAC. On the structure of natural human movement. Imperial College London. 2016. Available: https://spiral.imperial.ac.uk/handle/10044/1/61827

- 43. Wilks SS. Certain Generalizations in the Analysis of Variance. Biometrika. 1932;24: 471. doi: 10.2307/2331979

- 44. Belić JJ, Faisal AA. Decoding of human hand actions to handle missing limbs in neuroprosthetics. Front Comput Neurosci. 2015;9: 27. doi: 10.3389/fncom.2015.00027

- 45. Faisal AA, Wolpert DM. Near optimal combination of sensory and motor uncertainty in time during a naturalistic perception-action task. J Neurophysiol. 2009;101. doi: 10.1152/jn.90974.2008

- 46. Quadrado VH, Silva TD da, Favero FM, Tonks J, Massetti T, Monteiro CB de M. Motor learning from virtual reality to natural environments in individuals with Duchenne muscular dystrophy. Disabil Rehabil Assist Technol. 2019;14: 12–20. doi: 10.1080/17483107.2017.1389998

- 47. Ravi DK, Kumar N, Singhi P. Effectiveness of virtual reality rehabilitation for children and adolescents with cerebral palsy: an updated evidence-based systematic review. Physiotherapy (United Kingdom). Elsevier Ltd; 2017. pp. 245–258. doi: 10.1016/j.physio.2016.08.004

- 48. Laver KE, Lange B, George S, Deutsch JE, Saposnik G, Crotty M. Virtual reality for stroke rehabilitation. Cochrane Database of Systematic Reviews. John Wiley and Sons Ltd; 2017. doi: 10.1002/14651858.CD008349.pub4

- 49. Massetti T, Trevizan IL, Arab C, Favero FM, Ribeiro-Papa DC, De Mello Monteiro CB. Virtual reality in multiple sclerosis - A systematic review. Multiple Sclerosis and Related Disorders. Elsevier B.V.; 2016. pp. 107–112. doi: 10.1016/j.msard.2016.05.014

- 50. Dockx K, Bekkers EMJ, Van den Bergh V, Ginis P, Rochester L, Hausdorff JM, et al. Virtual reality for rehabilitation in Parkinson’s disease. Cochrane Database of Systematic Reviews. John Wiley and Sons Ltd; 2016. doi: 10.1002/14651858.CD010760.pub2

- 51. Ktena SI, Abbott W, Faisal AA. A virtual reality platform for safe evaluation and training of natural gaze-based wheelchair driving. International IEEE/EMBS Conference on Neural Engineering, NER. IEEE Computer Society; 2015. pp. 236–239. doi: 10.1109/NER.2015.7146603

- 52. Levac DE, Huber ME, Sternad D. Learning and transfer of complex motor skills in virtual reality: a perspective review. J Neuroeng Rehabil. 2019;16: 121. doi: 10.1186/s12984-019-0587-8

- 53. Wulf G. Attentional focus and motor learning: a review of 15 years. Int Rev Sport Exerc Psychol. 2013;6: 77–104. doi: 10.1080/1750984X.2012.723728

- 54. Botvinick M, Cohen J. Rubber hands “feel” touch that eyes see. Nature. Nature Publishing Group; 1998. p. 756. doi: 10.1038/35784

- 55. Lenggenhager B, Mouthon M, Blanke O. Spatial aspects of bodily self-consciousness. Conscious Cogn. 2009;18: 110–117. doi: 10.1016/j.concog.2008.11.003

- 56. Renner RS, Velichkovsky BM, Helmert JR. The perception of egocentric distances in virtual environments - A review. ACM Computing Surveys. 2013. doi: 10.1145/2543581.2543590

- 57. Hoffman DM, Girshick AR, Akeley K, Banks MS. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. J Vis. 2008;8. doi: 10.1167/8.3.33

- 58. Kramida G. Resolving the vergence-accommodation conflict in head-mounted displays. IEEE Trans Vis Comput Graph. 2016;22: 1912–1931. doi: 10.1109/TVCG.2015.2473855

- 59. Carnegie K, Rhee T. Reducing Visual Discomfort with HMDs Using Dynamic Depth of Field. IEEE Comput Graph Appl. 2015;35: 34–41. doi: 10.1109/MCG.2015.98

- 60. Vienne C, Sorin L, Blondé L, Huynh-Thu Q, Mamassian P. Effect of the accommodation-vergence conflict on vergence eye movements. Vision Res. 2014;100: 124–133. doi: 10.1016/j.visres.2014.04.017

- 61. Kelly JW, Cherep LA, Siegel ZD. Perceived space in the HTC vive. ACM Trans Appl Percept. 2017;15: 1–16. doi: 10.1145/3106155

- 62. Borrego A, Latorre J, Alcañiz M, Llorens R. Comparison of Oculus Rift and HTC Vive: Feasibility for Virtual Reality-Based Exploration, Navigation, Exergaming, and Rehabilitation. Games Health J. 2018;7: 151–156. doi: 10.1089/g4h.2017.0114

- 63. Deutsch KM, Newell KM. Changes in the structure of children’s isometric force variability with practice. J Exp Child Psychol. 2004;88: 319–333. doi: 10.1016/j.jecp.2004.04.003

- 64. Müller H, Sternad D. Decomposition of variability in the execution of goal-oriented tasks: three components of skill improvement. J Exp Psychol Hum Percept Perform. 2004;30: 212–233. doi: 10.1037/0096-1523.30.1.212

- 65. Cohen RG, Sternad D. Variability in motor learning: relocating, channeling and reducing noise. Exp Brain Res. 2009;193: 69–83. doi: 10.1007/s00221-008-1596-1

- 66. Guo CC, Raymond JL. Motor learning reduces eye movement variability through reweighting of sensory inputs. J Neurosci. 2010;30: 16241–16248. doi: 10.1523/JNEUROSCI.3569-10.2010

- 67. Shmuelof L, Krakauer JW, Mazzoni P. How is a motor skill learned? Change and invariance at the levels of task success and trajectory control. J Neurophysiol. 2012;108: 578–594. doi: 10.1152/jn.00856.2011

- 68. Huber ME, Brown AJ, Sternad D. Girls can play ball: Stereotype threat reduces variability in a motor skill. Acta Psychol (Amst). 2016;169: 79–87. doi: 10.1016/j.actpsy.2016.05.010

- 69. Sternad D. It’s not (only) the mean that matters: variability, noise and exploration in skill learning. Curr Opin Behav Sci. 2018;20: 183–195. doi: 10.1016/j.cobeha.2018.01.004

- 70. Krakauer JW, Hadjiosif AM, Xu J, Wong AL, Haith AM. Motor learning. Compr Physiol. 2019;9: 613–663. doi: 10.1002/cphy.c170043

- 71. Kageyama M, Sugiyama T, Takai Y, Kanehisa H, Maeda A. Kinematic and Kinetic Profiles of Trunk and Lower Limbs during Baseball Pitching in Collegiate Pitchers. J Sports Sci Med. 2014;13: 742–50. Available: http://www.ncbi.nlm.nih.gov/pubmed/25435765

- 72. Oliver GD, Keeley DW. Pelvis and torso kinematics and their relationship to shoulder kinematics in high-school baseball pitchers. J Strength Cond Res. 2010;24: 3241–3246. doi: 10.1519/JSC.0b013e3181cc22de

- 73. Stodden DF, Langendorfer SJ, Fleisig GS, Andrews JR. Kinematic Constraints Associated With the Acquisition of Overarm Throwing Part I. Res Q Exerc Sport. 2006;77: 417–427. doi: 10.1080/02701367.2006.10599377

- 74. Haar S, Donchin O. A Revised Computational Neuroanatomy for Motor Control. J Cogn Neurosci. 2020;32: 1823–1836. doi: 10.1162/jocn_a_01602
