Abstract

Purpose

There is a rapid growth of telepractice in both clinical and research settings; however, the literature validating translation of traditional methods of assessments and interventions to valid remote videoconference administrations is limited. This is especially true in the field of speech-language pathology where assessments of language and communication can be easily conducted via remote administration. The aim of this study was to validate videoconference administration of the Western Aphasia Battery–Revised (WAB-R).

Method

Twenty adults with chronic aphasia completed the assessment both in person and via videoconference with the order counterbalanced across administrations. Specific modifications to select WAB-R subtests were made to accommodate interaction by computer and Internet.

Results

Results revealed that the two methods of administration were highly correlated and showed no difference in domain scores. Additionally, most participants endorsed being mostly or very satisfied with the videoconference administration.

Conclusion

These findings suggest that administration of the WAB-R in person and via videoconference may be used interchangeably in this patient population. Modifications and guidelines are provided to ensure reproducibility and access to other clinicians and scientists interested in remote administration of the WAB-R.


Continued technological advancement is finding its way into many fields, one of them being health care. Health care providers are increasingly implementing the use of telehealth, which is defined as “technology-enabled health and care management and delivery systems that extend capacity and access” (“Telehealth Basics,” 2019). The American Hospital Association reports that, in 2017, 76% of hospitals in the United States alone had implemented some form of telepractice (Fact Sheet: Telehealth, 2019). Additionally, a recent study by the National Business Group on Health reported that 96% of employers in the United States will be providing medical coverage for telehealth in states where it is allowed (National Business Group on Health, 2017). According to the American Telemedicine Association, a recent push for telepractice is underscored by the need for improved access to care, cost efficiency, improved quality, and patient demand (“Telehealth Basics,” 2019). Of these, accessibility to care is especially significant for those patients who otherwise may not have medical resources available to them. Telemedicine and telepractice now enable patients living with illnesses in remote areas to receive the same services as those who live in large metropolitan centers with easy access to high-quality care. As this practice continues to grow, it is imperative to validate the delivery of assessment and treatment to ensure that the same level of care is provided to patients who are evaluated remotely as to those evaluated in the clinic (i.e., in person).

The field of speech-language pathology is in the process of expanding its telepractice reach and capabilities (Cherney & Van Vuuren, 2012; Duffy et al., 1997; Theodoros et al., 2008). In recent years, both teleassessment (i.e., remote assessment of speech, language, and cognitive function) and telerehabilitation (i.e., treatment of speech, language, and cognitive impairments) services have been offered clinically and in research settings by speech-language pathologists (SLPs; Brennan et al., 2010; Hall et al., 2013). Specifically, according to a 2016 American Speech-Language-Hearing Association survey that was completed by 476 SLPs, 64% endorsed providing services using telepractice. The survey followed up with a question regarding how the SLPs utilized telepractice and found that 37.6% of SLPs used telepractice for screenings, 60.7% used telepractice for assessment, and 96.4% used it for treatment. Although there is clearly an interest in providing telepractice in the realm of speech, language, and hearing sciences, there is a lack of literature on validated telepractice-based assessments and intervention programs. Furthermore, while there are countless speech, language, and cognitive assessments, translation of these traditional methods of assessments to valid remote videoconference administrations is limited.

To our knowledge, only two assessments for aphasia (both short forms) have been validated for videoconference administration: the Boston Naming Test–Second Edition (Short Form; Kaplan et al., 2001) and the Boston Diagnostic Aphasia Examination–Third Edition (Short Form; Goodglass et al., 2001; Hill et al., 2009; Palsbo, 2007; Theodoros et al., 2008). Hill et al. conducted a study with 32 adults with acquired aphasia and reported that severity of aphasia did not affect the accuracy of telepractice assessment of the Boston Diagnostic Aphasia Examination–Third Edition Short Form except in assessing naming and paraphasias. Statistically significant differences in rating scale scores in these domains were found for telerehabilitation assessment versus face-to-face assessment for severely impaired individuals only (Hill et al., 2009).

Recently, a group of experts in aphasia reached a consensus to consider the Western Aphasia Battery–Revised (WAB-R; Kertesz, 2007) as the core outcome measure for language impairment in aphasia (Wallace et al., 2019). The WAB-R is a comprehensive test battery for linguistic skills along with several nonlinguistic components that provides three scores: Aphasia Quotient (AQ), Language Quotient (LQ), and Cortical Quotient (CQ). The AQ is often used as a measure of overall aphasia severity, LQ is a measure of spoken and written language performance, and CQ combines linguistic and nonlinguistic performance. Given the diagnostic capabilities of this test and its status as a core outcome measure for aphasia rehabilitation per expert consensus, it is important to validate teleassessment of the WAB-R in adults with aphasia.

Yet, there are several obstacles to validating remote administration of an assessment such as the WAB-R. First, the WAB-R does not lend itself to easy web-based administration as there are objects to manipulate and several subtests require pointing or gesturing. In addition, computer assessments in individuals with poststroke aphasia oftentimes require the help of a caregiver due to reduced comprehension skills in this population. The goal of this study was to establish the feasibility of teleassessment of the WAB-R and to determine if videoconference administration was comparable to in-person administration. Furthermore, we sought to determine whether people with aphasia (PWA) preferred in-person administration or videoconference administration or if they felt similarly about both methods of assessment. Finally, like Hill et al. (2009), we examined whether there were differences in administration and scoring that were influenced by aphasia severity.

Method

A total of 20 adults with chronic acquired aphasia (M age = 55 years, mean years of education = 16) were recruited from the New England area (see Table 1 for demographics). All participants were in the chronic stage of recovery (i.e., at least 6 months postinjury) and did not present with any significant premorbid psychiatric or neurologic illness. Twelve of 20 participants reported being involved in aphasia community groups within the 6 months prior to the study. Twelve of 20 participants reported being involved in individual speech therapy within the 6 months prior to the study. Five of those participants had completed individual treatment prior to study onset. Of the remaining seven, two were actively involved in individual treatment, and for five participants, it is not known whether they were actively involved in treatment. All participants provided informed consent according to the Boston University Institutional Review Board.

Table 1.

Demographics.

| Variable | M | SD |
|---|---|---|
| Age (years) | 55 | 13.81 |
| Education (years) | 16 | 3 |
| MPO | 67 | 49 |
| Sex (M/F) | 13/7 | |
| Handedness (R/L) | 18/2 | |

Note. Etiology: Left-hemisphere stroke (n = 17), traumatic brain injury (TBI; n = 2), and stroke + TBI (n = 1). MPO = months post onset (stroke/TBI); M = male; F = female; R = right; L = left.

Setup

At the onset of the study, the researchers inquired about the type of computer participants had at home and whether it had the required components for the study such as video camera, microphone, and speakers. If the participant did not have a computer that was compatible with videoconferencing, a laptop was lent to him or her in order to complete the videoconference portion of the study. An important aspect of this study was to maintain ecological validity; therefore, participants were encouraged to use their own technology if possible. In addition to computer equipment, participants were asked whether they had the following materials at home: white printer paper, a comb, a book, and a pen. If they were missing any of these testing materials, materials were provided along with four Kohs blocks. Prior to assessment, participants completed a questionnaire regarding their current technology use. Questions were open-ended to allow participants to comment on the different types of devices they used at the time of the study and in what capacity (see Supplemental Material S1). Lastly, researchers asked caregivers to be readily available to assist during assessment administration if necessary. The researchers provided consistent guidelines as to how much the caregivers should assist (i.e., do not provide feedback on performance, repeat instructions given by the clinician, or allow the use of notes or aids; see Supplemental Material S2 for full description of verbal instructions provided at the time of testing).

Assessment

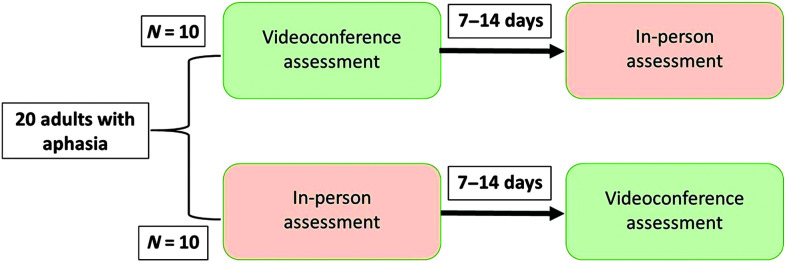

All participants completed both in-person and videoconference testing with the WAB-R. The order of administration (in-person or videoconference) was counterbalanced across participants, with 10 participants completing videoconference testing first and 10 participants completing in-person testing first. The initial plan was to alternate the starting condition (videoconference or in-person) with every other participant; this order was modified for convenience as needed based on study team and participant schedule demands. Tests were completed at an interval of 7–14 days (see Figure 1). All in-person assessments were completed either at the Boston University Aphasia Research Laboratory or in the participant's home with a trained research assistant. Videoconference administrations were completed using either the GoToMeeting or Zoom platforms (GoToMeeting, 2018; Zoom, 2018). An important aspect of videoconferencing is ensuring that participant privacy and confidentiality are protected. Both platforms have specific security settings to ensure patient confidentiality (information on Health Insurance Portability and Accountability Act [HIPAA] compliance for both platforms can be found in the following: https://logmeincdn.azureedge.net/gotomeetingmedia/-/media/pdfs/lmi0098b-gtm-hipaa-compliance-guide-final.pdf [GoToMeeting, 2018]; https://zoom.us/docs/doc/Zoom-hipaa.pdf [Zoom, 2017]). Although both platforms worked well for the current project, the Zoom platform may be a better choice for most clinicians and researchers. It allows for easier sharing of documents with participants and meets all HIPAA requirements.

Figure 1.

Counterbalanced schema for Western Aphasia Battery–Revised administration.

As much as possible, videoconference assessment was completed with participants in their home environment to simulate clinical telepractice. The three participants who preferred to complete the videoconference testing in the clinic were set up with a laptop in a separate room and were not provided assistance with the testing by the researchers.

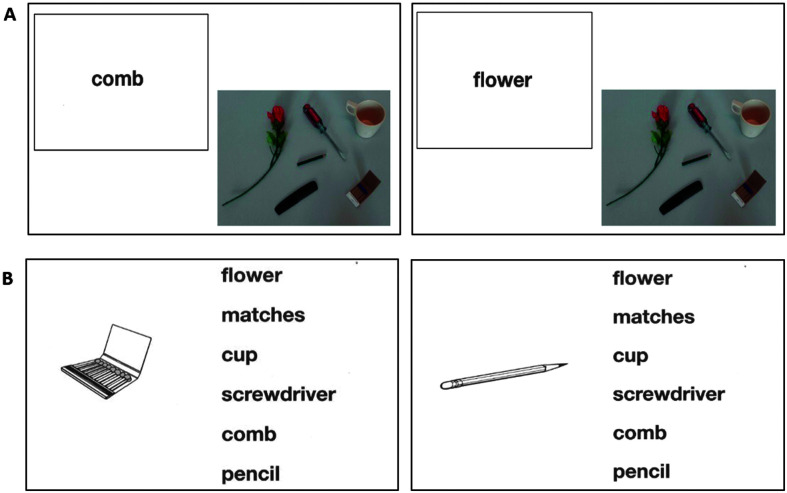

Several modifications were made for the videoconference administration of the WAB-R (see Figure 2). First, all stimuli were scanned and uploaded as a digital .pdf file. These files were shared with participants (only during test administration), allowing them to point to the stimuli with their mouse or their finger if using a touchscreen device. Additional stimuli and task-specific modifications that were made are detailed in Appendix A.

Figure 2.

Adaptations of stimuli for videoconference administration of the Western Aphasia Battery. (A) Written word–object choice matching. (B) Picture–written word choice matching.

After Assessment

At the end of the experiment, participants once again completed a questionnaire based on Hill et al.'s work rating the following: overall satisfaction, audio and visual quality, level of comfort, whether they would participate in videoconference assessment again, and whether they were equally satisfied with in-person and videoconference assessment (Hill et al., 2009).

Assessment Fidelity and Reliability

Several steps were taken to promote assessment fidelity prior to the study, to minimize provider “drift” during the study, and to verify fidelity after test administrations. In addition to emphasizing the importance of assessment fidelity, Richardson et al. outline details to be considered and reported during aphasia assessments including assessor qualifications, assessor and rater training, assessment delivery, scoring reliability, and assessor blinding, which are discussed below (Richardson et al., 2016).

Three trained research team members completed administrations of the WAB-R. Administrator 1 had 6 years of experience administering neuropsychological tests in a research context, including 2 years of experience administering the WAB-R. Administrators 2 and 3 were SLPs with 6 and 2 years of experience, respectively. Prior to beginning the study, administrators reviewed the test together and clarified scoring questions. Administrators referred to the WAB-R scoring booklet and manual for scoring questions as well as a team-developed document of clarifications for common scenarios not specified in the WAB-R manual (see Appendixes B and C).

All WAB-R administrations, both in-person and videoconference, were video-recorded to allow for fidelity and reliability review. Administrators 1 and 2 engaged in a blinded calibration process prior to initiating the study. Administrator 1 blindly rescored two of Administrator 2's evaluations from video-recording, and Administrator 2 blindly reviewed three of Administrator 1's evaluations using the video-recordings. The administrators then reviewed both sets of scores for all five administrations, discussed discrepancies, and reached a consensus regarding scoring procedures for subsequent evaluations (see Table 2 for order of administrations and administrators). After calibration, only scores from the clinician performing the evaluation were used in this project.

Table 2.

Order of administration and administrators for each participant.

| Participant | First administration | First administrator | Second administration | Second administrator |
|---|---|---|---|---|
| 1 | In person | Administrator 2 | Videoconference | Administrator 2 |
| 2 | Videoconference | Administrator 1 | In person | Administrator 1 |
| 3 | Videoconference | Administrator 2 | In person | Administrator 1 |
| 4 | In person | Administrator 1 | Videoconference | Administrator 1 |
| 5 | In person | Administrator 1 | Videoconference | Administrator 2 |
| 6 | Videoconference | Administrator 2 | In person | Administrator 1 |
| 7 | In person | Administrator 2 | Videoconference | Administrator 1 |
| 8 | Videoconference | Administrator 3 | In person | Administrator 1 |
| 9 | Videoconference | Administrator 3 | In person | Administrator 1 |
| 10 | In person | Administrator 1 | Videoconference | Administrator 3 |
| 11 | In person | Administrator 1 | Videoconference | Administrator 3 |
| 12 | Videoconference | Administrator 2 | In person | Administrator 1 |
| 13 | In person | Administrator 1 | Videoconference | Administrator 2 |
| 14 | In person | Administrator 3 | Videoconference | Administrator 1 |
| 15 | In person | Administrator 1 | Videoconference | Administrator 2 |
| 16 | Videoconference | Administrator 1 | In person | Administrator 2 |
| 17 | Videoconference | Administrator 3 | In person | Administrator 2 |
| 18 | In person | Administrator 3 | Videoconference | Administrator 2 |
| 19 | Videoconference | Administrator 1 | In person | Administrator 2 |
| 20 | Videoconference | Administrator 1 | In person | Administrator 2 |

Although blinded assessment was not possible for this study, for 17 of 20 participants, different assessors administered in-person versus videoconference assessment to reduce administrator bias in reassessment. Each examiner for the second administration did not review assessment scores for the first administration.

Assessment fidelity and reliability were assessed by an independent rater not involved in testing. The research team developed a 32-item fidelity checklist with binary responses (see Appendix D). Midway through the research study, this assessor reviewed videos for WAB-R administrations for four participants from two administrators using the fidelity checklist (eight total administrations, comprising 20% of the final number of administrations). Following this review, the assessor discussed discrepancies with the administrators. This was done to minimize drift in assessment procedures as the study progressed. In addition, post hoc review of participant scores that differed more than 5 points in AQ or that showed a change in aphasia type between administrations was completed to determine whether discrepancies in assessment administration or scoring may have contributed to score changes.

Statistics

Intraclass correlations (ICCs) and paired-samples t tests were calculated to compare the WAB-R scores (AQ, LQ, CQ) between videoconference and in-person administrations. Analyses were performed using IBM SPSS Statistics 22, and analyses were considered significant at a conservative α level of .01. ICCs were conducted with a two-way mixed-effects model with absolute agreement. Mean estimates along with 99% confidence intervals (CIs) are reported for each ICC. Interpretations are as follows: < .50, poor; between .50 and .75, fair; between .75 and .90, good; above .90, excellent (Koo & Li, 2016; Perinetti, 2018).
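As an illustration of the two statistics named above, the following is a minimal sketch in plain Python rather than the SPSS procedure used in the study. It assumes the single-measures, absolute-agreement form of the two-way ICC; the text does not state whether single or average measures were reported, and the 99% CIs are not computed here. The function names and the five score pairs are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def icc_absolute_agreement(x, y):
    """Single-measure, absolute-agreement ICC for two paired score lists.

    Built from two-way ANOVA mean squares (subjects as rows, the two
    administrations as columns). Assumed form, not confirmed by the source.
    """
    x, y = list(x), list(y)
    n, k = len(x), 2
    grand = mean(x + y)
    row_means = [(a + b) / 2 for a, b in zip(x, y)]
    col_means = [mean(x), mean(y)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for v in x + y)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def paired_t(x, y):
    """Paired-samples t statistic (df = n - 1) for x minus y."""
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical scores for five participants (not study data).
in_person = [89.9, 44.6, 98.2, 84.1, 37.8]
videoconference = [93.2, 39.2, 98.1, 85.4, 40.4]
print(icc_absolute_agreement(in_person, videoconference))
print(paired_t(in_person, videoconference))
```

Because the ICC here is the absolute-agreement type, a constant offset between the two administrations lowers the coefficient even when the scores are perfectly correlated, which is why it suits a question about interchangeability better than a simple correlation.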

For reliability assessment, the independent rater rescored eight WAB-R administrations for two administrators without reviewing original scores (20% of total administrations). Domain subscores across the eight domains of the test (Spontaneous Speech; Auditory Verbal Comprehension; Repetition; Naming and Word Finding; Reading; Writing; Apraxia; and Constructional, Visuospatial, and Calculation) were tabulated, and z scores were generated. ICCs were conducted on a summary measure for these z scores to compare scores given by the initial administrator to scores given by the independent rater with a two-way random effects model with absolute agreement. Comparisons were omitted for six missing data points in which the independent rater was unable to complete rescoring (e.g., video-recording malfunction).

Results

A high degree of reliability was found between in-person and videoconference administrations for measures of AQ, LQ, and CQ. The ICCs for the three WAB measures were all excellent, with reliability being .989 (99% CI [.964, .997], p ≤ .001) for AQ, .993 (99% CI [.978, .998], p ≤ .001) for LQ, and .993 (99% CI [.978, .998], p ≤ .001) for CQ (see Table 3). Paired-samples t tests for the three domain scores showed no significant differences between in-person and videoconference administration for AQ, t(19) = −0.856, p = .403; LQ, t(19) = −0.859, p = .401; and CQ, t(19) = −0.073, p = .943 (see Table 3).

Table 3.

Intraclass correlations (ICCs) and t-test results.

| Variable | In-person M | In-person SD | Videoconference M | Videoconference SD | ICC (r) | t test (p) |
|---|---|---|---|---|---|---|
| WAB-AQ | 68.11 | 22.17 | 68.75 | 22.68 | .989 | .403 |
| WAB-LQ | 67.95 | 21.19 | 68.42 | 21.32 | .993 | .401 |
| WAB-CQ | 71.35 | 18.85 | 71.38 | 19.36 | .993 | .943 |

Note. WAB = Western Aphasia Battery; AQ = Aphasia Quotient; LQ = Language Quotient; CQ = Cortical Quotient.

Finally, the same analyses (both ICCs and paired-samples t tests) were performed to analyze the differences between first and second administrations (regardless of whether these were in-person or videoconference administrations). All ICCs were found to be excellent (i.e., > .9), and all t tests were nonsignificant at an α level of .01.

Fidelity and Reliability

Assessment fidelity, as measured by percentage of items on the checklist with a score of 1/1, was 94% for in-person administrations and 92% for videoconference administrations. Assessment fidelity was 91% for Administrator 1 and 95% for Administrator 2. In-person versus videoconference and Administrator 1 versus Administrator 2 comparisons indicate a high degree of assessment fidelity at the midpoint of the study. For the limited number of evaluated items that did not receive a score of 1/1 in the checklist, no clear patterns of administrator error emerged. In terms of reliability, ICCs were conducted comparing initial evaluator score with independent rater score showing a correlation coefficient of .991 (99% CI [.982, .995], p < .001), indicating a high degree of interrater reliability.
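The fidelity percentage described above is simple arithmetic over the binary checklist. The sketch below shows the calculation for a single administration; the function name and the example checklist are hypothetical, and how percentages were pooled across administrations is not specified in the text.

```python
def fidelity_percent(checklist):
    """Percentage of binary checklist items scored 1 (i.e., 1/1)."""
    if not checklist:
        raise ValueError("checklist is empty")
    if any(item not in (0, 1) for item in checklist):
        raise ValueError("checklist items must be 0 or 1")
    return 100 * sum(checklist) / len(checklist)


# Hypothetical 32-item checklist with two items not met (not study data).
example = [1] * 30 + [0] * 2
print(fidelity_percent(example))
```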

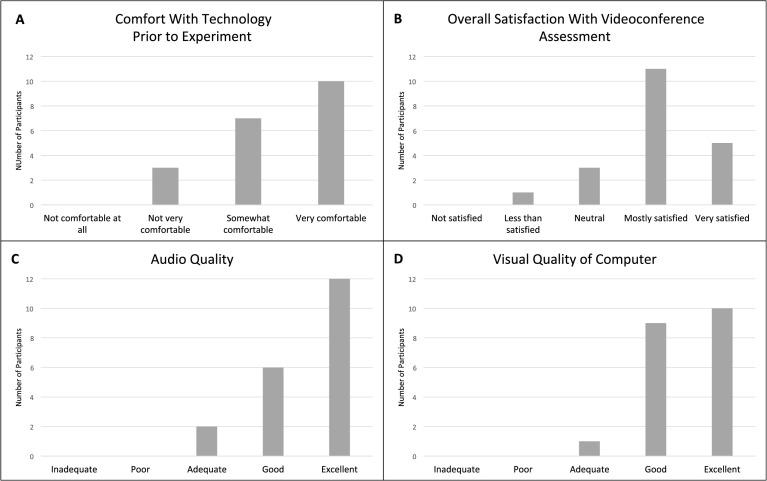

Satisfaction Survey

The survey results revealed that 85% of participants did not show a preference for one form of administration over the other (see Figure 3). Of the three individuals who endorsed being more satisfied with in-person assessment, two had reported not feeling comfortable with technology prior to the study. One stated she had no concerns with in-person testing and that videoconference testing added an additional level of difficulty. Eighty percent of PWA reported being either mostly or very satisfied with the videoconference testing, 15% reported being neutral about the administration method, and only one individual said she was less than satisfied with the videoconference administration. This was the same participant who reported it was easier for her to complete in-person testing. However, all individuals reported that they would be willing to complete teleassessment again. Additionally, participants endorsed good audio and visual quality (for more detailed information, refer to Figure 3).

Figure 3.

Satisfaction survey results. (A) Comfort with technology use prior to the experiment. (B) Overall satisfaction with the videoconference assessment. (C) Rating of audio quality for video assessment. (D) Rating of video quality for video assessment. Survey results demonstrate comfort and overall satisfaction with the technology used during the videoconference and overall assessment experience.

Individual Participant Analyses

Although we found robust results indicating that the two methods of WAB-R administration may be used interchangeably, there were some individual-level inconsistencies that should be highlighted (see Table 4 for individual scores). Of note, five participants (P8, P9, P14, P18, and P19) showed changes in aphasia classification per the WAB-R Classification Criteria between the first and second administrations. In three of five cases, this was due to variation in the Repetition score across the boundary of 7/10 that distinguishes conduction from anomic aphasia (Participants 8, 18, and 19). In these cases, Repetition scores were not consistently higher in either videoconference or in-person assessment. In two of five cases, classification change was due to variation in the Auditory Verbal Comprehension score, higher in one instance for in-person assessment (Participant 14) and higher in the other for videoconference (Participant 9). Only one of these five participants falls in the group that showed greater than a 5-point change in AQ (Participant 19), showing that WAB-R classification can change while overall severity rating remains constant. It should also be noted that Participants 8, 9, and 18 reported being involved in individual treatment in the 6 months preceding the study and that this treatment had concluded prior to study onset. Participant 14 reported being involved in treatment in the 6 months preceding the study, and it is not known whether this treatment was ongoing.

Table 4.

Aphasia Quotient (AQ), Language Quotient (LQ), and Cortical Quotient (CQ) for in-person and videoconference administrations.

| Participant | In-person AQ | In-person LQ | In-person CQ | In-person aphasia type | Videoconference AQ | Videoconference LQ | Videoconference CQ | Videoconference aphasia type | Change AQ | Change LQ | Change CQ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 89.90 | 90.90 | 91.00 | Anomic | 93.20 | 91.60 | 91.93 | Anomic | 3.30 | 0.70 | 0.93 |
| 2 | 44.60 | 44.60 | 53.80 | Broca's | 39.20 | 39.80 | 48.98 | Broca's | 5.40 a | 4.80 | 4.82 |
| 3 | 98.20 | 94.40 | 95.53 | Anomic | 98.10 | 96.30 | 96.72 | Anomic | 0.10 | 1.90 | 1.19 |
| 4 | 84.10 | 74.10 | 76.42 | Anomic | 85.40 | 74.60 | 78.92 | Anomic | 1.30 | 0.50 | 2.50 |
| 5 | 37.80 | 36.70 | 45.07 | Broca's | 40.40 | 38.20 | 45.82 | Broca's | 2.60 | 1.50 | 0.75 |
| 6 | 49.80 | 47.10 | 48.28 | Wernicke's | 49.30 | 48.00 | 47.53 | Wernicke's | 0.50 | 0.90 | 0.75 |
| 7 | 73.60 | 66.20 | 74.08 | Anomic | 76.90 | 69.70 | 75.88 | Anomic | 3.30 | 3.50 | 1.80 |
| 8 | 74.40 | 74.70 | 77.00 | Conduction b | 74.60 | 74.30 | 76.60 | Anomic b | 0.20 | 0.40 | 0.40 |
| 9 | 56.20 | 56.20 | 62.38 | Wernicke's b | 57.80 | 56.40 | 63.18 | Conduction b | 1.60 | 0.20 | 0.80 |
| 10 | 51.00 | 65.30 | 68.28 | Broca's | 54.40 | 65.60 | 69.90 | Broca's | 3.40 | 0.30 | 1.62 |
| 11 | 72.60 | 74.80 | 74.87 | Conduction | 75.20 | 76.90 | 76.83 | Conduction | 2.60 | 2.10 | 1.96 |
| 12 | 94.00 | 93.50 | 93.62 | Anomic | 94.20 | 94.40 | 93.42 | Anomic | 0.20 | 0.90 | 0.20 |
| 13 | 27.80 | 26.80 | 35.77 | Broca's | 28.40 | 29.80 | 36.83 | Broca's | 0.60 | 3.00 | 1.06 |
| 14 | 71.80 | 73.00 | 74.17 | Anomic b | 71.10 | 70.10 | 71.37 | TS b | 2.70 | 3.90 | 3.80 |
| 15 | 93.00 | 94.70 | 94.58 | Anomic | 91.70 | 94.10 | 94.13 | Anomic | 1.30 | 0.60 | 0.45 |
| 16 | 89.50 | 83.30 | 87.70 | Anomic | 90.20 | 83.20 | 87.90 | Anomic | 0.70 | 0.10 | 0.20 |
| 17 | 80.90 | 84.60 | 84.32 | Anomic | 89.60 | 90.70 | 88.72 | Anomic | 6.70 a | 5.10 a | 3.40 |
| 18 | 69.10 | 72.10 | 76.05 | Anomic b | 69.20 | 69.60 | 74.47 | Conduction b | 0.10 | 2.50 | 1.58 |
| 19 | 77.40 | 75.40 | 78.27 | Anomic b | 69.80 | 72.90 | 73.82 | Conduction b | 7.60 a | 2.50 | 4.45 |
| 20 | 26.50 | 30.60 | 35.75 | Broca's | 26.20 | 32.20 | 34.72 | Broca's | 0.30 | 1.60 | 1.03 |

Note. TS = transcortical sensory.

a Participants who showed more than a 5-point change on the AQ or LQ between Time Point 1 and Time Point 2.

b Participants whose aphasia type changed between Time Point 1 and Time Point 2.

Additionally, three participants presented with AQ or LQ changes greater than 5 points, a benchmark found to be a significant indicator of change (Gilmore et al., 2019). No clear pattern of change emerged among these three participants in terms of domain scores. Participant 2 showed an increase in AQ from 39.2 to 44.6 between the first (videoconference) and second (in-person) administrations. In Participant 2, this difference appears to be largely driven by increases in Auditory Verbal Comprehension and Repetition. This participant was older (72 years old) and had reported hearing difficulty, which may have partially contributed to the discrepancy in the scores between administrations, given that sound quality may have been degraded in the videoconference assessment. Of note, Participant 2 reported he had been involved in individual treatment in the 6 months preceding the study; it was unknown whether this treatment was ongoing.

In addition, Participant 17 showed a decrease in AQ from 89.6 to 80.9 and a decrease in LQ from 90.7 to 84.6 between the first (videoconference) and second (in-person) administrations. The AQ change was driven primarily by a 3-point decrease in the fluency score between the first and second time points, with more complete sentences produced by the participant during the first assessment. Upon review of this administration, one possible explanation for the discrepancy may be timing of the administrator prompt for full sentences. During the first administration, the clinician prompted during the participant's picture description, while the second clinician prompted prior to the response. The later prompting may have provided an opportunity to reflect on and modify performance, given that the participant produced more fluent sentences following the prompt provided midresponse.

Lastly, Participant 19 showed an increase in AQ from 69.8 to 77.4 between the first (videoconference) and second (in-person) administrations, with a slight increase across all domains. Given this participant's limited experience in the testing environment (both clinically and in a research setting), increased comfort with the testing environment at the second administration may have been a factor in improved performance.
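The post hoc screening for score changes greater than 5 points can be expressed as a short check. This is a sketch only: the function name and data layout are hypothetical, and the three AQ pairs are the in-person and videoconference values listed for Participants 2, 3, and 19 in Table 4.

```python
THRESHOLD = 5.0  # points; benchmark cited in the text (Gilmore et al., 2019)


def flag_changes(scores, threshold=THRESHOLD):
    """Return {participant: absolute change} where the change exceeds threshold.

    `scores` maps a participant ID to an (in_person, videoconference) pair.
    """
    flagged = {}
    for pid, (in_person, video) in scores.items():
        change = abs(in_person - video)
        if change > threshold:
            flagged[pid] = round(change, 2)
    return flagged


# AQ pairs for Participants 2, 3, and 19 as listed in Table 4.
aq = {2: (44.60, 39.20), 3: (98.20, 98.10), 19: (77.40, 69.80)}
print(flag_changes(aq))
```

The check uses the absolute difference, so it flags a participant regardless of which administration produced the higher score.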

Discussion

In this study, we examined the feasibility and validity of videoconference administration of the WAB-R in PWA. We found that participants with a variety of aphasia severities successfully completed videoconference assessment. Our participants exhibited a range of ages from 26 to 75 years, indicating that participants across the life span are able to complete videoconference testing. We also found that scores for the WAB-R administered in person as compared to via videoconference were highly correlated and therefore conclude that teleassessment is a reasonable option for this population. Finally, most participants endorsed being satisfied with videoconference assessment and would be willing to participate in future work involving this form of test administration.

The validation of teleassessment in this population is particularly important in order to provide greater accessibility to health care. Nearly 180,000 Americans acquire aphasia annually, with stroke as the primary cause, followed by traumatic brain injury (National Institute on Deafness and Other Communication Disorders, 2019). Many of these individuals suffer from comorbid physical disability, with 80% of stroke patients experiencing poststroke hemiparesis in the acute stage and more than 40% experiencing it chronically (Cramer et al., 1997). Due to significant physical disabilities, these individuals may have difficulty with independent transportation to medical appointments. Coupled with their communication difficulties, this means that high-quality medical care may not always be attainable for this population. Videoconference assessment provides a viable alternative to traveling to a medical facility.

In addition to making videoconference technology more easily accessible to PWA, it also needs to be made more accessible to clinicians. Validation studies such as this one are important to establish standardized guidelines for teleassessment in both clinical and research settings. A study conducted by Grogan-Johnson et al. (2015) surveyed academic programs that provide clinical training to graduate students in speech-language pathology. Their findings revealed that, of the 92 academic programs with a university clinic in the sample, 24% of the programs used interactive videoconferencing to deliver speech and language services. Additionally, they found that, among programs that offer videoconferencing services, only 1%–25% of graduate students receive direct clinical training in this type of administration (Grogan-Johnson et al., 2015). This scarcity in training needs to be mitigated as more clinicians are offering videoconference services in their clinic without receiving training in their graduate courses on the proper administration of these assessments. We believe that, by providing guidelines for administration, we can offer educational materials to clinicians utilizing this method of assessment. Additionally, we hope to share evidence supporting the efficacy of videoconference assessment for clinicians who may be considering using this method of assessment administration.

Several considerations need to be mentioned. First, most participants who completed this study required some level of caregiver assistance. Most of the assistance was provided in the initial setup stages of the experiment (e.g., opening the videoconference platform, turning on sound/microphone). Therefore, we recommend that a caregiver be available to assist with teleassessment. At the same time, the caregiver should provide no support beyond setup so that the test results remain valid. Another issue to consider is participant mobility. Individuals with hemiparesis should be provided a computer mouse if needed to aid in selection of items during the test administration. Finally, this study did not collect detailed measures of hearing and vision, which may be helpful in better understanding participant difficulty with using technology.

As noted in the results, five participants showed changes in aphasia classification per the WAB-R Classification Criteria between the first and second administrations. Although the actual scores changed minimally for these individuals, these small changes appeared to be enough to move them across the score ranges that define the classification subtypes. This variability is consistent with previous work demonstrating that clinical impressions may differ from assessment classification. For example, one group found only 64.3% agreement between WAB-R classifications and bedside classification by clinicians for individuals with acute aphasia (John et al., 2017). This discrepancy indicates the need to rely on not only standardized test classification but also clinical judgment in assessing aphasia subtype.

While three participants showed greater than 5-point differences between the two test administrations, no clear pattern of change emerged in terms of domain scores, the modality of administration, or the order of administration. Importantly, given the lack of trend in 5-point differences and aphasia classification scores related to in-person versus videoconference assessment and first versus second administration, these differences appear to be due to individual variability and not (a) systemic differences between videoconference and in-person assessment or (b) certain participants receiving treatment during the course of the study. Furthermore, Villard and Kiran (2015) observed that stroke-induced aphasia may co-occur with difficulty maintaining consistent performance on attention tasks; this may have implications for both linguistic and nonlinguistic task performance. Of note, one of these cases appeared to be due to a slight variation in administration between evaluators, highlighting the importance of test administration training and interrater calibration.

In conclusion, we believe there is a need for more validation of videoconference assessment administration in this population. Further work should expand to larger sample sizes, a more diverse patient population, and a variety of assessments for individuals with aphasia, including traditional standardized assessments and natural measures of conversation. It is important to understand the kinds of modifications for videoconferencing that can be made to aphasia assessments while maintaining performance equivalent to in-person test administration. Future studies in this field should also include more detailed information regarding hearing and vision that could affect participation. In addition, future work should determine how best to engage caregivers in teleassessment, including furthering our understanding of barriers to troubleshooting technological difficulties in the home environment.

Acknowledgments

This work was supported by National Institute on Deafness and Other Communication Disorders Grant U01DC014922 awarded to Swathi Kiran and National Institute on Deafness and Other Communication Disorders Advanced Research Training Grant T32DC013017. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

We would like to acknowledge and thank the lab members of the Boston University Aphasia Research Laboratory for helping to create administration guidelines for the Western Aphasia Battery–Revised that were utilized in this article, as well as Jiayi Ruan who assisted in data processing. Additionally, we would like to thank all of our participants for their time. Without them, this work would not be possible.

Appendix A

Modifications for Videoconference Administration

| Section | Modification |
|---|---|
| Conversational Questions | If clarification is needed, refer to clinician's location (i.e., have you been here (in my office) before?). |
| Picture Description | Share screen with patient and provide instructions from record form. |
| Yes/No Questions | 10. Are the lights on in this room? If clarification is needed, refer to clinician's location (i.e., “are the lights on in MY room”; “is the door closed in MY room”). 11. Is the door closed? Make sure the camera is positioned so the patient can see the door in the clinician's room. |
| Auditory Word Recognition | Share screen and controls. Ask the patient to move the mouse to point to the objects displayed on the screen. Provide instructions from record form. Items 37–42: “Desk/bed” can be replaced with “table” if necessary. “Door” can be replaced with “floor” if necessary. Items 54–60: “Left knee” has been replaced with “left eyebrow,” and “left ankle” has been replaced with “left eye.” |
| Sequential Commands | 4. “Point to the window, then to the door.” Can be replaced with “point to the floor, then to the ceiling” if needed. Make sure the patient has the book, pen, and comb on the table, and ask the patient to align them in front of him/her. Ask the patient to position the camera so you can see their manipulation of objects. If needed, ask for caregiver assistance for accurate setup. |
| Object Naming | Show the objects in front of the camera. Tactile cues cannot be provided. |
| Comprehension of Sentences | Share screen and controls. Ask the patient to move the mouse to point to the words displayed on the screen. Provide instructions from record form. |
| Reading Commands | Share the screen and ensure you can see the patient at the same time. Provide instructions from the record form. The command “draw a cross with your foot” has been replaced with “draw a cross with your finger.” |
| Written Word–Object Choice Matching | Share screen and controls. Ask the patient to move the mouse to point to the objects displayed on the screen. Provide instructions from the record form. |
| Written Word–Picture Choice Matching | Share screen and controls. Ask the patient to move the mouse to point to the objects displayed on the screen. Provide instructions from the record form. |
| Picture–Written Word Choice Matching | Share screen and controls. Ask the patient to move the mouse to point to the objects displayed on the screen. Provide instructions from the record form. |
| Writing Output | Share screen with patient and provide instructions from record form. Screenshot the patient's output to save for scoring. |
| Writing Dictated Words | Show the objects in front of the camera when needed. Screenshot the patient's output to save for scoring. |
| Alphabet and Numbers | Screenshot the patient's output to save for scoring. |
| Dictated Letters and Numbers | Screenshot the patient's output to save for scoring. |
| Copying a Sentence | Share screen with patient and provide instructions from record form. Screenshot the patient's output to save for scoring. |
| Drawing | Share screen with patient if needed to provide cues. Screenshot the patient's output to save for scoring. |
| Block Design | Share the screen and ask the patient to have the Kohs blocks in front of him/her. Make sure the camera is positioned so the patient can see the Demonstration Item. Ask patient to position the camera so you can see his/her manipulation of objects. Depending on the type of webcam the patient is using, he/she may need to switch back and forth to be able to see the stimulus on the screen while completing the figure and then show the completed response. If needed, ask for caregiver assistance for setup. |
| Calculation | Share screen and controls with patient. Provide instructions from record form. |
| Raven's Coloured Progressive Matrices | Share screen and controls with patient. Provide instructions from record form. |

Appendix B

Clarifications to Western Aphasia Battery–Revised Scoring for Interrater Consistency Within This Study

| Section | Question | Scoring: Rule | Scoring: Example/exception |
|---|---|---|---|
| Conversational Questions | All | Accept paraphasic errors if they are easily recognizable. | Exception: 4. What is your full address? Consider if you would be able to send a letter based on what the patient said. If the answer is “no,” do not accept it. |
| Sequential Commands | Raise your hand | Must raise one hand; score 0 if patient raises both hands. | |
| | Shut your eyes | Must shut both eyes for an extended period of time. Do not accept blinking. | |
| | All | No partial credit given in this section (i.e., score for no. 5 can be 0, 2, or 4). | 1. “Point to the pen” receives a score of 2 if the whole command is performed, would not receive 1 point for just pointing. |
| | All | No credit given for reversing command. | 8. “Point to the pen with the comb.” If patient points with the comb to the pen, he/she would receive a score of 0. |
| Repetition | All | Phonemic paraphasia defined as one phoneme in error or one consonant cluster in error. | 13. “Deliciously fresh baked bread” Total score: 4/8 (Target: “Delicious freshly baked bread”) |
| Object Naming | All | Phonemic paraphasia defined as one phoneme in error or one consonant cluster in error. If phonemic paraphasia after cueing, score 0. | |
| Word Fluency | All | There can be more than 1 phoneme in error as long as the animal is recognizable. If the patient gives you the category of the item as well as items in the category, count both the category and the items in the category. | “catsen” for cat; “bird, parakeet, sparrow, seagull” Total points: 4 |
| Reading Commands | 5. Point to the chair and then to the door | Per manual, score “then to the door” as the underlined section. Patient must read and perform full command to get full credit. | Patient read: “Point to the chair and open the door” Reading score: 1/2. Patient pointed to the chair and opened the door. Command score: 1/2 |
| Writing Upon Request | All | Score 1 point for each section (cannot get full credit if not all elements are included): • First name • Last name • Street number • Street name • City • State | |
| Writing Output | All | • Scoring up to 10 points applies only to single words. • Words in short sentences count until maximum of 34. • Do not count perseverations as multiple points. • Take off 0.5 for spelling, morphosyntactic, and paraphasic errors in written output. | “the the the dog” (only count 1 “the”); “The boy is fly the ky” Total score: 7 (complete sentence; −0.5 paraphasic error; −0.5 morphosyntactic error) |
| Writing to Dictation | All | If patient writes “5” instead of “five,” give a point in this section. | |
| Apraxia | 2. Salute | Patient does not have to release the salute for full credit. | |
| | 9. Pretend to sniff a flower | Patient does not have to imitate holding a flower for full credit. | |
| | 10. Pretend to blow out a match | Patient does not have to imitate holding a match for full credit. | |
| | 11. Pretend to use a comb | Patient's hand cannot function as object. | Exception: Pretend to make a phone call, hand can function as phone |
| | 12. Pretend to use a toothbrush | Patient's hand cannot function as object. Patient does not have to open mouth for full credit. | |
| | 13. Pretend to use a spoon to eat | Patient does not have to open mouth for full credit. | |
| | 16. Pretend to start and drive a car | Patient does not need to gearshift, just turn on car and use steering wheel for full credit. | |
| | 18. Pretend to fold a sheet of paper | Patient does not need to crease the paper for full credit. | |

Appendix C

Clarifications to Western Aphasia Battery–Revised Administration for Interrater Consistency Within This Study

| Section | Question | Administration: Rule | Administration: Example |
|---|---|---|---|
| Conversational Questions | 6. Why are you here (in the hospital)? What seems to be the trouble? | If patient is unclear about what you are asking you can rephrase the question based on the current context. | “Why did we ask YOU to do this study?” |
| Picture Description | | If patient does not spontaneously begin speaking in sentences, prompt for complete sentences when they have uttered 3–5 single words. Do not give this prompt during the initial instructions. | |
| Object Naming | All | For compound words, only provide tactile then semantic cue (not phonemic); for all other words, only provide tactile then phonemic cue (not semantic). Compound words: safety pin, toothbrush, screwdriver, paper clip, rubber band. | |
| Writing Dictated Words | All | When to cue: If patient writes anything, do not cue; if no response or does not understand task, show object; if still no response or unrecognizable word written, then provide oral spelling (unrecognizable: limited overlap with target form). | |
| Apraxia | 17. Pretend to knock at a door and open it | If patient knocks on a surface, cue them to “pretend” to do it. | |
| Drawing | All | Discontinue each item after 30 s. If you need to prompt patient to make the drawing more complete, the prompt and subsequent changes to the drawing fall within the 30 s. If the patient draws the wrong figure (e.g., circle for square), show stimulus for 10 s and then restart the 30-s timer. | |

Appendix D

Administration Fidelity Checklist

Participant code:

Date of fidelity check:

Date of test administration:

Rater:

Administration type (in person vs. videoconference):

Administrator:

Rate 1 if all items in section administered accurately with accurate script. Rate 0 if any items in section not administered accurately or script inaccurate. Rate N/A if not able to rate (e.g., no video available).

| Section | Subtest | Specific general scoring notes | Specific online scoring notes | Rate 1, 0, or N/A |
|---|---|---|---|---|
| Spontaneous Speech | Conversational Questions | | | |
| | Picture Description | | Screen shared | |
| Auditory Verbal Comprehension | Yes/No Questions | | Clarify which environment you are referring to as needed | |
| | Auditory Word Recognition | | Screen shared; ask examinee to adjust camera as needed to view responses | |
| | Sequential Commands | Administer example if examinee appears not to understand | Items aligned left to right from examinee angle; camera tilted for clinician view | |
| Repetition | | | | |
| Naming and Word Finding | Object Naming | Administer hierarchy of tactile, phonemic, and semantic cues if indicated | | |
| | Word Fluency | Prompt with examples if needed | | |
| | Sentence Completion | | | |
| | Responsive Speech | | | |
| Reading | Comprehension of Sentences | Prompt examinee to point to item if examinee is not pointing to item | Screen shared | |
| | Reading Commands | Repeat directions as needed | Screen shared | |
| | Written Word–Object Choice Matching | | Screen shared | |
| | Written Word–Picture Choice Matching | | Screen shared | |
| | Picture–Written Word Choice Matching | | Screen shared | |
| | Spoken Word–Written Word Choice Matching | | Screen shared | |
| | Spelled Word Recognition | Administer example if examinee appears not to understand | | |
| | Spelling | Administer example if examinee appears not to understand | | |
| Writing | Writing Upon Request | Prompt examinee to write complete address if they only write name | Ask to see written output | |
| | Writing Output | Prompt examinee to write in sentences as needed | Ask to see written output | |
| | Writing to Dictation | Break down sentence into smaller parts as needed | Ask to see written output | |
| | Writing Dictated Words | Provide object or oral spelling if examinee appears not to understand | Show object in front of camera if examinee appears not to understand | |
| | Alphabet and Numbers | | | |
| | Dictated Letters and Numbers | | | |
| | Copying a Sentence | | Screen shared | |
| Apraxia | | Provide model if examinee scores 0 or 1 independently | | |
| Constructional, Visuospatial, and Calculation | Drawing | Provide picture if examinee appears not to understand | Screen shared to provide picture if examinee appears not to understand | |
| | Block Design | Complete demonstration of trial item; prompt examinee to complete demonstration of trial item | Participant has been given blocks; examinee has matching color blocks on shared screen | |
| | Calculation | Read aloud all 12 items | Screen shared | |
| | Raven's Coloured Progressive Matrices | | Screen shared | |
| Overall | | No more than 1 repetition per item (unless extenuating circumstances such as interruption or audio issues) | | |
| | | Test materials used accurately; working surface cleared of extra items; accurate items provided to patient | | |
| Notes | | | | |
| Total Correct | | | | |
| Percentage Correct | | | | |
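
For readers who tally the checklist electronically, the "Total Correct" and "Percentage Correct" rows could be computed along the following lines. This is a minimal sketch, not the procedure used in this study: the function name `summarize_fidelity` and the example ratings are hypothetical, and the choice to exclude N/A ratings from the denominator is an assumption that the checklist itself does not specify.

```python
from typing import Dict, Optional, Tuple


def summarize_fidelity(
    ratings: Dict[str, Optional[int]]
) -> Tuple[int, int, Optional[float]]:
    """Summarize one fidelity checklist.

    `ratings` maps each subtest name to 1 (administered accurately),
    0 (not administered accurately), or None (N/A, could not be rated).
    Returns (total_correct, items_rated, percentage_correct).
    ASSUMPTION: N/A items are left out of the denominator.
    """
    # Keep only the items that could actually be rated.
    rated = [r for r in ratings.values() if r is not None]
    for r in rated:
        if r not in (0, 1):
            raise ValueError(f"rating must be 1, 0, or None (N/A), got {r!r}")

    total_correct = sum(rated)
    items_rated = len(rated)
    # Percentage is undefined when nothing could be rated.
    percentage = 100.0 * total_correct / items_rated if items_rated else None
    return total_correct, items_rated, percentage


# Hypothetical ratings for illustration only (not study data).
example = {
    "Conversational Questions": 1,
    "Picture Description": 1,
    "Yes/No Questions": 0,
    "Block Design": None,  # N/A, e.g., no video available
}
total, rated_count, pct = summarize_fidelity(example)
print(total, rated_count, pct)
```

If a lab prefers to count N/A items against the total, only the denominator needs to change; whichever convention is chosen should be applied consistently across raters.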

References

- American Speech-Language-Hearing Association. (2016). 2016 SIG 18 telepractice survey results. https://www.asha.org

- Brennan D., Tindall L., Theodoros D., Brown J., Campbell M., Christiana D., Smith D., Cason J., & Lee A. (2010). A blueprint for telerehabilitation guidelines. International Journal of Telerehabilitation, 2(2), 31–34. https://doi.org/10.5195/ijt.2010.6063

- Cherney L. R., & Van Vuuren S. (2012). Telerehabilitation, virtual therapists, and acquired neurologic speech and language disorders. Seminars in Speech and Language, 33(3), 243–258. https://doi.org/10.1055/s-0032-1320044

- Cramer S. C., Nelles G., Benson R. R., Kaplan J. D., Parker R. A., Kwong K. K., Kennedy D. N., Finklestein S. P., & Rosen B. R. (1997). A functional MRI study of subjects recovered from hemiparetic stroke. Stroke, 28(12), 2518–2527. https://doi.org/10.1161/01.STR.28.12.2518

- Duffy J. R., Werven G. W., & Aronson A. E. (1997). Telemedicine and the diagnosis of speech and language disorders. Mayo Clinic Proceedings, 72(12), 1116–1122. https://doi.org/10.4065/72.12.1116

- American Hospital Association. (2019). Fact sheet: Telehealth. https://www.aha.org/system/files/2019-02/fact-sheet-telehealth-2-4-19.pdf

- Gilmore N., Dwyer M., & Kiran S. (2019). Benchmarks of significant change after aphasia rehabilitation. Archives of Physical Medicine and Rehabilitation, 100(6), 1131–1139.e87. https://doi.org/10.1016/j.apmr.2018.08.177

- Goodglass H., Kaplan E., & Barresi B. (2001). BDAE-3: Boston Diagnostic Aphasia Examination–Third Edition. Lippincott Williams & Wilkins.

- GoToMeeting. (2018). GoToMeeting and HIPAA Compliance. https://logmeincdn.azureedge.net/gotomeetingmedia/-/media/pdfs/lmi0098b-gtm-hipaacompliance-guide-final.pdf

- Grogan-Johnson S., Meehan R., McCormick K., & Miller N. (2015). Results of a national survey of preservice telepractice training in graduate speech-language pathology and audiology programs. Contemporary Issues in Communication Science and Disorders, 42(Spring), 122–137. https://doi.org/10.1044/cicsd_42_S_122

- Hall N., Boisvert M., & Steele R. (2013). Telepractice in the assessment and treatment of individuals with aphasia: A systematic review. International Journal of Telerehabilitation, 5(1), 27–38. https://doi.org/10.5195/ijt.2013.6119

- Hill A. J., Theodoros D. G., Russell T. G., Ward E. C., & Wootton R. (2009). The effects of aphasia severity on the ability to assess language disorders via telerehabilitation. Aphasiology, 23(5), 627–642. https://doi.org/10.1080/02687030801909659

- John A., Javali M., Mahale R., Mehta A., Acharya P., & Srinivasa R. (2017). Clinical impression and western aphasia battery classification of aphasia in acute ischemic stroke: Is there a discrepancy? Journal of Neurosciences in Rural Practice, 8(1), 74–78. https://doi.org/10.4103/0976-3147.193531

- Kaplan E., Goodglass H., & Weintraub S. (2001). Boston Naming Test–Second Edition. Lippincott Williams & Wilkins.

- Kertesz A. (2007). The Western Aphasia Battery–Revised (WAB-R). Pearson. https://doi.org/10.1037/t15168-000

- Koo T. K., & Li M. Y. (2016). A guideline of selecting and reporting intraclass correlation coefficients for reliability research. Journal of Chiropractic Medicine, 15(2), 155–163. https://doi.org/10.1016/j.jcm.2016.02.012

- National Institute on Deafness and Other Communication Disorders. (2019). Aphasia. https://www.nidcd.nih.gov/health/aphasia

- National Business Group on Health. (2017). Large U.S. employers project health care benefit costs to surpass $14,000 per employee in 2018, National Business Group on Health survey finds. https://www.businessgrouphealth.org/news/nbgh-news/press-releases/press-release-details/?ID=334

- Palsbo S. E. (2007). Equivalence of functional communication assessment in speech pathology using videoconferencing. Journal of Telemedicine and Telecare, 13(1), 40–43. https://doi.org/10.1258/135763307779701121

- Perinetti G. (2018). StaTips Part IV: Selection, interpretation and reporting of the intraclass correlation coefficient. South European Journal of Orthodontics and Dentofacial Research, 5(1), 3–5. https://doi.org/10.5937/sejodr5-17434

- Richardson J. D., Dalton Hudspeth S. G., Shafer J., & Patterson J. (2016). Assessment fidelity in aphasia research. American Journal of Speech-Language Pathology, 25(4S), S788–S797. https://doi.org/10.1044/2016_AJSLP-15-0146

- Telehealth Basics. (2019). https://www.americantelemed.org/resource/why-telemedicine/

- Theodoros D., Hill A., Russell T., Ward E., & Wootton R. (2008). Assessing acquired language disorders in adults via the Internet. Telemedicine and E-Health, 14(6), 552–559. https://doi.org/10.1089/tmj.2007.0091

- Villard S., & Kiran S. (2015). Between-session intra-individual variability in sustained, selective, and integrational non-linguistic attention in aphasia. Neuropsychologia, 66, 204–212. https://doi.org/10.1016/j.neuropsychologia.2014.11.026

- Wallace S. J., Worrall L., Rose T., Le Dorze G., Breitenstein C., Hilari K., Babbitt E., Bose A., Brady M., Cherney L. R., Copland D., Cruice M., Enderby P., Hersh D., Howe T., Kelly H., Kiran S., Laska A. C., Marshall J., … Webster J. (2019). A core outcome set for aphasia treatment research: The ROMA consensus statement. International Journal of Stroke, 14(2), 180–185. https://doi.org/10.1177/1747493018806200

- Zoom. (2017). HIPAA Compliance Guide. https://zoom.us/docs/doc/Zoom-hipaa.pdf

- Zoom. (2018). Zoom video communications. https://www.zoom.us/
