Abstract

Mental health clinicians and administrators are increasingly asked to collect and report treatment outcome data despite numerous challenges in selecting and using instruments in routine practice. Measurement-based care (MBC) is an evidence-based practice for improving patient care. We propose that data collected from MBC processes with patients can also be strategically leveraged by agencies to support clinicians and respond to accountability requirements. MBC data elements are outlined using the Precision Mental Health Framework (Bickman, Lyon, & Wolpert, 2016), practical guidance is provided for agency administrators, and conceptual examples illustrate strategic applications of one or more instruments to meet various needs throughout the organization.

Keywords: measurement-based care, organizational quality improvement, progress monitoring

Measurement-based care (MBC) is the systematic use of patient-reported progress and outcome instruments to inform treatment decisions (Scott & Lewis, 2015). A considerable body of research using rigorous, large-scale designs and meta-analyses supports the effectiveness of MBC. Twenty-one randomized controlled trials comparing MBC to usual care among a variety of patient populations and settings were recently identified (Lewis et al., 2019), and fifty-one studies were reviewed to summarize the MBC literature (Fortney et al., 2017). Based on meta-analyses, MBC effect sizes on patient outcomes range widely, from 0.28 to 0.70 (Lambert, Whipple & Kleinstauber, 2018; Shimokawa, Lambert & Smart, 2010). Moderate to large effect sizes of 0.49 to 0.70 are attributed to MBC with any feedback component, particularly when feedback is provided to both the patient and clinicians, or when clinical support tools are provided (Fortney et al., 2017; Krageloh, Czuba, Billington, Kersten, & Siegert, 2015; Lambert et al., 2018; Shimokawa et al., 2010). MBC effects are also in the moderate range of 0.33 to 0.39 for cases “not on track” (i.e., at risk of treatment failure) as compared to those “on track” (Lambert et al., 2003; 2018).

A lack of clear terminology to describe MBC and distinguish it from other terms and concepts such as feedback-informed treatment, routine outcome monitoring, progress monitoring and feedback, concurrent recovery monitoring, data-driven decision making, and evidence-based assessment has hindered consolidation of the literature (Hawkins, Baer, & Kivlahan, 2008; Hunsley & Mash, 2007; Lewis et al., 2019; Lyon, Borntrager, Nakamura, & Higa-McMillan, 2013). We prefer the term MBC, which emphasizes not only the collection and review of patient data but also collaborative decision-making with the patient, making it an individualized, person-centered approach to enhance clinical care (Lewis et al., 2019; Resnick & Hoff, 2019).

Of note, a recent Cochrane review of MBC in adult mental health settings found little to no benefit of MBC as compared to treatment as usual (Kendrick et al., 2016). Another Cochrane review of MBC in youth mental health settings found that the evidence was inconclusive (Bergman et al., 2018). Because of the Cochrane methodology, which excluded studies where therapists utilized outcome measurement and feedback to adjust the treatment regimen, these findings are challenging to interpret in the context of the current MBC definition that emphasizes using progress data to make collaborative treatment decisions with the patient as a critical aspect, and perhaps mechanism of action (Resnick & Hoff, 2019; Scott & Lewis, 2015; Lewis et al., 2019).

There are other notable gaps in the MBC evidence base. First, specific mechanisms of action to explain how MBC improves patient outcomes are not well known, though mostly qualitative research supports hypotheses that MBC increases patient involvement, therapeutic alliance, patient-provider communication, and treatment tailoring to specific targets based on patient report (Dowrick et al., 2009; Eisen, Dickey, & Sederer, 2000; Krageloh et al., 2015). One quantitative study found that clinicians who received feedback from MBC measures addressed client concerns more quickly than those who did not receive feedback, which suggests that the “alerting” function of MBC may be a key mechanism (Douglas et al., 2015). Second, some of the strongest effect sizes of MBC on patient outcomes are for patients “not on track”, so the effectiveness of MBC for “on track” patients is less clear (Lambert et al., 2003). Third, further research is needed to understand the influence of patient characteristics, diagnoses, treatment settings, and contexts on rates of change, deterioration, and outcomes (Lambert et al., 2018; Warren, Nelson, Mondragon, Baldwin, & Burlingame, 2010). Finally, there is limited consensus on which patient-reported outcome measures are ideal in terms of relevance, usability, sensitivity to change over time, and availability of norm references for adult and/or youth patients of diverse cultural and linguistic backgrounds (Becker-Haimes et al., 2020; Kendrick et al., 2016; Trujols, Solà, Iraurgi, Campins, Ribalta, & Duran-Sindreu, 2020).

Nonetheless, measurement-based care (MBC) is increasingly emphasized as a way to elevate the quality of mental health services and demonstrate the value of psychosocial treatments (Fortney et al., 2017; Lewis et al., 2019). Although MBC is usually regarded as an evidence-based practice to improve efficiency and treatment outcomes for patient care (Delgadillo et al., 2017; Fortney et al., 2017), aggregating MBC data may also help agencies meet accountability requirements to demonstrate care quality and patient outcomes.

We propose that clinical administrators can leverage the data collected as part of MBC processes to fulfill organizational quality improvement goals and respond to accountability requirements. This paper offers practical guidance and recommendations for mental health agencies to select and use instruments in a way that adds value at the patient, clinician, and agency level. We focus primarily on how agencies can select and adopt instruments to support MBC as a best practice and strategically leverage those data for multiple uses within the agency.

Mental Health Care Accountability Requirements and the Role of MBC

MBC is encouraged by clinical practice guidelines (American Psychological Association Task Force on Evidence-Based Practice for Children and Adolescents, 2008) and recommended as central to evidence-based practice for psychosocial treatment in a recent consensus statement endorsed by over two dozen professional organizations (Coalition for the Advancement and Application of Psychological Science, 2018). The data from MBC, as an evidence-based practice to elevate the standard of care for all patients served in an organization, can be aggregated across patients and providers to respond to accountability requirements and facilitate broader quality improvement processes.

For example, federal agencies urge organizations to use routine measurement and feedback to provider and administrator teams about performance on quality improvement targets, as a foundation for quality improvement in health care (Agency for Healthcare Research and Quality, September 2018; The National Quality Forum, 2018). When quality improvement targets are related to care quality, MBC implementation results can be measured and reviewed to achieve implementation targets and MBC data can be aggregated to monitor how well patient needs are being met.

Measurement-based quality improvement has also been recognized as a key component of a value-based approach to healthcare (Baumhauer & Bozic, 2016; Fortney et al., 2017; Porter, Larsson, & Lee, 2016), where reimbursement is based on patient-reported quality, rather than quantity, of care (Centers for Medicare & Medicaid Services, 2016; Hermann, Rollins, & Chan, 2007). As of 2016, 30% of Medicare payments were shifted from fee-for-service to value-based payment models, with a goal of 50% by 2018 (Centers for Medicare & Medicaid Services, 2016). MBC can thus help organizations align with value-based payment models by providing data about care quality and patient outcomes.

Regulatory bodies such as the Joint Commission are also beginning to require MBC. The Joint Commission is a national U.S. accreditation organization for public health care quality; more than 21,000 health care organizations voluntarily pursue its accreditation to ensure they meet care quality standards (The Joint Commission, 2011). In 2018, the Joint Commission began requiring primary care and behavioral health care clinicians who treat patients with mental health and substance use disorders in accredited organizations to use standardized assessment instruments completed during encounters to monitor individual progress and inform individual treatment planning and evaluation of outcomes (The Joint Commission, 2018).

External requirements like these may be important drivers of MBC adoption in clinical practice, which has historically been slow. For example, fewer than 20% of clinicians report collecting data during treatment (Bickman et al., 2000; Gilbody, House, & Sheldon, 2001; Jensen-Doss et al., 2016). Low MBC implementation has been attributed to barriers at numerous levels, including patient (e.g., burden, time, concern about confidentiality breach), practitioner (e.g., attitudes, knowledge, self-efficacy, administrative burdens of time and resources), organizational (e.g., training resources, leadership support, climate and culture), and broader system structure and requirements (e.g., incentives, consensus about benefits); see Lewis et al., 2019 for an in-depth discussion of barriers to MBC implementation. However, emerging evidence suggests that organizational support for MBC through internal policies requiring assessment as part of treatment is correlated with more positive clinician views of MBC assessment tools (Jensen-Doss, Haimes et al., 2018) and organizational factors such as internal leaders who champion MBC can facilitate MBC implementation (Gleacher et al., 2016). Some system-level efforts for large-scale adoption of MBC have been made in places like Washington state (Unützer et al., 2012), Hawaii (Kotte et al., 2016; Nakamura, Higa-McMillan, & Chorpita, 2012), the United States Departments of Defense and Veterans Administration (Pomerantz, Kearney, Wray, Post & McCarthy, 2014; Resnick & Hoff, 2019), the United Kingdom (Hall et al., 2014), and Australia (Meehan, McIntosh, & Bergen, 2006; Trauer, Gill, Pedwell, & Slattery, 2006). These efforts have been met with mixed success, but findings indicate that leadership and organizational support as well as perceived clinical utility of measures by clinicians are pertinent to MBC implementation success (Kotte et al., 2016).

To promote better MBC implementation, the ideal situation is a synergistic one, in which clinicians gather data that are useful to their clinical decision-making and provide useful information for agency-wide quality improvement purposes. The field has been primarily focused on the former, without clear linkages to the latter. The current paper discusses (1) MBC data elements, (2) types of assessment instruments, (3) selection of instruments for value and purpose, (4) data-informed decisions, and (5) three conceptual examples of leveraging instruments for their value to patient care, clinician support/supervision, and the agency.

MBC Data Elements

MBC is typically focused on patient progress and outcomes, so clinical administrators must be thoughtful and often creative about how to incorporate this kind of information into agency-wide quality improvement goals. Broadly, administrators are likely concerned with a wide variety of quality of care measures including those that assess the structure of the service delivery system (e.g., number and qualifications of clinicians, patient to clinician ratios), processes such as the type of care delivered, and patient outcomes (Donabedian, 2002). In this paper we do not address the full set of quality indicators, but primarily focus on using MBC to monitor processes and outcomes at the agency level.

The Precision Mental Health Framework provides a wide range of data elements that agency administrators might consider to measure and track service processes and patient outcomes (Bickman et al., 2016). Precision mental health is an approach to prevention and intervention focused on the needs, preferences, and prognostic possibilities for any given individual via initial assessment, ongoing monitoring, and individualized feedback. Relevant data elements for MBC include (1) personal data (e.g., diagnostic, developmental, or cultural variables), (2) aims and risks data (i.e., collaboratively determined foci and expected outcomes of the intervention), (3) service preference data (i.e., patient choices from among appropriate intervention options), (4) intervention data (i.e., dose/intensity, duration, fidelity, cost, and timing of services), (5) progress data (i.e., routine collection of information about changes in intervention targets), (6) mechanisms data (i.e., variables that link interventions and outcomes), and (7) contextual data (i.e., moderating and mediating variables within and external to the service system). Although each data element provides value as part of a comprehensive approach to MBC, an agency’s quality improvement goals are likely most related to aims and risks, progress, intervention, and mechanisms data.

First, aims and risks are critical to patient-centered personalization of both process and outcome targets. Aims data are individualized goals on which the patient and clinician mutually agree. Risks are any anticipated side effects or pitfalls that might negatively impact patient progress. This information is often more pertinent to treatment decision-making than personal data because patients with similar backgrounds, symptoms, and genetics are likely to have different objectives for treatment. Because aims and risks data include the expected outcomes of treatment (Bickman et al., 2016), they are also critical for the development of idiographic, or individualized, monitoring targets (see the discussion of Goal Attainment Scaling and other individualized methods for monitoring aims and risks below). Aims and risks data may be especially beneficial for consumers who are seeking services to achieve goals that are not commonly captured by standardized assessments, such as those with substance use disorders, comorbidities, serious mental illness, or others who have goals related to discrete functional improvements in relationships, occupation, education, or daily living domains (Stout, Rubin, Zwick, Zywiak, & Bellino, 1999; Tabak, Link, Holden, & Granholm, 2015). Mental health service systems that collect these data in a systematic and routine manner will be well positioned to advance the personalization and patient-centeredness of the services they deliver.

Second, progress data continue to be the cornerstone of MBC and the primary emphasis of mental health services and value-based care (Borntrager & Lyon, 2015; Fortney et al., 2017; The Joint Commission, 2018). Standardized instruments can be used as progress data to track within-patient change over time, relative to baseline, the previous session, and/or normative benchmarks. Progress data can also be used to aggregate outcomes at the clinician, clinic, or larger service system levels. Use of progress data through practices referred to as “progress monitoring and feedback” and/or “routine outcome monitoring” has been consistently associated with positive outcomes on patient symptoms, functioning, and patient-clinician communication over time (Carlier, Meuldijk, van Vliet, van Fenema, van der Wee, & Zitman, 2012; Knaup, Koesters, Schoefer, Becker & Puschner, 2009; Lambert, Whipple & Kleinstauber, 2018).

Third, intervention (e.g., dose/intensity, fidelity, duration, cost, practices or modality) and mechanisms (e.g., engagement, therapeutic alliance, skill development) data work in tandem to explain treatment success or failure and make progress data actionable (Bickman, Lyon & Wolpert, 2016). Both are important to understanding how treatment affects outcomes and to informing ongoing quality improvement. These data elements are likely useful to clinicians (e.g., adjusting the treatment approach if the patient-clinician alliance deteriorates) and administrators (e.g., prioritizing supervision topics based on patterns observed in aggregate treatment data). In fact, the quality of treatment elements or practices, and the extent to which those practices are derived from the evidence base, is a critical type of intervention data to monitor for individual patients, among clinicians and providers, across clinics, and agency-wide (Higa-McMillan, Powell, Daleiden, & Mueller, 2011).

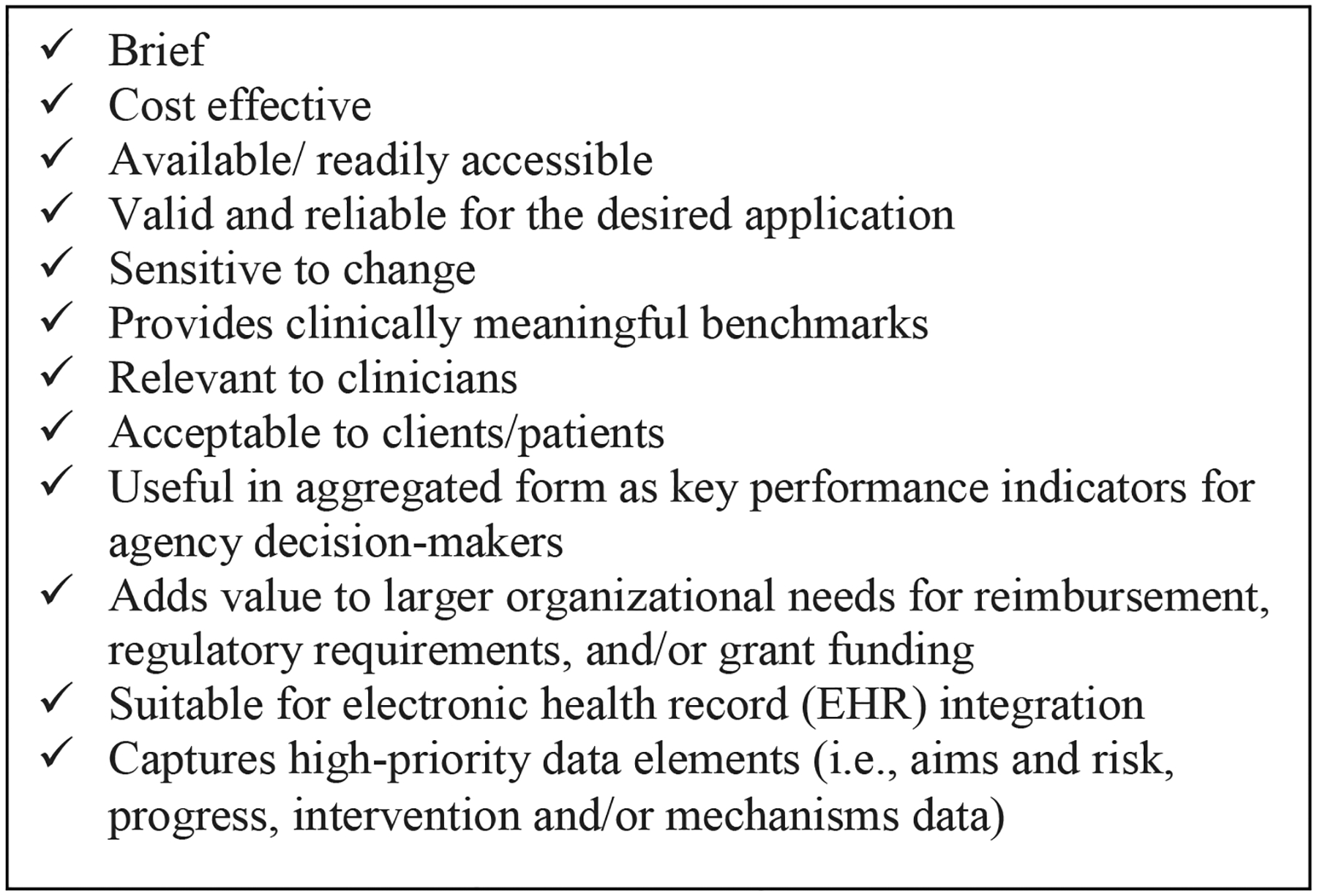

We recommend mental health agency leaders first identify the MBC data elements that are most central to their goals for improving patient care, supporting clinicians, and improving organizational quality. Once clear MBC goals are set, various assessment instruments can be evaluated to determine which will best capture the highest-priority data elements (Fig. 1).

Fig. 1. Checklist of MBC Instrument Considerations

Types of Assessment Instruments

MBC instruments fall into two broad categories: standardized and individualized. Most research in support of MBC has been grounded in standardized instruments (Gondek, Edbrooke-Childs, Fink, Deighton, & Wolpert, 2016; Krageloh, Czuba, Billington, Kersten, & Siegert, 2015). Standardized instruments are designed to assess the same constructs for all patients, with standard rules for administration and scoring. In mental health, these instruments typically take the form of rating scales, where validated, reliable Likert-style items are administered and translated into scale scores (Gondek et al., 2016; Krageloh et al., 2015). The meaning of patient scores from a standardized instrument is interpreted based on a comparison to norms in a larger sample of patients (Ashworth, Guerra, & Kordowicz, 2019). These norms, or information about the distribution of scores in the population, allow for the estimation of clinical cutoff scores to determine whether a given patient is “well” compared to norms, as well as estimates of how much change on a measure can be considered reliable rather than measurement error. Benchmarks such as clinical cutoffs and expected recovery curves based on normed samples provide visual references for clinicians to use in assessing progress and may be associated with more effective MBC systems (deJong, 2017; deJong, Barkham, Wolpert, Douglas, & Delgadillo, 2019).
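To make the idea of “reliable” change and clinical cutoffs concrete, the sketch below uses one widely used formulation, the Jacobson and Truax reliable change index, which is not named in the text above and is offered only as an illustration. The function names are hypothetical, and the standard deviation, reliability, and cutoff values are made-up placeholders that would need to come from an instrument's published norms. The sketch also assumes that higher scores indicate more distress.

```python
import math


def reliable_change_index(baseline, current, sd_norm, reliability):
    """Return the score change in units of the standard error of the difference.

    sd_norm and reliability are assumed to come from the instrument's
    published norms; the values used with this function here are placeholders.
    """
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    se_measurement = sd_norm * math.sqrt(1.0 - reliability)
    se_difference = math.sqrt(2.0) * se_measurement
    return (current - baseline) / se_difference


def classify_change(baseline, current, sd_norm, reliability, cutoff, z_crit=1.96):
    """Label a patient's change, assuming HIGHER scores mean MORE distress."""
    rci = reliable_change_index(baseline, current, sd_norm, reliability)
    if rci <= -z_crit:
        # Reliable improvement; "recovered" also requires crossing the cutoff.
        if baseline >= cutoff and current < cutoff:
            return "recovered"
        return "reliably improved"
    if rci >= z_crit:
        return "reliably deteriorated"
    return "no reliable change"
```

With the placeholder norms `sd_norm=10`, `reliability=0.91`, and `cutoff=20`, a drop from 30 to 18 would be labeled "recovered", whereas a drop from 30 to 26 would fall within measurement error and be labeled "no reliable change".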

Individualized instruments stem from the behavioral assessment tradition (Cone & Hoier, 1986), where they were developed to track change in specific assessment targets for individual patients. Also known as idiographic or patient-generated instruments, they typically involve identifying a specific treatment target (e.g., cutting as a form of self-harm), establishing a metric for monitoring it (e.g., number of cuts in the past week), and then gathering data from the patient to monitor progress (Lloyd, Duncan, & Cooper, 2019). While individualized instruments can be fully tailored for a specific patient, models also exist for conducting individualized assessment in a more “standardized” fashion across patients. For example, in goal attainment scaling (GAS; Kiresuk, Stelmachers, & Schultz, 1982; Turner-Stokes, 2009), patients and clinicians collaboratively set a treatment goal (e.g., six days per week without cutting) and then rate progress toward the goal on a scale ranging from −2 “Much Less Than Expected” to 2 “Much More Than Expected”. This approach has been associated with significant increases in goal attainment in a clinical trial with consumers with serious mental illness, indicating sensitivity to change over time on goals that are not typically included in standardized symptom inventories (e.g., positive relationships, self-care, employment, leisure activities, housing, school, and financial goals) but are highly relevant to consumers’ success in treatment, recovery, and well-being (Tabak, Link, Holden & Granholm, 2015). Another example is the Monthly Treatment and Progress Summary form (MTPS; Nakamura, Daleiden & Chorpita, 2007), which was developed using GAS logic to standardize treatment targets (i.e., aims and risks and/or progress data) by allowing the clinician to select from a list of 48+ treatment targets and to rate them over time.
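As an illustration of how GAS ratings on the −2 to 2 scale can be summarized across several goals, the sketch below computes the conventional GAS summary T-score. The text above does not describe this summary score, so it should be read as one commonly described option rather than the scoring used in the cited studies. The function name is hypothetical, equal weights are assumed unless weights are supplied, and `rho=0.3` is the conventionally assumed correlation among goal ratings.

```python
import math


def gas_t_score(ratings, weights=None, rho=0.3):
    """Summarize goal attainment ratings (-2..2) as a T-score centered on 50.

    ratings: one integer per goal, from -2 (much less than expected)
             to 2 (much more than expected).
    weights: optional importance weights, one per goal (default: all 1.0).
    rho:     assumed correlation among goal ratings (0.3 by convention).
    """
    if not ratings:
        raise ValueError("at least one goal rating is required")
    if weights is None:
        weights = [1.0] * len(ratings)
    if len(weights) != len(ratings):
        raise ValueError("weights and ratings must have the same length")
    for rating in ratings:
        if rating not in (-2, -1, 0, 1, 2):
            raise ValueError("ratings must be integers from -2 to 2")

    weighted_sum = sum(w * r for w, r in zip(weights, ratings))
    denominator = math.sqrt(
        (1.0 - rho) * sum(w * w for w in weights) + rho * sum(weights) ** 2
    )
    return 50.0 + 10.0 * weighted_sum / denominator
```

A patient who attains every goal exactly as expected (all ratings of 0) scores 50, and scores above or below 50 indicate attainment above or below expectation.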

Individualized progress instruments have several advantages. First, they focus on problems that are highly relevant to individual presenting problems or goals (Ashworth, Evans, & Clement, 2009; Doss, Thum, Sevier, Atkins, & Christensen, 2005) and, as such, are highly sensitive to change (Lindhiem, Bennett, Orimoto, & Kolko, 2016; Sales & Alves, 2012; Weisz et al., 2011). In fact, a recent meta-analysis found that effect sizes for patient improvement as measured by an individualized approach of personalized treatment goals were substantially larger than those for standardized symptom checklists (d = .86 vs .32, respectively; Lindhiem, Bennett, Orimoto, & Kolko, 2016). They are also consistent with a personalized medicine approach, as they emphasize individual context, patient goals, and priorities, and are therefore thought to promote patient engagement in treatment (McGuire, Scheyer, & Gwaltney, 2014; Sales & Alves, 2012). Individualized instruments typically do not involve copyrighted material, so they are also low cost. Patient and clinician perceptions of the utility of MBC could possibly be improved with individualized instruments (which they tend to prefer), which could in turn improve implementation (Jensen-Doss et al., 2018).

However, distinguishing between standardized and individualized assessments is less pertinent than how the data are used in practice and in the broader organization. In fact, the difference between standardized and individualized instruments can become blurred in practice depending on how the instruments are used. For example, standardized instruments can be used to monitor individual patient progress over time throughout treatment (e.g., examining the patient’s score on a measure compared to their own scores over time, a practice sometimes referred to as feedback-informed therapy; Reese, Norsworthy, & Rowlands, 2009). Also, individualized instruments (e.g., degree of individual goal attainment) can be collected consistently across patient groups and then aggregated for use in a more “standardized” way within a care organization (Ruble & McGrew, 2015). Therefore, we recommend that clinicians and administrators focus on how data can be used for a specific purpose instead of focusing on what kind of measure it is. In other work, we have underscored the importance of standardized and individualized uses of data (Lyon et al., 2017).

Selecting Instruments for Value and Purpose

Clinical administrators are often in the position of selecting the instruments that clinicians are encouraged or required to use with patients. Once administrators obtain input from clinicians and other stakeholders to clarify the goals for using an assessment instrument and determine the desired data elements, we recommend they use the Checklist of MBC Instrument Considerations (see Figure 1) to determine which measure or set of instruments is best for their agency.

Brief, cost-effective instruments are usually a top priority, particularly for public mental health agencies (Beidas et al., 2015); cost considerations include the measure itself and any related training, scoring, and support that is needed. Further, it is ideal when instruments are available in existing medical records (e.g., treatment attendance, standardized suicide screening questions asked at every visit). Reliability and validity are also key considerations to ensure the instrument is of high quality, and local or published evidence about how it performs with similar patient populations or agencies can provide greater assurance about its fit. Sensitivity to change, or the degree to which a measure reflects the effects of treatment on a patient, is imperative to monitor progress over time (Vermeersch, Lambert, & Burlingame, 2000). Sensitivity to change is based on several factors, including relevance of the instrument to the patient, range of response options (more response options are generally more sensitive), instructions asking patients to report on change within defined time periods, items that assess factors that can feasibly change as a result of treatment, and ability to detect changes at the “high” or “low” ends of an instrument (Vermeersch et al., 2000). Instruments must also be clinically relevant and acceptable to patients (Kelley, de Andrade, Sheffer, & Bickman, 2010; Kelley & Bickman, 2009) to improve the likelihood that MBC will be implemented successfully (Edbrooke-Childs, Jacob, Law, Deighton, & Wolpert, 2015; Martin, Fishman, Baxter, & Ford, 2011). Indeed, it has been suggested that greater attention to patient perspectives when selecting measures will facilitate practical application of the resulting information (Trujols et al., 2020).

Integrating clinician input during the measure selection process is imperative. Although standardized measurement approaches lend themselves best to system-level priorities such as monitoring aggregate patient outcomes and service effectiveness, a growing base of evidence suggests that individualized measurement approaches might be preferred and more frequently used by clinicians (Bickman et al., 2000; Connors, Arora, Curtis, & Stephan, 2015; Jensen-Doss, Smith et al., 2018). Clinicians are likely to prefer practical instruments that are low burden (i.e., easy to administer, score, and interpret), can directly inform clinical care (i.e., are sensitive to change, are actionable, can be used to track and discuss progress with the patient and other providers on the care team), and are easily understood and accepted by their patients, considering all aspects of diversity in terms of age, education level, and cultural and linguistic considerations (Connors et al., 2015; Glasgow & Riley, 2013).

Moreover, there should be a strategy to aggregate data across patient subgroups to evaluate the agency’s performance in key areas and make decisions to target performance improvements. Aggregating data is more straightforward with standardized instruments than with individualized instruments, which presents a conundrum at times given the evidence that clinicians may prefer the latter. However, models for aggregating individualized data do exist. For example, Top Problem ratings have been averaged within and across patients and used to compare the effects of different treatments (e.g., Weisz et al., 2012). Also, measures that allow for simultaneous monitoring of treatment progress and intervention data have been used to understand patient progress trends by practice type (e.g., MTPS, see Chorpita, Bernstein, Daleiden & Research Network on Youth Mental Health, 2008).
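A minimal sketch of this kind of aggregation is shown below, assuming each patient record holds a baseline and a latest rating on an individualized target plus grouping fields. The field names (`practice`, `clinician`, `baseline`, `latest`), the 0 to 10 severity scale, and all values are hypothetical and invented purely for illustration; they are not data from any cited study.

```python
from collections import defaultdict
from statistics import mean


def mean_change_by_group(records, group_key):
    """Average (latest - baseline) change within each level of group_key."""
    changes = defaultdict(list)
    for record in records:
        changes[record[group_key]].append(record["latest"] - record["baseline"])
    return {
        group: {"n": len(values), "mean_change": mean(values)}
        for group, values in changes.items()
    }


# Made-up severity ratings (0-10, lower is better) for illustration only.
example_records = [
    {"patient": "P1", "clinician": "A", "practice": "practice X", "baseline": 8, "latest": 4},
    {"patient": "P2", "clinician": "A", "practice": "practice X", "baseline": 7, "latest": 5},
    {"patient": "P3", "clinician": "B", "practice": "practice Y", "baseline": 6, "latest": 6},
    {"patient": "P4", "clinician": "B", "practice": "practice Y", "baseline": 9, "latest": 7},
]

by_practice = mean_change_by_group(example_records, "practice")
by_clinician = mean_change_by_group(example_records, "clinician")
```

The same function can be pointed at any grouping field, so one set of patient-level ratings can feed caseload-level, practice-level, or agency-level summaries. Small groups should be interpreted cautiously, which is why the group size `n` is returned alongside each mean.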

Finally, administrators also need to determine how a measure will add value to organizational goals or requirements, to fulfill accreditation standards and/or provide justification for reimbursement, grant funding or other support for services provided. Ultimately, agency administrators will have to determine how a prospective measure(s) can meet all considerations in the checklist, or if they prefer to prioritize certain characteristics over others.

Measurement Feedback Systems

A key consideration about MBC adoption for agencies is whether and how to use a measurement feedback system (MFS). An MFS is a digital tool that houses one or more instruments that can be used for electronic administration, scoring, and display of progress (Bickman, 2008). Of note, some MFSs contain only their own proprietary instruments, so MFS selection may also be subject to instrument selection criteria set by the mental health agency. MFSs have proliferated, leaving mental health agencies with a large number of characteristics to consider during MFS selection (Lyon, Lewis, Boyd, Hendrix, & Liu, 2016). Most, however, do not include explicit training or support, which administrators should attend to as they consider whether to select an MFS (Lewis et al., 2019). For example, some recommendations for sustainable implementation of an MFS include integrating the MFS with clinical values and workflow, incorporating data-informed decision-making throughout the agency, and finding ways to encourage innovation or answer important quality improvement questions by critically examining data patterns (Douglas, Button, & Casey, 2016). In fact, MFSs are likely to create new implementation challenges if not directly integrated with electronic health records (EHRs; Steinfeld et al., 2015; Steinfeld & Keyes, 2011). As shown in Figure 1, agency administrators are encouraged to evaluate EHR compatibility for external MFSs or embedded measurement capabilities to accomplish MBC goals.

Data-Informed Decisions

MBC data can and should drive decisions at the patient care, clinician support, and agency levels. Ideally, a single measure or set of instruments can be used flexibly to inform decisions at all levels, which ensures the data have value throughout the agency and are yielding information that is clinically relevant and actionable. Practical recommendations on how to develop a measurement approach using data elements for all agency levels are provided below.

Patient Care

Perhaps the most obvious use and value of MBC is to drive decisions at the patient level. Aims and risks should be identified at baseline along with relevant symptoms. Then, progress data can be used throughout treatment to monitor symptoms and functioning. Treatment and mechanism data can be collected as often as weekly since they may vary frequently throughout the course of treatment. For example, the clinician may report on the treatment techniques or approach delivered, and the patient could report on the impact of treatment in terms of their use of skills or tools. In terms of data-informed decision-making, the value of using measurement at the patient level is two-fold. First, these data elements facilitate discussions with patients regarding their progress. Second, the use of instruments can provide a feedback loop for clinicians. For instance, clinicians can use instruments to establish a baseline for patients, implement treatments, and re-administer instruments, using the results to continually update their case conceptualization (Christon, McLeod, & Jensen-Doss, 2015). MFSs can be very useful in helping clinicians visually examine progress over time and in providing a ‘dashboard’ for use by the clinician and/or their patient (Lyon et al., 2016; Lyon & Lewis, 2016). The value of using instruments in this way is fully realized when clinicians make their next clinical decision based on the data they are reviewing, ideally in collaboration with the patient. Typically, clinicians could make one of three decisions: (1) stay the course and continue with the same intervention, (2) introduce a new intervention, or (3) review a previous intervention.

Clinician Support

Using and sharing data to inform other clinician support strategies is also a benefit of the MBC approach. For instance, considering baseline or progress data from patients in aggregate form, such as over an entire caseload or for all patients receiving a particular evidence-based treatment, can be helpful in developing agency expectations and benchmarks. These data provide clinicians the opportunity to engage in continuous improvement based on self-learning or peer or supervisor feedback. Guiding questions for clinicians could include ‘are my observed findings matching what I would expect compared to the aggregate?’ and, if not, ‘is treatment being delivered in the way it was intended, or is there something else that may account for this discrepancy?’ (Daleiden & Chorpita, 2005).

Supervisory support or professional development can also help clinicians continually improve their ability to tailor evidence-based practices and/or identify strengths and areas of growth treating patients with varying characteristics. Examining these data in aggregate can also provide valuable information regarding characteristics of a clinician’s caseload, such as severity, and drive decisions about case mix calibration to help balance severity, case volume, new cases or other caseload characteristics to reduce burnout related to job stress and possibly turnover rates (Sheidow, Schoenwald, Wagner, Allred, & Burns, 2007). By aggregating MBC data, supervisors and organizational leadership can answer questions such as, ‘how good are we at getting them better?’ and ‘in what areas do staff need support to meet patients’ needs?’

Organizational Quality Improvement

Organizational data-informed decision-making involves aggregation of data that are used at the patient and clinician level (for interested readers, see local aggregate evidence strategies, which is one of four evidence bases further discussed in Daleiden & Chorpita, 2005). Understanding various data elements among all patients or patient subgroups can inform a myriad of internal performance evaluation questions, quality improvement strategies, budgetary decisions and other organizational policies to improve service delivery and care quality within the organization. For example, MBC data can be integrated into any strategic planning document or process that the agency uses to track progress toward specific, measurable goals within quality improvement initiatives. Aggregated patient progress and outcome data can also equip an organization to report to stakeholders, including third-party payors, accreditation bodies, current or prospective partners (e.g., schools or primary care offices which may serve as service sites or referral sources), and consumers. These data also tie in directly to an emphasis on value-based care and payment models which, in short, are focused on reducing cost of care and healthcare disparities by rewarding quality, outcomes-driven, evidence-based practice (Alberti, Bonham, & Kirch, 2013). Opening/closing and expansion/reduction decisions can also be informed by MBC data, which likely reflect the need in the surrounding community.

Performance Evaluation Considerations.

Despite opportunities for MBC to inform improvements in patient care, clinician supports and organizational quality improvement, a significant barrier to collection and use of data relates to how the data will be used (Lewis et al., 2019). That is, can these data be used against me/us/the organization? Ultimately, agencies must be prepared for what the data will reveal, including when progress is not being made or outcomes are not what were expected. Results that are not in the desired direction or do not achieve the target goal should be leveraged to inform where to focus resources, shift priorities, and/or develop action plans for quality improvement. Importantly, patient outcome data must be carefully interpreted if they are to be linked to clinician performance. For instance, patient-level factors such as differences in patient motivation for treatment, risk factors, chronicity of their difficulties, severity of their symptoms, ‘dose’ of treatment, treatment attendance/engagement, and response to treatment can vary substantially at an individual level. Not all patients get better within a pre-determined timeframe even when provided with the highest quality care. Moreover, some clinicians may see patients who tend to have higher levels of clinical severity, comorbidities or psychosocial risk factors based on their specialty areas or experience level. Thus, although recent models of value-based care and payment (VanLare, Blum, & Conway, 2012) are emerging, it is not yet clear how such models can be implemented in a manner that reinforces quality care and does not penalize clinicians for factors outside of their control. At the very least, systems that underlie implementation of any value-based performance measurement should be clear, reliable, and built into organizational culture.

Mental Health Agency Conceptual Examples

To illustrate this approach of implementing MBC in a way that creates value throughout the agency, three conceptual examples based on actual mental health agencies are provided. In each case, the agency was able to implement one or more patient-reported symptom measures and leverage those data for different purposes. Relevant data elements drawn from the Precision Mental Health Framework are noted parenthetically in italics to illustrate the utility of various data elements in practice.

Agency A: Statewide Mental Health Agency Addresses Rising Trauma Cases

Characteristics.

Agency A is a large community mental health agency in which 125 clinicians provide treatment for children and families in four counties. Agency A operates in outpatient mental health clinics, foster care, group homes, and charter and nonpublic schools. An electronic health record (EHR) with MFS capabilities is used and can be customized to include instruments selected by the agency.

Current assessment practices.

Agency A provides group training twice per year for new staff, during which they emphasize the importance of collecting standardized instruments from patients at the beginning of treatment and (at a minimum) every six months (to provide aims and risk and progress data), which is the interval required for reimbursement. Every clinician is trained in a global, standardized measure provided by the state insurance purveyor that measures child functioning and can be entered in a state-funded MFS.

MBC goals.

After some clinicians reported an increase in patients who have experienced a traumatic event, agency leaders examined their EHRs for patient diagnoses over the past year. They found that patients needing trauma assessment and treatment were on the rise throughout the agency and that clinicians needed to be better equipped to serve patients experiencing posttraumatic stress disorder (PTSD). Agency A needed more information about patient PTSD severity and response to treatment (aims and risk data, progress data and treatment data) to understand how best to improve care. The leadership looked for an evidence-based measure of PTSD diagnosis and identification of personalized treatment goals (aims and risk data) to track progress over time (progress data).

Instrument selected.

Agency A adopted the UCLA-PTSD Reaction Index for DSM-5 (UCLA-PTSD-RI; Hafstad, Dyb, Jensen, Steinberg, & Pynoos, 2014). The UCLA-PTSD-RI was ideal for this agency because it functions as both a diagnostic assessment and a symptom instrument for posttraumatic stress. Agency A also planned a two-day evidence-based practice training for the entire agency in Trauma-Focused Cognitive Behavioral Therapy (TF-CBT; Cohen, Mannarino, & Deblinger, 2016). Use of the UCLA-PTSD-RI was covered in the initial training and emphasized during ongoing consultation calls. TF-CBT emphasizes assessment throughout treatment, so this intervention was aligned with MBC goals.

Instrument value.

Patient care.

Clinicians used the UCLA-PTSD-RI to assess for PTSD at intake. Scores were valuable for understanding which symptom clusters are most problematic for the individual patient (aims and risk data), customizing treatment, and monitoring symptom change over time (progress data).

Clinician support.

Agency A supervisors used the UCLA-PTSD-RI to determine the number and severity of cases with trauma exposure and/or symptoms among caseloads they supervise (aims and risk data). This helped them monitor the degree of symptom change for individual patients and/or a whole caseload (progress data), informing supervision.

Organizational quality improvement.

Administrators examined clinical symptom cut-off scores to assess trauma exposure and symptom prevalence (aims and risk data) for the entire agency. They also examined the degree of change in symptoms for patients receiving interventions (progress data). These data helped administrators make decisions about additional implementation supports and supported applications for funding to support this growing trauma specialty in their agency.
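
The two agency-level summaries described here (the share of patients at or above a clinical cut-off, and the average symptom change among treated patients) can be sketched in a few lines. This is a minimal illustration only: the scores, the cut-off value, and the function names are invented for the example and are not drawn from Agency A or from the scoring guidance of any instrument.

```python
from statistics import mean

# Hypothetical cut-off, NOT taken from the source or from any instrument
# manual; an agency would substitute the threshold its instrument recommends.
HYPOTHETICAL_CUTOFF = 35


def share_at_or_above(scores, cutoff):
    """Proportion of intake scores at or above the cut-off."""
    return sum(1 for s in scores if s >= cutoff) / len(scores)


def mean_change(pairs):
    """Mean of (latest - intake) across patients; negative means fewer symptoms."""
    return mean([latest - intake for intake, latest in pairs])


# Invented intake scores for five patients (aims and risk data).
intake_scores = [12, 40, 35, 22, 51]

# Invented (intake, latest) score pairs for patients in treatment (progress data).
treated_pairs = [(40, 28), (35, 30), (51, 33)]

prevalence = share_at_or_above(intake_scores, HYPOTHETICAL_CUTOFF)
avg_change = mean_change(treated_pairs)

print(f"Share at or above cut-off: {prevalence:.0%}")
print(f"Mean symptom change: {avg_change:.1f} points")
```

Summaries of this kind are descriptive; as noted above under performance evaluation considerations, they still require interpretation alongside caseload severity and other contextual factors.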

Agency B: New Agency Starts Small with MBC

Characteristics.

Agency B is a small community mental health clinic that provides individual, group, and family counseling for youth and adults. Agency B employs 30 clinicians across seven counties in one state, so their workforce is small but geographically widespread. There are two clinics, and home-based services are also provided. Agency B uses EHRs, but due to lack of options for customization, most documentation remains in paper charts.

Current assessment practices.

Agency B collects a standardized instrument required for public insurance reimbursement every six months and makes available several other brief, free progress and outcome assessments (aims and risk data) to be used at the discretion of each clinician. However, MBC is not consistently used.

MBC goals.

Agency B administrators became increasingly interested in having a symptom rating scale in addition to the state-required instrument to be conducted at intake and more frequently throughout treatment (progress data) to help clinicians systematically monitor symptom reduction and to inform the frequency and intensity of care. The agency was looking for an instrument that fulfills all MBC considerations displayed in Figure 1; their top priorities are that it is brief, free, easy to administer and score, and available in Spanish.

Instrument selected.

Agency B selected the Youth Top Problems (YTP; Weisz et al., 2011). YTP is an individualized instrument on which youth and caregivers each identify three problems that are of the greatest concern to them and provide weekly ratings on ‘how much of a problem’ each item is on a scale of 0 (‘not at all’) to 10 (‘very much’). YTP was a good fit for Agency B because it does not require a lot of training and clinicians have the flexibility to customize treatment targets collaboratively with children and their families.

Agency B took a phased approach to implementing the new instrument; they initially trained 6 (20%) clinicians, selected based on their interest in MBC. After several group meetings about use of the YTP, the administrators partnered with this first cohort of clinician champions to plan how to scale-up this instrument using peer and supervisor-led training and support.

Instrument value.

Patient care.

Agency B clinicians used the YTP progress data to monitor patient progress over time and to inform whether to change or continue treatment. Clinicians could also compare patient and caregiver ratings to assess agreement on progress and experience in treatment.

Clinician support.

Agency B supervisors used the YTP to understand the nature of and improvement in target problems for individual patients and/or entire caseloads. Supervisors can review paper charts with YTP data to understand types of cases the clinicians serve (aims and risk data) and monitor clinician effectiveness (based on progress data), which informs what type of support, professional development or ongoing supervision is needed.

Organizational quality improvement.

By entering data into a spreadsheet, Agency B leadership can understand the type of treatment targets being addressed agency-wide. Also, even though the YTP does not have standardized items, because problems are rated on the same scale, agency leadership can aggregate YTP data across patients (progress data). This might inform whether families are reporting a remission of problems (as compared to their own baseline scores) throughout treatment and at what rate. Agency leadership could also examine frequently-used target problems to identify needs for new programs, training, and resources.
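
Because every YTP problem is rated on the same 0 to 10 scale, change from each patient's own baseline can be averaged across patients even though the problems themselves differ. The sketch below illustrates one way such a spreadsheet-style aggregation might work. The patient identifiers, ratings, and function names are hypothetical, and averaging the three ratings within a session is an assumption made for the example.

```python
from statistics import mean


def session_means(sessions):
    """Average the top-problem ratings within each session."""
    return [mean(ratings) for ratings in sessions]


def change_from_baseline(sessions):
    """Latest session mean minus first session mean (negative = improvement)."""
    means = session_means(sessions)
    return means[-1] - means[0]


def summarize(records):
    """Aggregate change from baseline across all patients."""
    changes = [change_from_baseline(s) for s in records.values()]
    improved = sum(1 for c in changes if c < 0)
    return {
        "n_patients": len(changes),
        "mean_change": mean(changes),
        "share_improved": improved / len(changes),
    }


# Invented data: for each patient, a list of sessions, each holding ratings
# (0 = 'not at all', 10 = 'very much') for that patient's three top problems.
records = {
    "patient_01": [[8, 7, 9], [6, 6, 8], [4, 5, 6]],
    "patient_02": [[5, 6, 4], [5, 6, 5], [6, 6, 5]],
    "patient_03": [[10, 8, 9], [7, 6, 8], [5, 4, 6]],
}

summary = summarize(records)
print(summary)
```

Comparing each patient to their own baseline, rather than to a norm, is what makes aggregation possible for an individualized instrument without standardized items.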

Agency C: Large Mental Health Agency Sustains MBC After Recent Grant Ends

Characteristics.

Agency C is a community-based, non-profit agency that provides children’s mental health services, child welfare services, and early childhood programs. Agency C has multiple locations in urban and rural areas of a single state and employs 120 mental health clinicians. EHRs are used.

Current assessment practices.

Agency C began using an MFS as part of a larger research project and continued using feedback after the study concluded. Training on the instruments and the use of the MFS was integrated into onboarding procedures for new staff. Instruments were intended to be administered each session, with an expanded set of instruments at intake and discharge. Clinicians were expected to use feedback to inform clinical decision-making and guide supervision.

MBC goals.

Agency C’s assessment goals were to use data (personal, aims and risk, service preference, intervention, progress, mechanism and contextual data) to inform ongoing treatment. Instruments needed to be available in multiple languages for multiple reporters on a tablet or with paper-and-pencil option as a backup.

Instrument selected.

The MFS used by Agency C included the Peabody Treatment Progress Battery (PTPB) (Bickman et al., 2010; Riemer et al., 2012). The PTPB consists of eleven standardized instruments completed by youth, caregivers, and/or clinicians that assess aspects of treatment progress (aims and risks), treatment process (e.g., services preference and mechanisms data), and patient strengths (personal and mechanisms data). Instruments are administered on an automated schedule, which can be customized, resulting in brief assessments (about five to seven minutes) to be completed at each session. The onboarding procedures included written materials (e.g., PTPB manual) and internal consultation on MFS use and measure interpretation.

Instrument value.

Patient care.

Agency C clinicians used the PTPB to track progress over time to inform ongoing treatment. Benchmarks (e.g., trends, total scores, reliable change indices) were integrated into their documentation to reduce burden of narrative descriptions of progress. Clinicians were trained to use feedback with patients to inform their session case conceptualization.
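
For readers unfamiliar with reliable change indices, one widely used formulation (commonly attributed to Jacobson and Truax) divides the observed score change by the standard error of the difference, which is derived from the instrument's standard deviation and reliability. The sketch below illustrates that general formula only. It is not the PTPB's or the MFS's actual scoring procedure, and the scores, standard deviation, and reliability shown are invented.

```python
import math


def reliable_change_index(baseline, followup, sd, reliability):
    """Observed change divided by the standard error of the difference.

    sd is the instrument's standard deviation in a reference sample and
    reliability is its reliability coefficient (between 0 and 1).
    """
    sem = sd * math.sqrt(1 - reliability)  # standard error of measurement
    s_diff = math.sqrt(2) * sem            # standard error of the difference
    return (followup - baseline) / s_diff


def classify(rci, threshold=1.96, lower_is_better=True):
    """Label change as reliable only if |RCI| exceeds the threshold."""
    if abs(rci) < threshold:
        return "no reliable change"
    improved = (rci < 0) if lower_is_better else (rci > 0)
    return "reliable improvement" if improved else "reliable deterioration"


# Invented example: symptom score drops from 30 to 20 on a scale with
# SD = 10 and reliability = 0.90 (all values hypothetical).
rci = reliable_change_index(baseline=30, followup=20, sd=10, reliability=0.90)
label = classify(rci)
print(f"RCI = {rci:.2f}: {label}")
```

The 1.96 threshold corresponds to a 95% confidence level under a normal approximation; an agency could choose a different threshold depending on how conservative it wants the benchmark to be.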

Clinician support.

Agency C staff viewed feedback for individual patients and/or entire caseloads. Supervisors and clinical directors used the PTPB data to understand type of cases served by clinicians, contributing to “rightsizing” case mix when assigning new cases and using data to tailor professional development.

Organizational quality improvement.

Agency C leadership used aggregated PTPB data to inform program planning, workforce development, and reporting to external funders and regulatory agencies. In combination with EHR data, PTPB data provided leaders with the ability to easily monitor the impact of new initiatives (intervention data, contextual data). Agency C’s leadership structure included an executive-level role and accompanying resources focused on quality improvement.

Discussion

Agency administrators could develop a strategic organizational approach to adopt and use patient-level instruments in a way that creates value for everyone who interacts with the system. A focus on providing value at multiple levels can serve not only to improve outcomes and inform treatment, but also enhance clinician support, provide important agency-level data, and improve adoption, uptake, and sustainability of MBC overall. While there is a need for additional research to understand optimal MBC implementation and effectiveness (e.g., optimal training and support, measurement frequency), it is the instruments themselves that are at the core of any MBC. Ultimately, without clear guidance about how to select and use various assessment approaches in a way that creates relevance across the organization, clinic-wide priorities to collect patient outcomes for quality improvement could be viewed as an “add-on,” a perspective that does little to facilitate MBC practices at the provider level. This point is underscored by the following statement (Garland, Bickman, & Chorpita, 2010):

“It should no longer be acceptable for funders or accreditation agencies to require the collection of data that are too often left to gather dust in some office or be hidden in a mass of computer files. The data collection efforts must be designed to be useful to providers and administrators in order to provide effective treatment.”

For this reason, we recommend agencies select instruments using both a “bottom up” and a “top down” approach by eliciting clinician values, assessing patient preferences, and exploring how to integrate the resulting data into key organizational metrics. Fortunately, technology has changed the way organizations use data. The traditional ‘data repository’ is quickly becoming outdated based on increasing recognition that data collection for organizational requirements or reporting to funders has typically not taken the clinician or patient perspective into account. In fact, technology has eased our ability to put the patient and clinician “front and center” in MBC because they can immediately see and use the data – while also creating opportunities for organizations to use these data for quality improvement purposes (Douglas et al., 2016). The Precision Mental Health Framework (Bickman et al., 2016) offers a comprehensive approach to assessing relevant data elements for agencies to consider, including personal, aims and risks, service preference, intervention, progress, mechanisms, and contextual data. As is highlighted in the mental health agency conceptual examples, aims and risks, intervention, progress and mechanisms data are perhaps most relevant for leveraging MBC within an agency to meet multilevel needs.

The type of instrument selected, whether standardized or individualized, should be based on considerations beyond psychometric quality (see Fig. 1). As is illustrated in the examples, when MBC becomes more than simply a tool for clinicians, and aggregate data are used by leadership, the value created at multiple levels may improve MBC fidelity and care quality.

There are certain caveats to the proposed approach to instrument selection. First, no single instrument will collect everything, nor should it. The psychosocial progress and outcome data collected simply provide foundational information for clinicians and agency leadership to ask questions and tailor their planning in a systematic way. At the patient care level, this is precisely why any MBC approach or instrument should be used in conjunction with clinical expertise to make treatment decisions. For clinician supervision or support, patient data should not be viewed as an ‘absolute’ source for clinician performance evaluation. At the agency level, aggregate information about patient progress or outcomes can be used as quality improvement indicators, but gathering input from providers, other stakeholders, and patients is imperative to understand observed patterns in the data.

Moreover, some data elements and instruments can be aggregated with greater ease than others. A combination of standardized and individualized instruments may provide greater value than either type of instrument alone, as they are highly complementary (Ashworth et al., 2019; Christon et al., 2015; McLeod, Jensen-Doss, & Ollendick, 2013). For instance, Agency B could have added a brief, public-domain standardized instrument at intake and every three or six months to monitor whether a patient is in the clinical range and have MBC data that are more amenable to aggregation and reliable interpretation of progress across patients. The Youth Top Problems scores (individually or averaged across problems, as was shown in previous research; Weisz et al., 2012) could still be the primary clinical decision-making tool, but with a low-burden standardized instrument as a supplement. There is also a pressing need to develop and test instruments that blend both standardized and individualized measurement approaches to offer a personalized approach to care (Ashworth et al., 2019).

Finally, implementation barriers face agency administrators who wish to select a specific instrument for their agency. Agency motivation, resources, and capacity to select and use performance instruments, engage in rigorous quality improvement processes, adopt measurement feedback technologies, and/or train and support providers to engage in MBC are variable. Although beyond the scope of this manuscript (see Lewis et al., 2019, for a review of MBC implementation), it is worth noting that many agencies have limited staffing to support MBC workflows, limited resources especially in a fee-for-service environment, and challenges optimizing electronic medical records to accommodate patient instruments. Also, surveys suggest that clinicians have concerns about the clinical application of instruments and/or limited access to, knowledge of, or training in MBC (Bickman et al., 2000; Connors et al., 2015; Jensen-Doss et al., 2018). Continued efforts to discover and disseminate effective implementation strategies to support MBC at all levels of different types of agencies are imperative for agency administrators to advance from instrument selection to full implementation and sustainment of MBC (Lewis et al., 2015; Lewis et al., 2018; Lewis et al., 2019).

Implications and Future Directions

It seems reasonable to think of MBC as one strategy to shift the playing field for continuous quality improvement by having an impact not only on patient care, but also organizational learning and decision-making. Future research should extend this work to focus on how MBC can be applied more broadly to quality improvement strategies, tools and activities to support program growth. Existing literature highlights this from the perspective of MBC technology development (Bickman, Kelley, & Athay, 2012) and strategies to integrate MBC at multiple levels within an agency (Douglas et al., 2016).

Moving beyond the walls of the agency, MBC can drive quality improvement and serve as the foundation of a learning health care system, whereby data captured at the clinical encounter level can feed up to transform ongoing system improvement efforts (Chambers, Feero, & Khoury, 2016; Chambers & Norton, 2016). While other countries such as Norway (Valla & Prescott, 2019), the Netherlands (Beurs, Warmerdam, & Twisk, 2019), the United Kingdom (National Health Service England, 2019) and Canada (Goldberg et al., 2016) have benefited from system-level MBC policy initiatives to aggregate data from the bottom up for organizational learning, such efforts are just beginning in the United States. Currently, data for system improvement in the United States are typically limited to top-down aggregation, such as that found in a recent study of implementation support and policy drivers for state-level EBT adoption (Bruns et al., 2019).

To achieve the lofty goal of learning from every patient treated, the behavioral health service sector would need to embrace MBC utilizing MFS technologies and reach agreement on a common data profile informed by a comprehensive approach, such as the Precision Mental Health Framework (Bickman et al., 2016). Ideally, the data profile would allow for systematic measurement of the contextual variations in clinical care and implementation across and within systems, which could inform ongoing quality improvement and intervention development and adaptation. Behavioral health system performance metrics (particularly patient progress and outcome data) could also be used by state and national leadership to 1) communicate the value of behavioral health services and 2) target areas for investment in policy and/or practice change. Groups currently seeking statewide behavioral health and wellness metrics include state departments of education, behavioral health and Title V programs investing in school health services and members of the National Governors Association who wish to monitor child mental health and wellbeing metrics related to statewide policies, programs, and interventions such as school safety (Health Resources and Services Administration, 2019; National Center for School Mental Health, 2019; National Governors Association, 2019).

There are also policy implications for managed care organizations, state behavioral health authorities and funders to be more responsive to the practical realities of agency infrastructure and resources to engage in MBC and quality improvement. Specifically, agency administrators should always be involved in the development of policy, legislation and accountability requirements that directly impact agency operations including MBC adoption.

Conclusion

Mental health agency administrators can approach MBC in a way that can reconcile accountability requirements with agency and clinician needs. By strategically selecting key instruments that are valuable for different purposes throughout the agency, workflow efficiencies can be created for busy mental health agency personnel and implementation barriers can be addressed uniformly across the agency.

Footnotes

Quality improvement refers to “systematic and continuous actions that lead to measurable improvement in health care services and the health status of targeted patient groups” (U.S. Department of Health and Human Services, Health Resources and Services Administration, April 2011, p. 1).

For the sake of consistency, we use the term MBC although many sources use other terms such as progress monitoring and feedback. Widespread differences in terms referring to MBC are noted by Lewis and colleagues (2019), who recommend the term MBC to ensure explicit emphasis on routine measurement at each clinical encounter, practitioner and patient review of the data, and collaborative decision-making about the treatment plan.

The precision mental health approach is applicable for prevention and intervention. However, we focus primarily on “intervention” or “treatment” services provided by mental health agencies (terms used interchangeably).

References

- Agency for Healthcare Research and Quality. (September 2018). Quality improvement in primary care. Retrieved from https://www.ahrq.gov/research/findings/factsheets/quality/qipc/index.html

- Alberti PM, Bonham AC, & Kirch DG (2013). Making equity a value in value-based health care. Academic Medicine : Journal of the Association of American Medical Colleges, 88(11), 1619–1623. [DOI] [PubMed] [Google Scholar]

- American Psychological Association Task Force on Evidence-Based Practice for Children and Adolescents. (2008). Disseminating evidence-based practice for children & adolescents: A systems approach to enhancing care. Washington, DC: American Psychological Association; Retrieved from https://www.apa.org/practice/resources/evidence/children-report.pdf [Google Scholar]

- Ashworth M, Evans C, & Clement S (2009). Measuring psychological outcomes after cognitive behaviour therapy in primary care: A comparison between a new patient-generated measure “PSYCHLOPS”(psychological outcome profiles) and “HADS”(hospital anxiety and depression scale). Journal of Mental Health, 18(2), 169–177. [Google Scholar]

- Ashworth M, Guerra D, & Kordowicz M (2019). Individualised or standardised outcome measures: A co-habitation? Administration and Policy in Mental Health and Mental Health Services Research, 1–4. [DOI] [PubMed] [Google Scholar]

- Baumhauer JF, & Bozic KJ (2016). Value-based healthcare: Patient-reported outcomes in clinical decision making. Clinical Orthopaedics and Related Research®, 474(6), 1375–1378. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Becker-Haimes EM, Tabachnick AR, Last BS, Stewart RE, Hasan-Granier A, & Beidas RS (2020). Evidence base update for brief, free, and accessible youth mental health measures. Journal of Clinical Child & Adolescent Psychology, 49(1), 1–17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beidas RS, Stewart RE, Walsh L, Lucas S, Downey MM, Jackson K, … & Mandell DS (2015). Free, brief, and validated: Standardized instruments for low-resource mental health settings. Cognitive and Behavioral Practice, 22(1), 5–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beurs E. de, Warmerdam L, & Twisk J (2019). Bias through selective inclusion and attrition: Representativeness when comparing provider performance with routine outcome monitoring data. Clinical Psychology & Psychotherapy, 26(4), 430–439. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bergman H, Kornør H, Nikolakopoulou A, Hanssen-Bauer K, Soares-Weiser K, Tollefsen TK, & Bjørndal A (2018). Client feedback in psychological therapy for children and adolescents with mental health problems. Cochrane Database of Systematic Reviews, (8), Art. No.: CD011729. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickman L, Athay M, Riemer M, Lambert E, Kelley S, Breda C, & Vides de Andrade A (2010). Manual of the peabody treatment progress battery. Nashville, TN: Vanderbilt University, Retrieved from https://peabody.vanderbilt.edu/docs/pdf/cepi/ptpb_2nd_ed/PTPB_2010_Entire_Manual_UPDATE_31212.pdf [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickman L, Kelley SD, & Athay M (2012). The technology of measurement feedback systems. Couple and Family Psychology: Research and Practice, 1(4), 274. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickman L, Rosof-Williams J, Salzer MS, Summerfelt WT, Noser K, Wilson SJ, & Karver MS (2000). What information do clinicians value for monitoring adolescent client progress and outcomes? Professional Psychology: Research and Practice, 31(1), 70. [Google Scholar]

- Bickman L (2008). A measurement feedback system (MFS) is necessary to improve mental health outcomes. Journal of the American Academy of Child & Adolescent Psychiatry, 47(10), 1114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickman L, Lyon AR, & Wolpert M (2016). Achieving precision mental health through effective assessment, monitoring, and feedback processes: Introduction to the special issue. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 271–276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Borntrager C, & Lyon AR (2015). Client progress monitoring and feedback in school-based mental health. Cognitive and Behavioral Practice, 22(1), 74–86. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bruns EJ, Parker EM, Hensley S, Pullmann MD, Benjamin PH, Lyon AR, & Hoagwood KE (2019). The role of the outer setting in implementation: Associations between state demographic, fiscal, and policy factors and use of evidence-based treatments in mental healthcare. Implementation Science: IS, 14(1), 96. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carlier IV, Meuldijk D, Van Vliet IM, Van Fenema E, Van der Wee NJ, & Zitman FG (2012). Routine outcome monitoring and feedback on physical or mental health status: evidence and theory. Journal of evaluation in clinical practice, 18(1), 104–110. [DOI] [PubMed] [Google Scholar]

- Centers for Medicare & Medicaid Services. (2016). Better care. smarter spending. healthier people: Improving quality and paying for what works Retrieved from https://www.cms.gov/newsroom/fact-sheets/better-care-smarter-spending-healthier-people-paying-providers-value-not-volume

- Chambers DA, Feero WG, & Khoury MJ (2016). Convergence of implementation science, precision medicine, and the learning health care system: A new model for biomedical research. JAMA, 315(18), 1941–1942. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chambers DA, & Norton WE (2016). The adaptome: Advancing the science of intervention adaptation. American Journal of Preventive Medicine, 51(4), S124–S131.

- Christon LM, McLeod BD, & Jensen-Doss A (2015). Evidence-based assessment meets evidence-based treatment: An approach to science-informed case conceptualization. Cognitive and Behavioral Practice, 22(1), 36–48.

- Chorpita BF, Bernstein A, Daleiden EL, & Research Network on Youth Mental Health. (2008). Driving with roadmaps and dashboards: Using information resources to structure the decision models in service organizations. Administration and Policy in Mental Health and Mental Health Services Research, 35(1–2), 114–123.

- Cleghorn GD, & Headrick LA (1996). The PDSA cycle at the core of learning in health professions education. The Joint Commission Journal on Quality Improvement, 22(3), 206–212.

- Coalition for the Advancement and Application of Psychological Science. (2018). Evidence-based practice decision-making for mental and behavioral health care. Retrieved from https://adaa.org/sites/default/files/CAAPS%20Statement%20September%202018.pdf

- Cohen JA, Mannarino AP, & Deblinger E (2016). Treating trauma and traumatic grief in children and adolescents. Guilford Publications.

- Cone JD, & Hoier TS (1986). Assessing children: The radical behavioral perspective. Advances in Behavioral Assessment of Children & Families.

- Connors EH, Arora P, Curtis L, & Stephan SH (2015). Evidence-based assessment in school mental health. Cognitive and Behavioral Practice, 22, 60–73.

- Daleiden EL, & Chorpita BF (2005). From data to wisdom: Quality improvement strategies supporting large-scale implementation of evidence-based services. Child and Adolescent Psychiatric Clinics of North America, 14(2), 329–349.

- deJong K (2017). Effectiveness of progress monitoring: A meta-analysis. Paper presented at the 48th International Annual Meeting for the Society for Psychotherapy Research, Toronto, Canada.

- deJong K, Barkham M, Wolpert M, Douglas S, Delgadillo J, et al. (2019). The impact of progress feedback on outcomes of psychological interventions: An overview of the literature and a roadmap for future research. Manuscript in progress.

- Delgadillo J, Overend K, Lucock M, Groom M, Kirby N, McMillan D, … de Jong K (2017). Improving the efficiency of psychological treatment using outcome feedback technology. Behaviour Research and Therapy, 99, 89–97.

- Dew-Reeves SE, & Athay MM (2012). Validation and use of the youth and caregiver treatment outcome expectations scale (TOES) to assess the relationships between expectations, pretreatment characteristics, and outcomes. Administration and Policy in Mental Health and Mental Health Services Research, 39(1–2), 90–103.

- Donabedian A (2002). An introduction to quality assurance in health care. Oxford University Press.

- Doss BD, Thum YM, Sevier M, Atkins DC, & Christensen A (2005). Improving relationships: Mechanisms of change in couple therapy. Journal of Consulting and Clinical Psychology, 73(4), 624.

- Douglas S, Button S, & Casey SE (2016). Implementing for sustainability: Promoting use of a measurement feedback system for innovation and quality improvement. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 286–291.

- Douglas S, Jonghyuk B, de Andrade ARV, Tomlinson MM, Hargraves RP, & Bickman L (2015). Feedback mechanisms of change: How problem alerts reported by youth clients and their caregivers impact clinician-reported session content. Psychotherapy Research, 25(6), 678–693.

- Dowrick C, Leydon GM, McBride A, Howe A, Burgess H, Clarke P, … & Kendrick T (2009). Patients’ and doctors’ views on depression severity questionnaires incentivised in UK quality and outcomes framework: Qualitative study. BMJ, 338, b663.

- Edbrooke-Childs J, Jacob J, Law D, Deighton J, & Wolpert M (2015). Interpreting standardized and idiographic outcome measures in CAMHS: What does change mean and how does it relate to functioning and experience? Child and Adolescent Mental Health, 20(3), 142–148.

- Eisen SV, Dickey B, & Sederer LI (2000). A self-report symptom and problem rating scale to increase inpatients’ involvement in treatment. Psychiatric Services, 51(3), 349–353.

- Fleming I, Jones M, Bradley J, & Wolpert M (2016). Learning from a learning collaboration: The CORC approach to combining research, evaluation and practice in child mental health. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 297–301.

- Fortney JC, Unützer J, Wrenn G, Pyne JM, Smith GR, Schoenbaum M, & Harbin HT (2017). A tipping point for measurement-based care. Psychiatric Services, 68, 179–188.

- Garland AF, Bickman L, & Chorpita BF (2010). Change what? Identifying quality improvement targets by investigating usual mental health care. Administration and Policy in Mental Health and Mental Health Services Research, 37(1–2), 15–26.

- Gilbody SM, House AO, & Sheldon TA (2001). Routinely administered questionnaires for depression and anxiety: Systematic review. British Medical Association.

- Glasgow RE, & Riley WT (2013). Pragmatic measures: What they are and why we need them. American Journal of Preventive Medicine, 45(2), 237–243.

- Gleacher AA, Olin SS, Nadeem E, Pollock M, Ringle V, Bickman L, … & Hoagwood K (2016). Implementing a measurement feedback system in community mental health clinics: A case study of multilevel barriers and facilitators. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 426–440.

- Goldberg SB, Babins-Wagner R, Rousmaniere T, Berzins S, Hoyt WT, Whipple JL, … Wampold BE (2016). Creating a climate for therapist improvement: A case study of an agency focused on outcomes and deliberate practice. Psychotherapy, 53(3), 367–375.

- Gondek D, Edbrooke-Childs J, Fink E, Deighton J, & Wolpert M (2016). Feedback from outcome measures and treatment effectiveness, treatment efficiency, and collaborative practice: A systematic review. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 325–343.

- Hafstad GS, Dyb G, Jensen TK, Steinberg AM, & Pynoos RS (2014). PTSD prevalence and symptom structure of DSM-5 criteria in adolescents and young adults surviving the 2011 shooting in Norway. Journal of Affective Disorders, 169, 40–46.

- Hall C, Moldavsky M, Taylor J, Sayal K, Marriott M, Batty M, … Hollis C (2014). Implementation of routine outcome measurement in child and adolescent mental health services in the United Kingdom: A critical perspective. European Child & Adolescent Psychiatry, 23(4), 239–242.

- Hawkins EJ, Baer JS, & Kivlahan DR (2008). Concurrent monitoring of psychological distress and satisfaction measures as predictors of addiction treatment retention. Journal of Substance Abuse Treatment, 35(2), 207–216.

- Hunsley J, & Mash EJ (2007). Evidence-based assessment. Annual Review of Clinical Psychology, 3, 29–51.

- Health Resources and Services Administration (2019). Collaborative Improvement and Innovation Networks (CoIINs). Retrieved from https://mchb.hrsa.gov/maternal-child-health-initiatives/collaborative-improvement-innovation-networks-coiins

- Hermann RC, Rollins CK, & Chan JA (2007). Risk-adjusting outcomes of mental health and substance-related care: A review of the literature. Harvard Review of Psychiatry, 15(2), 52–69.

- Higa-McMillan CK, Powell C, Daleiden EL, & Mueller CW (2011). Pursuing an evidence-based culture through contextualized feedback: Aligning youth outcomes and practices. Professional Psychology: Research and Practice, 42(2), 137.

- Jensen-Doss A, Becker-Haimes EM, Smith AM, Lyon AR, Lewis CC, Stanick CF, & Hawley KM (2018). Monitoring treatment progress and providing feedback is viewed favorably but rarely used in practice. Administration and Policy in Mental Health and Mental Health Services Research, 45(1), 48–61.

- Jensen-Doss A, Smith AM, Becker-Haimes EM, Ringle VM, Walsh LM, Nanda M, … Lyon AR (2018). Individualized progress measures are more acceptable to clinicians than standardized measures: Results of a national survey. Administration and Policy in Mental Health and Mental Health Services Research, 45(3), 392–403.

- Kelley SD, de Andrade ARV, Sheffer E, & Bickman L (2010). Exploring the black box: Measuring youth treatment process and progress in usual care. Administration and Policy in Mental Health and Mental Health Services Research, 37(3), 287–300.

- Kelley SD, & Bickman L (2009). Beyond outcomes monitoring: Measurement feedback systems in child and adolescent clinical practice. Current Opinion in Psychiatry, 22(4), 363–368.

- Kendrick T, El-Gohary M, Stuart B, Gilbody S, Churchill R, Aiken L, … & Moore M (2016). Routine use of patient reported outcome measures (PROMs) for improving treatment of common mental health disorders in adults. Cochrane Database of Systematic Reviews, (7).

- Kiresuk TJ, Stelmachers ZT, & Schultz SK (1982). Quality assurance and goal attainment scaling. Professional Psychology, 13(1), 145.

- Knaup C, Koesters M, Schoefer D, Becker T, & Puschner B (2009). Effect of feedback of treatment outcome in specialist mental healthcare: Meta-analysis. The British Journal of Psychiatry, 195, 15–22.

- Kotte A, Hill KA, Mah AC, Korathu-Larson PA, Au JR, Izmirian S, … Higa-McMillan CK (2016). Facilitators and barriers of implementing a measurement feedback system in public youth mental health. Administration and Policy in Mental Health and Mental Health Services Research, 43(6), 861–878.

- Krageloh C, Czuba K, Billington R, Kersten P, & Siegert R (2015). Using feedback from patient-reported outcome measures in mental health services: A scoping study and typology. Psychiatric Services, 66(3), 563–570.

- Lambert MJ, Whipple JL, Hawkins EJ, Vermeersch DA, Nielsen SL, & Smart DW (2003). Is it time for clinicians to routinely track patient outcome? A meta-analysis. Clinical Psychology: Science and Practice, 10(3), 288–301.

- Lambert MJ, Whipple JL, & Kleinstäuber M (2018). Collecting and delivering progress feedback: A meta-analysis of routine outcome monitoring. Psychotherapy, 55(4), 520–537.

- Langley GJ, Moen RD, Nolan KM, Nolan TW, Norman CL, & Provost LP (2009). The improvement guide: A practical approach to enhancing organizational performance. John Wiley & Sons.

- Lewis CC, Boyd M, Puspitasari A, Navarro E, Howard J, Kassab H, … Douglas S (2019). Implementing measurement-based care in behavioral health: A review. JAMA Psychiatry, 76(3), 324–335.

- Lewis CC, Puspitasari A, Boyd MR, Scott K, Marriott BR, Hoffman M, … Kassab H (2018). Implementing measurement based care in community mental health: A description of tailored and standardized methods. BMC Research Notes, 11(1), 76.

- Lewis CC, Scott K, Marti CN, Marriott BR, Kroenke K, Putz JW, … Rutkowski D (2015). Implementing measurement-based care (iMBC) for depression in community mental health: A dynamic cluster randomized trial study protocol. Implementation Science, 10(1), 127.

- Lindhiem O, Bennett CB, Orimoto TE, & Kolko DJ (2016). A meta-analysis of personalized treatment goals in psychotherapy: A preliminary report and call for more studies. Clinical Psychology: Science and Practice, 23(2), 165–176.

- Lloyd CE, Duncan C, & Cooper M (2019). Goal measures for psychotherapy: A systematic review of self-report, idiographic instruments. Clinical Psychology: Science and Practice, e12281.

- Lyon AR, Borntrager C, Nakamura B, & Higa-McMillan C (2013). From distal to proximal: Routine educational data monitoring in school-based mental health. Advances in School Mental Health Promotion, 6(4), 263–279.

- Lyon AR, Connors E, Jensen-Doss A, Landes SJ, Lewis CC, McLeod BD, … Weiner BJ (2017). Intentional research design in implementation science: Implications for the use of nomothetic and idiographic assessment. Translational Behavioral Medicine, 7(3), 567–580.

- Lyon AR, & Lewis CC (2016). Designing health information technologies for uptake: Development and implementation of measurement feedback systems in mental health service delivery. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 344–349.

- Lyon AR, Lewis CC, Boyd MR, Hendrix E, & Liu F (2016). Capabilities and characteristics of digital measurement feedback systems: Results from a comprehensive review. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 441–466.