Abstract

Lesion symptom mapping (LSM) tools are used on brain injury data to identify the neural structures critical for a given behavior or symptom. Univariate lesion symptom mapping (ULSM) methods provide statistical comparisons of behavioral test scores in patients with and without a lesion on a voxel by voxel basis. More recently, multivariate lesion symptom mapping (MLSM) methods have been developed that consider the effects of all lesioned voxels in one model simultaneously. In the current study, we provide a much‐needed systematic comparison of several ULSM and MLSM methods, using both synthetic and real data to identify the potential strengths and weaknesses of both approaches. We tested the spatial precision of each LSM method for both single and dual (network type) anatomical target simulations across anatomical target location, sample size, noise level, and lesion smoothing. Additionally, we performed false positive simulations to identify the characteristics associated with each method's spurious findings. Simulations showed no clear superiority of either ULSM or MLSM methods overall, but rather highlighted specific advantages of different methods. No single method produced a thresholded LSM map that exclusively delineated brain regions associated with the target behavior. Thus, different LSM methods are indicated, depending on the particular study design, specific hypotheses, and sample size. Overall, we recommend the use of both ULSM and MLSM methods in tandem to enhance confidence in the results: Brain foci identified as significant across both types of methods are unlikely to be spurious and can be confidently reported as robust results.

Keywords: aphasia, brain–behavior relationships, language, lesion symptom mapping, multivariate, stroke, VLSM

In the current study, we conducted the first comprehensive, empirical comparison of several univariate and multivariate lesion symptom mapping (LSM) methods using both synthetic and real behavioral data to identify the potential strengths and weaknesses of both approaches. Cumulatively, our analyses indicated that both univariate and multivariate methods can be equally robust in locating brain–behavior relationships, depending on the design of the study, the research question being asked, and with proper spatial metrics. The results provide crucial insights into the accuracy of different LSM methods and their susceptibility to artifact, providing a first of its kind data‐driven navigational guide for users of LSM analyses.

1. INTRODUCTION

1.1. Lesion symptom mapping

Throughout the 19th and much of the 20th century, systematic clinical observations of neurologic patients along with postmortem autopsy remained the main method for establishing brain correlates of cognitive functioning (Damasio & Damasio, 1989; Dronkers, Ivanova, & Baldo, 2017; Luria, 1980). The advent of modern neuroimaging methods in the 1970s greatly enhanced the ability to determine neural foundations of cognition, as the actual lesion site could be identified in‐vivo with unprecedented, continuously improving precision. In the early 2000s, an increase in computing power along with new statistical procedures brought lesion symptom mapping (LSM) to a new, more advanced level. Instead of relying on single‐case studies or viewing regions of lesion overlap in patients with a common syndrome, analysis of large group studies with continuous behavioral data became possible. Specifically, the mass‐univariate LSM (ULSM) method, such as the original voxel‐based LSM (VLSM; Bates et al., 2003), provides statistical comparisons of behavioral test scores across patients with and without a lesion on a voxel by voxel basis. Voxels that show significant differences for a particular behavior or symptom are inferred to be critical for the behavior under examination. ULSM methods complement functional neuroimaging studies in healthy participants, by testing the necessity of particular brain areas for a particular behavior, thereby demonstrating the crucial causal link in brain–behavior relationships (Bates et al., 2003; Karnath, Sperber, & Rorden, 2018; Rorden, Karnath, & Bonilha, 2007; Vaidya, Pujara, Petrides, Murray, & Fellows, 2019). Contemporary ULSM methods provide a fundamental shift in broadening our understanding of brain–behavior relationships, both confirming (Baldo, Arevalo, Patterson, & Dronkers, 2013) and challenging previously held beliefs about key neural structures for different cognitive functions (Baldo et al., 2018; Dronkers, Wilkins, Van Valin, Redfern, & Jaeger, 2004; Ivanova et al., 2018; Mirman, Chen, et al., 2015).

More recently, new multivariate lesion symptom mapping (MLSM) methods have been developed as an alternative to ULSM. The principal difference between ULSM and MLSM methods is that MLSM considers the entirety of all lesion patterns in one model simultaneously. This is in contrast to the parallel, independent analysis of lesion patterns on a voxel by voxel basis performed with ULSM models. While some papers have argued that MLSM methods should be superior to ULSM methods (DeMarco & Turkeltaub, 2018; Mah, Husain, Rees, & Nachev, 2014; Pustina, Avants, Faseyitan, Medaglia, & Coslett, 2018; Zhang, Kimberg, Coslett, Schwartz, & Wang, 2014), many of these arguments have been presented theoretically without rigorously comparing the two approaches (see also Sperber, Wiesen, & Karnath, 2019).

Below, we focus on two critical properties of LSM analyses that are foundational to the validity of the method: spatial accuracy and ability to detect networks. With regard to these two aspects, we first review issues that impact brain–behavior inferences made with both ULSM and MLSM methods and then report a comprehensive empirical evaluation of these two different approaches to lesion‐behavior mapping.

1.2. Spatial accuracy

1.2.1. Issues affecting spatial accuracy

The original ULSM method was superior in terms of its spatial accuracy to lesion overlays and lesion subtraction analyses as it provided a quantifiable statistical approach to capturing the continuous nature of behavioral data in relation to lesion site. However, recently multiple concerns over the spatial accuracy of the resulting LSM maps have been raised.

One issue that affects both ULSM and MLSM methods is that lesion distributions in stroke (the most frequently studied etiology with LSM techniques) are influenced by the vascular anatomy and are thus nonrandomly distributed in the brain with certain areas being more likely to be lesioned than others (Mah et al., 2014; Phan, Donnan, Wright, & Reutens, 2005; Sperber & Karnath, 2016; Xu, Jha, & Nachev, 2018). This nonrandom distribution of lesions impacts LSM analyses in the following ways. First, it limits analysis of certain brain areas that are rarely affected in stroke (e.g., the temporal pole). Second, neighboring voxels have a higher probability of being lesioned together, as strokes never affect just one voxel. The ULSM approach is potentially susceptible to this spatial autocorrelation, because it assumes independence of lesioned voxels throughout the brain, as thousands of independent tests (nonparametric, t tests, or linear regressions) are carried out serially in the affected voxels. While independence of tests is not an assumption of MLSM methods per se (since only one multivariate model incorporating all the lesion patterns is tested), lack of sufficient spatial distinction is an issue. In other words, if two voxels are always either damaged together or always spared, it is not possible to differentiate their unique contribution to the observed deficits with any LSM method. A third related concern that affects both ULSM and MLSM methods is differential statistical power across voxels/regions of the brain. For example, a voxel in which 50% of patients have a lesion has more power than a voxel where only 10% of patients have a lesion (Pustina et al., 2018). In general, given both the nonrandom nature of lesions and the inability to predict their specific pattern in a given study, it is hard to estimate statistical power in advance for any LSM method. Generally, one can only perform post hoc power analyses to determine the amount of power in different brain regions. Even with very large samples, statistical power can be low everywhere in the brain, for example, if lesions are small and voxels are only affected by a small proportion of all lesions. Finally, stroke lesions are also typically larger than the functional anatomical targets that LSM analyses attempt to uncover, thereby limiting the spatial resolution of the analysis.

Cumulative effects of nonrandom lesion distribution, autocorrelation across voxels, and differential power distribution can potentially lead to distortion in spatial localization of critical regions. Significant clusters are often “diverted” toward the most frequently damaged regions, which are regularly impacted together with the true correlates of a cognitive function (Inoue, Madhyastha, Rudrauf, Mehta, & Grabowski, 2014; Xu et al., 2018) and potentially along the brain's vasculature (Mah et al., 2014; Sperber, Wiesen, & Karnath, 2019). The open question is, to what degree do these lesion‐anatomical biases impact different ULSM and MLSM methods (Sperber, 2020), and how are they ameliorated by sample size, method choices, additional corrections, and interpretation? Currently, very few studies have investigated these biases systematically and compared them across different LSM methods.

1.2.2. Empirical studies investigating spatial accuracy of LSM methods

One of the original papers to raise awareness about spatial distortion in LSM by Mah et al. (2014) suggested that ULSM analyses mislocalized foci by an average of 16 mm. However, their model did not include lesion volume as a covariate in their analysis. The importance of using lesion volume as a nuisance covariate in LSM has been a standard recommendation for several years (Baldo, Wilson, & Dronkers, 2012; De Haan & Karnath, 2018; DeMarco & Turkeltaub, 2018; Price, Hope, & Seghier, 2017; Sperber & Karnath, 2017). In addition, Mah et al. used a minimum lesion load per voxel of <1% in their ULSM analyses, which is far below the standard recommendation of 5–10% (Baldo et al., 2012). Moreover, the displacement maps using synthetic data in Mah et al. showed single voxels (i.e., a single voxel leading to a specific deficit), which is an oversimplified and exclusively theoretical case that does not occur naturally. Furthermore, when damage to an anatomical region was used as a synthetic behavioral score in their study, the score was binarized rather than continuous, likely further reducing spatial resolution. Finally, Mah et al. did not explore spatial bias for MLSM, so within their study, it was not possible to directly compare the spatial displacement between ULSM and MLSM methods.

In another simulation study critiquing accuracy of ULSM (Inoue et al., 2014), lesion volume was included as a covariate, but the authors again used binarized synthetic behavioral scores (a deficit was indicated when 20% of voxels in the target parcel were damaged) and did not apply a minimal lesion load threshold. Also, the results in this study were predominantly analyzed with false discovery rate (FDR)‐based thresholding. This method of correction for multiple comparisons has been discontinued for some time in the ULSM literature, as it frequently leads to an increase in false positives (Baldo et al., 2012; Kimberg, Coslett, & Schwartz, 2007; Mirman et al., 2018). Also, accuracy of mapping was not systematically explored across sample sizes. Finally, the lesion data for this study came from highly heterogenous etiologies (stroke, traumatic brain injury, encephalitis), contrary to standard recommendations for any LSM study (De Haan & Karnath, 2018).

Most prominently, Sperber and Karnath (2017) empirically demonstrated that ensuring a sufficiently large minimal lesion load threshold as well as including lesion volume as a covariate have significant additive effects on improving spatial precision of results, although not entirely removing spatial bias. In their study, spatial displacement was calculated for single voxels, with displacement of larger clusters expected to be smaller. Furthermore, the lesion volume correction to enhance accuracy of localization has been strongly recommended for at least some MLSM approaches (DeMarco & Turkeltaub, 2018), again highlighting that MLSM methods are not immune to these types of spatial biases. In another simulation study, Sperber, Wiesen, Goldenberg, and Karnath (2019) and Sperber, Wiesen, and Karnath (2019) showed that a common support vector regression (SVR)‐based MLSM method was also susceptible to mislocalization along the brain's vasculature, even after applying a correction for lesion volume, and that this displacement error was actually higher than that observed for a ULSM method. However, since displacement was determined for single voxels in a single axial slice, these spatial biases require further exploration to fully understand their impact on LSM results with real behavioral data.

The most comprehensive simulation study to date by Pustina et al. (2018) showed that even one of the most advanced MLSM algorithms, sparse canonical correlation analysis for neuroimaging (SCCAN), exhibited spatial bias in the results. Here, the superiority of SCCAN using synthetic data was consistently demonstrated, but only when compared to the univariate analyses with inappropriate FDR‐based thresholding. Modern ULSM methods instead use a conservative, permutation‐based familywise error rate (FWER) correction, a nonparametric resampling approach to significance testing, which sets the overall probability rate of false positives across all of the results, while making almost no assumptions about the underlying data distributions (Hayasaka & Nichols, 2003; Nichols & Holmes, 2001). Permutation‐based FWER provides the most stringent and robust form of correction for multiple comparisons, providing an optimal balance between false positives and false negatives (see Kimberg et al., 2007; Mirman et al., 2018). Accordingly, in the same paper, the ULSM results obtained with this more appropriate thresholding using permutation‐based and Bonferoni FWER corrections, were comparable to SCCAN results across a number of spatial indices (Pustina et al., 2018). Moreover, Pustina et al. (2018) did not include lesion size as a covariate in the ULSM analysis, running counter to standard recommendations for ULSM and potentially biasing the comparison (Baldo et al., 2012; Sperber & Karnath, 2017). Furthermore, limited spatial metrics were used as measures of accurate mapping in comparing LSM methods, and most of these metrics produced similar levels of performance for all methods tested. For example, while the dice index (measure of overlap between two regions) was shown to be significantly higher for SCCAN compared to a nonparametric Brunner–Munzel version of ULSM, values were very low in nearly all cases with every method (predominantly <0.5 and often <0.2) rendering the statistical advantage uninformative. Also, results of statistical comparisons across different sample sizes for other spatial metrics were not provided (see Figure 4, p. 161, Pustina et al., 2018).

In short, the degree to which spatial bias affects ULSM versus MLSM methods has not yet been systematically and rigorously tested across a wide range of LSM methods with a wide range of metrics of spatial accuracy, while implementing best practices such as lesion volume control, minimum lesion load threshold, and proper correction for multiple comparisons.

1.3. Detection of networks

Another issue in LSM is the ability to detect complex relationships and functional dependencies in the data (i.e., networks). Given that most complex cognitive functions are supported by a number of regions working together in a coordinated fashion, it is pivotal that LSM methods are able to uncover multiple regions underpinning the target behavior.

Some papers have argued that MLSM should be better than ULSM at detecting multifocal relationships between lesion location and behavioral deficits (i.e., when damage to multiple areas leads to a specific behavioral deficit), as MSLM takes into account all the voxels simultaneously in a single model (DeMarco & Turkeltaub, 2018; Mah et al., 2014; Pustina et al., 2018; Sperber, 2020; Zhang et al., 2014). However, the empirical evidence favoring the superior ability of MLSM methods to identify networks remains inconclusive. This is in part due to the strong regularization (e.g., sparse vs. dense solutions) and additional assumptions (e.g., restriction on possible locations of solutions) required in order to solve a single, massively underdetermined, multivariate system of equations (typically with 1 patient per 100 or 1,000 voxels). Mah et al. (2014) claimed that MLSM resulted in higher sensitivity and specificity compared to ULSM in detecting a two‐parcel fragile network (when the synthetic score was based on the maximal lesion load among a set of anatomical regions). However, as described above, synthetic behavioral scores were binarized for their analysis, the statistical threshold used was not specified, and there was no quantification of the differences in spatial bias between ULSM and MLSM. Pustina et al. (2018) showed an advantage of MLSM over ULSM in detecting an extended network (“AND” rule; when the synthetic score was based on the average lesion load among a set of anatomical regions) consisting of three parcels. However, there was no significant advantage of the MLSM over ULSM in detecting other types of two‐ and three‐parcel networks when a proper FWER correction was included.

Findings with real behavioral data also remain mixed. For instance, in one study MLSM methods were able to detect a brain network underlying apraxia of pantomime, while ULSM could not (Sperber, Wiesen, Goldenberg, & Karnath, 2019). However, a number of studies have repeatedly shown that ULSM methods are able to detect spatially distinct regions in a network (Akinina et al., 2019; Baldo et al., 2018; Gajardo‐Vidal et al., 2018; Mirman, Chen, et al., 2015). With real behavioral data, however, there is no ground truth with which to compare the results, so it remains possible that the analyses should have uncovered even more relevant regions. Thus, further empirical evidence is needed to show what specific measurable advantage MLSM has over ULSM in detecting multifocal behavioral determinants.

1.4. Aims of the current study

To summarize, there are a number of theoretical concerns for both ULSM and MLSM methods. Some of these concerns raised originally with respect to ULSM (e.g., spatial bias and autocorrelation, differential statistical power) are actually concerns for both ULSM and MLSM methods and require further elucidation with respect to both approaches. Moreover, efficient controls already exist for both ULSM and MLSM methods that can be implemented to minimize the biasing effect of lesion physiology (e.g., lesion size correction, minimum lesion load threshold). The theoretical concerns about ULSM methods being less able to detect networks of brain regions (as opposed to a single target region) have not been systematically confirmed. Properties of new LSM models require further delineation and comparative evaluation in order to assess the mapping power and accuracy under varying conditions. Further, certain factors that can potentially impact accuracy of analysis such as lesion mask smoothing and behavioral noise levels have not been properly addressed in previous papers comparing different LSM methods. To date, the comparisons of ULSM and MLSM in the literature have been limited and when they are contrasted, a sub‐standard version of ULSM is often implemented without proper correction, leading to an unfavorable impression of ULSM (Inoue et al., 2014; Pustina et al., 2018; Zhang et al., 2014). Finally, neither ULSM nor MLSM methods have been properly explored with respect to the incidence of false positive results.

The current paper aimed to address these gaps in the LSM literature and provide a comprehensive appraisal of several versions of ULSM and MLSM methods with a large stroke lesion dataset, using both synthetic and real behavioral data, across a range of relevant parameters. Synthetic data were used to test the spatial accuracy of different LSM methods: (a) ULSM with five different permutation‐based thresholding approaches; (b) MLSM with voxel‐level lesion data using two different approaches; and (c) MLSM with dimension‐reduced lesion data using three different strategies for feature reduction. Obtained results were compared across different anatomical target locations, sample sizes, noise levels, lesion mask smoothing values, types of networks, and false positive simulations. We used a number of different distance‐ and overlap‐based spatial metrics as indices of mapping accuracy. We also compared performance of these LSM methods using real behavioral data (language scores) with multiple demographic and sampling covariates, along with subsampling to check the stability and agreement across methods. Our goal was to provide the first comprehensive comparison of ULSM and MLSM methods, in order to afford guidance on selecting the most appropriate LSM method(s) for a particular study with a specific lesion dataset.

2. METHODS

2.1. Participants

For the simulation analyses, lesion masks from 340 chronic left hemisphere stroke patients were obtained from two different sources: our Northern California stroke dataset (n = 209, NorCal) and the Moss Rehabilitation stroke dataset provided with the open‐source LESYMAP software (n = 131, LESYMAP, Pustina et al., 2018). Synthetic behavioral scores were based on lesion load to different cortical areas (described further below).

For the analysis of real behavioral data, we analyzed language data and lesion masks from a subset of patients in the NorCal database (n = 168; 36 female) who completed behavioral testing and met the following inclusion criteria: History of a single left hemisphere stroke (including both embolic and hemorrhagic etiologies), premorbidly right‐handed (based on the Edinburgh Handedness Inventory), native English speaker (English by age 5), minimum high school or equivalent education (i.e., 12 years), in the chronic stage of recovery (at least 12 months poststroke) at the time of behavioral testing, no other neurologic or severe psychiatric history (e.g., Parkinson's, dementia, schizophrenia), and no substance abuse history. The mean age of this subset of patients was 61.0 years (range 31–86, SD = 11.2), mean education was 14.9 (range 12–22, SD = 2.4), and mean months poststroke was 51.4 (range 12–271, SD = 54.0). All patients were administered the Western Aphasia Battery (WAB, Kertesz, 1982, 2007), which classified 47 patients with anomic aphasia, 45 with Broca's aphasia, 6 with conduction aphasia, 4 with global aphasia, 1 with transcortical motor aphasia, 3 with transcortical sensory aphasia, 14 with Wernicke's aphasia, and 48 patients who scored within normal limits (i.e., overall WAB language score of ≥93.8 points out of 100). This latter group included patients with very mild aphasic symptoms, such as mild word‐finding difficulty.

2.2. Behavioral data

Data for the LSM analyses with real behavioral scores were derived from 168 patients in the NorCal stroke dataset. Patients were tested on the WAB (Kertesz, 1982, 2007), which consists of several subtests measuring a wide range of speech and language functions. Here, we analyzed the most reliable and least‐confounded speech‐language scores on the WAB, which index three distinct language domains: speech fluency, single‐word auditory comprehension, and verbal repetition. All patients signed consent forms and were tested in accordance with the Helsinki Declaration.

2.3. Imaging and lesion reconstructions

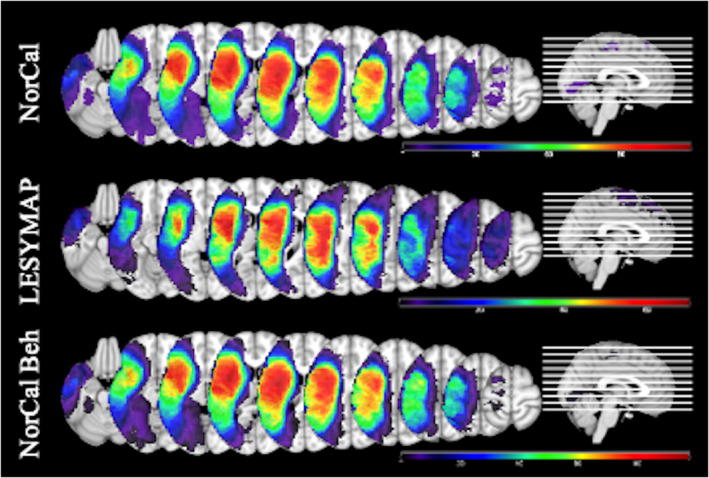

Real lesion masks were obtained from two different sources as detailed previously. All lesion masks were reconstructed from MRI or CT data acquired during the chronic phase of stroke (at least 2 months poststroke). Detailed information about data acquisition, lesion reconstruction, and normalization procedures for the NorCal and LESYMAP datasets can be found in Baldo et al. (2013) and Pustina et al. (2018), respectively. The lesion masks were converted to standard MNI space with a 2 mm isovoxel resolution. The overlay of patients' lesions from the two different databases is shown in Figure 1. Mean lesion volume was 119.6 cm3 for the NorCal dataset (range 0.1–455, SD = 97.9) and 100.0 cm3 for the Moss Rehab dataset (range 5.2–371.4 SD = 82.2).

FIGURE 1.

Lesion overlays for the three datasets. Top—NorCal (n = 209, coverage range 5–117). Middle—LESYMAP (n = 131, coverage range 5–68). Bottom—subset of NorCal used in the analysis of real behavioral data (n = 168, coverage range 5–96)

2.4. LSM methods

In the current study, we compared five ULSM and eight MLSM methods, using both synthetic and real behavioral data. All ULSM and MLSM methods discussed in this paper were implemented in a downloadable MATLAB script (freely available at https://www.nitrc.org/projects/clsm/) that was based on the original VLSM software developed by Stephen Wilson (Bates et al., 2003; Wilson et al., 2010).

2.4.1. ULSM methods

All ULSM variants were conducted using a linear regression with a voxel lesion value as the dependent variable (lesioned or not), the continuous behavioral data as the independent variable, and lesion volume as a covariate (Wilson et al., 2010; Baldo, Ivanova, Herron, Wilson, & Dronkers, in press). Linear regression was chosen because it is flexible, popular, and applicable to multiple data types (categorical or continuous) with multiple covariates. Also, the synthetic behavioral data contained linear effects, similar to other recent simulation studies (e.g., Pustina et al., 2018). Both the synthetic and real behavioral datasets were well controlled with respect to outliers; all the z‐scores were within 3 SD of the mean.

To correct for multiple comparisons, we used permutation‐based thresholding, which is a nonparametric approach to FWER correction that randomly permutes behavioral scores and which records the relevant t‐value or maximal cluster size for each permutation with the final threshold set at p = .05 (Kimberg et al., 2007; Mirman et al., 2018). Unlike some previous methodological LSM papers, we did not evaluate the performance of the ULSM method with FDR‐correction, given that it is a fundamentally inappropriate correction for lesion data (Baldo et al., 2012; Mirman et al., 2018). Finally, the use of permutation‐based significance testing with all of the ULSM methods protects against inflated false positives that can accompany non‐Gaussian noise that may appear in the real data (all synthetic behavioral noise was Gaussian).

ULSM results were generated with five different nonparametric FWER thresholding approaches that are commonly used in contemporary ULSM studies:

Maximum statistical t‐value (ULSM T‐max). This statistic corresponds to the most standard and conservative version of the permutation‐based FWER‐correction.

125th‐largest t‐value (ULSM T‐nu = 125). This statistic corresponds to the 125th largest voxel‐wise test statistic (n = 125 corresponds to 1 cm3 when working with 2‐mm‐sided voxels; see Mirman et al., 2018).

Cluster‐size thresholding with a fixed voxel‐wise threshold of p < .01 (ULSM T‐0.01).

Cluster‐size thresholding with a fixed voxel‐wise threshold of p < .001 (ULSM T‐0.001).

Cluster‐size thresholding with a fixed voxel‐wise threshold of p < .0001 (ULSM T‐0.0001).

2.4.2. MLSM methods

MLSM methods included multivariate regression methods with voxel‐level lesion data (two approaches) and with dimension‐reduced lesion data (three approaches). For all MLSM methods, lesion volume was regressed out of both the behavioral and the lesion variables. While other corrections for lesion volume are possible, such as direct total lesion volume control (Zhang et al., 2014), we opted for the most conservative option here based on recommendations from the only study to date that systematically tested different lesion volume corrections (DeMarco & Turkeltaub, 2018). We normalized both lesion and behavioral data to SD of 1 and centered the behavioral data (to mean 0), as is customary for multivariate methods to optimize regression estimation. Finally, permutation testing identical to that used with ULSM methods above (Kimberg et al., 2007; Mirman et al., 2018), was also applied to the voxel‐wise feature weights obtained for the MSLM methods. The resultant values were used to threshold and identify significant voxels in the maps at p < .05, using the maximum voxel‐wise feature weight value obtained (as in the ULSM T‐max). From here on, we use the term LSM statistical values to refer to both the actual voxel‐wise statistics for ULSM methods and voxel‐wise feature weights for MLSM methods that are ascertained following permutation thresholding and presented in the resulting LSM map output.

MLSM methods with voxel‐level lesion data.

SVR differs from ordinary multivariate regression in a number of ways, as it computes a solution based on many voxels' lesion status given the relatively small number of patients. First, it incorporates two regularization hyperparameters that help the regression model keep the model's parameter values small (to avoid overfitting), while at the same time controlling the model's prediction accuracy by partially ignoring small fitting errors. Second, SVR incorporates a radial basis kernel function that implicitly projects the lesion data into a high‐dimensional space in order to help model fitting succeed, in part by allowing some nonlinear effects to be incorporated into the model. SVR has been used in several previous LSM studies (Ghaleh et al., 2017; Griffis, Nenert, Allendorfer, & Szaflarski, 2017; Zhang et al., 2014). We used the SVR routine encoded as part of the SVR‐LSM package (https://github.com/atdemarco/svrlsmgui; DeMarco & Turkeltaub, 2018) with fixed hyperparameters (γ = 5, C = 30) previously tuned to work well in LSM with behavioral data and currently most commonly used in the field (DeMarco & Turkeltaub, 2018; Zhang et al., 2014).

The second voxel‐level MLSM regression method was partial least squares (PLS), which jointly extracts dual behavior and lesion factors that maximize the variance between behavior and lesion locations in a single step. PLS algorithms (and closely related canonical correlation algorithms) have been developed extensively in bioinformatics for use in genetics where there is a similar “wide” data structure: there are far more genes/voxels to be considered in a regression solution than there are subjects providing such data (Boulesteix & Strimmer, 2006). PLS has also previously been used in LSM (Phan et al., 2010). However, we used a basic version of PLS regression based on the singular value decomposition (SVD) function (Abdi, 2010; Krishnan, Williams, Randal, & Abdi, 2011) because it is known to be both a fast and reliable regression technique over wide data sets containing highly correlated variables, which is important given the number of simulations (using permutation testing) that were run. Although this version of PLS regression is known to produce “dense” solutions (Mehmood, Liland, Snipen, & Sæbø, 2012), resulting in (overly) large clusters, another important consideration for including it here as an exemplar of this class of algorithms is that it is easy to generalize basic PLS regression to integrate multiple target behaviors simultaneously (Abdi, 2010); an inviting prospect for investigating behavioral test batteries used to assess patient populations.

MLSM methods with dimension‐reduced lesion data.

Three different types of MLSM data reduction methods were tested in the current study. These methods reduce the spatial dimensionality of the lesion data first without considering behavior. Lesion status of thousands of voxels is reduced to a number of spatial lesion components that is fewer than the number of patients in the analysis. This results in a more tractable system of equations to solve, and the components' estimated weights are then transformed back into spatial maps, relating brain areas to the behavior being investigated. In other words, lesion status in all voxels for each patient are replaced with sums of weighted lesion components for that patient.

SVD (“svd”, MatLab v.7) (Ramsey et al., 2017) identifies an ordered set of orthogonal spatial components, each a weighted mixture of all voxels inside the lesion mask, that explain as much lesion variance across all voxels with as few of the ordered components as possible. These components can be linearly combined to reconstruct each patient's lesion mask. Our preliminary trials found that most individual patient lesion masks were well‐reconstructed (median dice >0.98; mean dice >0.85) when 90% of the cumulative variance in the SVD diagonal matrix was accounted for. Thus, in this data‐reduction version of MLSM, we used the number of components (approximately equal to half the number of patients) required to explain 90% variance in order to speed up LSM computations.

The second data reduction method we used was a logistic principal component analysis (LPCA) (Schein, Saul, & Ungar, 2003), which iteratively identifies a set of ordered spatial components whose lesion incidence maps are orthogonal under the logistic function (Siegel et al., 2016). Given the better fit of method to lesion data type, trials with lesion masks showed that approximately the first 40 LPCA components were able to reconstruct individual patient lesion masks quite well (median dice >0.99; mean dice >0.9). Thus, the number of components we used for the LPCA data reduction was the number of patients capped at 40 components in order to speed up LSM estimates.

The third data reduction method was an independent component analysis (ICA) (FastICA v2.5 as used by Hyvärinen & Oja, 2000) which is a generalization of PCA. The latter method is widely employed in fMRI studies for clustering brain regions, and although it has not been typically used in lesion analysis studies, we included it here for exploratory purposes. ICA in this context estimates independent linear mixtures of lesion incidence voxel data that are the most non‐Gaussian sources found within the data. We used the default cubic function as the fixed‐point nonlinearity for finding components under coarse iterations first and then used a hyperbolic tangent function (default setting) for fine iterations in order to reflect the bounded nature of lesion data. For ICA, we used the same number of components as with LPCA (maximum of 40), in order to see if ICA can outperform LPCA given its usefulness in other spatial dimension reduction applications in neuroimaging (Calhoun, Liu, & Adali, 2009).

After application of the three data reduction approaches described above, an elastic net linear regression (“glmnet” package; Qian, Hastie, Friedman, Tibshirani, & Simon, 2013; Tibshirani et al., 2010) was performed with the target behavior (real or simulated) as the dependent variable along with the data reduced spatial lesion components and the lesion size covariate. While data reduction is not required for MLSM, it is a necessary step for implementation of elastic net regression. Elastic net regression is only appropriate for relatively low‐dimensional datasets, because for high‐dimensional data (original voxel‐level lesion data), it will select too many voxels unrelated to behavior (Gilhodes et al., 2020).

We used two different elastic net regressions to see if either is superior in producing accurate or reliable LSM maps: one near to a pure lasso (which we will call “L1”) case (95% L1 penalty mixed with a 5% L2 penalty) and one with the opposite mixture (95% L2 and 5% L1), a ridge (“L2”) case. The elastic net regressions use cross‐validation to solve for the penalty hyperparameter that best fits the data. Thus, in total six variants of MLSM methods with dimension‐reduced lesion data were tested: SVD‐L1, SVD‐L2, LPCA‐L1, LPCA‐L2, ICA‐L1, and ICA‐L2. For technical details on implementation of the MLSM methods described above see Appendix B.

2.5. Simulations with synthetic behavioral data

Three sets of simulations were performed with all ULSM and MLSM methods using synthetic behavioral scores and real lesion masks: single anatomic target, dual anatomic targets, and zero anatomic target (i.e., false positive simulation). In each simulation, we varied several factors (described below for each simulation) in a fully crossed manner in order to systematically compare effect sizes and significance across the different ULSM and MLSM methods. For each simulation analysis, the specified number of lesion masks was randomly selected from one of the two datasets (i.e., not mixing NorCal and LESYMAP masks together). For all analyses, we only included voxels in which at least five patients had lesions, and which had statistical power ≥.1 at p < .01 (Hsieh, Bloch, & Larsen, 1998). We also performed behavioral value outlier scrubbing at |z| ≥ 3.0, which was particularly important at higher behavioral noise levels.

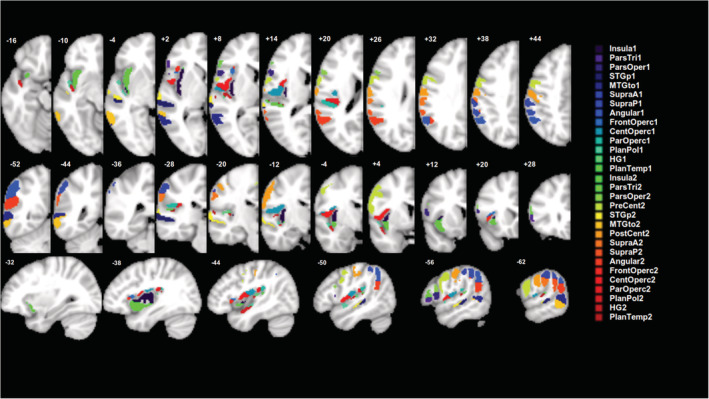

Synthetic behavioral scores (also called “artificial” or “fake” in the literature) for the single and dual anatomical target simulations were derived from the lesion load to the target anatomical parcels (or ROIs). For simple single target simulations, the synthetic behavioral score was calculated as the fraction of the target anatomical parcel that was spared (see next section for more details), that is the synthetic score was directly proportional to the lesion load of that anatomical parcel. Use of synthetic behavioral scores allows one to determine how well the different LSM methods are able to localize behavior, since we know the ground truth as to exactly which region in the brain it should localize to (i.e., the target anatomical parcel) (Pustina et al., 2018). To create target anatomical parcels, we used ROI masks of gray matter areas in the left middle cerebral artery region from FSL's version of the Harvard‐Oxford (H‐O) atlas, thresholded at 50% incidence. We used 16 such parcels that had 5% or greater lesioned area within at least 25% of the lesion masks. To create a set of smaller parcels, each of these 16 parcels was divided into two sections along the axis of maximal spatial extent. Two of the subdivided parcels failed to intersect the lesion mask sufficiently according to the above criteria, rendering a total of 30 smaller parcels (see Figure 2). We specifically chose larger ROIs (similar to Mirman et al., 2018), because small ROIs are unlikely to be accurately identified in patients who generally have much larger lesions than focal fMRI activation areas. Further, even assuming that fMRI properly delineates the size and location of specific functional areas, in the chronic stage of stroke recovery, these functional areas are likely to be altered by neural reorganization (Kiran, Meier, & Johnson, 2019; Stefaniak, Halai, & Ralph, 2019).

FIGURE 2.

Target anatomical parcels (n = 30) used to generate synthetic behavioral scores

2.5.1. Single anatomical target simulations

In the single anatomical target simulations, the synthetic behavioral data for each patient were calculated as one minus the lesion load (fraction of the target anatomical parcel covered by the patient's lesion), before noise was added. Accordingly, a score of 1 indicated “perfect performance”—complete sparing of the target parcel by the lesion, and a score of 0 indicated “complete impairment”—full coverage of the target parcel by the lesion. For the simulations, we varied the following factors in a fully crossed manner (obtaining all possible combinations of these factors):

Number of patients (n = 32, 48, 64, 80, 96, 112, 128). Additional simulations were run with n = 144–208 patients from the NorCal dataset for descriptive purposes only. Patients were randomly selected from one dataset on each run.

Behavioral noise level (0.00, 0.36, or 0.71 SD of normalized behavioral scores). Behavioral noise level was a fixed additive level of Gaussian noise at the specified fractional level of the mean across all target measures' SDs.

Lesion mask smoothing (0 mm [no smoothing] or 4 mm Gaussian FWHM). The smoothed mask values fell between 0 and 1, and the total mask weight was kept constant. All LSM methods could handle continuous values via regression.

Size of parcels (16 larger or 30 smaller as described above).

Anatomical target parcel (see Figure 2 for list).

2.5.2. Dual anatomical target simulations

In the dual anatomical target simulations, two spatially distinct target parcels were used to simulate a minimal brain “network.” We considered three different types of dual‐target networks:

Redundant network in which the behavioral score is reduced only if both target parcels are lesioned (one minus the minimum lesion load of the two parcels is used to generate the synthetic behavioral score).

Fragile network in which the behavioral score is reduced if either target is lesioned (one minus the maximal lesion load of the two parcels is used to generate the synthetic behavioral score; corresponds to what Pustina et al., 2018 called the “OR” rule for generating multiregion simulations and approximates the partial injury problem described in Rorden, Fridriksson, & Karnath, 2009).

Extended network in which the behavioral score is reduced proportionally to the overall damage to the two regions, which is similar to the single target simulation except the parcel is divided into two spatially separate components (one minus the average lesion load of the two parcels is used to generate the synthetic behavioral score; corresponds to what Pustina et al., 2018 called the “AND” rule).

For the dual‐target simulations, we only analyzed results with the larger 16 parcels, moderate behavioral noise level (0.36 SD), and lesion smoothing at 4 mm FWHM. We tested all 120 pairwise combinations of the target parcels for each type of network. The number of patients was varied systematically from n = 64 to 208. We did not use n = 32 or n = 48, because preliminary results showed a lack of power with this sample size. We only used the NorCal dataset for this analysis for consistency across these simulations.

2.5.3. False positive simulations

In the false positive simulation, the behavioral variable consisted of pure Gaussian white noise. Any clusters detected in this simulation are thus false positives. The number of patients included in the false positive simulation was systematically varied (from n = 32 to 128), along with lesion mask smoothing values (0 mm vs. 4 mm Gaussian FWHM). Given that a proper FWER correction for all methods was implemented in our study, false positive results for each method were produced in only 5% of trials in these simulations. Accordingly, given the parameters studied in order to characterize the false positive results in a jointly balanced manner we ended up running a very large number of simulations (~35,000). Subsequently, our evaluation of the performance of LSM methods is based only on the trials that actually generated a false positive solution for at least one of the methods, while all the other simulation runs (where no method produced a false positive result) were discarded. For trials with false positive results for a given method, we examined the spatial characteristics of the false positive clusters, including the size and number of clusters. We also evaluated which LSM methods produced false positive clusters in an interrelated fashion, in order to see how much independence the methods have from each other. This was accomplished by correlating false positive outcomes between different LSM methods by using an indicator (dummy) variable for each method. The indicator variable recorded when the method produced any above threshold result for a given noise simulation run (1 denoted any false positive result and 0 indicated that a blank map was returned).

2.5.4. Measures of LSM success in simulation analyses

As a proxy for statistical power in both single and dual‐target simulations, we calculated the percentage of trials that yielded any significant (above‐threshold) LSM statistical values (referred to here as the probability of obtaining a positive result).

To gauge the accuracy of an LSM method in our single and dual anatomical target simulations, we calculated two types of accuracy measures: distance and overlap. For each measure, the target anatomical parcel, whose lesion load was used to generate the specific synthetic behavioral score, was compared to the LSM output map (LSM thresholded statistical map). If an LSM method fully identified the underlying substrate, then the target parcel and the LSM output map should overlap perfectly. Our measures of accuracy were selected to provide a comprehensive evaluation of the precision of the different LSM methods.

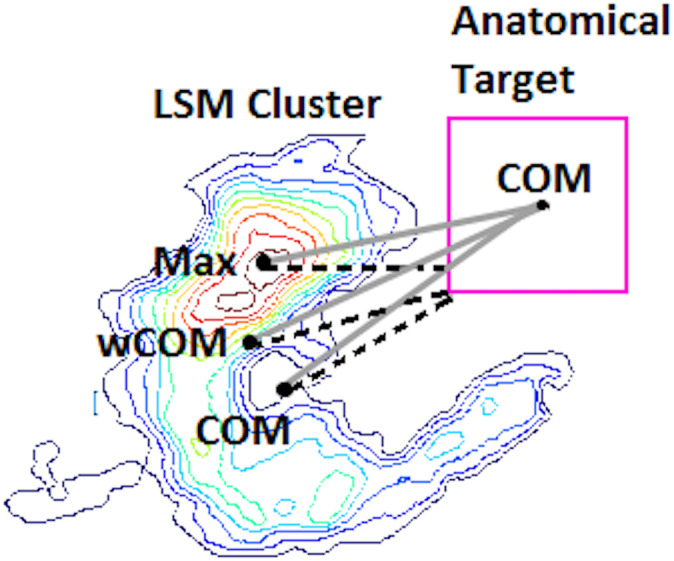

Distance‐based measures were used to compare a single target anatomical parcel to the LSM output. Three different indices of the LSM output map position were used: mean centroid location (COMLSM), mean centroid location weighted by statistical values (wCOMLSM), and maximum statistic location (MaxLSM). Likewise, two different indices of the target anatomical parcel position were employed: mean centroid location (center of mass; COMtarget), and nearest location to the LSM output map position (Closesttarget). This resulted in six possible measures used to evaluate the accuracy of mapping of single target location (i.e., distance between target parcel and LSM output). See Figure 3 for an illustration of these different indices. Distance‐based measures were not used for evaluation of dual‐target simulations, because distances between the target parcel and LSM output could not be calculated unambiguously in this instance.

FIGURE 3.

Visual representation of six possible distance‐based measures used to evaluate the accuracy of mapping in single target simulations. The lesion symptom mapping (LSM) map is represented as a contour heat map, and the hypothetical anatomical target parcel is a pink square on the right. Three different indices of the LSM output map position are used: mean centroid location (COMLSM), mean centroid location weighted by statistical values (wCOMLSM) and maximum statistic location (MaxLSM). The six distances are represented by distinct lines: solid gray for distances from the center of mass of the anatomical target (COMtarget) and dashed black for the nearest location to the LSM output map position (Closesttarget)

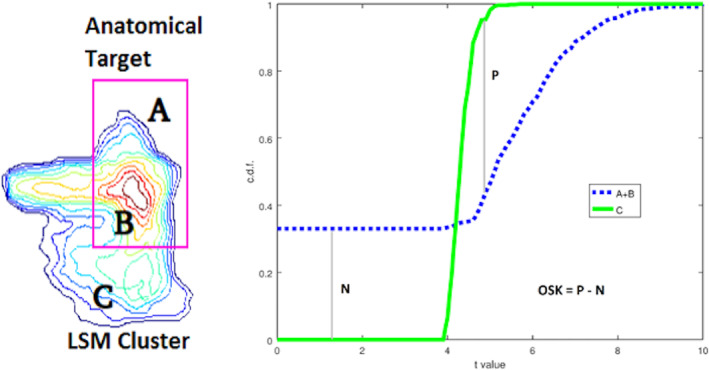

Overlap‐based measures included overlap and weighted overlap metrics between target anatomical parcel(s) and LSM output map. First, we used the dice coefficient, which is a simple measure of overlap between two binary maps, with 1 being perfect overlap between the two maps and with zero voxels falling outside of the overlapping area, and 0 being no overlap between the two (calculated as proportion of area 2B to the combined areas of the anatomical target and LSM cluster [A + 2B + C] in Figure 4). In addition, we also looked at the proportion of false negatives (part of the anatomical target not covered by statistically significant LSM values—A) and false positives (statistically significant LSM values falling outside the anatomical target—C) relative to the combined areas of the anatomical target and LSM cluster (A + 2B + C) to better understand what is driving specific dice values (see Figure 4). While the dice coefficient remains a standard means of measuring mapping accuracy, there are two clear limitations to this measure. First, the dice coefficient is greatly dependent on the size of the parcels being compared, with larger parcels generating a larger dice index compared to smaller regions, even when the relative overlap is smaller (Pustina et al., 2016). Second, the actual statistical values of the LSM output are not taken into account in the calculation of the dice index. To account for this latter limitation, we also computed a one‐sided Kuiper (OSK) distribution difference (Rubin, 1969) between statistically significant LSM values inside the target anatomical parcel(s) versus outside. This measure compares the LSM statistical values outside the target (C) to those inside the target (B) and it also assigns zero values to target areas not reaching threshold in the LSM output (A) (see Figure 4). The idea is that we want to reward finding LSM hotspots inside the anatomical target(s) while ignoring lower values in LSM clusters that are outside the target. OSK values range from −1 to 1 with 1 representing that the entire target is covered with LSM statistical values higher than all those outside the target; −1 representing that the lowest LSM values (or none at all) are inside the target, and 0 representing no difference in LSM statistic distributions inside versus outside the target anatomical parcel.

FIGURE 4.

One‐sided Kuiper (OSK) measure of weighted overlap. Left: The lesion symptom mapping (LSM) statistic values inside the target (B) are aggregated with 0's from areas inside the target not intersecting the LSM cluster (A) and both are compared with LSM values outside the target (C). Right: the cumulative distribution function (CDF) from LSM values Inside (A + B) vs. Outside (C) the target are compared and scored by subtracting the maximal CDF differences (P for C > A + B and N for C < A + B) from each other. To get a sense of how OSK values represent distributional separation, two 1‐dimensional standard Gaussian distributions being separated by d units obey the approximating equation OSK = 0.3778 • d—0.0092 • d 3, for d from [−4 to 4]

2.6. LSM analysis with real behavioral scores

In addition to the simulation analysis with synthetic behavioral scores, we also compared ULSM and MLSM methods using real behavioral scores. These data were generated from a subset of patients in the NorCal dataset (n = 168) who met specific inclusion criteria (described above in the participants section) and who were tested on speech fluency, single‐word auditory comprehension, and repetition subtests from the WAB (Kertesz, 1982, 2007). Since the aim of the current paper was to evaluate performance of different LSM methods with real behavioral data under typical conditions, we used a number of standard covariates. So in these analyses, in addition to lesion volume, we also covaried for age, education, gender, months poststroke (log‐transformed), test site (referring to one of the two locations where behavioral data were collected), image resolution (referring to the resolution of the original scan: low or high), and overall aphasia severity (WAB AQ minus the target subscore). The last covariate allowed us to account for overall aphasia severity, while focusing on the specific language function. Lesion smoothing was done at 4 mm FWHM. The minimum power per voxel was 0.25 at p < .001 for a d′ of 1.

We also performed 100 repetitions of the same 3 analyses but with 75% subsampling (n = 126) of the original full cohort in order to determine the stability of the solutions for each given LSM method. We considered the original LSM map generated with the full cohort (n = 168) to be the “target parcel” in this case and correspondingly analyzed the stability of the results obtained on subsequent runs (n = 126) with the same metrics we used to analyze the single target simulation results. We then tested whether there were significant differences between the different LSM methods with respect to the stability of the results produced.

2.7. Statistical analyses

Simulations with synthetic behavioral data were performed in a fully crossed manner: 6072 runs for the single target simulation and 10,800 runs for the dual‐target simulation, generating 13 LSM maps for each run. From each run of every LSM map, we collected four data types: presence of positive results (a binary indicator for the presence of any above threshold statistical values, no matter the location); overlap (dice scores of LSM map with anatomical target(s)); statistic‐weighted overlap (OSK, Figure 4), and, for the single target case, six distance measures (Figure 3) between the LSM map cluster(s) and the anatomical target.

Analysis of important differences between each simulation's factors was established using mixed between/within analysis of variance (ANOVA) applied to each fully crossed dataset type where we treated anatomical target parcel location as the random factor. We used high‐speed ANOVA software (CLEAVE, nitrc.og.projects/cleave) to compute partial omega squared) effect sizes (Olejnik & Algina, 2004) for factors and first‐order interactions. Typically, weak, moderate, and strong partial omega effect sizes are taken to be 0.2, 0.5, and 0.8, respectively (Keppel, 1991). We used partial omega squared cutoff of 0.35 to restrict ourselves to reporting moderate or strong effects (unless otherwise noted). Finally, we note that the distance, dice, and OSK values often did not conform to a Gaussian distribution as required by ANOVA. In these instances, we used a Kumaraswamy distribution (Jones, 2009) to model and then transform datasets into an approximately symmetric unimodal form (α = β = 2) prior to ANOVA. In the presentation of results, we focus specifically on the effect of different LSM methods on the outcomes and their interactions with other factors. Additionally, we also looked at the different distance‐based spatial accuracy metrics as factors and explored whether they yielded similar accuracy estimates or not. In the results, we concentrate specifically on effect sizes rather than significance testing, given that large‐scale simulations produce so much data, that even tiny differences can be significant (Kirk, 2007; Schmidt & Hunter, 1995; Stang, Poole, & Kuss, 2010).

The same ANOVA analyses were performed on the subsample analysis of the real behavioral data by using the full analysis LSM maps as targets for their respective LSM variant (e.g., SVR target for SVR LSM analysis). The only addition was that we included the maximum statistic location of the target ROI as another centroid to anchor three more distances per LSM map, because these target ROIs (the n = 168 maps) already have statistics.

3. RESULTS

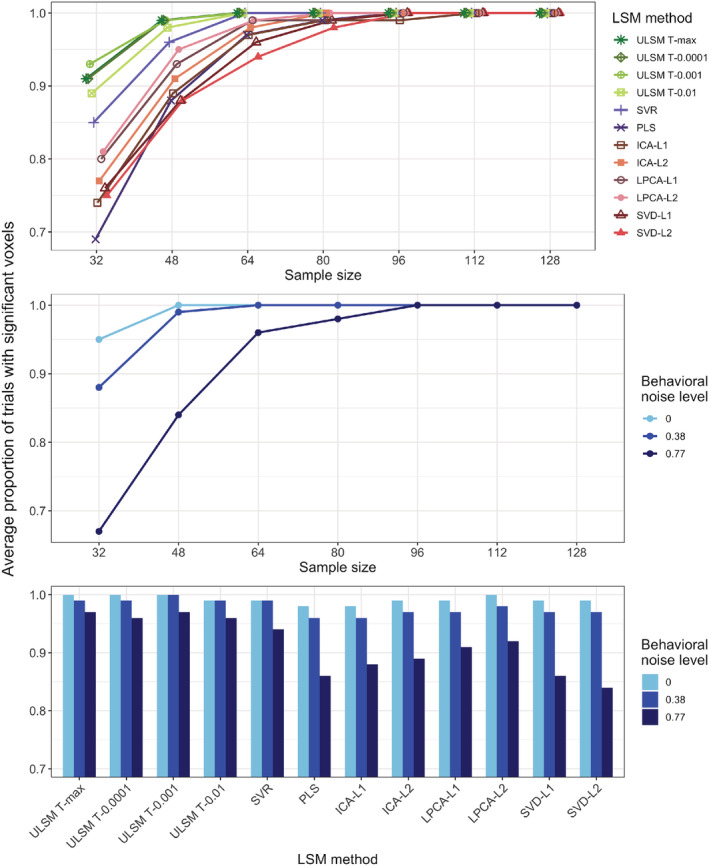

3.1. Single anatomical target simulations

3.1.1. Probability of positive results

For single anatomical target simulations, we first evaluated the probability of obtaining positive results (significant voxels) for the different LSM methods (see Table 1 for mean simulation values across all factor levels). Overall, all LSM methods demonstrated probability of positive results greater than 80% with a sample size of 48 or larger, but the probability varied substantially across LSM methods (= .71). ULSM methods demonstrated the greatest probability to detect significant voxels for smaller sample sizes, while MLSM methods required on average 10–20 more participants to achieve comparable levels of positive results up to a sample size of 80, when all methods reached close to 100% probability of obtaining positive results. See Appendix A for additional information on this analysis for single target simulations (Figure A1).

TABLE 1.

Mean simulation values for all manipulated factors for single anatomical target simulations: probability of obtaining a positive result, as a percentage of trials where significant voxels were detected (positive result [%]), average displacement error across all distance‐based metrics (displacement [mm]), Dice index, and OSK distribution statistic (OSK statistic: −1 worst to +1 best)

| LSM method | ULSM T‐max | ULSM T‐nu = 125 | ULSM T‐0.0001 | ULSM T‐0.001 | ULSM T‐0.01 | SVR | PLS | ICA‐L1 | ICA‐L2 | LPCA‐L1 | LPCA‐L2 | SVD‐L1 | SVD‐L2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Positive result (%) | 98.4 | — a | 98.5 | 98.8 | 98.1 | 97.2 | 93.2 | 93.9 | 95.0 | 95.8 | 96.4 | 93.9 | 93.3 |

| Displacement (mm) | 6.1 | 6.7 | 6.4 | 6.9 | 7.6 | 6.0 | 12.9 | 12.3 | 13.1 | 12.3 | 11.4 | 8.3 | 8.0 |

| Dice index | 0.12 | 0.08 | 0.09 | 0.07 | 0.05 | 0.14 | 0.03 | 0.05 | 0.04 | 0.03 | 0.03 | 0.04 | 0.05 |

| OSK statistic | 0.43 | 0.56 | 0.52 | 0.60 | 0.68 | 0.29 | 0.54 | 0.20 | 0.35 | 0.59 | 0.61 | 0.65 | 0.66 |

| Sample size | 32 | 48 | 64 | 80 | 96 | 112 | 128 | 208 b |

|---|---|---|---|---|---|---|---|---|

| Positive result (%) | 82.9 | 94 | 98.4 | 99.4 | 99.8 | 99.9 | 99.9 | 100 |

| Displacement (mm) | 10.2 | 9.4 | 9.0 | 8.9 | 8.8 | 8.7 | 8.7 | 9.8 |

| Dice index | 0.10 | 0.08 | 0.07 | 0.06 | 0.05 | 0.05 | 0.05 | 0.03 |

| OSK statistic | −0.10 | 0.36 | 0.50 | 0.57 | 0.63 | 0.67 | 0.71 | 0.74 |

| Behavioral noise level | 0 | 0.38 | 0.77 |

|---|---|---|---|

| Positive result (%) | 99.2 | 97.9 | 91.9 |

| Displacement (mm) | 8.9 | 9.1 | 9.2 |

| Dice index | 0.05 | 0.06 | 0.08 |

| OSK statistic | 0.62 | 0.55 | 0.37 |

| Mask smoothness | 0 mm | 4 mm |

|---|---|---|

| Positive result (%) | 96.3 | 96.4 |

| Displacement (mm) | 9.2 | 8.9 |

| Dice index | 0.06 | 0.05 |

| OSK statistic | 0.49 | 0.54 |

| Number of parcels | 16 | 30 |

|---|---|---|

| Positive result (%) | 96.4 | 96.3 |

| Displacement (mm) | 9.4 | 8.9 |

| Dice index | 0.08 | 0.05 |

| OSK statistic | 0.38 | 0.59 |

| Anatomical parcels | Mean | SD | Max | Min | Quart 1 | Quart 3 |

|---|---|---|---|---|---|---|

| Positive result (%) | 96.3 | 2.7 | 99.6 | 88 | 95.3 | 98.3 |

| Displacement (mm) | 9.1 | 2.6 | 15.0 | 5.0 | 6.3 | 10.7 |

| Dice index | 0.06 | 0.04 | 0.14 | 0.01 | 0.03 | 0.09 |

| OSK statistic | 0.51 | 0.27 | 0.82 | −0.37 | 0.38 | 0.70 |

Abbreviations: ICA, independent component analysis; LPCA, logistic principal component analysis; LSM, lesion symptom mapping; MLSM, multivariate lesion‐symptom mapping; OSK, one‐sided Kuiper; PLS, partial least squares; ULSM, univariate lesion‐symptom mapping; SVD, singular value decomposition; SVR, support vector regression.

Probability of obtaining a positive result not calculated due to a technical error.

Indices provided for informational purposes only and not included in the statistical analyses.

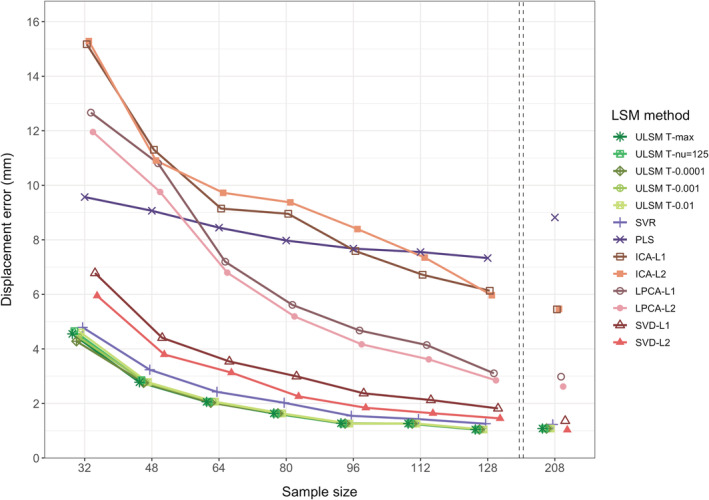

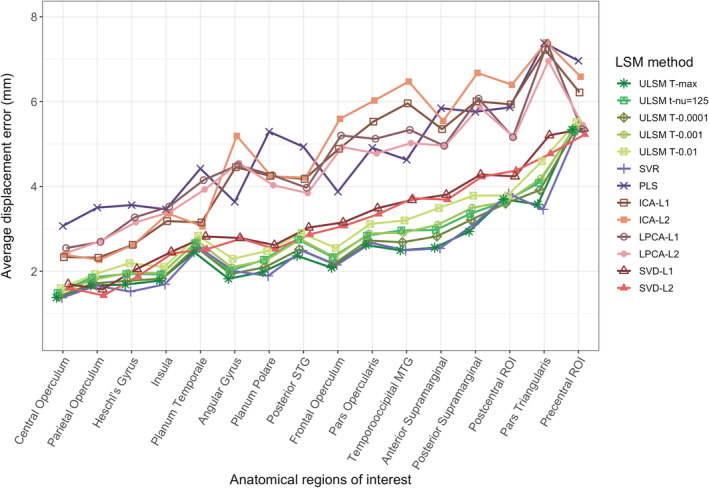

3.1.2. Spatial accuracy using distance‐based metrics

The spatial accuracy of the different LSM methods using distance‐based metrics was evaluated (see Table 1 for mean displacement error values). There was a main effect of LSM Method on accuracy ( = .84). ULSM methods demonstrated numerically higher mean accuracy across all output map locations and target locations, with the average displacement error ranging from 6 to 7.5 mm. Average displacement error across MLSM methods (excepting SVR) ranged from 8 to 13 mm. Across sample sizes, the conservative ULSM methods (T‐max and T‐nu = 125) and SVR produced the most accurate maps. Figure 5 shows the spatial displacement error based on mean MaxLSM to Closesttarget (these two metrics in combination provide the highest accuracy estimates; more on this below) of the different LSM methods at different sample sizes. As can be seen in Figure 5, spatial displacement error varied as a function of sample size across all LSM methods, but the interaction between Sample Size and LSM Method did not reach the preselected effect size. Overall, larger Sample Size ( = .63) and Mask Smoothing ( = .36) had independent positive effects on the accuracy of all LSM methods. Additionally, accuracy of LSM methods varied across the different anatomical parcels: The Rolandic operculum was detected most accurately, and the post central region was detected least accurately. See Figure 6 for displacement error across 16 anatomical parcels and LSM methods. Figure 7 shows LSM output maps for two regions (planum temporale and pars triangularis) across different LSM methods.

FIGURE 5.

Displacement (in mm) of lesion symptom mapping (LSM) output map position for single target simulations across different LSM methods at different sample sizes calculated as the average distance between maximum statistic location (MaxLSM) and nearest location on the target parcel to the LSM output map (Closesttarget). The left side of this figure focuses on small sample sizes as most representative of typical LSM studies, since minimal improvements in accuracy are observed for samples larger than 128

FIGURE 6.

Average displacement error (mean of all distance‐based metrics) of lesion symptom mapping (LSM) output map position for single target simulations across LSM methods for different anatomical parcels. Anatomical parcels are presented left to right from most accurately detected to least accurately detected

FIGURE 7.

Left side: Lesion symptom mapping (LSM) output maps for single target simulations across all LSM methods for planum temporale (one of the most accurately detected regions) and pars triangularis (one of the least accurately detected regions) for a sample size of 64 (typical for single target simulations), medium behavioral noise level with lesion mask smoothing (4 mm). Right side: LSM output maps for dual‐target simulations for these two regions for the three network types (redundant, fragile, extended) for a sample size of 112, medium behavioral noise level, and lesion mask smoothing (4 mm). On all the LSM maps, the location of the target is denoted by a black circle placed at the center of mass of the corresponding anatomical parcel(s) generating the synthetic score. The circle is used for visualization purposes only

As expected, the average spatial displacement error varied significantly depending on which metric was used for determining LSM output map location ( = .62; COMLSM, wCOMLSM, MaxLSM) or target location ( = .82; COMtarget, Closesttarget). Use of MaxLSM as the measure of LSM output map and Closesttarget as the measure of target parcel location led to the highest accuracy estimates. Additionally, measures of LSM output map location strongly interacted with behavioral noise level ( = .81), sample size ( = .81), and mask smoothing ( = .54). With higher behavioral noise levels, mean centroid location measurements (both COMLSM and wCOMLSM) improved, while accuracy measured via MaxLSM decreased. wCOMLSM was the most stable across all noise levels. With larger sample size, spatial accuracy improved, particularly, as indexed by the MaxLSM measure. Lesion mask smoothing was most beneficial for the MaxLSM measure. Tables with the mean simulation values for these analyses and further information on different distance‐based metrics can be found in Appendix A (Tables A1, A2, A3).

An additional post hoc analysis was run with smaller‐sized anatomical targets (n = 30) and the highest behavioral noise level (0.71 SD), with and without lesion size as a covariate. Using lesion size as a covariate resulted in higher spatial accuracy of LSM output maps across all sample sizes and all LSM methods ( = .87, mean displacement of 8.6 mm vs. 12.1 mm without it). The effect of covarying for lesion size varied as factor of LSM Method ( = .43) with MLSM methods showing more improvement in spatial accuracy (3–8 mm) relative to ULSM methods (2–3 mm) with the lesion size covariate (see Appendix A, Table A4).

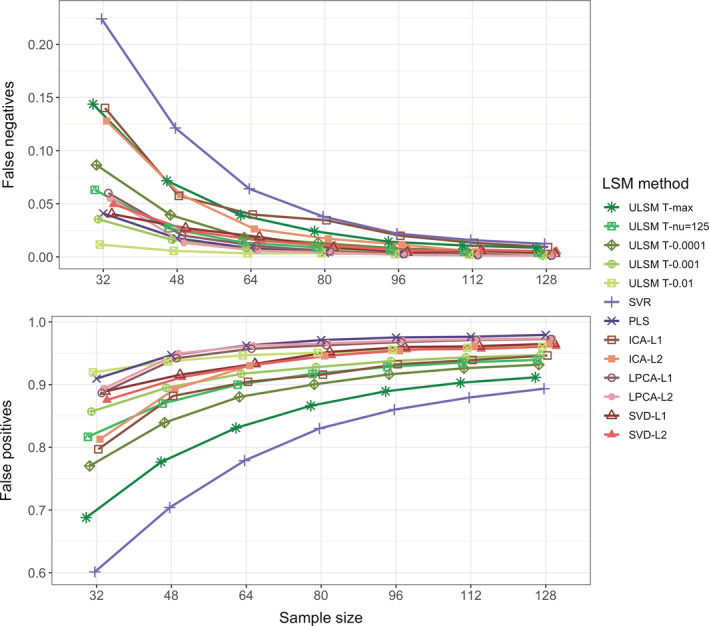

3.1.3. Spatial accuracy with overlap‐based metrics

Spatial accuracy as measured by dice coefficient values varied significantly across LSM Methods ( = .93). According to this measure ULSM (T‐max and T‐nu = 125) and SVR had the highest spatial accuracy. However, the mean dice index values were very low across all LSM methods (ranging from .17 to .02; see Table 1), rendering the dice coefficient relatively uninformative as a metric for evaluating overlap between LSM output map and the target anatomical parcel. Behavioral Noise Level ( = .86), Sample Size ( = .79), and Mask Smoothing ( = .41) all affected the spatial accuracy as measured by dice coefficients. Generally, dice coefficients were larger (more accurate) with increased behavioral noise levels and no mask smoothing but decreased with larger sample sizes as the significant clusters became larger and extended beyond the target parcel. First‐order interactions between LSM method × sample size ( = .49) and LSM method × behavioral noise level ( = .46) were significant (Figure 8).

FIGURE 8.

Dice index as a function of lesion symptom mapping (LSM) method and sample size (top panel) or behavioral noise level (bottom panel) for single target simulations

As a post hoc analysis to check whether our low dice index values may be the result of using thin, small volume ROIs, we correlated the overall mean dice coefficient values for each ROI with the volume of the ROI itself (using all the small and large ROIs [n = 46] from the single target simulations above). We found a large positive Pearson correlation (r = .88, p < .001), suggesting that indeed our dice coefficient values would have been higher if larger ROIs were used, such as the AAL atlas areas used by Sperber, Wiesen, and Karnath (2019).

Also, we looked at the proportion of false negatives and false positives in the LSM output across LSM methods and sample sizes (Figure 9). With increase in sample size, we observed a sharp decline in the proportion of false negatives across all LSM methods, with sparser methods showing greater changes. However, for false positives an inverse effect was seen: the proportion of false positives in LSM output increased across sample sizes, and overall showed more change than the false negatives effects. ULSM T‐max and SVR demonstrated the lowest rates of false positives across sample sizes. Thus, the observed decline in the dice coefficient values was largely driven by greater increase in the proportion of false positives in the LSM output.

FIGURE 9.

Proportion of false negatives (part of the anatomical target not covered by statistically significant lesion symptom mapping [LSM] values) shown in the top panel and false positives (statistically significant LSM values falling outside the anatomical target) shown in the bottom panel. Proportions are calculated relative to the combined areas of the anatomical target and LSM cluster as a function of LSM method and sample size for single target simulations

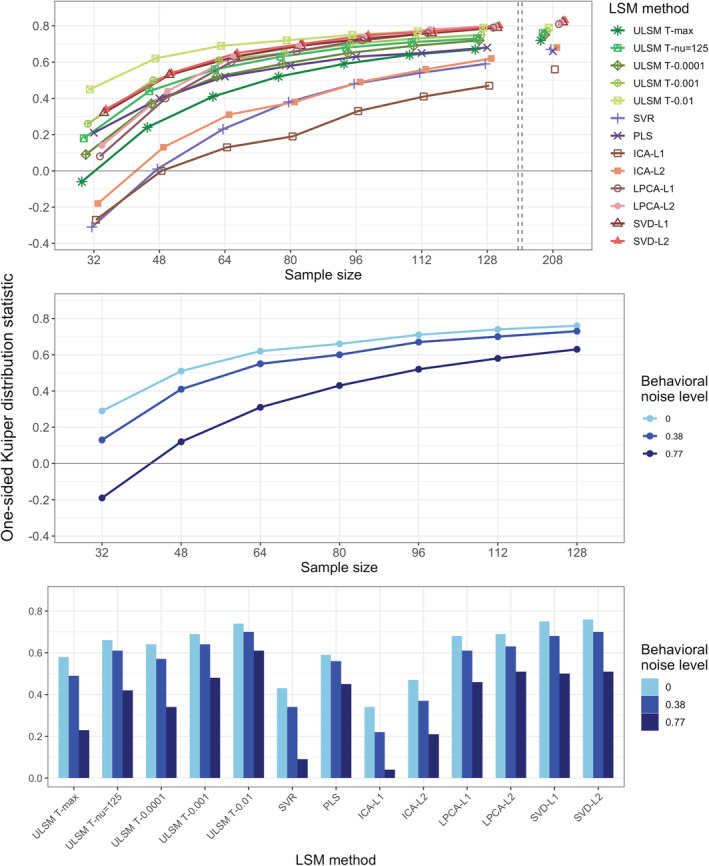

In contrast to the dice coefficient values, OSK distribution values were substantially higher and showed higher variability across factor levels, rendering it more useful than the dice coefficient for evaluation of spatial accuracy (see Table 1). The OSK distribution statistic greatly varied across different LSM methods ( = .85). Liberal ULSM methods (ULSM T‐0.01 and T‐0.001) and SVD showed the highest OSK values, with the advantage being particularly evident with smaller sample sizes. Behavioral noise level ( = .96), sample size ( = .95), and Mask Smoothing ( = .80) all had a strong effect on the OSK statistic. Larger sample sizes, lower noise levels, and mask smoothing resulted in higher OSK distribution statistic values. First‐order interactions between these factors also demonstrated moderate effect sizes ( ranged from .36 to .50). LSM methods showed varying degrees of improvement as sample size increased (Figure 10, top panel). ICA‐L1, ICA‐L2, SVR, and ULSM T‐max performed the worst at small sample sizes but showed the most improvement with increased sample sizes, although still performing below the remaining LSM methods. Higher behavioral noise levels required larger sample sizes to achieve similar levels of accuracy (Figure 10, middle panel). Further, different LSM methods demonstrated variable susceptibility to behavioral noise, with sparser solutions showing higher susceptibility to behavioral noise (more pronounced decrease in OSK values) (Figure 10, bottom panel).

FIGURE 10.

One‐sided Kuiper (OSK) distribution statistic as a function of the lesion symptom mapping (LSM) method and sample size (top panel), behavioral noise level and sample size (middle panel) or LSM method (bottom panel) for single target simulations

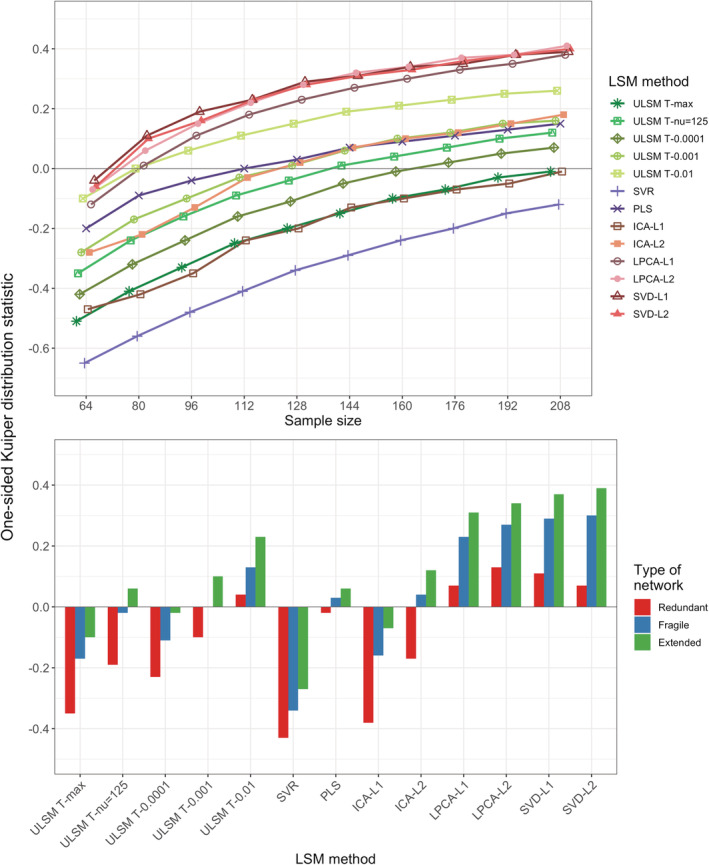

3.2. Dual anatomical target simulations

Dual‐target simulations were run on three network types: redundant, fragile, and extended. LSM Method, sample size and type of network all had a strong effect on the probability of obtaining a positive result ( ranged from .80 to .86; see Table 2 for mean simulation values). A sample size of 64 or larger was required to achieve a probability of 80% for all three network types. Two‐way interactions between these factors demonstrated medium to large effect size ( ranged from .36 to .50) (see Appendix A, Tables A5 and A6 for relevant data). The Redundant network was the hardest to detect for all LSM methods, requiring a much larger sample size to obtain probability levels similar to that of the fragile and extended networks.

TABLE 2.

Mean simulation values for all manipulated factors for dual anatomical target simulations: probability of obtaining a positive result, as a percentage of trials where significant voxels were detected (positive result [%)], Dice index, and OSK distribution statistic (OSK statistic: −1 worst to +1 best)

| LSM method | ULSM T‐max | ULSM T‐nu = 125 | ULSM T‐0.0001 | ULSM T‐0.001 | ULSM T‐0.01 | SVR | PLS | ICA‐L1 | ICA‐L2 | LPCA‐L1 | LPCA‐L2 | SVD‐L1 | SVD‐L2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Positive result (%) | 98.6 | — a | 98.7 | 99 | 99.1 | 98.5 | 96.7 | 97.4 | 98.4 | 98.2 | 98.6 | 95.9 | 95.7 |

| Dice index | 0.13 | 0.11 | 0.12 | 0.11 | 0.09 | 0.14 | 0.05 | 0.07 | 0.06 | 0.06 | 0.07 | 0.07 | 0.07 |

| OSK statistic | −0.21 | −0.05 | −0.12 | 0 | 0.13 | −0.34 | 0.02 | −0.2 | 0 | 0.2 | 0.25 | 0.26 | 0.25 |

| Sample size | 64 | 80 | 96 | 112 | 128 | 144 | 160 | 176 | 192 | 208 |

|---|---|---|---|---|---|---|---|---|---|---|

| Positive result (%) | 92.8 | 95.8 | 97.6 | 98.2 | 98.8 | 99.1 | 99.3 | 99.7 | 99.7 | 99.8 |

| Dice index | 0.1 | 0.1 | 0.09 | 0.09 | 0.09 | 0.09 | 0.08 | 0.08 | 0.08 | 0.08 |

| OSK statistic | −0.27 | −0.17 | −0.09 | −0.02 | 0.03 | 0.08 | 0.11 | 0.13 | 0.16 | 0.18 |

| Type of network | Redun‐dant | Fragile | Exten‐ded |

|---|---|---|---|

| Positive result (%) | 94.7 | 99.7 | 99.8 |

| Dice index | 0.09 | 0.09 | 0.09 |

| OSK statistic | −0.11 | 0.04 | 0.12 |

| Anatomical parcels | Mean | SD | Max |

|---|---|---|---|

| Positive result (%) | 98.1 | 0.1 | 99.5 |

| Dice index | 0.09 | 0.02 | 0.12 |

| OSK statistic | 0.01 | 0.2 | 0.22 |

Abbreviations: ICA, independent component analysis; LPCA, logistic principal component analysis; LSM, lesion symptom mapping; MLSM, multivariate lesion symptom mapping; OSK, one‐sided Kuiper; PLS, partial least squares; SVD, singular value decomposition; SVR, support vector regression; ULSM, univariate lesion‐symptom mapping.

Probability of obtaining a positive result not calculated due to a technical error.

Spatial accuracy of the detected networks (as determined by the OSK overlap measure) greatly varied based on the LSM method ( = .95). SVD and LPCA (both L1 and L2), as well as ULSM T‐0.01 showed consistently positive values (implying that values inside the target parcels were higher than outside) for sample size greater than 80 (Figure 11, top panel). Overall, OSK values were much lower for the dual‐target simulations. All LSM methods were numerically less accurate in detecting anatomical networks compared to the single target situation (average OSK across all LSM methods of 0.01 for networks vs. 0.5 for single targets; for best performing MLSM method OSK of 0.25 vs. 0.66, respectively). Effect size for sample size ( = .98), Type of Network ( = .84), and first‐order interactions of LSM method × type of network ( = .54) and LSM method × sample size ( = .61) were also large. The extended network resulted in the highest spatial accuracy, and the redundant network resulted in the lowest spatial accuracy across all LSM methods (Figure 11, bottom panel). A similar pattern was observed for the dice coefficient values, although with significantly smaller values and smaller variation between LSM methods (see Appendix A, Table A7). Patterns in the proportion of false negatives and false negatives across sample sizes were similar to those observed in single target simulations (see Appendix A, Table A8). See also Figure 7 for LSM output maps for the three network types for planum temporale and pars triangularis regions across different LSM methods.

FIGURE 11.

One‐sided Kuiper (OSK) weighted‐overlap statistic as a function of the lesion symptom mapping (LSM) method and sample size (top panel) or type of network (bottom panel) for dual‐target simulations

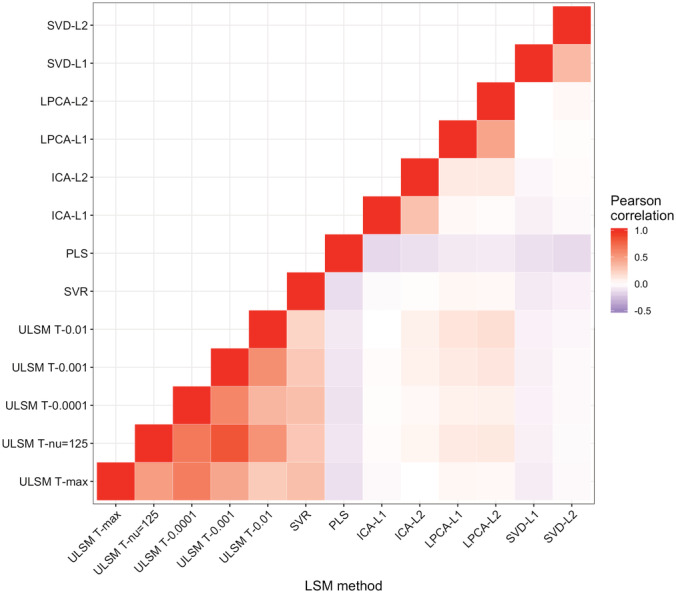

3.3. False positive simulations

For the false positive simulations, SVR and ULSM T‐max performed best, yielding the smallest number of clusters with the smallest number of voxels in those 5% of trials when a false positive solution was produced (see Table 3). Sample size and mask smoothing did not have a significant impact on the characteristics of false positive solutions. A Pearson correlation analysis of false positive outcomes (whether a false positive solution was present or not) between different LSM methods showed that false positive results were not produced consistently across different LSM methods. The correlation of false positive outcomes was lowest between ULSM and MLSM methods (Figure 12). In particular, PLS and SVD had low correlations with the other methods.

TABLE 3.

Mean values of above‐threshold (significant) cluster characteristics in false positive simulations for the 5% of trials for each LSM method that yielded false positive results

| LSM method | Number of clusters | Total number of voxels |

|---|---|---|

| T‐max | 1.5 | 17 |

| T‐nu = 125 | 4.6 | 312 |

| T‐0.0001 | 1.2 | 79 |

| T‐0.001 | 1.0 | 473 |

| T‐0.01 | 1.0 | 2,429 |

| SVR | 1.5 | 17 |

| PLS | 5.5 | 2,706 |

| ICA‐L1 | 4.7 | 644 |

| ICA‐L2 | 5.3 | 712 |

| LPCA‐L1 | 5.4 | 1,603 |

| LPCA‐L2 | 5.2 | 1957 |

| SVD‐L1 | 7.1 | 903 |

| SVD‐L2 | 7.5 | 982 |

Abbreviations: ICA, independent component analysis; LPCA, logistic principal component analysis; LSM, lesion symptom mapping; PLS, partial least squares; SVD, singular value decomposition; SVR, support vector regression.

FIGURE 12.

Pearson correlations between false positive outcomes across different lesion symptom mapping (LSM) methods

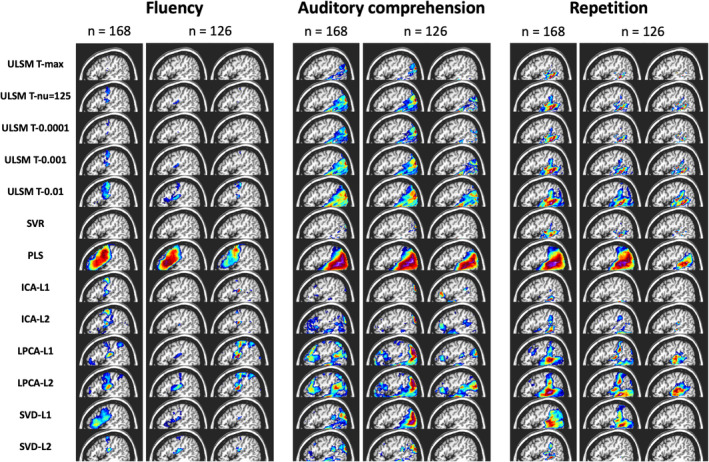

3.4. LSM analysis of real behavioral data

We next compared results across the different LSM methods, using real behavioral data collected from 168 patients from the NorCal dataset. The behavioral data consisted of WAB language subscores for speech fluency, single‐word auditory comprehension, and verbal repetition (Kertesz, 1982, 2007).

Results showed distinct areas identified for different behavioral scores, such as frontal regions for speech fluency and posterior temporal regions for single word comprehension (see Figure 13 for LSM maps across methods). However, as can be seen in Figure 13, substantial variability in the identified regions was observed between different LSM methods. MLSM methods tended to identify more regions, including regions not typically associated with the behavior (e.g., frontal regions for single‐word comprehension). In contrast, ULSM maps typically clustered around the maximum statistic location more traditionally associated with the behavior (e.g., posterior temporal cortex for single‐word comprehension). The methods that provided more sparse solutions (smaller LSM clusters) showed better differentiation between different behaviors under examination (e.g., conservative ULSM methods and ICA).

FIGURE 13.

Results of lesion symptom mapping (LSM) analysis of real behavioral data across all LSM methods. For each LSM method, output maps are presented for the three language variables under examination (speech fluency, single‐word auditory comprehension, verbal repetition): for the full sample (n = 168) and two random subsamples (n = 126)

Since there is no ground truth in the case of real behavioral data, we evaluated accuracy in terms of the stability of the obtained solutions using a random subsampling approach for n = 126 (using the full sample as the target map). The LSM maps produced in the subsample analysis varied between themselves and often clearly differed from the full sample map (see again Figure 13). Stability varied substantially across LSM techniques, as shown by spatial metrics averaged across the 100 subsample analyses and by the average correlation between the full LSM output and each of the subsample analyses (see Table 4). The methods that provided more dense solutions (larger LSM clusters) tended to show higher stability of results (e.g., liberal ULSM methods and PLS). Moderately conservative ULSM methods showed higher stability of results compared to MLSM as indicated by lower average displacement and higher OSK statistic, as well as higher correlations.

TABLE 4.

Mean subsampling analyses results: probability of obtaining positive results, as percentage of trials where significant voxels were detected (positive results [%]), average displacement error across all distance metrics (displacement average [mm]), average distance between maximum statistic and closest voxel within the target ROI (displacement of MaxLSM [mm]), Dice index, OSK distribution statistic (OSK statistic: −1 worst to +1 best), and average correlation between the full sample and the subsampling LSM maps (correlation)

| LSM method | ULSM T‐max | ULSM T‐nu = 125 | ULSM T‐0.0001 | ULSM T‐0.001 | ULSM T‐0.01 | SVR | PLS | ICA‐L1 | ICA‐L2 | LPCA‐L1 | LPCA‐L2 | SVD‐L1 | SVD‐L2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Positive results (%) | 100 | — a | 100 | 100 | 100 | 100 | 100 | 94.3 | 99 | 98.7 | 100 | 91.3 | 76.7 |

| Displacement average (mm) | 10.7 | 10.1 | 10.4 | 10.1 | 9.9 | 11.6 | 8.1 | 17.2 | 15.2 | 14.1 | 12.7 | 12.2 | 12.1 |

| Displacement of MaxLSM (mm) | 1 | 0.6 | 0.8 | 0.6 | 0.5 | 1.4 | 0.7 | 2.5 | 1.3 | 1.2 | 1.9 | 0.8 | 2.6 |

| Dice index | 0.47 | 0.69 | 0.6 | 0.73 | 0.83 | 0.44 | 0.89 | 0.2 | 0.34 | 0.54 | 0.67 | 0.57 | 0.32 |

| OSK statistic | −0.47 | −0.16 | −0.3 | −0.08 | 0.2 | −0.43 | 0.6 | −0.79 | −0.69 | −0.38 | −0.16 | −0.27 | −0.11 |

| Correlation | 0.1 | 0.31 | 0.19 | 0.37 | 0.57 | −0.03 | 0.86 | −0.33 | 0.02 | 0.03 | 0.32 | 0.29 | 0.06 |

Abbreviations: ICA, independent component analysis; LPCA, logistic principal component analysis; LSM, lesion symptom mapping; OSK, one‐sided Kuiper; PLS, partial least squares; SVD, singular value decomposition; SVR, support vector regression; ULSM, univariate lesion symptom mapping.

Probability of obtaining positive results not calculated due to a technical error.