Abstract

Dehumanization is a pernicious psychological process that often leads to extreme intergroup bias, hate speech, and violence aimed at targeted social groups. Despite these serious consequences and the wealth of available data, dehumanization has not yet been computationally studied on a large scale. Drawing upon social psychology research, we create a computational linguistic framework for analyzing dehumanizing language by identifying linguistic correlates of salient components of dehumanization. We then apply this framework to analyze discussions of LGBTQ people in the New York Times from 1986 to 2015. Overall, we find increasingly humanizing descriptions of LGBTQ people over time. However, we find that the label homosexual has emerged to be much more strongly associated with dehumanizing attitudes than other labels, such as gay. Our proposed techniques highlight processes of linguistic variation and change in discourses surrounding marginalized groups. Furthermore, the ability to analyze dehumanizing language at a large scale has implications for automatically detecting and understanding media bias as well as abusive language online.

Keywords: computational sociolinguistics, dehumanization, lexical variation, language change, media, New York Times, LGBTQ

1. Introduction

Despite the American public's increasing acceptance of LGBTQ people and recent legal successes, LGBTQ individuals remain targets of hate and violence (Dinakar et al., 2012; Silva et al., 2016; Gallup, 2019). At the core of this issue is dehumanization, “the act of perceiving or treating people as less than human” (Haslam and Stratemeyer, 2016), a process that heavily contributes to extreme intergroup bias (Haslam, 2006). Language is central to studying this phenomenon; like other forms of bias (Wiebe et al., 2004; Greene and Resnik, 2009; Recasens et al., 2013; Voigt et al., 2017; Breitfeller et al., 2019), dehumanizing attitudes are expressed through subtle linguistic manipulations, even in carefully-edited texts. It is crucial to understand the use of such linguistic signals in mainstream media, as the media's representation of marginalized social groups has far-reaching implications for social acceptance, policy, and safety.

While small-scale studies of dehumanization and media representation of marginalized communities provide valuable insights (e.g., Esses et al., 2013), there exist no known large-scale analyses, likely due to difficulties in quantifying such a subjective and multidimensional psychological process. However, the ability to do large-scale analysis is crucial for understanding how dehumanizing attitudes have evolved over long periods of time. Furthermore, by being able to account for a greater amount of media discourse, large-scale techniques can provide a more complete view of the media environment to which the public is exposed.

Linguistics and computer science offer valuable methods and insights on which large-scale techniques might be developed for the study of dehumanization. By leveraging more information about the contexts in which marginalized groups are discussed, computational linguistic methods enable large-scale study of a complex psychological phenomenon and can even reveal linguistic variations and changes not easily identifiable through qualitative analysis alone.

We develop a computational linguistic framework for analyzing dehumanizing language, with a focus on lexical signals of dehumanization. Social psychologists have identified numerous components of dehumanization, such as negative evaluations of a target group, denial of agency, moral disgust, and likening members of a target group to non-human entities, such as vermin. Drawing upon this rich body of literature, we first identify linguistic analogs for these components and propose several computational techniques to measure these linguistic correlates. We then apply this general framework to explore changing representations of LGBTQ groups in the New York Times over 30 years and both qualitatively and quantitatively evaluate our techniques within this case study. We additionally use this lens of dehumanization to investigate differences in social meaning between the denotationally-similar labels gay and homosexual. We focus on a single case study in order to conduct an in-depth analysis of our methodology, but our framework can generalize to study representations of other social groups, which we briefly explore in our discussion.

This paper aims to bridge the gaps between computational modeling, sociolinguistics, and dehumanization research with implications for several disciplines. In addition to enabling large-scale studies of dehumanizing language and media representation of marginalized social groups, these techniques can be built into systems that seek to capture both conscious and unconscious biases in text. Furthermore, this work has implications for improving machines' abilities to automatically detect hate speech and abusive language online, which are typically underpinned by dehumanizing language. Finally, our case study demonstrates that such computational analyses of discussions about marginalized groups can provide unique insights into language variation and change within sensitive sociopolitical contexts, and help us understand how people (and institutions) use language to express their ideologies and attitudes toward certain social groups.

Trigger Warning: this paper contains offensive material that some may find upsetting, especially in Table 4 and Table 7.

2. Background

2.1. Dehumanization

Our lexical semantic analysis involves quantifying linguistic correlates of component psychological processes that contribute to dehumanization. Our approaches are informed by social psychology research on dehumanization, which is briefly summarized here. Prior work has identified numerous related processes that comprise dehumanization (Haslam, 2006). One such component is likening members of the target group to non-human entities, such as machines or animals (Haslam, 2006; Goff et al., 2008; Kteily et al., 2015). By perceiving members of a target group to be non-human, they are “outside the boundary in which moral values, rules, and considerations of fairness apply” (Opotow, 1990), which thus leads to violence and other forms of abuse. Metaphors and imagery relating target groups to vermin are particularly insidious and played a prominent role in the genocide of Jews in Nazi Germany and Tutsis in Rwanda (Harris and Fiske, 2015). More recently, the vermin metaphor has been invoked by the media to discuss terrorists and political leaders of majority-Muslim countries after September 11 (Steuter and Wills, 2010). According to Tipler and Ruscher (2014), the vermin metaphor is particularly powerful because it conceptualizes the target group as “engaged in threatening behavior, but devoid of thought or emotional desire.”

Disgust underlies the dehumanizing nature of these metaphors and is itself another important element of dehumanization. Disgust contributes to members of target groups being perceived as less-than-human and of negative social value (Sherman and Haidt, 2011). It is often evoked (both in real life and experimental settings) through likening a target group to animals. Buckels and Trapnell (2013) find that priming participants to feel disgust facilitates “moral exclusion of out-groups.” Experiments by Sherman and Haidt (2011) and Hodson and Costello (2007) similarly find that disgust is a predictor of dehumanizing perceptions of a target group. Both moral disgust toward a particular social group and the invocation of non-human metaphors are facilitated by essentialist beliefs about groups, which Haslam (2006) presents as a necessary component of dehumanization. In order to distinguish between human and non-human, dehumanization requires an exaggerated perception of intergroup differences. Essentialist thinking thus contributes to dehumanization by leading to the perception of social groups as categorically distinct, which in turn emphasizes intergroup differences (Haslam, 2006).

According to Haslam (2006), prior work describes “extremely negative evaluations of others” as a major component of dehumanization. This is especially pronounced in Bar-Tal's account of delegitimization, which involves using negative characteristics to categorize groups that are “excluded from the realm of acceptable norms and values” (Bar-Tal, 1990). While Bar-Tal's defines delegitimization as a distinct process, he considers dehumanization to be one means of delegitimization. Opotow (1990) also discusses broader processes of moral exclusion, one of which is dehumanization. A closely related process is psychological distancing, in which one perceives others to be objects or non-existent (Opotow, 1990). Nussbaum (1999) identifies elements that contribute to the objectification (and thus dehumanization) of women, one of which is denial of subjectivity, or the habitual neglect of one's experiences, emotions, and feelings.

Another component of dehumanization is the denial of agency to members of the target group (Haslam, 2006). According to Tipler and Ruscher, there are three types of agency: the ability to (1) experience emotion and feel pain (affective mental states), (2) act and produce an effect on their environment (behavioral potential), and (3) think and hold beliefs (cognitive mental states) (Tipler and Ruscher, 2014). Dehumanization typically involves the denial of one or more of these types of agency (Tipler and Ruscher, 2014).

In section 3, we introduce computational linguistic methods to quantify several of these components.

2.2. Related Computational Work

While this is the first known computational work that focuses on dehumanization, we draw upon a growing body of literature at the intersection of natural language processing and social science. We are particularly inspired by the area of automatically detecting subjective language, largely pioneered by Wiebe et al. who developed novel lexical resources and algorithms for this task (Wiebe et al., 2004). These resources have been used as linguistically-informed features in machine learning classification of biased language (Recasens et al., 2013). Other work has expanded this lexicon-based approach to account for the role of syntactic form in identifying the writer's perspective toward different entities (Greene and Resnik, 2009; Rashkin et al., 2016).

These methods have been used and expanded to analyze pernicious, but often implicit social biases (Caliskan et al., 2017). For example, Voigt et al. analyze racial bias in police transcripts by training classifiers with linguistic features informed by politeness theory (Voigt et al., 2017), and Garg et al. investigate historical racial biases through changing word embeddings (Garg et al., 2018). Other studies focus on how people's positions in different syntactic contexts affect power and agency, and relate these concepts to gender bias in movies (Sap et al., 2017) and news articles about the #MeToo movement (Field et al., 2019). There is also a growing focus on identifying subtle manifestations of social biases, such as condescension (Wang and Potts, 2019), microagressions (Breitfeller et al., 2019), and “othering” language (Burnap and Williams, 2016; Alorainy et al., 2019). In addition, our focus on dehumanization is closely related to the detection and analysis of hate speech and abusive language (Schmidt and Wiegand, 2017; ElSherief et al., 2018).

Gender and racial bias have also been identified within widely-deployed NLP systems, for tasks including toxicity detection (Sap et al., 2019), sentiment analysis (Kiritchenko and Mohammad, 2018), coreference resolution (Rudinger et al., 2018), language identification (Blodgett and O'Connor, 2017), and in many other areas (Sun et al., 2019). Given the biases captured, reproduced, and perpetuated in NLP systems, there is a growing interest in mitigating subjective biases (Sun et al., 2019), with approaches including modifying embedding spaces (Bolukbasi et al., 2016; Manzini et al., 2019), augmenting datasets (Zhao et al., 2018), and adapting natural language generation methods to “neutralize” text (Pryzant et al., 2019).

A related line of research has developed computational approaches to investigate language use and variation in media discourse about sociopolitical issues. For example, some work has drawn upon political communication theory to automatically detect an issue's framing (Entman, 1993; Boydstun et al., 2013; Card et al., 2015) through both supervised classification (Boydstun et al., 2014; Baumer et al., 2015) and unsupervised methods, such as topic modeling and lexicon induction (Tsur et al., 2015; Field et al., 2018; Demszky et al., 2019). Scholars have also developed computational methods to identify lexical cues of partisan political speech, political slant in mass media, and polarization in social media (Monroe et al., 2008; Gentzkow and Shapiro, 2010; Demszky et al., 2019).

2.3. Attitudes Toward LGBTQ Communities in the United States

Some background about LGBTQ communities is necessary for our case study of LGBTQ dehumanization in the New York Times. Bias against LGBTQ people is longstanding in the United States. Overall, however, the American public has become more accepting of LGBTQ people and supportive of their rights. In 1977, equal percentages of respondents (43%) agreed and disagreed with the statement that gay or lesbian relations between consenting adults should be legal (Gallup, 2019). Approval of gay and lesbian relations then decreased in the 1980s; in 1986, only 32% of respondents believed they should be legal. According to Gallup, attitudes have become increasingly positive since the 1990s, and in 2019, 73% responded that gay or lesbian relations should be legal. The Pew Research center began surveying Americans about their beliefs about same-sex marriage in 2001 and found similar trends (Pew Research Center, 2017). Between 2001 and 2019, support for same-sex marriage jumped from 35 to 61%.

In addition to the public's overall attitudes, it is important to consider the specific words used to refer to LGBTQ people. Because different group labels potentially convey different social meanings, and thus have different relationships with dehumanization, our case study compares two LGBTQ labels: gay and homosexual. The Gallup survey asked for opinions on legality of “homosexual relations” until 2008, but then changed the wording to “gay and lesbian relations.” This was likely because many gay and lesbian people find the word homosexual to be outdated and derogatory. According to the LGBTQ media monitoring organization GLAAD, homosexual's offensiveness originates in the word's dehumanizing clinical history, which had falsely suggested that “people attracted to the same sex are somehow diseased or psychologically/emotionally disordered”1. Beyond its outdated clinical associations, some argue that the word homosexual is more closely associated with sex and all of its negative connotations simply by virtue of containing the word sex, while terms, such as gay and lesbian avoid such connotations (Peters, 2014). Most newspapers, including the New York Times, almost exclusively used the word homosexual in articles about gay and lesbian people until the late 1980s (Soller, 2018). The New York Times began using the word gay in non-quoted text in 1987. Many major newspapers began restricting the use of the word homosexual in 2006 (Peters, 2014). As of 2013, the New York Times has confined the use of homosexual to specific references to sexual activity or clinical orientation, in addition to direct quotes and paraphrases2.

Beyond differences in how LGBTQ people perceive the terms gay or lesbian relative to homosexual, the specific choice of label can affect attitudes toward LGBTQ people. In 2012, Smith et al. (2017) asked survey respondents about either “gay and lesbian rights” or “homosexual rights.” Respondents who read the word “homosexual” showed less support for LGBTQ rights. This effect was primarily driven by high authoritarians, people who show high sensitivity to intergroup distinctions. The authors posit that homosexual makes social group distinctions more blatant than gay or lesbian. This leads to greater psychological distancing, thus enabling participants to remove LGBTQ people from their realm of moral consideration (Smith et al., 2017). Based on prior research and evolving media guidelines, we expect our computational analysis to show that homosexual occurs in more dehumanizing contexts than the label gay.

3. Operationalizing Dehumanization

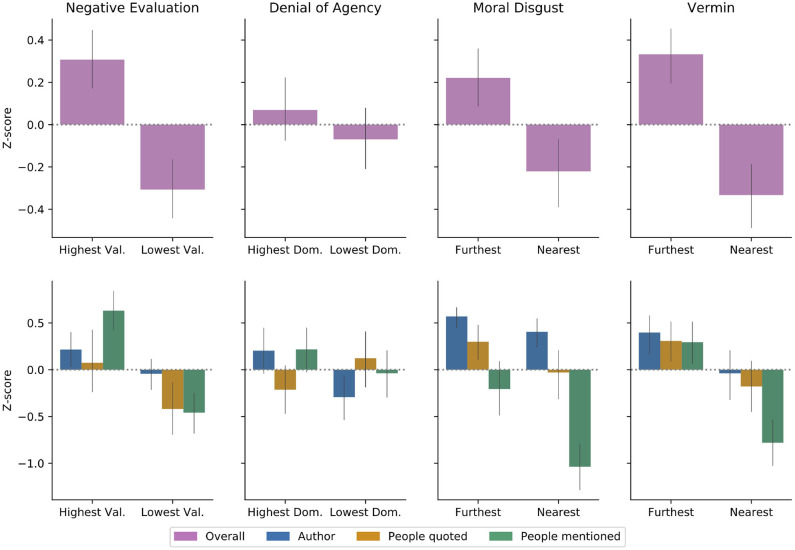

In section 2.1, we discussed multiple elements of dehumanization that have been identified in social psychology literature. Here we introduce and quantify lexical correlates to operationalize four of these components: negative evaluations of a target group, denial of agency, moral disgust, and use of vermin metaphors.

3.1. Negative Evaluation of a Target Group

One prominent aspect of dehumanization is extremely negative evaluations of members of a target group (Haslam, 2006). Attribution of negative characteristics to members of a target group in order to exclude that group from “the realm of acceptable norms and values” is specifically the key component of delegitimization, a process of moral exclusion closely related to dehumanization. We hypothesize that this negative evaluation of a target group can be realized by words and phrases whose connotations have extremely low valence, where valence refers to the dimension of meaning corresponding to positive/negative (or pleasure/displeasure) (Osgood et al., 1957; Mohammad, 2018). Thus, we propose several valence lexicon-based approaches to measure this component: paragraph-level valence analysis, Connotation Frames of perspective, and word embedding neighbor valence. Each technique has different advantages and drawbacks regarding precision and interpretability.

3.1.1. Paragraph-Level Valence Analysis

One dimension of affective meaning is valence, which corresponds to an individual's evaluation of an event or concept, ranging from negative/unpleasant to positive/pleasant (Osgood et al., 1957; Russell, 1980). A straightforward lexical approach to measure negative evaluations of a target group involves calculating the average valence of words occurring in discussions of the target group. We obtain valence scores for 20,000 words from the NRC VAD lexicon, which contains real-valued scores ranging from zero to one for valence, arousal and dominance. A score of zero represents the lowest valence (most negative emotion) and a score of one is the highest possible valence (most positive emotion) (Mohammad, 2018). Words with the highest valence include love and happy, while words with the lowest valence include nightmare and shit.

We use paragraphs as the unit of analysis because a paragraph represents a single coherent idea or theme (Hinds, 1977). This is particularly true for journalistic writing (Shuman, 1894), and studies on rhetoric in journalism often treat paragraphs as the unit of analysis (e.g., Barnhurst and Mutz, 1997; Katajamaki and Koskela, 2006). Furthermore, by looking at a small sample of our data, we found that paragraphs were optimal because full articles often discuss unrelated topics while single sentences do not provide enough context to understand how the newspaper represents the target group. We calculate paragraph-level scores by taking the average valence score over all words in the paragraph that appear (or whose lemmas appear) in the NRC VAD lexicon.

3.1.2. Connotation Frames of Perspective

While paragraph-level valence analysis is straightforward, it is sometimes too coarse because we aim to understand the sentiment directed toward the target group, not just nearby in the text. For example, suppose the target group is named “B.” A sentence, such as “A violently attacked B” would likely have extremely negative valence, but the writer may not feel negatively toward the victim, “B.”

We address this by using Rashkin et al.'s Connotation Frames Lexicon, which contains rich annotations for 900 English verbs (Rashkin et al., 2016). Among other things, for each verb, the Connotation Frames Lexicon provides scores (ranging from −0.87 to 0.8) for the writer's perspective toward the verb's subject and object. In the example above for the verb attack, the lexicon lists the writer's perspective toward the subject “A,” the attacker, as −0.6 (strongly negative) and the object “B” as 0.23 (weakly positive).

We extract all subject-verb-object tuples containing at least one target group label using the Spacy dependency parser3. For each subject and object, we capture the noun and the modifying adjectives, as group labels (such as gay) can often take either nominal or adjectival forms. For each tuple, we use the Connotation Frames lexicon to determine the writer's perspective toward the noun phrase containing the group label. We then average perspective scores over all tuples.

3.1.3. Word Embedding Neighbor Valence

While a Connotation Frames approach can be more precise than word-counting valence analysis, it limits us to analyzing SVO triples, which excludes a large portion of the available data about the target groups. This reveals a conundrum: broader context can provide valuable insights into the implicit evaluations of a social group, but we also want to directly probe attitudes toward the group itself.

We address this tension by training vector space models to represent the data, in which each unique word in a large corpus is represented by a vector (embedding) in high-dimensional space. The geometry of the resulting vector space captures many semantic relations between words. Furthermore, prior work has shown that vector space models trained on corpora from different time periods can capture semantic change (Kulkarni et al., 2015; Hamilton et al., 2016). For example, diachronic word embeddings reveal that the word gay meant “cheerful” or “dapper” in the early twentieth century, but shifted to its current meaning of sexual orientation by the 1970s. Because word embeddings are created from real-world data, they contain real-world biases. For example, Bolukbasi et al. (2016) demonstrated that gender stereotypes are deeply ingrained in these systems. Though problematic for the widespread use of these models in computational systems, these revealed biases indicate that word embeddings can actually be used to identify stereotypes about social groups and understand how they change over time (Garg et al., 2018).

This technique can similarly be applied to understand how a social group is negatively evaluated within a large text corpus. If the vector corresponding to a social group label is located in the semantic embedding space near words with clearly negative evaluations, that group is likely negatively evaluated (and possibly dehumanized) in the text.

We first preprocess the data by lowercasing, removing numbers, and removing punctuation. We then use the word2vec skip-gram model to create word embeddings (Mikolov et al., 2013). We use Gensim's default parameters with two exceptions; we train our models for ten iterations in order to ensure that the models converge to the optimal weights and we set the window size to 10 words, as word vectors trained with larger window sizes tend to capture more semantic relationships between words (Levy and Goldberg, 2014)4. For our diachronic analysis, we first train word2vec on the entire corpus, and then use the resulting vectors to initialize word2vec models for each year of data in order to encourage coherence and stability across years. After training word2vec, we zero-center and normalize all embeddings to alleviate the hubness problem (Dinu et al., 2014).

We then identify vectors for group labels by taking the centroid of all morphological forms of the label, weighted by frequency. For example, the vector representation for the label gay is actually the weighted centroid of the words gay and gays. This enables us to simultaneously account for adjectival, singular nominal, and plural nominal forms for each social group label with a single vector. Finally, we estimate the valence for each group label by identifying its 500 nearest neighbors via cosine similarity, and calculating the average valence of all neighbors that appear in the NRC VAD Valence Lexicon5.

We also induce a valence score directly from a group label's vector representation by adapting the regression-based sentiment prediction from Field and Tsvetkov (2019) for word embeddings. This approach yielded similar results as analyzing nearest neighbor valence but was difficult to interpret. More details for and results from this technique can be found in the Supplementary Material.

3.2. Denial of Agency

Denial of agency refers to the lack of attributing a target group member with the ability to control their own actions or decisions (Tipler and Ruscher, 2014). Automatically detecting the extent to which a writer attributes cognitive abilities to a target group member is an extraordinarily challenging computational task. Fortunately, the same lexicons used to operationalize negative evaluations provide resources for measuring lexical signals of denial of agency.

3.2.1. Connotation Frames

As in section 3.1, we use Connotation Frames to quantify the amount of agency attributed to a target group. We use Sap et al.'s extension of Connotation Frames for agency (Sap et al., 2017). Following Sap et al.'s interpretation, entities with high agency exert a high degree of control over their own decisions and are active decision-makers, while entities with low agency are more passive (Sap et al., 2017). This contrast is particularly apparent in example sentences, such as X searched for Y and X waited for Y, where the verb searched gives X high agency and waited gives X low agency (Sap et al., 2017). Additionally, Sap et al.'s released lexicon for agency indicates that subjects of verbs such as attack and praise have high agency, while subjects of doubts and needs have low agency (Sap et al., 2017).

This lexicon considers the agency attributed to subjects of nearly 2,000 transitive and intransitive verbs. To use this lexicon to quantify denial of agency, we extract all sentences' head verbs and their subjects, where the subject noun phrase contains a target group label. Unlike Rashkin et al.'s real-valued Connotation Frames lexicon for perspective, the agency lexicon only provides binary labels, so we calculate the fraction of subject-verb pairs where the subject has high agency.

3.2.2. Word Embedding Neighbor Dominance

The NRC VAD Dominance Lexicon provides another resource for quantifying dehumanization (Mohammad, 2018). The NRC VAD lexicon's dominance dimension contains real-valued scores between zero and one for 20,000 English words. However, the dominance lexicon primarily captures power, which is distinct from but closely related to agency. While power refers to one's control over others, agency refers to one's control over oneself. While this lexicon is a proxy, it qualitatively appears to capture signals of denial of agency; the highest dominance words are powerful, leadership, success, and govern, while the lowest dominance words are weak, frail, empty, and penniless. We thus take the same approach as in section 3.1.3, but instead calculate the average dominance of the 500 nearest neighbors to each group label representation5.

As in section 3.1.3, we also induced a dominance score directly from a group label's vector representation by adapting the regression-based sentiment prediction from Field and Tsvetkov (2019) for word embeddings. More details and results for this technique can be found in the Supplementary Material.

3.3. Moral Disgust

To operationalize moral disgust with lexical techniques, we draw inspiration from Moral Foundations theory, which postulates that there are five dimensions of moral intuitions: care, fairness/proportionality, loyalty/ingroup, authority/respect, and sanctity/purity (Haidt and Graham, 2007). The negative end of the sanctity/purity dimension corresponds to moral disgust. While we do not directly incorporate Moral Foundations Theory in our framework for dehumanization, we utilize lexicons created by Graham et al. (2009) corresponding to each moral foundation. The dictionary for moral disgust includes over thirty words and stems, including disgust*, sin, pervert, and obscen* (the asterisks indicate that the dictionary includes all words containing the preceding prefix)6.

We opt for a vector approach instead of counting raw frequencies of moral disgust-related words because such words are rare in our news corpus. Furthermore, vectors capture associations with the group label itself, while word counts would not directly capture such associations. Using the word embeddings from section 3.1.3, we construct a vector to represent the concept of moral disgust by averaging the vectors for all words in the “Moral Disgust” dictionary, weighted by frequency. This method of creating a vector from the Moral Foundations dictionary resembles that used by Garten et al. (2016). We identify implicit associations between a social group and moral disgust by calculating cosine similarity between the group label's vector and the Moral Disgust concept vector, where a higher similarity suggests closer associations between the social group and moral disgust.

3.4. Vermin as a Dehumanizing Metaphor

Metaphors comparing humans to vermin have been especially prominent in dehumanizing groups throughout history (Haslam, 2006; Steuter and Wills, 2010). Even if a marginalized social group is not directly equated to vermin in the press, this metaphor may be invoked in more subtle ways, such as through the use of verbs that are also associated with vermin (like scurry as opposed to the more neutral hurry) (Marshall and Shapiro, 2018). While there is some natural language processing work on the complex task of metaphor detection (e.g., Tsvetkov et al., 2014), these systems cannot easily quantify such indirect associations.

We thus quantify the metaphorical relationship between a social group and vermin by calculating similarities between these concepts in a distributional semantic vector space. As with moral disgust, we create a Vermin concept vector by averaging the following vermin words' vectors, weighted by frequency: vermin, rodent(s), rat(s) mice, cockroaches, termite(s), bedbug(s), fleas7. We do not include the singular mouse or flea because non-vermin senses of those words were more frequent, and word2vec does not account for polysemy. We calculate cosine similarity between each group label and the Vermin concept vector, where a high cosine similarity suggests that the group is closely associated with vermin.

Table 1 provides an overview of the four elements of dehumanization that we study and the lexical techniques used to quantify them.

Table 1.

Overview of linguistic correlates and our operationalizations for four elements of dehumanization.

| Dehumanization element | Operationalization |

|---|---|

| Negative evaluation of target group | Paragraph-level sentiment analysis Connotation frames of perspective Word embedding neighbor valence |

| Denial of agency | Connotation frames of agency Word embedding neighbor dominance |

| Moral disgust | Vector similarity to disgust |

| Vermin metaphor | Vector similarity to vermin |

4. Data

The data for our case study spans over 30 years of articles from the New York Times, from January 1986 to December 2015, and was originally collected by Fast and Horvitz (2016). The articles come from all sections of the newspaper, such as “World,” “New York & Region,” “Opinion,” “Style,” and “Sports.” Our distributional semantic methods rely on all of the available data in order to obtain the most fine-grained understanding of the relationships between words possible. For the other techniques, we extract paragraphs containing any of the following words from a predetermined list of LGTBQ terms: gay(s), lesbian(s), bisexual(s), homosexual(s), transgender(s), transsexual(s), transexual(s), transvestite(s), transgendered, asexual, agender, aromantic, lgb, lgbt, lgbtq, lgbtqia, glbt, lgbtqqia, genderqueer, genderfluid, intersex, pansexual.

Each acronym label is matched insensitive to case and punctuation. Some currently prominent LGBTQ terms, such as queer and trans are not included in this study, as other senses of these words were more frequent in earlier years. We filter out paragraphs from sections that typically do not pertain to news, such as “Arts,” “Theater,” and “Movies.” While these sections could provide valuable information, we focus on representation of LGBTQ groups in more news-related contexts.

A challenging question when analyzing mass media for subjective attitudes is deciding whose perspective we want to capture: an individual reporter, the institution, or society at large? In this case study, we aim to identify the institution's dehumanizing attitudes toward LGBTQ people. We represent the New York Times institution as a combination of the journalists' words in news articles, direct quotes, paraphrases from interviews, and published opinion articles. Therefore, despite our news focus, we include data from “Opinion” sections; while opinion articles are stylistically different from traditional journalistic reporting due to more overt biases and arguments, these articles are important in constructing the institution's perspective. In addition, we consider all text in each relevant paragraph, including quotes and paraphrases, because they are important to a newspaper's framing of an issue, as particular quotes representing specific stances are intentionally included or excluded from any given article (Niculae et al., 2015).

We refer to the remaining subset of the New York Times data after filtering as the LGBTQ corpus. The LGBTQ corpus consists of 93,977 paragraphs and 7.36 million tokens. A large increase in reporting on LGBTQ-related issues has led to a skewed distribution in the amount of data over years, with 1986 containing the least data (1,144 paragraphs and 73,549 tokens) and 2012 containing the most (5,924 paragraphs and 465,254 tokens).

For all experiments, we also include results for the terms American and Americans. We include American(s) to contrast changes in LGBTQ labels' representation with another social group label. This ensures that the changes we find in dehumanizing language toward LGBTQ groups do not apply uniformly to all social groups, and are thus not merely an artifact of the publication's overall language change. While a natural “control” variable would be labels, such as straight or heterosexual, these terms only occurred within discussions of LGBTQ communities because they name socially unmarked categories. We also considered comparing LGBTQ labels to person/people, but because word embedding-based experiments are sensitive to syntactic forms, we opt for a label that behaves more syntactically similar to gay and homosexual, particularly with both nominal and adjectival uses. Nevertheless, American(s) is by no means a neutral control variable. Because of its in-group status for the New York Times (a U.S. institution), we expect our measurements to show that American(s) appears in more humanizing contexts than LGBTQ labels; however, we do not expect to find substantial changes in the use of American(s) over time.

Figure 1 shows the counts of group labels for each year in the New York Times from 1986 to 2015. For visualization purposes, only words with a total count >1,000 are shown. The relative frequency of homosexual decreased substantially over time, while gay, lesbian, and bisexual are more frequent in later years. The terms lgbt and transgender also emerged after 2000. Counts for all LGBTQ labels can be found in the Supplementary Material.

Figure 1.

Counts for the six most frequent LGBTQ labels in each year of the New York Times data.

5. Results

5.1. Word Embeddings

Using all of the New York Times data, we create word2vec models for each year using the methods described in section 3.1.3. Because our computational techniques rely upon these word2vec models, it is useful to gain a sense of how LGBTQ terms are semantically represented within these models. We thus inspect the ten nearest neighbors, or most similar words, to LGBTQ terms in different years. Note that the neighboring words in Tables 2, 3 are shown purely for qualitative investigation; our measures for quantifying each dehumanization component incorporate far more information from the word2vec models beyond the top ten neighbors.

Table 2.

Nearest words to weighted average of all LGBTQ terms' vectors in 1986, 2000, and 2015.

| 1986 | 2000 | 2015 |

|---|---|---|

| Sex | Interracial | Sex |

| Premarital | Openly | Non-transgender |

| Sexual | Unwed | Unmarried |

| Abortion | Homophobia | Interracial |

| Promiscuity | Premarital | Closeted |

| Polygamy | Ordination | Equality |

| Promiscuous | Non-whites | Couples |

| Vigilantism | Ordaining | Abortion |

| Bestiality | Discrimination | Sexuality |

| Pornography | Abortion | Antiabortion |

Table 3.

Nearest words to vector representations of gay and homosexual in 1986, 2000, and 2015.

| 1986 | 2000 | 2015 | |||

|---|---|---|---|---|---|

| Gay | Homosexual | Gay | Homosexual | Gay | Homosexual |

| Homophobia | Premarital | Interracial | Premarital | Interracial | Premarital |

| Women | Abortion | Openly | Openly | Sex | Sexual |

| Feminist | Sexual | Homophobia | Deviant | Couples | Bestiality |

| Vigilante | Sex | Unwed | Interracial | Mormons | Pedophilia |

| Vigilantism | Promiscuity | Ordination | Promiscuity | Marriage | Adultery |

| Suffrage | Polygamy | Premarital | Immoral | Closeted | Infanticide |

| Sexism | Anal | Abortion | Sexual | Equality | Abhorrent |

| A.c.l.u. | Intercourse | Antigay | Criminalizing | Abortion | Sex |

| Amen | Consenting | Discrimination | Polygamy | Unmarried | Feticide |

| Queer | Consensual | Marriagelike | Consensual | Openly | Fornication |

Table 2 shows the 10 nearest neighbors (by cosine similarity) to our vector representation of all LGBTQ terms, which is the weighted average of the embeddings of all LGBTQ terms considered. For visual convenience, we filter out words occurring fewer than ten times, proper names, as well as other LGBTQ labels and forms of the word heterosexual, which are common neighbors for all terms across all years.

Table 2 shows that in 1986, LGBTQ groups were most highly associated with words that often convey a sense of sexual deviancy, including promiscuity, promiscuous, polygamy, bestiality, and pornography. These associations suggest that LGBTQ people were dehumanized to some extent at this time, and their identities were not fully recognized or valued. This shifted by 2000, where we no longer see associations between LGBTQ groups and ideas that evoke moral disgust. Instead, the 2000 vector space shows that LGBTQ people have become more associated with civil rights issues (suggested by interracial, homophobia, and discrimination). The words ordination and ordaining likely appear due to major controversies that arose at this time about whether LGBTQ people should be permitted to be ordained. We also see some indications of self-identification with the term openly. Finally, we see a slight shift toward associations with identity in 2015, with nearby words including nontransgender, closeted, equality, and sexuality. Curiously, the word abortion is a nearby term for all 3 years. Perhaps this is because opinions toward abortion and LGBTQ rights seem to be divided along similar partisan lines.

Table 3 shows the ten nearest neighboring words to our representations of gay and homosexual after filtering out proper names, words appearing <10 times that year, other LGBTQ terms, and forms of heterosexual. Table 3 reveals variation in social meaning between gay and homosexual despite denotational similarity, and these differences intensify over time. In 1986, gay is associated with terms of discrimination, civil rights and activism, such as homophobia, feminist, suffrage, sexism, and a.c.l.u. On the other hand, homosexual is primarily associated with words related to sexual activity (e.g., promiscuity, anal, intercourse, consenting).

In 1986, this pattern may be due to discussions about sexual transmission of AIDS, but the pejoration of homosexual continues over time. While gay becomes associated with issues related to marriage equality and identity in 2015, homosexual becomes extremely associated with moral disgust and illicit activity, with nearest neighbors including bestiality, pedophilia, adultery, infanticide, and abhorrent.

This qualitative analysis of word embedding neighbors reveals significant variation and change in the social meanings associated with LGBTQ group labels, with clear relationships to dehumanizing language. We will now present our quantitative results for measuring each component of dehumanization.

5.2. Negative Evaluation Toward Target Group

5.2.1. Quantitative Results

5.2.1.1. Paragraph-level valence analysis

Figure 2A shows the average valence for paragraphs containing LGBTQ labels [and American(s) for comparison], where a paragraph's valence is simply the average valence over its words (or lemmas) that appear in the NRC VAD Valence Lexicon. The NRC VAD lexicons actually contain several LGBTQ terms, which all have lower than the average valence score of 0.5: transsexual (0.264), homosexual (0.333), lesbian (0.385), gay (0.388), and bisexual (0.438). These values contrast starkly with more positively-valenced entries in the lexicon, such as heterosexual (0.561), person (0.646), human (0.767), man (0.688), and woman (0.865). These disparities likely reveal biases among the human annotators whose judgments were used to construct the NRC VAD lexicon (Mohammad, 2018). While the lexicon may itself be an interesting artifact of dehumanizing attitudes toward LGBTQ people, we remove these terms before calculating paragraph-level valence scores in order to isolate linguistic signals in the New York Times data from annotation biases. Without this preprocessing step, the temporal trends and relative differences between all LGBTQ terms, gay, and homosexual remain roughly the same, but all LGBTQ labels occur in significantly more negative paragraphs than American.

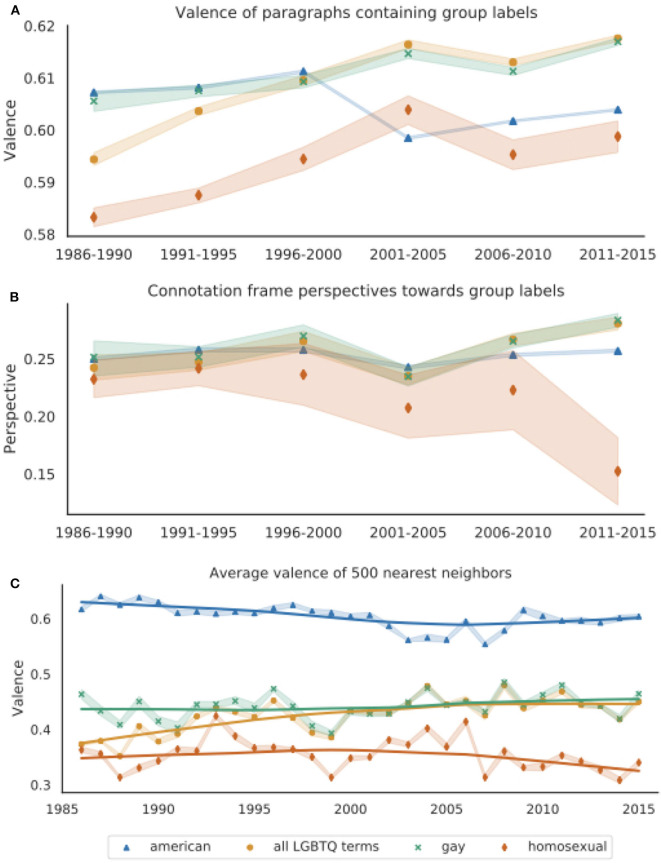

Figure 2.

(A) Average paragraph-level valence for paragraphs containing gay, homosexual, any LGBTQ term, and American, grouped into 5-years intervals. Paragraph-level scores are calculated as the average valence over all words that appear in the NRC VAD Valence Lexicon, which range from 0 (most negative) to 1 (most positive) (Mohammad, 2018). Paragraphs containing LGBTQ labels become more positive over time. Paragraphs containing homosexual are significantly more negative than those containing other LGBTQ labels. (B) Average connotation frame perspective scores over 5-years intervals. Scores are calculated for each subject-verb-object triple containing these group labels as the writer's perspective based on the head verb's entry in the Connotation Frames lexicon (Rashkin et al., 2016). (C) Average valence of 500 nearest words to vector representations of gay, homosexual, all LGBTQ terms, and American, averaged over 10 word2vec models trained on New York Times data from each year. The solid lines are Lowess curves for visualization purposes. Words' valence scores are from the NRC VAD Valence Lexicon. For all plots, the shaded bands represent 95% confidence intervals.

Figure 2A shows the average paragraph valence. For visualization purposes, we present the results over 5-year intervals due to data sparsity in later years for homosexual (there were just 208 paragraphs containing homosexual in 2014, relative to 3,669 containing gay in the same year). Analysis of overlapping confidence intervals and Wilcoxon signed-rank tests over the means for each of the 30 years indicates that gay and all LGBTQ terms occur in significantly more positive paragraphs than homosexual (p < 0.0001). A linear regression analysis over all years reveals that all LGBTQ terms, gay, and homosexual all significantly increase in paragraph-level valence over time (p < 0.0001). However, when considering just the last 15 years, gay still significantly increases in paragraph-level valence, while homosexual may be trending downward, although this trend does not reach significance in our data (p = 0.078).

The paragraph-level valence analysis shown in Figure 2A suggests that LGBTQ groups have become increasingly positively evaluated over time, and thus likely less dehumanized in the New York Times. However, the slight downward trend in valence for paragraphs containing homosexual between 2001 and 2015 suggests that evaluations of people described as homosexual have not improved in the same way as those described by other labels.

Finally, this measurement does not support our initial hypothesis that LGBTQ groups have been more negatively evaluated than American(s), but still reveals that the observed trends for LGBTQ labels are not merely artifacts of changing reporting styles, since paragraphs containing American(s) show a very different pattern. Overall, this result demonstrates substantial language change in the New York Times's discussion of LGBTQ people as well as variation in the contexts where different group labels appear, particularly homosexual.

5.2.1.2. Connotation frames of perspective

Figure 2B shows the writer's average perspective (valence) toward noun phrases containing either any LGBTQ labels, gay(s), homosexual(s), or the comparison group American(s) using the Connotation Frames lexicon (Rashkin et al., 2016). The wide variation, particularly for homosexual, is likely due to sparsity, as limiting the connotation frames analysis to verbs' immediate subject and direct object noun phrase dependents (consisting of only determiners, adjectives, and nouns) greatly reduced the amount of data for each year; there were only 39 triples for homosexual in 2015. We thus show results aggregated over 5-years intervals.

As with paragraph-level valence, the writer's perspective toward the label homosexual is significantly more negative than toward gay (p < 0.001). Linear regression indicates that perspectives toward noun phrases named by any LGBTQ term, gay, and American have all significantly increased over time (p < 0.01). However, the trends are still quite different, as the slopes for gay and all LGBTQ terms are an order of magnitude greater than American [m = (1.1±0.39) × 10−4 for American, m = (1.4±0.18) × 10−3 for all LGBTQ terms, and m = (1.1±0.22) × 10−3 for gay]. Furthermore, the writer's perspective toward noun phrases containing homosexual have significantly decreased over time (p < 0.0001).

Overall, Connotation Frames' perspective scores reveal a similar pattern as the paragraph-level valence analysis, where LGBTQ groups overall appear to be more positively evaluated in the New York Times over time. Unlike gay and the aggregated all LGBTQ terms, the label homosexual undergoes pejoration, as homosexual becomes increasingly used when (implicitly) expressing negative attitudes toward LGBTQ people.

5.2.1.3. Word embedding neighbor valence

Figure 2C shows the average valence scores of the 500 nearest neighbors to the vector representations of gay, homosexual, all LGBTQ terms, and American for each year. In contrast to our other techniques to quantify negative evaluations of a target group, this measurement notably shows that the valence of American's neighboring words is significantly greater than any of the LGBTQ group representations' neighbors every year (Wilcoxon's signed-rank test, p < 0.0001), indicating that American is used in more positive contexts than LGBTQ terms. Furthermore, all LGBTQ vectors' neighbors have an average valence below the neutral 0.5. The average valence for neighboring words of gay and the aggregated all LGBTQ terms representation significantly increase over time (p < 0.0001), suggesting some increasing humanization in the language used in discussions of LGBTQ people.

Figure 2C also reveals dramatic connotational differences between gay and homosexual. As shown by non-overlapping confidence intervals and a Wilcoxon signed-rank test, the average valence for homosexual's neighbors is significantly lower than gay's neighbors over all years (p < 0.0001). Furthermore, while gay's average neighbor valence increases over time (p < 0.0001), homosexual's neighboring words become slightly but significantly more negative over time (p < 0.001). Analyzing the valence of the nearest neighbors indicates that homosexual has long been used in more negative (and potentially dehumanizing) contexts than gay, and that these words' meanings have further diverged as the label homosexual has been used in increasingly negative contexts over time.

5.2.2. Qualitative Analysis

5.2.2.1. Paragraph-level valence analysis

How well does paragraph-level valence analysis capture negative evaluations of a target group? To facilitate a qualitative evaluation of this technique, we identify several hundred paragraphs with the highest and lowest average valence. Most paragraphs with high valence scores appear to express positive evaluations of LGBTQ individuals, and those with low scores express negative evaluations.

Table 4 contain examples with extremely high and low valence. We identify several major themes from these results. Most paragraphs with high valence scores emphasize equal rights, while some focus on the activities of advocacy organizations. On the other end, paragraphs with extremely low valence often focus on violence against LGBTQ people, disease (especially AIDS), and LGBTQ issues internationally. Other themes that emerge in low-valence paragraphs include reports on (and direct quotes from) public figures who dehumanized LGBTQ people and portrayals of LGBTQ people as reckless, irresponsible, and angry.

Table 4.

Example paragraphs with extremely high and low valence scores, along with an interpretation of the patterns we find.

| Valence | Score | Text | Year | Interpretation |

|---|---|---|---|---|

| High | 0.853 | All Americans, gay and non-gay, and for their and basic . | 2004 | Equality |

| High | 0.804 | The of the and that comes with that — it was a to me that homosexual was in itself a and could be a ,' McNeill said in the . | 2015 | Equality |

| High | 0.801 | The Straight for in is by PFLAG National, a non- for and of gay, lesbian, bisexual and transgender people. | 2013 | Advocacy |

| High | 0.780 | What do you consider the most and

LGBT

in the , with youth or more generally? How about more nationally? |

2010 | Advocacy |

| Low | 0.266 | “We the women. We the , we the . We the cripples. We them all. We the . We the lesbian…When you through them all, go to the and dig up the and them a--gain because they didn't hard enough.” | 1993 | Direct Quote |

| Low | 0.364 | A 21-years-old student yesterday to fatally a gay man in Queens in what prosecutors termed a of anti-homosexual . | 1991 | Violence |

| Low | 0.403 | One of his most clients was a transsexual and who was with the AIDS virus and presumably spreading it to her customers and . | 1987 | AIDS |

| Low | 0.373 | , indeed! Burroughs Wellcome is as for the of amyl nitrate by homosexuals as the manufacturers of analgesics are for the of . | 1996 | Recklessness |

| Low | 0.402 | The activists from Africa with and sank back on the benches. The gay Americans absolutely at the from the airline. | 2011 | Recklessness |

| Low | 0.397 | Homosexuality is in Iran. Last year a Nations report on in Iran that gays “face , , and even the .” | 2012 | International |

Words with extremely high valence scores (>0.85) appear in , and somewhat high-valence words (scores between 0.7 and 0.85) appear in . Words with extremely low valence scores (< 0.15) appear in , and somewhat low-valence words (scores between 0.15 and 0.3) appear in . LGBTQ terms are shown in bold.

While this technique accurately captures the valence for many paragraphs, we also identify several shortcomings. Some extreme outliers are extremely short paragraphs, including subtitles within articles which are included as paragraphs in the data. Table 5 shows several examples that were mischaracterized by our paragraph-level valence analysis technique. In addition, there are several paragraphs with highly positive average valence that actually express negative evaluations of LGBTQ people. The valence of the third paragraph in Table 5 is skewed by the positive words supported and marriage even though the paragraph is actually discussing low support for gay marriage. While the fourth paragraph argues that gay couples would be subpar parents relative to straight couples, it uses positive terms, such as love and ideal. Furthermore, kinship terms tend to be assigned highly positive values in the NRC VAD Valence Lexicon, including child and family. Similarly, even though the final example describes discrimination based on sexual orientation, the paragraph's average valence is impacted by positive kinship terms, such as father (0.812) and mother (0.931)8.

Table 5.

Examples mischaracterized by paragraph-level valence analysis.

| Valence | Score | Text | Year | Explanation |

|---|---|---|---|---|

| High | 0.929 | of Homosexuals | 1990 | Subtitle |

| Low | 0.031 | for Liberals and Gays | 2008 | Subtitle |

| High | 0.777 | Of the seven in , only the Rev. Al Sharpton and Dennis J. Kucinich gay unambiguously. | 2003 | Marriage |

| High | 0.765 | And I can receive from gay , but the is— and studies shown that the is where a is raised in a married with a man and a . | 2005 | Marriage Family |

| High | 0.776 | Ms. , now a sophomore, up in her home but regularly visited her gay , Lee, in Cartersville, Ga. She when a was not to her because he was gay. | 1993 | Family |

Words with extremely high valence scores (>0.85) appear in , and somewhat high-valence words (scores between 0.7 and 0.85) appear in . Words with extremely low valence scores (< 0.15) appear in . LGBTQ terms are shown in bold.

Overall, our qualitative analysis shows that highly positive valence often accompanies expressions of positive evaluation toward LGBTQ groups, and low valence often accompanies expressions of negative evaluation. However, paragraph-level valence scores are also impacted by specific words cued by various topics; paragraphs about same-sex marriage tend to be more positive because words like marriage, marry, and couple have high valence scores while paragraphs reporting on hate crimes tend to be more negative because they contain low-valence words related to crime, violence, and injury. Furthermore, this method cannot disentangle perspectives within the text; although there are linguistic signals of dehumanization expressed in reports on anti-LGBTQ violence and homophobic speech, these dehumanizing attitudes are not necessarily from the viewpoint of the journalist or the institution. Nevertheless, there could be an overall dehumanizing effect if the media's discussions of a marginalized social group emphasizes such events that harm people. Repeated associations between LGBTQ labels and such negative contexts could potentially contribute to negative evaluations of LGBTQ groups.

5.2.2.2. Connotation frames of perspective

To qualitatively analyze how well the connotation frames' lexicon capture negative evaluation of a target group, we identify SVO tuples where the verb indicates that the writer has extremely positive or negative perspective toward either the subject or object. The first paragraph in Table 6 contains an SVO tuple where the writer has the most negative perspective toward the noun phrases containing a group label. Inside a direct quote, this paragraph uses the phrase any homosexual act as the object to the verb committed, which has the effect of framing homosexuality as a crime. By deeming gay candidates unworthy of the priesthood, the speaker clearly negatively evaluates LGBTQ people. On the opposite end, many paragraphs labeled as containing extremely positive perspectives toward LGBTQ groups do appear to have positive evaluations of these groups. The second and third paragraphs of Table 6 illustrate this, where the gays are viewed positively for having saved a town, and gay rights advocates are praised for their work.

Table 6.

Examples of paragraphs where the writer expresses highly positive and negative perspective toward LGBTQ groups, according to the Connotation Frames lexicon.

| Perspective | Score | Text | SVO | Year |

|---|---|---|---|---|

| Negative | −0.83 | The most forceful comment came from Cardinal Anthony J. Bevilacqua of Philadelphia, who said his archdiocese screened out gay candidates. “We feel a person who is homosexual-oriented is not a suitable candidate for the priesthood, even if he had never committed any homosexual act,” the cardinal said. | S: he V: committed O: any homosexual act |

2002 |

| Positive | +0.80 | “Gays are accepted here and respected here,” said Mayor Tony Tarracino. “The gays saved a lot of the oldest parts of town, and they brought in art and culture. They deserve a lot of credit for what Key West is today.” |

S: the gays V: saved O: a lot |

1990 |

| Positive | +0.80 | In his speech, he praised gay rights advocates for their hard work and also thanked many elected officials, including his predecessor, Gov. David A. Paterson, and the four Republican state senators who provided the critical votes to pass the marriage bill and whom Mr. Cuomo named one by one to some of the loudest applause of the evening. | S: he V: praised O: gay rights advocates |

2011 |

| Assigned perspective | ||||

| Negative | −0.87 | Previously, Judge Vaughn Walker, who ruled the ban against same-sex unions unconstitutional in federal court, had said that ProtectMarriage could not appeal his decision to the Ninth Circuit, because they were never able to prove that gay marriage harmed them in any way. | S: gay marriage V: harmed O: them |

2011 |

| Positive | +0.73 | Following are excerpts from opinions by the Supreme Court today in its decision that the Constitution does not protect private homosexual relations between consenting adults (…) Justice Stevens wrote a separate dissenting opinion, joined by Justices Brennan and Marshall. | S: the Constitution V: protect O: private homosexual relations |

1986 |

| Positive | +0.70 | Do you know there is a Congressional candidate from Missouri who is saying that allowing gays into the military could strengthen Al Qaeda? I'm thinking, how exactly would that work? “They dance better than me, and they know how to accessorize. I'm very, very angry. It's time for jihad.” | S: gays V: strengthen O: Al Qaeda |

2010 |

Below the double line are examples of paragraphs where the writer's perspective is mischaracterized by the Connotation Frames lexicon. The relevant subject, verb, and object are shown in bold.

However, we found several instances where paragraphs are mislabeled, shown in the bottom half of Table 6. In the fourth paragraph of Table 6, our technique identifies gay marriage as the subject of the negative-perspective verb harmed, but does not account for the preceding text, which actually contradicts the premise that gay marriage causes harm, and thus does not overtly negatively evaluate of LGBTQ groups (although this particular example reveals the difficulty of operationalizing this component because ProtectMarriage groups strongly oppose same-sex marriage and may have negative evaluations of LGBTQ people). The second example similarly shows that this method does not adequately account for various forms of negation, as the positive-perspective verb protect is actually negated. The last example in Table 6 presents a complex case that is even challenging for qualitative analysis. Our method identifies gays as the subject of the verb strengthen, even though the subject should be the gerund allowing gays (into the military), and the lexicon's entry for the writer's perspective toward the subject of strengthen is a highly positive 0.7. However, the object of this verb is the terrorist organization Al Qaeda; our background knowledge suggests that the capacity to strengthen Al Qaeda would reflect negative perspectives. However, this additional context provided by the rest of the paragraph indicates that the writer is being sarcastic and considers the proposition that gays have any impact on strengthening Al Qaeda to be ridiculous. Finally, the writer emphasizes their own stance in opposition to the Missouri congressional candidate by calling upon common stereotypes of gay people being good at dancing and accessorizing.

Measuring the connotation frames' lexicon perspective scores over verbs' subjects and direct objects cannot leverage as much context as measuring valence over paragraphs using the NRC VAD lexicon labeled for 20,000 words. However, this technique can make more fine-grained distinctions regarding the writer's (and institution's) attitudes directed toward LGBTQ people and is not as dramatically impacted by the emotional valence of the topic discussed. Neither technique can disentangle the journalist's perspective from those expressed by others and simply reported by the journalist. While removing direct quotations may partially address this issue, we deliberately do not remove text from direct quotes or paraphrases. The journalists and newspaper make intentional decisions about what text to include and exclude from quotations, which could still meaningfully represent their perspectives and values (Niculae et al., 2015).

5.2.2.3. Word embedding neighbor valence

Compared to the previous methods, one limitation of using word embeddings to quantify negative evaluations of a target group is that embeddings are not easily interpretable by analyzing a small sample of data. Instead, we assess this technique by identifying LGBTQ terms' nearest neighbors in several outlier years. To facilitate this qualitative analysis, we identify a set of unique nearest neighbors for each LGBTQ label in each outlier year, where a word is a unique nearest neighbor for a given LGBTQ term and year if it is not in that term's top 500 nearest neighbors in any other year.

Table 7 contains several example paragraphs that illustrate overarching themes for the outlier years 1993, 1999, and 2014. In 1999, gay, homosexual and the aggregated representation of all LGBTQ terms were all more closely associated with low-valence words than in almost any other year. We connect this finding to a period of intense reporting in the months following the October 1998 murder of a gay Wyoming college student, Matthew Shepard, which drew national attention to anti-LGBTQ violence. Because LGBTQ labels frequently co-occurred with text about this incident, terms related to Matthew Shepard's case had closer representations to LGBTQ terms in this year. For example, gay and all LGBTQ terms's 500 nearest neighbors include wyoming in 1999 and shepard from the years 1998–2000. Unique nearest neighbors for gay in 1999 include other terms that could be connected to this incident, including homicidal, imprisoned, and hatred. Not only was Shepard's murder rooted in the dehumanization of LGBTQ people, but the media's emphasis on the gruesome details of Shepard's death further dehumanized him (Ott and Aoki, 2002). Ott and Aoki argue that the media's framing of this case actually further stigmatized LGBTQ people.

Table 7.

Example paragraphs from years where LGBTQ terms' nearest neighbors had exceptionally high and low valence.

| Valence | Year | Example |

|---|---|---|

| Low | 1999 | Matthew Shepard, a gay college student in Wyoming, had been pistol-whipped and left to die after being tied to a fence on Oct. 7, 1998. Aaron McKinney, who was charged with first-degree murder and other crimes in connection with Mr. Shepard's killing, went on trial Monday, denying that the act was a hate crime, but rather connected to drug use and outrage at a sexual advance he said Mr. Shepard made. |

| Low | 2014 | Uganda's vehement anti-gay movement began in 2009 after a group of American preachers went to Uganda for an anti-gay conference and then worked with Ugandan legislators to draft a bill that called for putting gay people to death. While the bill was being debated, attacks against gay Ugandans began to increase. In early 2011, David Kato, a slight, bespectacled man and one of the country's most outspoken gay rights activists, was beaten to death with a hammer. |

| Low | 2014 | “Hey, @McDonalds: You're sending #CheersToSochi while goons wearing Olympic uniforms assault LGBT people,” read one comment last week, from the author and activist Dan Savage. |

| High | 1993 | The regulations, which are to take effect Feb. 5, codify the Administration's policy that was worked out as a compromise with the Joints Chiefs of Staff, who had defended the 50-years-old ban, arguing that allowing homosexuals to serve openly would hurt the morale of troops, and thus hurt military readiness. |

LGBTQ terms are shown in bold.

Our word embedding neighbor valence measure reveals that the most negative year for gay and all LGBTQ terms since 1999 was 2014, the second most-recent year of data. We identify several major themes in 2014 that co-occurred with LGBTQ group labels and possibly led to this distributional semantic pattern, primarily reporting on anti-LGBTQ laws and attitudes in Uganda and Russia (particularly in light of the 2014 Winter Olympics in Sochi). The terms athletes and winterolympics appeared in gay's nearest neighbors in 2014. In addition, the terms Uganda, Ugandan, and Mugisha (a Ugandan LGBT advocate) are among gay's unique nearest 500 neighbors in 2014.

Unlike in 1999 and 2014, LGBTQ terms in 1993 are associated with higher-valence words, especially homosexual. Homosexual's unique nearest neighbors in 1993 include the high-valence words pledge, civilian, readiness, and inclusion. These words are likely connected with numerous stories in 1993 covering the controversy over whether LGBTQ people should be allowed to serve in the military.

5.3. Denial of Agency

5.3.1. Quantitative Results

5.3.1.1. Connotation frames of agency

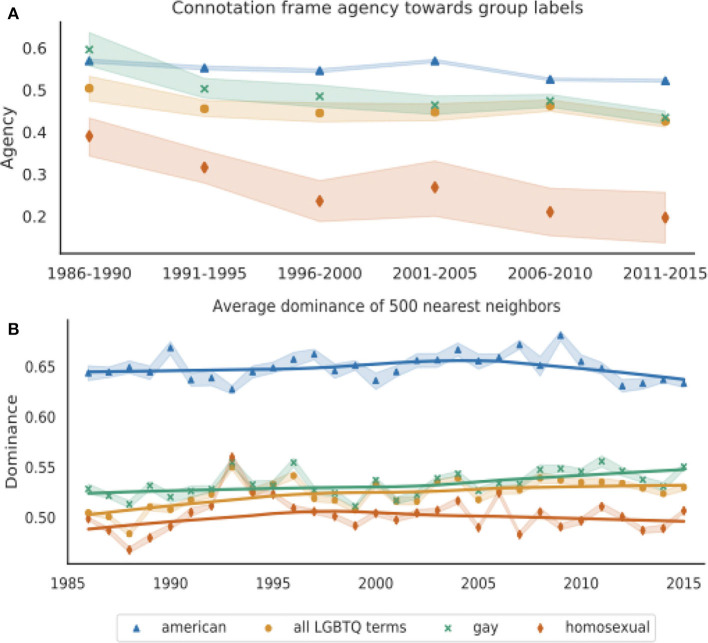

Figure 3A shows the agency of each group label based on its head verb's entry in the Connotation Frames lexicon for agency (Sap et al., 2017). As in Figure 2B, there is large variance due to data sparsity when using the Connotation Frames lexicon, particularly for homosexual, which is considerably less frequent than gay or other LGBTQ terms in later years. In order to maximize precision when extracting subject-verb pairs, we extract only nouns and their immediate adjectival modifiers, which limits the amount of data. We thus show average agency over 5-years intervals.

Figure 3.

(A) Agency of gay, homosexual, all LGBTQ terms, and American using the Connotation Frames lexicon for agency for all subject-verb-object tuples containing each group label (Sap et al., 2017), calculated over 5-years intervals. An SVO tuple received a score of 1 if the label appears in a positive agency position relative to its head verb and 0 if it does not. (B) Average dominance of 500 nearest words to our representations of gay, homosexual, all LGBTQ terms, and American, averaged over 10 word2vec models trained on New York Times data for each year. Dominance scores for each word come from the word's entry in the NRC VAD Dominance Lexicon (Mohammad, 2018), which range from 0 (least dominance) to 1 (most dominance). For both plots, the shaded bands represent 95% confidence intervals and the solid lines in (B) are Lowess curves for visualization purposes.

Wilcoxon signed-rank tests on the means for each group labels over all years indicate that gay occurs in contexts with significantly higher agency than homosexual (p < 0.0001). All four group labels significantly decrease in agency over time according to linear regressions over all 30 years (p < 0.001), but the slope for homosexual is much greater [m = (−7.9±1.3) × 10−3 for homosexual, compared to m = (−3.9±.55) × 10−3 for gay, and m = (−1.5±.46) × 10−3 for all LGBTQ terms]. Furthermore in the most recent 15 years, gay and all LGBTQ terms show no significant change (p = 0.097 for gay and p = 0.14 for all LGBTQ terms), but homosexual still decreases significantly in agency (p < 0.05).

Figure 3A suggests that LGBTQ groups experience greater denial of agency in the New York Times than the institution's in-group identifier American. Furthermore, people described as homosexual experience even more denial of agency than people who are described as gay. Unlike the improving attitudes indicated by our analysis of negative evaluations of a target group, it appears that denial of agency increased over time for all LGBTQ groups. However, the relatively rapid decrease in agency for homosexual is consistent with other results suggesting homosexual's pejoration.

5.3.1.2. Word embedding neighbor dominance

Figure 3B shows the average dominance of each group label's 500 nearest neighbors. American is significantly associated with greater dominance than gay, homosexual, and all LGBTQ terms (Wilcoxon signed-rank test; p < 0.0001), and gay has significantly higher dominance than homosexual (p < 0.0001). While the dominance associated with gay and all LGBTQ terms significantly increased over time (p < 0.0001), the dominance associated with homosexual did not significantly change (p = 0.65). Furthermore, the average nearest neighbor dominance for homosexual decreased in the most recent 15 years (p < 0.01).

Even though dominance may more directly encode power rather than agency, the NRC VAD Dominance Lexicon is useful for operationalizing denial of agency because of the close relationship between these concepts. As with Connotation Frames of agency, these results suggest that LGBTQ groups experience greater denial of agency than the New York Times's in-group American. Both techniques show differences between the labels gay and homosexual, where homosexual is consistently associated with lower agency than gay and further decreases over time. However, these two measurements suggest different temporal dynamics for the denial of agency of LGBTQ people; Connotation Frames' agency slightly decreases for all LGBTQ terms over time, but increases with word embedding neighbor dominance.

5.3.2. Qualitative Analysis

5.3.2.1. Connotation frames of agency

We qualitatively investigate the labels assigned by this technique for a sample of paragraphs. In general, the binary labels for positive and negative agency seem reasonably accurate, as shown by the first four example in Table 8. Verbs that attribute high agency to the subject include develop and endorse, suggesting that the LGBTQ-aligned subjects are in control and actively making their own decisions. On the other end, LGBTQ people have low agency when they are the subjects of passive verbs, such as face and acknowledge.

Table 8.

Examples where the writer attributes high and low agency toward LGBTQ groups, according to the Connotation Frames lexicon for agency.

| Agency | Text | SVO | Year |

|---|---|---|---|

| High | Within the close-knit world of professional childbearers, many of whom share their joys and disillusionments online and in support groups, gay couples have developed a reputation as especially grateful clients… | S: gay couples V: developed O: a reputation |

2005 |

| High | Tonight, the gay rights group Stonewall Democrats will endorse a candidate for A.G. It's a relatively big prize in the four-man Democratic primary, given that liberal city voters will have relatively serious sway… | S: the gay rights group V: endorse O: a candidate |

2006 |

| Low | Nigeria's gay men and lesbians regularly face harassment and arrest, gay activists here say. The criminal code bans acts “against the order of nature,” and imposes sentences of up to 14 years for those convicted… | S: gay men V: face O: harassment |

2005 |

| Low | Much of the debate among military and civilian officials is now focusing on some version of an approach called “don't ask, don't tell.” (…) But under the “don't tell” element, there would be restrictions on the extent to which homosexuals could acknowledge their homosexuality. | S: homosexuals V: acknowledge O: their homosexuality |

1993 |

The relevant subject, verb, and object are shown in bold.

The Connotation Frames lexicon for agency seems to be especially accurate for low agency; we could not find counterexamples in our sample where LGBTQ people were portrayed with high agency but labeled with low agency. However, we found several mischaracterizations where LGBTQ people were labeled as having high agency but are not portrayed as agentive or in control of their own actions. Our Connotation Frames technique considers the example below to attribute high agency to LGBTQ people because homosexual appears in the subject of the high-agency verb violate; however, homosexual actually modifies relationships, not people themselves. Furthermore, this debate within religion appears to be devoid of input from LGBTQ people and does not portray them as particularly agentive.

At the same time, it underscored a stark division in Judaism over the place of homosexuals in society. Orthodox rabbinical groups believe that homosexual relationships violate Jewish law…(1996)

5.3.2.2. Word embedding neighbor dominance

Using the VAD Dominance Lexicon to calculate average dominance of each social group label corresponds well to our notion of denial of agency. Because gay's nearest neighbors have a much higher average dominance than homosexual's for most years, we compare words that are nearby neighbors for gay and not homosexual for multiple years' word2vec spaces. Words frequently among the 500 words nearest to gay and not homosexual include high-agency words, such as activist, liberation, advocate, and advocacy, which have dominance scores of 0.877, 0.857, 0.818, and 0.731, respectively. Words frequently among homosexual's 500 nearest neighbors and not gay's include low-agency words, such as submissive (0.173), degrading (0.232), enslavement (0.302), and repressed (0.311).

We additionally investigate the word2vec models corresponding to several outlier years. Homosexual's neighbors have the highest average dominance in 1993, which is likely due to military-related language in debates surrounding the “Don't Ask, Don't Tell” legislation. High-dominance words unique to homosexual's nearest neighbors in 1993 include forces (0.886), military (0.875), enforce (0.836) and troops (0.804). Gay's neighbors' in 1999 have the lowest average dominance than any other year, which is likely connected to Matthew Shepard's death and the subsequent outrage; unique neighbors for gay in 1999 include imprisoned (0.302) and repressed (0.311).

5.4. Moral Disgust

5.4.1. Quantitative Results

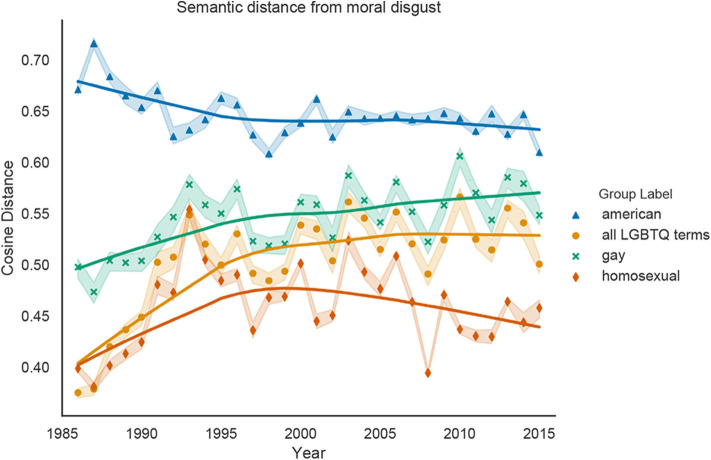

Figure 4 shows the changing relationships between all LGBTQ terms, gay, homosexual and the dehumanizing concept of Moral Disgust. Because the cosine distance between American and Moral Disgust is significantly greater over all years than any LGBTQ representation (Wilcoxon signed-rank test; p < 0.0001), American is the least associated with Moral Disgust. Furthermore, the cosine distance between gay and Moral Disgust is significantly greater than the distance between homosexual and Moral Disgust for every year (p < 0.0001), indicating that homosexual is more closely associated with Moral Disgust than gay is. Linear regression analyses show that all LGBTQ terms and gay significantly increase in cosine distance from the Moral Disgust vector (p < 0.0001), indicated weakening associations between LGBTQ people and moral disgust over time. On the other hand, the distance between homosexual and Moral Disgust does not change significantly over time (p = 0.54), and even decreases after 2000 (p < 0.05).

Figure 4.

Cosine distance between our representations of gay, homosexual, all LGBTQ terms, and American and the vector representation of the Moral Disgust concept, averaged over 10 word2vec models trained on New York Times data for each year. Increases in cosine distance indicate decreases in Moral Disgust; possible values range from 0 (most closely associated with Moral Disgust) to 1 (least associated with Moral Disgust). Shaded bands represent 95% confidence intervals and the solid lines are Lowess curves for visualization purposes.

Overall, these measurements of associations between LGBTQ people and Moral Disgust are consistent with our other operationalizations of dehumanization. All LGBTQ labels are more closely associated with Moral Disgust than the newspaper's in-group term American, but these associations weaken over time, suggesting increased humanization. Notably, the term homosexual has always been more associated with Moral Disgust than the denotationally-similar term gay, and homosexual actually becomes more closely associated with this dehumanizing concept in recent years.

5.4.2. Qualitative Analysis

Our analysis of homosexual's changing semantic neighbors from Table 3 has shown that this term has become more associated with immoral concepts, suggesting that moral disgust is a mechanism by which LGBTQ people are dehumanized. Although rarely directly invoked, the connection between LGBTQ people and disgust is supported by the data, such as in the examples shown below, where words belonging to the moral disgust lexicon are in bold. Figure 4 indicates that late 1980s and early 1990s, LGBTQ labels rapidly became more semantically distant from Moral Disgust. This likely reflects decreasing attention to the AIDS epidemic, as many disease-related words are included in the moral disgust lexicon.

Senator Jesse Helms, the North Carolina Republican who has vigorously fought homosexual rights, wants to reduce the amount of Federal money spent on AIDS sufferers, because, he says, it is their “deliberate, disgusting, revolting conduct” that is responsible for their disease (1995).

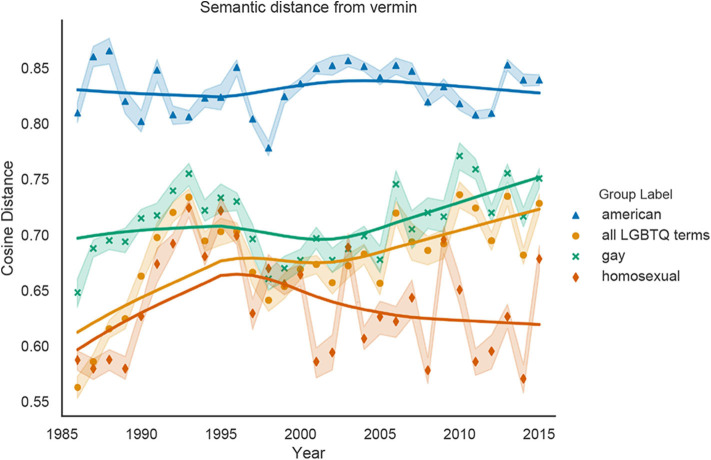

A lawyer named G. Sharp, address unknown, called the cover picture “utterly repulsive.” Donald Ingoglia of Sacramento was equally outraged. “Showing two smiling gays on the cover illustrates how sick our society has become,” he wrote. “You have my non-lawyer friends falling off their chairs” (1992).