Abstract

In this study, I investigate the evolution of authorship diversity in the scientific journals of three major pan-European professional associations of political research (ECPR, EPSA and EISA), from their first issue until 2020, through an analysis of the bibliometric information of each published item. Established between 1973 and 2019, the seven periodicals under scrutiny (European Journal of Political Research, European Journal of International Relations, European Political Science, European Political Science Review, Political Research Exchange, Political Science Research & Methods and Global Affairs) cover a wide spectrum of political science and offer a convenient gateway for exploring various disciplinary dynamics, with a focus mostly on comparative politics, international relations and political methodology. The dataset includes 5281 articles and 4533 unique authors affiliated to 1029 unique institutions from 73 countries. The analysis shows that, while currently more diverse than ever, all these journals still have a large Western European and/or US core. Research is overwhelmingly produced in OECD member states and about half originates in countries where English is an official language. Although collaborations are increasingly frequent and seem to be becoming the norm, scholars affiliated with Central and Eastern European institutions, as well as women authors, are still heavily underrepresented.

Supplementary information

The online version of this article (10.1057/s41304-021-00319-9) contains supplementary material.

Keywords: Authorship diversity, Bibliometric data, Collaborative patterns, Political science as a discipline, Professional associations, Structural inequalities

Introduction

When the European Consortium for Political Research (ECPR) marked its 25th anniversary, political science journals in both Europe and North America seemed to be largely parochial (Norris 1997). Shortly after, European Political Science (EPS) was established as an ECPR periodical intended to offer a space to focus and advance the conversation on (European) political science as a discipline and profession, including on matters related to diversity. So where do we stand now? Half a century after the establishment of the ECPR as the oldest pan-European political science association and at EPS’s own 20-year anniversary, to what extent do the journals of European professional associations reflect a more diverse authorship? What are the openings and limitations of our methodological choices when addressing such topics? And what dynamics can these journals reveal about the evolution and current state of our discipline?

To explore these puzzles, I investigated the bibliometric features and institutional affiliations of the authors of all items published in seven European political science journals from their first to their latest available issue (as of September 2020). These periodicals are the European Journal of Political Research (EJPR, est. 1973); European Journal of International Relations (EJIR, est. 1995); European Political Science (EPS, est. 2001); European Political Science Review (EPSR, est. 2009); Political Research Exchange (PRX, est. 2019); Political Science Research & Methods (PSRM, est. 2013); and Global Affairs (GA, est. 2015). The first five are ECPR’s own journals, with the particularity that EJIR is currently published by the ECPR Standing Group on International Relations (SGIR) and the European International Studies Association (EISA). A distinct professional association established in 2013, the latter also publishes GA, while PSRM is the periodical of the European Political Science Association (EPSA, est. 2010). This selection made it possible to evaluate systematically and comparatively the periodicals of the two largest pan-European political science professional associations (ECPR and EPSA), as well as a specific subfield (international relations) which may be more sensitive to diversity, at least through its scope. In addition, some of these periodicals are among the best ranked European political science journals on several accounts, including the (in)famous Thomson Reuters Impact Factor.

Data collection and coding

From the official websites of the journals, I initially created a database with all articles assigned to an issue, including special issues. For the quantitative analysis, I eliminated items with a primarily administrative role such as errata and lists of contributors. Most items in each journal are original research articles, i.e. independent pieces providing new insights on a subject through their choice of methodology and/or data, occasionally grouped by topic in special issues or forums. Beyond that, the diversity and weight of other genres within each journal varies considerably, reflecting not only distinct editorial agendas but also different perspectives on how to engage publicly with scientific arguments. For instance, except for occasional editorials announcing the journal policies and/or achievements, EPSR, PSRM and PRX publish only research articles. The shorter pieces are usually labelled “research notes” although they are not necessarily less methodologically or analytically complex than the longer pieces in the same journal or than the items of similar length labelled as original/research articles in other journals. Then again, EPS and GA and, to a lesser extent, EJPR and EJIR also make room for other genres, especially extensive book reviews and review essays which are often as well-documented and sophisticated in argumentation as most typical research articles. In fact, for a significant part of its history, EPS published yearly an issue dedicated exclusively to reviewing new scholarship. More recently, it also started to promote review symposiums, a combined genre where authors of different volumes on a similar topic review each other’s arguments and respond to each other’s comments within the same issue.

Beyond their genre, for each article, I collected the basic bibliometric identification details (author(s), title, year, volume, issue, pages), as well as each author’s institutional affiliation (country, organization and, if specified, its subunit) as they appeared in the respective journal at the time of publication. The corrections indicated in subsequent errata were then applied. Finally, the database was consolidated so that items that may repeat, such as authors, institutions or countries, appear with a single spelling and in a single language. I then analysed the coded data for each journal, as well as for the entire dataset and two subsets: all ECPR journals (EJPR, EPS, EPSR, PRX, EJIR) and IR journals (EJIR, GA), looking for frequencies that could indicate potential patterns and outliers.

The analysis focused primarily on the entire period and the whole set for which information exists, but for certain research goals I selected several smaller time frames. For example, since the 2020 data were incomplete, I considered the period 2015–2019 to assess recent evolutions where the full yearly data was relevant. For a more nuanced assessment, within the EJPR corpus, I also distinguished Political Data Yearbook (PDY) items from the rest. PDY institutionalized a research programme (henceforth referred to as PDY(p)) that presents similarly collected data on electoral and other major political developments in various countries. These articles follow almost the same methodology and structure every year, they are frequently authored by the same people for decades, and they represent a significant proportion of the entire collection. In the 1970s and 1980s, they were special comparative reports published as EJPR articles in regular issues, but since 1992 they have been published in separate yearly EJPR issues (known since 2012 as the PDY) dedicated exclusively to the initiative. As of September 2020, the 2020 PDY issue was not fully available and consequently it was not included in the database. Articles that analyse political data but are not formally part of the PDY(p) project were counted as regular research articles. The distinction between the PDY(p) items and the rest is also reflected in the aggregated datasets (“ECPR journals” and “Total”, respectively). Altogether, I collected 5281 items from 551 issues in 132 volumes. Table 1 details their distribution by journal and dataset.

Table 1.

Dataset synopsis

| ASSOCIATION | JOURNAL | CURRENT PUBLISHER | LAST ISSUE | NO. VOLUMES | UNIQUE ISSUES | TOTAL ITEMS in the dataset | % Full dataset |
|---|---|---|---|---|---|---|---|
| ECPR | EJPR (1973–) | Wiley | 3/2020 | 59 | 278 | 2645 (56.7% of ECPR) | 50.1 |
| ECPR | of which PDY(p) | Wiley | 1/2019ᵃ | – | 28ᵃ | 976ᵃ (20.9% of ECPR; 36.9% of EJPR) | 18.5 |
| ECPR | EPS (2001–) | Palgrave Macmillan | 3/2020 | 19 | 73 | 987 (21.2% of ECPR) | 18.7 |
| ECPR | EPSR (2009–) | Cambridge University Press | 3/2020 | 12 | 42 | 285 (6.1% of ECPR) | 5.4 |
| ECPR | PRX (2019–) | Taylor & Francis | 1/2020ᵇ | 2 | 2 | 31 (0.7% of ECPR) | 0.6 |
| ECPR and EISA | EJIR (1995–) | Sage | S1/2020 | 26 | 104 | 716 (15.4% of ECPR; 67.9% of IR) | 13.6 |
| EISA | GA (2015–) | Taylor & Francis | 2/2020 | 6 | 27 | 339 (32.1% of IR) | 6.4 |
| EPSA | PSRM (2013–) | Cambridge University Press | 3/2020 | 8 | 25 | 278 | 5.3 |
| ECPR subset (EJPR with PDY, EJIR, EPS, EPSR, PRX) | | | | 118 | 499 | 4664 | 88.3 |
| ECPR subset without PDY(p) | | | | 118 | 471 | 3688 | 69.8 |
| IR subset (EJIR & GA) | | | | 32 | 131 | 1055 | 19.9 |
| Full dataset | | | | 132 | 551 | 5281 | 100 |

ᵃ PDY(p) items were included in the 1970s and 1980s as special yearly articles but since 1992 they were published in distinct yearly issues (two single issues, 18 double issues and eight PDY). At the time of data analysis, the 2020 PDY issue was not fully available; consequently, it was not included in the dataset

ᵇ The volume was not complete at the time of data analysis (i.e. ongoing publication until the end of the year)

To evaluate the number and distribution of authors, I looked at the number of unique authors (UQA), each author’s number of appearances (author fingerprint, AFN) and authors’ gender (as reflected by the given names on a dichotomous scale, F/M). When a name could be used for both genders or was unfamiliar, I checked the author’s CV and, if available, the institutional webpage or other public documents mentioning them with gendered pronouns (usually brief bios presenting or promoting their work). For articles with multiple authors (maximum found: 21 authors/article), I coded whether their order was alphabetical or not. For authors with multiple affiliations (maximum found: 4 affiliations/author), I granted each affiliation equal value, as the order in which they are listed within an article did not seem to follow a consistent criterion within each journal or across the database. Their distribution by journal and cumulative sets is detailed in Table 2 for the entire collection and in Table 3 for the period 2015–2019. Figure 1 illustrates the distribution of the number of authors per article.
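The difference between the two author measures can be illustrated with a minimal Python sketch. It is not the procedure used to build the dataset: the record layout, the author names and the function name `authorship_summary` are hypothetical and serve only to show how AFN counts every appearance while UQA counts each author once.

```python
from collections import Counter

# Hypothetical records: one (article_id, author_name, gender) tuple
# per author appearance. Names and IDs are invented for illustration.
appearances = [
    ("a1", "Author A", "F"),
    ("a1", "Author B", "M"),
    ("a2", "Author A", "F"),
    ("a3", "Author C", "M"),
]


def authorship_summary(rows):
    """Return AFN and UQA totals with female shares in percent."""
    # AFN: every appearance counts, so a prolific author weighs more.
    afn = Counter(gender for _, _, gender in rows)
    # UQA: each author is counted once, however often they appear.
    unique_authors = {(name, gender) for _, name, gender in rows}
    uqa = Counter(gender for _, gender in unique_authors)
    afn_total = sum(afn.values())
    uqa_total = sum(uqa.values())
    n_items = len({article for article, _, _ in rows})
    return {
        "AFN": afn_total,
        "AFN_F_pct": round(100 * afn["F"] / afn_total, 1),
        "UQA": uqa_total,
        "UQA_F_pct": round(100 * uqa["F"] / uqa_total, 1),
        "authors_per_item": round(afn_total / n_items, 1),
    }


summary = authorship_summary(appearances)
print(summary)
```

In this toy example, the one woman author appears twice, so her share is higher by AFN than by UQA. In the actual dataset the relation can go either way, depending on how often individual authors reappear.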

Table 2.

Authorship distribution (full dataset)

| Journal | Items | Authors/item | AFN Total | AFN F | AFN F (%) | AFN M | AFN M (%) | UQA Total | UQA F | UQA F (%) | UQA M | UQA M (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EJPR (1975–2020) | 2645 | 1.6 | 4152 | 814 | 19.6% | 3338 | 80.4% | 1903 | 408 | 21.4% | 1495 | 78.6% |
| EJPR without PDY(p) | 1669 | 1.7 | 2765 | 510 | 18.4% | 2255 | 81.6% | 1793 | 379 | 21.1% | 1414 | 78.9% |
| EJPR: PDY(p) | 976 | 1.4 | 1387 | 304 | 21.9% | 1083 | 78.1% | 158 | 40 | 25.3% | 118 | 74.7% |
| EPS (2001–2020) | 985 | 1.5 | 1435 | 416 | 29.0% | 1019 | 71.0% | 1101 | 360 | 32.7% | 741 | 67.3% |
| EPSR (2009–2020) | 284 | 1.8 | 511 | 135 | 26.4% | 376 | 73.6% | 465 | 124 | 26.7% | 341 | 73.3% |
| PRX (2019–2020) | 31 | 2.1 | 65 | 15 | 23.1% | 50 | 76.9% | 65 | 15 | 23.1% | 50 | 76.9% |
| EJIR (1995–2020) | 716 | 1.3 | 956 | 227 | 23.7% | 729 | 76.3% | 779 | 197 | 25.3% | 582 | 74.7% |
| GA (2015–2020) | 339 | 1.3 | 429 | 128 | 29.8% | 301 | 70.2% | 357 | 113 | 31.7% | 244 | 68.3% |
| PSRM (2013–2020) | 278 | 2.2 | 612 | 105 | 17.2% | 507 | 82.8% | 513 | 93 | 18.1% | 420 | 81.9% |
| ECPR TOTAL | 4661 | 1.5 | 7119 | 1607 | 22.6% | 5512 | 77.4% | 3828 | 1008 | 26.3% | 2820 | 73.7% |
| ECPR without PDY(p) | 3685 | 1.6 | 5732 | 1303 | 22.7% | 4429 | 77.3% | 3730 | 985 | 26.4% | 2745 | 73.6% |
| IR TOTAL | 1055 | 1.3 | 1385 | 355 | 25.6% | 1030 | 74.4% | 1111 | 305 | 27.5% | 806 | 72.5% |
| TOTAL | 5278ᵃ | 1.5 | 8161 | 1841 | 22.6% | 6320 | 77.4% | 4533 | 1183 | 26.1% | 3350 | 73.9% |
| TOTAL without PDY(p) | 4302 | 1.6 | 6773 | 1536 | 22.7% | 5237 | 77.3% | 4436 | 1159 | 26.1% | 3277 | 73.9% |

ᵃ The total number of items in the database is 5281, but three of them (all in EPS) were signed only institutionally and thus not considered for the authors’ distribution analysis. Highest values for female authorship marked Bold.

Table 3.

Authorship distribution (2015–2019)

| Journal | Items | Authors/item | AFN Total | AFN F | AFN F (%) | AFN M | AFN M (%) | UQA Total | UQA F | UQA F (%) | UQA M | UQA M (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EJPR (est. 1973) | 434 | 1.9 | 827 | 226 | 27.3% | 601 | 72.7% | 552 | 145 | 26.3% | 407 | 73.7% |
| EJPR without PDY(p) | 244 | 2.1 | 521 | 140 | 26.9% | 381 | 73.1% | 473 | 124 | 26.2% | 349 | 73.8% |
| EJPR: PDY(p) | 190 | 1.6 | 306 | 86 | 28.1% | 220 | 71.9% | 83 | 24 | 28.9% | 59 | 71.1% |
| EPS (est. 2001) | 276 | 1.7 | 472 | 162 | 34.3% | 310 | 65.7% | 421 | 149 | 35.4% | 272 | 64.6% |
| EPSR (est. 2009) | 138 | 1.8 | 243 | 62 | 25.5% | 181 | 74.5% | 235 | 62 | 26.4% | 173 | 73.6% |
| PRX (est. 2019) | 10 | 1.6 | 16 | 3 | 18.8% | 13 | 81.3% | 16 | 3 | 18.8% | 13 | 81.3% |
| EJIR (est. 1995) | 204 | 1.4 | 287 | 85 | 29.6% | 202 | 70.4% | 272 | 80 | 29.4% | 192 | 70.6% |
| GA (est. 2015) | 318 | 1.3 | 398 | 122 | 30.7% | 276 | 69.3% | 332 | 108 | 32.5% | 224 | 67.5% |
| PSRM (est. 2013) | 203 | 2.2 | 456 | 73 | 16.0% | 383 | 84.0% | 391 | 66 | 16.9% | 325 | 83.1% |
| ECPR TOTAL | 1062 | 1.7 | 1845 | 538 | 29.2% | 1307 | 70.8% | 1375 | 409 | 29.7% | 966 | 70.3% |
| ECPR without PDY(p) | 872 | 1.8 | 1539 | 452 | 29.4% | 1087 | 70.6% | 1299 | 389 | 29.9% | 910 | 70.1% |
| IR TOTAL | 522 | 1.3 | 685 | 207 | 30.2% | 478 | 69.8% | 596 | 185 | 31.0% | 411 | 69.0% |
| TOTAL | 1583ᵃ | 1.7 | 2699 | 733 | 27.2% | 1966 | 72.8% | 2037 | 571 | 28.0% | 1466 | 72.0% |
| TOTAL without PDY(p) | 1393 | 1.7 | 2393 | 647 | 27.0% | 1746 | 73.0% | 1962 | 551 | 28.1% | 1411 | 71.9% |

ᵃ The total number of items in the database is 1584, but one of them (in EPS) was signed only institutionally and thus not considered for the authors’ distribution analysis. Highest values for female authorship marked Bold.

Fig. 1.

Authorship and co-authorship distribution (full dataset, n = 5278*). Note: *Three items from the total of 5281 in the dataset have institutional authorship only and are not included in this synopsis. **8 authors: 1; 9 authors: 5; 13 authors: 1; 16 authors: 1; 21 authors: 1. M: MALE single authors, F: FEMALE single authors

To identify authors who may have appeared with different variations of their names or whose given names were indicated only through initials, I searched their public CVs and list of publications. These were available usually on their institutional webpages and/or academic social media profiles (Google Scholar, ResearchGate). To identify especially retired or deceased authors who published in earlier decades and whose digital presence is low, I eventually retrieved the necessary information from acknowledgement sections, bibliographies and contributors’ lists of various volumes connected to the research fields of the respective authors as suggested by the content of the article(s) associated with that author. When the official name or the status of an institutional affiliation was not clear, I consulted their official website(s), as well as scholarly and policy reports on the status and history of higher education in various countries and/or specific research areas. If the official name of an institution changed during the analysed period, the most recent name was used within the dataset for the entire time frame as a unique identifier. If several institutions merged, they were all coded under the most recent name. Universities that belong to larger consortia/federations and which are often better known than the system to which they belong were coded as separate entities. In the rare situations in which the publicly available information was still insufficient to establish such details (especially for older items), I contacted colleagues from the respective institutions, who kindly provided the requested details.

To evaluate the number and distribution of institutional affiliations I considered the number of unique institutions (UQI) and each institution’s number of appearances (institutional fingerprint—IFN). In this study, each appearance of a unique institution is counted as 1 and then added to its institutional fingerprint. While this measurement option provides a straightforward way to assess and compare the weight of each unique institution within the set, it does not fully reflect the context of the respective affiliation (e.g. authors with multiple institutional affiliations in the same article, different authors from the same institution within the same article, etc.).
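The counting rule described above can be sketched in a few lines of Python. The nested-list layout, the institution names and the function name `institutional_fingerprint` are assumptions made for illustration, not the structure of the actual database.

```python
from collections import Counter

# Hypothetical input: each article is a list of authors, and each author
# is a list of the affiliations printed in the article.
articles = [
    [["Univ X", "Institute Y"], ["Univ X"]],  # two authors, one with two affiliations
    [["Univ Z"]],                             # single author, single affiliation
]


def institutional_fingerprint(articles):
    """Count every appearance of an institution as 1 (IFN)."""
    ifn = Counter()
    for authors in articles:
        for affiliations in authors:
            for institution in affiliations:
                # No weighting: co-affiliations and same-article colleagues
                # each add a full unit, as noted in the text.
                ifn[institution] += 1
    return ifn


ifn = institutional_fingerprint(articles)
uqi = len(ifn)  # number of unique institutions (UQI)
print(ifn.most_common(), uqi)
```

Here "Univ X" receives an IFN of 2 from a single article, which shows the limitation mentioned above: the measure records presence but not the context of each affiliation.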

Although the bibliometric identification details for each article provide technical information that may seem hardly politicized, as the codification process advanced, it became increasingly evident that part of this information was linked to various political preferences of the authors and/or the journals, adopted consciously or not. Consequently, the coding process required several strategies to diminish the implicit political bias, while still identifying the mainstream narrative. This is most evident in the case of country names. For accuracy, these were first coded with the name that the country of the institutional affiliation had in the journal at the time of the article’s publication, and corrections were applied only for spelling or location misplacements. Then, for consistency, these names were recoded considering potential border changes and the most recent official name. For instance, the Czech Republic and Czechia were both coded under Czechia, but Czechoslovakia was coded as a different entity, even if the same institution was associated with both Czechoslovakia and Czechia. In some cases, an additional criterion was required to maintain both data accuracy and a low level of political bias. To reflect the mainstream narrative present implicitly in the journals, this criterion was the most frequent way in which countries appear within the dataset. For example, since they were always listed as distinct in all the analysed journals, China, Taiwan, Macao and Hong Kong were coded as separate entities. However, when “Scotland” was named as the country of the institutional affiliation (mostly in 1990s articles), it was still coded under “UK” because these mentions were rare and inconsistently used for the same institution within the same journal.
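A minimal sketch of this normalization step, in Python, could take the form of a lookup table. The mapping below contains only the examples named in the text; the table name `CANONICAL` and the function `canonical_country` are hypothetical, and the real coding involved many more cases and manual judgement.

```python
# Illustrative mapping only: entries are limited to the examples in the text.
CANONICAL = {
    "Czech Republic": "Czechia",  # most recent official name
    "Czechia": "Czechia",
    "Scotland": "UK",             # rare and inconsistent mentions folded into UK
}


def canonical_country(label: str) -> str:
    """Map a country label as printed in a journal to its coded name.

    Labels without an entry are kept as printed, so that entities the
    journals always list separately remain separate.
    """
    cleaned = label.strip()
    return CANONICAL.get(cleaned, cleaned)


printed = ["Czech Republic", "Czechoslovakia", "Scotland", "Hong Kong", "China"]
coded = [canonical_country(label) for label in printed]
print(coded)
```

Keeping unmapped labels unchanged is the design choice that leaves Czechoslovakia, Hong Kong and China as distinct entities, in line with the rule described above.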

The legal status of certain institutions to which authors are affiliated also generated additional challenges. For instance, there are universities and other organizations which opened campuses or chapters in different countries. Also, there are universities designed/accredited, at least initially, in a different institutional and teaching model than the national system in which they were established and operate (e.g. Central European University, CEU). There are also institutions which were created as international initiatives such as universities (e.g. European University Institute, EUI), think tanks and research centres, professional associations or intergovernmental organizations. Some of the institutional affiliations in the dataset are also multinational companies. Not least, though rare, there are also non-affiliated authors (e.g. “independent scholar”, “attorney in private practice”) or authors who were already retired at the time of the article’s publication but used their previous affiliation (e.g. “Former EU Ambassador”).

For all these cases, the general rule was to assign them to the country in which the respective affiliation operates, according to data provided in the respective article, similarly to coding for local/national institutions. This rule was also applied for non-affiliated authors (e.g. “young French political scientist” was coded under “France”). Overseas university campuses were considered as distinct institutions and assigned to the country in which they operate, considering that they contribute mostly to the local dynamics in the teaching and research sector and that, in most international ranking/evaluation systems, they impact the reputation and metrics of the country in which they operate and not of the country of origin. If, through its mission, an organization does not operate in a specific country (e.g. International Political Science Association—IPSA), it was coded as “International”.

Exceptions were made in just two cases. First, political foundations acting in different countries were coded under the country of origin because, unlike other types of organizations with chapters abroad, their existence is intrinsically linked to the country of origin (e.g. Heinrich-Böll-Stiftung Washington was coded as a German and not as a US institution). For similar reasons, the “Delegation of the European Commission in the US” and “former EU ambassador” were coded as “International”, as they contribute mostly to the reputation of the European Union, an international organization. However, structures of intergovernmental organizations that are permanently established in a certain country were coded under the country where they are established, as they contribute significantly also to the reputation of that country and not only of the respective organization (e.g. NATO Defence College Rome was coded under “Italy” and the “European Commission” was coded under “Belgium”).
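The general rule and its two exceptions can be summarized as a small decision function. The following Python sketch is only an illustration under stated assumptions: the `Affiliation` fields, the flag names and the function `assign_country` are hypothetical, and in the study these decisions were made manually from the information in each article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Affiliation:
    name: str
    operating_country: Optional[str]      # None if not tied to a specific country
    origin_country: Optional[str] = None  # relevant for political foundations
    is_political_foundation: bool = False
    represents_igo_abroad: bool = False   # e.g. a delegation or an ambassador


def assign_country(aff: Affiliation) -> str:
    """Apply the general rule and the two exceptions described in the text."""
    # Exception 1: political foundations follow their country of origin.
    if aff.is_political_foundation and aff.origin_country:
        return aff.origin_country
    # Exception 2, and organizations with no specific country of operation.
    if aff.represents_igo_abroad or aff.operating_country is None:
        return "International"
    # General rule: the country in which the affiliation operates.
    return aff.operating_country


examples = [
    Affiliation("Heinrich-Böll-Stiftung Washington", "US",
                origin_country="Germany", is_political_foundation=True),
    Affiliation("Delegation of the European Commission in the US", "US",
                represents_igo_abroad=True),
    Affiliation("NATO Defence College Rome", "Italy"),
    Affiliation("European Commission", "Belgium"),
    Affiliation("International Political Science Association", None),
]
coded = {aff.name: assign_country(aff) for aff in examples}
print(coded)
```

The order of the checks matters: the exceptions are tested before the general rule, so an affiliation physically located in the US can still be coded as German or as "International".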

Where do we work?

Such diversity of affiliations raises several questions related to their typology, weight and evolution in the dataset. Therefore, for each institutional affiliation, I inductively coded the type of institution and whether it was a local or an international entity. This process generated two dozen types of institutions, which were then grouped into seven larger categories: (1) education and research (universities; diplomatic, military or intelligence higher education training schools; independent research institutes; academies of sciences; research units within national research councils); (2) government (ministries; governmental agencies; local governments; intergovernmental organizations, or IGOs); (3) parties and elections (political foundations; electoral authorities; parliaments); (4) think tanks and the NGO sector (local non-governmental organizations, or NGOs, and think tanks; international NGOs, or INGOs); (5) professional associations (national and international political science associations); (6) dissemination of information (publishers, archives, libraries, data and information centres); (7) companies (consulting; digital economy; public opinion/market research; banking; law). In the rare cases in which an institution could belong to two or more types, only one was chosen, considering the respective institution’s self-designation.

Of the 1029 unique institutions identified in the dataset, almost 89% are organizations dedicated primarily to education and/or research, most of which (799) are local universities. These organizations generated 97% of the collection’s total IFN (Table 4). Similar proportions exist also for the most recent period (i.e. 2015–2019), as well as at the journal level. While these distributions indicate that universities remain the main centres of political research, there are several notable dynamics. Most significantly, over time, the number and the fingerprint of research centres grew throughout the dataset, especially during the last decade. Both the number and fingerprint of research centres are still ten times smaller than those of universities, and the increase is largely due to contributions from a handful of Northern European countries, most notably Germany. However, this trend also suggests that in certain academic markets there is a stable influx of resources for political science expertise based in professional research centres outside universities, and that this influx contributes to the diversification of the institutional research landscape.

Table 4.

Categories of institutional affiliations

| Category | 1973–2020: UQI count | 1973–2020: UQI % | 1973–2020: IFN count | 1973–2020: IFN % | 2015–2019: UQI count | 2015–2019: UQI % | 2015–2019: IFN count | 2015–2019: IFN % |
|---|---|---|---|---|---|---|---|---|
| Education and research | 912 | 88.6 | 8242 | 97.35 | 583 | 91.52 | 2759 | 96.94 |
| Think tanks and NGOs (including INGOs) | 43 | 4.2 | 100 | 1.18 | 23 | 3.61 | 54 | 1.90 |
| Government (including IGOs) | 36 | 3.5 | 61 | 0.72 | 16 | 2.51 | 17 | 0.60 |
| Companies | 17 | 1.6 | 19 | 0.22 | 10 | 1.57 | 11 | 0.39 |
| Dissemination of information | 10 | 1.0 | 18 | 0.21 | 4 | 0.63 | 4 | 0.14 |
| Professional associations | 8 | 0.8 | 18 | 0.21 | – | – | – | – |
| Parties, parliaments and electoral authorities | 4 | 0.4 | 8 | 0.09 | 1 | 0.16 | 1 | 0.04 |
| Total | 1029 | 100 | 8466 | 100 | 637 | 100 | 2846 | 100 |

UQI: no. of unique institutions, IFN: institutional fingerprint

Diversification is also present within universities. Although data at the departmental level are limited since such details were not always mentioned in the journals, one may also notice a growing diversification of departmental affiliations, with authors particularly from methodology departments becoming increasingly common during the last two decades. Also, throughout the dataset, irrespective of the journal, the label “Political Science Department” is most often associated with US and Northern European universities. At the same time, for the last decade, one may notice a growing number of “Departments of Government”, especially in the US, UK, Northern European and Australian universities. Not least, in Europe, “international relations” is most often joined to “politics” or “government” departments while outside Europe IR departments seem to be rather independent or more likely associated with area studies.

Other noteworthy particularities are related to the local institutional context. For example, except for the EUI and CEU, most of the articles with authors affiliated to international universities, including international campuses, were published during the last decade, suggesting a growing relevance of such institutions on the international academic market. Among the countries with a significant presence of items in the dataset, Belgium has the largest proportion of international organizations (a third of all its unique institutions), from both outside and within academia. This may reflect the role that Belgium has acquired internationally as host of several major international organizations, most notably the EU and NATO, and consequently as a hub for networks that require specialized political research expertise.

Some characteristics seem nonetheless more specific to the journals in the collection. For instance, rather unexpectedly, almost half of the government fingerprint is represented by IGOs, and most of the IGO fingerprint appears in EJPR and EPS, not in the IR subset (i.e. EJIR and GA). This happens because, while the IGOs are more varied in the IR subset, altogether there are more articles with affiliations to EU institutions, and these articles treat comparative politics/public policy topics rather than IR subjects. Another finding is that the number and the fingerprint of international non-governmental organizations are very small. Then again, the number and the fingerprint of local NGOs and think tanks have increased significantly during the last two decades. At the same time, affiliations to companies appear mostly during the last decade. These trends may be due to the higher presence in the dataset of articles and journals oriented more towards a comparative politics agenda (which includes research on topics relevant for the NGO sector), as well as to the increase of “big data” research (relevant for and often possible with the support of multinational companies in the digital economy). Nonetheless, if this dataset is even a rough reflection of the job market for those with political research training, the growing relevance of local NGOs/think tanks and companies might also suggest that political science graduates are increasingly embracing career paths beyond the more typical academic or governmental affiliations.

Equal access?

Within the entire collection, the total number of affiliations (institutional fingerprint, IFN) is 8474 (8469 if one eliminates the cases of non-affiliated authors). This fingerprint is generated by 1020 unique institutions (UQI) from altogether 73 countries, and by nine affiliations labelled as “international”. However, only a much smaller number of countries and institutions have a significant impact. For example, the top five countries by IFN (UK: IFN = 1452, US: IFN = 1433, Germany: IFN = 829, Netherlands: IFN = 508, Italy: IFN = 359) generate 55% of the total institutional fingerprint. The first ten countries by IFN host more than half of all unique institutions (632), covering 72.6% of the total IFN, while the twenty-eight countries that occupy the first twenty-five positions cover 95% of the entire IFN. Fifteen countries, mostly non-European, have a single appearance in the entire dataset (IFN = 1) and so do almost half of all unique institutions. Similar distributions exist also in the ECPR and IR subsets, as well as at journal level.

For the ECPR subset, in particular, these distributions also largely reflect the institutional membership and its history. As Ghica (2020) identified, 46% of the ECPR institutional members are located in the six countries of the ECPR founding institutions (i.e. France, Germany, Netherlands, Norway, Sweden, UK), and 2 in 3 members from this original group of six are from either the UK or Germany. In the current collection, institutional affiliations from these six countries generate 44% of the ECPR subset’s IFN (49% without PDY(p) items), while two-thirds of the institutional affiliations located within the original group of six are either British or German. Furthermore, although the overall fingerprint of the ECPR’s founding institutions is only 8.7% of the entire ECPR subset (without PDY(p) items), five of these institutions are in the subset’s top 10 by IFN (Table 5).

Table 5.

Top 10 universities by IFN and subset

| ECPRᵃ (EJPR, EPS, EPSR, EJIR, PRX) | IFN | EISA: IR (EJIR, GA) | IFN | EPSA (PSRM) | IFN |
|---|---|---|---|---|---|
| 1. European University Institute (IT) | 130 | 1. London School of Economics and Political Science (UK) | 42 | 1. New York University (US) | 19 |
| 2. University of Amsterdamᶜ (NL) | 112 | 2. University of Oxfordᵇ (UK) | 25 | 2. University of Michigan (Ann Arbor) (US) | 18 |
| 3. University of Mannheimᵇ (DE) | 100 | 3. Uppsala University (SE) | 24 | 3. Texas A&M University (US) | 17 |
| 4. London School of Economics and Political Science (UK) | 97 | 4. University of Warwick (UK) | 21 | 4. Washington University (St. Louis) (US) | 15 |
| 5. University of Aarhus (DK) | 90 | 5. University of Cambridge (UK) | 19 | 5. Princeton University (US); Stanford University (US); Yale University (US) | 14 |
| 6. University of Oxfordᵇ (UK) | 86 | 6. University of Queensland (AS) | 18 | 6. University of Oxfordᵇ (UK); University of Mannheimᵇ (DE); Columbia University (US); LSE (UK) | 12 |
| 7. KU Leuven (BE); University of Leidenᵇ (NL) | 77 | 7. King's College London (UK); University of Copenhagen (DK) | 17 | 7. University of Zürich (CH); University of Vienna (AT) | 10 |
| 8. University of Essexᵇ,ᶜ (UK) | 75 | 8. KU Leuven (BE); Johns Hopkins University (US); Norwegian Institute of International Affairs (NO); Aberystwyth University (UK) | 15 | 8. Harvard University (US); Georgetown University (US); University of Chicago (US); University of Texas (Dallas) (US) | 9 |
| 9. University of Gothenburgᵇ (SE) | 73 | 9. European University Instituteᶜ (IT); University of Kent (UK); University of Melbourne (AS) | 14 | 9. Duke University (US); Rice University (US); University of Pittsburgh (US) | 8 |
| 10. University of Oslo (NO) | 63 | 10. University of Bremenᶜ (DE); University of Sheffield (UK); University of Stockholmᶜ (SE); Vrije Universiteit Brussel (BE) | 13 | 10. KU Leuven (BE); Pennsylvania State University (US); University College Londonᶜ (UK); University of Georgiaᶜ (US) | 7 |
| … | | | | | |
| 20. Sciences Po Parisᵇ (FR) | 41 | … | | … | |
| … | | | | | |
| 23. University of Strathclydeᵇ (UK) | 38 | 22. 231 institutions | 1 | 16. 97 institutions | 1 |
| … | | | | | |
| 31. University of Bergenᵇ (NO) | 26 | | | | |
| … | | | | | |
| 56. 382 institutions | 1 | | | | |

NOTE: IFN for the entire set. LEGEND: awithout PDY(p) items; bFounding ECPR institution; c the institution is not in top 10 for the 2015–2019 subset; COUNTRIES: AT Austria, AS Australia, BE Belgium, CH Switzerland, DE Germany, DK Denmark, IT Italy, NL Netherlands, NO Norway, SE Sweden, UK United Kingdom, US United States. European universities marked Bold

At first sight, the dataset seems to be largely European, as Europe-based institutions generate 73% of the entire institutional fingerprint (without the PDY(p) items). One may also easily argue for a transatlantic core because the rest of the IFN is associated mostly with institutions from the US (19.2%) and Canada (2.7%). However, several significant variations exist between the journals. For all ECPR journals (except PRX), as well as for PSRM, the top three contributors by both IFN and UQI are the US, the UK and Germany. But in GA, only the UK makes it to the top three (in first position); the United States does not make the top ten, and Germany, in fifth place, has less than half of the UK’s IFN. Most strikingly, although it is the journal of a European political science association, PSRM seems to be largely a US affair, as contributions from the US dwarf all other inputs: 60% of the journal’s IFN is generated by authors affiliated to US institutions, which also account for almost half of the journal’s unique institutions. Furthermore, the IFN of US institutions is more than five times that of the second-placed country (UK) and more than ten times that of the third-placed (Germany). In the EJIR, the contributions from the US and the UK also dominate all others: their combined IFN represents 53% of the journal’s IFN, their combined UQI constitutes 51% of the journal’s UQI, and the rest of the top five (Germany, Australia, Sweden) have between a third and less than a fifth of either the UK’s or the US’s IFN (Table 6). For PRX, the number of items in the collection (31) is too small for a similar analysis. However, it is still remarkable in the context of this discussion for two reasons: (1) in less than 2 years, it has already attracted contributions from authors affiliated to 53 institutions from 22 countries (IFN: 71); but (2) almost half of its institutional landscape originates in only two countries: Germany (IFN: 21, UQI: 13) and the UK (IFN: 10, UQI: 10).

Table 6.

Institutional affiliation overview (by journal and country, full dataset)

| EJPR (1973–2020)<sup>a</sup> | | | EPS (2001–2020) | | | EPSR (2009–2020) | | | EJIR (1995–2020) | | | GA (2015–2020) | | | PSRM (2013–2020) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Country | IFN | UQI | Country | IFN | UQI | Country | IFN | UQI | Country | IFN | UQI | Country | IFN | UQI | Country | IFN | UQI |
| 1. UK | 483 | 64 | 1. UK | 431 | 87 | 1. Germany | 74 | 30 | 1. USA | 270 | 127 | 1. UK | 85 | 31 | 1. USA | 377 | 98 |
| 2. USA | 441 | 128 | 2. USA | 180 | 99 | 2. UK | 73 | 26 | 2. UK | 258 | 51 | 2. Belgium | 65 | 16 | 2. UK | 70 | 18 |
| 3. Germany | 384 | 65 | 3. Germany | 143 | 42 | 3. USA | 64 | 41 | 3. Germany | 89 | 32 | 3. Sweden | 41 | 9 | 3. Germany | 37 | 15 |
| 4. Netherlands | 239 | 15 | 4. Italy | 126 | 17 | 4. Switzerland | 44 | 9 | 4. Australia | 63 | 14 | 4. Turkey | 37 | 18 | 4. Switzerland | 23 | 7 |
| 5. Switzerland | 137 | 13 | 5. Netherlands | 82 | 14 | 5. Sweden | 37 | 10 | 5. Sweden | 48 | 8 | 5. Germany | 32 | 22 | 5. Canada | 16 | 8 |
| 6. Denmark | 127 | 6 | 6. Belgium | 63 | 9 | 5. Netherlands (6) | 37 | 8 | 6. Canada | 36 | 16 | 6. Netherlands | 29 | 10 | 6. Sweden | 15 | 6 |
| 7. Italy | 126 | 20 | 7. Spain | 51 | 24 | 6. Denmark (7) | 31 | 7 | 7. Norway | 33 | 10 | 7. Denmark | 25 | 8 | 7. Austria | 13 | 3 |
| 8. Sweden | 125 | 11 | 8. Ireland | 35 | 8 | 7. Italy (8) | 29 | 15 | 8. Switzerland | 31 | 9 | 8. Italy | 21 | 7 | 8. Spain | 9 | 5 |
| 9. Norway | 107 | 17 | 9. Sweden | 31 | 12 | 8. Spain (9) | 20 | 10 | 9. Netherlands | 27 | 8 | 9. Norway | 18 | 8 | 8. Belgium (9) | 9 | 3 |
| 10. Canada | 90 | 21 | 10. Canada | 29 | 10 | 8. Norway (10) | 20 | 9 | 10. Denmark | 24 | 5 | 10. Australia | 14 | 7 | 9. Italy (10) | 8 | 4 |
| 11. Belgium | 87 | 9 | 11. Switzerland | 27 | 12 | 9. Belgium (11) | 18 | 5 | 11. Italy | 17 | 7 | 11. Finland | 9 | 4 | 10. Netherlands (11) | 7 | 5 |
| 12. France | 84 | 22 | 11. Denmark (12), Norway (12) | 27 | 8 | 10. Finland (12) | 15 | 5 | 12. Finland | 12 | 7 | 12. USA | 8 | 8 | 11. Finland (12) | 6 | 2 |
| 13. Spain | 72 | 21 | 12. Portugal (14) | 22 | 4 | 11. Canada (13) | 13 | 8 | 13. Belgium | 11 | 8 | 13. Canada | 7 | 5 | 12. Denmark (13) | 5 | 3 |
| 14. Ireland | 60 | 6 | 13. France (15) | 20 | 13 | 12. Austria (14) | 8 | 3 | 14. Israel | 8 | 4 | 13. Estonia (14) | 7 | 1 | 12. UAE (14) | 5 | 1 |
| 15. Australia | 54 | 11 | 14. Australia (16) | 19 | 5 | 13. Brazil (15) | 7 | 5 | 15. Turkey | 7 | 4 | 14. France, India (15) | 4 | 4 | 13. Norway (15) | 4 | 2 |
| OTHER 31 countries + "International" | 221 | 75 | 15. Finland (17) | 18 | 5 | 13. Ireland (16) | 7 | 4 | OTHER 24 countries | 55 | 41 | 14. China, Spain (17) | 4 | 3 | 14. Japan (16) | 3 | 3 |
| | | | OTHER 32 countries + "International" | 144 | 87 | 14. France (17) | 6 | 6 | | | | 14. Ireland, Poland (19) | 4 | 2 | 14. Ireland (17) | 3 | 2 |
| | | | | | | 14. Hungary (18) | 6 | 3 | | | | 14. Portugal (21) | 4 | 1 | 15. Argentina, France, Hong Kong, Singapore, Taiwan (18) | 2 | 2 |
| | | | | | | 15. Australia (19) | 5 | 2 | | | | 15. Russia (22) | 3 | 3 | 15. New Zealand (23) | 2 | 1 |
| | | | | | | OTHER 14 countries | 24 | 20 | | | | 15. Bangladesh, Brazil, International (23) | 3 | 2 | OTHER 6 countries | 6 | 6 |
| | | | | | | | | | | | | 15. Brunei, Greece, Iceland, Qatar (26) | 3 | 1 | | | |
| | | | | | | | | | | | | OTHER 18 countries | 24 | 22 | | | |
| TOTAL 47<sup>b</sup> countries | 2837 | 504 | TOTAL 50<sup>b</sup> countries | 1476 | 465 | TOTAL 33 countries | 538 | 226 | TOTAL 39 countries | 989 | 351 | TOTAL 48<sup>b</sup> countries | 474 | 208 | TOTAL 29 countries | 628 | 202 |

IFN: institutional fingerprint, UQI: no. of unique institutions

<sup>a</sup> without the PDY(p) items; <sup>b</sup> including the category “International”.

Geographical distribution

If one considers the collection with and without the PDY(p) items, there are also several noteworthy differences, especially at the country level. For instance, PDY(p) items generate more than half of the national IFN for seventeen countries. These are mostly Central and Eastern European states (Bulgaria, Croatia, Czechia, Hungary, Latvia, Lithuania, Poland, Romania, Slovakia, Slovenia), and countries that are smaller or more isolated (Cyprus, Iceland, Luxembourg, Malta) or further away from Europe (Israel, Japan, New Zealand). In the case of Cyprus, affiliations to a university in Northern Cyprus appear only in the PDY(p) subset. As shown in Table 7, Southern European states (Greece, Portugal, Spain) or other smaller European states (Belgium, Estonia, Ireland) also have a large part of their IFN from PDY(p) contributions.

Table 7.

PDY(p) dataset impact on country IFN

| | COUNTRY | Total IFN | IFN without PDY(p) | PDY(p) in national subset (%) |
|---|---|---|---|---|
| 1 | Malta | 29 | 1 | 97 |
| 2 | Luxembourg | 61 | 5 | 92 |
| 3 | Slovakia | 48 | 4 | 92 |
| 4 | Latvia | 21 | 3 | 86 |
| 5 | Cyprus | 41 | 7 | 83 |
| 6 | Lithuania | 29 | 5 | 83 |
| 7 | Iceland | 59 | 11 | 81 |
| 8 | Slovenia | 24 | 6 | 75 |
| 9 | Czech Republic | 58 | 15 | 74 |
| 10 | New Zealand | 44 | 12 | 73 |
| 11 | Croatia | 17 | 5 | 71 |
| 12 | Poland | 61 | 19 | 69 |
| 13 | Bulgaria | 12 | 4 | 67 |
| 14 | Japan | 53 | 19 | 64 |
| 15 | Israel | 82 | 30 | 63 |
| 16 | Hungary | 82 | 34 | 59 |
| 17 | Romania | 21 | 9 | 57 |
| 18 | Greece | 48 | 26 | 46 |
| 19 | Estonia | 28 | 17 | 39 |
| 20 | Ireland | 161 | 114 | 29 |
| 21 | Belgium | 354 | 257 | 27 |
| 22 | Spain | 215 | 158 | 27 |
| 23 | Portugal | 54 | 40 | 26 |
| 24 | Austria | 124 | 96 | 23 |
| 25 | Finland | 144 | 116 | 19 |
| 26 | Canada | 237 | 192 | 19 |
| 27 | Australia | 194 | 158 | 19 |
| 28 | France | 145 | 119 | 18 |
| 29 | Netherlands | 508 | 425 | 16 |
| 30 | Norway | 247 | 209 | 15 |
| 31 | Switzerland | 296 | 264 | 11 |
| 32 | Denmark | 268 | 240 | 10 |
| 33 | UK | 1542 | 1410 | 9 |
| 34 | Italy | 359 | 332 | 8 |
| 35 | USA | 1432 | 1346 | 6 |
| 36 | Germany | 829 | 780 | 6 |
| 37 | Sweden | 316 | 298 | 6 |
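The last column of Table 7 follows from the two IFN columns. As a brief sketch, assuming the percentage is the difference between the total IFN and the IFN without PDY(p), divided by the total IFN and rounded to the nearest integer, a few rows can be retraced as follows. The names `table7` and `pdy_share` are illustrative.

```python
# (total IFN, IFN without PDY(p)) for a few countries, copied from Table 7.
table7 = {
    "Malta": (29, 1),
    "Cyprus": (41, 7),
    "Hungary": (82, 34),
    "Ireland": (161, 114),
    "Germany": (829, 780),
}


def pdy_share(total_ifn, ifn_without_pdy):
    """Percentage of a country's IFN that comes from PDY(p) items."""
    return 100 * (total_ifn - ifn_without_pdy) / total_ifn


# Rounded shares, as reported in the last column of Table 7.
shares = {country: round(pdy_share(total, without))
          for country, (total, without) in table7.items()}

# Countries for which PDY(p) items generate more than half of the national IFN.
majority_pdy = [country for country, share in shares.items() if share > 50]

print(shares)
print(majority_pdy)
```

Applied to the full table, the same threshold of 50% singles out the first seventeen rows.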

Since within the PDY(p) project most authors who write about a certain country are also affiliated to institutions from that country or are part of its academic diaspora, it is likely that this long-term research programme had a substantial impact on making expertise on and from less researched European countries better known, especially since many of these states are less visible as case studies or in comparative datasets. However, the range of this expertise is limited by the fact that the contributions are methodologically and structurally similar, and that the same one or two persons often authored the respective country reports for decades. At the same time, not all European countries are represented in the PDY project. For example, of the 47 member states of the Council of Europe, only two thirds (31) are currently profiled, with most of the missing countries being from the Balkans or Eastern Europe. These are also the areas from which European institutional affiliations are least represented or absent in the dataset beyond the PDY project. In fact, although Central and East European countries, including the Balkans, Russia and Turkey, cover half of the continent, affiliations to institutions from these states have generated altogether only 4% of the entire European IFN. About a third of these affiliations are from Turkish institutions, which are mostly in the IR subset. The rest are largely in EPS, GA and EJPR. Their number is, however, growing: about two-thirds of all articles with (co)authors affiliated to institutions from Central and Eastern European countries have been published during the last decade.

Access to resources

Beyond the Western European/transatlantic core and its stickiness within the European political science academic infrastructures, a feature which at least in the case of the ECPR has been extensively discussed at a previous major anniversary (De Sousa et al. 2010; Newton and Boncourt 2010), this dataset also makes more visible the outlines of less debated dynamics. These are related to access to resources. For example, all seven journals publish articles exclusively in English, the lingua franca of contemporary scientific research. Yet it is less clear to what extent that makes them genuinely international/cosmopolitan (as opposed to parochial) and offers similar access to both native and non-native English-speaking scholars. At least in terms of invested resources (including time) for academic language learning/language proofing, the costs for native speakers of English or for scholars located in countries/institutions where English is an official language are arguably lower than for non-native speakers or for scholars outside such countries/institutions.

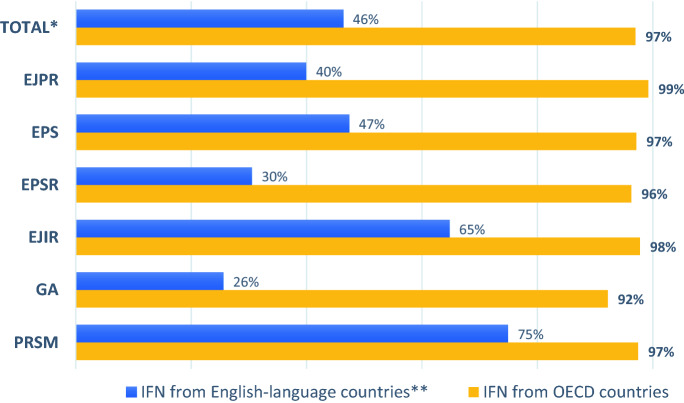

Within this collection, institutions located in countries where English is an official/de facto language of communication (Australia, Canada, India, Ireland, Malta, New Zealand, UK, US) produce almost half of the institutional fingerprint. In the extreme case of PSRM, they produce almost 75% of the journal’s IFN. At the opposite end is GA, an international relations journal, with only 25.5% of its IFN generated by institutions located in countries where English is an official/de facto language. However, being an IR journal does not necessarily guarantee a more varied institutional landscape: in EJIR, also an IR periodical, the IFN produced within a native English institutional context constitutes 65% of the outlet’s entire IFN (Fig. 2).

Fig. 2.

OECD and English-language countries IFN (full dataset). Notes: *Data for Total and EJPR without PDY(p) items. **Countries where English is an official or de facto language for a large part of the population and in higher education (Australia, Canada, Ireland, India, Malta, New Zealand, UK, US)
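The share reported for PSRM can be approximately retraced from the country rows of Table 6. The sketch below assumes that, in rows listing several tied countries, the IFN value applies to each country named. Since the residual "OTHER 6 countries" row is not broken down by country, the computation yields only a lower and an upper bound. The variable names are illustrative.

```python
# Countries treated in the article as having English as an official/de facto language.
ENGLISH_COUNTRIES = {"Australia", "Canada", "India", "Ireland", "Malta",
                     "New Zealand", "UK", "USA"}

# PSRM rows listed individually in Table 6 (country -> IFN).
psrm_listed = {
    "USA": 377, "UK": 70, "Germany": 37, "Switzerland": 23, "Canada": 16,
    "Sweden": 15, "Austria": 13, "Spain": 9, "Belgium": 9, "Italy": 8,
    "Netherlands": 7, "Finland": 6, "Denmark": 5, "UAE": 5, "Norway": 4,
    "Japan": 3, "Ireland": 3, "Argentina": 2, "France": 2, "Hong Kong": 2,
    "Singapore": 2, "Taiwan": 2, "New Zealand": 2,
}
PSRM_OTHER_IFN = 6    # "OTHER 6 countries" row, not attributable to a country
PSRM_TOTAL_IFN = 628  # TOTAL row for PSRM

# Consistency check: listed rows plus the residual row add up to the total.
assert sum(psrm_listed.values()) + PSRM_OTHER_IFN == PSRM_TOTAL_IFN

english_listed = sum(ifn for country, ifn in psrm_listed.items()
                     if country in ENGLISH_COUNTRIES)

# Lower bound: none of the residual countries is English-language.
lower_bound = 100 * english_listed / PSRM_TOTAL_IFN
# Upper bound: all of the residual countries are English-language.
upper_bound = 100 * (english_listed + PSRM_OTHER_IFN) / PSRM_TOTAL_IFN

print(f"{lower_bound:.1f}% to {upper_bound:.1f}%")
```

Both bounds lie close to the "almost 75%" reported for PSRM. The same bounding logic would apply to the other journals, although their residual rows are larger and the bounds correspondingly wider.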

Even more striking than the language conundrum is the fact that institutions from countries which are members of the Organisation for Economic Co-Operation and Development (OECD), a common proxy for affluent states, generate 97.7% of the entire collection’s IFN (without PDY(p)) and between 92% and 99% for each journal (Fig. 2). The relevance of affluence and access to resources is also notable at the subnational level, as revealed by the distribution of universities and research institutes located in countries with larger fingerprints in the dataset. For example, in Germany, Italy and Spain, there are significantly fewer or no authors affiliated to institutions from poorer or more isolated regions (Table 8). In the case of Germany, three decades after the territorial reunification, East German institutions are still largely underrepresented, while Baden-Württemberg, the state with the highest investment in research (5% of the state’s GDP; German Federal Foreign Office 2020), also ranks first in academic output in this dataset (Table 8).

Table 8.

Institutional fingerprint distribution of education and research institutions at subnational level: Germany, Italy, Spain 1973–2020 (without PDY(p))

| GERMAN STATES | UQI | IFN | ITALIAN REGIONS | UQI | IFN | SPANISH REGIONS | UQI | IFN |
|---|---|---|---|---|---|---|---|---|
| Baden-Württemberg | 14 | 256 | Tuscany | 8 | 184 | Catalonia | 7 | 60 |
| Berlin* | 13 | 116 | Emilia-Romagna | 3 | 37 | Madrid | 13 | 58 |
| Nordrhein-Westfalen | 14 | 104 | Lombardy | 4 | 36 | Basque Country | 3 | 13 |
| Bayern (Bavaria) | 10 | 74 | Trentino-South Tyrol | 2 | 17 | Andalusia | 3 | 6 |
| Bremen | 2 | 39 | Piedmont | 3 | 16 | Galicia | 2 | 5 |
| Hamburg | 4 | 32 | Lazio | 4 | 12 | Valencian Community | 2 | 5 |
| Niedersachsen (Lower Saxony) | 6 | 28 | Sicily | 1 | 12 | Castile and León | 1 | 4 |
| Rheinland-Pfalz | 4 | 25 | Campania | 4 | 8 | La Rioja | 1 | 3 |
| Hessen | 6 | 11 | Calabria | 1 | 2 | Aragon | 1 | 1 |
| ***Brandenburg*** | 3 | 9 | Veneto | 1 | 2 | Asturias | 1 | 1 |
| ***Sachsen (Saxony)*** | 3 | 6 | Abruzzo | 1 | 1 | Navarre | 1 | 1 |
| Schleswig-Holstein | 3 | 6 | Friuli-Venezia Giulia | 1 | 1 | Region of Murcia | 1 | 1 |
| ***Thüringen (Thuringia)*** | 2 | 6 | Liguria | 1 | 1 | Balearic Islands | – | – |
| ***Sachsen-Anhalt (Saxony-Anhalt)*** | 2 | 5 | Aosta Valley | – | – | Canary Islands | – | – |
| ***Mecklenburg-Vorpommern*** | 2 | 3 | Apulia | – | – | Cantabria | – | – |
| Saarland | – | – | Basilicata | – | – | Castilla–La Mancha | – | – |
| TOTAL | 88 | 720 | Marche | – | – | Ceuta | – | – |
| | | | Molise | – | – | Extremadura | – | – |
| | | | Sardinia | – | – | Melilla | – | – |
| | | | Umbria | – | – | TOTAL | 36 | 158 |
| | | | TOTAL | 34 | 329 | | | |

NOTES (Germany): *There is no institution located in East Berlin in the dataset before the German reunification. States marked in bold and italic are on the territory of the former East Germany. The University of Mannheim generates almost half of Baden-Württemberg’s IFN and 16% of Germany’s education and research IFN.

NOTE (Italy): The European University Institute accounts for 73% of Tuscany’s IFN and 41% of Italy’s education and research IFN.

NOTE (Spain): The top 3 universities, Universitat Pompeu Fabra Barcelona (IFN 28), Autonomous University of Barcelona (IFN 21) and Complutense University Madrid (IFN 13), generate 40% of Spain’s education and research IFN.

Such figures and distributions are not only a blunt reminder that research output is highly dependent on the resources invested in it and that richer/less isolated countries, regions and institutions are more likely to have such resources, but also a potential indicator of more structural/long-term biases and limitations that may be present in work published in (influential) scholarly journals and in the research agendas that they reflect. For a more nuanced analysis of such matters, of how they affect political science in Europe and of the impact of such distorted knowledge on decision-making, we need further investigation on larger and more complex datasets. However, as Wilson and Knutsen (2020) illustrate, this is a conversation that both globally and locally we can no longer avoid in the discipline.

Gender and collaboration patterns

Another structural challenge related to diversity and easily noticeable in this collection is that of gender inequality. Overall, the database includes 4533 unique authors (UQA) with a total author fingerprint (AFN) of 8161 (Table 2). While it seems to have increased lately (Table 3), the share of women authors and their fingerprint is still less than half that of men. At the same time, journals that favour quantitative methodology articles, such as PSRM, have significantly fewer women authors. This is a pattern already observed on multiple occasions within our discipline with different journals/datasets and beyond periodicals (Teele and Thelen 2017; Alter et al. 2020), as well as recently assessed in (relation to) ECPR publications (Grossman 2020; Closa et al. 2020).

Such analyses, which also consider the input level, have shown that, while they are more numerous than ever in academia and they sometimes have slightly more successful acceptance rates than men, women still tend to submit significantly fewer manuscripts (Stockemer et al. 2020). This is mostly due to unequal distributions of tasks/roles between genders both within academia and outside it. At the same time, the number of manuscript submissions and published work (co)authored by women seem to rise in European political science outlets when professional associations and publishers adopt structural measures aimed at reducing gender inequality such as reducing the gender gap in editorial teams and institutionally promoting systematic research and awareness on gender inequalities in academia (Deschouwer 2020; Grossman 2020).

However, as this dataset indicates, the overall pace in reducing gender inequality is still slow and the trend is non-linear. In fact, exploratory data visualization suggests that, if nothing changes, most journals still need around two decades to close the gender gap, with EPS and GA possibly closing it in about a decade (Fig. 3). As illustrated particularly by EPS and GA (Tables 2, 3), periodicals with more varied editorial agendas both in formal genre and methodology, as well as with a more varied geographical distribution of authors (Table 5) seem to be more gender-balanced. Interestingly, in the journals that include other genres than original research articles, gender inequality does not seem to be related to the form in which scholarly arguments are formulated, as the gender distribution by genre roughly mirrors the overall gender distribution in the respective journal.

Fig. 3.

Female authorship* fingerprint (yearly percentage, 2010–2019). Note: *including co-authorship

Where a gendered pattern seems to be most visible is in the models of cooperation. Traditionally, articles had a single author, most often male. This is particularly visible for the first three decades of EJPR (1973–2000), when 76% of all articles were single-authored and 85% of all articles had only male (co)authors. For that period, of all articles with male-only authors 80% were single-authored. In the case of female-only items, 92% were single-authored. Gradually, co-authorship has become increasingly common in all journals. For instance, during the last decade only 53.9% of articles have been single-authored (Fig. 4), a proportion which remained stable also during the last 5 years. However, male-only articles (of which 61% single-authored) still account for 62.7% of all published articles during the last decade. For the same period, female-only articles (of which 81% single-authored) account for just 19% of all published items and are thus virtually as many as mix-gender texts (18.3%) (Fig. 4). These proportions are similar also for the last 5 years.

Fig. 4.

Authorship distribution by type of collaboration and gender (2011–2020*, n = 2273). Note: * The period 2011–2020 was chosen as equivalent for “the most recent decade” because for this evaluation the most recent available data is more relevant than whether 2020 data is complete

At the same time, during the last decade, female-only collaborative articles had at most three authors, while male-only collaborative articles had up to seven authors. This may suggest that, while the number of women in academia increased, men still have (access to) larger networks of male academic peers than women have to women academic peers. The differences identified in this dataset may be the effect of the increasing weight of quantitative research in political science journals. Due to the larger volume of work in collecting and processing data, collaboration (with more authors) is more likely in quantitative research and since men are still more numerous in this field, they are also more likely to collaborate among themselves.

Then again, for the entire period, for the last decade, as well as for the last 5 years, about 60% of all mix-gender articles are gender-balanced (i.e. between 40 and 60% for either gender, with most in fact at 50%). Furthermore, during the last decade, in mix-gender articles in which co-authors are not alphabetically listed (n = 185), men and women are equally distributed in a first-author position (F:92, M:93). In other words, apart from addressing the structural biases that generate gender gaps at manuscript submission level, the increase of mix-gender research teams might also be a potentially significant path to ensure a more gender-balanced authorship.
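The categories used in this part of the analysis can be summarised in a short sketch. It assumes a binary F/M coding per (co)author, which is a simplification made here for illustration, and it applies the 40–60% interval mentioned above as the criterion for a gender-balanced article. The function name and return format are hypothetical.

```python
def classify_authorship(genders):
    """Classify one article from a list of 'F'/'M' codes, one per (co)author.

    Returns (collaboration type, gender composition, gender-balanced flag).
    The binary coding is an assumption of this sketch.
    """
    n = len(genders)
    if n == 0:
        raise ValueError("an article needs at least one author")
    women = genders.count("F")

    collaboration = "single-authored" if n == 1 else "co-authored"
    if women == 0:
        composition = "male-only"
    elif women == n:
        composition = "female-only"
    else:
        composition = "mix-gender"

    # Gender-balanced: women's share between 40% and 60% (inclusive).
    # Integer arithmetic avoids floating-point edge cases at the boundaries.
    balanced = composition == "mix-gender" and 2 * n <= 5 * women <= 3 * n
    return collaboration, composition, balanced


print(classify_authorship(["M"]))
print(classify_authorship(["F", "M"]))
print(classify_authorship(["F", "M", "M"]))
```

Under this criterion, a two-author article with one woman and one man is balanced, whereas a three-author article with a single woman (33%) is not.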

Currently, the average number of authors per article oscillates around 2, with small differences at journal level (Table 3), which seem to be related mostly to certain (sub)disciplinary patterns. For example, items focusing more on methodological puzzles or quantitative analysis have more frequently two or more authors, while most IR articles are still largely single-authored. Throughout the entire collection, as well as during the last decade, most collaborative articles have authors listed in alphabetical order at a ratio of about 2:1 in favour of alphabetical listing. Significant variations exist among journals, especially more recently. For example, during the last 5 years, 95% of all collaborative articles that EJIR published had the co-authors alphabetically listed, while for EPS the non-alphabetically listed co-authorship has become the norm (i.e. 54% of all collaborative articles). EPSR and GA have almost an equal distribution of alphabetically and non-alphabetically listed collaborative articles, while EJPR and PSRM maintain the 2:1 ratio.
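Whether the co-authors of an article are alphabetically listed can be checked mechanically. The following is a minimal sketch that compares surnames case-insensitively; it does not handle diacritics or name particles in any special way, and the function name is illustrative. The surname lists are taken from entries in the reference list of this article.

```python
def is_alphabetical(surnames):
    """Return True if the surnames appear in (case-insensitive) alphabetical order."""
    keys = [surname.casefold() for surname in surnames]
    return keys == sorted(keys)


examples = {
    "Teele and Thelen 2017": ["Teele", "Thelen"],
    "Closa et al. 2020": ["Closa", "Moury", "Novakova", "Qvortrup", "Ribeiro"],
    "Wilson and Knutsen 2020": ["Wilson", "Knutsen"],
    "Stockemer et al. 2020": ["Stockemer", "Blair", "Rashkova"],
}

for label, surnames in examples.items():
    print(label, is_alphabetical(surnames))
```

An alphabetical listing can also arise by chance, particularly for two-author articles, so such a check describes the listing itself rather than the authors' intent.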

Irrespective of the journal, the higher the number of authors, the more likely it is that they are not alphabetically listed, suggesting that the first author assumes a coordinating role. A potential coordination role of the first listed author is noticeable also in articles with two or more authors who collaborate regularly, when the order in which they are listed changes. But such cases could also indicate an attempt to give each other a chance to be more visible because many readers may remember only the first name in a co-authors list. Not least, as illustrated most notably by the yearly alternation of the authors’ order for the PDY(p) items on Cyprus (with one author affiliated to a university on the Greek side and the other on the Turkish side), such changes in the authors’ order might also help appease political or institutional sensitivities.

Conclusion

Given the expanding landscape of research that requires larger and ever more complex teams, we may expect that the number of co-authored articles, as well as the accompanying reputational sensitivities related to the gender, geographical location/institutional affiliation and order of the co-authors will increase. How these dynamics will continue to be reflected in scientific publications is still unclear. In a more optimistic scenario, which the patterns identified in the mix-gender articles in this dataset seem to support, the spread of collaborative research could diminish the gender gaps in publication output in the long run. Collaboration may also be key to gaining access to, and making more visible, the knowledge potential of areas that are less studied, more scientifically isolated and/or have fewer (research) resources. Yet, without systematic monitoring, public awareness and measures aimed at reducing the gaps at all levels, geographical, gendered or resources-based structural inequalities such as those identified in this collection, as well as in other datasets, will continue to be reproduced.

Beyond fairness and other moral grounds, such concerns are ultimately about the quality of science and implicitly about the impact that decisions based on such science have on our societies. As illustrated particularly by the puzzles of geographical distribution and access to resources reflected within this collection, just being international in scope and reach, and/or using English as language of academic communication do not necessarily make scientific outlets less parochial. Within the current system of academic prestige and rankings, periodicals such as those analysed here have become major gatekeepers. Therefore, if they do not consider diversity as an editorial priority, journals may perform worse on such criteria, contributing thus to the reproduction of structural inequalities that favour scientific parochialism and groupthink. More significantly, without encouraging genuine diversity in authorship, journals may also facilitate the distortion of knowledge on political phenomena and consequently contribute to bad governance in our societies, even if they follow the highest international standards in matters of scientific publication.

However, journals can also push this agenda forward in our discipline, and some of them have actively done so. After establishing itself as a primary venue for such debates in (European) political science and performing much better than the average on diversity criteria, EPS enjoys a privileged position. That is why, beyond reflecting on what we have achieved so far, its current anniversary could also be an opportunity to start a more systematic conversation on the responsibility that we as political scientists have in our societies, as well as on how the present limitations of our discipline impact the knowledge that we produce.

Acknowledgements

The initial idea for this study came while documenting and receiving feedback from Thibaud Boncourt, Isabelle Engeli and Diego Garzia for a different piece of research that I prepared for a volume marking the 50-year anniversary of ECPR. I started building the dataset in late 2019, partly with the support of students enrolled in a research internship programme at the Centre for International Cooperation and Development Studies, University of Bucharest. Of these younger political scientists, I am particularly thankful to Sebastian Bălănică, Constança Botero Pires Soares, Lorena Dodu, Miruna Ioana Muscan and Mădălina Necoară, whose questions, careful data check and observations inspired me to open different research paths. Especially during the more difficult moments I had while working on this piece within the context of the COVID-19 pandemic, I received priceless peer support from Raluca Alexandrescu, Ionela Băluță, Luis De Sousa, Anca Dohotariu, Florin Feșnic, Bogdan Mihai Radu and Claudiu Tufiș. Although their list is too long to be included here, I also thank the many colleagues from European, North American, Asian and Latin American universities with whom I conversed on the topic during the last year and who helped me identify and understand significant details about the history of various higher education institutions, research infrastructures or national education systems relevant for the dataset. Not least, I am grateful to the editors and reviewers for their patience, as well as timely, detailed and gracious feedback. This research did not benefit from any funding.

Luciana Alexandra Ghica

is Associate Professor at the Faculty of Political Science (Department of Comparative Governance and European Studies), University of Bucharest, where she also acts as founding Director of the Centre for International Cooperation and Development Studies (IDC). She studied political science and international relations at the University of Bucharest, Central European University (Budapest, Hungary) and University of Oxford, where she specialized in the analysis of international cooperation processes, with a focus on the institutional and discursive impact of democratization on foreign policymaking. She edited the first Romanian encyclopaedia of the European Union (c2005, three editions), co-edited the first Romanian handbook of security studies (2007), and authored a monograph on the relations between Romania and the European Union (2006), as well as several studies on foreign policy, international cooperation, and the dynamics of political science as a discipline in Central and Eastern Europe. Since 2012 she serves as an elected member of the board of Research Committee 33 (The Study of Political Science as a Discipline) of the International Political Science Association (IPSA).

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Alter KJ, Clipperton J, Schraudenbach E, Rozier L. Gender and Status in American Political Science: Who Determines Whether a Scholar Is Noteworthy? Perspectives on Politics. 2020;18(4):1048–1067. doi: 10.1017/S1537592719004985.

- Closa C, Moury C, Novakova Z, Qvortrup M, Ribeiro B. Mind the (Submission) Gap: EPSR Gender Data and Female Authors Publishing Perceptions. European Political Science. 2020;19(3):428–442. doi: 10.1057/s41304-020-00250-5.

- De Sousa L, Moses J, Briggs J, Bull M. Forty Years of European Political Science. European Political Science. 2010;9(S1):S1–S10.

- Deschouwer K. Reducing Gender Inequalities in ECPR Publications. European Political Science. 2020;19(3):416–427. doi: 10.1057/s41304-020-00249-y.

- German Federal Foreign Office. Federal States of Germany [Section on Official Governmental Portal Deutschland.de]. 2020. Last updated 29 Sept. 2020. https://www.deutschland.de/en/topic/politics/germany-europe/federal-states. Accessed 2 October 2020.

- Ghica LA. From Imagined Disciplinary Communities to Building Professional Solidarity: Political Science in Postcommunist Europe. In: Boncourt T, Engeli I, Garzia D, editors. Political Science in Europe: Achievements, Challenges, Prospects. Lanham: Rowman & Littlefield; 2020. pp. 159–178.

- Grossman E. A Gender Bias in the European Journal of Political Research? European Political Science. 2020;19(3):416–427. doi: 10.1057/s41304-020-00252-3.

- Newton K, Boncourt T. The ECPR’s First Forty Years 1970–2010. Colchester: ECPR Press; 2010.

- Norris P. Towards a More Cosmopolitan Political Science? European Journal of Political Research. 1997;31(1–2):17–34. doi: 10.1111/j.1475-6765.1997.tb00761.x.

- Stockemer D, Blair A, Rashkova E. The Distribution of Authors and Reviewers in EPS. European Political Science. 2020;19(3):401–411. doi: 10.1057/s41304-020-00251-4.

- Teele DL, Thelen K. Gender in the Journals: Publication Patterns in Political Science. PS: Political Science & Politics. 2017;50(2):433–447.

- Wilson MC, Knutsen CH. Geographical Coverage in Political Science Research. Perspectives on Politics. 2020. doi: 10.1017/S1537592720002509.
