Abstract

Introduction

There is an urgent need to validate telephone versions of widely used general cognitive measures for remote assessment, such as the telephone version of the Montreal Cognitive Assessment (T‐MoCA).

Methods

In the Einstein Aging Study, a diverse community cohort (n = 428; mean age = 78.1; 66% female; 54% non‐White), equivalence testing was used to examine concordance between the T‐MoCA and the corresponding in‐person MoCA assessment. Receiver operating characteristic analyses examined the diagnostic ability to discriminate between mild cognitive impairment and normal cognition. Conversion methods from T‐MoCA to the MoCA are presented.

Results

Education, race/ethnicity, gender, age, self‐reported cognitive concerns, and telephone administration difficulties were associated with performance in one or both modes of administration; however, none of these factors was significantly associated with the difference between modalities. Sensitivity and specificity for the T‐MoCA (using the optimal cut score based on Youden's index) were 72% and 59%, respectively.

Discussion

The T‐MoCA demonstrated sufficient psychometric properties to be useful for screening of MCI, especially when clinic visits are not feasible.

Keywords: cognitive screening scales, equivalence testing, mild cognitive impairment, Montreal Cognitive Assessment, neuropsychology, remote assessment, telephone screening

1. INTRODUCTION

The number of individuals at risk for cognitive impairment is growing rapidly as the population ages, 1 , 2 yet dementia often goes undiagnosed or is diagnosed only in late disease stages. 3 Sensitive screening measures are essential to identify individuals at risk for future cognitive decline. The Montreal Cognitive Assessment (MoCA‐30) 4 is a widely used, extensively studied, paper‐and‐pencil screening tool for distinguishing individuals with mild cognitive impairment (MCI; average sensitivity 85%, average specificity 76%) and Alzheimer's disease (AD; average sensitivity 94%, average specificity 76%) from those who are cognitively unimpaired. 5 The MoCA‐30 has been translated and validated for use in various languages, cultures, and administration modalities. 5 Compared to another commonly used cognitive screen, the Mini‐Mental State Examination (MMSE), 6 the MoCA has been consistently recognized as more sensitive to mild cognitive changes with similar levels of specificity. 7 , 8 , 9 , 10 , 11 , 12 , 13 , 14 , 15 The MoCA‐30 more comprehensively evaluates a broad range of cognitive domains and more closely aligns with neuropsychological test scores than does the MMSE. 16

Given the rapidly expanding older adult population, 1 , 2 remotely administered, validated screening tools for cognitive impairment are increasingly necessary and have far‐reaching applications, including use with rural‐living individuals, for ease of follow‐up and disease monitoring, and for continuity of care. Telephone 17 , 18 , 19 and videoconferencing‐based 20 , 21 , 22 , 23 , 24 versions of the MoCA‐30 have been developed, but their performance has not been assessed in diverse samples at increased risk for cognitive impairment based on age, race, and socioeconomic status. In addition, the broad applicability of videoconferencing is questionable because it relies on expensive technological resources (including high‐speed internet and access to a computer, smartphone, or tablet and a printer) and technological proficiency. This greatly reduces the applicability of this method for detecting dementia risk in under‐resourced individuals, or for evaluating older adults who do not have access to technology or who are already cognitively impaired. Telephone‐based screening has the potential to address many of these limitations. However, there is a need to establish the validity of telephone screens, especially in the age of the COVID‐19 pandemic, when clinicians and researchers alike are turning to remotely administered measures. 25 , 26 , 27

The telephone MoCA (T‐MoCA) generates a total score with a maximum of 22 points, eliminating the MoCA‐30 items that require visual stimuli or the use of paper and pencil. The T‐MoCA was originally validated by Pendlebury et al. 17 in a population of patients who experienced acute vascular events. In general, the T‐MoCA does not present the same barriers to access inherent to videoconferencing, as individuals can participate via landline or cellphone, without requiring more advanced technological equipment or proficiency. The T‐MoCA exhibits adequate sensitivity and specificity for MCI, with area under the receiver operating characteristic curve (AUC) values ranging from 0.73 to 0.94 in special samples, such as community‐dwelling patients after transient ischemic attack (TIA) or stroke 17 , 18 and patients with atrial fibrillation. 19 In these samples, the T‐MoCA has demonstrated similar sensitivity for identifying MCI as the Telephone Interview for Cognitive Status (TICS), 17 , 18 which correlates highly with the MMSE. 28 The TICS is the most widely translated and validated telephone screen. 29 However, a broader literature has revealed that the TICS may be unreliable for distinguishing MCI from normal cognition. 30 , 31 , 32 Given that the MoCA outperforms the MMSE 7 for detecting mild cognitive difficulties, the T‐MoCA is a promising measure for use in less impaired samples when remote testing is required. To date, very few studies have independently validated the T‐MoCA, 18 , 19 and no validation studies have been conducted in representative, diverse, community‐residing samples of older adults.

HIGHLIGHTS

The telephone Montreal Cognitive Assessment (T‐MoCA) was validated in a diverse, community‐residing cohort.

The T‐MoCA differentiates mild cognitive impairment from cognitively normal older adults.

Two conversion methods are presented for estimating MoCA‐30 scores based on the T‐MoCA.

RESEARCH IN CONTEXT

Systematic review: There has been a dearth of publications on telephone‐administered instruments used to screen for cognitive impairment, particularly in community‐based rather than specialty clinic‐based settings. The authors carried out an extensive PubMed search for literature on approaches to telephone cognitive testing in older adults.

Interpretation: There was strong concordance between two modified versions of the standard MoCA‐30, i.e., the telephone MoCA (T‐MoCA) and an in‐person subset of the MoCA (MoCA‐22). Results indicated statistical equivalence between the modified versions and showed that the T‐MoCA could be used to discriminate between individuals with mild cognitive impairment and those who are cognitively normal. Conversion scores from the T‐MoCA to the MoCA‐30 are presented.

Future directions: While results indicate that the T‐MoCA is valid as a cognitive screen when in‐clinic assessment is not feasible, future research should use a longitudinal design to better evaluate the sensitivity of the T‐MoCA to cognitive changes.

Prior research has found that performance on the T‐MoCA is highly influenced by education level, 19 although it remains unclear how other relevant demographic variables impact performance for the T‐MoCA. This is a notable gap in knowledge, as evidence suggests that performance on the in‐person MoCA‐30 is associated with such factors as age, 33 , 34 race/ethnicity, 35 , 36 literacy, 37 educational attainment, 33 , 34 , 35 , 37 , 38 auditory and visual sensory loss, 40 background noise, 41 and depression. 42 Further, no study has directly examined the equivalence of the T‐MoCA with the original, in‐person MoCA‐30. Additionally, the ability to reliably convert between the T‐MoCA and the traditional, in‐person MoCA‐30 scores has not been established.

Data from the Einstein Aging Study (EAS) provide an ideal opportunity for comparing the MoCA and the T‐MoCA in a large, systematically recruited, multi‐racial, economically diverse sample. The EAS administered the T‐MoCA and the MoCA‐30 along with a full in‐person neuropsychological battery and a neurological assessment used to assign clinical diagnoses. This allowed the current study to: (1) examine the equivalence of the in‐person MoCA (MoCA‐30), a shortened, in‐person version of the MoCA‐30 (comparable with the T‐MoCA, with visual cues and drawing excluded; MoCA‐22), and the T‐MoCA; (2) explore whether demographic variables impact performance differences between these measures; (3) assess the sensitivity and specificity of the T‐MoCA to detect MCI; and (4) use the equipercentile equating method with log‐linear smoothing and Poisson regression to establish conversion scores from the telephone to the in‐person MoCA‐30.

2. METHODS

2.1. Overview of participants and procedures

Data were drawn from the EAS, a longitudinal study of a community‐residing cohort of older adults who are systematically recruited from Bronx County, NY, a racially and ethnically diverse urban setting with a population of 1.4 million, of whom 11.8% are seniors. 43 Since 2004, the EAS has used systematic sampling to recruit participants from the New York City Board of Elections registered voter lists of the Bronx. Individuals selected from the list were mailed introductory letters explaining the study and were then telephoned to complete a brief screening interview to determine preliminary eligibility. Eligibility criteria were: age ≥70 years, ambulatory status, Bronx residence, non‐institutionalized living, English speaking, absence of visual or auditory impairments that precluded neuropsychological testing, absence of active psychiatric symptomatology that interfered with the ability to complete assessments, and absence of prevalent dementia based on the telephone version of the Memory Impairment Screen (MIS). 44 Individuals who met preliminary eligibility criteria on the telephone were invited to an in‐person assessment at the EAS clinical research center to confirm and determine final eligibility. This assessment included a full neuropsychological evaluation and clinical neurological exam, which were used to confirm that participants did not meet Diagnostic and Statistical Manual of Mental Disorders, 4th edition (DSM‐IV) standard criteria for dementia (see below). Oral and written informed consent were obtained according to protocols approved by the local institutional review board.

EAS participants are followed annually with telephone interviews and in‐person clinic assessments to extensively phenotype cognitive status and document clinical and cognitive change. The telephone component serves two purposes: (1) to screen for study eligibility at enrollment and (2) to provide follow‐up information for individuals no longer willing or able to return to the clinic for in‐person assessments. In May 2017, the EAS incorporated the T‐MoCA into the telephone assessment and the MoCA‐30 into the in‐person assessment battery.

The analyses presented are based on the first administration of the MoCA‐30 and T‐MoCA for 428 EAS participants. Data included are for the first time that participants completed the T‐MoCA and the MoCA‐30, regardless of whether it was the initial (baseline) or annual follow‐up study assessment. Of the 428 individuals, 288 had the MoCA‐30 and T‐MoCA administered at their first (enrollment) study assessment while the remaining 140 had previously completed annual cognitive assessments before these instruments were added to the protocol.

2.2. Measures of interest

The MoCA‐30, included in the in‐person cognitive battery, assesses aspects of memory, executive function, attention, concentration, language, abstract reasoning, and orientation, with a maximum score of 30. Two alternate forms of the MoCA‐30 are available to decrease the likelihood of practice effects due to repeated administration of identical items. At baseline, version 7.2 45 was administered. Scoring details are shown in Table S1 in supporting information.

The MoCA‐22 includes a subset of items from the in‐person MoCA‐30 (version 7.2), 45 excluding items that require visual cues or drawing, with a maximum score of 22 points. The MoCA‐22 was derived for comparison to the T‐MoCA (Table S1).

The T‐MoCA is a modified version of the MoCA‐30 (version 7.1) 45 administered by phone, 17 with minor modifications to scoring (Table S1). Just as with the MoCA‐22, this phone version excludes items that require visual stimuli and pencil and paper drawing, with the same maximum score (Table S1). At baseline, two alternate versions of the MoCA were administered: version 7.1 was administered on the telephone and the alternate form (version 7.2) was administered in‐person.

2.3. Covariates

Demographic information included self‐reported race/ethnicity as defined by the US Census Bureau in 1994, number of years of education, gender, and age. The Geriatric Depression Scale (GDS, short form) was used to screen for depressive symptoms. The GDS ranges from 0 to 15 with scores of 6 or above suggestive of clinically significant depressive symptoms. 46

As part of the telephone screen, nine questions about self‐perceived cognitive changes/difficulties were posed to the participant, and a summary score was derived (potential range 0 to 18; Table S2 in supporting information). The interviewer also noted and coded five possible difficulties during the telephone assessment: hearing difficulty, suboptimal hearing conditions, poor attention/motivation, unauthorized use of external sources, and anxiety about performance. These are termed “telephone administration difficulties” and were scored as “present” by the telephone interviewer if at least one difficulty occurred (binary variable; Table S3 in supporting information).

2.4. Cognitive classification

In addition to the MoCA‐30, the in‐person clinic assessments included the Uniform Data Set Neuropsychological Battery (UDS), 47 additional neuropsychological measures (Free and Cued Selective Reminding Test [FCSRT], 48 Wechsler Adult Intelligence Scale, Third Edition [WAIS III] Block Design, 49 Wechsler Logical Memory I, 50 and WAIS III Digit Symbol), 49 psychosocial measures, personal and family medical histories, demographics, indicators of activities of daily living, and self‐reports of cognitive concerns.

2.4.1. Dementia

A diagnosis of dementia was an exclusion criterion for these analyses. During the initial telephone screen, the MIS telephone version was used to screen for severe cognitive impairment. Among those who passed the initial eligibility screen and who attended the in‐person clinic visit, dementia diagnosis was based on DSM‐IV standardized clinical criteria, 51 which required impairment in memory and one other cognitive domain with evidence of functional decline. Diagnoses were assigned annually at consensus case conferences, where results of the neuropsychological and neurological examinations were reviewed along with relevant functional, social, and informant histories. Clinical judgment of cognitive decline (particularly relative to premorbid and baseline levels of cognition), age, and education were routinely taken into account, both in making consensus diagnoses and in formal statistical analyses.

2.4.2. Mild cognitive impairment (MCI) and normal cognition (NC)

Participants who were not diagnosed with dementia were classified as having MCI or normal cognition based on the Jak/Bondi actuarial criteria. 52 Specifically, 10 neuropsychological instruments from the EAS neuropsychological battery (modified UDS), measuring five cognitive domains, were considered for this classification: (1) Memory: free recall from the Free and Cued Selective Reminding Test, Benson Complex Figure (delayed); (2) Executive function: Trail Making Test Part B (time limit 300 seconds), Phonemic Verbal Fluency (letters F and L, 1 minute each); (3) Attention: Trail Making Test Part A (time limit 300 seconds), Number Span (forward and backward); (4) Language: Multilingual Naming Test (MINT, total score), Category Fluency (animals and vegetables, 1 minute each); (5) Visual‐spatial: Benson Immediate Recall, WAIS III Block Design. The following actuarial formula was used: (1) impaired scores, defined as >1 SD below the age‐, gender‐, and education‐adjusted normative mean, on both measures within at least one cognitive domain (i.e., memory, language, or speed/executive function); or (2) one impaired score, defined in the same way, in each of three of the five cognitive domains measured. If neither of these criteria was met, an individual was classified as MCI only if they had a score of 4 on the four instrumental activities of daily living (IADL) items of the Lawton–Brody scale 53 (Table S4 in supporting information), indicating functional inability on all four items. Otherwise, an individual was considered to be NC.
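The actuarial rules above can be expressed as a short decision function. This is a minimal sketch, not the EAS scoring code: it assumes each domain's test scores have already been converted to age‐, gender‐, and education‐adjusted z‐scores, treats "more than 1 SD below the mean" as z < −1, reads rule (2) as at least three domains with at least one impaired score, and uses hypothetical names (`classify_mci`, `domain_z`, `iadl_inability`).

```python
from typing import Dict, Sequence

# Hypothetical threshold: "more than 1 SD below the adjusted normative mean"
IMPAIRED_Z = -1.0


def is_impaired(z: float) -> bool:
    """A demographically adjusted z-score counts as impaired below -1 SD."""
    return z < IMPAIRED_Z


def classify_mci(domain_z: Dict[str, Sequence[float]], iadl_inability: int) -> str:
    """Return "MCI" or "NC" using the actuarial rules described in the text.

    domain_z: domain name -> adjusted z-scores of the tests in that domain
    iadl_inability: number of the four Lawton-Brody IADL items failed (0-4)
    """
    # Rule 1: every measure within at least one domain is impaired.
    rule_both_in_domain = any(
        len(zs) > 0 and all(is_impaired(z) for z in zs) for zs in domain_z.values()
    )
    # Rule 2: at least one impaired score in each of at least three domains.
    domains_with_impairment = sum(
        any(is_impaired(z) for z in zs) for zs in domain_z.values()
    )
    rule_three_domains = domains_with_impairment >= 3
    # Functional rule: inability on all four IADL items.
    rule_iadl = iadl_inability == 4
    return "MCI" if (rule_both_in_domain or rule_three_domains or rule_iadl) else "NC"


DOMAINS = ["memory", "executive", "attention", "language", "visuospatial"]
normal = {d: (0.0, 0.0) for d in DOMAINS}
memory_deficit = {**normal, "memory": (-1.5, -1.2)}
print(classify_mci(normal, 0), classify_mci(memory_deficit, 0))  # NC MCI
```

A score of exactly −1.0 is treated as unimpaired here because the criterion says "more than" 1 SD below the mean; an implementation with a different boundary convention would classify such cases differently.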

2.5. Data analyses

Baseline demographic and clinical characteristics were summarized by clinical cognitive status (MCI, NC) and compared using the Wilcoxon rank sum tests for continuous variables, and Chi‐square or Fisher's exact test for categorical variables. Pearson correlation was used to examine the association between the T‐MoCA and MoCA‐22. Where appropriate, effect sizes are reported using Cohen's d statistic.
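The text does not state which form of Cohen's d was used. A common choice for two independent groups divides the difference in means by the pooled SD; the sketch below assumes that form (`cohens_d` is a hypothetical helper) and applies it to the rounded education summaries in Table 1, so results computed from raw data may differ slightly.

```python
import math


def cohens_d(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> float:
    """Cohen's d for two independent groups, standardized by the pooled SD."""
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


# Education (years): No MCI (n = 279) vs. MCI (n = 149), rounded Table 1 values
d_edu = cohens_d(15.3, 3.4, 279, 14.3, 3.6, 149)
print(round(d_edu, 2))  # 0.29
```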

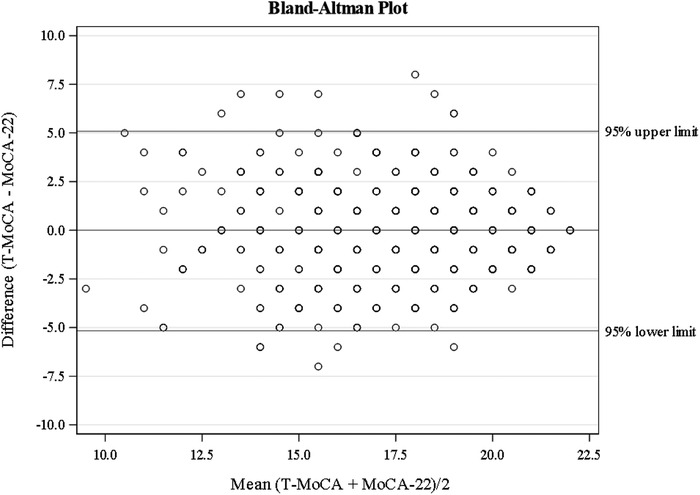

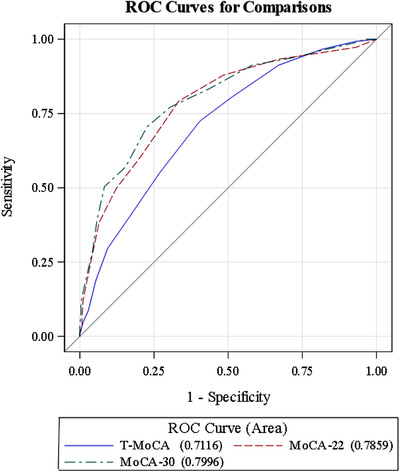

When making direct comparisons, the T‐MoCA and MoCA‐22 were examined because both instruments contain the same items. Linear regression models were applied to evaluate associations of the T‐MoCA, the MoCA‐22, and the difference score (T‐MoCA minus MoCA‐22) with demographic factors (age, gender, education, and race/ethnicity), depressive symptoms, cognitive concerns, and telephone administration difficulties. To determine whether the T‐MoCA and MoCA‐22 were equivalent, we conducted a paired equivalence test using the two one‐sided tests (TOST) procedure. 54 Equivalence testing was first introduced in pharmacokinetics to show that a generic drug has a profile equivalent to an existing drug. 55 , 56 Subsequently recommended in many research areas, 57 this approach was recently applied in the evaluation of different versions of cognitive tests. 58 The T‐MoCA and MoCA‐22 would be deemed equivalent if their mean difference lay within narrow equivalence bounds of −0.75 and 0.75 raw‐score points on the 22‐point scale, which corresponds to a Cohen's d effect size of 0.3. 57 , 59 A Bland–Altman plot, 60 which plots the difference against the mean of each participant's pair of scores, was also used to visualize the agreement between the T‐MoCA and MoCA‐22.
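The TOST logic can be sketched from summary statistics alone. This is an illustration, not the SAS procedure used in the study: it substitutes a large‐sample normal approximation for the t distribution (reasonable with several hundred pairs), and `paired_tost` is a hypothetical helper. Equivalence is concluded when both one‐sided tests reject, which is the same as the (1 − 2α) confidence interval (90% for α = 0.05) lying entirely inside the bounds. The inputs below are the mean difference, SD, and sample size reported in Section 3.2.

```python
import math
from statistics import NormalDist


def paired_tost(mean_diff, sd_diff, n, low=-0.75, high=0.75, alpha=0.05):
    """Two one-sided tests (TOST) for equivalence of paired scores.

    Uses a normal approximation to the t distribution. Returns the TOST
    p-value (the larger one-sided p) and the (1 - 2*alpha) confidence interval.
    """
    se = sd_diff / math.sqrt(n)
    z = NormalDist()
    # H0: true difference <= low; p is small when the mean sits well above low.
    p_low = z.cdf(-(mean_diff - low) / se)
    # H0: true difference >= high; p is small when the mean sits well below high.
    p_high = z.cdf((mean_diff - high) / se)
    crit = z.inv_cdf(1 - alpha)
    ci = (mean_diff - crit * se, mean_diff + crit * se)
    return max(p_low, p_high), ci


p, ci = paired_tost(mean_diff=-0.04, sd_diff=2.56, n=428)
print(p < 0.0001, tuple(round(x, 2) for x in ci))
```

With these rounded inputs the sketch gives a TOST p‐value far below 0.0001 and an interval well inside ±0.75 points; with a small sample or a mean difference near a bound, the same function fails to establish equivalence.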

ROC analyses were used to evaluate the diagnostic ability of the T‐MoCA, MoCA‐22, and MoCA‐30 for identifying prevalent MCI. Diagnosis of MCI was assigned without knowledge of MoCA‐30 or T‐MoCA performance. Area under the curve (AUC), a measure of diagnostic accuracy, was reported and compared. 61 Youden's index, the sum of sensitivity and specificity minus one, was used to select the optimal cut‐off value. 62 , 63 Cut scores achieving at least 80% sensitivity or at least 80% specificity were also obtained, since the preferred trade‐off depends on the intended use. Two methods were used to convert T‐MoCA scores to the MoCA‐30. The first was equipercentile equating with log‐linear smoothing, which maps T‐MoCA and MoCA‐30 scores based on their percentile ranks. The second was a Poisson regression model for the MoCA‐30, using the T‐MoCA and covariates that may influence the estimate as predictors.
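The cut‐score rules above can be sketched as follows, treating scores at or below the cut as screen‐positive (as in Table 3). This is a hand‐rolled illustration with hypothetical helper names and made‐up toy data, not the SAS procedure used in the study; ties in Youden's J are resolved toward the lower cut, which is an arbitrary choice. Because sensitivity can only rise and specificity can only fall as the cut increases, the lowest cut reaching a sensitivity target (or the highest cut reaching a specificity target) is the best trade‐off for that target.

```python
from typing import Optional, Sequence, Tuple


def operating_point(scores: Sequence[float], is_mci: Sequence[bool],
                    cut: float) -> Tuple[float, float]:
    """Sensitivity and specificity when scores <= cut count as screen-positive.

    Both groups (MCI and normal cognition) must be non-empty.
    """
    pos = [s <= cut for s in scores]
    tp = sum(p and y for p, y in zip(pos, is_mci))
    fn = sum((not p) and y for p, y in zip(pos, is_mci))
    tn = sum((not p) and (not y) for p, y in zip(pos, is_mci))
    fp = sum(p and (not y) for p, y in zip(pos, is_mci))
    return tp / (tp + fn), tn / (tn + fp)


def youden_cut(scores, is_mci):
    """Cut maximizing J = sensitivity + specificity - 1 (ties go to the lower cut)."""
    best_cut, best_j = None, -1.0
    for cut in sorted(set(scores)):
        sens, spec = operating_point(scores, is_mci, cut)
        if sens + spec - 1 > best_j:
            best_cut, best_j = cut, sens + spec - 1
    return best_cut, best_j


def cut_for_sensitivity(scores, is_mci, target=0.80) -> Optional[float]:
    """Lowest cut with sensitivity >= target (highest specificity among those)."""
    for cut in sorted(set(scores)):
        if operating_point(scores, is_mci, cut)[0] >= target:
            return cut
    return None


def cut_for_specificity(scores, is_mci, target=0.80) -> Optional[float]:
    """Highest cut with specificity >= target (highest sensitivity among those)."""
    for cut in sorted(set(scores), reverse=True):
        if operating_point(scores, is_mci, cut)[1] >= target:
            return cut
    return None


# Hypothetical toy scores; True marks an MCI classification
scores = [12, 14, 15, 16, 17, 18, 19, 20, 21, 22]
is_mci = [True, True, True, False, True, False, False, False, False, False]
print(youden_cut(scores, is_mci), cut_for_sensitivity(scores, is_mci))
```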

The equipercentile equating analysis was conducted in R 4.0.1 64 using the “equate” package. 65 All other analyses were performed using SAS statistical software version 9.4 (SAS Institute, Inc.).
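For readers without R, the core of equipercentile equating can be sketched in a few lines. This is a simplified, unsmoothed version built on the standard mid‐point percentile‐rank definition; it omits the log‐linear presmoothing used in the study and does not reproduce the interface of the `equate` package. Unsmoothed equating is sensitive to sparse or empty score points, which is the motivation for presmoothing. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def cumulative_proportions(scores: Sequence[int], max_score: int) -> List[float]:
    """F(s) = proportion of scores <= s, for s = 0..max_score."""
    counts = Counter(scores)
    n = len(scores)
    out, running = [], 0
    for s in range(max_score + 1):
        running += counts[s]
        out.append(running / n)
    return out


def equipercentile(x_scores: Sequence[int], x_max: int,
                   y_scores: Sequence[int], y_max: int) -> Dict[int, float]:
    """Map each X score to the Y score with the same percentile rank (no smoothing)."""
    fx = cumulative_proportions(x_scores, x_max)
    fy = cumulative_proportions(y_scores, y_max)
    table = {}
    for x in range(x_max + 1):
        # Mid-point percentile rank of x as a proportion: F(x-1) + f(x)/2.
        p = ((fx[x - 1] if x > 0 else 0.0) + fx[x]) / 2
        # Smallest Y score whose cumulative proportion exceeds p.
        y_star = next((y for y in range(y_max + 1) if fy[y] > p), y_max)
        below = fy[y_star - 1] if y_star > 0 else 0.0
        width = fy[y_star] - below
        # Interpolate linearly within the score interval [y* - 0.5, y* + 0.5].
        table[x] = y_star - 0.5 + (p - below) / width if width > 0 else float(y_star)
    return table


# Toy check: Y is X shifted up by one point, so each x should map to x + 1.
x = [0, 1, 1, 2, 2, 2, 3]
print(equipercentile(x, 3, [s + 1 for s in x], 4))
```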

3. RESULTS

3.1. Overview

Baseline administration of the T‐MoCA occurred a mean of 22.7 (SD = 17.0) days from the in‐person MoCA‐30 administration. Participants' age ranged from 70 to 94 years (mean = 78.1, SD = 5.2), the sample was 66% female, and educational attainment averaged 14.9 ± 3.5 years. The sample was 46% White, 37% Black, 14% Hispanic, and 2% other or more than one race/ethnicity (Table 1). In total, 149 participants were classified as MCI using the Jak/Bondi actuarial criteria. As shown in Table 1, those classified as MCI were significantly older at baseline (mean 79.1, SD = 5.5 years vs. 77.6, SD = 5.0 years; Cohen's d = 0.30, P = 0.004), less educated (mean 14.3, SD = 3.6 years vs. 15.3, SD = 3.4 years; Cohen's d = 0.29, P = 0.02), and more likely to be Black (MCI: 34% White, 47% Black, 16% Hispanic, 3% other/more than one race; no MCI: 53% White, 32% Black, 13% Hispanic, 1% other/more than one race; P = 0.01). Those with MCI also had more cognitive concerns (mean 3.5, SD = 2.7 vs. 2.6, SD = 2.2; Cohen's d = 0.40, P < 0.001), more depressive symptoms (mean GDS 2.8, SD = 2.4 vs. 2.1, SD = 1.8; Cohen's d = 0.33, P = 0.004), and more often had telephone administration difficulties noted by the interviewer (17% vs. 8%, P = 0.005). The groups did not differ by gender (women: 65% vs. 66%, P = 0.80). The Pearson correlation between the T‐MoCA and the MoCA‐22 was 0.58 (P < 0.0001); the correlation between the T‐MoCA and the MoCA‐30 was 0.56 (P < 0.0001).

TABLE 1.

Baseline descriptive characteristics of sample by MCI status

| Mean (SD) or percentage | All (N = 428) | No MCI (N = 279) | MCI (N = 149) | P* |
|---|---|---|---|---|
| Age (in years) at time of T‐MoCA | 78.1 (5.2) | 77.6 (5.0) | 79.1 (5.5) | 0.004 |
| Education, years | 14.9 (3.5) | 15.3 (3.4) | 14.3 (3.6) | 0.02 |
| Gender, % female | 66% | 66% | 65% | 0.80 |
| Race/ethnicity: | | | | 0.001 |
| % White non‐Hispanic | 46% | 53% | 34% | |
| % Black | 37% | 32% | 47% | |
| % Hispanic | 14% | 13% | 16% | |
| % Others or more than one race | 2% | 1% | 3% | |
| GDS score | 2.3 (2.1) | 2.1 (1.8) | 2.8 (2.4) | 0.004 |
| MoCA‐30 (standard) | 23.3 (3.7) | 24.6 (3.0) | 20.7 (3.6) | <0.0001 |
| MoCA‐22 | 17.4 (2.8) | 18.3 (2.3) | 15.5 (2.7) | <0.0001 |
| T‐MoCA | 17.3 (2.8) | 18.0 (2.6) | 16.0 (2.7) | <0.0001 |
| Paired difference (T‐MoCA minus MoCA‐22) | −0.05 (2.6) | −0.3 (2.5) | 0.5 (2.7) | 0.002 |
| Interval between telephone and in‐person administration (days) | 22.7 (17.0) | 22.5 (17.0) | 23.1 (17.1) | 0.68 |
| Subjective cognition self‐report score (range 0–9) | 2.9 (2.4) | 2.6 (2.2) | 3.5 (2.7) | <0.001 |
| Telephone administration difficulties, % with any | 11% | 8% | 17% | 0.005 |

Abbreviations: GDS, Geriatric Depression Scale; MCI, mild cognitive impairment; MoCA, Montreal Cognitive Assessment; MoCA‐22, MoCA subset; T‐MoCA, telephone MoCA.

3.2. Characteristics of the MoCA‐22 and the T‐MoCA

Scores on the MoCA‐22 and T‐MoCA were significantly associated with education, race/ethnicity, depressive symptoms, and telephone administration difficulties. Age was significantly related to the MoCA‐22 only, and gender and self‐reported cognitive concerns were significantly associated only with the T‐MoCA, although the associations of these factors with both modalities of the MoCA trended in the same direction. Older age, Black or "All others" ethnicity (consisting of Hispanics and Others/more than one race), higher GDS scores, presence of telephone administration difficulties, and greater cognitive concerns were associated with worse MoCA performance on either instrument, while higher education and being a woman were associated with better performance (Table 2).

TABLE 2.

Association of MoCA‐22, T‐MoCA, and the difference between the T‐MoCA and MoCA‐22 with demographics, depression, subjective concerns, and issues related to telephone administration**

| Predictor | MoCA‐22 estimate | S.E. | P‐value | T‐MoCA estimate | S.E. | P‐value | Difference (T‐MoCA − MoCA‐22) estimate | S.E. | P‐value |
|---|---|---|---|---|---|---|---|---|---|
| Intercept | 17.81 | 0.24 | <.0001 | 17.55 | 0.24 | <.0001 | −0.26 | 0.24 | 0.28 |
| Age* | −0.06 | 0.02 | 0.01 | −0.03 | 0.02 | 0.16 | 0.03 | 0.02 | 0.25 |
| Education* | 0.20 | 0.04 | <.0001 | 0.16 | 0.04 | <.0001 | −0.03 | 0.04 | 0.36 |
| Gender | 0.63 | 0.27 | 0.02 | 0.86 | 0.26 | 0.001 | 0.24 | 0.27 | 0.38 |
| Black (ref. non‐Hispanic White) | −1.39 | 0.29 | <.0001 | −1.23 | 0.28 | <.0001 | 0.17 | 0.29 | 0.57 |
| All others | −1.65 | 0.36 | <.0001 | −1.63 | 0.36 | <.0001 | 0.02 | 0.37 | 0.95 |
| Depression* (GDS) | −0.12 | 0.06 | 0.05 | −0.20 | 0.06 | 0.002 | −0.07 | 0.06 | 0.26 |
| Sum of subjective concerns* | −0.07 | 0.05 | 0.17 | −0.11 | 0.05 | 0.05 | −0.03 | 0.06 | 0.57 |
| Any telephone issues | −0.95 | 0.40 | 0.02 | −0.82 | 0.40 | 0.04 | 0.13 | 0.41 | 0.76 |

*Age, education, GDS score, and sum of subjective cognitive concerns were centered at their overall means.

**Based on n = 425 participants; three participants had missing values for sum of subjective concerns and were excluded.

Abbreviations: GDS, Geriatric Depression Scale; MCI, mild cognitive impairment; MoCA, Montreal Cognitive Assessment; MoCA‐22, MoCA subset; T‐MoCA, telephone MoCA.

The difference in scores between the T‐MoCA and the MoCA‐22 was also computed for each participant. The mean difference was −0.04 (SD = 2.56; 95% confidence interval [CI]: −0.28 to 0.21), and the difference was not related to any demographic factor, GDS score, cognitive concerns, or telephone administration difficulties (Table 2). A paired t‐test showed that the difference between the T‐MoCA and the MoCA‐22 was not significantly different from zero (P = 0.76). The equivalence test concluded that the T‐MoCA and the MoCA‐22 were equivalent (P < 0.0001). Equivalence was also reflected in the 90% CI of the difference (−0.24 to 0.17), which falls within the lower and upper equivalence bounds. We used a Bland–Altman plot to examine the relationship between the difference score (T‐MoCA minus MoCA‐22) and the average total score on both tests. The plot shows no consistent pattern in the difference scores across the range of average scores, with approximately equal numbers of individuals with positive and negative differences (see Figure 1).

FIGURE 1.

Bland–Altman plot for examining the relationship of the difference score between the Montreal Cognitive Assessment subset (MoCA‐22) and telephone MoCA (T‐MoCA) and the average total score on both tests
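The quantities behind a Bland–Altman plot such as Figure 1 can be computed directly from paired scores. The study reports the plot but not limits of agreement; the sketch below assumes the conventional bias ± 1.96 SD limits, uses made‐up scores for five hypothetical participants, and leaves the plotting itself to any charting library (plot `diffs` against `means`).

```python
from statistics import mean, stdev
from typing import Sequence


def bland_altman(a: Sequence[float], b: Sequence[float]):
    """Per-pair means and differences (a - b), mean bias, and 95% limits of agreement."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length score lists with at least two pairs")
    means = [(x + y) / 2 for x, y in zip(a, b)]
    diffs = [x - y for x, y in zip(a, b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)  # conventional limits: bias +/- 1.96 SD
    return means, diffs, bias, (bias - spread, bias + spread)


# Made-up T-MoCA and MoCA-22 scores for five hypothetical participants
t_moca = [18, 16, 20, 15, 17]
moca_22 = [17, 17, 19, 16, 17]
means, diffs, bias, limits = bland_altman(t_moca, moca_22)
print(bias, limits)
```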

3.3. Discriminative ability of the instruments for MCI

ROC analysis was used to evaluate the diagnostic ability of the T‐MoCA, MoCA‐22, and MoCA‐30 for MCI (see Figure 2). The T‐MoCA had a significantly lower AUC (0.71) than the MoCA‐22 (AUC = 0.79; P = 0.002) and the MoCA‐30 (AUC = 0.80; P = 0.003), while the two in‐person versions did not differ significantly from each other (P = 0.23). Table 3 summarizes the operating characteristics of the three MoCA measures at three cut scores (Youden's index, sensitivity ≥ 80%, and specificity ≥ 80%). At the Youden's index cut score, which maximizes the sum of sensitivity and specificity, the MoCA‐30 had the most balanced profile, followed by the MoCA‐22. At the optimal cut point of 17 based on Youden's index, the T‐MoCA had a sensitivity of 72% but a specificity of only 59%. When sensitivity was set at ≥ 80%, the specificity of the MoCA‐30 was 57%, followed by the MoCA‐22 (52%) and the T‐MoCA (49%). When specificity was set at ≥ 80%, the sensitivity of the MoCA‐30 was 57%, compared to 50% for the MoCA‐22 and 41% for the T‐MoCA.

FIGURE 2.

Receiver operating characteristic (ROC) curves to evaluate the diagnostic ability of the telephone Montreal Cognitive Assessment (T‐MoCA), MoCA subset (MoCA‐22), and full MoCA (MoCA‐30) for mild cognitive impairment

TABLE 3.

Sensitivity, specificity, positive predictive value, and negative predictive value for the MoCA‐30, MoCA‐22, and the T‐MoCA*

| Instrument | Cut score based on | Sensitivity | Specificity | Positive predictive value | Negative predictive value |
|---|---|---|---|---|---|
| MoCA‐30 | Youden's index (cut = 22) | 0.70 | 0.77 | 0.63 | 0.83 |
| | Sensitivity ≥ 80% (cut = 24) | 0.83 | 0.57 | 0.51 | 0.86 |
| | Specificity ≥ 80% (cut = 21) | 0.57 | 0.85 | 0.66 | 0.79 |
| MoCA‐22 | Youden's index (cut = 17) | 0.79 | 0.67 | 0.56 | 0.86 |
| | Sensitivity ≥ 80% (cut = 18) | 0.88 | 0.52 | 0.49 | 0.89 |
| | Specificity ≥ 80% (cut = 15) | 0.50 | 0.88 | 0.69 | 0.77 |
| T‐MoCA | Youden's index (cut = 17) | 0.72 | 0.59 | 0.49 | 0.80 |
| | Sensitivity ≥ 80% (cut = 18) | 0.81 | 0.49 | 0.46 | 0.82 |
| | Specificity ≥ 80% (cut = 15) | 0.41 | 0.83 | 0.56 | 0.72 |

*Scores at or below the cut score (≤ cut) were classified as positive.

Abbreviations: MoCA, Montreal Cognitive Assessment; MoCA‐22, MoCA subset; T‐MoCA, telephone MoCA.
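Positive and negative predictive values depend on the prevalence of MCI as well as on sensitivity and specificity. The sketch below (a hypothetical helper applying Bayes' rule) recovers the Youden‐cut values in Table 3 to within rounding from the tabled sensitivity and specificity and the sample prevalence of 149/428; substituting a different prevalence shows how the same cut would behave in a setting where MCI is more or less common.

```python
def predictive_values(sens: float, spec: float, prevalence: float):
    """PPV and NPV from sensitivity, specificity, and prevalence (Bayes' rule)."""
    tp = sens * prevalence              # true positives per person screened
    fp = (1 - spec) * (1 - prevalence)  # false positives
    tn = spec * (1 - prevalence)        # true negatives
    fn = (1 - sens) * prevalence        # false negatives
    return tp / (tp + fp), tn / (tn + fn)


prev = 149 / 428  # MCI prevalence in this sample
ppv, npv = predictive_values(0.72, 0.59, prev)  # T-MoCA, Youden cut = 17
print(round(ppv, 2), round(npv, 2))
```

Because the tabled sensitivity and specificity are themselves rounded, recomputed values can differ from Table 3 by about 0.01.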

To characterize individuals whose MCI classification disagreed between the T‐MoCA and the MoCA‐22, we applied the Youden's index cut score (17 for both instruments) and examined the effects of age, education, gender, race/ethnicity, and telephone administration difficulties. Classifications disagreed for 114 individuals. None of the covariates was significantly associated with the probability of discordant classification.

Analyses were repeated in the subset (N = 288) who completed the MoCA‐30 and T‐MoCA at their initial (baseline) study visit. Results of these sensitivity analyses were similar to those in the full sample (Tables S5 and S6 in supporting information).

3.4. Conversion of scores from the T‐MoCA to the MoCA‐30

Table 4 shows the conversion from the T‐MoCA to the MoCA‐30 using the equipercentile equating method. For the Poisson regression, age, gender, race/ethnicity, and education were entered into the initial model along with the T‐MoCA; only education was significant when mapping the T‐MoCA onto the MoCA‐30, so the final model included only education as a covariate. The estimating equation for the converted MoCA‐30 score is: MoCA‐30 = exp(2.49 + 0.028 × T‐MoCA + 0.011 × years of education).

TABLE 4.

Conversion from T‐MoCA to MoCA‐30 score using the equipercentile method

| T‐MoCA | Converted MoCA‐30 |
|---|---|
| 0 | 0 |
| 1 | 0 |
| 2 | 1 |
| 3 | 3 |
| 4 | 4 |
| 5 | 6 |
| 6 | 8 |
| 7 | 9 |
| 8 | 11 |
| 9 | 12 |
| 10 | 14 |
| 11 | 15 |
| 12 | 16 |
| 13 | 18 |
| 14 | 19 |
| 15 | 20 |
| 16 | 22 |
| 17 | 23 |
| 18 | 24 |
| 19 | 25 |
| 20 | 27 |
| 21 | 28 |
| 22 | 30 |

Abbreviations: MoCA, Montreal Cognitive Assessment; T‐MoCA, telephone MoCA.
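Both conversions from Section 3.4 can be applied in a few lines. The sketch below transcribes Table 4 as a lookup and codes the published Poisson equation directly; the function names are hypothetical, and because the printed coefficients are rounded, the Poisson output is approximate. At the sample means (T‐MoCA 17.3, 14.9 years of education) it returns about 23.1, close to the observed mean MoCA‐30 of 23.3. As with any regression‐based mapping, predictions are pulled toward the mean, so extreme T‐MoCA scores convert less extremely than with the equipercentile table.

```python
import math

# Equipercentile conversion transcribed from Table 4 (T-MoCA -> MoCA-30)
TABLE4 = {0: 0, 1: 0, 2: 1, 3: 3, 4: 4, 5: 6, 6: 8, 7: 9, 8: 11, 9: 12, 10: 14,
          11: 15, 12: 16, 13: 18, 14: 19, 15: 20, 16: 22, 17: 23, 18: 24,
          19: 25, 20: 27, 21: 28, 22: 30}


def convert_equipercentile(t_moca: int) -> int:
    """Look up the converted MoCA-30 score for an integer T-MoCA score."""
    if t_moca not in TABLE4:
        raise ValueError("T-MoCA must be an integer from 0 to 22")
    return TABLE4[t_moca]


def convert_poisson(t_moca: float, education_years: float) -> float:
    """Expected MoCA-30 from the Poisson equation reported in Section 3.4."""
    return math.exp(2.49 + 0.028 * t_moca + 0.011 * education_years)


print(convert_equipercentile(17), round(convert_poisson(17.3, 14.9), 1))
```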

4. DISCUSSION

Remotely administered cognitive screening tools are increasingly used in research and health‐care settings. 27 Such screens are often modified versions of standard in‐person measures. 29 , 67 Although they are widely used, remote versions are frequently validated in small, select, homogeneous samples and lack large‐scale empirical support, and direct comparisons between remotely administered and traditional in‐person screens are rarely reported. 29 To our knowledge, the current study is the first to directly examine the correspondence of the T‐MoCA 17 with the widely used in‐person MoCA‐30 4 and to determine conversion scores in a well‐characterized, demographically diverse cohort of older adults. Results indicate that the T‐MoCA is statistically equivalent to the MoCA‐22, the corresponding subset of items from the in‐person MoCA‐30. Consistent with previous work, 17 , 18 , 19 the diagnostic accuracy of the T‐MoCA for detecting MCI (AUC = 0.71) was somewhat lower than that of the in‐person administration modalities. Nevertheless, our study indicates that the T‐MoCA is a valuable cognitive screen that can be used to detect MCI when in‐clinic assessment is not available.

Often appealing and efficient for health‐care professionals and researchers, remotely administered assessment tools like the T‐MoCA are also well accepted by older adult patients and study participants. In addition, telephone‐administered measures are cost‐effective, allow for quick and flexible screening, overcome geographical barriers, and lower dropout rates in longitudinal aging studies. 68 , 69 , 70 For older adults, especially those with reduced mobility and/or medical comorbidities, remotely implemented screens are convenient and well tolerated. 29 Recent years have seen the emergence of videoconference‐based cognitive screens conducted via computer, smartphone, or tablet, yet they rely on costly equipment and technological knowledge for administration, which greatly limits their broad applicability. Telephone‐based screens can facilitate continuity of care, monitoring of disease progression, and clinical and research follow‐up when in‐person visits are not feasible.

An important application of the current study is to facilitate the transition from the standard MoCA‐30 to the T‐MoCA and vice versa. This is critical when patients or study participants initially seen in person can no longer attend in‐person visits because of health problems or relocation, or, as we have seen recently, because of safety concerns related to a pandemic. Using the conversion scores derived from the log‐linear smoothing method presented in Table 4, T‐MoCA scores can be expressed in terms of the MoCA‐30, which is especially useful for clinicians when in‐person assessments are not feasible. In research settings where harmonization across studies is necessary, relevant covariates should also be considered. The Poisson equation makes it possible to account for these factors (e.g., education was a significant covariate in the current study and is therefore included in the Poisson equation).
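
A Poisson equation of this kind is a Poisson regression with a log link, where the MoCA‐30 total is modeled from the T‐MoCA score and education. The Python snippet below is a minimal sketch that fits such a model by iteratively reweighted least squares. It assumes the form log E[MoCA‐30] = b0 + b1·T‐MoCA + b2·education. The published coefficients are not reproduced here, and the data, the model form, and the names `fit_poisson_irls` and `predict_moca30` are hypothetical.

```python
import numpy as np


def fit_poisson_irls(X, y, n_iter=50, tol=1e-10):
    """Fit a Poisson GLM with a log link by iteratively reweighted least squares.

    X: (n, p) design matrix whose first column is an intercept of ones.
    y: (n,) non-negative counts (here, MoCA-30 total scores).
    Returns beta such that log E[y] = X @ beta.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    beta = np.zeros(X.shape[1])
    beta[0] = np.log(y.mean())  # start from the intercept-only fit
    for _ in range(n_iter):
        eta = X @ beta
        mu = np.exp(eta)
        z = eta + (y - mu) / mu  # working response for the log link
        XtW = X.T * mu           # Poisson weights equal the fitted means
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    return beta


def predict_moca30(beta, t_moca, education):
    """Expected MoCA-30 score for a T-MoCA score and years of education."""
    return np.exp(beta[0] + beta[1] * t_moca + beta[2] * education)


# Hypothetical paired data (not study data): T-MoCA, years of education, MoCA-30.
t_moca = np.array([10, 12, 14, 15, 16, 17, 18, 19, 20, 21, 22, 13, 16, 18, 20])
educ = np.array([8, 10, 12, 12, 14, 16, 12, 16, 18, 16, 20, 9, 13, 15, 17])
moca30 = np.array([14, 17, 19, 21, 22, 23, 24, 25, 26, 27, 29, 18, 22, 25, 27])

X = np.column_stack([np.ones(len(t_moca)), t_moca, educ])
beta = fit_poisson_irls(X, moca30)
print(np.round(beta, 4))
print(round(float(predict_moca30(beta, 18, 12)), 1))
```

Because the model includes an intercept, the fitted means sum to the observed total. This provides a quick check that the fit has converged.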

The optimal cut score for discriminating between individuals classified as having MCI and those with normal cognition depends on the clinical, research, or public health context. The optimal rule here is based on Youden's index, which selects the cut score that maximizes the sum of sensitivity and specificity. This rule weights both groups equally, so it maximizes the number of correctly classified individuals only when the two groups are of equal size. By this criterion, the in‐person MoCA‐30 and MoCA‐22 perform best, although the T‐MoCA performs adequately. The current investigation also provides cut scores that achieve at least 80% sensitivity or at least 80% specificity. If the objective is to use telephone‐administered screens to identify individuals who may have MCI for a low‐cost, low‐risk intervention, a cut score that emphasizes sensitivity may be optimal. Similarly, if a positive telephone screen is to be followed by a safe and more specific test for definitive diagnosis, sensitivity may be more important than specificity, to maximize identification of individuals with MCI. When sensitivity is set to at least 80%, our results show that the specificity of all three instruments suffers. However, if the goal is to identify candidates for a costly or invasive next step, such as positron emission tomography scanning or lumbar puncture, it may be desirable to maximize the specificity of remotely administered screeners to minimize follow‐up testing of individuals falsely classified as having MCI. When specificity is set to at least 80%, our results show that the sensitivity of the MoCA‐30, the MoCA‐22, and the T‐MoCA is reduced. Like the MoCA‐30 and the MoCA‐22, the T‐MoCA can deliver high specificity or high sensitivity, but the trade‐offs are large. For this reason, clinicians and investigators using the T‐MoCA must select the cut score optimized for their purpose.
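
The three selection rules can be stated precisely in a few lines of Python. The snippet below is a minimal sketch that assumes a score at or below the cut counts as a positive (possible MCI) screen. It computes sensitivity and specificity at every observed cut and then picks:

- the cut that maximizes Youden's J (sensitivity + specificity − 1);
- the most specific cut with sensitivity of at least 80%;
- the most sensitive cut with specificity of at least 80%.

Ties go to the lowest cut. The scores, labels, and function names are hypothetical.

```python
def sens_spec(scores, is_mci, cut):
    """Sensitivity and specificity when a score <= cut counts as a positive screen."""
    pos = [s <= cut for s in scores]
    tp = sum(p and m for p, m in zip(pos, is_mci))
    fn = sum((not p) and m for p, m in zip(pos, is_mci))
    tn = sum((not p) and (not m) for p, m in zip(pos, is_mci))
    fp = sum(p and (not m) for p, m in zip(pos, is_mci))
    return tp / (tp + fn), tn / (tn + fp)


def choose_cuts(scores, is_mci, target=0.80):
    """Return (cut, sensitivity, specificity) rows for three selection rules."""
    table = [(c, *sens_spec(scores, is_mci, c)) for c in sorted(set(scores))]
    # Youden's J = sensitivity + specificity - 1.
    youden = max(table, key=lambda r: r[1] + r[2] - 1)
    # Best specificity among cuts that keep sensitivity >= target.
    sens_ok = [r for r in table if r[1] >= target]
    best_sens = max(sens_ok, key=lambda r: r[2]) if sens_ok else None
    # Best sensitivity among cuts that keep specificity >= target.
    spec_ok = [r for r in table if r[2] >= target]
    best_spec = max(spec_ok, key=lambda r: r[1]) if spec_ok else None
    return youden, best_sens, best_spec


# Hypothetical T-MoCA scores and reference MCI status (not study data).
scores = [12, 13, 14, 15, 16, 16, 17, 18, 18, 19, 19, 20, 20, 21, 22]
is_mci = [True, True, True, False, True, False, True, False,
          False, True, False, False, False, False, False]

for rule, row in zip(("Youden", "sens>=80%", "spec>=80%"), choose_cuts(scores, is_mci)):
    print(rule, row)
```

In this toy example, the specificity‐constrained cut gives up much of the sensitivity. This reproduces in miniature the trade‐off described above.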

In a screening context in which sensitivity matters, the T‐MoCA could be used to identify individuals who warrant further evaluation. MCI is a difficult target because the diagnosis is assigned on the basis of thresholds of cognitive performance on tests with imperfect retest reliability. In addition, reversion from MCI to normal cognition occurs in 16% to 39% of diagnosed cases in community‐based studies. 71 , 72 , 73 Therefore, disagreements between the MoCA in its various forms and MCI status reflect, at least in part, the fallibility of MCI as the gold standard. This work could be extended in several ways. One approach would be to use more robust definitions of MCI, based on follow‐up data or biomarkers. Another would be to assess the relationship of MoCA performance to the distal outcomes of primary interest. A third would be to assess change in T‐MoCA performance as a predictor of distal MCI outcomes.

Participant age, education level, gender, race/ethnicity, level of subjective cognitive concerns, and telephone administration concerns noted by the interviewer were not associated with differences in performance between MoCA administration modalities. Therefore, although these individual characteristics may influence performance on the MoCA in general, 33 , 34 , 35 , 36 , 37 , 38 , 39 , 41 , 42 our findings suggest that they do not influence differential performance between MoCA versions administered in the office or remotely. This finding is consistent with previous work examining a videoconference‐based MoCA version. 20 Confounding by demographic variables is therefore unlikely to affect the two administration modes differently, and our study suggests that T‐MoCA scores reflect those obtained with in‐person administration. It is also important to note that concerns noted by the telephone screener (see Table S3), including problems with hearing, diminished attention or motivation, unauthorized use of external sources, and anxiety about performance, did not affect performance on the T‐MoCA relative to the in‐clinic version. This is an important finding, as it demonstrates that potential confounders related to telephone administration do not meaningfully affect performance.
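
The distinction drawn above is between a characteristic that shifts scores in general and one that shifts the difference between modes. It can be checked by regressing the within‐person difference (in‐person minus telephone) on the characteristic. The Python snippet below is a minimal sketch with a single covariate, ordinary least squares, and a normal‐approximation p‐value. It is not the authors' model, which may have included several covariates at once. The data and the name `difference_on_covariate` are hypothetical, and the normal approximation is crude for a sample this small.

```python
import math
import numpy as np


def difference_on_covariate(in_person, telephone, covariate):
    """OLS regression of the within-person score difference on one covariate.

    Returns (slope, standard error, two-sided p-value from a normal approximation).
    """
    d = np.asarray(in_person, dtype=float) - np.asarray(telephone, dtype=float)
    x = np.asarray(covariate, dtype=float)
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, d, rcond=None)
    resid = d - X @ beta
    n, p = X.shape
    sigma2 = float(resid @ resid) / (n - p)       # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)         # OLS covariance of beta
    se = math.sqrt(cov[1, 1])
    z = beta[1] / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return float(beta[1]), se, p_value


# Hypothetical data (not study data): education shifts both scores equally.
educ = np.arange(8, 20)
telephone = (6 + 0.5 * educ).astype(int)
offset = np.array([1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1])
in_person = telephone + offset

slope, se, p = difference_on_covariate(in_person, telephone, educ)
print(f"slope = {slope:.3f}, SE = {se:.3f}, p = {p:.2f}")
```

Here education raises both scores, yet the slope for the difference is essentially zero. This is the pattern the paragraph above describes.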

The current study has several notable strengths. First, despite growing demand for validated telephone cognitive measures, there is a dearth of literature specifically examining the T‐MoCA, the telephone version of one of the most widely used cognitive screens. 4 We identified only three articles characterizing the T‐MoCA, 17 , 18 , 19 including the initial development and validation study. 17 Sample sizes were small across studies (range: N = 68 17 to 105 18 ), participants were diagnostically homogeneous (patients with TIA, 17 stroke, 17 , 18 or atrial fibrillation 19 ), and race/ethnicity was unreported in all three studies. Our study therefore extends prior work by addressing these unanswered questions and making a previously developed tool more useful. Second, we enrolled demographically diverse community‐dwelling older adults and directly examined variables such as age, education level, race/ethnicity, depressive symptoms, and issues with telephone administration (such as hearing problems), and overall did not find that any of these variables affected T‐MoCA performance. This should be reassuring to those seeking to use this measure in diverse populations. Finally, this study is novel in presenting conversion scores between test modalities. We used a robust statistical approach that combines two methods:

- equipercentile equating with log‐linear smoothing, which yields a smooth score distribution free of irregularities due to sampling;
- a Poisson equation that includes education as an influential factor when harmonization across studies is necessary.
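
Equipercentile equating with log‐linear smoothing can be sketched in two steps. First, each observed score distribution is presmoothed by fitting a polynomial log‐linear (Poisson) model to the score frequencies, which keeps every fitted frequency positive and preserves the total and the first few moments. Second, each T‐MoCA score (0 to 22) is mapped to the MoCA‐30 score (0 to 30) with the same percentile rank, interpolating linearly within score intervals. The Python snippet below is a minimal from‐scratch sketch of these steps. It is not the R `equate` package 65 or its API. The polynomial degree of 3, the frequencies, and the function names are hypothetical.

```python
import numpy as np


def loglinear_presmooth(counts, degree=3, n_iter=100, tol=1e-10):
    """Smooth a score frequency distribution with a polynomial log-linear model.

    counts[j] is the number of people with score j (j = 0..K). The fitted
    frequencies are strictly positive and reproduce the total count and the
    first `degree` moments of the observed distribution.
    """
    counts = np.asarray(counts, dtype=float)
    scores = np.arange(len(counts), dtype=float)
    s = (scores - scores.mean()) / scores.std()  # standardize for stability
    X = np.column_stack([s ** k for k in range(degree + 1)])

    def loglik(b):
        eta = X @ b
        return counts @ eta - np.exp(eta).sum()

    beta = np.zeros(degree + 1)
    beta[0] = np.log(counts.mean())
    for _ in range(n_iter):
        mu = np.exp(X @ beta)
        grad = X.T @ (counts - mu)
        hess = (X.T * mu) @ X
        step = np.linalg.solve(hess, grad)  # Newton step (= IRLS update)
        t = 1.0
        while loglik(beta + t * step) < loglik(beta) and t > 1e-8:
            t *= 0.5  # step halving keeps the log-likelihood from decreasing
        beta = beta + t * step
        if np.max(np.abs(t * step)) < tol:
            break
    return np.exp(X @ beta)


def equipercentile(freq_x, freq_y):
    """Map each integer X score to the Y score with the same percentile rank."""
    px = np.asarray(freq_x, dtype=float) / np.sum(freq_x)
    py = np.asarray(freq_y, dtype=float) / np.sum(freq_y)
    Fy = np.cumsum(py)
    # Mid-point percentile rank of each X score, as a proportion.
    prx = np.cumsum(px) - 0.5 * px
    eq = np.empty(len(px))
    for i, P in enumerate(prx):
        yu = min(int(np.searchsorted(Fy, P, side="right")), len(py) - 1)
        F_below = Fy[yu - 1] if yu > 0 else 0.0
        # Linear interpolation within the score interval [yu - 0.5, yu + 0.5).
        eq[i] = (P - F_below) / py[yu] + yu - 0.5
    return eq


# Hypothetical score frequencies (not study data).
t_moca_counts = [0, 0, 0, 0, 0, 1, 0, 1, 2, 3, 5, 6, 9, 12, 15, 20, 24, 27, 30, 28, 22, 14, 6]
moca30_counts = [0] * 10 + [1, 0, 1, 2, 2, 3, 5, 6, 8, 10, 13, 15, 18, 20, 22, 24, 23, 20, 16, 11, 5]

smooth_t = loglinear_presmooth(t_moca_counts, degree=3)
smooth_m = loglinear_presmooth(moca30_counts, degree=3)
conversion = equipercentile(smooth_t, smooth_m)
for score in range(10, 23):
    print(score, "->", round(float(conversion[score]), 1))
```

Presmoothing is what makes the inversion well defined. Without it, empty score cells (zero frequencies) would leave gaps in the target distribution, and the resulting conversion would inherit sampling irregularities.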

Our study is not without limitations. We did not validate the T‐MoCA against other widely used telephone screens, such as the TICS. We used a cross‐sectional design; future research should use a longitudinal design to better evaluate the sensitivity of the T‐MoCA to cognitive change. In addition, we did not counterbalance the order of administration of the two MoCA forms. Had MoCA‐22 scores been higher than T‐MoCA scores, practice effects might have been one explanation (though the T‐MoCA and MoCA‐22 were equivalent in this study). Despite the promising utility of the T‐MoCA, the current study also indicated that in‐person administration of the MoCA, in both the original MoCA‐30 and the MoCA‐22 forms, is more sensitive for detecting MCI. Importantly, both the T‐MoCA and the MoCA‐30 should be viewed as screening rather than diagnostic measures; patients who screen positive for MCI on these tools should undergo further cognitive testing before a clinical diagnosis is assigned. Another limitation is that formal hearing assessments were not performed; instead, hearing difficulties were documented on the basis of research assistants' observations and assessments. Taken together, our findings underscore the necessity of in‐person assessment when possible, with comprehensive neuropsychological assessment of cognition as the gold standard.

Telephone screens of cognition are a convenient complement to clinical care and research, as they are efficient, inexpensive, and less constrained by traditional barriers to services, including geographic and socioeconomic factors. Beyond their broad accessibility, remotely administered tools such as the T‐MoCA have proven crucial for facilitating continuity of care, disease monitoring, and follow‐up when in‐person assessment is not feasible. The current study supports the equivalence of the T‐MoCA to in‐person administered modalities. Further, we provide two methods to facilitate conversion between the T‐MoCA and the original MoCA‐30, which will simplify long‐term follow‐up assessments of cognition in clinical settings and facilitate harmonization of cognitive data among multicenter longitudinal cohort studies.

CONFLICTS OF INTEREST

The authors report no competing interests.

Supporting information

ACKNOWLEDGMENTS

This work was supported by National Institutes of Health grants NIA 2 P01 AG03949, R03 AG046504; the Leonard and Sylvia Marx Foundation; and the Czap Foundation. The authors would like to thank the dedicated EAS participants for their time and effort in support of this research. This research was made possible through the hard work of EAS research assistants: we thank Diane Sparracio and April Russo for assistance with participant recruitment; Betty Forro, Maria Luisa Giraldi, and Sylvia Alcala for assistance with clinical and neuropsychological assessments; and Michael Potenza for assistance with data management. All authors contributed to and approved the final manuscript.

Katz MJ, Wang C, Nester CO, et al. T‐MoCA: A valid phone screen for cognitive impairment in diverse community samples. Alzheimers Dement (Amst). 2021;13:e12144. 10.1002/dad2.12144

REFERENCES

- 1. Bloom DE, Luca DL. The global demography of aging. In: Piggott J, Woodland A, eds. Handbook of the Economics of Population Aging. Amsterdam: Elsevier; 2016:3‐56.

- 2. Dua T, Seeher KM, Sivananthan S, Chowdhary N, Pot AM, Saxena S. World health organization's global action plan on the public health response to dementia 2017‐2025. Alzheimers Dement. 2017;13(7):P1450‐P1451.

- 3. 2019 Alzheimer's disease facts and figures. Alzheimers Dement. 2019;15(3):321‐387.

- 4. Nasreddine ZS, Phillips NA, Bedirian V, et al. The Montreal Cognitive Assessment (MoCA): a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53:695‐699.

- 5. Phillips N, Chertkow H. Montreal cognitive assessment (MoCA): concept and clinical review. In: Cognitive Screening Instruments. London: Springer; 2013:111‐151.

- 6. Folstein MF, Folstein SE, McHugh PR. Mini‐mental state: a practical method for grading the cognitive state of patients for the clinician. J Psychiat Res. 1975;12:189‐198.

- 7. Pinto TC, Machado L, Bulgacov TM, et al. Is the Montreal Cognitive Assessment (MoCA) screening superior to the Mini‐Mental State Examination (MMSE) in the detection of mild cognitive impairment (MCI) and Alzheimer's Disease (AD) in the elderly? Int Psychogeriatr. 2019;31(4):491‐504.

- 8. Saczynski JS, Inouye SK, Guess J, et al. The Montreal cognitive assessment: creating a crosswalk with the Mini‐Mental state examination. JAGS. 2015;63(11):2370‐2374.

- 9. Small GW. What we need to know about age related memory loss. BMJ. 2002;324(7352):1502‐1505.

- 10. Dong Y, Lee WY, Basri NA, et al. The Montreal cognitive assessment is superior to the Mini–Mental state examination in detecting patients at higher risk of dementia. Int Psychogeriatr. 2012;24(11):1749‐1755.

- 11. Dong Y, Sharma VK, Chan BP, et al. The Montreal cognitive assessment (MoCA) is superior to the mini‐mental state examination (MMSE) for the detection of vascular cognitive impairment after acute stroke. J Neurol Sci. 2010;299(1):15‐18.

- 12. Nazem S, Siderowf AD, Duda JE, et al. Montreal cognitive assessment performance in patients with Parkinson's disease with “Normal” global cognition according to mini‐mental state examination score. JAGS. 2008;57(2):304‐308.

- 13. Freitas S, Simões MR, Alves L, Santana I. Montreal cognitive assessment: validation study for mild cognitive impairment and Alzheimer disease. Alzheimer Dis Assoc Disord. 2013;27(1):37‐43.

- 14. Zadikoff C, Fox SH, Tang‐Wai DF, et al. A comparison of the mini mental state exam to the Montreal cognitive assessment in identifying cognitive deficits in Parkinson's disease. Mov Disord. 2008;23(2):297‐299.

- 15. Pendlebury ST, Cuthbertson FC, Welch SJV, Mehta Z, Rothwell PM. Underestimation of cognitive impairment by mini‐mental state examination versus the Montreal cognitive assessment in patients with transient ischemic attack and stroke: a population‐based study. Stroke. 2010;41(6):1290‐1293.

- 16. Lam B, Middleton LE, Masellis M, et al. Criterion and convergent validity of the Montreal cognitive assessment with screening and standardized neuropsychological testing. JAGS. 2013;61(12):2181‐2185.

- 17. Pendlebury ST, Welch SJV, Cuthbertson FC, Mariz J, Mehta Z, Rothwell PM. Telephone assessment of cognition after transient ischemic attack and stroke: modified telephone interview of cognitive status and telephone Montreal cognitive assessment versus face‐to‐face Montreal cognitive assessment and neuropsychological battery. Stroke. 2013;44(1):227‐229.

- 18. Zietemann V, Kopczak A, Müller C, Wollenweber F, Dichgans M. Validation of the telephone interview of cognitive status and telephone Montreal cognitive assessment against detailed cognitive testing and clinical diagnosis of mild cognitive impairment after stroke. Stroke. 2017;48(11):2952‐2957.

- 19. Lai Y, Jiang C, Du X, et al. Validation of T‐MoCA in the screening of mild cognitive impairment in Chinese patients with atrial fibrillation. J Gerontol. 2020. 10.21203/rs.3.rs-18762/v1.

- 20. Chapman JE, Cadilhac DA, Gardner B, Ponsford J, Bhalla R, Stolwyk RJ. Comparing face‐to‐face and videoconference completion of the montreal cognitive assessment (MoCA) in community‐based survivors of stroke. J Telemed Telecare. 2019. 10.1177/1357633X19890788.

- 21. Abdolahi A, Bull MT, Darwin KC, et al. A feasibility study of conducting the Montreal cognitive assessment remotely in individuals with movement disorders. Health Inform J. 2016;22(2):304‐311.

- 22. Stillerova T, Liddle J, Gustafsson L, Larmot R, Silburn P. Could everyday technology improve access to assessments? A pilot study on the feasibility of screening cognition in people with Parkinson's disease using the Montreal Cognitive Assessment via Internet videoconferencing. Aust Occup Ther J. 2016;63(6):373‐380.

- 23. Iiboshi K, Yoshida K, Yamaoka Y, et al. A validation study of the remotely administered Montreal cognitive assessment tool in the elderly Japanese population. Telemed J E Health. 2020;26(7):920‐928.

- 24. DeYoung N, Shenal BV. The reliability of the Montreal cognitive assessment using telehealth in a rural setting with veterans. J Telemed Telecare. 2019;25(4):197‐203.

- 25. Boes CJ, Leep‐Hunderfund AN, Martinez‐Thompson JM, et al. A primer on the in‐home teleneurologic examination: a COVID‐19 pandemic imperative. Neurol Clin Pract. 2020. 10.1212/CPJ.0000000000000876.

- 26. Phillips NA, Chertkow H, Pichora‐Fuller MK, Wittich W. Special issues on using the Montreal cognitive assessment for telemedicine assessment during COVID‐19. JAGS. 2020;68(5):942‐944.

- 27. Hantke NC, Gould C. Examining older adult cognitive status in the time of COVID‐19. JAGS. 2020;68(7):1387‐1389.

- 28. Brandt J, Spencer M, Folstein M. The telephone interview for cognitive status. Neuropsychiatry Neuropsychol Behav Neurol. 1988;1(2):111‐117.

- 29. Castanho TC, Amorim L, Zihl J, Palha JA, Sousa N, Santos NC. Telephone‐based screening tools for mild cognitive impairment and dementia in aging studies: a review of validated instruments. Front Aging Neurosci. 2014;6:16.

- 30. Manly JJ, Schupf N, Stern Y, et al. Telephone‐based identification of mild cognitive impairment and dementia in a multicultural cohort. Arch Neurol. 2011;68(5):607‐614.

- 31. Lindgren N, Rinne JO, Palviainen T, Kaprio J, Vuoksimaa E. Prevalence and correlates of dementia and mild cognitive impairment classified with different versions of the modified Telephone Interview for Cognitive Status (TICS‐m). Int J Geriatr Psychiatry. 2019;34:1883‐1891.

- 32. Seo EH, Lee DY, Kim SG, et al. Validity of the telephone interview for cognitive status (TICS) and modified TICS (TICSm) for mild cognitive impairment (MCI) and dementia screening. Arch Gerontol Geriat. 2011;52:e26‐e30.

- 33. Freitas S, Simões MR, Alves L, Santana I. Montreal cognitive assessment: influence of sociodemographic and health variables. Arch Clin Neuropsych. 2012;27(2):165‐175.

- 34. Malek‐Ahmadi M, Powell JJ, Belden CM, et al. Age‐ and education‐adjusted normative data for the montreal cognitive assessment (MoCA) in older adults age 70‐99. Aging Neuropsychol Cogn. 2015;22(6):755‐761.

- 35. Milani SA, Marsiske M, Cottler LB, Chen X, Striley CW. Optimal cutoffs for the Montreal cognitive assessment vary by race and ethnicity. DADM. 2018;10(1):773‐781.

- 36. Goldstein FC, Ashley AV, Miller E, Alexeeva O, Zanders L, King V. Validity of the Montreal cognitive assessment as a screen for mild cognitive impairment and dementia in African Americans. J Geriatr Psych Neur. 2014;27(3):199‐203.

- 37. Julayanont P, Hemrungrojn S, Tangwongchai S. The effect of education and literacy on performance on the Montreal cognitive assessment among cognitively normal elderly. Alzheimers Dement. 2013;9(4):P793.

- 38. Wu Y, Wang M, Ren M, Xu W. The effects of educational background on Montreal cognitive assessment screening for vascular cognitive impairment, no dementia, caused by ischemic stroke. J Clin Neurosci. 2013;20(10):1406‐1410.

- 39. Gagnon G, Hansen KT, Woolmore‐Goodwin S, et al. Correcting the MoCA for education: effect on sensitivity. Can J Neurol Sci. 2013;40:673‐683.

- 40. Dupuis K, Pichora‐Fuller MK, Chasteen AL, Marchuk V, Singh G, Smith SL. Effects of hearing and vision impairments on the Montreal cognitive assessment. Aging Neuropsychol Cogn. 2015;22(4):413‐437.

- 41. Dupuis K, Marchuk V, Pichora‐Fuller MK. Noise affects performance on the Montreal cognitive assessment. CJA. 2016;35(3):298‐307.

- 42. Blair M, Coleman K, Jesso S, et al. Depressive symptoms negatively impact montreal cognitive assessment performance: a memory clinic experience. Can J Neurol Sci. 2016;43(4):513‐517.

- 43. American Fact Finder 2013‐2017 American Community Survey 5‐Year Estimates. factfinder2.census.gov. http://factfinder2.census.gov/bkmk/table/1.0/en/ACS/13_5YR/DP03/0600000US3400705470. Accessed June 17, 2020.

- 44. Lipton RB, Katz MJ, Kuslansky G, et al. Screening for dementia by telephone using the memory impairment screen. J Am Geriatr Soc. 2003;51(10):1382‐1390.

- 45. Montreal Cognitive Assessment (MoCA). https://www.mocatest.org/. Accessed October 21, 2020.

- 46. Sheikh JI, Yesavage JA. Geriatric depression scale (GDS): recent evidence and development of a shorter version. Clin Gerontol. 1986;5:165‐173.

- 47. Weintraub S, Besser L, Dodge HH, et al. Version 3 of the Alzheimer Disease Centers' Neuropsychological Test Battery in the Uniform Data Set (UDS). Alzheimer Dis Assoc Disord. 2018;32(1):10‐17.

- 48. Buschke H. Cued recall in amnesia. J Clin Neuropsychol. 1984;6(4):433‐440.

- 49. Wechsler D. Wechsler Adult Intelligence Scale, Third Edition. New York: The Psychological Corporation; 1997.

- 50. Wechsler D. Wechsler Memory Scale, Revised. New York: The Psychological Corporation; 1987.

- 51. Diagnostic and Statistical Manual of Mental Disorders, DSM‐IV. Washington, DC: American Psychiatric Association; 1994.

- 52. Bondi MW, Edmonds EC, Jak AJ, et al. Neuropsychological criteria for mild cognitive impairment improves diagnostic precision, biomarker associations, and progression rates. J Alzheimers Dis. 2014;42(1):275‐289.

- 53. Lawton MP, Brody EM. Assessment of older people: self‐maintaining and instrumental activities of daily living. Gerontologist. 1969;9:179‐186.

- 54. Schuirmann DJ. A comparison of the two one‐sided tests procedure and the power approach for assessing the equivalence of average bioavailability. J Pharmacokinet Biopharm. 1987;15:657‐680.

- 55. Hauck WW, Anderson S. A new statistical procedure for testing equivalence in two‐group comparative bioavailability trials. J Pharmacokinet Biopharm. 1984;12:83‐91.

- 56. Senn S. Statistical Issues in Drug Development. 2nd ed. Hoboken, NJ: Wiley; 2007.

- 57. Lakens D. Equivalence Tests: a Practical Primer for t Tests, Correlations, and Meta‐Analyses. Soc Psychol Personal Sci. 2017;8(4):355‐362.

- 58. Björngrim S, van den Hurk W, Betancort M, Machado A, Lindau M. Comparing traditional and digitized cognitive tests used in standard clinical evaluation: a study of the digital application Minnemera. Front Psychol. 2019;10:2327. 10.3389/fpsyg.2019.02327.

- 59. Seaman MA, Serlin RC. Equivalence confidence intervals for two‐group comparisons of means. Psychol Methods. 1998;3:403‐411.

- 60. Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;327(8476):307‐310.

- 61. DeLong ER, DeLong DM, Clarke‐Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics. 1988;44:837‐845.

- 62. Youden WJ. Index for rating diagnostic tests. Cancer. 1950;3:32‐35.

- 63. Schisterman EF, Perkins NJ, Liu A, Bondell H. Optimal cut‐point and its corresponding Youden Index to discriminate individuals using pooled blood samples. Epidemiology. 2005;16(1):73‐81.

- 64. R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2020. https://www.R-project.org/. Accessed July 7, 2020.

- 65. Albano AD. equate: an R package for observed‐score linking and equating. J Stat Softw. 2016;74(8):1‐36.

- 66. Rossetti HC, Lacritz LH, Cullum CM, Weiner MF. Normative data for the Montreal Cognitive Assessment (MoCA) in a population‐based sample. Neurology. 2011;77(13):1272‐1275. 10.1212/WNL.0b013e318230208a.

- 67. Munro Cullum C, Hynan LS, Grosch M, Parikh M, Weiner MF. Teleneuropsychology: evidence for video teleconference‐based neuropsychological assessment. J Int Neuropsychol Soc. 2014;20:1028‐1033.

- 68. Rabin LA, Saykin AJ, Wishart HA, et al. The Memory and Aging Telephone Screen (MATS): development and preliminary validation. Alzheimers Dement. 2007;3(2):109‐121.

- 69. Beeri MS, Werner P, Davidson M, Schmidler J, Silverman J. Validation of the modified Telephone Interview for Cognitive Status (TICS‐m) in Hebrew. Int J Geriatr Psychiatry. 2003;18:381‐386.

- 70. Ihle A, Gouveia ER, Gouveia BR, Kliegel M. The cognitive telephone screening instrument (COGTEL): a brief, reliable, and valid tool for capturing interindividual difference in cognitive functioning in epidemiological and aging studies. Dement Geriatr Cogn Disord Extra. 2017;7:339‐345.

- 71. Koepsell TD, Monsell SE. Reversion from mild cognitive impairment to normal or near‐normal cognition: risk factors and prognosis. Neurology. 2012;79(15):1591‐1598.

- 72. Roberts RO, Knopman DS, Mielke MM, et al. Higher risk of progression to dementia in mild cognitive impairment cases who revert to normal. Neurology. 2014;82(4):317‐325.

- 73. Chung SJ, Park YH, Yoo HS, et al. Mild cognitive impairment reverters have a favorable cognitive prognosis and cortical integrity in Parkinson's disease. Neurobiol Aging. 2019;78:168‐177.
