Abstract

Background:

Stakeholder-engaged research is an umbrella term for the types of research that have community, patient, and/or stakeholder engagement, feedback, and bidirectional communication as approaches used in the research process. The level of stakeholder engagement across studies can vary greatly, from minimal engagement to fully collaborative partnerships.

Objectives:

To present the process of reaching consensus among stakeholder and academic experts on the stakeholder engagement principles (EPs) and to identify definitions for each principle.

Methods:

We convened 19 national experts, 18 of whom remained engaged in a five-round Delphi process. The Delphi panel consisted of a broad range of stakeholders (e.g., patients, caregivers, advocacy groups, clinicians, researchers). We used web-based surveys for most rounds (1–3 and 5) and an in-person meeting for round 4. Panelists evaluated EP titles and definitions with a goal of reaching consensus (>80% agreement). Panelists’ comments guided modifications, with greater weight given to non-academic stakeholder input.

Conclusions:

EP titles and definitions were modified over five Delphi rounds. The panel reached consensus on eight EPs (dropping four, modifying four, and adding one) and corresponding definitions. The Delphi process allowed for a stakeholder-engaged approach to methodological research. Stakeholder engagement in research is time consuming and requires greater effort but may yield a better, more relevant outcome than more traditional scientist-only processes. This stakeholder-engaged process of reaching consensus on EPs and definitions provides a key initial step for the content validation of a survey tool to examine the level of stakeholder engagement in research studies.

Keywords: Community health partnerships, evaluation studies, outcome and process assessment (health care), community-based participatory research, process issues

Stakeholder-engaged research is an umbrella term for the types of research (e.g., patient-centered outcomes research, community-based participatory research) that have community, patient, and/or stakeholder engagement, feedback, and dialogue as core principles. Two key elements of stakeholder-engaged research are 1) stakeholder engagement and involvement throughout the research process and 2) selection and measurement of outcomes that the population of interest cares about and that can inform decision making about the research topic.1,2 Stakeholder engagement is a powerful vehicle for effectuating changes that can improve health.3 Engaging community health stakeholders in the research process is often the missing link to improving the quality and outcomes of health promotion activities, disease prevention initiatives, and research studies.4,5 Stakeholder engagement requires a long-term process (e.g., time and effort from all partners) that builds trust, values all stakeholders’ contributions, and generates a collaborative framework.6

The benefits of engaging stakeholders, as consumers of health care and as active partners across the full spectrum of translational research, include identifying community health needs and priorities, providing input on research questions, contributing to appropriate research design and methods, developing culturally sensitive and ethical proposals, enhancing the recruitment and retention of research participants, and implementing and disseminating research findings more effectively.7–11

Most stakeholder engagement in research occurs during the recruitment and dissemination phases of translational research, so there is less experience with identifying and involving stakeholders from the early research stages (e.g., developing research questions and hypotheses) and throughout the translational continuum (e.g., data analysis and interpretation). Because the optimal ways to involve relevant communities in each stage of the translational process have not been defined, stakeholder engagement needs to be addressed as a scientific problem, with best practices identified in an experimental, data-driven fashion.12

Although the usefulness of stakeholder-engaged health research has been well established,7–11 measurement and evaluation of non-academic stakeholder engagement in research activities have primarily relied on qualitative research approaches.13–19 This is particularly true of assessments of how patients and other stakeholders perceive the benefits of collaboration.20 Although qualitative methods are effective at assessing engagement, 1) they can be time consuming, 2) they do not easily scale up to evaluate large-scale or multisite research projects and intervention trials that engage multiple settings or stakeholders, and 3) their results cannot be easily compared over time or across programs and institutions.

To determine the level of stakeholder engagement in research studies, it is first necessary to reach consensus on what determines how engaged stakeholders are in a project. Here, we describe a stakeholder-engaged approach to reaching consensus on a set of stakeholder engagement principles (EPs) and their definitions.

METHODS

Evaluation of Stakeholder Engagement

The Program for the Elimination of Cancer Disparities (PECaD) at Siteman Cancer Center was established in 2003 in response to known racial/ethnic and socioeconomic cancer disparities in the St. Louis region. PECaD includes a community advisory board, the Disparities Elimination Advisory Committee (DEAC), which provides programmatic leadership. DEAC members represent multiple community interests and perspectives: survivors, community-based organizations, faith-based organizations, community physicians, and the media.21 In 2011, PECaD began administering a biennial evaluation survey to assess its implementation of community EPs.22 Although this initial survey was informative in assessing PECaD’s adherence to the community EPs, it lacked specificity about how adherence was achieved and how it affected PECaD’s research studies.22

To address this issue, the DEAC and PECaD researchers formally established an evaluation team using a community–academic partnered framework. The evaluation team comprised PECaD staff (three investigators, the data manager, and the program coordinator) and the DEAC community co-chair. The team’s work was continuously reported back to the DEAC; the team held its own meetings and used DEAC meetings to obtain feedback at each stage of measure development. The evaluation team developed and pilot tested a survey tool on community engagement pertaining to 11 EPs.23 The EPs were drawn from the literature11,13,19,24,25 and selected based on feedback from the DEAC. These EPs were based on the principles of community-based participatory research9,11,19,26–28 and community engagement.6,13,29–31

The Patient Research Advisory Board (PRAB) grew out of a PECaD program that provides research literacy training to community health stakeholders.32 The PRAB works with researchers to develop and implement community-engaged and patient-centered research studies. The DEAC and PRAB both serve as advisory boards to the project and have dedicated several meetings to discussing project updates and providing feedback to project investigators.

Delphi Panelists

Delphi panelists were recruited by email using a convenience snowball sampling approach based on the networks of the project team members (community-engaged researchers). Members of the panel were selected from the DEAC (n = 2) and the PRAB (n = 3) to serve as key connections to both advisory boards for the project. Panelists were selected from each of the project team members’ institutions: Washington University in St. Louis (four stakeholders and two academics, including the five from the DEAC and PRAB), New York University (two stakeholders), and the University of Washington (two academics). In addition, nationally recognized scholars in community engagement (n = 3) and nationally recognized community health stakeholders (n = 2) were selected. One academic was also the director of a community-based organization; although she could understand both perspectives of a community–academic partnership, we classified her as an academic on the Delphi panel. After initial selection, the panel had an approximately equal mix of academics and community health stakeholders. The list of panelists was shared with the funder to obtain additional recommendations. The funder did not suggest specific panelists but requested greater representation of non-academics on the panel. To address this request, we asked academic panelists to identify community partners they had worked with for recruitment to the panel. An additional three community health stakeholders joined the panel through this process.

Nineteen panelists were recruited to participate in the Delphi process. Most panelists were female (90%) and African American (63%), and all had at least some college education. The panel consisted of 8 (42%) academic researchers and 11 (58%) community health stakeholders, including 4 (21%) current and 5 (26%) former direct service providers. The mean age of panelists at the start of the project was 55 years (range, 26–76 years). Panelists had an average of 10 years of research experience (range, 0–35 years) and 10 years of community-based participatory research experience (range, 0–30 years). We included one community health stakeholder panelist with no research experience to provide the perspective of someone new to this type of work. One panelist dropped out after completing the first round of the Delphi process, leaving 18 (95%) panelists who remained engaged throughout the entire five-round process. Table 1 lists the name, affiliation, partner type, and location of these 18 panel members, who include patients, caregivers, advocacy group members, clinicians, and researchers.

Table 1.

Members of the Delphi Panel

| Name | Affiliation | Partner Type | Location |
|---|---|---|---|
| Elizabeth Baker | Saint Louis University | Academic | St. Louis, MO |
| Sylvia Burns | St. Louis Patient Research Advisory Board | Stakeholder | St. Louis, MO |
| Nell Meade Fields | UK Mountain Air Project | Stakeholder | Whitesburg, KY |
| Sheila Grigsby | University of Missouri—St. Louis | Academic | St. Louis, MO |
| Fern Herzberg | ARC XVI Fort Washington, Inc. | Stakeholder | New York, NY |
| Denise Hooks-Anderson | St. Louis DEAC; Saint Louis University School of Medicine | Academic | St. Louis, MO |
| Melvin Jackson | Strengthening the Black Family | Stakeholder | Raleigh-Durham, NC |
| Sherrill Jackson | St. Louis DEAC; Breakfast Club Breast Cancer Support Group | Stakeholder | St. Louis, MO |
| Loretta Jones | Healthy African American Families II | Stakeholder | Los Angeles, CA |
| Alison King | Washington University School of Medicine | Academic | St. Louis, MO |
| Danielle King | Kentucky River Community Care | Stakeholder | Hazard, KY |
| Danielle Lavallee | University of Washington, Surgical Outcomes Research Center | Academic | Seattle, WA |
| Chavelle Patterson | St. Louis Patient Research Advisory Board | Stakeholder | St. Louis, MO |
| Rosita Romero | Dominican Women’s Development Center | Stakeholder | New York, NY |
| Nancy Schoenberg | University of Kentucky College of Medicine | Academic | Lexington, KY |
| Kate McGlone West | University of Washington, Institute for Public Health Genetics | Academic | Seattle, WA |
| Consuelo Wilkins | Meharry-Vanderbilt Alliance | Academic | Nashville, TN |
| Jackie Wilkins | St. Louis Patient Research Advisory Board | Stakeholder | St. Louis, MO |

Reaching Consensus on the Engagement Principles

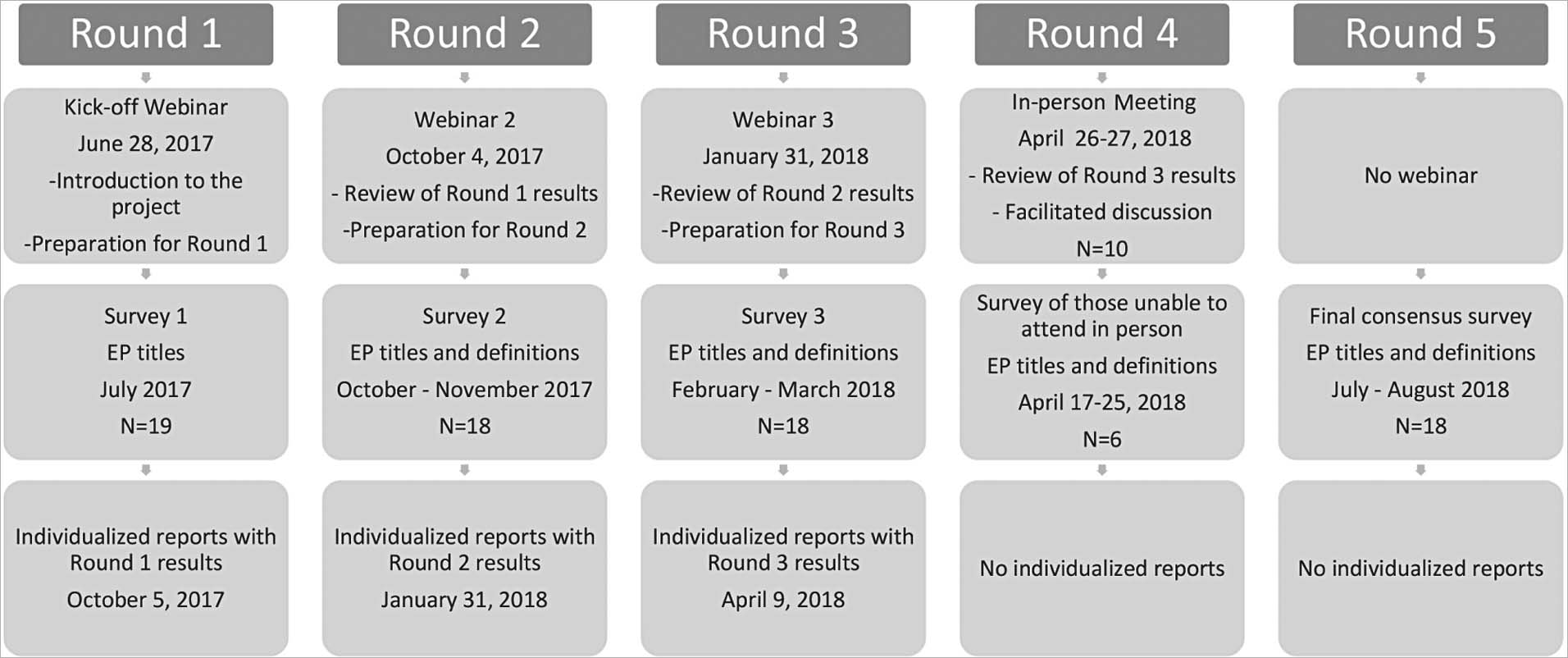

To identify the strongest possible set of EPs, we conducted a five-round modified Delphi consensus process with the panel of 19 national experts (Figure 1). We used web-based surveys administered on the Qualtrics survey platform (rounds 1–3 and 5) and an in-person meeting (round 4). Panelists unable to attend the in-person meeting could participate in real time via webinar (using the GoToMeeting platform) or in advance via web-based survey. In-person and webinar attendees voted synchronously on mobile devices using the Poll Everywhere web survey platform. A professional editor participated in the in-person meeting to ensure proper grammar and consistency across items and definitions. After the in-person meeting, the EP titles and definitions received a final edit, and these edited versions were voted on in round 5 (final consensus). This study was approved by two institutional review boards: the University Committee on Activities Involving Human Subjects, Office of Research Compliance at New York University, and the Human Research Protections Office at Washington University in St. Louis.

Figure 1.

Implementation of Modified Delphi Process and Timeline

Each round (except the final round) was preceded by a presentation (a recorded webinar in rounds 1–3 and in person for round 4) summarizing the results from the previous round and/or preparing panelists for the upcoming round. In addition, after rounds 1 to 3, panelists received individual reports that included their own responses and the aggregate responses and comments from other panelists. During the Delphi process, panelists evaluated EP titles and definitions with a goal of reaching consensus (>80% agreement). In rounds 1 through 3, panelists were presented with each principle (and, starting in round 2, its definition) and asked whether to keep, modify, or remove it. If they selected modify or remove, a follow-up open-ended question asked the reason for their choice. In rounds 4 and 5, panelists were asked whether they agreed or disagreed with each EP and definition. The project team discussed an EP or definition whenever consensus was not reached, that is, when four or more panelists (≥21%) suggested additions, deletions, or modifications.

Panelists’ recommendations on deleting or rewording items guided survey changes, with greater weight given to community health stakeholder input. Consensus percentages were calculated for the panel overall and then stratified by partner type (stakeholder/academic). Once panelists’ responses were quantified, the study investigators and staff met to review the quantitative data and panelists’ comments. Consistent recommendations for wording changes guided modifications, whereas the percentage of panelists voting to remove an item guided decisions to delete it. When the team could not agree, items were retained and advanced to the next round to obtain additional feedback from panelists.
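The consensus calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the study’s analysis code: the `Vote` record and `consensus_summary` function are hypothetical, and we assume that a “keep” (rounds 1–3) or “agree” (rounds 4–5) vote counts as agreement and that the consensus decision rests on the overall panel percentage, with the stratified percentages reported for context. These assumptions are inferred from the >80% threshold and the percentages reported in the tables.

```python
from dataclasses import dataclass

# Assumed rule: consensus requires strictly more than 80% overall agreement.
CONSENSUS_THRESHOLD = 0.80


@dataclass(frozen=True)
class Vote:
    """One panelist's vote on one EP title or definition (hypothetical record)."""
    partner_type: str  # "stakeholder" or "academic"
    agrees: bool       # assumed: True for "keep" (rounds 1-3) or "agree" (rounds 4-5)


def agreement(votes):
    """Return (number agreeing, number voting, proportion agreeing)."""
    n = len(votes)
    k = sum(v.agrees for v in votes)
    return k, n, (k / n if n else 0.0)


def consensus_summary(votes):
    """Percent agreement overall and stratified by partner type, plus the
    consensus decision (assumed to use the whole-panel percentage)."""
    strata = {
        "total": votes,
        "stakeholder": [v for v in votes if v.partner_type == "stakeholder"],
        "academic": [v for v in votes if v.partner_type == "academic"],
    }
    summary = {}
    for name, group in strata.items():
        k, n, p = agreement(group)
        summary[name] = (k, n, round(100 * p, 1))  # percent to one decimal
    reached = agreement(votes)[2] > CONSENSUS_THRESHOLD
    return summary, reached


# Example: round 1 votes on original EP 2 (10 of 11 stakeholders and
# 5 of 8 academics agreeing, matching the counts in Table 2).
votes = (
    [Vote("stakeholder", True)] * 10 + [Vote("stakeholder", False)]
    + [Vote("academic", True)] * 5 + [Vote("academic", False)] * 3
)
summary, reached = consensus_summary(votes)
print(summary)  # {'total': (15, 19, 78.9), 'stakeholder': (10, 11, 90.9), 'academic': (5, 8, 62.5)}
print(reached)  # False
```

Under this rule, with 19 panelists, 15 agreeing votes (four dissenting) give 78.9%, just below the threshold, whereas 16 agreeing votes give 84.2%; with 18 panelists, 15 agreeing votes (83.3%) suffice. The example also shows how strong stakeholder support (90.9%) can coexist with a lack of overall consensus when academic agreement is low.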

RESULTS

The Delphi process took approximately 1 year: round 1, July 2017 (n = 19); round 2, October to November 2017 (n = 18); round 3, February to March 2018 (n = 18); round 4, April 2018 (n = 16); and round 5, July to August 2018 (n = 18). The participation level varied during the 2-day, in-person meeting (round 4) from 11 to 16 participants (10 in person, 6 using a pre-meeting online survey [3 of these participating remotely]). We do not have any round 4 responses for 2 panelists (Figure 1).

Delphi Round 1

In round 1, panelists provided feedback on the 11 PECaD EPs.23 Based on round 1 feedback, four EPs were dropped (“acknowledge the community,” “disseminate findings and knowledge gained to all partners,” “integrate and achieve a balance of all partners,” and “plan for a long-term process and commitment”), and one EP was added (“build trust”). Two of the dropped principles (EP 2 and EP 11) lacked consensus (79% overall; 91% stakeholder; 63% academic). The primary reasons for dropping EPs were that they were not applicable to a broad range of projects and that they overlapped with other EPs. The new principle was added because panelists stated that trust is a key component of stakeholder engagement that contributes to the success of partnerships, and this concept was not captured in any of the other EPs.

Two EPs were modified despite reaching consensus. “Seek and use the input of community partners” was changed to “seek and use the input of all partners,” and “build on strengths and resources within the community” was modified to “build on strengths and resources within the community/target population.” These revisions were presented to panelists in round 2. The remaining five EPs had consensus (≥89.5%) and were not modified after round 1; these EP titles were not included in the round 2 survey (Table 2). The EPs not modified after round 1 were “focus on local relevance and social determinants of health”; “involve a cyclical and iterative process in pursuit of objectives”; “foster co-learning, capacity building, and co-benefit for all partners”; “facilitate collaborative, equitable partnerships”; and “involve all partners in the dissemination process.” Panelists’ comments and edits on the round 1 survey suggested the need to define each principle and reach consensus on the definitions.

Table 2.

Consensus in Engagement Principle (EP) Titles During Delphi Process Rounds 1–3

| Original EP | New EP | Round 1 Total (n = 19) | Round 1 Stakeholder (n = 11) | Round 1 Academic (n = 8) | Round 2 Total (n = 18) | Round 2 Stakeholder (n = 10) | Round 2 Academic (n = 8) | Round 3 Total (n = 18) | Round 3 Stakeholder (n = 10) | Round 3 Academic (n = 8) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 18 (94.7%) | 11 (100.0%) | 7 (87.5%) | NM | NM | NM | 14 (77.8%) | 9 (90.0%) | 5 (62.5%) |
| 2 | — | 15 (78.9%) | 10 (90.9%) | 5 (62.5%) | — | — | — | — | — | — |
| 3 | — | 18 (94.7%) | 11 (100.0%) | 7 (87.5%) | — | — | — | — | — | — |
| 4 | 2 | 17 (89.5%) | 10 (90.9%) | 7 (87.5%) | 16 (88.9%) | 9 (90.0%) | 7 (87.5%) | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) |
| 5 | 3 | 19 (100.0%) | 11 (100.0%) | 8 (100.0%) | NM | NM | NM | 13 (72.2%) | 8 (80.0%) | 5 (62.5%) |
| 6 | 4 | 19 (100.0%) | 11 (100.0%) | 8 (100.0%) | NM | NM | NM | NM | NM | NM |
| 7 | 5 | 18 (94.7%) | 11 (100.0%) | 7 (87.5%) | 16 (88.9%) | 10 (100.0%) | 6 (75.0%) | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) |
| 8 | 6 | 18 (94.7%) | 11 (100.0%) | 7 (87.5%) | NM | NM | NM | NM | NM | NM |
| 9 | — | 16 (84.2%) | 11 (100.0%) | 5 (62.5%) | — | — | — | — | — | — |
| 10 | 7 | 17 (89.5%) | 11 (100.0%) | 6 (75.0%) | NM | NM | NM | NM | NM | NM |
| 11 | — | 15 (78.9%) | 10 (90.9%) | 5 (62.5%) | — | — | — | — | — | — |
| — | 8 | — | — | — | 14 (77.8%) | 8 (80.0%) | 6 (75.0%) | 16 (88.9%) | 9 (90.0%) | 7 (87.5%) |

NM = not modified (no changes were made to the EP title, so panelists did not vote on it in that Delphi round).

Delphi Round 2

In round 2, panelists provided feedback on the new EP (“build trust”) and the two EPs that were modified based on feedback from the previous round (Table 2). In addition, preliminary definitions based on the literature were provided for each EP for panelists’ feedback (Table 3). Consensus was not reached on the added EP (78% overall; 80% stakeholder; 75% academic) because panelists felt the principle needed more description. Consensus was reached on the two EPs modified after round 1 (89% overall; 90%–100% stakeholder; 75%–88% academic). However, the lack of consensus on several EP definitions required modifying EP titles for clarity and consistency with the definitions.

Table 3.

Consensus in Engagement Principle (EP) Definitions Rounds 2 and 3

| EP | Round 2 Total (n = 18) | Round 2 Stakeholder (n = 10) | Round 2 Academic (n = 8) | Round 3 Total (n = 18) | Round 3 Stakeholder (n = 10) | Round 3 Academic (n = 8) |
|---|---|---|---|---|---|---|
| 1 | 12 (66.7%) | 8 (80.0%) | 4 (50.0%) | 15 (83.3%) | 10 (100.0%) | 5 (62.5%) |
| 2 | 14 (77.8%) | 9 (90.0%) | 5 (62.5%) | 15 (83.3%) | 9 (90.0%) | 6 (75.0%) |
| 3 | 15 (83.3%) | 10 (100.0%) | 5 (62.5%) | 16 (88.9%) | 9 (90.0%) | 7 (87.5%) |
| 4 | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) | 13 (72.2%) | 8 (80.0%) | 5 (62.5%) |
| 5 | 13 (72.2%) | 8 (80.0%) | 5 (62.5%) | 16 (88.9%) | 10 (100.0%) | 6 (75.0%) |
| 6 | 16 (88.9%) | 10 (100.0%) | 6 (75.0%) | 14 (77.8%) | 9 (90.0%) | 5 (62.5%) |
| 7 | 14 (77.8%) | 9 (90.0%) | 5 (62.5%) | 16 (88.9%) | 10 (100.0%) | 6 (75.0%) |
| 8 | 14 (77.8%) | 10 (100.0%) | 4 (50.0%) | 16 (88.9%) | 9 (90.0%) | 7 (87.5%) |

Based on responses in round 2, all three principles presented in this round required additional modification. “Seek and use the input of all partners” was changed to “partnership input is vital.” “Build on strengths and resources within the community/target population” was modified to “build on strengths and resources within the community/patient population.” “Build trust” was changed to “build and maintain trust in the partnership.” In addition, two other EPs were modified for clarity and consistency with their definitions: “focus on local relevance and social determinants of health” was changed to “focus on community perspectives and determinants of health,” and “involve a cyclical and iterative process in pursuit of objectives” was changed to “partnership sustainability to meet goals and objectives.” Definitions for EP 1 (67% overall; 80% stakeholder; 50% academic), EP 2 (78% overall; 90% stakeholder; 63% academic), EP 5 (72% overall; 80% stakeholder; 63% academic), EP 7 (78% overall; 90% stakeholder; 63% academic), and EP 8 (78% overall; 100% stakeholder; 50% academic) lacked consensus. Although three EP definitions (EPs 3, 4, and 6) reached the consensus threshold (Table 3), all of the preliminary definitions were modified based on panelists’ feedback and presented again in round 3. For example, for EP 1, panelists noted that “local relevance” was missing from the definition, suggested replacing the word “biomedical,” and pointed out that social determinants of health may not be what is currently most important to a given community. For EP 5, panelists objected to the term “target population.” For EP 8, panelists noted the need to include historical context and to understand the history of the community.

Delphi Round 3

In round 3, panelists reached consensus on three (“partnership input is vital,” “build on strengths and resources within the community or patient population,” and “build and maintain trust in the partnership”) of five EPs presented in this round (Table 2). Consensus was not reached on “focus on community perspectives and determinants of health” (78% overall; 90% stakeholder; 63% academic) and “partnership sustainability to meet goals and objectives” (72% overall; 80% stakeholder; 63% academic).

Consensus was reached on six EP definitions (Table 3). The panelists did not reach consensus for the definitions of “foster co-learning, capacity building, and co-benefit for all partners” (72% overall; 80% stakeholder; 63% academic) and “facilitate collaborative, equitable partnerships” (78% overall; 90% stakeholder; 63% academic). EPs and definitions for which consensus was not reached in round 3 were put on the agenda for the in-person meeting (round 4).

Delphi Round 4

Round 4 took place in person over 2 days, but only some panelists could attend (n = 10). This meeting was facilitated by the two project co-principal investigators, who have experience facilitating group discussions and stakeholder-engaged research. Facilitators kept the discussion focused and worked toward reaching consensus or understanding why consensus could not be reached. A professional editor attended the meeting to help ensure consistency, language clarity, and proper grammar. After a vibrant, thoughtful, and insightful discussion on each EP and definition, which was followed by editing for cohesion and clarity, all attending panelists reached consensus on eight EP titles and definitions on day 2. Given the reduction in participation for this round, the variable levels of participation of webinar attendees, and the editor’s final edits, we decided to add an additional round to reach final consensus.

Delphi Round 5

In round 5, the panel reached consensus (> 80%) on eight EPs and definitions (Table 4). One academic panelist disagreed with some titles (EPs 1–3). However, the community health stakeholder panelists had total consensus. Two academic panelists disagreed with the EP 1 definition, and one academic panelist disagreed with two definitions (EPs 4–5; Table 4). The final EPs and definitions are listed in Table 4.

Table 4.

Engagement Principles (EP) and Definitions: Frequency and Percent Final Consensus (Round 5)

| EP Title | Title Agreement, Total (n = 18) | Title Agreement, Stakeholder (n = 10) | Title Agreement, Academic (n = 8) | EP Definition | Definition Agreement, Total (n = 18) | Definition Agreement, Stakeholder (n = 10) | Definition Agreement, Academic (n = 8) |
|---|---|---|---|---|---|---|---|
| 1. Focus on community perspectives and determinants of health | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) | Community-engaged research addresses the concept of health from a holistic model that emphasizes physical, mental, and social well-being. It also emphasizes a community perspective on health that includes individual, social, economic, cultural, historical, and political factors as determinants of health and disease. | 16 (88.9%) | 10 (100.0%) | 6 (75.0%) |
| 2. Partner input is vital | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) | Meaningful participation is achieved through the collective efforts of all partners contributing to the generation of ideas. All partners understand each other’s needs, goals, available resources, and capacity to develop and participate in community engagement activities. Structures and processes facilitate sharing information, decision-making power, and resources among partners. | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) |
| 3. Partnership sustainability to meet goals and objectives | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) | Community-engaged research involves a cyclical, iterative process that includes partnership development and maintenance, where communications between the community partner(s) and the academic researchers are ongoing and bidirectional to meet mutually agreed-upon goals and objectives. | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) |
| 4. Foster co-learning, capacity building, and co-benefit for all partners | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) | Community-engaged research is a co-learning and empowering process that facilitates all partners sharing and transferring knowledge, skills, capacity, and power so that findings and knowledge benefit all partners. It integrates knowledge and action for mutual benefit of all partners. There is a commitment to the integration of research results and community change efforts with the intention that involved partners will benefit. | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) |
| 5. Build on strengths and resources within the community or patient population | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) | Community-engaged research seeks to identify and build on strengths, assets, resources, opportunities, and relationships that exist within identified communities to address their communal concerns. All partners appreciate the complementary assets and resources that communities and patients may provide. | 17 (94.4%) | 10 (100.0%) | 7 (87.5%) |
| 6. Facilitate collaborative, equitable partnerships | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) | Community partners and academic researchers share power and responsibility equitably. Diverse perspectives and populations are included in an equitable manner. Potential barriers to participation are addressed, communication and program activities are culturally appropriate, and all partners receive equal respect. Partners agree on who has access to research data and where the data will be stored. The academic researchers and the community partners commit to working in partnership toward achieving the study goals and to honor the commitments made to one another throughout the research process. | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) |
| 7. Involve all partners in the dissemination process | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) | The partners establish appropriate policies regarding ownership and dissemination of results. With partner agreement, findings are disseminated to all partners and beyond the partnership in understandable and respectful language. All partners are invited to review and be coauthors of publications and co-presenters at conferences as appropriate. | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) |
| 8. Build and maintain trust in the partnership | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) | Researchers and practitioners need to understand the cultural dynamics and history of specific groups and institutions in order to effectively identify ways to collaborate and to build respect and trust among all partners. This is an ongoing effort for all involved in the community engagement process and includes demonstrating one’s own trustworthiness and the ability to follow through on promises and commitment. | 18 (100.0%) | 10 (100.0%) | 8 (100.0%) |

DISCUSSION

The Delphi process allowed for a stakeholder-engaged approach to reaching consensus on EPs and definitions. This approach is particularly significant in light of the Institute of Medicine Committee report highlighting stakeholder engagement as an integral component of all phases of clinical, translational, community, and public health research to identify health needs, set priorities, and promote diverse participation in research studies.33 The consensus reached here on EPs and definitions would not have been as comprehensive without stakeholder input throughout the process. It became clear after round 1 that if we wanted to reach consensus on the EPs, we would also have to reach consensus on how each EP was defined. Because engagement is discussed differently across the many strands of stakeholder-engaged research literature, we needed to ensure agreement on what we meant by each EP.

Stakeholder engagement in research is time consuming and requires greater effort, but may yield a better, more relevant tool to assess stakeholder engagement in research than more traditional scientist-only processes. This became most evident during the in-person meeting where key components of language and meaning needed to be discussed to reach consensus. For example, the definitions of partnership, partners, and stakeholders were important in finalizing the EP definitions. This initial step—reaching consensus on what is to be measured—lays the foundation for content and construct validation of a quantitative stakeholder engagement measure.

The results of the Delphi process presented here should be considered in light of the study limitations. The sample of Delphi panelists was recruited using a convenience snowball sampling approach based on the networks of the project team members. The resulting sample was majority female (90%), non-Hispanic (95%), African American or Black (63%), with some college or higher education (100%) and resided in the Midwest or Southern region of the United States (72%). The views of other ethnic groups or gender identities, particularly those with no representation in the sample (e.g., Asian, Native American, and transgender) might be inadequately reflected in the Delphi process. In addition, other relevant identities were not queried (e.g., sexual orientation, health status), and those with limited English proficiency, from some health professions, and from other disciplines were not included; the impact of their presence or absence is unknown. Despite these limitations, we recruited a diverse national sample of Delphi panelists with a range of experience in community engagement and research.

Several panel members (n = 8; 44%) were not able to attend the round 4 in-person meeting. Six of these panel members completed a web-based survey that provided feedback in advance of the meeting, and three of them participated via webinar or phone during part of the meeting. To address this issue and to reach final consensus, we added a web-based round to the Delphi process, in which 18 panelists participated.

The results of this Delphi process make several significant contributions to community-engaged science.34 Reaching consensus on the key principles of stakeholder engagement in research, and on their definitions, is important because studies need to measure these principles to determine the influence of community–academic partnerships on the scientific process and scientific discovery. The project originated from a community–academic partnership, used a stakeholder-engaged Delphi process, and integrated different approaches to engagement (e.g., community-based participatory research, patient-centered outcomes research) to identify key EPs that apply across approaches. In future work, we intend to conduct content validation of the items used to measure each EP and to examine their psychometric properties. The results will be used to refine and validate a quantitative stakeholder engagement measure that can identify crosscutting best practices and tailored strategies for engaging specific populations.

ACKNOWLEDGMENTS

Supported through a Patient-Centered Outcomes Research Institute (PCORI) Award (ME-1511-33027). All statements in this report, including its findings and conclusions, are solely the authors’ and do not necessarily represent the views of PCORI, its Board of Governors, or its Methodology Committee. We thank Dr. Goldie Komaie for her project management throughout the Delphi process and Dr. Sharese Willis for her participation in the round 4 in-person meeting and her help in editing the article.

REFERENCES

- 1. Kwan B, Sills M. Stakeholder engagement in a patient-reported outcomes (PRO) measure implementation: a report from the SAFTINet practice-based research network [updated 2016; cited 2016 Jan 21]. Available from: www.jabfm.org/content/29/1/102.short.

- 2. Hickman D, Totten A, Berg A, Rader K, Goodman S, Newhouse R, editors. The PCORI Methodology Report. PCORI (Patient-Centered Outcomes Research Institute) Methodology Committee. Washington (DC); 2013. Available from: pcori.org/research-we-support/research-methodology-standards

- 3. Fawcett SB, Paine-Andrews A, Francisco VT, et al. Using empowerment theory in collaborative partnerships for community health and development. Am J Community Psychol. 1995;23(5):677–97.

- 4. Minkler M. Ethical challenges for the “outside” researcher in community-based participatory research. Health Educ Behav. 2004;31(6):684.

- 5. Minkler M, Wallerstein N, editors. Community-Based Participatory Research for Health. San Francisco (CA): Jossey-Bass; 2003.

- 6. Butterfoss FD, Francisco VT. Evaluating community partnerships and coalitions with practitioners in mind. Health Promot Pract. 2004;5(2):108–14.

- 7. Minkler M, Wallerstein N, editors. Community-Based Participatory Research for Health: From Process to Outcomes. San Francisco (CA): Jossey-Bass; 2010.

- 8. Wallerstein N, Duran B. Community-based participatory research contributions to intervention research: the intersection of science and practice to improve health equity. Am J Public Health. 2010;100(Suppl. 1):S40–6.

- 9. Wallerstein NB, Duran B. Using community-based participatory research to address health disparities. Health Promot Pract. 2006;7(3):312.

- 10. Israel BA. Methods in Community-Based Participatory Research for Health. San Francisco (CA): Jossey-Bass; 2005.

- 11. Israel BA, Schulz AJ, Parker EA, Becker A. Review of community-based research: assessing partnership approaches to improve public health. Annu Rev Public Health. 1998;19:173–202.

- 12. Goodman MS, Sanders Thompson VL. The science of stakeholder engagement in research: classification, implementation, and evaluation. Transl Behav Med. 2017;7(3).

- 13. Khodyakov D, Stockdale S, Jones A, Mango J, Jones F, Lizaola E. On measuring community participation in research. Health Educ Behav. 2013;40(3):346–54.

- 14. Goodman RM, Speers MA, McLeroy K, et al. Identifying and defining the dimensions of community capacity to provide a basis for measurement. Health Educ Behav. 1998;25(3):258–78.

- 15. Sanchez V, Carrillo C, Wallerstein N. From the ground up: building a participatory evaluation model. Prog Community Health Partnersh. 2011;5(1):45–52.

- 16. Schulz AJ, Israel BA, Lantz P. Instrument for evaluating dimensions of group dynamics within community-based participatory research partnerships. Eval Program Plann. 2003;26(3):249–62.

- 17. Lantz PM, Viruell-Fuentes E, Israel BA, Softley D, Guzman R. Can communities and academia work together on public health research? Evaluation results from a community-based participatory research partnership in Detroit. J Urban Health. 2001;78(3):495–507.

- 18. Francisco VT, Paine AL, Fawcett SB. A methodology for monitoring and evaluating community health coalitions. Health Educ Res. 1993;8(3):403–16.

- 19. Israel BA, Schulz AJ, Parker EA, Becker AB. Critical issues in developing and following community-based participatory research principles. In: Minkler M, Wallerstein N, editors. Community-Based Participatory Research for Health. San Francisco (CA): Jossey-Bass; 2008. p. 47–62.

- 20. Bowen DJ, Hyams T, Goodman M, West KM, Harris-Wai J, Yu J-H. Systematic review of quantitative measures of stakeholder engagement. Clin Transl Sci. 2017;10(5).

- 21. Thompson VLS, Drake B, James AS, et al. A community coalition to address cancer disparities: transitions, successes and challenges. J Cancer Educ. 2014;30(4):616–22.

- 22. Arroyo-Johnson C, Allen ML, Colditz GA, et al. A tale of two community networks program centers: operationalizing and assessing CBPR principles and evaluating partnership outcomes. Prog Community Health Partnersh. 2015;9(Suppl):61–9.

- 23. Goodman MS, Sanders Thompson VL, Arroyo Johnson C, et al. Evaluating community engagement in research: quantitative measure development. J Community Psychol. 2017;45:17–32.

- 24. McCloskey DJ, McDonald MA, Cook J, et al. Community engagement: definitions and organizing concepts from the literature. In: Principles of Community Engagement. 2nd ed. Atlanta (GA): Centers for Disease Control and Prevention; 2012. p. 41.

- 25. Khodyakov D, Stockdale S, Jones F, et al. An exploration of the effect of community engagement in research on perceived outcomes of partnered mental health services projects. Soc Ment Health. 2011;1(3):185–99.

- 26. De las Nueces D, Hacker K, DiGirolamo A, Hicks LS. A systematic review of community-based participatory research to enhance clinical trials in racial and ethnic minority groups. Health Serv Res. 2012;47(3 Pt 2):1363–86.

- 27. Viswanathan M, Ammerman A, Eng E, Gartlehner G, Lohr KN, Griffith D, Rhodes S, Samuel-Hodge C, Maty S, Lux L, Webb L, Sutton SF, Swinson T, Jackman A, Whitener L. Community-Based Participatory Research: Assessing the Evidence. Evidence Report/Technology Assessment No. 99 (Prepared by RTI—University of North Carolina Evidence-based Practice Center under Contract No. 290-02-0016). AHRQ Publication 04-E022-2. Rockville (MD): Agency for Healthcare Research and Quality; 2004 Jul.

- 28. Burke JG, Hess S, Hoffmann K, et al. Translating community-based participatory research principles into practice. Prog Community Health Partnersh. 2013;7(2):109.

- 29. Clinical and Translational Science Awards Consortium Community Engagement Key Function Committee Task Force on the Principles of Community Engagement. Principles of Community Engagement. NIH Publication No. 11-7782 [updated 2011]. Available from: www.atsdr.cdc.gov/communityengagement/

- 30. Ahmed SM, Palermo A-GS. Community engagement in research: frameworks for education and peer review. Am J Public Health. 2010;100(8):1380–7.

- 31. Butterfoss FD, Goodman RM, Wandersman A. Community coalitions for prevention and health promotion: factors predicting satisfaction, participation, and planning. Health Educ Behav. 1996;23(1):65–79.

- 32. Komaie G, Ekenga CC, Thompson VLS, Goodman MS. Increasing community research capacity to address health disparities: a qualitative program evaluation of the Community Research Fellows Training Program. J Empir Res Hum Res Ethics. 2017;12(1):55–66.

- 33. Institute of Medicine (IOM). The CTSA program at NIH: opportunities for advancing clinical and translational research [updated 2013]. Available from: www.nap.edu/download/18323.

- 34. Goodman MS, Sanders Thompson VL. The science of stakeholder engagement in research: classification, implementation, and evaluation. Transl Behav Med. 2017 Apr;7(3):186–91.