Abstract

Background

The Medical Council of India (MCI) introduced the Post Graduate (PG) curriculum as ‘Competency Based Medical Education’ (CBME). Feedback from end users is a vital step in curriculum evaluation. Therefore, the primary objective of this study was to develop and validate a Structured Feedback Questionnaire (SFQ) for postgraduates, encompassing all components of the PG-CBME curriculum.

Methods

The SFQ was developed with 23 Likert-based questions and three open-ended questions. Content validation was done by the Lawshe method. After obtaining institutional ethics clearance and informed consent, the SFQ was administered to 121 final-year PGs (response rate 100%). We performed principal component analysis (PCA) and structural equation modeling (SEM), and assessed model fit using the chi-squared to degrees-of-freedom ratio (χ2/df), goodness-of-fit index (GFI), adjusted GFI, comparative fit index (CFI) and root mean square error of approximation (RMSEA). Cronbach's alpha was used to estimate internal consistency.

Results

The validation resulted in a three-factor model comprising “curriculum” (42.1%), “assessment” (28%), and “support” (18.5%). A χ2/df ratio < 2, CFI (0.78), GFI (0.72) and RMSEA (0.09) indicated superior goodness of fit for the three-factor model for the sample data. All the extracted factors had good internal consistency of ≥0.9.

Conclusion

We believe that this 23-item SFQ is a valid and reliable tool that can be used for curriculum evaluation and for formulating recommendations to modify the existing curriculum wherever required, facilitating enriched program outcomes.

Keywords: Validation, Questionnaire, Medical education, Factor analysis, Post graduate studies

Introduction

The Post Graduate (PG) curriculum is subject to continuous transformation because of novel developments in the field of science.1,2 The most visible PG curricular change in recent times happened in India, with the introduction of a revised PG curriculum, the outcome-driven ‘Competency Based Medical Education’ (CBME), in alignment with global trends.3,4 The new PG curriculum by the Medical Council of India (MCI) is more learner friendly, patient oriented and gender sensitive. There is strong emphasis on horizontal and vertical integration, while preserving the autonomy of discipline-based teaching and evaluation. Formative and summative assessments have been restructured and curricular governance is streamlined for better logistics and administration.4,5

All curricular changes mandate evaluation and revision at regular intervals to meet the changing requirements of all stakeholders, i.e. learners, patients and the community.6 Curriculum Evaluation (CE) is defined as a process of reviewing the value or worthiness of part or the whole of a curriculum, to make it more meaningful.7, 8, 9 Many techniques such as questionnaires, focus group discussions, in-depth interviews, workshops and Delphi methods have been employed as tools for CE.10, 11 Feedback from students, who are among the important stakeholders in the process of educational delivery, plays an increasingly significant role in the implementation of high-quality learner-friendly education.12 Understanding feedback from the end users (postgraduates) about their learning environment, in terms of curriculum design, instructional strategies, teaching-learning environment, quality of resources, and so on, is a vital step in CE for planning possible future revisions.13

The development of a structured feedback questionnaire is a laborious and intricate process. In addition, the developed instrument should be validated for its usefulness before implementation.14 Therefore, the main objective of this study was to develop a structured questionnaire to seek feedback on the PG CBME curriculum and to evaluate the questionnaire for its content validity and psychometric reliability.

Material and methods

In this study a structured feedback questionnaire (SFQ) was developed and validated following the modified Zhou's Mixed Methods Model of Scale Development and Validation.15

Item generation

A series of informal focus group meetings was held with the Heads of the departments of various PG specialties and PG curriculum committee members under the guidance of the Vice Chancellor and Deans. Discussions during all the meetings were transcribed verbatim in a Word document.

Qualitative experts in the institution performed thematic analysis and generated themes, which eventually became the items of the SFQ. These comprised eight essential domains pertaining to the vital aspects of the PG curricular program, namely Orientation Program, Academics, Research, Monthly feedback, Common Internal Assessment, Infrastructure, Professionalism and Value-added courses. In addition, a few questions were added to obtain demographic details and other related general information.

Justification for eight domains

1) Orientation Program is usually conducted at the start of the first year, during which the students are briefed and sensitized about the objectives of the PG curriculum, MCI norms and program outcomes to be achieved at the end of PG training.
2) Academics is imparted by means of structured academic schedules using a variety of teaching-learning methods such as lectures, group discussions and practical demonstrations, along with provision for self-directed learning avenues such as symposiums, seminars, journal club discussions, didactic lectures taken for UG courses and pedagogy.
3) Research during the PG curriculum is supported both by training offered through Institutional Research Methodology Workshops and by support and motivation from faculty.
4) Monthly constructive feedback is given to PGs by the Heads of the Departments and the Dean of the Institution.
5) Periodic, centralized, biannual, structured common internal assessment exams are held for all PGs.
6) State-of-the-art, high-end infrastructure amenities are provided for PG training.
7) Professionalism or non-scholastic skills are fostered by providing opportunities to work as part of a team, accomplish tasks within appropriate deadlines, and maintain cordial relationships with colleagues, faculty, staff and other members.
8) Value-added courses such as Postgraduate Teaching Skills Workshops and department-specific Rapid Review Courses widen the horizons for exam preparation by PGs.

Content validation

Content validation was carried out by three internal and three external experts using the Lawshe method.16 The internal and external experts were PG teachers and medical educationists from within and outside our university, respectively, who were judiciously applying the medical education principles they had acquired. Agreement of at least five of the six experts was taken as the criterion for including an item in the SFQ. The input and suggestions from the experts led to significant modifications and a more comprehensive SFQ.
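As an illustration of the Lawshe approach, the content validity ratio (CVR) of an item can be computed from the number of experts who endorse it. The sketch below is not the authors' code: the function names and the example ratings are hypothetical, and only the five-of-six inclusion rule is taken from the text.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), ranging from -1 to 1."""
    if n_experts <= 0:
        raise ValueError("n_experts must be positive")
    if not 0 <= n_essential <= n_experts:
        raise ValueError("n_essential must lie between 0 and n_experts")
    half = n_experts / 2
    return (n_essential - half) / half


def retain_item(n_essential: int, min_agree: int = 5) -> bool:
    """Inclusion rule stated in the text: at least five of six experts agree."""
    return n_essential >= min_agree


# Hypothetical counts of experts (out of six) endorsing each candidate item.
ratings = {"item_a": 6, "item_b": 5, "item_c": 4}
for name, n_agree in ratings.items():
    cvr = content_validity_ratio(n_agree, 6)
    print(name, round(cvr, 2), "retain" if retain_item(n_agree) else "drop")
```

With six experts, agreement by five corresponds to a CVR of about 0.67, and agreement by all six to a CVR of 1.0.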

The resultant SFQ had 23 closed-ended questions measured on a Likert scale of 1 (strongly disagree) to 5 (strongly agree) and three open-ended questions to capture perceptions regarding facilitating factors, hindering factors and suggestions. A Likert scale was used for scoring the SFQ as it is a widely accepted and commonly used psychometric tool in educational research. It offers respondents a wide range of options, so that their attitudes can be measured in a scientifically accepted and validated manner.17

Participants and procedure

After obtaining clearance from the Institutional Ethics Committee (IEC_NI/19/FEB/68/07) and informed consent from all participants, a hard copy of the PG SFQ was administered to final-year PGs (29 from pre- and paraclinical departments; 92 from clinical departments). The response rate was 100%, and all responses were coded and entered into Excel. Missing item responses were replaced with the mean.
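The mean replacement of missing responses can be sketched as follows. The text does not state whether the item mean or the respondent mean was used; this example assumes the item (column) mean, and both the function name and the data are made up for illustration.

```python
from statistics import mean


def impute_item_means(rows):
    """Replace missing responses (None) with the mean of the observed
    responses to the same item (column). Assumes every item has at
    least one observed response; otherwise statistics.mean raises."""
    n_items = len(rows[0])
    item_means = [
        mean(r[j] for r in rows if r[j] is not None) for j in range(n_items)
    ]
    return [
        [item_means[j] if value is None else value for j, value in enumerate(r)]
        for r in rows
    ]


# Hypothetical Likert responses (1 to 5) from three respondents to three items.
demo = [
    [4, 5, None],
    [5, None, 3],
    [3, 4, 5],
]
for row in impute_item_means(demo):
    print(row)
```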

Quantitative validation

The responses to the SFQ were analyzed with a series of statistical tests for evidence of construct validity and internal reliability, using exploratory factor analysis (EFA) and confirmatory factor analysis (CFA).

Qualitative validation

The results of the open-ended questions were examined using thematic analysis and prominent answers were grouped as themes.

Statistical analysis

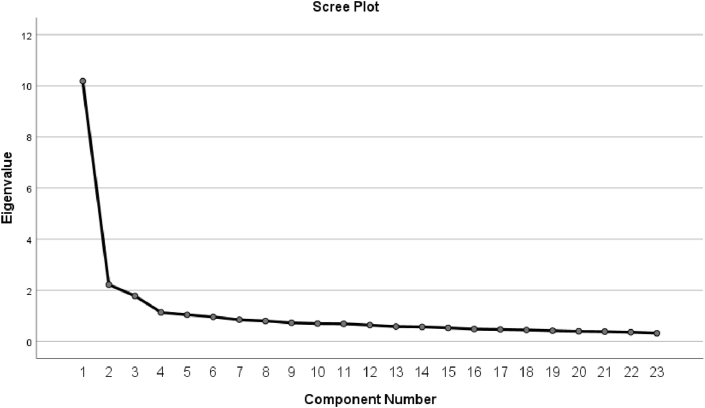

We performed principal component analysis (PCA) to explore the relationship between the items (observed variables) and factors (latent variables). The nature of PCA is exploratory rather than confirmatory. We retained only factors with eigenvalues greater than 1.2.18
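The extraction step described above can be sketched with NumPy as an eigen-decomposition of the item correlation matrix, keeping components whose eigenvalue exceeds the stated cutoff. This is a minimal illustration on simulated data, not the analysis pipeline used in the study; the function name is hypothetical, no rotation is applied, and the simulated items are assumptions made only to have something to decompose.

```python
import numpy as np


def pca_retained(data, min_eigenvalue):
    """PCA on the correlation matrix; keep components above the cutoff."""
    corr = np.corrcoef(data, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(corr)  # ascending order
    order = np.argsort(eigenvalues)[::-1]             # sort descending
    eigenvalues = eigenvalues[order]
    eigenvectors = eigenvectors[:, order]
    keep = eigenvalues > min_eigenvalue
    explained = eigenvalues / eigenvalues.sum()       # share of variance
    # Unrotated loadings: eigenvector scaled by sqrt(eigenvalue).
    loadings = eigenvectors[:, keep] * np.sqrt(eigenvalues[keep])
    communalities = (loadings ** 2).sum(axis=1)       # h2 per item
    return eigenvalues, explained, loadings, communalities


# Simulated data: 200 respondents, six items driven by two latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
items = np.repeat(latent, 3, axis=1) + 0.3 * rng.normal(size=(200, 6))

eigenvalues, explained, loadings, communalities = pca_retained(items, 1.2)
print("eigenvalues:", np.round(eigenvalues, 2))
print("components retained:", loadings.shape[1])
print("variance explained by retained:", round(explained[: loadings.shape[1]].sum(), 3))
```

The cutoff is passed as a parameter so that the same function can be run with the conventional Kaiser criterion of 1.0 for comparison.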

The Kaiser-Meyer-Olkin (KMO) measure and Bartlett's test of sphericity were used to examine whether the data met the criteria for PCA to identify the factor structure. For internal consistency of the items, a Cronbach's alpha of >0.70 was considered an acceptable reliability coefficient.19 Structural equation modeling (SEM) was performed to evaluate the relationship between the structural paths and factors using AMOS version 22. We assessed global goodness-of-fit indices using R version 4.0.2. These indices include χ2 and its ratio to the degrees of freedom (χ2/df), goodness-of-fit index (GFI), comparative fit index (CFI), root mean square error of approximation (RMSEA), adjusted goodness-of-fit index (AGFI), normed fit index (NFI) and standardized root mean square residual (SRMR). GFI describes how well the model fits the set of observed data, in terms of the proportion of variance and covariance accounted for. Its value ranges from 0 to 1, and a value of 1 indicates a perfect fit. AGFI adjusts the GFI for the model's degrees of freedom relative to the number of observed variables and typically ranges between 0 and 1, with larger values indicating a better fit. CFI compares the fit of the proposed model with that of the null model; a value greater than 0.90 is considered acceptable. RMSEA also describes quantitatively how well the model fits the observed data; a value below 0.05 is considered a good fit. For SRMR, values ≤ 0.05 are considered a good (close) fit and values between 0.05 and 0.08 an adequate fit. NFI values range from 0 to 1, with higher values indicating better fit.20

Results

Principal component analysis (PCA)

A KMO index of 0.89 was obtained for our model; as it is greater than 0.50, the data set is suitable for factor analysis. Bartlett's test of sphericity was also highly significant (χ2(253) = 4207; p = 0.00). On this basis, the factor model was identified using the PCA approach. PCA of the 23 items yielded a three-factor model that accounted for 80.87% of the variance (Fig. 1). The first factor, which accounted for 42.1% of the variance, comprised nine items and was labeled “Curriculum”. The second factor, labeled “Assessment”, accounted for 28% of the variance and included five items. The final factor, labeled “Support”, accounted for 18.5% of the variance and consisted of nine items. All the items loaded well on their respective factors (Table 1). We calculated Pearson's correlation coefficients to explore the inter-relationships between factors (Table 2). All the correlation coefficients were significant and positive. The strength of the association was greatest between curriculum and assessment.
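Both suitability checks reported above can be computed directly from the item correlation matrix. The following is a minimal NumPy sketch using the standard textbook formulas; the function names are hypothetical, the small demonstration matrix is invented, and the p value is omitted because it would require a chi-square distribution function from a statistics library.

```python
import numpy as np


def bartlett_sphericity(corr, n_obs):
    """Bartlett's test statistic and degrees of freedom for a correlation matrix."""
    p = corr.shape[0]
    _, logdet = np.linalg.slogdet(corr)
    chi_square = -(n_obs - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return chi_square, df


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale                      # partial correlations
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = (corr[off_diag] ** 2).sum()
    q2 = (partial[off_diag] ** 2).sum()
    return r2 / (r2 + q2)


# Invented 3 x 3 correlation matrix with all off-diagonal correlations 0.5.
demo_corr = np.full((3, 3), 0.5)
np.fill_diagonal(demo_corr, 1.0)

chi_square, df = bartlett_sphericity(demo_corr, n_obs=121)
print("Bartlett chi-square:", round(chi_square, 2), "df:", df)
print("KMO:", round(kmo(demo_corr), 3))

# Degrees of freedom for a 23-item instrument depend only on the item count.
print("df for 23 items:", bartlett_sphericity(np.eye(23), n_obs=121)[1])
```

For 23 items the degrees of freedom are 23 × 22 / 2 = 253, which agrees with the value reported for Bartlett's test.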

Fig. 1.

Scree plot showing that the first three factors account for most of the total variability of the 23 items, based on the eigenvalues.

Table 1.

Principal component analysis with factor loadings and communalities (h2) of each item.

| Factor | Item | Factor loading | Communality (h2) |
|---|---|---|---|
| Curriculum | C1. Structured academic schedule by the departments was useful in planning my learning on a daily basis | 0.897 | 0.82 |
| | C2. Structured academic schedule by the departments was available to me well in advance | 0.887 | 0.91 |
| | C3. Timing of the sessions and format of the structured academic schedule matched my expectations | 0.906 | 0.83 |
| | C4. i) Adequate patient resources were available to obtain clinical/practical skills. ii) Adequate equipments were available to obtain clinical/practical skills. iii) Adequate opportunities were available to obtain clinical/practical skills. | 0.919 | 0.83 |
| | C5. Faculty guided/trained me adequately to obtain clinical/practical skills | 0.893 | 0.87 |
| | C6. Appropriate teaching-learning methods were used by the faculty to obtain clinical/practical/attitudinal/communication skills | 0.921 | 0.79 |
| | C7. Adequate opportunities were available for self-directed learning/active learning such as i) Symposium ii) Seminar iii) Journal club discussions, iv) Didactic lectures taken for UG courses, v) Pedagogy, vi) Others (Specify) | 0.758 | 0.75 |
| | C8. Faculty helped me in my research activities (Oral/poster presentation/dissertation) | 0.783 | 0.79 |
| | C9. Faculty trained me to do oral/poster presentation in conferences | 0.862 | 0.74 |
| Assessment | A1. Monthly feedback given by the faculty was very beneficial for improving my learning outcomes | 0.929 | 0.76 |
| | A2. Faculty gave constructive feedback | 0.918 | 0.77 |
| | A3. I was informed about the structured internal assessment schedule well in advance enabling me to prepare well | 0.918 | 0.82 |
| | A4. The timing and format of the structured internal assessment was appropriate for my course | 0.937 | 0.76 |
| | A5. Common internal assessment at regular intervals improved my confidence towards final exams | 0.937 | 0.78 |
| Support | S1. At the start of the first year I was oriented to the objectives of the Post Graduate (PG) program | 0.749 | 0.83 |
| | S2. I was sensitized about the MCI norms and program outcomes to be achieved at the end of PG training | 0.611 | 0.83 |
| | S3. PG training and infrastructure was adequate to achieve what I wanted to learn | 0.892 | 0.76 |
| | S4. The high-class infrastructure enabled me to be confident of clinical and procedural skills that makes me a better professional in my chosen field | 0.947 | 0.84 |
| | S5. Facilities for stay, food and library were adequate and appropriate | 0.782 | 0.66 |
| | S6. I had the opportunity to participate in quality improvement processes such as NAAC, JCI | 0.891 | 0.81 |
| | S7. I had a good team to work with | 0.821 | 0.84 |
| | S8. Faculty, staff and other members had a cordial working relationship with me and were always willing and available to help | 0.823 | 0.85 |
| | S9. I have been empowered to effectively manage time and meet workload demands | 0.819 | 0.86 |

| Statistic | Value |
|---|---|
| Cronbach's alpha | 0.95 |
| % variance | 42.1 |
| KMO | 0.92 |
| Bartlett's test of sphericity, approx. chi-square | 4207 |
| df | 253 |
| Sig. | 0 |

Table 2.

Correlation matrix for the factors.

| | Curriculum | Assessment | Support |
|---|---|---|---|
| Curriculum | 0.79 | 0.53 | 0.26 |
| Assessment | 0.59 | 0.76 | 0.24 |
| Support | 0.07 | 0.35 | 0.93 |

Confirmatory factor analysis

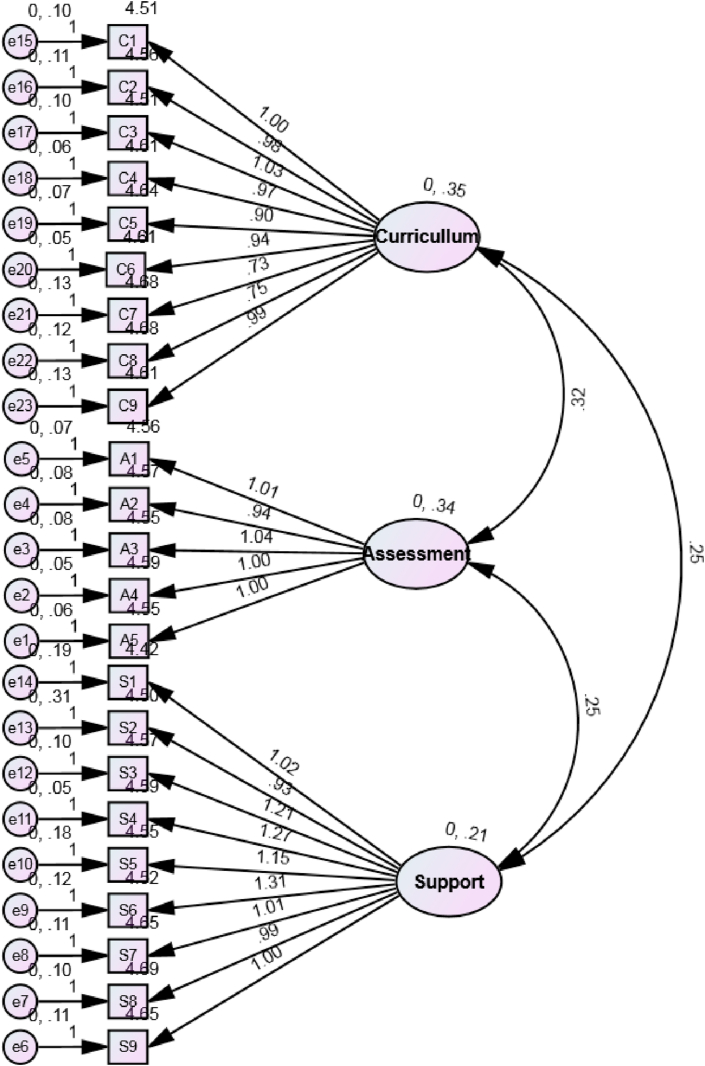

While conducting the CFA, no warning messages were issued by AMOS regarding the estimated parameters. On this basis, the three-factor model passed the first step of identification. The next step was to illustrate the items (observed) and factors (unobserved) in the hypothesized model (Fig. 2). The factors are represented as ellipses, the items as rectangles, and the measurement errors as circles. Each arrow between an item and a factor represents a regression path, and the number on it is the standardized regression weight. Each arrow between a small circle and an item represents a measurement error term. The double-headed arrows between pairs of factors represent their correlation or covariance in the model.

Fig. 2.

SEM results of the confirmatory factor analysis for the three factor model.

Assessment of model fit

In Table 3, the significant χ2 value (p = 0.001) does not, by itself, support the three-factor model: a model is usually interpreted as fitting the observed data well when the p value of the chi-squared test is not significant. However, empirical studies have shown that the p value tends to be significant when the sample is large. Since the chi-squared test depends on sample size, the χ2/df ratio is a better index of goodness of fit. A ratio < 2 indicates a superior goodness of fit for the three-factor model for the sample data. The CFI (0.78), GFI (0.72), AGFI (0.71), NFI (0.89), SRMR (0.06) and RMSEA (0.09) values indicate that the three-factor model fits satisfactorily.

Table 3.

Goodness-of-fit indices for the three-factor model.

| Statistics | CFI | RMSEA | GFI | AGFI | NFI | SRMR | χ2 | df | χ2/df | P value |
|---|---|---|---|---|---|---|---|---|---|---|
| Model fit for basic model with three domains | 0.78 | 0.09 | 0.72 | 0.71 | 0.89 | 0.06 | 298 | 153 | 1.9 | 0.0001 |

GFI: goodness-of-fit index; AGFI: adjusted goodness-of-fit index; NFI: normed fit index; CFI: comparative fit index; RMSEA: root mean square error of approximation; SRMR: standardized root mean square residual; χ2: model chi-square; df: degrees of freedom.
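Two of the indices in Table 3 can be recomputed from the reported χ2, df and sample size (n = 121), which gives a quick consistency check. The sketch below uses the common RMSEA formula with n − 1 in the denominator; whether the software used in the study follows exactly this convention is an assumption, and the function names are hypothetical.

```python
import math


def chi_square_ratio(chi_square: float, df: int) -> float:
    """Normed chi-square: model chi-square divided by its degrees of freedom."""
    return chi_square / df


def rmsea(chi_square: float, df: int, n_obs: int) -> float:
    """RMSEA = sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n_obs - 1)))


# Values reported in Table 3 and in the Methods (121 respondents).
ratio = chi_square_ratio(298, 153)
error = rmsea(298, 153, 121)
print("chi-square/df:", round(ratio, 2))
print("RMSEA:", round(error, 3))
```

Rounded to the precision used in Table 3, these calculations reproduce the reported χ2/df of 1.9 and RMSEA of 0.09.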

Internal consistency

To assess the internal consistency of the items, Cronbach's α was estimated for the items and factors. All the extracted factors had good internal consistency of ≥0.9 (Table 1).
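Cronbach's α for a set of items can be computed from the item variances and the variance of the total score. The sketch below uses only the Python standard library; the function name and the five example response rows are hypothetical and are not data from the study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total score).
    `rows` holds one list of item scores per respondent (no missing values)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("at least two items are required")
    item_variances = [variance([r[j] for r in rows]) for j in range(k)]
    total_variance = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical Likert responses from five respondents to three items.
demo = [
    [4, 5, 4],
    [3, 3, 3],
    [5, 5, 4],
    [2, 2, 3],
    [4, 4, 5],
]
alpha = cronbach_alpha(demo)
print("Cronbach's alpha:", round(alpha, 3))
```

In practice the function would be applied separately to the items of each factor, so that each factor's α can be compared with the 0.70 threshold given in the Methods.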

Qualitative analysis

When asked ‘What were the top three things that facilitated your PG training?’, many PGs gave responses such as “teaching in the form of lectures, small group discussions and bed side teaching during rounds facilitated our learning”, “hands on training enabled us to learn practical skills”, “regular training made us proficient”, “periodic biannual assessments with feedback facilitated our learning process”, “research projects widened our horizons of learning”.

For the question ‘What were the top three things that did not facilitate your PG training?’, the most common responses were “doing research/dissertation in a very advanced topic is challenging”, “too many lectures and small group discussions take our time away from research”, “hands on training sessions should be increased”, “more number of assessments make it overwhelming for us to study”.

When asked about ‘suggestions for improvement, if any’, the top responses were “Additional training is required in the field of administration of health centers, manpower management and framing of policies”, “Frequent family health study is a useful concept, should be promoted more”, “to improve training in PHC (primary health centres) and include occupational health”, “we are happy, no need to change anything”.

Discussion

The PG-CBME curriculum by the MCI is seen as a “living document” that has the potential to evolve with the changing requirements of the educational milieu. Feedback from the stakeholders will contribute to revisions of the curriculum.

The present study was undertaken to develop and validate an SFQ to measure perceptions regarding various aspects of the PG-CBME curriculum, in order to identify its strengths and areas for improvement and to recommend changes, if required.

There are many questionnaires available for curriculum feedback; however, to our knowledge, this is the first study to develop and validate an SFQ specifically targeting the PG-CBME curriculum. The validation of the 23-item SFQ, using the PCA approach, resulted in a three-factor model comprising “curriculum” (42.1%), “assessment” (28%), and “support” (18.5%).

The psychometric properties of SFQ and calculation of fit using CFA approach indicated that the three-factor model agrees with the data and offered the best fit. All the three factors had more than three items which is the minimum requirement for model generation. SEM showed the pattern of interrelationship among all the variables.

Under the factor ‘Curriculum’, the item ‘Appropriate teaching-learning methods were used by the faculty to obtain clinical/practical/attitudinal/communication skills’ loaded the highest (0.921), followed by the item ‘Adequate patient resources/equipment/opportunities were available to obtain clinical/practical skills’ (0.919). This finding is endorsed by other studies, which support the view that an ideal curriculum should have properly aligned teaching-learning methods with sufficient learning opportunities, to ensure delivery of the expected learning outcomes.21

The ‘Assessment’ factor had the highest loadings for ‘the timing and format of the structured internal assessment was appropriate for my course’ and ‘common internal assessment at regular intervals improved my confidence towards final exams’ (0.937 each). This is in line with the observations of Sharma S, who found that scheduled formative assessments facilitate learning.22 Assessment coupled with constructive feedback can be used by students to upgrade their knowledge and academic achievements. The outcomes of regular assessment also help educators to reorganize their teaching methods to suit their learners' needs.23 Our college conducted a common internal assessment (CIA) twice a year for all the postgraduates, starting from their first year of the postgraduate period. This helps the postgraduates and the faculty to assess the level of competency achieved by each student, so that appropriate remedial measures can be taken for students who are not able to achieve the specified level of competence and additional resources can be provided for the high achievers. Postgraduate students have given positive feedback on the CIA and have found it very useful.

The item ‘the high-class infrastructure enabled me to be confident of clinical and procedural skills’ had the highest loading (0.947) under the factor named ‘support’, which shows that CBME implementation is a resource-intensive process; therefore, the program should be supported with adequate materials and infrastructure for proper medical training.24 The feedback indicated that our institution is able to meet the infrastructure needs of postgraduates from all disciplines.

We were not able to compare our findings with previous studies, as there are no studies on the development and validation of an SFQ employing the CFA and SEM approach that are specifically designed to address the PG CBME curriculum. This 23-item SFQ is a valid and reliable tool, and we encourage academicians and researchers to consider using it across medical colleges in India, so that the resulting data from a large sample of PG students can be used to formulate recommendations to the MCI to modify the existing curriculum wherever required and thereby enhance the program outcomes. Such feedback is a mechanism by which the MCI can be provided with scientific evidence of positive feedback on, and successful implementation of, the revised curriculum.

Limitations

The present study is based on data obtained from a single institution; hence, the findings may have limited generalizability. However, our sample comprises students from culturally and socially diverse backgrounds, which might mitigate this limitation. PCA and CFA were performed on the same data set, which may be considered a shortcoming.

Conclusion

The SEM generated a meaningful model highlighting three important elements, namely ‘Curriculum’, ‘Assessment’ and ‘Support’. This study has generated and validated an SFQ for obtaining feedback from postgraduates on various elements of the postgraduate curriculum, and has provided scientific evidence for the application of the SFQ as a valid, reliable and psychometrically tested scale for evaluating medical PGs' perceptions of the newly introduced MCI CBME curriculum.

Declaration of competing interests

The authors have none to declare.

Acknowledgment

The authors would like to acknowledge the unstinted support by the management of SRIHER (DU), Chennai and the postgraduate students who participated in the study.

References

- 1. Tanner D., Tanner L.N. 4th ed. Pearson Merrill/Prentice Hall; Upper Saddle River, NJ: 2006. Curriculum Development: Theory into Practice. Macmillan College.

- 2. Hussain A., Dogar A.H., Azeem M., Shakoor A. Evaluation of curriculum development process. Int J Humanit Soc Sci. 2011;1:263–271.

- 3. Padmavathi R., Dilara K., MaheshKumar K., Anandan S., Vijayaraghavan P.V. RAPTS–An empowerment to the medical postgraduates. Clin Epidemiol Glob Health. 2020;8:806–807.

- 4. MCI. 2020 April 01. Competency Based under Graduate Curriculum. https://www.mciindia.org/CMS/information-desk/for-colleges/ug-curriculum

- 5. MCI. 2018. Attitude, Ethics and Communication (AETCOM) Competencies for the Indian Medical Graduate. https://www.mciindia.org/CMS/wp-content/uploads/2019/01/AETCOM_book.pdf

- 6. Thomas P.A., Kern D.E., Hughes M.T., Chen B.Y. JHU Press; 2016. Curriculum Development for Medical Education: A Six-step Approach.

- 7. Kern D., Thomas P., Howard D., Bass E.B. Johns Hopkins University; Baltimore, Md: 1998. Curriculum Development for Medical Education: A Six-step Approach.

- 8. Musingafi M.C., Mhute I., Zebron S., Kaseke K.E. Planning to teach: interrogating the link among the curricula, the syllabi, schemes and lesson plans in the teaching process. J Educ Pract. 2015;6:54–59.

- 9. Morrison J. Evaluation. BMJ. 2003;326:385–387. doi: 10.1136/bmj.326.7385.385.

- 10. Bharvad A.J. Curriculum evaluation. Int J Res. 2010;1:72–74.

- 11. Jacobs P.M., Koehn M.L. Curriculum evaluation: who, when, why, how? Nurs Educ Perspect. 2004;25:30–35.

- 12. Steyn C., Davies C., Sambo A. Eliciting student feedback for course development: the application of a qualitative course evaluation tool among business research students. Assess Eval High Educ. 2019;44:11–24.

- 13. Goldfarb S., Morrison G. Continuous curricular feedback: a formative evaluation approach to curricular improvement. Acad Med. 2014;89:264–269. doi: 10.1097/ACM.0000000000000103.

- 14. Nievas Soriano B.J., García Duarte S., Fernández Alonso A.M., Bonillo Perales A., Parrón Carreño T. Validation of a questionnaire developed to evaluate a pediatric eHealth website for parents. Int J Environ Res Publ Health. 2020;17:2671. doi: 10.3390/ijerph17082671.

- 15. Zhou Y. A mixed methods model of scale development and validation analysis. Meas Interdiscip Res Perspect. 2019;17:38–47.

- 16. Yapa Y., Dilan M., Karunaratne W., Widisinghe C., Hewapathirana R., Karunathilake I. Computer literacy and attitudes towards eLearning among Sri Lankan medical students. Sri Lanka J Biomed Inform. 2012;3:82–96.

- 17. Leung S.O. A comparison of psychometric properties and normality in 4-, 5-, 6-, and 11-point Likert scales. J Soc Serv Res. 2011;37:412–421.

- 18. Henson R.K., Roberts J.K. Use of exploratory factor analysis in published research: common errors and some comment on improved practice. Educ Psychol Meas. 2006;66:393–416.

- 19. Tavakol M., Dennick R. Making sense of Cronbach's alpha. Int J Med Educ. 2011;2:53. doi: 10.5116/ijme.4dfb.8dfd.

- 20. Babyak M.A., Green S.B. Confirmatory factor analysis: an introduction for psychosomatic medicine researchers. Psychosom Med. 2010;72:587–597. doi: 10.1097/PSY.0b013e3181de3f8a.

- 21. Ali L. The design of curriculum, assessment and evaluation in higher education with constructive alignment. J Educ e-Learn Res. 2018;5:72–78.

- 22. Sharma S. From chaos to clarity: using the research portfolio to teach and assess information literacy skills. J Acad Librarian. 2007;33:127–135.

- 23. Nicol D., Macfarlane-Dick D. The Higher Education Academy; York: 2004. Rethinking Formative Assessment in HE: A Theoretical Model and Seven Principles of Good Feedback Practice.

- 24. Pirie J., Amant L.S., Takahashi S.G. Managing residents in difficulty within CBME residency educational systems: a scoping review. BMC Med Educ. 2020;20:1–12. doi: 10.1186/s12909-020-02150-0.