Abstract

Classifying mental disorders has become a major issue in psychology in recent years. This article establishes a relation between decision trees and an encoding of fMRI data that simplifies the analysis of different mental disorders and achieves an ROC index above 0.9. We encode fMRI information into a power-law distribution with integer elements by means of graph theory: the network is characterized by degrees, which count the effective links whose Pearson correlation between voxels exceeds a threshold. When the degrees are ranked from low to high, the resulting distribution can be fitted by a power law. We use SHR and WKY rats as samples and employ decision trees, built on features selected by the chi2 algorithm, to classify different states of mental disorder. This method not only provides the decision tree and the encoding, but also enables the construction of a transformation matrix that is capable of connecting different mental disorders. Although the latter attempt is still in its infancy, it may contribute to unraveling the mystery of psychological processes.

Subject terms: Computational biology and bioinformatics, Neuroscience, Diseases

Introduction

What are the roots of mental disorder?1 On the path to answering this question, researchers are beginning to untangle the common biology that links supposedly distinct psychiatric conditions. Along this line of effort, the purpose of this study is to determine whether the combination of power law and decision trees improves the efficiency of classifying different mental disorders or states. To this end, this article comprises two steps: (a) providing a method to encode fMRI data into a power-law distribution; (b) using decision trees to classify each encoding into the correct state. Recent studies showed that human brain activity can be expressed by different network equations2 with the aid of graph methods applied to fMRI samples3. Power laws have been reported for many complex physical systems; examples include city populations4, the world wide web5, and fluctuations in financial markets6. In this paper, fMRI information is encoded by a power-law distribution. We discuss the power-law trait of authorized7 rat samples of the SHR and WKY strains. In addition, isoflurane (ISO) is used to further divide our samples into four states: high-isoflurane WKY (HW), high-isoflurane SHR (HS), low-isoflurane SHR (LS), and low-isoflurane WKY (LW). Each sample comprises 11 slices and 525 time points, with FOV = 30 mm and slice thickness = 1 mm, and each state contains 20 samples. The mental disorder is regarded as the mental difference, and isoflurane as the stimulus.

We use the same procedure as Ref.1 to obtain the power-law network distribution for the fMRI data of rats. Pearson correlation defined in Eq. (1) plays an important role because this calculable quantity can reflect the strength of positive correlation between any two voxels:

| 1 |

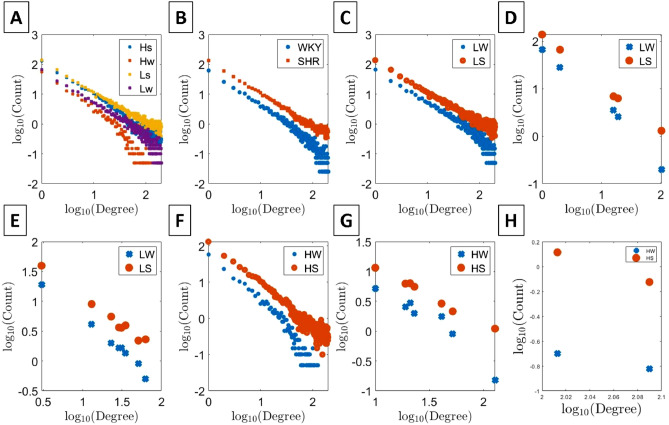

where a voxel at position x and time t is denoted as v(x, t), while σ represents the standard deviation. When Eq. (1) exceeds a threshold value, chosen to be 0.7, the two voxels are regarded as being linked. After counting the degree of connectivity of every voxel in the whole brain, we obtain the power-law distribution. Figure 1A shows the average distributions of the four states, and Fig. 1B, C and F show the average distributions of two states. The power-law distribution is useful because it sheds light on the difficult problem of analysing mental states. However, its exponent alone is insufficient to distinguish samples, because the resulting ROC index is always less than 0.7. For this reason, the chi2 algorithm is used to select the degrees that differ significantly between groups.
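For reference, the Pearson correlation between two voxels is conventionally written as below. This is the textbook form expressed in the notation of the text and is only an assumption about Eq. (1), which is available here as an image; the symbol r and the angle brackets (averages over time t) are introduced for illustration.

```latex
r(x_1, x_2) =
  \frac{\langle v(x_1,t)\, v(x_2,t) \rangle
        - \langle v(x_1,t) \rangle \langle v(x_2,t) \rangle}
       {\sigma\big(v(x_1)\big)\, \sigma\big(v(x_2)\big)},
\qquad
\sigma^2\big(v(x)\big) = \langle v(x,t)^2 \rangle - \langle v(x,t) \rangle^2
```

Under this form, two voxels x_1 and x_2 would count as linked when r(x_1, x_2) > 0.7.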

Figure 1.

Power-law distribution consists of degree and its counted number. Each state, such as HS, HW, LS and LW, has 20 samples. Plots (A), (B), (C) and (F) are the distribution before chi2 algorithm. In (B), WKY and SHR are plotted without considering the anesthesia factor, and each distribution is deduced from 40 samples. In contrast, plots (D), (E), (G), and (H) are the distribution after chi2 algorithm.

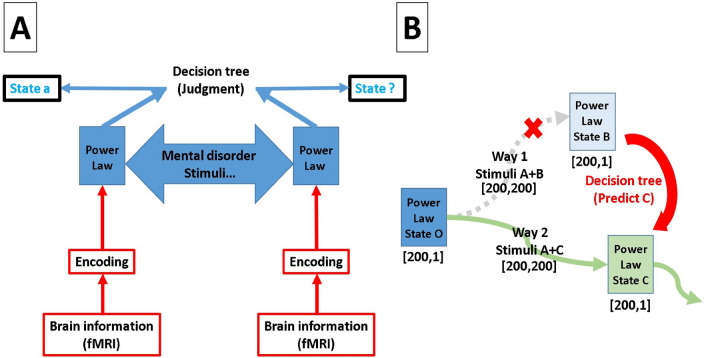

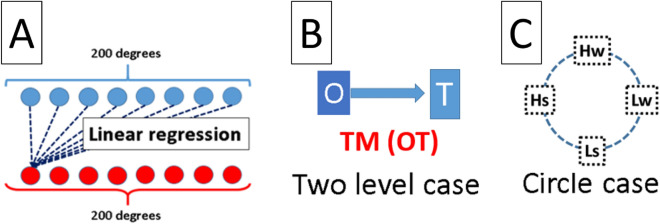

After the chi2 algorithm has selected the features, the C4.5 decision tree produces a tree structure. C4.5 is one of several algorithms for building trees; others, such as ID3 and ID5, are popular too. Figure 1D, E, G and H show the distributions after the chi2 algorithm, in which the differences between groups are significant. To test the ROC of the tree, we use tenfold cross-validation to avoid over-fitting. Our purpose is to demonstrate whether C4.5 decision trees offer a better way to observe and detect the power law. Decision trees are a very popular classification tool in data mining and are widely used in deep learning and machine learning8,9, industrial applications10,11, medical treatments12–14, and bioinformatics15–17. To familiarize readers with how decision trees can be of use in practical problems, imagine that we want to know who died among the passengers who boarded the Titanic. First, we take the passenger list with attributes such as gender, age or class of travel. Second, we use this list to build decision trees. Finally, the decision trees tell us which conditions affected the fate of each passenger. In this paper the power law plays the role of the list, and the different degrees are like the attributes. In the Results section, we establish the relation between C4.5 decision trees and the power-law encoding of fMRI, which act like a detector and a bar code, respectively. This relation is the rule for reading out the condition of a sample, see Fig. 2A. In addition, it can help us follow a psychological process during which the encoding changes dynamically, see Fig. 2B.

In this project, we use MATLAB to process the raw fMRI data. A mixed GPU and CPU program increases the execution efficiency: the former transforms the 4D data (3D voxel space and time) into a 2D matrix (voxel position and time), while the latter handles the calculation of correlation. As for the decision trees, we save the MATLAB matrix in csv format, import the data into Excel, and export them to Java to build the decision trees and calculate the outcome of tenfold cross-validation.

We construct the transformation matrix (TM) by using linear regression to find the relation between two mental disorder states. For example, to obtain the TM from LS to LW, we treat LW as the target state and as the dependent variable, defined by averaging the power-law distributions of LW. Our data set consists of 15 power-law distributions from LS, defined as the original state. The weights of 200 linear regression equations are then used to construct the TM, as shown in Fig. 4A. The process of encoding and building decision trees is described in the “Materials and methods” section.

Figure 2.

Plot (A) is a flow chart that includes encoding fMRI to power law and using decision tree to classify states. Plot (B) shows the transition between two states. If a situation has several paths, decision tree can help us detect which is the right way during evolution.

Figure 4.

Plot (A) shows the TM constructed from linear regression between degrees. Plots (B) and (C) illustrate the simulated two-level and four-level transitions, respectively.

Results

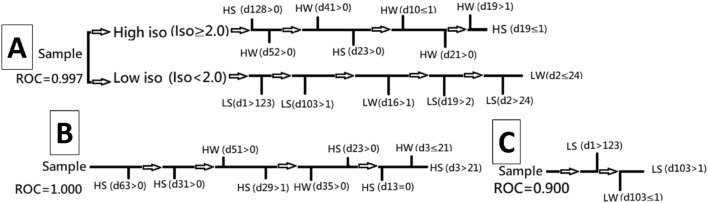

All the branches of the decision tree are important features selected by the chi2 algorithm, see Fig. 3, while Fig. 1D–H show the distributions for degree after the chi2 algorithm. Compared with Fig. 1A–C, Fig. 1D–H widen the separation between the distributions for degree. We then input the important features into C4.5 with tenfold cross-validation and select the trees whose ROC approaches 0.9. Figure 3A explains how the four states are distinguished. Initially, the data are categorized into two groups based on the dosage of isoflurane (iso). Figure 3B and C show the results for the high-iso and low-iso groups, respectively. Our data are processed by tenfold cross-validation to achieve good results. This confirms that the power law is highly sensitive in representing the rat problem.

Figure 3.

Plot (A) shows the decision tree of HS, HW, LS, and LW states. In contrast, plot (B) is of HS and HW states, and plot (C) of LS and LW states.

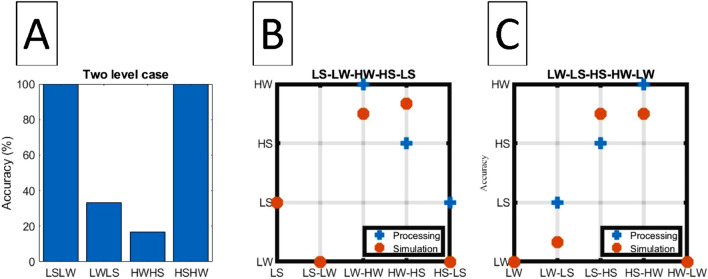

The schematic plot in Fig. 4B describes the relation between the original and target states, which we regard as a two-level case. After the transformation via the TM and the judgment by the decision tree, the accuracy is shown in Fig. 5A. Figure 4C describes how four TMs are applied for each original state in order to obtain the results in Fig. 5B and C.

Figure 5.

Plot (A) shows the accuracy in the case of the two-level transition for 5 testing samples. Compared with LS→LW and HS→HW, which show a complete transition after detection by the decision tree, the accuracy for LW→LS and HW→HS is lower. Several issues must still be resolved to obtain the TM. Plots (B) and (C) show the tests of the circle case in Fig. 4C.

Discussion

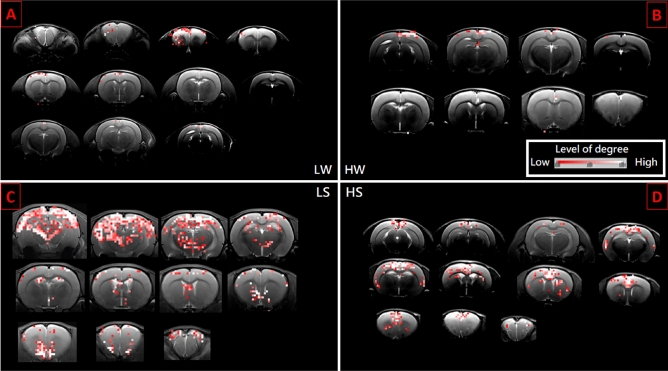

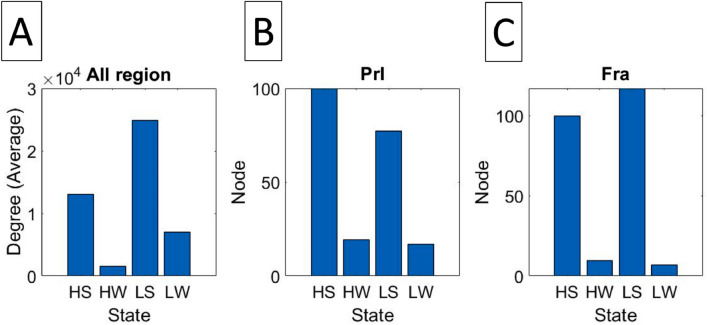

Figure 6 shows that all states have different distributions for degree. Only the most representative result among the 20 rat samples is shown for each state. In general, SHR/low-ISO has more degrees and covers more brain regions than WKY/high-ISO. The rank-1 region in HS is the primary motor cortex, while in HW, LS, and LW it is the secondary motor cortex. Meanwhile, the rank-2 region in HS is the retrosplenial agranular cortex, while in HW, LS, and LW it is the primary motor cortex. Whenever the brain develops a disorder, it exhibits a different functional network that consequently gives rise to a new exponent for the power law in Fig. 1. This is similar to the finding of Ref.2 that the exponent may vary as the trial subject engages in different activities. We find that the most active brain regions are the same. However, if we focus on the SHR samples alone, we discover that the secondary motor cortex18 falls to rank four. This is the reason why the LS rat is more active than the HS rat. Further details await future biological experiments. The prefrontal cortex of ADHD patients has been reported to show abnormalities19,20. In our case, we can check two important regions in the prefrontal cortex to distinguish different states. These regions, Prl21–24 and Fra25, are related to self-control and ADHD. The prefrontal cortex of the SHR rat has been studied26–28. Figure 7A shows the summation of degree over the whole brain region with 6 samples in 4 states, while Fig. 7B and C show the average number of nodes in Prl and Fra. Note that the stimulus interaction for LS being lower than for LW in Fra is contrary to our expectation. Future biological experiments are needed to clarify the source of this problem.

Figure 6.

Panels (A) to (D) illustrate the activated region in LW, HW, LS, and HS states, respectively. The grade of brightness signifies different degrees of connectivity in the cross section. We only show the brain section with points.

Figure 7.

Plot (A) shows the total count of degree for the whole brain. Each state consists of 20 samples. (B) and (C) show Prl and Fra regions where the difference between SHR and WKY is significant. SHR has more nodes than WKY. Each state has 6 samples.

One common method in resting-state fMRI (RS-fMRI) is seed-based correlation analysis (SCA). SHR and WKY have been studied7. In general, SCA requires a region of interest (ROI) to be chosen as an a priori assumption, and needs to average over seed regions before calculating connectivity. In contrast, we forgo this step so that no subjective or abnormal seed affects the outcome. Instead, we upgrade and simplify the graph theory by numerical representations. Whether this approach is applicable to all states of mental disorder, and what restrictions it has, are important questions to answer in the future. In this work we have established that the power-law distribution carries enough information to deal with the trial subjects in this case. To locate the characteristics of any mental state, one only needs to use the chi2 algorithm to pick out the important degrees from the power-law distribution.

In the past, researchers found that brains have two large imposing systems in the resting state. One is the default mode network (DMN), while the other is composed of attentional or task-based systems29. This motivates us to check whether a double power law describes the distribution for degree better than the usual single power law. In other words, can it be that each of these two systems contributes independently and gives rise to two different exponents? The Akaike information criterion (AIC)30 is a statistical method for selecting the best fitting function among multiple candidates; the candidate with the lower AIC is preferred. Basically, it balances the principles of accuracy (i.e., minimum loss of information) and frugality. Here we report the AIC for the four rat states and for the human resting state. The AIC values calculated for the single/double power law are, respectively, (1) 729564/722132 for healthy humans in the resting state, (2) 4016.756/4020.304 in HS, (3) 1036.135/1025.771 in HW, (4) 2265.964/2266.234 in LW, and (5) 5904.362/5908.362 in LS. Based on this information, we conclude that (1) the double power law fits better for healthy humans in the resting state; (2) the single power law wins out by a small margin for low-iso rats, a result that should be treated with caution because LW and LS rats find it hard to remain still during fMRI scanning; (3) high-iso WKY rats also favor the double power law; and (4) the single power law is a better fit for SHR rats. Recent studies found that children with ADHD, for which SHR serves as an animal model, usually exhibit an abnormal DMN31. It has also been reported that mental disorders such as Alzheimer's disease32,33, depression34, schizophrenia35, and ASD36 can render the DMN abnormal. It remains a pressing task to clarify whether the transition from a double to a single power law correlates with an abnormal DMN. In summary, the power-law distribution not only reflects the mental condition of our samples, but also reveals detailed information about their network properties.
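The model comparison above can be retraced with a few lines of Python. This is a minimal sketch: the AIC values are copied from the text, while the helper names `aic` and `preferred_model` are hypothetical and the general formula AIC = 2k − 2 ln L is the standard definition rather than a detail taken from the fitting procedure used here.

```python
# AIC values (single power law, double power law) as reported in the text.
reported_aic = {
    "human resting state": (729564.0, 722132.0),
    "HS": (4016.756, 4020.304),
    "HW": (1036.135, 1025.771),
    "LW": (2265.964, 2266.234),
    "LS": (5904.362, 5908.362),
}

def aic(log_likelihood, n_params):
    """Standard Akaike information criterion: AIC = 2k - 2 ln(L)."""
    return 2 * n_params - 2 * log_likelihood

def preferred_model(single, double):
    """Return the model with the lower AIC and the size of the margin."""
    name = "single" if single < double else "double"
    return name, abs(single - double)

preferences = {state: preferred_model(*values) for state, values in reported_aic.items()}
for state, (name, margin) in preferences.items():
    print(f"{state}: {name} power law preferred (delta AIC = {margin:.3f})")
```

The margins make the caveat about the low-iso rats concrete: for LW the two candidates differ by well under one AIC unit.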

It is desirable to have more samples to make full use of the decision tree in selecting the power law from MRI data of mentally disordered rats. Although our results have demonstrated that the power-law distribution can be analyzed by the decision tree to classify the dosage of ISO and SHR vs. WKY, more applications, e.g., to depression, hypertension, or transient ischemic attack, are needed to establish its versatility. We have two ideas to improve the current understanding of the dynamics of the brain. The first is to establish a relationship between the observer (i.e., the decision tree) and the objects being observed (i.e., the power laws from different states). Once this relationship is available, it may serve as a starting point for revealing possible connections among the different observed objects.

Although the TM has enjoyed success in some transitions, several problems remain to be solved: (1) different groups of samples may differ in ISO, age and so on; (2) we have yet to determine whether the TM is a linear or nonlinear system; and (3) the relation between the TM and the stimuli is also an urgent question.

Materials and methods

Process

Step 1: Use the Pearson correlation to convert the fMRI data (spatial and temporal dimensions) into a power-law distribution. Step 2: Use the chi2 algorithm to select the important features from the degrees and build the tree structure under tenfold cross-validation. Afterwards, output the tree with the best ROC.

Animals: rat

All animal experiments were approved by the National Tsing Hua University Institutional Animal Care and Use Committee and complied with the experimental guidelines (https://drive.google.com/file/d/17cHSrbvBxaqpvEb_b-huZahQ7Ti3aqjL/view?usp=sharing).

Human subjects

We used resting-state fMRI images collected from six normal human subjects to test the single and double power laws. The datasets were downloaded from the ADHD-200 Consortium (http://fcon_1000.projects.nitrc.org/indi/adhd200). All MRI scans in the datasets were performed at the New York University Child Study Center37. The scan protocol was approved by the institutional review boards of the New York University School of Medicine and New York University. Informed consent was obtained from all subjects. The protocols were performed in accordance with HIPAA guidelines and the 1000 Functional Connectomes Project protocols. Our retrospective study using the ADHD-200 database was approved by the institutional review boards of National Taiwan University Hospital. The ADHD-200 Consortium provided de-identified datasets from which the protected health information had been removed.

Magnetic resonance imaging

Our raw data come from the same source as Ref.29; the copyright of this section belongs to the author who wrote “Magnetic resonance imaging” in Ref.15. We scanned all animals with a 7-Tesla Bruker Clinscan, which had a volume coil for signal excitation and a brain surface coil for signal reception. Anesthesia was maintained with 1.4–1.5% isoflurane mixed with O2 at a flow rate of 1 L/min. We monitored all rats to make sure that the respiratory rate stayed in the range of 65–75 breaths/min during the scanning period and that the body temperature was maintained at by a temperature-controlled water circulation machine. During the rs-fMRI experiments, we used gradient-echo echo-planar imaging (EPI) to acquire 300 consecutive volumes with 11 coronal slices. The EPI specification is TE/TR ms/1000 ms, matrix size is , FOV and slice thickness = 1 mm. We acquired the anatomical images by turbo spin echo (TSE) with scanning parameters of TE/TR, matrix size = , FOV, slice thickness mm, number of averages = 2. To inspect the effect of deep anesthesia, we applied 2.5–2.7% isoflurane mixed with O2 and monitored the respiratory rate in the range of 40–45 breaths/min during the whole scanning period.

Data processing for distribution for degree

Here we analyze the raw data from the fMRI grayscale images. We transform them into a matrix of scalar values with MATLAB (versions 2015a and 2018). This matrix is four-dimensional: the first three components denote the spatial position, while the last refers to the time point in the scan. Four dimensions make the matrix hard to manipulate and cost a lot of computer time, so we use GPU computing to reshape it into a two-dimensional form (voxel position and time). After using Eq. (1) to calculate the degree of every voxel in the whole brain, we obtain the distribution for degree of any sample. We process all 80 rats (20 samples per state) in order to obtain the form of the distribution for degree.
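The encoding step can be illustrated with a short Python sketch. It is not the MATLAB/GPU program used in this work: the function names are hypothetical, the data are a tiny synthetic volume, and the pairwise loop is only practical for a handful of voxels. It flattens a 4D volume into voxel time series, links two voxels when their Pearson correlation exceeds 0.7, and counts how many voxels have each degree.

```python
from collections import Counter
from itertools import combinations
from math import sqrt

def pearson(a, b):
    """Pearson correlation of two equally long time series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # constant series: treated as uncorrelated (assumption)
    return cov / sqrt(var_a * var_b)

def flatten_4d(volume):
    """Reshape volume[x][y][z][t] into a 2D list: one time series per voxel."""
    return [series for plane in volume for row in plane for series in row]

def degree_distribution(series, threshold=0.7):
    """Return each voxel's degree and the count of voxels per degree."""
    degree = [0] * len(series)
    for i, j in combinations(range(len(series)), 2):
        if pearson(series[i], series[j]) > threshold:
            degree[i] += 1
            degree[j] += 1
    return degree, Counter(degree)

# Tiny synthetic 2 x 1 x 2 volume with 5 time points (not real fMRI data).
volume = [
    [[[1, 2, 3, 4, 5], [2, 4, 6, 8, 10]]],
    [[[1, 2, 3, 4, 6], [5, 1, 4, 2, 3]]],
]
degree, distribution = degree_distribution(flatten_4d(volume))
print(degree, dict(distribution))
```

In this toy case the first three voxels are mutually linked and the fourth is isolated. For whole-brain data the same counts would normally be obtained from a vectorized correlation matrix rather than an explicit loop.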

Chi2 algorithm

The feature selection in this study stems from the chi2 algorithm38, which is designed to discretize numeric attributes based on the χ² statistic and consists of two phases. Phase 1 begins with a high significance level (sigLevel) and is, as a matter of fact, a generalized version of Kerber's ChiMerge. Phase 2 is a finer process of Phase 1. Starting with sigLevel0 determined in Phase 1, each attribute i is associated with a sigLevel[i], and the attributes take turns for merging. Consistency checking is conducted after each attribute's merging. At the end of Phase 2, if an attribute is merged to only one value, it simply means that this attribute is not relevant in representing the original data set. As a result, when discretization ends, feature selection is accomplished.
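The merging decisions in both phases rest on the χ² statistic of adjacent intervals. The sketch below computes only that statistic for a small contingency table; it is not the full two-phase procedure, the function name and the counts are hypothetical, and skipping cells with zero expected count is a simplification chosen here.

```python
def chi2_statistic(table):
    """Pearson chi-squared statistic of a contingency table.

    table[i][j] is the observed count of class j in interval i.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            if expected > 0:  # skip cells whose expected count is zero
                chi2 += (observed - expected) ** 2 / expected
    return chi2

# Hypothetical counts: two adjacent intervals of one degree, two classes.
similar = chi2_statistic([[10, 10], [9, 11]])     # nearly identical class mix
different = chi2_statistic([[10, 20], [20, 10]])  # clearly different class mix
print(similar, different)
```

A low value, as for `similar`, suggests that two intervals carry the same class information and are candidates for merging; a high value, as for `different`, suggests keeping them apart.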

Data processing for C4.5 decision tree

In this study, we propose a combination of algorithms to improve the effectiveness of identifying SHR and WKY. The classifier combines the chi2 algorithm with the C4.5 decision tree (C4.5): the chi2 algorithm evaluates the worth of a subset of attributes, and C4.5 predicts the mental disorder. The χ² statistic is commonly used for testing relationships between categorical variables. It is calculated as

$$\chi^2 = \sum_i \frac{(O_i - E_i)^2}{E_i},$$

where $O_i$ is the observed frequency (the observed counts in the cells) and $E_i$ is the expected frequency if no relationship existed between the variables.

The decision tree algorithm is well known for its robustness and learning efficiency39. C4.5 has been listed among the top 10 algorithms in data mining40. It is a popular statistical classifier developed by Ross Quinlan in 1993. Basically, C4.5 is an extension of Quinlan’s earlier ID3 algorithm. In C4.5 the Information Gain split criterion is replaced by an Information Gain Ratio criterion, which penalizes variables with many states. C4.5 can be used to generate a decision tree for classification. The learning algorithm applies a divide-and-conquer strategy41 to construct the tree. The sets of instances are accompanied by a set of attributes. This classifier has additional features, such as handling missing values, categorizing continuous attributes, pruning decision trees, deriving rules, and so on. The information gain Gain(S, A) of a feature A relative to a collection of examples S is defined as

$$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v),$$

where Values(A) is the set of all possible values for attribute A, and $S_v$ is the subset of S for which feature A has value v (i.e., $S_v = \{s \in S \mid A(s) = v\}$). Note that the first term in the equation for Gain is just the entropy of the original collection S, and the second term is the expected value of the entropy after S is partitioned using feature A. The expected entropy described by the second term is the sum of the entropies of the subsets $S_v$, each weighted by the fraction of samples that belong to $S_v$. Gain(S, A) is therefore the expected reduction in entropy caused by knowing the value of feature A. The entropy is given by

$$\mathrm{Entropy}(S) = -\sum_i p_i \log_2 p_i,$$

where $p_i$ is the proportion of S belonging to class i.
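The split criteria described above can be written out in a few lines of Python. This is a minimal sketch on hypothetical toy data (a discretized degree and a strain label), not the Java implementation used in this work; the gain ratio follows the usual C4.5 definition of information gain divided by the split information.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def partition(values, labels):
    """Group the labels by the value the attribute takes (the subsets S_v)."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    return groups

def information_gain(values, labels):
    """Entropy(S) minus the weighted entropy of the subsets S_v."""
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in partition(values, labels).values())
    return entropy(labels) - remainder

def gain_ratio(values, labels):
    """Information gain divided by the split information."""
    split_info = entropy(values)  # entropy of the attribute's own value distribution
    return information_gain(values, labels) / split_info if split_info > 0 else 0.0

# Hypothetical toy data: a discretized degree and the strain of each sample.
degree_bin = ["low", "low", "high", "high", "high", "low"]
strain = ["WKY", "WKY", "SHR", "SHR", "WKY", "WKY"]
gain = information_gain(degree_bin, strain)
ratio = gain_ratio(degree_bin, strain)
print(gain, ratio)
```

Because the toy attribute splits the samples into two equally sized groups, its split information is exactly one bit and the gain ratio equals the information gain; an attribute with many small groups would be penalized.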

Data processing for testing single and double power law

We choose the same method and procedures described in Ref.41. Details can be found in Sec.IV42.

Data processing for calculating L

After calculating the degree of every voxel, we define the path length (L) between two voxels as the minimum number of links necessary to connect them. We collect L from the data of six rats for each state. Afterward, MATLAB 2018a is employed to find the maximum path length and the average L.
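Since every link has the same weight, the minimum number of links between two voxels can be found by breadth-first search. The following sketch uses a hypothetical five-voxel network and hypothetical function names; it averages only over pairs that are actually connected, which is an assumption made here rather than a detail stated above.

```python
from collections import deque

def shortest_path_lengths(adjacency, source):
    """Breadth-first search: minimum number of links from source to each reachable voxel."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist

def path_length_summary(adjacency):
    """Average and maximum L over all connected pairs of distinct voxels."""
    lengths = []
    for source in adjacency:
        dist = shortest_path_lengths(adjacency, source)
        # Count each unordered pair once.
        lengths.extend(d for target, d in dist.items() if target > source)
    return sum(lengths) / len(lengths), max(lengths)

# Hypothetical network of five voxels: a chain 0-1-2-3 plus a link 1-4.
adjacency = {0: [1], 1: [0, 2, 4], 2: [1, 3], 3: [2], 4: [1]}
average_L, max_L = path_length_summary(adjacency)
print(average_L, max_L)
```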

Data processing for C and structure network patterns

First, we print out all the connection information between pairs of voxels for each sample in txt format. Then, we load the data into MATLAB 2018a to obtain the functional brain network pattern. To calculate the clustering coefficient of any linked voxel, one needs its degree and the number of links connecting its neighbors. Finally, the average C can be determined from

$$C = \frac{1}{N}\sum_{i=1}^{N} C_i,$$

where N is the number of voxels, i is the voxel number, and $C_i$ is the clustering coefficient of voxel i.
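As an illustration of the quantities named above, the sketch below computes the clustering coefficient of each voxel from its degree and the number of links among its neighbors, and then averages over all voxels. It assumes the common definition C_i = 2e_i / (k_i(k_i − 1)) and assigns C_i = 0 to voxels with fewer than two links; both choices, like the function names and the toy network, are assumptions made here.

```python
def clustering_coefficient(adjacency, node):
    """C_i = 2 * e_i / (k_i * (k_i - 1)), with e_i the links among the k_i neighbors."""
    neighbors = adjacency[node]
    k = len(neighbors)
    if k < 2:
        return 0.0  # convention assumed here for voxels with fewer than two links
    links = sum(1 for a in neighbors for b in neighbors if a < b and b in adjacency[a])
    return 2.0 * links / (k * (k - 1))

def average_clustering(adjacency):
    """Average of C_i over all N voxels in the network."""
    return sum(clustering_coefficient(adjacency, n) for n in adjacency) / len(adjacency)

# Hypothetical network: a triangle 0-1-2 with a pendant voxel 3 attached to 2.
adjacency = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
average_C = average_clustering(adjacency)
print(average_C)
```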

Acknowledgements

We acknowledge funding from the Ministry of Science and Technology in Taiwan under Grant No. 105-2112-M-007-008-MY3 and 108-2112-M007-011-MY3. We also thank Prof. Chung-Chuan Lo for helpful advice, Prof. Che-Rung Lee and Quey-Liang Kao for access to their GPU workstation, and Kun-I Chao and Li-Jie Chen for helping us process the fMRI images.

Author contributions

Hosting Plan: P.-J.T. and K.-H.C. Coding program: P.-J.T. Image processing: P.-J.T. Data processing: P.-J.T. Section about Magnetic resonance imaging: author of Ref.29. Statistical Analysis: K.-H.C. (for chi2 algorithm, C4.5 decision tree, t-test, and ROC), P.-J.T. (for AIC and power law). Academic guidance: F.-N.W., T.-M.H. and T.-Y.H. Writing: P.-J.T. and T.-M.H. (Abstract, Introduction, Result, Discussion), P.-J.T., K.-H.C., and author of Ref.29 (“Materials and methods”).

Competing interests

The authors declare no competing interests.

Footnotes

These authors contributed equally: Ping-Rui Tsai and Kun-Huang Chen.

Contributor Information

Ping-Rui Tsai, Email: ron104022509@gmail.com.

Tzay-Ming Hong, Email: ming@phys.nthu.edu.tw.

References

- 1. Marshall M. The hidden links between mental disorders. Nature. 2020;581:19–21. doi: 10.1038/d41586-020-00922-8.

- 2. Eguíluz VM, et al. Scale-free brain functional networks. Phys. Rev. Lett. 2005;94:018102. doi: 10.1103/PhysRevLett.94.018102.

- 3. Maslov, S., Sneppen, K. & Alon, U. In Handbook of Graphs and Networks (eds Bornholdt, S. & Schuster, H. G.) (Wiley, Weinheim, New York, 2003).

- 4. Gabaix X. Zipf’s law for cities: an explanation. Q. J. Econ. 1999;114:739–767. doi: 10.1162/003355399556133.

- 5. Albert R, et al. Internet: diameter of the world-wide web. Nature. 1999;401:130–131. doi: 10.1038/43601.

- 6. Gabaix X, et al. A theory of power-law distributions in financial market fluctuations. Nature. 2003;423:267–270. doi: 10.1038/nature01624.

- 7. Huang S-M, et al. Inter-strain differences in default mode network: a resting state fMRI study on spontaneously hypertensive rat and Wistar Kyoto Rat. Sci. Rep. 2016;6:21697. doi: 10.1038/srep21697.

- 8. Zen, H. et al. Statistical parametric speech synthesis using deep neural networks. In IEEE International Conference on Acoustics, Speech and Signal Processing 7962–7966 (2013).

- 9. Fayyad UM, et al. On the handling of continuous-valued attributes in decision tree generation. Mach. Learn. 1992;8:87–102.

- 10. Sheng Y, et al. Decision tree-based methodology for high impedance fault detection. IEEE Trans. Power Deliv. 2004;19:533–536. doi: 10.1109/TPWRD.2003.820418.

- 11. Kumar R, et al. Recognition of power-quality disturbances using S-transform-based ANN classifier and rule-based decision tree. IEEE Trans. Ind. Appl. 2015;51:1249–1258. doi: 10.1109/TIA.2014.2356639.

- 12. Shouman, M. et al. Using decision tree for diagnosing heart disease patients. In Proceedings of the 9th Australasian Data Mining Conference (AusDM’11), Vol. 121, 23–29 (2011).

- 13. Pavlopoulos SA, et al. A decision tree-based method for the differential diagnosis of aortic stenosis from mitral regurgitation using heart sounds. Biomed. Eng. Online. 2004;3:21. doi: 10.1186/1475-925X-3-21.

- 14. Qu Y, et al. Boosted decision tree analysis of surface-enhanced laser desorption/ionization mass spectral serum profiles discriminates prostate cancer from noncancer patients. Clin. Chem. 2002;48:10. doi: 10.1093/clinchem/48.10.1835.

- 15. Chen, K.-H. et al. Gene selection for cancer identification: a decision tree model empowered by particle swarm optimization algorithm. BMC Bioinform. 15, 15–49 (2014).

- 16. Dima S, et al. Decision tree approach to the impact of parents’ oral health on dental caries experience in children: a cross-sectional study. Int. J. Environ. Res. Public Health. 2018;15:692. doi: 10.3390/ijerph15040692.

- 17. Chen K-H, et al. Applying particle swarm optimization-based decision tree classifier for cancer classification on gene expression data. Appl. Soft Comput. 2014;24:773–780. doi: 10.1016/j.asoc.2014.08.032.

- 18. Sul JH, et al. Role of rodent secondary motor cortex in value-based action selection. Nat. Neurosci. 2011;14:1202–1208. doi: 10.1038/nn.2881.

- 19. Molupe M, et al. NMDA receptor function in the prefrontal cortex of a rat model for attention-deficit hyperactivity disorder. Metab. Br. Dis. 2004;19:35–42. doi: 10.1023/B:MEBR.0000027415.75432.ad.

- 20. Khorevin VI, et al. Effect of L-DopA on the behavioral activity of wistar and spontaneously hypertensive (SHR) rats in the open-field test. Neurophysiology. 2004;36:116–125. doi: 10.1023/B:NEPH.0000042563.19489.5f.

- 21. dela Peña IJI, et al. Transcriptional profiling of SHR/NCrl prefrontal cortex shows hyperactivity-associated genes responsive to amphetamine challenge. Genes Br. Behav. 2017;16:664–674. doi: 10.1111/gbb.12388.

- 22. Bymaster FP, et al. Atomoxetine increases extracellular levels of norepinephrine and dopamine in prefrontal cortex of rat: a potential mechanism for efficacy in attention deficit/hyperactivity disorder. Neuropsychopharmacology. 2002;27:699–711. doi: 10.1016/S0893-133X(02)00346-9.

- 23. Russell VA, et al. Hypodopaminergic and hypernoradrenergic activity in prefrontal cortex slices of an animal model for attention-deficit hyperactivity disorder–the spontaneously hypertensive rat. Behav. Br. Res. 2002;130:191–196. doi: 10.1016/S0166-4328(01)00425-9.

- 24. Duan CA, et al. Requirement of prefrontal and midbrain regions for rapid executive control of behavior in the rat. Neuron. 2015;86:1491–1503. doi: 10.1016/j.neuron.2015.05.042.

- 25. Hambrecht VS, et al. G proteins in rat prefrontal cortex (PFC) are differentially activated as a function of oxygen status and PFC region. J. Chem. Neuroanatomy. 2009;37:112–117. doi: 10.1016/j.jchemneu.2008.12.002.

- 26. Russell V, et al. Altered dopaminergic function in the prefrontal cortex, nucleus accumbens and caudate-putamen of an animal model of attention-deficit hyperactivity disorder–the spontaneously hypertensive rat. Br. Res. 1995;676:235–424. doi: 10.1016/0006-8993(95)00095-8.

- 27. Russell V, et al. Increased noradrenergic activity in prefrontal cortex slices of an animal model for attention-deficit hyperactivity disorder–the spontaneously hypertensive rat. Behav. Br. Res. 2000;117:69–74. doi: 10.1016/S0166-4328(00)00291-6.

- 28. Russell VA. Increased AMPA receptor function in slices containing the prefrontal cortex of spontaneously hypertensive rats. Metab. Br. Dis. 2001;16:143–149. doi: 10.1023/A:1012584826144.

- 29. Bullmore E, Sporns O. Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 2009;10:186–198. doi: 10.1038/nrn2575.

- 30. Akaike, H. In Proceedings of the Second International Symposium on Information Theory (ed. Petrov, B. N. and Csaki, F.) (Akademiai Kiado, Budapest, 1973), pp. 267–281; IEEE Trans. Autom. Control 19, 716 (1974); A Celebration of Statistics (ed. Atkinson, A. C. and Fienberg, S. E.) 1–24 (Springer, Berlin, 1985).

- 31. Liddle EB, et al. Task-related default mode network modulation and inhibitory control in ADHD: effects of motivation and methylphenidate. J. Child Psychol. Psychiatry. 2011;52:761–771. doi: 10.1111/j.1469-7610.2010.02333.x.

- 32. Mevel, Katell et al. The default mode network in healthy aging and Alzheimer’s disease. Int. J. Alzheimer’s Dis. 535816 (2011).

- 33. Jones DT, et al. Age-related changes in the default mode network are more advanced in Alzheimer disease. Neurology. 2011;77:1524–31. doi: 10.1212/WNL.0b013e318233b33d.

- 34. Sheline YI, et al. The default mode network and self-referential processes in depression. PNAS. 2009;106:1942–1947. doi: 10.1073/pnas.0812686106.

- 35. Salgado-Pineda P, et al. Correlated structural and functional brain abnormalities in the default mode network in schizophrenia patients. Schizophr. Res. 2011;125:101–109. doi: 10.1016/j.schres.2010.10.027.

- 36. Starck T, et al. Resting state fMRI reveals a default mode dissociation between retrosplenial and medial prefrontal subnetworks in ASD despite motion scrubbing. Front. Hum. Neurosci. 2013;7:802. doi: 10.3389/fnhum.2013.00802.

- 37. The ADHD-200 Consortium. Front. Syst. Neurosci. 2012;6:62.

- 38. Liu, H. & Setiono, R. Chi2: feature selection and discretization of numeric attributes. In Proceedings of 7th IEEE International Conference on Tools with Artificial Intelligence, 388–391 (Herndon, VA, 1995).

- 39. Tan AC, Gilbert D. Ensemble machine learning on gene expression data for cancer classification. Appl. Bioinform. 2003;2:75–83.

- 40. Wu X, et al. Top 10 algorithms in data mining. Knowl. Inform. Syst. 2008;16:1–37. doi: 10.1007/s10115-007-0114-2.

- 41. Quinlan JR. C4.5: Programs for Machine Learning. Los Altos: Morgan Kaufmann; 1993.

- 42. Tsai S-T, et al. Power-law ansatz in complex systems: excessive loss of information. Phys. Rev. E. 2015;92:062925. doi: 10.1103/PhysRevE.92.062925.