Abstract

The COVID‐19 pandemic forced medical schools to rapidly transform their curricula using online learning approaches. At our institution, the preclinical Practice of Medicine (POM) course was transitioned to large‐group, synchronous, video‐conference sessions. The aim of this study is to assess whether there were differences in learner engagement, as evidenced by student question‐asking behaviors between in‐person and videoconferenced sessions in one preclinical medical student course. In Spring, 2020, large‐group didactic sessions in POM were converted to video‐conference sessions. During these sessions, student microphones were muted, and video capabilities were turned off. Students submitted typed questions via a Q&A box, which was monitored by a senior student teaching assistant. We compared student question asking behavior in recorded video‐conference course sessions from POM in Spring, 2020 to matched, recorded, in‐person sessions from the same course in Spring, 2019. We found that, on average, the instructors answered a greater number of student questions and spent a greater percentage of time on Q&A in the online sessions compared with the in‐person sessions. We also found that students asked a greater number of higher complexity questions in the online version of the course compared with the in‐person course. The video‐conference learning environment can promote higher student engagement when compared with the in‐person learning environment, as measured by student question‐asking behavior. Developing an understanding of the specific elements of the online learning environment that foster student engagement has important implications for instructional design in both the online and in‐person setting.

Keywords: COVID‐19, distance, education, learning, medical, undergraduate, videoconferencing

1. INTRODUCTION

In the Spring of 2020, the COVID‐19 pandemic forced medical schools around the world to quickly pivot from traditional, in‐person, curricular approaches to online education delivery for medical students. This type of online education delivery fits under the umbrella of “distance learning,” a model which is defined by four components – (a) it is institutionally based, (b) there is separation of teacher and student (usually geographically), (c) there is use of interactive telecommunications, and (d) there is sharing of resources (data, voice, and video). 1 , 2 Online learning, which is the use of digital platforms to connect and engage individuals across geographic boundaries, can be used to facilitate distance learning. 3 , 4 Videoconferencing is one form of synchronous online learning frequently employed in distance learning models and adopted by many medical schools in their curricular response to COVID‐19‐related restrictions, including at our institution. 5 , 6

In medical education, videoconferencing has historically been used to facilitate participation in learning activities when learners are at geographically distant sites. The few existing studies on videoconferencing in this context report similar learning outcomes and mixed student satisfaction when compared with in‐person instruction. 7 , 8 , 9 , 10 The literature on videoconferencing as a modality for distance learning also raises concerns about its potentially negative impact on learner engagement. 5 , 11 , 12

Consequently, in the Spring of 2020, when we transitioned our preclinical medical school curriculum from in‐person to videoconference, we were concerned about the effect this shift would have on learner engagement. However, as students participated in the online curriculum, we watched with great interest as they continued to ask questions and participate in the virtual classroom. We even wondered whether students were asking more questions and more complex questions than was noted in previous years. This was a particularly relevant observation, because student question‐asking is known to be an important marker of learner engagement. 13

In this article, we ask the question: were there differences in engagement as evidenced by student question‐asking behaviors between in‐person and videoconferenced sessions in a preclinical medical student course? We hypothesized that the quantity and quality of question‐asking would be greater in the online version of the curriculum. Little is known about the optimal way to structure videoconferences to maximize student engagement, so a finding that our students exhibited high levels of engagement in the online environment could have important implications for best practices in videoconference‐based curricula moving forward.

2. METHODS

2.1. Curricular change

Practice of Medicine (POM) is a preclinical course that spans the medical school preclinical curriculum and focuses on core skills training in medical interviewing, clinical reasoning, and the physical examination, with other threads addressing the foundations of medical practice, including information literacy, nutrition principles, quantitative medicine, psychiatry, biomedical ethics, health policy, and population health. POM is broken down into two separate three‐quarter sub‐courses, POM Year 1 and POM Year 2. The course's curriculum is delivered in a variety of settings including small group facilitated discussions and skills‐based sessions, simulation, bedside sessions, and large‐group didactics. In this study, we specifically examine the changes that took place in large‐group didactic sessions in POM Year 2.

In the traditional, in‐person version of the course, last taught in Spring 2019 (Academic Year 2018, AY18), the large‐group didactic sessions are conducted in a single classroom with students seated in small groups around tables. The class consists of approximately 80 students, and all students are present for the large‐group sessions. Each session is taught by one or several rotating instructors with the aid of a senior student teaching assistant (TA). These sessions are typically video recorded and uploaded to the course website for students to review after the session. The POM Year 2 spring quarter curriculum is focused on transitioning from the preclinical to the clerkship curriculum and large group didactic session topics include: pain management, ethics, palliative care, bedside rounding, how to promote a respectful learning environment, oral presentations on the wards, writing progress notes on clerkships, advanced communication skills and physical exam workshops, and evaluation and feedback during clerkships.

As part of the curricular changes necessitated by COVID‐19, the large‐group didactic sessions during the Spring of 2020 (Academic Year 2019, AY19) were converted from in‐person sessions to synchronous videoconference sessions. These sessions were delivered using the online conference platform Zoom™ (Zoom Video Communications Inc.). The course sessions’ instructors, objectives, and educational content remained constant from AY18 to AY19. In the videoconference version of the curriculum, the senior student TA served as a session moderator. Students’ audio and video were automatically turned off so that only the instructor(s) and moderator had use of audio and video during the session. This was done to allow recording of the sessions for future student use in accordance with our institution's student privacy policy. Students participated by submitting written questions through a Q&A box function or responding to instructor prompts using a chat box. Students were able to submit questions at any time during the session and could submit questions either anonymously or with their name attached. All students were able to view the Q&A box in real time and could anonymously up‐vote their classmates’ questions. The senior student TA monitored the Q&A box and posed student questions to the session instructors throughout the session. Questions were typically asked in batches at various stopping points during the session. Videoconference sessions were recorded and uploaded to the course website for students to review after each session. Attendance was mandatory and recorded for both the in‐person and online iterations of the curriculum. In AY18, 83 students were enrolled in the course and in AY19 83 students were enrolled in the course.

2.2. Study design overview

We set out to measure and compare student question‐asking behaviors in the in‐person versus the online version of the curriculum. To do this, we used a retrospective comparison cohort of students who were enrolled in the Spring quarter of POM Year 2 the previous year (AY18), when the course was conducted in‐person.

During the spring quarter of AY19, POM Year 2 met in an online format for 18 total sessions. Of these sessions, 12 were conducted as synchronous sessions with the whole class in attendance in the same webinar, and 10 of these sessions were recorded. The remaining eight sessions were either conducted asynchronously, in small groups, or were large group sessions that were not recorded. Of the 10 recorded, synchronous large‐group online sessions, four had a recorded counterpart from the AY18 iteration of the course. The remaining six recorded, synchronous, large‐group sessions were either new content in AY19, conducted in AY18 but not recorded, or altered significantly in either educational content or strategies such that the AY18 and AY19 versions of the session were not comparable. The topics of the four sessions included in this analysis were: Evaluation and Feedback on Clerkships, Oral Presentations for Hospital Rounds, How to Promote a Respectful Learning Environment, and Pain Management.

We conducted a retrospective review of student questions asked and answered in the video recordings of these eight sessions (N = 4 from AY18 and N = 4 from AY19). For each video‐recorded session, we tabulated the number of questions answered, time spent on Q&A, and, as a balancing measure, time spent on other interactive activities such as large or small group discussion. Of note, in the virtual version of the course, discussion was conducted via the chat box.

To capture student question complexity, we used a previously described framework of student question‐asking that classifies student questions as confirmation or transformation questions. 14 In their review on theories of student questions in the context of learning science, Chin and Osborne summarized this framework as follows:

“Confirmation questions seek to clarify information and detail, attempt to differentiate between fact and speculation, tackle issues of specificity, and ask for exemplification and/or definition. Transformation questions, on the other hand, involve some restructuring or reorganization of the students’ understanding. They tend to be hypothetic‐deductive, seek extension of knowledge, explore argumentative steps, identify omissions, examine structures of thinking, and challenge accepted reasoning.” 15

This framework recognizes that transformation questions might stimulate student engagement to a greater extent than confirmation questions. 15 Thus, this categorization proved a useful lens for understanding student questions as a marker of overall engagement.

2.3. Video review

Two members of the study team (JC and NA) reviewed the videos and tabulated the number of student questions answered in each session and time‐stamp data for each question and answer and for time spent on other interactive class activities. Timestamp data were used to calculate the percentage of class time spent on Q&A as well as other interactive activities. Because the video recordings from AY19 included only a screen capture of the instructor's video and screen, and not the Q&A box where students submitted their questions, we were only able to tally the number of questions answered, and not the total number of questions submitted or whether the question was asked anonymously or not. Time stamps were recorded starting with the first word of the student question and ended when the instructor completed their answer.
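The timestamp arithmetic described above can be illustrated with a short sketch. This is not the study team's code: the `Segment` record, the activity labels, the helper names, and all example values are hypothetical, and the sketch assumes each activity was logged as a start and end time read off the recording.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One coded block of class time (hypothetical record layout)."""
    kind: str   # e.g. "qa" or "interactive"
    start: str  # timestamp as "MM:SS" or "H:MM:SS"
    end: str


def to_seconds(stamp: str) -> int:
    """Convert "MM:SS" or "H:MM:SS" into a number of seconds."""
    seconds = 0
    for part in stamp.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def percent_time_by_kind(segments, session_length: str) -> dict:
    """Return the percentage of the session spent on each activity kind."""
    total = to_seconds(session_length)
    seconds_by_kind = {}
    for seg in segments:
        duration = to_seconds(seg.end) - to_seconds(seg.start)
        if duration < 0:
            raise ValueError(f"segment ends before it starts: {seg}")
        seconds_by_kind[seg.kind] = seconds_by_kind.get(seg.kind, 0) + duration
    return {kind: 100.0 * secs / total for kind, secs in seconds_by_kind.items()}


# Hypothetical session: two Q&A blocks and one group discussion in a
# 100-minute recording. These values are illustrative, not study data.
segments = [
    Segment("qa", "10:00", "14:30"),
    Segment("interactive", "20:00", "25:00"),
    Segment("qa", "1:20:00", "1:25:30"),
]
shares = percent_time_by_kind(segments, "1:40:00")
print(shares)
```

Time not covered by any logged segment is implicitly the noninteractive (didactic) remainder, which matches how the three activity categories partition the session in this study.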

Prior to reviewing the videos, the two reviewers met to determine consistent practices for transcribing time stamps from the recordings and to review the coding frame for confirmation and transformation questions. One reviewer (JC) watched all eight session recordings and the other reviewer (NA) watched four of the recordings to allow for measurement of interrater reliability.

2.4. Data analysis

Interrater reliability was calculated from the four video recordings reviewed by both JC and NA for each reviewer task, and is shown in Appendix A. For the tally of the number of questions, measurement of percentage of time spent on Q&A, percentage of time spent on other interactive activities, and categorization of confirmation questions, the intraclass correlation coefficients (ICC) ranged from 0.97 to 1.0, which indicates excellent interrater reliability. 16 For categorization of transformation questions, the ICC was 0.66, which indicates good interrater reliability. 16 We used descriptive statistics (proportion; mean and standard deviation (SD)) to compare session characteristics of the in‐person and online sessions. Given the small sample size, we did not conduct formal statistical tests for the comparison, but visually illustrated the magnitude of differences using bar charts. All analyses were performed using Microsoft Excel and Stata statistical software version 16.0 (College Station, TX).
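For readers unfamiliar with the ICC, the following is a minimal sketch of how such a coefficient can be computed from two coders' session‐level tallies. The article does not state which form of the ICC was used, so the two‐way random‐effects, absolute‐agreement, single‐rater form (often written ICC(2,1)) is an assumption made here for illustration. The function name and the example ratings are hypothetical, and the analysis reported above was run in Stata, not with this code.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows, one row per rated target (here, a class
    session), with one column per rater (here, a coder).
    """
    n = len(ratings)     # number of targets
    k = len(ratings[0])  # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares from the two-way ANOVA decomposition.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    # Mean squares for targets, raters, and residual error.
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical question counts from two coders across four sessions
# (illustrative values only, not the study's data).
example = [[3, 4], [8, 8], [12, 14], [20, 19]]
print(round(icc_2_1(example), 2))
```

The absolute‐agreement form penalizes a coder who systematically counts higher or lower than the other, which is the relevant property when the raw tallies themselves are the reported outcome.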

2.5. Ethical approval

The Stanford University institutional review board determined that the project met criteria for institutional review board exemption because the project was conducted in established educational settings.

3. RESULTS

The eight video recordings of large‐group, didactic class sessions from the Practice of Medicine Year 2 course spanned from April 2019 to April 2020. This included four sessions that took place in‐person (AY18, April 2019) and four sessions that took place online using videoconference technology due to the COVID‐19 pandemic (AY19, April 2020).

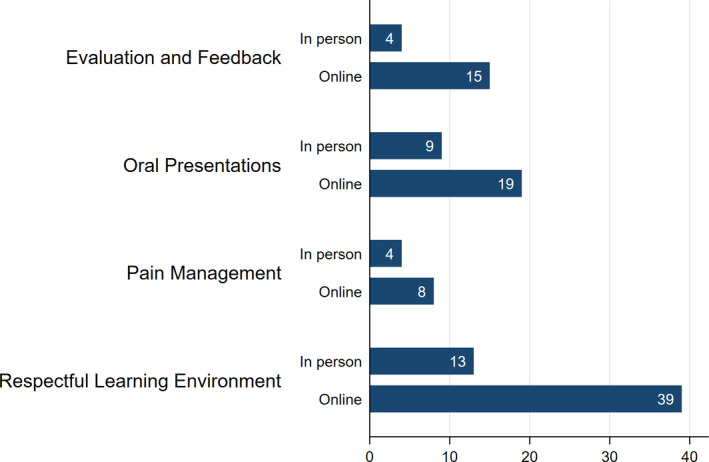

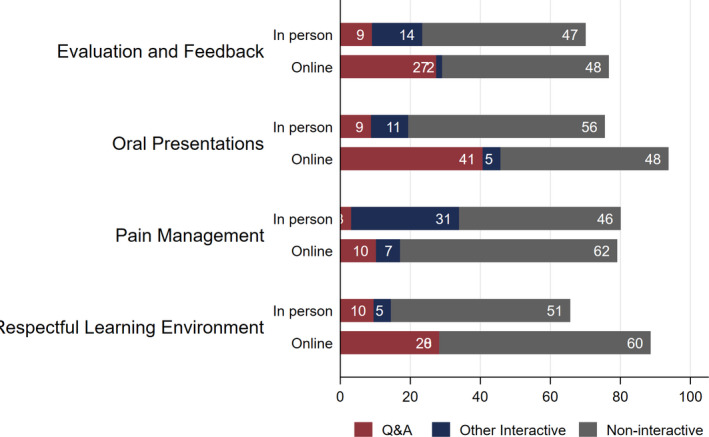

Overall, we found that, on average, the instructors answered a greater number of student questions and spent a greater percentage of time on Q&A in the online sessions compared with the in‐person sessions (Table 1). The mean number of questions answered and the mean time spent on Q&A per online session were 20.3 (SD 13.3) questions and 26.6 (SD 12.5) minutes, whereas for the in‐person sessions they were 7.5 (SD 4.4) questions and 7.6 (SD 3.0) minutes. This difference in question‐asking behaviors between online and in‐person learning held true for every dyad of sessions from AY18 and AY19 reviewed (Figure 1). While more time was spent on Q&A in the online version of the course compared with the in‐person version, comparatively less time was spent on other forms of interactive activities in the online versus in‐person course (Table 1; Figure 2). In terms of question classification, we found that students asked a greater number of transformation questions in the online version of the course compared with the in‐person course, with a mean of 5.0 (SD 3.7) transformation questions per session in the online course compared with a mean of 0.5 (SD 1.0) transformation questions per session in the in‐person course (Table 1; Figure 1).

Table 1.

Interactions during in‐person and online course sessions.

| | In‐Person (N = 4), Mean (SD) | Online (N = 4), Mean (SD) |
|---|---|---|
| Number of questions | 7.5 (4.4) | 20.3 (13.3) |
| Number of confirmation questions | 6.8 (4.5) | 15.3 (10.0) |
| Number of transformation questions | 0.5 (1.0) | 5.0 (3.7) |
| Time spent on Q&A (minutes) | 7.6 (3.0) | 26.6 (12.5) |
| Time spent on other interactive activities (minutes) | 15.2 (11.1) | 3.4 (3.1) |
| Time spent on noninteractive activities (minutes) | 72.8 (6.3) | 84.5 (8.0) |

FIGURE 1.

Bar graph showing total number of student questions answered by the instructor in the AY18, in‐person, class session compared to the AY19, videoconferenced, class session for four large‐group, didactic sessions in Practice of Medicine Year 2

FIGURE 2.

Bar graph showing the breakdown of class time activities in minutes. Activities were categorized as “Q&A”; “other interactive,” which comprised large group discussion, small group discussion, and individual reflection; and “non‐interactive,” which comprised instructor‐led didactics. The breakdown of class time activities is shown for each of the four sessions reviewed for the AY18, in‐person, class session compared to the AY19, videoconferenced, class session

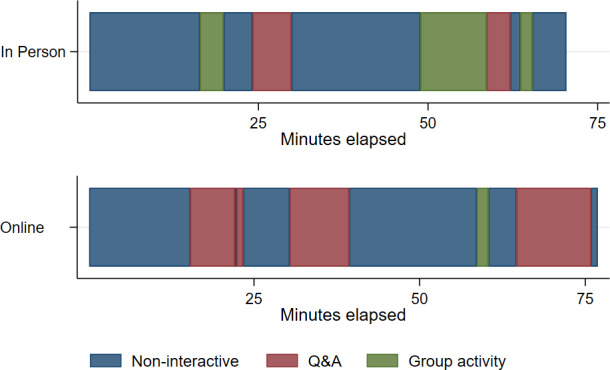

We also used the timestamp data to examine and compare the cadence of time dedicated to Q&A over the course of a given class session. An example from one of the course sessions reviewed is shown in Figure 3. Notably, in the online iteration of the session, there are more blocks of time dedicated to Q&A, and the session time dedicated to Q&A starts earlier in the session and ends later in the session compared with the in‐person version of the same session.

FIGURE 3.

Timeline of one class session displaying the duration and timing of various class time activities. The top timeline represents the AY18 in‐person session on the topic of evaluation and feedback in the clerkships and the bottom timeline represents the same session in videoconferenced format conducted in AY19. The figure shows that in the videoconferenced version of the class more time was spent on Q&A, there were more discrete blocks of time dedicated to Q&A, and Q&A started earlier in the session and ended later in the session compared with the in‐person session in AY18. On the contrary, in AY18 more time, and a greater number of discrete blocks of time, was spent on group activities

4. DISCUSSION

In the online, videoconference version of the large‐group course sessions of POM Year 2, students asked more questions, more of the questions they asked could be classified as “transformation” questions, and the instructor dedicated a greater percentage of time to question and answer compared with the prior year's in‐person versions of the same course sessions. Notably, in all of the videoconference sessions, students submitted more written questions via the Q&A box than the instructor was able to answer live. Since we were only able to capture answered questions, our counts underestimate the total number of questions asked in the online sessions, which further supports our findings.

The finding that students participating in the online version of the course asked more “transformation” questions is relevant to the question of student engagement. As Chin and Osborne wrote in their review on student questions as a resource for teaching and learning science, for student “questions to provoke thoughtful, intellectual engagement and guide their learning, students need to ask deeper questions than the common transactional, procedural, and basic information questions.” 15 “Transformation” questions embody this notion of “deeper” questions. Given that the volume of student questions as well as the asking of transformation‐type questions are both markers of learner engagement, our findings suggest a higher level of overall student engagement in the online version of the course compared to the in‐person course. 13 , 15

Notably, our results differ from the small number of prior studies looking at student engagement and question‐asking behavior in distance learning models. In their evaluation of student satisfaction with distance learning using videoconferencing in clerkship didactics for medical students at remote sites, Callas et al. found that students who participated in the videoconference curriculum felt significantly less capable of asking questions compared with their classmates in the in‐person curriculum. 7 The authors suggest that this piece of data around question‐asking is the main contributor to their overall conclusion that students were less satisfied with videoconference learning compared with in‐person learning. 7

Macleod and colleagues conducted an ethnographic study of videoconferenced distributed medical education (VDME), a form of videoconferenced distance learning, at a Canadian medical school. They found, “The VDME environment created a degree of removal, dulling or even obscuring the humanity of classmates by rendering them two‐dimensional. This dimming of colleagues’ humanity, in turn, allowed participants to passively watch lecturers or their fellow students on the screen, as a viewer might watch a television.” 11 This description of student passivity mediated by the videoconference environment runs counter to our finding that the videoconference setting promoted more active student engagement.

The theoretical framework of “sociomateriality” is a helpful lens through which we can understand our results in the context of these prior disparate findings. This theory describes a constant interplay of social and material factors in creating lived experience. 1 In the context of distance learning, sociomaterial theory suggests that the technological strategies and procedures employed affect students’ and teachers’ social and cognitive presence in the classroom, and thus play a large role in shaping the learning experience. 1 Simply put, a videoconference is not a mere extension of in‐person classroom learning because the use of this technology changes the context for learners and instructors. It follows that the specifics of how technology is employed are profoundly important to the ultimate learning experience. 11

The specific social and technological conditions of our institution's transition to distance learning using videoconference for large‐group didactic sessions differ in a few key ways from previously described distance learning, videoconference curricula. From a social perspective, prior to the abrupt shift to distance learning, the students enrolled in our course had been learning together in the in‐person environment, and so they had established social relationships grounded in prior in‐person interactions. Our use of a senior student teaching assistant as a moderator meant that students’ written questions were vocalized by a near‐peer, which added a layer of separation between the student and their question.

In terms of technology, we used videoconference settings that kept students’ videos off and microphones muted for our sessions. As a result, students in our course submitted their questions in writing via a Q&A box embedded in the videoconference platform. The experience of submitting a question in writing certainly differs from using a microphone and/or video. Furthermore, the accessibility of a Q&A box varies based on the online conference platform and user electronic device. Notably, the ability to submit written questions over the internet has been shown in prior studies to increase the overall number of student questions and number of students who ask a question. 13 Thus, this specific technical choice may have been important for our results.

Additionally, aspects of technical accessibility and interactivity are relevant. Students’ written questions could be submitted at any time, including while the instructor was speaking, did not require a raised hand or permission from the instructor to speak, and could be submitted anonymously. It is possible that quieter or more introverted students may have found the ability to ask questions unobtrusively appealing. Others may have appreciated the ability to ask questions in real time without interrupting the speaker. The fact that the Q&A box was visible on students’ screens along with the session video meant that students could process the formal content of the session along with their classmates’ questions simultaneously and could even interact with these written questions by “upvoting” them. Each of these specific features and technological choices may have played a role in mediating the learning experience and contributed to our findings of increased student question‐asking in the virtual version of the course.

4.1. Limitations

This study has several limitations. First, our findings are presented as descriptive statistics without statistical inference due to our small sample size of session recordings; however, even with the small number of course sessions reviewed for this study, we are able to see a very clear pattern emerge. Similarly, the study was conducted in only one course at one academic institution, and student question‐asking behavior might differ based on course, institution, or learner type. Next, while the course objectives, educational content, and instructors were the same between the two groups, the AY18 course recordings are not a perfect control given that the AY18 course comprised a different group of students than the AY19 course. Furthermore, we were only able to record the number of questions answered in the virtual sessions, and not the number of questions asked, as no copy of the Zoom transcript from the Q&A box was saved. Additionally, we had no way to determine the proportion of students in the class that asked a question in each session and so it is possible that multiple questions were asked by the same student or small group of students. Lastly, our recordings of the in‐person sessions did not capture student question‐asking individually after class. While we hypothesize that the option to ask questions anonymously may have contributed to increased student question‐asking and engagement, we were not able to specifically quantify the number of questions asked anonymously versus nonanonymously.

4.2. Implications and future directions

In a recent editorial on how the experience with COVID‐19 might improve health professions education Sklar wrote, “there is an opportunity to use what we have learned under duress to alter our current educational and clinical approaches to something better for students, residents, and patients.” 17 In this case, our rapid conversion to distance learning necessitated by COVID‐19 provides an opportunity to improve our understanding of best practices in distance learning for optimization of student engagement. Furthermore, refining our knowledge of how to most effectively use technology to stimulate student questioning and engagement has implications for the in‐person classroom environment as well as the virtual.

Our findings suggest that the videoconference learning environment can produce higher levels of engagement compared with the in‐person environment. Future research should focus on defining the conditions of online learning that facilitate student engagement, how these conditions mediate engagement, and whether these strategies can be implemented in other contexts.

5. CONCLUSIONS

The rapid transformation of our course from in‐person to online due to COVID‐19 allowed us to conduct a natural experiment on learner engagement in the in‐person versus videoconference classroom setting. Using student question‐asking behavior as a measure of learner engagement, we found that in the videoconferenced setting students asked a greater number of questions, asked more complex questions, and instructors dedicated a larger proportion of class time to Q&A compared with the in‐person version of our course. These findings speak to the way that the specific social conditions and technical aspects of online classrooms mediate student engagement. Given the increasing prevalence of distance learning in medical education facilitated by online learning modalities, developing an understanding of the conditions that best promote learner engagement has important implications for best practices in future curriculum design.

CONFLICT OF INTEREST

The authors have stated explicitly that there are no conflicts of interest in connection with this article.

AUTHOR CONTRIBUTIONS

J. Caton, S. Chung, N. Adeniji, J. Hom, K. Brar, A. Gallant, M. Bryant, A. Hain, P. Basaviah, and P. Hosamani conceived and designed the project; J. Caton and N. Adeniji acquired the data; J. Caton and S. Chung analyzed and interpreted the data; J. Caton and S. Chung drafted the article; N. Adeniji, J. Hom, K. Brar, A. Gallant, M. Bryant, A. Hain, P. Basaviah, and P. Hosamani revised the article critically for important intellectual content; J. Caton, S. Chung, N. Adeniji, J. Hom, K. Brar, A. Gallant, M. Bryant, A. Hain, P. Basaviah, and P. Hosamani gave final approval of the version to be submitted.

APPENDIX A.

Interrater reliability.

| | Coder 1 (N = 4), Mean (SD) | Coder 2 (N = 4), Mean (SD) | ICC | p value (H0: ICC = .6) |
|---|---|---|---|---|
| Number of questions | 7.8 (5.2) | 8.5 (5.5) | 0.98 | 0.014 |
| Number of confirmatory questions | 6.3 (3.3) | 6.0 (3.8) | 0.99 | 0.01 |
| Number of transformative questions | 1.3 (2.5) | 2.5 (1.9) | 0.66 | 0.43 |
| Time spent on Q&A | 12.4 (10.4) | 13.8 (9.4) | 0.97 | 0.018 |
| Time spent on other interactive activities | 13.4 (12.7) | 15.6 (11.4) | 0.99 | 0.005 |
| Time spent on noninteractive activities | 76.5 (4.5) | 76.5 (4.5) | 1 | <0.001 |

Pree Basaviah and Poonam Hosamani contributed equally.

This article is part of the Managing Medical Curricula In the Pandemic Special Collection.

REFERENCES

- 1. MacLeod A, Kits O, Whelan E, et al. Sociomateriality: a theoretical framework for studying distributed medical education. Acad Med. 2015;90(11):1451‐1456.

- 2. Simonson M, Zvacek SM, Smaldino S. Teaching and Learning at a Distance: Foundations of Distance Education, 7th edn. IAP; 2019.

- 3. Sharif S, Sherbino J, Centofanti J, Karachi T. Pandemics and innovation: how medical education programs can adapt extraclinical teaching to maintain social distancing. ATS Scholar. Published online 2020. 10.34197/ats-scholar.2020-0084cm

- 4. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet‐based learning in the health professions. JAMA. 2008;300(10):1181. 10.1001/jama.300.10.1181

- 5. Gordon M, Patricio M, Horne L, et al. Developments in medical education in response to the COVID‐19 pandemic: a rapid BEME systematic review: BEME Guide No. 63. Med Teach. 2020;42(11):1202‐1215.

- 6. Mian A, Khan S. Medical education during pandemics: a UK perspective. BMC Med. 2020;18(1):100.

- 7. Callas PW, Bertsch TF, Caputo MP, Flynn BS, Doheny‐Farina S, Ricci MA. Medical student evaluations of lectures attended in person or from rural sites via interactive videoconferencing. Teach Learn Med. 2004;16(1):46‐50.

- 8. Bertsch TF, Callas PW, Rubin A, Caputo MP, Ricci MA. Effectiveness of lectures attended via interactive video conferencing versus in‐person in preparing third‐year internal medicine clerkship students for Clinical Practice Examinations (CPX). Teach Learn Med. 2007;19(1):4‐8.

- 9. Sandhu A, Fliker A, Leitao D, Jones J, Gooi A. Adding live‐streaming to recorded lectures in a non‐distributed pre‐clerkship medical education model. Stud Health Technol Inform. 2017;234:292‐297.

- 10. Hortos K, Sefcik D, Wilson SG, McDaniel JT, Zemper E. Synchronous videoconferencing: impact on achievement of medical students. Teach Learn Med. 2013;25(3):211‐215.

- 11. MacLeod A, Cameron P, Kits O, Tummons J. Technologies of exposure: videoconferenced distributed medical education as a sociomaterial practice. Acad Med. 2019;94(3):412‐418.

- 12. Durrani M. Debate style lecturing to engage and enrich resident education virtually. Med Educ. 2020;54(10):955‐956. 10.1111/medu.14217

- 13. Saperstein AK, Ledford CJW, Servey J, Cafferty LA, McClintick SH, Bernstein E. Microblog use and student engagement in the large‐classroom setting. Fam Med. 2015;47(3):204‐209.

- 14. De Jesus HP, Teixeira‐Dias JJC, Watts M. Questions of chemistry. Int J Sci Educ. 2003;25(8):1015‐1034.

- 15. Chin C, Osborne J. Students’ questions: a potential resource for teaching and learning science. Stud Sci Educ. 2008;44(1):1‐39. 10.1080/03057260701828101

- 16. Cicchetti DV. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol Assess. 1994;6(4):284‐290.

- 17. Sklar DP. COVID‐19: lessons from the disaster that can improve health professions education. Acad Med. 2020;95(11):1631‐1633. 10.1097/ACM.0000000000003547