People living with HIV who use a mobile app for HIV self-management and social support interact with the app in distinct patterns based on their needs and preferences. Clinical outcomes differ among these usage patterns.

Keywords: PLWH, Mobile health, Viral suppression, Latent class analysis

Abstract

PositiveLinks (PL) is a multi-feature smartphone-based platform to improve engagement-in-care and viral suppression (VS) among clinic patients living with HIV. Features include medication reminders, mood/stress check-ins, a community board, and secure provider messaging. Our goal was to examine how PL users interact with the app and determine whether usage patterns correlate with clinical outcomes. Patients (N = 83) at a university-based Ryan White clinic enrolled in PL from June 2016 to March 2017 and were followed for up to 12 months. A subset (N = 49) completed interviews after 3 weeks of enrollment to explore their experiences with and opinions of PL. We differentiated PL members based on 6-month usage of app features using latent class analysis. We explored characteristics associated with class membership, compared reported needs and preferences by class, and examined association between class and VS. The sample of 83 PL members fell into four classes. “Maximizers” used all app features frequently (27%); “Check-in Users” tended to interact only with daily queries (22%); “Moderate All-Feature Users” used all features occasionally (33%); and “As-Needed Communicators” interacted with the app minimally (19%). VS improved or remained high among all classes after 6 months. VS remained high at 12 months among Maximizers (baseline and 12-month VS: 100%, 94%), Check-in Users (82%, 100%), and Moderate All-Feature Users (73%, 94%) but not among As-Needed Communicators (69%, 60%). This mixed-methods study identified four classes based on PL usage patterns that were distinct in characteristics and clinical outcomes. Identifying and characterizing mHealth user classes offers opportunities to tailor interventions appropriately based on patient needs and preferences as well as to provide targeted alternative support to achieve clinical goals.

Implications.

Practice: Mobile health interventions support self-care for people living with HIV through a variety of mechanisms; these mechanisms should be targeted based on user needs and preferences to provide maximal benefit.

Policy: Effective use of mobile health should incorporate an understanding that multi-featured interventions impact users differently and should incentivize use of technology in ways that best fit with individuals’ needs and preferences.

Research: Researchers should consider that the mechanism of action for multi-featured interventions may vary across participants and future research should consider examining patterns of engagement with interventions to better understand their impact.

INTRODUCTION

Mobile technology (mHealth) is emerging as a beneficial tool for managing chronic conditions and minimizing the distance between patients and the healthcare system. Benefits of mHealth derive from varied mechanisms. These may include providing self-management tools to allow personal monitoring of one’s health, connecting patients to social networks of peers with similar conditions, facilitating communication with healthcare providers, and providing accessible, targeted, and easily digestible information related to a condition [1–3]. Multi-feature mHealth interventions are typically bundled as single interventions, with all of their features offered to recipients in their entirety. mHealth users may achieve maximal benefit if they use all features; however, they have the option to choose which features they want to use. Bundled features in mHealth tools make it difficult to identify the mechanism(s) of action behind multi-featured interventions. Therefore, it may be useful to tease apart the various aspects of a multi-feature mobile app to determine the potential impact and usability of its components. Understanding usage of component features can help the field move toward understanding the impact of specific features, informing future modifications.

PositiveLinks (PL) is a multi-feature smartphone app developed to improve engagement-in-care and viral suppression (VS) among people living with HIV (PLWH) [4]. Briefly, PL’s features include medication reminders, daily self-monitoring of mood and stress on a 5-point Likert scale (“check-ins”), weekly quizzes, a community board allowing anonymous communication with other PL users, and secure messaging with clinic providers and PL staff. PL was developed using evidence-based principles for improving self-management in chronic disease and was also informed by behavior change theory, the emerging mHealth literature, and formative work with our clinic population [5–14]. Features were designed to include different aspects of self-management and were delivered as a package. For example, the medication, mood, and stress check-ins were intended to promote self-regulation via the processes of self-monitoring and receiving personalized feedback, while the community board targeted social support and stigma, and the secure messaging facilitated patient-provider communication and just-in-time assistance in between clinic visits [4].

In prior studies, PL use demonstrated a positive clinical and social benefit to its users, referred to as “members” [15,16]. However, the relative importance of its different features is unknown. As further research continues to support the use of PL and other bundled mHealth interventions for chronic disease management, it is valuable to understand the potential mechanisms of action behind their impact. Understanding which app features most directly correlate with improvements in health outcomes may help us to better understand how and why PL is effective, which is an important step in generalizing its benefit, and which could inform other mHealth initiatives for PLWH. Further, PL members are heterogeneous individuals with different needs and attitudes towards app usage, and may use PL in various ways. Understanding usage profiles may help clinics to guide individuals towards PL features that best fit their unique needs and preferences, which could help to maximize its benefit.

To better understand this multi-feature mHealth intervention, we examined patterns of app usage, then categorized users based on their PL usage profile. A key assumption guiding this analysis is that each PL member belongs to an underlying “latent” class that shapes how they use the app. Members of different classes may differ in their underlying level of motivation and attitude towards PL, which influences how they interact with PL and the impact PL has on them. Classes cannot be measured directly; they are identified using a technique called latent class analysis [17,18], in which patterns of observable, categorical behaviors or measures (here, app usage patterns) are analyzed to classify members of a study population into unobservable subgroups. Latent class analysis has been used to characterize individuals in prior studies of eHealth interventions [19–23] and of PLWH [24–28]. It may be a valuable methodology for studying multi-feature mHealth interventions such as PL, which members choose to use differently based on underlying and unmeasurable traits.
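For readers less familiar with the method, the standard latent class model can be written compactly. The notation below ($C$ classes, class prevalences $\gamma_c$, and item-response probabilities $\rho_{j,r \mid c}$) follows common textbook usage and is an illustrative assumption; it is not notation taken from this paper.

```latex
P(\mathbf{Y} = \mathbf{y}) \;=\; \sum_{c=1}^{C} \gamma_c \prod_{j=1}^{J} \prod_{r=1}^{R_j} \rho_{j,r \mid c}^{\,I(y_j = r)},
\qquad
P(L = c \mid \mathbf{Y} = \mathbf{y}) \;=\;
\frac{\gamma_c \prod_{j=1}^{J} \prod_{r=1}^{R_j} \rho_{j,r \mid c}^{\,I(y_j = r)}}{P(\mathbf{Y} = \mathbf{y})}
```

Here $y_j$ is a member's observed category on indicator $j$ (one of $R_j$ levels), $I(\cdot)$ equals 1 when its argument is true and 0 otherwise, and indicators are assumed independent within a class. The second expression is the posterior probability that is commonly used to assign each individual to their most likely class.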

In this analysis we: (a) identify “phenotypes” or classes of PL members defined by levels of usage of various app features, (b) compare demographic characteristics and clinical outcomes among classes of PL users, and (c) describe patient-reported differences in PL-related needs and experiences to explore and better understand the different user classes.

METHODS

Study sample

Patients at an academic medical center clinic were identified by their providers as being at risk for falling out of HIV care and were recruited to enroll in PL between June 2016 and March 2017. Providers identified participants as “at risk” if they had missed one or more appointments, had a non-suppressed viral load, or were experiencing social stressors that, in the opinion of the provider, might result in loss to follow-up. A PL coordinator instructed patients on how to use the app at the time of enrollment, and PL staff were available throughout the study period for troubleshooting. The training provided by the PL coordinator included an orientation to a smartphone, if needed, as well as a review, with practice, of the app features. Depending on the participant’s prior familiarity with smartphones, the orientation lasted 15–45 min. Monthly phone credits of $50 were provided to PL members to ensure they could access cellular data continuously. All PL members with at least 6 months of enrollment in PL were eligible for inclusion in the analysis. The PL study was approved by the academic center’s Institutional Review Board, and informed consent was obtained from all individual participants included in the study.

Statistical methods: latent class analysis

We categorized individuals’ use of six primary PL features: daily medication, stress, and mood check-ins; weekly quiz responses; community board posts; and messages sent. Use of each feature was measured cumulatively in the first 6 months of PL enrollment. Cutoff values were chosen based on an exploration of the data distribution for each app feature. Check-in and quiz response rates were categorized as high (≥90%), medium (48–89%), or low (<48%); community board posts were dichotomized as ever versus never; and the number of messages sent was classified as high (≥7), medium (1–6), or none. We fit latent class models with 2, 3, 4, 5, and 6 classes and chose an optimal model based on a combination of fit statistics (AIC, BIC, and log-likelihood) and interpretability.
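The categorization rules above can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the function names and the dictionary keys (`medication_rate`, `board_posts`, and so on) are hypothetical, and response rates are assumed to be stored as proportions between 0 and 1.

```python
def categorize_response_rate(rate: float) -> str:
    """Bin a 6-month response rate (0.0 to 1.0) with the cutoffs given in the text."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be a proportion between 0 and 1")
    if rate >= 0.90:
        return "high"
    if rate >= 0.48:
        # Assumption: everything from 48% up to (not including) 90% is "medium".
        return "medium"
    return "low"


def categorize_board_posts(n_posts: int) -> str:
    """Community board posts are dichotomized as ever versus never."""
    return "ever" if n_posts >= 1 else "never"


def categorize_messages(n_messages: int) -> str:
    """Messages sent: high (7 or more), medium (1 to 6), or none."""
    if n_messages >= 7:
        return "high"
    if n_messages >= 1:
        return "medium"
    return "none"


def usage_profile(member: dict) -> dict:
    """Build the six categorical indicators for one member (hypothetical keys)."""
    return {
        "medication": categorize_response_rate(member["medication_rate"]),
        "stress": categorize_response_rate(member["stress_rate"]),
        "mood": categorize_response_rate(member["mood_rate"]),
        "quiz": categorize_response_rate(member["quiz_rate"]),
        "board": categorize_board_posts(member["board_posts"]),
        "messages": categorize_messages(member["messages_sent"]),
    }


# Made-up member, for illustration only (not study data).
example_member = {
    "medication_rate": 0.95,
    "stress_rate": 0.93,
    "mood_rate": 0.91,
    "quiz_rate": 0.60,
    "board_posts": 0,
    "messages_sent": 3,
}
print(usage_profile(example_member))
```

The resulting six categorical indicators are the kind of input a latent class model expects; the model fitting itself is not shown here.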

We examined patterns of PL use using the best-fitting model. PL use patterns were summarized for each class and informed the names given to the classes. We described demographic and clinical characteristics of members in each class, including age, sex, race, education, income, insurance status, trust in the medical system, baseline CD4, and VS. We tested for significant differences in baseline characteristics between classes using ANOVA or Fisher’s exact tests for continuous and categorical variables, respectively. We assessed changes in VS from baseline to 6 months and 12 months in each class. All analyses were conducted using SAS software, Version 9.4 [29] and the latent class analysis was performed using PROC LCA developed by the Penn State Methodology Center [30,31].

Qualitative analysis: usability interviews

We conducted semi-structured interviews with 50 PL members 2–3 weeks after enrollment. Interviews included questions about members’ like or dislike of various app features and their reasons for using or not using PL features. The interviews assessed usability of PL and were performed soon after enrollment to assist users who might be having difficulties. Interviewers used an interview guide as a framework for the interviews, but they were permitted to ask additional questions to clarify responses or follow up on interviewees’ comments. Questions from the interview guide asked about overall impressions of PL, features liked or disliked the most and why, features used or not used and why, ease of use and functionality, suggestions for improvement, changes in communication with care providers, and other comments. Participants were also asked to provide their opinion of each feature individually. Feedback provided to the development team allowed the team to address issues that arose.

A codebook was developed for the analysis of the interviews, which included both deductive and inductive codes. Deductive codes identified which app feature the PL member was discussing in each statement (e.g., “medication tracking” or “community message board”) and included all 12 features of PL [4]. Inductive codes were determined from the members’ own words about their reasons for using or not using features. A subset of 15 interviews was coded by three independent coders. The codebook was refined until good reliability was achieved with a kappa statistic of 0.74. The codebook was then applied to the entire data set, so that frequencies of codes could be established. Qualitative analyses were conducted using Dedoose software [32].
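As an illustration of the reliability check, the sketch below computes Cohen's kappa for a pair of coders and averages it over all coder pairs. The text does not state which kappa variant was used with three coders, so the pairwise average is an assumption; the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on the same excerpts."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of excerpts")
    n = len(coder_a)
    # Observed agreement: share of excerpts given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected agreement by chance, from each coder's marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1.0 - expected)


def mean_pairwise_kappa(codings):
    """Average Cohen's kappa over all pairs of coders (an assumed summary)."""
    pairs = list(combinations(codings, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)


# Made-up codes for four excerpts (1 = code applied, 0 = not applied).
print(cohen_kappa([1, 1, 0, 0], [1, 0, 0, 0]))
```

In this toy example the coders agree on three of four excerpts, while chance agreement is 0.5, so kappa is lower than the raw agreement rate.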

For each feature, coders assigned a rating to quantify the interviewee’s opinion of the particular feature, as follows: −1 (dislike); 0 (ambivalent); +1 (like); or +2 (strongly like). This a priori rating system initially included both −1 and −2 ratings. However, no −2 ratings were present in the data, so coders captured all negative opinions as −1 ratings. If a feature was not mentioned at all by the interviewee, its rating was left blank. Feature ratings and reasons for use/non-use were summarized for each class of PL user to identify differences and similarities between groups.
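The rating summary described above can be sketched as follows. This is a minimal illustration under stated assumptions: blank (unmentioned) ratings are represented as `None`, the sample standard deviation is used, and the function name is hypothetical.

```python
from statistics import mean, stdev

VALID_RATINGS = (-1, 0, 1, 2)  # dislike, ambivalent, like, strongly like


def summarize_ratings(ratings):
    """Return N, mean, and SD of one feature's ratings, skipping blanks (None)."""
    rated = [r for r in ratings if r is not None]
    for r in rated:
        if r not in VALID_RATINGS:
            raise ValueError(f"unexpected rating: {r}")
    if not rated:
        return {"n": 0, "mean": None, "sd": None}
    # Assumption: sample SD; a single rating is reported with SD 0.
    sd = stdev(rated) if len(rated) > 1 else 0.0
    return {"n": len(rated), "mean": round(float(mean(rated)), 1), "sd": round(sd, 1)}


# Six interviewees, only two of whom mentioned the feature, both rating it +2.
print(summarize_ratings([2, None, None, 2, None, None]))
```

Because blanks are excluded rather than counted as zero, the N for each feature can be smaller than the number of interviewees in a class.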

RESULTS

Among 87 enrolled participants, 83 (95.4%) PL members had at least 6 months of PL use and were included in the latent class analysis. Of these, 49 members had completed a usability interview. The sample was 62% male, had a median age of 46 years, and was 51% Black. Seventy-nine percent of the sample completed high school/equivalent or beyond, 74% earned less than the federal poverty level, defined as earning a total income below the federally set guideline for poverty based on household size, and slightly more than half (55%) had private insurance. At baseline, approximately 82% of PL members were virally suppressed. PL use was fairly high in the study population. Approximately half of all PL users responded to ≥90% of daily medication, stress, and mood check-ins, and about 35% responded to ≥90% of weekly quizzes. Slightly more than half (54%) ever posted to the community board. Approximately one in four PL users never sent any messages, while 35% sent 7 or more messages. These usage patterns differed by user class (Table 1).

Table 1.

Characteristics by class

| | Maximizers (N = 22) | Check-in Users (N = 18) | Moderate All-Feature Users (N = 27) | As-Needed Communicators (N = 16) | Total (N = 83) | p-value |
|---|---|---|---|---|---|---|
| Demographic characteristics | | | | | | |
| Age, median (IQR) | 48 (41–54) | 50 (35–54) | 46 (32–49) | 37 (24–49) | 46 (33–53) | .052 |
| Sex, N (%) | | | | | | |
| Male | 13 (59.1) | 12 (66.7) | 14 (51.8) | 12 (75.0) | 51 (61.5) | .511 |
| Female | 8 (36.4) | 6 (33.3) | 12 (44.4) | 3 (18.8) | 29 (34.9) | |
| Other/Unknown<sup>a</sup> | 1 (4.5) | 0 (0) | 1 (3.7) | 1 (6.3) | 3 (3.6) | |
| Race, N (%) | | | | | | |
| Black | 8 (36.4) | 15 (83.3) | 13 (48.2) | 6 (37.5) | 42 (50.6) | .038 |
| White | 7 (31.8) | 0 (0) | 10 (37.0) | 4 (25.0) | 21 (25.3) | |
| Hispanic | 1 (4.6) | 1 (5.6) | 0 (0) | 2 (12.5) | 4 (4.8) | |
| Multiple races | 4 (18.2) | 1 (5.6) | 2 (7.4) | 2 (12.5) | 9 (10.8) | |
| Other/Unknown | 2 (9.1) | 0 (0) | 2 (7.4) | 2 (12.5) | 7 (8.4) | |
| Education, N (%) | | | | | | |
| Less than HS | 4 (19.1) | 3 (16.7) | 6 (23.0) | 4 (25.0) | 17 (21.3) | .488 |
| HS or equivalent | 6 (28.6) | 9 (50.0) | 10 (38.5) | 8 (50.0) | 33 (41.3) | |
| Some college | 8 (38.1) | 6 (33.3) | 5 (19.2) | 4 (25.0) | 23 (28.7) | |
| College degree | 3 (14.3) | 0 (0) | 3 (11.5) | 0 (0) | 7 (8.8) | |
| Missing | 1 | 0 | 3 | 0 | 3 | |
| Income, N (%) | | | | | | |
| <100% FPL | 14 (63.6) | 14 (77.7) | 19 (73.1) | 12 (85.7) | 59 (73.8) | .365 |
| ≥100% FPL | 8 (36.4) | 4 (22.2) | 7 (26.9) | 2 (14.3) | 21 (26.3) | |
| Missing | 0 | 0 | 1 | 2 | 3 | |
| Insurance, N (%) | | | | | | |
| Private | 12 (54.6) | 11 (61.1) | 17 (62.9) | 6 (37.5) | 46 (55.4) | .714 |
| Public | 8 (36.4) | 6 (33.3) | 7 (25.9) | 7 (43.8) | 28 (33.7) | |
| None | 2 (9.1) | 1 (5.6) | 3 (11.1) | 3 (18.8) | 9 (10.8) | |
| Distrust of medical system<sup>b</sup>, median (IQR) | 22 (20–25) | 20 (20–26) | 22 (20–26) | 24 (22–28) | 22 (20–26) | .341 |
| Missing | 2 | 0 | 2 | 0 | 4 | |
| Baseline clinical characteristics | | | | | | |
| CD4, median (IQR) | 779 (426–986) | 620 (228–931) | 576 (355–853) | 479 (195–828) | 603 (353–885) | .393 |
| Missing | 2 | 2 | 1 | 2 | 7 | |
| Viral suppression, N (%) | 21 (100.0) | 14 (82.4) | 19 (73.1) | 9 (69.2) | 63 (81.8) | .026 |
| Missing | 1 | 1 | 1 | 2 | 5 | |
| Engaged in care<sup>c</sup>, N (%) | 16 (72.7) | 13 (72.1) | 21 (77.8) | 13 (81.3) | 63 (75.9) | .927 |
| Taking ART, N (%) | 21 (100.0) | 17 (94.4) | 22 (88.0) | 15 (93.8) | 75 (93.8) | .468 |
| Missing | 1 | 0 | 2 | 0 | 3 | |
| App usage | | | | | | |
| Medication response | | | | | | |
| ≥90% | 22 (100.0) | 18 (100.0) | 3 (11.1) | 0 (0) | 43 (51.8) | <.001 |
| 48%–90% | 0 (0) | 0 (0) | 24 (88.9) | 0 (0) | 24 (28.9) | |
| <48% | 0 (0) | 0 (0) | 0 (0) | 16 (100.0) | 16 (19.3) | |
| Mood response | | | | | | |
| ≥90% | 22 (100.0) | 17 (94.4) | 1 (3.7) | 0 (0) | 40 (48.2) | <.001 |
| 48%–90% | 0 (0) | 1 (5.6) | 26 (96.3) | 0 (0) | 27 (32.5) | |
| <48% | 0 (0) | 0 (0) | 0 (0) | 16 (100.0) | 16 (19.3) | |
| Stress response | | | | | | |
| ≥90% | 22 (100.0) | 18 (100.0) | 0 (0) | 0 (0) | 40 (48.2) | <.001 |
| 48%–90% | 0 (0) | 0 (0) | 27 (100.0) | 1 (6.3) | 28 (33.7) | |
| <48% | 0 (0) | 0 (0) | 0 (0) | 15 (93.8) | 15 (18.1) | |
| Quiz response | | | | | | |
| ≥90% | 12 (54.6) | 16 (88.9) | 1 (3.7) | 0 (0) | 29 (34.9) | <.001 |
| 48%–90% | 8 (36.4) | 2 (11.1) | 21 (77.8) | 0 (0) | 31 (37.4) | |
| <48% | 2 (9.1) | 0 (0) | 5 (18.5) | 16 (100.0) | 23 (27.7) | |
| Community board posts | | | | | | |
| ≥1 | 21 (95.5) | 6 (33.3) | 12 (44.4) | 6 (37.5) | 45 (54.2) | <.001 |
| 0 | 1 (4.6) | 12 (66.7) | 15 (55.6) | 10 (62.5) | 38 (45.8) | |
| Messages sent | | | | | | |
| ≥7 | 17 (77.3) | 0 (0) | 7 (25.9) | 5 (31.3) | 29 (34.9) | <.001 |
| 1–6 | 0 (0) | 15 (83.3) | 14 (51.9) | 5 (31.3) | 34 (41.0) | |
| 0 | 5 (22.7) | 3 (16.7) | 6 (22.2) | 6 (37.5) | 20 (24.1) | |

<sup>a</sup> Two participants listed transgender male to female as their preferred gender identity. One participant’s gender was unknown.

<sup>b</sup> Distrust of medical system measured using the Health Care System Distrust Scale, which is scored from 10 (low distrust) to 50 (high distrust).

<sup>c</sup> Engagement-in-care is defined as having attended 2 or more HIV appointments separated by at least 90 days within the past year.

*Statistically significant differences between groups were observed for race, baseline viral suppression, and all app usage measures (p < .05).
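The engagement-in-care definition in the table notes can be expressed as a short check. This is a sketch under assumptions: “the past year” is taken as the 365 days before a reference date, the input is a list of attended appointment dates, and the function name and parameters are hypothetical.

```python
from datetime import date, timedelta


def engaged_in_care(attended, as_of, window_days=365, min_gap_days=90):
    """True if 2 or more attended visits in the window are at least 90 days apart."""
    window_start = as_of - timedelta(days=window_days)
    recent = [d for d in attended if window_start <= d <= as_of]
    if len(recent) < 2:
        return False
    # If any two visits are 90+ days apart, the earliest and latest visits are.
    return (max(recent) - min(recent)).days >= min_gap_days


# Made-up dates, for illustration only.
print(engaged_in_care([date(2017, 1, 10), date(2017, 5, 1)], as_of=date(2017, 6, 1)))
```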

Identifying classes of PL users

The best-fitting latent class model had four classes. Details of the models, including fit statistics for all models and item response probabilities for the best-fitting model, are shown in the Supplementary Material. The first class, which comprises 27% of the study sample, was defined by high usage of all PL features, including daily check-ins, the community board, and messaging. We refer to this class as “Maximizers.” Class 2 users (22% of all users) were frequent responders to daily check-ins but tended not to post to the community board and sent few to no messages; these users are “Check-in Users.” Class 3 was the largest, representing 33% of all PL users, and was defined by moderate use of all app features. Most users in this class responded to some (48%–90%) daily check-ins, about one third posted in the community board, and most sent between 1 and 6 messages. We refer to this class as “Moderate All-Feature Users.” The final class (19% of all users) had the lowest PL usage; the typical Class 4 user did not regularly respond to daily check-ins but occasionally sent a message, with about 31% sending 1–6 messages and an additional 31% sending seven or more messages. We call these users “As-Needed Communicators.” Details on the usage of each app feature by class are shown in Table 1; Table 2 provides a summary of typical usage by class.

Table 2.

Summary of typical PL usage by class

| | Class 1: Maximizers | Class 2: Check-in Users | Class 3: Moderate All-Feature Users | Class 4: As-Needed Communicators |
|---|---|---|---|---|
| Daily Check-in Response Rate | High | High | Moderate | Low |
| Community Board Posts | Ever Posted | Never Posted | Never Posted | Never Posted |
| Provider/Staff Messages Sent | High | Moderate | Moderate | Mixed |
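To make the model comparison concrete, the sketch below counts the free parameters of an unrestricted latent class model built on the six indicators described in Methods and applies the usual AIC and BIC formulas. It is an illustration only: the function names are hypothetical, no log-likelihood values from the study are used, and some software reports AIC and BIC from the likelihood-ratio statistic G² instead, which shifts the values by a constant without changing how models rank.

```python
import math

# Number of response levels per indicator, as described in Methods.
ITEM_LEVELS = {
    "medication": 3,  # high / medium / low
    "stress": 3,
    "mood": 3,
    "quiz": 3,
    "board": 2,       # ever / never
    "messages": 3,    # high / medium / none
}


def lca_free_parameters(n_classes, item_levels):
    """Free parameters in an unrestricted latent class model."""
    prevalence_params = n_classes - 1
    item_params = n_classes * sum(levels - 1 for levels in item_levels.values())
    return prevalence_params + item_params


def aic(log_likelihood, n_params):
    """Akaike information criterion (lower is better)."""
    return -2.0 * log_likelihood + 2.0 * n_params


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion (lower is better)."""
    return -2.0 * log_likelihood + n_params * math.log(n_obs)


for k in range(2, 7):
    print(k, "classes:", lca_free_parameters(k, ITEM_LEVELS), "free parameters")
```

Under these assumptions each added class costs 12 more parameters, which BIC penalizes more heavily than AIC at this sample size; this is one reason interpretability is weighed alongside the fit statistics.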

Characterizing PL user classes

The four classes differed in both demographic and clinical characteristics. On demographics, “Maximizers” were most likely to have education beyond a high school degree (52%) and earn above the federal poverty level (36%). “Check-in Users” had the highest median age (50 years), were most likely to be Black (83%) and least likely to have less than a high school degree (17%). “Moderate All-Feature Users” were most likely to be female (44%), white (37%), and have private insurance (63%). “As-Needed Communicators” were youngest (median age 37 years), most likely to be male (75%) and earn below the federal poverty level (86%). On clinical markers, baseline CD4 and the percentage of virally suppressed individuals were highest among Maximizers (median baseline CD4 = 779 cells/mm³; 100% were virally suppressed at baseline) and declined with each subsequent class of user (As-Needed Communicators had the lowest median CD4 at 479 cells/mm³; 69% were virally suppressed at baseline). Statistically significant differences (p < .05) between groups were observed for race (p = .038) and baseline VS (p = .026).

VS over time by class

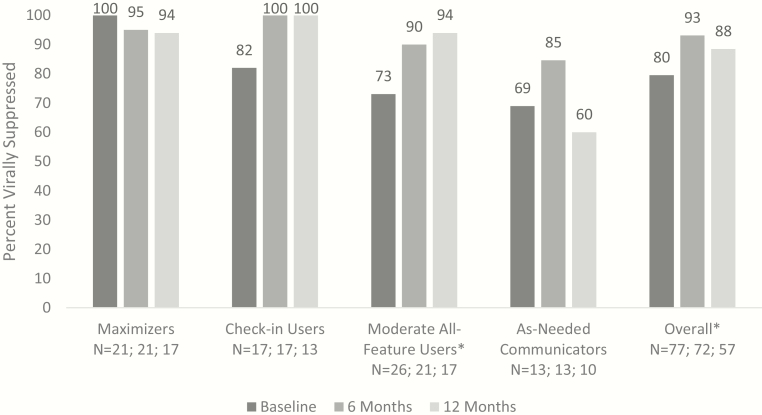

Lab values were available for 77 PL members at study baseline, 72 PL members at 6 months, and 57 PL members at 12 months. VS improved or remained high among all classes in the first 6 months of PL use. Maximizers had the highest prevalence of VS at study baseline (100%, N = 21), and they maintained a high level after 6 and 12 months (95%, N = 21 and 94%, N = 17, respectively). Check-in Users had a similarly high proportion of VS at follow-up, with the prevalence improving from 82% (N = 17) at baseline to 100% (N = 17) and 100% (N = 13) at 6 and 12 months, respectively. Moderate All-Feature Users also showed a dramatic improvement in VS, with prevalence increasing from 73% (N = 26) at baseline to 90% (N = 21) at 6 months and 94% (N = 16) at 12 months. VS was the lowest among As-Needed Communicators, and they were least likely to maintain the improvement seen at 6 months. VS among As-Needed Communicators was 69% (N = 13) at baseline, 85% (N = 13) at 6 months, and 60% (N = 10) at 12 months (Fig. 1). Only Moderate All-Feature Users showed a statistically significant (p < .05) increase in VS; small sample sizes and ceiling effects limited the ability to detect changes in the other groups.

Fig. 1.

Prevalence of viral suppression (HIV viral load <200 copies/mL) at baseline, 6 months, and 12 months by user class. *Change in viral suppression shows a statistically significant increase among Moderate All-Feature Users and the Overall group from baseline to 6 months (p < .05).
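The trajectories reported above can be tabulated as percentage-point changes from baseline. The sketch below uses only the rounded prevalences stated in the text; the variable and function names are hypothetical, and because denominators differ across time points these differences are descriptive only.

```python
# VS prevalence (%) at baseline, 6 months, and 12 months, as reported in the text.
VS_PREVALENCE = {
    "Maximizers": (100, 95, 94),
    "Check-in Users": (82, 100, 100),
    "Moderate All-Feature Users": (73, 90, 94),
    "As-Needed Communicators": (69, 85, 60),
}


def change_from_baseline(prevalence):
    """Percentage-point change in VS prevalence relative to baseline."""
    baseline, month_6, month_12 = prevalence
    return {"6 months": month_6 - baseline, "12 months": month_12 - baseline}


for user_class, prevalence in VS_PREVALENCE.items():
    print(user_class, change_from_baseline(prevalence))
```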

PL feature preferences by class

Direct messaging and the appointment log were the highest rated PL features, with all four classes rating these two features positively. The community board had the most diverse ratings: Maximizers rated the community board highly, Moderate All-Feature Users were positive towards the community board, Check-in Users were positive toward the community board despite their low usage of it, and As-Needed Communicators were neutral. Overall, the lowest ratings were given by As-Needed Communicators with typical average ratings between “neutral” and “positive” (Table 3).

Table 3.

Average rating for each PositiveLinks (PL) feature by class (N = 49)

| | Maximizers (N = 14) | Check-in Users (N = 12) | Moderate All-Feature Users (N = 17) | As-Needed Communicators (N = 6) |
|---|---|---|---|---|
| Appointment log | | | | |
| Mean<sup>a</sup> (SD) | 1.3 (0.5) | 1.0 (0.8) | 1.3 (0.7) | 1.0 (0) |
| N | 10 | 8 | 15 | 2 |
| Badges | | | | |
| Mean (SD) | 0.9 (0.7) | 0.4 (0.7) | 0.8 (0.6) | 0.4 (0.5) |
| N | 12 | 10 | 16 | 5 |
| Community board | | | | |
| Mean (SD) | 1.5 (0.9) | 1.0 (0.8) | 0.8 (1.0) | 0.2 (1.2) |
| N | 14 | 11 | 16 | 6 |
| Direct messaging | | | | |
| Mean (SD) | 1.7 (0.5) | 0.9 (1.2) | 1.3 (0.8) | 2.0 (0) |
| N | 7 | 7 | 6 | 2 |
| General usability | | | | |
| Mean (SD) | 1.2 (0.4) | 0.7 (0.8) | 1.0 (0.6) | 0.4 (0.9) |
| N | 14 | 11 | 17 | 5 |
| Meds tracking | | | | |
| Mean (SD) | 1.2 (0.8) | 0.9 (0.9) | 1.2 (0.8) | 0 (0.6) |
| N | 12 | 12 | 15 | 6 |
| Mood/stress tracking | | | | |
| Mean (SD) | 1.2 (0.8) | 0.7 (0.7) | 1.1 (0.8) | 0.2 (0.8) |
| N | 13 | 12 | 17 | 6 |
| Resources | | | | |
| Mean (SD) | 0.8 (0.8) | 0.7 (0.8) | 0.8 (0.6) | 0 (0.7) |
| N | 13 | 10 | 16 | 5 |
| Weekly quizzes | | | | |
| Mean (SD) | 1.0 (0.6) | 0.8 (0.8) | 0.9 (0.7) | 0.4 (0.9) |
| N | 13 | 12 | 16 | 5 |

<sup>a</sup> Rating options: −1 = negative; 0 = neutral; 1 = positive; 2 = strongly positive.

<sup>b</sup> Highest scoring features within each latent class are highlighted in bold.

Common reasons for using PL, across all classes, included social connection with other PL members and with providers. Other reasons commonly provided by Check-in Users, Moderate All-Feature Users, and Maximizers included insight, memory/adherence, positivity, and user friendliness/usability. Common reasons for disuse included: feature does not fit needs, lack of awareness of a feature, lack of usability, and repetition/monotony (Table 4).

Table 4.

Reasons for use and disuse by class (N = 49)

| Number of people (number of times mentioned) | Maximizers (N = 14) | Check-in Users (N = 12) | Moderate All-Feature Users (N = 17) | As-Needed Communicators (N = 6) |
|---|---|---|---|---|
| Reasons for use | | | | |
| Information/education | 5 (8) | 4 (4) | 7 (9) | 1 (1) |
| Educating self | 3 (4) | 3 (9) | 6 (9) | 0 |
| Educating others | 0 | 0 | 1 (5) | 0 |
| Insight | 8 (12) | 5 (6) | 9 (17) | 1 (1) |
| Memory/adherence | 7 (11) | 9 (18) | 10 (20) | 1 (1) |
| Positivity | 8 (13) | 5 (11) | 6 (6) | 0 |
| Social connection | 3 (3) | 4 (5) | 2 (2) | 1 (1) |
| With PL participants | 7 (12) | 4 (8) | 10 (13) | 2 (4) |
| With providers | 9 (17) | 6 (8) | 7 (16) | 2 (3) |
| With others | 0 | 0 | 1 (1) | 0 |
| Giving support | 5 (10) | 0 (0) | 3 (4) | 0 |
| Receiving support | 4 (8) | 2 (2) | 0 | 1 (2) |
| User friendliness/usability | 12 (15) | 7 (7) | 14 (20) | 2 (2) |
| Reasons for disuse/dislike | | | | |
| Feature does not fit needs | 5 (6) | 1 (1) | 7 (12) | 2 (16) |
| Lack of awareness of feature | 4 (5) | 8 (12) | 5 (8) | 2 (3) |
| Lack of usability of app/user-friendliness | 4 (5) | 3 (8) | 5 (7) | 0 |
| Privacy/anonymity | 0 | 0 | 1 (1) | 0 |
| Repetition/monotony | 3 (3) | 0 (0) | 3 (4) | 2 (6) |
| Social disconnection from PL community | 0 | 1 (1) | 0 | 2 (4) |
| Suggestions for improvement | 8 (13) | 6 (20) | 9 (12) | 2 (4) |

Maximizers

Participants characterized as “Maximizers” expressed that the check-in and appointment features were helpful for memory/adherence. The check-ins and dashboard summaries also provided insight: “It just made me more aware of it, more aware of my progress and how I’m doing.” The community board provided connection to other PL members and allowed the opportunity to receive and give social support. One participant said, “I try to encourage other people to have a more positive outlook on life itself…I was at that same point in my life. And I know exactly how it feels.” The secure messaging facilitated connection with the healthcare team. As one participant observed, “It makes it a little bit easier for me to ask a question because I can just ask the question through the app. And they can get back to me in a day or two because it’s not an emergency—if I have any issues. Yeah, I like that.” For this group, most “negatives” were suggestions for improvement, such as expansion of the resources section.

Check-in Users

Check-in Users valued the appointment log and medication check-ins to support memory and adherence: “I think that helps a lot of people. Not only me but a lot of people, especially with memory.” Reviewing the dashboards to track and monitor their own check-in responses provided members with insight: “It makes you think about it because you’re able to see it.” Although Check-in Users had low usage of messaging, it was among their highest rated features. They expressed appreciation that it was there if they needed it. One stated, “I can send messages through the app to my doctor and then get feedback. That helps.”

Check-in Users also had low usage of the community board but rated it positively. This may be due to “lurking,” deriving value from reading others’ posts. As one said, “to read some of the things that other people put on the message board helps me.” A potential barrier to community board posting for Check-in Users was lack of awareness or understanding of how to post. One said, “I use the message board just to read. But I haven’t figured out how to get on there.”

Moderate All-Feature Users

Similar to other classes, Moderate All-Feature Users valued the appointment and check-in features as aids to memory about appointments and medication adherence. One participant attributed a change in adherence and VS to the app: “it’s actually helped me as far as reminding me to take my meds. I had a problem with taking medicine. But since I’ve been on the app, I’ve been non-detectable.” Moderate All-Feature Users also associated the messaging features with improved connection with the healthcare team. The automated responses they received after answering the check-ins also provided positive reinforcement and a sense of connection: “I feel good inside because it’s just to know people out there actually care about how you’re feeling.”

In this group, there were mixed opinions on the community board. For some, the community board provided connection and support: “It’s almost like a little support group but you do not have to go to a support group. It’s just right there on your phone.” However, some felt disconnected from the online community. Some were not interested in interacting with others, stating “That social stuff really hasn’t been my strong suit.” For some, the community was too negative: “I notice a lot of them are kind of down.” For others, it was too positive: “everybody on there’s all sunflowers and rainbows. And that’s annoying.” Some did not like the religious tone of some discussions, saying “all that religious stuff is not really what I’m into.”

Although the appointment feature was popular with most Moderate All-Feature Users, some found it did not fit their needs, especially if they had other reminder systems in place already. Some Moderate All-Feature Users expressed more general barriers to app use, such as lack of familiarity with technology (“All this smartphone stuff, I’m not educated to it”) and low literacy (“when ya can’t read that good, everything’s confusing”). Only one member mentioned privacy/anonymity as a potential barrier, in particular regarding community board use: “one of the reasons why I haven’t done it is the fact that my username is linked to my actual name.”

As-Needed Communicators

As-Needed Communicators also valued the memory/adherence support function of the program. Although they used messaging infrequently, they valued having it available, similar to the Check-in Users. One stated, “it’s convenient to be able to talk with doctors and nurses… it’s kind of made me care more about wanting to continue with care.”

Reasons for dislike or disuse mostly focused on features that did not fit their needs. Regarding the check-ins, one said, “Overall, it’s really annoying. It’s not helpful at all.” Regarding the community board, users stated “Why would I want to talk to random people I do not even know?” As-Needed Communicators did not perceive the social support benefit of reading others’ posts that was expressed by Check-in Users.

DISCUSSION

This study is one of the first to investigate how patients use different mHealth features and to find that patient demographics, clinical outcomes, preferences, and reasons for use differ by latent class. PL members can be divided into four classes, or “phenotypes,” defined by levels of usage of each PL feature. The classes varied not only in demographic and clinical characteristics but also in their preferences and in the reasons they gave for use or non-use of app features. Importantly, user class was related to clinical outcomes, with higher usage related to better outcomes. This association highlights the opportunity to provide near real-time personalized care based on data from mHealth technologies [33]. Specific to PL, monitoring and understanding a patient’s PL use class could identify a need to provide additional interventions to maximize clinical outcomes, especially for members in a user class associated with less clinical benefit, or for those who change their use pattern.

Response rates to check-ins are the most direct reflection of daily PL use, as members are prompted to answer check-ins daily. Maximizers and Check-in Users both had high check-in response rates, but differed in their interaction with other app features. Moderate All-Feature Users did not have particularly high usage for any single feature but instead used all the features moderately. Moderate All-Feature Users and Check-in Users showed the biggest improvement in VS, suggesting that PL features may have the greatest impact on these individuals. Those with good VS at baseline (i.e., Maximizers) may have been most motivated even before PL enrollment and PL provided a good fit for their self-care strategies. Patients with moderate response rates might lack the inherent motivation found in the Maximizers, leading to their lower VS prevalence at baseline. For these members, PL may have provided meaningful nudges resulting in the largest impact on clinical outcomes.

As-Needed Communicators, who primarily use the messaging feature, also showed large improvements in VS, though this improvement did not persist at 1 year. We classified all PL members based on 6 months of PL use; it is possible that As-Needed Communicators did not continue using the messaging feature after this time. Their improved VS at 6 months suggests a key role for app-facilitated communication for non-virally suppressed individuals. However, the drop-off in VS at 12 months indicates a need for a modified or different intervention to support achievement of sustained VS.
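To make the class-level comparison concrete, the baseline and 12-month VS percentages reported in the abstract can be tabulated as changes in percentage points. The following is a minimal illustrative sketch, not part of the study analysis: the variable and function names are hypothetical, the 6-month values are not reproduced here, and the differences are purely descriptive because not every patient reached 12-month follow-up.

```python
# Baseline and 12-month viral suppression (VS) prevalence by usage class,
# in percent, taken from the abstract. Names below are illustrative only.
vs_by_class = {
    "Maximizers": (100, 94),
    "Check-in Users": (82, 100),
    "Moderate All-Feature Users": (73, 94),
    "As-Needed Communicators": (69, 60),
}


def vs_change(baseline, follow_up):
    """Return the change in VS prevalence in percentage points."""
    return follow_up - baseline


# Descriptive change per class; no adjustment for differing denominators.
changes = {name: vs_change(b, f) for name, (b, f) in vs_by_class.items()}

# List classes from largest gain to largest loss.
for name, delta in sorted(changes.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {delta:+d} percentage points")
```

Under these figures, Moderate All-Feature Users and Check-in Users show the largest gains between baseline and 12 months, while As-Needed Communicators end below their baseline value.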

Most PL members found the app to be user-friendly. Certain features, like the appointment log and messaging, were liked across all classes, while ratings of other features varied by class. For example, mood and stress tracking was rated positively among all classes except As-Needed Communicators, and the community board was rated highly among Maximizers but less so among the other groups. The four classes of users expressed many common themes related to app acceptability, such as the value of reminders and the ability to contact their healthcare team through the messaging feature. However, each class also expressed that some features resonated more with them than others. The feature most frequently associated with “does not fit needs” was the community board, followed by mood/stress tracking.

The fit of each feature may depend on the specific barriers to adherence and retention in HIV care faced by users, as some may struggle more with the logistics of appointments and others with lack of social support. Allowing patients to tailor the features of the app to their own perceived needs is an option to consider in the future. However, this choice would need to be balanced against the possibility that a patient would opt out of an evidence-based behavior change feature, such as self-monitoring via check-ins, or could later want to change their preferences or usage of features. Both possibilities point to a need for a simple way for HIV patients to tailor mHealth apps for themselves, such as toggling features on or off, rather than suggesting that mHealth tools should offer only a limited set of features.

This study had some limitations. First, we did not have information on pre-enrollment levels of motivation or self-efficacy, which are likely associated with an individual’s decision to interact with PL features. Second, we did not have paired pre- and post-measures of mood, quality-of-life, or stigma; differential interaction with the app may have also impacted these important components of well-being. Additionally, we were unable to assess community board views, which may contribute to the social benefit of PL. Finally, our sample size and the length of the study precluded a detailed analysis of the longer-term impact of PL on VS. Patients were enrolled on a rolling basis, and consequently, some patients did not reach their 12-month follow-up prior to the study completion date. Despite these limitations, this study has many strengths. These include a systematic, mixed-methods approach to identifying classes of PL usage by members and examining the relationships of class membership with demographics, clinical outcomes, and member opinions and preferences.

CONCLUSION

While these findings relate to a specific mHealth tool, they have broader implications for the field of mHealth in HIV patient care. Creating a multi-feature mHealth intervention to improve HIV patient care and outcomes requires understanding that users will interact with the intervention differently based on their own unique needs and preferences. In our mixed-methods analysis of PL, we found that PL users tend to fall into one of four latent classes of user. Patients who used the app most regularly showed the highest prevalence of VS both at baseline and follow-up, while Moderate All-Feature Users and Check-in Users showed the greatest improvement in VS. Monitoring PL usage and user class may offer an opportunity to provide timely additional assistance to support achievement of clinical outcomes, including VS. Features that take users’ needs into account should be a primary focus of mHealth tools for PLWH. This may include the option of patient-led tailoring of features and should also recognize that PLWH may accrue benefits beyond achievement of VS from use of an app, such as improved social support or well-being.

Acknowledgments

This study was funded by the Virginia Department of Health and AIDS United. We thank the Virginia Department of Health, AIDS United and the UVA Ryan White HIV Clinic patients and staff for supporting and inspiring this work, respectively. We offer particular thanks to Anna Greenlee, Ashley Shoell, and Nahuel Smith Becerra.

Compliance with Ethical Standards

Conflict of Interest: Rebecca Dillingham, Ava Lena Waldman, and Karen Ingersoll report consulting services to Warm Health Technology, Inc. No other authors have conflicts to declare.

Ethical Approval: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent: Informed consent was obtained from all individual participants included in the study.

Welfare of Animals: This article does not contain any studies with animals performed by any of the authors.

References

- 1. Cooper V, Clatworthy J, Whetham J, Consortium E. mHealth interventions to support self-management in HIV: a systematic review. Open AIDS J. 2017;11:119–132.

- 2. Hall CS, Fottrell E, Wilkinson S, Byass P. Assessing the impact of mHealth interventions in low- and middle-income countries–what has been shown to work? Glob Health Action. 2014;7:25606.

- 3. Hamine S, Gerth-Guyette E, Faulx D, Green BB, Ginsburg AS. Impact of mHealth chronic disease management on treatment adherence and patient outcomes: a systematic review. J Med Internet Res. 2015;17(2):e52.

- 4. Laurence C, Wispelwey E, Flickinger TE, et al. Development of PositiveLinks: a mobile phone app to promote linkage and retention in care for people with HIV. JMIR Form Res. 2019;3(1):e11578.

- 5. Catalani C, Philbrick W, Fraser H, Mechael P, Israelski DM. mHealth for HIV treatment & prevention: a systematic review of the literature. Open AIDS J. 2013;7:17–41.

- 6. Horvath KJ, Danilenko GP, Williams ML, et al. Technology use and reasons to participate in social networking health websites among people living with HIV in the US. AIDS Behav. 2012;16(4):900–910.

- 7. Mo PK, Coulson NS. Online support group use and psychological health for individuals living with HIV/AIDS. Patient Educ Couns. 2013;93(3):426–432.

- 8. Muessig KE, Nekkanti M, Bauermeister J, Bull S, Hightow-Weidman LB. A systematic review of recent smartphone, Internet and Web 2.0 interventions to address the HIV continuum of care. Curr HIV/AIDS Rep. 2015;12(1):173–190.

- 9. Poole ES. HCI and mobile health interventions: how human-computer interaction can contribute to successful mobile health interventions. Transl Behav Med. 2013;3(4):402–405.

- 10. Ramanathan N, Swendeman D, Comulada WS, Estrin D, Rotheram-Borus MJ. Identifying preferences for mobile health applications for self-monitoring and self-management: focus group findings from HIV-positive persons and young mothers. Int J Med Inform. 2013;82(4):e38–e46.

- 11. Rosen RK, Ranney ML, Boyer EW. Formative research for mhealth HIV adherence: the iHAART app. Proc Annu Hawaii Int Conf Syst Sci. 2015;2015:2778–2785.

- 12. Saberi P, Siedle-Khan R, Sheon N, Lightfoot M. The use of mobile health applications among youth and young adults living with HIV: focus group findings. AIDS Patient Care STDS. 2016;30(6):254–260.

- 13. Schnall R, Mosley JP, Iribarren SJ, Bakken S, Carballo-Diéguez A, Brown W III. Comparison of a user-centered design, self-management app to existing mHealth Apps for persons living with HIV. JMIR Mhealth Uhealth. 2015;3(3):e91.

- 14. van Velthoven MH, Brusamento S, Majeed A, Car J. Scope and effectiveness of mobile phone messaging for HIV/AIDS care: a systematic review. Psychol Health Med. 2013;18(2):182–202.

- 15. Dillingham R, Ingersoll K, Flickinger TE, et al. PositiveLinks: a mobile health intervention for retention in HIV care and clinical outcomes with 12-month follow-up. AIDS Patient Care STDS. 2018;32(6):241–250.

- 16. Flickinger TE, DeBolt C, Waldman AL, et al. Social support in a virtual community: analysis of a clinic-affiliated online support group for persons living with HIV/AIDS. AIDS Behav. 2017;21(11):3087–3099.

- 17. Lazarsfeld PF, Henry NW. Latent Structure Analysis. Boston, MA: Houghton Mifflin; 1968.

- 18. Goodman LA. Exploratory latent structure analysis using both identifiable and unidentifiable models. Biometrika. 1974;61(2):215–231.

- 19. Børøsund E, Cvancarova M, Ekstedt M, Moore SM, Ruland CM. How user characteristics affect use patterns in web-based illness management support for patients with breast and prostate cancer. J Med Internet Res. 2013;15(3):e34.

- 20. Collins KM, Armenta RF, Cuevas-Mota J, Liu L, Strathdee SA, Garfein RS. Factors associated with patterns of mobile technology use among persons who inject drugs. Subst Abus. 2016;37(4):606–612.

- 21. Demment MM, Graham ML, Olson CM. How an online intervention to prevent excessive gestational weight gain is used and by whom: a randomized controlled process evaluation. J Med Internet Res. 2014;16(8):e194.

- 22. Graham ML, Strawderman MS, Demment M, Olson CM. Does usage of an eHealth intervention reduce the risk of excessive gestational weight gain? Secondary analysis from a randomized controlled trial. J Med Internet Res. 2017;19(1):e6.

- 23. Wienert J, Kuhlmann T, Storm V, Reinwand D, Lippke S. Latent user groups of an eHealth physical activity behaviour change intervention for people interested in reducing their cardiovascular risk. Res Sports Med. January-March 2019;27(1):34–49. doi:10.1080/15438627.2018.1502181

- 24. Althoff MD, Theall K, Schmidt N, et al. Social support networks and HIV/STI risk behaviors among Latino immigrants in a new receiving environment. AIDS Behav. 2017;21(12):3607–3617.

- 25. Carter A, Roth EA, Ding E, et al. Substance use, violence, and antiretroviral adherence: a latent class analysis of women living with HIV in Canada. AIDS Behav. March 2018;22(3):971–985. doi:10.1007/s10461-017-1863-x

- 26. Dangerfield DT II, Craddock JB, Bruce OJ, Gilreath TD. HIV testing and health care utilization behaviors among men in the United States: a latent class analysis. J Assoc Nurses AIDS Care. 2017;28(3):306–315.

- 27. Nunn A, Brinkley-Rubinstein L, Rose J, et al. Latent class analysis of acceptability and willingness to pay for self-HIV testing in a United States urban neighbourhood with high rates of HIV infection. J Int AIDS Soc. 2017;20(1):21290.

- 28. Youssef E, Cooper V, Miners A, et al. Understanding HIV-positive patients’ preferences for healthcare services: a protocol for a discrete choice experiment. BMJ Open. 2016;6(7):e008549.

- 29. SAS Institute Inc. SAS for Windows. Cary, NC: SAS Institute Inc. 2002–2012:9.4.

- 30. The Methodology Center, Penn State. PROC LCA & PROC LTA (Version 1.2.7) [Software]. 2011. Available at http://methodology.psu.edu. Accessed February 1, 2018

- 31. Lanza ST, Dziak JJ, Huang L, Xu S, Collins LM. PROC LCA & PROC LTA user’s guide (Version 1.2.7). 2011. Available at http://methodology.psu.edu. Accessed February 1, 2018

- 32. SocioCultural Research Consultants L. Dedoose, Web Application for Managing, Analyzing, and Presenting Qualitative and Mixed Method Research Data. Los Angeles, CA: SocioCultural Research Consultants, LLC. 2018;8.0.35.

- 33. Weiler A. mHealth and big data will bring meaning and value to patient-reported outcomes. Mhealth. 2016;2:2.
