Abstract

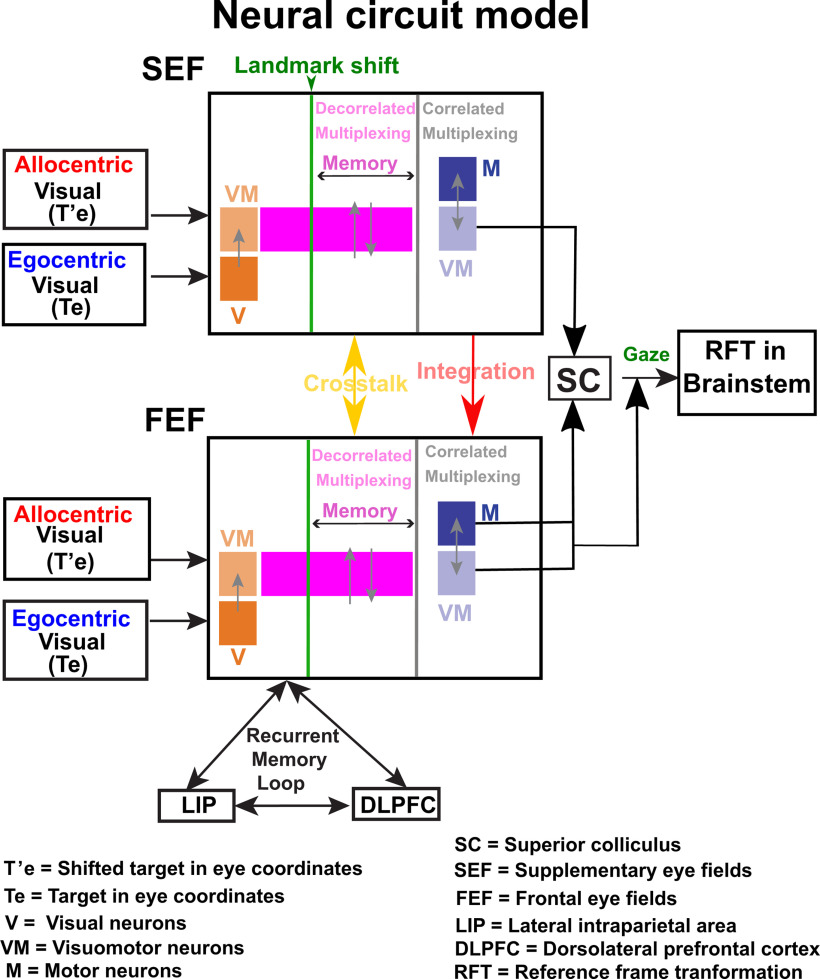

Eye-centered (egocentric) and landmark-centered (allocentric) visual signals influence spatial cognition, navigation, and goal-directed action, but the neural mechanisms that integrate these signals for motor control are poorly understood. A likely candidate for egocentric/allocentric integration in the gaze control system is the supplementary eye fields (SEF), a mediofrontal structure with high-level “executive” functions, spatially tuned visual/motor response fields, and reciprocal projections with the frontal eye fields (FEF). To test this hypothesis, we trained two head-unrestrained monkeys (Macaca mulatta) to saccade toward a remembered visual target in the presence of a visual landmark that shifted during the delay, causing gaze end points to shift partially in the same direction. A total of 256 SEF neurons were recorded, including 68 with spatially tuned response fields. Model fits to the latter established that, like the FEF and superior colliculus (SC), spatially tuned SEF responses primarily showed an egocentric (eye-centered) target-to-gaze position transformation. However, the landmark shift influenced this default egocentric transformation: during the delay, motor neurons (with no visual response) showed a transient but unintegrated shift (i.e., not correlated with the target-to-gaze transformation), whereas during the saccade-related burst visuomotor (VM) neurons showed an integrated shift (i.e., correlated with the target-to-gaze transformation). This differed from our simultaneous FEF recordings (Bharmauria et al., 2020), which showed a transient shift in VM neurons, followed by an integrated response in all motor responses. Based on these findings and past literature, we propose that prefrontal cortex incorporates landmark-centered information into a distributed, eye-centered target-to-gaze transformation through a reciprocal prefrontal circuit.

Keywords: frontal cortex, head-unrestrained, landmarks, macaques, neural response fields, reference frames

Significance Statement

It is thought that the brain integrates egocentric (self-centered) and allocentric (landmark-centered) visual signals to generate accurate goal-directed movements, but the neural mechanism is not known. Here, by shifting a visual landmark while recording frontal cortex activity in awake behaving monkeys, we show that the supplementary eye fields (SEF) incorporates landmark-centered information (in memory and motor activity) when it transforms target location into future gaze position commands. We propose a circuit model in which the SEF provides control signals to implement an integrated gaze command in the frontal eye fields (FEF; Bharmauria et al., 2020). Taken together, these experiments explain normal egocentric/allocentric integration and might suggest rehabilitation strategies for neurologic patients who have lost one of these visual mechanisms.

Introduction

The brain integrates egocentric (eye-centered) and allocentric (landmark-centered) visual cues to guide goal-directed behavior (Goodale and Haffenden, 1998; Ball et al., 2009; Chen et al., 2011; Karimpur et al., 2020). For example, to score a goal, a soccer forward must derive allocentric relationships (e.g., where is the goaltender relative to the posts?) from eye-centered visual inputs to predict an opening, and then transform this into body-centered motor commands. The neural mechanisms for egocentric/allocentric coding for vision, cognition, and navigation have been extensively studied (O'Keefe and Dostrovsky, 1971; O'Keefe, 1976; Rosenbaum et al., 2004; Milner and Goodale, 2006; Schenk, 2006; Ekstrom et al., 2014), but the mechanisms for goal-directed behavior are poorly understood. One clue is that humans aim reaches toward some intermediate point between conflicting egocentric/allocentric cues, suggesting Bayesian integration (Bridgeman et al., 1997; Lemay et al., 2004; Neely et al., 2008; Byrne and Crawford, 2010; Fiehler et al., 2014; Klinghammer et al., 2017). Neuroimaging studies suggest this may occur in parietofrontal cortex (Chen et al., 2018) but could not reveal the cellular mechanisms. However, similar behavior has been observed in the primate gaze system (Li et al., 2017), suggesting this system can also be used to study egocentric/allocentric integration.

It is thought that higher level gaze structures, lateral intraparietal cortex (LIP), frontal eye fields (FEF), superior colliculus (SC), primarily employ eye-centered codes (Russo and Bruce, 1993; Tehovnik et al., 2000; Klier et al., 2001; Paré and Wurtz, 2001; Goldberg et al., 2002), and transform target location (T) into future gaze position (G; Schall et al., 1995; Everling et al., 1999; Constantin et al., 2007). We recently confirmed this by fitting various spatial models against FEF and SC response field activity (Sadeh et al., 2015, 2020; Sajad et al., 2015, 2016). Visual responses coded for target position in eye coordinates (Te), whereas motor responses (separated from vision by a delay) coded for future gaze position in eye coordinates (Ge), and a progressive target-to-gaze (T-G) transformation (where G includes errors relative to T) along visual-memory-motor activity. Further, when we introduced a visual landmark, and then shifted it during the delay period, response field coordinate systems (in cells with visual, delay, and motor activity) shifted partially in the same direction (Bharmauria et al., 2020). Eventually this allocentric shift became integrated into the egocentric (T-G) transformation of all cells that produced a motor burst during the saccade. However, it is unclear whether this occurs independently through a direct visuomotor (VM) path to FEF, or in concert with higher control mechanisms.

One likely executive control mechanism could be the supplementary eye fields (SEF), located in the dorsomedial frontal cortex (Schlag and Schlag-Rey, 1987) and reciprocally connected to the FEF (Huerta and Kaas, 1990; Stuphorn, 2015). The SEF has visual and gaze motor response fields (Schlag and Schlag-Rey, 1987; Schall, 1991), but its role in gaze control is controversial (Abzug and Sommer, 2017). The SEF is involved in various high-level oculomotor functions (Olson and Gettner, 1995; Stuphorn et al., 2000; Tremblay et al., 2002; Sajad et al., 2019) and reference frame transformations, both egocentric (Schlag and Schlag-Rey, 1987; Schall et al., 1993; Martinez-Trujillo et al., 2004), and object-centered, i.e., one part of an object relative to another (Olson and Gettner, 1995; Tremblay et al., 2002). However, the role of the SEF in coding egocentric VM signals for head-unrestrained gaze shifts is untested, and its role in the implicit coding of gaze relative to independent visual landmarks is unknown.

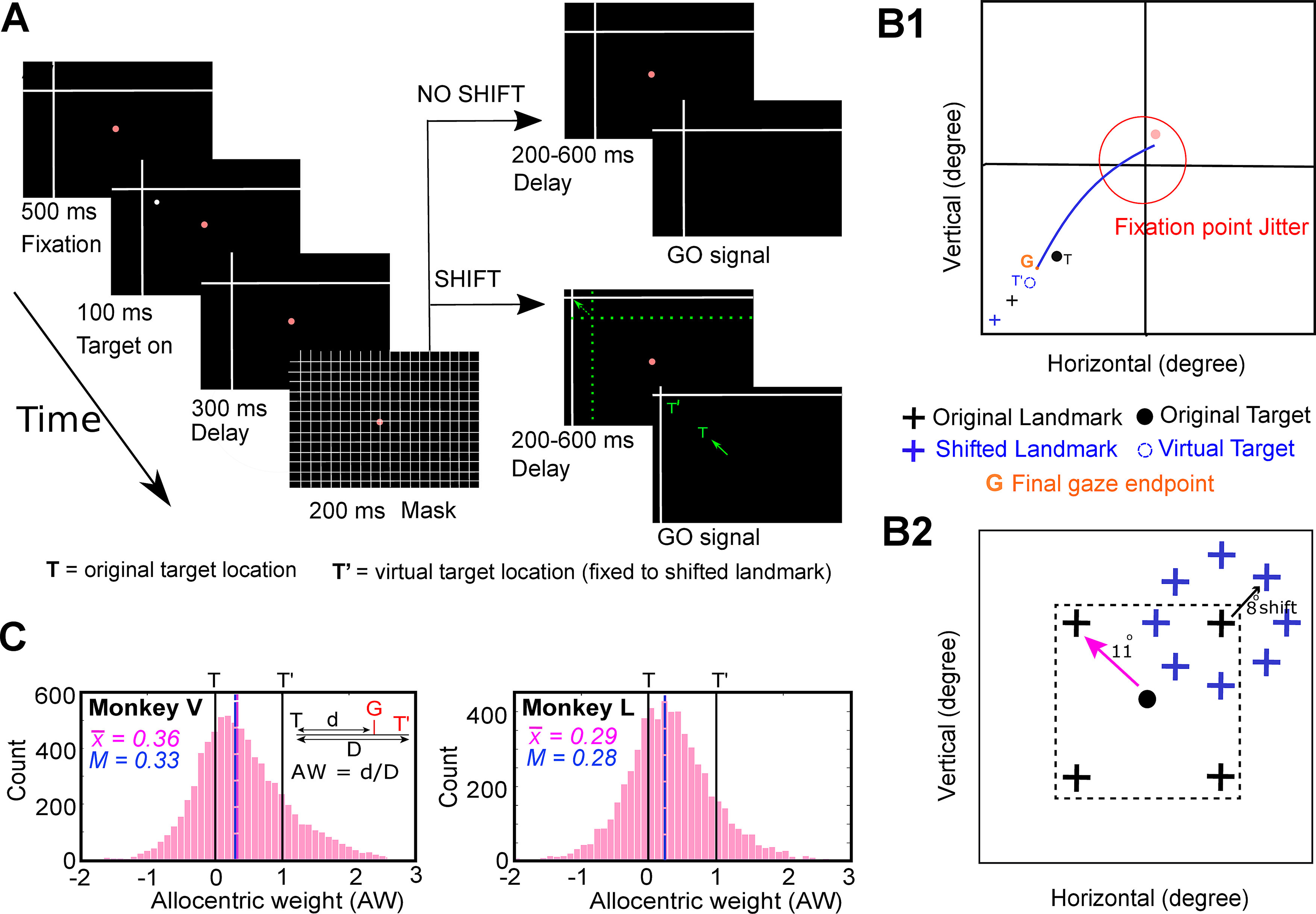

Here, we recorded SEF neurons in two head-unrestrained monkeys while they performed gaze shifts in the presence of implicitly conflicting egocentric and allocentric cues (Fig. 1A). As reported previously, gaze shifted away from the target, in the direction of a shifted landmark (Byrne and Crawford, 2010; Li et al., 2017). We employed our model-fitting procedure (Keith et al., 2009; DeSouza et al., 2011; Sadeh et al., 2015; Sajad et al., 2015) to analyze the neural data. First, we tested all possible egocentric and allocentric models. Second, we performed a spatial continuum analysis (between both egocentric and allocentric models) through time to see if, when, and how egocentric and allocentric transformations are integrated in the SEF. We find that (1) SEF neurons predominantly possess an eye-centered transformation from target-to-gaze coding and (2) landmark-centered information is integrated into this transformation, but through somewhat different cellular mechanisms than the FEF (Bharmauria et al., 2020). We thus propose a reciprocal SEF-FEF model for allocentric/egocentric integration in the gaze system.

Figure 1.

Experimental paradigm and behavior. A, Cue-conflict paradigm and its timeline. The monkey began the trial by fixating for 500 ms on a red dot while a landmark (L, intersecting lines) was already present on the screen. Then a target (white dot) was presented for 100 ms, followed by a 300-ms delay and a grid-like mask (200 ms). After the mask, and a second memory delay (200–600 ms), the animal was signaled (extinguishing of the fixation dot, i.e., go signal) to saccade head-unrestrained to the remembered target location either when the landmark was shifted (L’, denoted by the broken green arrow) or when it was not shifted (i.e., present at the original location). The monkey was rewarded for landing the gaze (G) within a radius of 8–12° around the original target (monkeys were rewarded for looking at T = original target, at T’ = virtually shifted target fixed to landmark, or between T and T’). The green arrow represents the head-unrestrained gaze shift to the remembered location. The reward window was centered on T so that the behavior was not biased. Note that the actual landmark shift was 8°, but for clarity, it has been increased in this schematic figure as indicated by the broken green arrow. Notably, the green colored items are only for the purposes of representation (they were not present on the screen). B1, Schematic of a gaze shift (blue line) from the home fixation point (red dot) toward the virtual target (broken blue circle, T’) fixed to the shifted landmark (blue cross, L’). G refers to the final gaze endpoint. B2, Schematic illustration of all (four) possible locations of the landmark (black cross) for an example target (black dot, T), with all possible locations of the shifted landmark relative to original landmark location. 
The landmark was presented 11° (indicated by pink arrow) from the target, in one of the four oblique directions and postmask it shifted 8° (blue cross stands for the shifted landmark, black arrow depicts the shift) from its initial location in one of the eight radial directions around the original landmark. C, AW distribution (x-axis) for Monkey V (left) and Monkey L (right) plotted as a function of the number of trials (y-axis) for all the analyzed trials from the spatially tuned neurons. The mean AW (vertical pink line) for MV and ML was 0.36 and 0.29 (mean = 0.33), respectively. Note: the “shift” condition included 90% of trials and the “no shift” condition only included 10% of the trials. The AW was calculated for the shifted condition only. The no-shift condition allowed us to test across the full range of shifts. To avoid biasing the behavior, the reward window included scores from –1 to +1.

Materials and Methods

Surgical procedures and recordings of 3D gaze, eye, and head

The experimental protocols complied with the guidelines of the Canadian Council on Animal Care on the use of laboratory animals and were approved by the York University Animal Care Committee. Neural recordings were performed on two female Macaca mulatta monkeys (Monkeys V and L), which were implanted with 2D and 3D scleral search coils in their left eyes for eye-movement and electrophysiological recordings (Crawford et al., 1999; Klier et al., 2003). The eye coils allowed us to register 3D eye movements (i.e., gaze) and orientation (horizontal, vertical, and torsional components of eye orientation relative to space). During the experiment, two head coils (orthogonal to each other) were also mounted that allowed similar recordings of the head orientation in space. We then implanted the recording chamber centered in stereotaxic coordinates at 25 mm anterior and 0 mm lateral for both animals. A craniotomy of 19 mm (diameter) that covered the chamber base (adhered over the trephination with dental acrylic) allowed access to the right SEF. Animals were seated within a custom-made primate chair during experiments, and this allowed free head movements at the center of three mutually orthogonal magnetic fields (Crawford et al., 1999). The values acquired from 2D and 3D eye and head coils allowed us to compute other variables such as the orientation of the eye relative to the head, eye-velocity and head-velocity, and accelerations (Crawford et al., 1999).

Basic behavioral paradigm

The visual stimuli were presented on a flat screen (placed 80 cm away from the animal) using laser projections (Fig. 1A). The monkeys were trained on a standard memory-guided gaze task to remember a target relative to a visual allocentric landmark (two intersecting lines acted as an allocentric landmark) thus leading to a temporal delay between the target presentation and initiation of the eye movement. This allowed us to separately analyze visual (aligned to target) and eye-movement-related (saccade onset) responses in the SEF. To not provide any additional allocentric cues, the experiment was conducted in a dark room. In a single trial, the animal began by fixating on a red dot (placed centrally) for 500 ms while the landmark was present on the screen. This was followed by a brief flash of visual target (T, white dot) for 100 ms, and then a brief delay (300 ms), a grid-like mask (200 ms, this hides the past visual traces, and also the current and future landmark), and a second memory delay (200–600 ms, i.e., from the onset of the landmark until the go signal). As the red fixation dot extinguished, the animal was signaled to saccade head-unrestrained (indicated by the solid green arrow) toward the memorized location of the target either in the presence of a shifted (indicated by broken green arrow) landmark (90% of trials) or in absence of it (10%, no-shift/zero-shift condition, i.e., landmark was present at the same location as before mask). These trials with zero-shift were used to compute data at the “origin” of the coordinate system for the T-T’ spatial model fits. The saccade targets were flashed one-by-one randomly throughout the response field of a neuron. Note: green color highlights the items that were not presented on the screen (they are only for representational purposes).

The spatial details of the task are in Figure 1B. Figure 1B1 shows an illustration of a gaze shift (blue curve) to an example target (T) in presence of a shifted landmark (blue cross). G refers to the final gaze endpoint, and T’ stands for the virtual target (fixed to the shifted landmark). The landmark vertex could initially appear at one of four spots located 11° obliquely relative to the target and then shift in any one of eight directions (Fig. 1B2). Importantly, the timing and amplitude (8°) of this shift was fixed. Since these animals had been trained, tested behaviorally (Li et al., 2017) and then retrained for this study over a period exceeding two years, it is reasonable to expect that they may have learned to anticipate the timing and the amount of influence of the landmark shift. However, we were careful not to bias this influence: animals were rewarded with a water-drop if gaze was placed (G) within 8–12° radius around the original target (i.e., they were rewarded if they looked at T, toward or away from T’, or anywhere in between). Based on our previous behavioral result in these animals (Li et al., 2017), we expected this paradigm to cause gaze to shift partially toward the virtually shifted target in landmark coordinates (T’).
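The stimulus geometry described above can be sketched in a few lines of Python. This is only an illustration, not the laboratory's stimulus code: the function names are hypothetical, positions are treated as planar screen coordinates in degrees, and the specific angles (obliques at 45°, 135°, 225°, and 315°; shifts at multiples of 45°) are assumptions consistent with "four oblique" and "eight radial" directions.

```python
import math

LANDMARK_ECCENTRICITY_DEG = 11.0  # landmark distance from target (from the text)
SHIFT_AMPLITUDE_DEG = 8.0         # fixed landmark shift amplitude (from the text)


def polar_offset(radius_deg, angle_deg):
    """Convert a polar offset (degrees of visual angle, direction) to (x, y)."""
    a = math.radians(angle_deg)
    return (radius_deg * math.cos(a), radius_deg * math.sin(a))


def landmark_configurations(target_xy):
    """Enumerate (landmark L, shifted landmark L', virtual target T') triples.

    Assumed angles: obliques at 45/135/225/315 deg, shifts every 45 deg.
    """
    tx, ty = target_xy
    configs = []
    for oblique in (45, 135, 225, 315):       # four oblique landmark positions
        ox, oy = polar_offset(LANDMARK_ECCENTRICITY_DEG, oblique)
        landmark = (tx + ox, ty + oy)
        for k in range(8):                    # eight radial shift directions
            sx, sy = polar_offset(SHIFT_AMPLITUDE_DEG, 45 * k)
            shifted = (landmark[0] + sx, landmark[1] + sy)
            virtual_target = (tx + sx, ty + sy)  # T' stays fixed to the landmark
            configs.append((landmark, shifted, virtual_target))
    return configs


def in_reward_window(gaze_xy, target_xy, radius_deg):
    """True if gaze lands within the reward radius centered on the original target."""
    return math.dist(gaze_xy, target_xy) <= radius_deg
```

Because the reward window is centered on T and its radius (8–12°) is at least as large as the 8° shift, gaze end points at T, at T’, or anywhere between them are all rewarded, which is what keeps the reward from biasing the landmark influence.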

Note that this paradigm was optimized for our method for fitting spatial models to neural activity (see below in the section ‘Fitting neural response fields against spatial models’), which is based on variable dissociations between measurable parameters such as target location and effectors (gaze, eye, head), and various egocentric/allocentric reference frames (Keith et al., 2009; Sajad et al., 2015). This was optimized by providing variable landmark locations and shift directions, and the use of a large reward window to allow these shifts (and other endogenous factors) to influence gaze errors relative to T. We also jittered the initial fixation locations within a 7–12° window to dissociate gaze-centered and space-centered frames of reference (note that no correlation was observed between the initial gaze location and final gaze errors). Further dissociations between effectors and egocentric frames were provided by the animals themselves, i.e., in the naturally variable contributions of the eye and head to initial gaze position and the amplitude/direction of gaze shifts. Such behavior has been described in detail in our previous papers (Sadeh et al., 2015; Sajad et al., 2015).

Behavioral recordings and analysis

During experiments, we recorded the movement of eye and head orientations (in space) with a sampling rate of 1000 Hz. For the analysis of eye movement, the saccade onset (eye movement in space) was marked at the point in time when the gaze velocity exceeded 50°/s, and the gaze offset was marked as the point in time when the velocity declined below 30°/s. The head movement was marked from the saccade onset till the time point at which the head velocity declined below 15°/s.
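The velocity criteria above amount to a simple threshold-crossing rule. The following is a minimal sketch under stated assumptions: the function name is hypothetical, the input is a precomputed speed trace in °/s sampled at 1000 Hz (so one sample equals 1 ms), and only the first movement in the trace is marked. It is not the analysis code used in the study.

```python
import numpy as np


def detect_movement(speed_deg_s, onset_thresh=50.0, offset_thresh=30.0):
    """Return (onset_idx, offset_idx) of the first movement, or None.

    Onset: first sample whose speed exceeds onset_thresh.
    Offset: first later sample whose speed falls below offset_thresh.
    """
    v = np.asarray(speed_deg_s, dtype=float)
    above = np.flatnonzero(v > onset_thresh)
    if above.size == 0:
        return None                      # threshold never crossed
    onset = int(above[0])
    below = np.flatnonzero(v[onset:] < offset_thresh)
    if below.size == 0:
        return None                      # movement did not end within the trace
    offset = onset + int(below[0])
    return onset, offset
```

With the default thresholds this marks gaze onset and offset. The end of the head movement could be found the same way by searching the head-speed trace from gaze onset onward for the first sample below 15°/s.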

When the landmark shifted (90% of trials), its influence on measured future gaze position (G) was called allocentric weight (AW), computed as follows:

$$AW = \frac{d}{D} \tag{1}$$

where d is the component of T-G (error space between the actual target location and the final measured gaze position) that projects onto the vector direction of the landmark shift, and D is the magnitude of the landmark shift (Byrne and Crawford, 2010; Li et al., 2017). This was done for each trial, and then averaged to find the representative landmark influence on behavior in a large number of trials. A mean AW score of zero signifies no landmark influence, i.e., gaze shifts headed on average toward T. A mean AW score of 1.0 means that on average, gaze headed toward a virtual target position (T’) that remained fixed to the shifted landmark position. As we shall see (Fig. 1C), AW scores for individual trials often fell between 0 and 1 but varied considerably, possibly because of trial-to-trial variations in landmark influence and/or other sources of variable gaze error that are present without a landmark shift (Sajad et al., 2015, 2016, 2020).
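As a worked illustration of the ratio of d to D described above, the per-trial AW can be computed with a dot product. This is a sketch, not the authors' code: the function name is hypothetical, positions are 2D coordinates in degrees, and the gaze error is taken as G minus T so that a gaze end point at T’ yields AW = 1, matching the interpretation given in the text.

```python
import numpy as np


def allocentric_weight(target, gaze, landmark_shift):
    """Per-trial allocentric weight AW = d / D.

    d: component of the gaze error (G - T, an assumed sign convention)
       along the landmark-shift direction.
    D: magnitude of the landmark shift.
    """
    t = np.asarray(target, dtype=float)
    g = np.asarray(gaze, dtype=float)
    s = np.asarray(landmark_shift, dtype=float)
    D = np.linalg.norm(s)
    if D == 0:
        raise ValueError("AW is undefined for a zero landmark shift")
    d = np.dot(g - t, s) / D             # scalar projection onto the shift direction
    return d / D
```

For example, with a target at (0, 0), an 8° rightward landmark shift, and gaze landing at (4, 3), d is 4° and AW is 0.5. The 3° error orthogonal to the shift does not contribute. Values below 0 or above 1 arise whenever gaze error has a component opposite to the shift or overshoots T’.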

Electrophysiological recordings and response field mapping

Tungsten electrodes (0.2- to 2.0-MΩ impedance, FHC Inc.) were lowered into the SEF [using a Narishige (MO-90) hydraulic micromanipulator] to record extracellular activity. The recorded activity was then digitized, amplified, filtered, and saved for offline spike sorting using template matching and applying principal component analysis on the isolated clusters with the Plexon MAP System. The recorded sites of SEF (in head-restrained conditions) were further confirmed by injecting a low-threshold electrical microstimulation (50 μA) as previously used (Bruce et al., 1985). A superimposed pictorial of the recorded sites from both animals is presented in Figure 2A,B (Monkey L in blue and Monkey V in red).

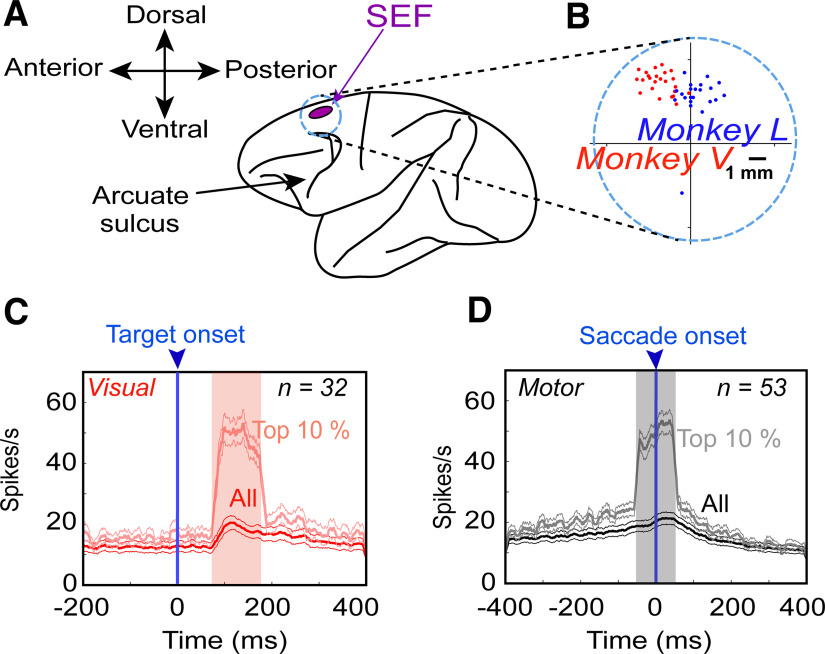

Figure 2.

SEF recordings. A, Purple inset represents the location of the SEF and the circle (note that it does not correspond to the original size of chamber) corresponds to the chamber. B, A zoomed-in overlapped section of the chamber and the sites of neural recordings (dots) confirmed with 50-μA current stimulation from Monkey V (red) and Monkey L (blue). C, Mean (±95% confidence) of the spike-density plots [dark red: all trials from all neurons; light red: top 10% best trials most likely representing the hot spot of every neuron’s response field in the visual population, aligned to the onset of the target (blue arrow)]. D, Same as C but for motor responses aligned to the saccade onset (blue arrow). The shaded region denotes the 100-ms sampling window. Note that, in both plots, the “top 10%” neural data were selected using the above sampling windows; therefore, delay activity is not completely represented in these plots (it will be examined later in Results).

We mostly searched for neurons while the monkey freely (head-unrestrained) scanned the environment. Once we noticed that a neuron had reliable spiking, the experiment started. The response field (visual and/or motor) of each neuron was characterized while the animal performed the memory-guided gaze shift paradigm as described above in the section ‘Basic behavioral paradigm’. After an initial sampling period to determine the horizontal and vertical extent of the response field, targets were presented (one per trial) in a 4 × 4 to 7 × 7 array (5–10° from each other) spanning 30–80°. This allowed the mapping of visual and motor response fields such as those shown in Results. For analysis we aimed at ∼10 trials per target, so the bigger the response field (and thus the more targets), the more trials were required, and vice versa. On average, 343 ± 166 (mean ± SD) trials/neuron were recorded, again depending on the size of the response field. We did such recordings from >200 SEF sites, often in conjunction with simultaneous FEF recordings, as reported previously (Bharmauria et al., 2020).

Data inclusion criteria, sampling window, and neuronal classification

In total, we isolated 256 SEF neurons: 102 and 154 neurons were recorded from Monkeys V and L, respectively. Of these, we only analyzed task-modulated neurons with clear visual burst and/or with perisaccadic movement response (Fig. 2C,D). Neurons that only had postsaccadic activity (activity after the saccade onset) were excluded. Moreover, neurons that lacked significant spatial tuning were also eliminated (see below, Testing for spatial tuning). In the end, after applying our exclusion criteria, we were left with 37 and 31 spatially tuned neurons in Monkeys V and L, respectively. Only trials in which the monkeys landed their gaze within the reward acceptance window were included; however, we eliminated gaze end points beyond ±2° of the mean distribution from our analysis. For the analysis of the neural activity, the “visual epoch” sampling window was chosen as a fixed 100-ms window of 80–180 ms aligned to the target onset, and the “movement period” was characterized as a high-frequency perisaccadic 100-ms (−50 to +50 ms relative to saccade onset) period (Sajad et al., 2015). This allowed us to get a good signal/noise ratio for neuronal activity analysis, and most likely corresponded to the epoch in which gaze shifts were influenced by SEF activity. After rigorously employing our exclusion criteria and data analyses (Table 1), we further dissociated the spatially tuned neurons into pure visual (V; n = 6), pure motor (M; n = 26) and VM neurons (which possessed visual, maximal delay, and motor activity, n = 36) based on the common dissociation procedure (Bruce and Goldberg, 1985; Sajad et al., 2015; Schall, 2015; Khanna et al., 2019). Note: the motor population also includes neurons which have delayed memory activity (Sajad et al., 2016).
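The two fixed sampling windows described above can be illustrated with a short spike-counting routine. This is a sketch under assumptions, not the study's pipeline: the function and constant names are hypothetical, spike and event times are in milliseconds on a common clock, and windows are treated as half-open intervals.

```python
import numpy as np

VISUAL_WINDOW_MS = (80.0, 180.0)   # relative to target onset (from the text)
MOTOR_WINDOW_MS = (-50.0, 50.0)    # relative to saccade onset (from the text)


def window_rate(spike_times_ms, event_time_ms, window_ms):
    """Mean firing rate (spikes/s) in a window aligned to an event.

    The window is half-open, [start, stop), an assumption made here so that
    adjacent windows would never double-count a spike.
    """
    aligned = np.asarray(spike_times_ms, dtype=float) - event_time_ms
    start, stop = window_ms
    count = np.count_nonzero((aligned >= start) & (aligned < stop))
    return count * 1000.0 / (stop - start)
```

For one trial, `window_rate(spikes, target_onset, VISUAL_WINDOW_MS)` gives the visual-epoch response and `window_rate(spikes, saccade_onset, MOTOR_WINDOW_MS)` gives the perisaccadic response. These per-trial rates are the values that would later be plotted against position in each candidate spatial model.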

Table 1.

Statistical analyses performed

| Analysis | Statistical test | Power |
|---|---|---|
| Distribution significantly shifted from a point | One-sample Wilcoxon signed-rank test | p < 0.05 |
| Comparison between models | Brown–Forsythe test | p = 100 = 1; the best model |
| Comparison between distributions | Mann–Whitney U test | p < 0.05 |
| Transformation between the visual and the motor responses for VM neurons | Wilcoxon matched-pairs signed-rank test | p < 0.05 |
| Spatial tuning test for a neuron | CI (see above in the section ‘Testing for spatial tuning’) | p < 0.05 |
| Comparison between VM and motor neurons in SEF and FEF | Three-factor ANOVA analysis | p < 0.05 |
| Correlation between T-G and T-T’ | Spearman correlation | p < 0.05 |

Spatial models analyzed (egocentric and allocentric)

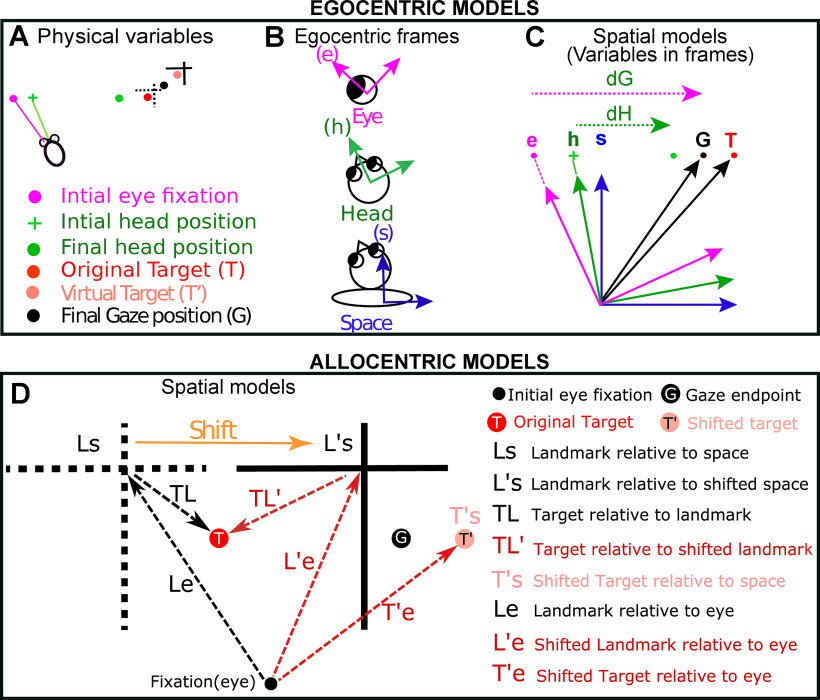

Figure 3 graphically shows how we derived the 11 egocentric “canonical” models tested in this study and then chose the best egocentric model to compare with the allocentric models. Briefly, based on these models, this figure illustrates the formal means for comparing between target versus gaze position coding and gaze versus eye versus head displacement/position (Fig. 3A–C), with each plotted in different possible frames of reference. We then use the landmark-centered information to test between the egocentric versus allocentric modes of coding (Fig. 3D). Note that in our analysis, these models were based on actual target, gaze, eye, and head data, either derived from geometric calculations (in the case of T) or eye/head coil measurements. As described previously, the variability required to distinguish between these models was either provided by ourselves (i.e., stimulus placement) or the monkeys’ own behavior (i.e., variable gaze errors and variable combinations of eye-head position; Sajad et al., 2015).

Figure 3.

Description of the different spatial models (egocentric and allocentric) tested in this study. A, Physical variables in one trial. The projections of initial gaze (magenta dot), initial head (green cross), final gaze (black dot), and final head (green dot) orientations on the screen are depicted. Note: final gaze often lands between the target (T, red dot) and shifted target (T’, light red dot). B, While head-unrestrained, these positions can be encoded in relation to three egocentric frames of reference: eye (e), head (h), and space/body (s). Since the target was sufficiently far from the head to minimize translation effects, and because our model-fitting method is insensitive to these biases, we centered these frames to be aligned as shown in C. C, Different canonical models derived after plotting the physical parameters in fundamental egocentric frames. dG: gaze displacement referring to final gaze position with respect to the home fixation point (not the eye); dH: displacement of head in space coordinates; dE (data not shown): the displacement of eye in the head accompanying the gaze shift. Target and final gaze, eye, and head positions can be represented in any one of the three initial reference frames. See text for details of all egocentric models. D, Allocentric models that were compared with the most relevant egocentric models (Te, Ge). The broken black cross represents the location of initial landmark (L) and the solid black cross stands for the shifted (indicated by orange arrow) landmark (L’). The red, the black, and the light red solid circles correspond to the target (T), the gaze endpoint (G), and the virtual target (T’) locations, respectively. 
Tested allocentric models: Ls, landmark relative to space; Le, landmark relative to eye; TL, target relative to landmark; L’s, shifted landmark relative to space; L’e, shifted landmark relative to eye, TL’, target relative to shifted landmark, T’e, shifted target relative to eye; T’s, shifted target relative to space.

We first tested for all the egocentric models as reported in our previous studies (Sajad et al., 2015, 2016) and then tested the best egocentric model with all “pure” allocentric models. Based on different spatial parameters [most importantly target (T) and final gaze position (G)], we fitted visual and motor response fields of SEF against previously tested 11 (Fig. 3A–C) egocentric canonical models in FEF (Sajad et al., 2015). Note that Te and Ge codes were obtained by mathematically rotating the experimental measures of T and G in space coordinates by the inverse of experimentally measured initial eye orientation to obtain the measures in eye coordinates (Klier et al., 2001). In other words, Te is based on the actual target location relative to initial eye orientation, whereas Ge is based on the final gaze position relative to initial eye orientation.

The following models were also tested: dH, difference between the initial and final head orientation in space coordinates; dE, difference between the initial and final eye orientation in head coordinates; dG, difference between the initial and final gaze position in space coordinates; Hs, final position of the head in space coordinates; Eh, final position of the eye in head coordinates; Gs, Ge, Gh: gaze in space, eye, and head coordinates, respectively; Ts, Te, Th: target in space, eye, and head coordinates, respectively. The final position refers to orientation of eye/head after the gaze saccade. These models are detailed in the previous study (Sajad et al., 2015). Note that some of these models (like dG and Ge) might not be distinguishable within the range of initial gaze positions and saccade amplitudes described here (Crawford and Guitton, 1997).

Since an allocentric landmark was involved in this task, we analyzed eight additional (allocentric) models of target coding based on the original and shifted landmark location (Fig. 3D). In the allocentric analysis, we retained the best egocentric model (Te for visual neurons and Ge for motor neurons) for comparison. The tested allocentric models are: Ls, landmark within the space coordinates; L’s, shifted landmark within the space coordinates; Le, landmark within eye coordinates; L’e, shifted landmark within eye coordinates; T’s, shifted target in space coordinates; T’e, shifted target in eye coordinates; TL, target relative to landmark; TL’, target relative to shifted landmark. Note: prime (’) stands for the positions that are related to the shifted location of the landmark.

Intermediate spatial models used in main analysis

Previous studies of FEF responses from our lab have shown that responses do not fit exactly against spatial models like Te or Ge, but may actually fit best against intermediate models between the canonical ones (Fig. 4A, lower left; Sajad et al., 2020). As in our previous studies (Sajad et al., 2016; Sadeh et al., 2020), we found that a T-G continuum (specifically, steps along the “error line” between Te and Ge) best quantified the SEF egocentric transformation (Fig. 4A, lower left). This continuum is similar to the concept of an intermediate frame of reference (e.g., between the eye and head) but is instead intermediate between target and gaze position within the same frame of reference. Hereafter, we will sometimes refer to T-G as “the egocentric code.”

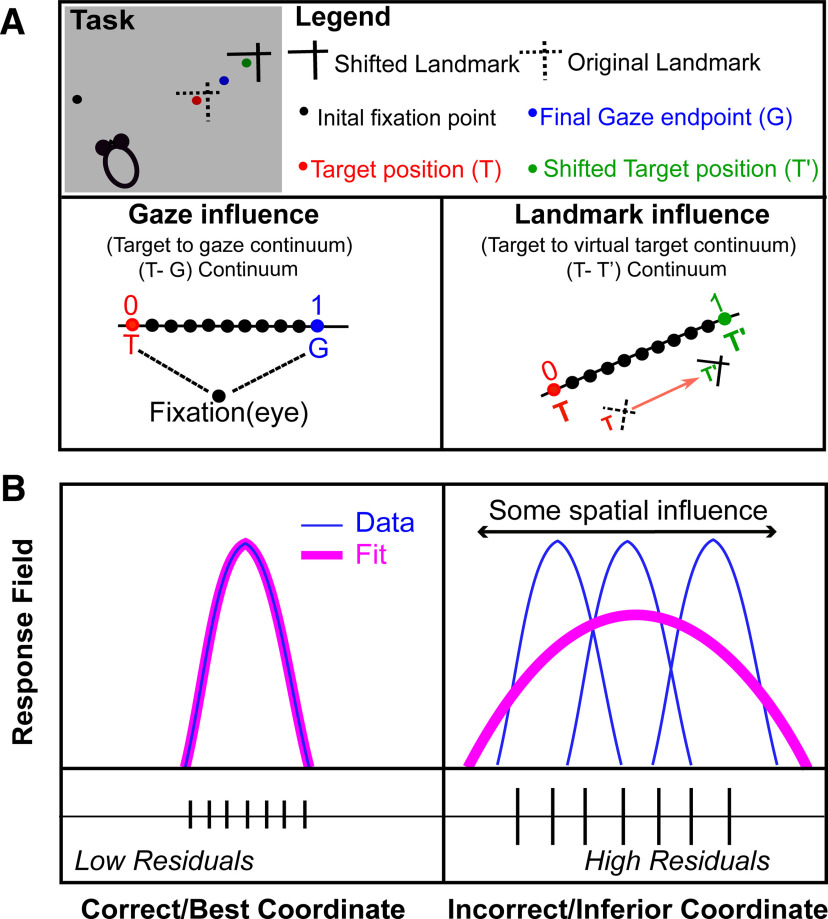

Figure 4.

Schematic representation of spatial parameters and spatial model fitting technique. A, An illustration of different spatial parameters in a single trial. Black dot represents the projections of initial fixation/gaze and the blue dot corresponds to final gaze (G). The red dot depicts the location of target (T) in relation to the original landmark (L) position (broken intersecting lines), and the green dot represents the virtually shifted target (T’) fixed to the shifted landmark (L’) location (solid intersecting lines). Note that the final gaze was placed between the T and T’ (i.e., it shifted toward the shifted landmark). In head-unrestrained conditions, the target can be encoded in egocentric coordinates (eye, head, or body; Sajad et al., 2015). We plotted two continuums: a T-G continuum (egocentric, we divided the space between T and G into 10 equal steps, thus treating these spatial codes as a continuous spatiotemporal variable) to compute the gaze influence and an allocentric shift T-T’ continuum (original target to virtual target) based on the landmark shift to compute the influence of landmark shift on the neuronal activity. B, A logical schematic of response field analysis; x-axis depicts the coordinate frame, and the y-axis corresponds to the related activity to the target. Simply, if the activity related to a fixed target is plotted in the correct reference frame, this will lead to the lowest residuals, i.e., if the neural activity to a fixed target location is fixed (left), then the data (blue) would fit (pink) better on that, leading to lower residuals in comparison with when the activity for the target is plotted in an incorrect frame, thus leading to higher residuals (right).

Further, to quantify the influence of the landmark shift, we created (Fig. 4A, lower right) another continuum (T-T’, the line between the original target and the target if it were fixed to the landmark) between Te (target fixed in eye coordinates) and T’e (virtual target fixed in landmark coordinates), computed in eye coordinates with 10 intermediary steps between, and additional steps on the flanks (10 beyond Te and 10 beyond T’e). These additional 10 steps beyond the canonical models were included (1) to quantify if neurons can carry abstract spatial codes around the canonical models and (2) to eliminate the misleading edge effects (else the best spatial models would cluster around the canonical models). The T-T’ continuum will allow us to test whether SEF code is purely egocentric (based on T), allocentric (based on T’), or contains the integrated allocentric + egocentric information that eventually actuates the behavior. Note that AW and T-T’ are geometrically similar, but the first describes behavioral data, whereas the second describes neural data. Hereafter, we will refer to T-T’ as the “allocentric shift.”
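The construction of such a continuum can be sketched as linear interpolation (and extrapolation) along the line joining two canonical positions. The indexing used here is an assumption for illustration only: step 0 is placed at the first model (e.g., Te), step 10 at the second (e.g., T’e), and flanking steps run from −10 to 20. The function name is hypothetical.

```python
import numpy as np


def continuum_points(start_xy, end_xy, steps_between=10, steps_beyond=0):
    """Positions along the line from start to end, with optional flanking steps.

    Returns (step_index, points): step 0 is `start`, step `steps_between` is
    `end`, and negative or larger indices extrapolate beyond the two models.
    """
    start = np.asarray(start_xy, dtype=float)
    end = np.asarray(end_xy, dtype=float)
    k = np.arange(-steps_beyond, steps_between + steps_beyond + 1)
    frac = k / steps_between                      # 0 at start, 1 at end
    points = start + frac[:, None] * (end - start)
    return k, points
```

Under these assumptions, `continuum_points(Te, T_prime_e, 10, 10)` yields 31 candidate positions per trial for the T-T’ continuum, while `continuum_points(Te, Ge, 10, 0)` yields the 11 positions of a T-G continuum without flanks. Each step defines one intermediate spatial model, because the position at that step is computed separately for every trial and the response field is then fit against those positions.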

Fitting neural response fields against spatial models

In order to test different spatial models, they should be spatially separable (Keith et al., 2009; Sajad et al., 2015). The natural variation in monkeys’ behavior allowed this spatial separation (for details, see Results). For instance, the variability induced by memory-guided gaze shifts permitted us to discriminate target coding from gaze coding; the initial eye and head locations allowed us to distinguish between different egocentric reference frames; and variable eye and head movements for a gaze shift permitted the separation of different effectors. As opposed to decoding methods, which generally test whether a spatial characteristic is implicitly coded in patterns of population activity (Bremmer et al., 2016; Brandman et al., 2017), the method employed in this study directly examines which model best predicts the activity in spatially tuned neural responses. The logic of our response field fitting in different reference frames is schematized in Figure 4B. Specifically, if the response field data are plotted in the correct reference frame, this will yield the lowest residuals (errors between the fit and the data points) compared with other models, i.e., if a fit computed to the response field matches the data, then this will yield low residuals (Fig. 4B, left). On the other hand, if the fit does not match the data well, this will lead to higher residuals (Fig. 4B, right). For example, if an eye-fixed response field is computed in eye coordinates, this will lead to lower residuals, and if it is plotted in any other, incorrect coordinate frame, such as space coordinates, this will produce higher residuals (Sajad et al., 2015).

In practice, we employed a non-parametric fitting method to characterize the neural activity with reference to a spatial location and also varied the spatial bandwidth of the fit to accommodate any response field size, shape, or contour (Keith et al., 2009). We tested between various spatial models using predicted residual error sum of squares (PRESS) statistics. To independently compute the residual for a trial, the actual activity related to it was subtracted from the corresponding point on the fit computed over all the other trials (similar to cross-validation). Notably, if the physical shift between two models is systematic (in direction and amount), this will appear as a shifted or expanded response field, and our model-fitting approach would not be able to distinguish the two models, as they would produce virtually indistinguishable residuals. Because in our study the distribution of relative positions across different models also possessed a non-systematic variable component (e.g., variability in gaze endpoint errors, or unpredictable landmark shifts), the response fields invariably stayed at the same location, but the dissociation between spatial models was based on the residual analysis.
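The leave-one-out logic behind the PRESS residuals can be illustrated with a minimal sketch. It assumes a Gaussian-kernel weighted average as the non-parametric fit; the function names and the toy data are hypothetical and are not taken from the analysis code used in the study.

```python
import math


def kernel_fit(x, y, positions, rates, bandwidth):
    """Gaussian-kernel weighted average of firing rates evaluated at (x, y)."""
    num = den = 0.0
    for (px, py), r in zip(positions, rates):
        w = math.exp(-((px - x) ** 2 + (py - y) ** 2) / (2.0 * bandwidth ** 2))
        num += w * r
        den += w
    return num / den if den > 0 else 0.0


def press_residuals(positions, rates, bandwidth):
    """Residual of each trial against a fit computed on all other trials."""
    residuals = []
    for i, ((x, y), r) in enumerate(zip(positions, rates)):
        other_pos = positions[:i] + positions[i + 1:]
        other_rates = rates[:i] + rates[i + 1:]
        residuals.append(r - kernel_fit(x, y, other_pos, other_rates, bandwidth))
    return residuals


def mean_press(positions, rates, bandwidth):
    """Mean squared leave-one-out residual for one model at one bandwidth."""
    res = press_residuals(positions, rates, bandwidth)
    return sum(e * e for e in res) / len(res)


# Toy data (hypothetical): firing depends only on position in the "correct" frame.
rates = [10.0] * 4 + [0.0] * 4
correct_frame = [(0.0, 0.0)] * 4 + [(20.0, 0.0)] * 4
wrong_frame = [(0.0, 0.0), (20.0, 0.0)] * 4  # same trials, scrambled positions

press_correct = mean_press(correct_frame, rates, bandwidth=2.0)
press_wrong = mean_press(wrong_frame, rates, bandwidth=2.0)
```

In this toy case `press_correct` is close to zero, while `press_wrong` is large because trials with different firing rates land on the same location. This is the dissociation illustrated in Figure 4B.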

We plotted response fields (visual and movement) of neurons in the coordinates of all the canonical (and intermediate) models. To map the visual response in egocentric coordinates, we took eye and head orientations at the time of target presentation, and for movement response fields, we used behavioral measurements at the time when the gaze shift started (Keith et al., 2009; DeSouza et al., 2011; Sajad et al., 2015). Likewise, for the allocentric models, we used the initial and the shifted landmark location to plot our data. Since we did not know the size and shape of a response field a priori, and since the spatial distribution of data was different for every spatial model (e.g., the head models would have a smaller range than the eye models), we computed the non-parametric fits with different kernel bandwidths (2–25°), thus making sure that we did not bias the fits toward a particular size and spatial distribution. For all the tested models with different non-parametric fits, we computed the PRESS residuals to reveal the best model for the neural activity (the one that yielded the lowest PRESS residuals). We then statistically compared (Keith et al., 2009) the mean PRESS residuals of the best model with the mean PRESS residuals of the other models at the same kernel bandwidth (two-tailed Brown–Forsythe test). Finally, we performed the same statistical analysis (Brown–Forsythe) at the population level (Keith et al., 2009; DeSouza et al., 2011). For the models in intermediate continua, a similar procedure was used to compute the best fits.

Testing for spatial tuning

The method described above in the section ‘Fitting neural response fields against spatial models’ assumes that neuronal activity is structured as spatially tuned response fields. This does not imply that other neurons do not subserve the overall population code (Goris et al., 2014; Bharmauria et al., 2016; Leavitt et al., 2017; Chaplin et al., 2018; Zylberberg, 2018; Pruszynski and Zylberberg, 2019), but with our method, only tuned neurons can be explicitly tested. We tested for neuronal spatial tuning as follows. We randomly shuffled the firing rate data points across the position data that we obtained from the best model, repeating this 100 times to obtain 100 random response fields. The mean PRESS residual distribution (PRESSrandom) of the 100 randomly generated response fields was then statistically compared with the mean PRESS residual (PRESSbest-fit) distribution of the best-fit model (unshuffled, original data). If the best-fit mean PRESS fell outside of the 95% confidence interval of the distribution of the shuffled mean PRESS, then the neuron’s activity was deemed spatially selective. At the population level, some neurons displayed spatial tuning at certain time steps and others did not because of low signal/noise ratio. Thus, we removed the time steps where the population mean spatial coherence (goodness of fit) was statistically indistinguishable from the baseline (before target onset), because there was no task-related information at this time and thus neural activity exhibited no spatial tuning. We defined a coherence index (CI) for spatial tuning, which was calculated for a single neuron as (Sajad et al., 2016):

$$\mathrm{CI} = 1 - \frac{\mathrm{PRESS}_{\text{best-fit}}}{\mathrm{PRESS}_{\text{random}}} \tag{2}$$

If the PRESSbest-fit was similar to PRESSrandom then the CI would be roughly 0, whereas if the best-fit model is a perfect fit (i.e., PRESSbest-fit = 0), then the CI would be 1. We only included those neurons in our analysis that showed significant spatial tuning.
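The shuffle test and the coherence index can be sketched as follows. This is a simplified illustration, not the analysis code of the study: it uses a one-dimensional Gaussian-kernel fit, takes CI as 1 minus the ratio of PRESSbest-fit to PRESSrandom (which matches the two limiting cases stated above), and treats "outside the 95% confidence interval" as falling below the 2.5th percentile of the shuffled distribution. All names and the toy tuning curve are hypothetical.

```python
import math
import random


def mean_press(positions, rates, bandwidth=2.0):
    """Mean squared leave-one-out residual of a 1-D Gaussian-kernel fit."""
    total = 0.0
    for i, (x, r) in enumerate(zip(positions, rates)):
        num = den = 0.0
        for j, (px, pr) in enumerate(zip(positions, rates)):
            if j == i:
                continue  # leave the current trial out of its own fit
            w = math.exp(-((px - x) ** 2) / (2.0 * bandwidth ** 2))
            num += w * pr
            den += w
        pred = num / den if den > 0 else 0.0
        total += (r - pred) ** 2
    return total / len(rates)


def shuffled_press(positions, rates, n_shuffles=100, seed=0):
    """Mean PRESS of response fields built from shuffled firing rates."""
    rng = random.Random(seed)
    values = []
    for _ in range(n_shuffles):
        shuffled = rates[:]
        rng.shuffle(shuffled)
        values.append(mean_press(positions, shuffled))
    return values


def coherence_index(press_best, press_random):
    """CI = 1 - PRESS_best-fit / PRESS_random."""
    return 1.0 - press_best / press_random


def is_spatially_tuned(press_best, shuffled):
    """Assumed criterion: best-fit PRESS below the 2.5th percentile of shuffles."""
    lower = sorted(shuffled)[int(0.025 * len(shuffled))]
    return press_best < lower


# Toy neuron (hypothetical): Gaussian tuning centered at position 10.
positions = [float(x) for x in range(20)]
rates = [30.0 * math.exp(-((x - 10.0) ** 2) / (2.0 * 3.0 ** 2)) for x in positions]

press_best = mean_press(positions, rates)
shuffles = shuffled_press(positions, rates)
ci = coherence_index(press_best, sum(shuffles) / len(shuffles))
tuned = is_spatially_tuned(press_best, shuffles)
```

For a clearly tuned toy neuron such as this one, `ci` lies well above 0 and `tuned` is true; for an untuned neuron the best-fit PRESS would sit inside the shuffled distribution and the CI would be near 0.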

Time normalization for spatiotemporal analysis

A major aim of this study was to track the progression of the T-G and T-T’ codes in spatially tuned neuron populations, from the landmark shift until the saccade onset. The challenge here was that this period was variable, so that averaging data across trials for each neuron would result in the mixing of very different signals (see Extended Data Fig. 11-1). To compensate for this, we time-normalized these data (Sajad et al., 2016; Bharmauria et al., 2020). To do this, the neural firing rate (in spikes per second; the number of spikes divided by the sampling interval for each trial) was sampled at seven half-overlapping time windows (with a range of 80.5–180.5 ms depending on trial duration). The rationale behind the choice of bin number was to make sure that the sampling time window was wide enough, and therefore robust enough, to account for the stochastic nature of spiking activity (thus ensuring that there were sufficient neuronal spikes in the sampling window to do effective spatial analysis; Sajad et al., 2016). Once the firing rate was estimated for each trial at a given time step, the rates were pooled together for the spatial modeling. Note that the final (seventh) time step also contained some part of the perisaccadic sampling window. Finally, we performed our T-G/T-T’ fits on the data for each of these time bins. In short, this procedure allowed us to treat the whole sequence of memory-motor responses from the landmark shift until saccade onset as a continuum.
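One way to picture the time normalization is the sketch below. It assumes that the seven half-overlapping windows exactly tile the interval from landmark shift to saccade onset, so that the window width is 2D/(n + 1) for an interval of duration D and n bins; this geometry is an assumption made for illustration, and the function names and spike times are hypothetical. Under this assumption, intervals of 322 and 722 ms would give window widths of 80.5 and 180.5 ms, which is consistent with the reported range.

```python
def half_overlapping_windows(start_ms, end_ms, n_bins=7):
    """Split [start_ms, end_ms] into n_bins windows, each overlapping half of the next."""
    width = 2.0 * (end_ms - start_ms) / (n_bins + 1)
    step = width / 2.0
    return [(start_ms + k * step, start_ms + k * step + width)
            for k in range(n_bins)]


def binned_firing_rates(spike_times_ms, start_ms, end_ms, n_bins=7):
    """Firing rate (spikes/s) in each time-normalized window for one trial."""
    rates = []
    for lo, hi in half_overlapping_windows(start_ms, end_ms, n_bins):
        count = sum(1 for t in spike_times_ms if lo <= t < hi)
        rates.append(1000.0 * count / (hi - lo))
    return rates


# Hypothetical trial: landmark shift at 0 ms, saccade onset at 400 ms.
windows = half_overlapping_windows(0, 400)
trial_rates = binned_firing_rates([10, 20, 60, 350], 0, 400)
```

Because every trial yields the same number of bins regardless of its duration, the rate at bin k can be pooled across trials before fitting the spatial models for that time step.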

Results

Influence of landmark shift on behavior

To investigate how the landmark influences behavior toward an object of interest, we used a cue-conflict task, where a visual landmark shifted during the memory delay, i.e., between the target presentation and the gaze shift (Fig. 1A). The task is further schematized in Figure 1B with possible target, original landmark, virtual target and shifted landmark locations. We then computed the influence of the landmark shift as described previously (Li et al., 2017) and further confirmed it in the current dataset. This is computed as AW, i.e., the component of gaze end points along the axis between the target and the cue shift direction. If AW equals 0, then it implies no influence of the shifted landmark, whereas if AW equals 1 then it indicates a complete influence of landmark shift on the gaze (see Materials and Methods). Note that we performed this analysis on the same trials as used for the analysis of spatially tuned neural activity in the results section.
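The AW score described above can be illustrated as a normalized projection. This is a sketch under the assumption that AW is the component of the gaze error (G minus T) along the T-to-T’ axis, divided by the size of the shift; the function name and the example coordinates are hypothetical, and the exact definition is given in Materials and Methods and in Li et al. (2017).

```python
def allocentric_weight(target, shifted_target, gaze):
    """Projection of (gaze - target) onto (shifted_target - target), normalized.

    Returns 0 when gaze lands at the target along the shift axis
    and 1 when it lands at the virtually shifted target.
    """
    dx = shifted_target[0] - target[0]
    dy = shifted_target[1] - target[1]
    gx = gaze[0] - target[0]
    gy = gaze[1] - target[1]
    return (gx * dx + gy * dy) / (dx * dx + dy * dy)


# Hypothetical trial: 8 deg rightward landmark shift (positions in degrees).
t = (0.0, 0.0)
t_prime = (8.0, 0.0)
aw_none = allocentric_weight(t, t_prime, (0.0, 3.0))     # error orthogonal to shift
aw_full = allocentric_weight(t, t_prime, (8.0, -2.0))    # gaze follows the landmark
aw_partial = allocentric_weight(t, t_prime, (2.88, 1.0)) # partial influence
```

Errors orthogonal to the shift direction do not contribute, so AW isolates the landmark-related component of the gaze endpoint from the overall gaze error.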

In both animals (Monkeys V and L), the gaze endpoints scattered along the one-dimensional axis of the landmark shift (Fig. 1C), producing a bell-like distribution of gaze errors. However, both distributions showed a highly significant (p < 0.001, one-sample Wilcoxon signed-rank test) shift from the original target (0) in the direction of the landmark shift (with a mean AW of 0.36 in Monkey V and 0.29 in Monkey L). There was considerable trial-to-trial variance around these means, but note that such variance was present or even larger in the absence of a landmark (Sajad et al., 2015; Li et al., 2017). The overall average error (distance) between T and final gaze, i.e., including errors in all directions, was 8.19 ± 5.27 (mean ± SD) and 10.35 ± 5.89 for Monkeys V and L, respectively. No correlation was found between AW and saccade latency (Bharmauria et al., 2020). These results generally agree with previous cue-conflict landmark studies (Neggers et al., 2005; Byrne and Crawford, 2010; Fiehler et al., 2014) and specifically with our use of this paradigm in the same animals, with only slight differences owing to collection of data on different days (Li et al., 2017; Bharmauria et al., 2020).

In order to determine when the landmark first influenced the SEF spatial code, and when that influence became fully integrated with the default SEF codes, we asked the following questions in their logical order: (1) what are the fundamental egocentric and/or allocentric codes in the SEF; (2) how does the landmark shift influence these neural codes; (3) what is the contribution of different SEF cell types (visual, VM, and motor); and finally, (4) is there any correlation between the SEF allocentric and egocentric codes, as we found in the FEF?

Neural recordings: general observation and classification of neurons

To understand the neural underpinnings of the behavior (as revealed above in the section ‘Influence of landmark shift on behavior’) in the SEF activity, we recorded visual and motor responses from over 200 SEF sites using tungsten microelectrodes, while the monkey performed the cue-conflict task (Fig. 2A,B). We analyzed a total of 256 neurons and after applying our rigorous exclusion criteria (see Materials and Methods and Table 1), we were finally left with 68 significantly spatially tuned neurons (see Materials and Methods): 32 significantly spatially tuned visual responses (including V and VM) and 53 (including M and VM) significantly spatially tuned motor responses. Many other neurons (n = 188) were not spatially tuned or did not respond to the event and were thus excluded from further analyses. Typically, neurons that were not spatially tuned were responsive at some time during the task throughout both visual fields and did not show preference for any of the spatial models.

The mean spike density graphs (with the 100-ms sampling window) for the visual (red) and motor (black) neurons are shown in Figure 2C,D, respectively. We then performed the non-parametric analysis (Fig. 4A,B) while using all trials throughout the response field of each neuron (dark black and red curves), but we also display the top 10% from every neuron during the sampling window (light red and gray for visual and motor activity, respectively), roughly corresponding to the “hot-spot” of the response field. We first employed our model-fitting approach to investigate SEF spatial codes, starting (since this has not been done with SEF before) with a test of the most fundamental models.

Visual activity fitting in egocentric and allocentric spatial models

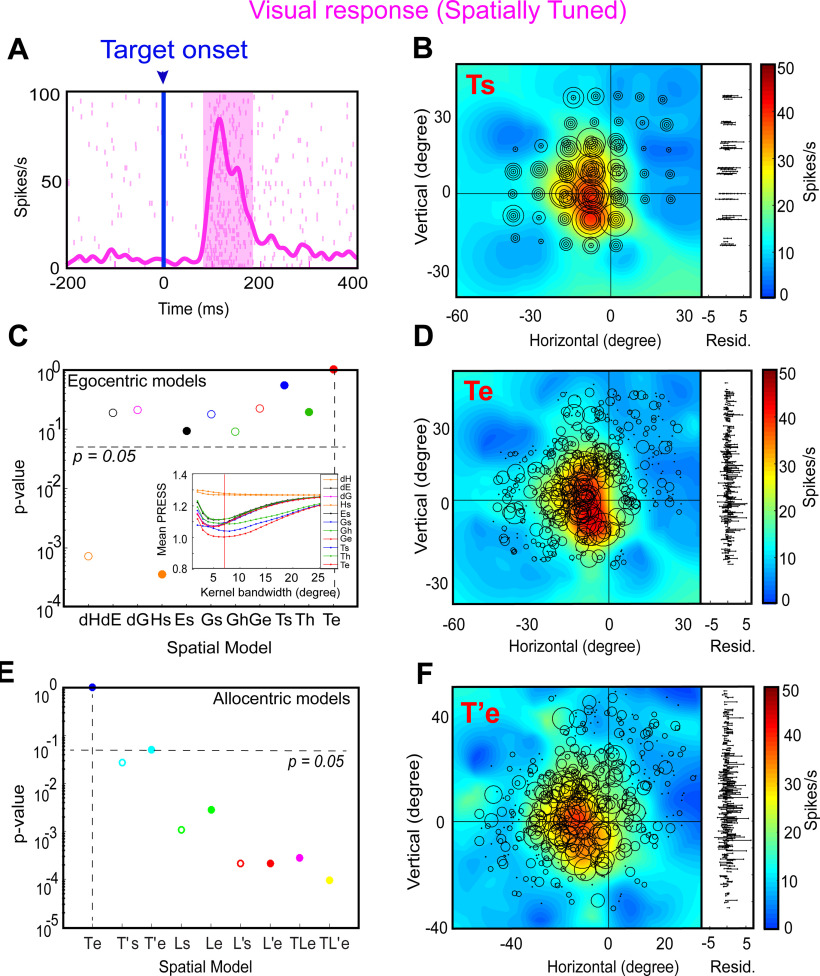

We began by testing all the egocentric and allocentric models (Fig. 3) in the sampling window (80–180 ms relative to target onset) of the visual response (including pure visual and VM neurons). Figure 5 shows a typical example of analysis of a visual response field. Figure 5A displays the raster and spike density (pink curve) plot of a visually responsive neuron aligned to the target onset (blue line as indicated by downward blue arrow). The shaded pink area corresponds to the sampling window for response field analysis. Figure 5B shows the closed (spatially restricted) response field for this neuron in the original target-in-space coordinates (Ts) corresponding to actual stimulus locations. Each circle corresponds to the magnitude of response in a single trial. The heat map represents the non-parametric fit to the neural data, with residuals (difference between data and fit) plotted to the right. This neuron has a hot-spot (red) near the center of the response field.

Figure 5.

An example of response field analysis of a visual neuron. A, Raster/spike density plot (with top 10% responses) of the visual neuron aligned to the onset of target (blue arrow); the shaded pink region corresponds to the sampling window (80–180 ms) for response field analysis. B, Representation of neural activity for Ts: target in space (screen). The circle corresponds to the magnitude of the response and heat map represents the non-parametric fit to these data (red blob depicts the hot-spot of the neuronal response field). The corresponding residuals are displayed to the right. To independently compute the residual for a trial, its activity was subtracted from the point corresponding to the fit computed on the rest of the trials. The residuals are one-dimensional for every data point from the fit projected onto the vertical axis for illustrative purposes. C, The p value statistics and comparison between Te (p = 10^0 = 1; the best-fit spatial model that yielded lowest residuals) and other tested models (Brown–Forsythe test). Inset shows the mean residuals from the PRESS-statistics for all spatial models at different kernel bandwidths (2–25°). The red vertical line depicts the lowest mean PRESS for Te (target in eye coordinates) with a kernel bandwidth of 7°. D, Representation of the neural activity in Te (target relative to eye), the best coordinate. E, The p value statistics and the comparison of the best egocentric model, Te, with other allocentric spatial models. Te is still the best-fit model. F, Representation of the neural activity in T’e, i.e., shifted target relative to eye; 0, 0 represents the center of the coordinate system that led to the lowest residuals (best fit).

Figure 5C provides a statistical summary (p values) comparing the goodness of fits for each of our canonical egocentric models (Fig. 3A, x-axis) relative to the model with the lowest residuals. For this neuron, the response field was best fit across target in eye coordinates (Te) with a Gaussian kernel bandwidth of 7° (Fig. 5C, inset, the red vertical line indicates the best kernel bandwidth). Thus, Te is the preferred egocentric model for this neuron, although most other models were not significantly eliminated. Figure 5D shows the response field plot of the neuron in Te coordinates. Comparing Ts and Te, one can see how larger circles (larger bursts of activity) tend to cluster more together at the response field hot spot in Te than Ts, and the residuals look (and were) smaller, implying that Te is the best coordinate system for this neuron. The bottom row of Figure 5 shows a similar analysis of the same neuron with respect to our allocentric models (from Fig. 3B). Figure 5E provides a statistical comparison (p values) of the residuals of these models relative to Te, which we kept as a reference from the egocentric analysis. Te still provided the lowest residuals, whereas nearly every allocentric model was statistically eliminated. The exception was T’e (target-in-eye coordinates but shifted with the landmark) perhaps because it was most similar to Te. We have used this coordinate system for the example response field plot in Figure 5F. An example of a spatially untuned neuron is shown in Extended Data Figure 5-1.

Extended Data Figure 5-1. An example of a spatially untuned visual neuron. A, Raster/spike density plot (with top 10% responses) of the visual neuron aligned to the onset of target (blue arrow); the shaded pink region corresponds to the sampling window (80–180 ms) for response field analysis. B, Mean residuals from the PRESS-statistics for all spatial models at different kernel bandwidths (2–25°). C, The p values statistics and comparison between different models. No model was significantly eliminated. D, Representation of neural activity for Ts: target in space (screen). The circle corresponds to the magnitude of the response, and heat map represents the non-parametric fit to these data. The corresponding residuals are displayed to the right. E, Representation of the neural activity in Te (target in eye).

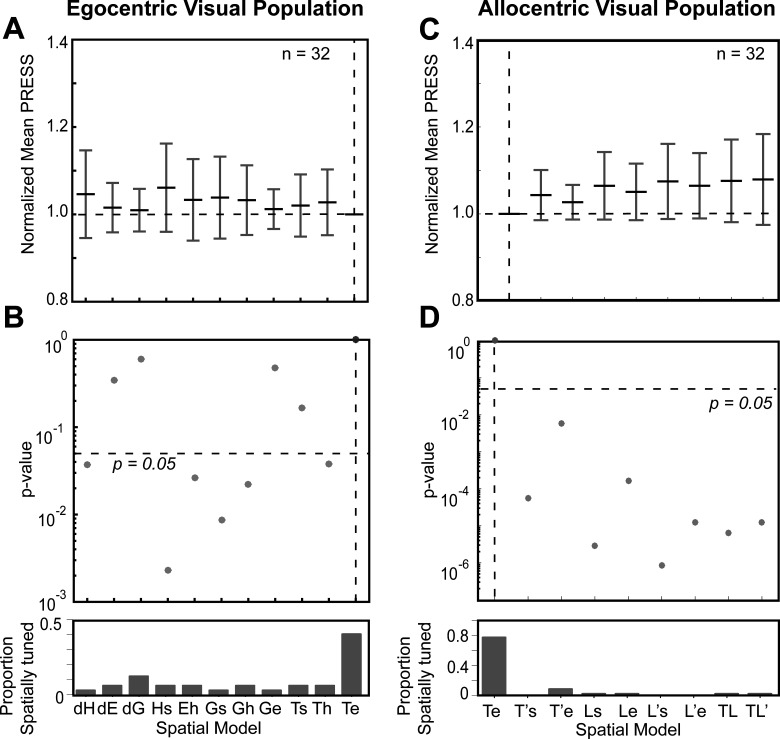

Figure 6 shows the pooled fits for visual (V + VM) responses across all spatially tuned neurons (n = 32) for both egocentric (left column) and allocentric (right column) analyses. In each column, the top row provides the distributions of mean residuals, the middle the p value comparisons, and the bottom row the fraction of spatially tuned neurons that preferred each model. In the egocentric analysis (Fig. 6A,B), Te was still the best fit overall (and was preferred in ∼40% of the neurons). The head-centered models and most of the effector-specific models were statistically eliminated but dE, dG, Ge, and Ts were not eliminated. Compared with the allocentric models (Fig. 6C,D), Te performed even better, statistically eliminating all other models. Overall, these analyses of egocentric and allocentric models suggest that Te was the predominant code in the SEF visual responses, just as we found for the FEF in the same task (Bharmauria et al., 2020) and both the FEF and SC in a purely egocentric gaze task (Sadeh et al., 2015; Sajad et al., 2015).

Figure 6.

Egocentric and allocentric population fit residuals and statistics for all visual neurons. A, The mean PRESS (±SEM) residuals of all visually responsive neurons (n = 32), i.e., data relative to fits computed to all tested egocentric models. These values were normalized by dividing by the mean PRESS residuals of the best spatial model, in this case Te. B, p value statistics performed on the residuals shown above (Brown–Forsythe test). Te (broken vertical black line) is the best fit; however, dE, dG, Ge, and Ts were also retained. C, Same as A but for best-fit egocentric model comparison with all allocentric models. D, Same as B, Te is still the best fit.

Motor activity fitting in egocentric and allocentric spatial models

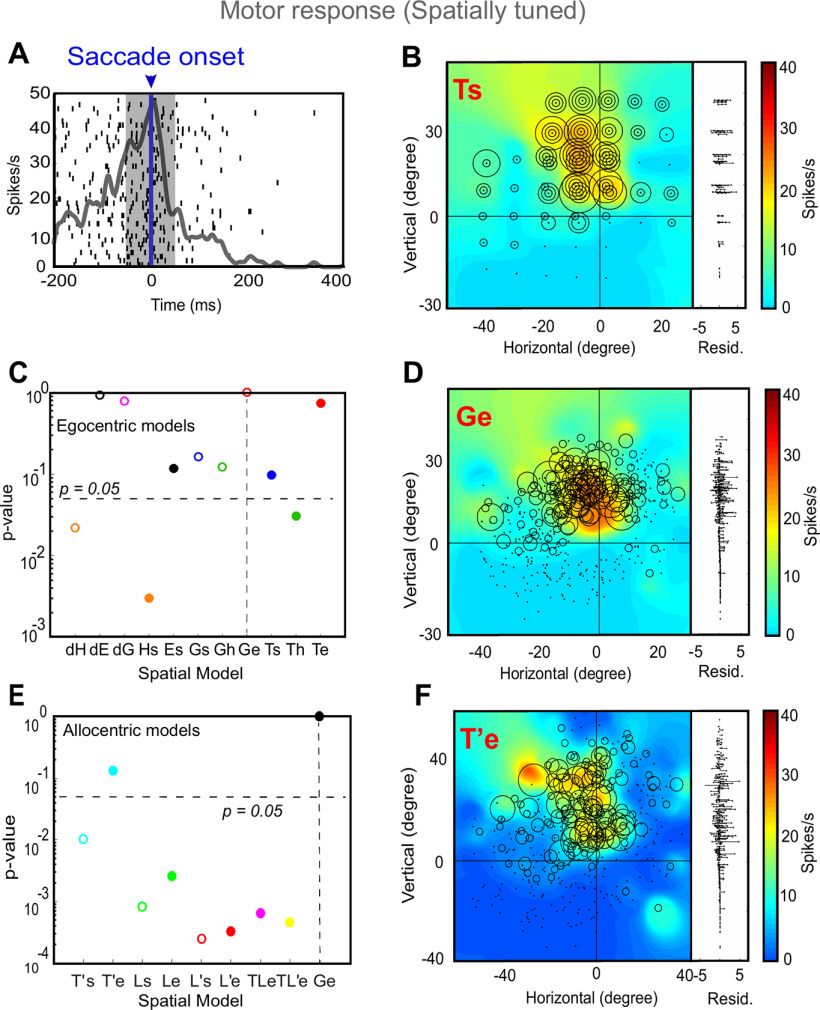

We then proceeded with the analysis of neural activity for motor responses (including the M and VM neurons), i.e., neurons firing in relation to the saccade onset. Despite the landmark-induced shifts and variable errors in gaze, overall there was still a strong correlation between target direction and final gaze position (Bharmauria et al., 2020), and thus motor response fields tended to align with sensory response fields. Figure 7 shows a typical analysis of a motor neuron. Figure 7A displays the raster and spike density plot for a motor response (the shaded area corresponds to the sampling window), and Figure 7B shows the corresponding response field, plotted in Ts coordinates. Figure 7C provides a statistical analysis of this neuron’s activity against all egocentric spatial models (same convention as Fig. 5). Here, Ge (future gaze direction relative to initial eye orientation) was the preferred model for this neuron, although several other models were also retained. Figure 7D shows the corresponding data in Ge coordinates, where again, residuals are lower and similar firing rates cluster. Figure 7E shows the statistical testing of different allocentric models using Ge as the egocentric reference. Once again, the egocentric model “wins,” eliminating all of the allocentric models except for T’e (used in the example response field plot; Fig. 7F).

Figure 7.

An example of response field analysis of a motor response. A, Raster/spike density plot (with top 10% responses) of the motor neuron aligned to the saccade onset (blue arrow); the shaded gray area corresponds to the sampling window (–50 to 50 ms) for response field analysis. B, Representation of neural activity for Ts: target in space (screen). The corresponding residuals are displayed to the right. C, The p values statistics and comparison between Ge (p = 10^0 = 1; the best-fit spatial model that yielded lowest residuals) and other tested models (Brown–Forsythe test). D, Representation of the neural activity in Ge (future gaze relative to eye), the best coordinate. E, The p value statistics and the comparison of the best egocentric model, Ge, with other allocentric spatial models. Ge is still the best-fit model, with T’e (shifted target in eye coordinates) as the second-best model, implying the influence of the shifted landmark on the motor response. F, Representation of the neural activity in T’e, i.e., shifted target relative to eye. 0, 0 represents the center of the coordinate system that led to the lowest residuals (best fit).

Figure 8 summarizes our population analysis of motor responses (n = 53, M + VM) against our egocentric and allocentric models, using the same conventions as Figure 6. Overall, of the egocentric models, dE yielded the lowest residuals, but dE was statistically indistinguishable from dG and Ge, which also yielded similar amounts of spatial tuning (Fig. 8A). Importantly, Te was now eliminated, along with dH, Hs, Ts, and Th (Fig. 8B). We retained Ge as our egocentric reference for comparison with the allocentric fits, because it is mathematically similar to Te (used for the visual analysis), and has usually outperformed most motor models in previous studies (Klier et al., 2001; Martinez-Trujillo et al., 2004; Sadeh et al., 2015; Sajad et al., 2015; Bharmauria et al., 2020). This time, comparisons with Ge statistically eliminated all of our allocentric models at the population level. In summary, these analyses suggest that Ge (or something similar), and not Te, was the preferred model for motor responses, as reported in our studies on FEF and SC (Sadeh et al., 2015; Sajad et al., 2015; Bharmauria et al., 2020).

Figure 8.

Egocentric and allocentric population fit residuals and statistics for all motor neurons. A, The mean PRESS (±SEM) residuals of all motor responses (n = 53), i.e., data relative to fits computed to all tested egocentric models. These values were normalized by dividing by the mean PRESS residuals of the best spatial model, in this case dE. B, p value statistics performed on the residuals shown above (Brown–Forsythe test). dE (broken vertical black line) is the best fit; however, dG and Ge were also retained and very close to dE. C, Same as A but for best-fit egocentric model comparison with all allocentric models. D, Same as B, Ge is the best fit.

Visual-motor transformation along the intermediate frames: T-G and T-T’ continua

Thus far, we found that SEF continued to be dominated by eye-centered target (T) and gaze (G) codes, like other saccade-related areas (FEF and SC). However, it is possible that the actual codes fall within some intermediate code, as we have found previously in the FEF (Bharmauria et al., 2020). Therefore, as described in Materials and Methods (Fig. 4) and Figure 9, we constructed the same spatial continua to quantify the detailed sensorimotor transformations in the SEF: a T-G (specifically Te-Ge) continuum to quantify the amount of transition from target to future gaze coding, and a T-T’ continuum (specifically Te-T’e; similar to our behavioral AW score) to quantify the influence of the landmark on the target code. The following analysis shows an example of a visual and a motor response.

Figure 9.

Egocentric (target to gaze, T-G continuum) and allocentric (target to virtually shifted target, T-T’ continuum). A, Raster/spike density plot (with top 10% responses) of the visual neuron (same neuron as Fig. 5) aligned to the target onset (blue arrow). B, Representation of the response field in the eye-centered coordinates obtained from the best-fit of the neural data along the T-G continuum (bar above the response field). To the right of the plot are the residuals between the individual trial data and the response field fit. The response field was best located exactly at T. C, Response field along the T-T’ continuum; the best fit is located one step beyond T, indicating no influence of the landmark shift. D, Raster/spike density plot of a motor response aligned to the onset of the saccade (blue arrow). E, Representation of the motor response field along the T-G continuum; the response field fits best at the ninth step (converging broken lines) from T (one step to G). F, Representation of the motor response field along the T-T’ continuum; the response field fits best at the fourth step from T, indicating the influence of the landmark shift on the motor response.

Figure 9A shows the raster and the spike density plot for a visually responsive neuron aligned to the target onset (same neuron as Fig. 5). Figure 9B displays the best-fit response field plot of the neuron along the T-G continuum, where the circle represents the magnitude of the response, the heat map represents the non-parametric fit to the data, and the residuals are plotted to the right. The converging broken lines pointing to the bar at the top represent the corresponding point of best fit along the 10 equal steps between T and G. Here, the response field of the neuron fits best exactly at T, as indicated by the broken lines. Figure 9C shows the response field plot of the same data along the T-T’ continuum. Here, the best fit for the response field was located only one step beyond T (10%, in the direction away from T’), demonstrating no influence of the future landmark shift on the initial visual response.

What then happens after the landmark shift? Figure 9D depicts the raster and spike density plot of a motor neuron, aligned to the saccade onset. Along the T-G continuum (Fig. 9E), the best response field fit was at the ninth step, i.e., 90% toward G (suggesting a near-complete transformation to gaze coordinates), whereas the best fit along the T-T’ continuum (Fig. 9F) was at the fourth step from T, i.e., 40% toward T’ (suggesting landmark influence similar to that seen in our behavioral measure).

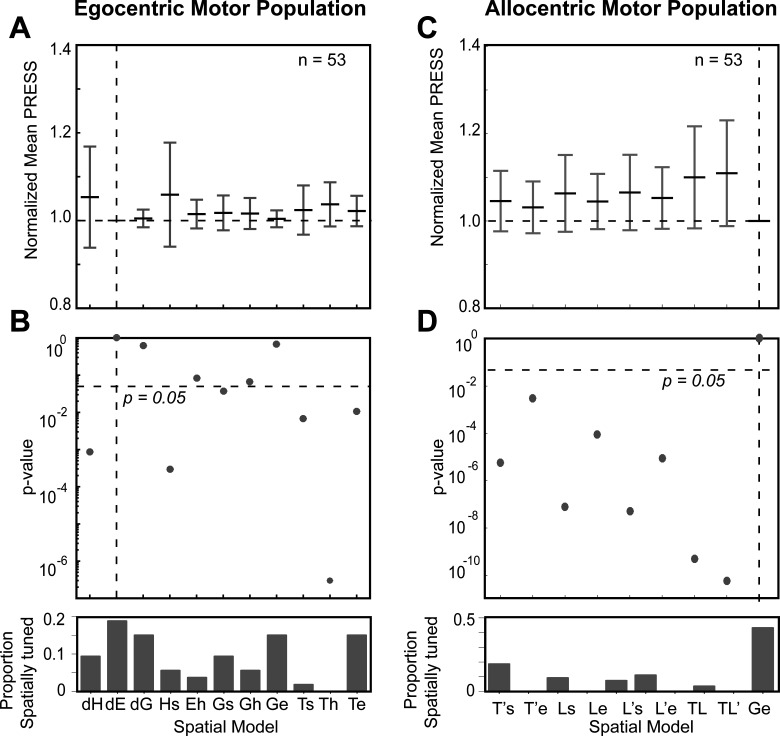

Population analysis along the T-G and T-T’ continuum

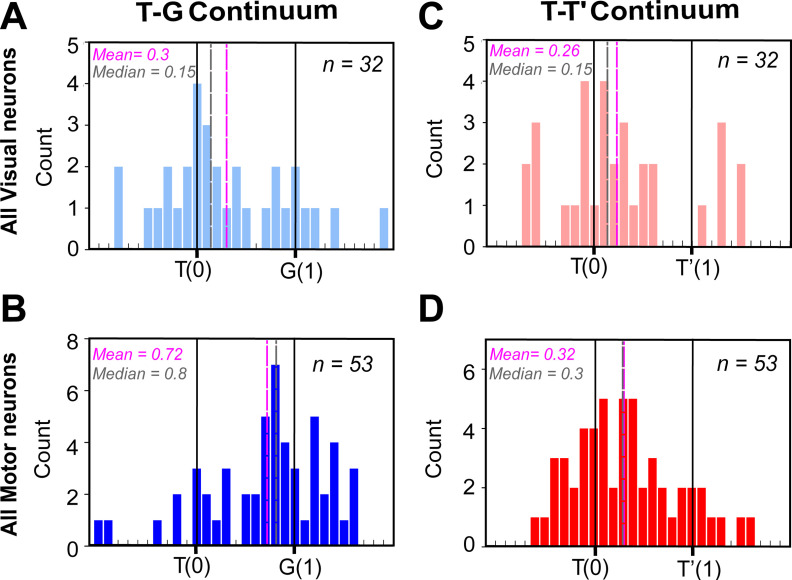

How representative were the examples shown above (Fig. 9) of our entire population of data? To answer that question, we performed the same analysis on our entire population of spatially tuned visual (both visual and VM) and motor (both VM and motor) responses. Figure 10 shows the distribution of best fits for visual responses (top row) and motor responses (bottom row). The T-G distribution of visual responses (Fig. 10A) showed a primary cluster and peak around T, but overall was shifted slightly toward G (mean = 0.3; median = 0.15), because of a smaller secondary peak at G. This suggests that most visual responses encoded the target, but some already predicted the future gaze location. This is similar to what has been reported in FEF (Sajad et al., 2015; Bharmauria et al., 2020). The motor distribution (Fig. 10B, n = 53) showed the opposite trend: a smaller cluster remained at T, but the major cluster and peaks were near G. Overall, this resulted in a significant (p < 0.0001, one-sample Wilcoxon signed-rank test) shift toward G (mean = 0.72; median = 0.8). Notably, the motor and visual distributions were significantly different from each other (p < 0.0001; Mann–Whitney U test).

Figure 10.

Frequency distribution along the T-G and T-T’ continua at the population level. A, Frequency distribution of all spatially tuned visual responses (n = 32) along the T-G continuum with the best fit closer to T (mean = 0.3; median = 0.15). B, Frequency distribution of all spatially tuned motor responses (n = 53) along the T-G continuum with a significantly shifted distribution toward G (mean = 0.72; median = 0.8; p < 0.0001, one-sample Wilcoxon signed-rank test). C, Frequency distribution of all spatially tuned visual responses (n = 32) along the T-T’ continuum with the best fit closer to T (mean = 0.26; median = 0.15). D, Frequency distribution of all spatially tuned motor responses (n = 53) along the T-T’ continuum with a significantly shifted distribution toward T’ (mean = 0.32; median = 0.3; p = 0.0002, one-sample Wilcoxon signed-rank test), indicating the influence of the landmark shift on the motor responses.

Along the T-T’ continuum (Fig. 10C), the best fits for the visual population peaked mainly around T, but overall showed a small (mean = 0.26; median = 0.15) but non-significant shift toward T’ (p = 0.07, one-sample Wilcoxon signed-rank test). The motor population (Fig. 10D) shifted further toward T’ (mean = 0.32; median = 0.3). This overall motor shift was not significantly different from the overall visual population (p = 0.53; Mann–Whitney U test), but it was significantly shifted from T (p = 0.0002, one-sample Wilcoxon signed-rank test). In general, this T-T’ shift resembled the landmark influence on actual gaze behavior. Notably, at the single cell level there was a significant T-G transition (Extended Data Fig. 10-1) between the visual and the motor responses within VM neurons (n = 16; p = 0.04, Wilcoxon matched-pairs signed-rank test), but not along the T-T’ continuum (n = 16; p = 0.32, Wilcoxon matched-pairs signed-rank test).
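Testing whether a distribution of best-fit positions is shifted from T (position 0 on the continuum) can be sketched with a one-sample Wilcoxon signed-rank test. The version below is a minimal illustration that uses the large-sample normal approximation, without continuity or tie corrections, so its p values may differ slightly from those of a statistics package; the function name and the example data are hypothetical and are not the recorded values.

```python
import math


def wilcoxon_one_sample(values, null_value=0.0):
    """Two-sided one-sample Wilcoxon signed-rank test (normal approximation).

    Returns (W+, p), where W+ is the sum of ranks of positive differences.
    """
    diffs = [v - null_value for v in values if v != null_value]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Group tied absolute differences and give them their average rank.
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return w_plus, p


# Hypothetical best-fit positions along a continuum (0 = T, 1 = T').
shifted_fits = [0.1 * k for k in range(1, 21)]  # all on the T' side of T
centered_fits = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]   # symmetric around T

w_shifted, p_shifted = wilcoxon_one_sample(shifted_fits)
w_centered, p_centered = wilcoxon_one_sample(centered_fits)
```

A small p value, as for `shifted_fits`, indicates that the fits are systematically displaced from T, whereas a symmetric distribution such as `centered_fits` gives no evidence of a shift.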

Extended Data Figure 10-1. VM transformation at the single cell level. A, Significant visual to motor transformation within the VM neurons along the T-G continuum. B, No significant visual to motor transformation along the T-T’ continuum.

Overall, this demonstrates a target-to-gaze transformation similar to the SC and FEF (Sadeh et al., 2015; Sajad et al., 2015) and a similar significant landmark influence in the motor response as we found in FEF (Bharmauria et al., 2020). However, we do not yet know how different cell types contribute to this shift and if this landmark influence has some relationship to the egocentric (T-G) transformation as revealed in FEF (Bharmauria et al., 2020).

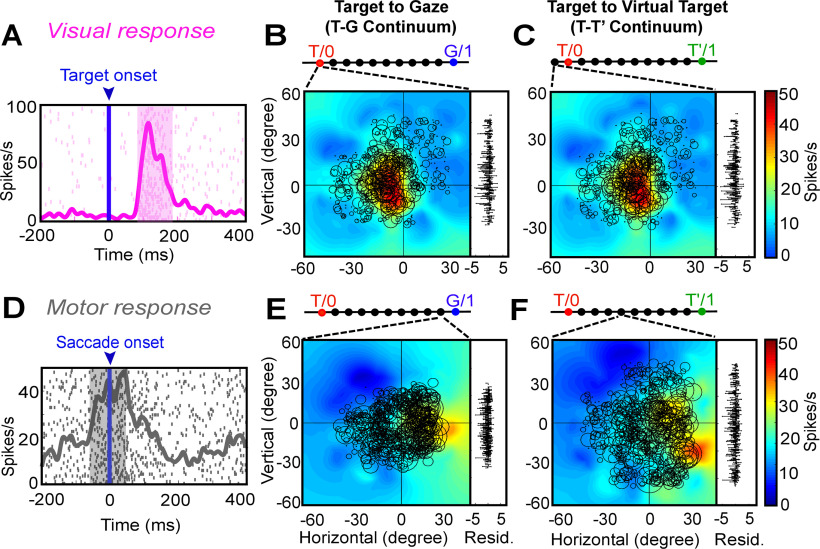

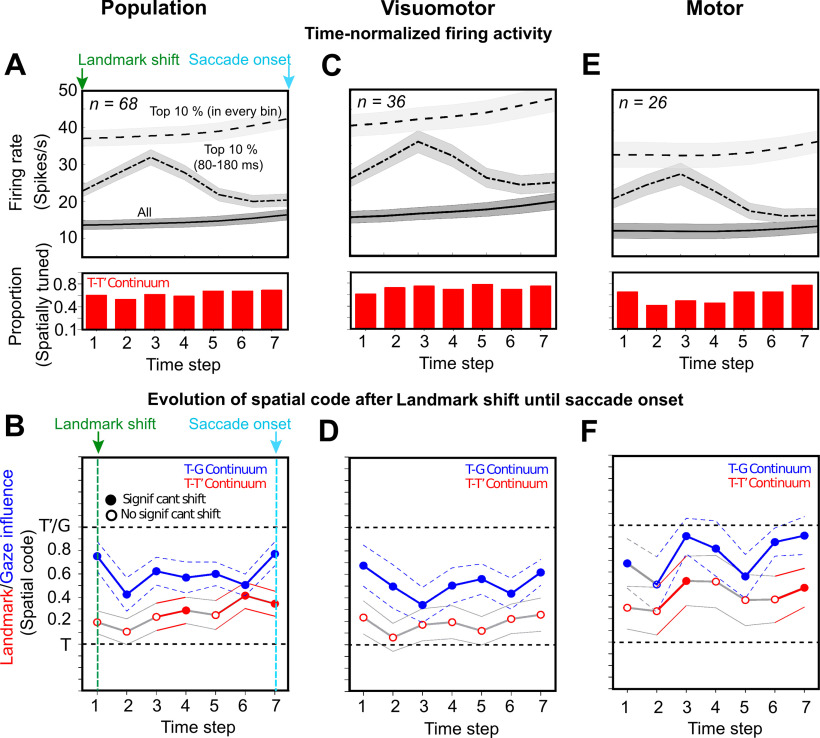

Contribution of different cell types and the allocentric shift

We next examined how different neuronal classes are implicated in the landmark influence noted above (Fig. 11). To this end, as in our FEF study on the same task (Bharmauria et al., 2020), we focused on a seven-step time-normalized analysis spanning the period from the onset of the landmark shift until the saccade onset (Extended Data Fig. 11-1). Since the second delay was variable, the time-normalization procedure allowed us to treat the corresponding neural activity as a single temporal continuum (Sajad et al., 2016; Bharmauria et al., 2020). By employing this procedure on the neural activity, we tracked the progression of the fits along the T-G and T-T’ continua to quantify the gaze and landmark-shift influence, respectively.

Figure 11.

Spatiotemporal analysis aligned to landmark shift until saccade onset. A, B, Spatiotemporal analysis of the whole population (n = 68) aligned to the landmark onset (green arrow) until the saccade onset (cyan arrow). A, Time-normalized neural activity divided into seven half-overlapping bins for all trials (bottom trace), top 10% trials in each bin (top trace), and top 10% trials in 80- to 180-ms window aligned to landmark-shift (middle trace). B, Progression of the spatial code for all neurons (n = 68) along the T-G and T-T’ continua. C, Same as A but for VM neurons (n = 36). D, Progression of spatial code for VM neurons along the T-G and T-T’ continua. None of the steps displayed a significant shift toward T’ along the T-T’ continuum. E, Same as A but for motor neurons (n = 26). F, Progression of the spatial code in time for motor neurons along the T-G and T-T’ continua. A significant shift toward T’ was noted along the T-T’ continuum at the third step and the seventh step (just before the saccade onset). Note: the histogram below the spike density plots displays the proportion of spatially tuned neurons at each time-step.

Extended Data Figure 11-1. Time normalization procedure. A, Alignment of responses to the landmark shift. B, Alignment of responses to the saccade onset. Note: aligning responses in the standard way, as in A and B, leads to loss and/or mixing of responses, and thus does not allow us to track spatial codes through the entire trial across all trials. C, Time normalization from landmark shift until saccade onset. This procedure, in which we divide the activity into equal half-overlapping bins across all trials, allows us to treat all the trials equally, and thus as a continuum.
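The time-normalization idea can be sketched as follows. This is only an illustration under stated assumptions, not the procedure from Materials and Methods: it assumes that the seven bins tile the interval from landmark shift to saccade onset exactly, so that each bin spans one quarter of the interval and successive bins are offset by one eighth. The function names and spike times are hypothetical.

```python
def half_overlapping_bins(start_ms, end_ms, n_bins=7):
    """Split [start_ms, end_ms] into n_bins equal windows, each overlapping
    its neighbour by half a window. Returns a list of (bin_start, bin_end)."""
    if end_ms <= start_ms:
        raise ValueError("end_ms must be later than start_ms")
    # n_bins windows stepping by half a width span (n_bins + 1) / 2 widths.
    width = 2 * (end_ms - start_ms) / (n_bins + 1)
    step = width / 2
    return [(start_ms + k * step, start_ms + k * step + width)
            for k in range(n_bins)]

def bin_rates(spike_times_ms, start_ms, end_ms, n_bins=7):
    """Firing rate (spikes/s) in each time-normalized bin for one trial."""
    rates = []
    for lo, hi in half_overlapping_bins(start_ms, end_ms, n_bins):
        count = sum(1 for t in spike_times_ms if lo <= t < hi)
        rates.append(1000.0 * count / (hi - lo))
    return rates

# Two hypothetical trials with different delays between the landmark shift
# (time 0) and saccade onset; both yield seven comparable steps.
short_trial = bin_rates([50, 150, 250, 700], start_ms=0, end_ms=800)
long_trial = bin_rates([80, 400, 900, 1150], start_ms=0, end_ms=1200)
print(short_trial)
print(long_trial)
```

Because the bin width scales with each trial's delay, step k refers to the same relative position between landmark shift and saccade onset in every trial, which is what allows trials of different durations to be pooled.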

Figure 11A displays the mean activity of the entire spatially tuned population (n = 68) of neurons divided into seven time-normalized bins from landmark-shift onset to the saccade onset (for details, see Materials and Methods). The mean spike density plots are shown for (1) all trials (bottom trace), (2) top 10% activity corresponding to each time step (top trace), and (3) top 10% activity from 80 to 180 ms aligned to the landmark-shift (middle trace). Note that the delay period possessed substantial spatially tuned neural activity along the T-T’ continuum (red histograms below the spike density plots): ∼50% of the neurons were tuned. A similar trend was noticed along the T-G continuum (data not shown). Figure 11B shows the data (mean ± SEM) in the corresponding time steps for the population along the T-G (blue) and the T-T’ (red) continua. The solid circles indicate a significant shift from T (p < 0.005, one-sample Wilcoxon signed-rank test), whereas the empty circles indicate a non-significant shift. The T-G code showed a significant shift at all the steps, as reported previously (Sajad et al., 2015; Bharmauria et al., 2020). The T-T’ fits were slightly shifted from T at the first step, but this shift became significant only at the fourth step (p = 0.02); it then shifted back at the fifth step before significantly shifting toward T’ at the sixth (p = 0.001) and seventh (p = 0.003) steps, when the gaze shift was imminent.

To further tease apart the contribution of different cell types to the embedding of landmark influence, we divided the population into visual only (V), VM, and motor (M) cell types. The V neurons (n = 6; data not shown) did not display any significant shift at any of the time steps, and were therefore excluded from further analyses. Figure 11C shows the spike density plots for the VM neurons, in the same format as for the whole population in Figure 11A. We did not notice any significant shift in the delay period along the T-T’ continuum for the VM neurons (Fig. 11D). For the delay activity of M neurons (Fig. 11E), a significant shift along the T-T’ continuum was observed at the third step (p = 0.04); the code then shifted back before significantly shifting toward T’ at the seventh step (p = 0.02) with impending gaze (Fig. 11F).

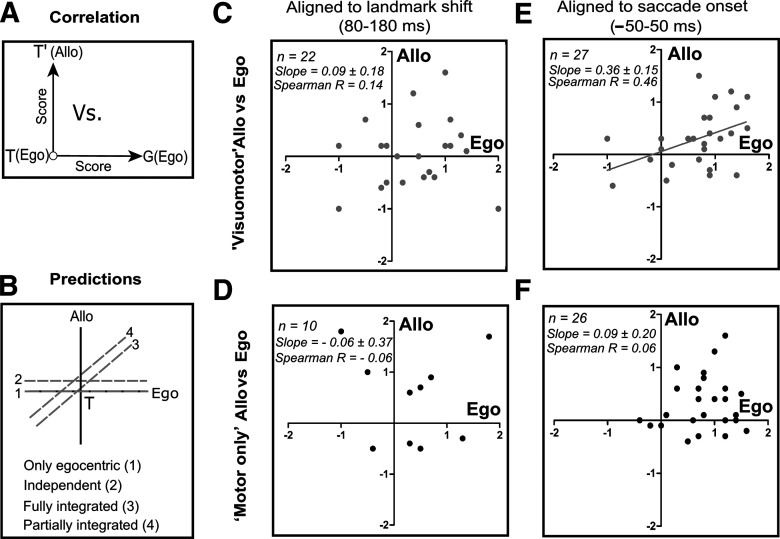

Integration of the allocentric shift with the egocentric code

Up to this point, we have observed that the landmark shift influences the motor code along the T-T’ coordinates, but we still need to address whether there is any relation between the T-G and T-T’ transformations. To address this, for the period from the landmark shift until the perisaccadic burst, we plotted the T-T’ score as a function of the corresponding T-G score for each neuron that exhibited spatial tuning for both (Fig. 12A). As for FEF (Bharmauria et al., 2020), we made the following predictions for the embedding of the allocentric influence with the egocentric coordinates (Fig. 12B): (1) no influence, i.e., the coding was purely egocentric, which, as we have shown above (Figs. 10 and 11), is not the case; (2) independent, the egocentric and allocentric codes are completely independent of each other; (3) fully integrated, the allocentric influence varies as a function of G; and (4) partial integration, a mix of (2) and (3).

Figure 12.

Correlation between T-G (egocentric) and T-T’ (allocentric) scores. A, Schematic drawing of T-T’ plotting as a function of T-G scores. B, Predictions for the embedding of allocentric shift with the egocentric codes: (1) only egocentric, (2) independent, (3) fully integrated, or (4) partially integrated. C, No significant correlation between the corresponding T-T’ and T-G scores in the early post landmark-shift response for the VM (n = 22, Spearman R = 0.14, slope = 0.09 ± 0.18, p = 0.60) and (D) M neurons (n = 10, Spearman R = –0.06, slope = –0.06 ± 0.37, p = 0.87). E, A significant correlation between the corresponding T-T’ and T-G scores of VM neurons in the peri-saccadic burst (n = 27, Spearman R = 0.46, slope = 0.36 ± 0.15, p = 0.02). F, No significant correlation in the perisaccadic burst between the T-T’ and T-G scores for M neurons (n = 26, Spearman R = 0.06, slope = 0.09 ± 0.20, p = 0.64).

We first did this analysis for the early postshift visual response in the 80- to 180-ms window of analysis for the VM and M neurons (Fig. 12C,D). No significant correlation was noticed for either the VM (Spearman R = 0.14; slope = 0.09 ± 0.18, intercept = 0.03 ± 0.16, p = 0.60; Fig. 12C) or the M (Spearman R = –0.06; slope = –0.06 ± 0.37, intercept = 0.52 ± 0.32, p = 0.87; Fig. 12D) neurons, suggesting no integration in this period. We further plotted the correlation for the delay activity from the early postshift response until the saccade onset (roughly corresponding to steps 2–7 from the previous figure) for the whole population (M + VM) and the individual M and VM populations. We found no significant correlation at any of these steps for either the entire population or the subpopulations, implying that integration had not yet occurred, although a shift was noticed in the delay and the impending saccadic activity of M neurons (Fig. 11F). Finally, a significant correlation between the T-T’ and T-G scores was noticed for the VM (n = 27; Spearman R = 0.46, slope = 0.36 ± 0.15, intercept = 0.05 ± 0.13, p = 0.02; Fig. 12E) neurons in the perisaccadic burst (–50 to 50 ms), but not for the M (n = 26; Spearman R = 0.06, slope = 0.09 ± 0.20, intercept = 0.27 ± 0.18, p = 0.64; Fig. 12F) neurons. After combining the M and the VM neurons, a significant correlation still existed (Spearman R = 0.28, slope = 0.26 ± 0.12, intercept = 0.13 ± 0.11, p = 0.03).
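The per-neuron comparison described above can be illustrated with a minimal sketch that computes a Spearman rank correlation and a least-squares line relating T-T’ scores to T-G scores. This is an assumption-laden illustration rather than the study's analysis code: the function names and the example scores are hypothetical, and no p value or standard error is computed.

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # average of 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def spearman_r(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return pearson_r(average_ranks(x), average_ranks(y))

def linear_fit(x, y):
    """Ordinary least-squares slope and intercept of y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx

# Hypothetical per-neuron scores; these are NOT data from the study.
tg_scores = [0.1, 0.4, 0.5, 0.7, 0.9, 1.1]   # egocentric (T-G) scores
tt_scores = [0.0, 0.2, 0.1, 0.4, 0.3, 0.6]   # allocentric (T-T') scores
rho = spearman_r(tg_scores, tt_scores)
slope, intercept = linear_fit(tg_scores, tt_scores)
print(f"Spearman R = {rho:.2f}, slope = {slope:.2f}, intercept = {intercept:.2f}")
```

In terms of the predictions in Figure 12B, a correlation and slope near zero together with a non-zero mean T-T’ shift would correspond to the independent case, whereas a reliable positive relationship would point toward integration.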

Comparison with spatiotemporal integration in FEF

Notably, we performed SEF and FEF recordings concurrently, providing an opportunity to compare the current dataset with the previously published FEF dataset (Bharmauria et al., 2020), focusing on the spatiotemporal progression of egocentric/allocentric integration after the landmark shift. To do this, we performed a three-factor ANOVA on the VM and motor populations from both areas (F1 = FEF/SEF, F2 = M/VM, F3 = time step). We found a significant difference between the VM and M neurons along both the T-G (p = 0.009) and T-T’ (p = 0.04) continua. We found no significant difference between the SEF and FEF along the T-G continuum (p = 0.10, suggesting similar egocentric transformations), but we found a significant difference along the T-T’ continuum (p = 0.009), implying a difference in allocentric processing. Moreover, a significant interaction was also noticed between the VM/M neurons of FEF/SEF (p = 0.04) along the T-T’ continuum. Finally, the M neurons of SEF displayed a significant shift in their delay activity (fourth step, Bonferroni-corrected Mann–Whitney U test, p = 0.006) toward T’ compared with the M neurons of FEF. These statistics support the observation that both areas showed transient T-T’ shifts in delay activity, but that this primarily occurred in VM neurons in the FEF (Bharmauria et al., 2020), as opposed to motor neurons in SEF. We also note that whereas only VM neurons showed a T-G/T-T’ correlation during the saccade burst in the SEF (Fig. 12), both VM and M neurons showed this correlation in the FEF (Bharmauria et al., 2020).

Discussion

This study addressed a fundamental question in cognitive neuroscience: how does the brain represent and integrate allocentric and egocentric spatial information? We used a cue-conflict memory-guided saccade task, in which a visual landmark shifted after a mask, to establish the basic egocentric coding mechanisms used by the SEF during head-unrestrained gaze shifts, and to investigate how allocentric information is incorporated into these transformations. We found the following. (1) Despite the presence of a visual landmark, spatially tuned SEF neurons predominantly show the same eye-centered codes as the FEF and SC (Sadeh et al., 2015; Sajad et al., 2015), i.e., target coding (T) in the visual burst and gaze position coding (G) in the motor burst. (2) After the landmark shift, motor neuron delay activity showed a transient shift in the same direction (T-T’). (3) A second perisaccadic shift was observed in VM neurons. (4) Only the latter shift was correlated with T-G. Overall, the SEF showed egocentric visual-motor transformations similar to those of the FEF, but integrated the landmark information into this transformation in a complementary manner. Briefly, the novel results of this investigation implicate the SEF (and thus the frontal cortex) in the integration of allocentric and egocentric visual cues.

General SEF function: spatial or non-spatial?

The FEF, LIP, and SC show (primarily) contralateral visual and motor response fields involved in various spatial functions for gaze control (Andersen et al., 1985; Schlag and Schlag-Rey, 1987; Schall, 1991; Munoz, 2002), but the role of SEF is less clear (Purcell et al., 2012; Abzug and Sommer, 2018). Only 27% of our SEF neurons were spatially tuned, lower than in our FEF recordings (50%) from the same sessions (Bharmauria et al., 2020). This is consistent with previous studies (Schall, 1991; Purcell et al., 2012) and the notion that the SEF also has non-spatial functions, such as learning (Chen and Wise, 1995), prediction error encoding (Schlag-Rey et al., 1997; Amador et al., 2000; So and Stuphorn, 2012), performance monitoring (Sajad et al., 2019), and decision-making (Abzug and Sommer, 2018). The general consensus is that SEF subserves various cognitive functions (Stuphorn et al., 2000; Tremblay et al., 2002) while also representing multiple spatial frames (Martinez-Trujillo et al., 2004; Stuphorn, 2015; Abzug and Sommer, 2017). It should be noted that these diverse signals (So and Stuphorn, 2012; Abzug and Sommer, 2018; Sajad et al., 2019) may be prominent in many of the spatially untuned neurons that were rejected in our analysis. However, the possibility that these signals influence the spatially tuned response fields cannot be eliminated here (Purcell et al., 2012; Abzug and Sommer, 2017; Sajad et al., 2019). It can be reasonably hypothesized that spatially tuned neurons integrate non-spatial signals from untuned neurons (Pruszynski and Zylberberg, 2019) with spatial signals and forward these integrated signals to FEF neurons, thereby influencing gaze behavior in real space and providing a mechanism for SEF to implement executive function as behavior.

Egocentric transformations in the gaze system

In the gaze control system, the consensus is that eye-centered visual and motor codes predominate (Russo and Bruce, 1993; Tehovnik et al., 2000; Klier et al., 2001; Paré and Wurtz, 2001; Goldberg et al., 2002), but alternative views persist (Mueller and Fiehler, 2017; Caruso et al., 2018). Visual-motor dissociation tasks (e.g., antisaccades) found that visual and motor activities coded target and saccade direction, respectively (Everling and Munoz, 2000; Sato and Schall, 2003; Takeda and Funahashi, 2004). However, this requires additional training and signals that would not be present during ordinary visually guided saccades (Munoz and Everling, 2004; Medendorp et al., 2005; Amemori and Sawaguchi, 2006), and the head was fixed in most such studies. We have previously extended these results to natural head-unrestrained gaze shifts in the SC and FEF (Sadeh et al., 2015, 2020; Sajad et al., 2015) and here in the SEF.

Consistent with previous reports, we found that SEF response fields are primarily organized in eye-centered coordinates (Russo and Bruce, 1993; Park et al., 2006), and participate in a progressive target-to-gaze transition like the FEF and SC (Sajad et al., 2016; Sadeh et al., 2020). Note that in the current study, deviations of gaze from the target (used to fit response fields against G) were produced in part by the landmark shift. However, this alone is unlikely to explain the T-G transition in our cells, because the transition happened continuously through the task, it was spatially separable from and often uncorrelated with the neural response to the landmark shift (discussed below in the section ‘A circuit model for allocentric/egocentric integration’), and many of the gaze errors used to calculate this transition were not caused by the landmark shift, but appeared to arise from general internal “noise,” as in our previous studies (Sajad et al., 2016, 2020; Sadeh et al., 2020), which was even larger without a landmark (Li et al., 2017). In short, the landmark shift clearly contributed to gaze errors, but cannot alone explain the T-G transition we observed here in SEF cells.