Randomized controlled trials (RCTs) are the gold standard for generating evidence on the effectiveness of healthcare interventions. Unfortunately, RCTs frequently fail to provide results that patients, clinicians, researchers, or policymakers can confidently apply as the basis for clinical decision-making in the real world.1 Safeguards against uninformative research begin early in study development. Thus, well-conceived pilot studies can play a critical role in the conduct of high-quality clinical trials.2 We propose using implementation science (the study of how to adopt best practices into real-world settings) as a natural framework for pre-RCT pilot studies, viewing these pilot studies as a critical opportunity to improve the informativeness of RCTs.

WHY DO WE NEED RIGOROUS PILOT TRIALS?

The burden of uninformative RCTs is substantial. Money, time, and participants’ efforts are wasted when research is conducted without taking sufficient account of the contextual factors necessary for applying study results. In some cases, these factors are related to standard RCT quality criteria (e.g., CONSORT). In other cases, however, a study’s lack of informativeness is related to inadequate consideration of broader factors. Zarin et al. posited five necessary conditions for a trial to be informative: (1) the study hypothesis must address an important and unresolved question; (2) the study must be designed to provide meaningful evidence related to this question; (3) the study must be feasible; (4) the study must be conducted and analyzed in a scientifically valid manner; and (5) the study must report methods and results accurately, completely, and promptly.3

Unfortunately, many contemporary trials fail to meet these necessary conditions. One overt example is a multicenter randomized trial assessing the impact of pre-hospital antibiotics for sepsis administered by emergency medical services (EMS) personnel.4 The trial found no mortality difference, but application of the results is limited by randomization violations: some EMS personnel “purposefully opened the envelopes until they found an envelope instructing randomization to the intervention group.” The motivation for this violation of study procedures was attributed to “overenthusiasm of EMS personnel wanting to treat as many patients as possible with antibiotics.” Plausibly, pre-trial identification of these beliefs about the acceptability of withholding treatment from study patients could have prompted a responsive approach that might have preserved the fidelity of randomization.

Even trials with careful attention to internal validity may provide less meaningful results if the trial context turns out to be different than expected.5, 6 For example, studies of protocolized early sepsis management found no difference between protocolized and usual care groups, largely because sepsis management in the usual care group was similar to the protocolized care group.7 Similarly, a large well-conducted trial evaluating conservative oxygen therapy versus usual care during mechanical ventilation found no difference between groups, but informativeness was bounded by an unexpectedly low dose of oxygen in the usual care group.8 Again, such validity and context limitations could conceivably be mitigated by specifically evaluating and addressing them during the pilot phase.2 Although guidelines are available to support pilot study methodology,9, 10 we suggest that the yield of pre-RCT pilot studies could be further enhanced by applying implementation science principles.

WHAT IS IMPLEMENTATION SCIENCE AND HOW CAN ITS STRATEGIES APPLY TO PILOT STUDIES?

Implementation science is the study of how to best deliver evidence-based practices in the real world.11 It is an emerging field that applies rigorous theory, process models, and frameworks to gain insights and emphasizes transdisciplinary collaboration and stakeholder engagement to promote external validity and scalability.12 In simplified terminology, implementation research identifies how best to help people “do the thing,” where ‘the thing’ is an effective intervention or practice.13 In parallel, pre-RCT pilot studies can be represented as research identifying how to best help investigators conduct informative RCTs. Importantly, a hallmark of implementation science is its focus on multilevel contextual factors.14 Traditional pre-RCT pilot studies evaluate the feasibility of the planned trial’s design focusing primarily on patient-level context and do not typically seek to identify key provider-, organization-, and policy-level contextual factors that may affect the ultimate informativeness of the planned RCT. Using implementation science to inform pre-RCT pilot studies, in contrast, aims to anticipate and ameliorate these contextual factors related to the RCT’s eventual informativeness.

To preempt obstacles leading to uninformative RCTs, we suggest early integration of implementation science principles in the pre-RCT pilot phase. This is particularly applicable to investigations of complex interventions—the nuanced relationship between complex interventions and the clinical and experimental contexts in which they are tested poses greater potential threat to informativeness. We encourage researchers planning RCTs of complex interventions to consider conducting preparatory pilot studies with the following elements:

(1) Measure and report implementation outcomes

Pre-RCT pilot studies should have explicit objectives and testable hypotheses or evaluation questions related to implementation of the planned RCT. Because pilot studies are not designed to provide stable estimates of treatment effectiveness, investigators should avoid evaluating efficacy endpoints.15 Rather, valuable objectives can be drawn from the core set of established implementation outcomes, including acceptability, adoption, and feasibility.16 Additionally, given increasing awareness of the importance of patient-centered outcomes but lack of a standardized approach for outcome selection,17 an important role of pilot studies may be to identify and prioritize outcomes that matter most to patients and other stakeholders. Table 1 presents examples of how these outcomes can be used in pre-RCT pilot studies. Measures should be individualized to each pilot study and can include both quantitative and qualitative outcomes. Sample sizes should be thoughtfully chosen based on the primary outcome measures selected for the pilot study.
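As an illustration of choosing a sample size from the pilot's primary outcome, one common option is to target precision rather than power: pick the number of participants needed to estimate a feasibility proportion (for example, a consent rate) within an acceptable margin. The sketch below is not taken from this article or its references. It assumes a Wilson score interval for a binomial proportion, and the expected consent rate of 70% and the margin of 10 percentage points are hypothetical values chosen only for demonstration.

```python
import math
from typing import Tuple


def wilson_half_width(rate: float, n: int, z: float = 1.96) -> float:
    """Half-width of the Wilson score interval for a proportion `rate`
    observed in `n` participants (z = 1.96 gives roughly 95% confidence)."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must lie between 0 and 1")
    denom = 1.0 + z ** 2 / n
    spread = math.sqrt(rate * (1.0 - rate) / n + z ** 2 / (4.0 * n ** 2))
    return z * spread / denom


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score confidence interval for `successes` out of `n`."""
    if not 0 <= successes <= n:
        raise ValueError("successes must lie between 0 and n")
    p_hat = successes / n
    centre = (p_hat + z ** 2 / (2.0 * n)) / (1.0 + z ** 2 / n)
    half = wilson_half_width(p_hat, n, z)
    return centre - half, centre + half


def smallest_n_for_precision(expected_rate: float, max_half_width: float,
                             z: float = 1.96, n_max: int = 100_000) -> int:
    """Smallest n whose Wilson half-width is at most `max_half_width`,
    assuming the observed rate equals `expected_rate` (a planning guess)."""
    if max_half_width <= 0:
        raise ValueError("max_half_width must be positive")
    for n in range(1, n_max + 1):
        if wilson_half_width(expected_rate, n, z) <= max_half_width:
            return n
    raise ValueError("no sample size up to n_max reaches the requested precision")


if __name__ == "__main__":
    # Hypothetical planning values: 70% expected consent rate, +/- 10 points.
    n = smallest_n_for_precision(expected_rate=0.70, max_half_width=0.10)
    print(f"Approached patients needed: {n}")
    print("Interval if 70% consent:", wilson_interval(round(0.70 * n), n))
```

A precision target of this kind suits quantitative feasibility measures such as consent, adherence, or protocol-violation rates. It does not apply to qualitative outcomes, where sample size is usually guided by other considerations.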

-

(2)

Apply a conceptual framework

Table 1.

Potential Application of Implementation Science Outcomes to Pilot Study Design and Interpretation

| Implementation outcome | Example pilot study result | Implication/responsive adjustment | Available measurement |

|---|---|---|---|

| Acceptability | Stakeholders perceive randomization to control group unacceptable | Intervention momentum or perceived lack of equipoise may hinder recruitment; consider education or switch to non-traditional trial design |

Survey Semi-structured interviews |

| Planned RCT outcomes unimportant to stakeholders | Consider highly valued outcomes as primary outcome of planned RCT if feasible | Include Survey, semistructured interviews here or merge cell with above | |

| Adoption | Low adoption (number of patients willing to try intervention) | Consider modifying intervention to encourage uptake, anticipate dilution of treatment effect in intention-to-treat analyses | Study records |

| Appropriateness | Intervention perceived as poor fit for certain settings or subgroups | Consider enhancing support for settings or subgroups, anticipate generalizability limitations. Safeguard against disparities in enrollment |

Surveys Semi-structured interviews |

| Feasibility |

Study protocol perceived to be complex, difficult to adhere to Trial intervention has become usual care |

Adapt protocol to maximize likelihood of successful RCT Anticipate poor separation between usual care and intervention groups |

Surveys Semi-structured interviews Administrative data |

| Fidelity | High rate of protocol violations, crossovers | Anticipate dilution of treatment effect | Study records |

| Penetration | Low screening, eligibility, or consent rates, poor sample representativeness | Adapt recruitment strategies | Study records |

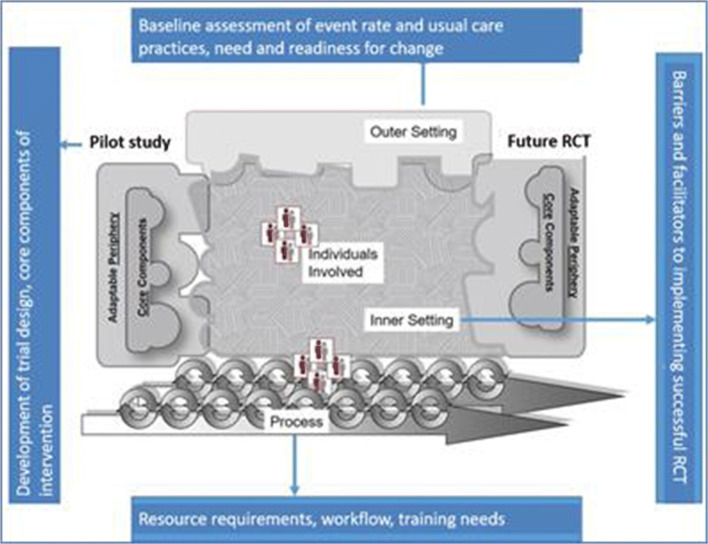

A conceptual framework explains phenomena, organizes conceptually distinct ideas, and helps visualize relationships that cannot be observed directly. There are many implementation-oriented frameworks, and it is beyond the scope of this perspective to review them comprehensively. One we have found to be particularly helpful is the Consolidated Framework for Implementation Research (CFIR). The CFIR is a widely used taxonomy in implementation science that was developed to guide systematic assessment of multilevel implementation contexts to identify factors that might influence intervention implementation and effectiveness.18 The CFIR describes five interacting domains that influence implementation effectiveness: the intervention, individuals, the inner setting, the outer setting, and the implementation process. Applying this framework to a pilot study enables a richer understanding of the factors that impede or support the conduct of a successful subsequent clinical trial. Figure 1 demonstrates how the CFIR can be used to uncover and organize the complex factors associated with the translation of a pilot study to a successful subsequent RCT.

Fig. 1. Conceptualization of a pilot study within the Consolidated Framework for Implementation Research model. Adapted from www.cfirwiki.net.

(3) Use the pilot study implementation findings to inform and adapt the planned RCT

Note that Fig. 1 depicts the pilot study intervention component as a jagged puzzle piece that has an imperfect fit into the other domains, but through the application of the CFIR constructs in the pilot process, the piece representing the planned RCT intervention fits much more precisely into the context in which it will be implemented. Depending on the goals and timeline of the preparatory pilot study, this can be a traditional one-time process with careful data collection to inform and adapt the planned RCT, or a rapid cycle iterative improvement process with intervention delivery improvements made in real time. Table 1 includes examples of how pre-RCT pilot study findings might be used to inform a future RCT.

(4) Report pilot study results including qualitative and quantitative findings

Pre-RCT pilot studies can be reported as implementation science works, using explicitly defined a priori objectives, rigorous scientific processes, and systematic documentation of the results and subsequent adaptations to the planned RCT. Applying an implementation science framework to preparatory pilot studies will arguably improve the informativeness of future RCTs. Using this grounded approach will also improve pilot study reporting, increasing knowledge gained through dissemination of the results. An explicit implementation science framework ensures that key contextual factors are identified and addressed, as well as rigorously recorded and reported. Reporting implementation science-informed pilot results may improve the informativeness of RCTs by documenting intended implementation-oriented design elements to be included in the RCT or planned elements of the RCT that required redesign due to problems detected by the pilot. In addition, pilot study reports can serve to inform the broader research community about issues related to the studied intervention, and thus advance future research in the target area more broadly.

LIMITATIONS TO THIS APPROACH

The value of conducting implementation science-guided preparatory pilot studies is likely to be greatest for RCTs testing complex interventions, and the approach may not be equally informative across all RCT designs. In fact, its utility may differ by implementation outcome (e.g., acceptability of trial primary outcome versus adoption of intervention components). Furthermore, applying an implementation science approach may not be feasible or affordable within traditional research time or budget limits. Investigators should carefully consider the full range of implementation science methods available and select those that best align with the budget, timeline, and objectives of their pilot study (e.g., brief quantitative electronic surveys versus in-depth, face-to-face interviews with key stakeholders). In addition, changes in traditional research evaluation and funding mechanisms may be required to support this approach to pilot study methodology.

CONCLUSION

Uninformative clinical trials are a major challenge in medicine. Carefully designed, conducted, and interpreted pilot studies can be important foundations for successful clinical trials. Using implementation science to guide these pre-RCT pilot studies may improve the value of investments in RCTs by addressing contextual factors influencing the informativeness of RCT results to the healthcare delivery community and its patients.

Compliance with Ethical Standards

Conflict of Interest

The authors have no relevant conflicts of interest to disclose.

References

1. Ioannidis JP. Why Most Clinical Research Is Not Useful. PLoS Med. 2016;13(6):e1002049. doi: 10.1371/journal.pmed.1002049.

2. Kistin C, Silverstein M. Pilot Studies: A Critical but Potentially Misused Component of Interventional Research. JAMA. 2015;314:1561–1562. doi: 10.1001/jama.2015.10962.

3. Zarin DA, Goodman SN, Kimmelman J. Harms from uninformative clinical trials. JAMA. 2019;322:813–814. doi: 10.1001/jama.2019.9892.

4. Alam N, Oskam E, Stassen PM, et al. Prehospital antibiotics in the ambulance for sepsis: a multicentre, open label, randomised trial. Lancet Respir Med. 2018;6(1):40–50. doi: 10.1016/S2213-2600(17)30469-1.

5. Wells M, Williams B, Treweek S, Coyle J, Taylor J. Intervention description is not enough: evidence from an in-depth multiple case study on the untold role and impact of context in randomised controlled trials of seven complex interventions. Trials. 2012;13:95. doi: 10.1186/1745-6215-13-95.

6. Haenssgen MJ, Charoenboon N, Do NTT, et al. How context can impact clinical trials: a multi-country qualitative case study comparison of diagnostic biomarker test interventions. Trials. 2019;20:111. doi: 10.1186/s13063-019-3215-9.

7. PRISM Investigators, Rowan KM, Angus DC, et al. Early, Goal-Directed Therapy for Septic Shock - A Patient-Level Meta-Analysis. N Engl J Med. 2017;376(23):2223–2234. doi: 10.1056/NEJMoa1701380.

8. Young P, Mackle D, Bellomo R, et al. Conservative oxygen therapy for mechanically ventilated adults with sepsis: a post hoc analysis of data from the intensive care unit randomized trial comparing two approaches to oxygen therapy (ICU-ROX). Intensive Care Med. 2020;46(1):17–26. doi: 10.1007/s00134-019-05857-x.

9. Eldridge SM, Chan CL, Campbell MJ, Bond CM, Hopewell S, Thabane L, et al. CONSORT 2010 statement: extension to randomised pilot and feasibility trials. BMJ. 2016;355:i5239. doi: 10.1136/bmj.i5239.

10. Moore CG, Carter RE, Nietert PJ, Stewart PW. Recommendations for planning pilot studies in clinical and translational research. Clin Transl Sci. 2011;4(5):332–337. doi: 10.1111/j.1752-8062.2011.00347.x.

11. Brownson RC, Colditz GA, Proctor EK. Dissemination and Implementation Research in Health: Translating Science to Practice. Oxford University Press; 2018.

12. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53. doi: 10.1186/s13012-015-0242-0.

13. Curran GM. Implementation science made too simple: a teaching tool. Implement Sci Commun. 2020;1:27. doi: 10.1186/s43058-020-00001-z.

14. Nilsen P, Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv Res. 2019;19:189. doi: 10.1186/s12913-019-4015-3.

15. Lee EC, Whitehead AL, Jacques RM, Julious SA. The statistical interpretation of pilot trials: should significance thresholds be reconsidered? BMC Med Res Methodol. 2014;14:41. doi: 10.1186/1471-2288-14-41.

16. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin Pol Ment Health. 2011;38:65–76. doi: 10.1007/s10488-010-0319-7.

17. Calvert M, Kyte D, Mercieca-Bebber R, et al. Guidelines for Inclusion of Patient-Reported Outcomes in Clinical Trial Protocols: The SPIRIT-PRO Extension. JAMA. 2018;319:483–494. doi: 10.1001/jama.2017.21903.

18. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.