Abstract

Charles W. Eriksen (1923–2018), long-time editor of Perception & Psychophysics (1971–1993) – the precursor to the present journal – undoubtedly made a profound contribution to the study of selective attention in the visual modality. Working primarily with neurologically normal adults, his early research provided both theoretical accounts for behavioral phenomena as well as robust experimental tasks, including the well-known Eriksen flanker task. The latter paradigm has been used and adapted by many researchers over the subsequent decades. While Eriksen’s research interests were primarily focused on situations of unimodal visual spatially selective attention, here I review evidence from those studies that have attempted to extend Eriksen’s general approach to non-visual (i.e., auditory and tactile) selection and the more realistic situations of multisensory spatial attentional selection.

Keywords: Eriksen, Flanker task, Zoom lens, Visual, Spatial attention, Crossmodal

Introduction: Visual spatially selective attention

As an undergraduate student studying Experimental Psychology at Oxford University at the end of the 1980s, I was taught, or rather tutored, by the likes of Alan Allport (e.g., Allport, 1992; Neumann, Van der Heijden, & Allport, 1986), Peter McLeod (e.g., McLeod, Driver, & Crisp, 1988), and the late Jon Driver (e.g., Driver, 2001; Driver, McLeod, & Dienes, 1992; Driver & Tipper, 1989). At the time, the study of visual attention was a core component of the Human Information Processing (HIP) course. The research of Steve Tipper (e.g., Tipper, 1985; Tipper, Driver, & Weaver, 1991; Tipper, Weaver, Jerreat, & Burak, 1994) and Gordon Baylis (e.g., Baylis & Driver, 1993), then both also based in the Oxford department, helped to keep the focus of attention research squarely on the visual modality. The spotlight of attention (note the distinctly visual metaphor; Eriksen & Hoffman, 1973), and the Eriksen flanker task (B. A. Eriksen & C. W. Eriksen, 1974), were often mentioned. Indeed, many an undergraduate essay discussed the modifications to the conception of visual selective attention that had been facilitated by developments added to the cognitive psychology paradigms introduced by Charles W. Eriksen and his collaborators in the 1970s and 1980s (see LaBerge, 1995; and Styles, 2006, for a review).

This change in focus from early auditory selective attention research (e.g., on, or at least inspired by, the cocktail party situation; Cherry, 1953; Conway, Cowan, & Bunting, 2001; Moray, 1959, 1969b; see Bronkhorst, 2000, for a review) was brought about, at least in part, by the arrival of the personal computer (see Styles, 2006). While similarities and differences between the mechanisms of selective attention operating in the auditory and visual modalities were occasionally commented on in the review papers that we were invited to read as undergraduates (e.g., see Moray, 1969a),1 the view of attention was seemingly only ever a unimodal, or unisensory, one. I have devoted my own research career to the question of attentional selection in a multisensory world (e.g., see Spence, 2013; Spence & Driver, 2004; Spence & Ho, 2015a, b).2 The question that I would like to address in this review, therefore, is how well Eriksen’s paradigms, not to mention the insights and theoretical accounts that were based on them subsequently, stand-up in a world in which spatial attentional selection is, in fact, very often multisensory (e.g., see Soto-Faraco, Kvasova, Biau, Ikumi, Ruzzoli, Morís-Fernández, & Torralba, 2019; Theeuwes, van der Burg, Olivers, & Bronkhorst, 2007).

The Eriksen flanker task

The Eriksen flanker task (Eriksen & Eriksen, 1974) was a popular paradigm amongst cognitive psychologists in Oxford and elsewhere. Indeed, as of the end of 2019, the paper had been cited more than 6,000 times. The original study involved participants making speeded discrimination responses to a visual target letter that was always presented from just above fixation, while trying to ignore any visual flanker stimuli that were sometimes presented to either side of the target. Of course, under such conditions, one can easily imagine how both overt and covert spatial attention would have been focused on the same external location (see Spence, 2014a, for a review). Indeed, the fixing of the target location was specifically designed to eliminate the search element that likely slowed participants’ responses in the other visual noise studies that were published at around the same time (e.g., Colegate, Hoffman, & Eriksen, 1973; Eriksen & Collins, 1969; Eriksen & Schultz, 1979; Estes, 1972). Treisman’s visual search paradigm, by contrast, focused specifically on the “search” element. That said, the distractors in the latter’s studies would very often share features with the target (see Treisman & Gelade, 1980; Treisman & Souther, 1985). The 1-s monocular presentation of the visual target and distractor letters in Eriksen and Eriksen’s (1974) study was achieved by means of a tachistoscope (at this point, the widespread introduction of the personal computer to the field of experimental psychology was still a few years off). The six participants (this being a number that would be unlikely to cut the mustard with assiduous reviewers these days) who took part in the study were instructed to pull a response lever in one direction for the target letters “H” and “K,” and to move the lever in the opposite direction if the target letters “S” and “C” should be presented at the target location instead. Eriksen and Eriksen (1974) varied whether or not there were any visual distractors, and if so, how they were related to the target (see Table 1 for a summary of the conditions tested in their study). Of particular interest, the distractor (or noise) letters could be congruent or incongruent with the target letter, or else unrelated (that is, not specifically associated with a response). The spatial separation between the seven letters in the visual display was varied on a trial-by-trial basis.

Table 1.

Experimental conditions and representative displays. [Reprinted from Eriksen & Eriksen (1974)]

| Condition | Example | ||||||

|---|---|---|---|---|---|---|---|

| 1 Noise same as target | H | H | H | H | H | H | H |

| 2 Noise response compatible | K | K | K | H | K | K | K |

| 3 Noise response incompatible | S | S | S | H | S | S | S |

| 4 Noise heterogeneous—Similar | N | W | Z | H | N | W | Z |

| 5 Noise heterogeneous—Dissimilar | G | J | Q | H | G | J | Q |

| 6 Target alone | H | ||||||

The results revealed that speeded target discrimination reaction times (RTs) decreased as the target-distractor separation increased (from 0.06°, 0.5°, to 1.0° of visual angle). As the authors put it: “In all noise conditions, reaction time (RT) decreased as between-letter spacing increased.” In fact, the interference effects were greatest at the smallest separation, with performance at the other two separations being more or less equivalent. Eriksen and Eriksen went on to say that: “However, noise letters of the opposite response set were found to impair RT significantly more than same response set noise, while mixed noise letters belonging to neither set but having set-related features produced intermediate impairment” (Eriksen & Eriksen, 1974, p. 143).

When thinking about how to explain their results, Eriksen and Eriksen (1974, p. 147) clearly stated that the slowing of participants’ performance was “not a sort of ‘distraction effect’.” Nowadays, I suppose, one might consider whether their effects might, at least in part, be explained in terms of crowding instead (e.g., Cavanagh, 2001; Tyler & Likova, 2007; Vatakis & Spence, 2006), given the small stimulus separations involved. At the time their study was published, the Eriksens, wife and husband, argued that their results were most compatible with a response compatibility explanation (see also Miller, 1991).

Subsequently, it has been noted that the participants in Eriksen and Eriksen’s (1974) original study could potentially have resolved the task that they had been given on the basis of simple feature discrimination (i.e., curved vs. angular lines), rather than by necessarily having to discriminate the letters themselves (see Watt, 1988, pp. 127-129).3 Addressing this potential criticism, C. W. Eriksen and B. A. Eriksen (1979) had their participants respond to the target letters “H” and “S” with one response key and to the letters “K” and “C” with the other. The results of increasing the target-distractor (or -noise) distance were, however, the same as in their original flanker study.

Another potential concern with the original Eriksen flanker task relates to the distinction between the effects of overt and covert visual attentional orienting (e.g., Remington, 1980; Shepherd, Findlay, & Hockey, 1986). Indeed, Hagenaar and van der Heijden (1986) suggested that the distance effects reported in Eriksen and Eriksen’s (1974) seminal study might actually have reflected little more than the consequences of differences in visual acuity. That is, they suggested that distant distractors might have interfered less simply because they were presented in regions of the visual field with lower acuity (see also Jonides, 1981a). That said, subsequent research by Yantis and Johnston (1990), Driver and Baylis (1991), and many others showed that acuity effects did not constitute the whole story as far as flanker interference is concerned. The latter researchers presented the target and distractor letters on a virtual circle centred on fixation (to equate acuity). By pre-cuing the likely target location, they were able to demonstrate an effect of target-distractor separation despite the fact that visual acuity was now equivalent for all stimuli (i.e., regardless of the distance between the target and distractors). Interestingly though, Driver and Baylis argued that their results did not fit easily with Treisman’s Feature Integration Theory (FIT; see Treisman & Gelade, 1980).4

In summary, while it is undoubtedly appropriate for researchers to try and eliminate the putative effects of changes in visual acuity on performance, and to try and ensure that the participants really are discriminating between letters rather than merely line features, the basic flanker effect has remained surprisingly robust to a wide range of experimental modifications (improvements) to Eriksen and Eriksen’s original design (see also Miller, 1988, 1991).

Elsewhere, my former supervisor, Jon Driver, used a modified version of the Eriksen flanker task in order to investigate questions of proximity versus grouping by common fate in the case of visual selective attention (Driver & Baylis, 1989). In this series of four studies, a row of five letters was presented, centered on fixation. Once again, the participant’s task involved trying to discriminate the identity of the central target letter, and ignore the pair of letters presented on either side. In this case, though, the target letter sometimes moved downward with the outer distractors, while the inner distractors remained stationary. The results revealed that the Gestalt grouping by common motion (e.g., Kubovy & Pomerantz, 1981; Spence, 2015; Wagemans, 2015) determined flanker interference rather than the absolute distance between the target and the distractor, as might have been suggested by a simple reading of the attentional spotlight metaphor. In a related vein, some years earlier, Harms and Bundesen (1983) had already demonstrated that flanker interference was reduced when the colour of the distractors was made different from that of the target stimulus.

The spotlight of visual attention

At around the same time that the Eriksen’s introduced their flanker interference task, Charles Eriksen and his colleagues were also amongst the first to start talking about the spotlight of spatial attention (Erikson & Hoffman, 1973; see also Broadbent, 1982; Klein & Hansen, 1990; LaBerge, Carlson, Williams, & Bunney, 1997; Posner, 1980; Posner, Snyder, & Davidson, 1980; Treisman & Gelade, 1980; Tsal, 1983). As Driver and Baylis (1991, p. 102) put it: “The crux of this metaphor is the idea that space is the medium for visual attention, which selects contiguous regions of the visual field, as if focusing some beam to illuminate an area in greater detail.”

Now, as might be expected, the notion of a contiguous uniform spotlight of visual attention was soon challenged from a number of directions. On the one hand, researchers, including Charles Eriksen, questioned the limits on its spatial distribution. The spotlight model of attention, and its successors (e.g., Shulman, Remington, & McLean, 1979), was often-discussed in Oxford tutorials. From a fixed spotlight model, with the spotlight also moving at a fixed and, according to Tsal (1983), measurable speed of 8 ms per degree of visual angle (see also Eriksen, & Murphy, 1987; Eriksen & Yeh, 1985; though see Eriksen & Webb, 1989; Kramer, Tham, & Yeh, 1991; Murphy & Eriksen, 1987; Remington & Pierce, 1984; Sagi & Julesz, 1985, for contrary findings) through to an adjustable beam (LaBerge, 1983) or “zoom lens” (Eriksen & St. James, 1986; Eriksen & Yeh, 1985; and other gradient-type models; Shulman, Sheehy, & Wilson, 1986).

The central idea behind Eriksen and St. James’ (1986) “zoom lens” model was that there was a fixed amount of attentional resources that could either be focused intensively over a narrow region of space, or else spread out more widely across the visual field. Subsequently, others came out with the rather more curious-sounding “donut” model (Müller & Hübner, 2002). If you were wondering, the latter was put forward to allow for the finding that attention could seemingly be divided between two different locations simultaneously (e.g., McMains & Somers, 2004; Müller, Malinowski, Gruber, & Hillyard, 2003; Tong, 2004).

Separate from the question of how attention is focused spatially, there was also a question of how attention moved between different locations, as when one probable target location was cued before another. However, as Eriksen and Murphy (1987, p. 303) noted early on when considering the seemingly contradictory evidence concerning whether visual spatial attention moves in a time-consuming and continuous manner or not: “How attention shifts from one locus to another in the visual field is still an open question. Not only is the experimental evidence contradictory, but the experiments are based on a string of tenuous assumptions that render interpretations of the data quite problematic.” As we will see below, though, this precautionary warning did not stop others from trying to extend the spotlight-type account beyond the visual modality.

One of the other uncertainties about the spotlight of attention subsequently concerned whether or not Posner’s “beam” (e.g., Posner, 1978, 1980) was the same as Treisman’s “glue” (e.g., Treisman, 1986). Perhaps there were actually multiple spatial spotlights of attention in mind. In this regard, informative research from Briand and Klein (1987) highlighted some important differences. The latter researchers concluding that only exogenous attentional orienting behaved like Treisman’s glue.

Flanker interference and perceptual load

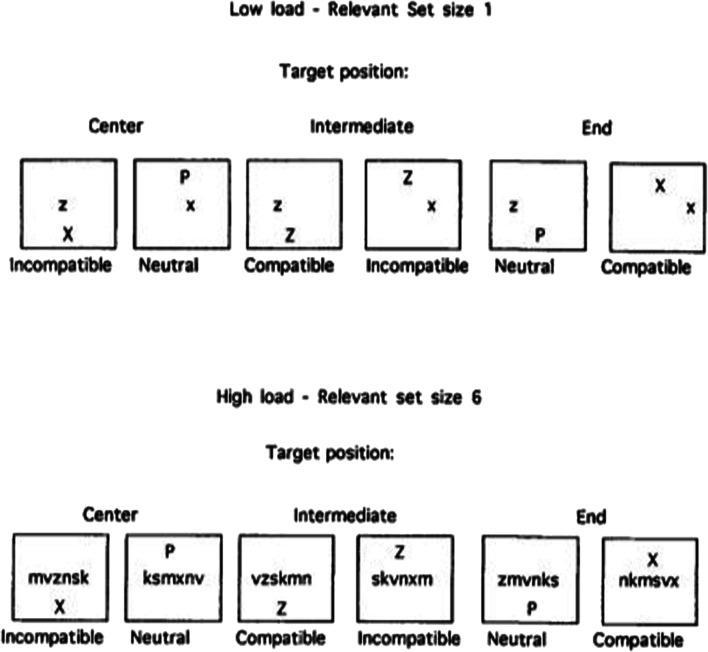

Lavie (1995; see also Lavie & Tsal, 1994) modelled a number of her early experiments on perceptual load on a modified version of Eriksen flanker task. Here, the perceptual load of the visual task was manipulated by, for example, increasing the number, and/or heterogeneity, of distractors presented in the display (see Fig. 1). The basic idea was that we have a fixed amount of attentional resources that need to be used at any one time (see also Miller, 1991, for an earlier consideration of perceptual load in the context of the Eriksen flanker task). Hence, if processing/perceptual load is high then attentional selection is likely to occur early, whereas if the perceptual load of the primary task is low, late selection might be observed instead. One challenge around load theory relates to the question of how to move beyond a merely operational definition of load. Another challenge has come from those researchers wondering whether attentional narrowing, rather than specifically attentional selection, might explain the results of manipulations of load (e.g., Beck & Lavie, 2005; Van Steenbergen, Band, & Hommel, 2011). The latter concern was often raised in response to the fact that, just as in Eriksen and Eriksen’s (1974) original flanker study, the relevant target stimuli were typically always presented from fixation, or else very close to it.

Fig. 1.

Experimental stimuli used in Lavie’s (1995, Fig. 1) Experiment 1. The participants had to make speeded discrimination responses concerning whether the target letter presented in the middle row of the display was an “X” or a “Z.” Meanwhile a distractor stimulus was presented unpredictably from either above or below the middle row

Interim summary

Ultimately, the primarily spatial account of attentional selection stressed by much of Eriksen’s early research came to be challenged by other findings that started to emerge highlighting the object-based nature of visual selection (e.g., Baylis & Driver, 1993; Duncan, 1984; Treisman, Kahneman, & Burkell, 1983; Shinn-Cunningham, 2008; Tipper et al., 1991, 1994). While the latter research by no means eliminated the important role played by space in attentional selection, it nevertheless highlighted that in those environments in which objects are present in the scene/display, object-based selection might win out over a straight space-based account (Abrams & Law, 2000; Lavie & Driver, 1996; Richard, Lee, & Vecera, 2008; see Chen, 2012, for a review). Before moving on, it is perhaps also worth noting that while C. W. Eriksen’s interests primarily lay with trying to understand spatial attentional selection in the normal brain, many other researchers, including a number of my former collaborators here in Oxford, subsequently took Eriksen’s approach as a basis for trying to understand how mechanisms of selective attention might suffer following brain damage such as stroke or neglect (see Driver, 1998, for a review).

Extending Eriksen’s approach beyond vision

Taking the three key ideas from Eriksen’s work that have been discussed so far,5 the Eriksen flanker task, the idea of a spatial attentional spotlight (remaining agnostic, for now, about its precise shape), and the notion that it might take time for spatial attention to move from one location to another, I will now take a look at how these ideas were extended to the auditory and tactile modalities in those wanting to study attentional selection beyond vision. One point to highlight at the outset here when comparing the same, or similar, behavioral task when presented in different senses is the differing spatial resolution typically encountered in vision, audition, and touch. For instance, resolution at, or close to, the fovea, where the vast majority of the visual flanker interference research has been conducted to date, tends to be much better than at the fingertip, where the majority of the tactile research has been conducted (see Gallace & Spence, 2014), or in audition. At the same time, however, it is also worth bearing in mind the very dramatic fall-off in spatial resolution that is seen in the visual modality as one moves out from the fovea into the periphery. A similar marked decline has also been documented in the tactile modality when stimuli are presented away from the fingertips (e.g., Stevens & Choo, 1996; Weinstein, 1968), what Finnish architect Juhani Pallasmaa (1996) once called “the eyes of the skin.” One of the most relevant questions, therefore, in what follows, is what determines the resolution of the spatial spotlight in the cases of selection within, and also between, different sensory modalities.

One other related, though presumably not quite synonymous, difference between the spatial senses that is worth keeping in mind here relates to their differing bandwidths. Zimmerman (1989) estimated the channel capacities as 107 bits/s for the visual modality, 105 for auditory modality, and 106 bits/s for touch. However, in terms of effective psychophysical channel capacity (presumably a more appropriate metric when thinking about flanker interference as assessed in psychophysical tasks), Zimmerman estimated these figures at 40 (vision), 30 (audition), and 5 (touch) bits/s (see also Gallace, Ngo, Sulaitis, & Spence, 2012).

The non-visual flanker task

As researchers started to consider attentional selection outside the visual modality, it was natural to try and adapt Eriksen’s robust spatial tasks to the auditory and tactile modalities – that is, to the other spatial senses (e.g., Chan, Merrifield, & Spence, 2005; Gallace, Soto-Faraco, Dalton, Kreukniet, & Spence, 2008; Soto-Faraco, Ronald, & Spence, 2004). Importantly, however, extending the flanker interference task into the other spatial senses raised its own problems. For instance, as we have just seen, the auditory modality is generally less acute in the spatial domain and more acute in the temporal dimension (e.g., Julesz & Hirsh, 1972; Welch, DuttonHurt, & Warren, 1986). At the same time, however, moving beyond a unimodal visual setting also raises some intriguing possibilities as far as the empirical research questions that could be addressed were concerned (such as, for instance, the nature of the spatial representation on which the spotlight of attention operates).

Chan et al. (2005) adapted the Eriksen flanker task to the auditory modality. These researchers had their participants sit in front of a semi-circular array of five loudspeaker cones. The participant’s task in Chan et al.’s first experiment involved trying to discriminate the identity of the target word (“bat” vs. “bed”) presented from the central loudspeaker situated directly behind fixation, while trying to ignore the identity of the auditory distractor words (spoken by a different person) presented from one of the two loudspeakers positioned equidistant 30° to either side of fixation (note, here, the much larger spatial separation in audition than typically seen in visual studies). The results revealed a robust flanker interference effect, with speeded discrimination responses to the target being significantly slower (and much less accurate) if the distractor voices repeated the non-target (incongruent) word as compared to when repeating the target word instead.6

Intriguingly, a second experiment revealed little variation in the magnitude of the auditory flanker interference effect as a function of whether the distractors were placed 30°, 60°, or 90° from the central target loudspeaker (with distance varied unpredictably on a trial-by-trial basis). This result suggests a very different spatial fall-off in distractor interference as compared to what had been reported in Eriksen and Eriksen’s (1974) original visual study. Their response compatibility effects fell off within 1° of visual angle of the target location. One account for such between-modality effects might simply be framed in terms of differences in spatial resolution between the senses involved. However, another important difference between the auditory and the visual versions of the Eriksen flanker interference task that it is important to bear in mind here is that in the former case both energetic and informational masking effects may be compromising auditory performance (Arbogast, Mason, & Kidd, 2002; Brungart, Simpsom, Ericson, & Scott, 2001; Kidd, Jr., Mason, Rohtla, & Deliwala, 1998; Leek, Brown, & Dorman, 1991). By contrast, in the visual studies, any interference is attributable only to informational masking. Note that energetic masking is attributable to the physical overlap of the auditory signals in space/time. Informational masking, by contrast, is attributable to the informational content conveyed by the stimuli themselves (e.g., Lidestam, Holgersson, & Moradi, 2014).

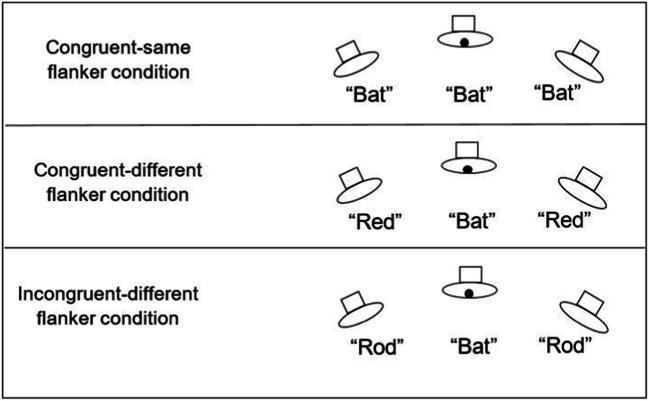

In order to address the concern over an energetic masking account, in a third experiment Chan and his colleagues (2005) had two words associated with one response and another two words with another response (see Fig. 2). Intriguingly, participants’ performance in the Congruent-same and the Congruent-different conditions was indistinguishable and, in both cases, it was much better than the performance seen in the Incongruent-different condition. This despite the fact that any energetic masking effects should have been matched in the latter two conditions.

Fig. 2.

The three experimental conditions used in Chan et al.’s (2005) Experiment 3, an auditory version of the Eriksen flanker task (response key 1 = “Bat” and “Red” Response key 2 = “Rod” and “Bed”)

Although still present, concerns about the impact of visual fixation on auditory selection have been less of a concern than was the case in the visual modality (though see Reisberg, 1978; Reisberg, Scheiber, & Potemken, 1981; Spence, Ranson, & Driver, 2000c). Nevertheless, when the flanker interference paradigm was adapted to the tactile modality, the targets and distractors have nearly always been presented equidistant from central visual fixation. In this case, the target stimulus was presented to the finger or thumb of one hand while the distractor stimulus was presented to the finger or thumb of the other hand. Once again, robust distractor interference effects were observed (e.g., Soto-Faraco et al., 2004). In the tactile interference case, however, one of the intriguing new questions that we were able to address concerned what happens when the separation between the participant’s hands was varied, while keeping the skin sites stimulated constant. The results of a series of such laboratory experiments demonstrated that it was the separation in external space, rather than the somatotopic separation (i.e., the distance across the skin surface), that primarily determined how difficult participants found it to ignore the vibrotactile distractors. Intriguingly, however, subsequent research using variants of the same intramodal tactile paradigm (Gallace et al., 2008) went on to reveal that compatibility effects could be minimized simply by having the participants respond vocally/verbally rather than by depressing, or releasing, one of two response buttons/foot-pedals (cf. Eriksen and Hoffman, 1972a, b). Notice how the use of a vocal response removes any spatial component from the pattern of responding.

Does the spotlight of attention operate outside the visual modality?

In recent decades, a number of researchers have taken the spatial spotlight metaphor and extended it beyond the visual modality (e.g., Lakatos & Shepard, 1997; Rhodes, 1987; Rosli, Jones, Tan, Proctor, & Gray, 2009; see also Rosenbaum, Hindorff, & Barnes, 1986). For instance, in the study reported by Rhodes, participants had to specify the location of a target sound by means of a learned verbal label. A series of evenly-spaced locations around the participant were each associated with numbers in a conventional sequence (1, 2, 3, etc.). The latency of the verbal localizing response on a given trial increased linearly with the distance of the target from its position on the preceding trial. Rhodes argued that this increase reflected the time taken to shift the spatial spotlight of attention between locations and therefore implied that attention moved through empty space at a constant rate (as had been suggested previously by Tsal, 1983, for vision; see also Shepherd & Müller, 1989). However, the movement could as well have been along some numerical, rather than spatial, representation.

At this point, it is worth stressing that space is not intrinsically relevant to the auditory modality in quite the same way (Rhodes, 1987). Hence, according to certain researchers, attentional selection is perhaps better thought of as frequency-based rather than as intrinsically space-based (e.g., Handel, 1988a,b; Kubovy, 1988). Yet, at the same time, it is also clear that we do integrate auditory, visual, and tactile stimuli spatially. The vibration I feel, the ringtone I hear, all seem to come from the mobile device I see resting in my palm. That is, multisensory feature binding would appear to give rise to what feels like multisensory object representations. While the phenomenology of multisensory objecthood (O’Callaghan, 2014) is not in doubt (though see Spence & Bayne, 2015), little thought has seemingly been given over to the question of how such binding is achieved, especially in the complex multisensory scenes of everyday life. Think here only of the famous cocktail party situation (see Spence, 2010b; Spence & Frings, 2020). Intriguingly, Cinel, Humphreys, and Poli (2002) conducted one of the few studies to have demonstrated illusory conjunctions between visual and tactile stimuli presented at, or near to, the fingertips.

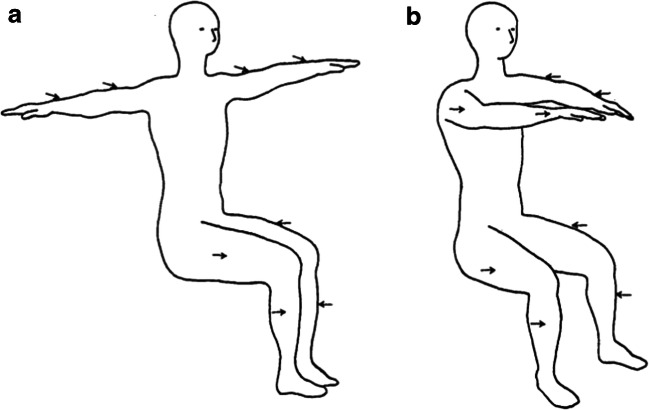

Lakatos and Shepard (1997) asked a similar question in the tactile modality (see Fig. 3). First, one of eight locations was identified verbally. Two seconds later, a second location was also identified verbally. At the same time, air-puff stimuli were presented from four of the eight possible locations distributed across the participant’s body surface. The latter had to respond in a forced choice manner as to whether an air-puff stimulus had been presented from the second-named location. In order to try and ensure that the participants did indeed focus their attention on the first-named location, the first- and second-named locations were the same on 70% of the trials. On the remaining 30% of the trials, the second location was picked at random from one of the remaining seven positions. Once again, the question of interest was whether RTs would increase in line with the distance that the putative attentional spotlight had to move through space. The results revealed a clear linear effect of distance on RTs. In a second experiment, when a different posture was adopted (again see Fig. 3), the results suggested that it was straight-line distance between the named locations that determined RTs.

Fig. 3.

Arrows indicating the position from which air-puff stimuli could be presented in Lakatos and Shepard’s (1997) study of tactile spatial attentional shifts. By varying the participant’s posture, it was possible to demonstrate that it was straight-line distance through space that mattered more to reaction times than necessarily distance across the body surface [Figure reprinted from Lakatos and Shepard (1997, Fig. 3)]

Given that spatial acuity varies so dramatically across the body surface (e.g., Stevens & Choo, 1996; Weinstein, 1968), one interesting question to consider here is whether similar speeds of movement would also be documented in areas of higher tactile spatial resolution, such as, for example, within the fingers/hand. I am not, however, aware of anyone having addressed the question of what role, if any, spatial resolution has on the speed of the spotlight’s spatial movement across a given representation of space (see also Gallace & Spence, 2014).

Another important issue relates to the differing spatial resolution documented in the different senses. In, or close to, foveal vision, where the vast majority of visual selection studies have been conducted, spatial resolution is undoubtedly much better than for the other spatial senses of hearing and touch. Indeed, the spatial separation between target and distractor locations is always much, much larger in the case of auditory or tactile versions of the flanker task, though typically little mention is made of this fact. The lower spatial resolution when one moves away from the situation mostly studied with foveal vision will likely reduce the signal/noise ratio associated with any given stimulus event, thereby presumably increasing the processing time needed to identify any particular stimulus event. Such issues are clearly important when it comes to a consideration of multisensory selection, as discussed briefly below. To put the question bluntly, one might wonder what is the effective spatial resolution for the spotlight of attention, say, when dealing with multisensory inputs? At the same time, however, it is also worth stressing that in everyday life much of our multisensory information processing presumably takes place outside of foveal vision, where the spatial resolution of the spatial senses (vision, audition, and touch) often turn out to be much more evenly matched.

Multisensory selection

The crossmodal congruency task

Having taken flanker interference out of the unisensory visual setting into the unisensory auditory and tactile modalities, it then became only natural to ask the question about crossmodal attentional selection in the distractor interference setting. This led to the emergence of the widely used crossmodal congruency task (CCT; Pavani, Spence, & Driver, 2000; Spence, Pavani, & Driver, 1998, 2004b; see Spence, Pavani, Maravita, & Holmes, 2008, for a review). In the basic version of the paradigm, participants are required to discriminate the elevation of vibrotactile targets presented to the index finger or thumb of either hand, while at the same time trying to ignore the visual distractors (so, presented in a different modality from the target) presented from an upper or lower LED situated on either the same or the opposite hand (see Fig. 4). Typically, the onset of the distractors precedes that of the targets by about 30 ms (cf. Gathercole & Broadbent, 1987). A robust crossmodal response compatibility effect, often referred to as the crossmodal congruency effect, or CCE for short, has been documented across a wide range of stimulus conditions.

Fig. 4.

Schematic view of the apparatus and participant in a typical study of the crossmodal congruency task. The participant holds a foam cube in each hand. Two vibrotactile stimulators and two visual distractor lights (zig-zag-shaded rectangles and filled circles, respectively, in the enlarged inset) are embedded in each foam block, positioned next to the participant’s thumb or index finger. Note that white noise is presented continuously over headphones to mask the sound of the operation of the vibrotactile stimulators and foot-pedals. The participants made speeded elevation discrimination responses (by raising the toes or heel of the right foot) in response to vibrotactile targets presented either from the “top” by the index finger of one or the other hand or from the “bottom” by one or the other thumb, respectively

There have since been many studies using the crossmodal congruency task (first presented at the 1998 meeting of the Psychonomic Society; Spence, Pavani, & Driver, 1998). What is more, similar, if somewhat smaller, interference effects can also be obtained if the target and distractor modalities are reversed such that participants now have to respond to discriminate the elevation of visual targets while attempting to ignore the location of vibrotactile distractors (Spence & Walton, 2005; Walton & Spence, 2004). A few researchers have also demonstrated crossmodal congruency effects between auditory and tactile elevation cues (Merat, Spence, Lloyd, Withington, & McGlone, 1999; see also Occelli, Spence, & Zampini, 2009). Intriguingly, however, one of the important differences between the crossmodal and intramodal versions of the flanker task is that perceptual interactions (i.e., the ventriloquism effect and/or multisensory integration) may account for a part of the distractor interference effect in the crossmodal case (e.g., Marini, Romano, & Maravita, 2017; Shore, Barnes, & Spence, 2006). By contrast, spatial ventriloquism and multisensory integration presumably play no such role in the intramodal visual Eriksen flanker task.

A multisensory spotlight of attention

Eventually, the spotlight metaphor made it into the world of multisensory and crossmodal attention research (e.g., Buchtel & Butter, 1988; Butter, Buchtel, & Santucci, 1989; Farah, Wong, Monheit, & Morrow, 1989; Posner, 1990; Ward, 1994). In the case of exogenous spatial attention orienting, Farah et al. (1989, p. 462) suggested that there may be “a single supramodal subsystem that allocates attention to locations in space regardless of the modality of the stimulus being attended, modulating perception as a function of location across modalities” (see also Spence, Lloyd, McGlone, Nicholls, & Driver, 2000a; Spence, McDonald, & Driver, 2004a; Spence, 2010a). By contrast, in the case of endogenous spatial attention (Jonides, 1981b), much of the spatial attention research subsequently switched the focus to the question of whether the spotlight of attention could be split between different locations, in different modalities simultaneously (e.g., Lloyd, Merat, McGlone, & Spence, 2003; Spence & Driver, 1996; Spence, Pavani, & Driver, 2000b). Much of the experimental evidence supported the view that while endogenous spatial attention could be split between different locations, there were likely to be significant performance costs (picked up as a drop in the speed or accuracy of participants’ responses; see Driver & Spence, 2004, for a review). Such results, note, are seemingly inconsistent with Posner’s (1990) early suggestion that modality-specific attentional spotlights might be organized hierarchically under an overarching multisensory attentional spotlight.

Conclusions

To conclude, Eriksen’s seminal research in the 1970s and 1980s was focused squarely on questions of visual spatially selective attention in neurologically healthy adult participants. His theoretical accounts of attention operating as a zoom lens undoubtedly generated much subsequent empirical research (e.g., Chen & Cave, 2014). What is more, versions of the Eriksen flanker paradigm have often been used by researchers working across cognitive psychology, and specifically attention research. At the same time, however, a number of esearchers have subsequently attempted to extend Eriksen’s theoretical approach/experimental paradigms out of the visual modality into the other spatial senses, namely audition and touch, but there have been challenges. Researchers, including your current author, have been able to make what seem like useful predictions into the multisensory situations of selection that are perhaps more representative of what happens in everyday life.

Ultimately, therefore, I would like to argue that C. W. Eriksen’s primarily visual focus can, and has, by now been successfully extended to the case of non-visual and multisensory selective attention. At the same time, however, it is important to be cognizant of differences in the representation of space outside vision, as well as the other salient differences in information processing capacity that likely make any simple comparison across the senses less than straightforward.

For the vision scientist, one might want to know what additional insights are to be gained from the extension of the Eriksen flanker task outside its original unisensory visual setting? One conclusion must undoubtedly be that the spotlight of attention should not be considered as operating on the space provided by a given modality of sensory receptors (such as the retinal array). Rather, the spotlight of attention would appear to operate on a higher-level representation of environmental space that presumably results from the integration of inputs from the different spatial senses, presumably incorporating proprioceptive inputs too (see Spence & Driver, 2004). At the same time, however, that also leaves open the question of the spatial resolution of this multisensory representation, given the very apparent differences in resolution that have been highlighted by the various unisensory studies of Eriksen flanker interference in the visual, auditory, and tactile modalities. This remains an intriguing question for future research (see Chong & Mattingley, 2000; Spence & Driver, 2004; Spence, McDonald, & Driver, 2004a, for a discussion of this issue in the context of crossmodal exogenous spatial cuing of attention; cf. Stewart & Amitay, 2015).

Open Practices Statement

As this is a review paper, there are no original data or materials to share.

Footnotes

Julesz and Hirsh (1972) are amongst the researchers interested in considering the similarities and differences between auditory and visual information processing. See Hsiao (1998) for a similar comparison of visual and tactile information processing.

The switch from unisensory to multisensory attention research motivated by my then supervisor, Jon Driver’s, broken TV. The sound would emerge from the hi-fi loudspeakers in his cramped Oxford bedsit giving rise to an intriguing ventriloquism illusion (see Driver & Spence, 1994; Spence, 2013, 2014b). That said, there was also some more general interest emerging at the time in trying to extend the Stroop (Cowan, 1989a, b; Cowan & Barron, 1987; Miles & Jones, 1989; Miles, Madden, & Jones, 1989) and negative priming paradigms (Driver & Baylis, 1993) from their original unisensory visual setting to a crossmodal, specifically audiovisual, one.

This distinction is important in terms of Treisman’s FIT, while makes a meaningful distinction, note, between the processing of features and feature conjunctions (e.g., Treisman & Gelade, 1980).

The reason, at least according to Driver and Baylis (1991), being that FIT predicts a distance effect for feature conjunctions but not for feature singletons, which are thought to be processed in parallel across the entire display. In fact, under their specific presentation conditions, Driver and Baylis obtained the opposite result, hence seemingly incongruent with Treisman’s account (see also Shulman, 1990).

There were, or course, many more findings, but covering any of them more fully falls beyond the scope of the present article.

Note that in this study RT and proportion correct were combined into a single measure of performance, namely inverse efficiency (see Townsend & Ashby, 1978, 1983).

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Abrams RA, Law MB. Object-based visual attention with endogenous orienting. Perception & Psychophysics. 2000;62:818–833. doi: 10.3758/bf03206925. [DOI] [PubMed] [Google Scholar]

- Allport DA. Selection and control: A critical review of 25 years. In: Meyer DE, Kornblum S, editors. Attention and performance: Synergies in experimental psychology, artificial intelligence, and cognitive neuroscience. Hillsdale, NJ: Erlbaum; 1992. pp. 183–218. [Google Scholar]

- Arbogast TL, Mason CR, Kidd G., Jr The effect of spatial separation on informational and energetic masking of speech. Journal of the Acoustical Society of America. 2002;112:2086–2098. doi: 10.1121/1.1510141. [DOI] [PubMed] [Google Scholar]

- Baylis GC, Driver J. Visual attention and objects: Evidence for hierarchical coding of location. Journal of Experimental Psychology: Human Perception and Performance. 1993;19:451–470. doi: 10.1037//0096-1523.19.3.451. [DOI] [PubMed] [Google Scholar]

- Beck DM, Lavie N. Look here but ignore what you see: Effects of distractors at fixation. Journal of Experimental Psychology: Human Perception and Performance. 2005;31:592–607. doi: 10.1037/0096-1523.31.3.592. [DOI] [PubMed] [Google Scholar]

- Briand KA, Klein RM. Is Posner's "beam" the same as Treisman's "glue"?: On the relation between visual orienting and feature integration theory. Journal of Experimental Psychology: Human Perception and Performance. 1987;13:228–241. doi: 10.1037//0096-1523.13.2.228. [DOI] [PubMed] [Google Scholar]

- Broadbent DE. Task combination and selective intake of information. Acta Psychologica. 1982;50:253–290. doi: 10.1016/0001-6918(82)90043-9. [DOI] [PubMed] [Google Scholar]

- Bronkhorst A. The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions. Acustica. 2000;86:117–128. [Google Scholar]

- Brungart DS, Simpsom BD, Ericson MA, Scott KR. Informational and energetic masking effects in the perception of multiple simultaneous talkers. Journal of the Acoustical Society of America. 2001;110:2527–2538. doi: 10.1121/1.1408946. [DOI] [PubMed] [Google Scholar]

- Buchtel HA, Butter CM. Spatial attention shifts: Implications for the role of polysensory mechanisms. Neuropsychologia. 1988;26:499–509. doi: 10.1016/0028-3932(88)90107-8. [DOI] [PubMed] [Google Scholar]

- Butter CM, Buchtel HA, Santucci R. Spatial attentional shifts: Further evidence for the role of polysensory mechanisms using visual and tactile stimuli. Neuropsychologia. 1989;27:1231–1240. doi: 10.1016/0028-3932(89)90035-3. [DOI] [PubMed] [Google Scholar]

- Cavanagh P. Seeing the forest but not the trees. Nature Neuroscience. 2001;4:673–674. doi: 10.1038/89436. [DOI] [PubMed] [Google Scholar]

- Chan JS, Merrifield K, Spence C. Auditory spatial attention assessed in a flanker interference task. Acta Acustica. 2005;91:554–563. [Google Scholar]

- Chen Z. Object-based attention: A tutorial review. Attention, Perception, & Psychophysics. 2012;74:784–802. doi: 10.3758/s13414-012-0322-z. [DOI] [PubMed] [Google Scholar]

- Chen Z, Cave KR. Constraints on dilution from a narrow attentional zoom reveal how spatial and color cues direct selection. Vision Research. 2014;101:125–137. doi: 10.1016/j.visres.2014.06.006. [DOI] [PubMed] [Google Scholar]

- Cherry EC. Some experiments upon the recognition of speech with one and two ears. Journal of the Acoustical Society of America. 1953;25:975–979. [Google Scholar]

- Chong, T., & Mattingley, J. B. (2000). Preserved cross-modal attentional links in the absence of conscious vision: Evidence from patients with primary visual cortex lesions. Journal of Cognitive Neuroscience, 12 (Supp.), 38.

- Cinel C, Humphreys GW, Poli R. Cross-modal illusory conjunctions between vision and touch. Journal of Experimental Psychology: Human Perception & Performance. 2002;28:1243–1266. doi: 10.1037//0096-1523.28.5.1243. [DOI] [PubMed] [Google Scholar]

- Colegate RL, Hoffman JE, Eriksen CW. Selective encoding from multielement visual displays. Perception & Psychophysics. 1973;14:217–224. [Google Scholar]

- Conway ARA, Cowan N, Bunting MF. The cocktail party phenomenon revisited: The importance of working memory capacity. Psychonomic Bulletin & Review. 2001;8:331–335. doi: 10.3758/bf03196169. [DOI] [PubMed] [Google Scholar]

- Cowan N. The reality of cross-modal Stroop effects. Perception & Psychophysics. 1989;45:87–88. doi: 10.3758/bf03208039. [DOI] [PubMed] [Google Scholar]

- Cowan N. A reply to Miles, Madden, and Jones (1989): Mistakes and other flaws in the challenge to the cross-modal Stroop effect. Perception & Psychophysics. 1989;45:82–84. doi: 10.3758/bf03208036. [DOI] [PubMed] [Google Scholar]

- Cowan N, Barron A. Cross-modal, auditory-visual Stroop interference and possible implications for speech memory. Perception & Psychophysics. 1987;41:393–401. doi: 10.3758/bf03203031. [DOI] [PubMed] [Google Scholar]

- Driver J. The neuropsychology of spatial attention. In: Pashler H, editor. Attention. Hove, East Sussex: Psychology Press; 1998. pp. 297–340. [Google Scholar]

- Driver J. A selective review of selective attention research from the past century. British Journal of Psychology. 2001;92(1):53–78. [PubMed] [Google Scholar]

- Driver J, Baylis GC. Movement and visual attention: The spotlight metaphor breaks down. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:448–456. doi: 10.1037//0096-1523.15.3.448. [DOI] [PubMed] [Google Scholar]

- Driver J, Baylis GC. Target-distractor separation and feature integration in visual attention to letters. Acta Psychologica. 1991;76:101–119. doi: 10.1016/0001-6918(91)90040-7. [DOI] [PubMed] [Google Scholar]

- Driver J, Baylis GC. Cross-modal negative priming and interference in selective attention. Bulletin of the Psychonomic Society. 1993;31:45–48. [Google Scholar]

- Driver J, Spence C. Spatial synergies between auditory and visual attention. In: Umiltà C, Moscovitch M, editors. Attention and performance XV: Conscious and nonconcious information processing. Cambridge, MA: MIT Press; 1994. pp. 311–331. [Google Scholar]

- Driver J, Spence C. Crossmodal spatial attention: Evidence from human performance. In: Spence C, Driver J, editors. Crossmodal space and crossmodal attention. Oxford, UK: Oxford University Press; 2004. pp. 179–220. [Google Scholar]

- Driver J, Tipper SP. On the nonselectivity of 'selective' seeing: Contrasts between interference and priming in selective attention. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:304–314. [Google Scholar]

- Driver J, McLeod P, Dienes Z. Motion coherence and conjunction search: Implications for guided search theory. Perception & Psychophysics. 1992;51:79–85. doi: 10.3758/bf03205076. [DOI] [PubMed] [Google Scholar]

- Duncan J. Selective attention and the organization of visual information. Journal of Experimental Psychology: General. 1984;113:501–517. doi: 10.1037//0096-3445.113.4.501. [DOI] [PubMed] [Google Scholar]

- Eriksen BA, Eriksen CW. Effects of noise letters upon the identification of a target letter in a nonsearch task. Perception & Psychophysics. 1974;16:143–149. [Google Scholar]

- Eriksen BA, Hoffman JE. Some characteristics of selective attention in visual perception determined by vocal reaction time. Perception & Psychophysics. 1972;11:169–171. [Google Scholar]

- Eriksen CW, Collins JF. Temporal course of selective attention. Journal of Experimental Psychology. 1969;80:254–261. doi: 10.1037/h0027268. [DOI] [PubMed] [Google Scholar]

- Eriksen CW, Eriksen BA. Target redundancy in visual search: Do repetitions of teh target within the display impair processing? Perception & Psychophysics. 1979;26:195–205. [Google Scholar]

- Eriksen CW, Hoffman JE. Temporal and spatial characteristics of selective encoding from visual displays. Perception & Psychophysics. 1972;11:201–204. [Google Scholar]

- Eriksen CW, Hoffman JE. The extent of processing of noise elements during selective encoding from visual displays. Perception & Psychophysics. 1973;14:155–160. [Google Scholar]

- Eriksen CW, Murphy T. Movement of the attentional focus across the visual field: A critical look at the evidence. Perception & Psychophysics. 1987;42:299–305. doi: 10.3758/bf03203082. [DOI] [PubMed] [Google Scholar]

- Eriksen CW, Schultz DW. Information processing in visual search: A continuous flow conception and experimental results. Perception & Psychophysics. 1979;25:249–263. doi: 10.3758/bf03198804. [DOI] [PubMed] [Google Scholar]

- Eriksen CW, St. James JD. Visual attention within and around the field of focal attention: A zoom lens model. Perception & Psychophysics. 1986;40:225–240. doi: 10.3758/bf03211502. [DOI] [PubMed] [Google Scholar]

- Eriksen CW, Webb JM. Shifting of attentional focus within and about a visual display. Perception & Psychophysics. 1989;45:175–183. doi: 10.3758/bf03208052. [DOI] [PubMed] [Google Scholar]

- Eriksen CW, Yeh Y-Y. Allocation of attention in the visual field. Journal of Experimental Psychology: Human Perception and Performance. 1985;11:583–597. doi: 10.1037//0096-1523.11.5.583. [DOI] [PubMed] [Google Scholar]

- Estes WK. Interactions of signal and background variables in visual processing. Perception & Psychophysics. 1972;12:278–286. [Google Scholar]

- Farah MJ, Wong AB, Monheit MA, Morrow LA. Parietal lobe mechanisms of spatial attention: Modality-specific or supramodal? Neuropsychologia. 1989;27:461–470. doi: 10.1016/0028-3932(89)90051-1. [DOI] [PubMed] [Google Scholar]

- Gallace A, Spence C. In touch with the future: The sense of touch from cognitive neuroscience to virtual reality. Oxford, UK: Oxford University Press; 2014. [Google Scholar]

- Gallace A, Soto-Faraco S, Dalton P, Kreukniet B, Spence C. Response requirements modulate tactile spatial congruency effects. Experimental Brain Research. 2008;191:171–186. doi: 10.1007/s00221-008-1510-x. [DOI] [PubMed] [Google Scholar]

- Gallace A, Ngo MK, Sulaitis J, Spence C. Multisensory presence in virtual reality: Possibilities & limitations. In: Ghinea G, Andres F, Gulliver S, editors. Multiple sensorial media advances and applications: New developments in MulSeMedia. Hershey, PA: IGI Global; 2012. pp. 1–38. [Google Scholar]

- Gathercole SE, Broadbent DE. Spatial factors in visual attention: Some compensatory effects of location and time of arrival of nontargets. Perception. 1987;16:433–443. doi: 10.1068/p160433. [DOI] [PubMed] [Google Scholar]

- Hagenaar R, van der Heijden AHC. Target-noise separation in visual selective attention. Acta Psychologica. 1986;62:161–176. doi: 10.1016/0001-6918(86)90066-1. [DOI] [PubMed] [Google Scholar]

- Handel S. Space is to time as vision is to audition: Seductive but misleading. Journal of Experimental Psychology: Human Perception and Performance. 1988;14:315–317. doi: 10.1037//0096-1523.14.2.315. [DOI] [PubMed] [Google Scholar]

- Handel S. No one analogy is sufficient: Rejoinder to Kubovy. Journal of Experimental Psychology: Human Perception and Performance. 1988;14:321. doi: 10.1037//0096-1523.14.2.315. [DOI] [PubMed] [Google Scholar]

- Harms L, Bundesen C. Color segregation and selective attention in a nonsearch task. Perception & Psychophysics. 1983;33:11–19. doi: 10.3758/bf03205861. [DOI] [PubMed] [Google Scholar]

- Hsiao SS. Similarities between touch and vision. Advances in Psychology. 1998;127:131–165. [Google Scholar]

- Jonides J. Towards a model of the mind's eye's movement. Canadian Journal of Psychology. 1981;34:103–112. doi: 10.1037/h0081031. [DOI] [PubMed] [Google Scholar]

- Jonides J. Voluntary versus automatic control over the mind's eye's movement. In: Long J, Baddeley A, editors. Attention and performance. Hillsdale, NJ: Erlbaum; 1981. pp. 187–203. [Google Scholar]

- Julesz B, Hirsh IJ. Visual and auditory perception - An essay of comparison. In: David EE Jr, Denes PB, editors. Human communication: A unified view. New York, NY: McGraw-Hill; 1972. pp. 283–340. [Google Scholar]

- Kidd G, Jr, Mason CR, Rohtla TL, Deliwala PS. Release from masking due to spatial separation of sources in the identification of nonspeech auditory patterns. Journal of the Acoustical Society of America. 1998;104:422–431. doi: 10.1121/1.423246. [DOI] [PubMed] [Google Scholar]

- Klein R, Hansen E. Chronometric analysis of apparent spotlight failure in endogenous visual orienting. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:790–801. doi: 10.1037//0096-1523.16.4.790. [DOI] [PubMed] [Google Scholar]

- Kramer AF, Tham MP, Yeh YY. Movement and focused attention: A failure to replicate. Perception & Psychophysics. 1991;50:537–546. doi: 10.3758/bf03207537. [DOI] [PubMed] [Google Scholar]

- Kubovy M. Should we resist the seductiveness of the space:time::vision:audition analogy? Journal of Experimental Psychology: Human Perception and Performance. 1988;14:318–320. [Google Scholar]

- Kubovy M, Pomerantz JJ, editors. Perceptual organization. Hillsdale, NJ: Erlbaum; 1981. [Google Scholar]

- LaBerge D. The spatial extent of attention to letters and words. Journal of Experimental Psychology: Human Perception and Performance. 1983;9:371–379. doi: 10.1037//0096-1523.9.3.371. [DOI] [PubMed] [Google Scholar]

- LaBerge D. Attentional processing. Cambridge, MA: Harvard University Press; 1995. [Google Scholar]

- LaBerge D, Carlson RL, Williams JK, Bunney BG. Shifting attention in visual space: Tests of moving-spotlight models versus an activity-distribution model. Journal of Experimental Psychology: Human Perception and Performance. 1997;23:1380–1392. doi: 10.1037//0096-1523.23.5.1380. [DOI] [PubMed] [Google Scholar]

- Lakatos S, Shepard RN. Time-distance relations in shifting attention between locations on one's body. Perception & Psychophysics. 1997;59:557–566. doi: 10.3758/bf03211864. [DOI] [PubMed] [Google Scholar]

- Lavie N. Perceptual load as a necessary condition for selective attention. Journal of Experimental Psychology: Human Perception & Performance. 1995;21:451–468. doi: 10.1037//0096-1523.21.3.451. [DOI] [PubMed] [Google Scholar]

- Lavie N, Driver J. On the spatial extent of attention in object-based visual selection. Perception & Psychophysics. 1996;58:1238–1251. doi: 10.3758/bf03207556. [DOI] [PubMed] [Google Scholar]

- Lavie N, Tsal Y. Perceptual load as a major determinant of the locus of selection in visual attention. Perception & Psychophysics. 1994;56:183–197. doi: 10.3758/bf03213897. [DOI] [PubMed] [Google Scholar]

- Leek MR, Brown ME, Dorman MF. Information masking and auditory attention. Perception & Psychophysics. 1991;50:205–214. doi: 10.3758/bf03206743. [DOI] [PubMed] [Google Scholar]

- Lidestam B, Holgersson J, Moradi S. Comparison of informational vs. energetic masking effects on speechreading performance. Frontiers in Psychology. 2014;5:639. doi: 10.3389/fpsyg.2014.00639. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lloyd DM, Merat N, McGlone F, Spence C. Crossmodal links between audition and touch in covert endogenous spatial attention. Perception & Psychophysics. 2003;65:901–924. doi: 10.3758/bf03194823. [DOI] [PubMed] [Google Scholar]

- Marini F, Romano D, Maravita A. The contribution of response conflict, multisensory integration, and body-mediated attention to the crossmodal congruency effect. Experimental Brain Research. 2017;235:873–887. doi: 10.1007/s00221-016-4849-4. [DOI] [PubMed] [Google Scholar]

- McLeod P, Driver J, Crisp J. Visual search for a conjunction of movement and form is parallel. Nature. 1988;332:154–155. doi: 10.1038/332154a0. [DOI] [PubMed] [Google Scholar]

- McMains SA, Somers DC. Multiple spotlights of attentional selection in human visual cortex. Neuron. 2004;42:677–686. doi: 10.1016/s0896-6273(04)00263-6. [DOI] [PubMed] [Google Scholar]

- Merat N, Spence C, Lloyd DM, Withington DJ, McGlone F. Audiotactile links in focused and divided spatial attention. Society for Neuroscience Abstracts. 1999;25:1417. [Google Scholar]

- Miles C, Jones DM. The fallacy of the cross-modal Stroop effect: A rejoinder to Cowan (1989) Perception & Psychophysics. 1989;45:85–86. doi: 10.3758/bf03208036. [DOI] [PubMed] [Google Scholar]

- Miller J. Response-compatibility effects in focused-attention tasks: A same-hand advantage in response activation. Perception & Psychophysics. 1988;43:83–89. doi: 10.3758/bf03208977. [DOI] [PubMed] [Google Scholar]

- Miller J. The flanker compatibility effect as a function of visual angle, attentional focus, visual transients, and perceptual load: A search for boundary conditions. Perception & Psychophysics. 1991;49:270–288. doi: 10.3758/bf03214311. [DOI] [PubMed] [Google Scholar]

- Moray N. Attention in dichotic listening: Affective cues and the influence of instructions. Quarterly Journal of Experimental Psychology. 1959;11:56–60. [Google Scholar]

- Moray N. Attention: Selective processes in vision and hearing. London, UK: Hutchinson Educational; 1969. [Google Scholar]

- Moray N. Listening and attention. Middlesex, UK: Penguin Books; 1969. [Google Scholar]

- Müller MM, Hübner R. Can the spotlight of attention be shaped like a doughnut? Evidence from steady-state visual evoked potentials. Psychological Science. 2002;13:119–124. doi: 10.1111/1467-9280.00422. [DOI] [PubMed] [Google Scholar]

- Müller MM, Malinowski P, Gruber T, Hillyard SA. Sustained division of the attentional spotlight. Nature. 2003;424:309–312. doi: 10.1038/nature01812. [DOI] [PubMed] [Google Scholar]

- Murphy TD, Eriksen CW. Temporal changes in the distribution of attention in the visual field in response to precues. Perception & Psychophysics. 1987;42:576–586. doi: 10.3758/bf03207989. [DOI] [PubMed] [Google Scholar]

- Neumann O, Van der Heijden AHC, Allport DA. Visual selective attention: Introductory remarks. Psychological Research. 1986;48:185–188. doi: 10.1007/BF00309082. [DOI] [PubMed] [Google Scholar]

- O’Callaghan C. Not all perceptual experience is modality specific. In: Stokes D, Biggs S, Matthen M, editors. Perception and its modalities. Oxford, UK: Oxford University Press; 2014. pp. 73–103. [Google Scholar]

- Occelli V, Spence C, Zampini M. Compatibility effects between sound frequencies and tactile elevation. Neuroreport. 2009;20:793–797. doi: 10.1097/WNR.0b013e32832b8069. [DOI] [PubMed] [Google Scholar]

- Pallasmaa J. The eyes of the skin: Architecture and the senses (Polemics) London, UK: Academy Editions; 1996. [Google Scholar]

- Pavani F, Spence C, Driver J. Visual capture of touch: Out-of-the-body experiences with rubber gloves. Psychological Science. 2000;11:353–359. doi: 10.1111/1467-9280.00270. [DOI] [PubMed] [Google Scholar]

- Posner MI. Chronometric explorations of mind. Hillsdale, NJ: Erlbaum; 1978. [Google Scholar]

- Posner MI. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32:3–25. doi: 10.1080/00335558008248231. [DOI] [PubMed] [Google Scholar]

- Posner MI. Hierarchical distributed networks in the neuropsychology of selective attention. In: Caramazza A, editor. Cognitive neuropsychology and neurolinguistics: Advances in models of cognitive function and impairment. Hillsdale, NJ: Erlbaum; 1990. pp. 187–210. [Google Scholar]

- Posner MI, Snyder CR, Davidson BJ. Attention and the detection of signals. Journal of Experimental Psychology. 1980;2:160–174. [PubMed] [Google Scholar]

- Reisberg D. Looking where you listen: Visual cues and auditory attention. Acta Psychologica. 1978;42:331–341. doi: 10.1016/0001-6918(78)90007-0. [DOI] [PubMed] [Google Scholar]

- Reisberg D, Scheiber R, Potemken L. Eye position and the control of auditory attention. Journal of Experimental Psychology: Human Perception and Performance. 1981;7:318–323. doi: 10.1037//0096-1523.7.2.318. [DOI] [PubMed] [Google Scholar]

- Remington R, Pierce L. Moving attention: Evidence for time-invariant shifts of visual selective attention. Perception & Psychophysics. 1984;35:393–399. doi: 10.3758/bf03206344. [DOI] [PubMed] [Google Scholar]

- Remington RW. Attention and saccadic eye movements. Journal of Experimental Psychology: Human Perception and Performance. 1980;6:726–744. doi: 10.1037//0096-1523.6.4.726. [DOI] [PubMed] [Google Scholar]

- Rhodes G. Auditory attention and the representation of spatial information. Perception & Psychophysics. 1987;42:1–14. doi: 10.3758/bf03211508. [DOI] [PubMed] [Google Scholar]

- Rosenbaum DA, Hindorff V, Barnes HJ. Paper presented at the 27th Annual Meeting of the Psychonomic Society. New Orleans: November; 1986. Internal representation of the body surface. [Google Scholar]

- Rosli, R. M., Jones, C. M., Tan, H. Z., Proctor, R. W., & Gray, R. (2009). The haptic cuing of visual spatial attention: Evidence of a spotlight effect. In Proceedings of SPIE - The International Society for Optical Engineering. San Jose, CA, USA, January 19–22, 2009. 10.1117/12.817168.

- Sagi D, Julesz B. Fast inertial shifts of attention. Spatial Vision. 1985;1:141–149. doi: 10.1163/156856885x00152. [DOI] [PubMed] [Google Scholar]

- Shepherd M, Müller HJ. Movement versus focusing of visual attention. Perception & Psychophysics. 1989;46:146–154. doi: 10.3758/bf03204974. [DOI] [PubMed] [Google Scholar]

- Shepherd M, Findlay JM, Hockey RJ. The relationship between eye movements and spatial attention. Quarterly Journal of Experimental Psychology. 1986;38A:475–491. doi: 10.1080/14640748608401609. [DOI] [PubMed] [Google Scholar]

- Shinn-Cunningham BG. Object-based auditory and visual attention. Trends in Cognitive Sciences. 2008;12:182–186. doi: 10.1016/j.tics.2008.02.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shore DI, Barnes ME, Spence C. The temporal evolution of the crossmodal congruency effect. Neuroscience Letters. 2006;392:96–100. doi: 10.1016/j.neulet.2005.09.001. [DOI] [PubMed] [Google Scholar]

- Shulman GL. Relating attention to visual mechanisms. Perception & Psychophysics. 1990;47:199–203. doi: 10.3758/bf03205984. [DOI] [PubMed] [Google Scholar]

- Shulman GL, Remington RW, McLean JP. Moving attention through visual space. Journal of Experimental Psychology: Human Perception and Performance. 1979;5:522–526. doi: 10.1037//0096-1523.5.3.522. [DOI] [PubMed] [Google Scholar]

- Shulman GL, Sheehy JB, Wilson J. Gradients of spatial attention. Acta Psychologica. 1986;61:167–181. doi: 10.1016/0001-6918(86)90029-6. [DOI] [PubMed] [Google Scholar]

- Soto-Faraco S, Ronald A, Spence C. Tactile selective attention and body posture: Assessing the contribution of vision and proprioception. Perception & Psychophysics. 2004;66:1077–1094. doi: 10.3758/bf03196837. [DOI] [PubMed] [Google Scholar]

- Soto-Faraco S, Kvasova D, Biau E, Ikumi N, Ruzzoli M, Morís-Fernández L, Torralba M. Multisensory interactions in the real world. Cambridge Elements: Perception. Cambridge, UK: Cambridge University Press; 2019. [Google Scholar]

- Spence C. Crossmodal spatial attention. Annals of the New York Academy of Sciences (The Year in Cognitive Neuroscience) 2010;1191:182–200. doi: 10.1111/j.1749-6632.2010.05440.x. [DOI] [PubMed] [Google Scholar]

- Spence C. Multisensory integration: Solving the crossmodal binding problem. Comment on “Crossmodal influences on visual perception” by Shams & Kim. Physics of Life Reviews. 2010;7:285–286. doi: 10.1016/j.plrev.2010.06.004. [DOI] [PubMed] [Google Scholar]

- Spence C. Just how important is spatial coincidence to multisensory integration? Evaluating the spatial rule. Annals of the New York Academy of Sciences. 2013;1296:31–49. doi: 10.1111/nyas.12121. [DOI] [PubMed] [Google Scholar]

- Spence C. Orienting attention: A crossmodal perspective. In: Nobre AC, Kastner S, editors. The Oxford handbook of attention. Oxford, UK: Oxford University Press; 2014. pp. 446–471. [Google Scholar]

- Spence C. Q & A: Charles Spence. Current Biology. 2014;24:R506–R508. doi: 10.1016/j.cub.2014.03.065. [DOI] [PubMed] [Google Scholar]

- Spence C. Cross-modal perceptual organization. In: Wagemans J, editor. The Oxford handbook of perceptual organization. Oxford, UK: Oxford University Press; 2015. pp. 649–664. [Google Scholar]

- Spence C, Driver J. Covert spatial orienting in audition: Exogenous and endogenous mechanisms. Journal of Experimental Psychology: Human Perception and Performance. 1994;20:555–574. [Google Scholar]

- Spence C, Bayne T. Is consciousness multisensory? In: Stokes D, Matthen M, Biggs S, editors. Perception and its modalities. Oxford, UK: Oxford University Press; 2015. pp. 95–132. [Google Scholar]

- Spence C, Driver J. Audiovisual links in endogenous covert spatial attention. Journal of Experimental Psychology: Human Perception and Performance. 1996;22:1005–1030. doi: 10.1037//0096-1523.22.4.1005. [DOI] [PubMed] [Google Scholar]

- Spence C, Driver J, editors. Crossmodal space and crossmodal attention. Oxford, UK: Oxford University Press; 2004. [Google Scholar]

- Spence C, Frings C. Multisensory feature integration in (and out) of the focus of spatial attention. Attention, Perception, & Psychophysics. 2020;82:363–376. doi: 10.3758/s13414-019-01813-5. [DOI] [PubMed] [Google Scholar]

- Spence C, Ho C. Crossmodal attention: From the laboratory to the real world (and back again) In: Fawcett JM, Risko EF, Kingstone A, editors. The handbook of attention. Cambridge, MA: MIT Press; 2015. pp. 119–138. [Google Scholar]

- Spence C, Ho C. Multisensory perception. In: Boehm-Davis DA, Durso FT, Lee JD, editors. Handbook of human systems integration. Washington, DC: American Psychological Association; 2015. pp. 435–448. [Google Scholar]

- Spence C, Walton M. On the inability to ignore touch when responding to vision in the crossmodal congruency task. Acta Psychologica. 2005;118:47–70. doi: 10.1016/j.actpsy.2004.10.003. [DOI] [PubMed] [Google Scholar]

- Spence C, Pavani F, Driver J. What crossing the hands can reveal about crossmodal links in spatial attention. Abstracts of the Psychonomic Society. 1998;3:13. [Google Scholar]

- Spence C, Lloyd D, McGlone F, Nicholls MER, Driver J. Inhibition of return is supramodal: A demonstration between all possible pairings of vision, touch and audition. Experimental Brain Research. 2000;134:42–48. doi: 10.1007/s002210000442. [DOI] [PubMed] [Google Scholar]

- Spence C, Pavani F, Driver J. Crossmodal links between vision and touch in covert endogenous spatial attention. Journal of Experimental Psychology: Human Perception & Performance. 2000;26:1298–1319. doi: 10.1037//0096-1523.26.4.1298. [DOI] [PubMed] [Google Scholar]

- Spence C, Ranson J, Driver J. Crossmodal selective attention: Ignoring auditory stimuli presented at the focus of visual attention. Perception & Psychophysics. 2000;62:410–424. doi: 10.3758/bf03205560. [DOI] [PubMed] [Google Scholar]

- Spence C, McDonald J, Driver J. Exogenous spatial cuing studies of human crossmodal attention and multisensory integration. In: Spence C, Driver J, editors. Crossmodal space and crossmodal attention. Oxford, UK: Oxford University Press; 2004. pp. 277–320. [Google Scholar]

- Spence C, Pavani F, Driver J. Spatial constraints on visual-tactile crossmodal distractor congruency effects. Cognitive, Affective, & Behavioral Neuroscience. 2004;4:148–169. doi: 10.3758/cabn.4.2.148. [DOI] [PubMed] [Google Scholar]

- Spence C, Pavani F, Maravita A, Holmes NP. Multi-sensory interactions. In: Lin MC, Otaduy MA, editors. Haptic rendering: Foundations, algorithms, and applications. Wellesley, MA: AK Peters; 2008. pp. 21–52. [Google Scholar]

- Stevens JC, Choo KK. Spatial acuity of the body surface over the life span. Somatosensory and Motor Research. 1996;13:153–166. doi: 10.3109/08990229609051403. [DOI] [PubMed] [Google Scholar]

- Stewart HJ, Amitay S. Modality-specificity of selective attention networks. Frontiers in Psychology. 2015;6:1826. doi: 10.3389/fpsyg.2015.01826. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Styles EA. The psychology of attention (2nd) Hove, UK: Psychology Press; 2006. [Google Scholar]

- Theeuwes J, van der Burg E, Olivers CNL, Bronkhorst A. Cross-modal interactions between sensory modalities: Implications for the design of multisensory displays. In: Kramer AF, Wiegmann DA, Kirlik A, editors. Attention: From theory to practice. Oxford, UK: Oxford University Press; 2007. pp. 196–205. [Google Scholar]

- Tipper SP. The negative priming effect: Inhibitory priming by ignored objects. Quarterly Journal of Experimental Psychology. 1985;37A:571–590. doi: 10.1080/14640748508400920. [DOI] [PubMed] [Google Scholar]

- Tipper SP, Driver J, Weaver B. Object-centred inhibition of return of visual attention. Quarterly Journal of Experimental Psychology. 1991;43A:289–298. doi: 10.1080/14640749108400971. [DOI] [PubMed] [Google Scholar]

- Tipper SP, Weaver B, Jerreat LM, Burak AL. Object-based and environment-based inhibition of return of visual attention. Journal of Experimental Psychology: Human Perception & Performance. 1994;20:478–499. [PubMed] [Google Scholar]

- Tong F. Splitting the spotlight of visual attention. Neuron. 2004;42:524–526. doi: 10.1016/j.neuron.2004.05.005. [DOI] [PubMed] [Google Scholar]

- Townsend JT, Ashby FG. Methods of modeling capacity in simple processing systems. In: Castellan NJ, Restle F, editors. Cognitive theory. Hillsdale, NJ: Erlbaum; 1978. pp. 199–239. [Google Scholar]

- Townsend JT, Ashby FG. Stochastic modelling of elementary psychological processes. New York, NY: Cambridge University Press; 1983. [Google Scholar]

- Treisman A. Features and objects in visual processing. Scientific American. 1986;255:106–111. [Google Scholar]

- Treisman A, Souther J. Search asymmetry: A diagnostic for preattentive processing of separable features. Journal of Experimental Psychology: General. 1985;114:285–310. doi: 10.1037//0096-3445.114.3.285. [DOI] [PubMed] [Google Scholar]

- Treisman A, Kahneman D, Burkell J. Perceptual objects and the cost of filtering. Perception & Psychophysics. 1983;33:527–532. doi: 10.3758/bf03202934. [DOI] [PubMed] [Google Scholar]

- Treisman AM, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5. [DOI] [PubMed] [Google Scholar]

- Tsal Y. Movements of attention across the visual field. Journal of Experimental Psychology: Human Perception and Performance. 1983;9:523–530. doi: 10.1037//0096-1523.9.4.523. [DOI] [PubMed] [Google Scholar]

- Tyler, C. W., & Likova, L. T. (2007). Crowding: A neuro-analytic approach. Journal of Vision, 7(2): 16, 1–9. [DOI] [PubMed]

- Van Steenbergen H, Band GPH, Hommel B. Threat but not arousal narrows attention: Evidence from pupil dilation and saccade control. Frontiers in Psychology. 2011;2:281. doi: 10.3389/fpsyg.2011.00281. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vatakis A, Spence C. Temporal order judgments for audiovisual targets embedded in unimodal and bimodal distractor streams. Neuroscience Letters. 2006;408:5–9. doi: 10.1016/j.neulet.2006.06.017. [DOI] [PubMed] [Google Scholar]

- Wagemans J, editor. The Oxford handbook of perceptual organization. Oxford, UK: Oxford University Press; 2015. [Google Scholar]

- Walton M, Spence C. Cross-modal congruency and visual capture in a visual elevation discrimination task. Experimental Brain Research. 2004;154:113–120. doi: 10.1007/s00221-003-1706-z. [DOI] [PubMed] [Google Scholar]

- Ward LM. Supramodal and modality-specific mechanisms for stimulus-driven shifts of auditory and visual attention. Canadian Journal of Experimental Psychology. 1994;48:242–259. doi: 10.1037/1196-1961.48.2.242. [DOI] [PubMed] [Google Scholar]

- Watt RJ. Visual processing: Computational, psychophysical, and cognitive research. Hillsdale, NJ: Erlbaum; 1988. [Google Scholar]

- Weinstein, S. (1968). Intensive and extensive aspects of tactile sensitivity as a function of body part, sex, and laterality. In D. R. Kenshalo (Ed.), The skin senses (pp. 195–222). Springfield, Ill.: Thomas.

- Welch RB, DuttonHurt LD, Warren DH. Contributions of audition and vision to temporal rate perception. Perception & Psychophysics. 1986;39:294–300. doi: 10.3758/bf03204939. [DOI] [PubMed] [Google Scholar]

- Yantis S, Johnston JC. On the locus of visual selection: Evidence from focused attention tasks. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:121–134. doi: 10.1037//0096-1523.16.1.135. [DOI] [PubMed] [Google Scholar]

- Zimmerman M. The nervous system in the context of information theory. In: Schmidt RF, Thews G, editors. Human Physiology (2nd. Complete Ed.) Berlin, Germany: Springer-Verlag; 1989. pp. 166–173. [Google Scholar]