Abstract

The diversity, variability, and complexity of cell types in the vertebrate brain far exceed those of any other organ. This complexity results from the multiple cell divisions, intricate gene regulation, and cell movements that take place during embryonic development. Understanding the cellular and molecular mechanisms underlying these developmental processes requires a complete record of interconnected events that often take place far apart from each other. To assist with this task, developmental neuroscientists draw on a broad set of methods and technologies, often adopted from other fields of research. Here, we review some of the methods developed in recent years whose use has spread rapidly in developmental neuroscience. We also consider the promise that these techniques hold for the near future and share some ideas on how existing methods from other research fields could help to analyse how neural circuits emerge.

Keywords: Neural development tools, Light sheet microscopy, Clearing, scRNAseq, Machine learning

Moving forward

Long gone are the times when budding neuroscientists would picture themselves working like Don Santiago Ramón y Cajal, using only a simple microscope and a shelf full of chemical reagents [1]. Today, neuroscientists of all stripes, including those working on the development of the nervous system, take advantage of a breadth of new methods and technologies that Don Santiago could only have dreamt about. These methods accelerate our capacity to collect and analyse biological information in large and complex specimens. For instance, we can now reconstruct in three dimensions (3D) the complete peripheral nervous system of a cleared lizard embryo [2] or obtain a transcriptomic map of gene expression at single cell (or nucleus) resolution from almost any tissue and species, including humans. Science and technology have always been interconnected, and advances in one have historically translated into important progress in the other. Today, the number of available advanced techniques can be overwhelming. In this review, we discuss some recently developed techniques that are becoming common in laboratories studying neural development.

Shining light through the 3D embryonic nervous system

Until very recently, our capacity to document the 3D organisation of the embryonic brain, and thereby to understand the basic mechanisms underlying circuit formation, was limited. Studies on the development of the nervous system of most vertebrates have traditionally relied on histological sectioning or open book preparations, which enable visualisation of the two-dimensional organisation of axon tracts under epifluorescence or confocal microscopes. These approaches are based on the observation of selected slices or planes (a selection that can itself introduce bias) and provide only partial information about the sample. Although 3D imaging of small embryos has been performed for many years using wide-field and confocal microscopy [3], these techniques are very slow and do not scale well to larger embryos or postnatal tissues.

This situation began to change with the appearance of light sheet fluorescence microscopy (LSFM). The main advantages of LSFM are its high acquisition speed and its ability to image large samples that were impractical to image with conventional microscopes. LSFM was initially used in the field of colloidal chemistry [4], and about 30 years ago it was adapted to biology to visualise guinea-pig cochleas in 3D [5]. LSFM combines the speed of wide-field imaging with optical sectioning and low photobleaching. In conventional fluorescence microscopy, the entire thickness of the sample is illuminated along the same axis as the detection optics; as a result, regions outside the focal plane of the objective are needlessly exposed to light, which increases photobleaching and can damage the sample. In contrast, in LSFM the sample is illuminated from the side, perpendicular to the direction of observation, which places the excitation light only where it is required. The technique therefore visualises tissue samples by shining a sheet of light through the specimen and generating a series of images that can then be digitally reconstructed, thanks to the development of sophisticated algorithms and huge improvements in the capacity of computers to store and analyse data [6].

In developmental biology, LSFM was first used to visualise the transparent tissues of zebrafish and Drosophila embryos in 3D in vivo. About 8 years ago, Tomer and colleagues visualised the development of the Drosophila ventral nerve cord for the first time [7], and Ahrens and co-authors measured the activity of single neurons in the brain of larval zebrafish [8] using in vivo light sheet microscopy.

However, what eventually enabled LSFM to be used for the analysis of the nervous system was the remarkable improvement in brain clearing techniques. The rapid optimisation of clearing protocols has expanded the application of LSFM in developmental neuroscience over the last 4 to 5 years. A myriad of tissue clearing approaches have been developed in that time; they differ in the chemical reagents used and in the sample sizes for which they are suited. Exhaustive reviews of the diversity of clearing protocols have been published [9–11], but the variants of the CUBIC and DISCO series are worth mentioning here because their excellent results and ease of use have made them the most widespread methods for brain clearing (see https://idisco.info and http://cubic.riken.jp).

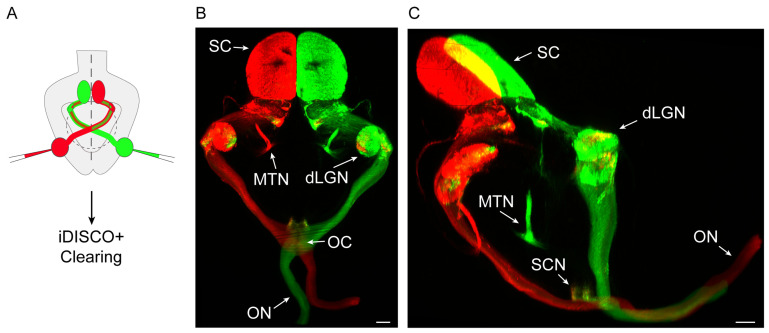

Now that we have methods to make the mammalian brain transparent and visualise it in 3D, a new world has opened up. The combination of tissue clearing and LSFM in neurodevelopmental research is rapidly contributing to important findings in the areas of cell migration and axon pathfinding. One representative example is the discovery of a small population of neurons in human embryos that secrete gonadotropin-releasing hormone and follow two different migration pathways beyond the hypothalamus [12]. In axon guidance studies, this combined approach is proving extremely useful for visualising axons growing across the whole embryo and for detecting pathfinding defects in mutants for members of the main families of axon guidance molecules [13]. It has also made it possible to visualise, for the first time, the development of the peripheral nervous system and the innervation patterns of human embryos [14]. The power of combining these approaches with axonal tracing [15] or with antibody staining after functional manipulations (transgenesis [16], in utero electroporation, or viral transduction [17]) holds the promise of interesting times ahead (Figure 1). The possibility of applying these techniques to large samples is attractive, and many labs worldwide use them in many different species [18–23]. Understanding how developmental processes take place in 3D will certainly extend our comprehension of how the brain develops in both healthy and diseased states.

Figure 1. Three-dimensional (3D) view of retinal axons projecting to the visual nuclei within the mouse brain.

A. Scheme of the experimental approach. A postnatal mouse is injected with fluorescent tracers of different colours in each eye and then processed through the iDISCO+ clearing protocol [24]. B. Dorsal view of a light sheet fluorescence microscope (LSFM)-acquired 3D image stack from the whole brain of a mouse injected with different colour tracers into each eye. dLGN, dorsal lateral geniculate nucleus; OC, optic chiasm; ON, optic nerve; MTN, medial terminal nucleus; SC, superior colliculus. Scale bar: 300 µm. C. Mediolateral view of an LSFM-acquired 3D image stack from the whole brain of a mouse injected with different colour tracers into each eye. dLGN, dorsal lateral geniculate nucleus; MTN, medial terminal nucleus; ON, optic nerve; SC, superior colliculus; SCN, suprachiasmatic nucleus. Scale bar: 300 µm.

Deconstructing development one cell at a time

The complexity and diversity of cell types is one of the most remarkable characteristics of the mature nervous system. Corticospinal neurons that connect the brain with the spinal cord, sensory neurons that detect touch and relay it from the skin to the central nervous system, and glial cells that modulate neural activity are examples of the richness and variability of the cell types that make up the nervous system. Cell specification occurs during development and, until recently, researchers had very limited ways to quantify cell diversity. For many years, quantification was constrained by pooling approaches, which require either harvesting many cells from the same tissue or combining cells from different individuals to obtain enough material for downstream analysis. For example, in bulk RNA sequencing (RNAseq), the expression level of a particular gene is not measured in an individual cell but as the average level of expression of that gene over the many cells present in the sample. The revolution in the molecular analysis of individual neural progenitors started when it became possible to sequence DNA or RNA at the single cell level. In 2013, the journal Nature Methods highlighted the ability to sequence DNA and RNA in single cells as the “Method of the Year” [25], and since then single cell approaches have been continuously developed to measure and characterise different aspects of cell identity (chromatin accessibility, the genome, the transcriptome, and the proteome). In fact, single cell analyses that combine transcriptomics with epigenomics or with proteomics are rapidly emerging [26,27]. Here, we focus on one of the most frequently used modalities in neural development: the transcriptomic characterisation of single cells.
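The difference between a pooled measurement and a per-cell measurement can be made concrete with a toy calculation. The sketch below uses invented counts for a single gene in six hypothetical cells (the cell names, counts, and detection threshold are all assumptions chosen for illustration, not data from any study) and contrasts the single averaged value that a bulk experiment would report with the per-cell view.

```python
from statistics import mean

# Hypothetical counts of one gene in six cells from the same sample:
# three cells that do not express the gene and three that do.
single_cell_counts = {
    "cell_1": 0, "cell_2": 0, "cell_3": 0,
    "cell_4": 20, "cell_5": 22, "cell_6": 18,
}


def bulk_measurement(counts):
    """Bulk view: a single averaged value for the whole sample."""
    return mean(counts.values())


def expressing_fraction(counts, threshold=1):
    """Single cell view: fraction of cells at or above an assumed threshold."""
    expressing = [c for c in counts.values() if c >= threshold]
    return len(expressing) / len(counts)


bulk_value = bulk_measurement(single_cell_counts)
fraction = expressing_fraction(single_cell_counts)
print(f"bulk average: {bulk_value}")
print(f"fraction of expressing cells: {fraction}")
```

The averaged value is compatible with every cell expressing the gene at a moderate level, whereas the per-cell counts show two distinct populations; this is the information that pooling occludes.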

The first protocol for single cell RNAseq (scRNAseq) was published in 2009 [28], and the myriad of protocols developed since then have quickly transformed several research fields and the way developmental studies are performed. The key step in scRNAseq protocols is to tag all transcripts inside each cell in such a way that RNA molecules coming from the same cell are easily identifiable and quantifiable [29]. scRNAseq enables the transcriptomic cell types in the sampled tissue to be defined through the analysis of genes that are differentially expressed among cells.
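The tagging step can be illustrated with a minimal sketch of how tagged reads might be turned into a cell-by-gene count table. Everything here is an assumption made for illustration: the read tuples, the barcode and tag strings, the gene names, and the `count_matrix` helper are hypothetical, and the per-molecule tag used to collapse amplification duplicates is one common strategy rather than a feature of every protocol.

```python
from collections import defaultdict

# Hypothetical sequenced reads: (cell barcode, molecule tag, gene).
reads = [
    ("AAAC", "u1", "Sox2"),
    ("AAAC", "u1", "Sox2"),   # same molecule read twice (amplification duplicate)
    ("AAAC", "u2", "Sox2"),
    ("AAAC", "u3", "Nes"),
    ("GGTT", "u1", "Tubb3"),
    ("GGTT", "u2", "Tubb3"),
    ("GGTT", "u2", "Tubb3"),  # duplicate
]


def count_matrix(reads):
    """Group reads by cell barcode and count distinct molecules per gene."""
    molecules = defaultdict(set)
    for barcode, tag, gene in reads:
        # A set keeps each molecule tag once, so duplicates are collapsed.
        molecules[(barcode, gene)].add(tag)

    counts = defaultdict(dict)
    for (barcode, gene), tags in molecules.items():
        counts[barcode][gene] = len(tags)
    return dict(counts)


matrix = count_matrix(reads)
for barcode, genes in matrix.items():
    print(barcode, genes)
```

Because every read carries the barcode of its cell of origin, all cells can be sequenced together and separated again computationally.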

Nowadays, the commercialisation of droplet-based sequencing, for example the 10x Genomics Chromium platform, has enabled the widespread use of scRNAseq. In developmental neuroscience, scRNAseq has been used to profile the entire developing mouse brain and spinal cord [30], the prefrontal cortex of human embryos [31], and the temporal changes in the transcriptional landscape of apical progenitors and their successive cohorts of daughter neurons in the cortex [32]. In general, developing tissues contain a mix of cells at different stages of differentiation (progenitors, neuroblasts, early postmitotic neurons, and mature neurons). These stages are all captured at the time of scRNAseq processing, which results in a continuous representation of cellular states transitioning from one to another. The transitions can be modelled computationally by reconstructing the probable trajectory of the cells in a representation called pseudotime [33], which orders the cells along the course of development. This representation in turn enables particular cell types to be mapped to different states of the developmental trajectory [34].
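The idea of ordering cells captured at a single time point along a developmental axis can be conveyed with a deliberately crude sketch. It is not the algorithm used by published pseudotime tools, which infer trajectories from the full expression space; here each hypothetical cell is simply scored by the balance between one assumed progenitor marker and one assumed neuronal marker, and the scores are rescaled to the range 0 to 1. All names and values are invented for illustration.

```python
# Hypothetical expression of two marker genes in four cells.
cells = {
    "cell_a": {"progenitor_marker": 9.0, "neuron_marker": 1.0},
    "cell_b": {"progenitor_marker": 1.0, "neuron_marker": 9.0},
    "cell_c": {"progenitor_marker": 5.0, "neuron_marker": 5.0},
    "cell_d": {"progenitor_marker": 7.0, "neuron_marker": 3.0},
}


def differentiation_score(expr):
    """Share of the two-marker signal contributed by the neuronal marker."""
    total = expr["progenitor_marker"] + expr["neuron_marker"]
    return expr["neuron_marker"] / total if total else 0.0


def pseudotime_order(cells):
    """Return (cell, pseudotime) pairs sorted from least to most differentiated."""
    scores = {name: differentiation_score(e) for name, e in cells.items()}
    lowest, highest = min(scores.values()), max(scores.values())
    span = (highest - lowest) or 1.0  # avoid division by zero if all scores match
    pseudotime = {n: (s - lowest) / span for n, s in scores.items()}
    return sorted(pseudotime.items(), key=lambda item: item[1])


ordered = pseudotime_order(cells)
for name, t in ordered:
    print(f"{name}: pseudotime {t:.2f}")
```

The point of the sketch is only that a snapshot containing cells at mixed stages can be rearranged into a sequence; real methods replace the two-marker score with a trajectory learned from thousands of genes.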

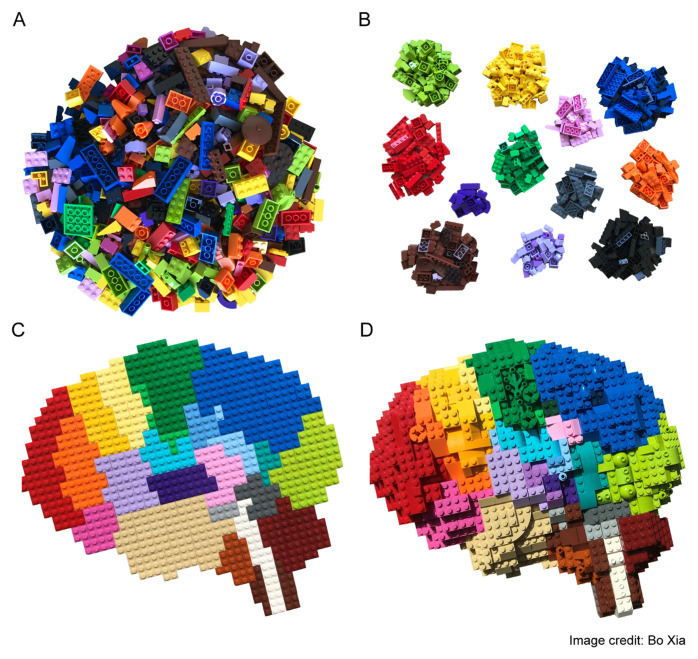

Unfortunately, this now-widespread technique has an important weakness: the loss of spatial information. Tissues, especially during development, are highly structured and dynamic, which is why spatial information is biologically relevant. Preparing the single cell suspensions needed for scRNAseq requires dissociating the tissue, and the spatial information is lost in the process. To overcome this limitation, several labs have developed a series of methods commonly referred to as spatial transcriptomics. These methods vary in how they preserve spatial information in the tissue sample as well as in their sensitivity, the number of transcripts that can be probed, and the spatial resolution attainable [35–39], with the latest iteration, the high-definition spatial transcriptomics (HDST) method [40], reaching a spatial resolution of 2 µm (Figure 2).

Figure 2. Building-block representation of single cell transcriptome modalities.

A. Bulk RNA sequencing (RNAseq) experiments use a large number of cells as starting material, which results in a high depth and resolution at the transcriptomic level. However, because the measurements obtained represent an average of gene expression across all of the cells present in the sample, any differences between cells become occluded. B. Single cell RNAseq (scRNAseq) methods are capable of maintaining cell individuality during isolation of mRNA molecules. mRNAs are tagged and reconstructed informatically so that they can be assigned to a particular cell. This enables the identification of cell clusters according to their transcriptomic signatures, but spatial information is still lost. C. The spatial location of each cell is maintained in spatial transcriptomics approaches. By fluorescently tagging each mRNA species or recording the position in situ with barcodes, spatial information may be assigned to each cell together with its transcriptomic profile. Currently, the best attainable resolution is within the tens of microns range, which is still far from ideal, and sensitivity remains low. Because these methods are new, protocols are scarce and adoption outside the originating labs is therefore difficult. D. Researchers are now advancing towards an integrated (genetic, transcriptomic, and proteomic) representation of the brain in time and space. This figure has been reused with permission from the creator Bo Xia.

It is also worth mentioning that scRNAseq approaches can similarly be applied to single nuclei. The advantages become obvious when we consider that nuclei extraction is a routine method in molecular biology labs, that nucleic acids are stable in fixed and frozen samples, and that clinical human tissue banks from which nuclei can easily be obtained are abundant. Moreover, single nuclei RNAseq (snRNAseq) does not require enzymatic dissociation during sample preparation, so the recovered cell types are more representative of the original tissue and less affected by transcriptional artefacts. A potential disadvantage is that nuclei yield less messenger RNA than whole cells, and consequently fewer genes are typically detected. Nonetheless, recent studies have shown that single nuclei and single cell approaches identify similar cell types [41,42].

Exploiting artificial intelligence to understand neural development

The heading of this section might sound as if it were taken from a sci-fi movie. However, combining the computational power of modern processors and graphics processing units (GPUs) is exactly what high-throughput methodologies such as those described above require. These techniques generate huge quantities of data that need to be analysed. The simple generation of sequencing results or imaging data by itself does not provide new insights that can advance our understanding of how the nervous system develops. Intense developments in the field of machine learning have generated algorithms that may now be used to deconstruct the complexity of such data.

Imaging a mouse brain by high-resolution LSFM generates from 20 gigabytes up to several terabytes of data, depending on the resolution [9]. Navigating such an enormous amount of information to draw conclusions quickly becomes a dead end, in both time and computing requirements: datasets of this size place a heavy processing burden on a lab’s computing capability and make analysis a time-consuming task. Software capable of handling huge quantities of data is therefore an urgent requirement, just as important as the hardware that generates the datasets.
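A back-of-the-envelope calculation shows why dataset size depends so steeply on resolution. The sketch below estimates the uncompressed size of an image stack from the imaged volume and the voxel size; the bounding box of 10 × 8 × 6 mm, the 16-bit (2-byte) voxels, and the two voxel sizes are assumptions chosen for illustration, not measurements from any particular microscope or sample.

```python
import math


def stack_size_bytes(extent_um, voxel_um, bytes_per_voxel=2, channels=1):
    """Uncompressed size of an image stack covering a box of the given extent.

    extent_um and voxel_um are (x, y, z) tuples in micrometres.
    """
    voxels = 1
    for extent, step in zip(extent_um, voxel_um):
        voxels *= math.ceil(extent / step)
    return voxels * bytes_per_voxel * channels


# Assumed bounding box around a sample, in micrometres (illustrative only).
sample_extent_um = (10_000, 8_000, 6_000)

coarse = stack_size_bytes(sample_extent_um, (5, 5, 5))
fine = stack_size_bytes(sample_extent_um, (1, 1, 1))
print(f"5 um voxels: {coarse / 1e9:.2f} GB")
print(f"1 um voxels: {fine / 1e9:.2f} GB")
print(f"ratio: {fine / coarse:.0f}x")
```

Under these assumptions, a five-fold finer voxel edge multiplies the voxel count, and hence the storage requirement, by 5³ = 125, and each additional fluorescence channel multiplies it again.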

Paradoxically, even though a researcher needs just a few minutes to image a whole mouse embryo in 3D using LSFM, quantifying such datasets often relies on tools that require manual and time-consuming annotation. Fortunately, in the last few years an unparalleled development of informatics tools has begun to help researchers analyse big datasets quickly and accurately. Examples include CellProfiler from the Broad Institute, which can easily segment nuclei in dense tissue images, and its more powerful sibling, CellProfiler Analyst, which uses machine learning algorithms to recognise defined cell types in large imaging datasets [43,44]. CellProfiler has been used, for example, to quantify the differences in neuronal numbers between the sulci and gyri of the cortex of Flrt3 mutant mouse embryos [45] and to help elucidate the role of PTPRD in neurogenesis [46]. More recently, the Pachitariu lab [47] released a complementary approach for cell segmentation called Cellpose. This generalist segmentation algorithm is based on a neural network trained on thousands of images from different microscopy modalities (fluorescence, bright field, etc.) combined with non-biological images of similar structure. The result is a platform capable of recognising cells in a wide array of image types. It also enables researchers to generate custom models by training the algorithm on specific types of images.

The use of machine learning, particularly neural networks trained to recognise structures of interest in images (nuclei, cells, blood vessels, noise, etc.), has exploded in the last few years and is quickly becoming the go-to solution for many biomedical research problems [48–57]. Beyond imaging tasks, machine learning approaches may be used in many other applications within the field of neurodevelopment. The data generated by sequencing experiments such as scRNAseq pose the same challenges as those derived from large imaging experiments. Newer and more refined technologies that yield an ever-increasing number of sequenced cells translate not only into larger datasets but also into a higher number of dimensions, which need to be reduced non-linearly to define particular cell types. Several packages have been released that process sequencing data, perform efficient dimensionality reduction, or help to identify cell types of interest within the datasets [58,59]. The processing of image and sequencing data are both examples from the blooming field of computational biology that are useful for studying neural development, and many more developments and applications are predicted to emerge [60].

What lies ahead?

Although transformational technological revolutions are constantly occurring in science, the advances made in the last few years have been spectacular. Here we have highlighted what, in our opinion, are highly relevant and novel approaches for investigating the developmental processes that control the formation of neural circuits. We believe that the changes of the coming years will dwarf what we know today. We envision that tissue clearing technologies will evolve into fully applicable methods that are no longer limited by antibody compatibility. The recent publication of CUBIC-HistoVision points in that direction, as it describes a systematic interrogation of the properties and conditions that preserve antigens and facilitate antibody penetration into fixed animal tissues [61]. Community sharing of resources such as those mentioned above, of antibody-related optimisations, and of tested protocol modifications and reagents will form the basis for advancing current and future protocols, likely to the point that many antibodies will work for 3D immunostaining. In parallel with advances in staining methods, the development of LSFM will likely improve the imaging resolution of transparent samples while also reducing acquisition time [62,63].

The most important missing piece for single cell approaches is high-throughput proteomics that can measure the protein content of each individual cell with enough depth to cover the whole proteome. Beyond a basic per-cell estimate, single cell DNA or RNA technologies cannot measure the abundance and activity of proteins, which are regulated by both post-translational modifications and degradation. Although single cell proteomic approaches are already available, most currently rely on antibodies to detect the proteins of interest, which imposes an important limit on throughput. Methods to quantify thousands of proteins in hundreds of cells using mass spectrometry (MS) are emerging, and improvements in MS are expected to increase the sensitivity of single cell proteomics [64]. Effective, high-throughput single cell proteomics will aid the quest to fully characterise cells, their functional and developmental states, and the mechanisms involved in transitioning from one state to another. Matched single cell genetic, transcriptomic, and proteomic data will help to elucidate the mechanisms behind the formation of a fully developed nervous system.

The application of machine learning in developmental neuroscience is still in its infancy but will likely explode in the near future. Examples from cancer research [65], such as neural networks trained to identify different types of tumours based on their location and composition in cleared whole mouse bodies [66], highlight the possibilities of harnessing current computing power for other fields such as neural development. Similar applications of machine learning algorithms could aid in recognising changing mRNA and protein expression patterns during brain development. Labour-intensive tasks commonly used to study the developing nervous system could also benefit greatly from tools developed in other areas of neuroscience. For example, the automated identification and tracking of migrating neurons could follow the lead of algorithms like DeepLabCut, which behavioural neuroscience labs use to track the position of different parts of the mouse body without markers [67]. ClearMap is another algorithm, which automatically maps cells in LSFM datasets of the mouse brain and could be applied to neonatal brains [24]. Another very promising avenue is TRAILMAP, an algorithm recently developed in the Luo lab to automatically identify and extract axonal projections in 3D image volumes [68], which may be readily implemented to improve quantification in axon guidance studies. Adopting such neural networks will probably require re-training and optimisation for the specific use case and dataset, which highlights the need for fast-training computational strategies to facilitate the broader use of these techniques.

Therefore, despite the impressive number of state-of-the-art technologies developed in the last few years, there is still room for improvement in some of the latest methods available to study the developing nervous system. We can envision a not-so-distant day when 3D embryonic brain imaging will be combined with single cell technologies to elucidate the chromatin, mRNA, and protein signatures of each cell in situ at the same time. Such datasets would contain information about what are considered the main determinants of cell identity while maintaining the intact structure, shape, and form of the tissue. This “fantasy technical improvement” could be taken one step further by bringing in the fourth dimension, time, and analysing datasets from embryos at different stages of development to provide the most detailed description of developmental progression to date. However, writing a “what will the future look like” piece is bound to fail. History has demonstrated that both the imagination and the driving force of scientists go far beyond what can be anticipated. As such, this review will likely become obsolete shortly, and that will be a good sign that developmental neuroscience maintains its exponential progress in advancing our understanding of the assembly of neural circuits.

The peer reviewers who approve this article are:

Nicolas Renier, Sorbonne Université, Paris Brain Institute, Inserm, CNRS, Paris, France

Carol Mason, Departments of Pathology and Cell Biology, Neuroscience, and Ophthalmology, Zuckerman Institute, Columbia University, College of Physicians and Surgeons, New York, USA

Funding Statement

The laboratory of EH is funded by the following grants: PID2019-110535GB-I00 from the National Grant Research Program, PROMETEO Program (2020/007) from Generalitat Valenciana, and RAF-20191956 from the Ramón Areces Foundation. AE is funded by LaCaixa Foundation through the Postdoctoral Junior Leader Fellowship Programme (LCF/BQ/PI18/11630005).

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. The Cajal Legacy: Consejo Superior de Investigaciones Científicas - CSIC - csic.es.

- 2. Alain Chédotal on Twitter: ‘It was a risky experiment but thanks to Ripley we did it: this is our contribution to #AlienDay enjoy https://t.co/QyZHFtfkYy’. Twitter. https://twitter.com/alainchedotal/status/1254456825514254337

- 3. Dent JA, Polson AG, Klymkowsky MW: A whole-mount immunocytochemical analysis of the expression of the intermediate filament protein vimentin in Xenopus. Development. 1989; 105(1): 61–74.

- 4. Keller PJ, Dodt HU: Light sheet microscopy of living or cleared specimens. Curr Opin Neurobiol. 2012; 22(1): 138–43. 10.1016/j.conb.2011.08.003

- 5. Voie AH, Burns DH, Spelman FA: Orthogonal-plane fluorescence optical sectioning: Three-dimensional imaging of macroscopic biological specimens. J Microsc. 1993; 170(Pt 3): 229–36. 10.1111/j.1365-2818.1993.tb03346.x

- 6. Amat F, Höckendorf B, Wan Y, et al.: Efficient processing and analysis of large-scale light-sheet microscopy data. Nat Protoc. 2015; 10(11): 1679–96. 10.1038/nprot.2015.111

- 7. Tomer R, Khairy K, Amat F, et al.: Quantitative high-speed imaging of entire developing embryos with simultaneous multiview light-sheet microscopy. Nat Methods. 2012; 9(7): 755–63. 10.1038/nmeth.2062

- 8. Ahrens MB, Orger MB, Robson DN, et al.: Whole-brain functional imaging at cellular resolution using light-sheet microscopy. Nat Methods. 2013; 10(5): 413–20. 10.1038/nmeth.2434

- 9. Ueda HR, Ertürk A, Chung K, et al.: Tissue clearing and its applications in neuroscience. Nat Rev Neurosci. 2020; 21(2): 61–79. 10.1038/s41583-019-0250-1

- 10. Vigouroux RJ, Belle M, Chédotal A: Neuroscience in the third dimension: Shedding new light on the brain with tissue clearing. Mol Brain. 2017; 10(1): 33. 10.1186/s13041-017-0314-y

- 11. Porter DDL, Morton PD: Clearing techniques for visualizing the nervous system in development, injury, and disease. J Neurosci Methods. 2020; 334: 108594. 10.1016/j.jneumeth.2020.108594

- 12. Casoni F, Malone SA, Belle M, et al.: Development of the neurons controlling fertility in humans: New insights from 3D imaging and transparent fetal brains. Development. 2016; 143(21): 3969–81. 10.1242/dev.139444

- 13. Belle M, Godefroy D, Dominici C, et al.: A simple method for 3D analysis of immunolabeled axonal tracts in a transparent nervous system. Cell Rep. 2014; 9(4): 1191–201. 10.1016/j.celrep.2014.10.037

- 14. Belle M, Godefroy D, Couly G, et al.: Tridimensional Visualization and Analysis of Early Human Development. Cell. 2017; 169(1): 161–173.e12. 10.1016/j.cell.2017.03.008

- 15. Vigouroux RJ, Cesar Q, Chédotal A, et al.: Revisiting the role of Dcc in visual system development with a novel eye clearing method. eLife. 2020; 9: e51275. 10.7554/eLife.51275

- 16. Moreno-Bravo JA, Puiggros SR, Blockus H, et al.: Commissural neurons transgress the CNS/PNS boundary in absence of ventricular zone-derived netrin 1. Development. 2018; 145(2): dev159400. 10.1242/dev.159400

- 17. Yu T, Zhu J, Li Y, et al.: RTF: A rapid and versatile tissue optical clearing method. Sci Rep. 2018; 8(1): 1964. 10.1038/s41598-018-20306-3

- 18. Pende M, Becker K, Wanis M, et al.: High-resolution ultramicroscopy of the developing and adult nervous system in optically cleared Drosophila melanogaster. Nat Commun. 2018; 9(1): 4731. 10.1038/s41467-018-07192-z

- 19. Wan Y, Wei Z, Looger LL, et al.: Single-Cell Reconstruction of Emerging Population Activity in an Entire Developing Circuit. Cell. 2019; 179(2): 355–372.e23. 10.1016/j.cell.2019.08.039

- 20. McDole K, Guignard L, Amat F, et al.: In Toto Imaging and Reconstruction of Post-Implantation Mouse Development at the Single-Cell Level. Cell. 2018; 175(3): 859–876.e33. 10.1016/j.cell.2018.09.031

- 21. Whitman MC, Nguyen EH, Bell JL, et al.: Loss of CXCR4/CXCL12 Signaling Causes Oculomotor Nerve Misrouting and Development of Motor Trigeminal to Oculomotor Synkinesis. Invest Ophthalmol Vis Sci. 2018; 59(12): 5201–9. 10.1167/iovs.18-25190

- 22. Wu Z, Makihara S, Yam PT, et al.: Long-Range Guidance of Spinal Commissural Axons by Netrin1 and Sonic Hedgehog from Midline Floor Plate Cells. Neuron. 2019; 101(4): 635–647.e4. 10.1016/j.neuron.2018.12.025

- 23. Hertz NT, Adams EL, Weber RA, et al.: Neuronally Enriched RUFY3 Is Required for Caspase-Mediated Axon Degeneration. Neuron. 2019; 103(3): 412–422.e4. 10.1016/j.neuron.2019.05.030

- 24. Renier N, Adams EL, Kirst C, et al. : Mapping of Brain Activity by Automated Volume Analysis of Immediate Early Genes. Cell. 2016; 165(7): 1789–802. 10.1016/j.cell.2016.05.007 [DOI] [PMC free article] [PubMed] [Google Scholar]; Faculty Opinions Recommendation

- 25. Method of the year 2013. Nat Methods. 2014; 11(1): 1. 10.1038/nmeth.2801

- 26. Lake BB, Chen S, Sos BC, et al.: Integrative single-cell analysis of transcriptional and epigenetic states in the human adult brain. Nat Biotechnol. 2018; 36(1): 70–80. 10.1038/nbt.4038

- 27. Schenk S, Bannister SC, Sedlazeck FJ, et al.: Combined transcriptome and proteome profiling reveals specific molecular brain signatures for sex, maturation and circalunar clock phase. eLife. 2019; 8: e41556. 10.7554/eLife.41556

- 28. Tang F, Barbacioru C, Wang Y, et al.: mRNA-Seq whole-transcriptome analysis of a single cell. Nat Methods. 2009; 6(5): 377–82. 10.1038/nmeth.1315

- 29. Chen G, Ning B, Shi T: Single-Cell RNA-Seq Technologies and Related Computational Data Analysis. Front Genet. 2019; 10: 317. 10.3389/fgene.2019.00317

- 30. Rosenberg AB, Roco CM, Muscat RA, et al.: Single-cell profiling of the developing mouse brain and spinal cord with split-pool barcoding. Science. 2018; 360(6385): 176–82. 10.1126/science.aam8999

- 31. Zhong S, Zhang S, Fan X, et al.: A single-cell RNA-seq survey of the developmental landscape of the human prefrontal cortex. Nature. 2018; 555(7697): 524–8. 10.1038/nature25980

- 32. Telley L, Agirman G, Prados J, et al.: Temporal patterning of apical progenitors and their daughter neurons in the developing neocortex. Science. 2019; 364(6440): eaav2522. 10.1126/science.aav2522

- 33. Trapnell C, Cacchiarelli D, Grimsby J, et al.: The dynamics and regulators of cell fate decisions are revealed by pseudotemporal ordering of single cells. Nat Biotechnol. 2014; 32(4): 381–6. 10.1038/nbt.2859

- 34. Griffiths JA, Scialdone A, Marioni JC: Using single-cell genomics to understand developmental processes and cell fate decisions. Mol Syst Biol. 2018; 14(4): e8046. 10.15252/msb.20178046

- 35. Codeluppi S, Borm LE, Zeisel A, et al.: Spatial organization of the somatosensory cortex revealed by osmFISH. Nat Methods. 2018; 15(11): 932–5. 10.1038/s41592-018-0175-z

- 36. Eng CHL, Lawson M, Zhu Q, et al.: Transcriptome-scale super-resolved imaging in tissues by RNA seqFISH. Nature. 2019; 568(7751): 235–9. 10.1038/s41586-019-1049-y

- 37. Salmén F, Ståhl PL, Mollbrink A, et al.: Barcoded solid-phase RNA capture for Spatial Transcriptomics profiling in mammalian tissue sections. Nat Protoc. 2018; 13(11): 2501–34. 10.1038/s41596-018-0045-2

- 38. Wang X, Allen WE, Wright MA, et al.: Three-dimensional intact-tissue sequencing of single-cell transcriptional states. Science. 2018; 361(6400): eaat5691. 10.1126/science.aat5691

- 39. Rodriques SG, Stickels RR, Goeva A, et al.: Slide-seq: A scalable technology for measuring genome-wide expression at high spatial resolution. Science. 2019; 363(6434): 1463–7. 10.1126/science.aaw1219

- 40. Vickovic S, Eraslan G, Salmén F, et al.: High-definition spatial transcriptomics for in situ tissue profiling. Nat Methods. 2019; 16(10): 987–90. 10.1038/s41592-019-0548-y

- 41. Habib N, Avraham-Davidi I, Basu A, et al.: Massively parallel single-nucleus RNA-seq with DroNc-seq. Nat Methods. 2017; 14(10): 955–8. 10.1038/nmeth.4407

- 42. Bakken TE, Hodge RD, Miller JA, et al.: Single-nucleus and single-cell transcriptomes compared in matched cortical cell types. PLoS One. 2018; 13(12): e0209648. 10.1371/journal.pone.0209648

- 43. Carpenter AE, Jones TR, Lamprecht MR, et al.: CellProfiler: Image analysis software for identifying and quantifying cell phenotypes. Genome Biol. 2006; 7(10): R100. 10.1186/gb-2006-7-10-r100

- 44. Jones TR, Carpenter AE, Lamprecht MR, et al.: Scoring diverse cellular morphologies in image-based screens with iterative feedback and machine learning. Proc Natl Acad Sci U S A. 2009; 106(6): 1826–31. 10.1073/pnas.0808843106

- 45. Del Toro D, Ruff T, Cederfjäll E, et al.: Regulation of Cerebral Cortex Folding by Controlling Neuronal Migration via FLRT Adhesion Molecules. Cell. 2017; 169(4): 621–635.e16. 10.1016/j.cell.2017.04.012

- 46. Tomita H, Cornejo F, Aranda-Pino B, et al.: The Protein Tyrosine Phosphatase Receptor Delta Regulates Developmental Neurogenesis. Cell Rep. 2020; 30(1): 215–228.e5. 10.1016/j.celrep.2019.11.033

- 47. Stringer C, Wang T, Michaelos M, et al.: Cellpose: a generalist algorithm for cellular segmentation. bioRxiv. 2020. 10.1101/2020.02.02.931238

- 48. Caicedo JC, Cooper S, Heigwer F, et al.: Data-analysis strategies for image-based cell profiling. Nat Methods. 2017; 14(9): 849–63. 10.1038/nmeth.4397

- 49. Tetteh G, Efremov V, Forkert ND, et al.: DeepVesselNet: Vessel Segmentation, Centerline Prediction, and Bifurcation Detection in 3-D Angiographic Volumes. 13.

- 50. Weigert M, Schmidt U, Boothe T, et al.: Content-aware image restoration: Pushing the limits of fluorescence microscopy. Nat Methods. 2018; 15(12): 1090–7. 10.1038/s41592-018-0216-7

- 51. Wang H, Rivenson Y, Jin Y, et al.: Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nat Methods. 2019; 16(1): 103–10. 10.1038/s41592-018-0239-0

- 52. Falk T, Mai D, Bensch R, et al.: U-Net: deep learning for cell counting, detection, and morphometry. Nat Methods. 2019; 16(1): 67–70. 10.1038/s41592-018-0261-2

- 53. Dorkenwald S, Schubert PJ, Killinger MF, et al.: Automated synaptic connectivity inference for volume electron microscopy. Nat Methods. 2017; 14(4): 435–42. 10.1038/nmeth.4206

- 54. Livne M, Rieger J, Aydin OU, et al.: A U-Net Deep Learning Framework for High Performance Vessel Segmentation in Patients With Cerebrovascular Disease. Front Neurosci. 2019; 13: 97. 10.3389/fnins.2019.00097

- 55. Todorov MI, Paetzold JC, Schoppe O, et al.: Machine learning analysis of whole mouse brain vasculature. Nat Methods. 2020; 17(4): 442–9. 10.1038/s41592-020-0792-1

- 56. Kirst C, Skriabine S, Vieites-Prado A, et al.: Mapping the Fine-Scale Organization and Plasticity of the Brain Vasculature. Cell. 2020; 180(4): 780–795.e25. 10.1016/j.cell.2020.01.028

- 57. Di Giovanna AP, Tibo A, Silvestri L, et al.: Whole-Brain Vasculature Reconstruction at the Single Capillary Level. Sci Rep. 2018; 8(1): 12573. 10.1038/s41598-018-30533-3

- 58. Ding J, Condon A, Shah SP: Interpretable dimensionality reduction of single cell transcriptome data with deep generative models. Nat Commun. 2018; 9(1): 2002. 10.1038/s41467-018-04368-5

- 59. Torroja C, Sanchez-Cabo F: Digitaldlsorter: Deep-Learning on scRNA-Seq to Deconvolute Gene Expression Data. Front Genet. 2019; 10: 978. 10.3389/fgene.2019.00978

- 60. Zheng J, Wang K: Emerging deep learning methods for single-cell RNA-seq data analysis. Quant Biol. 2019; 7: 247–54. 10.1007/s40484-019-0189-2

- 61. Susaki EA, Shimizu C, Kuno A, et al.: Versatile whole-organ/body staining and imaging based on electrolyte-gel properties of biological tissues. Nat Commun. 2020; 11(1): 1982. 10.1038/s41467-020-15906-5

- 62. Saghafi S, Haghi-Danaloo N, Becker K, et al.: Reshaping a multimode laser beam into a constructed Gaussian beam for generating a thin light sheet. J Biophotonics. 2018; 11(6): e201700213. 10.1002/jbio.201700213

- 63. Voigt FF, Kirschenbaum D, Platonova E, et al.: The mesoSPIM initiative: open-source light-sheet microscopes for imaging cleared tissue. Nat Methods. 2019; 16(11): 1105–8. 10.1038/s41592-019-0554-0

- 64. Slavov N: Unpicking the proteome in single cells. Science. 2020; 367(6477): 512–3. 10.1126/science.aaz6695

- 65. Levine AB, Schlosser C, Grewal J, et al.: Rise of the Machines: Advances in Deep Learning for Cancer Diagnosis. Trends Cancer. 2019; 5(3): 157–69. 10.1016/j.trecan.2019.02.002

- 66. Pan C, Schoppe O, Parra-Damas A, et al.: Deep Learning Reveals Cancer Metastasis and Therapeutic Antibody Targeting in the Entire Body. Cell. 2019; 179(7): 1661–1676.e19. 10.1016/j.cell.2019.11.013

- 67. Mathis A, Mamidanna P, Abe T, et al.: Markerless tracking of user-defined features with deep learning. arXiv:1804.03142 [cs, q-bio, stat]. 2018.

- 68. Friedmann D, Pun A, Adams EL, et al.: Mapping mesoscale axonal projections in the mouse brain using a 3D convolutional network. Proc Natl Acad Sci U S A. 2020; 117(20): 11068–75. 10.1073/pnas.1918465117