Abstract

Although much deep learning research has focused on mammographic detection of breast cancer, relatively little attention has been paid to mammography triage for radiologist review. The purpose of this study was to develop and test DeepCAT, a deep learning system for mammography triage based on suspicion of cancer. Specifically, we evaluate DeepCAT’s ability to provide two augmentations to radiologists: (1) discarding images unlikely to contain cancer from radiologist review and (2) prioritizing images likely to contain cancer. We used 1878 2D mammographic images (CC and MLO views) from the Digital Database for Screening Mammography to develop DeepCAT, a deep learning triage system composed of 2 components, (1) a mammogram classifier cascade and (2) a mass detector, which are combined to generate an overall priority score. This priority score is used to order images for radiologist review. Of 595 testing images, DeepCAT recommended low priority for 315 images (53%), none of which contained a malignant mass. In evaluation of prioritizing images according to likelihood of containing cancer, DeepCAT’s study ordering required an average of 26 adjacent swaps to obtain perfect review order. Our results suggest that DeepCAT could substantially increase efficiency for breast imagers and effectively triage review of mammograms with malignant masses.

Keywords: Deep learning, Artificial intelligence, Mammography, Triage, Breast cancer screening, Breast imaging

Introduction

A fundamental question facing the radiology community is how artificial intelligence (AI) will impact the field. One dominant view is that AI will augment radiologists and other physicians to do their jobs more efficiently and accurately, for use cases ranging from triaging neuroradiology CT studies for acute abnormalities in the emergency department [1] to improving clinician detection rates of bony extremity fractures [2]. A prime use for AI, and for deep learning in particular, is to augment radiologists in breast cancer screening mammography, which is currently recommended in the USA for all women aged 40 and over [3]. As the demand for screening mammography increases [4], radiologists are tasked with maintaining high levels of accuracy, interpretation speed, and efficient use of resources in the face of an increasing number of images to interpret, a problem compounded by the advent of digital breast tomosynthesis (DBT, or 3D mammography), which results in more images per patient to be reviewed. Furthermore, radiologist shortages are a global issue, especially in developing countries such as Mexico, which has 291 breast radiologists to interpret mammograms for 14 million eligible women [5]; similar, albeit less dramatic, shortages have been projected in developed countries, including the USA [4] and Europe, where double reading of mammograms is standard [6]. Augmentation of radiologists in breast cancer screening could thus be of great benefit in both the developed and the developing world.

Deep learning has shown promise for detection of breast cancer on mammography: recent studies have demonstrated breast cancer detection rates equivalent to those of radiologists [7–9], as well as the possibility of enhancing human radiologist interpretation for synergistic improvements in cancer detection rate [7]. However, in the screening mammography workflow, radiologists must interpret very high volumes of images; therefore, improving the radiologist’s workflow may be just as important as improving breast cancer detection. Yet workflow improvement has received much less attention than breast cancer detection rates.

The purpose of this study was to develop and test DeepCAT, a deep learning system for mammography triage based on suspicion of cancer. Specifically, we evaluate 2 theoretical radiologist augmentations: (1) discarding images unlikely to contain cancer and (2) prioritizing images likely to contain cancer.

Materials and Methods

All data used for the present study were publicly available, as described below. Our institutional review board (IRB) classified this research as non-human subjects research without the need for formal IRB review per our institutional policy.

Dataset

The Digital Database for Screening Mammography (DDSM) [10] consists of 3034 de-identified digitized 2D film mammography images from 2620 patients at 4 USA hospitals (Massachusetts General Hospital, Wake Forest, Sacred Heart, and Washington University in St. Louis). The DDSM images contain both craniocaudal (CC) and mediolateral oblique (MLO) views and are categorized as normal, benign (having at least one mass proven benign by pathology or imaging follow-up, without a malignant mass), or malignant (having at least one pathology-proven malignant mass); each mass had been previously segmented by a radiologist. Because this study focused on prioritizing images with mass abnormalities, we excluded images with microcalcifications. The resulting dataset contained 1438 images with masses (77%), which we combined with 440 normal mammograms (23%) for a final dataset of 1878 images.

Image Pre-processing and Programming Specifications

We converted all mammography images from DICOM to portable network graphics (PNG) format and resized all images to a 448 × 448 matrix, balancing the preservation of image detail against computational memory limitations; representative pre-processed images are presented in Fig. 1.

Fig. 1.

Representative mammography images used in our study

The images were randomly split into training, validation, and test datasets according to a 55%/13.5%/31.5% split (Table 1). There were roughly equal distributions of malignant and benign masses across all 3 datasets, whereas normal images were underrepresented in the training set (100 of 1027 images) and overrepresented in the testing set (240 of 595 images); we account for these class imbalances in the deep convolutional neural network (DCNN) training process, described below. The training dataset was used to train our models, and the validation dataset was used to fine-tune training hyperparameters. The test dataset was used only after the models had been trained and fine-tuned, for final evaluation of the models and the overall DeepCAT system.

Table 1.

Dataset splits for DeepCAT training, validation, and testing

| Split | Total | Malignant | Benign | Normal |
|---|---|---|---|---|
| Training (55%) | 1027 | 490 | 437 | 100 |
| Validation (13.5%) | 256 | 83 | 73 | 100 |
| Testing (31.5%) | 595 | 152 | 203 | 240 |
| Total | 1878 | 725 | 713 | 440 |

All programming was performed using the PyTorch deep learning framework (version 0.3.1, https://pytorch.org). All DCNN development work was performed on a Tesla M40 GPU (Nvidia Corporation, Santa Clara, CA).

DeepCAT Overview

DeepCAT (Deep Computer-Aided Triage) is a triage system that focuses on two key elements for mammogram suspicion scoring: (1) discrete masses and (2) other image features indicative of cancer that are not necessarily contained in a mass, such as architectural distortion. Accordingly, DeepCAT has 2 components, each described below: (1) a mass detector and (2) a mammogram classifier cascade. These two components are applied in a sequential image-processing workflow, and their outputs are combined into an overall priority score, which is used to order studies for radiologist review (Fig. 2).

Fig. 2.

DeepCAT workflow overview

Mammogram Classifier Cascade

The mammogram classifier cascade is composed of two mammogram classifiers, each trained to classify images into three classes: normal, benign, and malignant. The first, referred to as “malignancy-weighted,” was specifically trained to maximize malignancy recall (sensitivity) and non-malignant precision (positive predictive value). Such a classifier would be expected to make very precise predictions for non-malignant images (such as normal images) and thus to confidently predict images as not containing a cancer. This classifier could therefore theoretically be used to identify, with high confidence, normal images that can be excluded from further review by a radiologist or, alternatively, deferred for later review. The second classifier, referred to as “balanced,” was trained to maximize overall accuracy.

The classifiers were developed using transfer learning [11, 12], starting from ResNet-34 [13] DCNN architectures pretrained on ImageNet. Specifically, the final pooling layer of ResNet-34 was replaced with an adaptive average pooling layer (to accommodate input images of varying size), followed by dropout and, finally, a linear layer. Weighted cross entropy was used as the objective function; for the malignancy-weighted classifier, the malignant class was weighted twice as much and the benign mass class 1.5 times as much. Training hyperparameters are described later in this section.
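The class weighting can be illustrated with a minimal pure-Python sketch of weighted cross entropy. It assumes the class order normal/benign/malignant and a baseline weight of 1.0 for the normal class (the text gives only the malignant and benign multipliers). The normalization by the summed target weights mirrors what PyTorch's `torch.nn.CrossEntropyLoss(weight=...)` does with its default mean reduction; the helper names are illustrative.

```python
import math

# Assumed class order and weights: normal (1.0, assumed baseline),
# benign (1.5x), malignant (2x), as described for the malignancy-weighted classifier.
CLASS_WEIGHTS = [1.0, 1.5, 2.0]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of raw scores."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum_exp for x in logits]


def weighted_cross_entropy(batch_logits, targets, weights=CLASS_WEIGHTS):
    """Weighted mean negative log-likelihood over a batch.

    Each sample's loss is scaled by the weight of its true class, and the sum
    is normalized by the total weight of the targets.
    """
    total, norm = 0.0, 0.0
    for logits, y in zip(batch_logits, targets):
        w = weights[y]
        total += -w * log_softmax(logits)[y]
        norm += w
    return total / norm


# The model confidently predicts "normal" for both samples; the error on the
# malignant sample (target 2) dominates the weighted loss.
logits = [[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
targets = [0, 2]
print(weighted_cross_entropy(logits, targets))             # about 1.573
print(weighted_cross_entropy(logits, targets, [1, 1, 1]))  # about 1.240 (unweighted)
```

Raising the malignant weight pushes the optimizer to avoid missing cancers at the cost of more false-positive malignant predictions. That is the trade-off the malignancy-weighted classifier is meant to make.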

The predictions from these two classifiers are then combined in a weighted average to generate an overall classifier cascade score. We experimented with a variety of weightings and selected the optimal weighting based on prioritization performance (described below).
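A minimal sketch of this weighted average follows. It assumes each classifier's "prediction" is its probability for the malignant class. The default weight of 2.3 for the malignancy-weighted classifier, relative to 1.0 for the balanced classifier, is the best-performing ratio reported in the Results. The function name is hypothetical.

```python
def cascade_score(p_malignancy_weighted, p_balanced, w_mw=2.3, w_bal=1.0):
    """Weighted average of the two classifiers' malignant-class probabilities.

    Hypothetical helper: because the weights are normalized, only their ratio
    matters, and the result stays in [0, 1] whenever both inputs do.
    """
    return (w_mw * p_malignancy_weighted + w_bal * p_balanced) / (w_mw + w_bal)


print(round(cascade_score(0.9, 0.4), 3))  # 0.748
```

Since only the ratio w_mw / w_bal affects the ordering, a weighting search like the one described above reduces to sweeping a single number and keeping the value with the best prioritization score.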

In test evaluation of the mammogram classifiers, we used recall (sensitivity) and precision (positive predictive value) as clinically relevant parameters for designing a mammography triage system.
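Per-class recall and precision are computed one-vs-rest from the predicted and true labels. The short sketch below uses hypothetical label strings. Its toy example mimics a conservative classifier: perfect normal precision, but low normal recall.

```python
def per_class_recall_precision(y_true, y_pred, classes=("normal", "benign", "malignant")):
    """Return {class: (recall, precision)} computed one-vs-rest.

    recall    = TP / (TP + FN)  (sensitivity)
    precision = TP / (TP + FP)  (positive predictive value)
    A ratio with an empty denominator is reported as float("nan").
    """
    results = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        recall = tp / (tp + fn) if tp + fn else float("nan")
        precision = tp / (tp + fp) if tp + fp else float("nan")
        results[c] = (recall, precision)
    return results


y_true = ["normal", "normal", "malignant", "benign"]
y_pred = ["normal", "malignant", "malignant", "malignant"]
results = per_class_recall_precision(y_true, y_pred)
print(results["normal"])  # (0.5, 1.0): every "normal" call is right, half are found
```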

Mammogram Mass Detector

The second component of DeepCAT is the mass detector, which is based on RetinaNet [14]. Briefly, RetinaNet is composed of a feature backbone network for extracting image features, a bounding box regressor for determining the position of an object (region of interest) in the image, and a classifier subnet for classifying detections. For our mass detector, the feature backbone is a ResNet-50-based Feature Pyramid Network [13]. By using focal loss [14], RetinaNet architectures account for the typical class imbalance between an object of interest and the background (as is the case for breast masses and background tissue).
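For reference, focal loss down-weights easy examples by a modulating factor: FL(p_t) = −α_t (1 − p_t)^γ log(p_t). The sketch below is a pure-Python, single-prediction version of the binary form. It uses the α = 0.25 and γ = 2 defaults from the RetinaNet paper; the values used to train DeepCAT's detector are not stated in the text.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for a single anchor prediction.

    p: predicted probability that the anchor contains the object (e.g., a mass)
    y: 1 if the anchor is a positive (object), 0 if it is background
    """
    p_t = p if y == 1 else 1.0 - p              # probability assigned to the true label
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p, y):
    """Plain binary cross entropy, for comparison."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


# An easy background anchor (p = 0.01) contributes almost nothing under focal
# loss, while cross entropy still assigns it a small but non-negligible loss.
print(cross_entropy(0.01, 0), focal_loss(0.01, 0))
```

Because each mammogram yields thousands of easy background anchors and only a few mass anchors, suppressing the easy-background term keeps the rare positives from being swamped. With γ = 0 and α_t = 1, the expression reduces to ordinary cross entropy.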

The mass detector was trained to detect benign masses, malignant masses, and background breast tissue while generating a bounding box around any potential malignant lesion. To train the box regressor, bounding boxes were generated from the segmentation masks included in the DDSM dataset and mapped to the best training anchors, defined as those with the highest intersection over union (IoU). To improve object detection, we additionally trained the detector to predict the object mask by adding a third head for segmentation that uses features from the 1st pyramid level (P0). Because training with cross entropy alone can bias the model toward the background (the vast majority of pixels correspond to background), this segmentation subnet was trained with an equally weighted mean of Dice loss and cross entropy (α = 0.5). The IoU threshold for a true-positive detection was set at 0.1. Training hyperparameters are described in the following section.
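The steps above (mask-to-box conversion, IoU-based anchor matching, the 0.1 true-positive threshold, and the combined Dice/cross-entropy loss) can be sketched in pure Python. Boxes are (x_min, y_min, x_max, y_max) with exclusive max coordinates, and masks are nested lists of 0/1. The soft binary Dice formulation and all helper names are assumptions for illustration. Only the IoU matching, the 0.1 threshold, and α = 0.5 come from the text.

```python
import math


def mask_to_box(mask):
    """Tight box (x_min, y_min, x_max, y_max) around nonzero pixels.

    Assumes the mask has at least one foreground pixel; max coordinates are
    exclusive, so width = x_max - x_min.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return (min(cols), min(rows), max(cols) + 1, max(rows) + 1)


def area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


def iou(a, b):
    """Intersection over union of two boxes."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = area(a) + area(b) - inter
    return inter / union if union else 0.0


def best_anchor(gt_box, anchors):
    """Index of the anchor with the highest IoU against a ground-truth box."""
    return max(range(len(anchors)), key=lambda i: iou(gt_box, anchors[i]))


def is_true_positive(pred_box, gt_box, threshold=0.1):
    """A detection counts as a hit if its IoU reaches the lenient 0.1 threshold."""
    return iou(pred_box, gt_box) >= threshold


def dice_ce_loss(probs, target, alpha=0.5, eps=1e-7):
    """alpha * soft Dice loss + (1 - alpha) * pixel-wise binary cross entropy.

    probs, target: flat lists of per-pixel foreground probabilities / 0-1 labels.
    """
    inter = sum(p * t for p, t in zip(probs, target))
    dice = 1.0 - (2.0 * inter + eps) / (sum(probs) + sum(target) + eps)
    clipped = [min(max(p, eps), 1.0 - eps) for p in probs]  # avoid log(0)
    bce = -sum(t * math.log(p) + (1 - t) * math.log(1.0 - p)
               for p, t in zip(clipped, target)) / len(probs)
    return alpha * dice + (1 - alpha) * bce
```

The Dice term depends only on the overlap with the (small) foreground. This is why pairing it with cross entropy counteracts the background bias described above.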

In test evaluation of the mass detector, we measured the true positive rate (TPR) for detection of malignant and benign masses at a given average number of false positives per image (FPPI), a clinically relevant outcome parameter for identifying masses as input to an overall priority score. We note, however, that only the malignant mass detections from the mass detector are used in our priority scoring (described in the subsequent sections).
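An operating point of this kind (TPR at a given average FPPI) can be computed as follows. At a score threshold, each ground-truth mass counts as detected if at least one kept detection hits it, and each kept detection that hits no mass counts as a false positive. The data layout and helper name are hypothetical. The text does not say how duplicate hits on the same mass were handled; this sketch neither counts them as false positives nor double-counts the mass.

```python
def tpr_and_fppi(images, threshold):
    """Compute (TPR, average FPPI) at one detection-score threshold.

    images: list of dicts with
      "n_masses":   number of ground-truth masses in the image
      "detections": list of (score, hit_mass_id) pairs, where hit_mass_id is the
                    index of the ground-truth mass the box matches (e.g., IoU >= 0.1),
                    or None if it matches no mass.
    """
    total_masses, found, false_positives = 0, 0, 0
    for img in images:
        total_masses += img["n_masses"]
        kept = [(s, m) for s, m in img["detections"] if s >= threshold]
        found += len({m for _, m in kept if m is not None})  # each mass counted once
        false_positives += sum(1 for _, m in kept if m is None)
    tpr = found / total_masses if total_masses else float("nan")
    return tpr, false_positives / len(images)


images = [
    {"n_masses": 1, "detections": [(0.9, 0), (0.4, None)]},
    {"n_masses": 1, "detections": [(0.3, 0)]},
    {"n_masses": 0, "detections": [(0.6, None)]},
]
print(tpr_and_fppi(images, 0.5))  # stricter threshold: fewer hits, fewer false positives
print(tpr_and_fppi(images, 0.2))  # looser threshold: more hits, more false positives
```

Sweeping the threshold traces a free-response ROC (FROC) curve, from which a single (TPR, FPPI) pair such as the one reported in the Results can be read off.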

Classifier and Detector Training Details and Hyperparameters

To reduce the chance of overfitting, we applied extensive combinations of augmentations during training: rotations, horizontal flips, contrast variations, Gaussian blur, coarse dropout, and additive Gaussian noise. Adam was used as the optimizer, with batch sizes of 80 and 8 for the classifier and detector, respectively. We used a learning rate of 1 × 10⁻⁴ with a scheduler that reduced the rate on plateaus, alongside early stopping. Hyperparameters were optimized on the validation dataset using random search.
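The following sketch shows how learning-rate reduction on plateaus and early stopping interact, as a small pure-Python controller driven by per-epoch validation losses. The patience values and the 0.1 reduction factor are assumptions, since they are not reported. In PyTorch, the learning-rate part is typically handled by `torch.optim.lr_scheduler.ReduceLROnPlateau`; the class below is a hypothetical stand-in, not that API.

```python
class PlateauController:
    """Reduce the learning rate on validation-loss plateaus and stop early.

    Hypothetical helper; factor, lr_patience, and stop_patience are assumed values.
    """

    def __init__(self, lr=1e-4, factor=0.1, lr_patience=2, stop_patience=5):
        self.lr = lr
        self.factor = factor
        self.lr_patience = lr_patience
        self.stop_patience = stop_patience
        self.best = float("inf")
        self.epochs_since_best = 0    # drives early stopping
        self.epochs_since_reduce = 0  # drives learning-rate reduction

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.epochs_since_best = 0
            self.epochs_since_reduce = 0
        else:
            self.epochs_since_best += 1
            self.epochs_since_reduce += 1
            if self.epochs_since_reduce > self.lr_patience:
                self.lr *= self.factor
                self.epochs_since_reduce = 0
        return self.epochs_since_best >= self.stop_patience


controller = PlateauController()
for epoch, loss in enumerate([1.0, 0.8, 0.8, 0.81, 0.82, 0.9, 0.95]):
    if controller.step(loss):
        # Stops after epoch 6, with the learning rate reduced once to 1e-05.
        print(f"stopped after epoch {epoch}, lr={controller.lr:.0e}")
        break
```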

DeepCAT Mammogram Workflow and Scoring

DeepCAT’s workflow starts with a stack of input mammogram images, which are analyzed and ordered for radiologist review according to a priority score that directly reflects their probability of containing a malignant mass (Fig. 2). The images are first analyzed by a sequential classifier cascade, both to potentially reduce the radiologist’s workload and to triage images for radiologist review based on suspicion of breast cancer. Input images are first analyzed by the malignancy-weighted classifier, which, as noted above, has high malignant recall (sensitivity) and thus high normal precision. All images predicted as normal are removed from the remainder of the DeepCAT workflow and given a low priority score corresponding to zero probability of malignant mass.

Because the images classified as malignant by this first classifier would be expected to include a high proportion of false positives (owing to its low precision for malignancy), the cascade includes a second, balanced classifier. The images not removed by the malignancy-weighted classifier are analyzed by this balanced classifier to provide a more precise estimate of malignancy risk, thereby improving the subsequent ordering of images for radiologist review. The balanced classifier’s prediction score is then combined in a weighted average with that of the malignancy-weighted classifier to produce a weighted classifier score for malignancy.

Finally, the images are analyzed by the mass detector, and the most suspicious mass (i.e., the detection with the highest confidence for malignant mass) is identified; if its confidence for malignant mass is > 50%, it is averaged with the classifier cascade score to generate an overall priority score. We did not use the benign mass detector’s predictions in our final priority scoring because our goal was to prioritize images with cancer (i.e., malignant masses) over those without cancer, without distinguishing between images with benign masses and images without any masses.

Once the images have been analyzed, they are ordered according to their overall priority score, with the goal of placing images with malignant masses before images with benign masses and normal images without masses.
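The scoring rules in this subsection can be summarized in a short sketch. It assumes the per-image inputs are already computed: the malignancy-weighted classifier's predicted label, the classifier cascade score (a probability of malignancy), and the detector's highest malignant-mass confidence (0 if there is no detection). It also assumes that when the top malignant detection is at or below 50%, the priority score is simply the cascade score; the text does not state this case explicitly. The record layout and function names are hypothetical.

```python
from typing import NamedTuple


class ImageResult(NamedTuple):
    image_id: str
    mw_label: str             # label from the malignancy-weighted classifier
    cascade_score: float      # weighted-average malignancy probability
    max_malignant_det: float  # highest malignant-mass confidence from the detector


def priority_score(r: ImageResult) -> float:
    """Overall priority score following the workflow described in the text."""
    if r.mw_label == "normal":
        # Screened out by the first classifier: zero probability of malignant mass.
        return 0.0
    if r.max_malignant_det > 0.5:
        # Confident malignant detection: average it with the cascade score.
        return (r.cascade_score + r.max_malignant_det) / 2
    # Assumption: otherwise the cascade score is used unchanged.
    return r.cascade_score


def review_order(results):
    """Image IDs from highest to lowest priority (Python's sort keeps ties stable)."""
    return [r.image_id for r in sorted(results, key=priority_score, reverse=True)]


stack = [
    ImageResult("A", "normal", 0.10, 0.00),
    ImageResult("B", "malignant", 0.55, 0.90),
    ImageResult("C", "benign", 0.40, 0.30),
    ImageResult("D", "malignant", 0.70, 0.20),
]
print(review_order(stack))  # ['B', 'D', 'C', 'A']
```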

Evaluation of Theoretical Workload Reduction

We evaluated the theoretical workload reduction if DeepCAT’s malignancy-weighted classifier were used as a “screener” for normal studies, whereby any image predicted as normal would be excluded from radiologist review. Additionally, we evaluated how many of these theoretically excluded images contained a cancer.
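The two quantities in this evaluation reduce to simple counting: the fraction of images that would be excluded, and the number of excluded images that contain a malignant mass. Below is a minimal sketch with hypothetical label strings. For scale, the 315 of 595 test images reported in the Results give 315/595 ≈ 0.53.

```python
def workload_reduction(predicted, truth):
    """Return (fraction of images excluded, number of excluded malignant images).

    predicted: labels from the malignancy-weighted classifier
    truth:     ground-truth labels; images predicted "normal" are excluded
    """
    excluded = [t for p, t in zip(predicted, truth) if p == "normal"]
    missed_cancers = sum(1 for t in excluded if t == "malignant")
    return len(excluded) / len(predicted), missed_cancers


print(workload_reduction(["normal", "normal", "malignant", "benign"],
                         ["normal", "benign", "malignant", "normal"]))  # (0.5, 0)
```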

Evaluation of Prioritization of Images for Radiologist Review

As described above, mammograms are sorted by a priority score based on probability of malignancy, with the goal of ordering all malignant images before images with benign lesions, which in turn should appear before normal images. While much of the prior deep learning work in mammography analysis has evaluated classification accuracy, we are instead interested in how reliably we can order cases. Our goal was to devise a measure of how many normal exams a radiologist might see that should have been ordered behind abnormal exams. In the sorting literature, ordering performance is measured using the number of adjacent swaps required to put the data in optimal order, which can be computed in linear time given the ground truth labels for a set of images [15]. For example, if we have classes a, b, and c and the desired order is alphabetical, the sequence aabcbc requires 1 swap to be perfectly ordered, the sequence baabcc requires 2 swaps, and so forth. We measure the prioritization performance of DeepCAT by computing the number of adjacent swaps necessary to reorder the data into the desired ordering for each input image, which we term priority ordinal distance (POD). For the purposes of DeepCAT’s prioritization, we consider optimal ordering to be prioritization of images with a malignant mass over those with a benign mass or normal images; the POD is thus the number of adjacent swaps needed to obtain this order. An alternative, intuitive way to think about the POD is: “how many additional examinations do I have to read (in excess of the total number of suspicious images) to see all of the suspicious images?”
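The adjacent-swap count equals the number of inversions in the sequence of ground-truth classes, that is, pairs in which a lower-priority image precedes a higher-priority one. With a small, fixed number of classes, it can be counted in a single pass by keeping running counts per class, which is what makes it linear-time. The sketch below reproduces the two worked examples. The per-image breakdown (how many lower-priority images sit ahead of each image) is one plausible reading of the per-image POD. How the paper averages these values is not specified, so any averaging applied to them is an assumption.

```python
def adjacent_swaps(sequence, rank):
    """Minimum number of adjacent swaps needed to sort `sequence` by priority.

    sequence: ground-truth classes in the order the system presents them
    rank:     dict mapping class -> priority rank (0 = read first)

    This equals the number of inversions: pairs (i < j) where the item at i has
    a larger rank than the item at j. A running count per rank makes this a
    single O(n * k) pass for k ranks, i.e., linear for a fixed number of classes.
    Returns (total, per_item), where per_item[i] is the number of lower-priority
    items placed ahead of item i.
    """
    k = max(rank.values()) + 1
    seen = [0] * k  # seen[r]: how many items of rank r have been passed so far
    per_item = []
    for item in sequence:
        r = rank[item]
        per_item.append(sum(seen[r + 1:]))  # lower-priority items already ahead
        seen[r] += 1
    return sum(per_item), per_item


# The two worked examples from the text (alphabetical order is optimal).
rank = {"a": 0, "b": 1, "c": 2}
print(adjacent_swaps("aabcbc", rank)[0])  # 1
print(adjacent_swaps("baabcc", rank)[0])  # 2

# Binary DeepCAT-style priority: malignant first, benign and normal tied.
binary = {"malignant": 0, "benign": 1, "normal": 1}
order = ["malignant", "normal", "malignant", "benign", "malignant"]
print(adjacent_swaps(order, binary))  # (3, [0, 0, 1, 0, 2])
```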

Results

Mammogram Classifier Performance

The malignancy-weighted classifier demonstrated relatively high recall (89%) and low precision (39%) for malignant masses, whereas for normal images its recall was low (53%) and its precision high (100%). Its performance for benign masses was low for both recall (25%) and precision (40%). The balanced classifier demonstrated more balanced performance across all 3 categories, ranging from a high of 82% recall and 75% precision for normal images to a low of 40% recall and 64% precision for malignant images. The classifier cascade performed intermediately between the malignancy-weighted and balanced classifiers. The specific performance parameters for each classifier are summarized in Table 2.

Table 2.

DeepCAT classifier performance on normal exams and malignant and benign masses

| Classifier | Recall (N) | Precision (N) | Recall (M) | Precision (M) | Recall (B) | Precision (B) |
|---|---|---|---|---|---|---|
| Balanced | 0.82 | 0.75 | 0.40 | 0.64 | 0.57 | 0.67 |
| Malignancy-weighted | 0.53 | 1.00 | 0.89 | 0.39 | 0.25 | 0.40 |
| Cascade | 0.81 | 0.91 | 0.70 | 0.52 | 0.52 | 0.59 |

N normal, M malignant, B benign

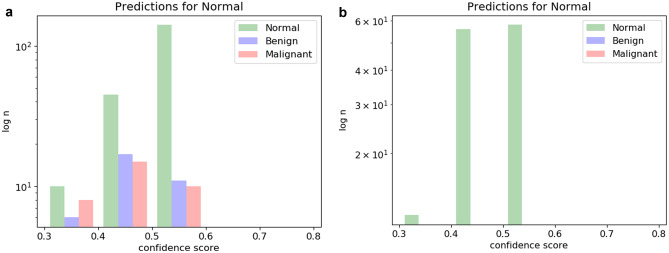

With respect to the goal of using the mammogram classifier cascade to identify normal studies with high confidence and either discard them from further evaluation or defer them for later review, Fig. 3 shows histograms of test cases predicted to be normal, stratified by ground truth. As can be seen in Fig. 3a, the balanced classifier makes many high-confidence false-positive normal predictions, with several malignant images incorrectly classified as normal. By contrast, the malignancy-weighted classifier is more conservative, making fewer normal predictions overall, but with 100% accuracy, accounting for 53% of the normal images (Fig. 3b).

Fig. 3.

Histograms of studies predicted as normal, by ground truth. a The balanced classifier makes many high-probability false-positive predictions for normal cases, including several cancers. b The malignancy-weighted classifier makes fewer predictions for normal, but with 100% accuracy

Mass Detector Performance

Our best-performing malignant mass detector had an optimal TPR of 70% at 0.3 average FPPI. Our best-performing benign mass detector had an optimal true positive rate of 50% at 1.28 average FPPI; we again highlight that the benign mass detector’s predictions were not used in the final priority scoring.

Theoretical Radiologist Augmentation

Of 595 testing images, DeepCAT would theoretically discard 315 images (53%) as normal using the malignancy-weighted classifier. Importantly, none of these images contained a malignant mass; in other words, no cancers would be missed in this test set.

Our best-performing configuration for DeepCAT, which weighted the malignancy-weighted classifier outputs 2.3 times as heavily as the balanced classifier outputs, had an average POD of 25.5 per study. In more intuitive terms, one would need to read approximately 26 extra images to see all of the suspicious images.

Discussion

Deep learning for breast cancer detection [7–9] is promising. However, for the goals of augmenting a radiologist in practice, the impact of such systems on a radiologist’s workflow is just as important as breast cancer detection, with recent suggestions that deep learning may one day help make mammography “lean” by eliminating tasks which are low-yield and “wasteful” [16], such as interpreting normal images. In our study, we developed DeepCAT, a system for screening mammography triage and study prioritization. Our system, which utilized an image classifier cascade and a mass detector, demonstrated two potential augmentations to radiologists for film screening mammography: (1) theoretically discarding approximately one-half of test images as normal and (2) prioritization of studies in an optimized review order.

Our triage system depends on two components, a classifier cascade and a mass detector, both of which must perform well for image triage and prioritization to be effective. The first classifier in the cascade (malignancy-weighted) was designed to predict normal images with high precision, so that excluding images predicted as normal could reduce radiologist workload. This classifier achieved 100% precision for normal studies, similar to the 99% precision for normal studies recently reported by Kyono et al. for a deep learning mammogram triage system, assuming a cancer prevalence of 1% [17]. Images not classified as normal by the malignancy-weighted classifier then underwent evaluation by the second, balanced classifier, whose results were combined with the first classifier’s predictions, ultimately achieving a pooled recall and precision of 81% and 91%, respectively, for normal images; this is comparable to a recent study by Yala et al. [23], whose deep learning triage system for mammogram workload reduction achieved an area under the receiver operating characteristic curve of 0.82 for classifying images as having breast cancer or not. The second component of our system, the malignant mass detector, achieved a TPR of 0.7 at 0.3 average FPPI, comparable with prior mass detectors trained and evaluated on the DDSM dataset used in our study [18–22], which have achieved TPRs as high as 0.92 at an average FPPI of 2.7 [19] and 0.81 at an average FPPI of 0.6 [20]. We intentionally balanced TPR against average FPPI to avoid an overly sensitive mass detector.

By using a mammogram classifier optimized for high recall of malignancy and high precision for normal images, DeepCAT was able to classify 53% of test studies as normal without missing a single cancer. Such a system for discarding normal studies could yield large time savings amid ever-increasing study volumes, both by reducing the number of images requiring human interpretation and by allowing radiologists to focus their time and energy on more mentally demanding tasks, such as identifying cancers in dense breasts, in which it is more difficult to distinguish between normal breast tissue and cancer. Our findings are consistent with a recent study by Yala et al., which demonstrated that a high-sensitivity deep learning system could discard 19% of normal mammograms with high confidence and without missing cancers, ultimately resulting in non-inferior sensitivity for breast cancer detection [23]. Similar findings have been reported by Kyono et al., who trained a deep learning system to theoretically identify 91% of normal mammograms while maintaining a negative predictive value of 99%, concluding that such systems could “successfully reduce the number of normal mammograms that radiologists need to read without degrading diagnostic accuracy” [17].

Although, under current medicolegal and financial paradigms, mammography images cannot be discarded without radiologist review, a paradigm shift is possible in the future. In ophthalmology, a deep learning system for detecting diabetic retinopathy, IDx-DR, was authorized by the FDA as the first autonomous, AI-based diagnostic system to be commercialized without the need for human over-reading of the images [24, 25]. A similar FDA authorization could reasonably be predicted in the near future. Furthermore, we propose that such a strategy, while not immediately viable in the USA, could be a viable option in developing countries with severe shortages of radiologists, provided that mammography equipment is available (currently, many developing countries lack mammography equipment).

Through the combined use of a mass detector and a classifier cascade composed of classifiers optimized for different tasks, we were able to prioritize studies in a more optimal order. With an average POD of 25.5 per study, a radiologist would need to read approximately 26 additional studies to see all of the suspicious images. Prioritization also bears directly on the fatigue and tedium of interpreting high volumes of screening mammography, which can be a monotonous and repetitive task. Such tedium could be curtailed by ordering images so that a radiologist can devote his or her most attentive hours (typically earlier in the workday) to the most suspicious studies, which warrant the highest levels of acuity and vigilance. Alternatively, double readers could be assigned to the cases that warrant closer review. Such re-ordering could also help set a breast imager’s expectations for when, and how often, to expect a suspicious image.

We acknowledge that using a mammography triage tool like DeepCAT could have a number of negative consequences as well. Although interpreting normal studies may be seen as a wasteful or low-yield activity, such studies often serve as a “break” from more intellectually demanding suspicious or abnormal studies, which require greater mental faculties and attention. If normal studies were eliminated, the total number of images to be interpreted would decrease, but the difficulty and complexity of the average case could increase, which could ironically worsen a radiologist’s attention span and, possibly, accuracy. Separately, if studies were triaged and ordered according to suspicion (without discarding normal studies), then, in the setting of a shared reading list, radiologists could “cherry pick” the easier studies to amass relative value units (RVUs) more quickly and easily, whether to meet a quota set by a group or to increase their reimbursement. A third potential disadvantage concerns the training of residents and fellows: a key component of learning to interpret images is the volume of images, both normal and abnormal, so if a large proportion of normal studies were discarded, trainee performance could be adversely affected. On the other hand, a deep learning tool could provide an “answer key” for trainees, giving them immediate feedback to help hone their interpretation skills and thought processes. Solutions for these potential adverse effects exist; for example, to reduce the chances of cherry picking and of a prohibitively taxing workload, one could use an automated worklist generator that takes image-specific suspicion into account and creates a list for each radiologist. Indeed, tools of this kind, such as Carestream Workflow Orchestrator [26], are already on the market. Alternatively, a “complexity score” to adjust RVUs could be instituted, based on how suspicious a deep learning system deems an image to be, to discourage cherry picking of easier studies. Additionally, the suspicion score for a given image could be used to assemble an optimal set of studies for resident or fellow training. In any case, the implications of implementing AI systems into the medical imaging workflow are complex and require consideration of both positive and negative outcomes.

Our study has several limitations. First, we excluded all images with microcalcifications, which can also be associated with breast cancer. We did so because we wanted to develop a proof-of-concept for the DeepCAT system by focusing on masses, as microcalcifications are a more subtle finding than masses. Given the promising results in our study, we plan to expand the proof-of-concept to include images with microcalcifications, using a variety of methods for establishing benign or malignant status. Second, we used 2D images of digitized film mammography, which are not the current state of the art, both with regard to the film medium (as opposed to the contemporary digital medium) and 2D images (as opposed to DBT, also known as 3D mammography); our system would likely not generalize well to contemporary technologies. We are currently training a DeepCAT system for DBT and 2D digital mammography. Relatedly, our system’s generalizability is limited because we did not perform testing on an external dataset. However, our intention was not necessarily to develop an externally valid deep learning system but rather to develop a proof-of-concept of a potential triage pipeline for mammography that could be adapted for use on modern-day mammography images, such as DBT, rather than the older scanned film mammography that makes up the DDSM. We note some technical challenges in expanding the DeepCAT model to DBT (3D images), including the increase in network complexity and parameters, with a concomitant increase in computational expense and propensity to overfit when using 3D DCNNs. These problems may be overcome by using recently described 3D transfer learning approaches for medical imaging tasks [27, 28] or by using 2D CNNs to evaluate images slice by slice and averaging the priority scores. Third, in fine-tuning the weights of the classifier cascade average and the configuration of each image’s priority score, we tested a range of arbitrarily chosen values to find an optimal configuration but did not evaluate every possible combination; a more systematic search for the optimal configuration may improve our results. Fourth, our mass detector accounted only for the single most suspicious mass; in reality, breast cancer can be multifocal, with several masses, which could make an image more suspicious for cancer than a single mass. Finally, because individual patients in the DDSM had variable numbers of images, with some having both CC and MLO views and others having only one view, we designed our model to handle each image as a separate input rather than pooling all images for a given patient, so prioritization was performed on single images rather than at the patient level. One solution to this limitation would be to order images at the patient level by prioritizing each patient according to the highest priority score among that patient’s images. Alternatively, the images could be evaluated and prioritized individually by DeepCAT, but when the radiologist opens one image, all associated images for that patient could be presented for review and interpretation.
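The first patient-level option mentioned above ranks each patient by the highest priority score among that patient's images. A sketch of how it could look follows; the (patient_id, image_id, score) tuples are a hypothetical data layout.

```python
from collections import defaultdict


def patient_review_order(image_scores):
    """Order patients by their most suspicious image, keeping each patient's
    images together (highest-scoring image first).

    image_scores: iterable of (patient_id, image_id, priority_score) tuples.
    Returns a list of (patient_id, [image_id, ...]) in review order.
    """
    by_patient = defaultdict(list)
    for patient_id, image_id, score in image_scores:
        by_patient[patient_id].append((score, image_id))
    ordered = sorted(by_patient.items(),
                     key=lambda item: max(s for s, _ in item[1]),
                     reverse=True)
    return [(pid, [img for _, img in sorted(images, reverse=True)])
            for pid, images in ordered]


scores = [("P1", "P1_CC", 0.2), ("P1", "P1_MLO", 0.8),
          ("P2", "P2_CC", 0.5), ("P3", "P3_MLO", 0.0)]
print(patient_review_order(scores))
```

Grouping this way also delivers the second option for free: once a patient's first image is opened, the remaining views for that patient are next in the list.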

Our results suggest that a system like DeepCAT could theoretically reduce a breast imager’s workload and effectively triage review of mammograms with malignant masses over benign ones. Although the implementation of such a system into clinical practice is complex, a system like the one proposed could augment a radiologist’s workflow, and, ultimately, improve the safe and expedited delivery of mammography care to patients. Our next steps include expansion of DeepCAT to DBT/3D mammography and prospective clinical validation to bring this technology from bench to bedside.

Funding

This work was funded by the Johns Hopkins Discovery Award (2018-2019).

Compliance with Ethical Standards

Conflict of Interest

The authors declare that there is no conflict of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Prevedello LM, Erdal BS, Ryu JL, Little KJ, Demirer M, Qian S, et al. Automated Critical Test Findings Identification and Online Notification System Using Artificial Intelligence in Imaging. Radiology. 2017;285:923–31.

- 2. Lindsey R, Daluiski A, Chopra S, Lachapelle A, Mozer M, Sicular S, et al. Deep neural network improves fracture detection by clinicians. Proc Natl Acad Sci. 2018;115:11591–6. doi: 10.1073/pnas.1806905115.

- 3. Monticciolo DL, Newell MS, Hendrick RE, Helvie MA, Moy L, Monsees B, et al. Breast Cancer Screening for Average-Risk Women: Recommendations From the ACR Commission on Breast Imaging. J Am Coll Radiol. 2017;14:1137–43. doi: 10.1016/j.jacr.2017.06.001.

- 4. Wing P, Langelier MH. Workforce Shortages in Breast Imaging: Impact on Mammography Utilization. Am J Roentgenol. 2009;192:370–8. doi: 10.2214/AJR.08.1665.

- 5. Torres-Mejía G, Smith RA, Carranza-Flores M de la L, Bogart A, Martínez-Matsushita L, Miglioretti DL, et al. Radiographers supporting radiologists in the interpretation of screening mammography: A viable strategy to meet the shortage in the number of radiologists. BMC Cancer. 2015;15:410. doi: 10.1186/s12885-015-1399-2.

- 6. Duijm LEM, Groenewoud JH, Fracheboud J, van Ineveld BM, Roumen RMH, de Koning HJ. Introduction of additional double reading of mammograms by radiographers: Effects on a biennial screening programme outcome. Eur J Cancer. 2008;44:1223–8. doi: 10.1016/j.ejca.2008.03.003.

- 7. Wu N, Phang J, Park J, Shen Y, Huang Z, Zorin M, et al. Deep Neural Networks Improve Radiologists’ Performance in Breast Cancer Screening. IEEE Trans Med Imaging. 2019;1. doi: 10.1109/TMI.2019.2945514.

- 8. Chougrad H, Zouaki H, Alheyane O. Deep Convolutional Neural Networks for breast cancer screening. Comput Methods Programs Biomed. 2018;157:19–30. doi: 10.1016/j.cmpb.2018.01.011.

- 9. Shen L, Margolies LR, Rothstein JH, Fluder E, McBride R, Sieh W. Deep Learning to Improve Breast Cancer Detection on Screening Mammography. Sci Rep. 2019;9:12495. doi: 10.1038/s41598-019-48995-4.

- 10. Lee RS, Gimenez F, Hoogi A, Miyake KK, Gorovoy M, Rubin DL. A curated mammography data set for use in computer-aided detection and diagnosis research. Sci Data. 2017;4:170177. doi: 10.1038/sdata.2017.177.

- 11. Rajkomar A, Lingam S, Taylor AG, Blum M, Mongan J. High-Throughput Classification of Radiographs Using Deep Convolutional Neural Networks. J Digit Imaging. 2017;30:95–101. doi: 10.1007/s10278-016-9914-9.

- 12. Lakhani P, Sundaram B. Deep Learning at Chest Radiography: Automated Classification of Pulmonary Tuberculosis by Using Convolutional Neural Networks. Radiology. 2017;284:574–82. doi: 10.1148/radiol.2017162326.

- 13. He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. 2015.

- 14. Lin T-Y, Goyal P, Girshick R, He K, Dollár P. Focal Loss for Dense Object Detection. 2017.

- 15. Chitturi B, Sudborough H, Voit W, Feng X. Adjacent Swaps on Strings. In: Computing and Combinatorics. Berlin, Heidelberg: Springer; 2008. p. 299–308. doi: 10.1007/978-3-540-69733-6_30.

- 16. Kontos D, Conant EF. Can AI Help Make Screening Mammography “Lean”? Radiology. 2019;293:47–8. doi: 10.1148/radiol.2019191542.

- 17. Kyono T, Gilbert FJ, van der Schaar M. Improving Workflow Efficiency for Mammography Using Machine Learning. J Am Coll Radiol. 2020;17:56–63. doi: 10.1016/j.jacr.2019.05.012.

- 18. Dhungel N, Carneiro G, Bradley AP. Automated Mass Detection in Mammograms Using Cascaded Deep Learning and Random Forests. In: International Conference on Digital Image Computing: Techniques and Applications. IEEE; 2015. p. 1–8. doi: 10.1109/DICTA.2015.7371234.

- 19. Sampat MP, Bovik AC, Whitman GJ, Markey MK. A model-based framework for the detection of spiculated masses on mammography. Med Phys. 2008;35:2110–23. doi: 10.1118/1.2890080.

- 20. Eltonsy NH, Tourassi GD, Elmaghraby AS. A concentric morphology model for the detection of masses in mammography. IEEE Trans Med Imaging. 2007;26:880–9. doi: 10.1109/TMI.2007.895460.

- 21. Beller M, Stotzka R, Müller TO, Gemmeke H. An Example-Based System to Support the Segmentation of Stellate Lesions. In: Bildverarbeitung für die Medizin 2005. Berlin/Heidelberg: Springer-Verlag; 2005. p. 475–9. doi: 10.1007/3-540-26431-0_97.

- 22. Campanini R, Dongiovanni D, Iampieri E, Lanconelli N, Masotti M, Palermo G, et al. A novel featureless approach to mass detection in digital mammograms based on support vector machines. Phys Med Biol. 2004;49:961–75. doi: 10.1088/0031-9155/49/6/007.

- 23. Yala A, Schuster T, Miles R, Barzilay R, Lehman C. A Deep Learning Model to Triage Screening Mammograms: A Simulation Study. Radiology. 2019;293:38–46. doi: 10.1148/radiol.2019182908.

- 24. FDA Permits Marketing of IDx-DR for Automated Detection of Diabetic Retinopathy in Primary Care. Department of Ophthalmology and Visual Sciences. n.d. https://medicine.uiowa.edu/eye/facilities/eye/content/fda-permits-marketing-idx-dr-automated-detection-diabetic-retinopathy-primary-care (accessed November 12, 2019).

- 25. IDx-DR US. n.d. https://www.eyediagnosis.net/idx-dr (accessed November 12, 2019).

- 26. Orchestrator. Carestream CCP. n.d. https://collaboration.carestream.com/solutions/workflow-management/workflow-orchestrator (accessed November 12, 2019).

- 27. Rajpurkar P, Park A, Irvin J, Chute C, Bereket M, Mastrodicasa D, et al. AppendiXNet: Deep Learning for Diagnosis of Appendicitis from A Small Dataset of CT Exams Using Video Pretraining. Sci Rep. 2020;10. doi: 10.1038/s41598-020-61055-6.

- 28. Huang SC, Kothari T, Banerjee I, Chute C, Ball RL, Borus N, et al. PENet – a scalable deep-learning model for automated diagnosis of pulmonary embolism using volumetric CT imaging. NPJ Digit Med. 2020;3. doi: 10.1038/s41746-020-0266-y.