Abstract

Background

Population-based surveys play an important role in measuring vaccination coverage. Surveys measuring vaccination coverage may be commissioned by the Expanded Programme on Immunization (EPI surveys) or may be part of multi-domain non-EPI surveys such as Demographic and Health Surveys (DHS) or Multiple Indicator Cluster Surveys (MICS). Surveys conducted too close in time to each other may not only be an inefficient use of resources but may also create problems for programme staff when results suggest inconsistent patterns of programme performance for similar time periods.

Objective

To summarize the occurrence of vaccination coverage surveys conducted close in time during 2000–2019 and compare results of EPI and non-EPI coverage surveys when the surveys were conducted within one year of each other.

Methods

Using a database of published national-level vaccination coverage survey results compiled by the World Health Organization (WHO) and the United Nations Children’s Fund (UNICEF), the authors abstracted information on survey field work dates, sample size, percentage of children with documented history of vaccination and the percent coverage, as well as published uncertainty intervals from DHS and MICS, for the first and third doses of diphtheria-tetanus toxoid-pertussis containing vaccine (DTP1, DTP3) and first dose of measles containing vaccine (MCV1). Survey results of EPI and non-EPI surveys were compared.

Results

The authors identified 646 surveys with final reports and estimates of national-level vaccination coverage for DTP1, DTP3, or MCV1 from a total of 687 surveys with data collection start date from 2000 to 2019. Across the 140 countries with at least one vaccination coverage survey, the median number of surveys per country was four. Most countries were Gavi-eligible and located in the WHO Africa Region. Sixty-six survey dyads were identified where an EPI survey occurred within one year of a non-EPI survey. For the 66 dyads, in 49 of 59 with information available, EPI surveys reported a higher proportion of documented evidence of vaccination and EPI survey results tended to suggest higher levels of vaccination coverage compared to the non-EPI surveys; quite often, differences were substantial. Surveys that found higher proportions of children with documented vaccination evidence tended to also find higher proportions of children who had been vaccinated.

Summary

Opportunities exist to improve overall planning of vaccination coverage measurement in population-based household surveys so that both EPI and non-EPI surveys are more comparable and survey coverage estimates are more appropriately spaced in time. When surveys occur too close in time, careful attention is warranted to ensure comparability and assess sources of documented evidence of vaccination and related coverage differences.

Keywords: Immunization, Coverage, Household survey, Home-based records

1. Introduction

Vaccination coverage for the recommended infant immunization series is often used as a marker of primary healthcare system strength. Vaccination coverage serves as an indicator within the Sustainable Development Goals [1] (which carries on the momentum generated by the Millennium Development Goals) and is one source of informational input used by Gavi, the Vaccine Alliance [2] and the Millennium Challenge Corporation [3] for planning and in making country-level investment decisions. Furthermore, coverage is used to monitor progress against vaccine-preventable diseases and has been used as a benchmark of several global and regional immunization initiatives, including the Universal Childhood Immunization (UCI) in 1990 [4], the Decade of Vaccines and its Global Vaccine Action Plan [5] as well as the Immunization Agenda 2030 [6], endorsed by the World Health Assembly in August 2020.

Population-based surveys are one of the few existing approaches to measure vaccination coverage [7], [8]. As vaccination coverage levels in countries around the world improve alongside global and regional development initiatives, attention towards the role of surveys for monitoring and assessing vaccination coverage has increased [9]. In addition, greater attention has been directed towards methods to reduce bias and improve the accuracy and precision of population-based survey results [9], [10], [11]. Vaccination coverage may be measured in surveys commissioned by national expanded programmes on immunization, or EPI, (henceforth referred to as EPI surveys) as well as within Demographic and Health Surveys (DHS) [12], Multiple Indicator Cluster Surveys (MICS) [13] and other population-based survey platforms, e.g., the World Bank–supported Living Standard Measurement Study.

In 2017, during a consultation convened by the World Health Organization (WHO) [14], issues surrounding vaccination coverage survey methods and survey planning and implementation were discussed with particular emphasis on lessons learned from early application of the updated WHO Vaccination Coverage Survey Reference Manual [15]. During those discussions and spurred by observations made in preparation for the 2017 WHO meeting [14], concerns were raised about vaccination coverage surveys conducted close in time. Concurrently, it was recognized that these same surveys represent an opportunity to better understand the possible biases and sensitivities of underlying assumptions and implementation practices across different survey types. Because immunization service delivery is part of a broad primary care delivery system and because vaccination coverage does not tend to change rapidly from one year to the next [16] (at least at the national level), surveys conducted too close together may reflect instances of poor communication between EPI managers and other entities in the Ministry of Health, poor planning, lack of trust in non-EPI surveys, lack of information sharing, or a combination of these factors [17]. (N.B. Throughout this paper, our reference to surveys means surveys that measure vaccination coverage rather than all surveys, such as those focused on other domains, e.g., education or economics.) In some cases, EPI surveys are commissioned because the programme “is not happy” with non-EPI survey results (i.e., results suggest lower than desired or expected coverage levels). In other instances, EPI and non-EPI surveys are originally well-coordinated and scheduled to occur several years apart, but one or the other is delayed for budgetary, security or administrative reasons and the surveys go into the field very near in time.

Because population-based household surveys are resource-intensive efforts, surveys that are not spaced several years apart may represent suboptimal utilization of scarce human and financial resources. Beyond resource considerations, technical challenges may arise if EPI and non-EPI surveys suggest different coverage estimates in spite of the surveys theoretically measuring some of the exact same indicators (i.e., the proportion of children who received vaccines recommended in infancy) and being conducted close in time. Contradictory coverage estimates create challenges for immunization programme stakeholders who must synthesize and critically assess which results more accurately reflect programme performance. In some instances, the reasons for different vaccination coverage estimates are not obvious [18].

This report summarizes the occurrence of surveys measuring vaccination coverage that were conducted close in time during 2000–2019 and highlights observed patterns in vaccination coverage based on crude comparisons of results from EPI and non-EPI coverage surveys when surveys were conducted within one year of each other.

2. Methods

We obtained a database compiled by WHO and the United Nations Children’s Fund (UNICEF) with information on surveys measuring national level vaccination coverage conducted by WHO Member States. The survey database, which serves as an input to the WHO and UNICEF estimates of national immunization coverage [19], includes information on the type of survey (e.g., DHS, MICS, EPI) as well as survey field work dates, sample size, sample target population (e.g., children 12–23 months) and the estimated national coverage results, as described in survey reports. Estimated coverage results include the percent of children in the target age group with available documented evidence of vaccination history and percent of children in the target age group who were vaccinated for a selected set of vaccine-doses by the time of the survey based on documented evidence (e.g., doses documented in a vaccination card or home-based record [HBR] or facility-based record [FBR]) and the combination of documented evidence and respondent recall (in the absence of documented evidence). All information included in the database was abstracted by WHO and UNICEF staff as part of their annual immunization programme performance assessment exercise.

In the database, we identified surveys that captured vaccination history and for which survey results were finalized and a report that included descriptions of methods and results had been issued. All surveys included in the database as of 31 May 2020 with data collection start dates from January 2000 to December 2019 were eligible for inclusion. We abstracted the following information from the database: survey field work dates, survey sample size, the percent of children with documented evidence of vaccination, the percent coverage for the first and third doses of diphtheria-tetanus toxoid-pertussis containing vaccine (DTP1, DTP3) and percent coverage for the first dose of measles containing vaccine (MCV1) by documented evidence and percent coverage by the combination of documented evidence and respondent recall. For each survey, we identified the year during which data collection ended and assigned this value to the survey for descriptive purposes, regardless of when the survey field work began and the final report was published. Using the survey type information provided, we categorized surveys as UNICEF-supported MICS surveys, United States Agency for International Development-supported DHS, EPI coverage surveys, or other coverage surveys (OCS). Survey reports titled as “Demographic and Health Surveys” that were not included in the online listing of DHS surveys [12] were classified as OCS; likewise, non-UNICEF-supported MICS were classified as OCS. We retained a MICS classification for a 2000 survey conducted in Bangladesh in spite of its absence in the online listing of MICS surveys based on mention of the survey as a MICS in subsequent Bangladesh MICS reports. DHS, MICS, and OCS are considered non-EPI surveys throughout the paper.

For identified EPI–non-EPI survey dyads, that is, instances where an EPI survey was conducted within 12 months of a non-EPI survey based on field work dates (month and year), we also abstracted unweighted sample size and published upper and lower bounds of the ± 2-standard error confidence intervals (±2SECI), where available, for DTP3 and MCV1 coverage from survey reports. Because confidence intervals are not consistently published for OCS, this additional data abstraction was restricted to dyads where the non-EPI survey was a DHS or MICS. For all EPI–non-EPI survey dyads, we examined the magnitude of estimated coverage differences between EPI and non-EPI surveys. And, for dyads with available upper and lower confidence bounds for the non-EPI survey coverage, we examined the frequency of dyads where EPI survey coverage fell within or outside the uncertainty interval.
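The dyad definition and the interval comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure used for the analysis (which was carried out in MS Excel and Stata): the `Survey` record, the function names and the rule that overlapping field work counts as a gap of zero months are all hypothetical, and the example records are invented.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

YearMonth = Tuple[int, int]  # (year, month), e.g. (2012, 3) for March 2012


@dataclass(frozen=True)
class Survey:
    country: str
    kind: str                      # "EPI", "DHS", "MICS" or "OCS"
    start: YearMonth               # field work start (month and year only)
    end: YearMonth                 # field work end
    dtp3: float                    # DTP3 coverage, % (documented evidence or recall)
    ci: Optional[Tuple[float, float]] = None  # published +/-2SE bounds, if any


def month_index(ym: YearMonth) -> int:
    """Map (year, month) to a running month count so gaps are simple differences."""
    year, month = ym
    return year * 12 + (month - 1)


def gap_in_months(a: Survey, b: Survey) -> int:
    """Months between two field work periods; 0 when the periods overlap (assumed rule)."""
    a_start, a_end = month_index(a.start), month_index(a.end)
    b_start, b_end = month_index(b.start), month_index(b.end)
    if a_end < b_start:
        return b_start - a_end
    if b_end < a_start:
        return a_start - b_end
    return 0


def find_dyads(surveys: List[Survey], window: int = 12) -> List[Tuple[Survey, Survey]]:
    """Pair each EPI survey with every same-country non-EPI survey within the window."""
    epi = [s for s in surveys if s.kind == "EPI"]
    non_epi = [s for s in surveys if s.kind != "EPI"]
    return [
        (e, n)
        for e in epi
        for n in non_epi
        if e.country == n.country and gap_in_months(e, n) <= window
    ]


def position_vs_interval(epi_estimate: float, bounds: Tuple[float, float]) -> str:
    """Locate an EPI point estimate relative to the non-EPI +/-2SE interval."""
    lower, upper = bounds
    if epi_estimate < lower:
        return "below"
    if epi_estimate > upper:
        return "above"
    return "within"


# Hypothetical records for an invented "Country A"; not data from the paper.
surveys = [
    Survey("Country A", "EPI", (2012, 3), (2012, 5), dtp3=88.0),
    Survey("Country A", "DHS", (2013, 1), (2013, 6), dtp3=74.0, ci=(70.5, 77.5)),
    Survey("Country A", "MICS", (2016, 2), (2016, 7), dtp3=80.0, ci=(77.0, 83.0)),
]

for epi, non_epi in find_dyads(surveys):
    if non_epi.ci is not None:
        print(epi.country, non_epi.kind, position_vs_interval(epi.dtp3, non_epi.ci))
```

Because one EPI survey is paired with every qualifying non-EPI survey, a single survey can belong to more than one dyad, which is the situation reported for India, Zimbabwe and Bangladesh in the Results.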

We merged in 2019 information on WHO regional classification [20], World Bank income group classification [21] and eligibility for Phase 2 financial support from Gavi, the Vaccine Alliance (i.e., Gavi-73) [22]. All data management and descriptive analysis were conducted using MS Excel (Microsoft Corp, Redmond, Washington) and Stata statistical software (Stata Corp, College Station, Texas).

3. Results

From the WHO and UNICEF survey database, we identified 687 surveys with data collection start date years of 2000 to 2019. Of these, 646 surveys captured vaccination history; produced estimates of national level vaccination coverage for DTP1, DTP3, or MCV1; and yielded a final report. EPI, DHS and MICS surveys were the most common survey types, in that order (Fig. 1). The frequency and distribution of surveys by type and country characteristics are shown in Table 1. The 646 surveys were conducted by 140 countries, most of which were located in the WHO Africa Region, and the total number of surveys was greatest among countries classified as middle-income countries. All Gavi-73 countries, except Georgia, conducted at least one survey that assessed vaccination coverage during the period; the median and mean number of surveys among Gavi-73 countries was 6, twice that conducted in non-Gavi-73 countries. (N.B. Georgia conducted a MICS in 2005, but the survey did not report vaccination coverage estimates due to limited presence of documented evidence. A MICS was also conducted in 2018 but the immunization module was not included.) Across the 140 countries conducting at least one survey, the median number of surveys conducted per country was four. Bangladesh conducted 19 surveys, the most of any country. A visual timeline of surveys for each of these countries is shown in Annex 1.

Fig. 1.

Frequency of surveys reporting vaccination coverage estimates during 2000–2019 by survey type.

Table 1.

Summary of surveys reporting vaccination coverage estimates during 2000–2019 by survey type and country characteristics.

| | Number of countries conducting surveys | Frequency of surveys: MICS | Frequency of surveys: DHS | Frequency of surveys: OCS | Frequency of surveys: EPI | Frequency of surveys: All Surveys |
|---|---|---|---|---|---|---|
| | n = 140 | n = 172 | n = 193 | n = 86 | n = 195 | n = 646 |
| Survey field work year | | | | | | |
| 2000–2004 | 99 | 47 | 42 | 24 | 38 | 151 |
| 2005–2009 | 116 | 45 | 55 | 32 | 50 | 182 |
| 2010–2014 | 105 | 53 | 59 | 21 | 65 | 198 |
| 2015–2019 | 86 | 27 | 37 | 9 | 42 | 115 |
| WHO Region | | | | | | |
| Africa (n = 47) | 45 | 72 | 100 | 10 | 107 | 289 |
| Americas (n = 35) | 23 | 22 | 30 | 17 | 19 | 88 |
| Eastern Mediterranean (n = 21) | 16 | 24 | 15 | 23 | 6 | 68 |
| Europe (n = 53) | 26 | 25 | 14 | 4 | 20 | 63 |
| South-East Asia (n = 11) | 11 | 15 | 20 | 17 | 28 | 80 |
| Western Pacific (n = 27) | 19 | 14 | 14 | 15 | 15 | 58 |
| World Bank income group | | | | | | |
| Low-Income (n = 31) | 31 | 50 | 67 | 16 | 62 | 195 |
| Middle-Income (n = 104) | 94 | 122 | 126 | 68 | 97 | 413 |
| Lower-Middle (n = 46) | 46 | 72 | 80 | 40 | 79 | 271 |
| Upper-Middle (n = 58) | 48 | 50 | 46 | 28 | 18 | 142 |
| High-Income (n = 57) | 15 | 0 | 0 | 2 | 36 | 38 |
| Gavi-73 (n = 73) | 72 | 115 | 142 | 49 | 129 | 435 |

MICS, Multiple Indicator Cluster Survey; DHS, Demographic and Health Survey; OCS, Other coverage survey; EPI, Expanded Programme on Immunization survey.

Among the 646 surveys conducted during 2000–2019, we identified 66 EPI–non-EPI survey dyads conducted by 38 countries—129 surveys for which an EPI survey occurred within one year of a DHS, MICS or OCS based on field work timelines. (N.B. Both India and Zimbabwe had an EPI survey that was part of two EPI–non-EPI dyads, and Bangladesh had a non-EPI survey that was part of two EPI–non-EPI dyads.) A table detailing the survey dyads is provided in Annex 2. Table 2 summarizes observations for these dyads: 27 of the 66 dyads occurred during 2010–2014, 18 during 2005–2009, 12 during 2000–2004 and 9 during 2015–2019 (there were no dyads with field work dates during 2018 or 2019); 48 dyads occurred in Africa; and 60 of the 66 dyads occurred among Gavi-73 countries. The EPI survey preceded the non-EPI survey in 27 dyads and followed the non-EPI survey in 20 dyads. Survey field work overlapped in time in 12 dyads, and exact field work dates were unknown for at least one survey in seven dyads.

Table 2.

Frequency of countries with dyads defined by an EPI coverage evaluation survey conducted within one year of a non-EPI survey by country and survey timing characteristics.

| Survey dyads where EPI survey field work within 1-year of non-EPI survey field work | Number of countries | Number of dyads |
|---|---|---|
| | n = 38 | n = 66 |
| Survey field work of EPI survey | | |
| 2000–2004 | 10* | 12 |
| 2005–2009 | 16 | 18 |
| 2010–2014 | 24 | 27 |
| 2015–2019 | 9 | 9 |
| WHO Region | | |
| Africa | 27 | 48 |
| Americas | 1 | 1 |
| Eastern Mediterranean | 1 | 1 |
| Europe | 1 | 1 |
| South-East Asia | 6 | 13 |
| Western Pacific | 2 | 2 |
| World Bank Income | | |
| Low-Income | 14 | 26 |
| Middle-Income | 24 | 40 |
| High-Income | 0 | 0 |
| Gavi-73 | 33 | 60 |
| Timing of EPI vs non-EPI survey | | |
| Before | 21* | 27 |
| After | 16 | 20 |
| Same time | 10 | 12 |
| Unknown | 7 | 7 |

non-EPI survey = DHS/MICS/OCS.

*Because some countries were identified with more than one EPI–non-EPI dyad, a country may fall into more than one time period.

A summary of estimated percentage of children with documented evidence and percent coverage for DTP1, DTP3 and MCV1 observed in the 66 dyads is shown in Table 3. Some surveys did not include all measures of interest in the survey reports; for example, nearly one-third of EPI surveys did not report estimated vaccination coverage by documented evidence only. A quick review of Table 3 highlights that measures of central tendency (mean and median) were greater among EPI than non-EPI surveys, suggesting that EPI surveys tend to find higher levels of programme performance. The estimated percentage of children with documented evidence was more than 5 percentage points greater among the EPI survey than its neighbouring non-EPI survey in 49 of the 59 dyads where both surveys had reported data. In 35 dyads, the EPI-estimated percentage with documented evidence was more than 10 percentage points greater and in 7 dyads it was more than 20 points higher.
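The banding of EPI versus non-EPI differences used in this paragraph can be written out explicitly. The sketch below is one plausible reading rather than the original analysis code: the function names are hypothetical, the example pairs are invented, and placing ties (a difference of exactly zero) in the "≤5 points" band is an assumption that the survey reports do not settle.

```python
from collections import Counter
from typing import Iterable, Tuple


def difference_band(epi: float, non_epi: float) -> str:
    """Assign one dyad to a mutually exclusive band by EPI minus non-EPI (% points)."""
    diff = epi - non_epi
    if diff < 0:
        return "EPI < non-EPI"
    if diff <= 5:
        return "EPI higher by <=5 pts"   # ties land here (assumption)
    return "EPI higher by >5 pts"


def tally_bands(pairs: Iterable[Tuple[float, float]]) -> Counter:
    """Count dyads per band; the >10 count is a subset of the >5 band."""
    pairs = list(pairs)
    counts = Counter(difference_band(e, n) for e, n in pairs)
    counts["EPI higher by >10 pts"] = sum(1 for e, n in pairs if e - n > 10)
    return counts


# Invented (EPI %, non-EPI %) pairs, for illustration only.
example_pairs = [(80, 85), (70, 68), (90, 82), (75, 60)]
print(tally_bands(example_pairs))

# Consistency check on the documented-evidence counts reported in the text
# and in Table 3: the three exclusive bands (7 lower, 3 higher by <=5,
# 49 higher by >5) add up to the 59 dyads with data from both surveys.
assert 7 + 3 + 49 == 59
```

Keeping the ">10 points" count separate from the exclusive bands mirrors how the text reports it, as a subset of the dyads where the EPI value is more than 5 points higher.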

Table 3.

Summary statistics for the percentage of children with documented evidence and percent coverage for DTP1, DTP3 and MCV1 among 66 survey dyads comprised by an EPI coverage survey and a non-EPI survey separated by no more than 12 months during 2000–2019.

| | EPI: # surveys | EPI: min/max | EPI: mean (SD) | EPI: median (IQR) | Non-EPI: # surveys | Non-EPI: min/max | Non-EPI: mean (SD) | Non-EPI: median (IQR) | Dyads with data* | # dyads with EPI value < non-EPI value | # dyads with EPI > non-EPI by ≤5%-pts | # dyads with EPI > non-EPI by >5%-pts | # dyads with EPI > non-EPI by >10%-pts |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Documented evidence available to be seen, % | 62 | 11/100 | 72 (20.8) | 77 (58,87) | 63 | 12/100 | 63 (20.9) | 68 (49,74) | 59 | 7 | 3 | 49 | 35 |
| DTP1 by D, % | 45 | 10/99 | 69 (23.5) | 77 (54,84) | 59 | 17/99 | 63 (19.5) | 67 (52,75) | 41 | 9 | 3 | 29 | 19 |
| DTP3 by D, % | 46 | 9/98 | 63 (24.3) | 68 (47,81) | 59 | 10/99 | 56 (20.5) | 58 (47,70) | 42 | 10 | 1 | 31 | 18 |
| MCV1 by D, % | 46 | 9/99 | 60 (23.9) | 64 (42,78) | 59 | 14/99 | 53 (20.2) | 56 (43,66) | 42 | 7 | 7 | 28 | 19 |
| DTP1 by D + R, % | 60 | 43/100 | 89 (10.2) | 92 (85,97) | 66 | 43/100 | 85 (15.0) | 90 (82,95) | 60 | 11 | 28 | 21 | 12 |
| DTP3 by D + R, % | 63 | 25/100 | 81 (14.5) | 82 (73,93) | 66 | 21/100 | 71 (19.4) | 73 (64,87) | 63 | 8 | 12 | 43 | 28 |
| MCV1 by D + R, % | 63 | 25/100 | 78 (14.0) | 78 (71,90) | 66 | 27/100 | 73 (16.3) | 76 (67,85) | 63 | 18 | 15 | 30 | 16 |

D, documented evidence of vaccination history.

D + R, combination of documented evidence of vaccination or respondent recall of child’s vaccination history in absence of documented evidence.

EPI, Expanded Programme on Immunization Survey.

non-EPI survey = DHS/MICS/OCS.

DTP1, first dose of diphtheria-tetanus toxoid-pertussis containing vaccine.

DTP3, third dose of diphtheria-tetanus toxoid-pertussis containing vaccine.

MCV1, first dose of measles containing vaccine.

min, minimum.

max, maximum.

SD, standard deviation.

IQR, inter-quartile range.

*Number of dyads where both EPI and non-EPI surveys reported information for the particular measure.

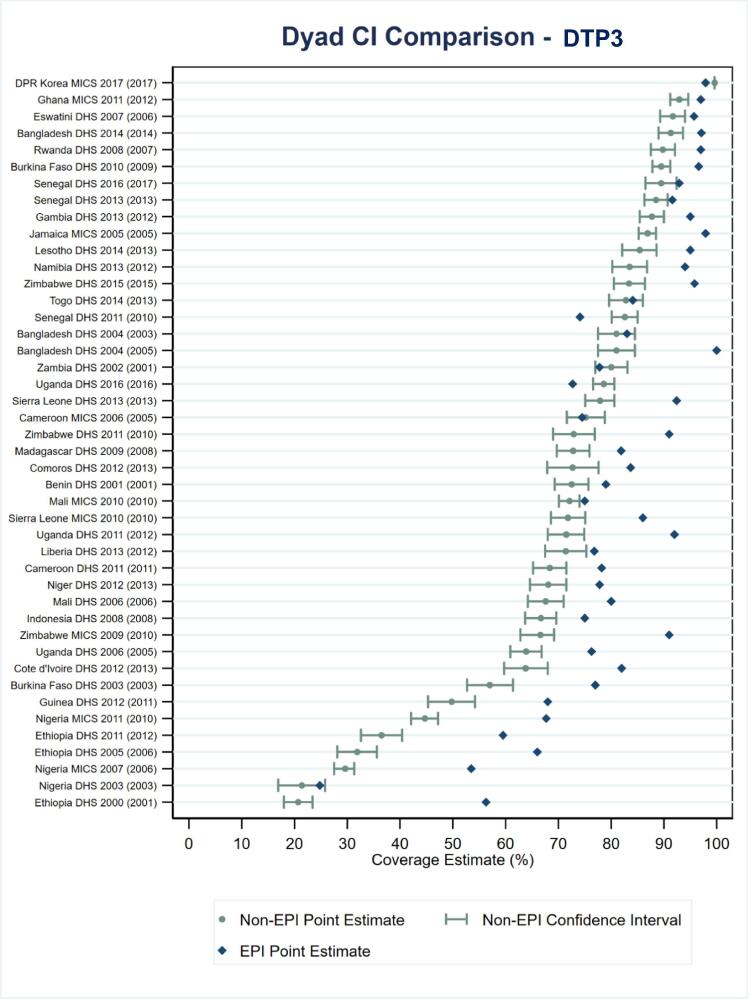

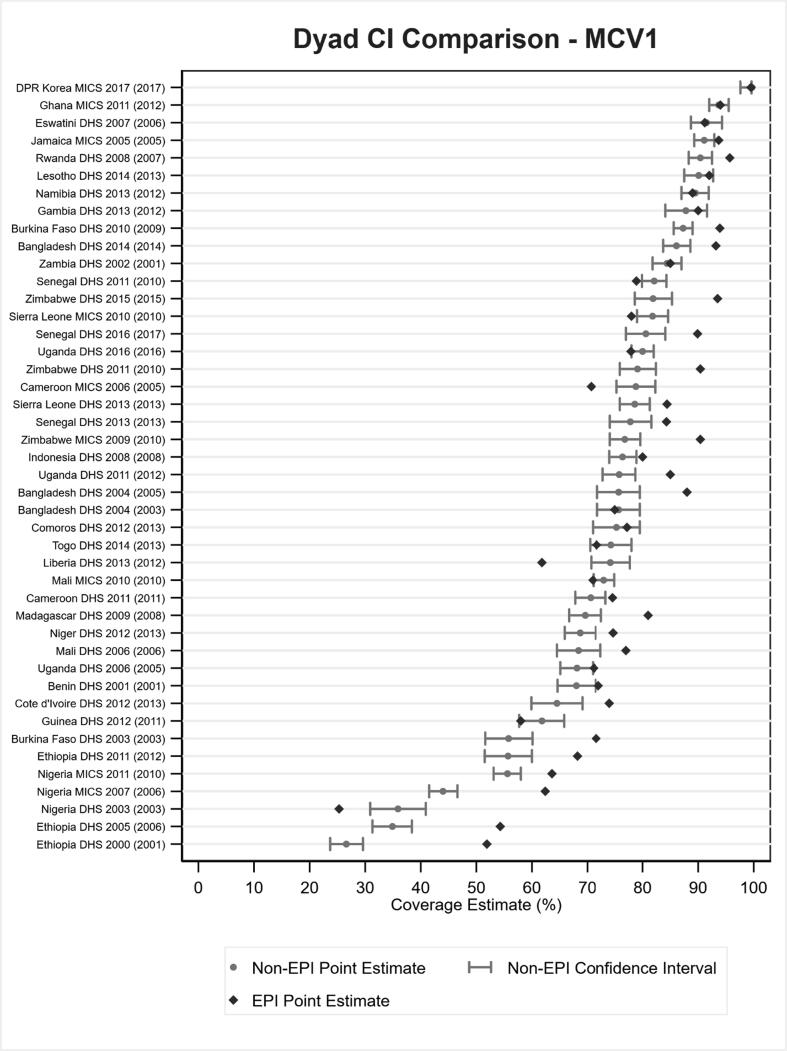

EPI-estimated coverage frequently exceeded corresponding coverage in non-EPI surveys for DTP1, DTP3 and MCV1 coverage based on documented evidence or respondent recall. In fact, EPI-estimated coverage exceeded the upper bound of the ±2SECI more often than it fell within or below the interval, as shown in Fig. 2 and Fig. 3 for DTP3 and MCV1, respectively. EPI survey estimated coverage for DTP3 was more than 10 percentage points beyond the upper bound in 14 of the 44 survey dyads for which ±2SECI values were available and was more than 10 percentage points greater than estimated coverage in the non-EPI survey in 28, nearly half, of the 63 dyads with reported data. The EPI-estimated DTP3 coverage was more than 10 percentage points greater than non-EPI survey estimates in 13 of the 19 (68%) EPI surveys that occurred after a non-EPI survey compared to 9 of the 27 (33%) EPI surveys that occurred before a non-EPI survey (data not shown; in 6 of the 17 dyads where the surveys were conducted at the same time or had unknown field work dates, EPI-estimated DTP3 coverage was more than 10 percentage points greater than that estimated by the corresponding non-EPI survey).

Fig. 2.

Estimated DTP3 coverage by documented evidence or recall for 44 EPI–non-EPI survey dyads alongside estimated ± 2-standard error confidence intervals for the non-EPI surveys. Note: Survey dyads are ordered by non-EPI estimated coverage level. The year in parentheses at left is the year of the EPI survey.

Fig. 3.

Estimated MCV1 coverage by documented evidence or recall for 44 EPI–non-EPI survey dyads alongside estimated ± 2-standard error confidence intervals for the non-EPI surveys. Note: Survey dyads are ordered by non-EPI estimated coverage level. The year in parentheses at left is the year of the EPI survey.

Relationships between the availability of documented evidence and estimated coverage levels between the EPI–non-EPI survey dyads (Fig. 4 and Fig. 5) were also noted. Among dyads with a small (or negative) difference in available documented evidence between the EPI and non-EPI survey, the difference in percent coverage for DTP3 between EPI and non-EPI survey was also relatively small. In fact, most EPI-estimated coverage levels were less than 10 percentage points from the ±2SECI. In contrast, dyads with large differences in available documented evidence had larger differences in coverage estimates. Similar results were observed for MCV1.

Fig. 4.

Estimated DTP3 coverage by documented evidence or recall for 44 EPI–non-EPI survey dyads alongside estimated ± 2-standard error confidence intervals for the non-EPI surveys (i.e., data from Fig. 2) stratified by degree to which the EPI survey percentage with documented evidence exceeds that of the non-EPI survey. Note: Survey dyads are ordered by non-EPI estimated coverage level. The year in parentheses at left is the year of the EPI survey.

Fig. 5.

Estimated MCV1 coverage by documented evidence or recall for 44 EPI–non-EPI survey dyads alongside estimated ± 2-standard error confidence intervals for the non-EPI surveys (i.e., data from Fig. 3) stratified by degree to which the EPI survey percentage with documented evidence exceeds that of the non-EPI survey. Note: Survey dyads are ordered by non-EPI estimated coverage level. The year in parentheses at left is the year of the EPI survey.

4. Discussion

A total of 646 surveys measuring vaccination coverage were conducted by 140 countries during 2000–2019. One in five of these surveys was identified as part of an EPI–non-EPI survey dyad where the field work for the surveys was conducted within one year of each other. This can occur for several reasons. We recognize that non-EPI surveys, such as DHS and MICS, almost always involve government units outside the influence of the national immunization programme (e.g., Ministry of Planning, Ministry of Education). It is possible that even when the Ministry of Health is engaged with non-EPI survey planning, the immunization programme is not. Anecdotal accounts suggest that survey questionnaires have been developed and field team trainings have been conducted without valuable immunization programme input, which may help explain issues sometimes seen with the vaccine schedule used, the miscalculation or, in some instances, the complete absence of standard vaccination coverage indicators in multipurpose health surveys. For a variety of reasons, collaboration between planners of non-EPI surveys and the national immunization programme may be insufficient. We are unaware of any research to quantify and more broadly understand immunization programme engagement in non-EPI surveys. The absence of immunization programme input can be problematic given the increasing complexity of national immunization schedules with more vaccines and doses recommended today than at any other time. Moreover, there are increasing demands on survey field staff to capture accurate information from imperfect data tools: standard HBRs are not always available or properly completed and may be damaged, making them difficult to read [23], [24], [25], and FBRs, when included in a survey, may also present challenges given that children may not always visit the same facility and facility registers may lack organization [26].

WHO and UNICEF have taken steps since 2015 to improve the collaboration between survey planners, national programme staff, and WHO and UNICEF immunization focal persons. WHO also produced a white paper [27] to provide survey planners, who may or may not be familiar with immunization, with important information to help improve survey planning, data collection, data analysis and data reporting. It remains unclear whether these steps are resulting in meaningful change towards improving coordination of survey planning.

We also recognize why immunization programme leadership might commission their own survey even when they are aware of a non-EPI survey. Non-EPI surveys usually only produce vaccination coverage estimates at national, or at most, at provincial/state levels, while programmes may desire sub-national level coverage estimates. EPI surveys producing subnational level estimates were common before the release of the current WHO immunization survey guidance calling for more methodological rigour. Also, lengthy intervals often exist between the completion of non-EPI survey field work and release of a final report. Though these intervals appear to be decreasing for DHS and MICS, in some instances, the release of results has occurred many months or even a year or more after the completion of field work (e.g., South Africa DHS field work was completed in November 2016 and the report date is January 2019). In contrast, although it is recommended that an immunization programme allow at least 12 months to plan and implement an EPI survey [15], many EPI surveys complete planning, field work, analysis and report writing in less than one year. This was particularly true prior to the revised WHO survey manual. At a minimum, when EPI surveys are commissioned by an immunization programme, the programme controls the survey timeline and can use results as appropriate prior to publishing a final report. Programme access to results prior to final report publication is not always possible with a DHS or MICS. While it is difficult to critique without contextual knowledge, we are nonetheless concerned when surveys occur close in time given the resources required to conduct these surveys.

Beginning in 2015, Gavi, the Vaccine Alliance, began requiring countries to conduct periodic independent assessments of vaccination coverage through nationally representative, population-based surveys (at least one survey every five years). In many countries, the survey requirement is satisfied through a periodic DHS or MICS. For countries that do not periodically conduct DHS or MICS (e.g., Eritrea, whose last DHS was conducted in 2002 and which has never conducted a MICS), it is often necessary to conduct an EPI coverage survey. Beginning in 2017 [28], countries receiving Gavi support for vaccination campaigns were required to conduct a high-quality, nationally representative survey using probability sampling to assess the coverage of each Gavi-supported campaign. In some instances, countries have taken the opportunity to jointly assess the campaign performance and routine immunization service delivery. Although inferences are limited without direct information on what motivated a country to conduct an EPI coverage survey, it seems reasonable to conclude that Gavi-related survey requirements, which are intended to catalyse greater attention towards and use of data to inform programme decisions, have been an important force driving the number of EPI surveys conducted since 2000 at the inception of the Alliance.

On average, Gavi-73 countries conducted 6 surveys during 2000–2019, twice the number of surveys conducted by non-Gavi-73 countries. During 2000–2004, at the inception of the Alliance, at least 38 EPI surveys were conducted in all countries. (N.B. This is a minimum estimate since there may have been some surveys conducted for which reports were not shared with WHO/UNICEF.) During 2005–2009 and 2010–2014, the number of surveys increased to 50 and 65, respectively. Surveys for 2015–2019 totalled 42, lower than the total for the prior 5-year period but a value which may not reflect surveys that were initiated in 2018 or 2019 and not completed by the time of this writing. Furthermore, nine in ten of the EPI–non-EPI survey dyads occurred among Gavi-73 countries. It is important, however, for EPI surveys to be appropriately spaced vis-à-vis other survey platforms (or combined as was done by Nigeria [29]), whether those are DHS, MICS, World Bank sponsored surveys or nutrition surveys that include vaccination coverage measurement. Ultimately, however, the benefits of periodic coverage surveys will depend on their quality and actual use of their results to inform planning decisions, which was often not the case in Gavi-eligible countries [17].

Patterns suggest that EPI coverage surveys tend to report higher levels of performance than non-EPI surveys, which raises some interesting questions. For reasons noted above, one might expect an EPI survey to find higher levels of vaccination coverage than other surveys because of attention to immunization in questionnaire design and field team training, particularly related to an interviewer’s skill in probing for documented evidence or vaccination history in the absence of documented evidence. At the same time, concerns have been raised about programmes monitoring themselves given the importance placed on achieving performance thresholds [30]. Performance-based monitoring schemes may create perverse incentives to report higher levels of coverage, an objective that can be achieved by sampling respondents in areas where access and utilization of services are known to be higher (e.g., close to a health post) [31]. Unfortunately, it is often difficult to assess issues of sampling bias in EPI survey reports; this was particularly true before the release of the revised WHO Survey Manual [15] (released as a draft in 2015), when quota sampling was common and documentation of skipped households was uncommon in EPI surveys. Again, WHO has attempted to improve the quality of survey reporting through training activities and reference materials [31], [32].

While the comparison of EPI point estimates with non-EPI confidence intervals is provocative, it is also somewhat unsatisfying. Based on our experience, immunization programme staff are making similar comparisons of coverage estimates without regard to measures of uncertainty. Our comparisons are unsatisfying in part because we cannot show the matching EPI confidence interval: report summaries do not provide the EPI design effects. (Furthermore, we do not know whether the EPI surveys employed strict probability sampling, which is required for a confidence interval to be meaningful, and information to assess survey design and implementation quality is lacking.) But it seems safe to suggest that when the point estimate from one survey falls numerous confidence interval widths above the (meaningful) upper confidence bound of the other survey, there are substantial differences in survey bias at play. The unsatisfying aspects of the comparison bring into focus the need for survey report authors to describe clearly what steps were taken to actively minimize bias and what aspects of the work may still contain some bias.
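To illustrate why an unknown design effect prevents reconstruction of an EPI interval, consider a common textbook approximation for the ± 2-standard error interval around an estimated proportion from a complex-sample survey. The formula is offered here as a general sketch, not as the method used in any of the survey reports, and the numerical values are hypothetical.

```latex
% \hat{p}: estimated coverage (as a proportion); n: sample size;
% DEFF: design effect (ratio of the actual sampling variance to the
% variance under simple random sampling with the same n).
\[
  \hat{p} \;\pm\; 2\sqrt{\mathrm{DEFF}\cdot\frac{\hat{p}\,(1-\hat{p})}{n}}
\]
% Hypothetical example with \hat{p} = 0.80 and n = 900:
%   DEFF = 1:  2\sqrt{0.16/900}          \approx 0.027  (about +/- 2.7 percentage points)
%   DEFF = 4:  2\sqrt{4 \cdot 0.16/900}  \approx 0.053  (about +/- 5.3 percentage points)
```

With the same point estimate and sample size, the interval half-width doubles when the design effect goes from 1 to 4, so a report that gives only the estimate and the sample size does not determine the interval.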

As we highlight our findings, we note that some EPI–non-EPI survey dyads may have gone unidentified and are not reflected here. For example, there are some surveys for which field work was completed as of this writing, but final reports were not available (e.g., the 2019 Bangladesh EPI coverage evaluation survey). We also acknowledge that the number of survey dyads identified here is a function of the overlapping window; that is, more dyads would have been identified if the window had been expanded from one to two years. We also lacked contextual information on EPI coverage survey funding that may be related to survey timing. Although the WHO cluster coverage survey reference guide [15] recommends that survey reports acknowledge the funding source, this practice is not yet widely adopted. Moreover, the comparison of EPI and non-EPI surveys would be improved if microdata were available for both (currently microdata are not centrally available for EPI surveys) and standardized measures of coverage and study populations could be assured. Given the variable quality of the methods and analytic descriptions in survey reports, it is possible that vaccination coverage measures differed across surveys, for example, in the manner by which observations with missing data or “don’t know” answers were coded in the analysis.
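
The dependence of the dyad count on the overlapping window can be sketched as follows. This is an illustrative example only: the survey records, field names, and the rule of comparing data collection start dates in days are assumptions made for the sketch, not a description of the procedure used in this study.

```python
from datetime import date


def find_dyads(surveys, window_days=365):
    """Pair each EPI survey with each non-EPI survey from the same country
    whose start dates differ by at most window_days."""
    epi = [s for s in surveys if s["type"] == "EPI"]
    non_epi = [s for s in surveys if s["type"] != "EPI"]
    dyads = []
    for e in epi:
        for n in non_epi:
            same_country = e["country"] == n["country"]
            close_in_time = abs((e["start"] - n["start"]).days) <= window_days
            if same_country and close_in_time:
                dyads.append((e["name"], n["name"]))
    return dyads


# Hypothetical surveys in a hypothetical country "A".
surveys = [
    {"name": "EPI 2015", "country": "A", "type": "EPI", "start": date(2015, 3, 1)},
    {"name": "DHS 2015", "country": "A", "type": "DHS", "start": date(2015, 9, 1)},
    {"name": "MICS 2016", "country": "A", "type": "MICS", "start": date(2016, 10, 1)},
]

one_year = find_dyads(surveys, window_days=365)
two_years = find_dyads(surveys, window_days=730)
print(len(one_year), "dyad(s) with a one-year window")
print(len(two_years), "dyad(s) with a two-year window")
```

In this toy example, widening the window from one to two years adds the EPI–MICS pair, which mirrors the limitation noted above.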

We encourage greater collaboration between immunization programme staff and experts in population surveys, importantly leveraging the qualified expertise around nationally representative surveys that may exist within a national statistics office or calling on external technical assistance when necessary. Certainly, many (though not all) of the methodological recommendations for surveys in the WHO Vaccination Coverage Survey Reference Manual [15] may increase the cost of a coverage survey, and we encourage donors and partners to critically review and consider making necessary funds available.

Although we encourage improved collaboration between EPI and non-EPI survey planning and implementation machinery in countries in order to avoid EPI–non-EPI survey dyads, these situations are likely to continue to occur. As a result, stakeholders will continue to be burdened with digesting the results, including those from dyads reporting discordant coverage. With an awareness that persons who prepare survey reports are unlikely to acknowledge the other surveys [18], we believe it is important for the EPI programme or its partners to take responsibility for assuring that comparable sampling frames are used and for conducting a meta-analysis following the release of reports from surveys conducted in close temporal proximity. This meta-analysis should look for differences in stratum-level weights [18] and re-post-stratify if warranted. The analysis should look for differences in the availability of documented evidence of vaccination history in HBRs or FBRs as well as differences in coverage among those with and without documented evidence, again exploring post-stratification adjustments to see how the results change. The analysis might scrutinize the data collected across strata within a given survey for ‘poolability’ before combining data at the microdata level [29]. Analysis should also critically review survey protocols and document identified sources of differential bias. Although such reviews take time to conduct, they provide necessary information for stakeholders to appropriately review and draw inferences when comparing survey results. Ultimately, better documentation of vaccine doses administered and improved immunization information systems may facilitate coverage assessment using both administrative methods and surveys [31].
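
To illustrate why differences in stratum-level weights matter, the sketch below recomputes national coverage for two surveys after applying a common set of stratum weights. It is a simplified example under stated assumptions: the strata, coverage values, and weights are hypothetical, the function name is ours, and the calculation is a plain weighted mean rather than the method of any cited study.

```python
def weighted_coverage(stratum_coverage, stratum_weights):
    """National coverage as the weighted mean of stratum-level coverage.

    Weights are normalized, so they need not sum to one.
    """
    total_weight = sum(stratum_weights[s] for s in stratum_coverage)
    weighted_sum = sum(stratum_coverage[s] * stratum_weights[s] for s in stratum_coverage)
    return weighted_sum / total_weight


# Hypothetical stratum-level coverage and implied stratum weights per survey.
coverage_a = {"north": 0.50, "south": 0.90}
weights_a = {"north": 0.30, "south": 0.70}
coverage_b = {"north": 0.52, "south": 0.88}
weights_b = {"north": 0.50, "south": 0.50}

# Assumed common population shares used to re-post-stratify both surveys.
common_weights = {"north": 0.40, "south": 0.60}

original_gap = weighted_coverage(coverage_a, weights_a) - weighted_coverage(coverage_b, weights_b)
adjusted_gap = weighted_coverage(coverage_a, common_weights) - weighted_coverage(coverage_b, common_weights)
print(f"gap with each survey's own weights: {original_gap:.3f}")
print(f"gap with common weights: {adjusted_gap:.3f}")
```

Here the stratum-level results are nearly identical, and most of the national-level difference comes from the weights. This is the kind of pattern such a review would look for.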

In summary, well-planned and implemented nationally representative, population-based vaccination coverage surveys are resource-intensive efforts. Few studies have quantified and documented the units of effort and logistical requirements involved. Although the 129 surveys accounted for by the 66 EPI–non-EPI survey dyads conducted within one year of each other reflect only a fraction of the 646 total identified surveys reporting vaccination coverage data worldwide since 2000, we believe that each of these occurrences represents an opportunity to improve overall planning of vaccination coverage measurement in population-based household surveys. We encourage national immunization programme leadership and their partners to take an active role, as much as possible, in non-EPI surveys, both to avoid conducting EPI coverage surveys without regard for non-EPI surveys and to ensure that the complexities of the immunization schedule and technical nuances of HBRs are well accounted for in questionnaire adaptation and field staff training. With improved coordination, opportunities may exist to leverage non-EPI surveys to meet programmatic and monitoring needs.

5. Disclaimer

The thoughts and opinions expressed here are those of the authors alone and do not necessarily reflect those of their respective institutions. M. Carolina Danovaro[-Holliday] works for the World Health Organization. The author alone is responsible for the views expressed in this publication and they do not necessarily represent the decisions, policy or views of the World Health Organization.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors thank Stacy Young for editorial guidance and review provided in the finalization of this manuscript. The authors are grateful to Mary Kay Trimner and Caitlin Clary for data abstraction from DHS and MICS reports and to Becca Robinson for abstraction and production of Figs. 2–5.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.jvacx.2021.100085.

References

- 1.United Nations. Sustainable Development Goals. Available at: https://sdgs.un.org/goals [accessed 2020 August 15].

- 2.Gavi, the Vaccine Alliance. Available at: https://www.gavi.org [accessed 2020 August 15].

- 3.Millennium Challenge Corporation. Available at: https://www.mcc.gov/ [accessed 2020 August 15].

- 4.Roalkvam S., McNeill D., Blume S., editors. Protecting the World’s Children: Immunization Policies and Practices. Oxford University Press; Oxford: 2013.

- 5.World Health Organization. Decade of Vaccines --- Global Vaccine Action Plan 2011–2020. Available at: https://www.who.int/immunization/global_vaccine_action_plan/DoV_GVAP_2012_2020 [accessed 2020 August 15].

- 6.World Health Organization. Immunization Agenda 2030: A Global Strategy to Leave No One Behind. Available at: https://www.who.int/immunization/immunization_agenda_2030 [accessed 2020 August 15].

- 7.Dietz V., Venczel L., Izurieta H., Stroh G., Zell E.R. Assessing and monitoring vaccination coverage levels: lessons from the Americas. Rev Panam Salud Publica. 2004;16:432–442. doi: 10.1590/s1020-49892004001200013.

- 8.World Health Organization. Meeting of the Strategic Advisory Group of Experts on Immunization, November 2011 – conclusions and recommendations. Wkly Epidemiol Rec. 2012;87(1):1–16. Available at: https://www.who.int/wer/2012/wer8701.pdf

- 9.Cutts F.T., Claquin P., Danovaro-Holliday M.C., Rhoda D.A. Monitoring vaccination coverage: defining the role of surveys. Vaccine. 2016;34(35):4103–4109. doi: 10.1016/j.vaccine.2016.06.053.

- 10.Cutts F.T., Izurieta H.S., Rhoda D.A. Measuring coverage in MNCH: design, implementation, and interpretation challenges associated with tracking vaccination coverage using household surveys. PLoS Med. 2013;10(5) doi: 10.1371/journal.pmed.1001404.

- 11.Eisele T.P., Rhoda D.A., Cutts F.T., Keating J., Ren R., Barros A.J.D. Measuring coverage in MNCH: total survey error and the interpretation of intervention coverage estimates from household surveys. PLoS Med. 2013;10(5) doi: 10.1371/journal.pmed.1001386.

- 12.DHS Program. Demographic and Health Surveys (DHS). Available at: https://dhsprogram.com [accessed 2020 August 15].

- 13.UNICEF. Multiple Indicator Cluster Survey (MICS). Available at: http://mics.unicef.org [accessed 2020 August 15].

- 14.Danovaro-Holliday MC, Dansereau E, Rhoda DA, Brown DW, Cutts FT, Gacic-Dobo M. Collecting and using reliable vaccination coverage survey estimates: summary and recommendations from the “Meeting to Share Lessons Learnt From the Roll-Out of the Updated WHO Vaccination Coverage Cluster Survey Reference Manual and to Set an Operational Research Agenda Around Vaccination Coverage Surveys”, Geneva, 18-21 April 2017. Vaccine. 2018;36(34):5150–9. https://doi.org/10.1016/j.vaccine.2018.07.019.

- 15.World Health Organization. World Health Organization Vaccination Coverage Cluster Surveys: Reference Manual. Geneva, Switzerland. 2018. Available at https://www.who.int/immunization/documents/who_ivb_18.09 [accessed 2020 August 15].

- 16.Wallace AS, Ryman TK, Dietz V. Overview of global, regional, and national routine vaccination coverage trends and growth patterns from 1980 to 2009: implications for vaccine-preventable disease eradication and elimination initiatives. J Infect Dis 2014;210 Suppl 1(01):S514-22. https://doi.org/10.1093/infdis/jiu108.

- 17.Bosch-Capblanch X., Kelly M., Garner P. Do existing research summaries on health systems match immunisation managers' needs in middle- and low-income countries? Analysis of GAVI health systems strengthening support. BMC Public Health. 2011;11:449. doi: 10.1186/1471-2458-11-449.

- 18.Dong T.Q., Rhoda D.A., Mercer L.D. Impact of state weights on national vaccination coverage estimates from household surveys in Nigeria. Vaccine. 2020 doi: 10.1016/j.vaccine.2020.05.026.

- 19.Burton A., Monasch R., Lautenbach B., Gacic-Dobo M., Neill M., Karimov R. WHO and UNICEF estimates of national infant immunization coverage: methods and processes. Bull World Health Organ. 2009;87(7):535–541. doi: 10.2471/blt.08.053819.

- 20.World Health Organization. Definition of WHO regional groupings. Available at: https://www.who.int/healthinfo/global_burden_disease/definition_regions [accessed 2020 August 15].

- 21.The World Bank. World Bank Country and Lending Groups. Available at: https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups [accessed 2020 August 15].

- 22.Gavi, the Vaccine Alliance. Eligibility since 2000. Available at: https://www.gavi.org/types-support/sustainability/eligibility [accessed 2020 August 15].

- 23.Tabu C, Brown DW, Ogbuanu I. Vaccination related recording errors in homemade home-based records: lessons from the field. White Paper. July 2018. Available at: https://doi.org/10.13140/RG.2.2.29550.92482 [accessed 2020 August 15].

- 24.Mansour Z., Brandt L., Said R., Fahmy K., Riedner G., Danovaro-Holliday M.C. Home-based records' quality and validity of caregivers' recall of children's vaccination in Lebanon. Vaccine. 2019;37(30):4177–4183. doi: 10.1016/j.vaccine.2019.05.032.

- 25.Kaboré L., Méda C.Z., Sawadogo F. Quality and reliability of vaccination documentation in the routine childhood immunization program in Burkina Faso: results from a cross-sectional survey. Vaccine. 2020;38(13):2808–2815. doi: 10.1016/j.vaccine.2020.02.023.

- 26.Swiss TPH and PHISICC partners. WS3—Characterization of the Health Information Systems in Nigeria, Côte d’Ivoire, and Mozambique. Available at: https://paperbased.info/15422-2/ [accessed 2020 August 15].

- 27.World Health Organization. Harmonizing vaccination coverage measures in household surveys: A primer. WHO: Geneva, Switzerland, 2019. Available at: https://www.who.int/immunization/monitoring_surveillance/Surveys_White_Paper_immunization_2019.pdf [accessed 2020 August 15].

- 28.Gavi, the Vaccine Alliance. Guidance for Post-campaign surveys to measure campaign-vaccination coverage of Gavi supported campaigns. Available at: https://www.gavi.org/sites/default/files/document/guidance-on-post-campaign-coverage-surveyspdf.pdf [accessed 2020 August 15].

- 29.Rhoda D.A., Wagai J.N., Beshanski-Pedersen B.R., Yusafari Y., Sequeira J., Hayford K. Combining cluster surveys to estimate vaccination coverage: experiences from Nigeria's Multiple Indicator Cluster Survey/National Immunization Coverage Survey (MICS/NICS), 2016–17. Vaccine. 2020 doi: 10.1016/j.vaccine.2020.05.058. S0264-410X(20)30703-9.

- 30.Brown D.W. Keeping immunisation coverage targets in perspective. Open Infect Dis J. 2011;5:6–7. Available at: https://benthamopen.com/contents/pdf/TOIDJ/TOIDJ-5-6.pdf

- 31.World Health Organization SAGE Working Group on Quality and Use of Immunization and Surveillance Data. Report of the SAGE Working Group on Quality and Use of Immunization and Surveillance Data. October 2019. Available at: https://www.who.int/immunization/sage/meetings/2019/october/presentations_background_docs/en [accessed 2020 August 15].

- 32.World Health Organization. WHO Vaccination Coverage Survey. Available at: https://www.who.int/immunization/monitoring_surveillance/routine/coverage/en/index2.html [accessed 2020 August 15].
