Abstract

Cells respond to external signals and stresses by activating transcription factors (TF), which induce gene expression changes. Prior work suggests that signal‐specific gene expression changes are partly achieved because different gene promoters exhibit distinct induction dynamics in response to the same TF input signal. Here, using high‐throughput quantitative single‐cell measurements and a novel statistical method, we systematically analyzed transcriptional responses to a large number of dynamic TF inputs. In particular, we quantified the scaling behavior among different transcriptional features extracted from the measured trajectories such as the gene activation delay or duration of promoter activity. Surprisingly, we found that even the same gene promoter can exhibit qualitatively distinct induction and scaling behaviors when exposed to different dynamic TF contexts. While it was previously known that promoters fall into distinct classes, here we show that the same promoter can switch between different classes depending on context. Thus, promoters can adopt context‐dependent “manifestations”. Our analysis suggests that the full complexity of signal processing by genetic circuits may be significantly underestimated when studied in only specific contexts.

Keywords: Bayesian inference, manifestation, Msn2, promoter class switching, transcription factor dynamics

Subject Categories: Chromatin, Epigenetics, Genomics & Functional Genomics; Computational Biology

Gene promoters can be classified into distinct classes. Here we show that a single gene promoter can switch between different promoter classes depending on transcription factor context.

Introduction

Exquisite regulation of gene expression underlies essentially all biological processes, including the remarkable ability of a single cell to develop into a fully formed organism. Transcription factors (TFs) control gene expression by binding to the promoters of genes and recruiting chromatin remodelers and the general transcriptional machinery. Recruitment of RNA Polymerase II enables the initiation of transcription, which produces mRNAs that are exported to the cytoplasm, where they are finally translated into proteins by the ribosome. Gene expression is primarily regulated at the level of promoter switching dynamics and initiation of transcription, which is associated with large cell‐to‐cell variability (Coulon et al, 2013). For practical reasons, however, gene expression is typically analyzed at the level of mRNAs (e.g., FISH) or proteins (e.g., immunofluorescence or GFP reporters) using bulk or single‐cell approaches. Although powerful, these data provide only partial and indirect information about the underlying promoter states and transcription initiation dynamics. Moreover, although natural gene regulation is complex in both time (e.g., time‐varying signals) and space (e.g., signaling gradients) (Li & Elowitz, 2019), experimental measurements tend to be limited to simple perturbations such as ON/OFF or dose–response curves under steady‐state conditions.

Ideally, gene regulation should be studied at the level of promoter switching dynamics and transcription initiation events, using experimental approaches that capture gene expression in a sufficiently large number of single living cells in response to a broad range of dynamic inputs. Several studies have addressed some, but not all, of these challenges (Suter et al, 2011; Coulon et al, 2013; Hansen & O’Shea, 2013; Toettcher et al, 2013; Zoller et al, 2015). Here, through an integrated experimental and computational approach, we make a first attempt to realize this goal. We focus on a simple system, where a single inducible TF activates a target gene. Surprisingly, our approach reveals that even single gene promoters can display complex and counter‐intuitive behaviors, which are difficult to explain by simple kinetic models. In particular, we show that genes exhibit ”context‐dependent manifestations”, such that the same gene can switch between qualitatively different kinetic behaviors depending on which dynamic input it is exposed to. While it was previously known that promoters fall into distinct classes, we thus show here that the same promoter can switch class depending on context.

Results

Single‐cell time‐series measurements of promoter dynamics under complex TF inputs

To study how genes respond to complex and dynamic TF inputs, we focus on a large dataset that we previously generated (Fig EV1) (Hansen & O’Shea, 2013; Hansen & O’Shea, 2015) and which we have here converted from arbitrary fluorescence units to absolute protein abundances. In our setup, addition of a small molecule causes the budding yeast TF, Msn2, to rapidly translocate to the nucleus and activate gene expression (Fig 1A). Using microfluidics, rapid addition or removal of 1NM‐PP1 allowed us to control both pulse length, pulse interval, and pulse amplitude of the TF (fraction of Msn2 that is activated) and simultaneously measure the single‐cell response of natural and mutant Msn2 target genes using fluorescent reporters (Hansen & O’Shea, 2013; Hansen et al, 2015; Hansen & O’Shea, 2015) (Fig 1A).

Figure EV1. Overview of Msn2 input experiments.

Left: heatmap overview of the 30 different Msn2‐mCherry input. Right: Raw experimentally measured Msn2‐mCherry input (black) and standard deviation (black error bars) for each of the 30 Msn2 inputs. The fitted Msn2‐mCherry input is overlaid in red. This figure has been partially reproduced with permission from Molecular Systems Biology (Hansen & O’Shea, 2013).

Figure 1. Overview of Msn2 system and inference approach.

- Overview of microfluidic control of Msn2 activity and read‐out of gene expression.

- Overview of range of Msn2 input dynamics.

- Stochastic model of gene expression. The promoter (left) can switch from its inactive state to its active state in an Msn2‐dependent manner. Once active, mRNA can be transcribed at a certain rate z 1. Transcription can be further tuned by recruitment of additional factors, which is captured by a third state with distinct transcription rate z 2. Messenger RNA and protein dynamics are described as a two‐stage birth‐and‐death process, accounting for extrinsic variability in the translation rate (right). A detailed description of the model can be found in Methods and Protocols: Stochastic model of Msn2‐dependent gene expression).

- Statistical reconstruction of promoter switching and transcription dynamics. Gene expression output trajectories were quantified for diverse Msn2 inputs in a large number of cells. One half of the trajectories was used to calibrate the model using a moment‐based inference approach (Zechner et al, 2012). The model parameters corresponding to mRNA degradation, translation, and protein degradation where estimated once for each promoter from a single‐pulse condition (50 min, 100% Msn2) but then held fixed for all other conditions. In contrast, the parameters corresponding to promoter switching and mRNA production where re‐calibrated for each condition. The remaining half of the trajectories were used to reconstruct time‐varying transcription rates and promoter state occupancies using the previously calibrated models in combination with the hybrid SMC algorithm (Methods and Protocols Hybrid sequential Monte Carlo). Several features characterizing the promoter and transcription dynamics were calculated from the single‐cell reconstructions for all promoters and experimental conditions.

- Hierarchical clustering of promoter dynamics. We considered all single‐pulse experiments (10–50 min duration, 25–100% Msn2 induction, see (B) top row) for all promoters except the two SIP18 mutants. For each condition, we calculated the percentage of responders, the average transcriptional output, the average time active, and the average time to activate. All features were averaged over five repeated runs of the inference pipeline. For a particular promoter and Msn2 induction level, we grouped together the respective features for all pulse lengths, giving rise to a 20‐dimensional data point. In total, this leads to 28 20‐dimensional data points (four Msn2 levels for seven promoters), which were normalized across individual features. Data points which had zero % responders for at least one of the pulse lengths were excluded from the analysis, since the remaining three features are not defined in this case. The data were clustered using a Euclidian distance metric and are shown as a heatmap, with cluster annotation.

We note that Msn2 naturally exhibits complex signal‐dependent activation dynamics (Hao & O’Shea, 2012). First, Msn2 exhibits short pulses of nuclear localization in response to glucose starvation with dose‐dependent frequency/number, and our pulse number/interval experiments were designed to match those (Fig 1B). Second, Msn2 largely exhibits a single pulse of nuclear localization in response to osmotic stress with dose‐dependent duration, and our pulse duration experiments were designed to match this (Fig 1B). Third, Msn2 exhibits a sustained pulse of nuclear localization in response to oxidative stress with dose‐dependent amplitude, and our amplitude‐modulated experiments were designed to match this (Fig 1B) (Hao & O’Shea, 2012). In summary, we chose our TF inputs to be physiologically relevant. We note that the system is not subject to known feedback from Msn4 since Msn4 has been deleted in our system (Hao & O’Shea, 2012; Hansen & O’Shea, 2013; AkhavanAghdam et al, 2016), though we cannot rule out other forms of feedback. We also note that we replaced the target gene ORF with YFP and measured the endogenous gene response (Hansen & O’Shea, 2013; Hansen & O’Shea, 2015) and that the target genes are strictly Msn2‐dependent (Hansen & O’Shea, 2013). Our extensive dataset contains 30 distinct dynamical Msn2 inputs for nine genes (270 conditions) and ∼ 500 cells per condition, numbering more than 100,000 single‐cell trajectories in total (Fig 1B).

Bayesian inference of promoter dynamics from time‐lapse measurements

Gene promoters can generally exist in different transcriptionally active and inactive states (Coulon et al, 2013; Neuert et al, 2013). However, although our dataset is rich, since protein synthesis and degradation are slow processes, the raw YFP traces provide only indirect information about promoter state occupancies and dynamics. Bayesian methods provide an effective means to obtain statistical reconstructions of promoter states and transcription dynamics from time‐lapse reporter measurements (Suter et al, 2011; Golightly & Wilkinson, 2011; Amrein & Künsch, 2012; Zechner et al, 2014). However, performing such reconstruction is computationally very demanding and existing approaches are typically too slow to handle large datasets like the one considered here, or rely on certain approximations which may be incompatible with the considered experimental system. To address this problem, we have developed a hybrid approach, which achieves accurate reconstructions while maintaining scalability.

Bayesian state reconstruction requires a mathematical model that captures the dynamics of the underlying molecular states and how those relate to the corresponding time‐series measurements. To describe the dynamics of gene expression, we focus on a standard Markov chain model, in which a promoter can switch between different states with distinct transcription rates (e.g., transcriptionally inactive vs. active). Messenger RNA and protein YFP reporter copy numbers are described by two coupled birth‐and‐death processes. We account for extrinsic variability (Elowitz et al, 2002) at the translational level by considering the translation rate to be randomly distributed across a population of cells. The dynamic state of the overall gene expression system at time is denoted by , with as the instantaneous transcription rate and and as the mRNA and YFP reporter copy numbers, respectively. We denote by a complete trajectory of on the time interval . We consider a sequence of partial and noisy measurements at times along the trajectory. The statistical relationship between the measurements and the underlying state of the system is captured by a measurement density with for all . In the scenario considered here, the measurements represent noisy readouts of the reporter copy number extracted from time‐lapse fluorescence movies. In order to infer from a measured trajectory , we employ Bayes’ rule, which can be stated as

| (1) |

with as the prior probability distribution over trajectories , governed by the stochastic model of gene expression. The corresponding posterior distribution on the left‐hand side captures the knowledge about a cell’s trajectory that we gain once we take into account the experimentally measured time series.

However, the posterior distribution in equation (1) is analytically intractable and one is typically left with numerical approaches. Sequential Monte Carlo (SMC) methods have been successfully applied to address this problem in the context of time‐lapse reporter measurements (Zechner et al, 2014; Feigelman et al, 2016; Kuzmanovska et al, 2017). The core idea of these approaches is to generate a sufficiently large number of random sample paths from the prior distribution and reweighing them using the measurement density to be consistent with the posterior distribution. This is performed sequentially over individual measurement time points, which allows splitting the overall sampling problem into a sequence of smaller ones that can be solved more effectively (Methods and Protocols: Recursive Bayesian estimation).

The resulting SMC methods, however, are still computationally very expensive since the generation of an individual sample path can span thousands or even millions of chemical events when considered on realistic experimental time scales. In the Msn2 induction system, for instance, trajectories often involve a large number of transcription and translation events, which would render conventional SMC approaches impractically inefficient. Alternatively, equation (1) can be calculated using analytical approximations (Huang et al, 2016). The main idea is to approximate the posterior distribution by a “simpler” distribution, such as a normal or log‐normal distribution, which can be handled analytically. While analytical approximations can be substantially more efficient than SMC methods, the underlying distributional assumptions may not be suitable in certain scenarios and lead to poor approximations. For instance, switch‐like promoter dynamics are unlikely to be captured accurately by a continuous distribution such as a Gaussian. To address these problems, we developed a hybrid approach, which combines efficient analytical approximations with SMC sampling and thus strikes a balance between computational efficiency and accuracy. More precisely, only the promoter switching events have to be simulated stochastically, while the more expensive transcription and translation dynamics are eliminated from the simulation and handled analytically. This hybrid inference scheme targets the marginal posterior distribution

| (2) |

where the mRNA and reporter dynamics and have been integrated out. We derived expressions for the marginal likelihood functions using an analytical approximation based on conditional moments (Methods and Protocols: Hybrid sequential Monte Carlo). The resulting method can be understood as a Rao‐Blackwellized SMC approach (Doucet et al, 2000; Zechner et al, 2014). Using this hybrid approach, the sampling space can be significantly reduced, which makes inference efficient enough to deal with the large dataset considered in this study. A complete description of the method and a quantitative analysis of its accuracy based on simulated data can be found in Methods and Protocols and Fig EV2A and B.

Figure EV2. Evaluation of the inference method.

-

A, BEvaluation of the hybrid SMC method using synthetic data. Inference was performed using artificially generated time‐course data as described in Methods and Protocols: Quantitative characterization of promoter dynamics. (A) Inference results for a promoter model with slow switching kinetics. (B) Inference results for a promoter model with fast‐switching kinetics. Respective top panels show the results assuming perfect knowledge of the model parameters. Bottom panels show the corresponding results for random parameter mismatch, where each parameter was drawn from a log‐normal distribution LN(log(b), 0.12) for each reconstruction, with b as the true value of the parameter. The R2 and slope k between the true and predicted features were determined using linear regression (red dashed lines).

-

C, DEvaluation of the model calibration using moment‐based inference. (C) Example fits are shown for some promoters for the 50min pulse condition with 100% Msn2 induction level for one of the five independent inference runs. The models were calibrated using 50% of the trajectories and compared against means and variances calculated from the remaining pool of cells (i.e., those that were not used for fitting). Solid lines indicate averages and shaded areas mark one standard deviation above and below the average. (D) Quantitative analysis of the calibrated models across all conditions. To analyze the accuracy of the models, we calculated the absolute mismatch between the predicted and experimental means and variances at each time point. The resulting values were divided by the standard error of the experimental moments that we obtained using bootstrapping. Subsequently, we averaged these values across all time points and all five inference repeats. The resulting error statistic measures the accuracy relative to the uncertainty of the moment estimates obtained from data. A value close to one would indicate that the mismatch between the moments is comparable to the uncertainty of the experimental moments. The leftmost panel in (D) shows density histograms of the error statistic (err) for the mean and variance, respectively. The second and third panels show the correlation between the error statistic and the maximum of the average YFP level for each condition. The fourth and fifth panels show a cumulative error statistic for each promoter, calculated as the median across all conditions.

-

E, FEvaluation of the trajectory inference (C, D). To validate the reconstructed promoter switching and transcription dynamics, we calculated a maximum a posterior reconstruction of the time‐varying transcription rate Z(t) for all cells that were used for trajectory inference (i.e., 50% of the total pool of cells). These rates were then used to forward‐simulate means and variances of YFP in combination with the calibrated parameters ω. The resulting means and variances were compared against the remaining pool of single‐cell measurements, which were not used for trajectory inference as in (C, D). In general, the error statistics indicate a relatively good accuracy of the method. Larger errors were predominantly found for conditions with low YFP output (less than several hundred copies).

Inference of Msn2‐dependent promoter and transcription dynamics

To quantify and understand how promoters respond to different dynamic TF inputs, we applied the hybrid SMC algorithm to the Msn2 datasets. To describe promoter activation and transcription, we focus on a canonical three‐state promoter architecture (Fig 1C), which has been widely used in the literature (Coulon et al, 2013; Hansen & O’Shea, 2013). This model accounts for Msn2‐dependent activation of the promoter after which mRNA can be transcribed at a certain rate. Transcription can be further tuned (for instance by recruitment of additional factors), which is captured by a third state with distinct transcription rate (Fig 1C).

The model involves a number of unknown parameters, which have to be determined prior to applying the hybrid SMC algorithm. To achieve this, we used a randomly selected subset of the Msn2 dataset in combination with an efficient moment‐based approach, which reveals maximum a posterior estimates of the unknown parameters (Zechner et al, 2012). The inference was performed for each promoter and condition separately using 50% of the available single‐cell trajectories. However, only the promoter switching and transcription rates were allowed to vary between conditions. The remaining parameters associated with mRNA degradation, translation, and protein degradation were estimated only for the first condition within experimentally constrained ranges (Hansen & O’Shea, 2013) and then held fixed for all other conditions (Methods and Protocols: Statistical inference of kinetic parameters).

The resulting calibrated models were then used to infer time‐varying transcription rates and promoter state occupancies within individual cells from the remaining 50% of trajectories using the hybrid SMC algorithm (Fig 1D). From the large number of reconstructions, in turn, we computed a number of transcriptional features that summarize the single‐cell expression dynamics of each promoter and condition (Methods and Protocols: Quantitative characterization of promoter dynamics). For the purpose of this study, we mainly focus on four transcriptional features. First, each cell was classified as responder or non‐responder, depending on whether it was inferred to have resided in a promoter state with significant transcriptional activity for more than 2 min. For all responders, we estimated the time it took the promoter to switch into an active state (time to activate), the total time the promoter was in an active state (time active) as well as the integral over the time‐varying transcription rate over the whole time course, which we refer to as transcriptional output. These dynamical features are chosen to be generic such that they do not rely on the particular structure of the considered promoter model. We remark that since the overall analysis pipeline depends on random number generation (e.g., splitting of data between model calibration and trajectory inference, Monte Carlo sampling), the inferred transcriptional features exhibit a certain degree of variability between repeated runs of the analysis. To quantify this variation, we performed five independent runs of the overall pipeline and calculated averages and standard errors. Data points shown in the following correspond to the inferred transcriptional features averaged across individual runs, unless stated otherwise. Both the calibrated models and temporal reconstructions were validated using a cross‐validation approach (Fig EV2C–F). In summary, this combined experimental and computational approach allowed us to compare different promoters under a wide range of Msn2 contexts.

Promoters exhibit context‐dependent scaling behaviors and manifestations

To gain an overview of this high‐dimensional dataset, we analyzed the gene expression responses to single pulses of nuclear Msn2 of different amplitudes (25, 50, 75, or 100%) for each promoter. Using hierarchical clustering, we uncovered the known promoter classes (Hansen & O’Shea, 2013) for most conditions (Fig 1E): slow activation, high amplitude threshold promoters (SIP18, TKL2) clustered together and fast activation, and low amplitude threshold promoters (HXK1, DCS2) also clustered together. Surprisingly, however, DDR2 (Figs 1E and EV3) clustered with the slow, high threshold promoters at low Msn2 amplitudes (25, 50%), but with the fast, low threshold promoters at high Msn2 amplitudes (75, 100%). This suggests that the same promoter can switch promoter class and exhibit qualitatively different promoter and transcription dynamics when exposed to different Msn2 contexts.

Figure EV3.

Example single‐cell trajectories for DDR2 for the 10, 30, and 50 min pulse conditions with 25 and 100% Msn2 induction level, respectively

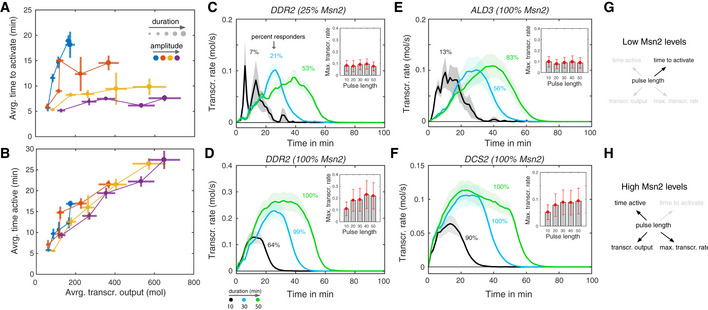

To gain a better understanding of this phenomenon, we plotted the average time it took to activate the promoter (Fig 2A) and the average time the promoter was active (Fig 2B) against the transcriptional output for single‐pulse inputs for DDR2. At low amplitude Msn2 input, the time it takes to activate DDR2 for the first time increases with pulse length (Fig 2A), while both the time active (Fig 2B) and the transcriptional output increase only moderately (Fig 2A). In contrast, at high Msn2 amplitude, the time to activate appears fixed at approximately 5–10 min, but now transcriptional output and time active increase significantly with pulse duration.

Figure 2. Context‐dependent scaling behaviors.

-

A, BScaling behaviors for DDR2. Scaling of time to activate (A) and total time active (B) for DDR2 with transcriptional output. All three features were calculated as population averages across all responding cells per condition. Circles correspond to the mean of these features calculated over five independent inference runs and error bars indicate two times the standard error above and below the mean.

-

C, DPopulation averages of the time‐varying transcription rate were calculated for the 10, 30, and 50 min pulse conditions for 25% Msn2 amplitude (C) and 100% Msn2 amplitude (D) for DDR2 considering only responding cells. Solid lines correspond to the mean calculated over five independent inference runs and shaded areas mark two times the standard error above and below the mean. The colored numbers indicate the estimated fraction of responding cells for the respective condition, averaged over all five inference runs. Inset plots show the maximum of the population‐averaged transcription rate calculated over the whole time course. Circles correspond to means calculated over five independent inference runs and error bars mark two times the standard error above and below the means.

-

E, FTime‐varying transcription rates were calculated as in (C, D) for the 10, 30, and 50 min pulse conditions, and 100% Msn2 amplitude are shown for ALD3 and DCS2 for comparison.

-

G, HSchematic model of DDR2 promoter manifestations for low and high Msn2 induction levels. At Low Msn2 levels, Msn2 pulse length regulates the time to activate but not the other features. At High Msn2 levels, Msn2 pulse length regulates the time the promoter is active, transcriptional output, and maximal transcription rate, but it no longer regulates the time to activate.

This can be seen more clearly when plotting the dynamics of the inferred transcription rates of responding cells for low (Fig 2C) and high (Fig 2D) Msn2 amplitudes. For 25% Msn2, the population‐averaged transcription rate peaks at a time that scales with pulse length, while the maximum of the peak remains almost constant. This suggests that Msn2 duration predominantly regulates the probability to activate the promoter rather than the rate of transcription once the promoter becomes active. This behavior is in qualitative agreement with the slow activation, high amplitude threshold promoters such as ALD3 (Fig 2E). In contrast, for 100% Msn2, the maximum transcription rate of DDR2 increases by twofold to threefold between the 10 min and 50 min duration pulses, indicating that upon promoter activation, transcription can be further enhanced by the presence of Msn2. This behavior is characteristic for the fast activation, low amplitude promoters such as DCS2 (Fig 2F).

In summary, this shows that a single promoter can switch between qualitatively distinct behaviors depending on Msn2 context. Here, DDR2 behaves like one promoter class at low Msn2 amplitudes (pulse length regulates time to activate, but nothing else), but a distinct class at high Msn2 amplitudes (pulse length regulates time active, transcription output and maximum rate, but not time to activate) (Fig 2G and H). While it is well known that promoters fall into distinct classes (Stavreva et al, 2009; Suter et al, 2011; Hao & O’Shea, 2012; Sharon et al, 2012; Hansen & O’Shea, 2013; Hansen & O’Shea, 2015; Haberle & Stark, 2018; King et al, 2020), what we show here is that the same promoter can switch from one class to another depending on context. To explain this phenomenon, we introduce the concept of ”context‐dependent manifestations”. Operationally, we define a context‐dependent manifestation of a promoter as a situation where the same promoter exhibits qualitatively distinct kinetic behaviors under different input contexts.

Context‐dependent promoter manifestations control gene expression noise

We next studied if promoters other than DDR2 exhibit similar context‐dependent promoter class switching. To this end, we analyzed the relationship between different promoter features under all input contexts and compared them with each other.

First, we analyzed the correlation between transcriptional output and the time the promoter was in any of the two transcriptionally permissive states (i.e., states 1 or 2 in Fig 1C) within individual cells (Fig 3A) for TKL2, DDR2, and DCS2. We refer to the latter as time transcribing. For DCS2, transcriptional output at the single‐cell level shows a linear and nearly deterministic dependence on time transcribing. To validate this, we performed a regression analysis and found that a simple linear model where transcriptional output is proportional to time transcribing (with slope k) can explain most of the variation in transcriptional output (R 2 ≈ 1; Fig 3A). Thus, for a given Msn2 amplitude, the effective rate of DCS2 transcription is fixed and the single‐cell transcriptional output can be determined very accurately by the time the promoter is in the transcriptionally permissive states. However, the rate of transcription is set by the Msn2 amplitude (i.e., k increases with Msn2 amplitude). Thus, DCS2 is remarkably simple within the considered contexts and regulation by time transcribing and transcription rate can be decoupled. Similarly, for DDR2, the rate of transcription is also set by Msn2 amplitude. However, in comparison with DCS2, it exhibits larger variation for low and intermediate Msn2 amplitudes, which decrease toward higher Msn2 amplitudes. The inverse scaling of variability with amplitude can be explained by simple Markovian models with Msn2‐dependent switching rates (Peccoud & Ycart, 1995; Hansen & O’Shea, 2013).

Figure 3. Single‐cell manifestations control gene expression noise.

- Dependency of transcriptional output with time transcribing, defined as the time the promoter spends in any of the two transcriptionally permissive states (see Fig 1C). The left panel plots transcriptional output against time transcribing for individual cells for a 50 min pulse with 25, 50, 75, and 100% Msn2 input for all single‐cell responses (responders and non‐responders). Results are shown for one of the five independent inference runs. Linear regression analysis was performed to determine the R 2 and slope k between transcr. output and time transcribing as shown in the center and bottom panels. Circles correspond to averages across five independent inference runs and error bars mark two times the standard error below and above the average.

- Scaling of noise with average transcriptional output for all Msn2 contexts. Noise is defined as the squared coefficient of variation of the transcriptional output calculated across individual cells. Single‐pulse experiments of different Msn2 induction level and duration are shown as circles of varying size and color whereas all repeated‐pulse experiments are shown as orange and equally sized triangles for visual clarity. Individual data points correspond to averages over five independent inference runs.

In contrast, TKL2 resembles DCS2 and DDR2 at low Msn2 amplitudes (R 2 ≈ 1), but at intermediate Msn2 amplitudes (Fig 3A, yellow), TKL2 exhibits large variation, which decreases again for higher Msn2 amplitudes. Thus, surprisingly, time transcribing is a fairly poor predictor of TKL2 transcriptional output at intermediate levels of Msn2 but a much better predictor at low and high Msn2 amplitudes. This non‐monotonic relationship indicates that above a certain Msn2 concentration, additional promoter states with larger transcriptional activity become accessible, which increase in occupancy toward higher Msn2 amplitudes. This again suggests that the behavior of a single promoter can be dominated by distinct promoter architectures depending on input context.

The analysis above was concerned with the statistical relationship between time transcribing and transcriptional output in single cells for a single 50 min Msn2 pulse at different amplitudes. To generalize our analysis, we next studied how noise in transcriptional output (quantified using CV 2 = std2/mean2) scales with mean transcriptional output under all conditions (Fig 3B). As expected from previous studies (Bar‐Even et al, 2006, Newman et al, 2006, Taniguchi et al, 2010), transcriptional noise uniformly decreases as transcriptional output increases for some genes such as DCS2. In contrast, TKL2 and also SIP18 exhibit more complex and non‐monotonic noise scaling: low noise during low transcription, high noise during intermediate levels of transcription and again lower noise during high levels of transcription (Fig 3B), similar to the previous example in Fig 3A.

To further investigate this “inverse‐U” scaling, we compared the behavior of the wild‐type SIP18 promoter with the two mutants A4 and D6 (Hansen & O’Shea, 2015) (Fig 3B). Mutant A4 resembles the simple inverse scaling relationship of DCS2. Similarly, mutant D6 also more closely resembles DCS2, albeit with a slightly weaker relationship between stronger expression and lower noise, suggesting that attenuation of this relationship can similarly be encoded in the promoter sequence. Taken together, these results demonstrate that modifying the number and location of Msn2 DNA binding sites in the promoter is sufficient to switch scaling and manifestation behavior.

Memory‐dependent promoter manifestations revealed by pulsatile Msn2 activation

We next analyzed how promoters respond to pulsatile Msn2 activation. Cells were exposed to four 5‐min Msn2 pulses separated by 5, 7.5, 10, 15, or 20 min intervals. Some promoters behaved relatively simply, e.g., DCS2 (Fig 4A). Most cells activate the DCS2 promoter during the first pulse, and the promoter displays limited positive memory between pulses (Fig 4A). By positive memory, we refer to the fact that successive pulses of Msn2 activation increase the susceptibility of the promoter to become activated and induce higher gene expression. This has also been termed the head‐start effect (Hao & O’Shea, 2012).

Figure 4. Context‐dependent promoter memory and model.

- Interval‐dependent regulation of promoter memory. Cells where treated with four consecutive Msn2 pulses (75% induction level) with 5‐min duration. The intervals between the pulses were 5, 7.5, 10, 15, and 20 min, respectively (left column). Population averages of the time‐varying transcription rates were calculated for DCS2 (middle column) and SIP18 mutant D6 (right column) considering all cells per condition (responding and non‐responding cells). Solid lines correspond to the mean of the population‐averaged transcription rate calculated over five independent inference runs and shaded areas mark two times the standard error above and below the mean.

- Toy model of context‐dependent promoter manifestations. We considered a four‐state promoter model with complex, nonlinear Msn2‐dependent transition rates (top row). Green and red arrows indicate transitions, which are promoted or repressed by Msn2, respectively. Gray arrows correspond to Msn2‐independent transitions. We simulated the promoter response to all thirty Msn2 inputs and quantified its dynamics by calculating the expected total number of transitions between all states (middle row heatmaps; blue show transitions with high probability (e.g., state 2 is rarely occupied in the middle scenario (High Msn2))). Depending on the Msn2 inputs, certain state transitions are favored, while others are effectively repressed. Therefore, different classes of dynamical inputs can reveal distinct manifestations of the same promoter (bottom row).

In contrast, the SIP18 mutant D6 promoter (Hansen & O’Shea, 2015) exhibited very curious behavior: at 5‐min intervals, there was significant positive memory (Fig 4A, top row). In contrast, with 20 min intervals, we observed negative memory: there was much lower expression during pulse 2–4, than during pulse 1(Fig 4A, bottom row). In other words, exposure to one pulse of Msn2 inhibited transcription during subsequent pulses. Furthermore, comparing the different pulse intervals we observed a transition from positive memory at 5 and 7.5 min intervals to negative memory at 15 and 20 min intervals (Fig 4A).

While positive memory has previously been reported (Hao & O’Shea, 2012; Hansen & O’Shea, 2013), a context‐dependent switch from positive to negative memory has not. We note that a sharp transition from positive to negative promoter memory is difficult to explain by simple kinetic models and that this type of behavior only becomes visible once the response to diverse dynamic inputs are analyzed. Although the underlying molecular mechanism is unknown, we show in Fig EV4 a hypothetical toy model that could explain such a switch from positive to negative memory. In conclusion, these data provide another example of how the same promoter can exhibit very different quantitative and qualitative behaviors depending on the context—in this case, depending on the interval between Msn2 pulses.

Figure EV4. Toy model with interval‐dependent promoter memory.

- Model scheme. Once Msn2 binds to the promoter, activator molecules can be recruited, which causes the promoter to switch into a transcriptionally active state with a rate proportional to the number of activators present. Once the promoter switches back into the Msn2‐unbound state, the activator can be converted into an inhibitor, which causes the promoter to switch into a transcriptionally inactive state with a rate proportional to the number of inhibitors present.

- Average transcription rate for 5 and 20 min pulse intervals as a function of time obtained by forward simulation of the model. Blue lines indicate averages computed from stochastic simulations (n = 2,000).

- Corresponding average transcriptional output for 5 and 20 min pulse intervals. A detailed reaction scheme and parameters used for simulation can be found in Methods and Protocols: Toy model of interval‐dependent promoter memory. We emphasize that this toy model only serves to illustrate one possible scenario, which could result in a pulse interval‐dependent switch from positive to negative memory, as observed in Fig 4A. We do not currently understand the mechanism underlying the observation in Fig 4A.

Discussion

Here, we quantitatively analyze the dynamic input–output relationship in a simple inducible gene regulation system. Previously, a large number of studies have shown that promoters fall into distinct classes (e.g., fast vs. slow; low vs. high threshold) and that different promoters decode dynamic stimuli differently (Stavreva et al, 2009; Suter et al, 2011; Hao & O’Shea, 2012; Sharon et al, 2012; Hansen & O’Shea, 2013; Hansen & O’Shea, 2015; Haberle & Stark, 2018; King et al, 2020). For example, a slow promoter may filter out a brief and transient stimulus (Purvis & Lahav, 2013). However, promoter class was assumed to be a fixed property.

Here, we show that promoters can switch between distinct classes depending on context. We show that even under these relatively simple conditions, the same promoter can exhibit context‐dependent scaling and induction behaviors (Figs 1, 2, 3, 4 and EV5). To describe this observation, we introduce the concept of context‐dependent manifestations. The underlying number of molecular states of a promoter is potentially enormous; if we were to enumerate the combinatorial number of states based on nucleosome positions, TF occupancy at each binding site, binding of co‐factors such as Mediator, SAGA, TFIID, RNA Polymerase II, and numerous other factors, the number of discrete molecular states would be astronomically high. When we measure a dose–response, we may observe only certain rate‐limiting regimes or manifestations of the system. What we show here is that the particular observed manifestation can be highly context‐dependent and very distinct quantitative behaviors can be observed under different contexts even in systems that are seemingly simple.

Figure EV5. Additional examples of curious behaviors and potential manifestations.

- For RTN2, the noise of transcriptional output shows a non‐monotonic scaling with Msn2 amplitude for 10 min Msn2 single‐pulse duration, but a monotonically decreasing relationship for 50 min Msn2 duration. The average transcriptional output increases monotonically with Msn2 amplitude for both durations.

- For SIP18 mutant D6, time active is largely independent of pulse length for 25% Msn2 amplitude but increases with pulse length for higher Msn2 amplitudes. The transcriptional output averaged over all cells (bottom panel, blue) increases with pulse length and Msn2 amplitude. However, the average transcriptional output of only responding cells is very similar for both Msn2 amplitudes. The examples shown in (A, B) illustrate further interesting behaviors and potential manifestations, but we emphasize that more analysis will be required to validate the robustness of these results.

Does this mean that the concept of a few discrete promoter states is too strong an approximation to be useful? We suggest that this is not necessarily the case. Our analyses show that for a given context, a 3‐state promoter architecture was capable of quantitatively describing promoter dynamics. However, the specific three promoter states and their associated rates were in general dependent on Msn2 context. In other words, a complicated system can manifest itself in a simpler form under specific conditions. Comparing different manifestations across multiple input contexts can thus help to unravel the overall complexity of promoter dynamics.

To illustrate this point further, consider a hypothetical promoter with four major states (Fig 4B). If under some dynamical Msn2 inputs, this promoter reduces to simpler architectures (e.g., 2‐state), but not under other Msn2 inputs (e.g., remains 4‐state), then the observed quantitative manifestation of the promoter is dependent on Msn2‐context. To more concretely demonstrate an example of this, we performed simulations of a complex 4‐state promoter with nonlinear Msn2‐dependent switching rates (Fig 4B; see Methods and Protocols: Toy model of a complex, context‐dependent promoter for details on the model) to all thirty dynamical Msn2 inputs. To characterize the dynamics of the promoter, we calculated the average number of transitions between all promoter states. These results show that depending on the Msn2 inputs, certain state transitions are favored, while others are effectively repressed. In particular, the same promoter can behave effectively like a 2‐, 3‐, or 4‐state promoter, depending on which type of dynamical input it is exposed to (Fig 4B). Mechanistically, one could imagine a promoter state that requires sustained chromatin remodeling and only becomes available if the Msn2 pulse and concentration is sufficiently high, as we previously suggested for SIP18 and its two promoter mutants studied here (Hansen & O’Shea, 2015). But this is speculative, and the precise molecular mechanisms underlying the distinct promoter manifestations observed here remain unknown. We suggest elucidating the molecular mechanisms underlying promoter manifestations as an important area for future research.

Our results have two important potential implications. First, our results suggest that system identification efforts based on limited sets of experimental conditions within complex systems are unlikely to be successful in the sense of capturing the full range of relevant behaviors of the underlying molecular pathways. In extreme cases, we may arrive at different and possibly contradictory conclusions about a pathway’s inner workings depending on which experimental context we choose to study. The only solution to this problem is to resort to experimental and computational approaches that capture a pathway’s response to a sufficiently broad range of physiologically meaningful contexts. Much more work on simple systems will be necessary to truly understand the relevant complexity of signal processing in cells, and we hope the approaches developed here will be helpful in this regard.

Second, a major conundrum in quantitative biology has been how to reconcile the remarkable spatiotemporal precision of biological systems with the high degree of gene expression noise observed at the single‐cell level (Elowitz et al, 2002; Cai et al, 2006; Li & Elowitz, 2019). For example, when information transduction capacities have been measured for simple pathways, such systems appear to be barely capable of reliable distinguishing ON from OFF (∼ 1 bit) (Cheong et al, 2011; Uda et al, 2013; Selimkhanov et al, 2014; Voliotis et al, 2014). Since these studies were done under strict experimental conditions, they may have captured only one out of multiple manifestations. Our results suggest that if all physiologically relevant manifestations could be captured, the estimated information transduction capacity of biochemical pathways could be substantially greater than previously estimated. This could, in part, explain the remarkable signal processing capabilities of biological systems.

Materials and Methods

Reagents and Tools Table

| Reagent/Resource | Reference or source | Identifier or catalog number |

|---|---|---|

| Experimental models | ||

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX hxk1::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2810 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX sip18::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2813 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX rtn2::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2816 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX dcs2::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2819 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX tkl2::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2822 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX ddr2::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2825 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::kanMX ald3::mCitrineV163A/SCFP3A‐spHIS5 (Diploid) | Hansen and O’Shea (2013) | EY2828 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::KAN sip18::mCitrine_V163A/SCFP3A‐spHIS5 pSIP18 Mutant A4 with 4 STREs (Diploid) | Hansen and O’Shea (2015) | EY2967 |

| TPK1 M164G TPK2 M147G TPK3 M165G msn4Δ::TRP1/LEU2 MSN2‐mCherry NHP6a‐iRFP::KAN sip18::mCitrine_V163A/SCFP3A‐spHIS5 pSIP18 Mutant D6 with 6 STREs (Diploid) | Hansen and O’Shea (2015) | EY2996 |

| Chemicals, enzymes, and other reagents | ||

| 1‐NM‐PP1 | Hansen and O’Shea (2013) | 1‐NM‐PP1 |

| Software | ||

| Image analysis code | Hansen et al (2015) | https://www.nature.com/articles/nprot.2015.079 |

| Code and raw data to reproduce all plots in this manuscript | This study | https://github.com/zechnerlab/PromoterManifest/ |

| Other | ||

| Gene expression data for ALD3, TKL2, DCS2, DDR2, HXK1, RTNA, and SIP18 | Hansen and O’Shea (2013) | https://www.embopress.org/doi/10.1038/msb.2013.56 |

| Gene expression data for pSIP18 mutant A4 and D6 | Hansen and O’Shea (2015) | https://www.sciencedirect.com/science/article/pii/S2211124715007950 |

| Compilation of all single‐cell trajectories used in this study | This study | https://zenodo.org/record/2755026 |

Methods and Protocols

Overview of experiments and source data

We note that the data used here were acquired previously (Hansen & O’Shea, 2013; Hansen & O’Shea, 2015), but in the interest of making it clear how the experiments were conducted, we provide a brief outline of the experimental setup in the sections below. The data in concentration units of arbitrary fluorescence were previously acquired and described (Hansen & O’Shea, 2013; Hansen & O’Shea, 2015). Here, we used absolute abundance quantification (Huang et al, 2016) to convert the data to absolute numbers of YFP and CFP proteins per cell. All the source data supporting this manuscript are freely available together with a detailed ReadMe file at https://zenodo.org/record/2755026. Information about the yeast strains can be found in the Reagent and Tools Table.

Microfluidics and time‐lapse microscope

Since the unnormalized data were previously acquired, here we only briefly describe the experimental methods. Microfluidic devices were constructed as previously described (Hansen & O’Shea, 2013). We furthermore refer the reader to a detailed protocol describing how to construct microfluidic devices and computer code for controlling the solenoid valves (Hansen et al, 2015). Briefly, for microscopy experiments, diploid yeast cells were grown overnight at 30°C with shaking at 180 RPM to an OD600 nm of ca. 0.1 in low fluorescence medium without leucine and tryptophan, quickly collected by suction filtration and loaded into the five channels of a microfluidic device pretreated with concanavalin A (4 mg/ml). The setup was mounted on an inverted fluorescence microscope kept at 30°C. The microscope automatically maintains focus and acquires phase‐contrast, YFP, CFP, RFP, and iRFP images from each of five microfluidic channels for 64 frames with a 2.5 min time resolution corresponding to imaging from −5 to 152.5 min. Solenoid valves control delivery of 1‐NM‐PP1 to each microfluidic channel. For full details on the range of input conditions, please see source data at https://zenodo.org/record/2755026.

Image analysis and YFP quantification and normalization

Time‐lapse movies were analyzed using custom‐written software (MATLAB) that automatically segments yeast cells based on phase‐contrast images and tracks cells between frames. The image analysis software and a protocol describing how to use it is available elsewhere (Hansen et al, 2015). The arbitrary fluorescence units were converted to absolute abundances by comparing fluorescence to strains with known absolute abundances and by segmenting the cell to calculate the total number of YFP molecules per cell per timepoint (Huang et al, 2016). Maturation delay was accounted for by shifting the YFP trajectories by a fixed time interval of 12.5 min, corresponding to the first five measurement time points.

Quantification of nuclear Msn2 dynamics

Msn2 was visualized as an Msn2‐mCherry fusion protein. This allows accurate quantification of the nuclear concentration of Msn2 over time (Msn2 only activates gene expression when nuclear) as previously described (Hao & O’Shea, 2012; Hansen & O’Shea, 2013). From the resulting time courses, we extracted continuous functions u(t), which served as inputs to our stochastic promoter model. Since we found nuclear Msn2 concentration to vary very little between cells (Fig EV1), we considered u(t) to be deterministic. We performed this as described previously (Hansen & O’Shea, 2013) and elaborated on here. We model nuclear Msn2 import with first‐order kinetics:

| (3) |

That is, if Msn2 is cytoplasmic at time , the nuclear level of Msn2 at a later time is given by the above expression where is the maximal level of nuclear Msn2 for the given concentration of 1‐NM‐PP1. We chose the 1‐NM‐PP1 concentrations as 100, 275, 690, and 3,000 nM such that they would correspond to approximately , , , and of maximal nuclear Msn2. The parameter is a fit parameter describing the rate of nuclear import, which we found to vary slightly depending on the 1‐NM‐PP1 concentration. Similarly, we model export of Msn2 from the nucleus as a first‐order process:

| (4) |

Here, is the nuclear level of Msn2 when the microfluidic device was switched to medium without 1‐NM‐PP1. Correspondingly, is the nuclear level of Msn2 at some later time . This is to account for the fact that, depending on the pulse duration, Msn2 may not have reached its maximal nuclear level, . The parameters , and were determined through fitting. Specifically, we took the full 30 different pulses and inferred the best‐fit values for , and using least squares fitting. The values are shown below:

| [1‐NM‐PP1] (nM) |

|

|

|

|||

|---|---|---|---|---|---|---|

| 100 | 313.2 | 1.11 | 0.97 | |||

| 275 | 774.5 | 0.61 | 0.81 | |||

| 690 | 1,107.8 | 0.59 | 0.57 | |||

| 3,000 | 1,410.1 | 1.07 | 0.29 |

Stochastic model of Msn2‐dependent gene expression

We describe Msn2‐dependent gene expression using a canonical three‐state model as shown in Fig 1C. The promoter is described as a continuous‐time Markov chain, which switches stochastically between three states of different transcriptional activity. Correspondingly, the rate of transcription at time is governed by a stochastic process , whose value changes discontinuously whenever the promoter transitions from one state into another. In the absence of nuclear Msn2, the promoter is in its transcriptionally inactive state (), where no transcripts are produced. Upon recruitment of Msn2 to the promoter, it can switch into a transcriptionally permissive state in which transcription takes place with propensity . To account for Msn2‐dependent promoter activation, we consider the switching rate from to to depend on the nuclear Msn2 abundance. For simplicity, we consider a linear dependency, i.e., , with as the Msn2 abundance at time . The corresponding reverse rate is considered to be constant. We assume that transcription can be further enhanced by recruitment of additional factors such as chromatin remodeling complexes and general transcriptional factors. This is captured in our model by introducing a third state with transcription rate and corresponding transition rates and . With this, we can describe the time‐dependent probability distribution over the transcription rate in terms of a forward equation.

| (5) |

with as some initial distribution over and as a set of parameters. In the following, we denote by a complete realization of on a fixed time interval . Furthermore, we introduce the conditional path distribution which measures the likelihood of observing a particular trajectory for a given parameter set . Note that it is straightforward to draw random sample paths from this distribution using Gillespie’s stochastic simulation algorithm (SSA) (Gillespie, 2007) or its variants.

Transcription and translation are modeled as a two‐stage reaction network as shown in Fig 1C. We denote by and the copy numbers of mRNA and protein at time , respectively. The parameters and are the mRNA and protein degradation rates and is the protein translation rate. To account for cell‐to‐cell variability in protein translation, we consider the latter to be randomly distributed across isogenic cells, i.e., , with as an arbitrary probability density function (pdf) with positive support and as a set of hyperparameters characterizing this distribution (Zechner et al, 2012; Zechner et al, 2014). Here, we consider as hyperparameters the average and coefficient of variation (CV) of such that . Consequently, captures the magnitude and variability associated with protein translation. In the following, we denote by the set of parameters corresponding to transcription and translation.

For a given set of parameters and and a concrete realization of the translation rate , the overall dynamics of the joint system state can be described by a Markov chain. However, due to the random variability over , each cell is associated with a differently parameterized Markov chain. This results in a heterogeneous Markov model, whose computational analysis turns out to be challenging (Zechner et al, 2014). One way to address this issue is to augment the state space by the random variable and to formulate a master equation on this extended space. For , such master equation reads

| (6) |

with . Differential equations for arbitrary moments with as a polynomial can be computed by multiplying (6) with and summing or integrating over all possible values of , , and , respectively (Zechner et al, 2012). In the following, we will denote by a complete sample path of the full system state between time zero and and introduce a corresponding path distribution . The path distribution conditional on a particular initial state is denoted by .

Conditional dynamics of transcription and translation

One major difficulty in inferring gene networks like the one in Fig 1C is that they involve both very lowly and highly abundant components. This is why moment‐based descriptions of the full system state are of limited use for the time‐series inference problem considered here as will be discussed later. On the other hand, approaches purely based on stochastic simulation become computationally expensive, since transcription and translation often involve thousands or even millions of events over the duration of a time‐course experiment. In such cases, hybrid approaches can be beneficial, where only the lowly abundant components are described stochastically, whereas the remaining components are handled using moment equations (Hasenauer et al, 2014). In the scenario considered here, for instance, the time evolution of the transcription rate can be efficiently simulated using stochastic simulation since the number of times the promoter switches between states is comparably small. For a given , one could then calculate a corresponding set of conditional moments characterizing the dynamics of mRNA and protein. More technically, this can be understood by the fact that the path distribution over the total system state factorizes into . Correspondingly, we can describe the dynamics over as a conditional Markov process , whose state probability distribution satisfies

| (7) |

where as we assume for the initial condition . For simplicity, we further consider the initial mRNA and protein copy numbers to be independent of the transcription rate such that . In order to derive conditional moments, we multiply (7) with polynomials in and sum and integrate over all , , and , respectively. Here, we consider moments of mRNA and protein up to order two, which can be fully described by the system of differential equations

| (8) |

Note that (8) involves all first‐ and second‐order moments, but also a few additional moments of order three and four, which are needed in order to obtain a closed set of differential equations.

Statistical model of time‐series reporter measurements

As detailed above, we analyzed quantitative single‐cell time‐lapse measurements of reporter expression for different Msn2‐inducible promoters and Msn2 activation profiles. We denote by the time points at which measurements were taken. Correspondingly, we define by a complete sample path of the gene expression system between times and . If , we refer to the state at time , which does not necessarily coincide with the first measurement time point . The measurements—denoted by for —provide noisy information about the system state according to a measurement density

We consider the measurement noise to be independent among time points such that

| (9) |

In our particular case, the measurements correspond to the reporter abundance corrupted by measurement noise such that

For a given set of parameters , the relation between a complete sample path and the observed measurements is captured by a joint distribution

| (10) |

with as the distribution over complete sample paths . Correspondingly, the posterior distribution over is proportional to (10), i.e.,

| (11) |

Recursive Bayesian estimation

The posterior distribution (11) is generally intractable but several approximate techniques can be employed. Most of them rely on Bayesian filtering methods, which construct an approximation of (11) recursively over measurement time points. In those approaches, one exploits the fact that the posterior distribution at any measurement time can be written recursively as

| (12) |

with as the posterior distribution at time . In order to solve the Bayesian recursion between consecutive time steps, one can either employ analytical approximations, or Monte Carlo methods. In a recent study, for instance, we have proposed normal and log‐normal approximation of the Bayesian filtering problem, which rely on the time evolution of the first and second order moments of the gene network dynamics (Huang et al, 2016). While computationally efficient, the underlying continuous approximations may not be suitable for discrete and switch‐like components, such as the transcription rate in our promoter model. Alternative approaches are mostly based on sequential Monte Carlo techniques, which approximate (11) using a sufficiently large number of Monte Carlo samples drawn by SSA. The main advantage of these techniques is that they are exact up to sampling variance but on their downside, suffer from limited scalability. In particular, forward simulation via SSA can become prohibitively slow, especially when RNAs and proteins are highly abundant. Therefore, they are currently not able to tackle large datasets like the one considered here. In the following, we will present a hybrid inference algorithm, which bypasses expensive SSA simulations of highly abundant species, making it sufficiently scalable to deal with datasets that span tens or even hundreds of thousands of single‐cell trajectories.

Hybrid sequential Monte Carlo

One strategy to improve the scalability of sequential Monte Carlo techniques is to analytically eliminate variables that are not of direct interest to a particular inference problem (Doucet et al, 2000; Zechner et al, 2014). In our case, for instance, we are specifically interested in the promoter switching dynamics and the corresponding transcription rate . From this perspective, it would therefore suffice to calculate the marginal posterior distribution

| (13) |

in which the dynamics of have been “integrated out”. In order to perform this integration, we first realize that the joint distribution can be rewritten as

| (14) |

where we have made use of the identities and . Next, we integrate (14) over all subpaths such that only the values of at the time points remain in the model. Informally, this integration can be carried out by replacing the path distribution by the state transition kernel , i.e.,

| (15) |

The marginalization over the remaining variables then reduces to a summation

| (16) |

Most conveniently, this summation can be solved iteratively, by first summing over , subsequently over and so forth. The first summation yields

| (17) |

whereas the last step follows from the fact that via Bayes’ rule. Repeating the same procedure for yields

| (18) |

Continuing the above procedure for finally leads to.

| (19) |

Therefore, the marginal posterior distribution over the transcription dynamics is proportional to (19), which can also be expressed recursively as.

| (20) |

Importantly, using equation (20) we can perform a sequential Monte Carlo algorithm on a significantly reduced sampling space, where only the transcription dynamics have to be simulated explicitly. However, in order to perform this algorithm, we need to be able to calculate the marginal likelihood terms , which are given by

| (21) |

The two sums in (21) are gerally intractable, but analytical solutions exist if the measurement likelihood function and the state transition kernel belong to certain classes of distributions. This is the case, for instance, if both are Gaussian. However, this is likely not a good assumption in the scenario considered here, since both the measurement and state distributions are generally positive and asymmetric. As it turns out, however, equation (11) has an analytical solution also if both and are log‐normally distributed. Log‐normal distributions have been used previously to model measurement noise in time‐lapse fluorescence data (Zechner et al, 2014) and gene product distributions (Taniguchi et al, 2010). We therefore assume

| (22) |

where corresponds to the strength of the measurement noise and and characterize the distribution over conditionally on a particular realization of . More precisely, and are the mean and covariance of and we therefore refer to them as logarithmic moments in the following.

Now, assuming that the posterior distribution over is log‐normally distributed at time ,

| (23) |

it will—based on our assumption—remain log‐normal upon applying the state transition kernel, i.e.,

| (24) |

where the sum has now been replaced by an integral. In order to calculate the logarithmic moments and for a given and , one first has to calculate all moments that enter equation (8) from the log‐normal distribution, propagate those forward in time until using (8), and subsequently convert them back into the logarithmic domain to obtain and . For instance, the relationship between logarithmic and standard moments of order one and two is given by

| (25) |

In order to determine the posterior distribution at the next measurement time , we multiply (24) with the log‐normal measurement density such that

| (26) |

with as the protein abundance at time . One can show that the product of the two log‐normal distributions in (26) is again proportional to a log‐normal distribution such that

| (27) |

with

| (28) |

| (29) |

and as a vector that reflects the fact that from , the second component (i.e., the protein abundance) is measured experimentally.

For the likelihood term we obtain

| (30) |

where the last line follows from the fact that each dimension of a multivariate log‐normal distribution with logarithmic moments and is marginally log‐normal with parameters and . This integral can be solved in closed form such that we obtain for the logarithm of the marginal likelihood function

| (31) |

Together, equations (8), (28), (29), and (31) define a recursive Bayesian filter, which allows us to eliminate the components from the inference problem. As mentioned above, the remaining component can then be inferred efficiently using a conventional sequential importance sampler. To this end, we define a set of particles, each of them consisting of a path , a set of logarithmic moments and as well as a particle probability . This set of particles serves as a finite sample approximation of the posterior distribution at each iteration . At the th time step, new particles are drawn randomly according to the particle probabilities . For each particle , the path is first extended to the next measurement using SSA. The new probability of this particle is then determined by first propagating the corresponding logarithmic moments until using equation (8) and then evaluating equation (31). The particle probabilities are then normalized across the particles such that they sum up to one. Subsequently, and are updated using (28) and (29) and the algorithm proceeds with the next iteration. At the final time , the paths associated with the particles represent samples from the desired marginal posterior distribution, which can be used for further analysis.

Quantitative characterization of promoter dynamics

The inference algorithm described above allows as to compute an arbitrary number of samples from the desired posterior distribution. In order to compare the dynamics of the different promoters under various experimental conditions, we extracted a number of features from these samples that characterize the transcriptional response for each individual cell. More technically, these features can be defined as functionals that map a random path to a real or discrete number. This functional can then be averaged with respect to the posterior distribution associated with a particular cell, i.e.,

| (32) |

with as the measurements of this cell and as samples from the posterior distribution obtained from the inference method. The following list summarizes the different features that were used in this study.

Responding/non‐responding. A cell is considered a responder if it resided in a state of significant transcriptional activity for at least 2 min. To this end, we defined a functional , which is one only if this criterion is met. We define a transcriptionally significant state as one that has a transcription rate of at least of the maximum transcription rate taken over all 50 min pulse conditions. Depending on the promoter and condition, this could encompass one, two, or none of the promoter states. We then estimated the response probability for each cell by averaging over all the individual samples paths obtained from the sequential Monte Carlo algorithm. A cell was then classified as a responder if . Subsequently, we calculated the percentage of responders for each promoter and condition.

Time to activate. For all responding cells, we calculated the posterior expectation of the time it took until the cell switched into a transcriptionally significant state, i.e., with as a functional that measures the time until the first transition into a responsive state happened. Paths for which the promoter was in a responsive state for less than two minutes were excluded from this expectation. We further calculated the mean and variance of the time until activation over all cells in an experiment.

Total time active. Analogously to the time to activate, we quantified the total time the promoter was active, i.e., with as a functional that extracts the total time the promoter spent in any of the active states.

Time spent in state . We calculated the total time the promoter spent in any of the three states, i.e., with

Maximum transcription. We calculated the maximum transcription rate that the promoter achieved during a time‐course experiment. In particular, we computed the expected transcription rate for each cell and subsequently the corresponding population average , whereas only cells that were classified as responders were considered. We then determined the maximum of this average, i.e, .

Time to maximum transcription. Next to the maximum transcription, we also determined the time when this maximum was achieved, i.e., .

Transcriptional output. To quantify the amount of transcription along a whole time course, we calculated the integral over the inferred transcription rates, i.e., .

Evaluation of the inference method using synthetic data

In order to study the accuracy of the proposed inference method, we tested it using artificially generated data. In particular, we considered two differently parameterized versions of the stochastic model in Fig 1C. The first one resembled a fast promoter like DCS2 or HXK1 whereas the second one had slow and switch‐like promoter activation kinetics like SIP18 or TKL1. In particular, the parameters of the system were chosen to be , , , , , , , , , , whereas for the slow and fast promoter model, respectively. All rate parameters are given in units .

For each promoter, we generated 30 single‐cell trajectories between time zero and using SSA and sampled the protein abundance at 55 equidistant time points . For the Msn2 activation function , we used the experimentally determined profile for a single‐pulse experiment (75% Msn2 induction level, 40min duration). The measurements were then simulated from a log‐normal measurement density , with as the protein copy number at time and as the logarithmic standard deviation of this density. For this study, we set .

We applied the hybrid sequential Monte Carlo algorithm to reconstruct the promoter dynamics and compared it with the true realization. In particular, we analyzed three of the path functionals described in Section “Quantitative characterization of promoter dynamics”: total time active, time to activate and transcriptional output. We estimated posterior expectations of these functionals using Monte Carlo samples and analyzed how they compared with the true values extracted from the exact sample paths . We first assumed perfect knowledge of all process parameters. The top panels in Fig EV2A and B show the inferred values plotted against the ground truth. For all three features, we found a linear relationship with a slope close to one. The corresponding indicates the reconstruction accuracy of the inference method. For the slowly switching promoter, we found values close to one, indicating very high accuracy. For the fast‐switching promoter, the inference results become slightly less accurate because individual switching events are more difficult to infer from the relatively slow reporter dynamics. We furthermore analyzed the robustness of the method with respect to parameter mismatch. To this end, we randomly perturbed all of the parameters using a log‐normal distribution with as the underlying true value. Note that the random parameter perturbation was performed for each of the considered trajectories separately. In case of poor robustness, we would thus expect a significantly reduced correlation between the true and inferred values. However, we found for all three features that both the and slope changed only marginally indicating a relatively high robustness of the method. This is an important feature in practical scenarios where knowledge about process parameters is generally imperfect.

Statistical analysis of Msn2‐dependent gene expression

In the following, we provide details on the statistical analysis of Msn2‐dependent gene expression as shown in the main text. In this case, the function corresponds to the nuclear Msn2 level that was measured experimentally for each condition (Fig EV1). In combination with the measured YFP time series, this allowed us to infer the input–output relationship of different promoters under different experimental conditions using the recursive inference method described in Section “Hybrid sequential Monte Carlo”. However, before this method could be applied, the stochastic model from Fig 1C had to be parameterized. For this purpose, we used a portion of the experimental single‐cell trajectories to infer the kinetic parameters of the model (Section “Statistical inference of kinetic parameters”). Subsequently, we reconstructed the transcription dynamics of each promoter and condition as described in Section “Statistical inference of transcription dynamics”.

Statistical inference of kinetic parameters

In order to parameterize the stochastic gene expression model for different promoters and experimental conditions, we used an established moment‐based inference method (Zechner et al, 2012). This method uses a Markov chain Monte Carlo sampler to match the first and second order moments of the stochastic gene network to the experimentally determined ones. For detailed information on this approach, the reader shall refer to (Zechner et al, 2012).

For each promoter, we first estimated the total set of parameters and using the single‐pulse experiments with maximum level and duration (100% Msn2, 50 min). Since the promoter switching dynamics can be concentration‐ and pulse length‐dependent, we re‐estimated the promoter parameters for all other conditions, while keeping fixed at the previously inferred values. The kinetics of the same gene expression system have been previously quantified using a deterministic model (Hansen & O’Shea, 2013). We incorporated this additional information in the form of prior distributions over some of the kinetic parameters. In particular, we considered Gamma prior distributions and for the mRNA degradation and average protein translation rates, respectively. Additionally, the protein degradation rate was fixed to . For the switching parameters and the transcription rates and , we used prior distributions . To infer the parameters, we applied a Metropolis‐Hastings sampler with log‐normal proposal distributions to generate samples from which we extracted maximum a posterior (MAP) estimates of the model parameters.

Statistical inference of transcription dynamics

Using the calibrated models, we inferred the transcription and promoter switching dynamics using the hybrid sequential Monte Carlo inference scheme from Section “Hybrid sequential Monte Carlo”. Based on our previous study (Zechner et al, 2014), which uses a similar data processing and calibration pipeline, we set the measurement noise parameter to corresponding to an expected relative variation of roughly 15 percent. For each condition and promoter, we processed each individual cell using particles. From the resulting particles, we estimated the promoter features as summarized in Section “Quantitative characterization of promoter dynamics”. We note that in some circumstances, the hybrid SMC algorithm can become numerically unstable. For instance, this may be the case in the presence of outliers, where two consecutive data points are very far away from each other. All cells that led to unstable results were excluded from our analyses. The ratio of excluded cells was fairly small for most promoters and conditions (i.e., for around of the 270 experiments less than of trajectories were excluded). For a small fraction of around of the experiments, between of the trajectories had to be dismissed. However, all these experiments correspond to promoters and conditions were gene expression signals were very low and close to background. Therefore, the exclusion of trajectories should affect our analyses to no significant extent. Moreover, we performed a quantitative analysis, which shows that the exclusion of trajectories did not strongly affect the statistical properties of the gene expression levels for individual promoters and conditions. The corresponding analysis can be found in the provided GitHub repository.