Abstract

Background

Numerous instruments are designed to measure digital literacy among the general population. However, few studies have assessed the use and appropriateness of these measurements for older populations.

Objective

This systematic review aims to identify and critically appraise studies assessing digital literacy among older adults and to evaluate how digital literacy instruments used in existing studies address the elements of age-appropriate digital literacy using the European Commission’s Digital Competence (DigComp) Framework.

Methods

Electronic databases were searched for studies using validated instruments to assess digital literacy among older adults. The quality of all included studies was evaluated using the Crowe Critical Appraisal Tool (CCAT). Instruments were assessed according to their ability to incorporate the competence areas of digital literacy as defined by the DigComp Framework: (1) information and data literacy, (2) communication and collaboration, (3) digital content creation, (4) safety, and (5) problem-solving ability, or attitudes toward information and communication technology use.

Results

Searches yielded 1561 studies, of which 27 studies (17 cross-sectional, 2 before and after, 2 randomized controlled trials, 1 longitudinal, and 1 mixed methods) were included in the final analysis. Studies were conducted in the United States (18/27), Germany (3/27), China (1/27), Italy (1/27), Sweden (1/27), Canada (1/27), Iran (1/27), and Bangladesh (1/27). Studies mostly defined older adults as aged ≥50 years (10/27) or ≥60 years (8/27). Overall, the eHealth Literacy Scale (eHEALS) was the most frequently used instrument measuring digital literacy among older adults (16/27, 59%). Scores on the CCAT ranged from 34 (34/40, 85%) to 40 (40/40, 100%). Most instruments measured 1 or 2 of the DigComp Framework’s elements, but the Mobile Device Proficiency Questionnaire (MDPQ) measured all 5 elements, including “digital content creation” and “safety.”

Conclusions

The current digital literacy assessment instruments targeting older adults have both strengths and weaknesses relative to their study design, administration method, and ease of use. Certain instruments, such as the MDPQ, are more generalizable and inclusive and are thus favorable for measuring the digital literacy of older adults. More studies focusing on the suitability of such instruments for older populations are warranted, especially for areas like “digital content creation” and “safety” that currently lack assessment. Evidence-based discussions regarding the implications of digitalization for the treatment of older adults and how health care professionals may benefit from this phenomenon are encouraged.

Keywords: healthy aging, eHealth, telehealth, mobile health, digital literacy, ehealth literacy, aging, elderly, older adults, review, literacy

Introduction

Background

Adopting digital technology is becoming imperative for all areas of service and business, including health care. In the era of global aging, digital technology is viewed as a new opportunity to overcome various challenges associated with aging, such as reduced physical and cognitive function, multiple chronic conditions, and altered social networking [1]. Consistent with this trend, the proportion of older people using digital technology has increased exponentially [2], although it is still smaller than that of younger generations. According to the latest Digital Economy Outlook Report from the Organisation for Economic Co-operation and Development (OECD), 62.8% of 55–74-year-olds are now connected to the internet, compared with 96.5% of 16–24-year-olds [3].

Improving the inclusion and engagement of older adults in digital technology is becoming increasingly important for the promotion of their health and function [4]. While numerous studies have measured the digital literacy of younger generations [5,6], few have examined the inclusion of older adults in the research and design of digital technologies. Moreover, existing measures of digital literacy for older adults are generally focused on acceptance models and barriers to adoption [7-9], which fail to consider heterogeneity in user ability. As emphasized by Mannheim et al [10], designs that focus heavily on barriers may be marginalizing older adults by assuming that they are less capable of utilizing digital technologies than their younger counterparts.

For health care professionals, the rapid digitalization of social and health care services has various implications for improving older adults’ access, knowledge, and behavior [11]. Telehealth platforms allow frailer older adults to receive medical support remotely [12], while GPS can be used to mine personalized data to locate older patients and track or predict their needs [13]. Internet use is associated with a reduced likelihood of depression among retirees, and social networking sites offer older adults an opportunity to reduce feelings of loneliness through online interactions with family and friends [14]. The increasing number of Alzheimer’s disease forums on the microblogging system Twitter, for example, shows how social networking systems serve as a platform for older individuals to share the latest health-related information with others [13].

Quantifying the digital literacy of older adults is the first step toward helping them take advantage of the digitalization of health care. However, measures of digital literacy among older adults must consider that competencies that are basic for one age cohort can be harder to achieve for another cohort with fewer experiences of, and opportunities to use, information and communication technology [15]. For older adults, other age-related factors, including life transitions, personal health, attitudes, and economic incentives, must also be considered during instrument research and design [16].

Prior Work

To our knowledge, few systematic reviews to date have evaluated instruments of digital literacy for older adults in general, although 1 systematic review of digitally underserved populations attributed poor eHealth literacy to age, as well as language, educational attainment, residential area, and race [17]. Furthermore, the compatibility between these instruments and older adults has not been measured according to a validated framework.

Goal of This Study

Therefore, this systematic review aimed to (1) identify and critically appraise studies that involved the assessment of digital literacy among older adults and (2) evaluate how digital literacy instruments used in existing studies address the elements of age-appropriate digital literacy using the European Commission’s Digital Competence (DigComp) Framework [18]. According to DigComp, digital literacy is defined in 5 areas: (1) information and data literacy, (2) communication and collaboration, (3) digital content creation, (4) safety, and (5) problem solving [18]. For this review, we chose the DigComp Framework over other frameworks, such as the International Computer and Information Literacy Study [19] and OECD’s Programme for the International Assessment of Adult Competencies [20], because the DigComp Framework is the most generalizable across different regions [21] and age groups [15].

Methods

Search Strategy and Data Sources

This systematic review was conducted by searching multiple electronic databases according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [22]. Electronic databases and search engines used in the initial screening period included PubMed, CINAHL, Embase, and MeSH. The keyword combinations used for each database are summarized in Multimedia Appendix 1. Keywords were matched to database-specific indexing terms, and searches were not limited to a specific region or study design. However, to reflect a more recent conceptualization of digital literacy, we limited the search to studies published from 2009 onward.

The reference lists of identified studies were manually reviewed by a team of academics to capture relevant studies that the database searches may have missed. EndNote X9 was used for database management.

Eligibility Criteria

We included studies that (1) were published in English, (2) targeted older adults, and (3) used a validated instrument to assess digital literacy. Publications in which older adults were not the main target population were excluded; for example, publications targeting the general population were not eligible.

Exceptions to this rule were studies that compared older populations to younger populations with the aim of addressing the age-related digital divide, like the study by Schneider and colleagues [23] comparing the digital literacy of “baby boomers” (50-65 years old) to that of millennials (18-35 years old).

Study Selection

Using these eligibility criteria, 3 independent investigators (SO, MK, and JO) examined all studies in the databases and search engines that reported the use of a digital literacy instrument. All studies were first screened by title and excluded if their main target population did not consist of older adults.

Abstracts were then screened to exclude non-English studies and studies that did not assess digital literacy with a validated instrument. At this stage, studies that did not report the required general characteristics were also excluded.

Last, full-text reviews were performed to ensure that all articles measured the digital literacy of older adults with validated instruments. At this stage, investigator-developed questionnaires were included only if the authors reported that experts had evaluated them for face validity. The instruments mentioned in each article were checked to ensure that they were accessible for our quality assessment. All processes were supervised by 2 independent reviewers (SC and JC), and any disagreements were resolved through discussion.

Data Collection

For each included study, we extracted the year of publication, study design, region where the study was conducted, age threshold used to define older adults, and main literacy instrument used. Regarding region, 2 studies were international collaborations: 1 between Italy and Sweden [24] and 1 among the United States, United Kingdom, and New Zealand [25]. For these 2 studies, the first author’s region was used in our summary of general characteristics.

Quality Assessment

Three independent reviewers (SO, JC, and KK) assessed the quality of each included study using the Crowe Critical Appraisal Tool (CCAT) [26]. The CCAT is a validated quality assessment tool developed to rate research papers in systematic reviews against criteria relating to research design, variables and analysis, sampling methods, and data collection (Multimedia Appendix 2) [26]. The tool has been used for quality appraisal in other systematic reviews targeting older adults [27,28].

Instruments were also assessed against the DigComp definition of the 5 areas of digital literacy: (1) information and data literacy (browsing, searching, filtering data), (2) communication and collaboration (interacting, sharing, engaging in citizenship, collaborating), (3) digital content creation (developing, integrating, and re-elaborating digital content; copyright; licenses; programming), (4) safety (protecting devices, protecting personal data and privacy, protecting health and well-being), and (5) problem solving (solving technical problems, identifying needs and technological responses, creatively using digital technologies, identifying digital competence gaps) [18].

Results

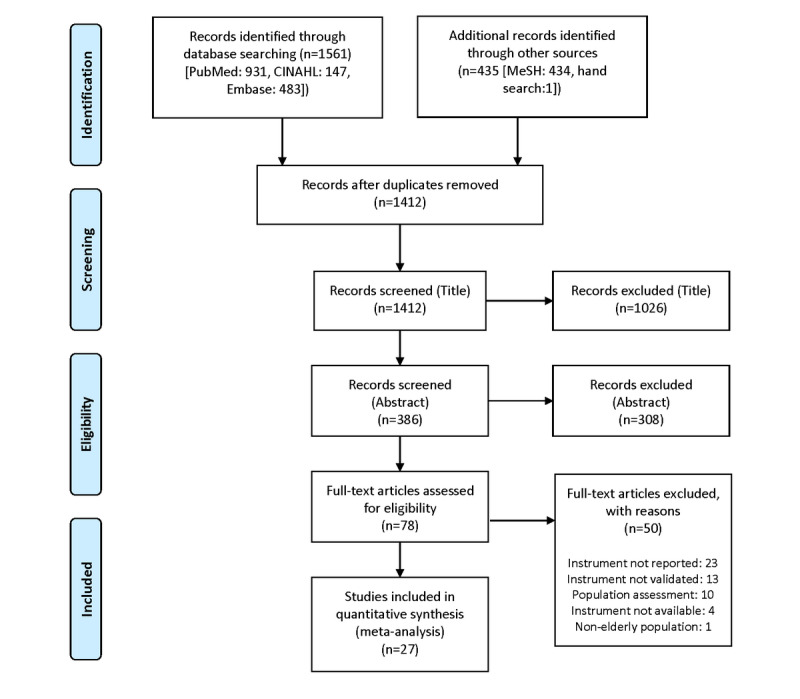

The PRISMA flow diagram in Figure 1 summarizes the search results and the selection process for all studies included in our synthesis. Overall, 1561 records were identified through database searching (PubMed: 931; CINAHL: 147; Embase: 483), and 435 additional records were identified through other sources (MeSH: 434; hand search: 1). Of these records, 1412 remained after duplicates were electronically removed. A further 1026 articles were removed during title screening, and 308 were removed during abstract screening.
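As a quick consistency check, the screening counts above can be reproduced with simple arithmetic. The minimal Python sketch below uses only the figures reported in this paragraph; the number of duplicates (584) is not stated in the text and is derived here by subtraction, and the variable names are illustrative.

```python
# Records identified, as reported in the text
database_hits = {"PubMed": 931, "CINAHL": 147, "Embase": 483}
other_sources = {"MeSH": 434, "hand search": 1}

identified = sum(database_hits.values())          # records from databases
total = identified + sum(other_sources.values())  # all records before deduplication
after_dedup = 1412                                # reported after duplicate removal
duplicates_removed = total - after_dedup          # derived, not reported directly
after_title = after_dedup - 1026                  # after title screening
after_abstract = after_title - 308                # full texts assessed for eligibility

print(identified, total, duplicates_removed, after_title, after_abstract)
# 1561 1996 584 386 78
```

The final value, 78, matches the number of full-text articles assessed for eligibility.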

Figure 1.

PRISMA flowchart of the literature search and study selection process.

Study Characteristics

Of the 78 articles assessed for eligibility, 51 were excluded for the following reasons: (1) no digital literacy instrument was reported, despite the title or abstract alluding to measures of digital literacy (n=23); (2) the instrument presented was not validated (n=13); (3) the study was mainly a population assessment that measured digital literacy only as part of a wider assessment of multiple factors (n=10); (4) the instrument was not available in English or in a publicly accessible format (n=4); and (5) the study did not specifically target older adults (n=1). Ultimately, 27 articles were included in our review.
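The full-text exclusion reasons can be tallied the same way. In this illustrative Python sketch (labels are paraphrased from the list above and the variable names are our own), summing the reported counts gives 51 exclusions, which is exactly the difference between the 78 full-text articles assessed and the 27 studies included.

```python
# Full-text exclusion reasons and counts, as listed in the text
exclusions = {
    "no digital literacy instrument reported": 23,
    "instrument not validated": 13,
    "digital literacy only part of a wider population assessment": 10,
    "instrument not in English or not publicly accessible": 4,
    "older adults not the target population": 1,
}

assessed = 78                       # full texts assessed for eligibility
excluded = sum(exclusions.values())
included = assessed - excluded      # studies remaining for the review

print(excluded, included)  # 51 27
```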

Table 1 provides a general summary of the included studies. Although publication years ranged from 2009 to 2020, most of the reviewed articles were published between 2015 and 2020. The majority (17/27, 63%) of included studies were cross-sectional, while 2 were pre- and post-test studies, 2 were randomized controlled trials (RCTs), 1 was longitudinal, and 1 was a mixed methods study combining surveys and focus group interviews. Most studies were conducted in the United States (18/27), but some were conducted in Europe (Germany, 3/27; Italy, 1/27; Sweden, 1/27). Studies mostly defined older adults as aged ≥50 years (10/27) or ≥60 years (8/27).

Table 1.

Summary of included studies (n=27).

| Categories | Results, n (%) |
|---|---|
| Year of study publication | |
| 2009-2010 | 2 (7) |
| 2011-2012 | 3 (11) |
| 2013-2014 | 2 (7) |
| 2015-2016 | 6 (22) |
| 2017-2018 | 9 (33) |
| 2019-2020 | 5 (19) |
| Study design | |
| Cross-sectional | 17 (63) |
| Before and after study | 2 (7) |
| Randomized controlled trial | 2 (7) |
| Longitudinal | 1 (4) |
| Mixed methodsa | 1 (4) |
| Region where study was conducted | |
| United States | 18 (67) |
| Germany | 3 (11) |
| China | 1 (4) |
| Italy | 1 (4) |
| Sweden | 1 (4) |
| Canada | 1 (4) |
| Iran | 1 (4) |
| Bangladesh | 1 (4) |
| Definition of older adults (years) | |
| ≥50 | 10 (37) |
| ≥55 | 4 (15) |
| ≥60 | 8 (30) |
| ≥65 | 5 (19) |
| Main health literacy instrument used | |
| eHealth Literacy Scale (eHEALS) | 16 (59) |
| Unified Theory of Acceptance and Use of Technology (UTAUT) | 3 (11) |
| Computer Anxiety Scale (CAS) | 2 (7) |
| Technology Acceptance Model (TAM) | 2 (7) |
| Swedish Zimbardo Time Perspective Inventory (S-ZTPI) | 1 (4) |
| Mobile Device Proficiency Questionnaire (MDPQ) | 1 (4) |
| Everyday Technology Use Questionnaire (ETUQ) | 1 (4) |
| Attitudes towards Psychological Online Interventions (APOI) | 1 (4) |

aSurvey and focus group interviews.
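The percentages in Table 1 appear to be each count divided by the 27 included studies and rounded to the nearest whole percent. The minimal Python sketch below assumes that denominator and rounding rule (neither is stated explicitly) and reproduces the reported values for a sample of rows; the helper name `percent` is our own. Because 27 is odd, 100 × n/27 never lands exactly on .5 for these counts, so Python's built-in `round()` is safe here.

```python
N_STUDIES = 27  # number of included studies

def percent(n: int, total: int = N_STUDIES) -> int:
    """Share of included studies, rounded to the nearest whole percent."""
    return round(100 * n / total)

# A sample of rows from Table 1 (counts as reported)
rows = {
    "2017-2018": 9,
    "2019-2020": 5,
    "Cross-sectional": 17,
    "United States": 18,
    "Older adults defined as >=50 years": 10,
    "eHEALS": 16,
}
for label, n in rows.items():
    print(f"{label}: {n} ({percent(n)})")
```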

Table 2 presents the detailed characteristics of all 27 included studies. Overall, the eHealth Literacy Scale (eHEALS) [29] was the most frequently used instrument to measure digital literacy among older adults (16/27, 59%). The Unified Theory of Acceptance and Use of Technology (UTAUT) was used by 2 studies from Germany [9,30] and 1 study from Bangladesh [31]. Loyd-Gressard’s Computer Attitude Scale (CAS) was used in 2 studies that focused heavily on computer anxiety and confidence [32,33]. Study quality as assessed with the CCAT varied little, with scores ranging from 34 (85%) to 40 (100%) of a possible 40 points.

Table 2.

Characteristics of included studies.

| Author | Year | Country | Sample size, n | Design | Study aim | Measure | CCATa,b score, points (% of total) |
|---|---|---|---|---|---|---|---|
| Roque et al [34] | 2016 | United States | 109 | Cross-sectional | To validate a new tool for measuring mobile device proficiency across the life span by assessing both basic and advanced proficiencies related to smartphone and tablet use | MDPQc, CPQd-12 | 37 (93) |
| Zambianchi et al [24] | 2019 | Italy and Sweden | 638 | Cross-sectional | To examine the determinants of attitudes towards and use of ICTse in older adults | S-ZTPIf, ATTQg | 38 (95) |
| Schneider et al [23] | 2018 | Germany | 577 | RCTh | To examine whether there are any differences in use of an online psychological intervention between generational groups based on Deprexis user data, responses on a questionnaire, and data in the EVIDENT study | APOIi | 40 (100) |
| Nagle et al [9] | 2012 | Germany | 52 | Cross-sectional | To get a better understanding of the factors affecting older adults' intention towards and usage of computers | UTAUTj | 34 (85) |
| Yoon et al [33] | 2015 | United States | 209 | Cross-sectional | To examine predictors of computer use and computer anxiety in older Korean Americans | CASk | 38 (95) |
| Cherid et al [35] | 2020 | Canada | 401 | Cross-sectional | To identify the current level of technology adoption, health, and eHealth literacy among older adults with a recent fracture, to determine if the use of electronic interventions would be feasible and acceptable in this population | eHEALSl | 39 (98) |
| Xie and Bo [36] | 2011 | United States | 146 | Cross-sectional | To examine the effects of a theory-driven eHealth literacy intervention for older adults | eHEALS | 39 (98) |
| Tennant et al [37] | 2015 | United States | 393 | Cross-sectional | To explore the extent to which sociodemographic, social determinants, and electronic device use influence eHealth literacy and use of Web 2.0 for health information among baby boomers and older adults | eHEALS | 36 (90) |
| Hoogland et al [38] | 2020 | United States | 198 | Cross-sectional | To examine age differences in eHealth literacy and use of technology devices/HITm in patients with cancer and characterize receptivity towards using home-based HIT to communicate with the oncology care team | eHEALS | 36 (90) |
| Price-Haywood et al [39] | 2017 | United States | 247 | Cross-sectional | To examine relationships between portal usages, interest in health-tracking tools, and eHealth literacy and to solicit practical solutions to encourage technology adoption | eHEALS | 37 (93) |
| Paige et al [40] | 2018 | United States | 830 | Cross-sectional | To examine the structure of eHEALS scores and the degree of measurement invariance among US adults representing the following generations: millennials (18-35 years old), Generation X (36-51 years old), baby boomers (52-70 years old), and the silent generation (71-84 years old) | eHEALS | 38 (95) |
| Aponte et al [41] | 2017 | United States | 20 | Cross-sectional | To explore the experiences of older Hispanics with type 2 diabetes in using the internet for diabetes management | eHEALS | 37 (93) |
| Xie and Bo [42] | 2011 | United States | 124 | Cross-sectional | To generate scientific knowledge about the potential impact of learning methods and information presentation channels on older adults' eHealth literacy | eHEALS | 39 (98) |
| Sudbury-Riley and Lynn [25] | 2017 | United States, United Kingdom, New Zealand | 996 | Cross-sectional | To examine the factorial validity and measurement invariance of the eHEALS among baby boomers in the United States, the United Kingdom, and New Zealand who had used the internet to search for health information in the last 6 months | eHEALS | 35 (88) |
| Noblin et al [43] | 2017 | United States | 181 | Cross-sectional | To determine the willingness of older adults to use health information from a variety of sources | eHEALS | 38 (95) |
| Cajita et al [44] | 2018 | United States | 129 | Cross-sectional | To examine factors that influence intention to use mobile technology in health care (mHealth) among older adults with heart failure | TAMn | 37 (93) |
| Lin et al [45] | 2019 | Iran | 468 | Longitudinal | To examine the temporal associations between eHealth literacy, insomnia, psychological distress, medication adherence, quality of life, and cardiac events among older patients with heart failure | eHEALS | 39 (98) |
| Chu et al [32] | 2009 | United States | 137 | RCT | To measure the psychosocial influences of computer anxiety, computer confidence, and computer self-efficacy in older adults at 6 meal congregate sites | CAS | 40 (100) |
| Rosenberg et al [46] | 2009 | Sweden | 157 | Cross-sectional | To measure the perceived difficulty in everyday technology use such as remote controls, cell phones, and microwave ovens by older adults with or without cognitive deficits | ETUQo | 37 (93) |
| Stellefson et al [47] | 2017 | United States | 283 | Cross-sectional | To examine the reliability and internal structure of eHEALS data collected from older adults aged ≥50 years responding to items over the telephone | eHEALS | 36 (90) |
| Chung et al [48] | 2015 | United States | 866 | Cross-sectional | To test the psychometric aspects of the eHEALS for older adults using secondary data analysis | eHEALS | 36 (90) |
| Li et al [49] | 2020 | China | 1201 | Cross-sectional | To examine the associations among health-promoting lifestyles, eHealth literacy, and cognitive health in older adults | eHEALS | 37 (93) |
| Choi et al [29] | 2013 | United States | 980 | Mixed methods | To examine internet use patterns, reasons for discontinued use, eHealth literacy, and attitudes toward computer or internet use among low-income homebound individuals aged ≥60 years in comparison to their younger counterparts (homebound adults <60 years old) | eHEALS, ATC/IQp | 37 (93) |
| Moore et al [8] | 2015 | United States | 30 | Cross-sectional | To offer design considerations in developing internet-based hearing health care for older adults by analyzing and discussing the relationship between chronological age, computer skills, and the acceptance of internet-based hearing health care | TAM | 35 (88) |
| Hoque et al [31] | 2017 | Bangladesh | 300 | Cross-sectional | To develop a theoretical model based on the UTAUT and then empirically test it to determine the key factors influencing elderly users’ intention to adopt and use mHealth services | UTAUT | 36 (90) |
| Niehaves et al [30] | 2014 | Germany | 150 | Cross-sectional | To study the intentions of the elderly with regard to internet use and identify important influencing factors | UTAUT | 35 (88) |
| Aponte et al [41] | 2017 | United States | 100 | Cross-sectional | To examine the validity of the Spanish version of the eHEALS with an older Hispanic population from a number of Spanish-language countries living in New York City | eHEALS | 37 (93) |

aCCAT: Crowe Critical Appraisal Tool.

bTotal CCAT score is 40 points.

cMDPQ: Mobile Device Proficiency Questionnaire.

dCPQ: Computer Proficiency Questionnaire.

eICTs: information and communication technologies.

fS-ZTPI: Swedish Zimbardo Time Perspective Inventory.

gATTQ: Attitudes Toward Technologies Questionnaire.

hRCT: randomized controlled trial.

iAPOI: Attitudes towards Psychological Online Interventions.

jUTAUT: Unified Theory of Acceptance and Use of Technology.

kCAS: Computer Attitude Scale.

leHEALS: eHealth Literacy Scale.

mHIT: health information technology.

nTAM: Adapted Technology Acceptance Model.

oETUQ: Everyday Technology Use Questionnaire.

pATC/IQ: Attitudes Toward Computer/Internet Questionnaire.
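The percentages in the last column of Table 2 correspond to each CCAT score divided by the 40-point maximum. One subtlety: several scores (35, 37, 39) fall exactly on a half percentage (87.5, 92.5, 97.5), and the reported values (88, 93, 98) are consistent with rounding halves up. Python's built-in `round()` uses round-half-to-even and would give 92 for 92.5, so the sketch below, an illustration rather than the authors' documented procedure, uses `decimal.ROUND_HALF_UP` instead. The helper name `ccat_percent` is our own.

```python
from decimal import Decimal, ROUND_HALF_UP

CCAT_MAX = 40  # total possible CCAT points (Table 2, footnote b)

def ccat_percent(score: int, max_score: int = CCAT_MAX) -> int:
    """Convert a CCAT score to a whole-number percentage, rounding halves up."""
    pct = Decimal(score) * 100 / Decimal(max_score)
    return int(pct.quantize(Decimal("1"), rounding=ROUND_HALF_UP))

scores = [34, 35, 36, 37, 38, 39, 40]
print({s: ccat_percent(s) for s in scores})
# {34: 85, 35: 88, 36: 90, 37: 93, 38: 95, 39: 98, 40: 100}
```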

As shown in Table 3, each instrument was assessed to determine which DigComp elements of digital literacy it covered [18]. Most instruments covered only 1 or 2 elements, whereas the Mobile Device Proficiency Questionnaire (MDPQ) covered all 5, including safety and digital content creation.

Table 3.

Inclusion of the European Commission’s Digital Competence (DigComp) Framework criteria and quality assessment of the included studies.

| Measure | Literacy element (1)a | (2) | (3) | (4) | (5) | Mode | Scoring | Reliability, Cronbach α |
|---|---|---|---|---|---|---|---|---|
| Attitude Toward Technologies Questionnaire (ATTQ) | Ob | O | Xc | X | X | Self-administered | 6 5-point Likert questions | 0.91 (Italy), 0.92 (Sweden) [50] |
| Adapted Technology Acceptance Model (TAM) | O | O | X | X | X | Self-administered | 6 7-point Likert questions | 0.91 (perceived ease of use), 0.97 (perceived usefulness), 0.96 (attitude toward using), 0.70 (actual system use) [51] |
| Attitudes Toward Computer/Internet Questionnaire (ATC/IQ) | O | X | X | X | X | Interview (semistructured) | 10 5-point Likert questions | 0.98 (usefulness), 0.94 (ease of use) [52], adapted by Choi and DiNitto [29] |
| Attitudes Towards Psychological Online Intervention Questionnaire (APOI) | O | X | X | X | X | Self-administered | 16 5-point Likert questions | 0.77 (total), 0.62 (skepticism and perception of risks), 0.62 (anonymity benefits), 0.64 (technologization threat), 0.72 (confidence in effectiveness) [53] |
| Computer Attitude Scale (CAS) | X | X | X | O | O | Self-administered | 4 10-point Likert questions | 0.95 (total), 0.90 (computer anxiety), 0.89 (computer confidence), 0.89 (computer liking), 0.82 (computer usefulness) [54] |
| eHealth Literacy Scale (eHEALS) | O | O | X | O | O | Self-administered | 8 5-point Likert questions | 0.88, 0.60-0.84 (range among items) [55] |
| Computer Proficiency Questionnaire (CPQ) | O | O | O | X | X | Self-administered | 33 5-point Likert questions | 0.98 (total for CPQ), 0.95 (total for CPQ-12), 0.91 (computer basics), 0.94 (printing), 0.95 (communication), 0.97 (internet), 0.96 (scheduling), 0.86 (multimedia) [56] |
| Mobile Device Proficiency Questionnaire (MDPQ) | O | O | O | O | O | Self-administered | 46 5-point Likert questions | 0.75 (MDPQ-46), 0.99 (MDPQ-16) [34] |
| Unified Theory of Acceptance and Usage of Technology (UTAUT) | O | O | O | X | O | Interview (face-to-face) | 15 7-point Likert questions | 0.7879-0.9497 [57] |

| Unified Theory of Acceptance and Usage of Technology (UTAUT) | O | O | O | X | O | Interview (face-to-face) | 15 7-point Likert questions | 0.7879-0.9497 [57] |

aEuropean Commission’s Digital Competence (DigComp) Framework criteria of (1) information and data literacy (browsing, searching, filtering data), (2) communication and collaboration (interacting, sharing, engaging in citizenship, collaborating), (3) digital content creation (developing, integrating, and re-elaborating digital content; copyright; licenses; programming), (4) safety (protecting devices, protecting personal data and privacy, protecting health and well-being), and (5) problem solving (solving technical problems, identifying needs and technological responses, creatively using digital technologies, identifying digital competence gaps) [18].

bO: included in the questionnaire.

cX: not included in the questionnaire.
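The reliability column of Table 3 reports Cronbach α. For k items, the standard formula is α = k / (k − 1) × (1 − (sum of item variances) / (variance of total scores)). The Python sketch below implements that formula with sample variances from the standard library; the helper name `cronbach_alpha` and the response matrix are hypothetical and only illustrate the computation, not data from any included study.

```python
from statistics import variance

def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of responses from the same respondents."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least 2 items")
    # Total score per respondent: zip(*items) groups each respondent's answers
    totals = [sum(resp) for resp in zip(*items)]
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))

# Hypothetical 5-point Likert responses: 3 items x 5 respondents
responses = [
    [4, 5, 3, 2, 4],
    [4, 4, 3, 2, 5],
    [5, 5, 2, 1, 4],
]
print(round(cronbach_alpha(responses), 2))  # 0.92
```

Values closer to 1 indicate that items vary together, which is why the near-ceiling α values in Table 3 are read as high internal consistency.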

Discussion

Principal Findings

In this systematic review, we highlighted the importance of digital literacy among older adults and provided a comprehensive overview of the instruments being used to measure their digital literacy. We also illustrated the strengths and limitations of each instrument, relative to age-appropriateness and suitability for older adults, in accordance with the components of a validated digital competence framework [18]. Our review is timely because, to the best of our knowledge, few systematic reviews to date have evaluated measures of digital literacy specifically for older adults.

In the digital era, educating patients about the management of their physical or mental illness or injury, explaining posttreatment home care needs, and managing diet, nutrition, and exercise are all duties that are beginning to be “digitalized” [58]. Moreover, digital technologies are providing practitioners with more effective and user-centered ways to educate, inform, and treat older patients. For example, in a systematic review of “virtual visits” in home care for older patients, both service users and providers found online visits to be more flexible, easier to arrange, and more personal than in-person visits [59]. In another study of an internet-based videoconferencing system for frail older people in Nordic countries, telehealth was associated with reduced loneliness among 88% of users, while also reducing the risk of catching a cold in winter by removing the need to leave the house [60].

Overall, we found that although the eHEALS is the most frequently used instrument for measuring digital literacy among older adults, the MDPQ may be more appropriate for this population. Unlike the eHEALS, the MDPQ attempts to measure older adults’ capacity for digital content creation (developing, integrating, and re-elaborating digital content; copyright; licenses; programming), which, according to the European Commission, can provide valuable information about an individual’s ability to add value to new media for self-expression and knowledge creation [18].

The MDPQ also contains numerous items related to data protection and privacy, such as “passwords can be created to block/unblock a mobile device” and “search history and temporary files can be deleted,” even though safety was, together with digital content creation, the least measured DigComp element among the instruments in our review. Only the CAS, eHEALS, and MDPQ include items related to data protection and privacy, which is concerning given that older adults make up a significant proportion of the targets of internet scams and email attacks [61].
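The coverage claims in this paragraph can be checked directly against Table 3. The sketch below transcribes the O/X marks from that table into a small Python dictionary (the string encoding and variable names are our own choice) and counts, for each DigComp element, how many of the 9 instruments include it. Safety (element 4) and digital content creation (element 3) are each covered by 3 instruments, and only the MDPQ covers all 5 elements.

```python
# DigComp coverage per instrument, transcribed from Table 3.
# Each string lists elements (1)-(5) in order: "O" = included, "X" = not included.
COVERAGE = {
    "ATTQ":   "OOXXX",
    "TAM":    "OOXXX",
    "ATC/IQ": "OXXXX",
    "APOI":   "OXXXX",
    "CAS":    "XXXOO",
    "eHEALS": "OOXOO",
    "CPQ":    "OOOXX",
    "MDPQ":   "OOOOO",
    "UTAUT":  "OOOXO",
}
ELEMENTS = [
    "information and data literacy",
    "communication and collaboration",
    "digital content creation",
    "safety",
    "problem solving",
]

# Number of instruments covering each element
per_element = {
    name: sum(flags[i] == "O" for flags in COVERAGE.values())
    for i, name in enumerate(ELEMENTS)
}
# Number of elements covered by each instrument
per_instrument = {inst: flags.count("O") for inst, flags in COVERAGE.items()}

print(per_element)
print([inst for inst, n in per_instrument.items() if n == len(ELEMENTS)])  # ['MDPQ']
print([inst for inst, flags in COVERAGE.items() if flags[3] == "O"])  # ['CAS', 'eHEALS', 'MDPQ']
```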

In our review of 27 selected articles, more than half (16/27, 59%) used the eHEALS to measure the digital literacy of older adults. Several reasons are plausible: the instrument is short (8 items), its questions are simple to understand (eg, “I know how to use the Internet to answer my health questions”), and it has been described as easy to administer to older adults [48]. However, because of this simplicity, the validity of the eHEALS has been debated [62-64]. Jordan and colleagues [64] argue that the “lack of an explicit definition of the concept that many health literacy indices were developed to measure limit... ...its ability to make fully informed judgments... ...about a person’s ability to seek, understand, and use health information.”
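To make the brevity of the eHEALS concrete: with 8 items on a 5-point Likert scale (Table 3), a total score can be obtained by summing the responses. The sketch below assumes items coded 1 (strongly disagree) to 5 (strongly agree) and an unweighted sum, giving a range of 8 to 40; this coding is an assumption for illustration rather than a detail reported in this review, and the `eheals_total` helper and sample responses are hypothetical.

```python
EHEALS_ITEMS = 8               # number of items (Table 3)
LIKERT_MIN, LIKERT_MAX = 1, 5  # assumed coding: 1 = strongly disagree ... 5 = strongly agree

def eheals_total(responses: list[int]) -> int:
    """Sum the 8 eHEALS item responses into a total score (assumed range 8-40)."""
    if len(responses) != EHEALS_ITEMS:
        raise ValueError(f"expected {EHEALS_ITEMS} responses, got {len(responses)}")
    if any(not LIKERT_MIN <= r <= LIKERT_MAX for r in responses):
        raise ValueError("responses must be on the 1-5 Likert scale")
    return sum(responses)

# Hypothetical respondent, for illustration only
print(eheals_total([4, 4, 3, 5, 4, 3, 2, 4]))  # 29
```

A single summed score is what makes the scale quick to administer, but it is also why critics argue it cannot separate the distinct skills that frameworks such as DigComp describe.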

Studies with similar research aims also tended to use similar instruments. For example, the CAS was used in 2 studies that focused on computer anxiety and confidence. In the wider literature, the CAS has often been used in studies of individuals in highly stressful environments, such as business graduate students [65], psychiatric inpatients [66], and students at a 2-year technical college experiencing “technostress” [67]. As explained by Kelley and Charness [68], older adults “commit more errors in post-training evaluations” than the general population, which may result in greater stress and anxiety. This may support the suitability of the CAS for older adult populations.

Regarding the overall quality of the included studies as evaluated with the CCAT, some variation existed. Studies that were cross-sectional, or that did not obtain written informed consent and instead used alternative approaches such as telephone, self-reported, web-based, or email surveys, lost points in the “design” and “ethical matters” categories. Studies also lost points if they lacked a flow diagram, did not mention the study design in the title, or used biased sampling methods (eg, convenience sampling or sampling of only 1 or 2 ethnic groups).

In contrast, the 2 RCTs in our review received a score of 100% on the CCAT, as they had excellent preliminaries, introductions, study design, sampling methods, data collection methods, ethical matters, results, and discussions. These studies employed performance-based measures like the Attitudes Toward Computer/Internet Questionnaire (ATC/IQ; semistructured interview) and UTAUT model (face-to-face interview), which are more reliable data collection methods than self-administered questionnaires. Performance-based measures like these may be suitable for studies targeting older adults, but clinical environments and personal fitness can greatly influence outcomes, especially in settings with learners of mixed ability [69], rapid progression [34], or potential embarrassment or discomfort [70]. Positive clinical settings are associated with improved performance, as observed in 1 of the RCTs in our review, where “a combination of patience, perseverance, and peer-to-peer or instructor encouragement, whether with words or a pat on the shoulder” was successful in reducing older adults’ stress and anxiety during digital learning [32].

As mentioned above, it is important for the research and design of digital technologies to account for the heterogeneity of older adults’ digital capacity. While instructions should be “clear and understandable” to study participants [34], we believe that literacy elements that generalize to the rest of the population (relating to communication, safety, problem solving, and competence) should also be measured in this population. As described by Hänninen et al [16], the digital capacity of older adults lies on a continuum, ranging from actively independent to limited.

Previous studies suggest that, instead of the full MDPQ or technology acceptance model (TAM), the shorter 16-question version of the MDPQ [34] or the senior version of the TAM (Senior Technology Acceptance & Adoption Model) may be more appropriate for older and frailer populations [7]. User-centeredness in instrument development and measurement is crucial for this population, as the functional status of older adults varies immensely. Scales and scoring methods should therefore be as inclusive as possible, so that they capture the diversity in functional ability among study participants.

Limitations

Our review has several limitations. First, the association between age and digital capacity is contested by some scholars, who argue that age-based divisions are too simplistic [23] and unclear [71] to explain the digital divide. In the Netherlands, for example, “digital natives” do not appear to exist, and other factors such as life stage and socialization are considered more relevant proxies of digital literacy than age [71]. Similarly, a German study found that perceived threat from technologization, rather than age itself, was the main predictor of digital capacity [23]. Older adults who perceive less threat could be digitally fluent, just as younger adults who perceive more threat could be digitally illiterate. Future questionnaires should measure this factor in depth, along with its possible interaction with age in predicting digital capacity.

Likewise, digital literacy is a process-oriented skill, and measuring it in isolation may not accurately capture an individual’s skill set [72]. In the Lily Model, Norman and Skinner [72] posit 6 core literacy skills: traditional, media, information, computer, scientific, and health. These skills are heavily interconnected, and only an in-depth analysis of all 6 can fully contextualize an individual’s personal, social, and environmental circumstances [72]. For example, computer literacy may depend heavily on an individual’s ability to read and understand passages (traditional literacy), to find information on a topic (information literacy), and to understand certain scientific terms (scientific literacy). Because of these interconnections, measuring digital literacy alone may not accurately reflect an individual’s knowledge.

Also, as observed in our review, many of the investigated instruments, including the Attitudes Toward Technologies Questionnaire, TAM, ATC-IQ, APOI, and CAS, measured attitudes or perceptions toward technology rather than digital aptitude itself. While studies on attitudes are important, the relative lack of measures examining older adults’ ability to use information and communications technology was an unexpected limitation of the studies reviewed.

Last, although previous studies have argued that the DigComp Framework is one of the broadest and most generalizable frameworks for assessing digital literacy [15,21], certain types of survey error are more likely to occur among older populations owing to memory loss, health problems, sensory and cognitive impairments, and personal or motivational factors that influence their ability to participate in research [73]. The author and editors of this framework specifically state that, because they adopted a “general” rather than an “individual” approach, their framework should be considered only a starting point for interpreting digital competence across different age groups [18].

Conclusions

More studies are needed to make the measurement of digital literacy among older adults more elaborate and specific. Digital literacy is strongly associated with the usefulness of information and communications technologies that promote older adults’ physical and mental well-being. Further studies of digital literacy among older adults that overcome the limitations of existing research and measurement designs would allow support and resources to be better allocated to address the diverse health care needs of this growing but vulnerable population.

Acknowledgments

This research was supported by the Brain Korea 21 FOUR Project funded by the National Research Foundation of Korea, Yonsei University College of Nursing. This study received funding from the National Research Foundation of Korea (grant number 2020R1A6A1A0304198911 [SO, KK, and JC]), the Ministry of Education of the Republic of Korea, and Yonsei University College of Nursing Faculty Research Fund (6-2020-0188 [SHC and JC]).

Abbreviations

- APOI: Attitudes towards Psychological Online Interventions

- ATC-IQ: Attitudes Toward Computer/Internet Questionnaire

- CAS: Computer Attitude Scale

- CCAT: Crowe Critical Appraisal Tool

- DigComp: European Commission’s Digital Competence framework

- eHEALS: eHealth Literacy Scale

- MDPQ: Mobile Device Proficiency Questionnaire

- OECD: Organisation for Economic Cooperation and Development

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- RCT: randomized controlled trial

- TAM: technology acceptance model

- UTAUT: unified theory of acceptance and use of technology

Appendix

Summary of database (DB) search terms.

Crowe Critical Appraisal Tool (CCAT) form.

Footnotes

Authors' Contributions: JC and SC conceptualized and supervised the study. JC, SC, and SO developed the methodology. SO, MK, and JO screened the studies and performed the formal analysis. KK and JC performed the validation. SO, KK, SC, and JC wrote, reviewed, and edited the manuscript. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest: None declared.

References

- 1. Maharani A, Pendleton N, Leroi I. Hearing Impairment, Loneliness, Social Isolation, and Cognitive Function: Longitudinal Analysis Using English Longitudinal Study on Ageing. Am J Geriatr Psychiatry. 2019 Dec;27(12):1348–1356. doi: 10.1016/j.jagp.2019.07.010.

- 2. Anderson M, Perrin A. Tech adoption climbs among older adults. Pew Research Center. 2017. May 17, [2021-01-23]. https://www.pewresearch.org/internet/2017/05/17/tech-adoption-climbs-among-older-adults/

- 3. OECD Secretariat. OECD Digital Economy Outlook 2017. Organisation for Economic Cooperation and Development (OECD). 2017. Oct 11, [2021-01-23]. https://www.oecd.org/digital/oecd-digital-economy-outlook-2017-9789264276284-en.htm.

- 4. Rogers SE. Bridging the 21st Century Digital Divide. TechTrends. 2016 Mar 30;60(3):197–199. doi: 10.1007/s11528-016-0057-0.

- 5. Akçayır M, Dündar H, Akçayır G. What makes you a digital native? Is it enough to be born after 1980? Computers in Human Behavior. 2016 Jul;60:435–440. doi: 10.1016/j.chb.2016.02.089.

- 6. Oblinger DG, Oblinger JL, Lippincott JK, editors. Educating the net generation. Boulder, CO: EDUCAUSE; 2005.

- 7. Chen K, Chan AHS. Gerontechnology acceptance by elderly Hong Kong Chinese: a senior technology acceptance model (STAM). Ergonomics. 2014 Mar 24;57(5):635–52. doi: 10.1080/00140139.2014.895855.

- 8. Moore AN, Rothpletz AM, Preminger JE. The Effect of Chronological Age on the Acceptance of Internet-Based Hearing Health Care. Am J Audiol. 2015 Sep;24(3):280–283. doi: 10.1044/2015_aja-14-0082.

- 9. Nägle S, Schmidt L. Computer acceptance of older adults. Work. 2012;41 Suppl 1:3541–8. doi: 10.3233/WOR-2012-0633-3541.

- 10. Mannheim I, Schwartz E, Xi W, Buttigieg SC, McDonnell-Naughton M, Wouters EJM, van Zaalen Y. Inclusion of Older Adults in the Research and Design of Digital Technology. Int J Environ Res Public Health. 2019 Oct 02;16(19):3718. doi: 10.3390/ijerph16193718. https://www.mdpi.com/resolver?pii=ijerph16193718.

- 11. DeWalt DA, Broucksou KA, Hawk V, Brach C, Hink A, Rudd R, Callahan L. Developing and testing the health literacy universal precautions toolkit. Nurs Outlook. 2011 Mar;59(2):85–94. doi: 10.1016/j.outlook.2010.12.002. http://europepmc.org/abstract/MED/21402204.

- 12. Ramsetty A, Adams C. Impact of the digital divide in the age of COVID-19. J Am Med Inform Assoc. 2020 Jul 01;27(7):1147–1148. doi: 10.1093/jamia/ocaa078. http://europepmc.org/abstract/MED/32343813.

- 13. Cotten S. Examining the Roles of Technology in Aging and Quality of Life. J Gerontol B Psychol Sci Soc Sci. 2017 Sep 01;72(5):823–826. doi: 10.1093/geronb/gbx109.

- 14. McCausland L, Falk NL. From dinner table to digital tablet: technology's potential for reducing loneliness in older adults. J Psychosoc Nurs Ment Health Serv. 2012 May 25;50(5):22–6. doi: 10.3928/02793695-20120410-01. https://pubmed.ncbi.nlm.nih.gov/22533840/

- 15. Jin K, Reichert F, Cagasan LP, de la Torre J, Law N. Measuring digital literacy across three age cohorts: Exploring test dimensionality and performance differences. Computers & Education. 2020 Nov;157:103968. doi: 10.1016/j.compedu.2020.103968.

- 16. Hänninen R, Taipale S, Luostari R. Exploring heterogeneous ICT use among older adults: The warm experts’ perspective. New Media & Society. 2020 May 06;:146144482091735. doi: 10.1177/1461444820917353.

- 17. Chesser A, Burke A, Reyes J, Rohrberg T. Navigating the digital divide: A systematic review of eHealth literacy in underserved populations in the United States. Inform Health Soc Care. 2016 Feb 24;41(1):1–19. doi: 10.3109/17538157.2014.948171.

- 18. Ferrari A, Punie Y, Brecko B. DIGCOMP: A framework for developing and understanding digital competence in Europe. Luxembourg: Publications Office of the European Union; 2013.

- 19. Fraillon J, Ainley J. The IEA international study of computer and information literacy (ICILS). Camberwell, Victoria: Australian Council for Educational Research; 2013. [2021-01-23]. https://cms.education.gov.il/NR/rdonlyres/A07C37A9-8709-4B9B-85C0-63B9D57F1715/167512/ICILSProjectDescription1.pdf.

- 20. Organisation for Economic Cooperation and Development (OECD). Literacy, Numeracy and Problem Solving in Technology-Rich Environments: Framework for the OECD Survey of Adult Skills. Paris, France: OECD Publishing; 2012. [2021-01-15]. https://www.oecd-ilibrary.org/education/literacy-numeracy-and-problem-solving-in-technology-rich-environments_9789264128859-en.

- 21. Law N, Woo D, de la Torre J, Wong G. A global framework of reference on digital literacy skills for indicator 4.4.2. UNESCO Institute for Statistics. 2018. [2021-01-23]. https://unesdoc.unesco.org/ark:/48223/pf0000265403.

- 22. McInnes MDF, Moher D, Thombs BD, McGrath TA, Bossuyt PM, the PRISMA-DTA Group, Clifford T, Cohen JF, Deeks JJ, Gatsonis C, Hooft L, Hunt HA, Hyde CJ, Korevaar DA, Leeflang MMG, Macaskill P, Reitsma JB, Rodin R, Rutjes AWS, Salameh JP, Stevens A, Takwoingi Y, Tonelli M, Weeks L, Whiting P, Willis BH. Preferred Reporting Items for a Systematic Review and Meta-analysis of Diagnostic Test Accuracy Studies: The PRISMA-DTA Statement. JAMA. 2018 Jan 23;319(4):388–396. doi: 10.1001/jama.2017.19163.

- 23. Schneider BC, Schröder J, Berger T, Hohagen F, Meyer B, Späth C, Greiner W, Hautzinger M, Lutz W, Rose M, Vettorazzi E, Moritz S, Klein JP. Bridging the "digital divide": A comparison of use and effectiveness of an online intervention for depression between Baby Boomers and Millennials. J Affect Disord. 2018 Aug 15;236:243–251. doi: 10.1016/j.jad.2018.04.101.

- 24. Zambianchi M, Rönnlund M, Carelli MG. Attitudes Towards and Use of Information and Communication Technologies (ICTs) Among Older Adults in Italy and Sweden: the Influence of Cultural Context, Socio-Demographic Factors, and Time Perspective. J Cross Cult Gerontol. 2019 Sep 11;34(3):291–306. doi: 10.1007/s10823-019-09370-y.

- 25. Sudbury-Riley L, FitzPatrick M, Schulz PJ. Exploring the Measurement Properties of the eHealth Literacy Scale (eHEALS) Among Baby Boomers: A Multinational Test of Measurement Invariance. J Med Internet Res. 2017 Feb 27;19(2):e53. doi: 10.2196/jmir.5998. https://www.jmir.org/2017/2/e53/

- 26. Crowe M. Crowe critical appraisal tool (CCAT) user guide. Conchra House. 2013. [2021-01-23]. https://conchra.com.au/wp-content/uploads/2015/12/CCAT-user-guide-v1.4.pdf.

- 27. Donnelly N, Hickey A, Burns A, Murphy P, Doyle F. Systematic review and meta-analysis of the impact of carer stress on subsequent institutionalisation of community-dwelling older people. PLoS One. 2015 Jun 2;10(6):e0128213. doi: 10.1371/journal.pone.0128213. https://dx.plos.org/10.1371/journal.pone.0128213.

- 28. Sezgin D, Hendry A, Liew A, O'Donovan M, Salem M, Carriazo AM, López-Samaniego L, Rodríguez-Acuña R, Kennelly S, Illario M, Arnal Carda C, Inzitari M, Hammar T, O'Caoimh R. Transitional palliative care interventions for older adults with advanced non-malignant diseases and frailty: a systematic review. JICA. 2020 Jun 17;28(4):387–403. doi: 10.1108/jica-02-2020-0012.

- 29. Choi NG, DiNitto DM. The digital divide among low-income homebound older adults: Internet use patterns, eHealth literacy, and attitudes toward computer/Internet use. J Med Internet Res. 2013 May 02;15(5):e93. doi: 10.2196/jmir.2645. https://www.jmir.org/2013/5/e93/

- 30. Niehaves B, Plattfaut R. Internet adoption by the elderly: employing IS technology acceptance theories for understanding the age-related digital divide. European Journal of Information Systems. 2017 Dec 19;23(6):708–726. doi: 10.1057/ejis.2013.19.

- 31. Hoque R, Sorwar G. Understanding factors influencing the adoption of mHealth by the elderly: An extension of the UTAUT model. Int J Med Inform. 2017 May;101:75–84. doi: 10.1016/j.ijmedinf.2017.02.002.

- 32. Chu AYM. Psychosocial influences of computer anxiety, computer confidence, and computer self-efficacy with online health information in older adults. Denton, TX: Texas Woman's University; 2008.

- 33. Yoon H, Jang Y, Xie B. Computer Use and Computer Anxiety in Older Korean Americans. J Appl Gerontol. 2016 Sep 09;35(9):1000–10. doi: 10.1177/0733464815570665.

- 34. Roque NA, Boot WR. A New Tool for Assessing Mobile Device Proficiency in Older Adults: The Mobile Device Proficiency Questionnaire. J Appl Gerontol. 2018 Feb 11;37(2):131–156. doi: 10.1177/0733464816642582.

- 35. Cherid C, Baghdadli A, Wall M, Mayo NE, Berry G, Harvey EJ, Albers A, Bergeron SG, Morin SN. Current level of technology use, health and eHealth literacy in older Canadians with a recent fracture-a survey in orthopedic clinics. Osteoporos Int. 2020 Jul 28;31(7):1333–1340. doi: 10.1007/s00198-020-05359-3.

- 36. Xie B. Effects of an eHealth literacy intervention for older adults. J Med Internet Res. 2011 Nov 03;13(4):e90. doi: 10.2196/jmir.1880. https://www.jmir.org/2011/4/e90/

- 37. Tennant B, Stellefson M, Dodd V, Chaney B, Chaney D, Paige S, Alber J. eHealth literacy and Web 2.0 health information seeking behaviors among baby boomers and older adults. J Med Internet Res. 2015 Mar 17;17(3):e70. doi: 10.2196/jmir.3992. https://www.jmir.org/2015/3/e70/

- 38. Hoogland AI, Mansfield J, Lafranchise EA, Bulls HW, Johnstone PA, Jim HS. eHealth literacy in older adults with cancer. J Geriatr Oncol. 2020 Jul;11(6):1020–1022. doi: 10.1016/j.jgo.2019.12.015.

- 39. Price-Haywood EG, Harden-Barrios J, Ulep R, Luo Q. eHealth Literacy: Patient Engagement in Identifying Strategies to Encourage Use of Patient Portals Among Older Adults. Popul Health Manag. 2017 Dec;20(6):486–494. doi: 10.1089/pop.2016.0164.

- 40. Paige SR, Miller MD, Krieger JL, Stellefson M, Cheong J. Electronic Health Literacy Across the Lifespan: Measurement Invariance Study. J Med Internet Res. 2018 Jul 09;20(7):e10434. doi: 10.2196/10434. https://www.jmir.org/2018/7/e10434/

- 41. Aponte J, Nokes K. Electronic health literacy of older Hispanics with diabetes. Health Promot Int. 2017 Jun 01;32(3):482–489. doi: 10.1093/heapro/dav112.

- 42. Xie B. Experimenting on the impact of learning methods and information presentation channels on older adults' e-health literacy. J. Am. Soc. Inf. Sci. 2011 Jun 06;62(9):1797–1807. doi: 10.1002/asi.21575.

- 43. Noblin AM, Rutherford A. Impact of Health Literacy on Senior Citizen Engagement in Health Care IT Usage. Gerontol Geriatr Med. 2017 Apr 26;3:2333721417706300. doi: 10.1177/2333721417706300. https://journals.sagepub.com/doi/10.1177/2333721417706300?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed.

- 44. Cajita MI, Hodgson NA, Budhathoki C, Han H. Intention to Use mHealth in Older Adults With Heart Failure. The Journal of Cardiovascular Nursing. 2017;32(6):E1–E7. doi: 10.1097/jcn.0000000000000401.

- 45. Lin C, Ganji M, Griffiths MD, Bravell ME, Broström A, Pakpour AH. Mediated effects of insomnia, psychological distress and medication adherence in the association of eHealth literacy and cardiac events among Iranian older patients with heart failure: a longitudinal study. Eur J Cardiovasc Nurs. 2020 Feb 13;19(2):155–164. doi: 10.1177/1474515119873648.

- 46. Rosenberg L, Kottorp A, Winblad B, Nygård L. Perceived difficulty in everyday technology use among older adults with or without cognitive deficits. Scand. J. of Occupational Therapy. 2009:1–11. doi: 10.3109/11038120802684299.

- 47. Stellefson M, Chaney B, Barry AE, Chavarria E, Tennant B, Walsh-Childers K, Sriram P, Zagora J. Web 2.0 chronic disease self-management for older adults: a systematic review. J Med Internet Res. 2013 Feb 14;15(2):e35. doi: 10.2196/jmir.2439. https://www.jmir.org/2013/2/e35/

- 48. Chung S, Nahm E. Testing reliability and validity of the eHealth Literacy Scale (eHEALS) for older adults recruited online. Comput Inform Nurs. 2015 Apr;33(4):150–6. doi: 10.1097/CIN.0000000000000146. http://europepmc.org/abstract/MED/25783223.

- 49. Li S, Yin Y, Cui G, Xu H. The Associations Among Health-Promoting Lifestyle, eHealth Literacy, and Cognitive Health in Older Chinese Adults: A Cross-Sectional Study. Int J Environ Res Public Health. 2020 Mar 27;17(7):2263. doi: 10.3390/ijerph17072263. https://www.mdpi.com/resolver?pii=ijerph17072263.

- 50. Zambianchi M, Carelli M. Attitudes Toward Technologies Questionnaire (ATTQ). Umeå, Sweden: Umeå University; 2013.

- 51. Davis F. Doctoral Dissertation: A technology acceptance model for empirically testing new end-user information systems: Theory and results. Massachusetts Institute of Technology. 1985. [2021-01-23]. https://dspace.mit.edu/handle/1721.1/15192.

- 52. Bear GG, Richards HC, Lancaster P. Attitudes toward Computers: Validation of a Computer Attitudes Scale. Journal of Educational Computing Research. 1995 Jan 01;3(2):207–218. doi: 10.2190/1dyt-1jej-t8j5-1yc7.

- 53. Schröder J, Sautier L, Kriston L, Berger T, Meyer B, Späth C, Köther U, Nestoriuc Y, Klein JP, Moritz S. Development of a questionnaire measuring Attitudes towards Psychological Online Interventions-the APOI. J Affect Disord. 2015 Nov 15;187:136–41. doi: 10.1016/j.jad.2015.08.044.

- 54. Loyd BH, Gressard C. Reliability and Factorial Validity of Computer Attitude Scales. Educational and Psychological Measurement. 2016 Sep 07;44(2):501–505. doi: 10.1177/0013164484442033.

- 55. Norman CD, Skinner HA. eHEALS: The eHealth Literacy Scale. J Med Internet Res. 2006 Nov 14;8(4):e27. doi: 10.2196/jmir.8.4.e27. https://www.jmir.org/2006/4/e27/

- 56. Boot WR, Charness N, Czaja SJ, Sharit J, Rogers WA, Fisk AD, Mitzner T, Lee CC, Nair S. Computer proficiency questionnaire: assessing low and high computer proficient seniors. Gerontologist. 2015 Jun;55(3):404–11. doi: 10.1093/geront/gnt117. http://europepmc.org/abstract/MED/24107443.

- 57. Venkatesh V, Morris MG, Davis GB, Davis FD. User Acceptance of Information Technology: Toward a Unified View. MIS Quarterly. 2003;27(3):425. doi: 10.2307/30036540.

- 58. Edirippulige S. Changing Role of Nurses in the Digital Era: Nurses and Telehealth. In: Yogesan K, Bos L, Brett P, Gibbons MC, editors. Handbook of Digital Homecare. Cham, Switzerland: Springer Nature Switzerland AG; 2009. pp. 269–85.

- 59. Husebø AML, Storm M. Virtual visits in home health care for older adults. ScientificWorldJournal. 2014;2014:689873–11. doi: 10.1155/2014/689873.

- 60. Savolainen L, Hanson E, Magnusson L, Gustavsson T. An Internet-based videoconferencing system for supporting frail elderly people and their carers. J Telemed Telecare. 2008 Mar;14(2):79–82. doi: 10.1258/jtt.2007.070601.

- 61. Munteanu C, Tennakoon C, Garner J, Goel A, Ho M, Shen C, Windeyer R. Improving older adults' online security: An exercise in participatory design. Symposium on Usable Privacy and Security (SOUPS); July 22-24, 2015; Ottawa, Canada. 2015.

- 62. Collins SA, Currie LM, Bakken S, Vawdrey DK, Stone PW. Health literacy screening instruments for eHealth applications: a systematic review. J Biomed Inform. 2012 Jun;45(3):598–607. doi: 10.1016/j.jbi.2012.04.001. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(12)00054-8.

- 63. Hu X, Bell RA, Kravitz RL, Orrange S. The prepared patient: information seeking of online support group members before their medical appointments. J Health Commun. 2012;17(8):960–78. doi: 10.1080/10810730.2011.650828.

- 64. Jordan JE, Osborne RH, Buchbinder R. Critical appraisal of health literacy indices revealed variable underlying constructs, narrow content and psychometric weaknesses. J Clin Epidemiol. 2011 Apr;64(4):366–79. doi: 10.1016/j.jclinepi.2010.04.005.

- 65. Hsu MK, Wang SW, Chiu KK. Computer attitude, statistics anxiety and self-efficacy on statistical software adoption behavior: An empirical study of online MBA learners. Computers in Human Behavior. 2009 Mar;25(2):412–420. doi: 10.1016/j.chb.2008.10.003.

- 66. Weber B, Schneider B, Hornung S, Wetterling T, Fritze J. Computer attitude in psychiatric inpatients. Computers in Human Behavior. 2008 Jul;24(4):1741–1752. doi: 10.1016/j.chb.2007.07.006.

- 67. Ballance CT. Psychology of Computer Use: XXIV. Computer-Related Stress Among Technical College Students. Psychological Reports. 1991 Oct;69(6):539. doi: 10.2466/pr0.1991.69.2.539.

- 68. Kelley CL, Charness N. Issues in training older adults to use computers. Behaviour & Information Technology. 1995 Mar;14(2):107–120. doi: 10.1080/01449299508914630.

- 69. Czaja SJ, Charness N, Fisk AD, Hertzog C, Nair SN, Rogers WA, Sharit J. Factors predicting the use of technology: findings from the Center for Research and Education on Aging and Technology Enhancement (CREATE). Psychol Aging. 2006 Jun;21(2):333–52. doi: 10.1037/0882-7974.21.2.333. http://europepmc.org/abstract/MED/16768579.

- 70. Sutherland LM, Kowalski KC, Ferguson LJ, Sabiston CM, Sedgwick WA, Crocker PR. Narratives of young women athletes’ experiences of emotional pain and self-compassion. Qualitative Research in Sport, Exercise and Health. 2014 Mar 14;6(4):499–516. doi: 10.1080/2159676x.2014.888587.

- 71. Loos E. Senior citizens: Digital immigrants in their own country? Observatorio. 2012;6(1):1–23. http://obs.obercom.pt/index.php/obs/article/view/513/477.

- 72. Norman CD, Skinner HA. eHealth Literacy: Essential Skills for Consumer Health in a Networked World. J Med Internet Res. 2006 Jun 16;8(2):e9. doi: 10.2196/jmir.8.2.e9. https://www.jmir.org/2006/2/e9/

- 73. Rodgers W, Herzog A. Collecting data about the oldest old: Problems and procedures. In: Manton KG, Willis DP, Suzman RM, editors. The oldest old. Oxford, England: Oxford University Press; 1995. pp. 135–56.
