Abstract

In this month’s Hospital Pediatrics, Liao et al1 share their team’s journey to improve the accuracy of their institution’s electronic health record (EHR) problem list. They presented their results as statistical process control (SPC) charts, a mainstay of visualization and analysis that helps improvers understand processes, test hypotheses, and quickly learn how effective their interventions are. Although many readers might understand that 8 consecutive points above or below the mean signify special cause variation resulting in a centerline “shift,” these charts reveal many more types of special cause variation that likely provided valuable real-time information to the improvement team. These “signals” might not be apparent to casual readers when looking at the complete data set in article form.

Shewhart2 first introduced SPC charts to the world with the publication of Economic Control of Quality of Manufactured Product in 1931. Although control charts were initially used more broadly in industrial settings, health care providers have also recently begun to understand that the use of SPC charts is vital in improvement work.3,4 Deming,5 often seen as the “grandfather” of quality improvement (QI), saw SPC charts as vital to understanding variation as part of his well-known Theory of Profound Knowledge, outlined in his book The New Economics for Industry, Government, Education. Improvement science harnesses the scientific method: improvers create and rapidly test hypotheses and learn from their data to determine whether their hypotheses are correct.6 This testing is central to the Model for Improvement’s plan-do-study-act cycle.3 Liao et al1 nicely laid out their hypotheses in a key driver diagram, and they tested these hypotheses with multiple interventions. In the following paragraphs, we will walk through some of their SPC charts to demonstrate how this improvement team was gaining valuable knowledge about their hypotheses through different types of special cause variation long before they had 8 points to reveal shifts. We recommend readers have the charts from the original article (OA) available for reference.

A fundamental concept in improvement science is understanding the difference between common cause and special cause variation. By understanding how to apply these concepts to your data, you will more quickly identify when a change has occurred and whether action should be taken. The authors’ SPC charts reveal examples of both common cause and special cause variation.

Common cause variation arises from causes that are inherent in the system or process.4 Evidence of common cause variation can be seen visually in the OA’s Fig 3, from January 2017 to October 2017, because the data points vary around the mean but remain between the upper and lower control limits (dotted lines). In contrast, special cause variation arises from causes that are not inherent to the system.4 Although there are different rules that signify special cause variation in SPC charts, some of the most common rules that we will focus on here include (1) a single data point outside of the control limits, (2) 8 consecutive points above or below the mean line, and (3) ≥6 consecutive points all moving in the same direction, termed a “trend.”4 When any of these occur, it is paramount to identify when and why the special cause occurred, learn from the special cause, and then take appropriate action. By quickly detecting special cause variation, improvement teams can more readily assess the impact of interventions by validating whether their hypothesis for improvement is correct.
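The three rules above can be sketched in code. The following is a minimal illustration, not the method used in the OA: the function names and the monthly values are hypothetical, the centerline and control limits are assumed to be supplied by the caller, and two conventions are assumptions of this sketch (a point exactly on the centerline neither extends nor breaks a run, and two equal consecutive values reset a trend).

```python
def points_outside_limits(values, lcl, ucl):
    """Rule 1: indices of points below the lower or above the upper control limit."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


def shift_signals(values, center, run_length=8):
    """Rule 2: indices at which a run of `run_length` or more consecutive
    points on the same side of the centerline is in place."""
    signals = []
    side, run = 0, 0
    for i, v in enumerate(values):
        current = (v > center) - (v < center)  # +1 above, -1 below, 0 on the line
        if current == 0:
            continue  # assumption: points on the centerline are skipped
        if current == side:
            run += 1
        else:
            side, run = current, 1
        if run >= run_length:
            signals.append(i)
    return signals


def trend_signals(values, trend_length=6):
    """Rule 3: indices at which `trend_length` or more consecutive points
    are all increasing or all decreasing."""
    signals = []
    direction, count = 0, 1  # count = number of points in the current trend
    for i in range(1, len(values)):
        step = (values[i] > values[i - 1]) - (values[i] < values[i - 1])
        if step != 0 and step == direction:
            count += 1
        elif step != 0:
            direction, count = step, 2
        else:
            direction, count = 0, 1  # assumption: a tie resets the trend
        if count >= trend_length:
            signals.append(i)
    return signals


# Hypothetical monthly percentages, invented for illustration only.
monthly = [70, 72, 68, 71, 69, 73, 78, 82, 85, 88, 90, 92]
print(points_outside_limits(monthly, lcl=60, ucl=80))
print(trend_signals(monthly))
print(shift_signals(monthly, center=70))
```

In this made-up series, rule 1 and rule 3 both signal while the run of 8 points required by rule 2 has not yet completed, which is the kind of early information the rest of this commentary describes.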

An example of special cause variation can be seen in the OA’s Fig 2, noted by the shift in the centerline in May 2018 from a baseline of 70% of problem lists reviewed during admission to a new centerline of 90% of problem lists reviewed during admission. Notice that this new, stable process represented by the new centerline starts after the team tested 3 separate interventions that were directly testing hypotheses related to their key drivers. Although the shift began in May 2018, the first special cause signal the improvement team would have seen is the first point outside of the upper control limit in January 2018, which comes immediately after their first 2 interventions. As more months go by, each subsequent point continues to represent special cause variation because it is outside of the control limits. Finally, when the data point in May 2018 is plotted, it is apparent that an upward trend started in December 2017, with 6 consecutive data points increasing through May 2018. Therefore, the authors recognized special cause variation (a trend) by having 6 consecutive increasing points. Given that their interventions were grounded in theory and that the trend beginning in December 2017 closely followed the interventions in November and December 2017, there is a high degree of belief that the interventions are driving these results. In other words, their hypothesis that the EHR enhancements, the dissemination of a protocol, and the designation of a bonus would improve the percentage of times that the problem list is “reviewed” was confirmed as early as December 2017, long before the eventual centerline shift in May 2018.

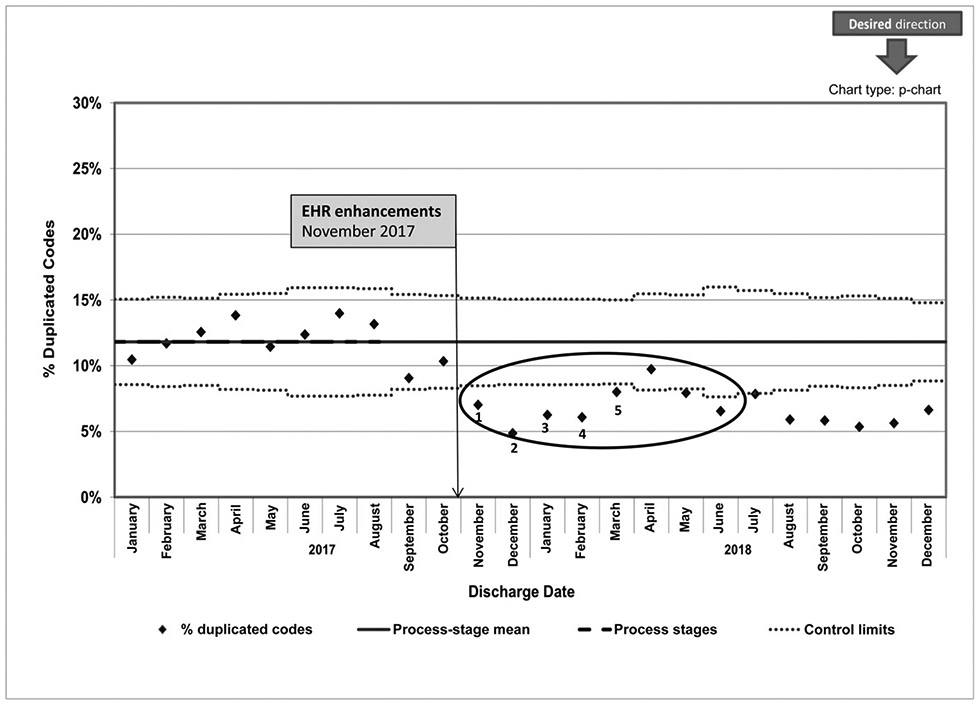

Figure 3 in the OA is an SPC chart of one of the team’s process measures revealing the percentage of discharges with duplicate codes on the problem list. The authors demonstrate that the November 2017 EHR enhancement impacted the process, reducing the mean from 12% to 7%. The data contained in our Fig 1 are the same data as those shown in Fig 3 of the authors’ OA but without the first centerline shift, which reveals what the authors would have seen in real time during the course of their improvement efforts. With the November 2017 data point (labeled point 1 in Fig 1), the authors immediately have evidence of special cause variation, with a point outside of the lower control limit after their intervention. This continues with points 2 through 5, each below the lower control limit. Statistically speaking, any one of these is unlikely to happen by chance (which is why they are considered special cause), but the fact that the team was seeing this month after month reinforced their hypothesis. With the eighth consecutive point below the mean line occurring in June 2018 (circle), the team was finally able to shift the centerline. Looking at this from the perspective of the improvement team, the immediacy and consistency of feedback that they witnessed with points outside of the control limits from November 2017 through March 2018 were likely much more informative to their improvement efforts than the moment when they finally were able to shift the mean line. The authors highlight that the EHR enhancement was chosen for its higher reliability design concept,7 making it easier for the providers to complete the intended behavior. The immediacy of the special cause signal in November 2017 would indicate that their hypothesis was correct.

FIGURE 1.

OA Fig 3 redesigned to represent the data visualization before the centerline shift.
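The real-time view described for Fig 1 amounts to keeping the baseline centerline and control limits fixed and judging each new month against them. A minimal sketch of that idea follows, assuming a p-chart with 3-sigma limits (a common choice for percentage data; the chart type used in the OA is not stated here). The function names are hypothetical, and the monthly counts are invented for illustration, chosen only so that the baseline mean is 12% and the later months sit at 7%.

```python
import math


def p_chart_limits(baseline_events, baseline_totals, subgroup_size):
    """Centerline and 3-sigma limits for a p-chart, using only baseline data.

    p_bar is the pooled baseline proportion; the limits depend on the size
    of the subgroup (for example, discharges in the month) being judged.
    """
    p_bar = sum(baseline_events) / sum(baseline_totals)
    sigma = math.sqrt(p_bar * (1 - p_bar) / subgroup_size)
    lcl = max(0.0, p_bar - 3 * sigma)
    ucl = min(1.0, p_bar + 3 * sigma)
    return p_bar, lcl, ucl


def first_signal_below(events, totals, baseline_months):
    """Index of the first post-baseline month whose proportion falls below
    the lower control limit of the frozen baseline, or None if there is none."""
    for i in range(baseline_months, len(events)):
        _, lcl, _ = p_chart_limits(
            events[:baseline_months], totals[:baseline_months], totals[i]
        )
        if events[i] / totals[i] < lcl:
            return i
    return None


# Hypothetical counts: 10 baseline months at 12%, then 2 months at 7%.
events = [120] * 10 + [70, 70]
totals = [1000] * 12
print(p_chart_limits(events[:10], totals[:10], 1000))
print(first_signal_below(events, totals, baseline_months=10))
```

With these invented counts, the first month after the baseline already falls below the frozen lower control limit, so a team plotting data monthly would have a signal well before 8 consecutive points accumulate below the mean line.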

Finally, viewing charts in combination provides further support for the team’s overall improvement theory. Notice that the special cause shift in Fig 3 of the OA (a process measure) occurs at the same time as the beginning of the special cause that is noted in Fig 2 of the OA, which is their outcome measure. In this case, a driving change in their process was temporally associated with a recognizable change in their outcome. Similarly, the OA’s Fig 4, viewed in combination with its Figs 2 and 3, provides our final example of how revealing special cause variation across measures relates to the broader theory of the team’s improvement. Special cause variation is evident in Fig 4 of the OA, with points outside of the control limits associated with interventions in both November and December 2019. A similar pattern is seen in the authors’ other process measure chart, Fig 3 of the OA, during those same months associated with those interventions. Here, the data support a couple of associations. First, the data provide a high degree of belief that those two interventions affect those measures, as the authors hypothesized. Second, with such data, the authors also confirm the hypothesis that underlies the entire article: simply “reviewing” the problem list is also associated with active management of the problem list, and improvements to their process measures help drive their outcome. After >1.5 years of a fairly stable outcome measure (mainly common cause variation), the team’s use of these two interventions not only improved their process measures but also was associated with the December 2019 data point being outside of the control limits in the outcome measure in Fig 2 of the OA. In these situations, the team’s use of SPC charts provided the ability to understand relationships between process and outcome measures, in addition to rapidly testing hypotheses.

As revealed in the work by Liao et al,1 we can improve the care we provide to patients every day with QI methodology. When researchers use SPC charts to report QI in scholarly venues such as this, readers often focus on centerline shifts. Although improvement teams take great joy in shifting a centerline, experienced teams much more commonly work to detect other types of special cause variation quickly to test their hypotheses and work through plan-do-study-act cycles. By understanding the rules of special cause variation and applying them to data in real time, teams gain information that will inform hypothesis testing, bolster knowledge about a system, and ultimately accelerate improvement work.

Acknowledgments

FUNDING: Supported by the Agency for Healthcare Research and Quality (grant T32HS026122). The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Footnotes

FINANCIAL DISCLOSURE: The authors have indicated they have no financial relationships relevant to this article to disclose.

POTENTIAL CONFLICT OF INTEREST: The authors have indicated they have no potential conflicts of interest to disclose.

REFERENCES

- 1. Liao N, Kasick R, Allen K, et al. Pediatrics inpatient problem list review and accuracy improvement. Hosp Pediatr. 2020;10(11)

- 2. Shewhart WA. Economic Control of Quality of Manufactured Product. New York: D. Van Nostrand Company, Inc; 1931

- 3. Langley GJ, Nolan T, Nolan K. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. San Francisco, CA: Jossey-Bass; 1996

- 4. Provost LP, Murray SK. The Health Care Data Guide: Learning from Data for Improvement. San Francisco, CA: Jossey-Bass; 2011

- 5. Deming WE. The New Economics for Industry, Government, Education. 2nd ed. Cambridge, MA: MIT Press; 2000

- 6. Perla RJ, Provost LP, Parry GJ. Seven propositions of the science of improvement: exploring foundations. Qual Manag Health Care. 2013;22(3):170–186

- 7. Luria JW, Muething SE, Schoettker PJ, Kotagal UR. Reliability science and patient safety. Pediatr Clin North Am. 2006;53(6):1121–1133