Abstract

Purpose

Literature was reviewed on the development of vowels in children's speech and on vowel disorders in children and adults, with an emphasis on studies using acoustic methods.

Method

Searches were conducted with PubMed/MEDLINE, Google Scholar, CINAHL, HighWire Press, and legacy sources in retrieved articles. The primary search terms included, but were not limited to, vowels, vowel development, vowel disorders, vowel formants, vowel therapy, vowel inherent spectral change, speech rhythm, and prosody.

Results/Discussion

The main conclusions reached in this review are that vowels are (a) important to speech intelligibility; (b) intrinsically dynamic; (c) refined in both perceptual and productive aspects beyond the age typically given for their phonetic mastery; (d) produced to compensate for articulatory and auditory perturbations; (e) influenced by language and dialect even in early childhood; (f) affected by a variety of speech, language, and hearing disorders in children and adults; (g) inadequately assessed by standardized articulation tests; and (h) characterized by at least three factors—articulatory configuration, extrinsic and intrinsic regulation of duration, and role in speech rhythm and prosody. Also discussed are stages in typical vowel ontogeny, acoustic characterization of rhotic vowels, a sensory-motor perspective on vowel production, and implications for clinical assessment of vowels.

Vowels make up about half of the acoustic stream of speech, but these sounds are eclipsed by consonants in the literature on speech development and disorders. Davis and MacNeilage (1990) commented that, “Vowels are the poor relations of child phonology. There is perhaps less than one study of vowels for every 20 studies of consonants; and studies ostensibly dealing with a child's complete vocal repertoire usually pay little attention to vowels” (p. 16). Since the time of that comment, the situation has changed, albeit slowly. Speake et al. (2012) wrote that, “Compared to the treatment of consonant segments, the treatment of vowels is infrequently described in the literature on children's speech difficulties” (p. 277). A landmark in this literature is a book devoted to vowels and vowel disorders (Ball & Gibbon, 2013), which addressed a variety of issues pertaining to vowel development and vowel disorders, but research reports continue to be few in number compared to those on consonants. The main theme of this review is that research in several disciplines points to a number of reasons why vowels are important in understanding how speech develops in childhood and how speech is disrupted in various communication disorders. Acoustic analysis and its advancement through technology have had a major role in these discoveries, and a particular objective of this review article is to show how acoustic studies have enlarged and refined the understanding of vowels. This may lead to improvements in the clinical assessment of vowel disorders and ultimately to improved treatments. Implications for clinical assessment are discussed in a concluding section.

“A vowel is a speech sound that is formed without a significant constriction of the oral and pharyngeal cavities and that serves as a syllable nucleus” (Shriberg et al., 2019, p. 31). This definition hinges on a basic duality of physiology and phonology; that is, vowels have an articulatory basis and a distinct role in phonology. Vowels appear in the neonatal stage of development and are used throughout the normal life span, which makes them one of the earliest to appear and most enduring of human behaviors. They have a central role in phonology given that all syllables, except those whose nucleus is a syllabic consonant, contain a vowel.

Much of what we know about vowels in developing and disordered speech is derived from perceptual analysis, especially phonetic transcription and articulation tests (Howard & Heselwood, 2013; Stoel-Gammon & Pollock, 2008). However, questions have been raised about the validity and reliability of perceptual methods in the study of vowels (Cox, 2008; Howard & Heselwood, 2013), the adequacy of commonly used tests of articulation to assess vowels (Eisenberg & Hitchcock, 2010; Pollock, 1991), and the nature of typical vowel development (James et al., 2001). Questions relating to perceptual methods have been raised in language science generally. For example, Sloos et al. (2019) commented that, “…speech sound perception is shaped by what is actually in the speech signal as well as by expectations on the local phonological and global sociolinguistic, and geolinguistic level” (p. 2). These authors go on to distinguish reliability (transcribers' agreement) and validity (the relation between the acoustic signal and the transcription). The clinical literature has emphasized the former, but the latter is equally important. These concerns are good reasons to take a closer look at how vowels develop in children and at the nature of vowel disorders in children and adults. This “look” can be accomplished partly through the lens of acoustic analysis, which is the major substance of this review.

Acoustic studies reviewed here contribute to the contemporary understanding of vowel production and perception in typical and atypical speech. The acoustic properties of vowels relate to recent work on speech perception, auditory neurophysiology, speech articulation, and modeling of speech production. Taken together, these lines of research lead to an integrated sensory-motor conceptualization of vowel sounds and eventually to a framework for clinical intervention targeting this class of sounds. The authors also recognize diversity related to native language and acknowledge these differences in this review, though the perspective of this work is rooted in American English.

Research Questions

This review article examines what acoustic studies reveal about several aspects of vowel development and disorders, leading to a general discussion of the implications for vowel assessment. The principal questions addressed in this review are listed below. Answers to these questions cohere in an improved understanding of both the development of vowels in children and the nature of vowel errors in disordered speech.

Regarding the nature and development of vowels, the following questions are posited: What is the contribution of vowels to speech intelligibility? Are vowels in American English intrinsically dynamic? How can acoustic data help to create a picture of vowel development in children? What are distinctive acoustic properties of rhotic vowels and diphthongs?

Regarding production and perception of vowels, the following questions are posited: What is the interaction between vowel perception and vowel production in early speech development? Can acoustic measures serve as an index of the precision of vowel production? What do acoustic data add to the knowledge of phonetic mastery of vowels? How is vowel production compensated for in articulatory and auditory perturbations? How and when do language and dialect differences influence vowel production in infant vocalizations and early childhood?

Regarding the functional and clinical application of our knowledge of vowel development and disorders, the following questions are posited: How do speakers adjust their vowel space area (VSA) in accord with listener characteristics and communication settings? In what ways are vowel perception and production vulnerable to speech and language disorders in children and adults? How can sensory-motor mapping serve in a theoretical framework for treating vowel perception and production? How is the clinical assessment of vowels evolving?

This review is intended for two general audiences: clinicians who assess and treat speech sound disorders and researchers who seek to understand the development of vowel sounds and the nature of vowel disorders in various clinical populations. Our goal is not to prescribe clinical methods but rather to describe the implications of research on the eventual refinement of those methods. The basic content of this review article pertains to the lessons learned from acoustic analysis of vowels in developing and disordered speech. A culminating section addresses the clinical assessment of vowels and the need for further study regarding clinical applications of the evidence. In the same vein, an appeal is made for research on the treatment of vowel disorders.

Method

Searches were conducted with PubMed/MEDLINE, Google Scholar, CINAHL, HighWire Press, and legacy sources in retrieved articles. The primary search terms were vowel, vowels, and vocalization (with the following qualifiers or associations: assessment, development, dialect, disorders, duration, formants, intelligibility, phonetic mastery, perception, production, inherent spectral change, spectrum, therapy, treatment). Results of the literature search were organized with respect to the questions listed in the previous section. Retrieved articles were categorized by search terms and by their relationship to the aforementioned questions.

As mentioned earlier, the review was focused on American English and was not intended to be comprehensive of vowels in other languages. However, selected aspects of vowels in different languages are noted in connection with potentially universal principles or tendencies.

Review of Methods of Measurement

This section reviews basic aspects of acoustic theory and analysis that underlie the methods used in the studies under review. Particular attention is given to the estimation of formant frequencies associated with vowel production. Readers who want an introduction or review may find this section helpful, as it lays out fundamental concepts needed to appreciate acoustic analysis of speech.

Acoustic Analysis Is a Tool to Study Vocal Tract Anatomy, Vowel Articulation, and Vowel Perception

Vowels are produced with two essential processes: generation of acoustic energy (phonation in the case of ordinary voiced speech) and articulation (vocal tract shaping) to produce distinctive patterns of resonance classically represented by formants. The two processes are largely, but not completely, independent, so that to a first approximation, vowel articulation is unaffected by phonation, and vice versa. This quasi-independence is critical to the dual role of vowels in conveying segmental information (vowel identification) related largely to formant pattern along with prosodic and paralinguistic information signaled by changes in vocal fundamental frequency (F0) and other acoustic modifications. The classic source–filter theory of speech production characterized vowel production in terms of the voicing source and the filter effects of the vocal tract (Fant, 1970).

Methods of Acoustic Analysis

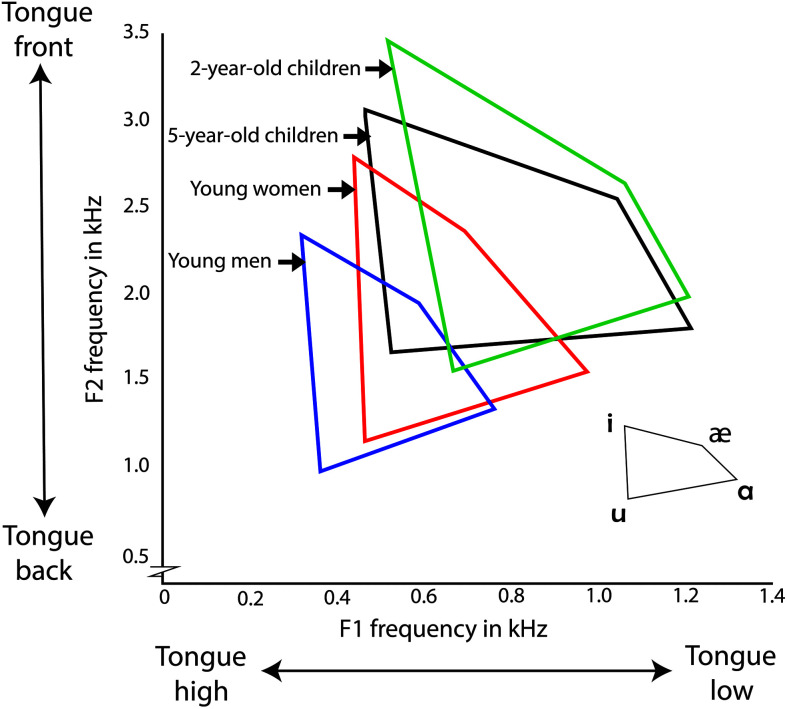

Different approaches can be taken to analyze the acoustic properties of vowels, but estimation of formant frequencies is the most commonly used and has a long history in speech research (Kent & Vorperian, 2018; Vilain et al., 2015). Conventional notation is to identify individual formants as Fn where n is the formant number (e.g., F1 is the first formant, which can be specified in terms of its frequency and bandwidth). A major attraction accruing to formants in studies of speech production is that, at least to a first approximation, individual formants can be associated with articulatory features, as shown in Figure 1. F1 frequency is correlated with vowel height (i.e., tongue position in the superior–inferior axis), such that the higher the vowel, the lower the F1 frequency. F1 frequency also is correlated with vowel duration in many languages, such that vowels with high F1 frequency are longer than vowels with a low F1 frequency. This relationship may be based on physiological factors (especially jaw movement) that have been “phonologized” (i.e., voluntary and extrinsic) in languages such as English but do not necessarily establish a universal pattern (Solé & Ohala, 2010). F2 frequency correlates with the articulatory dimension of tongue advancement or backness (i.e., tongue position in the anterior–posterior axis). Alternatively, the F2–F1 difference correlates with tongue advancement, such that back vowels have a smaller difference than front vowels. Both F1 and F2 (and all formants for that matter) are affected by lip rounding or lip protrusion. Rounding and protrusion have the same acoustic consequence of reducing all formant frequencies. In English, only back vowels are rounded so that rounding and backness tend to co-occur, leading to a low-frequency dominance of acoustic energy. Rounding can be considered as the third dimension in a three-dimensional phonetic space for vowels.

Figure 1.

F1–F2 graph of the vowel quadrilateral, showing representative data for adults and children of different ages. F1 frequency varies to a first approximation with tongue height, and F2 frequency varies to a first approximation with tongue advancement. There is almost no overlap of the formant frequencies for 2-year-olds and male adults.

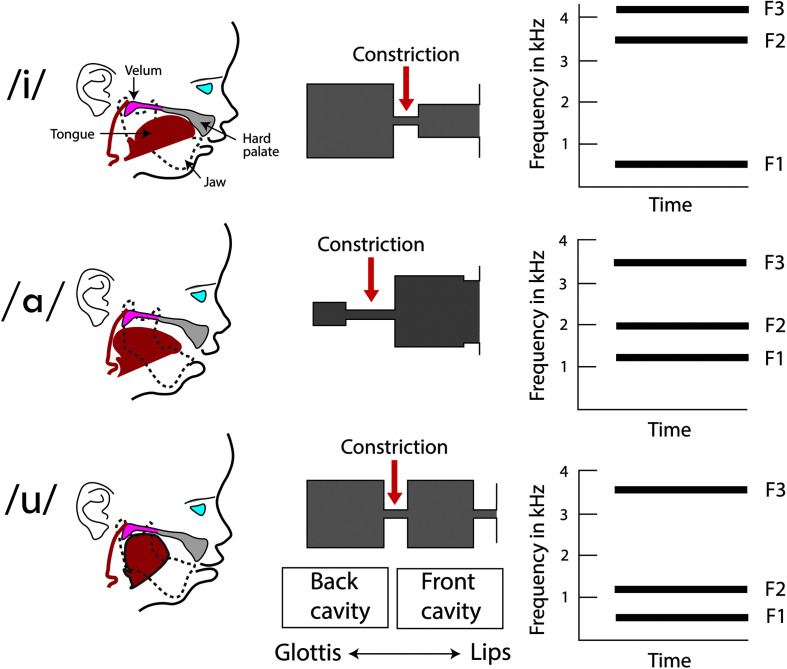

The relationship between vowel articulation and the sensory experience of speech acoustics is further illustrated in Figure 2, which pertains to the three corner vowels /i/, /u/, and /ɑ/, which are remarkably common in the world's languages (Maddieson, 1984). This illustration shows for each vowel its vocal tract configuration, a simplified vocal tract model consisting of front and back cavities, and a stylized representation of the formant pattern (F1, F2, and F3). Figure 2 pertains to vowel production by a 4-year-old child; that is, the formant frequencies are appropriate for a child's vowels and therefore differ from the majority of formant frequency values reported in the literature, which is dominated by data on adults, especially male adults. Because this review emphasizes vowel development and vowel disorders in children, data for children are included in the discussion of anatomic and acoustic features related to vowel production.

Figure 2.

Drawings to show for each of the three corner vowels /i/, /u/, and /ɑ/ its vocal tract configuration (left), a simplified vocal tract model consisting of front and back cavities (center), and a stylized representation of the formant pattern (F1, F2, and F3; right). The vowel productions pertain to a 4-year-old child.

Theoretically, there is an infinite number of formants, but only the first few are needed to identify and discriminate the vowels of a language. The higher formants, such as F3 and F4, are often neglected in discussions of acoustic–anatomic–articulatory relationships, but these formants are important in several respects, including describing rhotic sounds (Hagiwara, 1995); normalizing both rhotic and nonrhotic vowels (Disner, 1980; Hillenbrand & Gayvert, 1993); explaining the speaker's formant, a local energy maximum in the vicinity of F4 (Bele, 2006; Leino et al., 2011); describing resonances of the hypopharynx (Takemoto et al., 2008); identifying acoustic correlates of maxillary arch dimensions (Hamdan et al., 2018); and describing acoustic consequences of procedures such as tonsillectomy (Švancara et al., 2006) and supracricoid laryngectomy (Buzaneli et al., 2018). Unfortunately, data on the higher formants are not as abundant as those for F1 and F2, so the potential value of a more complete formant description is not established. To be sure, estimation of these higher formant frequencies can be difficult because of their relatively low energy, but improvements in methods of acoustic analysis enhance the likelihood of obtaining data throughout the life span (Kent & Vorperian, 2018).

Vowel Acoustics Related to Speaker Age and Sex

In general, vowel formant frequencies across all vowels decrease as vocal tract length (VTL) increases. Therefore, the overall developmental pattern from birth to adulthood is one of decreasing formant frequencies, with larger changes in males than females (as can be seen in Figure 1). However, the literature on this topic does not give an entirely coherent view of the acoustic changes. Some studies have reported that vowel formant frequencies change little, if at all, during the first 2–4 years of life (Buhr, 1980; Gilbert et al., 1997; Kent & Murray, 1982; McGowan et al., 2014), although increased range or dispersion of formant frequencies has been observed (Gilbert et al., 1997; Kent & Murray, 1982; Robb et al., 1997). Other studies indicate rapid expansions of the vowel acoustic space during the first 2 years of life (Bond et al., 1982; Ishizuka et al., 2007; Yamashita et al., 2013). Decreases in formant frequencies are expected in infancy based on increases in VTL and increased sizes of individual articulators during the first 2 years of life (Vorperian et al., 2005). Because the acoustic data reported to date are based on small numbers of infants and different methods of data collection and analysis, it is difficult to determine with confidence the relationship between acoustic and anatomic changes.
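The inverse relation between VTL and formant frequencies can be illustrated with a textbook idealization in which the vocal tract is treated as a uniform tube closed at the glottis and open at the lips, so that its resonances fall at Fn = (2n − 1)c/4L. The following sketch is not drawn from the studies cited here; the tube model, the assumed speed of sound (35,000 cm/s), and the three tract lengths are illustrative assumptions only, and real vocal tracts depart from this idealization.

```python
SPEED_OF_SOUND_CM_S = 35000.0  # assumed value for warm, humid air


def neutral_tube_formants(vtl_cm, n_formants=4, c=SPEED_OF_SOUND_CM_S):
    """Resonances (Hz) of a uniform tube closed at one end and open at the other.

    Uses the quarter-wavelength relation Fn = (2n - 1) * c / (4 * L).
    """
    if vtl_cm <= 0:
        raise ValueError("vtl_cm must be positive")
    return [(2 * n - 1) * c / (4.0 * vtl_cm) for n in range(1, n_formants + 1)]


# Hypothetical tract lengths, chosen only to show the trend (not measured data)
for label, vtl in [("short tract", 8.0), ("medium tract", 12.0), ("long tract", 17.5)]:
    print(label, [round(f) for f in neutral_tube_formants(vtl)])
```

Under these assumptions, every formant of the shorter tube is higher than the corresponding formant of the longer tube, which is the general direction of the developmental trend described above.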

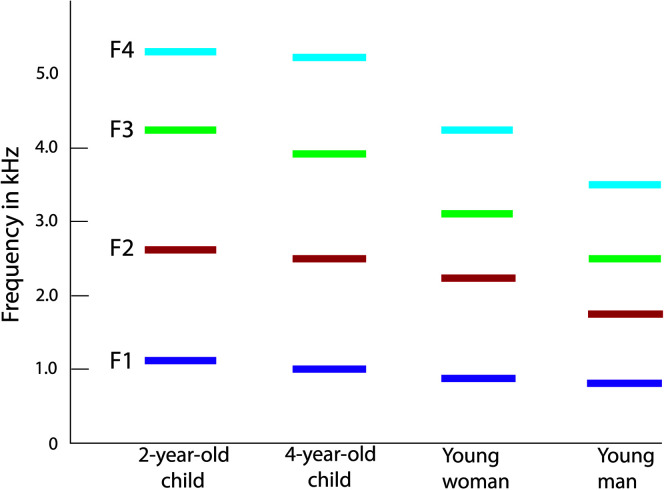

Figure 3 shows the frequencies of the first four formants of vowel /æ/ spoken by a 2-year-old child, a 4-year-old child, a young woman, and a young man. The formants span a considerable range of frequencies, which is important for clinical reasons, such as assessing the effects of hearing loss on the perception of self- and other-produced vowels. For example, frequencies of just over 5 kHz are needed to encompass the first four formants of a 2-year-old child, which implies that a child with a hearing loss affecting frequencies above 4 kHz will have difficulty hearing the higher formants of their vocalizations, as well as the high-frequency energy of fricatives such as /s/.

Figure 3.

Frequencies of the first four formants of vowel /æ/ for four speaker groups.

Another unresolved topic in anatomic–acoustic correlations in speech is the emergence of sexual dimorphism, that is, when differences appear between boys and girls. Gender differences in vowel formant frequencies are present by 4 years of age, with boys having lower formant frequencies than girls (Perry et al., 2001; Vorperian & Kent, 2007). However, gender differences in VTL have not been observed until the age of puberty (Fitch & Giedd, 1999; Markova et al., 2016). Therefore, the early formant frequency differences cannot be attributed to differences in VTL. Boys and girls may differ in other anatomic and articulatory features, which are a topic of continuing research. Gender differences in speech production may also result from learned behavioral patterns. Evidence for a learning or sociocultural hypothesis of gender differences has been reported in several studies (Cartei et al., 2012, 2014; Cartei & Reby, 2013). The basic idea is that children spontaneously attempt to sound like adults of their own gender. In learning speech, children aspire not only to be understood but also to be identified as to their own gender. Aspects of vowel production, including fundamental and formant frequencies, appear to be important in accounting for gender-related speech patterns (Munson et al., 2015).

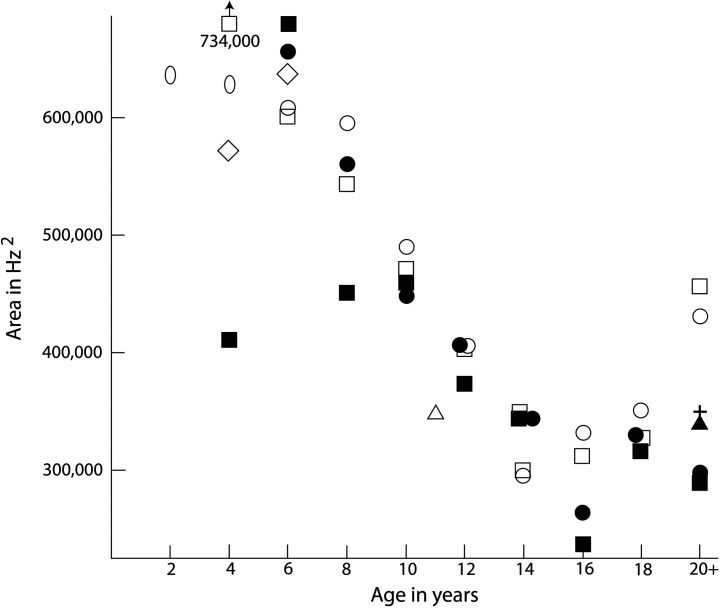

VSA, a measure of the area contained within the vowel quadrilateral or vowel triangle, is one of the most frequently used acoustic indices of vowel production in studies of both developing and disordered speech. VSA generally decreases with speaker age, as illustrated in Figure 4 (also see Vorperian & Kent, 2007). This reduction is a consequence of changing VTL, but VSA can be affected by other factors, including communication setting, speech sample, speaking rate and prosody, and speech disorders (as discussed in a later section). The primary point to be made here is that VSA can be construed as the articulatory working space for vowels, usually determined by the quadrilateral (or triangle) formed by the point vowels. Figure 4 shows that VSA values vary widely across studies, and this variation hinders the application of normative data to clinical assessments. Note, for example, the large ranges of VSA values for 4-year-olds and for adults aged 20 years or more.

Figure 4.

Vowel space area from several studies of children and adults speaking American English. Data sources are as follows: cross = mean for two males in Bunton and Leddy (2011); filled circles = males in Flipsen and Lee (2012); unfilled circles = females in Flipsen and Lee (2012); diamond = Hustad et al. (2010); ovals = McGowan et al. (2014); filled triangle = G. S. Turner et al. (1995), estimated from graph for males; filled squares = males in Vorperian and Kent (2007); unfilled squares = females in Vorperian and Kent (2007); unfilled triangle = Zajac et al. (2006).
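Because VSA is the area enclosed by the corner vowels in the F1–F2 plane, it can be computed with the standard polygon (shoelace) area formula. The following is a minimal sketch under stated assumptions: the function name and the formant values are hypothetical and are not taken from any study in Figure 4, and the vowels must be listed in order around the perimeter of the quadrilateral or triangle.

```python
def vowel_space_area(corners):
    """Area (Hz^2) of the polygon whose vertices are (F1, F2) pairs.

    The vertices must be given in order around the perimeter; a
    self-intersecting ordering gives a meaningless result.
    """
    if len(corners) < 3:
        raise ValueError("at least three vowels are needed")
    twice_area = 0.0
    for i, (f1_a, f2_a) in enumerate(corners):
        f1_b, f2_b = corners[(i + 1) % len(corners)]  # next vertex, wrapping around
        twice_area += f1_a * f2_b - f1_b * f2_a
    return abs(twice_area) / 2.0


# Hypothetical (F1, F2) values in Hz for four corner vowels of one speaker
corner_vowels = {"i": (300, 2300), "ae": (700, 1800), "a": (750, 1100), "u": (350, 900)}
perimeter_order = ["i", "ae", "a", "u"]
area = vowel_space_area([corner_vowels[v] for v in perimeter_order])
print(f"VSA = {area:.0f} Hz^2")
```

Differences among studies in which vowels define the corners, and in whether a triangle or a quadrilateral is used, change the resulting area, which is one reason values from different studies are hard to compare directly.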

Given that formant frequencies vary with speaker age and gender, studies have used either of two strategies in analyzing and reporting formant data. The first strategy is to report the actual frequency data in hertz, which unavoidably results in aggregates of data corresponding to age–gender characteristics of the speakers. The second is to normalize the formant frequencies in an attempt to render the data from different speakers directly comparable for purposes such as phonetic identification. Both approaches have advantages and disadvantages, depending on the purpose of the study. For a discussion of different approaches to vowel normalization, see Adank et al. (2004). The purposes of this review article are served by using nonnormalized data for formant frequencies, but it is acknowledged that normalization holds considerable value in the ultimate understanding of vowel perception.
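To make the second strategy concrete, the sketch below applies one widely used speaker-intrinsic procedure, a z-score transformation of each formant within each speaker (commonly attributed to Lobanov). It is offered only as an example of normalization in general, not as the procedure favored by any study reviewed here; the function name, the use of the sample standard deviation, and the formant values are assumptions made for illustration.

```python
from statistics import mean, stdev


def zscore_normalize(tokens):
    """Normalize one speaker's (F1, F2) tokens to z-scores, formant by formant.

    Requires at least two tokens and nonzero spread in each formant.
    """
    f1_values = [f1 for f1, _ in tokens]
    f2_values = [f2 for _, f2 in tokens]
    f1_mean, f1_sd = mean(f1_values), stdev(f1_values)
    f2_mean, f2_sd = mean(f2_values), stdev(f2_values)
    return [((f1 - f1_mean) / f1_sd, (f2 - f2_mean) / f2_sd) for f1, f2 in tokens]


# Hypothetical data: the "child" values are the "adult" values scaled by 1.4,
# mimicking a uniformly shorter vocal tract.
adult_tokens = [(300, 2300), (700, 1800), (750, 1100), (350, 900)]
child_tokens = [(f1 * 1.4, f2 * 1.4) for f1, f2 in adult_tokens]

adult_z = zscore_normalize(adult_tokens)
child_z = zscore_normalize(child_tokens)
for a, c in zip(adult_z, child_z):
    print([round(v, 3) for v in a], [round(v, 3) for v in c])
```

In this contrived case the two speakers differ greatly in hertz but coincide after normalization, which illustrates both the appeal of normalization for phonetic comparison and why it removes the age-related information that nonnormalized data preserve.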

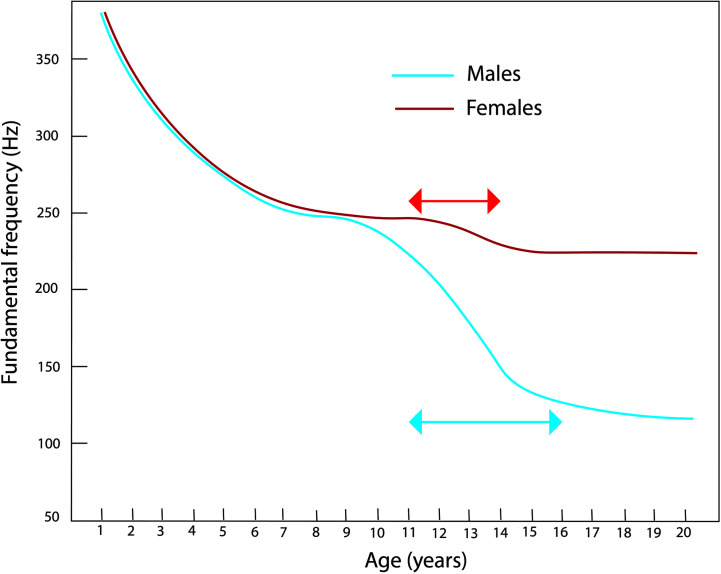

With respect to the energy source for vowels, vocal F0 has a generally falling pattern from birth to adulthood (Kent, 1976), but the decrease is not monotonic and appears to depend on the nature of the vocalization. Rothgänger (2003) reported that, during the first year of life, the mean F0 of crying increased (from about 440 to 500 Hz) and the mean F0 of babbling (comfort state vocalizations) decreased (from about 390 to 337 Hz). The data also revealed that the melody (prosody) of babbling bore similarities to the ambient language within the first year of age. Figure 5 shows average values of F0 over the age interval of 1–20 years for both males and females. Gender differences in F0 occur after about 10 years of age. During puberty, F0 drops sharply for males and more gradually for females (Berger et al., 2019; Maturo et al., 2012). The lines with double arrowheads show the approximate transition periods of F0 for both males and females. The developmental pattern for F0 is important for understanding changes in the pitch of the voice and also to account for age-dependent differences in the accuracy of formant measurements (Kent & Vorperian, 2018).

Figure 5.

Mean vocal fundamental frequency (F0) from ages 1 to 20 years for males and females (based on data in Ludlow et al., 2019). The lines with double arrowheads show the approximate transition periods of F0 for both males and females.

Advantages of Acoustic Analysis

According to Ciocca and Whitehill (2013), acoustic analysis of speech is relatively inexpensive and accessible, compared to auditory–perceptual analysis and articulatory analysis. Acoustic analysis is also considered more objective than some other methods (e.g., perceptual rating) and serves as a quantifiable “bridge” between articulatory and perceptual analysis, taking into account both kinematics of the source (speaker) and the perception by the recipient (listener). Recent developments in technology are increasing the efficiency and efficacy of acoustic analysis.

These analysis techniques are useful beyond research contexts. The visual displays provided by readily available tools, such as waveforms, amplitude spectra, and spectrograms, can be used clinically as biofeedback for clients, and the quantitative measures derived from acoustic waveforms and spectrograms are valuable for speech assessment (Neel, 2010). Two no- or low-cost acoustic measurement tools are Praat (Boersma & Weenink, 2018) and WinPitch (P. Martin, 2004). For further discussion of basic speech acoustics, including source–filter theory, see Gramley (2010); for detailed discussion of methods and issues in acoustic studies of speech development and disorders, see Ciocca and Whitehill (2013), Hodge (2013), Kent and Vorperian (2018), and Neel (2010).

Results

The following sections address the research questions in the order in which they were posed.

What Is the Contribution of Vowels to Speech Intelligibility?

The contribution of vowels to speech intelligibility may have been underestimated, perhaps because it has been simply assumed that consonants have a greater effect than vowels on speech intelligibility. One way of determining the relative contributions of vowels and consonants to intelligibility is to use the “noise replacement paradigm,” in which either vowels or consonants are replaced by noise. Studies using this paradigm have shown a 2:1 intelligibility advantage of vowel-only (consonants replaced by noise) over consonant-only (vowels replaced by noise) sentences (F. Chen & Hu, 2019; R. A. Cole et al., 1996; Fogerty & Kewley-Port, 2009; Kewley-Port et al., 2007). The same effect occurs even in Mandarin, a language with many fewer vowels (F. Chen et al., 2013). Kewley-Port et al. (2007) concluded that, “for spoken sentences, vowels carry more information about sentence intelligibility than consonants for both young normal-hearing and elderly hearing-impaired listeners” (p. 2365). This conclusion should be regarded with some caution, given that it is difficult to isolate consonants and vowels in the acoustic signal of running speech. Inevitably, segments attributed to vowels contain some consonant information if only because of coarticulation, and segments attributed to consonants likewise may contain some vowel information. As noted by Stilp and Kluender (2010), nonlinguistic sensory measures of uncertainty in the speech signal may be better predictors of intelligibility than traditional acoustic measures or linguistic constructs. However, the central point is that, so long as the distinction between vowels and consonants is made, vowels are important to speech intelligibility and should not be regarded as the poor sister of consonants in the goals and means of speech communication.

Moreover, vowels in sentences have the potential to carry information of several kinds, including information on the identity of the vowel itself, identity of flanking consonant(s) and neighboring vowels, syllable pattern based on the amplitude envelope of the utterance, prosodic content (rhythm, stress pattern, and rate), age and gender of the speaker, and affective content related to genuine or feigned emotion. The linkage between vowels and the prosody of an utterance makes them pivotal in the study of multisyllabic utterances, as discussed later. The linkage derives from the capacity of vowels to convey the acoustic cues of prosody but also perhaps from a shared bilateral cortical representation. In a functional magnetic resonance imaging study in which participants were asked to attend to either the vowels or consonants of syllables, generalization maps were bilateral for vowels but unilateral for consonants (Archila-Meléndez et al., 2018). Prosody is also typically presumed to be bilateral in its cortical representation (Wildgruber et al., 2009).

The proportion of time given to vowels in the speech input varies with the rhythmic class of languages. The percentage of the input stream for vowels is about 45% for stress-timed languages such as Dutch and English, about 50% for syllable-timed languages such as French and Italian, and about 55% for mora-timed languages such as Japanese (Ramus et al., 1999). This information may underlie the ability of newborns to discriminate between languages of different rhythmic classes (Ramus et al., 2000) and could provide an early foundation for linking the prosodic and segmental components of a language. It has been proposed that vowels and consonants play different roles in early phonological learning (Hochmann et al., 2011).
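The proportion of the speech stream occupied by vowels can be computed directly from a segmentation of an utterance into vocalic and consonantal intervals. The sketch below assumes a hypothetical hand-labeled segmentation with labels "V" and "C"; the function name, the labels, and the interval times are illustrative, and decisions such as how pauses and glides are treated are left to the analyst.

```python
def percent_vocalic(intervals):
    """Percentage of total labeled duration occupied by vocalic intervals.

    intervals: list of (label, start_s, end_s) with label "V" or "C".
    """
    total = sum(end - start for _, start, end in intervals)
    if total <= 0:
        raise ValueError("total duration must be positive")
    vocalic = sum(end - start for label, start, end in intervals if label == "V")
    return 100.0 * vocalic / total


# Hypothetical segmentation of a short utterance (times in seconds)
segmentation = [
    ("C", 0.00, 0.08),
    ("V", 0.08, 0.20),
    ("C", 0.20, 0.35),
    ("V", 0.35, 0.44),
    ("C", 0.44, 0.50),
]
print(f"{percent_vocalic(segmentation):.1f}% vocalic")
```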

Given the capacity of vowels to signal different types of information, it is not surprising that they have a dynamic structure. The presumed vowel steady state identified as an essentially static formant pattern, as in the production of a sustained vowel, often is not observed in connected speech. The dynamic nature of vowels derives not only from embedded information on surrounding sounds (coarticulation) and prosody but also from the intrinsic properties of vowels themselves.

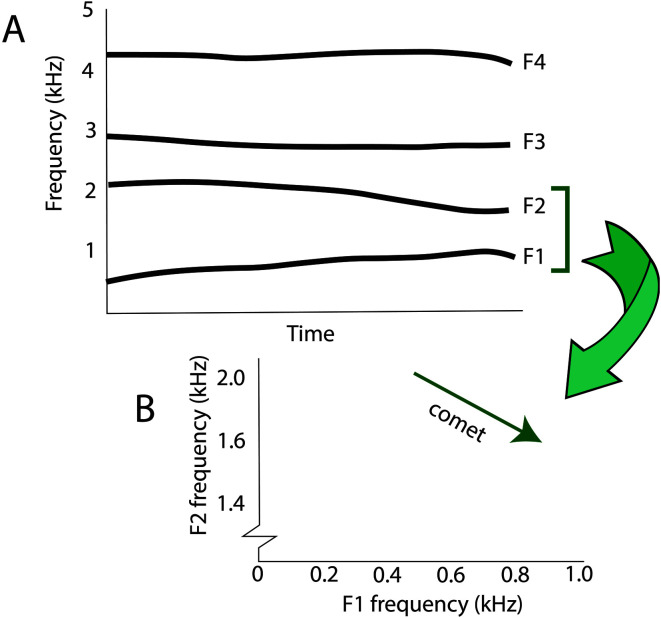

Are Vowels in American English Intrinsically Dynamic?

Spectral change shows that vowels are intrinsically dynamic. Peeters (2019) wrote that, “In treating vowels like static particles in an articulatory and acoustic space perhaps the most important information to be conveyed by vowels is suppressed” (p. 67). The static particle perspective is reinforced by common practices in articulatory descriptions (such as classifying vowels as fixed patterns of tongue height and advancement) and acoustic analysis (such as representing vowels by single points in the F1–F2 plane). The traditional classification of English vowels into monophthongs and diphthongs has come under question with the recognition that even presumed monophthongs in several dialects of North American English are characterized by substantial spectral change during the vowel segment. In other words, the vowel is not strictly or satisfactorily defined by an invariant acoustic pattern. The phenomenon of “vowel inherent spectral change” (Morrison & Assmann, 2012) is observed in natural speech production and has been demonstrated to influence listeners' perception of vowels. Vowel inherent spectral change is a relatively slow frequency variation compared to the rapid frequency variations associated with consonant–vowel or vowel–consonant transitions. An example is shown in Figure 6 for the formant patterns of vowel /æ/ as produced by an adult male speaker. Note that in the spectrogram (Part A of the illustration), the frequencies of F1 and F2 change during the vocalic nucleus. The implication of such formant shifts is that the acoustic representation of vowels requires more than formant estimation at a single time point (such as the middle of a vowel steady state, if such a segment can even be identified; it frequently cannot). Specification of the spectrotemporal pattern requires that formant trajectories be represented by two time points or, alternatively, by one time point and the slope of the formant trajectory. This idea is shown in Part B by using a comet (line with an arrowhead) to indicate the changes in F1 and F2 frequencies in a bivariate plot.

Figure 6.

Illustration of vowel inherent spectral change. The frequency shifts for F1 and F2 in Part A are shown as a comet in Part B.

The implication is that the vowels of American English should probably be plotted in the F1–F2 plane as comets (line segments) rather than single points. Classic illustrations of vowel formants (e.g., Peterson & Barney, 1952) represent individual vowels as points, but alternative representations are likely to become more common as research into vowel inherent spectral change continues. For examples of averaged vowel formant trajectories determined from two large databases, see Sandoval et al. (2019). Children as young as 3–5 years of age show evidence of vowel inherent spectral change similar to that in adults (Assmann & Katz, 2000; Assmann et al., 2013). This phenomenon applies as well to vowels in second-language learners (Rogers et al., 2012; G. Schwartz et al., 2016) and may be useful in assessing learning progress. Vowel inherent spectral change is a challenge to conventional analyses that focus on vowel steady states or single time points of formant measurement. This phenomenon is also of interest in developing dynamic specifications of articulatory–acoustic features of vowels. For a more complete discussion, see Morrison and Assmann (2012).
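The two representations mentioned above (two time points, or one time point plus a slope) can be derived from a sampled formant track, as in the following sketch. It is a simplified illustration rather than a method taken from the studies cited: the function names, the choice of sampling at 20% and 80% of the vowel duration, and the formant track itself are assumptions.

```python
def interpolate(times_s, values, t):
    """Linearly interpolate a track at time t (times strictly ascending)."""
    for i in range(len(times_s) - 1):
        t0, t1 = times_s[i], times_s[i + 1]
        if t0 <= t <= t1:
            return values[i] + (values[i + 1] - values[i]) * (t - t0) / (t1 - t0)
    raise ValueError("t lies outside the track")


def trajectory_summary(times_s, freqs_hz, onset_frac=0.2, offset_frac=0.8):
    """Summarize a formant track by two sampled points and the slope between them."""
    if len(times_s) != len(freqs_hz) or len(times_s) < 2:
        raise ValueError("need matching time and frequency lists of length >= 2")
    duration = times_s[-1] - times_s[0]
    t_on = times_s[0] + onset_frac * duration
    t_off = times_s[0] + offset_frac * duration
    f_on = interpolate(times_s, freqs_hz, t_on)
    f_off = interpolate(times_s, freqs_hz, t_off)
    return {"onset_hz": f_on, "offset_hz": f_off,
            "slope_hz_per_s": (f_off - f_on) / (t_off - t_on)}


# Hypothetical F1 track for a 200-ms vowel, sampled every 50 ms
times = [0.00, 0.05, 0.10, 0.15, 0.20]
f1_track = [600.0, 650.0, 700.0, 750.0, 800.0]
f1_summary = trajectory_summary(times, f1_track)
print(f1_summary)
```

Applying the same function to the F2 track gives the second coordinate of each end of the comet, so that the vowel is plotted as a line segment from (F1 onset, F2 onset) to (F1 offset, F2 offset) rather than as a single point.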

Vowel inherent spectral change should be distinguished from context-specific coarticulatory effects, in which production of a target sound is affected by surrounding sounds, especially the flanking consonants but even by nonadjacent vowels (J. Cole et al., 2010). Therefore, the production of a vowel sound incorporates both intrinsic spectral change and coarticulation, a combination that often results in time-varying acoustic properties.

How Can Acoustic Data Help to Create a Picture of Vowel Development in Children?

The development of vowel perception and production involves a cascade of events beginning with the fetus and proceeding through adolescence. The following discussion addresses both developmental data on vowels and the theoretical interpretations of these data. Selected aspects are summarized in Table 1, which shows developmental events at various ages, beginning before birth and extending to 15 years of age. The discussion in this section amplifies some of these events.

Table 1.

Normative milestones in the development of vowel perception and production.

| Age | Perception feature | Production feature |
|---|---|---|
| In utero | Exposure to an ambient language affects aspects of speech perception after birth (see examples below). | n/a |
| 1–2 days | Neonates prefer vowels from the native language as opposed to vowels from a foreign language (Moon et al., 2013). | Vowellike sounds are produced in cry and in comfort vocalizations (humming and cooing). Four basic cries have been identified within the first month. Following the birth cry, these are basic cry, pain cry, and temper cry (Petrovich-Bartell et al., 1982). |
| 2 months | n/a | Production of vowels in early vocalizations in all languages. Especially in the first month, vocalizations may take the form of quasiresonant nuclei (lacking the full resonant quality of vowels). Fully resonant nuclei appear between 2 and 4 months. |
| 3–5 months | Vowel prosody emerges? “Cry” vs. “fuss” perceived by mothers based on peak intensity, F2, and F1:F2 ratio (Petrovich-Bartell et al., 1982). | In infants who imitate adult vowels, the vowels /i u ɑ/ become more tightly clustered from 3 to 5 months (Kuhl & Meltzoff, 1996). |
| 6 months | Discrimination of extrinsic vowel durations in some infants (Eilers et al., 1984). Generalization of vowel exemplars from adult male to adult female or child (i.e., speaker normalization; Kuhl, 1983). Discrimination of spectrally dissimilar vowels (Kuhl, 1979). | Onset of canonical babbling based largely on consonant + vowel (CV) syllables. FSL common in babbling but may disappear only to reappear later (U-shaped developmental curve; Nathani et al., 2003). |
| 10 months | Development of the duration distinction for PVCV (Ko et al., 2009). | Typical onset of jargon babbling, which has characteristics of intonation, rhythm, and pausing carried largely by vowels. Vowel formants in infant babbling take on characteristics of the ambient language (de Boysson-Bardies et al., 1989). 10-month-olds prefer vowels of normal duration over stretched vowels (Kitamura & Notley, 2009). |
| 12 months | Discrimination of tense vs. lax vowels. Theories such as NLM (1993), NLM-e (Kuhl et al., 2008), and PRIMIR (Werker & Curtin, 2005) assert that experience-based perceptual reorganization occurs in the first year, leading to decreased sensitivity to nonnative contrasts and increased sensitivity to native contrasts. Infants are sensitive to mispronunciations of vowels in familiar words by as early as 14 months of age (Mani et al., 2012). | The corner vowels [i u ɑ] appear around this age or shortly after (Davis & MacNeilage, 1990; Selby et al., 2000; Templin, 1957). These vowels correspond to the natural referent vowels (Polka & Bohn, 2011), and they appear to establish the basic vowel triangle of articulation and acoustics. These vowels also meet the dual criteria of dispersion and focalization. Language-specific rhythm begins to emerge at 12 months (Post & Payne, 2018). |
| 24 months | 18–24 months: what was perceived in the 7-month time frame regarding native language gives rise to phonetic categorization leading to language learning (Werker & Hensch, 2015). | Production of diphthongs in most children. Vowel duration is adjusted for tense–lax distinction and PVCV (Ko, 2007). |
| 36 months | n/a | PVCV generally present (Krause, 1982; Raphael et al., 1980). Vowel inherent spectral change may be present (Assmann & Katz, 2000). |
| 4 years | n/a | Production of all nonrhotic vowels in most children and production of rhotic vowels by many but not all children. Onset of sexual dimorphism of vowel formants, with boys having lower formant frequencies than girls (Kent & Vorperian, 2018; Vorperian & Kent, 2007). |
| 5 years | Adultlike perception categorization of PVCV (category boundary and category separation; Lehman & Sharf, 1989). | Rhythmic patterning in stress-timed languages such as English is still being refined (Post & Payne, 2018). |
| 8 years | n/a | Postvocalic voicing: category boundary and category separation in production were adultlike by 8 years of age. Supralaryngeal anatomy is now mature (de Boer & Fitch, 2010). |
| 10 years | Adultlike perceptual consistency of PVCV (Lehman & Sharf, 1989). | Variability in production still greater than in adults (Lehman & Sharf, 1989). |
| 11–15 years | n/a | Voice transition in both sexes, larger change in boys (Berger et al., 2019; Maturo et al., 2012). |

Note. FSL = final syllable lengthening; PVCV = postvocalic consonant voicing; NLM = Native Language Magnet Model; NLM-e = Native Language Magnet Model–Expanded; PRIMIR = Processing Rich Information from Multidimensional Interactive Representations; n/a = not applicable.

Acoustic phonetics concerns the sounds produced by the speech mechanism and how they are processed by the human ear. This constant interplay between production and perception is at work even in very young children. The innate need to connect drives development, and reinforcement in communicative exchanges hones these skills.

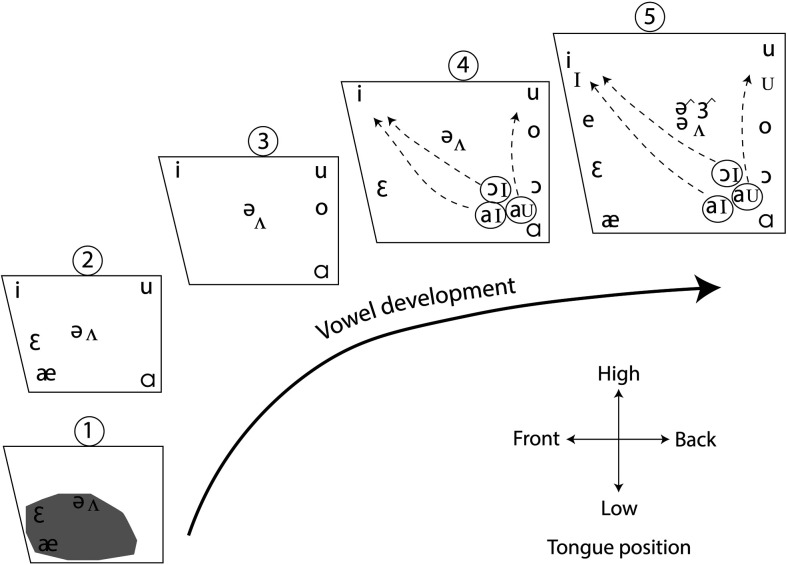

General Developmental Features

This section begins with some general features of the developmental pattern of auditory function and vocal tract anatomy pertinent to vowel production. With respect to auditory function, the human ear functions 2.5–3 months before birth, so that the fetus has some auditory exposure well before birth (Pujol et al., 1991; Querleu et al., 1988). A meta-analysis of studies of vowel discrimination in infants (Tsuji & Cristia, 2013) supported the conclusion that native and nonnative discrimination proceed in opposite directions over the first year of life with a distinction evident by about 6 months of age. With respect to vocal tract anatomy, between birth and adulthood, the structures that form the tract undergo changes in size and shape (Kent & Vorperian, 1995). These changes are considerable, as reflected in the assertion that humans begin life with a vocal tract like that of a chimpanzee (P. Lieberman et al., 1972). An adultlike anatomy unfolds gradually so that an essentially mature morphology (but not length) of the vocal tract is present by the age of 6–8 years (de Boer & Fitch, 2010). However, additional growth and reshaping continue until late adolescence, especially in males. More detailed discussion follows with respect to development stages. It should be emphasized that there are substantial individual variations in vowel development (Donegan, 2013). This summary should be considered a general pattern to which exceptions are likely in individual children. Figure 7 gives a graphic summary of typical patterns in vowel acquisition and will be referenced in the following discussion of various developmental stages.

Figure 7.

Diagrammatic representation of typical vowel development in children. The numbers correspond generally to age in years, but there is substantial individual variation. Based on patterns described by Donegan (2013), Otomo and Stoel-Gammon (1992), and Stoel-Gammon and Herrington (1990).

Prenatal Stage

An account of vowel perception begins before birth. Studies have shown that vowels can be perceived and discriminated in utero (Groome et al., 1997; Lecanuet et al., 1987; Shahidullah & Hepper, 1994), but the same has not been shown for consonants (Granier-Deferre et al., 2011). It also has been reported that in utero experience with an ambient language affects vowel perception after birth (Moon et al., 2013) so that babies enter the world with an orientation to the language they will learn from the adult community. Newborns prefer stories heard in the womb over unheard stories (DeCasper & Spence, 1986), probably because of the influence of vowels and the rhythmic pattern of speech. For these and other reasons, neonates can be said to show a vowel advantage in speech processing (Nazzi & Cutler, 2019). The fetus also is exposed to the mother's voice and is biased toward that voice after birth (Fifer, 1987). Spence and DeCasper (1987) presented evidence that prenatal experience with low-frequency characteristics of maternal voices carries over into preferences in postnatal perception of maternal voices. Such low-frequency characteristics likely derive from vowel sounds, which can be transmitted into the intrauterine environment.

Neonatal Stage

Vocalization of vowels (or vocants, in the terminology introduced by J. A. M. Martin, 1981) is one of the first reliably observed behaviors to emerge in neonates. In an observational study involving four different countries, Ertem et al. (2018) reported that at least 50% of babies vocalized vowels by 1 month of age. The fact that babies produce and hear their own vowel sounds shortly after birth may lay the foundation for auditory–motor correlations that will be expanded and refined with maturation.

First Year of Life

Vowel development in early infancy is not a process of simple and gradual accretion in which vowels are added one at a time to form a language-specific repertoire. It is better to consider vowel development in three fundamental aspects: early vocal behaviors favoring low vowels, mostly front or central; establishment of the corner vowels; and phonetic mastery of the vowel repertoire. The rationale for this alternative is discussed in the following.

Vowellike sounds produced in early infancy are not isomorphic with vowels produced during later speech development. As Oller et al. (2013) noted, “Protophones occurring before canonical babbling cannot be transcribed sensibly in the International Phonetic Alphabet…because they generally do not contain well-formed and distinguishable consonants and vowels” (p. 6319). Although phonetic symbols often are used as convenient labels for early vowellike sounds, such usage should not imply that the sounds so identified are identical to vowels later used to form words. J. A. M. Martin (1981) used the term “vocant” for such a vowellike sound. Especially in the early stages of infancy, vocants are not necessarily tightly linked to vowel representations in an adult phonetic system but rather may be developmentally specific to particular combinations of perceptual experience, anatomic configuration, and motor capability. From an acoustic point of view, vocants produced in the first year of life are perhaps best regarded as regions of variable density in the vowel articulatory (or acoustic) space. As Donegan (2013) noted, there is an apparent affinity for vowels in the “lower left quadrant” of the vowel space (Stage 1 in Figure 7), a feature evident in the data reported by O. C. Irwin (1948), Davis and MacNeilage (1990), MacNeilage and Davis (1990), Kent and Bauer (1985), and Ménard et al. (2004). The high frequency of occurrence of vocants in this region likely reflects articulatory preferences based on the vocal tract anatomy in which the tongue is relatively wide and flat with a relatively anterior mass, the pharynx is short, dentition is emergent, and the vocal tract shape lacks the 90° craniovertebral angle that is characteristic of adults. These combined features are conducive to tongue carriage within the lower left quadrant, giving rise to vocants that resemble especially the adult vowels /ɪ ɛ æ ə/. 
The phonetic symbols are useful to acknowledge some degree of auditory similarity between infant and adult vowels but, as argued earlier, should not be taken as evidence of phonemic acquisition. The statistical preponderance of vocants in one quadrant of vowel space is a notable feature that is most confidently interpreted with respect to developmental anatomy and physiology.

A feature that may occur less frequently but is nevertheless of considerable importance is formation of the vowel space determined by the point vowels (the word “vowels” is now used in favor of “vocants”). The corner vowels /i/, /u/, and /ɑ/ appear in the first or second year of life (Buhr, 1980; Davis & MacNeilage, 1990; O. C. Irwin, 1948; Selby et al., 2000; Templin, 1957; Wellman et al., 1931). These vowels, shown in Stage 2 in Figure 7, establish the acoustic and articulatory boundaries of the vowel space, within which other vowels can be produced (Kent, 1992). This aspect of vowel production has a correlate in perception. Polka and Bohn (2003) reported that infants have a perceptual bias for vowels that are close to the periphery of the F1/F2 vowel space and suggested that a bias for these vowels is language universal. J.-L. Schwartz et al. (2005) cast these results in the framework of the dispersion–focalization theory of vowel systems, proposing that focalization (the convergence between two consecutive formants in a vowel spectrum) increases the perceptual salience of the peripheral vowels relative to other vowels not having this property. They commented that “focal vowels, more salient in perception, provide both a stable percept and a reference for comparison and categorization” (p. 425). Focalization can be seen in Figure 1 as the proximity of F2 and F3 for vowel /i/ and F1 and F2 for vowels /ɑ/ and /u/. Polka and Bohn (2011) accepted the J.-L. Schwartz et al. (2005) interpretation of the data in their earlier report and further proposed a natural referent vowel framework to account for phonetic development in children. The peripheral vowels are anchors within the natural referent vowel framework. The perceptual salience of the focal vowels has an articulatory counterpart insofar as these vowels are produced with extreme positions in the oral cavity (see Figure 2). 
As infants explore their vocal abilities, they may come to rely on peripheral vowels because of both the perceptual bias of focalization and the anatomic boundaries of vowel production.

Japanese and American English vowels differ in number but share similar spectral and temporal characteristics when they are produced in connected speech (Nishi et al., 2008). Using an acoustic–articulatory inversion model with scalable vocal tract size, Oohashi et al. (2017) noted the following sequence of vowel development in Japanese: At 6–9 months, coordination of the tongue body and lip aperture forms three vowels (front, back, and central); at 10–17 months, the jaw and tongue apex are recruited to differentiate the original three vowels into five; and at 18 months and older, tongue shape is further refined to produce the vowels of Japanese. Research is needed to determine if the same general pattern occurs in other languages.

Second Year of Life

Most infants make considerable progress in speech acquisition by the age of 24 months. Vowels typically produced at this stage are shown in Stage 3 in Figure 7. Vowel production is likely enhanced by several factors beyond increased familiarity with the ambient language. The vocal tract has been sufficiently remodeled so that the larynx is well separated from the nasopharynx, which contributes to a lengthening of the pharynx and greater motility of the tongue (de Boer & Fitch, 2010; Kent, 1992). Velopharyngeal closure for spontaneous speech production is reliably accomplished by about 19 months (Bunton & Hoit, 2018), so that vowels are produced with a well-defined oral resonance. In addition to changes in macroanatomy, there appear to be changes in microanatomy. For example, by 2 years of life, the proportion of slow-twitch and fast-twitch fibers in the tongue has reached adult values, which may be evidence that the tongue musculature is being adapted to the requirements of speech production (Sanders et al., 2013). Among these requirements is fatigue resistance during the performance of long stretches of speech. The high proportion of slow-twitch fibers in the posterior part of the tongue may be advantageous for the continuous vowel–vowel movements in conversational speech. This is not to argue for anatomic and biological determinism, but rather to say that musculoskeletal development contributes to the conditions favoring intelligible speech and the identification of phonetic units comparable to those in adult speech.

Vowel development in this period has been studied principally with diary studies (Leopold, 1947; Menn, 1976; Velten, 1943) and cross-sectional studies (Buhr, 1980; Davis & MacNeilage, 1990; O. C. Irwin, 1948; Selby et al., 2000; Templin, 1957; Wellman et al., 1931). The most general conclusion is that vowels at the extremes of the quadrilateral are acquired before those in more central positions, with the exception of an early preference for vowels in the low-front region of the quadrilateral (as discussed previously). This developmental pattern is consistent with establishing the acoustic and articulatory boundaries of the vowel space as a framework for vowel acquisition. Many theories have been advanced to account for phonological development beginning around 2 years of age.

At some point in development, it is appropriate and useful to describe vowel production in terms of phonetic mastery, that is, the age at which a sound produced in a specified context (e.g., a target word in an articulation test) is judged to be produced correctly by a certain percentage (e.g., 50%, 75%, 90%) of children or of productions by a given child. Inferences of mastery tend to be associated with morphology and the lexicon in that assessment tools typically rely on words as the units in which sounds are judged. This aspect of development is discussed in following sections for later ages. As noted earlier, by the end of the first year of life, vocants are being replaced by vowels, so that it becomes appropriate to describe development in phonemic terms and to use terms such as phonetic mastery.
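The dependence of a mastery age on the chosen criterion can be illustrated with a short Python sketch. The function name and the proportions below are hypothetical and serve only to show the arithmetic; they are not normative data.

```python
def mastery_age(proportion_correct_by_age, criterion):
    """Return the youngest age (in years) at which the proportion of
    children judged to produce the sound correctly meets the criterion,
    or None if no sampled age group reaches it."""
    for age in sorted(proportion_correct_by_age):
        if proportion_correct_by_age[age] >= criterion:
            return age
    return None


# Hypothetical proportions of children producing a target vowel correctly.
proportion_correct = {2: 0.55, 3: 0.80, 4: 0.92, 5: 0.97}

for criterion in (0.50, 0.75, 0.90):
    print(f"criterion {criterion:.0%}: mastery age =",
          mastery_age(proportion_correct, criterion))
```

With these illustrative values, the same vowel would be assigned a different age of mastery under each criterion, which is one reason why reported ages of mastery are not directly comparable across studies that use different criteria.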

Third Year of Life

Studies such as those by J. V. Irwin and Wong (1983) and Templin (1957) indicate that nonrhotic vowels are mastered in typical development by the age of 3 years. Although mastery may be delayed in some children, the received wisdom based on these early studies appears to be that vowel development is largely accomplished by this age except for the rhotics. However, more recent studies indicate that mastery of vowels in polysyllabic words and connected speech is not achieved until several years later (James et al., 2001; Wren et al., 2013). Possibly, the refinement of vowel production depends in part on other aspects of speech development, such as prosody. Acoustic research, summarized in a following section, also indicates that refinement of vowel production continues beyond the age of 3 years. Stage 4 in Figure 7 summarizes typical development at about this age.

Fourth to Eighth Year of Life

By the age of 4–5 years, the vowel system is typically complete except for rhotic vowels in some children. Stage 5 in Figure 7 represents the essentially mature vowel system. De Boer and Fitch (2010), drawing on research from Fitch and Giedd (1999), D. E. Lieberman and McCarthy (1999), and Vorperian et al. (2005), stated, “Independent studies have shown that a mature supralaryngeal vocal tract anatomy, with a rough match between oral and pharyngeal cavity length is not achieved until age 6–8 years” (p. 43). Although the usual perception-based criteria of phonetic mastery may be satisfied as early as 3 or 4 years, maturation of motor control is a continuing process and likely reflects the emergence of adultlike morphology of the vocal tract.

Children of this age have largely consolidated the perceptual, motor, and phonological aspects of speech into adultlike patterns (Ball & Gibbon, 2013). However, refinements continue in many children for both the perceptual and motor skills of speech (as shown in Table 1).

What Are the Distinctive Acoustic Properties of Rhotic Vowels and Diphthongs?

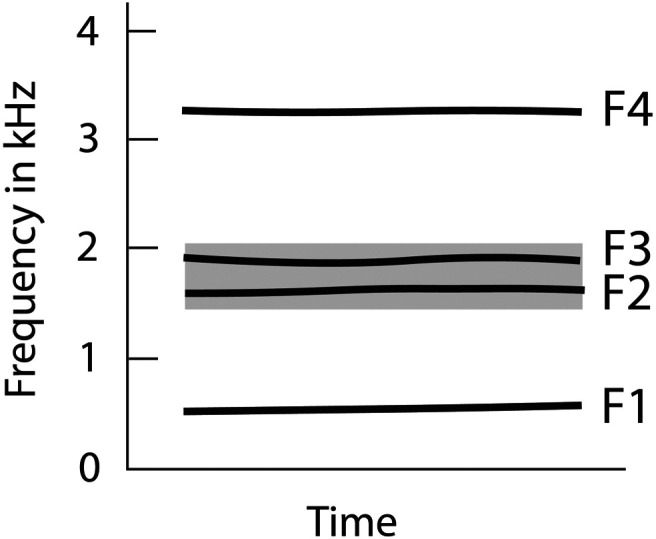

The rhotic vowels (such as the vowels in the word “further”) and the rhotic diphthongs (as in the words “ear,” “oar,” and “our”) have distinctive properties. As discussed, they often are the last vowels to be acquired by children (Stoel-Gammon & Pollock, 2008). The class of rhotics in American English (both consonants and vowels) shares a common acoustic feature: a reduced F3 frequency that sets them apart from other sound classes (Alwan et al., 1997). However, it may not be a low F3 frequency per se that is the hallmark of rhotic acoustics. Rather, it may be that a near-merging of F2 and F3 produces a spectral band of energy that is perceived as a “rhotic formant” (i.e., F2 and F3 are not discriminated separately but integrated as a single energy band). Figure 8 shows a stylized spectrogram of the formant pattern for a production of the rhotic vowel /ɝ/. The gray band represents the combined energy of F2 and F3. Heselwood and Plug (2011) conducted two perceptual experiments that showed that F3 contributes to the perception of rhoticity insofar as the proximity of F3 to F2 produces a dominant band of energy in the F2 frequency region. According to the broad-band auditory integration hypothesis (Bladon, 1983), the F2–F3 convergence is fused in perception if the two formants are within 3.5 Bark of each other. A surprising outcome of the perceptual studies reported by Heselwood and Plug was that reducing the amplitude of F3 actually enhanced perceived rhoticity. This result may be important in designing systems of visual feedback for the acoustic properties of rhotics.

Figure 8.

Stylized spectrogram of the rhotic vowel /ɝ/ showing the close positioning of F3 and F2. The gray band illustrates the combined energy of these formants and may be considered the rhotic formant (i.e., an integration of the energy in F2 and F3).

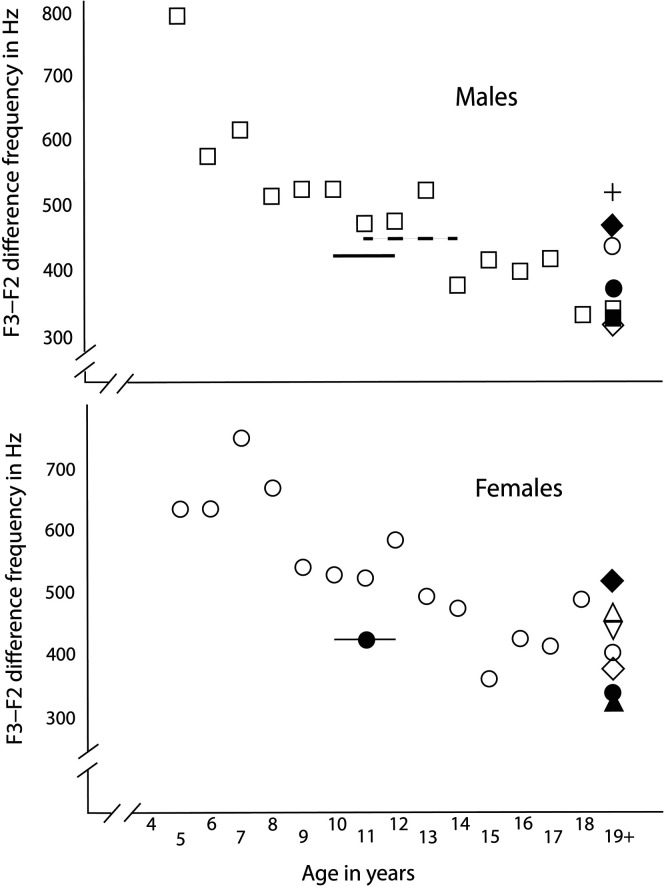

Rhotics can be characterized acoustically as either a ratio of F3/F2 or the difference in frequency between the two formants (Chung & Pollock, 2019; Flipsen et al., 2001). The frequency difference is useful in estimating the likelihood of broad-band auditory integration. Figure 9 shows for males and females, respectively, the F3–F2 frequency difference for vowel /ɝ/ across age. The mean difference is about 600–700 Hz for young children and about 400–500 Hz for adults. Across the ages represented, the F3–F2 differences for both males and females fall in rather tight bands, which is evidence of the salience of this acoustic cue of rhoticity.
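The measures named above can be computed together, as in the following Python sketch. It reports the F3–F2 difference in hertz, the F3/F2 ratio, and the F3–F2 distance in Bark, and it compares that distance with the 3.5-Bark limit of the broad-band auditory integration hypothesis. The conversion from hertz to Bark uses Traunmüller's (1990) approximation, which is an assumption made here because the source does not specify a conversion; the function names and formant values are likewise hypothetical.

```python
def hz_to_bark(frequency_hz):
    """Approximate Bark value of a frequency in Hz.

    Uses Traunmueller's (1990) formula; the choice of formula is an
    assumption of this sketch, not something stated in the review.
    """
    return 26.81 * frequency_hz / (1960.0 + frequency_hz) - 0.53


def f3_f2_measures(f2_hz, f3_hz, integration_limit_bark=3.5):
    """Return the difference, ratio, and Bark distance between F3 and F2."""
    distance_bark = hz_to_bark(f3_hz) - hz_to_bark(f2_hz)
    return {
        "difference_hz": f3_hz - f2_hz,
        "ratio": f3_hz / f2_hz,
        "distance_bark": distance_bark,
        "within_integration_limit": distance_bark <= integration_limit_bark,
    }


# Hypothetical formant values (Hz): a rhotic vowel with F3 lowered toward F2,
# and a nonrhotic vowel with widely separated F2 and F3.
rhotic = f3_f2_measures(f2_hz=1400.0, f3_hz=1850.0)
nonrhotic = f3_f2_measures(f2_hz=1200.0, f3_hz=2500.0)
print("rhotic:", rhotic)
print("nonrhotic:", nonrhotic)
```

A Bark-scaled distance is shown alongside the raw difference because the same difference in hertz corresponds to different auditory distances at different frequencies, which matters when comparing children and adults.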

Figure 9.

F3–F2 differences for vowel /ɝ/ as a function of speaker age in males and females. Data sources for males are as follows: dashed line = 11- to 14-year-olds in Angelocci et al. (1964); cross = Childers and Wu (1991); unfilled circle = Hagiwara (1995); solid line = 10- to 12-year-olds in Hillenbrand et al. (1995); unfilled squares = Lee et al. (1999); unfilled diamond = Peterson and Barney (1952); filled circle = B. Yang (1996); filled diamond = Zahorian and Jagharghi (1993). Data sources for females are as follows: filled diamond = Childers and Wu (1991); inverted triangle = Hagiwara (1995); solid line with filled circle = 10- to 12-year-olds in Hillenbrand et al. (1995); filled circle = adults in Hillenbrand et al. (1995); unfilled circles = Lee et al. (1999); filled triangle = Peterson and Barney (1952); unfilled diamond = B. Yang (1996); unfilled diamond = Zahorian and Jagharghi (1993).

What Is the Interaction Between Vowel Perception and Vowel Production in Early Development?

Infants preferentially attend to vowel sounds that have infant-like voice pitch and/or formants over vowel sounds that do not have infant-like properties (Masapollo et al., 2016). The authors interpreted this finding to mean that infants' production of speech sounds influences their perception of infant speech. Research on cortical auditory evoked potentials (McCarthy et al., 2015) revealed that 4- to 5-month-old infants have two-dimensional perceptual maps that reflect F1 and F2 acoustic differences between vowels, but 10- to 11-month-old infants have maps that are less related to acoustic differences but tend to give greater weight to adjacent vowels in the vowel quadrilateral (e.g., /i/–/ɪ/). These results were interpreted to indicate a shift from a primarily acoustic to a more phonologically driven processing. These two studies are examples of perception–production interaction in the development of vowel systems in children. Evidence of this interaction can be seen in a wide range of studies, including speech perception and production in infants (Altvater-Mackensen et al., 2016; Bruderer et al., 2015; Majorano et al., 2014), perception–production transfer from birth language memory (Choi et al., 2017), vowel perception and production in adults (Fox, 1982), and adjustment to an articulatory disruption (Seidl et al., 2018). Taken together, these discoveries point to neural processes that link the perceptual experience of speech sounds with the motor processes involved in their production. Computational models incorporating this idea are discussed in a later section (How Can Sensory-Motor Mapping Serve in a Theoretical Framework for Treating Vowel Production and Perception?).

Can Acoustic Measures Serve as an Index of the Precision of Vowel Production?

Acoustic methods have contributed to the study of variability in both the temporal and spectral aspects of speech production (Kent, 1976; Lee et al., 1999). Work on vowels pertains primarily to formant patterns and segment durations. Perceptual methods, such as phonetic transcription, are not sensitive to all variations in vowel articulation. Acoustic data on formant patterns and durations evince variability even in tokens that are judged to represent the same phoneme. In motor behavior generally, precision is often assessed by determining the variability in repeated tokens of a behavior, and a typical hypothesis in the study of motor skills is that precision will increase (and variability will decrease) with maturation. Increased precision of vowel production has been reported in several studies (Eguchi & Hirsh, 1969; Gerosa et al., 2007; Lee et al., 1999; J. Yang & Fox, 2013). Adultlike precision is reached at about 12 years of age, about 2 or 3 years later than adultlike precision for temporal aspects of speech.
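One common way to express token-to-token variability is the coefficient of variation (standard deviation divided by the mean) computed over repeated productions of the same vowel. The Python sketch below applies it to F2 values; the choice of this particular index and all of the values are illustrative assumptions rather than the method or data of any study cited here.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean (unitless)."""
    return stdev(values) / mean(values)


# Hypothetical F2 values (Hz) for repeated tokens of one vowel.
child_f2_tokens = [2900.0, 3150.0, 2700.0, 3300.0, 2950.0]
adult_f2_tokens = [2250.0, 2300.0, 2280.0, 2320.0, 2260.0]

child_cv = coefficient_of_variation(child_f2_tokens)
adult_cv = coefficient_of_variation(adult_f2_tokens)
print(f"child CV = {child_cv:.3f}, adult CV = {adult_cv:.3f}")
```

Because the coefficient of variation is normalized by the mean, it allows a rough comparison between speakers whose formant frequencies differ in absolute value, as they do between children and adults.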

Low vowels are produced with greater acoustic (and presumably articulatory) variability than high vowels (J. Yang & Fox, 2013). The reasons for this difference may be that production of the high vowels benefits from (a) somatosensory feedback of the tongue against the palate, teeth, or both (Gick et al., 2017) and (b) lateral lingual bracing against the upper structures of the oral cavity (Gick et al., 2017). In addition, the high vowels may have a more critical constriction to achieve the desired acoustic results, which is consistent with greater coarticulatory resistance in these vowels (Recasens & Rodríguez, 2016).

What Do Acoustic Data Add to the Knowledge of Phonetic Mastery of Vowels?

The developmental primacy of vowels seems desirable from the perspective of motor control given that consonant articulation often is adjusted to the articulatory features of vowels (e.g., the articulatory accommodation of velar stops to the following vowel). From the perspective of phonetic mastery, vowels as a class appear to be produced more accurately than consonants, but perceptual judgments may not be sensitive to all aspects of speech maturation. Formant frequency data show developmental changes in the organization of acoustic vowel categories beyond the age typically given for the phonetic mastery of vowels in English (J. Yang & Fox, 2013); Mandarin (J. Yang & Fox, 2017); Hungarian (Auszmann & Neuberger, 2014); and, in a multilanguage study, Cantonese, American English, Greek, Japanese, and Korean (Chung et al., 2012). Because these languages represent different language families and different vowel inventories, it appears that stabilization of vowels beyond supposed phonetic mastery is universal. The implication is that children continue to refine the characteristics of vowels between the ages of 3 and 7 years and perhaps even later (up to age 13 years according to Auszmann & Neuberger, 2014). Similarly, protracted development of aspects of speech motor control has been reported in acoustic and kinematic studies showing improved precision of speech production until at least 16 years of age (Walsh & Smith, 2002) and perhaps as late as 30 years (Schötz et al., 2013). Fixing an exact age for maturation is problematic because different aspects of speech motor skill may have distinct developmental trajectories. Phonetic mastery, as typically determined by perceptual judgments, is useful in determining overall conformity with a language's phonological system, but it is not sensitive to the full spectrum of underlying processes of sensory and motor maturation. 
Refinement of speech motor control is an ongoing process that is adapted to anatomic changes, sociocultural influences, and perhaps other variables yet to be identified.

How Is Vowel Production Compensated for in Articulatory and Auditory Perturbations?

A given vowel often can be produced with substantially different underlying articulations, so long as an acoustically critical vocal tract shape is formed for the specific vowel. Particularly notable in this respect are different contributions of jaw and tongue positions. Because the jaw provides carriage for the tongue, articulatory movements of the tongue are predicated on the current position of the jaw. In both research and clinical practice, bite blocks are a convenient way to create perturbations or adjustments requiring compensatory articulation (Bahr & Rosenfeld-Johnson, 2010; Crary, 1995; Dworkin, 1978; Netsell, 1985). Adults are capable of making compensations to a bite block on the first glottal cycle of phonation, that is, well before auditory feedback is available to compute and amend motor commands (Gay et al., 1981). Virtually instantaneous compensation occurs even in a condition of bilateral anesthetization of the temporomandibular joint, application of a topical anesthetic to reduce tactile information from the oral mucosa, and white noise masking to reduce auditory information (Kelso & Tuller, 1983). However, Hoole (1987) reports a case study in which auditory masking prevented bite block compensation in a 29-year-old man who had suffered closed-head trauma and whiplash injury to the cervical cord in a sporting accident. The man apparently recovered completely from postaccident dysarthria but had a persisting loss of oral sensation extending from the pharynx to the lips.

Studies that have been aimed at determining when children are capable of bite block compensation have yielded somewhat discrepant results. Gibson and McPhearson (1979/1980) concluded that bite block compensation is incomplete for children aged 6–7 years. However, apparently successful compensation was reported in several later studies, including studies of de Jarnette (1988), Baum and Katz (1988), and Smith and McLean-Muse (1987). De Jarnette found that compensation was accomplished by all participants in three groups (five children with typical speech development, aged 6.4–7 years; five children with moderate articulatory disorders, aged 5.9–8.1 years; and five adults with typical speech, aged 19.3–32.1 years). Baum and Katz observed no differences in F1 or F2 between jaw-free and jaw-fixed conditions in two groups of children aged 4–5 and 7–8 years. In a kinematic study, Smith and McLean-Muse observed essentially no differences between children and adults in compensating to a bite block, concluding that “the ability to produce speech under experimental conditions such as these is apparently acquired by normally developing children by at least 4–5 years of age” (p. 752). As noted earlier, bite blocks are sometimes used in assessing and treating speech disorders, and it is therefore important to know the age at which compensation for bite blocks is accomplished. Apparently, this ability is present by about 4 years of age in typically developing children. Acoustic measurement should be considered when making clinical decisions, such as determining what effect a bite block has on an individual speaker's vowel production.

The effect of auditory perturbations has been investigated by introducing formant changes in vowels produced by talkers. MacDonald et al. (2012) studied responses in a real-time formant perturbation task in three age groups: toddlers, children, and adults. Children and adults reacted by changing their vowels in a direction opposite to the perturbation, that is, correcting for it. In contrast, the toddlers did not change their production in response to altered feedback. Similarly, Ménard et al. (2008) concluded from a study of compensation strategies for a lip-tube perturbation that 4-year-old children did not integrate the auditory feedback in a way that contributed to motor learning, a failure that was attributed to immature internal models. Perturbation tasks of this kind may contribute to a deeper understanding of developmental speech sound disorders. Terband et al. (2014) reported that, although most children with such disorders can detect discrepancies in auditory feedback and can adapt their target representations, they fail to compensate for the perturbed auditory feedback.

How and When Do Language and Dialect Differences Influence Vowel Production in Infant Vocalizations and Early Childhood?

In an early and influential report on cross-language differences in babbling, de Boysson-Bardies et al. (1989) obtained F1 and F2 frequencies of vowels produced by twenty 10-month-old infants from Parisian French, London English, Hong Kong Cantonese, and Algiers Arabic language backgrounds. Significant differences in formants were observed between infants across language backgrounds, and these differences reflected those in adult speech in the corresponding languages. This study showed that, even before the age of 1 year, infants are adjusting their vowel production in ways that accord with the ambient language.

The effect of ambient language on infant vocalizations has been corroborated in a number of studies (Alhaidary & Rvachew, 2018; L. M. Chen & Kent, 2005, 2010; de Boysson-Bardies & Vihman, 1991; Engstrand et al., 2003; Grenon et al., 2007; Rothgänger, 2003; Rvachew et al., 2008, 2006). This line of research shows that infants are aware of their linguistic environment and that they reproduce selected aspects of the ambient language in their own vocalizations. This auditory–motor correspondence is consistent with the hypothesis that, even in the first year of life, infants are shaping their vocalizations in ways that are compatible with the language to be learned. Research on speech perception in infants has given rise to influential theories, two of which are discussed next.

The “Native Language Magnet/Neural Commitment Theory” or “Native Language Magnet Model–Expanded” (Kuhl, 1992; Kuhl et al., 2008) accounts for the developmental processes by which the ability of infants to discriminate speech sounds is progressively adapted to their native language. It is proposed that early auditory experience produces a neural commitment to the phonetic units of the native language, forming prototypical representations of the phonemic inventory. This process enhances the auditory processing of native sounds but interferes with the detection of the sounds in nonnative languages. The theory integrates several processes and abilities, including cognitive and auditory processing skills, connections between speech perception and production, statistical learning, and social factors that affect learning. Therefore, the Native Language Magnet Model–Expanded proposes that speech perception develops through the interplay of several factors in the communicative environment. It can thereby account for the influence of the ambient language and the maturation of sensory and cognitive abilities.

The “Processing Rich Information from Multidimensional Interactive Representations” framework (Werker & Curtin, 2005) holds that the speech signal is processed by three dynamic filters (initial biases, developmental level of the child, and requirements of the specific language task at hand). The framework was developed to address two fundamental issues in infant speech perception. The first, that speech perception is both categorical and gradient, is resolved by the use of multidimensional planes. The second, that perception is influenced by both ontogenetic development and online processing, is handled by assuming that performance is continually changing and flexible as a function of age and task so that processing and representations are interwoven. Exposure to the ambient language is one aspect of the nascent representations.

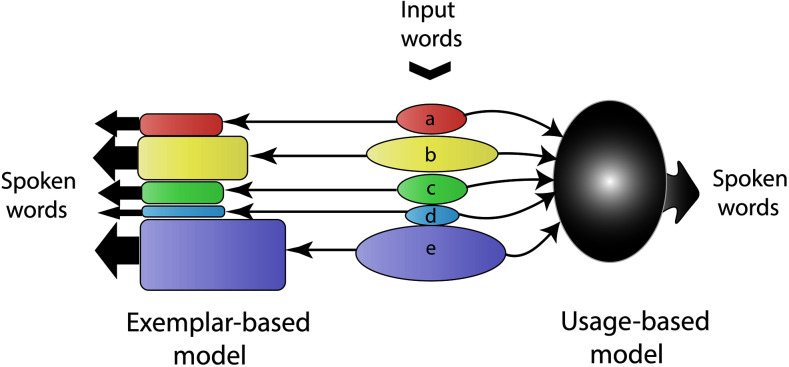

Acoustic measures of formant patterns show that vowel production is influenced by regional dialects of American English (Clopper et al., 2005; Fox & Jacewicz, 2009) and that children's vowel systems are regionally distinct by the age of 8–12 years (Jacewicz et al., 2011). Jacewicz et al. (2011) concluded that children acquire not only systemic relations among vowels but also dialect-specific patterns of formant dynamics. Vowel production in children may be influenced by variability of lexical exposure and the frequency of words encountered. Levy and Hanulíková (2019) concluded in an acoustic study of vowel production that children who experience greater input variability produce more variable vowels. This conclusion is particularly important for children in bilingual or bidialectal environments, for whom the diversity of acoustic input for vowels may be reflected in vowel production variability. Such an effect can be explained by either usage-based or exemplar-based models, as graphically conceptualized in Figure 10, which depicts versions of the two models. The input words labeled “a” through “e” represent word productions that vary in frequency of occurrence in a child's auditory experience. For example, the word production labeled “e” has a high frequency of occurrence. Usage-based models assume that linguistic units are gradient categories formed in continuous fashion from experienced tokens (Bybee & Beckner, 2010). Exemplar-based models assume that every perceived variant of a word gives rise to an exemplar in a direct acoustic-to-lexical mapping. As shown in Figure 10, words that are heard more frequently have a greater number of exemplars (a larger box in the illustration) than infrequent words (Schweitzer et al., 2015) and are therefore more likely to be produced.

Figure 10.

Illustration of exemplar-based and usage-based models to account for the effects of diversity in input on production of vowels. The exemplar-based model assumes that every perceived variant of a word (the boxes labeled “a” through “e”) gives rise to an exemplar in a direct acoustic-to-lexical mapping. Frequency of occurrence is represented by the size of the box for the exemplar. The usage-based model assumes that linguistic units are gradient categories formed in continuous fashion from experienced tokens. For both models, spoken words are produced in variable fashion reflecting the input diversity.

How Do Speakers Adjust Their VSA in Accord With Listener Characteristics and Communication Settings?

As noted earlier, VSA is a measure of the area contained within the vowel quadrilateral or vowel triangle. When speakers are asked to speak clearly (or do so spontaneously in an effort to communicate successfully under less than optimal conditions), they adjust their speech in several ways, often including expansion of the vowel space (called vowel hyperarticulation by some authors or overarticulation by some clinicians). Such expansion is a frequently noted characteristic of infant-directed speech, and it has been suggested that parents make this adjustment as a didactic strategy to aid children's speech development. Talkers alter their speaking patterns for various types of listeners, including pets as well as young children and foreigners (Uther et al., 2007). However, what appears to be unique in infant-directed speech as compared with pet-directed speech is that expansion of vowel space occurs in the former and not in the latter (unless the pet in question is a talking parrot, in which case a modest hyperarticulation is performed; Xu et al., 2013).
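The area computation behind VSA can be sketched in a few lines. The example below is a minimal illustration, assuming that each corner vowel is summarized by a single mean (F1, F2) pair in hertz and that the polygon area is obtained with the standard shoelace formula. The function name `polygon_area` and the formant values are hypothetical and are not taken from any study reviewed here.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]  # one vowel as (F1, F2), both in Hz


def polygon_area(vertices: Sequence[Point]) -> float:
    """Area of a simple polygon by the shoelace formula.

    Vertices must be listed in order around the perimeter; the result
    is in Hz^2 when the coordinates are formant frequencies in Hz.
    """
    n = len(vertices)
    if n < 3:
        raise ValueError("need at least three corner vowels")
    twice_area = 0.0
    for k in range(n):
        x1, y1 = vertices[k]
        x2, y2 = vertices[(k + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical mean formants for four corner vowels (illustrative only).
corners = {
    "i": (270.0, 2290.0),
    "ae": (660.0, 1720.0),
    "a": (730.0, 1090.0),
    "u": (300.0, 870.0),
}

# Quadrilateral VSA uses all four corners; triangular VSA uses /i/, /a/, /u/.
quad_vsa = polygon_area([corners[v] for v in ("i", "ae", "a", "u")])
tri_vsa = polygon_area([corners[v] for v in ("i", "a", "u")])
print(f"quadrilateral VSA: {quad_vsa:.0f} Hz^2")
print(f"triangular VSA:    {tri_vsa:.0f} Hz^2")
```

Because the result depends on which corner vowels are included and on how each vowel is reduced to a single point, values computed in this way are comparable only across analyses that make the same choices.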

Vowel hyperarticulation also may be used intentionally or unintentionally by speech-language clinicians, especially when addressing young children. Mothers using infant-directed speech appear to use a raised larynx to achieve an expanded vowel space (Kalashnikova et al., 2017) even as they deploy an increased vocal pitch, which may be a signal for nonaggressiveness or rapport. Both hypoarticulation expressed as a reduced VSA and hyperarticulation expressed as an increased VSA are of interest in assessing vowel production in developing and disordered speech. The main point to be made is that VSA is modulated by several factors, including characteristics of the listener and the communication environment. In other words, VSA is not an invariant physical value of the vocal tract of a given speaker.

In What Ways Are Vowel Perception and Production Vulnerable to Speech and Language Disorders in Children and Adults?

As reviewed in this section, both the perception and production of vowels are affected by a number of communication disorders in children and adults. We begin with a discussion of VSA (or similar acoustic indices) used to quantify atypical acoustic patterns of vowel production. Then, examples are given of vowel disorders in selected clinical populations.

The Acoustic Vowel Space in Communication Disorders, a Focus on Production

VSA is perhaps the most frequently reported index of disordered vowel production in both children and adults and is one of the most extensively reported acoustic measures for any aspect of speech articulation. Sandoval et al. (2013) commented that, “Vowel space area (VSA) is an attractive metric for the study of speech production deficits and reductions in intelligibility, in addition to the traditional study of vowel distinctiveness.” However, as discussed in the following, the usual method of calculating VSA has come under criticism, and it is likely that, in the future, other metrics will gain favor. Alternative metrics are summarized later in this section. In the immediately following discussion, VSA is emphasized because of its frequent mention in the literature under review.

For children's speech, a reduced VSA has been noted for several disorders, including cerebral palsy and other childhood neurological disorders (Higgins & Hodge, 2002; Hustad et al., 2010; Liu et al., 2005; Narasimhan et al., 2016), dyslexia (Bertucci et al., 2003), residual speech sound disorders (Spencer et al., 2017), and Down syndrome (Bunton & Leddy, 2011). These results can be explained largely by auditory, motor, or auditory–motor limitations. However, increased VSA also has been observed in clinical populations, including two groups of children with hearing impairment (deaf with cochlear implants and hearing-impaired with hearing aids; Baudonck et al., 2011). The authors suggested that the enlarged VSA was the consequence of overarticulation (synonymous with hyperarticulation, mentioned earlier), a compensation for reduced auditory feedback by relying on proprioceptive feedback during speech production.

Reduced VSA has also been reported for adults with various disorders and conditions, including, but not limited to, acquired dysarthria (Bang et al., 2013; S. Kim et al., 2014; G. S. Turner et al., 1995; Weismer et al., 2001), glossectomy (Kaipa et al., 2012; Takatsu et al., 2017; Whitehill et al., 2006), oral or oropharyngeal cancer (de Bruijn et al., 2009; van Son et al., 2018), Class III malocclusion (Xue et al., 2011), stuttering (Blomgren et al., 1998; Hirsch et al., 2008), and psychological distress or self-reported symptoms of depression and posttraumatic stress disorder (Scherer et al., 2016, 2015). Increased VSA is also likely to occur in some clinical populations. Using a measure of dispersion of density, Kelley and Aalto (2019) concluded that head and neck cancer patients may use hyperarticulation strategies to increase the clarity of their speech postsurgery.

It is becoming clear that VSA as typically measured may not be sensitive to all features of clinical interest, and it is advisable to consider other analyses in preference to VSA or to complement it (Karlsson & van Doorn, 2012; Kent & Vorperian, 2018). For example, the vowel articulation index and its inverse, the formant centralization ratio, effectively reduce variability between speakers while maintaining high sensitivity to vowel centralization. These indices therefore differentiate disordered speech more effectively than VSA in persons with the reduced articulatory movements seen in hypokinetic dysarthria (Sapir et al., 2011). The point cloud has been posited as a good tool for childlike speech models (Story & Bunton, 2016), as it treats the acoustic space as a three-dimensional cloud (Coen et al., 2015). One advantage of this approach is that little to no information is abstracted or lost, as happens with derived indices such as VSA or the vowel articulation index. The point cloud is faithful to the source data and reveals the distribution of values rather than a summary statistic such as the mean. Triangular VSA has been posited to correlate closely with intelligibility in normal speakers (Bradlow et al., 1996) and to account for dialectal differences in American English (Fox & Jacewicz, 2009). Because different analyses have relative strengths and weaknesses with different speech patterns, clinicians and researchers are called upon to make an educated decision on which tool to use, considering the client's age, etiology of disability, and functioning level.
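To make the relation between the two ratio indices concrete, the sketch below computes the formant centralization ratio (FCR) and the vowel articulation index (VAI) from the corner vowels /i/, /u/, and /a/. It assumes the formulation commonly given in the literature, FCR = (F2u + F2a + F1i + F1u) / (F2i + F1a), with VAI as its reciprocal; the formula is not stated in this review, so it should be checked against the original reports before use. All function names and formant values are hypothetical.

```python
def formant_centralization_ratio(
    f1_i: float, f2_i: float,
    f1_u: float, f2_u: float,
    f1_a: float, f2_a: float,
) -> float:
    """FCR under the assumed definition; formants in Hz.

    The numerator holds the formants that rise when vowels centralize,
    and the denominator holds those that fall, so FCR increases with
    centralization.
    """
    return (f2_u + f2_a + f1_i + f1_u) / (f2_i + f1_a)


def vowel_articulation_index(
    f1_i: float, f2_i: float,
    f1_u: float, f2_u: float,
    f1_a: float, f2_a: float,
) -> float:
    """VAI, taken here as the reciprocal of FCR."""
    return 1.0 / formant_centralization_ratio(f1_i, f2_i, f1_u, f2_u, f1_a, f2_a)


def centralize(value: float, center: float, amount: float) -> float:
    """Move a formant value a fraction `amount` (0 to 1) toward `center`."""
    return value + amount * (center - value)


# Hypothetical corner-vowel formants (illustrative only), ordered
# F1 /i/, F2 /i/, F1 /u/, F2 /u/, F1 /a/, F2 /a/.
typical = (270.0, 2290.0, 300.0, 870.0, 730.0, 1090.0)

# Simulate centralization by shrinking each formant halfway toward the
# mean F1 or mean F2 of the three corner vowels.
f1_center = (typical[0] + typical[2] + typical[4]) / 3.0
f2_center = (typical[1] + typical[3] + typical[5]) / 3.0
centralized = tuple(
    centralize(v, f1_center if k % 2 == 0 else f2_center, 0.5)
    for k, v in enumerate(typical)
)

fcr_typical = formant_centralization_ratio(*typical)
fcr_centralized = formant_centralization_ratio(*centralized)
vai_typical = vowel_articulation_index(*typical)
print(f"FCR typical: {fcr_typical:.3f}, centralized: {fcr_centralized:.3f}")
print(f"VAI typical: {vai_typical:.3f}")
```

Because both indices are ratios of formant sums, a uniform scaling of all formants (as might arise from a difference in vocal tract length) leaves them unchanged, which is one way to see why they are less sensitive to speaker differences than an area in Hz².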

The Backbone of Production: Perception