Abstract

Background:

Performance assessments based on in-hospital mortality for intensive care unit patients can be affected by discharge practices such that differences in mortality may reflect variation in discharge patterns rather than quality of care. The degree to which this “discharge bias” varies by hospital type is unknown.

Objective:

To quantify variation in discharge bias across hospitals and determine the hospital characteristics associated with greater discharge bias.

Design:

Retrospective cohort study.

Setting:

Nonfederal Pennsylvania hospital discharges in 2008.

Patients:

Eligible patients were aged 18 years or older and admitted to an intensive care unit.

Measurements:

We used logistic regression with hospital-level random effects to calculate hospital-specific risk-adjusted 30-day and in-hospital mortality rates. We then calculated discharge bias, defined as the difference between 30-day and in-hospital mortality rates, and used multivariate linear regression to compare discharge bias across hospital types.

Results:

A total of 43,830 patients in 134 hospitals were included in the analysis. Mean (standard deviation) risk-adjusted hospital-specific in-hospital and 30-day ICU mortality rates were 9.6% (1.3) and 13.1% (1.6), respectively. The mean hospital-specific discharge bias was 3.5% (1.3). Discharge bias was smaller in large compared to small hospitals, making large hospitals appear comparatively worse from a benchmarking standpoint when using in-hospital instead of 30-day mortality.

Limitations:

This was a retrospective study using administrative data and restricted to hospital discharges from Pennsylvania.

Conclusions:

Discharge practices bias in-hospital ICU mortality measures in a way that disadvantages large hospitals. Accounting for discharge bias will prevent these hospitals from being unfairly disadvantaged in public reporting and pay-for-performance.

Introduction

Hospital performance assessments based on mortality are an important component of efforts to improve healthcare quality in the United States (US). Mortality-based performance measures are frequently used not only for internal benchmarking but also for large-scale quality improvement initiatives such as public reporting and pay-for-performance. Increasingly, these efforts are focusing on the intensive care unit (ICU), where morbidity, mortality, and costs are high (1–3). Indeed, the National Quality Forum (NQF) recently endorsed in-hospital mortality for ICU patients as a hospital quality measure (4), and several regional and national benchmarking initiatives use this measure to assess hospital quality (5, 6). However, a large body of data suggests that in-hospital mortality measurement can be biased by discharge practices (7). Risk-adjusted in-hospital mortality for ICU patients improves as the number of transfers to post-acute care facilities or other hospitals increases (8–11), and early post-discharge death for these patients is associated with discharge to an acute care hospital or subacute facility rather than home (12). Additionally, in a demonstration project of public reporting of in-hospital mortality for high-risk patients, patients were increasingly transferred to post-acute care facilities, shifting deaths from the hospital to the period immediately after discharge without reducing 30-day mortality (13). This finding suggests that in-hospital mortality may reflect variation in discharge patterns more than quality of care.

An alternative to in-hospital mortality is a time-specific mortality measure, such as mortality within 30 days of hospital admission. Time-specific measures are currently used in national, payer-based programs such as the Centers for Medicare & Medicaid Services' Hospital Compare program, in which post-discharge mortality data are available for all patients (14). These measures may be less susceptible to discharge bias than in-hospital mortality. However, most state and regional quality improvement programs do not have access to post-discharge outcomes, making 30-day mortality harder to calculate. Moreover, as long as the difference between in-hospital and 30-day mortality (so-called “discharge bias”) is relatively small and consistent across hospitals, it may not be of sufficient concern to preclude the use of in-hospital mortality, especially since other benefits of small regional programs, such as high-quality risk adjustment, may outweigh any harms.

Whether the degree of discharge bias in ICU patients is large enough to meaningfully alter performance assessment, and whether discharge bias disproportionately affects certain types of hospitals, are unknown. In this study, we quantify discharge bias for ICU patients and examine hospital characteristics associated with greater discharge bias. We hypothesized that discharge bias would vary across hospitals and differ based on hospital characteristics. Some of the results of this study have been previously reported in abstract form (15).

Methods

Study Design

We performed a retrospective cohort study of patients admitted to ICUs in Pennsylvania in fiscal year 2008 using data from the Pennsylvania Health Care Cost Containment Council (PHC4) state hospital discharge dataset. PHC4 is a non-profit government agency which collects detailed clinical and administrative information on all patients admitted to non-federal Pennsylvania hospitals for benchmarking and research purposes. PHC4 data have been used for numerous observational studies involving ICU patients (16–18). The PHC4 data were linked to the Pennsylvania Department of Health’s vital status files to obtain post-discharge death information for each patient and to Medicare’s Healthcare Cost Report Information System (HCRIS) to obtain hospital characteristics.

Patient Selection

All patients admitted to an ICU, defined using ICU-specific revenue codes, were eligible for the study. We excluded patients younger than 18 years, those transferred from an acute care hospital ICU, patients readmitted to an ICU, those with missing or invalid risk-adjustment variables, patients admitted to hospitals that could not be merged with HCRIS, and patients admitted to hospitals with fewer than 50 annual ICU admissions. This study was reviewed and approved by the institutional review board of the University of Pittsburgh. A waiver of individual informed consent was granted because of the minimal risk to participants.

Variables

The primary outcome variable was discharge bias, defined as the difference between hospital-specific risk-adjusted 30-day and in-hospital ICU mortality. Under this parameterization, greater discharge bias makes a hospital appear of relatively higher quality when using in-hospital instead of 30-day mortality. The secondary outcome variable was change in hospital rank, defined as the difference between hospital rank when using in-hospital ICU mortality versus 30-day ICU mortality, such that a greater change in rank makes a hospital appear of relatively higher quality when using in-hospital instead of 30-day mortality. Hospitals were ranked in order according to their 30-day and in-hospital mortality rates, with lower ranks assigned to hospitals with higher mortality.
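
In symbols (notation introduced here for illustration only), let $\hat{m}^{30}_h$ and $\hat{m}^{\mathrm{IH}}_h$ be the risk-adjusted 30-day and in-hospital ICU mortality of hospital $h$, and let $R^{30}_h$ and $R^{\mathrm{IH}}_h$ be the corresponding ranks, with rank 1 assigned to the highest mortality:

$$
\mathrm{DB}_h = \hat{m}^{30}_h - \hat{m}^{\mathrm{IH}}_h, \qquad \Delta R_h = R^{\mathrm{IH}}_h - R^{30}_h .
$$

Larger values of either quantity make a hospital look relatively better when in-hospital mortality is used. Because the mean of differences equals the difference of means, the mean discharge bias reported in the Results follows directly from the mean risk-adjusted rates: $13.1\% - 9.6\% = 3.5$ percentage points.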

The primary exposure variables were key hospital characteristics including hospital bed size, teaching status defined using resident-to-bed ratio (non-teaching 0, small teaching >0 to <0.25, large teaching ≥0.25), ownership (non-profit or for-profit), urban/rural status according to metropolitan statistical area (MSA) size, safety-net status defined by the percentage of Medicaid patients (divided into tertiles), health maintenance organization (HMO) penetration defined by the percentage of commercial HMO patients (divided into tertiles), and the number of long-term acute care hospital (LTAC) beds in each hospital’s Dartmouth Atlas hospital referral region (HRR). These hospital characteristics were chosen based on a conceptual model of hospital-level factors postulated to affect discharge practices, such as how likely a hospital is to transfer sick patients to a higher level of care, the ability to transfer patients to other facilities, and motivation to increase patient turnover and financial earnings (19–21).

Statistical Analysis

Our analysis had four components. First, we calculated hospital-specific risk-adjusted in-hospital and 30-day mortality rates using patient-level hierarchical regression models as previously described (22, 23). These models account for variation in case-mix across hospitals as well as the inherent unreliability of mortality measurement in hospitals with small sample sizes. Variables used in risk adjustment included patient age, gender, race, admission source, comorbidities, primary diagnosis, and predicted risk of death on presentation. Age, gender, race, and admission source were directly available in the PHC4 dataset. Comorbidities were defined using the Deyo modification of the Charlson Comorbidity Index (24). Primary diagnosis was categorized into groups using the Agency for Healthcare Research and Quality Clinical Classifications Software (25). Predicted risk of death was determined using the MediQual Atlas predicted mortality risk score, a validated severity of illness score calculated by PHC4 for each patient using key clinical and demographic variables measured at the onset of hospitalization (26).
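
A generic random-intercept logistic model of the kind described above can be written as follows. The study's exact specification is the one described in references 22 and 23; the predicted-to-expected formula for converting model output into a hospital-specific rate is one common choice and is stated here as an assumption, not as the authors' method. For patient $i$ in hospital $h$, with death indicator $Y_{ih}$ (in-hospital or within 30 days, fitted as separate models) and risk-adjustment covariates $\mathbf{x}_{ih}$:

$$
\operatorname{logit} \Pr(Y_{ih} = 1) = \beta_0 + \mathbf{x}_{ih}^{\top}\boldsymbol{\beta} + u_h, \qquad u_h \sim \mathcal{N}(0, \sigma_u^2),
$$

$$
\hat{m}_h = \frac{\sum_{i} \operatorname{expit}\left(\hat{\beta}_0 + \mathbf{x}_{ih}^{\top}\hat{\boldsymbol{\beta}} + \hat{u}_h\right)}{\sum_{i} \operatorname{expit}\left(\hat{\beta}_0 + \mathbf{x}_{ih}^{\top}\hat{\boldsymbol{\beta}}\right)} \times \bar{Y},
$$

where $\bar{Y}$ is the overall observed mortality rate. Because the estimated hospital effects $\hat{u}_h$ are shrunk toward zero when a hospital contributes few patients, small hospitals are pulled toward the average, which is how such models address the unreliability of mortality estimates from small samples.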

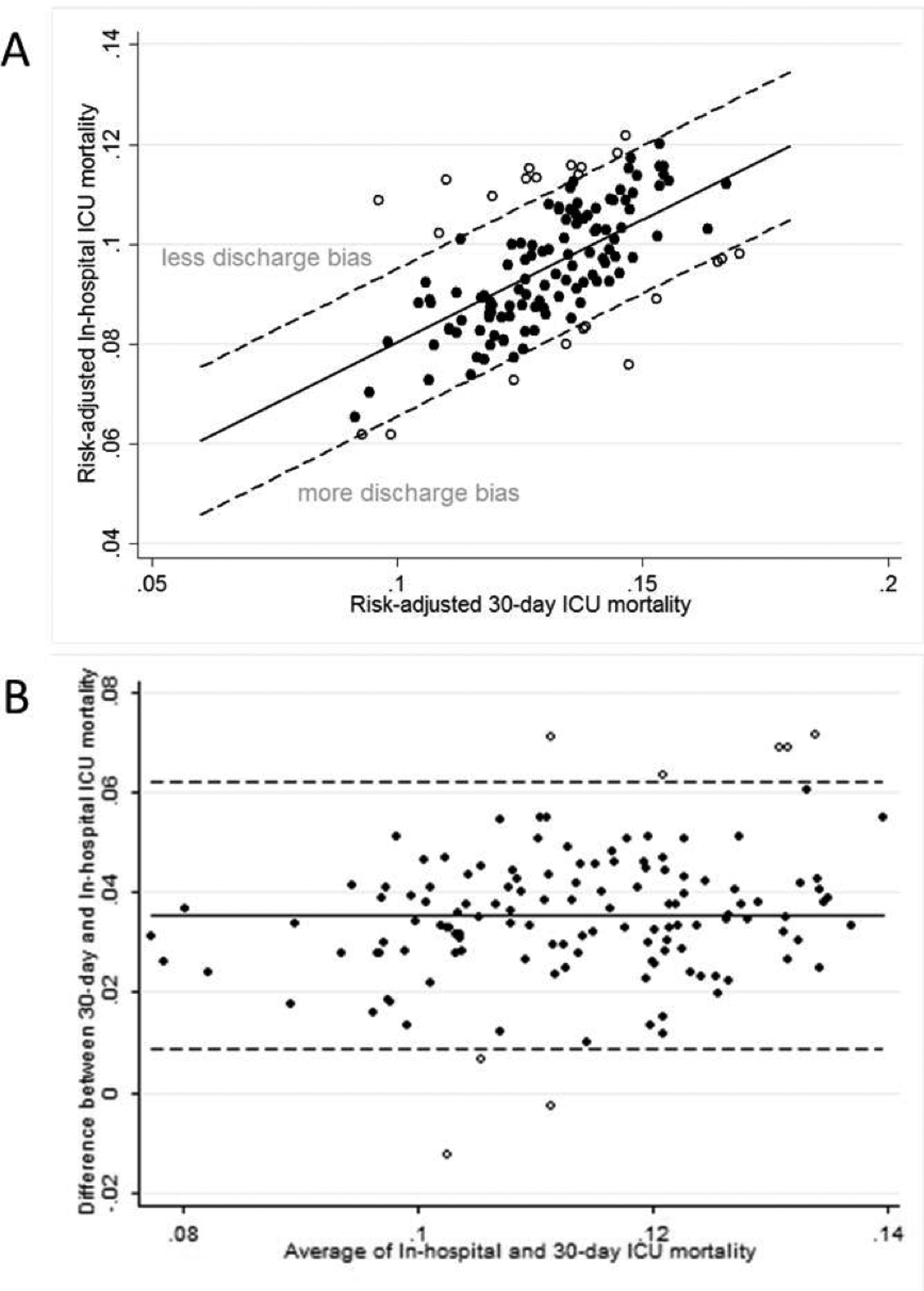

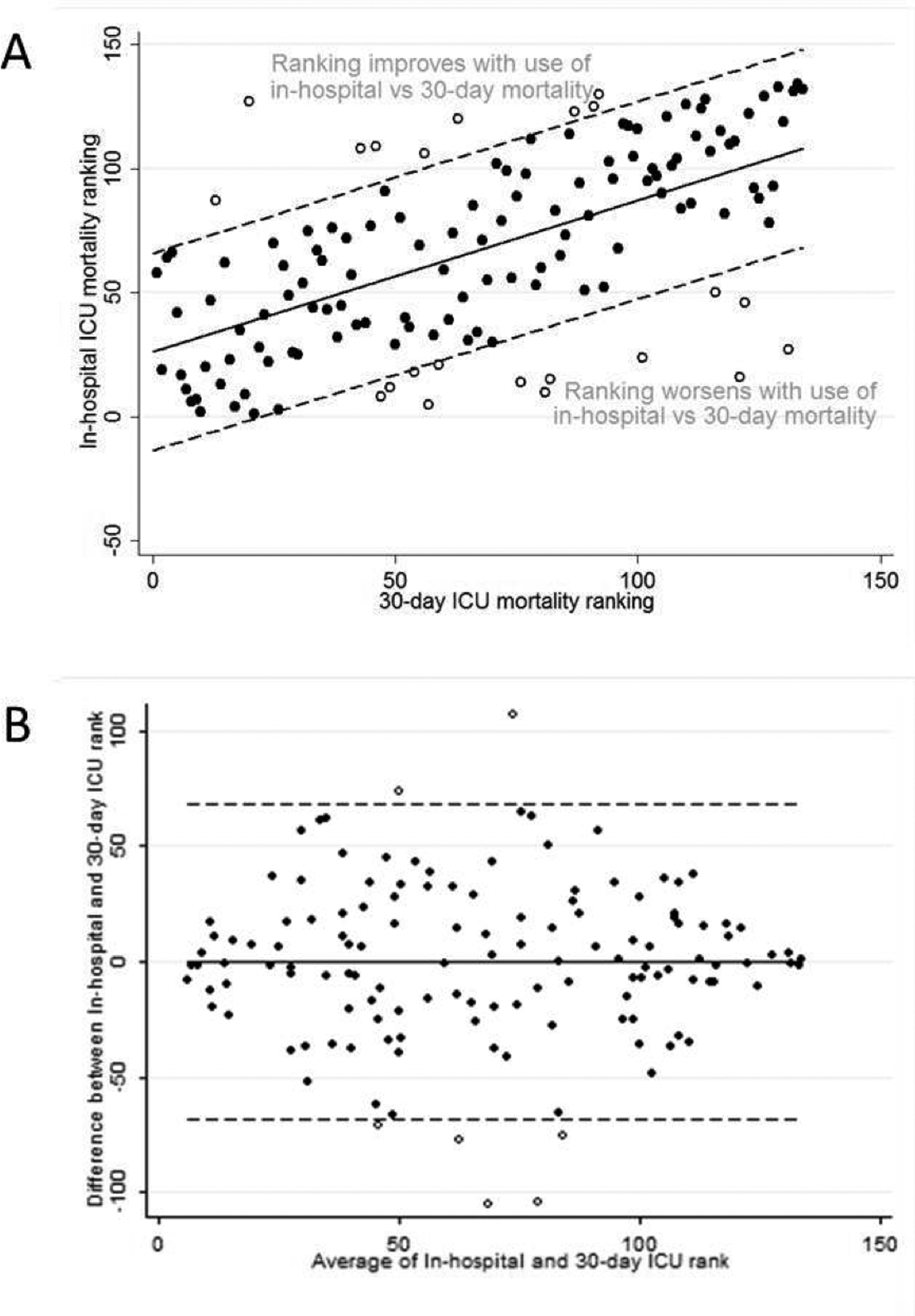

Second, using the risk-adjusted mortality rates from step one we calculated discharge bias (defined as the difference between hospital-specific risk-adjusted 30-day and in-hospital ICU mortality) and change in hospital rank (defined as the difference between hospital rank when using in-hospital ICU mortality versus 30-day ICU mortality), as well as the percentage of hospitals whose quartile of hospital ranking changed when using 30-day instead of in-hospital mortality. We determined the relationship between in-hospital and 30-day risk-adjusted ICU mortality and hospital rank by calculating the Pearson correlation coefficient and graphing the relationship using scatter plots and Bland-Altman plots.
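
The second step can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes each hospital's risk-adjusted rates (in percent) are already available from step one, assigns rank 1 to the highest mortality as in the Variables section, breaks ties by input order, and interprets a change in quartile as moving into a different quarter of the ranking. The names `HospitalRates` and `summarize` are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class HospitalRates:
    name: str
    in_hospital: float  # risk-adjusted in-hospital mortality, %
    thirty_day: float   # risk-adjusted 30-day mortality, %


def rank_by_mortality(rates):
    """Rank 1 = highest mortality, rank n = lowest; ties keep input order."""
    order = sorted(range(len(rates)), key=lambda i: -rates[i])
    ranks = [0] * len(rates)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def quartile(rank, n):
    """0 = highest-mortality quarter of the ranking, 3 = lowest-mortality quarter."""
    return (rank - 1) * 4 // n


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def summarize(hospitals):
    n = len(hospitals)
    ih = [h.in_hospital for h in hospitals]
    d30 = [h.thirty_day for h in hospitals]
    bias = [b - a for a, b in zip(ih, d30)]  # discharge bias: 30-day minus in-hospital
    rank_ih, rank_30 = rank_by_mortality(ih), rank_by_mortality(d30)
    rank_change = [a - b for a, b in zip(rank_ih, rank_30)]  # in-hospital minus 30-day
    q_ih = [quartile(r, n) for r in rank_ih]
    q_30 = [quartile(r, n) for r in rank_30]
    # Bland-Altman: each difference is plotted against the pair's mean;
    # 95% limits of agreement = mean difference +/- 1.96 SD of the differences.
    mean_bias, sd_bias = mean(bias), stdev(bias)
    return {
        "discharge_bias": bias,
        "rank_change": rank_change,
        "pct_quartile_improved": 100 * sum(a > b for a, b in zip(q_ih, q_30)) / n,
        "pct_quartile_worsened": 100 * sum(a < b for a, b in zip(q_ih, q_30)) / n,
        "r_rates": pearson(ih, d30),
        "r_ranks": pearson(rank_ih, rank_30),
        "bland_altman_x": [(a + b) / 2 for a, b in zip(ih, d30)],
        "limits_of_agreement": (mean_bias - 1.96 * sd_bias, mean_bias + 1.96 * sd_bias),
    }
```

Because both rankings are permutations of the same positions 1 to n, the rank changes always sum to zero when there are no ties: every hospital that moves up is balanced by others that move down.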

Third, we used linear regression to compare discharge bias and change in hospital rank due to discharge bias across hospital characteristics, with discharge bias or change in rank as the dependent variable and hospital characteristics as the independent variables. Both bivariate and multivariate linear regression analyses were performed.
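
The bivariate case of the third step, with bed size entered as an ordinal predictor (which is how an estimate "per bed size category" arises), might look like the sketch below. The coding 1 to 3 and the names `BED_SIZE_CODE` and `slope_with_ci` are assumptions for illustration. The confidence interval uses a normal approximation (z = 1.96) instead of the t distribution; with 134 hospitals the difference is small. The multivariate models add the other hospital characteristics as further predictors, which in practice would be fit with a statistics package.

```python
from statistics import mean

# Hypothetical ordinal coding of the three bed-size categories used in Table 2.
BED_SIZE_CODE = {"<100 beds": 1, "100-250 beds": 2, ">250 beds": 3}


def simple_ols(x, y):
    """Bivariate least-squares fit y = a + b*x; returns (b, a, standard error of b)."""
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se_slope = (rss / (n - 2) / sxx) ** 0.5
    return slope, intercept, se_slope


def slope_with_ci(categories, outcome, z=1.96):
    """Change in outcome (e.g. discharge bias) per bed-size category, with approximate 95% CI."""
    x = [BED_SIZE_CODE[c] for c in categories]
    slope, _, se = simple_ols(x, outcome)
    return slope, (slope - z * se, slope + z * se)
```

The same function applies unchanged when the outcome is the change in rank instead of discharge bias.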

Finally, we performed a series of subgroup analyses to better understand the etiology of discharge bias and potential approaches to overcome it when only in-hospital mortality measures are available. To understand the etiology of discharge bias, we examined discharge location and early post-discharge mortality (defined as death within 30 days of admission but after hospital discharge) among patients who were discharged alive prior to day 30, stratifying by hospital characteristics found to be significantly associated with discharge bias by multivariate regression. This analysis was designed to determine whether differences in discharge bias between hospital types were due to differences in transfer rates, differences in early post-discharge mortality among transferred patients, or both. To identify potential approaches to overcoming discharge bias, we measured variation in discharge bias stratifying by hospital characteristics found to be significantly associated with discharge bias by multivariate regression. This analysis was designed to determine whether the effects of discharge bias could be minimized by presenting in-hospital mortality rates stratified by key hospital characteristics.
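
The descriptive part of the etiology analysis, expressing each discharge location and 30-day vital status as a percentage of all patients discharged alive before day 30 within each stratum, might be tabulated as below. The `Patient` fields and the cutoff `discharge_day < 30` are assumptions about how "prior to day 30" was operationalized, not details from the study.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class Patient:
    bed_size: str           # bed-size category of the admitting hospital
    discharged_alive: bool  # left the hospital alive
    discharge_day: int      # days from admission to discharge
    location: str           # e.g. "acute care hospital", "post-acute care", "home", "hospice"
    died_by_day_30: bool    # died within 30 days of admission


def post_discharge_profile(patients):
    """Per bed-size stratum, % of patients discharged alive before day 30
    falling in each (discharge location, died by day 30) cell."""
    totals = Counter()
    cells = defaultdict(Counter)
    for p in patients:
        if not p.discharged_alive or p.discharge_day >= 30:
            continue  # only patients discharged alive before day 30 can die early after discharge
        totals[p.bed_size] += 1
        cells[p.bed_size][(p.location, p.died_by_day_30)] += 1
    return {
        stratum: {cell: 100 * n / totals[stratum] for cell, n in counter.items()}
        for stratum, counter in cells.items()
    }
```

A cell such as `("acute care hospital", True)` then gives the share of discharged-alive patients who were transferred to another acute care hospital and died before day 30, so higher transfer rates and higher post-transfer mortality both raise it.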

Sensitivity Analyses

The MediQual Atlas predicted mortality risk score was missing for a high proportion of patients. Previous analyses suggest that this value is missing completely at random and can be ignored (27). However, to assess the effect of missing MediQual Atlas predicted mortality risk scores on our findings, we repeated the primary analysis without using predicted risk of death in risk adjustment. We did this in two ways: first using the same cohort of patients included in the primary analysis, and second using the primary cohort plus the patients excluded from it because of missing MediQual Atlas predicted mortality risk scores.

Role of the Funding Source

The funding sources had no role in the design, conduct, or reporting of the study or in the decision to submit the manuscript for publication.

Results

A total of 79,359 patients were admitted to Pennsylvania hospital ICUs during the study period. We excluded 21,687 patients because it was their second or subsequent ICU admission, 1,853 patients younger than 18 years, 668 patients admitted to 26 hospitals with fewer than 50 annual ICU admissions, 2,379 patients at 10 hospitals that could not be merged with HCRIS, and 8,942 with missing or invalid risk-adjustment variables, of whom 8,851 had missing MediQual Atlas predicted mortality risk scores. This resulted in a final analysis of 43,830 patients and 134 hospitals.

The observed in-hospital mortality rate was 9.6% and the observed 30-day mortality rate was 12.6%. Other demographic and clinical characteristics of patients are shown in Table 1. The hospitals were diverse with respect to the number of hospital and ICU beds, teaching status, urban/rural status, and the number of LTAC beds in each HRR (Table 2). The percentage of Medicaid patients at each hospital varied from 0 to 40% and the percentage of commercial HMO insurance patients at each hospital varied from 0 to 21%. Mean risk-adjusted hospital-specific in-hospital and 30-day ICU mortality rates were 9.6% (SD 1.3) and 13.1% (SD 1.6), respectively.

Table 1.

Demographic and Clinical Characteristics of Patients

| Variable | n = 43,830 |
|---|---|
| Age in years, mean (SD) | 68.1 (16.0) |
| Female | 21,116 (48.2) |
| Race | |
| White | 35,793 (81.7) |
| Black | 4,813 (11.0) |
| Other | 3,224 (7.4) |
| Primary payer* | |
| Medicare | 29,852 (68.2) |
| Medicaid | 4,284 (9.8) |
| Commercial | 8,194 (18.7) |
| Other | 869 (2.0) |
| None | 595 (1.4) |
| Admission source | |
| Emergency department | 24,491 (55.9) |
| Outside hospital | 17,683 (40.3) |
| Skilled nursing facility | 799 (1.8) |
| Other | 857 (2.0) |
| Primary diagnosis | |
| Cardiac | 13,622 (31.1) |
| Gastrointestinal | 3,829 (8.7) |
| Neurologic | 3,916 (8.9) |
| Oncologic | 1,897 (4.3) |
| Respiratory | 6,800 (15.5) |
| Trauma | 1,545 (3.5) |
| Other | 12,221 (27.9) |
| Charlson comorbidity count | |
| 0 | 8,402 (19.2) |
| 1–2 | 27,377 (62.5) |
| 3–4 | 7,800 (17.8) |
| ≥5 | 251 (0.6) |
| MediQual predicted death probability | |
| <5% | 27,439 (62.6) |
| 5–<15% | 8,823 (20.1) |
| 15–<25% | 3,061 (7.0) |
| ≥25% | 4,507 (10.3) |
| Discharge location | |
| Home | 24,914 (56.8) |
| Acute care hospital | 2,077 (4.7) |
| Post-acute care facility | 11,627 (26.5) |
| Hospice | 954 (2.2) |
| Expired (observed in-hospital mortality) | 4,185 (9.6) |
| Unknown | 73 (0.2) |
| Observed 30-day mortality | 5,510 (12.6) |

Results are listed as frequency (%) unless otherwise specified in the table. Not all percentages add to 100 because of rounding. SD = standard deviation

*Missing in 36 patient records.

Table 2.

Hospital Characteristics

| Variable | n = 134 |
|---|---|
| Hospital bed size | |
| <100 beds | 40 (30) |
| 100–250 beds | 61 (46) |
| >250 beds | 33 (25) |
| ICU bed size* | |
| <10 beds | 28 (23) |
| 10–25 beds | 70 (56) |
| >25 beds | 26 (21) |
| Teaching status† | |
| Non-teaching | 70 (52) |
| Small teaching | 37 (28) |
| Large teaching | 27 (20) |
| Ownership | |
| Non-profit | 117 (87) |
| For-profit | 17 (13) |
| MSA size | |
| <100,000 or non-MSA | 27 (20) |
| 100,000 – 1 million | 44 (33) |
| >1 million | 63 (47) |
| Medicaid penetration | |
| Lowest tertile (0–7%) | 44 (33) |
| Middle tertile (7–11%) | 45 (34) |
| Highest tertile (11–40%) | 45 (34) |
| HMO penetration | |
| Lowest tertile (0–3%) | 45 (34) |
| Middle tertile (3–7%) | 45 (34) |
| Highest tertile (7–21%) | 44 (33) |
| LTAC beds in HRR | |
| 0 | 19 (14) |
| 1–200 | 52 (39) |
| >200 | 63 (47) |

Results are listed as frequency (%). Not all percentages add to 100 because of rounding. MSA = metropolitan statistical area; HMO = health maintenance organization; LTAC = long-term acute care hospital; HRR = Dartmouth Atlas hospital referral region

*Missing in 10 hospital records.

†Teaching status categorized by resident-to-bed ratio (non-teaching 0, small teaching >0 to <0.25, large teaching ≥0.25).

The mean hospital-specific discharge bias was 3.5% (SD 1.3), with a range of −1.3% to 7.2%; that is, the average hospital’s risk-adjusted mortality was 3.5 percentage points lower when measured in the hospital than when measured at 30 days. The relationship between risk-adjusted hospital-specific in-hospital and 30-day ICU mortality rates showed moderate correlation (Pearson correlation coefficient: r = 0.60), with clear variability and outliers (Figure 1). Using bivariate linear regression, discharge bias was significantly greater in small and non-teaching hospitals, as well as in hospitals with the lowest percentage of patients with commercial HMO insurance, making these hospitals appear comparatively better from a benchmarking standpoint when using in-hospital instead of 30-day mortality (Table 3). Using multivariate linear regression, only hospital bed size was significantly associated with discharge bias (−0.7 percentage points of discharge bias per bed size category, 95% CI −1.1 to −0.4, p<0.001).

Figure 1.

Comparison of Hospital-specific Risk-adjusted In-hospital and 30-day ICU Mortality by (A) Scatter Plot and (B) Bland-Altman Plot.

Table 3.

Discharge Bias and Change in Rank by Hospital Characteristics

| Hospital characteristic | Mean discharge bias, % (SD) | p-value | Mean change in rank (SD) | p-value |
|---|---|---|---|---|
| Bed size | | | | |
| <100 beds | 4.11 (1.26) | | 15.33 (29.13) | |
| 100–250 beds | 3.71 (1.03) | | 4.00 (27.54) | |
| >250 beds | 2.54 (1.38) | <0.001 | −25.97 (37.27) | <0.001 |
| Teaching status* | | | | |
| Non-teaching | 3.83 (1.09) | | 8.97 (28.41) | |
| Small teaching | 3.47 (1.14) | | −4.43 (27.71) | |
| Large teaching | 2.88 (1.85) | 0.001 | −17.19 (47.24) | <0.001 |
| Ownership | | | | |
| Non-profit | 3.55 (1.35) | | 0.68 (34.88) | |
| For-profit | 3.48 (1.23) | 0.83 | −4.65 (29.72) | 0.55 |
| MSA size | | | | |
| <100,000 or non-MSA | 4.14 (1.30) | | 16.48 (28.99) | |
| 100,000 – 1 million | 3.29 (0.92) | | −6.11 (25.90) | |
| >1 million | 3.46 (1.52) | 0.076 | −2.79 (39.25) | 0.042 |
| Medicaid penetration | | | | |
| Lowest tertile | 3.46 (1.02) | | −0.11 (26.70) | |
| Middle tertile | 3.84 (1.27) | | 8.78 (34.26) | |
| Highest tertile | 3.32 (1.61) | 0.62 | −8.67 (38.89) | 0.23 |
| HMO penetration | | | | |
| Lowest tertile | 3.89 (1.02) | | 11.44 (23.07) | |
| Middle tertile | 3.57 (1.24) | | −1.13 (30.10) | |
| Highest tertile | 3.15 (1.60) | 0.009 | −10.55 (43.55) | 0.002 |
| LTAC beds in HRR | | | | |
| 0 | 4.05 (1.30) | | 12.84 (36.50) | |
| 1–200 | 3.34 (0.89) | | −3.40 (24.72) | |
| >200 | 3.55 (1.59) | 0.41 | −1.06 (39.50) | 0.27 |

MSA = metropolitan statistical area size; HMO = health maintenance organization; LTAC = long-term acute care hospital; HRR = Dartmouth Atlas hospital referral region; SD = standard deviation

*Teaching status categorized by resident-to-bed ratio (non-teaching 0, small teaching >0 to <0.25, large teaching ≥0.25).

Hospitals were ranked from 1 to 134 based on their in-hospital and 30-day ICU mortality rates, with the hospital having the lowest mortality rate receiving the highest rank (134). There were substantial changes in rank, with some hospitals improving or worsening by more than 100 positions. Because of discharge bias, 29.1% of hospitals improved by at least one ranking quartile and 26.9% worsened by at least one ranking quartile when using in-hospital instead of 30-day mortality. The relationship between hospital rank using in-hospital versus 30-day mortality rates showed moderate correlation (Pearson correlation coefficient: r = 0.60) (Figure 2). Using bivariate linear regression, the hospital characteristics significantly associated with change in rank were hospital bed size, teaching status, MSA category, and HMO penetration (Table 3). Using multivariate linear regression, only hospital bed size was significantly associated with change in rank (−18.7 change in rank per bed size category, 95% CI −27.8 to −9.5, p<0.001). Similar to the findings for discharge bias, this means that small hospitals appear comparatively better than large hospitals when ranked by in-hospital instead of 30-day ICU mortality rates.

Figure 2.

Comparison of Hospital Rank Using In-hospital and 30-day ICU Mortality by (A) Scatter Plot and (B) Bland-Altman Plot.

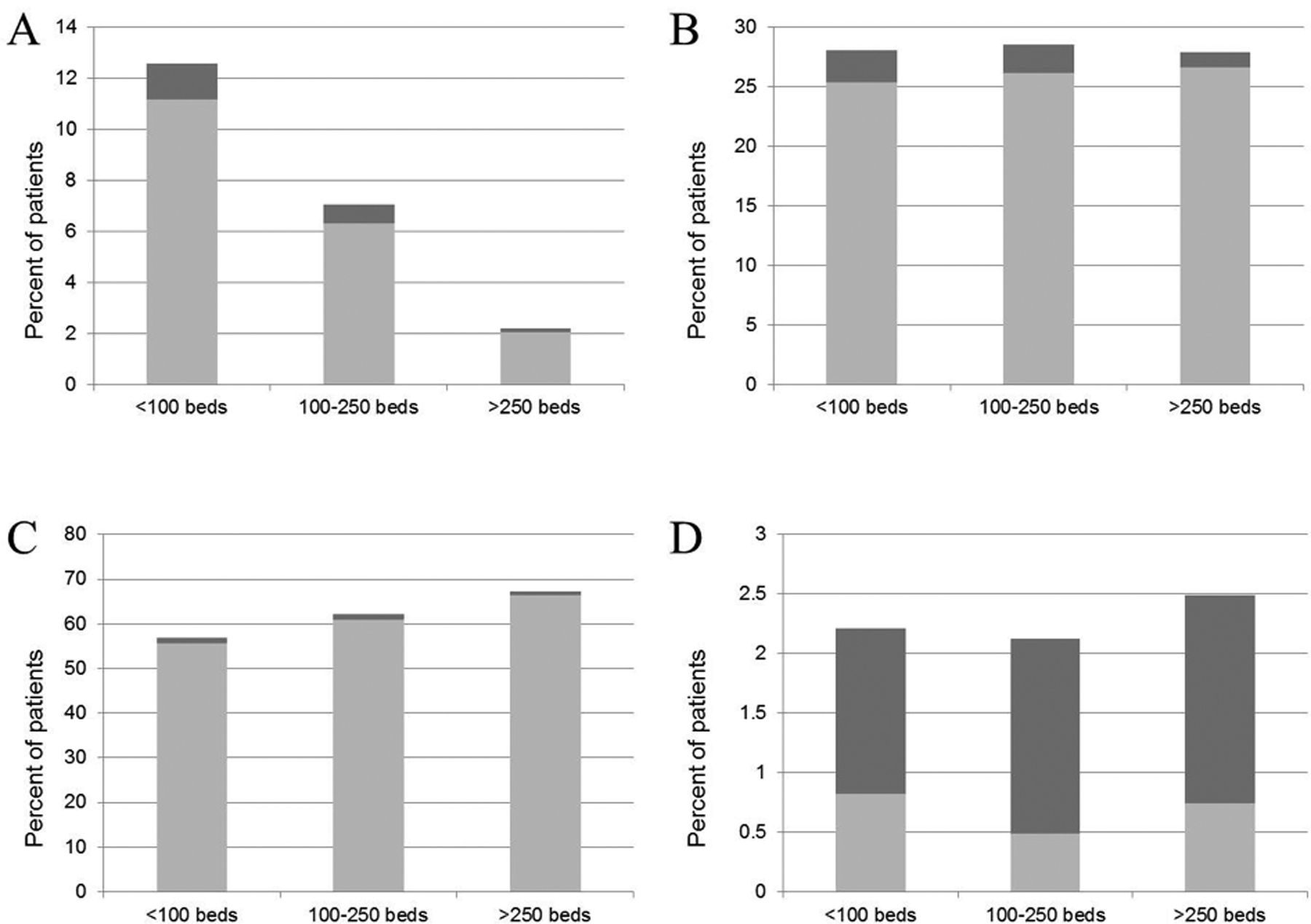

Based on these regression results, we performed our subgroup analyses stratifying by hospital size (<100 beds, 100–250 beds, and >250 beds). Among patients discharged alive before day 30, small hospitals were more likely than large hospitals to transfer patients to other acute care hospitals, less likely to discharge patients home, and similarly likely to transfer patients to post-acute care facilities or hospice (Figure 3). Early post-discharge mortality was higher among patients transferred to other acute care hospitals from small compared to large hospitals, as well as among patients transferred to post-acute care facilities or discharged home. This indicates that the discharge bias favoring small hospitals has two main components: more transfers to acute care hospitals and higher early post-discharge mortality rates.

Figure 3.

Early Post-discharge Mortality Among Patients Discharged Alive Prior to Day 30 for Patients Discharged to (A) an Acute Care Hospital, (B) a Post-acute Care Facility, (C) Home, and (D) Hospice.

Values are expressed as a percentage of total patients discharged alive prior to day 30. Dark bars = discharged patients who died before day 30; light bars = discharged patients alive at day 30.

When the distribution of discharge bias was examined after stratifying by hospital bed size, the range and variation in discharge bias decreased (Appendix Figure). This indicates that reporting in-hospital mortality rates by hospital bed size can minimize the effect of discharge bias.
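
A toy illustration of why stratified reporting narrows comparisons: when part of the variation in discharge bias is explained by bed size, the range and standard deviation within each bed-size stratum are smaller than across all hospitals combined. The function names are hypothetical and the values in the usage example are invented for illustration; they do not reproduce the study data.

```python
from collections import defaultdict
from statistics import stdev


def spread(values):
    """Range and sample SD of a list of discharge-bias values (SD is 0 for a single value)."""
    sd = stdev(values) if len(values) > 1 else 0.0
    return max(values) - min(values), sd


def spread_by_stratum(bias, strata):
    """Overall spread of discharge bias, and its spread within each stratum (e.g. bed size)."""
    groups = defaultdict(list)
    for value, stratum in zip(bias, strata):
        groups[stratum].append(value)
    return spread(bias), {s: spread(v) for s, v in groups.items()}


# Invented example: six hospitals, two per bed-size category.
overall, within = spread_by_stratum(
    [4.0, 4.2, 3.6, 3.8, 2.4, 2.6],
    ["<100", "<100", "100-250", "100-250", ">250", ">250"],
)
print(overall, within)
```

Stratification only removes the between-stratum component of the variation; hospitals within the same bed-size category can still differ in discharge bias.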

In the sensitivity analyses in which we did not adjust for MediQual Atlas risk of death, observed mortality, risk-adjusted mortality, and discharge bias rates were similar to those in the primary analysis (Appendix Table 1), as was the relationship between discharge bias and hospital characteristics using both bivariate and multivariate linear regression (Appendix Table 2). This was true for models in which we included all eligible patients and models in which we excluded patients with missing MediQual values.

Discussion

This study confirms that discharge practices can substantially affect in-hospital mortality measurements and demonstrates that discharge bias varies across hospital types in a way that disproportionately harms large hospitals. Academic hospitals and those that care for a large number of patients insured through HMOs also appeared to be disproportionately affected by discharge bias in bivariate analyses, although these associations were not statistically significant in the multivariate analysis. We also found that discharge bias has practical implications for performance assessment, as it caused many hospitals to change substantially in rank. The types of hospitals whose rank worsened with the use of in-hospital instead of 30-day mortality were similar to the hospitals found to have less discharge bias. The greater discharge bias in small compared to large hospitals is due to a combination of factors, including higher transfer rates to other acute care hospitals and greater early post-discharge mortality among patients discharged alive.

This study is consistent with prior literature demonstrating that discharge practices affect in-hospital ICU mortality measures and expands on this knowledge by demonstrating that these effects vary between different types of hospitals. In a prior study of ICU patients in California, teaching compared to non-teaching hospitals were found to have a decreased risk of early post-discharge death, and thus theoretically less discharge bias, which is consistent with our findings (12). However, in contrast to our study, that study did not find a relationship between hospital bed size and early post-discharge mortality. This difference may be due to the fact that our study examined hospital-level risk-adjusted discharge bias rather than whether or not an individual patient experienced early post-discharge death, or it may be related to state-level differences in the populations examined since our study was done in Pennsylvania rather than California.

Contrary to our hypothesis, we did not find that hospitals located in regions with higher LTAC penetration had greater discharge bias. A previous study found that LTAC transfer rates substantially bias in-hospital mortality measures, at least among ventilated patients (8). However, we found that differences in post-acute care transfers were relatively small, and that discharge bias related to post-acute care facility transfers was more likely due to mortality among transferred patients than transfer rates themselves. Additionally, although patients transferred to LTACs have high mortality (20), it may be that this mortality occurs after the 30-day mark, minimizing its practical effect on short-term mortality measurements.

The results of this study suggest that quality improvement initiatives such as public reporting and pay-for-performance programs that use in-hospital ICU mortality as a performance measure should account for discharge bias to prevent large hospitals from being unfairly disadvantaged. Whenever possible, time-specific measures such as 30-day mortality should be used. However, if this is not feasible, one solution is to stratify reporting of in-hospital ICU mortality by hospital bed size since this hospital characteristic is associated with both discharge bias and change in hospital rank. While this does not completely eliminate bias inherent in measuring in-hospital ICU mortality, reporting according to hospital bed size categories eliminates much of the variability in discharge bias and is easier to implement in practice than calculating 30-day mortality rates.

Our findings also have implications for multicenter benchmarking programs, even those that are not tied to public reporting or pay-for-performance. Benchmarking programs such as the Acute Physiology and Chronic Health Evaluation (APACHE) program (6), Project IMPACT (28), and the Simplified Acute Physiology Score (SAPS) III program (29) all use in-hospital mortality as their primary outcome measure. Our results suggest that these severity scores may be poorly calibrated in large hospitals, potentially introducing another source of bias into these programs. Risk-adjusted outcome reports within these programs should be interpreted with caution, and perhaps stratified by hospital size, to maximize their utility.

Our study has several limitations. First, we used an administrative data source which may not fully capture all of the clinical factors associated with discharge bias, meaning that the differences in discharge bias that we observed may be partially attributable to unmeasured clinical variables. Additionally, our study was restricted to hospital discharges occurring in Pennsylvania, and therefore it is not known whether findings in this study would be the same in other states. For example, the state of Pennsylvania has a relatively high LTAC penetration compared to other states (30), and this may be another reason why the number of LTAC beds in each hospital’s HRR was not found to be associated with discharge bias in this study. Finally, we chose to examine 30-day mortality instead of longer-term outcomes such as 60- or 90-day mortality, which may have resulted in different findings, such as with regard to the relationship between LTAC penetration and discharge bias as previously mentioned.

Our study demonstrates that discharge practices bias in-hospital ICU mortality measures in a way that disproportionately hurts large hospitals, and that discharge bias is due to differences in rates of transfer to acute care hospitals and in early post-discharge mortality. Using time-specific measures such as 30-day mortality, or accounting for discharge bias, potentially by stratifying reporting of in-hospital ICU mortality rates by hospital bed size, will prevent large hospitals from being unfairly punished in quality improvement initiatives such as public reporting and pay-for-performance.

Supplementary Material

Appendix Figure. Distribution of Discharge Bias for (A) All Hospitals, (B) Hospitals with <100 Beds, (C) Hospitals with 100–250 Beds, and (D) Hospitals with >250 Beds.

Financial support:

NIH 5T32HL7563, NIH R01HL096651

Contributor Information

Lora A. Reineck, Division of Pulmonary, Allergy, and Critical Care Medicine, University of Pittsburgh School of Medicine; CRISMA Center, Department of Critical Care Medicine, University of Pittsburgh School of Medicine.

Francis Pike, CRISMA Center, Department of Critical Care Medicine, University of Pittsburgh School of Medicine.

Tri Le, CRISMA Center, Department of Critical Care Medicine, University of Pittsburgh School of Medicine; Department of Health Policy and Management, University of Pittsburgh School of Public Health.

Brandon D. Cicero, CRISMA Center, Department of Critical Care Medicine, University of Pittsburgh School of Medicine.

Theodore J. Iwashyna, Division of Pulmonary and Critical Care Medicine, University of Michigan; Center for Clinical Management Research, Ann Arbor VA.

Jeremy M. Kahn, CRISMA Center, Department of Critical Care Medicine, University of Pittsburgh School of Medicine; Department of Health Policy and Management, University of Pittsburgh School of Public Health.

References

- 1.Halpern NA, Pastores SM. Critical care medicine in the United States 2000–2005: an analysis of bed numbers, occupancy rates, payer mix, and costs. Crit Care Med. 2010;38(1):65–71. [DOI] [PubMed] [Google Scholar]

- 2.Desai SV, Law TJ, Needham DM. Long-term complications of critical care. Crit Care Med. 2011;39(2):371–9. [DOI] [PubMed] [Google Scholar]

- 3.Knaus WA, Wagner DP, Zimmerman JE, Draper EA. Variations in mortality and length of stay in intensive care units. Ann Intern Med. 1993;118(10):753–61. [DOI] [PubMed] [Google Scholar]

- 4.National Quality Forum. Intensive care: in-hospital mortality rate. 2012. Accessed at http://www.qualityforum.org/MeasureDetails.aspx?actid=0&SubmissionId=26#k=mortality&e=1&st=&sd=&s=n&so=a&p=4&mt=&cs=&ss= on 29 May 2012.

- 5.California HealthCare Foundation. CalHospitalCompare.org. 2012. Accessed at www.calhospitalcompare.org on 2 July 2012.

- 6.Zimmerman JE, Kramer AA, McNair DS, Malila FM. Acute Physiology and Chronic Health Evaluation (APACHE) IV: hospital mortality assessment for today’s critically ill patients. Crit Care Med. 2006;34(5):1297–310. [DOI] [PubMed] [Google Scholar]

- 7.Drye EE, Normand SL, Wang Y, Ross JS, Schreiner GC, Han L, et al. Comparison of hospital risk-standardized mortality rates calculated by using in-hospital and 30-day models: an observational study with implications for hospital profiling. Ann Intern Med. 2012;156(1 Pt 1):19–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hall WB, Willis LE, Medvedev S, Carson SS. The implications of long-term acute care hospital transfer practices for measures of in-hospital mortality and length of stay. Am J Respir Crit Care Med. 2012;185(1):53–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Kahn JM, Kramer AA, Rubenfeld GD. Transferring critically ill patients out of hospital improves the standardized mortality ratio: a simulation study. Chest. 2007;131(1):68–75.

- 10. Vasilevskis EE, Kuzniewicz MW, Dean ML, Clay T, Vittinghoff E, Rennie DJ, et al. Relationship between discharge practices and intensive care unit in-hospital mortality performance: evidence of a discharge bias. Med Care. 2009;47(7):803–12.

- 11. Sirio CA, Shepardson LB, Rotondi AJ, Cooper GS, Angus DC, Harper DL, et al. Community-wide assessment of intensive care outcomes using a physiologically based prognostic measure: implications for critical care delivery from Cleveland Health Quality Choice. Chest. 1999;115(3):793–801.

- 12. Vasilevskis EE, Kuzniewicz MW, Cason BA, Lane RK, Dean ML, Clay T, et al. Predictors of early postdischarge mortality in critically ill patients: a retrospective cohort study from the California Intensive Care Outcomes project. J Crit Care. 2011;26(1):65–75.

- 13. Baker DW, Einstadter D, Thomas CL, Husak SS, Gordon NH, Cebul RD. Mortality trends during a program that publicly reported hospital performance. Med Care. 2002;40(10):879–90.

- 14. US Department of Health and Human Services. Hospital Compare. 2012. Accessed at http://www.hospitalcompare.hhs.gov on 8 March 2012.

- 15. Reineck LA PF, Le T, Cicero B, Iwashyna TJ, Kahn JM. Bias in quality measurement resulting from in-hospital mortality as an ICU quality measure. Am J Respir Crit Care Med. 2012;185:A2457.

- 16. Barnato AE, Chang CC, Farrell MH, Lave JR, Roberts MS, Angus DC. Is survival better at hospitals with higher “end-of-life” treatment intensity? Med Care. 2010;48(2):125–32.

- 17. Wallace DJ, Angus DC, Barnato AE, Kramer AA, Kahn JM. Nighttime intensivist staffing and mortality among critically ill patients. N Engl J Med. 2012;366(22):2093–101.

- 18. Lane-Fall MB, Iwashyna TJ, Cooke CR, Benson NM, Kahn JM. Insurance and racial differences in long-term acute care utilization after critical illness. Crit Care Med. 2012;40(4):1143–9.

- 19. Iwashyna TJ, Christie JD, Moody J, Kahn JM, Asch DA. The structure of critical care transfer networks. Med Care. 2009;47(7):787–93.

- 20. Kahn JM, Benson NM, Appleby D, Carson SS, Iwashyna TJ. Long-term acute care hospital utilization after critical illness. JAMA. 2010;303(22):2253–9.

- 21. Green A, Showstack J, Rennie D, Goldman L. The relationship of insurance status, hospital ownership, and teaching status with interhospital transfers in California in 2000. Acad Med. 2005;80(8):774–9.

- 22. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006;113(13):1683–92.

- 23. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with heart failure. Circulation. 2006;113(13):1693–701.

- 24. Deyo RA, Cherkin DC, Ciol MA. Adapting a clinical comorbidity index for use with ICD-9-CM administrative databases. J Clin Epidemiol. 1992;45(6):613–9.

- 25. Healthcare Cost and Utilization Project. Clinical Classifications Software (CCS) for ICD-9-CM. 2012. Accessed at http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp on 8 June 2012.

- 26. Iezzoni LI, Moskowitz MA. A clinical assessment of MedisGroups. JAMA. 1988;260(21):3159–63.

- 27. Kim MM, Barnato AE, Angus DC, Fleisher LA, Kahn JM. The effect of multidisciplinary care teams on intensive care unit mortality. Arch Intern Med. 2010;170(4):369–76.

- 28. Nelson LD. Data, data everywhere. Crit Care Med. 1997;25(8):1265.

- 29. Moreno RP, Metnitz PG, Almeida E, Jordan B, Bauer P, Campos RA, et al. SAPS 3--From evaluation of the patient to evaluation of the intensive care unit. Part 2: Development of a prognostic model for hospital mortality at ICU admission. Intensive Care Med. 2005;31(10):1345–55.

- 30. Kahn JM, Iwashyna TJ. Accuracy of the discharge destination field in administrative data for identifying transfer to a long-term acute care hospital. BMC Res Notes. 2010;3:205.

Supplementary Materials

Appendix Figure. Distribution of Discharge Bias for (A) All Hospitals, (B) Hospitals with <100 Beds, (C) Hospitals with 100–250 Beds, and (D) Hospitals with >250 Beds.