Abstract

Objective

To describe a novel, five-phase approach to collecting qualitative data from hard-to-reach populations using crowdsourcing methods.

Methods

Drawing from experiences across recent studies with type 1 diabetes and congenital heart disease stakeholders, we describe five phases of crowdsourcing methodology, an innovative approach to conducting qualitative research within an online environment, and discuss relevant practical and ethical issues.

Results

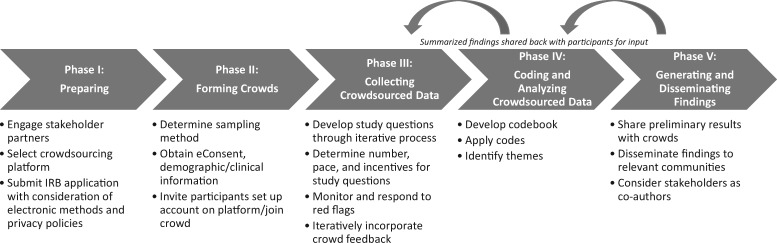

Phases of crowdsourcing methodology are: (I) Preparing; (II) Forming Crowds; (III) Collecting Crowdsourced Data; (IV) Coding and Analyzing Crowdsourced Data; and (V) Generating and Disseminating Findings. Iterative feedback from stakeholders is obtained in all five phases. Practical and ethical issues include accessing diverse stakeholders, emotional engagement of crowd participants, responsiveness and transparency of crowdsourcing methodology, and limited personal contact with crowd participants.

Conclusions

Crowdsourcing is an innovative, efficient, feasible, and timely approach to engaging hard-to-reach populations in qualitative research.

Keywords: cardiology, chronic illness, diabetes, mental health, parent stress, psychosocial functioning, qualitative methods

Introduction

Pediatric health researchers have long recognized the need to study the lived experiences of patients and families to develop interventions to improve child and family outcomes. Over the last decade, qualitative research methodology (e.g., semi-structured interviews, focus groups) has gained popularity and acceptance as a method to better understand the perspectives of families and develop theories, models of care, and interventions (Alderfer & Sood, 2016; Czajkowski et al., 2015). While qualitative methods are acceptable and valuable for certain research questions (Wu et al., 2016), many studies consist of one-time interviews with small, single-institution samples. Oftentimes, these samples are limited in diversity in terms of sociodemographics and healthcare system experiences, limiting how widely findings may generalize across settings and families. Our research teams have recently used crowdsourcing, which refers to the process of generating ideas and solving problems by soliciting contributions from online communities (Brabham, 2013), as a method to engage stakeholders in qualitative research related to pediatric illness. This topical review presents a novel, five-phase approach to collecting qualitative data from hard-to-reach populations using crowdsourcing methods.

Crowdsourcing Methodology

Crowdsourcing is a type of participative online activity that has been applied to problems in diverse fields (Brabham, 2013; Estellés-Arolas & González-Ladrón-de-Guevara, 2012). Crowdsourcing typically includes four elements: (a) an organization with a task to perform; (b) a community, or crowd, uniquely qualified to contribute to the task; (c) an online environment that facilitates collaboration between the organization and crowd; and (d) mutual benefit for the organization and crowd (Brabham et al., 2014). The use of crowdsourcing is increasing in health promotion, research, and care. According to a recent systematic review, crowdsourcing is most frequently used to collect patient-reported survey data, engage stakeholders in data processing (e.g., ranking a large set of adverse drug reactions, classifying tweets about diabetes into topic categories), and in health surveillance or monitoring (e.g., reporting symptoms of a disease or treatment) (Créquit et al., 2018). Crowdsourcing has also been used in psychology to validate theoretical frameworks (Cushing et al., 2018), conduct longitudinal studies (Schleider & Weisz, 2015), and identify strategies to enhance the use of evidence-based practices (Stewart et al., 2019). To our knowledge, crowdsourcing has not previously been used to conduct qualitative research in pediatric populations. Crowdsourcing as a qualitative research tool incorporates the research team as the organization developing a behavioral or preventative intervention, the crowd as a group of diverse stakeholders including patients or caregivers, and the online environment as a social networking platform that facilitates qualitative data collection while incorporating stakeholder input (e.g., patients, parents, healthcare providers) throughout each phase of crowdsourcing. 
Stakeholders could benefit directly from the resulting intervention or indirectly by contributing to future improvements in care that they perceive as pertinent, valuable, and helpful.

Crowdsourcing is conducted in a highly accessible online environment, such as a social networking application (app) that can be accessed through a mobile device. Social media and text messaging are preferred forms of communication for many age groups. In a 2019 poll, 86% of 23- to 38-year-olds used social media and 93% owned a smartphone (Vogels, 2019). Rates of online social networking and smartphone use are similar across racial/ethnic and socioeconomic groups, though there are differences in how digital technology is used (e.g., racial/ethnic minority and low socioeconomic groups depend more on their smartphone for online access) (Smith, 2015). Methodology that facilitates research participation anywhere, anytime, using any device may help overcome common barriers to participation for youth with pediatric illnesses and their parents (Wray et al., 2018).

Phases of Crowdsourcing Methodology

Crowdsourcing is particularly fruitful for pediatric psychology research because its online environment facilitates recruitment from a geographically broad population, so it can target illness groups or subgroups (e.g., specific age ranges or developmental stages) that may have unique characteristics, but are not highly prevalent. To this end, our research teams recently used crowdsourcing to engage type 1 diabetes (T1D) and congenital heart disease (CHD) stakeholders in qualitative research. Pierce, Wysocki, and colleagues formed a geographically diverse online “crowd” of 153 parents of young children (<6 years old) with T1D to collect qualitative data via 19 open-ended questions to develop an online coping intervention for this population (Pierce et al., 2017; Wysocki et al., 2018). Building on this work, Sood and colleagues then formed two geographically diverse crowds of 108 parents of young children with CHD and 16 leaders of CHD patient advocacy organizations to collect qualitative data via 37 and 32 open-ended questions, respectively, to inform recommendations for psychosocial care delivery (Gramszlo et al., 2020; Hoffman et al., 2020). A second ongoing CHD study is collecting (in English and Spanish) qualitative data from a racially and ethnically diverse crowd of 41 parents who learned prenatally that their child had CHD with the goal of designing a prenatal psychosocial intervention. Through experiences using crowdsourcing to conduct qualitative research, our research teams have identified five phases of crowdsourcing methodology: (I) Preparing; (II) Forming Crowds; (III) Collecting Crowdsourced Data; (IV) Coding and Analyzing Crowdsourced Data; and (V) Generating and Disseminating Findings (Figure 1). Stakeholders play an integral role in every crowdsourcing phase, serving not only as crowd participants but as research partners embedded within the study team.

Figure 1.

Phases of crowdsourcing methodology with key tasks.

Phase I: Preparing

Researchers should identify and engage stakeholder partners early in the Preparing phase to provide input on planned approaches to recruitment, data collection, and use and dissemination of findings. For our T1D and CHD research, we included mothers and fathers from a variety of racial/ethnic and socioeconomic backgrounds and clinicians from multiple disciplines as members of the study team. We recruited parent partners through nominations from their healthcare providers and referrals from leaders of patient advocacy groups. We recruited clinician partners through our professional networks. Parent and clinician partners convened by phone and email with the researchers throughout the study and spent about 2 hr per month on study-related activities (i.e., 1 hr on a monthly research team phone call and 1 hr on other tasks such as reviewing participant responses). Since they contributed significant time, effort, and expertise, parent partners received payment for their contributions (e.g., $50 per hour or $100 per month) (Patient-Centered Outcomes Research Institute, 2015). We determined this amount during budget planning and discussed it with parent partners before they agreed to serve in this role. Other important aspects of this phase include preparing institutional review board and electronic informed consent (eConsent) documents (Department of Health and Human Services: Food and Drug Administration & Office for Human Research Protections, 2016), materials for recruitment and data collection, and the crowdsourcing platform (i.e., social networking site to facilitate crowdsourcing). For the T1D and CHD studies, we selected Yammer since it offers a variety of features that facilitate crowdsourcing for qualitative research. Specifically, users can “post” open-ended questions, share documents, track participant activities, and compile results. 
For the open-ended questions, participants can post their response and also comment on other participants’ responses, or interact as part of a crowd. Other crowdsourcing platforms such as Amazon Mechanical Turk do not provide these interactive features because they typically involve completion of a one-time survey or task rather than ongoing data collection from the same crowd (Créquit et al., 2018). Researchers should become familiar with the privacy policies of the social networking site selected for the study and the identifiers that must be entered to set up an online profile (e.g., email address) and should consider whether participants will be given the option of including their name and other identifiers in their profile versus requiring a de-identified online profile name (e.g., ID number, study code). This information and potential risks to privacy should be included in the eConsent. Time required to prepare for a crowdsourcing study may be greater than for a traditional qualitative study and is dependent on the research team’s existing facility with a crowdsourcing platform, the IRB’s comfort and familiarity with research using online social networks, and the extent of existing relationships with pertinent stakeholders.

Phase II: Forming Crowds

The way researchers approach recruitment fundamentally impacts the demographic composition of the resulting “crowd.” Our teams used a variety of approaches across our T1D and CHD studies in an effort to obtain diverse, representative crowds, with varying success in achieving sufficient diversity. These approaches included snowball sampling through disease-specific social media groups and websites, electronic medical record queries, and purposive sampling by clinician collaborators (Hoffman et al., 2020; Pierce et al., 2017). The first CHD study also utilized a two-step process through which interested individuals provided basic demographic and clinical information and a diverse subset was then purposively invited to participate (Hoffman et al., 2020). Across these studies, reliance on disease-specific social media groups and websites for recruitment did not result in sufficiently diverse samples, particularly with regard to race and ethnicity, and purposive sampling tended to be more successful in this regard. A commonality across all approaches was the use of an IRB-approved study flyer, shared electronically, that included a link to a detailed study description and an eConsent form. The options of discussing the study with a research team member and a traditional informed consent process should also be offered. Once enrolled, participants completed an online demographic questionnaire and were provided with instructions on joining the private group on Yammer and setting up their online profile. Crowds of parents participating in T1D and CHD studies have ranged from 41 to 153 depending on the method of recruitment (e.g., purposive versus snowball sampling) and the scope of the study aims, with no observable differences in participation or engagement. 
However, unlike traditional qualitative research in which additional individual interviews or focus groups can be conducted until thematic saturation is reached, a crowd is fully formed prior to data collection and works together for an extended period of time, and an inability to reach thematic saturation with a particular crowd would not be easily resolved. Given this consideration along with the lower costs of collecting qualitative data through crowdsourcing as compared to interviews or focus groups (e.g., interviewer time, transcription costs), we recommend recruiting a larger sample for crowdsourcing research than may be typical for traditional qualitative research.

Phase III: Collecting Crowdsourced Data

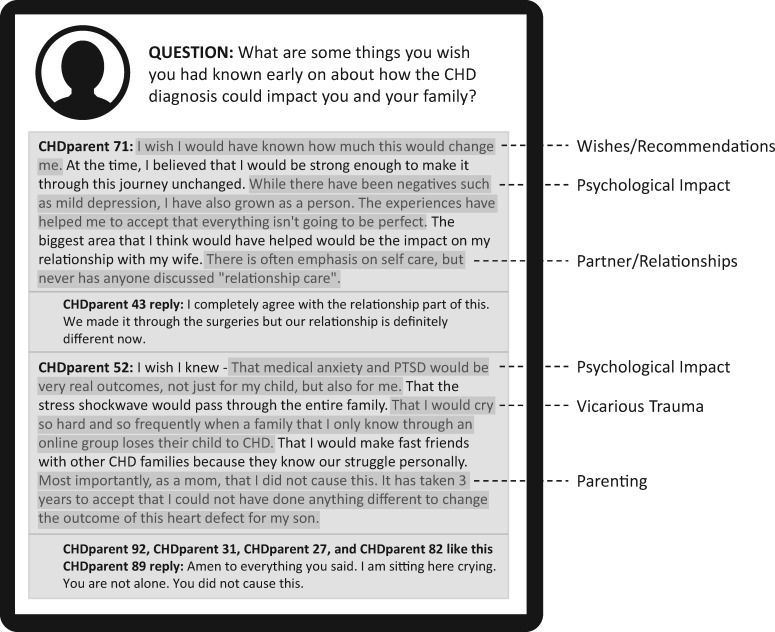

Existing evidence, theoretical models, and study aims should inform the development of study questions. While these questions may be drafted during the Preparing phase, they should be iteratively refined by the study team, including stakeholder partners, based on themes emerging from participant responses. For the first CHD study, 10 domains of psychosocial care were initially identified based on a review of the CHD literature and psychosocial standards for other pediatric illness populations (Hynan & Hall, 2015; Wiener et al., 2015) and through discussion with parent and clinician partners, and study questions were structured by these 10 domains (Supplementary File). The researchers then “post” study questions to the crowdsourcing platform, and participants provide a response of any length on their mobile device and can view and comment on other participants’ responses (i.e., interact as a “crowd”) (Figure 2). Participants receive app or email notifications when a question is posted, with regularly scheduled reminders to those who do not respond. To ensure that participants remain focused on the goals of the study and to reduce the likelihood of irrelevant responses, instructions or reminders can be included with each study question (Supplementary File). Researchers decide on the number of questions posted at a time (e.g., 2 or 3), how long participants have to respond (e.g., 1 week), how much time will elapse before the next set of questions is posted (e.g., 1–2 weeks), how much participants are paid per response (e.g., $5 for open-ended responses, $2 for polls), and how often and through what mechanism participants will be paid (e.g., monthly via reloadable study debit card). The specific examples provided above were based on our experiences conducting T1D and CHD studies, but other researchers may need to adjust them based on their research questions, timelines, and budgets. The point at which data collection is complete is informed by the study aims.
Across our T1D and CHD studies, the process of collecting crowdsourced data ranged from 4 months (19 questions) to 6 months (37 questions). For the first CHD study, rates of response for the parent crowd dropped gradually throughout the 6-month period of data collection, dropping below 75% at Question 19 (3 months into data collection) and below 66% at Question 24 (nearly 4 months into data collection), although brief upticks in response rate occurred for certain domains (e.g., questions about experiences of grief and loss). Given that additional questions and clarifications may arise while coding and analyzing the data in Phase IV and additional feedback is obtained from crowd participants in Phase V, crowds should not be dissolved until the study has progressed through all five phases and the investigators are confident that they have obtained all information needed to fulfill the study aims.
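Response rates of the kind reported above can be tracked as data collection proceeds. The following is a minimal sketch, not the procedure used in the T1D or CHD studies: it assumes the research team can export, for each posted question, the set of participant IDs who responded. The function names, the participant IDs, and the sample data are all hypothetical.

```python
from typing import Dict, Optional, Set


def response_rates(crowd: Set[str], responders: Dict[int, Set[str]]) -> Dict[int, float]:
    """Return, for each question number, the share of the crowd that responded."""
    if not crowd:
        raise ValueError("crowd must contain at least one participant")
    return {
        question: len(ids & crowd) / len(crowd)
        for question, ids in sorted(responders.items())
    }


def first_question_below(rates: Dict[int, float], threshold: float) -> Optional[int]:
    """Return the earliest question whose rate is strictly below threshold, or None."""
    for question in sorted(rates):
        if rates[question] < threshold:
            return question
    return None


# Hypothetical crowd of four participants and three posted questions.
crowd = {"P01", "P02", "P03", "P04"}
responders = {
    1: {"P01", "P02", "P03", "P04"},
    2: {"P01", "P02", "P03"},
    3: {"P01", "P04"},
}

rates = response_rates(crowd, responders)
print(rates)
print(first_question_below(rates, 0.75))
```

With this sample data, the rates are 1.0, 0.75, and 0.5 for Questions 1 through 3, and Question 3 is the first to fall strictly below 75%. A team might use a check like this to decide when to send additional reminders or to revisit the posting schedule.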

Figure 2.

Crowdsourcing platform: sample responses and assigned codes.

Phase IV: Coding and Analyzing Crowdsourced Data

The qualitative data generated from crowdsourcing include participant responses to open-ended questions, dialog between participants, and participant feedback on preliminary findings (see Phase V). The researchers extract data from the online platform, upload it into qualitative analysis software, and code and analyze it using a qualitative analytic approach consistent with the study goals. Phases III and IV are not linear; data analysis guides generation of new questions. For example, in the T1D study, we added two questions about positive experiences related to parenting a young child with T1D after parents spontaneously described a “benefit finding” phenomenon that became a code in earlier questions (Pierce et al., 2017). Unlike one-time interviews or focus groups, questions are asked of the same group of participants, thereby giving the research team control over digging deeper into emerging findings. Participants’ reflections on one another’s comments provided a rich source of detail on individual differences in affective adjustment to their children’s health and medical care.

Phase V: Generating and Disseminating Findings

The researchers share emerging themes from coded qualitative data with crowd participants to assess accuracy and resonance with their experiences (i.e., member checking). In our research, preliminary findings were presented back to crowds in the form of online posts, and participants were instructed to provide feedback through “likes,” comments, and suggestions. We incorporated participant feedback into final analyses that we then utilized to develop the intended resource, intervention, or measure with ongoing crowd involvement. Dissemination of findings occurs through community presentations, social media posts, and lay research summaries, in addition to more traditional methods of dissemination such as conference presentations and peer-reviewed manuscripts.

Practical and Ethical Considerations

There are important practical and ethical considerations when conducting crowdsourcing research.

Accessing Diverse Stakeholders

Although crowdsourcing facilitated seamless access to geographically diverse samples, accessing sociodemographically diverse stakeholders was more challenging. Participants from ethnic/racial minority backgrounds were underrepresented in the first set of T1D and CHD studies, likely due to recruitment through disease-specific social media sites and patient advocacy organizations where minorities may also be underrepresented (Long et al., 2015; Wray et al., 2018). An ongoing CHD study used purposive sampling by clinician collaborators across eight pediatric health systems to recruit a racially and ethnically diverse crowd of English- and Spanish-speaking parents, indicating that purposive sampling strategies and recruitment by trusted clinicians may result in more diverse samples. Alternative strategies (e.g., individual interviews, diversity focus groups; Pierce et al., 2017) could be used to complement crowdsourcing methods for stakeholders less likely to participate in research conducted online due to barriers such as inconsistent internet access or low literacy.

Emotional Engagement of Crowd Participants

To keep participants involved throughout crowdsourcing research, it is crucial that participants perceive the “product” being developed or problem being solved as high value to themselves and/or their community. Several parents in the T1D and CHD studies commented on the balance of costs versus benefits of participation and weighed the potential benefits to the community (improved psychosocial care for other families) against their personal emotional costs (distress when revisiting difficult experiences). This balance must be considered by investigators to ensure the study aim is of high value to the community to justify the investment of emotional resources. Of note, many parents also commented on personal benefits resulting from participation in the crowdsourcing process (e.g., realizing their emotional reactions are common, feeling less alone). Another consideration is that the pace of research can feel very slow for stakeholders who have a strong personal investment in the product (Long et al., 2015). Research teams should ensure that study findings have a meaningful impact through timely dissemination via community outlets such as patient advocacy groups.

Responsiveness and Transparency of Crowdsourcing Methodology

Crowdsourcing methodology by nature is responsive, iterative, and flexible (Brabham, 2013). We continually refined study questions for the T1D and CHD studies in collaboration with parent and clinician partners to ensure responsiveness to themes emerging from past responses. Another unique feature of crowdsourcing is that crowd participants have access to and can interact with data by responding to other participants’ posts. Use of a social networking platform also facilitates sharing of preliminary findings with participants and obtaining feedback before findings are broadly disseminated. Giving participants multiple opportunities to reflect on a specific question, and to appraise other participants’ responses to those same questions, was invaluable in capturing a very deep perspective of our subject matter. Compared to in-person focus groups where a small number of active, vocal participants may influence others’ responses, crowdsourcing participants have time and space to voice their opinions in writing rather than succumbing to a dominant crowd participant. We did not observe obvious influence by vocal participants or inappropriate responses in the T1D and CHD studies. Requiring that crowd participants set up their online profile without the use of identifiers may further reduce the likelihood of social influence, particularly with regard to sensitive or controversial topics or within smaller communities. The option to submit responses directly to the study coordinator (by email, through a private message on the online platform) could also be given for any participants who prefer that particular responses not be viewed by the whole crowd.

Efficiency of Crowdsourcing Methodology

Crowdsourcing methodology is more efficient than traditional qualitative research. First, it offers the ability to share experiences and opinions via text messaging and social media, preferred methods of communication (i.e., compared to in-person or over the phone) for many pediatric psychology research participants (Vogels, 2019). Second, it provides the ability to conduct iterative research by taking findings “back to the crowd” for input. Third, since responses are typed and extracted from the social networking site, it eliminates the need for transcription, which can be costly and time intensive. Fourth, it eliminates the need for travel and avoids scheduling difficulties in the context of busy participant schedules. Fifth, data are obtained from multiple participants but can also be linked back to individual participants, which allows for subgroup analysis (e.g., fathers; Hoffman et al., 2020).
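The fifth point, linking responses back to individual participants for subgroup analysis, can be illustrated with a short sketch. This is an illustration under stated assumptions, not the analytic approach used in the cited studies: it assumes coded excerpts have been exported as (participant ID, code) pairs and that a separate lookup maps each participant ID to a subgroup taken from the demographic questionnaire. The function name, IDs, subgroup labels, and data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def participants_per_code(
    coded_excerpts: Iterable[Tuple[str, str]],
    subgroup_of: Dict[str, str],
) -> Dict[Tuple[str, str], int]:
    """Count distinct participants per (subgroup, code) pair.

    Repeated excerpts from the same participant under the same code
    are counted once, so a prolific poster does not inflate a code.
    """
    seen: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for participant_id, code in coded_excerpts:
        group = subgroup_of[participant_id]
        seen[(group, code)].add(participant_id)
    return {key: len(ids) for key, ids in seen.items()}


# Hypothetical lookup from the demographic questionnaire.
subgroup_of = {"P01": "mother", "P02": "father", "P03": "father"}

# Hypothetical coded excerpts exported from qualitative analysis software.
coded_excerpts = [
    ("P01", "benefit finding"),
    ("P02", "benefit finding"),
    ("P02", "benefit finding"),  # second excerpt, same participant and code
    ("P03", "grief and loss"),
]

counts = participants_per_code(coded_excerpts, subgroup_of)
print(counts[("father", "benefit finding")])
```

Counting distinct participants rather than excerpts is a design choice made for this sketch; a team interested in how much discussion a code generated might count excerpts instead.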

Limited Personal Contact with Participants

While study teams have online access to participants of crowdsourcing research, direct personal contact is limited or nonexistent. This poses challenges if a participant posts a response that raises a “red flag” and warrants further assessment. Unlike traditional data collection where follow-up can occur as part of the interaction, crowdsourcing methodology is remote and samples are dispersed geographically. Prior to initiating crowdsourcing research, study teams should consider the potential need to assist participants struggling with anxiety, loss, or depression and work with their IRB on a plan for monitoring posts and responding to “red flags” raised as part of the research process. For the first CHD study, we provided participants with a list of general mental health resources, including websites, national organizations, hotlines, and instructions on how to locate a local mental health provider. Over the 6-month period of data collection, we made direct contact with two participants based on their responses and provided information about local resources.

Conclusions

Recent studies in T1D and CHD suggest that crowdsourcing methodology is an innovative, efficient, feasible, and timely approach to engaging stakeholders in qualitative research. Crowdsourcing methodology overcomes certain limitations of traditional qualitative research and may be well-suited for the development of interventions or models of care that can be widely disseminated and implemented across settings for a broad range of families.

Supplementary Data

Supplementary data can be found at: https://academic.oup.com/jpepsy.

Acknowledgments

The authors thank the stakeholder partners and crowd participants who contributed to the research described in this topical review.

Funding

This work was supported by the National Institute of Diabetes and Digestive and Kidney Diseases (grant number DP3-DK108198), National Institute of General Medical Sciences (grant number U54-GM104941), and Agency for Healthcare Research and Quality (grant number 1K12HS026393-01).

Conflict of interest: None declared.

References

- Alderfer M., Sood E. (2016). Using qualitative research methods to improve clinical care in pediatric psychology. Clinical Practice in Pediatric Psychology, 4(4), 358–361. 10.1037/cpp0000164

- Brabham D. C. (2013). Crowdsourcing. MIT Press Essential Knowledge Series. Cambridge, Massachusetts.

- Brabham D., Ribisl K., Kirchner T., Bernhardt J. (2014). Crowdsourcing applications for public health. American Journal of Preventive Medicine, 46(2), 179–187. 10.1016/j.amepre.2013.10.016

- Créquit P., Mansouri G., Benchoufi M., Vivot A., Ravaud P. (2018). Mapping of crowdsourcing in health: Systematic review (2018). Journal of Medical Internet Research, 20(5), e187. 10.2196/jmir.9330

- Cushing C., Fedele D., Brannon E., Kichline T. (2018). Parents’ perspectives on the theoretical domains framework elements needed in a pediatric health behavior app: A crowdsourced social validity study. JMIR Mhealth and Uhealth, 6(12), e192. 10.2196/mhealth.9808

- Czajkowski S. M., Powell L. H., Adler N., Naar-King S., Reynolds K. D., Hunter C. M., Laraia B., Olster D. H., Perna F. M., Peterson J. C., Epel E., Boyington J. E., Charlson M. E. (2015). From ideas to efficacy: The ORBIT model for developing behavioral treatments for chronic diseases. Health Psychology, 34(10), 971–982. 10.1037/hea0000161

- Department of Health and Human Services: Food and Drug Administration & Office for Human Research Protections. (2016, December 15). Use of electronic informed consent: Questions and answers. https://www.govinfo.gov/content/pkg/FR-2016-12-15/pdf/2016-30146.pdf (Last accessed October 21, 2020)

- Estellés-Arolas E., González-Ladrón-de-Guevara F. (2012). Towards an integrated crowdsourcing definition. Journal of Information Science, 38(2), 189–200. 10.1177/0165551512437638

- Gramszlo C., Karpyn A., Christofferson J., McWhorter L., Demianczyk A., Lihn S., Tanem J., Zyblewski S., Boyle E. L., Kazak A. E., Sood E. (2020). Supporting parenting during infant hospitalisation for CHD. Cardiology in the Young, 30, 1422–1428. 10.1017/S1047951120002139

- Hoffman M. F., Karpyn A., Christofferson J., Neely T., McWhorter L. G., Demianczyk A. C., James, Mslis R., Hafer J., Kazak A. E., Sood E. (2020). Fathers of children with congenital heart disease: Sources of stress and opportunities for intervention. Pediatric Critical Care Medicine. 10.1097/PCC.0000000000002388

- Hynan M. T., Hall S. L. (2015). Psychosocial program standards for NICU parents. Journal of Perinatology, 35(S1), S1–S4. 10.1038/jp.2015.141

- Long K. A., Goldish M., Lown E. A., Ostrowski N. L., Alderfer M. A., Marsland A. L., Ring S., Skala S., Ewing L. J. (2015). Major lessons learned from a nationally-based community–academic partnership: Addressing sibling adjustment to childhood cancer. Families, Systems, & Health, 33(1), 61–67. 10.1037/fsh0000084

- Patient-Centered Outcomes Research Institute (2015). Financial compensation of patients, caregivers, and patient/caregiver organizations engaged in PCORI-funded research as engaged research partners. https://www.pcori.org/sites/default/files/PCORI-Compensation-Framework-for-Engaged-Research-Partners.pdf (Last accessed October 21, 2020)

- Pierce J. S., Aroian K., Caldwell C., Ross J. L., Lee J. M., Schifano E., Novotny R., Tamayo A., Wysocki T. (2017). The ups and downs of parenting young children with type 1 diabetes: A crowdsourcing study. Journal of Pediatric Psychology, 42(8), 846–860. 10.1093/jpepsy/jsx056

- Schleider J., Weisz J. (2015). Using Mechanical Turk to study family processes and youth mental health: A test of feasibility. Journal of Child and Family Studies, 24(11), 3235–3246. 10.1007/s10826-015-0126-6

- Smith A. (2015, April 1). U.S. smart phone use in 2015. Pew Research Center. http://www.pewinternet.org/2015/04/01/us-smartphone-use-in-2015/ (Last accessed October 21, 2020)

- Stewart R. E., Williams N., Byeon Y. V., Buttenheim A., Sridharan S., Zentgraf K., Jones D. T., Hoskins K., Candon M., Beidas R. S. (2019). The clinician crowdsourcing challenge: Using participatory design to seed implementation strategies. Implementation Science, 14(1), 63. 10.1186/s13012-019-0914-2

- Vogels E. A. (2019, September 9). Millennials stand out for their technology use, but older generations also embrace digital life. Pew Research Center. https://www.pewresearch.org/fact-tank/2019/09/09/us-generations-technology-use/ (Last accessed October 21, 2020)

- Wiener L., Kazak A. E., Noll R. B., Patenaude A. F., Kupst M. J. (2015). Standards for the psychosocial care of children with cancer and their families: An introduction to the special issue. Pediatric Blood & Cancer, 62(S5), S419–S424. 10.1002/pbc.25675

- Wray J., Brown K., Tregay J., Crowe S., Knowles R., Bull K., Gibson F. (2018). Parents’ experiences of caring for their child at the time of discharge after cardiac surgery and during the postdischarge period: Qualitative study using an online forum. Journal of Medical Internet Research, 20(5), e155. 10.2196/jmir.9104

- Wu Y., Thompson D., Aroian K., McQuaid E., Deatrick J. (2016). Commentary: Writing and evaluating qualitative research reports. Journal of Pediatric Psychology, 41(5), 493–505. 10.1093/jpepsy/jsw032

- Wysocki T., Pierce J., Caldwell C., Aroian K., Miller L., Farless R., Hafezzadeh I., McAninch T., Lee J. M. (2018). A web-based coping intervention by and for parents of very young children with type 1 diabetes: User-centered design. JMIR Diabetes, 3(4), e16. 10.2196/diabetes.9926
