Abstract

Throughout the last decade, augmented reality (AR) head-mounted displays (HMDs) have gradually become a substantial part of modern life, with applications ranging from gaming and driver assistance to medical training. Owing to tremendous progress in miniaturized displays, cameras, and sensors, HMDs are now used for the diagnosis, treatment, and follow-up of several eye diseases. In this review, we discuss the current state of the art as well as potential uses of AR in ophthalmology. The review covers the following topics: (i) underlying optical technologies, including displays and trackers, holography, and adaptive optics; (ii) accommodation, 3D vision, and related problems such as presbyopia, amblyopia, strabismus, and refractive errors; (iii) AR technologies in lens and corneal disorders, in particular cataract and keratoconus; and (iv) AR technologies in optic nerve and retinal disorders, including age-related macular degeneration (AMD), glaucoma, and color blindness, as well as vision simulators developed for other types of low-vision patients.

1. Introduction

Globally, 1.3 billion people live with visual impairments that cannot be fully corrected with eyeglasses or contact lenses [1]. Over the age of 65, there is a 25% chance of visual impairment or blindness. Even more concerning, about 1.4 million children are blind and 12 million are visually impaired [2]. Although 80% of all vision impairment would be avoidable if sufficient resources were in place, many people still do not have access to treatment, medication, or ophthalmologists [3–5]. Lens and corneal disorders, including cataract and keratoconus, and optic nerve and retinal disorders, such as glaucoma, diabetic retinopathy, and age-related macular degeneration (AMD), are the major blinding eye diseases [6]. These conditions can severely reduce quality of life, and several of them lead to total blindness if not treated correctly. The economic and social impact of blindness is devastating [7]. Although several approaches exist for early diagnosis and for reducing some of the effects of these diseases, surgery is still the definitive treatment for some of them; cataract surgery in particular is the most frequently performed surgery worldwide. During cataract surgery, the patient's cataractous crystalline lens is removed and an intraocular lens (IOL) is implanted into the lens capsule. In the last decade, newer IOL designs such as trifocal IOLs have emerged and are becoming popular among patients and ophthalmologists [8]. However, these newer IOLs are not suitable for everyone. Because their diffractive surface profiles vary across the pupil diameter, some patients experience disturbing visual phenomena such as glare, halos, and dysphotopsias. The main obstacle is that it is currently impossible to predict before surgery which patients will develop these phenomena. Pre-surgery simulations could therefore be a powerful tool for improving visual outcomes.

According to experts, within the next 10 years healthcare services will have to be transformed into fast, precise, non-invasive, and cost-effective services that leverage advanced diagnostics, pervasive monitoring, and innovative e-health applications [9] in order to detect body signals, symptoms, and diseases early [10]. Point-of-care diagnostics need to be placed at the core of a patient-centered approach. Experts underline that the ability to share patients' data is now a necessity for healthcare systems, and that mobile diagnostics with cloud capabilities can enable real-time big-data analytics for diagnosis and prescription [11,12]. These needs can best be met through breakthroughs in, and the deployment of, biophotonics technologies for easy-to-access, non-invasive, low-cost screening methods [13,14]. With advances in augmented reality (AR) and adaptive optics (AO), head-mounted displays (HMDs) offer unique and disruptive solutions for the diagnosis, treatment, and follow-up of several eye diseases.

HMDs combined with real-world cameras enhance users' existing visual capabilities using computer vision techniques such as optical character recognition and pupil tracking, together with enhancement methods including magnification, contrast enhancement, edge enhancement, and black/white reversal. The optical and software architecture plays a key role in simulating the diseases. While off-the-shelf hardware is widely preferred among researchers, including AR-HMDs such as Google Glass [15], Microsoft HoloLens [16], and Epson Moverio [17] and virtual reality (VR) headsets such as HTC Vive [18] and Oculus Rift [19], some researchers prefer to develop their own optical architecture for better customization and adaptation. So-called “video see-through displays”, which consist of a VR headset and a real-world camera, replace the image that the world casts on the retina with an enhanced view. However, these displays suffer from temporal lag (latency), bulky hardware, and a reduced visual field. “Optical see-through displays”, on the other hand, render the augmented image on top of real objects without covering the eyes with an opaque screen, keeping the user's natural field of view intact and the eyes unblocked. Although video see-through systems are excellent candidates for exploring how HMDs and wearable cameras can enhance visual perception, they are inherently limited to augmenting the 2D image from a video camera. In contrast, optical see-through displays integrate 3D virtual objects into the 3D physical environment, which allows new visual enhancements that are better integrated with the user's real-world tasks and therefore opens possibilities for diagnosis, treatment, and visual aids for several ophthalmic diseases. Visual impairments mostly occur in the elderly population, who are often not accustomed to using new technological devices with confidence [20]. However, technological devices could significantly improve their lifestyle by providing enhanced information about their surroundings and notifying them about obstacles and hazardous incidents. AR-HMDs could therefore improve the visual perception of people with low vision when used as a visual aid [21]. Several studies referred to in this review address the needs of visually impaired people in a specific context, whereas other systems aim to aid low vision in general, each with a different optical architecture.
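The enhancement methods named above (magnification, contrast enhancement, edge enhancement, and black/white reversal) are simple per-frame image operations. The sketch below is a minimal illustration, assuming 8-bit grayscale frames stored as lists of rows; the function names are hypothetical and not taken from any of the cited systems, which would run equivalent filters on a GPU.

```python
# Minimal sketches of common low-vision enhancement operations.
# Assumption: frames are 8-bit grayscale images stored as lists of rows.

def clamp(value):
    """Round and clip a value to the 8-bit range [0, 255]."""
    return max(0, min(255, int(round(value))))


def contrast_stretch(img):
    """Map the darkest pixel to 0 and the brightest to 255 linearly."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in img]
    return [[clamp((v - lo) * 255 / (hi - lo)) for v in row] for row in img]


def reverse_polarity(img):
    """Black/white reversal: dark text on a light background becomes light on dark."""
    return [[255 - v for v in row] for row in img]


def edge_enhance(img, amount=1.0):
    """Sharpen by adding a scaled 4-neighbour Laplacian (border pixels unchanged)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (4 * img[y][x] - img[y - 1][x] - img[y + 1][x]
                   - img[y][x - 1] - img[y][x + 1])
            out[y][x] = clamp(img[y][x] + amount * lap)
    return out


def magnify(img, factor):
    """Nearest-neighbour magnification by an integer factor."""
    return [[v for v in row for _ in range(factor)]
            for row in img for _ in range(factor)]
```

In practice these operations are chained (for example, magnify, then stretch contrast, then reverse polarity for reading), and the order matters because clipping in one step discards information the next step could have used.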

The human eye is a complex optical system that transmits light through its components and focuses it onto the retina. Incident light is first refracted by the cornea, then enters the eye through the pupil, and is focused by the crystalline lens. A malfunction or impairment in any of these parts can cause a visual disorder and may even lead to total blindness. Figure 1 illustrates how various visual impairments affect vision. Although the actual appearance depends on the severity of the impairment, the figure depicts typical levels. We discuss these disorders separately, since the literature treats their association with AR-based devices according to the anatomical structure, effects, and symptoms of each disease.

Fig. 1.

Illustrations of visual impairments implemented in Adobe Photoshop. (a) Cataract; (b) Keratoconus; (c) Age-related macular degeneration; (d) Glaucoma; (e) Color blindness (tritanopia).

AR, pupil trackers, and adaptive optics integrated with HMDs provide very powerful technologies not only for low vision aids, but also for diagnosis and vision simulation. In this review, we analyze technical capabilities, systemic limitations, challenges and future directions of using those technologies in ophthalmology.

In Section 2 we discuss AR and its underlying technologies, including AR head-worn displays, spatial light modulators, computer-generated holography, pupil tracking, and adaptive optics. In Section 3, we discuss accommodation, 3D vision, and related problems where AR HMDs are useful. Binocular head-worn displays make it easy to test 3D vision, accommodation, and associated problems including presbyopia. HMDs also make amblyopia easier to diagnose and can help prevent incorrect lens prescriptions in hypermetropia. Section 4 discusses the use of AR technologies in lens and corneal disorders. We predict that the most important use of HMDs will be related to cataract, since cataract surgery is the most common surgery in the world. There are many intraocular lens options but no preoperative simulators, and research in the holographic and adaptive optics domains aims to address this need. Other important uses of AR technologies relate to corneal disorders such as keratoconus. Section 5 discusses the use of AR technologies in optic nerve and retinal disorders, including AMD, glaucoma, color blindness, and other types of low-vision disorders.

2. Underlying technologies

Augmented reality is a technology that enhances the real-world environment by overlaying digital information on real objects or locations. Unlike virtual reality, which creates a completely virtual environment, AR aims to enhance the user experience with interactive content that combines real and computer-generated objects [22,23].

Although AR systems differ, they share certain common elements: input devices, tracking systems, computation units, and displays [24]. Input devices let users interact with virtual content; an input device can be a simple remote controller, an interactive glove [25], a wireless wristband [26], or a finger/hand-tracking camera system. Tracking devices enable AR systems to align digital information with the real world: to match the virtual content with what the user is seeing, they provide the location of real objects to the computation unit. These devices can be digital cameras, optical sensors, GPS receivers, accelerometers, solid-state compasses, or wireless sensors. To render the virtual scene and align the virtual content properly with real objects, AR devices need powerful computation units, which are usually equipped with CPUs and GPUs to meet real-time processing requirements [23]. Lastly, the user must be able to perceive both the real scene and the virtual content. Three main types of display are used in AR systems: head-mounted displays (HMDs), handheld displays, and spatial displays [24].
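The alignment step described above, where the tracker's pose lets the computation unit place virtual content on a real object, can be illustrated with a pinhole projection. This is a minimal sketch under stated assumptions: the tracker is assumed to report a rotation `R` and translation `t` mapping world coordinates into the viewer's frame (x right, y down, z forward), and the display is modeled as a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`. Real optical see-through HMDs additionally need an eye-to-display calibration.

```python
import math

# Assumption: the pose (R, t) maps world points into the viewer frame
# (x right, y down, z forward); intrinsics below are hypothetical.

def rotation_yaw(theta):
    """3x3 rotation matrix about the vertical (y) axis, angle in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def world_to_camera(point, R, t):
    """Apply the tracked pose: p_cam = R @ p_world + t."""
    return [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]


def project(point_cam, fx, fy, cx, cy):
    """Pinhole projection to pixel coordinates; None if the point is behind the viewer."""
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def register(anchor_world, R, t, intrinsics):
    """Pixel at which a virtual label must be drawn to sit on a real anchor point."""
    return project(world_to_camera(anchor_world, R, t), *intrinsics)
```

For example, with an identity pose and intrinsics `(1000, 1000, 640, 360)`, an anchor 2 m straight ahead lands at the display center `(640, 360)`; every tracking error in `R` or `t` shows up directly as a pixel offset of the overlay.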

2.1. Augmented reality head-mounted displays (AR-HMDs)

Augmented reality head-mounted displays (AR-HMDs) are one of these display types: they integrate digital content with the real world through a display device worn on the head. Head-mounted displays can be either video see-through or optical see-through, as illustrated in Fig. 2, and depending on their optical architecture, both types can be monocular or binocular. Video see-through systems typically require the user to wear two head-mounted cameras whose images must be processed to provide both the real part of the augmented scene and the virtual objects [24]. Optical see-through systems, on the other hand, employ optical components that let users observe the physical world directly with their own eyes while information is graphically overlaid. The real world is therefore perceived naturally, rather than at the resolution of the display [27].

Fig. 2.

Schematics of (a) an optical see-through display and (b) a video see-through display.

Optical see-through systems are often considered superior because the user sees the real world directly through the optics as well as the virtual content. These systems exploit both the transmissive and the reflective properties of the optical combiner [28].
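The combiner's dual role can be captured by a simple additive model: the eye receives the real scene attenuated by the transmittance T plus the display light scaled by the reflectance R. The sketch below assumes a passive, spectrally flat combiner (so T + R ≤ 1); real combiners are often polarization- or wavelength-selective. A direct consequence of the model is that an optical see-through display can only add light, so it cannot render black or occlude a bright background without extra hardware.

```python
# Simple additive model of an optical see-through combiner.
# Assumption: passive, spectrally flat combiner with transmittance T and reflectance R.

def perceived_luminance(world, display, transmittance, reflectance):
    """Luminance reaching the eye: T * world + R * display (same units as inputs)."""
    if not (0.0 <= transmittance <= 1.0 and 0.0 <= reflectance <= 1.0):
        raise ValueError("T and R must lie in [0, 1]")
    if transmittance + reflectance > 1.0:
        raise ValueError("a passive combiner cannot have T + R > 1")
    return transmittance * world + reflectance * display


def overlay_contrast(world, display, transmittance, reflectance):
    """Ratio of a lit virtual pixel to the see-through background behind it."""
    background = transmittance * world
    return perceived_luminance(world, display, transmittance, reflectance) / background
```

With illustrative values T = 0.6, R = 0.3, a 100 cd/m² background, and a 500 cd/m² display pixel, the overlay reaches 210 cd/m², a contrast of 3.5 against the background; an "off" display pixel simply shows the dimmed background (60 cd/m²).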

Several commercially available optical see-through HMDs have been adapted to a wide range of AR applications. Google Glass is widely used in healthcare applications, including ophthalmology. Its user-friendly setup, adaptable software, and easy information access are overshadowed by technical limitations: short battery life, low-resolution images, limited memory, and poor connectivity. These limitations in medical settings were analyzed thoroughly in [29], which concluded that the device is not suitable for complex applications such as large-scale data analysis, image processing, and multi-threading. HoloLens and Magic Leap [30] are good devices for AR research despite their high cost and relatively complex waveguide design. Lumus [31] and Epson Moverio, on the other hand, have a small form factor, are cost-effective, and have simpler waveguide designs that allow customization. While the former offers a wider field of view than the latter, both have good color reproduction [32].

2.2. Micro-displays

A micro-display is a crucial element of any AR-HMD system. Micro-displays are electronic display panels intended to be used with an optical architecture that magnifies the image of the panel. They can be classified into two categories according to how each pixel produces its light output: emissive and modulating micro-displays [33]. In emissive micro-displays, an electrical signal applied to the optical layer of a pixel causes that layer to emit light by electroluminescence. In modulating micro-displays, each pixel is illuminated by an external light source, and an electrical signal applied to the pixel directly changes the amplitude, phase, frequency, or some other property of the output light. Various micro-display technologies are used in AR-HMDs [33].

Liquid crystal on silicon (LCoS) is a miniaturized reflective active-matrix liquid-crystal micro-display. LCoS devices are also used as spatial light modulators (SLMs), which impose a spatially varying modulation on a beam of light. An SLM is a key device for controlling light in two dimensions and consists of an addressing part and a light-modulation part. The information written into the addressing part changes the optical characteristics of the modulation part, and the readout light is modulated accordingly, producing an optical output that reflects the written information [34]. The spatial distribution of light properties such as phase, polarization state, intensity, and propagation direction can be modulated in this way. Because each pixel can modulate the phase, SLMs can directly control the wavefront of light. SLMs specifically designed to modulate the phase of light are referred to as phase-only SLMs [35].
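As an example of wavefront control with a phase-only SLM, the device can be made to act as a thin lens by displaying the paraxial lens phase φ(x, y) = −π(x² + y²)/(λf), wrapped into [0, 2π) and quantized to the SLM's gray levels. The sketch below is illustrative only: it assumes a linear, calibrated lookup table in which gray level k produces a phase delay of 2πk/levels, and it ignores pixel crosstalk (fringing). Sign conventions for φ differ between texts.

```python
import math

def lens_phase_pattern(n, pitch, wavelength, focal_length, levels=256):
    """Wrapped, quantized phase map that makes a phase-only SLM act as a thin lens.

    n: SLM modelled as n x n pixels; pitch: pixel size (m); focal_length (m),
    negative for a diverging lens. Returns gray levels in [0, levels - 1],
    assuming level k gives a phase delay of 2*pi*k/levels (linear LUT).
    """
    two_pi = 2 * math.pi
    pattern = []
    for j in range(n):
        y = (j - (n - 1) / 2) * pitch  # pixel-centre coordinates, optical axis at centre
        row = []
        for i in range(n):
            x = (i - (n - 1) / 2) * pitch
            phi = -math.pi * (x * x + y * y) / (wavelength * focal_length)
            wrapped = phi % two_pi  # phase wrapping into [0, 2*pi)
            row.append(int(wrapped / two_pi * levels) % levels)
        pattern.append(row)
    return pattern
```

Because the phase is wrapped modulo 2π, the displayed pattern is a Fresnel-lens-like set of concentric zones; the shorter the focal length, the more zones and the narrower they become toward the edge.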

Emissive micro-displays, such as OLED and inorganic LED panels, are alternatives to reflective LCoS micro-displays. Because they emit light themselves, with an approximately Lambertian angular distribution, these panels do not require additional back- or front-lighting. OLED panels have already been used in several head-mounted displays, such as Sony Morpheus and Oculus Rift DK2 [34].
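One practical consequence of Lambertian emission is that only part of the emitted light falls within the acceptance cone of the HMD optics. For radiant intensity I(θ) = I₀cos θ, integrating over the solid angle gives πI₀sin²α inside a cone of half-angle α and πI₀ over the hemisphere, so the captured fraction is sin²α. The sketch below evaluates this; the acceptance angle of any particular eyepiece is an assumption left to the caller.

```python
import math

def lambertian_fraction_within(half_angle_deg):
    """Fraction of a Lambertian emitter's flux that falls inside a cone.

    Radiant intensity I(theta) = I0 * cos(theta). Integrating over the solid angle,
    the flux within half-angle a is pi * I0 * sin(a)**2, and over the full
    hemisphere it is pi * I0, so the fraction is sin(a)**2.
    """
    if not 0.0 <= half_angle_deg <= 90.0:
        raise ValueError("half-angle must be between 0 and 90 degrees")
    return math.sin(math.radians(half_angle_deg)) ** 2
```

For instance, optics accepting a cone of ±20° around the panel normal would collect only about 12% of the emitted flux, which is one reason emissive panels are paired with carefully designed collection optics.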

Scanners based on microelectromechanical systems (MEMS) micromirrors are attractive image generators for AR-HMD devices because they use collimated laser beams. This display technology uses a small (∼1 mm) scanning mirror and three rapidly modulated lasers (red, green, and blue). The image is generated by scanning the three lasers simultaneously in a raster pattern. To display the image, the raster pattern can be projected onto a surface, as in head-mounted projection displays (HMPDs), or relayed to the eye, as in AR-HMDs [33].
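The raster-scan principle sets demanding timing requirements on the mirror and the laser modulation. The rough estimate below assumes a resonant fast axis that draws one image line per half period (bidirectional scanning) and ignores blanking intervals and the sinusoidal mirror velocity, both of which raise the peak pixel clock in practice; the resolution and frame rate in the example are illustrative.

```python
def mems_scan_requirements(h_pixels, v_lines, frame_rate_hz, bidirectional=True):
    """Rough fast-axis frequency and pixel rate for a raster-scanning laser display.

    Assumes the fast (horizontal) mirror axis draws one image line per half
    period when bidirectional, and ignores blanking and the sinusoidal mirror
    velocity, both of which raise the peak pixel clock in practice.
    """
    lines_per_second = v_lines * frame_rate_hz
    fast_axis_hz = lines_per_second / 2 if bidirectional else lines_per_second
    pixel_rate_hz = h_pixels * lines_per_second  # laser modulation rate per colour
    return fast_axis_hz, pixel_rate_hz
```

For a 1280 × 720 image at 60 Hz this gives a fast-axis frequency of about 21.6 kHz and roughly 55 million pixels per second per laser, which illustrates why such scanners rely on resonant mirrors and fast direct laser modulation.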

2.3. Computer generated holography and adaptive optics

Both computer-generated holography (CGH) and adaptive optics (AO) make it possible to control the wavefront generated within a head-mounted display. This is particularly important for ophthalmology applications, because control over the wavefront also allows higher-order aberrations to be simulated and corrected.
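Ocular aberrations are usually described as a weighted sum of Zernike polynomials, and a wavefront-controlling display or AO system works with exactly these coefficients. The sketch below assumes the OSA/ANSI normalization and keeps only three terms (defocus, 0°/90° astigmatism, and primary spherical aberration) for brevity. The dioptric conversion M = −4√3·c₂⁰/r² (c in µm, pupil radius r in mm) uses the defocus term alone and neglects the contribution of higher-order terms.

```python
import math

# OSA/ANSI-normalized Zernike terms in polar pupil coordinates (rho in [0, 1]).
def z_defocus(rho, theta):        # Z(2, 0)
    return math.sqrt(3) * (2 * rho**2 - 1)


def z_astig_0(rho, theta):        # Z(2, 2), astigmatism at 0/90 degrees
    return math.sqrt(6) * rho**2 * math.cos(2 * theta)


def z_spherical(rho, theta):      # Z(4, 0), primary spherical aberration
    return math.sqrt(5) * (6 * rho**4 - 6 * rho**2 + 1)


TERMS = {"defocus": z_defocus, "astig_0": z_astig_0, "spherical": z_spherical}


def wavefront(coeffs_um, rho, theta):
    """Wavefront error (micrometres) as a weighted sum of the selected Zernike terms."""
    return sum(c * TERMS[name](rho, theta) for name, c in coeffs_um.items())


def defocus_to_diopters(c20_um, pupil_radius_mm):
    """Spherical-equivalent power of the defocus term: M = -4*sqrt(3)*c20 / r^2."""
    return -4 * math.sqrt(3) * c20_um / pupil_radius_mm**2
```

For example, 1 µm of Zernike defocus over a 4 mm pupil (2 mm radius) corresponds to about −1.73 D; simulating a condition amounts to adding the desired coefficients to the displayed wavefront, and correcting it amounts to subtracting the measured ones.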

A hologram records both the amplitude and the phase of the waves scattered by a scene illuminated with coherent laser light. For AR-HMD devices, holography is a promising technology that can recreate a three-dimensional virtual scene with all natural depth cues. Computer-generated holography methods allow us to synthesize the wavefronts of virtual objects in the same way that the real objects around us produce theirs, so that, ideally, the wavefronts generated for virtual content are indistinguishable from those of physical objects [36]. An AR-HMD device based on computer-generated holography requires several optical and computational components and can provide binocular disparity, motion parallax, correct occlusion, and accommodation cues, as shown in Fig. 3 [37].

Fig. 3.

Schematic top view of a user wearing the HNED (left eye only). The CGH on the SLM converts the diverging wave from the point light source directly to the true light wave (with correct ray angles) from virtual objects. That wave goes through the eye pupil along with the light from real objects and forms a retinal image. The eye in the figure is focused on nearby objects. (Reference [37], Fig. 2; Creative Commons CC BY; published by Taylor & Francis.)

CGH replaces the optical acquisition process of optical holography with computational methods. In the simplest sense, a CGH system can be analyzed in three major steps: 3D content generation, object wave computation, and 3D image reconstruction [38]. The first step is to represent the geometry of the input 3D scene: the scene geometry, the object positions, and the locations of the light sources are described. Specialized computer graphics software can already provide this information, for example as 2D projections of the scene from different perspectives together with the corresponding depth maps [39]. The next step is to compute the object wave of the scene using scalar diffraction theory. Because a display cannot reproduce arbitrary complex values, the complex-valued object wave computed for the 3D scene must then be encoded into values the display can show. CGH systems generally use one of three encodings: amplitude holograms, which modulate the amplitude of the reference wave; phase holograms, which modulate its phase; and complex holograms, which modulate both amplitude and phase. As a final step, the encoded CGH is displayed on the beam-shaping device described above, the SLM, to reconstruct the 3D image of the scene. Depending on the CGH system, the SLM reproduces the 3D scene by modulating the amplitude or the phase of the light, and its image is relayed to the subject's eye by a combiner or beam splitter.
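The object-wave computation and phase encoding steps can be illustrated with the point-source method, one of several CGH algorithms (layer-based and polygon-based methods are common alternatives). Each scene point is treated as a spherical wave emitter; the complex fields are summed at every SLM pixel, and the amplitude is then discarded to obtain a phase-only hologram (a kinoform). The grid size, pixel pitch, and wavelength below are illustrative assumptions, and the sketch omits the reference wave and any amplitude-preserving encoding.

```python
import cmath
import math

def point_cloud_cgh(points, nx, ny, pitch, wavelength):
    """Phase-only CGH of a point cloud via summed spherical waves.

    points: list of (x, y, z, amplitude) in metres; z > 0 is the distance from
    the hologram (SLM) plane. Returns an ny-by-nx list of phases in [0, 2*pi].
    """
    k = 2 * math.pi / wavelength
    phases = []
    for j in range(ny):
        y = (j - ny / 2) * pitch
        row = []
        for i in range(nx):
            x = (i - nx / 2) * pitch
            field = 0j
            for (px, py, pz, amp) in points:
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
                field += amp / r * cmath.exp(1j * k * r)  # spherical wave from the point
            # Phase-only encoding: keep arg(field) and discard the amplitude.
            row.append(cmath.phase(field) % (2 * math.pi))
        phases.append(row)
    return phases
```

The cost grows with the number of pixels times the number of scene points, which is why practical holographic displays rely on GPU acceleration or faster layer-based algorithms.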

Adaptive optics is a technique for measuring and modifying wavefronts. It was initially developed in astronomy to correct the blurring caused by atmospheric turbulence in images recorded by telescopes. It soon became clear, however, that AO could tackle a similar problem in retinal images caused by the aberrations of the eye's optics. In its first ophthalmic implementations, AO was used to correct the optical aberrations of the eye in order to obtain sharp retinal images [40–42]. Modifying the phase of the wavefront to simulate ophthalmic devices (contact lenses, intraocular lenses) or the optical conditions of pathological eyes is another application of AO in vision science. These instruments, known as adaptive optics vision simulators (AOVS), have been extremely useful for both basic research and the design of ophthalmic solutions [43–46]. A major disadvantage that keeps these instruments from being accessible to the general population is their size. Overall, although significant advances have been made in the use of AO in ophthalmology, miniaturization remains the biggest challenge for its integration into mobile diagnostic devices. A miniaturized AOVS would be a breakthrough in translating the benefits of this technology to the public.
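Both uses of AO described above, correcting the eye's aberrations and simulating a different optical condition, can be expressed as one closed loop that drives the measured wavefront towards a target. The sketch below is conceptual: it assumes a noise-free sensor that measures eye plus corrector exactly, a linear corrector, and modal (e.g. Zernike) coefficients; real systems use calibrated interaction matrices, sensor noise models, and actuator limits. A zero target corrects the eye, while a non-zero target (for example the wavefront of an IOL design) simulates it.

```python
def ao_loop(eye_aberration, target, gain=0.5, iterations=20):
    """Closed-loop integrator driving a wavefront corrector towards a target.

    Vectors are lists of modal (e.g. Zernike) coefficients in micrometres.
    Assumes the sensor measures eye + corrector exactly (no noise).
    target = [0, ...] corrects the eye; a non-zero target simulates a condition.
    """
    command = [0.0] * len(eye_aberration)
    for _ in range(iterations):
        measured = [e + c for e, c in zip(eye_aberration, command)]
        error = [m - t for m, t in zip(measured, target)]
        command = [c - gain * err for c, err in zip(command, error)]  # integrator update
    residual = [e + c - t for e, c, t in zip(eye_aberration, command, target)]
    return command, residual
```

With this update the residual shrinks by a factor (1 − gain) per iteration, so the loop converges for 0 < gain < 2; lower gains converge more slowly but are more robust to measurement noise.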

Another challenge for AOVS is the limited refraction range of the simulator. This is mainly due to the limited amplitude of phase modulation that deformable mirrors or liquid-crystal-based spatial light modulators can achieve. Although pixel size is an inherent restriction on the functioning of SLMs, it is in fact the diffraction effects caused by extensive phase wrapping that limit the usable modulation amplitude of SLMs. The achievable range is therefore a key performance indicator for AO devices, especially for patients with large refractive errors. Suchkov et al. [47] proposed an instrument that addresses this limited range by dividing the task of aberration modulation between a liquid-crystal-on-silicon SLM (LCoS-SLM) and a tunable lens, thereby extending AOVS technology to highly aberrated eyes. The schematic of the proposed system is depicted in Fig. 4.
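The phase-wrapping argument can be quantified for pure defocus. With the paraxial defocus phase φ(r) = πPr²/λ (P in diopters), the number of 2π zones across a pupil of radius R is PR²/(2λ), and the local zone period at the pupil edge is λ/(RP). The sketch below computes both, assuming the pupil is imaged onto the SLM with a given magnification (SLM size divided by pupil size); the pupil size, wavelength, and pixel pitch in the example are illustrative, not parameters of the cited instrument.

```python
import math

def wrap_statistics(power_d, pupil_radius_m, wavelength_m, pixel_pitch_m,
                    magnification=1.0):
    """Phase-wrapping load of a defocus pattern displayed on a phase-only SLM.

    Defocus phase phi(r) = pi * P * r^2 / lambda. Returns the number of 2*pi
    zones across the pupil and the number of SLM pixels spanning the narrowest
    (outermost) zone. magnification = SLM size / pupil size (assumed).
    """
    if power_d == 0:
        return 0.0, math.inf
    zones = abs(power_d) * pupil_radius_m**2 / (2 * wavelength_m)
    # Local zone period at the pupil edge, lambda / (R * |P|), scaled onto the SLM.
    edge_period_m = wavelength_m / (pupil_radius_m * abs(power_d))
    pixels_per_edge_zone = edge_period_m * magnification / pixel_pitch_m
    return zones, pixels_per_edge_zone
```

For a 4 mm pupil at 550 nm, 1 D of defocus needs about 3.6 zones, whereas 10 D needs about 36, and with 8 µm pixels the outermost zones span only about 3.4 pixels; such coarsely sampled zones diffract light into unwanted orders, which is why offloading most of the defocus to a tunable lens extends the usable range.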

Fig. 4.

Schematic of the new adaptive optics visual simulator with an extended dioptric range. BS1, BS2, beam splitters; FS, field stop; HM, hot mirror; L1 to L7, achromatic doublets. Figure not to scale. Further description of the elements is provided in the main text. (Reprinted with permission from [47], The Optical Society.)

2.4. Tracking technologies

In AR-HMD systems, it is important to track the user's head position and orientation, viewpoint, and location with respect to surrounding objects in the environment. Various software algorithms combined with digital cameras, optical sensors, GPS, accelerometers, solid-state compasses, and wireless sensors can track the subject's position and eye movements [24].
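As an illustration of how inertial sensors are combined in head tracking, the sketch below uses a complementary filter, one common and simple fusion approach; commercial HMDs typically use full 3D sensor fusion (for example Kalman-type filters) together with optical tracking. It estimates only head pitch and assumes the head is not otherwise accelerating, so the accelerometer measures gravity; the axis conventions and the weight `alpha` are assumptions.

```python
import math

def complementary_filter(gyro_rates, accels, dt, alpha=0.98):
    """Fuse gyroscope and accelerometer readings into a pitch estimate (radians).

    gyro_rates: pitch angular velocity samples (rad/s); accels: (ax, az) pairs
    (m/s^2), assumed to be dominated by gravity. alpha weights the integrated
    gyro (smooth but drifting) against the accelerometer tilt (noisy but
    drift-free).
    """
    pitch = math.atan2(accels[0][0], accels[0][1])  # initialise from gravity
    history = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_pitch = pitch + rate * dt          # short-term: integrate angular rate
        accel_pitch = math.atan2(ax, az)        # long-term: tilt from gravity direction
        pitch = alpha * gyro_pitch + (1 - alpha) * accel_pitch
        history.append(pitch)
    return history
```

With a stationary head and a gyroscope bias of 0.01 rad/s sampled at 100 Hz, pure integration would drift by 0.1 rad in 10 s, whereas the filtered estimate settles at a bounded offset of about 0.005 rad.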

Detecting the pupil in images under real-world conditions is challenging because of significant variability in illumination, reflections, and occlusions. Although the robustness and accuracy of pupil detection can vary with individual differences in eye physiology, several well-established commercial eye trackers, such as the Dikablis Mobile eye tracker, Pupil Labs eye tracker, SMI Glasses, and Tobii Glasses, measure eye movements with at least one camera directed at the user's eye. Some systems use a single camera for gaze tracking, whereas others take advantage of stereo or depth camera systems. A crucial prerequisite for robust tracking is accurate detection of the pupil center in the eye images. The gaze point is then mapped to the viewed scene based on the pupil center and a user-specific calibration routine [33]. Dedicated GPU-based eye trackers are also being developed [48].
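The two steps named above, pupil-center detection and calibration-based gaze mapping, can be sketched in their simplest form. The example assumes infrared eye images in which the pupil is the darkest region, so thresholding and taking the centroid of dark pixels gives the pupil center; it then fits an affine map from pupil coordinates to scene coordinates using exactly three calibration targets. The function names are hypothetical; the commercial trackers listed above use more robust detectors (e.g. ellipse fitting with outlier rejection) and more calibration points with polynomial regression.

```python
def pupil_center(image, threshold):
    """Centroid of pixels darker than threshold (the pupil appears dark under IR)."""
    sx = sy = n = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                sx += x
                sy += y
                n += 1
    if n == 0:
        return None  # no pupil found, e.g. during a blink
    return (sx / n, sy / n)


def _det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_affine(pupil_pts, screen_pts):
    """Solve screen = a*px + b*py + c per axis from three calibration targets (Cramer's rule)."""
    m = [[float(px), float(py), 1.0] for px, py in pupil_pts]
    d = _det3(m)
    if d == 0:
        raise ValueError("calibration points must not be collinear")
    coeffs = []
    for axis in range(2):
        rhs = [float(s[axis]) for s in screen_pts]
        row = []
        for col in range(3):
            mc = [r[:] for r in m]
            for i in range(3):
                mc[i][col] = rhs[i]  # replace one column with the right-hand side
            row.append(_det3(mc) / d)
        coeffs.append(row)
    return coeffs


def map_gaze(coeffs, pupil):
    """Map a pupil centre to scene coordinates with the fitted affine model."""
    px, py = pupil
    return tuple(a * px + b * py + c for a, b, c in coeffs)
```

During calibration the user fixates known targets while pupil centers are recorded; afterwards, each new pupil center is passed through `map_gaze` to estimate where the user is looking in the scene.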

These technologies have formed the groundwork of assistive and diagnostic devices for visual impairments over the last two decades. We summarize these devices in the following sections; a preliminary overview of the diseases and some of the associated studies is given in Table 1.

Table 1. Summary of assistive and diagnostic display devices for ophthalmic diseases.

| Disease | Study | Device | Technology | Eye Tracker | Display |
|---|---|---|---|---|---|
| Presbyopia | Hasan et al. [49,50] | Autofocusing Eyeglass | Adaptive Optics | No | N/A |
| | Jarosz et al. [51] | Adaptive Eyeglasses | Adaptive Optics | No | N/A |
| | Padmanaban et al. [52] | Auto-focal Glasses | Adaptive Optics | Yes | N/A |
| | Wu et al. [53] | Near Eye Display | Holographic Optical Waveguide | No | Micro display with picoprojector |
| | Mompeán et al. [54] | Near Eye Display | Adaptive Optics | Yes | Smartphone display |
| Amblyopia | Nowak et al. [55] | Microsoft HoloLens | Holographic Waveguide (Optical see-through) | Yes | Waveguide display |
| Strabismus | Vivid Vision [56] | Oculus Rift | Virtual Reality | No | LCD |
| | | Samsung GearVR | | | Smartphone display |
| | | HTC Vive | | | OLED |
| | Miao et al. [57] | FOVE | Virtual Reality | Yes | OLED |
| Refractive Errors | Wu et al. [58] | Near Eye Display | Waveguide AR | No | Micro OLED |
| Cataract | Arias et al. [59] | Tabletop setup | Adaptive Optics | Yes | SLM-LCoS |
| | Vinas et al. [60] | Tabletop setup | Adaptive Optics | No | SLM-LCoS |
| Keratoconus | Rompapas et al. [61] | Tabletop setup | Augmented Reality | No | OLED |
| AMD | Hwang and Peli [62] | Google Glass | Augmented Reality (Optical see-through) | No | Prism Projector |
| | Moshtael et al. [63] | Epson Moverio BT-200 | Augmented Reality (Optical see-through) | No | LCD |
| | Ho et al. [64] | ODG R-7 | Augmented Reality | No | LCD |
| Glaucoma | Peli et al. [65] | Glasstron PLM-50 | Augmented Reality (Optical see-through) | No | LCD |
| | | Virtual Stereo I-O | | | |
| | | PC Eye-Trek | | | |
| | | Integrated EyeGlass | | | |
| | Younis et al. [66] | Epson Moverio BT-200 | Augmented Reality (Optical see-through) | No | LCD |
| | Jones et al. [67] | FOVE | Virtual Reality | Yes | OLED |
| | | HTC Vive | Augmented Reality (Video see-through) | | |
| Color Blindness | Tanuwidjaja et al. [68] | Google Glass | Augmented Reality (Optical see-through) | No | Prism Projector |
| | Sutton et al. [32] | Computational Glass | Augmented Reality (Optical see-through) | No | Phase-only SLM |
| | | Tabletop setup | | | SLM-LCoS |
| | Langlotz et al. [69] | Epson Moverio BT-300 | Augmented Reality (Optical see-through) | No | OLED |

3. AR in accommodation and 3D vision

Binocular vision gives humans the perception of depth and 3D vision. However, near-sighted and far-sighted people face difficulties in everyday life because their eyes cannot bring objects at all distances into focus [70]. Normally, the eye can focus at various distances by changing the shape of the crystalline lens and thereby its effective focal length. This process of changing the focal power to bring objects into focus on the retina is called accommodation. The accommodation range, in diopters, is the difference between the reciprocals of the near-point and far-point distances expressed in meters. When a person focuses on an object, both eyes must converge to fixate on the same point in space; the distance to this point is called the vergence distance. At the same time, the eyes must accommodate to that distance to image the point in sharp focus. These two mechanisms are neurally coupled, so when the vergence angle changes, the eye adjusts its accommodation to keep the image in focus. If the vergence and accommodation distances do not match, as happens when a display presents stereoscopic content on a single fixed focal plane, fatigue and discomfort result. This problem is called the vergence-accommodation conflict (VAC) [71], and it is a crucial issue in AR systems even for people with normal vision, because the virtual world must closely match the real world. Figure 5 depicts two different accommodation distances when 3D virtual images with correct focus cues are displayed.
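The quantities defined above can be computed directly. The sketch below evaluates the accommodation range from the near and far points, the vergence angle for a point straight ahead, and the size of the vergence-accommodation mismatch in diopters; the interpupillary distance of 64 mm is an assumed typical value, not a figure from the cited studies.

```python
import math

def accommodation_range(near_point_m, far_point_m=math.inf):
    """Accommodation range in diopters: 1/near - 1/far (far point at infinity -> 0 D)."""
    far_term = 0.0 if math.isinf(far_point_m) else 1.0 / far_point_m
    return 1.0 / near_point_m - far_term


def vergence_angle_deg(distance_m, ipd_m=0.064):
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def vac_diopters(vergence_distance_m, focal_distance_m):
    """Vergence-accommodation mismatch: difference of the two distances in diopters."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)
```

For example, a virtual object rendered stereoscopically at 0.5 m (vergence angle of about 7.3°) on a display whose fixed focal plane is at 2 m produces a 1.5 D conflict between where the eyes converge and where they must focus.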

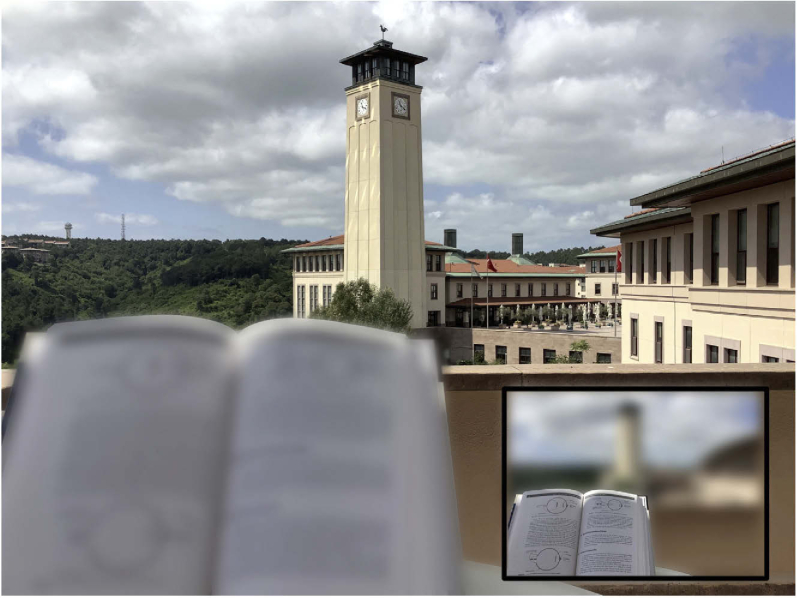

Fig. 5.

Image captured by a camera when the focus is adjusted to 250 and 500 mm, respectively. The experiment confirms that 3D images with correct focus cues are displayed without relay lenses at the retinal resolution. (Reference [37], Fig. 7; Creative Commons CC BY; published by Taylor & Francis.)

This is an even harder problem for people with presbyopia, the age-related inability to accommodate, because they need glasses or lenses to focus at different distances. Some HMDs (e.g., Lumus DK-32) allow users to wear prescription lenses by providing physical space between the eyes and the display, but this is not a practical solution for presbyopes. Several current HMD designs offer solutions to the VAC [72–74]. For AR displays in particular, there are promising solutions that use outward-looking depth sensing, hand-based user interaction, and binocular eye tracking along with an adaptive focus system for the real images [75]. Such an auto-focus AR system could both replace prescription glasses and serve as AR smart glasses at the same time. AR glasses must be comfortable and compact enough to be used in everyday life without affecting the wearer's social life, and proof-of-concept studies already exist for auto-focus AR glasses that dynamically support appropriate focus cues for both real and virtual content. Deformable membrane mirrors have been used as focus-tunable elements in several optical systems, along with hyperbolic half-silvered mirrors, for near-eye displays (NEDs) [76]. Although this design provides the accommodation cue and a wide field of view, it suffers from a large form factor and high latency due to eye tracking and the adaptation time of the membrane. Inspired by this study, Chakravarthula et al. developed a prototype of auto-focus augmented reality eyeglasses, using tunable-focus lenses for the real world and a varifocal beam combiner for the virtual content, that lets a wide range of users focus appropriately on both real and virtual content simultaneously [77]. Despite its limited field of view, their early-stage prototype demonstrates the capability to display both rendered and real content in sharp focus for users with and without refractive errors.

Another study [78] addresses the VAC with a novel design for switchable VR/AR near-eye displays. The authors used time multiplexing to combine the virtual image and the real environment visually, together with a tunable lens that adjusts the focus separately for the virtual display and the see-through real scene, thus providing correct accommodation in both VR and AR modes. Experiments verified that the tunable lenses inside the display device can compensate for the absence of prescription glasses, which become redundant for both near- and far-sighted users.

3.1. Presbyopia

Presbyopia is the age-related loss of the ability to accommodate to near distances, such as those within arm's reach. It is caused by the stiffening of the crystalline lens of the eye and affects every individual after the age of 50 [79]. The average accommodation amplitude of a healthy young eye is 12 D (corresponding to a nearest point of clear vision of about 8 cm), and it falls to less than 1 D (about 100 cm) in subjects over 50 years old.
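The relation between accommodation amplitude and the nearest point of clear vision, and the resulting need for near correction, can be worked through numerically. The sketch below assumes an emmetropic (or fully distance-corrected) eye, so the near point is simply the reciprocal of the amplitude. The reading-addition estimate uses the common clinical rule of thumb of keeping about half of the amplitude in reserve; both the rule and the 40 cm reading distance are assumptions, not values from the cited work.

```python
def near_point_cm(amplitude_d):
    """Nearest point of clear vision for an emmetropic (or corrected) eye, in cm."""
    return 100.0 / amplitude_d


def reading_add_d(amplitude_d, reading_distance_m=0.4, reserve_fraction=0.5):
    """Near addition so that only part of the amplitude is used at the reading distance.

    Assumption: the rule of thumb of keeping `reserve_fraction` of the
    amplitude in reserve for comfortable sustained reading.
    """
    demand = 1.0 / reading_distance_m
    return max(0.0, demand - (1 - reserve_fraction) * amplitude_d)
```

An amplitude of 12 D gives a near point of about 8.3 cm and no need for an addition, whereas 1 D gives a near point of 100 cm and, under this rule, a +2.0 D addition for reading at 40 cm.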

Presbyopia significantly reduces quality of life by increasing the risk of falling and by causing difficulties in reading traffic signs and other important information in daily life. Contact lenses and prescription glasses are widely available as a countermeasure; however, they can only shift the focus distance of the eye, producing sharp images at one particular distance while leaving other distances blurry. Adding or subtracting a fixed amount of optical power can compensate for myopia or hyperopia, which are caused by an eyeball that is too long or too short for its optical power, but a fixed lens cannot correct presbyopia. Multifocal, monovision, or progressive eyeglasses can reduce this problem, but at the cost of depth perception and focused field of view, as shown in Fig. 6 [80,81]. None of these can reproduce the accommodation of the crystalline lens unless a variable focus is introduced. Several studies address presbyopia using autofocusing lenses, but these are often activated manually. Hasan et al. [49,50] introduced a piezoelectric actuation system and developed lightweight auto-focusing eyeglasses with liquid-lens eyepieces whose optical power is adaptively calculated from the wearer's prescription and object distance measurements. Another adaptive approach to correcting presbyopia is an opto-fluidic engine with a variable fluid-filled lens, consisting of an ultra-thin membrane sandwiched between two liquids of different refractive index and actuated by a low-power, high-volume microfluidic pump. The system is integrated into an ophthalmic lens of any external shape, which provides the static refractive correction [51].
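The power calculation performed by auto-focusing eyeglasses can be sketched as follows: start from the distance prescription, add the focusing demand of the measured object distance, and subtract whatever residual accommodation the wearer still has. This is a simplified, hypothetical model (spherical prescription only, fixed residual accommodation) and not the control law of the cited devices; the ±5 D tuning range of the lens is likewise an assumed value.

```python
def required_lens_power(sphere_rx_d, object_distance_m, residual_accommodation_d=0.0,
                        lens_min_d=-5.0, lens_max_d=5.0):
    """Power (diopters) an adaptive eyepiece should apply for a measured object distance.

    Hypothetical model: distance prescription + focusing demand (1/distance)
    not covered by residual accommodation, clamped to the lens tuning range.
    """
    demand_d = 1.0 / object_distance_m
    add_d = max(0.0, demand_d - residual_accommodation_d)
    power_d = sphere_rx_d + add_d
    return min(max(power_d, lens_min_d), lens_max_d)
```

For a −1.0 D myope with 0.5 D of residual accommodation looking at a page 40 cm away, the model yields +1.0 D; for distant objects it falls back to the plain prescription, and the clamp shows how a limited tuning range caps near vision for strong prescriptions.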

Fig. 6.

Typical presbyopic vision. Inset figure shows the corrected vision with monovision lenses. (Illustrated in Adobe Photoshop.)

While these are significant steps toward alleviating the trade-off between focus and depth, they still require users to move their heads rather than just their eyes, because they lack eye-tracking modules. These methods also have slow refocusing responses and bulky designs; furthermore, it has not been clinically evaluated whether their refocusing properties can replace the accommodation behavior of a healthy eye.

Recently, Padmanaban et al. [52] developed an “autofocal” system that consists of electronically controlled liquid lenses, a wide-field-of-view stereo camera, and binocular eye tracking. The liquid lenses automatically adjust their focal power according to information from the eye-tracking and depth sensors, at the cost of high power consumption. Although this device outperforms traditional methods, the pupil-tracking unit requires a desktop PC, which prevents it from becoming a fully mobile solution.
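
Binocular eye tracking yields a fixation distance through simple geometry: for a target straight ahead, the two visual axes meet at d = (IPD/2) / tan(v/2), where v is the vergence angle. The sketch below assumes horizontal gaze angles in degrees, positive toward the wearer's right, and a 64 mm interpupillary distance; it illustrates the geometry only and is not the pipeline of [52].

```python
import math


def vergence_deg(left_yaw_deg: float, right_yaw_deg: float) -> float:
    """Vergence angle from horizontal gaze angles (positive toward the
    wearer's right). Converging eyes give a positive value."""
    return left_yaw_deg - right_yaw_deg


def fixation_distance_m(ipd_m: float, vergence: float) -> float:
    """Distance to a fixation point straight ahead: d = (IPD/2) / tan(v/2)."""
    if vergence <= 0.0:
        return math.inf  # parallel or diverging gaze: treat as optical infinity
    return (ipd_m / 2.0) / math.tan(math.radians(vergence) / 2.0)


half_angle = math.degrees(math.atan(0.032 / 0.5))  # each eye turned inward
v = vergence_deg(half_angle, -half_angle)
d = fixation_distance_m(0.064, v)
print(f"vergence {v:.2f} deg -> fixation {d:.2f} m -> lens demand {1 / d:.2f} D")
```

Because tan(v/2) approaches zero at large distances, a 0.1° vergence error barely matters at 0.5 m but shifts the estimate by roughly 9% at 3 m, which is why combining gaze with a depth sensor, as described above, is attractive.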

A definitive presbyopia solution that includes a near-eye display should be ergonomically and esthetically satisfying in order to be socially acceptable. Another compact design, a see-through near-eye display consisting of a plano-convex waveguide coated with two volume holograms, has been proposed [53]; the monocular prototype features a field of view of 61° and a virtual-image distortion of less than 3.90%. A fully portable device with optoelectronic lenses that provide defocus correction of up to 10 D was recently proposed [54]. Because it runs its pupil-tracking algorithm on a smartphone, this device is a promising candidate for future wearable presbyopia-correcting displays. Its relatively small field of view, bulky design, and limited battery capacity are current problems that future implementations may address.

3.2. Amblyopia

Amblyopia, also known as lazy eye, is a reduction of visual acuity in one or both eyes caused by poor optical input to the eye during visual development in childhood. When one eye performs better than the other, the brain favors the better eye for vision. With a prevalence of 5% in the general population, amblyopia mostly affects children and greatly reduces quality of life [82].

The conventional treatment for amblyopia is to cover the healthy eye with a patch, forcing the other eye to increase its physical and visual activity, under parental supervision. This procedure has major drawbacks: the child may not cooperate in wearing the patch and may feel socially isolated. VR-based video games can greatly increase patient compliance, which matters because most patients are children; however, they still require parental supervision, since the children lose awareness of their surroundings. Nowak et al. [55] addressed this issue with an AR approach implemented on Microsoft HoloLens, providing a spaceship-and-asteroid game for children. While the healthy eye sees the background image, the amblyopic eye sees the asteroids and must keep the ship from crashing; this provides stereo vision while preserving the view of the real-world surroundings. Although it is a promising first prototype, the device still needs improvements to the game interface and the eye-tracking implementation to support long-term use and effective feedback.
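
The dichoptic split described above can be sketched as routing each layer of a game frame to one eye. This assumes an additive see-through display, where black (0) pixels are transparent so both eyes still see the real world; the function name and the choice to blank the object areas for the fellow eye are hypothetical, not details reported in [55].

```python
import numpy as np


def dichoptic_frames(background, sprites, sprite_mask):
    """Split one game frame into per-eye images for an additive display.

    background  : 2D array shown to the fellow (healthy) eye
    sprites     : 2D array with the game objects (e.g. asteroids)
    sprite_mask : boolean 2D array, True where a game object is drawn
    Returns (amblyopic_eye_frame, fellow_eye_frame).
    """
    background = np.asarray(background, dtype=float)
    sprites = np.asarray(sprites, dtype=float)
    mask = np.asarray(sprite_mask, dtype=bool)
    amblyopic = np.where(mask, sprites, 0.0)  # only the task-critical objects
    fellow = np.where(mask, 0.0, background)  # background, object areas left blank
    return amblyopic, fellow


bg = np.full((4, 6), 0.3)
obj = np.zeros((4, 6))
obj[1:3, 2:4] = 1.0
amblyopic_eye, fellow_eye = dichoptic_frames(bg, obj, obj > 0)
print(amblyopic_eye.sum(), fellow_eye.sum())
```

Because the game objects appear only in the amblyopic eye's image, the task cannot be completed without using that eye.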

3.3. Strabismus (squint)

Strabismus is one of the most common ophthalmic diseases and can cause amblyopia, weak 3D perception, and even permanent vision loss if not diagnosed and treated in time. It is a condition in which a person cannot align both eyes properly, so that they point in different directions. Besides the visual impairment that comes with the disease, people with strabismus also suffer social and psychological consequences, including social alienation and disadvantages in employment and education. Early diagnosis and treatment can prevent these consequences. However, conventional diagnostic methods, including the Hirschberg test, the Maddox rod, and the cover test, are conducted manually by professionals, and children of uninformed or lower-income parents may never visit an ophthalmologist. In response, recent research targets easily accessible solutions that yield objective diagnoses. A current focus of translational research at the intersection of ophthalmology and engineering is the use of eye-tracking algorithms and machine learning to evaluate patients' eye movements and associated visual disorders. A few eye-tracking approaches to strabismus aim to replace the classical methods, but their accuracy remains unproven because they were tested on small numbers of subjects [83–85]. One recent study stands out by employing a convolutional neural network to classify strabismus [86]: Chen et al. proposed an objective, noninvasive, low-cost, and automatic diagnostic system based on an eye-tracking module, intended for examinations in large communities such as primary schools.

Near-eye display systems for the diagnosis and treatment of strabismus are mainly VR displays. The transition of stereoacuity tests from the real world to virtual environments started in the last decade and is still an emerging research topic [87–90]. VR headsets eliminate the need for head fixation and provide complete control over illumination and target visuals. In a recent study [57], Miao et al. developed an eye-tracking-based virtual reality device for automated measurement of gaze deviation with high accuracy and efficiency. Commercially, Vivid Vision [56] also offers automated VR vision treatment for strabismus and amblyopia.
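
Clinicians usually report strabismic deviation in prism diopters rather than degrees. Assuming an eye tracker provides the horizontal gaze angle of each eye while a distant target is fixated (so the visual axes should be parallel), a minimal conversion might look like this. The function names and tracker readings are hypothetical, and this is not the procedure of [57].

```python
import math


def prism_diopters(deviation_deg: float) -> float:
    """Convert an angular eye deviation to prism diopters.
    One prism diopter displaces a ray by 1 cm at 1 m: PD = 100 * tan(angle)."""
    return 100.0 * math.tan(math.radians(deviation_deg))


def deviation_deg(fixating_eye_yaw_deg: float, other_eye_yaw_deg: float) -> float:
    """Horizontal misalignment (degrees) while both eyes view a distant target,
    where the visual axes should be parallel."""
    return abs(fixating_eye_yaw_deg - other_eye_yaw_deg)


dev = deviation_deg(0.5, -5.2)  # hypothetical tracker readings
print(f"{dev:.1f} deg = {prism_diopters(dev):.1f} PD")
```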

AR-based display devices hold potential for treatment, education, and surgical assistance. Because strabismus involves misaligned three-dimensional eye movements, the 3D spatial properties of AR offer a clear advantage and may lead to better understanding than 2D-based methods. A recent study uses AR to educate patients and increase their awareness of medical examinations and eye surgeries [91].

3.4. Refractive errors

In the last decade, numerous AR-HMDs have been introduced, both commercially and in research. Although these instruments address specific visual impairments, no single device has been widely adopted by consumers, because none has yet achieved a small form factor, high resolution, wide field of view, large eye box, and variable focus all at once. Because of the diversity of human anatomy, even a small difference in interpupillary distance or head shape requires a design change in HMDs [92], and many users wear prescription glasses or contact lenses to correct hyperopia or myopia. The importance of prescription glasses, especially for children, is discussed in [93]; prescribing glasses for children must take into account age, accommodative tendency, and risk of amblyopia [94]. A customizable, prescription-friendly HMD that meets the necessary optical specifications is therefore still a major requirement for smart glasses to become part of daily life, as smartphones did in the last decade. A recent study presents a promising fully customized, prescription-embedded AR display that combines user-specific features, i.e., the individual's prescription, interpupillary distance, and head structure, with the esthetics and ergonomics of the eyeglass frame [58]. Its optical architecture uses the prescription lens as a waveguide for the AR display to cover myopia, hyperopia, astigmatism, and presbyopia. The mechanical structure is designed using 3D face scanning so that it fits the user precisely. The display provides 1 diopter of vision correction, 23 cycles per degree angular resolution at the center, a 6 mm × 4 mm eye box, a 40° × 20° virtual image, and varifocal capability between 0.33 D and 2 D. With 70% transparency, this lightweight device also offers a good view of the real world.
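
The quoted specifications of [58] can be put in perspective with two back-of-the-envelope conversions. Assuming (for illustration only) a uniform angular pixel pitch across the field, showing 23 cycles per degree needs at least 2 pixels per cycle (Nyquist), and a vergence in diopters converts to a focal distance as 1/D metres:

```python
import math


def pixels_for_resolution(cycles_per_degree: float, fov_deg: float) -> int:
    """Pixels needed across fov_deg to show cycles_per_degree at the
    Nyquist limit of 2 pixels per cycle, assuming uniform angular pitch."""
    return math.ceil(2.0 * cycles_per_degree * fov_deg)


def diopters_to_metres(power_d: float) -> float:
    """Focal distance (m) corresponding to a vergence in diopters."""
    return 1.0 / power_d


h = pixels_for_resolution(23, 40)
v = pixels_for_resolution(23, 20)
print(f"40 x 20 deg at 23 cpd needs about {h} x {v} pixels")
print(f"varifocal range: {diopters_to_metres(2.0):.2f} m to {diopters_to_metres(0.33):.2f} m")
```

This gives roughly 1840 × 920 pixels if 23 cpd held across the whole field (the paper states it only at the center), and a focal range from about 3 m down to 0.5 m.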

4. AR in lens and corneal disorders

4.1. Cataracts

4.1.1. Definition and treatment

Cataract is the world's most common cause of preventable blindness and is alone responsible for the complete loss of vision of 65 million people [1]. It is caused by clouding of the crystalline lens. Cataracts usually progress gradually and painlessly, with a continuous degradation in vision, so they often go unnoticed in the earlier phases. Later, symptoms start to appear, including blurry vision, glare and halos around lights, poor night vision, and in some cases double vision. There are several types of cataract, including age-related, traumatic, and metabolic [95]. None of these can be corrected with prescription glasses, and all eventually lead to blindness if untreated. The only definitive treatment is surgery.

During cataract surgery, the patient's cataractous lens inside the lens capsule is replaced with an artificial intraocular lens (IOL). Trifocal IOLs are an important innovation in this area, as they provide good vision at three focal distances simultaneously and eliminate the need for reading glasses. After surgery, doctors perform autorefraction and visual acuity tests to assess the success of the operation and the optical performance of the IOL. However, because trifocal IOLs have diffractive surface profiles that vary across the pupil, they cannot be measured with current autorefractors or the other measurement devices used in everyday ophthalmic practice.

When the patient does not have the expected ‘good’ vision after surgery, the source of the problem cannot easily be determined. Ophthalmologists must rely on subjective evaluations, which can yield surprising and often confusing results. A system that provides objective measurements of the patient's post-operative condition after IOL implantation would therefore be valuable, leading to a better understanding of the problem and hence of its treatment. Understanding how patients experience cataracts is also important for diagnosis. Krösl et al. recently developed an AR-based cataract simulator, supported by eye tracking, to give medical personnel insight into the effects of cataracts [96]. They validated their simulation method with cataract patients in the interval between the surgeries on their two affected eyes.

Although cataract surgery is proven to be successful, non-invasive techniques are appealing, especially in areas with limited access to healthcare. More than four decades ago, Miller et al. [97] conducted an experiment in which the phase map of an extracted lens was retrieved using holography for subsequent phase conjugation. Since then, different methods have been used to compensate for the effects of cataracts, such as wavefront shaping, in which light is focused by locally manipulating the wavefront to produce constructive interference at a specific position in the diffraction pattern. In a recent study [59], an optical setup was developed as a noninvasive technique that uses wavefront shaping (WS) with corrector phase maps of different spatial resolutions to partially correct the effects of cataracts. In this feedback-based adaptive optics approach, realistic effects of different cataract grades were simulated and partially corrected at the same time. Although the spatial resolution of the images increased significantly, the contrast was still insufficient. The article also proposes a solution: incorporating the WS corrector into an augmented reality setup that virtually enhances key features of the surroundings would increase the perceived information about the environment. With such a system, the environment would be recorded, digitally processed, and projected onto the retina, giving the user a much more detailed view. A physical simulation of the proposed system is illustrated in Fig. 7.
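
The feedback loop behind wavefront shaping can be sketched with the stepwise sequential algorithm common in the wavefront-shaping literature. This is a generic illustration, not the procedure or phase-map resolutions used in [59]: the cataractous lens is modelled (an assumption) as a random complex transmission from each SLM segment to a single retinal spot, and the "measurement" is simply the computed intensity there.

```python
import numpy as np


def target_intensity(transmission: np.ndarray, phases: np.ndarray) -> float:
    """Intensity at one target spot behind a scattering layer, modelled as the
    coherent sum of fields from each SLM segment."""
    return float(np.abs(np.sum(transmission * np.exp(1j * phases))) ** 2)


def stepwise_optimize(transmission: np.ndarray, n_steps: int = 8) -> np.ndarray:
    """For each segment in turn, try n_steps phases and keep the one giving the
    brightest target; the intensity is the only feedback used."""
    phases = np.zeros(transmission.size)
    candidates = np.linspace(0.0, 2.0 * np.pi, n_steps, endpoint=False)
    for k in range(transmission.size):
        scores = []
        for phi in candidates:
            trial = phases.copy()
            trial[k] = phi
            scores.append(target_intensity(transmission, trial))
        phases[k] = candidates[int(np.argmax(scores))]
    return phases


rng = np.random.default_rng(0)
n_segments = 64
t = (rng.normal(size=n_segments) + 1j * rng.normal(size=n_segments)) / np.sqrt(2)
before = target_intensity(t, np.zeros(n_segments))
after = target_intensity(t, stepwise_optimize(t))
print(f"enhancement: {after / before:.1f}x")
```

Since phase 0 is always among the candidates, each step can only keep or raise the target intensity; the ideal phase-conjugate limit is (Σ|t_k|)².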

Fig. 7.

Simulation of a scene seen through: (a) clear optics, (b) the advanced cataractous effects, and (c) their partial correction by WS. (d) Proposed simplification leading to (e) the increased-contrast image. (Reprinted with permission from [59], The Optical Society.)

4.1.2. IOL simulator

With the advent of different kinds of IOLs, cataract patients and ophthalmologists have a wider range of choices before surgery. Conventional IOL designs are spherical and have inherent aberrations; some aspheric designs partially correct spherical aberration [98], so choosing the most suitable IOL for each patient remains a subjective decision by the doctor. Patients and doctors naturally want to know which IOL is best. To that end, simulators are designed to help patients better understand their vision, conditions, and possible treatment options, and to offer an in-depth guided tour of intraocular lens options [99–102]. Although choosing the optimal IOL design greatly improves the patient's post-operative vision, the selection criteria depend mainly on the manufacturer's commercial information and on clinical experience. A quantitative method for pre-operative simulation is therefore needed. Only a limited number of IOL simulators are commercially available, and most existing devices are not portable.

Even before AR technology matured enough to play a role in diagnosing eye diseases, vision simulators were in demand to simulate post-operative vision before surgery, guiding both ophthalmologists and IOL manufacturers. Evaluating the performance of an IOL design before fabrication saves time and resources. Adaptive optics technology is a practical approach for clinical testing of IOLs [44,103]. Beyond minimizing refractive errors and spherical and other aberrations, AO simulators must take further physical conditions into account: the centration and tilt of the IOL can greatly affect visual quality even when a good match has been determined.

One important drawback of IOL simulators is the mismatch between pre-operative simulation and post-operative vision caused by the presence of the faulty crystalline lens during simulation. The retinal image during simulation is affected by the cornea, the crystalline lens, and the induced profile of the simulated IOL; however, the crystalline lens is removed during surgery, so its effects on the simulation will be absent from the patient's post-operative vision. To eliminate this effect, the lens aberrations must be removed from the simulated profile. Whole-eye (ocular) aberrations can be measured directly with a wavefront aberrometer, whereas corneal aberrations are obtained from corneal topography; subtracting the corneal aberrations from the whole-eye profile then yields the lens aberrations. Although this gives more accurate results, it adds another bulky and complex instrument to the simulation and lengthens the overall process.
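
In coefficient terms, this subtraction is a term-by-term difference of Zernike expansions. A minimal sketch with hypothetical coefficient values; it assumes both measurements share the same pupil size, centre, and normalisation, and it ignores wavefront propagation between the corneal and lens planes, which a real analysis may need to handle:

```python
def lens_aberrations(ocular: dict, corneal: dict) -> dict:
    """Estimate internal (crystalline lens) Zernike coefficients, in micrometres,
    as whole-eye minus corneal. Both inputs must use the same pupil diameter and
    the same (n, m) indexing convention; missing terms count as zero."""
    keys = sorted(set(ocular) | set(corneal))
    return {k: ocular.get(k, 0.0) - corneal.get(k, 0.0) for k in keys}


def rms_um(coeffs: dict) -> float:
    """RMS wavefront error for orthonormal (e.g. OSA/ANSI-normalised) Zernike terms."""
    return sum(c * c for c in coeffs.values()) ** 0.5


# Hypothetical coefficients for a 6 mm pupil: (n, m) -> micrometres
ocular = {(2, 0): 0.80, (2, 2): -0.15, (4, 0): 0.10}
corneal = {(2, 0): 0.35, (2, 2): -0.20, (4, 0): 0.25}
lens = lens_aberrations(ocular, corneal)
print(lens, f"lens RMS = {rms_um(lens):.3f} um")
```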

To evaluate whether a corneal topographer is needed in IOL simulations, Villegas et al. [104] measured the impact of lens aberrations on the visual simulation of monofocal and multifocal diffractive profiles by measuring high- and low-contrast visual acuity in phakic eyes for different pupil sizes. They reported that the effect of lens aberrations on visual simulation is imperceptible for a small pupil diameter of 3 mm.

An AO-based IOL simulator consisting of an SLM and a tunable lens for pre-operative simulation of multifocal correction shows good agreement between pre-operative and post-operative visual acuity results [60]. The key idea is to form superimposed retinal images with the same position and magnification but different focal planes by scanning multiple foci with the optotunable lens.
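
The time-multiplexing idea can be sketched as a drive schedule for the tunable lens. This is an assumption-laden illustration, not the control scheme of [60]: the foci, their light-energy weights, and the 20 ms period are hypothetical, and the sketch relies on the cycle being fast enough for the eye to integrate the foci into one superimposed image.

```python
import bisect


def focus_schedule(powers_d, weights, period_ms, dt_ms):
    """Piecewise-constant drive for a focus-tunable lens: within each period
    the lens dwells at each power for a time proportional to its weight.
    Returns the lens power (D) for each time sample in one period."""
    if len(powers_d) != len(weights) or not powers_d:
        raise ValueError("need one weight per power")
    total = float(sum(weights))
    cumulative, running = [], 0.0
    for w in weights:
        running += w / total
        cumulative.append(running)
    n_samples = round(period_ms / dt_ms)
    schedule = []
    for i in range(n_samples):
        frac = (i + 0.5) / n_samples  # centre of the i-th sample within the period
        j = min(bisect.bisect_right(cumulative, frac), len(powers_d) - 1)
        schedule.append(powers_d[j])
    return schedule


# Hypothetical far / intermediate / near foci with a 50/25/25 energy split
sched = focus_schedule([0.0, 1.5, 3.0], [0.5, 0.25, 0.25], period_ms=20, dt_ms=1)
print(sched)
```

Changing the weights shifts how much light goes to each focal plane, which mirrors the energy split a multifocal IOL design distributes across its foci.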

4.1.3. AR-based preoperative simulation

Monitoring the refractive error of the eye is a well-known and necessary method for investigating a patient's visual acuity. A phoropter is a standard instrument for inspecting refractive error: different lenses are placed in front of the patient's eye one at a time while the patient looks at an eye chart some distance away. This process is time-consuming and requires constant patient feedback, which is often unreliable, since the patient is expected to evaluate the performance of every lens, remember it, and make an accurate selection. This is even more confusing for children, the elderly, and people with disabilities or communication problems. A more user-friendly, compact instrument requiring less patient cooperation is therefore in demand, especially in developing countries, where access to screening devices is low. Adaptive optics and wavefront detectors, as in autorefractors, provide solutions up to a point; however, their ability to verify measurements simultaneously by trying different lenses is limited. Recently, a study combining fluidic lenses with holographic optical elements (HOEs) claimed to overcome this issue thanks to the compact form factor and unique diffractive properties of HOEs [105]. A main advantage of HOEs is their wavelength selectivity: they act on specific wavelengths while remaining transparent to others, which lets them steer infrared light from the pupil toward the wavefront detector without blocking the patient's line of sight in the visible range. The authors presented a hand-held refractive error measurement system with a sphero-cylindrical correction range of −10 to +10 diopters in 0.1 diopter increments.
The fluidic lenses developed in this study could also be used in applications that require high-speed ophthalmic correction, such as virtual and augmented reality systems.
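
A sphero-cylindrical correction has a different power along each meridian, given by the standard relation P(θ) = S + C·sin²(θ − axis). The sketch below evaluates it for a hypothetical prescription and counts the discrete settings in a −10 to +10 D range with 0.1 D steps; the function names are illustrative.

```python
import math


def meridional_power(sphere_d: float, cylinder_d: float, axis_deg: float,
                     meridian_deg: float) -> float:
    """Power (D) of a sphero-cylindrical correction along a given meridian:
    P = S + C * sin^2(meridian - axis)."""
    return sphere_d + cylinder_d * math.sin(math.radians(meridian_deg - axis_deg)) ** 2


def setting_count(min_d: float = -10.0, max_d: float = 10.0, step_d: float = 0.1) -> int:
    """Number of discrete power settings in a range with a fixed increment."""
    return round((max_d - min_d) / step_d) + 1


rx = (-2.00, -1.00, 180.0)  # hypothetical prescription: sphere, cylinder, axis
for meridian in (180, 135, 90):
    print(meridian, round(meridional_power(*rx, meridian), 2))
print(setting_count())  # settings per adjustable power element
```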

Apart from measuring devices, there are a few cases in which augmented reality is implemented in contact lenses for surgical training and simulation. Innovega Inc. [106] developed a compact, high-resolution display with a wide field of view of 120°, which collimates the incident light into the pupil via a focusing lens located at the center of the contact lens. The eye's own optics then form the image on the retina naturally. Because the optical components are small, they do not block the user's real-world view. Another commercial contact-lens display that adopts AR technology is Mojo Vision [107]. Still at the prototype stage, Mojo Lens offers a high-resolution display with fast computing, integrating a micro-LED screen, a microprocessor, wireless communication, and several other sensors.

Driven by advances in contact lens displays, Chen et al. presented a foveated near-eye display (NED) design featuring an array of collimated LEDs and an AR contact lens [108]. Despite their advantages, such as freedom of head and eye motion, low weight, and independence from head-related human factors (i.e., interpupillary distance and head shape), contact lens displays are held back by significant limitations, including limited space for a built-in battery, electromagnetic induction from wireless charging, and display safety.

Despite recent developments in both adaptive optics and augmented reality, no HMD has yet been developed that provides pre-operative simulation using AR technology. Our group is building a wearable cataract simulator that identifies the opaque regions of the crystalline lens and sends virtual images through its healthy regions using pupil tracking and computer-generated holography, as shown in Fig. 8. This will allow patients to experience their post-operative vision and help them decide on the most suitable IOL.

Fig. 8.

(a) Illustration of the proposed vision simulator instrument built by our research group. (b) Holographic beam shaping and aberration control by targeting the healthy regions of the pupil.

Pre-operative simulators and diagnostic devices need not prioritize cosmetics as much as assistive tools meant to be worn daily. For assistive tools, which are worn in social environments, weight and battery life take precedence over field of view and resolution. Simulators and diagnostic devices therefore have an advantage: additional real-world or eye-tracking cameras and motion sensors do not become an intimidating burden.

4.2. Corneal disorders (keratoconus)

Keratoconus is a bilateral, progressive, noninflammatory corneal ectasia that gives the cornea an asymmetric conical shape. It affects around 1 in 2000 people in the general population. It usually begins at puberty and progresses unnoticed until the fourth decade. The initial phases usually produce no symptoms, although corneal thinning may cause irregular astigmatism, myopia, and blurred vision. Because the symptoms vary, the disease is typically diagnosed by an ophthalmologist with corneal topography after the patient complains, by which time it is usually past the early stages. In advanced phases significant vision loss occurs, although keratoconus does not cause total blindness [109,110].

Keratoconus is most commonly an isolated disorder, although several reports describe associations with Down syndrome, Leber's congenital amaurosis, and mitral valve prolapse. The differential diagnosis of keratoconus includes keratoglobus, pellucid marginal degeneration, and Terrien's marginal degeneration. Contact lenses are the most common treatment modality; when they fail, corneal transplantation is the best and most successful surgical option. Because keratoconus is associated with frequent changes in refractive prescription and can cause serious vision impairment, it typically requires treatment. It severely affects quality of life: good visual acuity does not guarantee good quality of vision or good quality of life for people with keratoconus [111]. Early diagnosis is therefore very important.

Conventional methods for detecting keratoconus include keratometry, retinoscopy, corneal topography, and corneal tomography. Because not all ophthalmology clinics have these instruments, more accessible devices are needed for a large part of the world population. A recent study [112] proposed a keratoconus detection method using only a smartphone and a 3D-printed attachment that enables the smartphone to capture 180° panoramic images of the eye. With its novel image-processing algorithm, this method detects different stages of keratoconus with an average accuracy of 89%.

Another approach to keratoconus treatment is to use a personalized IOL. Today, IOLs are routinely used in cataract surgery to replace the crystalline lens and eliminate the vision loss caused by the cataract. Corneal transplantation may be an attractive solution for corneal diseases such as keratoconus, but an alternative that avoids this surgery would be very useful, since transplantation can result in substantial refractive errors, a long healing process, and complications such as graft rejection [113]. A personalized IOL combined with corneal cross-linking could compensate for advanced refractive errors. Wadbro et al. proposed a computational procedure, based on 3D ray tracing and a shape description of the posterior IOL surface, for optimizing personalized IOLs to compensate for the refractive errors of the cornea [114,115].
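
Ray tracing of this kind rests on repeated refraction at each optical surface. The sketch below shows only that building block, Snell's law in vector form; it is not Wadbro et al.'s optimization, and 1.376, the commonly used corneal refractive index, is taken here as an assumption.

```python
import numpy as np


def refract(direction, normal, n1: float, n2: float):
    """Refract a ray at a surface using the vector form of Snell's law.
    direction: incident ray direction; normal: surface normal (either sign).
    Returns the unit refracted direction, or None for total internal reflection."""
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    cos_i = -float(np.dot(n, d))
    if cos_i < 0.0:  # make the normal face the incoming ray
        n, cos_i = -n, -cos_i
    eta = n1 / n2
    k = 1.0 - eta ** 2 * (1.0 - cos_i ** 2)
    if k < 0.0:
        return None
    return eta * d + (eta * cos_i - np.sqrt(k)) * n


# A ray hitting a flat patch of cornea (air -> n = 1.376) at 30 degrees
theta = np.radians(30.0)
t = refract([np.sin(theta), 0.0, np.cos(theta)], [0.0, 0.0, 1.0], 1.0, 1.376)
print(t, np.degrees(np.arcsin(t[0])))
```

An IOL optimizer would call such a step at every surface for many rays and adjust the posterior surface shape until the rays converge on the fovea.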

Apart from smartphone applications and personalized IOL designs, optical see-through displays that employ augmented reality offer promising ways to address the refractive errors of the cornea. Refocusable HMDs have been studied previously [116] but suffer from increased diffraction, contrast and color issues, and bulkiness. Rompapas et al. addressed the need for computer graphics that are always in focus and that create realistic depth-of-field effects on displays [61]. They proposed EyeAR, a tabletop prototype consisting of an OLED display and mirrors that generates refocusable AR content by continuously measuring the individual's focus distance and pupil size, thereby matching the depth of field of the eye.
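
Why both pupil size and focus distance are needed can be seen from the small-angle, geometric-optics estimate of defocus blur: blur angle (rad) ≈ pupil diameter (m) × defocus (D). The sketch below is an illustrative approximation that ignores diffraction and ocular aberrations; it is not the rendering model of EyeAR [61].

```python
import math


def defocus_blur_deg(pupil_mm: float, focus_distance_m: float,
                     object_distance_m: float) -> float:
    """Angular diameter (degrees) of the geometric blur circle for an object
    away from the accommodated distance: blur (rad) ~= pupil (m) * defocus (D)."""
    defocus_d = abs(1.0 / focus_distance_m - 1.0 / object_distance_m)
    return math.degrees(pupil_mm / 1000.0 * defocus_d)


# Eye focused at 2 m, virtual object rendered at 0.5 m, 4 mm pupil
blur = defocus_blur_deg(4.0, 2.0, 0.5)
print(f"{blur:.3f} deg = {blur * 60:.1f} arcmin of blur")
```

Rendering each virtual object with a blur of this size, recomputed whenever the measured focus distance or pupil size changes, is one way to approximate the eye's natural depth of field.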

5. AR in retinal-optic nerve disorders

5.1. Age-related macular degeneration (AMD)

Age-related macular degeneration (AMD) is a progressive disease that causes loss of central vision, a severe visual disability associated with low quality of life, increased depression, and a reduced ability to handle daily activities. Besides degrading visual acuity and contrast sensitivity, AMD affects individuals' economic and social lives through the risk of falling and the costs of vision aids, depression treatment, and assistance with daily activities [117–119]. Since 8.7% of the world population suffers from AMD, technological advances in its diagnosis and treatment are of great importance [120]. Several approaches address the loss of central vision caused by retinal degeneration, including gene therapy [121], cell transplantation [122], optogenetics [123], and electronic implants [124,125]. These invasive methods have various limitations and difficulties and are not available worldwide because of technological and economic constraints in some countries. HMDs therefore offer major advantages in simplicity and accessibility [126–128].

Hwang and Peli developed an AR edge-enhancement application on Google Glass [62] that displays enhanced edge information over the user's real-world view, providing high-contrast central vision for people with macular degeneration. The environment is captured by a camera, and object edges are highlighted as cartoon-like outlines and overlaid onto the real-world view, so that the user sees enhanced contrast at the locations of edges in the real world. This was the first such implementation on a see-through HMD; edge enhancement had previously been shown to improve performance in search tasks performed on a computer screen [129,130] and to be preferred by AMD patients when watching TV or viewing images [131,132]. Despite the relatively short battery life of Google Glass and the varying level of contrast enhancement across the scene, the device has a very reasonable cost, a cosmetically acceptable and socially desirable format, and flexibility that supports further innovation.
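
Edge enhancement of this kind can be sketched with a standard Sobel operator and a threshold. The Sobel filter is a stand-in chosen for illustration (the passage does not state which edge detector [62] uses), and the threshold value is hypothetical. On an additive see-through display, zero pixels are transparent, so only the detected edges are drawn over the real world.

```python
import numpy as np


def sobel_magnitude(img):
    """Gradient magnitude of a grayscale image with 3x3 Sobel kernels
    (edge-replicated borders)."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def edge_overlay(img, threshold):
    """Binary edge layer for a see-through display: 1 = bright edge pixel,
    0 = transparent."""
    return (sobel_magnitude(img) > threshold).astype(np.uint8)


step = np.zeros((5, 6))
step[:, 3:] = 1.0  # a dark/bright boundary between columns 2 and 3
print(edge_overlay(step, threshold=1.0))
```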

Recent studies address specific daily tasks relevant to most professions, such as reading and using a keyboard. Moshatel et al. [63] developed a dynamic text presentation on see-through smart glasses (Epson Moverio BT-200) using biomimetic scrolling, which generates text movements that mimic the eye movements of reading. Given a substantial increase in reading speed and its preference among AMD patients, this approach could substitute for reading glasses in patients with later stages of the disease. A magnification-based vision enhancement method for AMD patients has also been proposed recently [133]; it magnifies the unimpaired peripheral vision of the patient to assist them in using keyboard buttons. Combined with hand and eye trackers, this video see-through display captures the environment, magnifies the video stream, and projects it in the HTC Vive Pro display within a square window placed at the fixation point. This configuration differs from similar studies [134,135] by integrating eye trackers and by focusing specifically on AMD rather than on low vision in general.
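
The magnification window can be sketched as cropping the camera frame around the tracked fixation point and enlarging it. The function name, integer zoom factor, and nearest-neighbour resampling are assumptions for illustration; a real headset would resample with interpolation and compose the window into the HMD image.

```python
import numpy as np


def magnify_at_fixation(frame, gaze_rc, window: int, factor: int):
    """Crop a window x window patch around the gaze point (row, col), clamped to
    the frame, and enlarge it by an integer factor (nearest-neighbour)."""
    frame = np.asarray(frame)
    h, w = frame.shape[:2]
    if window > min(h, w):
        raise ValueError("window larger than frame")
    r0 = int(np.clip(gaze_rc[0] - window // 2, 0, h - window))
    c0 = int(np.clip(gaze_rc[1] - window // 2, 0, w - window))
    patch = frame[r0:r0 + window, c0:c0 + window]
    return np.repeat(np.repeat(patch, factor, axis=0), factor, axis=1)


frame = np.arange(100).reshape(10, 10)
zoom = magnify_at_fixation(frame, gaze_rc=(5, 5), window=4, factor=2)
print(zoom.shape)
```

Clamping keeps the window inside the frame when the user looks toward the edge of the camera view.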

Besides corrective display devices, simulating visual impairment in healthy people is important for a better understanding of the disease. Such simulators can also be used pre-operatively to improve and optimize surgical settings, for example for photovoltaic subretinal prostheses that restore central vision [125]. Recently, Ho et al. [64] had healthy people perform complex visual tasks while wearing AR glasses (ODG R-7, developed by Osterhout Design Group) that simulate symptoms similar to those of AMD, i.e., reduced visual acuity, contrast, and field of view. They evaluated the participants' letter acuity, reading speed, and face recognition. Subjects demonstrated letter acuity slightly exceeding the sampling limit and high efficacy in face recognition. These results indicate that photovoltaic subretinal implants with 100 µm pixels, currently available for clinical testing, may be helpful for reading and face recognition in patients who have lost central vision due to retinal degeneration.
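
A rough sampling-limited acuity for a pixelated implant follows from the retinal magnification of the eye. The sketch assumes about 288 µm of retina per degree of visual angle for a typical adult eye and takes the smallest resolvable letter detail to be one pixel (a 20/20 letter has 1 arcmin detail); it is an illustrative estimate, not the analysis of [64].

```python
import math

UM_PER_DEG = 288.0  # assumed retinal magnification of a typical adult eye (um per degree)


def sampling_limited_acuity(pixel_pitch_um: float, um_per_deg: float = UM_PER_DEG):
    """Acuity if the smallest resolvable letter detail equals one pixel.
    Returns (MAR in arcmin, Snellen denominator x for 20/x, logMAR)."""
    mar_arcmin = pixel_pitch_um / um_per_deg * 60.0
    return mar_arcmin, 20.0 * mar_arcmin, math.log10(mar_arcmin)


mar, snellen_x, logmar = sampling_limited_acuity(100.0)
print(f"MAR {mar:.1f} arcmin ~ 20/{snellen_x:.0f}, logMAR {logmar:.2f}")
```

Under these assumptions, 100 µm pixels correspond to roughly 20/420 Snellen acuity.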

5.2. Glaucoma

In contrast to the central vision loss of AMD, glaucoma patients suffer from loss of peripheral vision. Visual content in these regions is often not blocked out completely but is degraded in various ways, becoming blurry, faded, jumbled, or distorted, which creates “tunnel vision” for the individual [136]. Despite being one of the major causes of irreversible blindness worldwide, glaucoma's symptoms are often misunderstood [137,138]. Loss of peripheral vision increases the risk of falling, makes it difficult to climb or descend stairs and to locate objects, and affects mobility, resulting in anxiety, depression, and physical inactivity [139]. Furthermore, “tunnel vision” makes social interactions difficult, for example noticing speakers in a group or recognizing familiar faces on the street. The psychological impact of glaucoma on the individual may be severe. Healthy people find it difficult to understand the effects of visual impairments, and the symptoms of glaucoma are not always obvious even to ophthalmologists. Vision simulators therefore play a critical role in a systematic approach to the diagnosis and treatment of glaucoma.

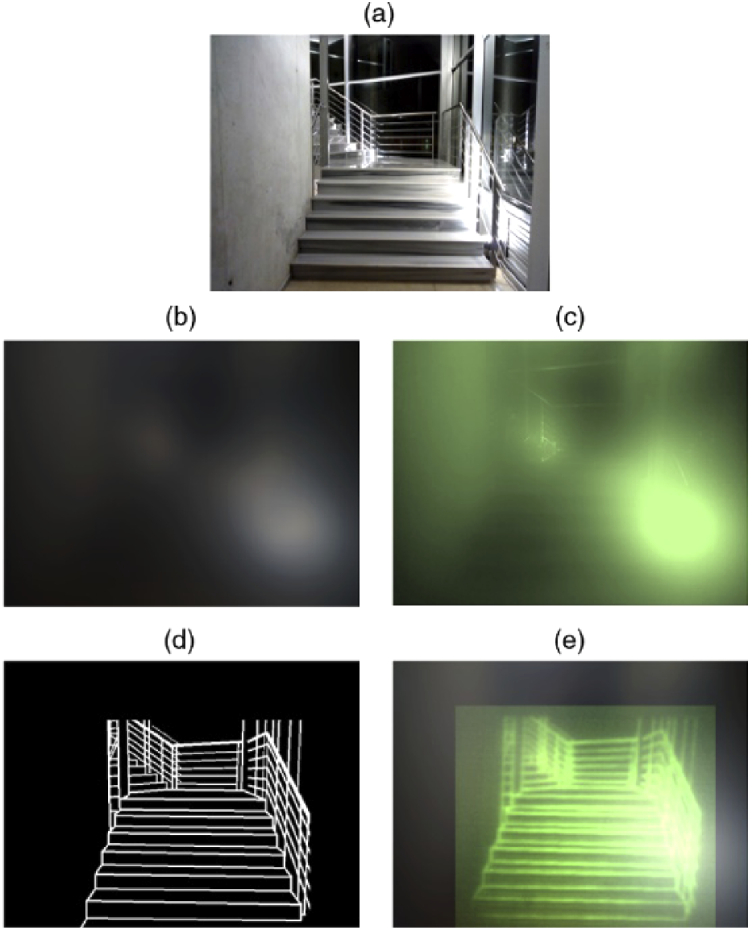

Augmented reality has been used to address the effects of glaucoma for almost two decades and has been employed in several HMDs that target the patient's damaged peripheral vision [65,140–145]. Vargas-Martin et al. [146] proposed an augmented-view device that captures information from the patient's lost peripheral field and displays a minified version of this region as white edge lines within the well-functioning tunnel vision, as shown in Fig. 9. Unlike magnification tools, which limit the field of view, and minification tools, which compromise resolution, this “vision multiplexing” method overcomes the trade-off between field of view and resolution. The device's effect on patients' visual search performance is evaluated in [147], which reports that the augmented-view device improved visual search in some patients with tunnel vision. The method was further developed in a subsequent study [65] with evaluations of visual search, collision detection, and night vision. Subjects with tunnel vision rated the device's cosmetics and ergonomics positively, although the edge images are not yet bright enough.
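
The field-of-view versus resolution trade-off that vision multiplexing sidesteps can be quantified with a small-angle estimate. The display field, minification factor, and acuity below are hypothetical values, not the specifications of [146] or [65]: minifying by a factor m shows m times more field but makes every detail m times smaller, which is why the overlay carries only coarse edges while the see-through view keeps full resolution.

```python
def multiplexed_view(display_fov_deg: float, minification: float,
                     natural_mar_arcmin: float):
    """Small-angle estimate for a minified overlay: the field it represents
    and the coarsest world detail (arcmin) it can still convey."""
    represented_fov = display_fov_deg * minification
    world_mar = natural_mar_arcmin * minification  # details shrink by the same factor
    return represented_fov, world_mar


fov, detail = multiplexed_view(display_fov_deg=25.0, minification=4.0, natural_mar_arcmin=1.0)
print(f"overlay covers ~{fov:.0f} deg; resolvable detail ~{detail:.0f} arcmin")
```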

Fig. 9.

Simulation of a street-crossing scene as it might appear to a patient with tunnel vision using an augmented-vision visual field expander. The faded area in the wide-scene picture (upper panel) represents the peripheral field that patients with tunnel vision typically cannot see when looking straight ahead. The photograph in the lower panel provides a magnified representation of the display area and the view seen through the display. The natural (see-through) view is observed in full resolution, while the superimposed minified edge images provide a wide field of view, enabling detection of all the pedestrians (not just the lady in the see-through view), which could be potential collisions not visible without the display. (Reference [65], Fig. 1; with permission of John Wiley and Sons.)

Younis et al. [66] proposed another real-time method that detects moving objects in the surroundings that pose a threat and notifies users with a warning message presented in their intact central vision. The algorithm classifies potential obstacles and hazards by danger level and notifies the user only of the most dangerous threat, minimizing the number of notifications on the screen. The Epson Moverio BT-200 was chosen as the display device, combined with a scene camera and a head motion sensor. Despite relatively slow performance in the initial experiments, this hazard detection and tracking system shows promising potential for people with peripheral vision loss, with a 93% success rate for moving-object detection and 79% tracking accuracy.
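Notifying the user only about the most dangerous object can be sketched as a ranking step. The sketch below assumes that an upstream tracker already supplies each object's distance and closing speed, and it uses time to collision as a stand-in danger score; the `TrackedObject` type, the scoring rule, and the 5 s cut-off are hypothetical and are not the classification used by Younis et al.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TrackedObject:
    label: str
    distance_m: float         # current distance from the user in meters
    closing_speed_mps: float  # positive when the object is approaching the user

def time_to_collision(obj: TrackedObject) -> float:
    """Seconds until contact at constant closing speed; infinite if not approaching."""
    if obj.closing_speed_mps <= 0:
        return float("inf")
    return obj.distance_m / obj.closing_speed_mps

def most_dangerous(objects: list[TrackedObject],
                   max_ttc_s: float = 5.0) -> Optional[TrackedObject]:
    """Return the single object with the shortest time to collision below max_ttc_s, or None."""
    threats = [o for o in objects if time_to_collision(o) < max_ttc_s]
    return min(threats, key=time_to_collision, default=None)
```

Returning a single object (or nothing) per frame mirrors the goal of keeping on-screen warnings to a minimum.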

In a recent study [67], Jones et al. reported two methods (an object search task with a VR headset and a visual mobility task with an AR headset) for simulating how glaucoma patients see the real world. Such simulators are valuable for educating and informing the public, raising awareness, training caregivers, and overcoming psychological difficulties. It is reported to be the first functioning system to combine real-time, gaze-contingent image manipulation, clinical data, and stereoscopic presentation in an HMD. Although the results are consistent with the clinical data and the simulator is a good first approximation of glaucomatous vision loss, it does not yet reflect some aspects reported by patients, such as the impact of ambient light, spatial distortions, and filling-in effects.
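Gaze-contingent simulation re-centers the field defect on the current gaze position in every frame. The following is a minimal sketch, assuming a radially symmetric tunnel-vision profile in place of clinical visual field data and a uniform gray fill instead of the filling-in that patients report; `simulate_tunnel_vision` and its parameters are hypothetical and do not reproduce the pipeline of Jones et al.

```python
import numpy as np

def attenuation_mask(shape, gaze_rc, intact_radius_px, falloff_px):
    """Per-pixel visibility in [0, 1]: 1 in the intact central field, fading to 0 in the periphery."""
    rows, cols = np.indices(shape)
    ecc = np.hypot(rows - gaze_rc[0], cols - gaze_rc[1])  # distance from the gaze point in pixels
    return np.clip(1.0 - (ecc - intact_radius_px) / falloff_px, 0.0, 1.0)

def simulate_tunnel_vision(image, gaze_rc, intact_radius_px=40.0, falloff_px=20.0, fill=0.5):
    """Blend each pixel toward a uniform fill value, with the defect centered on the current gaze."""
    vis = attenuation_mask(image.shape[:2], gaze_rc, intact_radius_px, falloff_px)
    if image.ndim == 3:
        vis = vis[..., None]  # broadcast over color channels
    return vis * image + (1.0 - vis) * fill
```

In a headset loop, `gaze_rc` would be updated from the eye tracker before each frame is rendered, so the defect moves with the eyes rather than with the head.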

5.3. Color blindness

People with color blindness perceive a narrower range of colors, most often with impaired red-green hue discrimination, and no treatment exists to date [148]. This is due to a genetic anomaly of the cones, the photoreceptors in the eye responsible for color perception. Although color blindness is regarded as a ‘mild’ disability compared to others, it affects patients' daily lives from early childhood and can even bar people from some professions, e.g., pilots, air traffic controllers, signal conductors, and commercial drivers. Some studies have shown that people with color blindness have difficulties driving or parking, choosing clothes, cooking, and playing games, including board games, video games, and smartphone applications. In particular, medical students and doctors with color vision deficiency have difficulty recognizing body colors, reading test strips for blood and urine samples, performing ophthalmoscopy, and distinguishing different body fluids.

Many applications aid colorblind people by correcting colors on a screen [149–151], and recent progress in AR-HMDs has paved the way for hands-free color-correction glasses [152–154]. Color vision deficiency (CVD) requires individual settings, and the devices should be optimized for the specific wavelength ranges affected in each patient. Tanuwidjaja et al. [68] developed an algorithm that highlights selected colors of the recorded scene in real time and presents them back to the user through Google Glass. Despite the inherent limitations in processing power, battery life, and camera resolution of Google Glass-based displays, this study shows that a wearable AR tool performing real-time color correction can alleviate some of the obstacles that color-blind people face in their daily lives.
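Highlighting a selected hue range in each camera frame can be sketched as follows. The sketch assumes RGB frames as float arrays in [0, 1], a hue range given in fractions of a full turn, and a saturation threshold to ignore grayish pixels; recoloring matched pixels with a blue marker is an illustrative choice for red-green deficiency, and the function names are hypothetical rather than the algorithm of Tanuwidjaja et al.

```python
import numpy as np

def rgb_to_hue_sat(rgb):
    """HSV hue (fraction of a turn, 0..1) and saturation for an (..., 3) RGB array in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    maxc = rgb.max(axis=-1)
    delta = maxc - rgb.min(axis=-1)
    safe = np.where(delta > 0, delta, 1.0)  # avoid division by zero for gray pixels
    hue = np.where(maxc == r, ((g - b) / safe) % 6.0,
                   np.where(maxc == g, (b - r) / safe + 2.0, (r - g) / safe + 4.0)) / 6.0
    hue = np.where(delta > 0, hue, 0.0)
    sat = np.where(maxc > 0, delta / np.where(maxc > 0, maxc, 1.0), 0.0)
    return hue, sat

def highlight_hue_range(rgb, hue_lo, hue_hi, min_sat=0.3, marker=(0.0, 0.0, 1.0)):
    """Recolor saturated pixels whose hue lies in [hue_lo, hue_hi] with a marker color."""
    hue, sat = rgb_to_hue_sat(rgb)
    if hue_lo <= hue_hi:
        in_range = (hue >= hue_lo) & (hue <= hue_hi)
    else:  # range wraps around 0, e.g. reds from 0.95 to 0.05
        in_range = (hue >= hue_lo) | (hue <= hue_hi)
    mask = in_range & (sat >= min_sat)
    out = rgb.copy()
    out[mask] = marker
    return out, mask
```

Because the hue range and marker are parameters, the same routine could be tuned per user, in line with the need for individual settings in CVD.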

In another study on computational glasses [32], Sutton et al. completed two case studies, one using near-eye displays with a micro-display and one using near-eye optics with a dynamic focal distance. Whereas the near-eye display study focused on the ability of computational glasses to work as visual aids for CVD, the near-eye optics study developed bench prototypes using phase modulation capable of pixel-wise color modification. They reported successful results, but with the drawback of vergence-accommodation conflict (VAC).

Langlotz et al. [69] developed computational glasses using the Epson Moverio BT-300 as the display component and offered novel contributions for compensating the effects of CVD. They overlay the augmented images on their real-world locations with high precision by using an additional beam splitter that aligns the scene camera with the optical axis of the eye, an alignment that is difficult to achieve with other devices. Their algorithmic approach is based on Daltonization, a method for making colors that are critical for people with CVD distinguishable [155]. The algorithm shifts colors away from the confusion lines, i.e., lines in the CIE 1931 color space along which a person with color blindness cannot distinguish colors. Participants using the computational glasses passed color blindness tests; however, the hardware must be miniaturized and the image transfer speed increased before the system is suitable for everyday use.
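The Daltonization principle can be sketched for protanopia: simulate what a protanope sees, take the difference from the original image (the color information lost along the confusion direction), and redistribute that error into channels the viewer can still discriminate. The matrices below are those of a widely circulated Daltonization implementation and are applied directly to RGB values in [0, 1] for simplicity; they should be checked against a primary source, and this sketch is not the implementation of Langlotz et al.

```python
import numpy as np

# RGB -> LMS and protanopia-simulation matrices as commonly published for Daltonization
# (assumed values; applied to RGB in [0, 1] without gamma linearization for simplicity).
RGB_TO_LMS = np.array([[17.8824, 43.5161, 4.11935],
                       [3.45565, 27.1554, 3.86714],
                       [0.0299566, 0.184309, 1.46709]])
LMS_TO_RGB = np.linalg.inv(RGB_TO_LMS)
PROTANOPIA_LMS = np.array([[0.0, 2.02344, -2.52581],  # L cone response rebuilt from M and S
                           [0.0, 1.0, 0.0],
                           [0.0, 0.0, 1.0]])
# Moves the lost (red-axis) error into the green and blue channels.
ERROR_SHIFT = np.array([[0.0, 0.0, 0.0],
                        [0.7, 1.0, 0.0],
                        [0.7, 0.0, 1.0]])

def simulate_protanopia(rgb):
    """Approximate appearance of an (..., 3) RGB image for a protanope."""
    lms = rgb @ RGB_TO_LMS.T
    return (lms @ PROTANOPIA_LMS.T) @ LMS_TO_RGB.T

def daltonize_protan(rgb):
    """Shift colors that collapse for a protanope into directions they can still distinguish."""
    error = rgb - simulate_protanopia(rgb)  # color information invisible to a protanope
    return np.clip(rgb + error @ ERROR_SHIFT.T, 0.0, 1.0)
```

Neutral grays lie on no confusion direction, so they pass through almost unchanged, while saturated reds acquire green and blue components that a protanope can perceive.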

5.4. Vision simulators for low vision

Head-mounted displays can become effective and practical solutions for major ophthalmic diseases when tailored specifically to the disease, e.g., through vision multiplexing, edge enhancement, or autofocusing lenses. Although visual impairments at later stages require such disease-specific measures, more comprehensive designs can be adopted widely by people with low vision in general, who suffer from reduced contrast sensitivity, visual field loss, and reduced visual acuity [129,130,156–161]. Several ready-to-use low-vision simulators are also available on the market [162–164]. Besides the prevalent visual impairments discussed in this review, AR-HMDs might also be helpful for retinitis pigmentosa and night blindness [165,166]. Addressing visual impairments requires a quantitative understanding of the target population, which would be useful for device developers and interface designers. Ates et al. [167] presented a low-cost see-through simulation tool using an Oculus Rift VR display and a wide-angle camera to simulate visual impairments including cataract, glaucoma, AMD, color blindness, diabetic retinopathy, and diplopia. Their design combines the flexibility of a software simulator with the immersion and stereoscopic vision of a VR display. Although the low resolution and lack of eye tracking of the Oculus Rift impose some limitations, the tool can raise public awareness of certain visual impairments, especially preventable ones such as obesity-related diabetic retinopathy or cataract.

Zhao et al. [168] conducted another study of visual perception in people with early stages of various visual impairments, including cataract, glaucoma, retinitis pigmentosa, and diabetic retinopathy. They aimed to understand which kinds of virtual elements low-vision people can perceive through optical see-through displays by comparing the results with those of a healthy control group. Using Epson Moverio BT-200 glasses, they examined participants' perception of shapes and text on the AR glasses in both stationary and mobile settings. They reported that low-vision participants identified shapes more easily than text and perceived white, yellow, and green better than red. These findings suggest that low-vision users could benefit from AR glasses in mobility tasks, just as people with normal vision can.
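As an example of the kind of filter such a simulator applies, a cataract-like view can be approximated by combining blur (reduced acuity) with reduced contrast. The sketch below is a hypothetical approximation with arbitrary default parameters for grayscale frames in [0, 1]; it ignores glare, color shifts, and other cataract effects and is not the filter used by Ates et al.

```python
import numpy as np

def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def blur(gray: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge padding, so the output keeps the input size."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(gray, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def simulate_cataract(gray: np.ndarray, sigma: float = 2.0, contrast: float = 0.5) -> np.ndarray:
    """Reduced acuity (blur) plus contrast scaled down around the mean luminance."""
    blurred = blur(gray, sigma)
    mean = blurred.mean()
    return mean + contrast * (blurred - mean)
```

Keeping each impairment as a separate, parameterized filter is what gives a software simulator its flexibility; a stereoscopic headset would apply it to each eye's camera frame.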

Zhao et al. [135,169] developed a video see-through augmented reality system called ForeSee, which offers five enhancement methods: magnification, contrast enhancement, edge enhancement, black/white reversal, and text extraction. Both the camera and the display rely on a separate computer for processing; consequently, the system is bulky. The low-resolution Oculus display and web camera further constrain image quality and latency. On the other hand, ForeSee's most notable advantage is that it can be customized to each user, greatly enhancing the users' visual experience. Building on the same authors' earlier video see-through system CueSee [134], which improved visual mobility abilities, ForeSee's adaptability makes it highly useful across different visual abilities, and with a lighter design, higher resolution, and more processing power it shows promise for everyday use.
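ForeSee's per-user customization can be sketched as a profile, an ordered list of enhancement names applied to each frame. Three of the five methods are shown (magnification, contrast enhancement, and black/white reversal); edge enhancement and text extraction are omitted. The function names, the percentile-based contrast stretch, and the profile format are assumptions for illustration only.

```python
from typing import Callable

import numpy as np

def magnify(gray: np.ndarray, factor: int = 2) -> np.ndarray:
    """Digital zoom on the image center by pixel replication, keeping the frame size."""
    h, w = gray.shape
    ch, cw = max(1, h // factor), max(1, w // factor)
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = gray[top:top + ch, left:left + cw]
    zoomed = np.repeat(np.repeat(crop, factor, axis=0), factor, axis=1)[:h, :w]
    # Pad with edge values when the frame size is not a multiple of factor.
    return np.pad(zoomed, ((0, h - zoomed.shape[0]), (0, w - zoomed.shape[1])), mode="edge")

def contrast_stretch(gray: np.ndarray, low_pct: float = 2.0, high_pct: float = 98.0) -> np.ndarray:
    """Map the [low_pct, high_pct] percentile range to [0, 1], clipping the tails."""
    lo, hi = np.percentile(gray, [low_pct, high_pct])
    if hi <= lo:
        return gray.copy()
    return np.clip((gray - lo) / (hi - lo), 0.0, 1.0)

def reverse(gray: np.ndarray) -> np.ndarray:
    """Black/white reversal for images in [0, 1]."""
    return 1.0 - gray

ENHANCEMENTS: dict[str, Callable[[np.ndarray], np.ndarray]] = {
    "magnify": magnify,
    "contrast": contrast_stretch,
    "reverse": reverse,
}

def apply_profile(gray: np.ndarray, profile: list[str]) -> np.ndarray:
    """Apply a user's enhancements in the order listed in the profile."""
    for name in profile:
        gray = ENHANCEMENTS[name](gray)
    return gray
```

For example, a user who prefers light text on a dark background might store the profile `["contrast", "reverse"]`, while another might use `["magnify"]` alone.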

A recent study addresses the difficulties of reading signs in daily life when visual wayfinding is impaired and proposes a sign-reading assistant for users with reduced vision [170]. Huang et al. developed a software application for Microsoft HoloLens that, on manual command, identifies real-world text (e.g., signs and room numbers), highlights the text location, converts it into high-contrast AR lettering directly in the user's natural field of view, and optionally reads the content aloud via text-to-speech. Despite the promising results, the system has several limitations. The OCR algorithm relies on a cloud-based service, which requires a wireless internet connection and causes a slight delay in obtaining the results. Furthermore, OCR accuracy decreases when many signs are present, and the AR sign does not always overlap precisely with the physical sign.
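One way to choose a lettering color that stands out from the sampled background is to maximize the contrast ratio defined in the W3C Web Content Accessibility Guidelines (WCAG 2.x), computed from the relative luminance of sRGB colors. This is a hypothetical sketch, not the rendering method of Huang et al.; note also that on additive optical see-through displays such as HoloLens, dark virtual colors appear transparent, so in practice the palette would be limited to bright colors or the lettering drawn on a backing panel.

```python
def _linearize(c: float) -> float:
    """sRGB channel value in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(rgb) -> float:
    """Relative luminance of an sRGB color given as (r, g, b) in [0, 1]."""
    r, g, b = (_linearize(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(a, b) -> float:
    """WCAG-style contrast ratio, from 1 (no contrast) to 21 (black on white)."""
    la, lb = relative_luminance(a), relative_luminance(b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)

def pick_text_color(background, palette=((1.0, 1.0, 1.0), (1.0, 1.0, 0.0))):
    """Choose the palette color with the highest contrast against the sampled background."""
    return max(palette, key=lambda color: contrast_ratio(color, background))
```

The background color would be sampled from the camera image around the detected sign; the default palette of white and yellow is an assumption chosen with additive displays in mind.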

Kinateder et al. [171] developed another Microsoft HoloLens application that implements distance-based visual augmentation, with a focus on usability for individuals with near-complete vision loss. The application translates spatial information from the real world into the AR view, rendering high-contrast edges between objects at different distances. The findings showed that simplifying visual scenes through edge enhancement helps people with impaired vision and that this approach can be implemented in a see-through HMD.
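Distance-based augmentation can be sketched on a depth map: brightness falls off with distance, and depth discontinuities, which usually mark boundaries between objects at different distances, are drawn at full intensity. The sketch assumes a per-pixel depth map in meters from some depth sensor; the near and far limits, the 0.3 m jump threshold, and the function names are hypothetical and not taken from Kinateder et al.

```python
import numpy as np

def depth_edges(depth_m: np.ndarray, jump_m: float = 0.3) -> np.ndarray:
    """True on both sides of any neighbor-to-neighbor depth change larger than jump_m."""
    edges = np.zeros(depth_m.shape, dtype=bool)
    dv = np.abs(np.diff(depth_m, axis=0)) > jump_m  # vertical neighbors
    dh = np.abs(np.diff(depth_m, axis=1)) > jump_m  # horizontal neighbors
    edges[:-1, :] |= dv
    edges[1:, :] |= dv
    edges[:, :-1] |= dh
    edges[:, 1:] |= dh
    return edges

def distance_overlay(depth_m: np.ndarray, near_m: float = 0.5, far_m: float = 4.0,
                     jump_m: float = 0.3) -> np.ndarray:
    """Brightness that decreases with distance, plus full-intensity lines at object boundaries."""
    brightness = np.clip((far_m - depth_m) / (far_m - near_m), 0.0, 1.0)
    brightness[depth_edges(depth_m, jump_m)] = 1.0
    return brightness
```

Rendering only brightness and boundaries, rather than the full scene, reflects the idea that a simplified, high-contrast representation is easier to use with near-complete vision loss.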

Stearns et al. [172] developed an augmented reality magnification aid using Microsoft HoloLens that displays 3D virtual content in the physical world, received from either a smartphone camera or a finger-worn camera. Study participants were satisfied with the general concept because of its improved mobility, privacy, and readiness compared with other magnification aids. The narrow field of view, low-contrast holograms, and distorted colors remain open to further development.

All of these vision-correction tools depend on input from the physical world, creating a need for advanced microprocessors and long battery life. Furthermore, the performance of these devices is largely determined by the capabilities of the computer vision techniques they use. Optical character recognition and pupil tracking technologies are being used to extract text and signs from the environment and display them on the glasses for people with low vision. Future work could include navigation assistance similar to that of head-up displays in cars and face recognition software that helps people with low vision in daily life [173,174].

6. Conclusion

In summary, we have discussed the emerging field of augmented reality head-mounted displays and their applications in ophthalmology. Although AR-HMDs are securing their place among display technologies in diverse application areas [71,175,176], everyday adoption of AR glasses and HMDs still requires further effort; nonetheless, the current level of technology offers potential non-invasive solutions to many ophthalmic diseases [14]. Translational medicine, the meeting point of engineering and medical sciences, shows a progressive path for dealing with visual impairments and offers solutions to diseases that would otherwise dramatically decrease an individual's quality of life or even lead to total blindness. Augmented reality, combined with computer-generated holography and adaptive optics, holds promise for non-invasive vision simulators, pre-operative simulators, and diagnostic devices. It is essential to consider the needs of each visual impairment according to the part of the eye in which it originates, e.g., lens, cornea, or retina. Lens- and cornea-related diseases such as cataract and keratoconus may benefit the most from AR-HMDs because of their reversible nature, whereas irreversible retina- or optic nerve-related conditions, e.g., age-related macular degeneration, glaucoma, and color blindness, benefit from the enhanced vision these instruments provide. We anticipate that the discouraging limitations of head-worn displays, such as bulkiness, cosmetics, and battery life, will not be prohibitive in ophthalmic applications, and that this research area will advance toward wider adoption in low-vision aids and in the clinic.

Funding

European Research Council (acronym EYECAS, ERC-PoC); Agencia Estatal de Investigación (PID2019-105684RB-I00); Fundación Séneca (19897/GERM/15).

Disclosures

PA is the inventor of several patents related to adaptive optics in ophthalmology. HU is the inventor of several patents related to holographic displays. No potential conflict of interest was reported by the other authors.

References

- 1. “WHO Fact Sheet,” https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment.

- 2. Solebo A. L., Teoh L., Rahi J., “Epidemiology of blindness in children,” Arch. Dis. Child. 102(9), 853–857 (2017). 10.1136/archdischild-2016-310532.

- 3. Flaxman S. R., Bourne R. R., Resnikoff S., Ackland P., Braithwaite T., Cicinelli M. V., Das A., Jonas J. B., Keeffe J., Kempen J. H., Leasher J., “Global causes of blindness and distance vision impairment 1990–2020: a systematic review and meta-analysis,” The Lancet Global Health 5(12), e1221–e1234 (2017). 10.1016/S2214-109X(17)30393-5.

- 4. Song P., Wang J., Bucan K., Theodoratou E., Rudan I., Chan K. Y., “National and subnational prevalence and burden of glaucoma in China: A systematic analysis,” J. Glob. Health 7(2), 020705 (2017). 10.7189/jogh.07.020705.

- 5. Tham Y.-C., Li X., Wong T. Y., Quigley H. A., Aung T., Cheng C.-Y., “Global prevalence of glaucoma and projections of glaucoma burden through 2040: a systematic review and meta-analysis,” Ophthalmology 121(11), 2081–2090 (2014). 10.1016/j.ophtha.2014.05.013.

- 6. Cheung N., Wong T. Y., “Obesity and eye diseases,” Surv. Ophthalmol. 52(2), 180–195 (2007). 10.1016/j.survophthal.2006.12.003.