Abstract

Compared to open surgical techniques, laparoscopic surgical methods aim to reduce collateral tissue damage and hence decrease patient recovery time. However, the constraints imposed by laparoscopic surgery, i.e. the operation of surgical tools in limited spaces, turn simple surgical tasks such as suturing into time-consuming and inconsistent tasks for surgeons. In this paper, we develop an autonomous laparoscopic robotic suturing system. More specifically, we expand our smart tissue anastomosis robot (STAR) by developing i) a new 3D imaging endoscope, ii) a novel actuated laparoscopic suturing tool, and iii) a suture planning strategy for autonomous suturing. We experimentally test the accuracy and consistency of the developed system and compare it to sutures performed manually by surgeons. Our test results on suture pads indicate that STAR can reach 2.9 times better consistency in suture spacing than the manual method and also eliminates suture repositioning and adjustments. Moreover, the consistency of the suture bite sizes obtained by STAR matches that obtained by manual suturing.

I. Introduction

The benefits of the minimally invasive surgery (MIS) approach, such as reduced collateral tissue damage, have motivated the recent transition of surgical procedures from open to laparoscopic [1], [2]. However, compared to open surgical techniques, laparoscopic surgical methods entail several challenges due to limitations in the motion of surgical tools and in the visual cues from the surgical scene. Such limitations can turn tasks that are simple in open surgery into inconsistent and time-consuming tasks for the surgeon in the laparoscopic setting [3]. Suturing, a crucial element of almost all surgical procedures that directly affects the anatomic reconstruction, suffers from the same limitations.

Advances in the field of medical robotics over the past two decades have enabled highly dexterous and precise robotic assisted surgery (RAS) systems that are capable of performing MIS and hence of reducing the duration of surgery and the patient’s recovery time [4]. Currently, robotic surgeries rely exclusively on a tele-operated control strategy, where every motion of the surgical tool is commanded by the surgeon through a controller. Examples of such systems include the da Vinci Surgical System (Intuitive Surgical, Sunnyvale, California) [5], which has been used in various surgical procedures in urology, gynecology, cardiothoracic, and general surgery. One of the most recent commercially available robots is Senhance (Transenterix, Morrisville, NC), which has been used in inguinal hernia repair as well as upper gastrointestinal surgery and cholecystectomies [6]. However, the functional outcomes of these master-slave systems depend on the training, proficiency, and day-to-day performance of the surgeons, which vary between individuals and introduce human-related risk factors into the surgical system, contributing to complication rates of up to 20% in general surgeries [7].

In this paper, we further extend our smart tissue autonomous robot (STAR) [8]-[10] to enable autonomous laparoscopic suturing. STAR aims to provide consistent and effective laparoscopic suturing via a specifically designed robotic system and ultimately to substitute for human skills. This can be achieved by reducing the total time of the suturing task compared to state-of-the-art tele-operated RAS [5], and by standardizing the quality of the suturing outcome [11]. The STAR system consists of 1) a robotic arm equipped with 2) an actuated suturing tool, and 3) a camera system that supports vision guidance and control. Our contributions in this paper are threefold and expand on components 2 and 3 of STAR. As the first contribution, we develop and test a novel laparoscopic 3D camera system suitable for surgical robotic applications. The 3D imaging endoscope provides a quantified peripheral construction of the surgical scene with an expansion to point cloud segmentation for autonomous planning of suturing.

As the second contribution, we develop a new robotic laparoscopic suturing tool by modifying the commercially available Proxisure suturing tool (Ethicon Inc., Somerville, NJ, United States). Proxisure is the only commercial suturing tool designed to compensate for laparoscopic constraints by adding 2 degrees of freedom (DOF) at the distal tip. By motorizing the Proxisure tool and mounting it on a 7-DOF medical lightweight robot (LWR) from KUKA (KUKA AG, Augsburg, Germany), we enable STAR to place sutures in any orientation in the surgical field. We design and implement specialized hardware and software based on the developed camera and tool to execute autonomous suturing steps under human supervision.

Finally, as the third contribution of this paper, we propose a segmented suturing planning method based on point clouds obtained from the 3D endoscope. The proposed method calculates the suture point locations based on the coordinates of the incision groove and cut. We experimentally demonstrate the accuracy and consistency of our new laparoscopic robotic suturing system and compare the results against manual laparoscopic suturing performed by experienced surgeons.

II. Motivation and Previous Work

A. Surgical Robotic Camera Systems

For autonomous surgical planning in a minimally invasive setting, a quantified 3D reconstruction of the scene with little to no data loss is critical. Current 3D reconstruction techniques such as stereoscopy, time-of-flight, plenoptic imaging, and dot-like structured illumination are limited because they reconstruct 3D geometry from natural, inherent tissue features [12]-[14]. This restricts accurate 3D reconstruction to highly featured samples, so these techniques are not applicable to internal organs with few features such as the intestine, kidney, or bladder. Moreover, the computational cost of the correspondence search between overlapping binocular views (stereoscopy) and hardware limitations (high source noise at the laser illumination or at the detector in time-of-flight, and the customized microlens array in plenoptic imaging) also prevent the miniaturization of cameras based on these techniques into a laparoscope.

To address these limitations for minimally invasive robotic surgery, a structured illumination technique using fringe projection profilometry is applied in this study to obtain a dense 3D point cloud. The technique reconstructs the 3D geometry of the scene from a continuous phase distribution. The phase is calculated from multiple modulated, shifted vertical fringe patterns, whose deformation over concave and convex regions of the sample encodes the sample height. A 3D imaging endoscopic system using this technique has been demonstrated within a minimally invasive setting. The system provides a dense point cloud with an accuracy of up to 250 μm on non-tissue samples and 500 μm on porcine tissue such as skin and intestinal tissue, as detailed in [15]-[17]. Specularity caused by liquid on tissue in vivo is handled at the image-processing stage in this setup, because the limited light input rules out a hardware modification. This is done at the 2D level, where each image is denoised and filtered with Gaussian average filtering, as well as at the 3D level with neighborhood filtering.
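As a rough illustration of how a wrapped phase can be recovered from shifted fringe patterns, the sketch below applies the standard N-step phase-shifting formula to the intensities observed at a single pixel. It assumes equally spaced shifts of 2π/N and a sinusoidal fringe model I_n = A + B cos(φ + 2πn/N); the function name and the sample values are hypothetical, and the processing actually used in the endoscope is the one described in [15]-[17].

```python
import math

def wrapped_phase(intensities):
    """Wrapped phase (radians) at one pixel from N >= 3 equally shifted
    fringe intensities I_n = A + B*cos(phi + 2*pi*n/N)."""
    n_steps = len(intensities)
    if n_steps < 3:
        raise ValueError("at least three phase-shifted images are required")
    shifts = [2.0 * math.pi * n / n_steps for n in range(n_steps)]
    sin_sum = sum(i * math.sin(d) for i, d in zip(intensities, shifts))
    cos_sum = sum(i * math.cos(d) for i, d in zip(intensities, shifts))
    # The offset A cancels in both sums; B scales both sums equally.
    return math.atan2(-sin_sum, cos_sum)

# Hypothetical pixel: offset 120, modulation 80, true phase 1.0 rad, 4 shifts.
true_phase = 1.0
samples = [120 + 80 * math.cos(true_phase + 2 * math.pi * n / 4) for n in range(4)]
recovered = wrapped_phase(samples)
```

The result is wrapped to one fringe period, so a separate unwrapping step is still needed before the phase can be related to height through the camera calibration.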

B. Robotic Suturing Tools

Anastomosis is a critical step of every surgical procedure and occurs more than a million times each year. Studies show that efficient and effective anastomosis is necessary for patient healing [18], with prolonged procedures correlating to poor surgical outcomes. Despite the frequency of surgical intervention and its importance to clinical outcome, knot tying and suturing tissue require highly dexterous surgical motions that are difficult to perform with traditional tools under laparoscopic conditions. On average, manual laparoscopic anastomosis can last from 30 to 90 min depending on the tissue sutured [19], [20]. Two laparoscopic suturing devices, Endo Stitch™ (Covidien, MA) and Endo360 (Endo-Evolution, Raynham, MA) [21], enable surgeons to manually suture with simplified motion. By incorporating either a linear (Endo Stitch) or circular (Endo360) needle, the throwing motion to pass suture through tissue has been reduced to the pull of a trigger. Comparison of manual anastomosis to these suturing tools demonstrates that both the time and the cost of suturing are improved [22]. Unfortunately, inter-surgeon variations in technique, experience, and decision making still directly impact the consistency and quality of anastomosis [23]. In our previous work, we achieved superior consistency in suture placement and tension using a motorized Endo360 suturing tool coupled to the KUKA LWR 4+ robot, resulting in a more leak-free anastomosis [10]. However, despite this advancement, the motorized Endo360 only provides one of the two motions needed at the distal tip to provide complete 6 degrees of motion in the laparoscopic setting.

To achieve the full 6 degrees of freedom within the laparoscopic surgical field, our team has previously designed and prototyped a laparoscopic clipping tool [24]. The laparoscopic tool includes two motions, pitch and yaw, to provide wristed motion of the distal tip, as well as a circular needle to simplify the motion of passing a needle through tissue. When actuated, the tool approximates tissues by applying individual clips to target tissues. The resulting anastomosis is similar to that performed by an interrupted stitch. Unfortunately, the complexity of the tool and the wear of the back drive springs in the clipping mechanism make it currently impractical for prolonged use. Most recently, Ethicon released Proxisure, the first commercial tool with a circular needle drive and two degrees of freedom at the distal tip. The Proxisure tool provides both pitch and roll so that the distal needle can be positioned in any orientation. In this paper, we adapt the Proxisure suturing tool to the STAR system by motorizing the pitch, roll, and needle drive mechanisms with the help of a tool adapter and a three-axis motor pack.

C. Autonomous Suturing Robots

Medical RAS systems have been utilized in different but limited forms, including general surgery, orthopedics, neurology, and urology. In suturing applications specifically, master-slave paradigms have been utilized to address the problem of providing a dexterous workspace given the constraints of tool insertions in small volumes, as well as to improve visualization. Examples include the da Vinci surgical system [5], MiroSurge [25], and the Raven surgical robot [26]. However, such systems do not fully or partially replace surgeons. Therefore, some techniques have been proposed to augment the master-slave robots with active monitoring and modification methods that assist with specific task features.

Virtual fixtures are one of the common methods for guiding the motion of medical robots. These techniques either prevent the robot from entering forbidden regions of the task space or steer the robot towards desired paths in the workspace [27]. Other efforts include the development of hand-held laparoscopic suturing tools for knot tying [28], [29]. The performance of such methods depends solely on the presence of a surgeon in the loop.

In addition to virtual fixtures and hand-held tools, different estimation techniques have also been utilized towards increasing the autonomy of robotic suturing. Some methods are only focused on real-time visual feedback for autonomous suturing such as dynamic suture thread tracking [30] and adaptive 3D tracking of needle in laparoscopic tasks [31]. Computational methods are proposed for optimal selection of entry ports and needle grasps for autonomous robotic surgical assistants but the results are not experimentally implemented [32]. Other techniques include the estimation of internal deformation force during suturing to minimize tissue deformation and pave the way for future fully autonomous suturing [33]. Automation of needle grasping in laparoscopic suturing is proposed in [34] to ease and shorten the start of suturing process. Some novel methods have also introduced optimal algorithms for choosing needle diameter, shape, and path for robotic suturing via conventional needle drivers [35].

Given the current state of the art in laparoscopic surgery and RAS instruments, there is a clear need for increasing the autonomy of robotic suturing. In this work we propose a method for calculating the suture points for the autonomous robot controller. Our method uses a point cloud segmentation technique, based on the coordinates of the detected incision groove, to determine the 3D coordinates of the suture placement locations. This method aims to replace the linear interpolation method used in our previous autonomous suturing system [10] and to provide more accurate placement of sutures.

III. Methods

A. Testbed

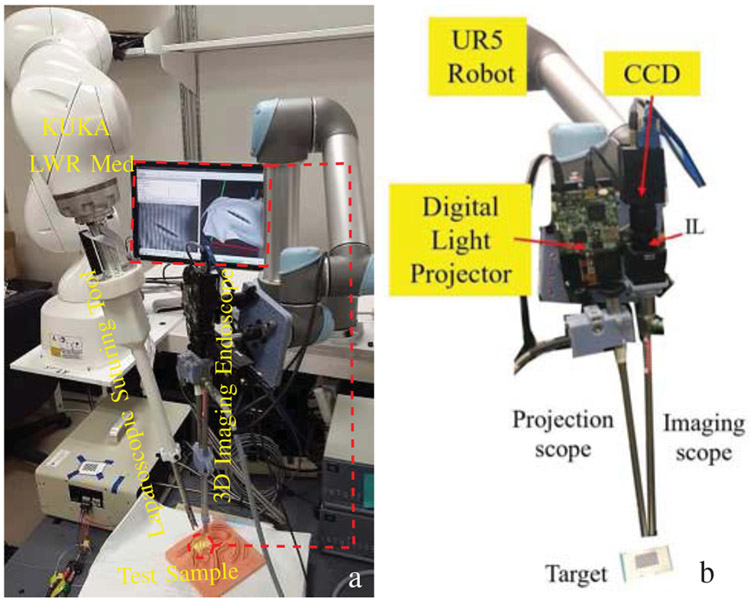

The experimental testbed developed in this paper is shown in Fig. 1.a. This testbed includes our novel robotic laparoscopic tool which is mounted on a 7-DOF KUKA Med lightweight arm (KUKA LWR Med) as the surgical robot, and our novel 3D imaging endoscope. The 3D endoscope and robotic suturing tools are detailed in the following.

Fig. 1: a) Robotic laparoscopic suturing system, b) 3D imaging endoscope (IL: Imaging Lens).

1). 3D Imaging Endoscopic System:

The vision system supporting robotic manipulation in this paper combines a quantified 3D reconstruction with a surgical planning method that generates the autonomous suturing path.

a). System Setup:

The main components of the imaging system (Fig. 1.b) include a digital light projector with a variable optical assembly (TI-DLP EVM3000, Texas Instruments, Dallas, Texas, USA) that is coupled into a rigid endoscope (260030 AA, Karl Storz Endoscopy, Tuttlingen, Germany) to project fringes onto the sample. The reflected fringe images of the tissue are then relayed through a second rigid scope (ICG 260030 ACA, Karl Storz Endoscopy, Tuttlingen, Germany) and focused by an imaging lens (IL, AC254-060-A, Thorlabs, NJ, USA) onto a CCD (GS3-U3-15S5M-C, Point Grey Research Inc, Richmond, Canada). The 3D reconstruction technique is based on the relations between the camera calibration parameters [36] and a calculated wrapped phase distribution.

b). Hand-Eye Calibration:

The hand-to-eye calibration between the base of the KUKA and the camera is based on a 3D-3D registration. First, a 12x9 chessboard is positioned in the field of view of the camera and the 3D coordinates of its four corners are measured. Then, a 614 mm long pointed concentricity tool with a circular runout tolerance of 0.1 mm is mounted on the KUKA, and the 3D coordinates of the same corners are measured in the base coordinate system of the robot by touching them with the tip of the tool. Finally, the rotation and translation between the two coordinate systems are estimated by minimizing the sum of squared errors between the two sets of 3D points [37]. The calibration procedure takes about twenty minutes to execute.
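A least-squares 3D-3D registration of this kind has a closed-form solution based on the singular value decomposition (often referred to as the Kabsch or Arun method). The sketch below shows that solution with NumPy; whether [37] uses exactly this formulation is an assumption, and the function name, corner coordinates, and test transform are made up for illustration.

```python
import numpy as np

def rigid_registration(camera_pts, robot_pts):
    """Least-squares rotation R and translation t such that
    robot_pts[i] is approximately R @ camera_pts[i] + t."""
    P = np.asarray(camera_pts, dtype=float)
    Q = np.asarray(robot_pts, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)      # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t

# Hypothetical check: four coplanar corner points (mm) and a known transform.
corners_cam = np.array([[0, 0, 50], [44, 0, 50], [44, 32, 50], [0, 32, 50]], dtype=float)
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([400.0, -120.0, 35.0])
corners_robot = corners_cam @ R_true.T + t_true

R_est, t_est = rigid_registration(corners_cam, corners_robot)
residuals = corners_cam @ R_est.T + t_est - corners_robot
rms_error = float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
```

Four non-collinear corners are enough for a unique solution even though they lie in one plane; the determinant correction is what resolves the remaining sign ambiguity in that case. With real measurements, `rms_error` gives a quick check of the calibration quality.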

2). Suturing Tool:

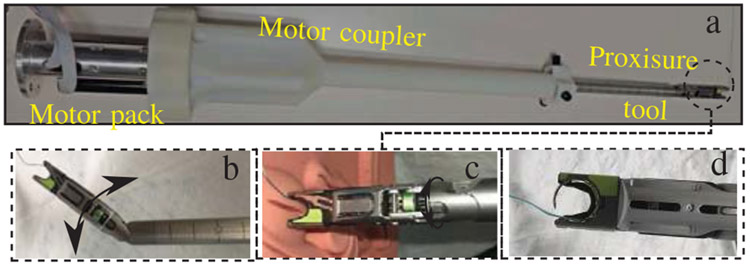

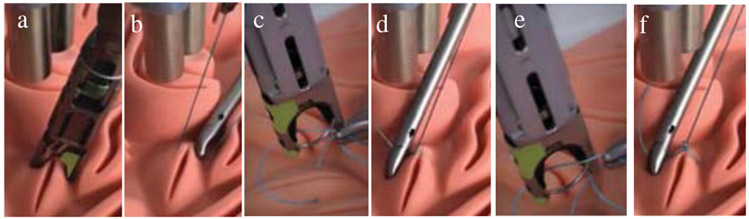

A novel multi-axis suturing tool (Fig. 2) was designed and prototyped to perform the autonomous suturing tasks in this study. The suturing tool was assembled by deconstructing the handle of a commercially available Proxisure suturing device and individually coupling each joint to a three-axis motor pack using a customized tool adapter (Fig. 2.a). Each of the motors is energized independently to actuate one of three actions: pitch (Fig. 2.b), roll (Fig. 2.c), and needle drive (Fig. 2.d). The pitch and roll motions of the tool can be combined to provide two extra degrees of freedom within the surgical space. When the tool is used under laparoscopic constraints, these two degrees of freedom restore full 6-degree-of-freedom positioning of the tool tip, enabling the robotic system to suture in any orientation. Each motor includes an encoder for precise positioning using EPOS2 controllers (Maxon Motors, Sachseln, Switzerland). Communication between the robot and the tool is through a controller area network (CAN). The pitch and needle drive motors are homed by energizing each motor under current control until it reaches a hard stop. The multi-axis tool is compatible with Ethicon brand sutures of various sizes and materials. In the following studies, 2-0 polyester Ethibond suture is used for testing.

Fig. 2: a) Suturing tool, b) pitch actuation, c) roll actuation, d) needle drive.

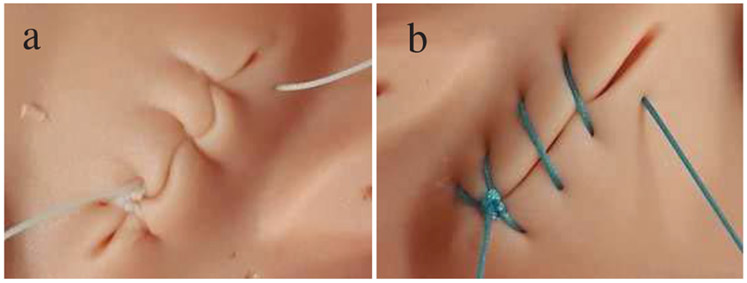

B. Surgical Task and Evaluation Criteria

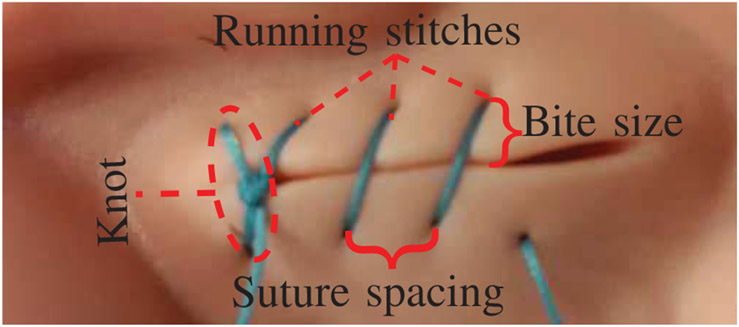

The surgical task considered in this paper is laparoscopic suturing of a straight incision. For the experiments, the surgeons and STAR were instructed to complete a knot and 3 running stitches to close a 21 mm incision (Fig. 3) on a 3-Dmed (Ohio, United States) training suture pad. The metrics for measuring the efficiency of STAR consist of i) task completion time, ii) the distance between consecutive stitches, iii) bite size (i.e. the distance between a stitch and the incision edge), and iv) repositioning mistakes during suturing. The latter three measures are known to influence complications of the healing process such as breaking and infections [38], [39].

Fig. 3: Suture task and metrics.

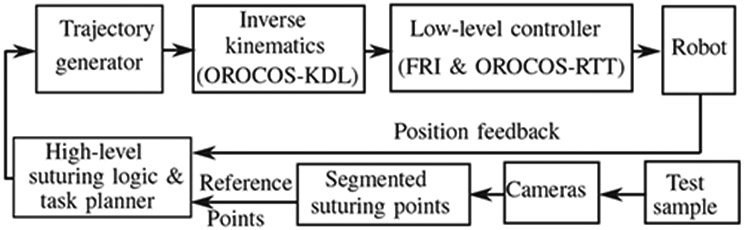

C. Control System

Fig. 4 shows the block diagram of the autonomous controller. In this control loop, the 3D surface of the tissue is constructed from the point cloud obtained by the camera shown in Fig. 1.b and described earlier in this section. A suture point planning strategy uses the 3D surface information to determine the desired location of the knot and of each running stitch (detailed in Section III-C.1). The resulting 3D coordinates of the reference suture points in the robot frame, along with the current robot positions, are passed to a high-level suturing logic and task planner (detailed in Section III-C.2), which plans the sequence of robot motions to complete the knot and running stitches at the desired, equally spaced positions. The corresponding smooth time-based trajectories of the robot motion are calculated in real time using the Reflexxes Motion Libraries [40]. These trajectories are converted from the task space to the joint space of the robot via the Kinematics and Dynamics Library (KDL) in Open Robot Control Software (OROCOS) [41]. Finally, the low-level controllers of the robot and tool are implemented via the Fast Research Interface (FRI) [42] and the real-time toolkit (RTT) [43] of OROCOS to guarantee that the robot and tool follow the desired joint-space trajectories. Next, we explain the suture planning method.

Fig. 4: The autonomous control loop.

1). Planning Strategy for Suturing:

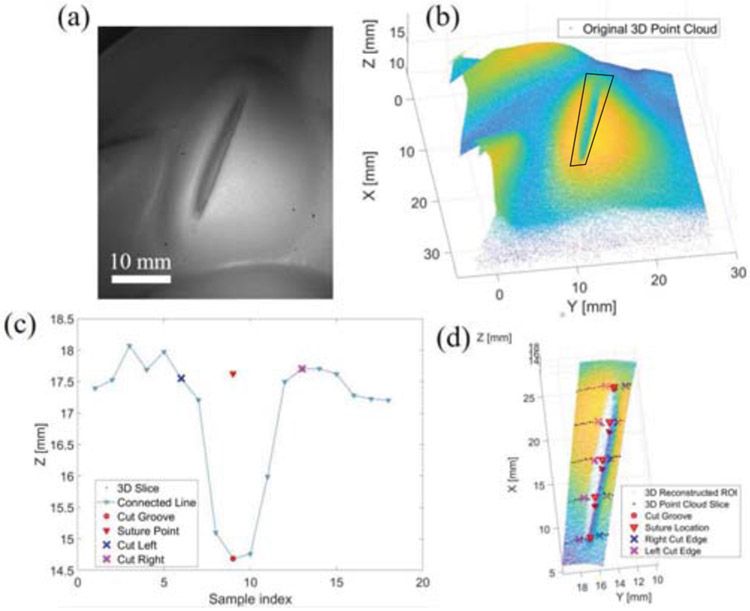

The suture pad is placed approximately 5 cm from the distal end of the endoscope for a desired field of view and to mitigate specular reflectance from the projected light. The cut line on a suture pad is located within the imaging field of view. A 3D point cloud distribution of the cut is analyzed to calculate the suture points for the robot based on the coordinates of the segmented cut groove and edge. The algorithm is executed in these 5 steps:

Region of interest (ROI) localization: An interactive polygon is drawn manually, and its boundary coordinates define the part of the point cloud on which the subsequent segmentation analysis is focused (black polygon in Fig. 5.b). The purpose of this sampling step is to speed up the subsequent cut analysis by restricting it to the ROI that contains the cut feature. The precision and accuracy of the ROI are related to the field of view and the degrees of freedom of the optics. A thorough assessment of this relation is given in [15], [16].

Point cloud sectioning: The point cloud inside the cropped ROI, which contains the cut feature, is sub-sampled along the direction that best reveals the cut groove (black dots in Fig. 5.c and Fig. 5.d). The spacing between sections is the planned suture spacing. In this study, we chose a 4-mm spacing to provide adequate and equal spacing along the desired 21 mm suture path.

Cut groove determination: The cut groove is identified by finding a prominent minimum along the point cloud section, based on the height difference from the neighboring depth values (red circles in Fig. 5.c and Fig. 5.d).

Cut edge calculation: Once the cut groove is defined, two regions on the left and right sides of the cut groove are selected to find the cut edges. In each region, the cut edge is taken as the point that is farthest from the line connecting the cut groove point and the boundary point of that region (blue and magenta cross markers in Fig. 5.c and Fig. 5.d).

Suture point detection: The suture point is determined with x-y coordinates from the cut groove and z-coordinates as the average depth of the left and right cut edge (red triangles in Fig. 5.c and Fig. 5.d). The 3D coordinates of the calculated suture points are transformed to world coordinates for robotic manipulation.
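The last three steps can be sketched for a single section as follows. This is a simplified illustration rather than the implementation used in the system: it assumes that the section runs along x at a fixed y, that z is a height (so the groove is a minimum), that the groove is simply the deepest sample instead of a prominence-based minimum, and that the left and right regions extend to the ends of the section. All names and the sample profile are hypothetical.

```python
def point_line_distance(p, a, b):
    """Perpendicular distance from 2D point p to the line through a and b."""
    (px, pz), (ax, az), (bx, bz) = p, a, b
    num = abs((bx - ax) * (az - pz) - (ax - px) * (bz - az))
    den = ((bx - ax) ** 2 + (bz - az) ** 2) ** 0.5
    return num / den

def farthest_from_chord(profile, start, stop):
    """Index in [start, stop] of the sample farthest from the chord that
    joins profile[start] and profile[stop]."""
    a, b = profile[start], profile[stop]
    return max(range(start, stop + 1),
               key=lambda i: point_line_distance(profile[i], a, b))

def plan_suture_point(profile, y):
    """profile: (x, z) samples ordered along one section; y: section position.
    Returns the suture point and the indices of left edge, groove, right edge."""
    groove = min(range(len(profile)), key=lambda i: profile[i][1])
    if groove == 0 or groove == len(profile) - 1:
        raise ValueError("groove lies on the section boundary")
    left_edge = farthest_from_chord(profile, 0, groove)
    right_edge = farthest_from_chord(profile, groove, len(profile) - 1)
    # x-y from the groove, z as the mean height of the two cut edges.
    z_suture = 0.5 * (profile[left_edge][1] + profile[right_edge][1])
    return (profile[groove][0], y, z_suture), (left_edge, groove, right_edge)

# Hypothetical section (mm): flat pad with a V-shaped cut centred at x = 5.
xs = list(range(11))
zs = [0.2, 0.2, 0.2, 0.2, -1.0, -2.0, -1.0, 0.0, 0.0, 0.0, 0.0]
suture_point, indices = plan_suture_point(list(zip(xs, zs)), y=4.0)
```

For this profile the edges are found at x = 3 and x = 7 and the suture point sits above the groove at the mean edge height. Repeating the call for each section, spaced by the planned suture spacing, yields the list of target points that is then transformed into the robot frame.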

Fig. 5: Suture planning strategy: (a) A white reflectance image of the cut sample. (b) Collected point cloud with ROI. (c) An example of calculated cut groove, left and right cut edges, and the suture point. (d) An overlay of the calculated coordinates with suture spacing of 4 mm.

With the total size of the targeted cut line of 21 mm, 4 target points representing the location of knot and three running sutures are computed for a 4-mm suture spacing. The computational time for all 5 steps is within 30 seconds on an Intel Core i7-6500 CPU, 16 GB RAM laptop.

2). High-Level Suturing Logic and Task Planner:

As mentioned earlier in Section III-B, the suturing task includes a first knot followed by 3 running stitches. The location of the knot and of each stitch is determined on the suture line (incision) via the algorithm explained in Section III-C.1. These points are the references for the tool center point (TCP) of the robot. The reference orientation of the TCP is chosen such that the jaw of the suturing tool is perpendicular to the suturing path.

The first subtask is to complete a knot at the first reference position and orientation. Completing a knot requires biting the tissue, tensioning the thread, and making two loops of suture to lock the knot into place. As shown in Fig. 6, the TCP of the robot is commanded to the first position of the knot on the incision. The needle drive is then actuated so that the suture completes a bite of the suture pad (i.e. the needle is inserted through the tissue) (Fig. 6.a). Next, the initial bite of suture is tensioned to a height of 9 cm (Fig. 6.b) and the tool is rotated to an orientation parallel to the suture line. To perform the first loop of the knot, a surgical assistant places the tail of the suture within the jaw of the suturing tool using a laparoscopic needle driver, and STAR actuates the needle drive (Fig. 6.c). The first loop of the knot is then tensioned by raising the suture tool above the suture plane (Fig. 6.d). After STAR tensions the first loop, the process is repeated to throw and tension a second loop of the knot, locking it into place on the suture pad (Fig. 6.e and Fig. 6.f).

Fig. 6: Steps of executing a knot: a) bite, b) tensioning, c) first loop, d) tension of first loop, e) second loop, f) tension of second loop.

The second subtask is to complete three running stitches along the length of the incision. To complete the running stitches, STAR executes the first two steps of the knot tying sequence for each suture location that was found in Section III-C.1 (i.e. Fig. 6.a and Fig. 6.b). The position and orientation of the suture tool is autonomously adjusted for each stitch, such that the jaws of the tool remain perpendicular to the cut line. The amount of thread needed for the running sutures can be estimated by the following equation [8]:

where L is the total length of thread needed to complete N stitches of width T with a distance d between stitches. In our experiments, N = 4, T = 8 mm, and d = 4 mm, and hence the estimated required length is L = 100 mm. The tensioning distance at each stitch can be calculated by subtracting the amount of thread used before the current stitch from the total length of thread required at the start of suturing. Here, on average, 18 mm is subtracted from the tensioning distance after each completed stitch.
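The tensioning rule described above amounts to a simple running subtraction. The sketch below illustrates it with the values quoted in the text (L = 100 mm, 18 mm per completed stitch, N = 4); the function name is hypothetical, and treating the per-stitch consumption as constant and starting from the full length L at the first stitch are simplifying assumptions.

```python
def tensioning_distances(total_length_mm, used_per_stitch_mm, n_stitches):
    """Thread length (mm) left to pull through at each stitch, assuming every
    completed stitch consumes the same amount of thread."""
    distances = []
    for k in range(n_stitches):
        remaining = total_length_mm - k * used_per_stitch_mm
        if remaining <= 0:
            raise ValueError("thread budget exhausted before the last stitch")
        distances.append(remaining)
    return distances

# Values from the text: L = 100 mm, about 18 mm used per stitch, N = 4.
schedule = tensioning_distances(100.0, 18.0, 4)  # [100.0, 82.0, 64.0, 46.0]
```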

IV. Experiments and Results

A. Test Conditions

For each of the manual and autonomous suturing tasks, 5 experiments were conducted and the aforementioned criteria were recorded and analyzed. The manual suturing experiments were completed by two expert surgeons using a laparoscopic trainer. During the suturing, the surgeon views the suturing scene on a tablet screen and operates two needle drivers through the ports of the laparoscopic trainer box.

For the autonomous suturing, first the tissue surface is detected by the 3D endoscope described earlier in Section III-A.1. The suturing way-points are determined via the method detailed in Section III-C.1. Finally, we complete the knot and running stitches with an autonomous control loop detailed earlier in Section III-C (Fig. 4). Since the robotic suturing is performed by one robotic arm, a surgical assistant uses a laparoscopic needle driver to manage the excess length of the suture thread.

B. Results

The results of the experiments are summarized in Tables I and II. Representative examples of manual suturing and autonomous suturing results via STAR are shown in Fig. 7.

TABLE I: Comparison of the results via completion time.

| Test | Total avg. (sec) | Total std. (sec) | Knot avg. (sec) | Knot std. (sec) | Stitches avg. (sec) | Stitches std. (sec) |
|---|---|---|---|---|---|---|
| Manual | 180.20 | 13.53 | 92.2 | 17.28 | 88.00 | 9.59 |
| STAR | 286.60 | 6.68 | 175.4 | 6.34 | 111.2 | 5.56 |

TABLE II: Comparison of the results via distance between stitches, bite size, and number of suture repositioning.

| Test | Dist. between stitches avg. (mm) | Dist. between stitches std. (mm) | Bite size avg. (mm) | Bite size std. (mm) | Number of repositioning avg. | Number of repositioning std. |
|---|---|---|---|---|---|---|
| Manual | 3.68 | 1.19 | 2.53 | 0.86 | 1.60 | 0.80 |
| STAR | 4.70 | 0.41 | 3.41 | 0.83 | 0.00 | 0.00 |

Fig. 7: Examples of suturing: a) manual, b) autonomous.

1). Completion Time:

As presented in Table I, the average total task completion time for the autonomous method is 106.4 seconds longer than for the manual method. Completing the knot accounts for the majority of this difference (i.e. 83.2 seconds longer in the autonomous mode, p < 0.001), while the remaining difference is related to the speed of the stitching steps (i.e. 23.2 seconds longer in the autonomous mode, p < 0.01, for 3 stitches).
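The differences quoted above follow directly from the averages in Table I. The short check below reproduces them and also reports which share of the extra time is spent on the knot; the variable names are illustrative only.

```python
# Mean completion times (s) copied from Table I.
manual = {"total": 180.20, "knot": 92.2, "stitches": 88.00}
star = {"total": 286.60, "knot": 175.4, "stitches": 111.2}

# Extra time needed by STAR in each phase, rounded to 0.1 s.
extra = {phase: round(star[phase] - manual[phase], 1) for phase in manual}
# Fraction of the total extra time that is spent on the knot.
knot_share = extra["knot"] / extra["total"]
```

With these values the knot is responsible for roughly 78% of the additional time, which is why the knot-tying sequence is the first candidate for speed improvements.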

2). Distance Between Stitches:

The average suture spacing obtained by STAR is 1.02 mm larger than that of the manual method, a statistically significant difference (p < 0.001). The desired spacing between stitches for STAR is 4 mm, and the experimental results show an average spacing of 4.7 mm. However, the variance of the suture spacing is significantly smaller for STAR than for the human surgeons (p < 0.001), which indicates that STAR placed the running stitches more uniformly (2.9 times better) than the human surgeons.
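The figures in this paragraph can be traced back to Table II. In particular, the factor of 2.9 matches the ratio of the two standard deviations, as the following check shows (variable names are illustrative).

```python
target_mm = 4.0                        # planned suture spacing
manual_avg, manual_std = 3.68, 1.19    # Table II, manual
star_avg, star_std = 4.70, 0.41        # Table II, STAR

mean_gap = star_avg - manual_avg            # about 1.02 mm
target_offset = star_avg - target_mm        # about 0.70 mm above the plan
consistency_ratio = manual_std / star_std   # about 2.90
```

The ratio describes the spread of the spacing only; the 0.7 mm offset from the 4 mm target is a separate, systematic deviation of the average.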

3). Bite Size:

Although the average bite size of STAR is significantly larger than that of the manual method (p < 0.001), the difference in bite-size variance between STAR and the human surgeons is not significant (p = 0.837). This indicates that STAR is just as consistent as the human surgeons in bite size.

4). Number of Stitch Repositions:

In the experiments, the human surgeons made an average of 1.6 suture corrections per test (i.e. 1.6 corrections for 1 knot and 3 stitches). In contrast, because of the positioning accuracy and repeatability, STAR required zero corrections per test.

V. Discussion

Of the four criteria used in this paper, STAR outperforms manual suturing in two. High consistency and accuracy in suture spacing provide a reliable method for placing sutures at the planned locations. Also, compared to the manual method, STAR avoids additional throws when placing the sutures, since the number of suture corrections is zero, which minimizes unnecessary damage to the tissue. Furthermore, in our experiments the consistency of the bite size is comparable to that of the human surgeons.

Despite the high consistency and accuracy of STAR in performing the suturing task, the overall process is slower than the manual method. One of the main reasons for the slower pace of suturing in the autonomous mode is the limited maximum speed of the motors used in the suturing tool. Given the current motors and the internal mechanism of the tool, when the needle drive motor is actuated at full speed, completing each knot loop or bite with the tool takes 18 seconds (i.e. the time for the needle to complete a full circular drive). With faster motors this can be reduced to 2 seconds. Moreover, the maximum robot speed was intentionally set low (1 cm/s) to ensure safety during the tests (e.g. to prevent sudden suture tension or fast contact with the suture pad). These values can be optimized in the future to balance safety and speed.
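A back-of-the-envelope estimate indicates how much of the time gap the faster needle drive alone might close. The sketch assumes six needle drives per task (one bite and two loops for the knot, plus three bites for the running stitches, following Section III-C.2) and that all other motions keep their measured duration; both are assumptions made for illustration, not measured results.

```python
def projected_total_time(measured_total_s, n_needle_drives,
                         current_drive_s, faster_drive_s):
    """Total task time if only the needle-drive duration changes."""
    saving_s = n_needle_drives * (current_drive_s - faster_drive_s)
    return measured_total_s - saving_s

# Assumed drive count: knot = 1 bite + 2 loops, running stitches = 3 bites.
n_drives = (1 + 2) + 3
projected = projected_total_time(286.60, n_drives, 18.0, 2.0)
gap_to_manual = projected - 180.20   # remaining difference to the manual mean
```

Under these assumptions the projected total is about 190.6 s, roughly 10 s above the manual average of 180.2 s in Table I, which suggests that the needle drive speed explains most of the difference in completion time.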

In this paper, we demonstrated the feasibility of using the new laparoscopic suturing tool and camera system with our STAR system. We will add a remote center of motion (RCM) to the planning and control algorithms of the robot to accommodate the motion of the robot through the laparoscopic ports, and we will include a trainer box in the autonomous control experiments to verify the performance of STAR in restricted abdominal spaces. Other limitations of the current work include the use of a static endoscopic image for suture path planning. This method is effective for the static suture pads used in the current experiments. However, for more complex suturing tasks on real tissue, the suture plan should be updated regularly based on the current state and motion of the tissue detected via real-time 3D image frames. Furthermore, in order to make the suture planning strategy robust to dynamic disturbances such as blood on the tissue, we will integrate biocompatible near-infrared (NIR) markers into the current camera system and use them as descriptive landmarks for tracking the tissue and updating the suture plan in real time.

VI. Conclusion

In this paper we advanced our STAR system by developing a new 3D imaging laparoscope and a new suturing tool for enabling robotic laparoscopic suturing. Moreover, we proposed a suture planning technique based on the 3D images obtained from the new imaging system and implemented it in the robot control system. Our test results indicate that with our enhanced STAR system we can achieve a superior consistency in suture spacing and also minimize the suture repositioning compared to manual laparoscopic methods. Future work will include improving the suturing speed, while maintaining the consistency and accuracy of the suturing, as well as anastomosis tests on animal cadavers and in-vivo.

Acknowledgments

Research reported in this paper was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under award number 1R01EB020610. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- [1].Noel JK, Fahrbach K, Estok R, Cella C, Frame D, Linz H, Cima RR, Dozois EJ, and Senagore AJ, “Minimally invasive colorectal resection outcomes: short-term comparison with open procedures,” J. of the American College of Surgeons, vol. 204, no. 2, pp. 291–307, 2007. [DOI] [PubMed] [Google Scholar]

- [2].Fullum TM, Ladapo JA, Borah BJ, and Gunnarsson CL, “Comparison of the clinical and economic outcomes between open and minimally invasive appendectomy and colectomy: evidence from a large commercial payer database,” Surgical endoscopy, vol. 24, no. 4, pp. 845–853, 2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Finkelstein J, Eckersberger E, Sadri H, Taneja SS, Lepor H, and Djavan B, “Open versus laparoscopic versus robot-assisted laparoscopic prostatectomy: the European and US experience,” Reviews in urology, vol. 12, no. 1, p. 35, 2010. [PMC free article] [PubMed] [Google Scholar]

- [4] Mercante G, Ruscito P, Pellini R, Cristalli G, and Spriano G, “Transoral robotic surgery (TORS) for tongue base tumours,” Acta otorhinolaryngologica italica, vol. 33, no. 4, pp. 230–235, 2013.

- [5] Kang CM, Chi HS, Kim JY, Choi GH, Kim KS, Choi JS, Lee WJ, and Kim BR, “A case of robot-assisted excision of choledochal cyst, hepaticojejunostomy, and extracorporeal Roux-en-y anastomosis using the da Vinci surgical system,” Surgical Laparoscopy, Endoscopy & Percutaneous Techniques, vol. 17, no. 6, pp. 538–541, December 2007.

- [6] Stephan D, Sälzer H, and Willeke F, “First experiences with the new Senhance telerobotic system in visceral surgery,” Visceral medicine, vol. 34, no. 1, pp. 31–36, 2018.

- [7] Salman M, Bell T, Martin J, Bhuva K, Grim R, and Ahuja V, “Use, cost, complications, and mortality of robotic versus nonrobotic general surgery procedures based on a nationwide database,” The American surgeon, vol. 79, no. 6, pp. 553–560, 2013.

- [8] Leonard S, Shademan A, Kim Y, Krieger A, and Kim PC, “Smart Tissue Anastomosis Robot (STAR): Accuracy evaluation for supervisory suturing using near-infrared fluorescent markers,” in Int. Conf. on Robotics and Automation (ICRA), 2014, pp. 1889–1894.

- [9] Leonard S, Wu KL, Kim Y, Krieger A, and Kim PC, “Smart tissue anastomosis robot (STAR): A vision-guided robotics system for laparoscopic suturing,” IEEE Trans. on Biomedical Engineering, vol. 61, no. 4, pp. 1305–1317, 2014.

- [10] Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, and Kim PCW, “Supervised autonomous robotic soft tissue surgery,” Science Translational Medicine, vol. 8, no. 337, pp. 337ra64–337ra64, May 2016.

- [11] Honda M, Kuriyama A, Noma H, Nunobe S, and Furukawa TA, “Hand-sewn versus mechanical esophagogastric anastomosis after esophagectomy: a systematic review and meta-analysis,” Annals of surgery, vol. 257, no. 2, pp. 238–248, 2013.

- [12] Wu TT and Qu JY, “Optical imaging for medical diagnosis based on active stereo vision and motion tracking,” Optics express, vol. 15, no. 16, pp. 10421–10426, 2007.

- [13] Hennersperger C, Fuerst B, Virga S, Zettinig O, Frisch B, Neff T, and Navab N, “Towards MRI-based autonomous robotic US acquisitions: a first feasibility study,” IEEE transactions on medical imaging, vol. 36, no. 2, pp. 538–548, 2017.

- [14] Le HN, Decker R, Krieger A, and Kang JU, “Experimental assessment of a 3-D plenoptic endoscopic imaging system,” Chinese Optics Letters, vol. 15, no. 5, p. 051701, 2017.

- [15] Le HN, Nguyen H, Wang Z, Opfermann J, Leonard S, Krieger A, and Kang JU, “Demonstration of a laparoscopic structured-illumination three-dimensional imaging system for guiding reconstructive bowel anastomosis,” J. of Biomedical Optics, vol. 23, no. 5, p. 056009, 2018.

- [16] Le HN, Opfermann JD, Kam M, Raghunathan S, Saeidi H, Leonard S, Kang JU, and Krieger A, “Semi-autonomous laparoscopic robotic electro-surgery with a novel 3d endoscope,” in 2018 IEEE Int. Conf. on Robotics and Automation (ICRA). IEEE, 2018, pp. 6637–6644.

- [17] Le HN, Nguyen H, Wang Z, Opfermann J, Leonard S, Krieger A, and Kang J, “An endoscopic 3d structured illumination imaging system for robotic anastomosis surgery (conference presentation),” in Advanced Biomedical and Clinical Diagnostic and Surgical Guidance Systems XVI, vol. 10484, 2018, p. 104840C.

- [18] Lau W-Y, Leung K-L, Kwong K-H, Davey IC, Robertson C, Dawson JJ, Chung SC, and Li AK, “A randomized study comparing laparoscopic versus open repair of perforated peptic ulcer using suture or sutureless technique,” Annals of Surgery, vol. 224, no. 2, pp. 131–138, 1996.

- [19] Dion Y-M, Hartung O, Gracia C, and Doillon C, “Experimental laparoscopic aortobifemoral bypass with end-to-side aortic anastomosis,” Surgical laparoscopy & endoscopy, vol. 9, no. 1, pp. 35–38, 1999.

- [20] Hoznek A, Salomon L, Rabii R, Slama M-RB, Cicco A, Antiphon P, and Abbou C-C, “Techniques in endourology vesicourethral anastomosis during laparoscopic radical prostatectomy: The running suture method,” J. of endourology, vol. 14, no. 9, pp. 749–753, 2000.

- [21] Adams JB, Schulam PG, Moore RG, Partin AW, and Kavoussi LR, “New laparoscopic suturing device: initial clinical experience,” Urology, vol. 46, no. 2, pp. 242–245, 1995.

- [22] Hashemi L, Hart S, Craig S, Geraci D, and Shatskih E, “Effect of an automated suturing device on cost and operating room time in laparoscopic total abdominal hysterectomies,” Journal of Minimally Invasive Gynecology, vol. 18, no. 6, pp. S96–S97, 2011.

- [23] Harold KL, Matthews BD, Backus CL, Pratt BL, and Heniford BT, “Prospective randomized evaluation of surgical resident proficiency with laparoscopic suturing after course instruction,” Surgical Endoscopy And Other Interventional Techniques, vol. 16, no. 12, pp. 1729–1731, 2002.

- [24] Krieger A, Opfermann J, and Kim PC, “Development and Feasibility of a Robotic Laparoscopic Clipping Tool for Wound Closure and Anastomosis,” J. of medical devices, vol. 12, no. 1, pp. 0 110 051–110 056, 2018.

- [25] Hagn U, Konietschke R, Tobergte A, Nickl M, Jörg S, Kübler B, Passig G, Gröger M, Fröhlich F, Seibold U, Le-Tien L, Albu-Schäffer A, Nothhelfer A, Hacker F, Grebenstein M, and Hirzinger G, “DLR MiroSurge: a versatile system for research in endoscopic telesurgery,” Int. J. of Computer Assisted Radiology and Surgery, vol. 5, no. 2, pp. 183–193, March 2010.

- [26] Lum MJH, Friedman DCW, Sankaranarayanan G, King H, Fodero K, Leuschke R, Hannaford B, Rosen J, and Sinanan MN, “The RAVEN: Design and Validation of a Telesurgery System,” The Int. J. of Robotics Research, vol. 28, no. 9, pp. 1183–1197, September 2009.

- [27] Kapoor A, Li M, and Taylor RH, “Spatial motion constraints for robot assisted suturing using virtual fixtures,” in Int. Conf. on Medical Image Computing and Computer-Assisted Intervention. Springer, 2005, pp. 89–96.

- [28] Wang H, Wang S, Ding J, and Luo H, “Suturing and tying knots assisted by a surgical robot system in laryngeal MIS,” Robotica, vol. 28, no. 2, pp. 241–252, 2010.

- [29] Göpel T, Härtl F, Schneider A, Buss M, and Feussner H, “Automation of a suturing device for minimally invasive surgery,” Surgical endoscopy, vol. 25, no. 7, pp. 2100–2104, 2011.

- [30] Jackson RC, Yuan R, Chow D, Newman WS, and Çavuşoğlu MC, “Real-Time Visual Tracking of Dynamic Surgical Suture Threads,” IEEE Trans. on Automation Science and Engineering, vol. 15, no. 3, pp. 1078–1090, 2018.

- [31] Zhong F, Navarro-Alarcon D, Wang Z, Liu Y, Zhang T, Yip HM, and Wang H, “Adaptive 3d pose computation of suturing needle using constraints from static monocular image feedback,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October 2016, pp. 5521–5526.

- [32] Liu T and Cavusoglu MC, “Needle Grasp and Entry Port Selection for Automatic Execution of Suturing Tasks in Robotic Minimally Invasive Surgery,” IEEE Transactions on Automation Science and Engineering, vol. 13, no. 2, pp. 552–563, April 2016.

- [33] Jackson RC, Desai V, Castillo JP, and Çavuşoğlu MC, “Needle-tissue interaction force state estimation for robotic surgical suturing,” in 2016 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), October 2016, pp. 3659–3664.

- [34] “Automated pick-up of suturing needles for robotic surgical assistance,” arXiv:1804.03141 [cs], April 2018. [Online]. Available: http://arxiv.org/abs/1804.03141

- [35] Pedram SA, Ferguson P, Ma J, Dutson E, and Rosen J, “Autonomous suturing via surgical robot: An algorithm for optimal selection of needle diameter, shape, and path,” in 2017 IEEE Int. Conf. on Robotics and Automation (ICRA), May 2017, pp. 2391–2398.

- [36] Wang Z, Du H, Park S, and Xie H, “Three-dimensional shape measurement with a fast and accurate approach,” Applied optics, vol. 48, no. 6, pp. 1052–1061, 2009.

- [37] Arun KS, Huang TS, and Blostein SD, “Least-squares fitting of two 3-d point sets,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. PAMI-9, no. 5, pp. 698–700, September 1987.

- [38] Cengiz Y, Blomquist P, and Israelsson LA, “Small tissue bites and wound strength: an experimental study,” Archives of Surgery, vol. 136, no. 3, pp. 272–275, 2001.

- [39] Millbourn D, Cengiz Y, and Israelsson LA, “Effect of stitch length on wound complications after closure of midline incisions: a randomized controlled trial,” Archives of Surgery, vol. 144, no. 11, pp. 1056–1059, 2009.

- [40] Reflexxes motion libraries for online trajectory generation. [Online]. Available: http://www.reflexxes.ws/

- [41] Smits R, “KDL: Kinematics and Dynamics Library,” http://www.orocos.org/kdl.

- [42] Schreiber G, Stemmer A, and Bischoff R, “The fast research interface for the kuka lightweight robot,” in IEEE Workshop on Innovative Robot Control Architectures for Demanding (Research) Applications How to Modify and Enhance Commercial Controllers (ICRA 2010). Citeseer, 2010, pp. 15–21.

- [43] Soetens P, “RTT: Real-Time Toolkit,” http://www.orocos.org/rtt.