Abstract

COVID-19 is the present-day pandemic around the globe. The WHO has estimated that approximately 15% of the world's population may have been infected with the coronavirus, with a large share of the population on the verge of being infected. The chain of transmission is difficult to break because asymptomatic patients, and not only seriously ill ones, can spread the infection. COVID-19 shares many symptoms with SARS-D; however, the symptoms can worsen depending on the patient's immunity. Identifying infected patients, even those without symptoms, is therefore necessary to break the chain of transmission. In this paper, a comparison table reports the accuracy of deep learning architectures trained with different optimizers and different learning rates. Different learning rates were tried in order to reduce overfitting. We further propose classifying COVID-19 images using an ensemble of 2-layered Convolutional Neural Networks with transfer learning, which required less time for classification and attained an accuracy of 90.45%.

Keywords: COVID-19, CNN, Deep learning, Optimizers, Transfer learning

1. Introduction

COVID-19 has been a global health emergency: it has spread across the globe with more than 45 million confirmed cases and 1.2 million deaths, and the vast majority of the world remains at risk. Cases have been rising steeply again with the onset of winter in the northern hemisphere. During this pandemic, prognostic predictions are required to improve and manage patient care. Because COVID-19 is caused by a virus, antibiotics cannot treat it, and the infection becomes more complicated when the patient's immunity is low. Large public datasets of more typical chest X-rays exist from the NIH [Wang 2017], Spain [Bustos 2019], Stanford [Irvin 2019], MIT [Johnson 2019], and Indiana University [Demner-Fushman 2016]; however, no large public datasets have been available for computational analysis of COVID-19. Most diagnoses have relied on the Polymerase Chain Reaction (PCR) test or on Computed Tomography (CT) images. Since the advent of machine learning algorithms, decision trees have been used for intelligent decision making in different walks of life [1], [2]. Similarly, better software tools can help doctors with their analysis and provide a second opinion that confirms the radiologist's diagnosis, so that treatment procedures can be decided and the patient survival rate improved [3]. If datasets of COVID-19 images containing only the important features become available, models with higher prediction accuracy can be developed to differentiate between the different types of viral infection. The major challenge for this problem is the unavailability of a large dataset of images covering different severities of the infection.
In the present paper, we have used the COVIDGR 1.0 dataset, which consists of images of patients tested for COVID-19, with an equal number of images of positive and negative patients. Another major issue is that COVID-19 shares many symptoms with other flu infections. Because it resembles other lung infections, classifying it into the correct class is a major challenge.

In this paper, we propose a deep learning framework consisting of an ensemble of 2-layered CNNs that classifies COVID-19 images using transfer learning. The paper is organized as follows. Section 2 reviews the literature on COVID-19 and the work carried out on the data by other authors. Section 3 discusses the deep learning architectures that are compared and proposed. Section 4 describes the methodology of the classifiers, with an overview of the architecture of the proposed approach. Section 5 presents the results and a comparison with other classifiers, and Section 6 concludes with the open research challenges.

2. Literature review

Owing to the severe upsurge of COVID-19 infections, a great deal of research is under way on various aspects of the disease, ranging from comparison of the COVID-19 genome with the genomes of malaria, SARS, etc., to the effect of geographical factors on the infection, to image analysis of CT scans and X-rays of positive and negative patients. Over the last decade, image processing has proved to be an important tool for classifying images of cancer and other diseases for diagnostic purposes. The advancement of machine learning has further made image analysis easier and more accurate. A large number of studies are presently analysing CT scans and X-rays of the lungs, with improved classification results that can guide the course of treatment and increase the survival rate of patients. Advances in the computing power of systems and GPUs have made such complicated tasks possible.

The proposed scheme helps not only to improve image classification but also to analyse images with better symptomatic accuracy. Further, we present a comparison of four state-of-the-art predesigned networks (VGG-16, ResNet-50, InceptionV3, and EfficientNet) alongside transfer learning implemented on the CNN. All these networks were fine-tuned with transfer learning, and multiple experiments were conducted on the acquired datasets using multiple optimizers and learning rates. Recent studies on CT and X-ray images of COVID-19 patients reported that patients present abnormalities in chest CT images, most with bilateral involvement. Bilateral multiple lobular and subsegmental areas of consolidation constitute the typical findings in chest CT images of intensive care unit (ICU) patients on admission. In comparison, non-ICU patients show bilateral ground-glass opacity and subsegmental areas of consolidation in their chest CT images. In these patients, later chest CT images display bilateral ground-glass opacity with resolved consolidation [4], [5], [6]. Traditional machine learning algorithms cannot readily be used to detect whether a patient's CXR images show an infection, since the input has a fixed dimension. Hence, we have used deep learning networks for image classification.

3. Deep learning architectures

Deep learning architectures have been used for computationally heavy tasks and are able to produce their output with reduced time complexity. One of the earliest such networks is the Convolutional Neural Network (CNN), a class of deep neural networks that can classify images into different classes. It reduces the preprocessing needed for the input data by assigning weights to the most important features as the data moves deeper into the layers. The CNN is based on the multilayer perceptron and uses a large number of neurons in its layers. CNNs have been used successfully for image classification [7].

The network ingests images as three separate color layers stacked on one another. When images are fed into the CNN, they are expressed as multidimensional matrices. A square patch of pixels has a definite pattern, and filters are used to extract these patterns. Activation maps are then used to describe the steps of filtering the images [7], [8]. The images are augmented by slicing and rotating them at 15°, 30°, and 45°, and then rotating and flipping them by 90°, 180°, and 270°. Further, a random Gaussian variable was added to the pixel values so that noise was applied to each image showing the infection. If an input image showed no infection in its pixels, it was removed; if infection was present, the image was stored.
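As a rough illustration of the augmentation steps described above, the sketch below applies the 90°, 180°, and 270° rotations, a horizontal flip, and additive Gaussian noise to a tiny grayscale image stored as nested lists. It uses only the Python standard library. The function names, the noise standard deviation, and the clipping range of 0 to 255 are assumptions made for illustration and are not taken from the original pipeline. Rotations by 15°, 30°, and 45° require interpolation and are left out of this sketch.

```python
import random


def rotate90(image):
    """Rotate a 2-D image (a list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def flip_horizontal(image):
    """Mirror each row of the image left to right."""
    return [row[::-1] for row in image]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise to every pixel, clipped to [0, 255]."""
    return [
        [min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
        for row in image
    ]


def augment(image, sigma=5.0, seed=0):
    """Return five variants: three rotations, one flip, one noisy copy.

    sigma and seed are illustrative defaults, not values from the paper.
    """
    rng = random.Random(seed)
    variants = []
    rotated = image
    for _ in range(3):  # successive 90-degree turns: 90, 180, 270
        rotated = rotate90(rotated)
        variants.append(rotated)
    variants.append(flip_horizontal(image))
    variants.append(add_gaussian_noise(image, sigma, rng))
    return variants


if __name__ == "__main__":
    for variant in augment([[1, 2], [3, 4]]):
        print(variant)
```

Each call turns one input image into five additional training samples, which is the sense in which augmentation scales up a small dataset.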

The standard way to model a neuron is given by the following equation:

| (1) |

whose processing is steady [9]. Thus, the ReLU function, which is a “non-saturating nonlinearity”, is used; its equation is:

$$f(x) = \max(0, x) \tag{2}$$
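The ReLU function referred to above has the standard definition f(x) = max(0, x). The short sketch below implements it and contrasts it with tanh, which is used here only as an assumed example of a saturating activation. The function name `relu` is illustrative.

```python
import math


def relu(x):
    """Rectified linear unit: passes positive inputs, zeroes out the rest."""
    return max(0.0, x)


if __name__ == "__main__":
    for x in (-3.0, 0.0, 2.5, 50.0):
        # tanh flattens out near 1 for large inputs (it saturates),
        # whereas ReLU keeps growing linearly with x.
        print(x, relu(x), math.tanh(x))
```

Because ReLU does not flatten out for large positive inputs, its gradient there stays at 1, which is the property the term “non-saturating” refers to.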

VGG-16: This deep learning architecture consists of 13 convolutional layers and 3 fully connected layers. The final output layer covers 1000 image classes for input images of 224 × 224. Not every convolutional layer is followed by a max-pooling layer [10]. The activation function used in the hidden layers is ReLU.
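The layer counts above can be connected to the roughly 138 million tunable parameters listed for VGG-16 in Table 1. The sketch below counts weights and biases layer by layer. It assumes the standard VGG-16 configuration (3 × 3 kernels, the usual channel widths, and five pooling stages that reduce 224 × 224 to 7 × 7), none of which is spelled out in the text; the constant and function names are illustrative.

```python
# (input channels, output channels) for the 13 convolutional layers,
# assuming the standard VGG-16 channel widths and 3x3 kernels.
CONV_LAYERS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

# (input units, output units) for the 3 fully connected layers.
# 7 * 7 * 512 is the flattened feature map after five 2x2 poolings of 224x224.
FC_LAYERS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def conv_params(c_in, c_out, kernel=3):
    """Weights plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + c_out


def fc_params(n_in, n_out):
    """Weights plus one bias per output unit."""
    return n_in * n_out + n_out


def vgg16_param_count():
    conv_total = sum(conv_params(c_in, c_out) for c_in, c_out in CONV_LAYERS)
    fc_total = sum(fc_params(n_in, n_out) for n_in, n_out in FC_LAYERS)
    return conv_total + fc_total


if __name__ == "__main__":
    print(f"{vgg16_param_count():,} parameters")
```

Most of the parameters sit in the first fully connected layer, which is why VGG-16 is much larger on disk than the deeper ResNet-50.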

ResNet: ResNet stands for Residual Network. It is an artificial neural network that builds on constructs known from pyramidal cells, using skip connections that jump over layers containing nonlinearities [11]. The skip connections mitigate the problem of vanishing gradients and also simplify the network [12], [13]. ResNet-50 has 50 layers, of which 48 are convolutional layers. It has a large number of tunable parameters and carries out 3.8 × 10⁹ operations.
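The idea of a skip connection can be sketched in a few lines: the block's output is its learned transformation plus the unchanged input. The example below is a simplified illustration on plain Python lists; the function name `residual_block` and the toy transformations are assumptions, and the activation that usually follows the addition in ResNet is omitted.

```python
def residual_block(x, transform):
    """Return transform(x) + x, element by element.

    `transform` stands in for the stacked layers that the skip
    connection jumps over; it must preserve the length of x.
    """
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("transform must preserve the input length")
    return [a + b for a, b in zip(fx, x)]


if __name__ == "__main__":
    x = [1.0, -2.0, 0.5]
    # If the layers learn nothing (output zero), the block is the identity.
    print(residual_block(x, lambda v: [0.0 for _ in v]))
    # Otherwise the layers only need to model a correction to x.
    print(residual_block(x, lambda v: [0.1 * a for a in v]))
```

Since the input is always added back, the gradient has a direct path through the addition, which is how skip connections help with vanishing gradients.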

Inception V3: Inception is made up of many building blocks consisting of convolutions, average pooling, dropouts, and fully connected layers, with the loss computed via softmax [14], [15]. The input images must be resized to 299 × 299 × 3. To improve classification accuracy, the training samples are passed through the network repeatedly; each such pass is termed a step of an epoch. RMSProp is considered one of the best default optimizers; it uses decay and momentum variables to achieve the best image classification accuracy. As the volume of the training dataset grows, the model needs to learn gradually from the training samples, so a suitable learning rate is helpful. Furthermore, a suitable learning rate helps to avoid overfitting.

EfficientNet: This deep learning architecture was proposed to scale all dimensions (depth, width, and input resolution) uniformly using a fixed coefficient. These coefficients are applied to a baseline network to improve its performance, raising classification accuracy and improving time complexity. The model has variants B0 to B7, with input resolution ranging from 224 to 600 [16], [17], [18].

4. Methodology

The proposed methodology consists of feature extraction using transfer learning techniques implemented on the ensemble 2-layered CNN. Classification starts with preprocessing the images, which must be converted to the same channel format so that the deep learning models can process the data. COVID-19 has become a drastic problem worldwide; however, very few patient images are available in the open public domain. To scale up the volume of images, data augmentation techniques such as rotation and pixel swapping can be used. In the second stage, the models were defined with their different overlapping layers [19]. These models were then configured with different training parameters, such as the number of epochs, the number of folds per epoch, the batch size, the optimizer, and the learning rate. Finally, the classification was carried out and the defined models were validated.

This section presents a series of statistical plots and tables (Table 1) together with the final results achieved by the deep learning architectures described in the above sections. We considered a total of five algorithms for accuracy assessment. The accuracy of the proposed approach (transfer learning on the CNN) was the highest (85.81%), with a specificity of 0.732 and a sensitivity of 0.812. The performance of the CNN was found to be comparable to state-of-the-art machine learning methods with only a little pre-processing and fine-tuning [20]. These results were obtained by running the network on the COVID-19 X-ray image dataset. The CNN architecture was also fine-tuned on our own dataset before the final results were computed.

Table 1.

Comparison of Different Deep Learning Architectures.

| Architecture | Input Size | Model Size | Layers | Tunable Parameters (Approx.) |
|---|---|---|---|---|
| VGG-16 | 224 × 224 × 3 | 528 MB | 16 | 138 Million |
| VGG-19 | 224 × 224 × 3 | 549 MB | 19 | 143 Million |
| ResNet-50 | 224 × 224 × 3 | 98 MB | 50 | 25 Million |
| Inception V3 | 299 × 299 × 3 | 92 MB | 159 | 23 Million |
| EfficientNet | 224 × 224 × 3 | 29 MB to 256 MB | 237–831 (dependent on the variant of the model) | 4 Million to 17 Million |

The CNN gives better results owing to better training of the model for the different classes, and its deep features provide much more information. Instead of relying on hand-crafted features, a CNN can extract features automatically. Deep models are therefore of great benefit for learning very complex tasks that we hardly understand. In addition, deep learning models can exploit non-linear relationships, which are easily implemented in a neural network model with various activation functions. Another advantage is that the model has several associated parameters; adjusting them, known as hyper-parameter tuning, improves its generalization capability. Among the problems associated with CNNs is that images are high-dimensional, which makes the process more time-consuming.

The input data is the dataset described above. It contains lung images of 426 positive and 426 negative patients. The models selected are VGG-16, ResNet-50, Inception V3, and EfficientNet. All four were trained with two different optimizers, RMSProp and Adam. In addition to changing the optimizer, two different learning rates were used for model training to avoid overfitting.

Each model was trained for 10 epochs with 50 steps per epoch. Features were extracted before training, during the image preprocessing phase. In each validation fold of 101 images, 40 images were randomly selected for the validation tests. The training and testing simulations for all the specified models were performed on Google Colab with 32 GB of RAM.
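The experimental setup described above amounts to a small grid of training runs. The sketch below enumerates that grid using the four pre-designed models, the two optimizers, the two learning rates reported in Table 2, and the stated 10 epochs of 50 steps each. The dictionary layout and the names `experiment_grid`, `EPOCHS`, and `STEPS_PER_EPOCH` are illustrative assumptions; no training is performed.

```python
from itertools import product

MODELS = ["VGG-16", "ResNet-50", "Inception V3", "EfficientNet"]
OPTIMIZERS = ["RMSProp", "Adam"]
LEARNING_RATES = [1e-4, 4e-4]  # the two rates listed in Table 2
EPOCHS = 10
STEPS_PER_EPOCH = 50


def experiment_grid():
    """Return one configuration per (model, optimizer, learning rate)."""
    return [
        {
            "model": model,
            "optimizer": optimizer,
            "learning_rate": lr,
            "epochs": EPOCHS,
            "steps_per_epoch": STEPS_PER_EPOCH,
        }
        for model, optimizer, lr in product(MODELS, OPTIMIZERS, LEARNING_RATES)
    ]


if __name__ == "__main__":
    grid = experiment_grid()
    print(len(grid), "training runs")
    for config in grid:
        total_steps = config["epochs"] * config["steps_per_epoch"]
        print(config["model"], config["optimizer"],
              config["learning_rate"], total_steps)
```

Under these settings each run performs 500 optimizer steps, so differences in accuracy between runs come from the architecture, optimizer, and learning rate rather than from training length.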

Since diagnosis from medical images is a sensitive matter, the results were validated recursively using different techniques. The major concern is false negatives, where not only the patient who falsely tested negative but also the people around that patient will be in grave danger. The statistical metrics used for validation are:

Accuracy = (TP + TN)/(TP + TN + FP + FN)

where TP denotes True Positive, TN denotes True Negative, FP denotes False Positive, and FN denotes False Negative.
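The validation metrics can be computed directly from the four confusion-matrix counts. The sketch below uses the standard definitions of accuracy, precision, sensitivity, and specificity; the function name and the example counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute standard binary-classification metrics from raw counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one sample is required")

    def ratio(numerator, denominator):
        # Undefined ratios (empty denominator) are reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "accuracy": (tp + tn) / total,          # correct / all samples
        "precision": ratio(tp, tp + fp),        # true positives / predicted positives
        "sensitivity": ratio(tp, tp + fn),      # true positives / actual positives
        "specificity": ratio(tn, tn + fp),      # true negatives / actual negatives
    }


if __name__ == "__main__":
    # Hypothetical counts chosen only to illustrate the calculation.
    metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
    for name, value in metrics.items():
        print(f"{name}: {value:.3f}")
```

Sensitivity is the metric most directly tied to the false-negative concern raised above, since every missed positive case lowers it.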

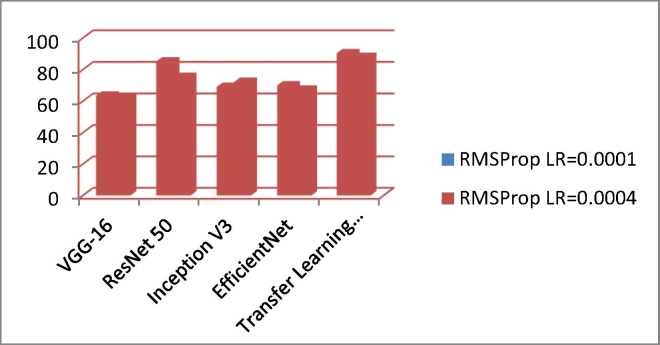

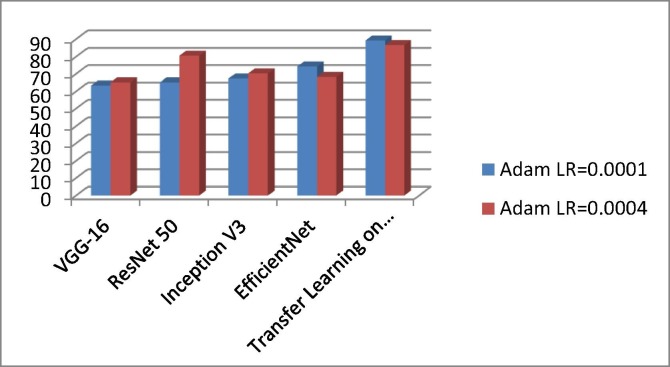

Accuracy measures the proportion of samples that have been classified correctly [21], [22]. Precision is the ratio of true positive cases to the total number of predicted positive observations. The CNN implemented with transfer learning achieved the highest accuracy of approximately 90% with the “RMSProp” optimizer at a learning rate of 1e-4. VGG-16 showed balanced results with both optimizers, reaching roughly 65% on the “Adam” and “RMSProp” optimizers with a learning rate of 4e-4. ResNet-50 achieved an accuracy of approximately 85% with a learning rate of 1e-4. It can be clearly observed that transfer learning applied on the CNN with the RMSProp optimizer and a learning rate of 1e-4 achieved the highest accuracy with reduced time complexity. Table 2 compares the accuracy of the different architectures over the different optimizers and learning rates (Fig. 1, Fig. 2).

Table 2.

Accuracy Assessment.

| Architecture | RMSProp, LR = 0.0001 | RMSProp, LR = 0.0004 | Adam, LR = 0.0001 | Adam, LR = 0.0004 |
|---|---|---|---|---|
| VGG-16 | 63.47 | 62.38 | 67.38 | 66.34 |
| ResNet 50 | 85.12 | 75.29 | 65.12 | 80.59 |
| Inception V3 | 69.14 | 72.14 | 67.33 | 70.30 |
| EfficientNet | 70.09 | 67.36 | 74.29 | 68.23 |
| Transfer Learning on CNN | 90.45 | 87.12 | 82.45 | 80.12 |
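To make the comparison in Table 2 easy to query, the sketch below stores the reported accuracies in a dictionary and looks up the best-performing configuration. The values are copied from Table 2; the data layout and the function name `best_configuration` are illustrative assumptions.

```python
# Accuracy (%) from Table 2, keyed by architecture and (optimizer, learning rate).
TABLE_2 = {
    "VGG-16": {("RMSProp", 1e-4): 63.47, ("RMSProp", 4e-4): 62.38,
               ("Adam", 1e-4): 67.38, ("Adam", 4e-4): 66.34},
    "ResNet 50": {("RMSProp", 1e-4): 85.12, ("RMSProp", 4e-4): 75.29,
                  ("Adam", 1e-4): 65.12, ("Adam", 4e-4): 80.59},
    "Inception V3": {("RMSProp", 1e-4): 69.14, ("RMSProp", 4e-4): 72.14,
                     ("Adam", 1e-4): 67.33, ("Adam", 4e-4): 70.30},
    "EfficientNet": {("RMSProp", 1e-4): 70.09, ("RMSProp", 4e-4): 67.36,
                     ("Adam", 1e-4): 74.29, ("Adam", 4e-4): 68.23},
    "Transfer Learning on CNN": {("RMSProp", 1e-4): 90.45, ("RMSProp", 4e-4): 87.12,
                                 ("Adam", 1e-4): 82.45, ("Adam", 4e-4): 80.12},
}


def best_configuration(table):
    """Return (architecture, optimizer, learning rate, accuracy) with top accuracy."""
    rows = (
        (arch, optimizer, lr, accuracy)
        for arch, results in table.items()
        for (optimizer, lr), accuracy in results.items()
    )
    return max(rows, key=lambda row: row[3])


if __name__ == "__main__":
    print(best_configuration(TABLE_2))
    # Best setting for each architecture taken on its own.
    for arch, results in TABLE_2.items():
        (optimizer, lr), accuracy = max(results.items(), key=lambda kv: kv[1])
        print(arch, optimizer, lr, accuracy)
```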

Fig. 1.

Accuracy of Different Architectures over the Optimizer = RMSProp with different learning rate.

Fig. 2.

Accuracy of Different Architectures over the Optimizer = Adam with different learning rate.

5. Discussions

Numerous research studies have been carried out since the beginning of the pandemic. In our paper, X-ray images of the patients were used. Zhang et al. [23] used the pre-trained ResNet-50 neural network to classify COVID-19 infected patients. Numerous studies have addressed the detection of COVID-19 symptoms via different techniques. Shan et al. [24] used VB-net for image segmentation of patients. A study similar to ours was conducted in [25], where 98% accuracy was achieved. However, those results could be prone to overfitting, as the authors did not use multiple optimizers or different learning rates and used only three transfer learning methods. Zhang et al. [26] performed X-ray image classification with the help of ResNet. Wang and Wong [27] adopted a convolutional neural network for the classification of X-ray images and achieved 83.5% accuracy. The well-known transfer learning model “Inception” was used by Wang et al. [28] to predict COVID. COVIDX-Net includes seven different deep convolutional neural network architectures, such as a modified Visual Geometry Group network (VGG19) and the second version of Google MobileNet [29]. Popular deep learning architectures such as the Recurrent Neural Network (RNN), Long Short-Term Memory (LSTM), Bidirectional LSTM (BiLSTM), Gated Recurrent Units (GRUs), and Variational AutoEncoder (VAE) have been compared for forecasting new and recovered cases [30].

6. Conclusion

In this paper, we have found that transfer learning improved the accuracy of image classification even though the dataset was limited. The proposed methodology pre-processed the COVID-19 X-ray images and fed them into five different deep learning models: VGG-16, ResNet-50, Inception V3, EfficientNet, and transfer learning on CNN. The models were evaluated on their accuracy in distinguishing COVID-19 positive and negative patients, using the optimizers RMSProp and Adam with learning rates of 0.0001 and 0.0004. Of all the models assessed, the best accuracy, 90.45%, was achieved by transfer learning on CNN with the RMSProp optimizer and a learning rate of 0.0001. The ResNet-50 model also achieved good overall accuracy. To improve the accuracy of ResNet-50, further transfer learning on ResNet-50 can be implemented over the COVID-19 X-ray images.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1. Quinlan J.R. Simplifying decision trees. Int. J. Man Mach. Stud. 1987;27(3):221–234.

- 2. J.R. Quinlan, Decision trees as probabilistic classifiers. In Proceedings of the Fourth International Workshop on Machine Learning. Morgan Kaufmann, January 1987, pp. 31-37.

- 3. Franceschi S., Levi F., Randimbison L., La Vecchia C. Site distribution of different types of skin cancer: new aetiological clues. Int. J. Cancer. 1996;67(1):24–28. doi: 10.1002/(SICI)1097-0215(19960703)67:1<24::AID-IJC6>3.0.CO;2-1.

- 4. Huang C., Wang Y., Li X., Ren L., Zhao J., Hu Y., Zhang L., Fan G., Xu J., Gu X., Cheng Z., Yu T., Xia J., Wei Y., Wu W., Xie X., Yin W., Li H., Liu M., Xiao Y., Gao H., Guo L., Xie J., Wang G., Jiang R., Gao Z., Jin Q., Wang J., Cao B. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. The Lancet. 2020;395(10223):497–506. doi: 10.1016/S0140-6736(20)30183-5.

- 5. Zhu, N., Zhang, D., Wang, W., Li, X., Yang, B., Song, J., ... & Tan, W. (2020). A novel coronavirus from patients with pneumonia in China, 2019. New England journal of medicine.

- 6. Zhou P., Yang X.L., Wang X.G., Hu B., Zhang L., Zhang W., Shi Z.L. A pneumonia outbreak associated with a new coronavirus of probable bat origin. Nature. 2020;579(7798):270–273. doi: 10.1038/s41586-020-2012-7.

- 7. Xu, Li, Jimmy SJ Ren, Ce Liu, and Jiaya Jia. “Deep convolutional neural network for image deconvolution.” In Advances in Neural Information Processing Systems, pp. 1790-1798. 2014.

- 8. Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems (pp. 1097-1105).

- 9. Kayalibay, B., Jensen, G., & van der Smagt, P. (2017). CNN-based segmentation of medical imaging data. arXiv preprint arXiv:1701.03056.

- 10. Sitaula C., Hossain M.B. Attention-based VGG-16 model for COVID-19 chest X-ray image classification. Applied Intelligence. 2020:1–14. doi: 10.1007/s10489-020-02055-x.

- 11. Farooq, M., & Hafeez, A. (2020). Covid-resnet: A deep learning framework for screening of covid19 from radiographs. arXiv preprint arXiv:2003.14395.

- 12. Aleem, M., Raj, R., & Khan, A. (2020). Comparative performance analysis of the resnet backbones of mask rcnn to segment the signs of covid-19 in chest ct scans. arXiv preprint arXiv:2008.09713.

- 13. Lu S., Wang S.-H., Zhang Y.-D. Detecting pathological brain via ResNet and randomized neural networks. Heliyon. 2020;6(12):e05625. doi: 10.1016/j.heliyon.2020.e05625.

- 14. Narin, A., Kaya, C., & Pamuk, Z. (2020). Automatic detection of coronavirus disease (covid-19) using x-ray images and deep convolutional neural networks. arXiv preprint arXiv:2003.1084.

- 15. R. D.D. Deep net model for detection of covid-19 using radiographs based on roc analysis. J. Innovative Image Processing (JIIP) 2020;2(3):135–140.

- 16. Marques G., Agarwal D., de la Torre Díez I. Automated medical diagnosis of COVID-19 through EfficientNet convolutional neural network. Appl. Soft Comput. 2020;96:106691. doi: 10.1016/j.asoc.2020.106691.

- 17. Chowdhury, N. K., Rahman, M., Rezoana, N., & Kabir, M. A. (2020). ECOVNet: An Ensemble of Deep Convolutional Neural Networks Based on EfficientNet to Detect COVID-19 From Chest X-rays. arXiv preprint arXiv:2009.11850.

- 18. Shin H.-C., Roth H.R., Gao M., Lu L.e., Xu Z., Nogues I., Yao J., Mollura D., Summers R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging. 2016;35(5):1285–1298. doi: 10.1109/TMI.2016.2528162.

- 19. Han D., Liu Q., Fan W. A new image classification method using CNN transfer learning and web data augmentation. Expert Syst. Appl. 2018;95:43–56.

- 20. Radenovic F., Tolias G., Chum O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2019;41(7):1655–1668. doi: 10.1109/TPAMI.2018.2846566.

- 21. Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM. 2017;60(6):84–90.

- 22. Ni Q., Sun Z.Y., Qi L., Chen W., Yang Y., Wang L., Zhang X., Yang L., Fang Y., Xing Z., Zhou Z., Yu Y., Lu G.M., Zhang L.J. A deep learning approach to characterize 2019 coronavirus disease (COVID-19) pneumonia in chest CT images. Eur. Radiol. 2020;30(12):6517–6527. doi: 10.1007/s00330-020-07044-9.

- 23. T. He, Z. Zhang, H. Zhang, Z. Zhang, J. Xie, M. Li, Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, (2019) pp. 558-567

- 24. Open Database of COVID-19 Cases with chest X-Ray or CT images, https://github.com/ieee8023/covid-chestxray-dataset.

- 25. Rawat W., Wang Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017;29(9):2352–2449. doi: 10.1162/NECO_a_00990.

- 26. Gu J., Wang Z., Kuen J., Ma L., Shahroudy A., Shuai B., Liu T., Wang X., Wang G., Cai J., Chen T. Recent advances in convolutional neural networks. Pattern Recogn. 2018;77:354–377.

- 27. He X., Lau E.H.Y., Wu P., Deng X., Wang J., Hao X., Lau Y.C., Wong J.Y., Guan Y., Tan X., Mo X., Chen Y., Liao B., Chen W., Hu F., Zhang Q., Zhong M., Wu Y., Zhao L., Zhang F., Cowling B.J., Li F., Leung G.M. Temporal dynamics in viral shedding and transmissibility of COVID-19. Nat. Med. 2020;26(5):672–675. doi: 10.1038/s41591-020-0869-5.

- 28. Wang C., Chen D., Hao L., Liu X., Zeng Y.u., Chen J., Zhang G. Pulmonary image classification based on inception-v3 transfer learning model. IEEE Access. 2019;7:146533–146541.

- 29. Hemdan, E. E. D., Shouman, M. A., & Karar, M. E. (2020). Covidx-net: A framework of deep learning classifiers to diagnose covid-19 in x-ray images. arXiv preprint arXiv:2003.11055.

- 30. Zeroual A., Harrou F., Dairi A., Sun Y. Deep learning methods for forecasting COVID-19 time-Series data: A Comparative study. Chaos, Solitons Fractals. 2020;140:110121. doi: 10.1016/j.chaos.2020.110121.

Further Reading

- 1. Bernheim A., Mei X., Huang M., Yang Y., Fayad Z.A., Zhang N., Diao K., Lin B., Zhu X., Li K., Li S., Shan H., Jacobi A., Chung M. “Chest CT findings in coronavirus disease-19 (COVID-19): relationship to duration of infection”. Radiology. 2020;295(3):200463. doi: 10.1148/radiol.2020200463.