Abstract

A common complaint among hearing aid users is difficulty understanding speech in complex hearing scenarios, that is, when speech is presented together with background noise or in situations with multiple speakers. Conventional hearing aids are already designed with these issues in mind, using beamforming to enhance only sound from a specific direction, but they are limited in solving these issues as they can only modulate incoming sound at the cochlear level. However, evidence exists that age-related hearing loss might partially arise later in the hearing process, as brain processes slow down and become less efficient. In this study, we tested whether it would be possible to improve the hearing process at the cortical level by improving neural tracking of speech. The speech envelopes of target sentences were transformed into an electrical signal and applied to elderly participants’ cortices using transcranial alternating current stimulation (tACS). We compared 2 different signal-to-noise ratios (SNRs) with 5 different delays between sound presentation and stimulation ranging from 50 ms to 150 ms, and the differences in effects between elderly normal hearing and elderly hearing impaired participants. When the task was performed at a high SNR, hearing impaired participants appeared to gain more from envelope-tACS compared to when the task was performed at a lower SNR. This was not the case for normal hearing participants. Furthermore, a post-hoc analysis of the different time-lags suggests that elderly participants performed significantly better at a stimulation time-lag of 150 ms when the task was presented at a high SNR. In this paper, we outline why these effects are worth exploring further, and what they tell us about the optimal tACS time-lag.

Keywords: EEG, tACS, hearing impairment, hearing aid, speech processing, speech envelope, entrainment, aging

Introduction

The average life span of the world population is continuously increasing, and with this increase comes a higher prevalence of hearing loss. In 2030, the prevalence of clinically relevant hearing loss is expected to have doubled; consequently, hearing loss will be 1 of the 7 most prevalent chronic diseases.1-3 This sensorineural hearing loss can, to some extent, be compensated for with hearing aids amplifying relevant speech signals and reducing irrelevant sounds (for reviews see Pichora-Fuller and Singh4 and Lunner et al.5). However, hearing aid users still often report hearing issues, specifically when trying to comprehend speech in situations with more background noise. In this study, we performed an experiment to test transcranial alternating current stimulation (tACS) as a method to improve speech comprehension in hearing impaired listeners, as an addition to conventional hearing aids, to potentially alleviate these issues. We investigated the optimal delay between auditory target and stimulation, as well as how hearing impairment and task difficulty affect the effectiveness of tACS.

Speech comprehension involves cortical entrainment to the rhythmic elements of the speech signal in the auditory cortex;6-9 a rhythmically presented stimulus leads to a phase and amplitude alignment of neural oscillations within the frequency of the presented rhythm.10,11 When speech is perceived, neural oscillations in the auditory cortex entrain most strongly to the slower fluctuations of the speech signal, that is, the speech envelope in the 4 to 8-Hz range. This entrainment to the envelope has been argued to track acoustic properties of the speech stream12,13 (but see also Zoefel and VanRullen14) or to be a mechanism of syllabic parsing7 (for reviews on the functional role of cortical entrainment to speech see Ding and Simon15 and Kösem and van Wassenhove16). Acoustic degradation of a speech signal, for example due to a noise masker, impedes entrainment to the speech envelope and consequently decreases intelligibility.17-19

In people suffering from sensorineural hearing loss, an inner degradation of auditory information takes place.5,20 As hearing loss is very common among older people, cognitive decline with age is an additional factor impeding comprehension. This comes into play especially when speech is acoustically degraded. Older people with and without hearing loss show a severe decline in speech comprehension when the signal is masked with other speech signals or with noise.21-23 This is explained by a decrease, compared to younger adults, in the cognitive abilities that compensate for acoustic degradation and support comprehension. Borch Petersen et al.24 showed that, next to hearing loss, separating speech from noise was also related to a decline in working memory. Hearing aids and cochlear implants can aid patients by amplifying sound at the cochlear level, but cannot influence mechanisms later in the hearing process. Any loss of auditory information beyond the cochlea will not be compensated for by conventional hearing aids. This hearing loss is a natural result of well-established changes that occur in the aging brain. As people get older, cognitive processes slow down.25 Because of this, the processing of speech is decreased in the elderly due to the distortion of temporal cues.26,27 Entrainment appears to be altered through aging; over the course of a lifetime, people’s preferred frequency decreases for motor tasks, and perception of rhythmic events changes.28 When comparing EEG data of participants listening to speech-paced rhythms, neural oscillations entrain less strongly in elderly participants compared to young ones.29 Petersen et al.30 conducted an EEG study in which older participants with variable hearing loss performed a speech comprehension task. Participants had to listen to 2 competing speech streams, attending to 1 and ignoring the other.
Cross-correlating the speech envelopes with the EEG response demonstrated speech envelope tracking of the attended stream, and that tracking improved with a better signal-to-noise ratio of the speech stimulus. In participants with hearing loss, however, this improvement in entrainment was reduced. These findings are in line with previous findings that cortical entrainment plays a large role in speech comprehension, and that reduced entrainment in the elderly is a factor in hearing loss. A hearing aid capable of restoring cortical entrainment to the speech envelope could therefore improve hearing significantly in ways that conventional hearing aids cannot. One possible method to achieve this would be transcranial alternating current stimulation (tACS). Using tACS, oscillating electrical currents are used to modulate the resting membrane potential of neurons.31-33 In this manner, tACS can either facilitate or inhibit neural firing depending on the phase of the stimulation. tACS has already been successfully used to improve auditory perception of speech,9,34,35 of syllables,36 of pure tones,37 and of click tracks.38,39 Using an electric current shaped like the speech envelope of the target sentence (ie, envelope-tACS) and timing the stimulation to coincide with the processing of the auditory stimulus, we can manipulate the local field potential of the cortex to better manifest cortical entrainment to the speech envelope. Envelope-tACS has been used on normal hearing participants,34,35,40-42 but whether hearing impaired users could benefit from it is so far unknown; as of now, there have been results of improving phoneme detection in younger and hearing impaired adults.43

In this study, we performed an experiment to see whether envelope-tACS could be used to improve hearing in hearing impaired participants. As the effectiveness of envelope-tACS depends on the stimulation being in phase with the processing of the auditory signal in the brain,34,35,40,44 we presented envelope-tACS at 5 different time delays between the onset of the auditory signal and the onset of the electrical stimulation. We recruited both normal hearing elderly participants and hearing impaired elderly participants as defined by the World Health Organization (WHO), and presented a hearing task with 2 different difficulty levels by varying the signal-to-noise ratio (SNR) of the target speech. In this way, we explored how envelope-tACS affected hearing impaired and normal hearing participants differently, as well as how different levels of background noise affected the effectiveness of envelope-tACS. If hearing impaired participants benefit from envelope-tACS, it could possibly be used as an addition to conventional hearing aids.

Power analysis

To estimate the number of participants required, a power analysis was performed. As our experimental design was based on the studies of Rufener et al.43 and Riecke et al.,34 we used these studies for our estimation. Rufener et al. had a comparable experimental design to ours, stimulating 25 young adults (mean age 24.1) and 20 older adults (mean age 69.8) with tACS. In that study, a repeated measures ANOVA revealed a significant interaction of stimulation × age group (F(1,43) = 9.4; P = .004; η2 = 0.18). Riecke et al.34 measured 22 young adults in an experiment that used different audio-to-stimulation time-lags of envelope-tACS. Using a one-way ANOVA, Riecke et al.34 found that speech benefit varied significantly across time-lags (F(5,105) = 2.7, P = .025, η2 = 0.05). As our experimental design was similar to that of Rufener et al.43 and used envelope-tACS similar to the stimulation used by Riecke et al.,34 we expected a small to medium effect size (η2 = 0.1), similar to the effects found in these studies. This meant we would require a minimum of 30 participants to detect such effects with a power (1-β) of 0.95. We therefore chose a sample size of 40 participants: 20 normal hearing and 20 hearing impaired participants.
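As an illustration of the effect sizes quoted above, the sketch below converts η2 to Cohen's f, the effect-size measure that many power-analysis tools take as input. The text does not state which tool or which effect-size measure was used for the estimate of 30 participants, so the conversion and the function name `eta_squared_to_cohens_f` are assumptions made for illustration only; the snippet does not reproduce the sample size calculation itself.

```python
import math


def eta_squared_to_cohens_f(eta_sq: float) -> float:
    """Convert an eta-squared effect size to Cohen's f (f = sqrt(eta^2 / (1 - eta^2)))."""
    if not 0.0 <= eta_sq < 1.0:
        raise ValueError("eta squared must lie in [0, 1)")
    return math.sqrt(eta_sq / (1.0 - eta_sq))


# Effect sizes as quoted in the text; the labels are descriptive only.
effect_sizes = {
    "Rufener et al. (stimulation x age group)": 0.18,
    "Riecke et al. (time-lag)": 0.05,
    "expected in this study": 0.10,
}

for label, eta_sq in effect_sizes.items():
    print(f"{label}: eta2 = {eta_sq:.2f}, Cohen's f = {eta_squared_to_cohens_f(eta_sq):.3f}")
```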

Participants

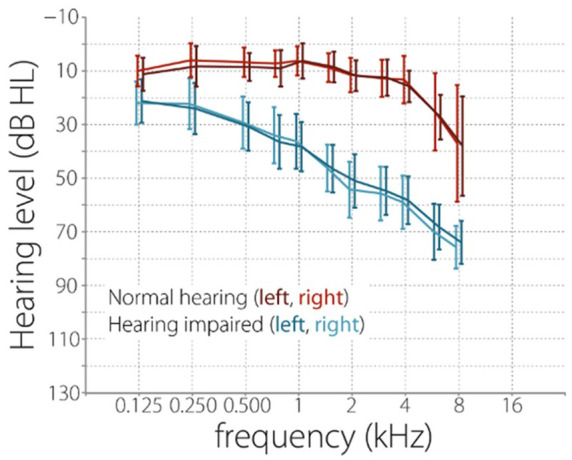

Twenty normal hearing older adults (10 female) and twenty hearing impaired older adults (10 female) participated in the study. Participants were recruited through the database of the Hörzentrum Oldenburg and pre-screened on their hearing abilities (Figure 1); hearing thresholds were obtained for different frequencies ranging from 125 Hz to 8000 Hz for both ears separately. Both groups were age matched and had a mean age of 66.5 years (age range 49 to 79). All participants reported having no neurological or psychiatric disorders, either currently or in the past. All participants gave written informed consent before the beginning of the experiment. Due to technical issues, 5 participants (2 normal hearing and 3 hearing impaired) had to be excluded from the final analysis. The remaining normal hearing participant group had a mean age of 68.8 years (age range 56 to 79) and the hearing impaired participant group had a mean age of 67.6 years (age range 49 to 78).

Figure 1.

Hearing levels of participants at different frequencies. Prior to partaking in the experiment, participants had their hearing capabilities assessed using air-conduction pure tone audiometry. Hearing thresholds were obtained for different frequencies ranging from 125 to 8000 Hz for both ears separately. Participants were rated as normal hearing or hearing impaired as defined by the WHO. Out of the 20 hearing impaired participants, 18 had been using a hearing aid for at least 1 year.

Materials and methods

Experimental procedure

Participants were seated upright in a recliner. They were familiarized with the OLSA (see section 2.3.2: Oldenburg sentence test) followed by the experimental task. During the experiment, participants completed 7 test lists of the OLSA that were randomly assigned to 5 tACS conditions and 2 control conditions (see section 2.3.4: Envelope-tACS signal). Sentences were presented via a single speaker (8020C Studio Monitor, Genelec Oy, Iisalmi, Finland) at a distance of 1.50 m from the center of the head. Completion of the 7 OLSA lists took approximately 70 minutes in total. Following the speech test, participants were asked to complete a questionnaire on possible adverse effects of tACS.45,46 The entire session including electrode montage and briefing of the participants took approximately 2.5 hours. Participants did not use hearing aids during the task.

Oldenburg sentence test

The Oldenburg sentence test47 is an adaptive test that can be used to obtain a speech reception threshold (SRT) in noise—the minimal SNR at which a participant can comprehend a certain percentage of speech. Grammatically correct German sentences consisting of 5 words were presented together with a noise masker with the same spectral characteristics as the sentences. Participants were asked to verbally repeat as many words as possible. The test material consisted of 45 test lists of 20 sentences each. Each participant first completed 2 training lists to be familiarized with the test. Subsequently, 14 test lists were presented for the experimental conditions, separated by self-paced breaks; in total, 2 test lists were presented per condition. Condition order was randomized for each participant.

Both the noise masker and sentences were presented at 65 dB SPL for the first trial of each test list. Sound stimuli were sampled at 44 100 Hz. Task difficulty was adapted using a staircase procedure, adjusting difficulty of the task depending on the participant’s performance. This was achieved by changing the sound level of the sentences, whilst the level of the noise masker was kept constant. The noise masker was presented from 0.5 seconds prior to sentence onset until 0.5 seconds after sentence offset. Two OLSA test lists were presented interleaved, and 2 SRTs were computed. Over the course of 2 times twenty trials, we calculated the threshold at which participants could understand 80% of the presented sentence in 1 condition and the threshold at which they could understand 20% in the other. The resulting SRT scores were the difference between the sound pressure level of the speech signal and the sound pressure level of the background noise. For example, a 20% SRT of −7 dB indicates that the participant was able to comprehend 20% of the words that were presented 7 dB lower than the masking noise. As the staircase procedure aimed to make the participant only comprehend 20% of the words (or 20% correct rate), this was considered the difficult condition. An 80% SRT of −7 dB indicates that the participant was able to comprehend 80% of the words that were presented 7 dB lower than the masking noise (80% correct rate). As the staircase procedure aimed to make the participant comprehend 80% of the words, this was considered the easy condition. The step size of the staircase task depended on the number of correctly repeated words, the results of previous trials in the block, and the targeted SRT level.47,48
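The relation between presentation levels and the SNR reported as SRT can be sketched as follows. This is a minimal illustration of the definition given above (speech level minus noise level, with the masker held at 65 dB SPL); the function names are hypothetical, and the adaptive step-size rule of the OLSA is deliberately not reproduced here.

```python
NOISE_LEVEL_DB_SPL = 65.0  # masker level, kept constant throughout a test list


def snr_db(speech_level_db: float, noise_level_db: float = NOISE_LEVEL_DB_SPL) -> float:
    """SNR in dB: level of the speech signal minus level of the noise masker."""
    return speech_level_db - noise_level_db


def speech_level_for_snr(target_snr_db: float, noise_level_db: float = NOISE_LEVEL_DB_SPL) -> float:
    """Speech level (dB SPL) needed to present a sentence at a given SNR."""
    return noise_level_db + target_snr_db


# First trial of each list: speech and masker both at 65 dB SPL, so the SNR is 0 dB.
print(snr_db(65.0))

# An SRT of -7 dB corresponds to speech presented 7 dB below the masker.
print(speech_level_for_snr(-7.0))
```

Because only the speech level is varied, a lower (more negative) SRT always means that the participant reached the target correct rate with quieter speech relative to the same masker.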

Transcranial alternating current stimulation

tACS was applied using a neuroConn multichannel stimulator (DC-STIMULATOR MC, neuroConn GmbH, Ilmenau, Germany) with 2 channels, 1 for each hemisphere. Round stimulation electrodes with a diameter of 2.5 cm were placed on the EEG positions FC5, P7, FC6, and P8 according to the international 10-20 system. These electrode locations were chosen to optimally stimulate the desired regions (Figure 2a).49-52 Impedances were kept below 10 kΩ by applying ABRALYT gel (Abrasive electrolyte-Gel, EASYCAP, Herrsching, Germany) and Ten20 Conductive paste (Weaver and Company, Aurora, USA). In total, participants received tACS for approximately 20 minutes, divided over the stimulation conditions.

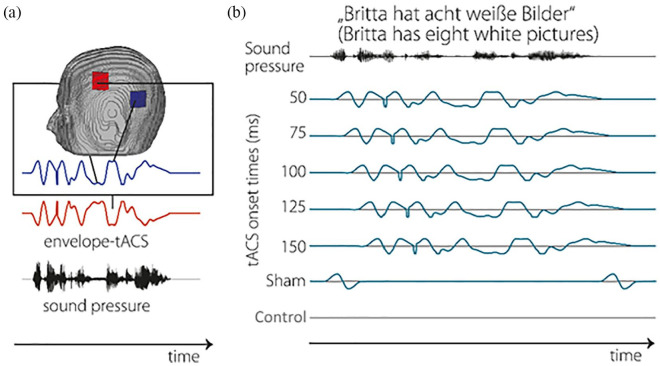

Figure 2.

(a) Envelope-tACS setup. Stimulation shape and locations of the stimulation electrodes; positions are mirrored on the other side of the head. The shape of the tACS waveform is derived from the speech envelope of a presented sentence. (b) Different tACS time-lags. Whilst the electrical stimulation of the cortex is virtually instantaneous, there is a delay between the presentation of the speech stimulus and the cortical processing of said stimulus; the exact length of this delay varies per participant. To compensate for this, different delays between the presentation of the auditory stimulus and the onset of electrical stimulation are used. Although most participants cannot differentiate whether they received electrical stimulation or not, 2 sham conditions were used; 1 with short peaks of stimulation before and after presentation of the sentence to invoke the feeling of being stimulated (sham), and 1 devoid of any electrical stimulation (control).

Envelope-tACS signal

Stimulation was presented at 1 mA peak to peak for all participants. The stimulation signal corresponded to the envelope of the concurrent speech signal (ie, envelope-tACS). The envelope-tACS was generated by extracting the envelope of each OLSA sentence. Here, the absolute values of the Hilbert transform of the audio signal were computed and then filtered with a second order Butterworth filter (10 Hz, low-pass). Next, the signal was demeaned to allow for fluctuations around zero. In order to avoid acoustic onset and offset artefacts, sounds started and ended with a 20-ms halved Hanning window starting and ending at 0 amplitude. This was done as large changes in current from 1 sampling point to the next at the start and end of the signal caused technical issues, as well as being more noticeable to participants when piloting the task.
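The processing chain described above (Hilbert envelope, 10-Hz second-order Butterworth low-pass, demeaning, 20-ms half-Hanning ramps, 1 mA peak to peak) could be sketched in Python with NumPy and SciPy as shown below. This is an illustrative reconstruction, not the code used in the study, which was run in MATLAB. Several details are assumptions: the function name `envelope_tacs_waveform`, the use of zero-phase filtering, applying the ramps within the signal rather than appending them, and the final rescaling step. The demo signal is synthetic.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def envelope_tacs_waveform(audio, fs, cutoff_hz=10.0, ramp_ms=20.0, peak_to_peak_ma=1.0):
    """Return a stimulation waveform (in mA) shaped like the envelope of `audio`."""
    audio = np.asarray(audio, dtype=float)
    n_ramp = int(round(ramp_ms * fs / 1000.0))
    if audio.size < 2 * n_ramp:
        raise ValueError("signal is shorter than the two ramps")

    # Envelope: magnitude of the analytic signal obtained via the Hilbert transform.
    envelope = np.abs(hilbert(audio))

    # Second-order Butterworth low-pass at 10 Hz.
    # Zero-phase (forward-backward) filtering is an assumption of this sketch.
    sos = butter(2, cutoff_hz, btype="low", fs=fs, output="sos")
    envelope = sosfiltfilt(sos, envelope)

    # Demean so that the waveform fluctuates around zero.
    envelope = envelope - envelope.mean()

    # Half-Hanning onset and offset ramps that start and end at zero amplitude.
    window = np.hanning(2 * n_ramp)
    envelope[:n_ramp] *= window[:n_ramp]
    envelope[-n_ramp:] *= window[n_ramp:]

    # Rescale to the requested peak-to-peak amplitude (assumed normalization).
    span = envelope.max() - envelope.min()
    if span > 0:
        envelope = envelope * (peak_to_peak_ma / span)
    return envelope


# Synthetic demo: a 220-Hz carrier, amplitude modulated at 4 Hz, 1 s long.
fs = 44100
t = np.arange(fs) / fs
demo_audio = (1.0 + 0.8 * np.sin(2 * np.pi * 4 * t)) * np.sin(2 * np.pi * 220 * t)
stim = envelope_tacs_waveform(demo_audio, fs)
print(stim.shape, stim.max() - stim.min())
```

The ramps force the first and last samples to zero, which is what prevents the large sample-to-sample current steps mentioned above.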

In total, each OLSA sentence in the stimulation conditions was presented with envelope-tACS with a duration of the length of the paired sentence, plus 2 times 20 ms of the Hanning window at signal start and end, for a total stimulation length of approximately 3 seconds per sentence. Two sham conditions were used; in 1 sham condition, no electric stimulation was applied (control condition). In the other sham condition, two 20-ms sinusoidal onsets and offsets (1 mA peak to peak) were presented to generate a sensation of tACS (sham condition). There was no stimulation during presentation of the sentences in either of these conditions. The purpose of these 2 sham conditions was to control for any placebo effects for participants who reported having felt the stimulation (Figure 2b). Due to individual differences in anatomy, the optimal delay for tACS to have the maximum effect on performance differs between participants. In a previous study, we found that envelope-tACS had the most benefit after a delay of 100 ms;35 however, the optimal tACS delay varied across participants. Therefore, envelope-tACS was assigned to 5 different delay conditions centered around 100 ms (Figure 2b). Envelope-tACS was initiated between 50 and 150 ms (25 ms step-size) after the onset of the speech signal. The timing of the tACS signal and the acoustic signal was jointly controlled via MATLAB (8.2.0.701; 64-bit, The Mathworks, Natick, USA) and SoundMexPro (HörTech GmbH, Germany), which delivered the stimulation waveform to the multichannel stimulator through a D/A converter (NIDAQ, National Instruments, TX, USA).
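The 5 delay conditions can be enumerated and converted to sample offsets as in the sketch below. It assumes, purely for illustration, that the stimulation waveform is output at the same 44 100 Hz rate as the audio; the text does not state the output rate of the D/A converter. The helper names are hypothetical.

```python
FS_HZ = 44_100  # audio sampling rate from the text; assumed here for the stimulation output too

# 50 to 150 ms in 25-ms steps, centered on 100 ms.
TIME_LAGS_MS = list(range(50, 151, 25))


def lag_in_samples(lag_ms: int, fs_hz: int = FS_HZ) -> int:
    """Number of whole samples corresponding to a lag in milliseconds (rounded down)."""
    return (lag_ms * fs_hz) // 1000


def delay_waveform(waveform, lag_ms: int, fs_hz: int = FS_HZ):
    """Prepend zeros so that the stimulation starts `lag_ms` after speech onset."""
    return [0.0] * lag_in_samples(lag_ms, fs_hz) + list(waveform)


for lag in TIME_LAGS_MS:
    print(f"{lag} ms -> {lag_in_samples(lag)} samples")
```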

Results

Data analysis was performed using Matlab (R2016a, MathWorks, Natick, MA). Statistical analysis was done using SPSS 24.0 (IBM Corp, Armonk, NY, USA). Prior to our main results, a control analysis was performed to ensure that the intended manipulations of our conditions were successful, namely, that the SRT20 task was more difficult than the SRT80 task, and that both tasks were more difficult for hearing impaired participants compared to normal hearing participants. We also wanted to test whether there was a significant difference between the control condition and the sham condition. A 3-way mixed repeated measures ANOVA using hearing impairment (normal hearing vs. hearing impaired) as a between-subjects factor and task difficulty (SRT20 vs. SRT80) and sham type (sham stimulation vs. no stimulation) as within-subjects factors revealed a significant effect of hearing impairment (F(1, 33) = 33.6, P < .001, η2 = 0.52) in the expected direction, that is, hearing impaired participants performed worse at the task than normal hearing participants, requiring a better (higher) SNR to perform at a 20% correct rate in the SRT20 condition and an 80% correct rate in the SRT80 condition, respectively, regardless of sham type. A significant effect of task difficulty (F(1, 33) = 841.9, P < .001, η2 = 0.96) confirmed that participants scored a lower SNR in the SRT20 condition than they scored in the SRT80 condition, meaning participants needed a better (higher) SNR to score at 80% correct rate than at 20% correct rate. Furthermore, there was a significant interaction between hearing impairment and task difficulty (F(1, 33) = 14.0, P = .001, η2 = 0.31), indicating there was a larger difference between the performance of hearing impaired participants compared to normal hearing participants in the SRT80 condition than there was in the SRT20 condition.
This implies that hearing impaired participants did indeed suffer more from the effects of background noise in the SRT80 condition than normal hearing participants, causing a larger difference in performance between the 2 groups.

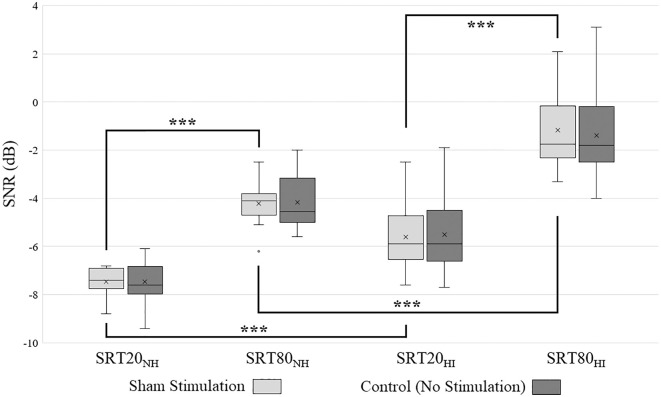

No significant effect of sham type was found (F(1, 33) = 0.15, P = .90, η2 < 0.001), nor was there an interaction between sham type and task difficulty (F(1, 33) = 0.26, P = .61, η2 = 0.008), nor between sham type and hearing impairment (F(1, 33) = 0.23, P = .64, η2 < 0.007). As 2 participants did not have the no stimulation control condition recorded due to technical issues, and to avoid unnecessary multiple comparisons, best time-lag tACS trials were only compared to the sham condition for the remainder of the analysis. Paired samples t-tests between the SRT20 conditions (normal hearing: M = −7.5, SD = 0.59, hearing impaired: M = −5.6, SD = 1.3) and SRT80 conditions (normal hearing: M = −4.2, SD = 0.81, hearing impaired: M = −1.2, SD = 1.6) showed significant effects of task difficulty in the expected direction (normal hearing: t(16) = −20.6, P < .001, hearing impaired: t(17) = −17.9, P < .001). Independent samples t-tests between normal hearing and hearing impaired participants showed significant effects for hearing impairment (SRT20: t(33) = 5.4, P < .001, SRT80: t(33) = 7.2, P < .001, Figure 3). This confirmed that hearing impaired participants had more difficulty with the task than normal hearing participants, and that the SRT20 task resulted in a lower SNR than the SRT80 task for both normal hearing and hearing impaired participants.

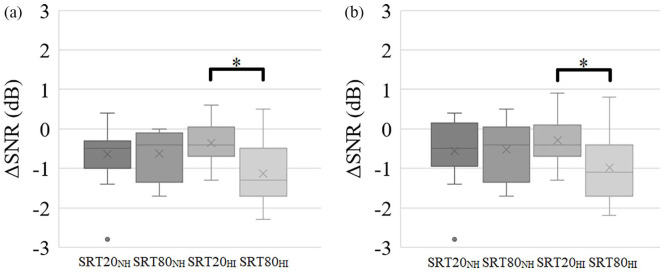

Figure 3.

Differences in SNR between sham condition and no stimulation control condition. To control for any placebo effects caused by potentially perceived stimulation, we compared performance between the sham and control condition. There was no significant difference between performance in the sham stimulation conditions (light grey) and the no stimulation control conditions (dark grey). To avoid multiple comparisons, the no stimulation control condition was therefore removed from further analysis. When comparing the sham stimulation conditions, normal hearing (NH) participants were significantly better at the task than hearing impaired (HI) participants, and participants arrived at a significantly lower SNR in the SRT20 condition compared to the SRT80 condition.

After establishing that the effects of different SRT levels and hearing abilities of the participants were significant in the expected direction, we could investigate the effects of envelope-tACS. To do this, we baseline corrected participants’ tACS-SRTs by subtracting the SRTs of the sham stimulation conditions. By baseline correcting in this manner, we removed the change in performance caused by task difficulty and hearing impairment. The resulting ΔSNR then provided information about the relative change in SRT in the tACS conditions compared to sham. Inspection of the data revealed that the performance of 1 hearing impaired participant deviated greatly from that of the other hearing impaired participants in the same conditions. Out of the ten stimulation conditions, they scored more than 2.5 standard deviations from the mean in 2 conditions and more than 3 standard deviations from the mean in 1 condition. Furthermore, their best performing stimulation SRT20 condition was also more than 3 standard deviations from the mean. We therefore removed this participant as an outlier (Figure 4).
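The baseline correction and the outlier screening described above can be sketched as follows. The SRT values are made up for illustration, the function names are hypothetical, and two details are assumptions not specified in the text: that the sample standard deviation was used and that the screened participant was included when computing the group mean and standard deviation.

```python
from statistics import mean, stdev


def delta_snr(tacs_srt_db: float, sham_srt_db: float) -> float:
    """Baseline-corrected score: SRT under tACS minus SRT under sham stimulation."""
    return tacs_srt_db - sham_srt_db


def z_scores(values):
    """Distance of each value from the group mean, in sample standard deviations."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


# Hypothetical SRT pairs (tACS, sham) in dB for one condition, one pair per participant.
srt_pairs = [(-2.0, -1.2), (-1.5, -1.4), (-0.9, -1.1), (-1.8, -1.0), (2.5, -1.3)]
deltas = [delta_snr(tacs, sham) for tacs, sham in srt_pairs]

# A negative delta means a lower (better) SRT under tACS than under sham.
for d, z in zip(deltas, z_scores(deltas)):
    print(f"delta SNR = {d:+.2f} dB, z = {z:+.2f}")
```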

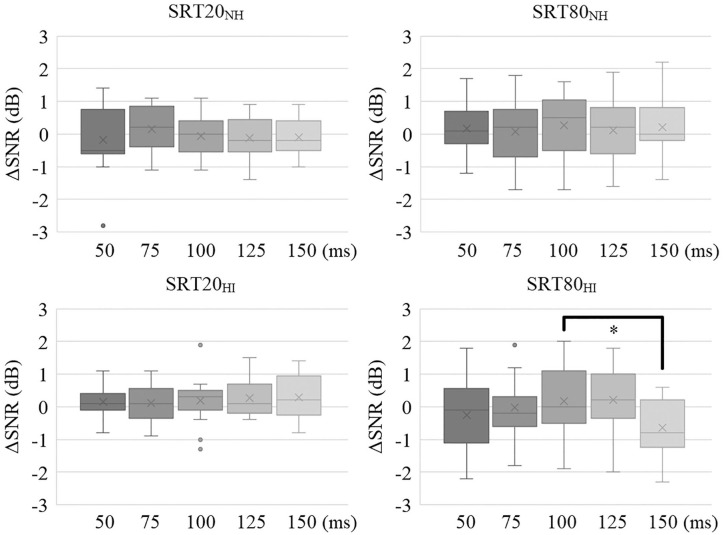

Figure 4.

Distribution of baseline-corrected scores (ΔSNR) for the 5 different time-lags, separate for the 2 different task difficulties (SRT20 and SRT80) and separate for normal hearing (NH) and hearing impaired (HI) participants. Black crosses represent the performances of the excluded participant. After resolving a 3-way interaction between task difficulty, hearing impairment and the 5 different time-lags, a significant difference between the 100 and 150 ms time-lags was revealed for the hearing impaired participants in the SRT80 condition.

To look at the general effect of envelope-tACS at different stimulation time-lags, a mixed model ANOVA with the within-subjects factors task difficulty (SRT80 and SRT20) and tACS time-lag (50, 75, 100, 125, 150 ms) and the between-subjects factor hearing impairment (normal hearing vs. hearing impaired) was performed. The effects of hearing impairment (F(1, 32) = 0.001, P = .98, η2 < 0.001), task difficulty (F(1, 32) = 0.098, P = .76, η2 = 0.003) and time-lag (F(4, 128) = 1.0, P = .39, η2 = 0.03) were not significant, nor were the 2-way interaction effects (task difficulty × hearing impairment: F(1, 32) = 3.9, P = .06, η2 = 0.11; time-lag × hearing impairment: F(4, 128) = 1.0, P = .40, η2 = 0.031; task difficulty × time-lag: F(4, 128) = 1.5, P = .21, η2 = 0.044). However, there was a significant 3-way interaction (F(4, 128) = 2.6, P = .04, η2 = 0.076).

To resolve the 3-way interaction, we performed a repeated measures 2-way ANOVA for the hearing impaired and normal hearing groups separately. Using the factors task difficulty (SRT80 and SRT20) and tACS time-lag (50, 75, 100, 125, 150 ms), this revealed no significant effects for the normal hearing group (task difficulty: F(1, 16) = 2.0, P = .17, η2 = 0.11, time-lag: F(4, 64) = 0.25, P = .91, η2 = 0.016, interaction: F(4,64) = 0.86, P = .50, η2 = 0.051), but a significant interaction effect for the hearing impaired group (task difficulty: F(1, 16) = 2.0, P = .18, η2 = 0.11, time-lag: F(4, 64) = 1.6, P = .18, η2 = 0.093, interaction: F(4,64) = 3.1, P = .022, η2 = 0.16). For the hearing impaired group, a repeated measures ANOVA over the 5 time-lags, run separately for the 2 task difficulty conditions, revealed no significant effect in the SRT20 conditions (F(4,64) = 0.40, P = .81, η2 = 0.024), but did show a significant effect in the SRT80 conditions (F(4,64) = 2.9, P = .027, η2 = 0.16). Finally, paired samples t-tests comparing the different time-lags of the SRT80 conditions of hearing impaired participants revealed a significant difference only between the 100 ms time-lag and 150 ms time-lag conditions (t(16) = 4.1, P = .009 Bonferroni corrected, d = 0.84, Figure 4).
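For readers unfamiliar with the correction, the sketch below shows how a Bonferroni adjustment scales with the number of pairwise time-lag comparisons. It assumes that all pairs of the 5 time-lags were compared, which the text does not state explicitly, and the uncorrected P values in the example are hypothetical rather than taken from the study.

```python
from itertools import combinations


def bonferroni(p_values):
    """Bonferroni-adjusted P values: each P is multiplied by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


time_lags_ms = [50, 75, 100, 125, 150]

# All unordered pairs of time-lags (assumed comparison family).
pairs = list(combinations(time_lags_ms, 2))
print(len(pairs), "pairwise comparisons")

# Hypothetical uncorrected P values, one per pair, for illustration only.
uncorrected = [0.40, 0.25, 0.60, 0.03, 0.70, 0.55, 0.08, 0.30, 0.001, 0.20]
for pair, p_adj in zip(pairs, bonferroni(uncorrected)):
    print(pair, f"adjusted P = {p_adj:.3f}")
```

With 10 comparisons, an uncorrected P value must fall below .005 to remain below .05 after adjustment.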

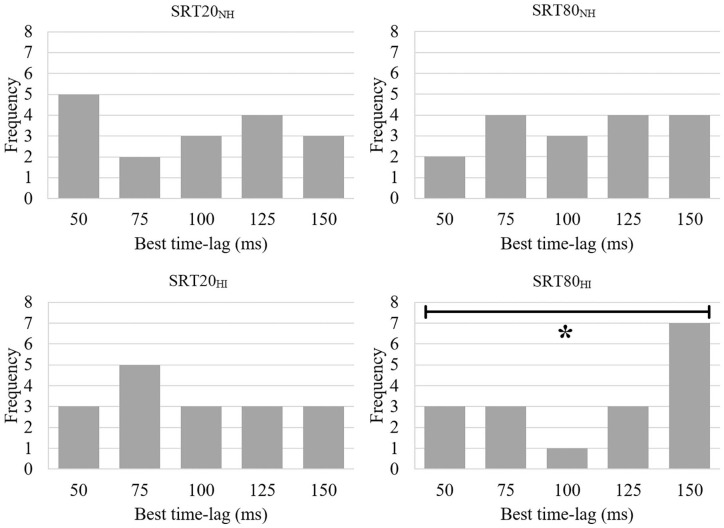

Previous studies have established that participants differ in optimal time-lag.34,35,41 Because of this, the best performing time-lag condition (ie, the stimulation time-lag at which the participant had the lowest ΔSNR) was selected, separately for the SRT80 and SRT20 conditions. Using a Kolmogorov-Smirnov test for uniformity, we investigated whether participants were more likely to have a specific time-lag as their optimum, separately for both conditions and for normal hearing and hearing impaired participants (Figure 5). The optimal time-lags were uniformly distributed in both conditions for normal hearing participants (SRT20: D = 1.2, P = .11, SRT80: D = 0.97, P = .30), as well as for hearing impaired participants in the SRT20 condition (D = 0.91, P = .38). However, the optimal time-lags of hearing impaired participants were not uniformly distributed in the SRT80 condition, with the 150 ms time-lag occurring most frequently (D = 1.7, P = .006).
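The selection of each participant's best time-lag, defined above as the lag with the lowest ΔSNR, can be sketched as follows. The ΔSNR values are hypothetical, the function name is an illustrative choice, and ties are resolved in favor of the shorter lag, which is an assumption. The uniformity test itself is not reproduced here.

```python
from collections import Counter


def best_time_lag(delta_snr_by_lag: dict) -> int:
    """Return the time-lag (ms) with the lowest baseline-corrected score (delta SNR)."""
    # min() keeps the first key on ties, so shorter lags win if the dict is ordered by lag.
    return min(delta_snr_by_lag, key=delta_snr_by_lag.get)


# Hypothetical delta SNR values (dB) per time-lag (ms), one dict per participant.
participants = [
    {50: -0.2, 75: 0.1, 100: 0.4, 125: -0.5, 150: -1.1},
    {50: 0.3, 75: -0.6, 100: 0.0, 125: -0.1, 150: -0.9},
    {50: -0.7, 75: -0.2, 100: 0.5, 125: 0.2, 150: -0.3},
]

optimal_lags = [best_time_lag(p) for p in participants]
print(optimal_lags)

# Frequency of each optimal lag; this is the distribution that is tested for uniformity.
print(Counter(optimal_lags))
```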

Figure 5.

Distribution of optimal time-lags, separate for hearing impairment (NH, normal hearing and HI, hearing impaired) and task difficulty (SRT20 and SRT80). Optimal time-lags were uniformly distributed except for SRT80HI.

A mixed model ANOVA with task difficulty (SRT80 and SRT20) as a within-subjects factor and hearing impairment (impaired hearing and normal hearing) as a between-subjects factor revealed a significant effect of task difficulty (F(1, 32) = 6.2, P = .018, η2 = 0.16) and a significant interaction effect (F(1, 32) = 6.6, P = .015, η2 = 0.17) but no significant effect of hearing impairment (F(1, 32) = 0.39, P = .53, η2 = 0.012). Paired samples t-tests comparing SRT20NH (M = −0.64, SD = 0.77) with SRT80NH (M = −0.63, SD = 0.63) and SRT20HI (M = −0.35, SD = 0.48) with SRT80HI (M = −1.1, SD = 0.78) revealed a significant effect of task difficulty for hearing impaired participants (t(16) = 3.2, P = .012 Bonferroni corrected, d = 1.2) but not for normal hearing participants (t(16) = −0.062, P = .95, d = 0.016, Figure 6a).

Figure 6.

(a) For each participant, their best performing time-lag out of the 5 time-lags was selected separately for the SRT20 and SRT80 conditions. Hearing impaired participants performed significantly better (ie, at a lower ΔSNR) in the SRT80 condition compared to their performance in the SRT20 condition. (b) As hearing impaired participants performed exceptionally well in the 150 ms time-lag of the SRT80 condition, several of them had their best time-lag at 150 ms in this condition. Therefore, it could be argued that the effect found of task difficulty was only caused by the effect in this 150 ms time-lag condition. However, after re-selecting participants’ best time-lags whilst excluding the 150 ms condition, the effect persisted.

Seeing as there were multiple hearing impaired participants that had their best time-lag at 150 ms for the SRT80 condition, our findings comparing the best time-lag conditions to each other were not statistically independent from the effect found for the 150 ms time-lag condition. Therefore, the effect of the best conditions could have been entirely dependent on the results in this 1 condition. We therefore repeated the analysis without the 150 ms time-lag to check whether these effects were independent from each other. A mixed model ANOVA with the within-subjects factors task difficulty (SRT80 and SRT20) and tACS time-lag (50, 75, 100, 125 ms) and the between-subjects factor hearing impairment (normal hearing vs. hearing impaired) revealed no significant main effects (task difficulty: F(1, 33) = 0.027, P = .87, η2 = 0.001; time-lag: F(3, 96) = 0.76, P = .52, η2 = 0.023; hearing impairment: F(1, 33) = 0.096, P = .76, η2 = 0.003), nor interaction effects (task difficulty × hearing impairment: F(1, 32) = 1.7, P = .20, η2 = 0.050; time-lag × hearing impairment: F(3, 96) = 0.69, P = .56, η2 = 0.021; task difficulty × time-lag: F(3, 96) = 0.58, P = .63, η2 = 0.018; task difficulty × time-lag × hearing impairment: F(3, 96) = 0.97, P = .41, η2 = 0.030).

Next, we repeated the analysis of the best time-lags, excluding the performances on the 150 ms time-lag condition. A mixed model ANOVA with task difficulty (SRT80 and SRT20) as the within-subjects factor and hearing impairment (impaired hearing and normal hearing) as a between-subjects factor revealed a significant effect of task difficulty (F(1, 32) = 4.2, P = .0497, η2 = 0.11) and a significant interaction effect (F(1, 32) = 5.3, P = .028, η2 = 0.14) but no significant effect of hearing impairment (F(1, 32) = 0.27, P = .61, η2 = 0.008). Paired samples t-tests comparing SRT20NH (M = −0.56, SD = 0.80) with SRT80NH (M = −0.52, SD = 0.76) and SRT20HI (M = −0.29, SD = 0.56) with SRT80HI (M = −0.99, SD = 0.81) revealed a significant effect of task difficulty for hearing impaired participants (t(16) = 2.8, P = .024 Bonferroni corrected, d = 1.0) but not for normal hearing participants (t(16) = −0.20, P = .84, d = 0.051, Figure 6b).

Discussion

The main goal of our study was to explore the potential for envelope-tACS to aid hearing impaired participants. For this purpose, we investigated how task difficulty and hearing impairment influenced the effects of envelope-tACS. As participants’ scores differed depending on task difficulty and hearing impairment, we baseline-corrected performance at the best time-lag to the sham condition, separately for each condition. This way, we compared the effects of envelope-tACS under different hearing conditions and hearing capabilities. Analysis revealed that hearing impaired participants gained more from envelope-tACS when the task used a high SNR (ie, SRT80) compared to the same participants in the low SNR condition (SRT20). When comparing the different stimulation time-lags, hearing impaired participants in the SRT80 condition performed better in the 150 ms time-lag condition, but after correction for multiple comparisons this difference was only significant compared to the 100 ms time-lag. To better separate the effect found for the best time-lag conditions from the effect found for the 150 ms time-lag condition, we then repeated the analysis whilst omitting the 150 ms time-lag conditions. This revealed that, even without any individual time-lag performing better than the others, the effect of the best time-lag conditions persisted. Both with and without the 150 ms time-lag conditions, hearing impaired participants scored a lower (ie, better) ΔSNR in the SRT80 condition compared to the SRT20 condition.
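
The baseline correction described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function name and the example thresholds are hypothetical, and it assumes that a lower threshold (in dB SNR) means better performance, so the best time-lag is the one with the lowest value.

```python
from typing import Dict, Tuple


def delta_snr_best_lag(srt_by_lag: Dict[int, float], srt_sham: float) -> Tuple[int, float]:
    """Return the best time-lag (ms) and its sham-corrected threshold (delta SNR, dB)."""
    # Lower thresholds are better, so the best lag minimises the threshold.
    best_lag = min(srt_by_lag, key=srt_by_lag.get)
    return best_lag, srt_by_lag[best_lag] - srt_sham


# Hypothetical thresholds in dB SNR for one participant in one SRT condition.
example = {50: -5.1, 75: -5.4, 100: -4.9, 125: -5.2, 150: -5.8}
best_lag, delta = delta_snr_best_lag(example, srt_sham=-5.0)
print(best_lag, round(delta, 2))
```

A negative ΔSNR then indicates that the participant needed a less favourable SNR at their best time-lag than during sham, that is, that they performed better.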

Effects of hearing impairment and task difficulty

The finding that hearing impaired participants benefited more from envelope-tACS in the SRT80 condition compared to the SRT20 condition could imply that hearing impaired participants could use envelope-tACS to compensate for speech envelope information lost in the auditory signal due to background noise. In the SRT20 condition, however, too much information is lost in the auditory signal to “reconstruct” with the expected subthreshold effects of envelope-tACS. It is a common complaint amongst hearing impaired patients to have problems with speech comprehension in everyday noisy situations.54-57 Studies on compensatory mechanisms in the cognitive processes of elderly consistently find a reduction in the ability to inhibit irrelevant information4,58 and an increase in working memory usage compared to younger participants;24,30,59-61 specifically, experiments with tasks similar to ours (ie, a co-located sounds speech in noise task) have demonstrated an effect of working memory capacity on performance for hearing impaired participants.62-64 Envelope-tACS might compensate for a loss of auditory signal in a similar manner as working memory recruitment does, with sub-threshold effects of tACS enhancing the envelope of the stimulus, but only if there is still enough signal left to enhance. If this is true, envelope-tACS has potential for solving hearing issues that conventional hearing aids cannot fix; more specifically, envelope-tACS could be used to alleviate hearing fatigue. Recruiting working memory for compensation demands more active hearing, making it mentally taxing. If envelope-tACS enhances the speech signal in the manner we hypothesized, it could enhance speech envelope tracking, reducing the amount of working memory required to compensate for signal loss and relieving hearing strain.
However, it should be noted that these benefits on relieving hearing strain are so far only hypothetical; although our results are in line with established models of hearing strain and listening fatigue, we had no objective measurement of the hearing strain experienced by participants depending on stimulation condition.

Optimal time-lag

Investigation of the different time-lags found an effect of the stimulation time-lag condition. Although individual differences in the optimal time-lag of envelope-tACS are well established,34,35,38,39,53,65 post-hoc analysis showed that performance in the 150 ms time-lag condition was significantly better for hearing impaired participants, but only in the SRT80 condition, and only when compared to the 100 ms time-lag condition. Although the repeated measures ANOVA over the 5 time-lags of the SRT80 condition for hearing impaired participants reported a large effect size (F(4,64) = 2.9, P = .027, η2 = 0.16) with no apparent outlier data, this effect is surprising and difficult to interpret.

Although envelope-tACS research has generally focused on the individual best time-lag, specific time-lags being better on average is not unheard of.34,40 The 150 ms delay has also been linked to speech tracking before,30,66-69 although phase resets related to incoming stimuli in general are often measured at 100 ms.70-72 This, however, does not explain why 150 ms was the best delay only for hearing impaired participants. One explanation is that only hearing impaired participants benefited enough from envelope-tACS for the preference for the 150 ms delay condition to reach significance against other sources of variation, such as between- and within-subject variance in performance. Another possibility is that the actual preferred time-lag of hearing impaired participants was later than 150 ms, and thus outside our range of measured delays. The slowing down of cognitive processing in elderly is a well-reported phenomenon.30,73,74 If participants’ actual optimal time-lag was later, and thus outside the range of time-lags we tested, then the 150 ms time-lag would be the best approximation of their actual optimal time-lag by default. If this was the case, it could also mean that the gain these participants received due to envelope-tACS could potentially be higher, as we were unable to approximate their optimal time-lag. This is important, as it should be noted that with current results, the changes in performance caused by envelope-tACS are minor; in the easy task condition for hearing impaired participants, SRT improved by 0.99 dB on average for their best time-lag compared to sham.

Comparison to prior results

The design and goal of this study were based on our previous study, Wilsch et al.35 Between these studies, several design changes were made that should be considered. Firstly, although the stimulation waveform shape was calculated in the same way in the current study and in Wilsch et al.,35 the intensity of the stimulation differed between the 2 studies. In our previous study, we used a stepwise method to decide the stimulation intensity per participant. Starting off at 400 μA, stimulation intensity was increased by 100 μA until the participant indicated they perceived skin sensations or phosphenes, up to a maximum of 1500 μA. For this study we opted not to use this method and instead used 1000 μA (peak to peak) for all participants. Although the purpose of individual stimulation levels was to reduce skin sensation, because the stimulation intensity was decided on the subjective experience of the participants prior to the task, it was nevertheless difficult to assess whether participants experienced sensation from the stimulation during the task. It also raised the question of how much effect each participant experienced from stimulation, as some participants received stronger stimulation than others. Therefore, for this study we kept stimulation intensity the same across participants so as to remove the difference in intensity as a potential confounding factor.
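
The stepwise procedure of the previous study, as described above, can be sketched as a simple loop. This is an illustration only: the function and parameter names are hypothetical, the participant's report is modelled as a callback, and the sketch returns the intensity at which a sensation was first reported (or the maximum). Whether stimulation was then delivered at that intensity or at a lower one is not specified in the text above.

```python
from typing import Callable


def titrate_intensity(perceives: Callable[[int], bool],
                      start_ua: int = 400,
                      step_ua: int = 100,
                      max_ua: int = 1500) -> int:
    """Raise intensity in fixed steps until a sensation is reported or the cap is hit."""
    intensity = start_ua
    while intensity < max_ua and not perceives(intensity):
        # Never exceed the maximum, even if the step does not divide the range.
        intensity = min(intensity + step_ua, max_ua)
    return intensity


# Hypothetical participants: one reports sensations from 900 uA, one never does.
print(titrate_intensity(lambda ua: ua >= 900))
print(titrate_intensity(lambda ua: False))
```

The fixed 1000 μA used in the present study replaces this loop with a single constant, which removes between-participant differences in intensity at the cost of no longer adapting to individual sensation thresholds.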

Another difference between this study and Wilsch et al.35 is the location of the stimulation electrodes. The electrode locations used in this study were based on the locations found in Baltus et al.,51 in which finite element modeling was used to calculate which electrode locations could be used to optimally target the regions of interest. An argument could be made that the stimulation used in Baltus et al.51 was sinusoidal tACS, not envelope-tACS, and that the electrode setup used in Wilsch et al.35 might be better suited to envelope-tACS. However, the Baltus et al.51 electrode setup was designed to target the same cortical regions we are interested in stimulating with envelope-tACS.

Finally, we used a smaller range of time-lags for envelope-tACS than in the previous study. Because of the limitations on how many minutes of tACS participants are allowed to receive per day, there is a trade-off to consider when deciding which time-lags to choose. Whilst smaller steps between time-lags result in a more precise approximation of the optimal time-lag and thus should cause the largest effect of tACS, they come with the risk of participants’ average optimal time-lag being outside of the tested window. As we found an average optimal time-lag of 100 ms in Wilsch et al.,35 we designed our experiment under the assumption that participants’ optimal time-lag would average around 100 ms for this study as well. However, considering our finding that hearing impaired participants were on average best at the 150 ms time-lag, that is, our latest tested time-lag, there is a possibility that participants’ actual optimal time-lag was later than 150 ms. That this optimal average time-lag was later than expected could be because our previous study was recorded with young adults, whereas this study used an older population. Nevertheless, the ΔSNRs found in this study are in line with the ΔSNRs found in Wilsch et al.35

Limitations

When interpreting the results of our study, it is important to keep in mind that the effect of task difficulty on the performance of hearing impaired participants was an effect on ΔSNR, meaning that what is being compared is the improvement in performance a given condition had compared to sham. Given that we measured 5 different stimulation time-lags, but recorded only 1 sham condition for each SRT level, we cannot compare the performance on the best stimulation time-lag condition to sham.75 Therefore, our study can only show how certain conditions are potentially more favourable for the use of envelope-tACS compared to other conditions, that is, that hearing impaired participants might gain more from it, and that envelope-tACS has a more beneficial effect if there is still enough of the sound envelope to reconstruct. However, as we cannot make a comparison to sham, we cannot conclude anything about the actual effects of envelope-tACS. Investigating phasic effects of envelope-tACS using the methods proposed by Zoefel et al.76 and as done in previous envelope-tACS studies34,35 is also not possible with our design. Although our number of time-lags, the interval between the time-lags, and the window between 50 ms and 150 ms were deliberately chosen to be what we believed was optimal for investigating the effects of envelope-tACS, the 50 ms to 150 ms time window cannot be used to investigate half cycles of frequencies lower than 10 Hz.
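
Why the best of 5 time-lags cannot be compared directly to a single sham measurement can be illustrated with a small simulation. The sketch below is not part of the study's analysis: it assumes no true stimulation effect at all, models every measured threshold as independent Gaussian noise, and uses illustrative values for the group size, noise level, and number of simulated experiments. All names are hypothetical.

```python
import random
import statistics


def simulate_best_lag_bias(n_participants: int = 17, n_lags: int = 5,
                           noise_sd_db: float = 1.0, n_experiments: int = 2000,
                           seed: int = 0) -> float:
    """Average group-mean delta SNR (best lag minus sham) when tACS has no effect."""
    rng = random.Random(seed)
    group_means = []
    for _ in range(n_experiments):
        deltas = []
        for _ in range(n_participants):
            sham = rng.gauss(0.0, noise_sd_db)
            # Picking the lowest (best) of several noisy thresholds is biased downwards.
            best = min(rng.gauss(0.0, noise_sd_db) for _ in range(n_lags))
            deltas.append(best - sham)
        group_means.append(statistics.mean(deltas))
    return statistics.mean(group_means)


print(f"mean delta SNR under the null: {simulate_best_lag_bias():.2f} dB")
```

Under these assumptions the simulated ΔSNR is clearly negative even though stimulation does nothing, because selecting the minimum of 5 noisy values favours the stimulation conditions. The same selection applies to every group and SRT level, which is why differences in ΔSNR between conditions remain interpretable while the comparison of the best time-lag to sham does not.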

Another limitation to our results is that the absolute effects in dB of envelope-tACS compared to sham are small. As mentioned above, in the SRT80 task, hearing impaired participants had a ΔSNR of −0.99 dB on average compared to sham. One participant in this condition even had a ΔSNR of 0.8 dB, meaning that even in their best time-lag condition, they still performed 0.8 dB worse than in the sham condition. Although our results show a potential application of envelope-tACS, the current gain in performance of around 1 dB is not strong enough for any practical application. Furthermore, given the added difficulty of timing envelope-tACS more precisely, results should be better than those of “conventional” sinusoidal tACS, which, as of now, does not appear to be the case.36,43,76,77 On top of this, the small absolute changes in dB raise some questions regarding the repeatability of these results, as the OLSA has a test-retest reliability of about 1 dB.47 With the current inaccuracies in finding participants’ optimal time-lag and the sizes of the found effects, this might mean the OLSA is not accurate enough to approximate participants’ optimal time-lag. However, it should also be noted that this does not dismiss our findings, as a consistent and systematic shift in performance between conditions of 0.99 dB is not the same as an individual shift of 1 dB.
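
The distinction between an individual shift and a systematic group shift can be made concrete with a rough calculation. The sketch below rests on assumptions that are ours: it treats the test-retest reliability of about 1 dB as the standard deviation of independent measurement errors and takes a group of 17 participants as an example size. It is not an analysis performed in this study.

```python
import math


def standard_error_of_mean(sd_db: float, n: int) -> float:
    """Standard error of a group mean built from n independent measurements."""
    return sd_db / math.sqrt(n)


# Assumed values: 1 dB measurement error per participant, 17 participants.
sem_single = standard_error_of_mean(1.0, 17)
# Delta SNR is a difference of two thresholds, so its error SD is larger by sqrt(2).
sem_delta = standard_error_of_mean(math.sqrt(2) * 1.0, 17)
print(f"{sem_single:.2f} dB, {sem_delta:.2f} dB")
```

Under these assumptions the uncertainty of a group mean is a fraction of the 1 dB that applies to a single measurement, which is the sense in which a consistent group shift of 0.99 dB carries more weight than a 1 dB change in one participant.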

Future research

Given that the time window we chose for our experiment might have diminished the beneficial effects of envelope-tACS, we believe further research on tACS as a potential hearing aid is necessary. Our findings imply that hearing impaired participants might benefit more from envelope-tACS, provided that the task has a high enough SNR that envelope-tACS is still capable of aiding in reconstructing the lost speech envelope. If true, this would potentially make envelope-tACS useful as a hearing aid. In particular, we believe it is worth exploring the effects of envelope-tACS on hearing impaired participants. Exploring and improving the approximation of the optimal stimulation time-lag for this group has potential for medical application, provided that the absolute improvement in dB can be increased.

Conclusion

In this study, we explored the use of tACS as a potential addition to conventional hearing aids; although conventional hearing aids and cochlear implants can improve hearing on the cochlear level, other hearing issues related to aging, like the slowing of cognition, cannot be solved without improving signal processing at the cortical level. Our results suggest hearing impaired participants gain more from envelope-tACS in the higher signal to noise task (SRT80) compared to the low signal to noise task (SRT20). Relatedly, we found that hearing impaired participants performed on average better at a 150 ms time-lag compared to earlier time-lags in this condition, which suggests that hearing impaired participants’ optimal time-lag might have been later than 150 ms on average, meaning that a better approximation of the optimal time-lag could improve performance further. Considering that speech in noise tracking is as of now still a weakness of conventional hearing aids, and given the rising prevalence of hearing impairment, we believe the development of envelope-tACS to be a worthwhile endeavour for its potential to directly influence auditory processing in the cortex. However, in its current stage, the beneficial effects of envelope-tACS are not strong enough to have practical application. A primary goal of current tACS research should be to better estimate and approach participants’ optimal time-lag and develop a deeper understanding of the neural processes underlying speech comprehension.

Footnotes

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was part of the mEEGaHStim project and funded by the BMBF grant V5IKM035.

Declaration of conflicting interests: The author(s) declared the following potential conflicts of interest with respect to the research, authorship, and/or publication of this article: CSH holds a patent on brain stimulation and received honoraria as editor from Elsevier Publishers, Amsterdam. All other authors declare no competing interests.

Author Contribution: JE designed experiment, analysed data and wrote the manuscript. MS designed experiment and analysed data. MV planned and designed experiment. AW designed and conducted experiment, analysed data, and wrote manuscript. CSH designed experiment and wrote the manuscript.

ORCID iD: Jules Erkens https://orcid.org/0000-0001-5994-5795

Data and Code Availability Statement: Data will be made available upon request to the authors under a formal data sharing agreement.

References

- 1. Kaplan W, Laing R. Priority Medicines for Europe and the World. WHO; 2004.

- 2. Kaplan W, Wirtz V, Mantel A, et al. Priority medicines for Europe and the world update 2013 report. Methodology. 2013;2:99-102.

- 3. Mathers C, Fat D, Boerma J. The Global Burden of Disease: 2004 Update. World Health Organization; 2008.

- 4. Pichora-Fuller MK, Singh G. Effects of age on auditory and cognitive processing: implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif. 2006;10:29-59.

- 5. Lunner T, Rudner M, Rönnberg J. Cognition and hearing aids. Scand J Psychol. 2009;50:395-403.

- 6. Luo H, Poeppel D. Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex. Neuron. 2007;54:1001-1010.

- 7. Abrams DA, Nicol T, Zecker S, et al. Right-hemisphere auditory cortex is dominant for coding syllable patterns in speech. J Neurosci. 2008;28:3958-3965.

- 8. Giraud A-L, Poeppel D. Cortical oscillations and speech processing: emerging computational principles and operations. Nat Neurosci. 2012;15:511-517.

- 9. Zoefel B, Archer-Boyd A, Davis MH. Phase entrainment of brain oscillations causally modulates neural responses to intelligible speech. Curr Biol. 2018a;28:401-408.e5.

- 10. Thut G, Schyns PG, Gross J. Entrainment of perceptually relevant brain oscillations by non-invasive rhythmic stimulation of the human brain. Front Psychol. 2011;2:1-10.

- 11. Zoefel B, ten Oever S, Sack AT. The involvement of endogenous neural oscillations in the processing of rhythmic input: more than a regular repetition of evoked neural responses. Front Neurosci. 2018b;12:1-13.

- 12. Steinschneider M, Nourski KV, Fishman YI. Representation of speech in human auditory cortex: is it special? Hear Res. 2013;305:57-73.

- 13. Millman RE, Johnson SR, Prendergast G. The role of phase-locking to the temporal envelope of speech in auditory perception and speech intelligibility. J Cognit Neurosci. 2015;27:533-545.

- 14. Zoefel B, VanRullen R. EEG oscillations entrain their phase to high-level features of speech sound. Neuroimage. 2016;124:16-23.

- 15. Ding N, Simon JZ. Cortical entrainment to continuous speech: functional roles and interpretations. Front Hum Neurosci. 2014;8:1-7.

- 16. Kösem A, van Wassenhove V. Distinct contributions of low- and high-frequency neural oscillations to speech comprehension. Lang Cognit Neurosci. 2017;32:536-544.

- 17. Peelle JE, Davis MH. Neural oscillations carry speech rhythm through to comprehension. Front Psychol. 2012;3:1-17.

- 18. Ding N, Simon JZ. Power and phase properties of oscillatory neural responses in the presence of background activity. J Comput Neurosci. 2013;34:337-343.

- 19. Peelle JE, Gross J, Davis MH. Phase-locked responses to speech in human auditory cortex are enhanced during comprehension. Cereb Cortex. 2013;23:1378-1387.

- 20. Pichora-Fuller MK, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. J Acoust Soc Am. 1995;97:593-608.

- 21. Schneider BA, Daneman M, Pichora-Fuller MK. Listening in aging adults: from discourse comprehension to psychoacoustics. Can J Exp Psychol. 2002;56:139-152.

- 22. Peelle JE, Troiani V, Wingfield A, et al. Neural processing during older adults’ comprehension of spoken sentences: age differences in resource allocation and connectivity. Cereb Cortex. 2010;20:773-782.

- 23. Besle J, Schevon CA, Mehta AD, et al. Tuning of the human neocortex to the temporal dynamics of attended events. J Neurosci. 2011;31:3176-3185.

- 24. Borch Petersen E, Lunner T, Vestergaard MD, et al. Danish reading span data from 283 hearing-aid users, including a sub-group analysis of their relationship to speech-in-noise performance. Int J Audiol. 2016;55:254-261.

- 25. Schneider BA, Daneman M, Murphy DR. Speech comprehension difficulties in older adults: cognitive slowing or age-related changes in hearing? Psychol Aging. 2005;20:261-271.

- 26. Fitzgibbons PJ, Gordon-Salant S. Aging and temporal discrimination in auditory sequences. J Acoust Soc Am. 2001;109:2955-2963.

- 27. Gordon-Salant S, Yeni-Komshian GH, Fitzgibbons PJ, et al. Age-related differences in identification and discrimination of temporal cues in speech segments. J Acoust Soc Am. 2006;119:2455-2466.

- 28. McAuley JD, Jones MR, Holub S, et al. The time of our lives: life span development of timing and event tracking. J Exp Psychol Gen. 2006;135:348-367.

- 29. Henry MJ, Herrmann B, Kunke D, et al. Aging affects the balance of neural entrainment and top-down neural modulation in the listening brain. Nat Commun. 2017;8:15801.

- 30. Petersen EB, Wöstmann M, Obleser J, et al. Neural tracking of attended versus ignored speech is differentially affected by hearing loss. J Neurophysiol. 2017;117:18-27.

- 31. Fröhlich F, McCormick DA. Endogenous electric fields may guide neocortical network activity. Neuron. 2010;67:129-143.

- 32. Antal A, Paulus W. Transcranial alternating current stimulation (tACS). Front Hum Neurosci. 2013;7:1-4.

- 33. Herrmann CS, Rach S, Neuling T, et al. Transcranial alternating current stimulation: a review of the underlying mechanisms and modulation of cognitive processes. Front Hum Neurosci. 2013;7:1-13.

- 34. Riecke L, Formisano E, Sorger B, et al. Neural entrainment to speech modulates speech intelligibility. Curr Biol. 2018;28:161-169.e5.

- 35. Wilsch A, Neuling T, Obleser J, et al. Transcranial alternating current stimulation with speech envelopes modulates speech comprehension. Neuroimage. 2018;172:766-774.

- 36. Heimrath K, Fiene M, Rufener KS, et al. Modulating human auditory processing by transcranial electrical stimulation. Front Cell Neurosci. 2016;10:53.

- 37. Neuling T, Rach S, Wagner S, et al. Good vibrations: oscillatory phase shapes perception. Neuroimage. 2012a;63:771-778.

- 38. Riecke L, Formisano E, Herrmann CS, et al. 4-Hz transcranial alternating current stimulation phase modulates hearing. Brain Stimul. 2015a;8:777-783.

- 39. Riecke L, Sack AT, Schroeder CE, et al. Endogenous delta/theta sound-brain phase entrainment accelerates the buildup of auditory streaming. Curr Biol. 2015b;25:3196-3201.

- 40. Kadir S, Kaza C, Weissbart H, et al. Modulation of speech-in-noise comprehension through transcranial current stimulation with the phase-shifted speech envelope. IEEE Trans Neural Syst Rehabil Eng. 2020;28:23-31.

- 41. Keshavarzi M, Kegler M, Kadir S, et al. Transcranial alternating current stimulation in the theta band but not in the delta band modulates the comprehension of naturalistic speech in noise. Neuroimage. 2020;210:116557.

- 42. Ruhnau P, Rufener KS, Heinze HJ, et al. Pulsed transcranial electric brain stimulation enhances speech comprehension. Brain Stimul. 2020;13:1402-1411.

- 43. Rufener KS, Oechslin MS, Zaehle T, et al. Transcranial Alternating Current Stimulation (tACS) differentially modulates speech perception in young and older adults. Brain Stimul. 2016;9:560-565.

- 44. Erkens J, Schulte M, Vormann M, et al. Lacking effects of envelope transcranial alternating current stimulation indicate the need to revise envelope transcranial alternating current stimulation methods. Neurosci Insights. 2020;15:263310552093662.

- 45. Brunoni AR, Amadera J, Berbel B, et al. A systematic review on reporting and assessment of adverse effects associated with transcranial direct current stimulation. Int J Neuropsychopharmacol. 2011;14:1133-1145.

- 46. Neuling T, Rach S, Herrmann CS. Orchestrating neuronal networks: sustained after-effects of transcranial alternating current stimulation depend upon brain states. Front Hum Neurosci. 2013;7:161.

- 47. Wagner K, Kühnel V, Kollmeier B. Entwicklung und Evaluation eines Satztests für die deutsche Sprache, Teil 1: Design des Oldenburger Satztests. Zeitschrift für Audiol. 2001;1:4-15.

- 48. Brand T, Kollmeier B. Efficient adaptive procedures for threshold and concurrent slope estimates for psychophysics and speech intelligibility tests. J Acoust Soc Am. 2002;111:2801-2810.

- 49. Wagner S, Rampersad SM, Aydin Ü, et al. Investigation of tDCS volume conduction effects in a highly realistic head model. J Neural Eng. 2014;11:016002.

- 50. Wagner S, Burger M, Wolters CH. An optimization approach for well-targeted transcranial direct current stimulation. SIAM J Appl Math. 2015;76:2154-2174.

- 51. Baltus A, Wagner S, Wolters CH, et al. Optimized auditory transcranial alternating current stimulation improves individual auditory temporal resolution. Brain Stimul. 2018;11:118-124.

- 52. Neuling T, Wagner S, Wolters CH, et al. Finite-element model predicts current density distribution for clinical applications of tDCS and tACS. Front Psychiatry. 2012b;3:1-10.

- 53. Krause B, Kadosh RC. Not all brains are created equal: the relevance of individual differences in responsiveness to transcranial electrical stimulation. Front Syst Neurosci. 2014;8:1-12.

- 54. Kochkin S. MarkeTrak V: “Why my hearing aids are in the drawer”: the consumers’ perspective. Hear J. 2000;53:34-41.

- 55. Kochkin S. MarkeTrak VI: 10-year customer satisfaction trends in the US hearing instrument market. Hear Rev. 2002;9:14-25.

- 56. Sarampalis A, Kalluri S, Edwards B, et al. Objective measures of listening effort: effects of background noise and noise reduction. J Speech Lang Hear Res. 2009;52:1230-1240.

- 57. Hornsby BWY. The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear Hear. 2013;34:523-534.

- 58. Passow S, Westerhausen R, Wartenburger I, et al. Human aging compromises attentional control of auditory perception. Psychol Aging. 2012;27:99-105.

- 59. Hasher L, Zacks RT. Working memory, comprehension, and aging: a review and a new view. Psychol Learn Motiv - Adv Res Theory. 1988;22:193-225.

- 60. Cabeza R, Anderson ND, Locantore JK, et al. Aging gracefully: compensatory brain activity in high-performing older adults. Neuroimage. 2002;17:1394-1402.

- 61. Cabeza R, Daselaar SM, Dolcos F, et al. Task-independent and task-specific age effects on brain activity during working memory, visual attention and episodic retrieval. Cereb Cortex. 2004;14:364-375.

- 62. Lunner T, Sundewall-Thorén E. Interactions between cognition, compression, and listening conditions: effects on speech-in-noise performance in a two-channel hearing aid. J Am Acad Audiol. 2007;18:604-617.

- 63. Souza P, Arehart KH, Kates JM, et al. Age, hearing loss and cognition: susceptibility to hearing aid distortion. 2010;7(1989).

- 64. Ng EH-N, Rudner M, Lunner T, et al. Effects of noise and working memory capacity on memory processing of speech for hearing-aid users. Int J Audiol. 2013;52:433-441.

- 65. Tavakoli AV, Yun K. Transcranial alternating current stimulation (tACS) mechanisms and protocols. Front Cell Neurosci. 2017;11:1-10.

- 66. Aiken SJ, Picton TW. Human cortical responses to the speech envelope. Ear Hear. 2008;29:139-157.

- 67. Ding N, Simon JZ. Neural coding of continuous speech in auditory cortex during monaural and dichotic listening. J Neurophysiol. 2012a;107:78-89.

- 68. Ding N, Simon JZ. Emergence of neural encoding of auditory objects while listening to competing speakers. Proc Natl Acad Sci. 2012b;109:11854-11859.

- 69. Power AJ, Foxe JJ, Forde EJ, et al. At what time is the cocktail party? A late locus of selective attention to natural speech. Eur J Neurosci. 2012;35:1497-1503.

- 70. Naatanen R, Winkler I. The concept of auditory stimulus representation in neuroscience. Psychol Bull. 1999;125:826-859.

- 71. Kubanek J, Brunner P, Gunduz A, et al. The tracking of speech envelope in the human cortex. PLoS One. 2013;8:e53398.

- 72. Baltzell LS, Horton C, Shen Y, et al. Attention selectively modulates cortical entrainment in different regions of the speech spectrum. Brain Res. 2017;1644:203-212.

- 73. Lunner T. Cognitive function in relation to hearing aid use. Int J Audiol. 2003;42:49-58.

- 74. Wong PCM, Jin JX, Gunasekera GM, et al. Aging and cortical mechanisms of speech perception in noise. Neuropsychologia. 2009;47:693-703.

- 75. Asamoah B, Khatoun A, Mc Laughlin M. Analytical bias accounts for some of the reported effects of tACS on auditory perception. Brain Stimul. 2019;12:1001-1009.

- 76. Zoefel B, Allard I, Anil M, et al. Perception of rhythmic speech is modulated by focal bilateral transcranial alternating current stimulation. J Cognit Neurosci. 2019;32:226-240.

- 77. Wöstmann M, Vosskuhl J, Obleser J, et al. Opposite effects of lateralised transcranial alpha versus gamma stimulation on auditory spatial attention. Brain Stimul. 2018;11:752-758.