Abstract

Over the past 20 years there has been a growing interest in the neural underpinnings of cost/benefit decision-making. Recent studies with animal models have made considerable advances in our understanding of how different prefrontal, striatal, limbic and monoaminergic circuits interact to promote efficient risk/reward decision-making, and how dysfunction in these circuits underlies aberrant decision-making observed in numerous psychiatric disorders. This review will highlight recent findings from studies exploring these questions using a variety of behavioural assays, as well as molecular, pharmacological, neurophysiological, and translational approaches. We begin with a discussion of how neural systems related to decision subcomponents may interact to generate more complex decisions involving risk and uncertainty. This is followed by an overview of interactions between prefrontal-amygdala-dopamine and habenular circuits in regulating choice between certain and uncertain rewards and how different modes of dopamine transmission may contribute to these processes. These data will be compared with results from other studies investigating the contribution of some of these systems to guiding decision-making related to rewards versus punishment. Lastly, we provide a brief summary of impairments in risk-related decision-making associated with psychiatric disorders, highlighting recent translational studies in laboratory animals.

Keywords: decision making, uncertainty, punishment, prefrontal cortex, nucleus accumbens, lateral habenula, dopamine, mania

Cost/benefit decision-making is a fundamental executive process that is common across species, ranging from worms and rodents to non-human primates and, of course, humans. In particular, all organisms are faced on a daily basis with choices between options that differ in their expected reward and in the potentially negative consequences that may accompany those rewards. Thus, a system that integrates information related to risk and reward, as well as internal motivational drives and environmental factors, is crucial to be able to make adaptive decisions and guide subsequent behavior. In humans, most individuals are able to calculate the relative costs and benefits of options and make appropriate choices; however, maladaptive decision-making is a behavioral hallmark of several psychiatric conditions. For example, individuals diagnosed with substance use disorders display an increased propensity to engage in risky behavior, such as unprotected sex and intoxicated driving (Lejuez et al., 2005; Pulido et al., 2011). Other psychiatric conditions, such as anorexia and schizophrenia, are characterized by a pathological decrease in risk-taking behavior (Kaye et al., 2013; Reddy et al., 2014). Thus, a better understanding of the neurobiology underlying normal risky decision-making will provide insight into how these processes may go awry in pathological conditions.

Seminal work by Bechara and colleagues provided the first neurobiological clues as to how the brain mediates decision-making under risk. Using what has become a well-established behavioral assay of risky decision-making, the Iowa Gambling Task (IGT), Bechara et al. (1994, 1999) demonstrated that patients with prefrontal cortical damage were impaired in this task. In the IGT, subjects are asked to choose between different decks of cards, which differ in their long-term profitability. While healthy subjects choose cards that yield longer term payoffs, patients with prefrontal damage [encompassing the ventromedial and orbitofrontal (OFC) subregions] choose cards that yield a large immediate gain, but are accompanied by even larger losses in the long-term, indicating that damage to this brain region increases risky choice. Further insight into the role of the prefrontal cortex (PFC) in risky decision-making derives from more recent neuroimaging experiments in subjects diagnosed with psychiatric conditions characterized by pathological risk-taking behavior. Several studies have shown that substance abusers exhibit hypoactivation of various subregions of the PFC during decision-making (Fishbein et al., 2005; Crowley et al., 2010) and that this decreased functional activity is associated with preference for risky choices (Fishbein et al., 2005). Similarly, adults with attention deficit hyperactivity disorder (ADHD), a condition also associated with elevated risky decision-making (DeVito et al., 2008; Klein et al., 2012), have less extensive activation of the PFC relative to controls during risky decision-making (Ernst et al., 2003).

The PFC, as well as other brain regions implicated in risky decision-making, receives robust dopaminergic input from the ventral tegmental area (VTA). As such, alterations in dopamine (DA) signaling may have a deleterious impact on decision-making under risk. This is indirectly supported by studies that have assessed decision-making in individuals suffering from psychiatric conditions in which perturbations in the dopaminergic system are thought to be an underlying cause. For instance, stimulant abusers, schizophrenics, and patients with ADHD display maladaptive risky decision-making both in real world measures (Friedman, 1998; Lejuez et al., 2005; Klein et al., 2012; Ramos Olazagasti et al., 2013) and when assessed in laboratory gambling tasks (Rogers et al., 1999b; Bornovalova et al., 2005; Leland and Paulus, 2005; Schneider et al., 2012). As further evidence, when treated with DA agonists, patients with Parkinson’s disease and Restless Legs Syndrome can develop increased risk-taking (Dagher and Robbins, 2009), clearly indicating a role of DA in modulating risk-based decision-making. Finally, more recent studies have shown that poor performance in the IGT in pathological gamblers is associated with increased DA release in the ventral striatum (Linnet et al., 2011a, b). Altogether, these studies reveal the important contribution of the dopaminergic system to risky decision-making, and suggest that dysregulation in this system is a major cause of pathological risk-taking.

While informative, studies in humans are limited in their ability to determine how specific brain regions, and in particular, how neurochemical signaling within these regions, facilitate different component processes related to decision-making. Animal models have allowed researchers to address fundamental questions about the neural mechanisms supporting different forms of risky decision-making and how these systems may be impacted in pathological states. Critical to these questions, however, is understanding how different behavioral and cognitive elements of decision-making sculpt subsequent behavior. For example, making a decision between multiple options entails consideration of past outcomes, the relative value of the benefits associated with each option, and the valence and type of risk that may accompany those options, all of which must be integrated with other environmental and motivational factors in order to execute or inhibit behavior. The main endeavor of this review is to describe these processes and to highlight recent animal studies investigating the neural bases of different forms of risk-based decision-making. It begins with a discussion of the basic cognitive and neural systems underlying decision-making behaviors, followed by reviews of the neurobiology of two distinct animal models of decision-making involving different types of uncertainty or risk. Finally, the review extends this discussion to psychiatric conditions characterized by impaired risk-based decision-making, and highlights the necessity of assessing different components of decision-making so as to gain a more comprehensive understanding of these disorders.

Decision Making Is Supported by Multiple, Diverse Neural Systems

As alluded to previously, decision-making encompasses a complex conjunction of many behaviors. This is exemplified by the fact that there is an entire field related to sensorimotor decision-making, a close cousin of the type of motivational decision-making addressed here. The framework for sensorimotor decision-making is frequently rooted in neurophysiological studies, in which temporal components of behavior are well-controlled. These studies focus on the accumulation of information that ultimately allows a decision to be made and a response to be executed. For example, many studies have investigated decisions related to ambiguous visual stimuli, such as a field of dots in which some percentage (0% to 100%) is moving coherently in one direction while the others are moving randomly (Shadlen and Newsome, 1996). Subjects in this case must decide which direction the dots are moving by making an eye movement in that direction. Based on the percentage of motion coherence, the decision can be easier (100%) or harder (<25%). For many years, investigators have used neuronal recording, inactivation, and stimulation to interrogate the brain areas involved in this type of task, with a focus on determining at what point sensory information has accumulated such that the subject is able to make a decision. Work from many labs (Gold and Shadlen, 2007; Lee et al., 2007; Hayden and Pasternak, 2013; Romo, 2013; Shadlen and Kiani, 2013) has shown that neuronal activity, particularly in higher-level sensory and sensorimotor cortex, builds from cue onset and peaks at decision completion. This decision completion is demonstrated by the execution of a behavioral response, for example, a saccadic eye movement. 
Neural signals across multiple brain areas, including sensory, motor and prefrontal cortex (Uchida et al., 2006; Lee et al., 2007; Romo, 2013; Shadlen and Kiani, 2013), basal ganglia and midbrain (Felsen and Mainen, 2012; Ding and Gold, 2013), and neuromodulatory systems such as the locus coeruleus (Aston-Jones and Cohen, 2005) are involved in aspects of these relatively simple types of decision (e.g., deciding if the dots are moving left or right). Stimulation of these key regions can bias decisions depending on the area influenced (Salzman et al., 1990; Cohen and Newsome, 2004). Computational models (e.g., the drift diffusion model) have also been developed that accurately characterize this accumulation of neural information ultimately leading to a decision (Aston-Jones and Cohen, 2005; Shadlen and Kiani, 2013). Despite the relatively straightforward question (“is the cue going left or right?”), a comprehensive characterization of sensorimotor decision-making is still in development. However, the accumulated body of research has demonstrated that critical neural dynamics, such as escalation of neuronal activity with accumulating decision certainty (Gold and Shadlen, 2007), underlie many types of decisions and have allowed a close inspection of the neural components of the multifaceted aspects of decision-making (Hayden and Pasternak, 2013; Shadlen and Kiani, 2013).
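As an illustration of the evidence-accumulation idea described above, the following is a minimal sketch of a drift diffusion process, not a reproduction of any model from the cited studies. Noisy evidence is integrated over time until it reaches one of two bounds, and higher motion coherence produces a stronger drift toward the correct bound. The parameter names and values (`gain`, `threshold`, `noise`, `dt`, `max_time`) are hypothetical choices made only for this example.

```python
import random


def simulate_ddm(coherence, threshold=1.0, gain=5.0, noise=1.0,
                 dt=0.001, max_time=5.0, rng=None):
    """Simulate one trial of a simple drift diffusion process.

    coherence: signed motion strength in [-1, 1]; positive means rightward.
    Returns (choice, decision_time). choice is "right", "left", or None
    if neither bound is reached before max_time.
    All parameter values are illustrative assumptions.
    """
    rng = rng or random.Random()
    drift = gain * coherence          # mean rate of evidence accumulation
    step_sd = noise * dt ** 0.5       # noise added on each time step
    evidence = 0.0
    t = 0.0
    while t < max_time:
        evidence += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
        if evidence >= threshold:     # upper bound: commit to "right"
            return "right", t
        if evidence <= -threshold:    # lower bound: commit to "left"
            return "left", t
    return None, t


def accuracy(coherence, n_trials=200, seed=0):
    """Fraction of trials ending at the correct ("right") bound."""
    rng = random.Random(seed)
    correct = sum(
        simulate_ddm(coherence, rng=rng)[0] == "right"
        for _ in range(n_trials)
    )
    return correct / n_trials


if __name__ == "__main__":
    for c in (1.0, 0.25, 0.1):
        print(f"coherence {c:.2f}: accuracy {accuracy(c):.2f}")
```

In this sketch, lowering the coherence weakens the drift, so simulated choices become slower and less accurate, which parallels the easier (100%) versus harder (<25%) conditions of the motion task.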

One important implication from this growing body of work is that no one brain area independently regulates decision-making. Even the types of basic sensorimotor decisions described above require a wide range of neural systems, likely working in parallel. The complexity of neural signaling underlying simple perceptual decisions argues that cost/benefit decision-making, as discussed below, will involve equally, and likely more, diverse neural representations at the level of brain regions, neuromodulators, and neural circuits. In addition, complex decisions underlying risk and probability are all extensions of fundamental sensory, cognitive, emotional, and motor functions. These processes summate to allow risky decision-making, and some subset of these processes is disrupted in psychiatric and neurological diseases in which decision-making is impaired (Paulus, 2007). For example, when deciding between options associated with either high-risk or low-risk rewarded outcomes, a rat must have a neural representation of the location of each lever associated with these options. In addition, the rat must associate each potential response with a possible outcome, guided by memory, context and other cognitive factors (which lever is associated with which outcome and the history of outcomes after selecting that lever) as well as motivational and emotional states (hunger, fear of a negative outcome, etc.). A behavioral plan must then be developed and executed based on the accumulated information. At a relatively high level, this involves planning the execution of some behaviors while inhibiting others (e.g., directing behavior toward one option versus the other). After completion of the motor sequence, neural signals reflect acquisition and receipt of reward, a non-rewarded event, or some form of punishment, and there is an evaluatory component to behavior: did the expected outcome match the actual outcome? 
This information is factored into future planning by the animal to maximize rewards. If the probability of receiving a reward is low, the animal may switch strategies at the next opportunity and pursue higher probability rewards even if they may be smaller in magnitude (Cardinal, 2006; Floresco et al., 2008a). All of this information is integrated to bias action selection in what we consider cost/benefit decision-making.

When discussing disorders related to decision-making, it is equally important to consider the specific deficits exhibited by patients in order to precisely localize the source of the disease and address possible treatments. Thus, although patients suffering from psychiatric or neurological disease may present deficits in decision-making, these deficits could stem from a range of disordered subcomponents of decision-making, such as response planning or value representation. This conceptualization is very much in line with the National Institute of Mental Health’s recent emphasis on research domain criteria (RDoC), the goal of which is to emphasize neural systems related to specific behavioral and cognitive functions that cut across disorders, such as working memory, reward learning, or goal selection (http://www.nimh.nih.gov/research-priorities/rdoc/index.shtml). In essence, this is an emphasis on behavioral (and cognitive) neuroscience as a way of furthering our understanding of mental disorders. The RDoC framework is also informative in the context of understanding the neural basis of complex behaviors such as decision-making. At the same time that we probe the neural systems specifically related to explicit decision-making tasks (see sections on uncertainty and punishment, below), investigations should dissect the fundamental behavioral and cognitive elements of decision-making and attempt to understand their relationships to neural function.

Thus, when attempting to characterize the neural correlates of decision-making, it is essential to consider the cognitive, emotional, motivational, and behavioral subprocesses that are combined to produce a decision. As noted above and elsewhere in this review, a diverse set of brain areas likely function together to guide risky decision-making. However, there is an additional critical issue to take into consideration: each of these neural systems does not perform a single function, but rather, may actually play multiple roles in tasks used to test decision-making. In both decision-related tasks and other types, these roles may even be opposite one another – signaling appetitive vs. aversive outcomes, for example. Hence, throughout this review, we will discuss, for example, what function the PFC, nucleus accumbens (NAc) or basolateral amygdala (BLA) plays in decision-making behavior. In reality, these areas and others can perform multiple functions that may be unrelated or even interfere with efficient decision-making.

One obvious example of a brain area involved in a wide array of behaviors (including risky decision-making) is the medial PFC (mPFC). The mPFC plays a major role in the regulation of decision-related behavior, as seen in the context of risky decision-making and related tasks in rodents (Floresco et al., 2008a; Jentsch et al., 2010; St Onge and Floresco, 2010a; Simon et al., 2011; St Onge et al., 2011; St Onge et al., 2012a; St Onge et al., 2012b), and more generally across a number of other tasks (Kesner and Churchwell, 2011; Euston et al., 2012; Cassaday et al., 2014). Broadly speaking, the mPFC in rodents is widely considered to subserve a variety of executive functions that are engaged during decision-making (Cardinal, 2006; St Onge and Floresco, 2010; Euston et al., 2012; Chudasama, 2011). These include working memory, attention, planning, impulse control, action/outcome monitoring and behavioral flexibility (De Bruin et al., 2000; Dalley et al., 2004; Kesner and Churchwell, 2011; Euston et al., 2012; Bissonette et al., 2013; Cassaday et al., 2014). In many ways, these cognitive phenomena are all root functions of decision-making. However, the mPFC has also been associated with fundamental behavioral processes such as the motivational states associated with rewards or punishments. In many cases, the functions ascribed to the mPFC seem at odds with one another. For example, some studies suggest that mPFC activity plays a role in regulating the seeking of drug or natural rewards (Tzschentke, 2000; Peters et al., 2009; Lasseter et al., 2010b; Ishikawa et al., 2008b, a), whereas other studies emphasize the role of the mPFC in the representation of aversive stimuli and contexts, as in fear conditioning (Morgan and LeDoux, 1995; Maren and Quirk, 2004; Peters et al., 2009; Sotres-Bayon and Quirk, 2010; Quirk and Mueller, 2008; Orsini et al., 2011).
An important question, then, is what are the basic properties of the mPFC that allow such a diversity of behaviors and, consequently, play such an important role in decision-making?

Anatomical segregation of mPFC function - dichotomies and beyond

One possible explanation for these differential and even diverging properties of mPFC function lies in anatomical differences. The mPFC is made up of multiple subregions along the dorsal-ventral axis (Heidbreder and Groenewegen, 2003; Vertes, 2004). In particular, the dorsal regions of the mPFC (typically the prelimbic cortex, PL, and sometimes including the anterior cingulate cortex, ACC) have been demonstrated to play a different, and sometimes even an opposite, role to the ventral mPFC (infralimbic cortex, IL) (Killcross and Coutureau, 2003; Peters et al., 2009; Van den Oever et al., 2010; Euston et al., 2012; Gass and Chandler, 2013; Smith and Graybiel, 2013; Cassaday et al., 2014). In the context of fear conditioning, PL plays a more important role in encoding fear-related stimuli and associated behavioral responses (e.g., freezing). In contrast, IL appears to play a more important role in inhibition of fear responses, particularly in the context of extinction learning (Morgan and LeDoux, 1995; Maren and Quirk, 2004; Peters et al., 2009; Sotres-Bayon and Quirk, 2010; Quirk and Mueller, 2008; Orsini et al., 2011). Similar dichotomies have been proposed in the realm of appetitive behaviors: PL appears to be more associated with driving responses to acquire reward whereas IL may be more associated with withholding responses (Ishikawa et al., 2008; Peters et al., 2009; Gass and Chandler, 2013). Consideration of this dichotomy is of particular interest in decision-making as virtually all decisions require a complex conjunction of response execution and response inhibition. In the context of the rodent studies described in this review, a risk-based decision ultimately comes about through execution of a response to the chosen option (e.g., a press on a “risky” lever) and inhibition of alternate responses (e.g., not pressing the “safe” lever).
Although studies of risk-based decision-making incorporate additional parameters such as outcome prediction and evaluation, understanding how responses are executed and inhibited has the potential to provide fundamental insights into the nature of decision-making. A dichotomous model of mPFC function whereby dorsal and ventral mPFC compete for control over behavior would be an appealing way to explain the respective contributions of these areas to decision-making. Based on the results described above and elsewhere (Peters et al., 2009; Moorman et al., 2014) one possible model might be that PL drives active execution of behavior (reward-seeking or fear-related freezing) whereas IL supports inhibition of behavior (restraint from reward seeking or freezing during extinction).

Unfortunately, a clear dichotomy along these lines is complicated by diverging results. This is particularly noticeable in literature pertaining to appetitive motivation (Moorman et al., 2014). Thus, in some circumstances PL activation appears to promote the seeking of natural or drug rewards (Ishikawa et al., 2008b; McFarland et al., 2003; McLaughlin and See, 2003; McFarland et al., 2004; Hearing et al., 2008; Stefanik et al., 2013; Sparta et al., 2014), whereas in other contexts it plays an important role in inhibition of responses (Ishikawa et al., 2008b; Jonkman et al., 2009; Willcocks and McNally, 2013; Chen et al., 2013; Mihindou et al., 2013; Martin-Garcia et al., 2014; Broersen and Uylings, 1999; Risterucci et al., 2003; Narayanan et al., 2006; Narayanan and Laubach, 2006; Bari et al., 2011). Similarly, although IL has been characterized as suppressing behavior, particularly after extinction (Ishikawa et al., 2008b; Peters et al., 2008; Peters et al., 2009; LaLumiere et al., 2010; Rhodes and Killcross, 2007; Peters and De Vries, 2013; Van den Oever et al., 2013; Chudasama et al., 2003; Murphy et al., 2005; Murphy et al., 2012), the region is also important for driving the seeking of drugs or natural rewards (Bossert et al., 2011; Ishikawa et al., 2008b; Koya et al., 2009; Rogers et al., 2008; Sangha et al., 2014) in other contexts. In fact, neurons in both areas appear to be activated during both execution and inhibition of reward- and drug-seeking (Burgos-Robles et al., 2013; Narayanan and Laubach, 2009; West et al., 2014). This lack of consistent association between region and function has profound relevance for the role of these areas in decision-making tasks, particularly those motivated by reward acquisition. In general, it underscores that there are additional levels of complexity that should be considered when ascribing functions to brain areas.

Lest we assume that this level of complexity is unique to the prefrontal cortex, it should be noted that there are striking degrees of functional heterogeneity in a wide range of brain areas frequently associated with decision-making behavior. For example, the BLA plays important roles in fear conditioning, appetitive motivation, salience encoding, memory, and a wide range of other cognitive and emotional functions (Janak and Tye, 2015). In some cases, these differences may be due to different functions being associated with different amygdala subregions. For example, whereas the rostral but not caudal BLA is important for responding to drugs of abuse but not food rewards (Kantak et al., 2002; McLaughlin and See, 2003), the caudal but not rostral BLA has been shown to be important for withholding responses to extinguished food-related cues (McLaughlin and Floresco, 2007). Even in the same region, neurons in the BLA (sometimes the same neuron) fire in relation to both appetitive and aversive outcomes (Sangha et al., 2013) and during response execution and inhibition (Tye and Janak, 2007; Tye et al., 2010). In addition to the BLA, recent results have demonstrated that DA neurons in the VTA encode appetitive and aversive outcomes depending on anatomical connectivity (Brischoux et al., 2009; Matsumoto and Hikosaka, 2009b; Bromberg-Martin et al., 2010; Schultz, 2010; Lammel et al., 2014). Similarly, although it is well established that dopamine release in the NAc occurs during rewarding situations, aversive outcomes and their predictors can enhance ventral striatal DA transmission as well (Anstrom et al., 2009; Oleson et al., 2012; Wenzel et al., 2015).

These findings in mPFC and other brain areas underscore two points with respect to interpreting the function of brain regions in the context of decision-making and other complex behaviors. First, the behavioral context in which neural systems are tested makes an important contribution to interpretation. So, for example, it might be less useful to consider whether PL vs. IL encodes initiation vs. suppression of behaviors because these behavioral dimensions may be tangential to the main functions of these regions. Along these lines, there have been other frameworks proposed for mPFC subdivisions. A notable example that is supported by a considerable amount of data is the distinction between goal directed behavior (thought to be driven by dorsal mPFC) vs. habitual behavior (thought to be driven by ventral mPFC) (Balleine and Dickinson, 1998; Killcross and Coutureau, 2003; Balleine and O’Doherty, 2010; Smith et al., 2012). Of course we caution against shifting from one strict dichotomous assignment of function (Go vs. Stop) to another (Goal vs. Habit). However, it is certainly likely that one of the functions of PL is to drive goal directed behavior, etc., perhaps in conjunction with playing important roles in other behavioral parameters – fear expression, direction of attention, memory, and representation of context, for example. Rather than see these regional functions as absolute, it might be more valuable to consider each behavioral association as a member of a constellation of behaviors in which each area plays a role.

The second point is that within-area neural heterogeneity is likely an important contributor to the diversity of behaviors associated with these regions. One clear explanation for how brain regions as complex as the mPFC and amygdala can play a broad range of roles across behavior is through the presence of multiple, interdigitated networks within each region (Moorman et al., 2014; Riga et al., 2014; Janak and Tye, 2015). So, for example, PL neurons that project to the NAc may play an important role in appetitive motivated behaviors whereas PL neurons that project to the BLA may play a more important role in directing the expression of certain emotional responses. Of course, this characterization, while more sophisticated than asking what the PL “does” is still likely an oversimplification – there are also probably PL-BLA networks associated with appetitive motivation, for example. Conversely, afferent and neuromodulatory factors may play a role in defining functions. Prefrontal neurons with specific receptor subtypes or receiving afferent input from specific brain areas may play a vastly different role than members of other networks. Finally, it is important to remember that there are different phenotypes of neurons in almost all brain areas studied in the context of decision-making. In cortex, there are multiple subpopulations of both glutamatergic pyramidal and GABAergic neurons (Kvitsiani et al., 2013; Pi et al., 2013; van Aerde and Feldmeyer, 2013; Lee et al., 2014). In the NAc, there are subpopulations of medium spiny neurons (e.g., D1 vs. D2 receptor expressing) and multiple types of interneurons (Lenz and Lobo, 2013). In the VTA, there are complex conjunctions of dopamine, glutamate, and GABA, both across and even within neurons (Tritsch et al., 2012; Stamatakis et al., 2013; Morales and Root, 2014). 
In addition to network connectivity, it is crucial to incorporate diversity of neuronal phenotype into an overarching model of neural systems sculpting decision-making and related behaviors. By incorporating the confluence of network connectivity, neuronal phenotype, and possibly even other factors, we will begin to develop a more sophisticated understanding of the neural systems associated with specific behaviors, likely resolving many of the apparent contradictions described above.

It is essential to remind ourselves that decision-making is a complex behavior. This is particularly the case when we consider decisions made based on evaluation of risk, reward uncertainty and the likelihood of punishment, as discussed below. In considering how neural systems support decision-making, we must incorporate multiple levels of complexity, as discussed above. This complexity includes behavioral complexity – all of the cognitive and motivational components, each with neural systems supporting that specific function – as well as neural complexity. On the one hand, decision-making related behaviors are supported by interaction across a broad collection of brain areas, many of which are described below. On the other hand, each brain area performs multiple functions, including those unrelated to decision-making, likely supported by diverse ensembles of neurons within and across brain areas. Moving forward, merging a large-scale network view (the interaction of brain areas involved) with a more refined, neuronal network view (the interaction of specific populations of neurons within each region) will ultimately provide a crucial perspective on decision-making that either view alone will not permit. However, in order to know where to look for decision-related circuits, we must first have a well-characterized description of which brain regions and modulatory systems are even involved.

Neural circuitry mediating choice of uncertain rewards

A key component of more complex forms of reward-related decision-making entails monitoring the outcomes of one’s actions over time. Sometimes certain actions are rewarded, while other times they are not, and a decision-maker must keep track of how well his or her choices are paying off in order to maximize long-term gains. These decisions can be modulated further by information about the relative payoffs and risk associated with different actions. For example, imagine tracking a stock on a day its price increases after favorable news. With every uptick and downtick in price, you battle competing urges to either sell and put the proceeds into savings, or hold on and see if you can maximize your gains, and a variety of factors can influence your decision (historical performance of the stock, how much you stand to make, your individual tolerance for risk, etc). As another example, envision playing blackjack with a 7-deck shoe. The house odds on each hand are a little better than 50%, yet wins (or losses) often come in streaks. In lieu of counting cards, a typical player may keep rough estimates of how the last few hands have gone, factor that in with how much money has been won or lost during the session, and judge whether to continue gambling or if it is time to leave the table (hopefully with a profit, or at least minimizing the losses).

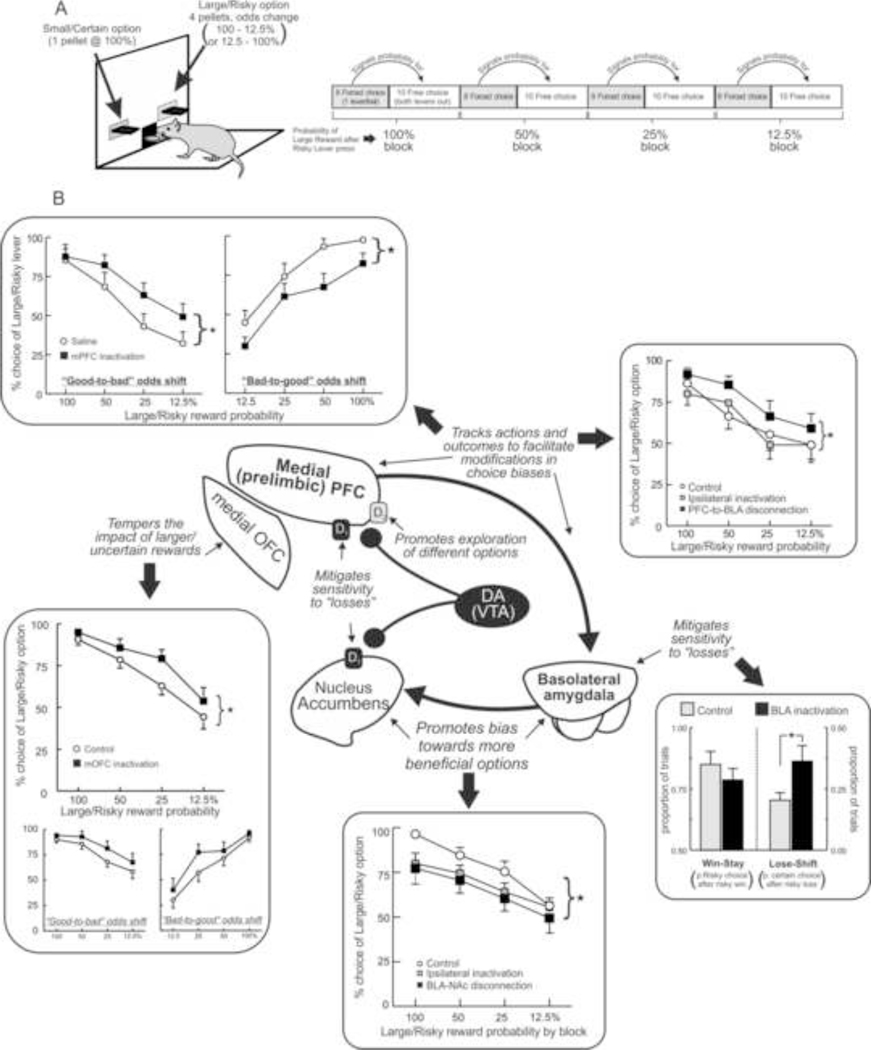

In laboratory animals, these types of processes can be modeled using tasks that require subjects to choose between options associated with different magnitudes of reward and varying probabilities of obtaining them. A series of studies by Floresco and colleagues has utilized a probabilistic discounting procedure wherein rats choose between a small/certain reward (1 reward pellet) and a large/risky reward (4 pellets; Figure 1A). Over the course of a daily session, the probability of obtaining the larger reward changes in a systematic manner over blocks of free-choice trials, ranging from 100 to 12.5% either in a descending (“good-to-bad”) or ascending (“bad-to-good”) manner. Aside from blocks of forced-choice trials that precede free-choice periods, there are no explicit cues signaling changes in reward probabilities. Thus, over the course of a session, rats must keep track of their choices and their outcomes to ascertain when it may be more profitable to make riskier or more conservative choices. After approximately 20 days of training, rats display stable patterns of discounting, selecting the large reward more often when the probabilities are high (100–50%) and less so when the odds are lower. This extended training ensures that rats are familiar with both the basic reinforcement contingencies associated with the different options and with the changes in reward probabilities that occur during a session. Neural manipulations are then employed using a within-subjects design to probe how transient disruptions of different nodes within decision circuitry alter choice biases and ascertain how they may contribute to different processes related to risk/reward decision-making.
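The long-term payoff structure of this task can be made concrete with a short expected-value calculation. The sketch below is illustrative only: it uses the reward sizes given above (1 versus 4 pellets) and the stated end points of the probability range (100% and 12.5%), while the 25% value is included as an assumption because it is the point at which the two options pay equally on average.

```python
LARGE_PELLETS = 4   # large/risky option, delivered with probability p_large
SMALL_PELLETS = 1   # small/certain option, always delivered


def expected_pellets(p_large):
    """Average pellets per choice of the large/risky option."""
    return LARGE_PELLETS * p_large


def better_option(p_large):
    """Option with the higher long-run payoff at a given probability."""
    ev_risky = expected_pellets(p_large)
    if ev_risky > SMALL_PELLETS:
        return "risky"
    if ev_risky < SMALL_PELLETS:
        return "certain"
    return "equal"


if __name__ == "__main__":
    # 0.25 is an assumed intermediate value, chosen as the indifference point.
    for p in (1.0, 0.5, 0.25, 0.125):
        print(f"p = {p:>5.3f}: risky EV = {expected_pellets(p):.2f} pellets, "
              f"better option: {better_option(p)}")
```

Under these assumptions the risky option is advantageous at 100% and 50%, the options are equivalent at 25%, and the certain option is advantageous at 12.5%, which is consistent with the discounting pattern described for well-trained rats.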

Figure 1.

A summary of some of the neural circuits involved in risk/reward decision-making involving choice between smaller certain and larger uncertain (or risky) rewards. (A) Depiction of the probabilistic discounting task used to assess risk/reward decision-making in rodents. (B) Summary of the dissociable functions of cortical, limbic and striatal nodes within DA circuitry in regulating probabilistic discounting, as inferred by inactivation and circuit disconnection studies. BLA inactivation reduces risky choice by increasing sensitivity to reward omissions (bottom right). Subcortical circuits incorporating the BLA and the NAc bias choices towards larger uncertain rewards, as disconnection between these regions reduces risky choice [bottom center: adapted from St Onge et al. (2012b)]. In contrast, the medial OFC tempers the impact that large, uncertain rewards exert over subsequent choices, as inactivation of this region increases risky choice [bottom left: adapted from Stopper et al. (2014a)]. Inactivation of the prelimbic region of the medial PFC impairs modifications in decision biases, affecting risky choice differentially depending on whether reward probabilities decrease or increase over time [top left: adapted from St Onge and Floresco (2010)]. This function of the medial PFC is mediated via top-down interactions with the BLA; disconnection of descending PFC→BLA projections also impairs shifts in choice biases [top right: adapted from St Onge et al. (2012b)]. DA further refines risk/reward decision-making, with D1 receptors in the PFC and NAc reducing the impact of non-rewarded choices, and D2 receptors in the medial PFC facilitating adjustments in choice biases to promote exploration of different options (center). For all panels, stars denote significant differences between groups at p<0.05.

Amygdala-ventral striatal circuits bias choice towards larger, risky rewards

Studies using lesions or reversible inactivation of different brain nuclei have revealed a critical role for subcortical circuits linking the BLA and the NAc in biasing choice towards larger, more costly rewards. It has been shown repeatedly that disruption of BLA functioning shifts bias away from larger rewards when they are i) delayed (Winstanley et al., 2004), ii) more effortful to obtain (Floresco and Ghods-Sharifi, 2007; Ghods-Sharifi et al., 2009; Ostrander et al., 2011) or iii) uncertain (Ghods-Sharifi et al., 2009). With respect to risk/reward decision-making, neural activity within the BLA appears to play a key role in mediating sensitivity to “losses”, as reductions in risky choice following inactivation of this nucleus are associated with an increased tendency to shift to smaller/certain rewards following a non-rewarded risky choice (Ghods-Sharifi et al., 2009; Figure 1B, bottom right). It is important to note, however, that the BLA does not appear to play a role in biasing choice towards larger, cost-free rewards vs smaller ones (Winstanley et al., 2004; Ghods-Sharifi et al., 2009; Ostrander et al., 2011), suggesting that it plays a more prominent role in guiding choice when a decision-maker must evaluate both the relative benefits and costs associated with different actions. This influence of the BLA appears to be mediated via interactions with the NAc, as lesions or inactivation of this nucleus also reduce preference for larger, more costly rewards (Cardinal et al., 2001; Ghods-Sharifi and Floresco, 2010; Stopper and Floresco, 2011).
Interestingly, as opposed to decisions involving effort or delay-related costs, which recruit the lateral core region of the NAc, action selection involving reward uncertainty appears to be more dependent on activity within the medial shell region of this nucleus, although the core may also contribute to certain aspects of performance (Cardinal and Howes, 2005; Stopper and Floresco, 2011; Dalton et al., 2014). Moreover, unlike the BLA, which mediates sensitivity to reward omissions, inactivation of the NAc diminishes sensitivity to rewards, reducing the tendency to follow a rewarded risky choice with another risky choice (Stopper and Floresco, 2011). Thus, the BLA and NAc work in concert to promote biases towards larger uncertain rewards via somewhat different mechanisms, a notion supported by the findings that disrupting communication between these regions also reduces risky choice (Figure 1B, bottom) (St Onge et al., 2012b). As such, BLA-NAc circuitry seems to help a decision-maker overcome uncertainty costs and provides a visceral, intuitive bias towards chasing larger payoffs.
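The two trial-by-trial measures discussed here, the tendency to repeat a rewarded risky choice ("win-stay") and the tendency to switch to the certain option after a non-rewarded risky choice ("lose-shift"), can be computed from a sequence of choices and outcomes. The following is a minimal sketch of one way to do so; the data layout, the labels "risky" and "safe", and the function name are assumptions for illustration and do not reproduce the exact analysis used in the cited studies.

```python
def win_stay_lose_shift(trials):
    """Compute win-stay and lose-shift ratios following risky choices.

    `trials` is a list of (choice, rewarded) pairs, where choice is
    "risky" or "safe" and rewarded is a bool. Returns (win_stay, lose_shift);
    a ratio is None when no qualifying trials occurred.
    """
    wins = stays = losses = shifts = 0
    # Pair each trial with the one that follows it.
    for (choice, rewarded), (next_choice, _) in zip(trials, trials[1:]):
        if choice != "risky":
            continue  # this sketch only scores trials that follow a risky choice
        if rewarded:
            wins += 1
            stays += next_choice == "risky"
        else:
            losses += 1
            shifts += next_choice == "safe"
    win_stay = stays / wins if wins else None
    lose_shift = shifts / losses if losses else None
    return win_stay, lose_shift


if __name__ == "__main__":
    session = [
        ("risky", True), ("risky", False), ("safe", True),
        ("risky", False), ("risky", True), ("risky", True),
    ]
    print(win_stay_lose_shift(session))
```

In these terms, the BLA inactivation result described above corresponds to a higher lose-shift ratio, whereas the NAc inactivation result corresponds to a lower win-stay ratio.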

Prefrontal regions mitigate and refine risk/reward decision-making

The drive provided by BLA-NAc circuitry towards potentially larger payoffs is in turn tempered by different regions of the OFC and mPFC, which may act as a brake on these impulses and help refine choice biases when reward probabilities change. Within the OFC, the medial portion plays a key role in mitigating the impact that larger, probabilistic rewards exert on subsequent choice behavior. Inactivation of this region leads to an increased tendency to select riskier options after these options have recently paid off, irrespective of whether the odds of obtaining the larger reward decreased or increased over a session (Figure 1B, bottom left; Stopper et al., 2014a). In contrast, disruption of lateral OFC function does not alter probabilistic discounting in well-trained animals (St Onge and Floresco, 2010). Instead the contribution of the lateral OFC to risk/reward decision-making may be more important during the initial learning of the relative magnitude and likelihood of rewards associated with different options (Mobini et al., 2002; Pais-Vieira et al., 2007; Zeeb and Winstanley, 2011).

The PL region of the mPFC (anatomically homologous to Area 32 of the anterior cingulate cortex in primates) plays a more evaluative role in refining risk/reward decision biases. Inactivation of this region increased risky choice when reward probabilities shifted from good-to-bad (100→12.5%), but had the opposite effect when reward probabilities increased over a session (Figure 1B, top left; St Onge and Floresco, 2010). However, this region of the PFC does not appear to influence the direction of risky choice when reward probabilities remain static within a session. This combination of findings led to the conclusion that the PL region of the PFC guides decisions by monitoring actions and outcomes to track variations in reward probability and modify decision biases to promote optimal patterns of choice. These functions are in turn mediated via top-down control of the BLA. Disconnection of the descending PFC→BLA pathway (but not ascending BLA→PFC projections) disrupted decision-making in a manner similar to bilateral inactivation of the mPFC (Figure 1B, top right; St Onge et al., 2012b). Under these conditions, animals were less sensitive to reward omissions, showing a decrease in lose-shift behavior. This collection of findings suggests that the PL region of the PFC plays a supervisory role during risk/reward decision-making, monitoring rewarded and non-rewarded actions over time. As the profitability of riskier options varies, the PFC aids in learning about changes in reward contingencies, which may facilitate shifts in decision biases. Alterations in decision biases are executed via descending pathways from PFC to BLA, which temper the influence that BLA-NAc circuitry exerts over the direction of choice. Thus, within these networks, subcortical circuits linking the BLA and NAc provide a visceral, intuitive bias towards options that may yield larger rewards (“go big”), whereas the frontal lobes aid in refining the choice biases to promote more optimal patterns of choice.

Dopamine

The influence that these limbic-cortico-striatal networks exert over action selection during risk/reward decision-making is further refined by DA transmission within forebrain terminal regions. Psychopharmacological studies have revealed key roles for mPFC and NAc D1 receptors in mitigating sensitivity to non-rewarded actions. Blockade of these receptors in either region reduces risky choice during probabilistic discounting, with these effects driven by increased lose-shift behavior (St Onge et al., 2011; Stopper et al., 2013). Thus, activity at these receptor sites promotes exploitation of more favorable situations by facilitating biases towards potentially more profitable options despite their uncertainty. In essence, D1 receptors aid in overcoming uncertainty costs and help a decision-maker keep an “eye on the prize”, maintaining choice biases even when risky choices do not always yield rewards. These functions may be mediated in part through modulation of BLA inputs to these regions, given that the BLA also mediates negative feedback sensitivity (Figure 1B) and D1 receptor activity can potentiate firing of NAc neurons driven by BLA inputs (Floresco et al., 2001; Floresco 2007).

In comparison to D1 receptor activity, D2 receptors in the PFC appear to facilitate modifications in decision biases and promote exploration of different options in response to changes in reward probabilities. Antagonism of these receptors retards shifts in choice biases and increases risky choice during probabilistic discounting when the odds vary from “good-to-bad”, similar to the effects of PFC inactivation (St Onge et al., 2011). The seemingly opposite effects of PFC D1 and D2 receptors may be mediated via actions of DA on separate populations of PFC pyramidal neurons that express one of these receptors exclusively (Gee et al., 2012; Seong and Carter, 2012), or their differential effects on the network activity of PFC neuronal populations (Durstewitz et al., 2000; Seamans and Yang, 2004). On the other hand, manipulation of NAc D2 receptors does not alter risky choice in this assay (Stopper et al., 2013). This is somewhat surprising, given that systemic D2 antagonists reduce preference for larger, uncertain rewards (St Onge and Floresco, 2009). In this regard, it is notable that separate populations of striatal neurons expressing D1 or D2 receptors have been proposed to regulate different patterns of behavior. D1-containing cells may be more important for promoting approach behaviors, whereas selective activation of neurons expressing D2 receptors can be aversive, suggesting that these cells may regulate avoidance behaviors (Lobo et al., 2010; Kravitz et al., 2012). Thus, the apparent lack of involvement of NAc D2 receptors in mediating probabilistic discounting may be related to the fact that in this assay, risky choices are not associated with any explicit punishments per se, but only the possibility of receiving a reward or not. As will be discussed in subsequent sections, striatal D2 receptors may play a more prominent role in regulating risky decision-making when certain actions may yield more preferred rewards but also deliver aversive consequences as well.

Tonic and phasic dopamine signaling and decision-making

Subsequent microdialysis studies provided additional insight into how fluctuations in PFC and NAc DA release relate to modifications in decision biases in well-trained rats performing the same probabilistic discounting task (St Onge et al., 2012a). Within the PFC, DA levels corresponded to changes in large/risky reward probabilities irrespective of whether the odds of obtaining the larger reward decreased or increased over a session. Thus, when the odds were initially 100% and then decreased across blocks, there was a robust initial increase in PFC DA efflux (~80–90% above baseline) that steadily declined over the session, and the opposite profile was observed when the odds shifted from “bad-to-good” over a session. This experiment included a key, yoked-reward control group consisting of rats that were not required to press any levers or make any decisions, but instead were trained to receive food delivered passively on a schedule matched to rats performing the decision-making task. Yoked rats displayed a near-identical profile of PFC DA efflux to that observed during decision-making, confirming that the fluctuations in PFC DA transmission during either condition corresponded primarily to changes in the relative rate of reward received. These findings suggest that DA input to the frontal lobes conveys information about changes in the relative amount of reward availability, and that dynamic fluctuations in mesocortical DA efflux may serve as a reward “running-rate meter,” informing the PFC about changes in reward rates that can aid in adjusting choice accordingly.
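One simple way to picture a reward “running-rate meter” is as a running average of recent reward that rises and falls with the rate of reward received. The sketch below uses an exponentially weighted moving average purely as an illustration; this form, the smoothing parameter `alpha`, and the example pellet sequence are assumptions and are not a model proposed or fitted in the microdialysis study.

```python
def running_reward_rate(pellets_per_trial, alpha=0.2, initial=0.0):
    """Track a running estimate of reward rate with an exponential moving average.

    `alpha` (ASSUMED value) sets how strongly each new trial updates the estimate.
    Returns the estimate after every trial.
    """
    rate = initial
    history = []
    for pellets in pellets_per_trial:
        rate += alpha * (pellets - rate)  # move the estimate towards the latest outcome
        history.append(rate)
    return history


if __name__ == "__main__":
    # Hypothetical "good-to-bad" session: rich early trials, leaner later trials.
    session = [4, 4, 4, 4, 0, 4, 0, 0, 1, 1, 0, 1]
    for trial, rate in enumerate(running_reward_rate(session), start=1):
        print(f"trial {trial:2d}: estimated reward rate = {rate:.2f}")
```

Because such an estimate depends only on the reward received and not on the actions taken to obtain it, it would behave the same way for a yoked animal receiving an identical reward schedule, which parallels the yoked-control result described above.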

Within the NAc, fluctuations in tonic DA levels were also related to changes in reward availability over time. However, DA levels were higher in choice situations during periods when delivery of the larger reward was uncertain, compared to yoked animals that received the same amount of food delivered passively. Thus, rats that had to choose during periods when their actions might not yield a reward displayed higher DA levels compared to those that received the same amount of food but did not have to make any actions to receive it. In addition, changes in NAc DA corresponded very closely to changes in choice behavior, and DA levels were higher during free choice versus forced choice periods. Thus, variation in NAc DA signaling during risk/reward decision-making appears to integrate multiple types of information, including reward uncertainty, choice behavior, and changes in reward availability over time.

Drugs like amphetamine cause large increases in tonic DA efflux within the PFC and NAc. With respect to risk/reward decision-making, these treatments would be expected to occlude changes in these signals associated with variations in reward availability, leading to a more static tonic DA signal. In this regard, amphetamine markedly impairs shifts in risk/reward decision biases during probabilistic discounting, “locking in” choice preferences apparent during the start of a session, in a manner similar to PFC inactivation (St Onge and Floresco, 2009; St Onge et al., 2010). This combination of findings suggests that modifications in choice biases when reward probabilities are volatile may not be regulated as much by the absolute levels of tonic DA within forebrain regions, but rather may be driven primarily by fluctuations of these signals that occur over time.

DA signaling in the NAc is segregated into different compartments regulated by distinct modes of transmission (Grace, 1991; Floresco, 2007; Grace et al., 2007). Microdialysis techniques measure slower changing levels of extrasynaptic or “tonic” DA. In contrast, “phasic” signaling comprises a more spatially and temporally restricted signal (<1 s) mediated by burst firing of DA neurons. Recent in vivo voltammetry studies have revealed that different aspects of cost/benefit decision-making are associated with changes in phasic DA signaling within the NAc. Phasic DA signals track decision outcomes during risk/reward decision-making, wherein receipt of larger versus smaller rewards triggers proportional increases in DA, and reward omissions cause phasic suppressions or “dips” in DA levels (Sugam et al., 2012), in keeping with the idea that these signals encode reward prediction errors (Schultz, 2013). In addition, phasic DA signals prior to action selection appear to encode the expected availability of larger/more-preferred rewards (Day et al., 2010; Sugam et al., 2012).
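The reward prediction error idea can be stated compactly as the difference between the reward received and the reward expected. The sketch below applies that difference to the risky option of the discounting task; treating the expectation as probability times pellet count is a simplifying assumption for illustration, and the function names are hypothetical.

```python
def prediction_error(received_pellets, expected_pellets):
    """Reward prediction error: outcome minus expectation."""
    return received_pellets - expected_pellets


def risky_outcome_errors(probability, pellets=4):
    """Prediction errors for a rewarded and a non-rewarded risky choice.

    ASSUMPTION: the expectation equals probability * pellets.
    """
    expected = probability * pellets
    return {
        "rewarded": prediction_error(pellets, expected),  # positive: phasic increase
        "omitted": prediction_error(0, expected),         # negative: phasic "dip"
    }


if __name__ == "__main__":
    for p in (0.5, 0.125):
        print(p, risky_outcome_errors(p))
```

In this simplified picture a fully predicted outcome, such as the small/certain reward, yields an error of zero, whereas an unexpected large reward yields a positive error and an omission yields a negative one.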

Pharmacological manipulations disrupt both tonic and phasic DA signaling, making it difficult to disentangle the specific contribution of pre-choice and outcome-related phasic DA signals to action selection during risk/reward decision-making, relative to slower-changing tonic DA levels. Circumvention of this issue may be achieved through the use of more temporally-precise manipulations to override natural phasic signals associated with different phases of decision-making. A recent study used this approach with rats well-trained on a modified version of the probabilistic discounting task described previously (Stopper et al., 2014b). Brief trains of electrical stimulation were delivered to either the DA cell body region within the ventral tegmental area, or to the lateral habenula (LHb), a nucleus that exerts powerful inhibitory control over the firing of midbrain DA neurons. LHb stimulation induces robust suppression of DA neural firing that resembles phasic dips associated with reward omissions (Christoph et al., 1986; Ji and Shepard, 2007). This is mediated via disynaptic circuits linking excitatory LHb projections to the rostromedial tegmental nucleus (RMTg), which in turn sends inhibitory GABAergic projections onto DA neurons (Jhou et al., 2009; Lammel et al., 2012).

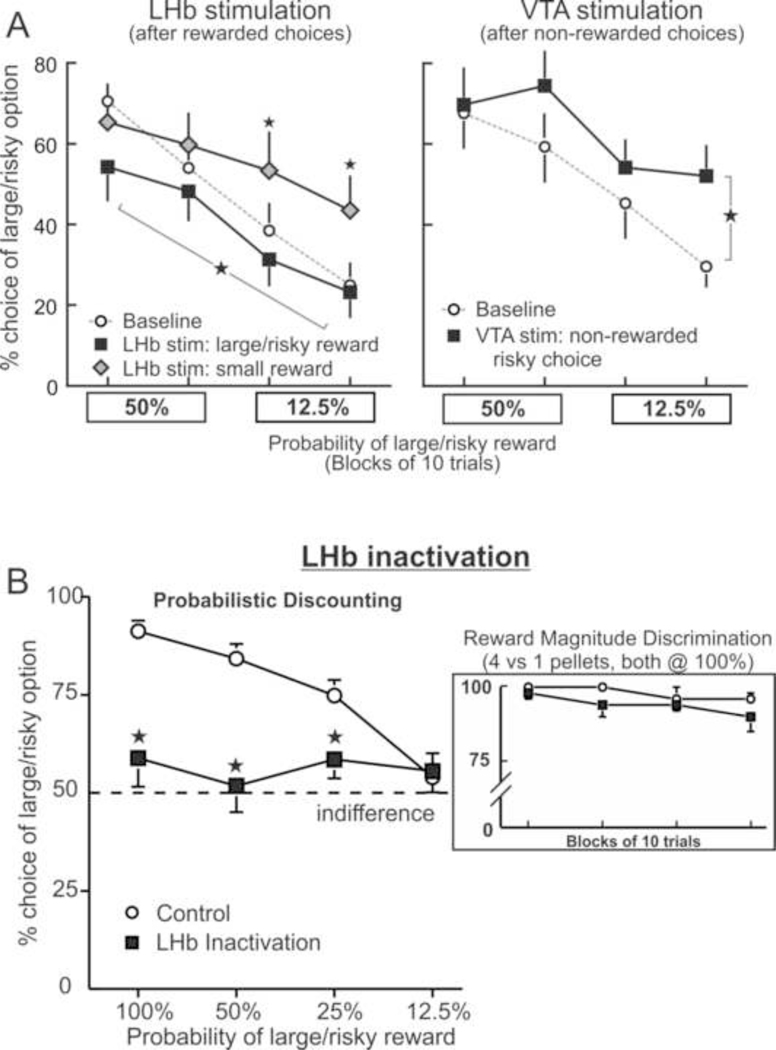

Stimulation of the LHb (or RMTg) coinciding with rewarded choices markedly affected subsequent action selection. When animals played risky and were rewarded, stimulation during reward delivery (i.e. periods associated with phasic increases in DA; Sugam et al., 2012) shifted bias towards the smaller/certain option (Figure 2A, left). In contrast, stimulation contingent on delivery of the smaller/certain reward had the opposite effect, shifting biases towards the large/risky option. Notably, these manipulations did not affect preference for larger, certain rewards versus smaller ones, in keeping with the idea that DA does not play a role in mediating decisions based primarily on differences in reward magnitude. Conversely, when the VTA was stimulated following a non-rewarded risky choice (i.e. overriding DA phasic dips associated with these events), rats showed reduced sensitivity to reward omissions and an increase in risky choice, behaving as if the risky option was providing more reward than it actually was (Figure 2A, right). The findings that overriding phasic bursts and dips in DA activity associated with decision outcomes can redirect action selection provide compelling evidence that these signals convey short-term feedback information about recent action outcomes that can increase or decrease the likelihood that those actions are selected again (Schultz, 2013).

Figure 2.

Phasic DA signals and risk/reward decision-making. (A) Suppression of outcome-related phasic DA signals via LHb stimulation altered choice biases. LHb stimulation during receipt of larger or smaller rewards (left) shifted bias towards the alternative option. Conversely, inducing phasic bursts in DA activity following non-rewarded risky choices (via VTA stimulation, right) increased risky choice. Adapted from Stopper et al. (2014b). (B) Inactivation of the LHb induced a massive disruption in decision-making, causing rats to be indifferent to either option irrespective of their relative value. However, these manipulations did not affect choice during simpler decisions when choosing between smaller vs. larger certain rewards of equal costs (inset). Adapted from Stopper and Floresco (2014). For all figures stars denote significant differences at p < 0.05.

In another experiment, LHb stimulation was given prior to rats making a choice, to ascertain whether these signals can influence action selection. This manipulation markedly increased choice latencies, suggestive of reduced incentive salience attributed to the reward-associated levers (Flagel et al., 2011; Danna et al., 2013). Suppression of pre-choice DA signals also altered choice patterns, in that it reduced selection of the more preferred of the two options, with this effect being most pronounced when rats normally preferred the option associated with the larger reward. Thus, phasic increases in DA occurring prior to action selection appear to aid in directing behavior towards more preferable rewards (Morris et al., 2006).

This collection of findings suggests that DA phasic bursts and dips, in conjunction with fluctuations in tonic DA signaling, play separate yet complementary roles that may form a system of reward checks and balances. Outcome-related phasic DA signals provide rapid feedback on whether or not recent actions were rewarded to quickly update a decision-maker’s framework for subsequent action selection. As these signals are integrated by other nodes of DA decision circuitry to establish and modify choice biases, phasic increases in DA prior to a choice promote expression of preferences for more desirable options. In comparison, slower fluctuations in tonic DA may provide a longer-term accounting of reward histories and average expected utility so that individual outcomes are not overemphasized, ensuring that ongoing decision-making proceeds in an efficient, adaptive and more rational manner.

The lateral habenula and subjective decision biases.

Stimulation of the LHb can be a useful tool to manipulate phasic bursts in DA neuron activity. With respect to the normal function of this nucleus, neurophysiological studies have revealed that LHb neurons encode negative reward prediction errors opposite to that displayed by DA neurons, exhibiting increased phasic firing in expectation of, or after, aversive events and reduced firing after positive outcomes (Matsumoto and Hikosaka, 2007, 2009a; Bromberg-Martin and Hikosaka, 2011). Importantly, glutamatergic neurons of the LHb display relatively high rates of spontaneous activity [>30 Hz; (Matsumoto and Hikosaka, 2007; Hong et al., 2011)]. As such, reductions in firing associated with rewarded outcomes would be expected to convey as much information to downstream structures as phasic increases in activity associated with reward omissions or other unpleasant events. Nevertheless, an impression that has emerged is that the LHb may serve as an “anti-reward” center that may underlie aversion or disappointment, a supposition supported by the findings that LHb stimulation promotes conditioned avoidance and reduces reward-related responding (Lammel et al., 2012; Stamatakis and Stuber, 2012). However, a limitation of these approaches is that they cannot readily identify how normal functioning of this nucleus contributes to overt behavior. Although prolonged stimulation of the LHb can induce aversive states, these studies are looking at only one side of the coin, as they cannot mimic the dynamic increases and decreases in phasic firing normally associated with aversive/rewarding events.

To explore how LHb signals may contribute to risk/reward decision-making, Stopper and Floresco (2014) investigated the effects of inactivation of this nucleus on probabilistic discounting. Given the general impression that the LHb conveys some form of “disappointment” signal that encodes reward omissions, combined with the observations that LHb inactivation increases mesolimbic DA release (Lecourtier et al., 2008), a parsimonious expectation would be that these manipulations might increase preference for the larger/uncertain reward. However, these manipulations induced a much more profound effect, in that they completely abolished any bias subjects had for either option (Figure 2B). Disruption of LHb signal outflow rendered animals absolutely indifferent to which option may be more preferable, inducing unbiased and random patterns of responding so that choice of either option did not differ from chance. Similar effects of LHb inactivation were observed in rats performing a delay discounting task in which they chose between either a small, immediate reward or larger, delayed reward (both delivered with 100% certainty). However, LHb inactivation did not affect preference for larger, cost free rewards vs smaller ones (Figure 2B, inset).

The massive disruption in decision biases induced by suppression of the LHb suggests that the differential signals it encodes about the expectation or occurrence of negative/positive events play a critical role in helping an organism make up its mind when faced with ambiguous decisions regarding the costs and benefits of different actions. Activity within this nucleus aids in biasing behavior from a point of indifference toward committing to choices that may yield outcomes perceived as more beneficial. Given the powerful influence this nucleus exerts over DA neural activity, expression of these subjective preferences is likely achieved through subsequent integration of these dynamic signals by regions downstream that include midbrain DA neurons. Thus, integration of differential LHb reward/aversion signals may provide a tone that is crucial for expression of preferences for one course of action over another. In turn, suppression of these signals would be expected to leave phasic (and tonic) DA signaling in disarray, rendering a decision-maker incapable of determining which option may be “better”, indicating the importance of these signals in guiding ongoing reward seeking.

The findings reviewed above have broadened our understanding of the relative contribution of different limbic, striatal, cortical and dopaminergic circuits to decision-making about uncertain rewards. However, it is important to emphasize that many real-life decisions that we face not only involve choices to maximize our payoffs, but often require us to avoid unpleasant consequences as well. In this regard, recent studies have shown that although there exists considerable overlap between the circuits that guide choice towards better rewards and those that move us away from aversive outcomes, there are considerable dissociations within the neural machinery that regulate these different types of decisions.

Neural circuitry mediating punishment-related decision-making

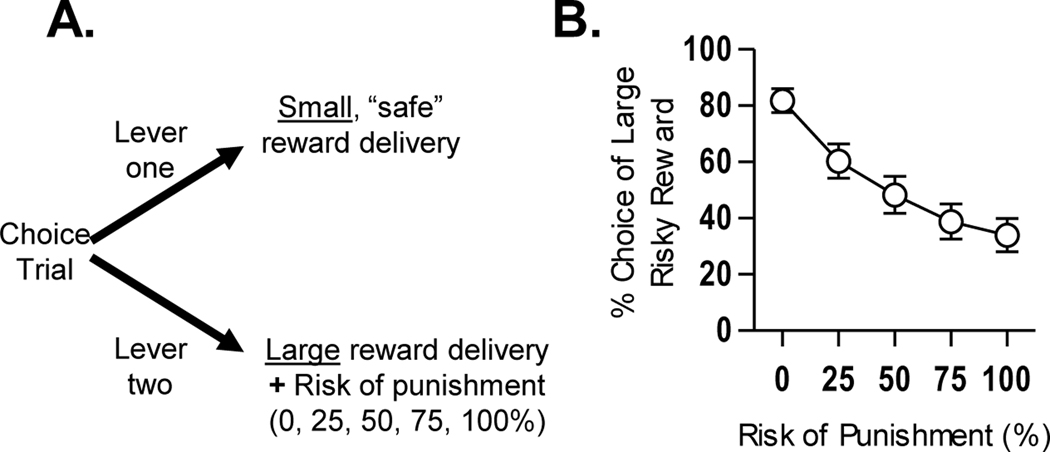

As described in the preceding section, many real-life decisions involve choices in which there is a risk that a desired outcome will not be delivered (i.e., will be omitted). In other situations, however, the “risk” does not solely involve reward omission, but may also involve the possibility of a distinct, adverse outcome (a punishment), which can offset the subjective value of the desired reward. For example, a compulsive gambler’s loss in a high-stakes game of blackjack represents not only a failure to win (reward omission), but also a set of punishing consequences resulting from the lost money (e.g., gambling debts, family discord, etc.). Similarly, the decision to purchase and consume cocaine will likely result in the desired outcome (a cocaine high), but may be accompanied by risks of medical or legal consequences. The presence of these potential punishments in the latter example will likely alter a cocaine user’s decision calculus, and may decrease the likelihood of future cocaine use, depending on the probability, magnitude, and timing of the punishment. Unlike reward omission, however, for which the value can be calculated as a function of reward probability, it is less clear how punishment value is calculated (as it involves a stimulus modality different from the reward), and how such punishment value is integrated with reward value to ultimately arrive at a decision. To begin to address these issues, Setlow and colleagues have employed a behavioral task in rats that involves choices between a small, “safe” food reward and a large, “risky” food reward accompanied by risk of punishment. This task is described below, along with data indicating important differences in the neural mechanisms underlying the effects of punishment vs. reward omission on choice behavior.

A rat model of decision-making under risk of punishment.

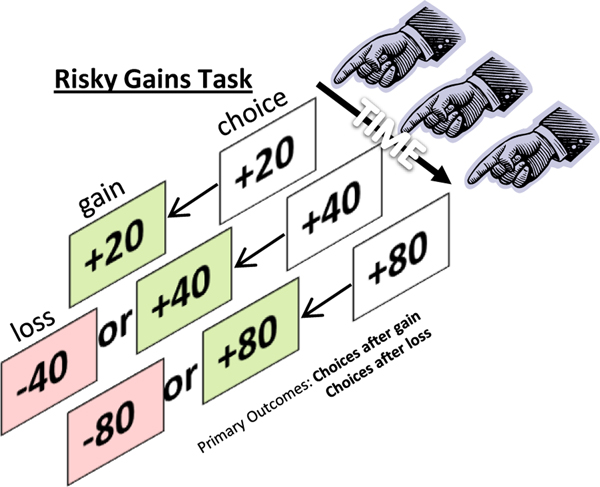

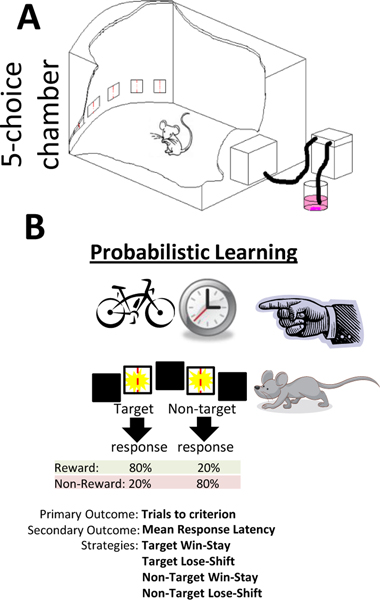

A number of behavioral tasks have been developed in rodents (largely in rats) to assess how factors such as effort, reward delay, and reward omission influence choice behavior (e.g. Evenden and Ryan, 1996; Cardinal and Howes, 2005; Floresco et al., 2008b; see preceding sections). These tasks employ the same basic design, in which rats make discrete-trial choices between two response options, one which yields a small reward and the other which yields a large reward accompanied by a variable response “cost” (effort, delay to reward delivery, probability of reward omission, etc.). The “Risky Decision-making Task” (RDT) uses this same design, but incorporates a variable probability of footshock punishment that accompanies delivery of the large reward (see Figure 3A). As shown in Figure 3B, rats tested in this task prefer the large reward when the probability of punishment is low, but shift their preference away from the large reward (and toward the small, safe reward) as the probability of punishment increases. The degree of preference for the large, “risky” reward is strongly dependent on shock intensity, with higher intensities resulting in decreased choice of the large reward (Simon et al., 2009; Cooper et al., 2014; Shimp et al., 2015). Somewhat surprisingly, the magnitude of the large reward appears to play less of a role in directing choice behavior in this task. In well-trained rats, varying the large reward magnitude from 2 to 5 food pellets does not shift preference for this reward (although decreasing its magnitude to 1 food pellet does sharply reduce preference for this reward, indicating maintained attention to reward magnitude; Shimp et al., 2015; Orsini et al., 2015). These data are supported by the finding that reducing food motivation via pre-test satiation does not alter choice preference, although it does increase the number of omitted trials (Simon et al., 2009).
In combination, these data suggest that, at least under these conditions, punishment plays a more significant role than reward motivation in guiding reward-related decision-making under risk of punishment.

Figure 3.

(A) Schematic of the risky decision-making task. Food-restricted rats are trained in operant chambers to make discrete-trial choices between two response levers. A press on one of the levers (“Lever one”) delivers a small, “safe” food reward (a single food pellet) and a press on the other (“Lever two”) delivers a large food reward (several food pellets) that is accompanied by a variable probability of footshock. Test sessions are organized into five blocks of trials, in which the probabilities of shock delivery are 0, 25, 50, 75, and 100% (usually in ascending order). Each block consists of 8–10 free-choice trials, which are preceded by 8 forced-choice trials used to familiarize rats with the probability of shock delivery for that block. The large reward is delivered after each choice of the large reward lever, irrespective of shock delivery [see Simon and Setlow (2012) for further details of task design]. (B) Mean (+/− SEM) performance of 42 male Long-Evans rats tested in the risky decision-making task. After 20–25 training sessions, rats on average prefer the large reward when the risk of accompanying shock is low, but shift their choices to the small, safe reward as the risk of shock increases.
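The session structure described in this caption can be laid out programmatically. The sketch below is a minimal illustration built only from the numbers given above (five blocks, shock probabilities of 0 to 100% in ascending order, 8 forced-choice trials followed by 8–10 free-choice trials per block, and a large reward delivered regardless of shock); the function names and data layout are assumptions, and it is not the software used to run the task.

```python
import random

SHOCK_PROBABILITIES = [0.0, 0.25, 0.5, 0.75, 1.0]  # ascending order, per the caption
FORCED_TRIALS_PER_BLOCK = 8


def build_session(free_trials_per_block=10):
    """Return a list of (block_number, shock_probability, trial_type) tuples."""
    if not 8 <= free_trials_per_block <= 10:
        raise ValueError("the caption gives 8-10 free-choice trials per block")
    session = []
    for block, p_shock in enumerate(SHOCK_PROBABILITIES, start=1):
        # Forced-choice trials come first and familiarize rats with the block's shock risk.
        session += [(block, p_shock, "forced")] * FORCED_TRIALS_PER_BLOCK
        session += [(block, p_shock, "free")] * free_trials_per_block
    return session


def large_lever_outcome(p_shock, rng=random):
    """Outcome of a press on the large-reward lever.

    The large reward is always delivered; shock accompanies it with probability p_shock.
    """
    return {"large_reward": True, "shock": rng.random() < p_shock}


if __name__ == "__main__":
    session = build_session(free_trials_per_block=10)
    print(len(session), "trials in total")
    print(large_lever_outcome(0.5))
```

Note the contrast with probabilistic discounting: here the uncertainty attaches to the punishment rather than to delivery of the large reward itself.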

Dopamine signaling and decision-making under risk of punishment.

As described throughout this review, DA signaling plays a central role in modulating decision-making. This role is usually framed in terms of reward [for example, by providing phasic signals that convey information regarding reward probability, timing, or magnitude (Schultz, 2013)]. However, there is considerable evidence that DA signaling is involved in processing aversive events as well (Brischoux et al., 2009; Bromberg-Martin et al., 2010; Badrinarayan et al., 2012; McCutcheon et al., 2012). In the RDT, acute systemic administration of amphetamine causes a dose-dependent decrease in rats’ choice of the large, risky reward (i.e., increased risk aversion) (Simon et al., 2009; Mitchell et al., 2011). This effect appears to be mediated by D2-like DA receptors, as it is blocked by co-administration of a D2-like (eticlopride), but not D1-like (SCH23390) antagonist, and mimicked by systemic administration of a D2-like (bromocriptine), but not D1-like (SKF81297) agonist (Simon et al., 2011). Further support for a role of D2 receptor signaling in risk aversion is provided by data showing that expression of D2 receptor messenger RNA (mRNA) in the striatum as assessed with in situ hybridization (which likely reflects post-synaptic receptors) is inversely related to risk-taking, such that greater preference for the large, risky reward is associated with lower D2 receptor mRNA expression (Simon et al., 2011; Mitchell et al., 2014). These mRNA data are consistent with the systemic behavioral pharmacological data (D2-like receptor activation reducing choice of the large reward associated with a potential footshock), and their functional significance is supported by the findings that direct administration of a D2-like agonist (quinpirole) into NAc reduces choice of the large, risky reward (Mitchell et al., 2014). 
Considered together, these data suggest that activation of (ventral) striatal D2 receptors biases choice behavior away from options associated with potential punishment.

Findings that D2 receptor activation decreases preference for rewards associated with potential punishment are consistent with a large literature implicating these receptors in performance of active avoidance tasks. In such tasks, rodents learn to make a response (a lever press or movement from one chamber to another) upon delivery of an auditory or visual signal in order to avoid a footshock punishment. D2 receptor antagonists reduce the number of successful avoidance responses, suggesting that activation of these receptors is necessary to motivate punishment avoidance (Salamone, 1994; Wadenberg and Hicks, 1999). This idea may appear to contradict recent theoretical proposals that reductions in D2 receptor activity (caused by phasic decreases in DA tone induced by aversive events) are critical for avoidance (Frank and Surmeier, 2009; Bromberg-Martin et al., 2010). A recent paper by Oleson et al. (2012), however, provides a potential resolution. These authors showed that during performance of a signaled avoidance task, anticipation or delivery of a footshock causes a transient decrease in DA release in ventral striatum measured with in vivo voltammetry, but anticipation of the shock causes an increase in DA release when that anticipation is followed by a successful avoidance response. In combination with data from the RDT and active avoidance tasks described above, these findings suggest that a transient increase in DA release (and consequent D2 receptor activation) in anticipation of an aversive outcome may promote avoidance of that outcome. In contrast, transient decreases in DA release may be more important for learning about aversive events than for actively addressing them. It will be of considerable interest in future work to determine how transient DA signals are affected in choice contexts that involve concurrent presentation of rewards and punishments (as in the RDT).

Aside from potentially distinct roles in learning versus performance of avoidance behavior, the role of D2 receptors in risky decision-making may depend on the nature of the risky outcome involved. In the probabilistic discounting task described above, systemic administration of a D2-like agonist increases choices of large, risky rewards, whereas the same drug decreases choice of large, risky rewards in the RDT (Simon et al., 2009; St Onge and Floresco, 2009). The difference in the direction of these effects may be due to the relative salience of the rewarding and aversive elements of each task. Killcross et al. (1997) showed that amphetamine potentiates the degree to which both appetitive and aversive cues control choice behavior, suggesting that the drug enhances the salience of these stimuli irrespective of their valence (positive or negative). As described above, choice preference in the RDT is largely directed by the magnitude and probability of the footshock punishment (Shimp et al., 2015), whereas in the probabilistic discounting task (which is very similar in structure and task demands) it seems likely that the reward plays a more salient role in choice behavior. Hence, the apparently opposite effects of D2-like receptor stimulation in these two otherwise similar risk-taking tasks may be due to the valence of the most salient feature of the tasks. In the RDT, D2 receptor activation most strongly potentiates the impact of the punishment (leading to greater risk aversion), whereas in the probabilistic discounting task, D2 receptor activation most strongly potentiates the impact of the large reward (leading to increased risky choice). A potential neural substrate for these effects lies in the recent discovery of a population of midbrain dopamine neurons that increases phasic activity in response to both appetitive and aversive stimuli (Matsumoto and Hikosaka, 2009b). 
Such neurons are proposed to encode the “motivational salience” of significant events, irrespective of their affective valence (Bromberg-Martin et al., 2010), and DA signals produced by these neurons could account for the effects of D2 receptor activation in both appetitive and aversive contexts. It should be noted, however, that the type of aversive outcome (reward omission vs. punishment) is likely not the sole determinant of the effects of dopaminergic manipulations on risky decision-making. For example, whereas D2-like agonist administration into NAc decreases choice of large, risky rewards in the RDT (Mitchell et al., 2014), this same manipulation has no effect in the probabilistic discounting task (Stopper et al., 2013). Moreover, research from several laboratories employing rodent versions of the Iowa Gambling Task, in which the risky, aversive outcome is a timeout period during which rewards cannot be earned, shows that systemic amphetamine administration reduces preference for risky options in favor of safer ones, which is similar to its effects in the RDT (Zeeb et al., 2009; van Enkhuizen et al., 2013a). Clearly, much remains to be understood regarding how different types of aversive outcomes are encoded in the context of risk-taking tasks, and how such encoding is reflected at the neurobiological level.

Neural circuitry of decision-making under risk of punishment

Beyond dopamine signaling in the striatum, the neural mechanisms of decision-making under risk of punishment are only beginning to be investigated. However, recent data indicate roles for several ventral striatal afferent regions. The BLA sends a strong, direct projection to the NAc, and the two structures have been implicated as a functional circuit involved in several aspects of behavior (Everitt et al., 1991; Setlow et al., 2000; Floresco et al., 2001; Setlow et al., 2002; Stuber et al., 2011). The BLA plays a critical role in decision-making under risk of punishment as well, as lesions of this structure in well-trained rats increase choice of the large, risky reward in the RDT (Orsini et al., 2015). A series of control experiments show that this lesion effect is not due to altered reward sensitivity or impaired sensitivity to the footshock punishment, suggesting that the critical role for the BLA is in integrating the risk of punishment with reward magnitude information to guide adaptive choice behavior. In contrast to the BLA, lesions of the OFC in well-trained rats cause a decrease in choice of the large, risky reward in the RDT (Orsini et al., 2015). This effect does not appear to be due to increased anxiety or impaired sensitivity to the difference between the large and small reward [although see Kheramin et al. (2002)], but instead may result from an impaired ability to accurately calculate punishment probabilities, leading to a default strategy of punishment avoidance. Interestingly, both of these lesion effects are opposite to those observed in the probabilistic discounting task, in which BLA inactivation decreases and (medial) OFC inactivation increases choice of large rewards associated with risk of omission (Ghods-Sharifi et al., 2009; Stopper et al., 2014a).
This pattern of differential results in the two tasks is consistent with the differential effects of dopaminergic manipulations described above, and further indicates that the neural systems supporting risky decision-making are engaged differently depending on the nature of the risk involved. The involvement of neural systems beyond BLA and OFC is only beginning to be evaluated. In situ hybridization data suggest that D2 receptor signaling in OFC and mPFC may be involved in the ability to flexibly adapt choice strategies in response to changing risks of punishment (Durstewitz and Seamans, 2008; Simon et al., 2011; see preceding section); however, this hypothesis has yet to be tested experimentally in the RDT.

Future considerations for research on decision-making under risk of punishment

The findings described above suggest that decision-making under risk of punishment has unique features that render it at least partially distinct from decision-making involving other types of risks (particularly reward omission). These distinctions are of considerable interest from a basic science perspective; however, they represent a challenge for applying knowledge gained in these laboratory models to clinical settings. For example, if DA receptor activation can either increase or decrease preference for large, risky rewards depending on the type of risk involved (Simon et al., 2009; St Onge and Floresco, 2009), what is the predicted outcome of stimulant medications on real-world risky decision-making in patient populations (e.g., does it depend on the decision context and/or individual sensitivity to rewards vs. punishments?). One study indicates that methylphenidate administration in children with ADHD reduces risk-taking in the Cambridge Gambling Task, in which the “risk” is possible loss of points earned during the task (DeVito et al., 2008); however, to our knowledge, this issue has not been evaluated systematically.

Finally, although progress has been made in elucidating the neurobehavioral mechanisms that guide decision-making under risk of punishment, there is much that remains unknown. In particular, it is as yet largely unclear how risk of punishment is encoded at the circuit, neurochemical, and single-cell activity levels. Such information is important, as risky decision-making is dysregulated in a number of psychiatric disorders, including addiction, bipolar disorder, ADHD, and anorexia (see the following section for more on this topic). In this regard, studies using animal models will continue to provide insight into the pathophysiological mechanisms that underlie abnormal decision-making in these disorders. For example, a recent study showed that greater preference for large, risky rewards in the RDT during adolescence predicts greater cocaine self-administration during adulthood, and that cocaine self-administration itself can in turn cause further increases in preference for large, risky rewards (Mitchell et al., 2014). Such links between high levels of risk-taking behavior and drug use are evident in humans as well (Bechara et al., 2001; Bornovalova et al., 2005; Leland and Paulus, 2005; Gowin et al., 2013), and support the utility of modeling this type of decision-making in animals.

Insights into abnormal risky decision-making in humans from animal models

One of the key points in understanding the neural mechanisms underlying decision-making and risk-taking behavior in animals is how these mechanisms pertain to human performance in similar tasks. While there is an academic interest in understanding such mechanisms, there is also a pressing real-world need to understand them, since they are deleteriously affected in numerous neuropsychiatric disorders (Paulus, 2007). The loss of optimal real-world decision-making can have a dramatic effect on a sufferer’s everyday life, their ability to integrate into society, and their chances for employment. The subsequent section will review some of the tasks used to measure decision-making in clinical populations, deleterious behaviors associated with these disorders, and links between these findings and those obtained from preclinical studies.

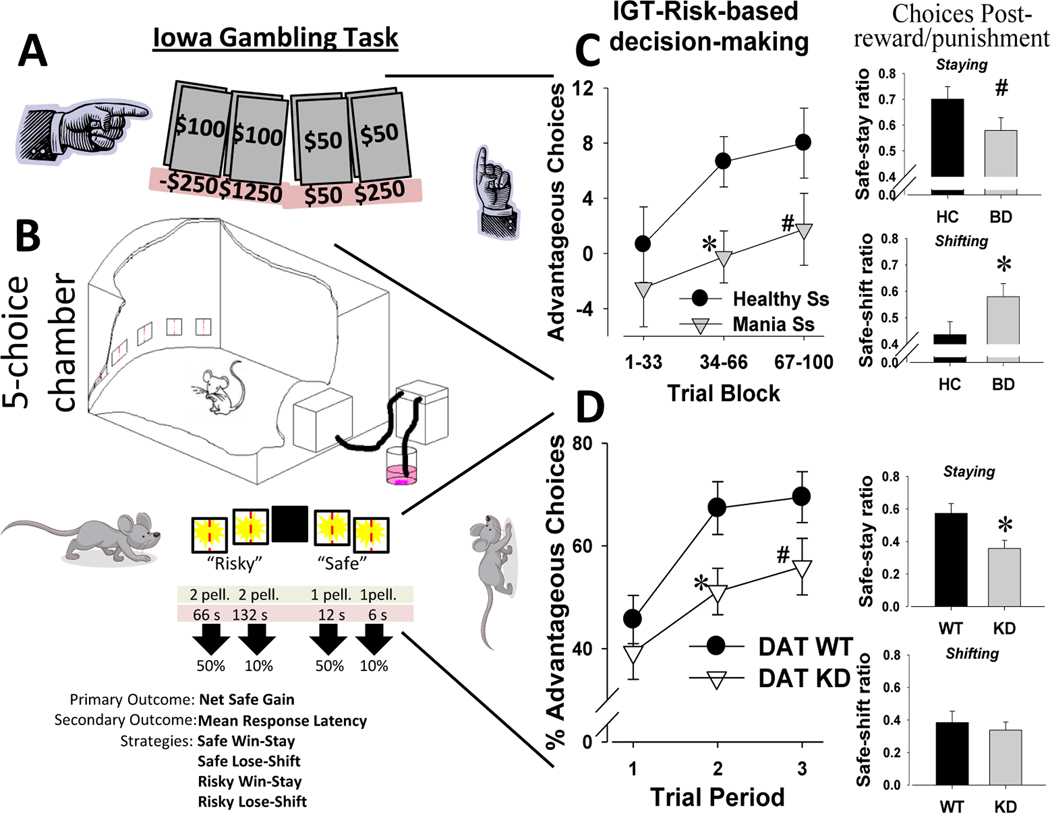

Assessing real-world decision making under reward and punishment using the Iowa Gambling Task

As described above, Bechara and colleagues provided the first neurobiological clues as to how the brain mediates decision-making under risk using the IGT (Bechara et al., 1994). The IGT assesses real-world decision-making by providing subjects with four card deck options mixed with varying reward (plus points), punishment (minus points), and ratio magnitudes (Bechara et al., 1994; Figure 4A). The IGT has become arguably the most popular paradigm to assess decision-making deficits in psychiatric patients (Toplak et al., 2010; Steingroever et al., 2013). The IGT decks are stacked so that selecting from the high reward but higher punishment (“risky”) decks results in an overall loss over the 100 trials, whereas selecting from the low reward but lower punishment (“safe”) decks results in overall gains. While performing the IGT, subjects tend to initially select the risky decks but rapidly (often by trial 20) switch to selecting from the safe decks, although there is a great deal of variability between subjects (Steingroever et al., 2013; van Enkhuizen et al., 2014). The IGT can be extremely useful when assessing the ability of subjects to withhold responding directed towards highly rewarding stimuli in order to obtain even greater gains in the future. As such, the IGT has been used to examine decision-making under risk in numerous clinical populations.
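The logic of the stacked decks can be made concrete with a small worked example. The sketch below is illustrative only: the deck names and all point values are placeholder assumptions chosen so that the risky decks pay more per card but lose overall, and they should not be read as the payoff schedule of any particular IGT version.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deck:
    name: str
    gain_per_card: int       # points won on every card (assumed value)
    loss_per_10_cards: int   # total points lost across each 10 cards (assumed value)

    def net_per_10_cards(self) -> int:
        """Net points earned over 10 consecutive draws from this deck."""
        return 10 * self.gain_per_card - self.loss_per_10_cards


# Hypothetical payoff schedule: two "risky" and two "safe" decks.
DECKS = [
    Deck("risky_frequent_loss", gain_per_card=100, loss_per_10_cards=1250),
    Deck("risky_rare_loss", gain_per_card=100, loss_per_10_cards=1250),
    Deck("safe_frequent_loss", gain_per_card=50, loss_per_10_cards=250),
    Deck("safe_rare_loss", gain_per_card=50, loss_per_10_cards=250),
]


def net_over_session(deck: Deck, n_trials: int = 100) -> int:
    """Net outcome of drawing only from one deck (n_trials assumed a multiple of 10)."""
    return (n_trials // 10) * deck.net_per_10_cards()


if __name__ == "__main__":
    for deck in DECKS:
        print(f"{deck.name}: {net_over_session(deck):+d} points over 100 trials")
```

Under these assumed values, each risky deck yields a larger gain on every card yet ends the 100 trials with a net loss, whereas each safe deck ends with a net gain, which is the contingency subjects must learn in order to shift their choices.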

Figure 4.