Abstract

A current trend in automotive research is autonomous driving. For the proper testing and validation of automated driving functions a reference vehicle state is required. Global Navigation Satellite Systems (GNSS) are useful in the automation of the vehicles because of their practicality and accuracy. However, there are situations where the satellite signal is absent or unusable. This research work presents a methodology that addresses those situations, thus largely reducing the dependency of Inertial Navigation Systems (INSs) on the SatNav. The proposed methodology includes (1) a standstill recognition based on machine learning, (2) a detailed mathematical description of the horizontation of inertial measurements, (3) sensor fusion by means of statistical filtering, (4) an outlier detection for correction data, (5) a drift detector, and (6) a novel LiDAR-based Positioning Method (LbPM) for indoor navigation. The robustness and accuracy of the methodology are validated with a state-of-the-art INS with Real-Time Kinematic (RTK) correction data. The results obtained show a great improvement in the accuracy of vehicle state estimation under adverse driving conditions, such as when the correction data is corrupted, when there are extended periods with no correction data and in the case of drifting. The proposed LbPM method achieves an accuracy closely resembling that of a system with RTK.

Keywords: machine learning, autonomous vehicles, Inertial Navigation System, Satellite Navigation, Real-Time Kinematic, indoor navigation, reference state

1. Introduction

Autonomous driving has become a popular trend in automotive research. The motivation for autonomous driving ranges from comfort or practicality functions to safety critical applications. Independently of the final use, the fact that the vehicles are machines that can cause serious harm to humans in the event of a malfunction has to be taken into consideration. Therefore, autonomous driving functions need to be subjected to an extensive process of testing and validation which requires a highly accurate reference vehicle state.

A common practice to generate such a highly accurate reference vehicle state is the fusion of data from Inertial Measurement Units (IMU) with measurements from external sensors. Given the extensive scientific community working on these systems, their practicality, and especially their high accuracy, Satellite Navigation (SatNav) receivers have become the most common choice of sensor to fuse with IMUs. Even consumer-grade receivers are capable of acquiring information from various GNSS (such as GPS, GLONASS, Galileo, Beidou, etc.), which enables the receivers to accurately estimate several state variables. Unfortunately, there are common driving situations where satellite signals are either absent or corrupted to an extent that renders them unusable. Some examples of these driving situations are tunnels, bridge underpasses, parking structures or testing halls.

The objective of the present work is to reduce the dependency of INSs on external sensors, while still being able to generate a reference vehicle state. Six key aspects of the estimation of the vehicle state are addressed: (i) the standstill recognition (Section 2.1), (ii) the horizontation of IMU measurements (Section 2.2), (iii) sensor fusion (Section 2.3), (iv) drift detection (Section 2.4), (v) outlier detection for correction data (Section 2.5), and (vi) highly accurate indoor navigation (Section 2.6). It will be shown that the proposed methods are able to provide important improvements in the estimation of the vehicle state even when used individually. Furthermore, if used in combination, the proposed methods allow for an accurate enough estimation of the vehicle state to test and validate autonomous driving functions even in indoor environments.

For an adequate estimation of the vehicle state by means of IMUs, it is essential to ascertain when the vehicle is not moving because it is at this time that both the velocity over ground and the proper acceleration are equal to zero [1]. Even though, historically, vehicle speed sensors were designed to measure velocity rather than when a vehicle is at a standstill [2], they are now able to accurately measure a standstill. Nonetheless, there could be disadvantages if external devices continuously read the vehicle sensor data in real time (for example, through the OBDII interface). Even when various methods [3,4,5] and tools used by manufacturers [6] theoretically allow a CAN-bus use of ≈80% [7], recent studies suggest that CAN-bus messages can still miss their deadlines [8,9,10]. The traffic within a CAN-bus would increment if an external device continuously accesses the vehicle sensor data in real time, which could further increase the risk that the CAN-bus messages miss their deadlines. Consequently, more research is required before considering fusing the vehicle on-board sensors with external IMUs. Alternative sensors for measuring the velocity over ground, such as the Wheel Pulse Transducer (WPT) [11] or the Correvit [12,13] increase testing costs and complexity.

To the best of authors’ knowledge, the only method specifically designed for standstill detection is patented by Robert Bosch GmbH [14]. This method compares the position of an object in consecutive frames of a video provided by a camera in the vehicle, and an algorithm estimates the velocity of the vehicle relative to the object. As with all camera-based methods, there is a trade-off between the spatial resolution and the distance to the recorded objects, which negatively affects the standstill recognition.

The method proposed in this work for standstill recognition is based on a machine learning classifier, and has the key advantage that all required inputs are generated solely with IMU measurements. No additional sensors or infrastructural elements are required, which reduces testing complexity, improves the robustness of the method and makes it highly adequate for indoor navigation.

The state of the vehicle can be estimated once the algorithm has reliably established that the vehicle is moving. If Gauss-Markov stochastic processes are assumed, the most computationally efficient method to estimate the optimum state of moving objects is the Kalman Filter (KF) [15]. The KF algorithm can fuse sensor measurements with the vehicle state predicted by means of a motion model. Since the aim of this work is to generate a reference vehicle state for testing and validation of autonomous driving functions, the motion model used is especially designed for accurate performance under tractive driving.

Two critical parameters for the KF are the measurement noise and the system noise, because they are required to calculate the Kalman gain. Therefore, two methods are proposed to monitor both values, and thus to improve the robustness of the KF: a drift recognition and an outlier detector.

The drift recognition tackles the task of monitoring the vehicle state to detect when the vehicle is drifting. This information is extremely valuable because the motion model used in this research work is optimized for tractive-driving situations. When the vehicle starts to drift, the previously estimated system noise is no longer valid. Knowing when the vehicle is drifting enables the possibility of adjusting the system noise or switching to a better suited motion model.

Other works found in the literature address the issue of drifting too, however, they tend to limit the scope of their research to simulation environments [16,17] or require additional parameters that cannot easily be estimated with an IMU [18]. Conversely, the drift recognition in this work is designed to operate by performing consistency checks between two estimated state variables: the lateral acceleration and the sideslip angle. As demonstrated later, these state variables provide enough information to perform a robust drift detection.

The outlier detector serves as a complement to the drift detector. Although the drift detector monitors the validity of the motion model, the outlier detector does the same for the measurements. This is especially beneficial for SatNav measurements due to the multi-path effect. This effect appears when the signal received from the satellites does not follow a straight path, but bounces off other objects before reaching the SatNav receiver. The multi-path effect can occur as the vehicle drives near objects that are able to reflect the SatNav signal, such as trailers, tall buildings or metallic structures, and causes problems for the methods that measure the vehicle state (trilateration, carrier-phase measurements, etc.). A relevant consequence of the multi-path effect is that it reduces the accuracy of the measured vehicle state. It is quite common to see significant jumps in the measured state variables while the multi-path effect is present. There are several approaches in the literature to deal with the multi-path problem, such as [19,20,21,22,23]. However, to implement these approaches would require access to the pseudo-ranges of the SatNav receivers which is not possible for most consumer-grade devices. Therefore, the problem is addressed by filtering outliers. This filtering is achieved by a consistency check of the measurement vector before it is used as correction data.

In this research work a dynamic low-pass filter is designed to refine the outlier detection method by means of taking the measurement noise into account. This dynamic low-pass filter is highly beneficial for all sensors that estimate a quality indicator for their own measurements, as is the case of the “dilution of precision”, “diminution of precision” [24,25] or standard deviation of the SatNav receivers. Many sensors can track the accuracy associated with the measurements that they perform, but are not always capable of detecting estimation errors of this self-reported accuracy.

The investments made in GNSS [26,27] have provided various advantages, such as (i) high accuracy [28,29], (ii) a low cost for end users [30], and (iii) an extensive scientific community that works on refining its measurements and overcoming its limitations [31]. Arguably, these three advantages make the GNSS the best choice for external sensors in open-sky use-cases. However, there are still situations where the GNSS are not available or usable, as is the case in tunnels, parking structures or urban canyons. Moreover, there is an interest in testing in indoor halls because this allows engineers to test sensors, algorithms and systems under simulated and reproducible weather conditions such as day, night, rain, fog or even SatNav outages.

Due to the aforementioned reasons, it is necessary to investigate approaches for acquiring feedback in closed-sky situations. Comprehensive surveys on indoor positioning systems can be found in [32,33]. These approaches are based on the combination of diverse methods and sensors, such as WiFi, sonar, radar, or proprietary technologies such as the iBeacon [34].

The LiDAR-based approach with the best performance is presented on [35] with a mean localization error of 11.5 cm and standard deviation of 5.4 cm. The accuracy is evaluated by comparing the outputs of the algorithm to “human-labeled” ground-truth data. The indoor positioning system with the best performance that was found is the “Active Bat” [36,37] with a mean localization error of 3 cm, but the evaluation methodology is not clearly specified [38]. The biggest disadvantage of the Active Bat is the numerous receivers required which must be placed with separations of 1.2 m and must cover the complete measurement area. Therefore, the installation of such a system might not be practical. Other indoor positioning systems already on the market [39,40,41] are designed for applications that do not require a centimeter-precise localization. Alternative navigation methods that use LiDARs are presented in [42,43,44]. However, the existing methods still face a series of challenges, such as the positioning accuracy, the number of state variables they can measure or the algorithm runtime.

2. Materials and Methods

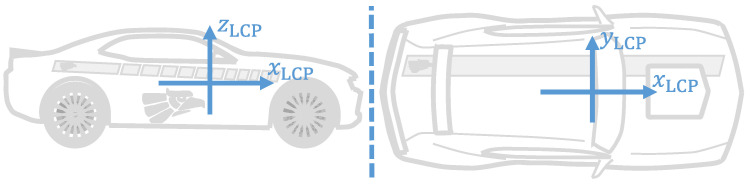

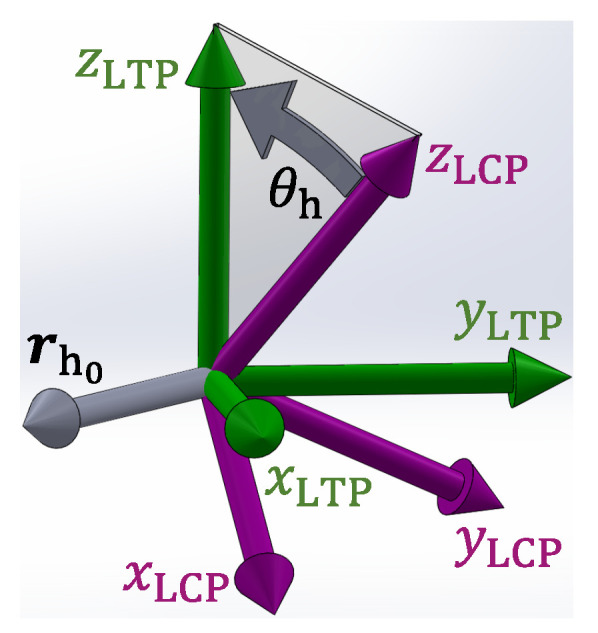

The present work deals with the measurements provided by different sensors, such as IMUs, LiDARs, SatNav receivers, etc. For simplification purposes, it is assumed that all sensors output their measurements in the Local Car Plane (LCP). The LCP is the vehicle reference frame and is defined analogue to the ISO 8855:2011 norm [45]. The LCP is composed by the mutually perpendicular , , and axes, with and origin at the Center of sprung Mass (CoM) of the vehicle. The axis is parallel to the longitudinal axis of the vehicle and points towards the hood, the axis points upwards, and the axis is given according to the right-hand rule. The LCP is illustrated in Figure 1.

Figure 1.

Graphical depiction of the Local Car Plane.

The vehicles move on the Local Tangent Plane (LTP). The LTP is a Cartesian reference frame, composed by the mutually perpendicular , and axes, with ; and with origin at an arbitrary location on the surface of the Earth. The axis orientation of the LTP is similar to the East-North-Up (ENU) reference frame, where the -plane is perpendicular to the gravitational pull of the Earth, the axis points to the true north of the Earth, and the axis is parallel to the gravitational pull of the Earth, and points upwards.

2.1. Standstill Recognition

The first step for estimating the vehicle state is the standstill detection. The strategy to achieve this, is to generate features from the IMU signals that can describe whether the vehicle is standing still or not. The generated features are then classified by means of machine learning. The proposed method uses the Random Forest (RF) [46] for classifying standstill or motion according to the features generated from the IMU signals as inputs. The classes used are shown in Table 1. False positives are unacceptable because they can affect greatly the estimation of the vehicle state, especially if they occur at highway velocities. False negatives can be tolerated because, as shown later, their effect is negligible for the estimation of the vehicle state. If this holds, the standstill classification is considered robust.

Table 1.

Standstill classification.

| Classified State | Standstill | Motion | |

|---|---|---|---|

| True State | |||

| Standstill | True positive | False negative | |

| Motion | False positive | True negative | |

The use of the RF is justified mainly because it is not possible to manually determine thresholds for the IMU signals that allow a robust standstill classification. This happens because some driving conditions are almost equal to a standstill, such as cruising on a highway or driving at walking velocity. This fact is further strengthened when considering the numerous configurations possible for the same vehicle chassis (engine, suspension, tires, etc.), and that even small components, such as the engine mounts, can have a significant impact on the vibrations and rotations sensed by the IMU.

The RF not only enables the implementation of a robust standstill recognition, but a 10 min dataset is also enough for a robust classification, and the training process is done within seconds. Also, given that the RF is real-time capable, it allows the online use of the proposed method. Further details about the RF can be found in [46]. The procedure to recognize a standstill consists of three steps: (i) generation of the training dataset, (ii) training of the RF, and (iii) classification of the vehicle state. The specifics applicable to the present research work is detailed below.

The first step to recognize a standstill, is to generate a training dataset for the RF. The 10 min-long dataset that is required to generate the features for the RF is composed by the proper acceleration vector and the composition of rotations that are measured by the IMU, these are such that

| (1) |

| (2) |

The training dataset for the RF is equally distributed between three conditions: standstill, moving at walking pace and random driving.

For the standstill condition, the vehicle should be as motionless as possible, but ready to drive off. In the case of vehicles with automatic transmission, “drive” should be selected; and vehicles with manual transmission should be either in neutral or in gear and with the clutch engaged. The reason for this, is to let the IMU sense engine and drivetrain vibrations as well. During the standstill, all dead loads are negligible, and all live loads are undesirable. Except for engine and drivetrain, all actions that provoke chassis vibration or motion should be avoided: passenger movement, vehicle loading, opening or closing doors, trunk or hood, etc.

For the condition of moving at walking pace, the vehicle is driven as slow as possible and on a straight line. Contact with pedals or steering wheel should be avoided. This allows one to generate samples with the “motion” label but that are very similar to a “standstill”, which is highly relevant for differentiating between both.

For the condition of random driving, the vehicle is driven with random accelerations, velocities and directions. The more diverse, the better for the dataset.

Next, the features for the RF are generated in the time and frequency domain. The features in the time domain are: (i) , (ii) , (iii) , and (iv) .

The features in the frequency domain are created from the features in the time domain by generating the Discrete Fourier Transform (DFT) of each feature in the time domain. In the present work, the DFT is generated with a MATLAB Toolbox that is based on [47,48,49]. The frequency range that the DFT uses is given as in Equation (3).

| (3) |

where is the sampling frequency and is the number of samples used for the DFT. The highest meaningful frequency from the DFT is [50,51], and a 0 Hz frequency (direct current) is not relevant in this work. Therefore, the used frequency range is given by Equation (4).

| (4) |

The IMU sampling rate is set to , and a time window of is used. Therefore, samples are used each time for the DFT generation: the latest values of the features in the time domain, and their values from the previous samples. Therefore, the used DFT frequencies are (1) 5.8824 Hz, (ii) 11.7647 Hz, (iii) 17.6471 Hz, (iv) 23.5294 Hz, (v) 29.4118 Hz, (vi) 35.2941 Hz, (vii) 41.1765 Hz, and (viii) 47.0588 Hz. The single-sided amplitude of these 8 frequencies are the features in the frequency domain for a total of 36 features: 4 in the time domain and 32 in the frequency domain. It should be noted that due to the sliding window, it is not possible to generate a DFT for the first samples. In this case, the amplitudes of their frequencies are set to zero.

By analyzing the features of the training datasets of different vehicles, it can be noticed that the dominant frequencies at standstill can be associated with the vehicle motorization. For the vehicles with internal combustion engines, to the idling Revolutions Per Minute (RPM) and the number of cylinders. When the vehicles move at walking velocity, the dominant frequency can be associated mainly with the vehicle differential. Finally, when the vehicle moves faster than walking velocity, the dominant frequencies come from the live loads of the vehicle, such as road imperfections and suspension travel, among others.

As shown in Table 1, “standstill” and “motion” are the two labels used for classification. The labeling of the training dataset can be done by using other sensors as reference, by manually analyzing the IMU signals, or a combination of both. Whichever way, it is important to notice that the labeling directly affects the classification performance of the RF.

A best practice for labeling the training datasets is to include the jerks that appear at drive-off into the “motion” class. When the vehicle comes to a stop, the “standstill” class should start only once the chassis has stopped moving and there are no more jerks or rotations present in the IMU signals. In case of uncertainty, it is preferable to label a sample as “motion” rather than “standstill” because, as is shown later, false negatives can be tolerated, but false positives are unacceptable.

The algorithm initialization and the transition between states are two effects of the proposed method to keep in mind. As for the initialization, samples are needed for algorithm initialization. This is because, as explained above, the DFT can be generated only once samples from the IMU are obtained. The single-sided amplitudes for the previous samples are set to zero, which might not be representative of the vehicle state.

Regarding the transition between states, as a vehicle goes from one state to the other (motion to standstill or vice versa), it might happen that the samples used for the DFT belong to different classes. The smaller the sliding window , the faster all samples for the DFT fall within a single class. Otherwise, as the sliding window gets bigger, more features can be used for the classification, but this also increases the lapse where samples from both classes are fed to the RF. The current size of is found to be adequate for a robust standstill recognition, and is determined after testing the RF with several hours of test datasets, and by comparing the classification performance of the RF with different values for .

Once the features in time and frequency domain are generated and labeled, the second step to recognize a standstill, is to train the RF. The training parameters used in this work are (i) number of trees: 12, (ii) stopping criteria: minimum leaf size, and (iii) minimum leaf size: 5. These parameters provide a robust classification and can be used for a variety of land vehicles.

It should be noted that the trained RF is not specific to the vehicle used to generate the training dataset, but to all vehicles with the same configuration (chassis, engine, transmission, suspension, etc.). This means that the training occurs only once, and the trained RF can be used for as long as the vehicle configuration remains the same.

Once the RF is trained, the third step to recognize a standstill, is to use the RF for the standstill recognition on test data. For this, the 36 features required as inputs for the RF are generated as the vehicle drives. The first step to do so is to buffer IMU samples, i.e., the most recent IMU measurements and the previous . The latest IMU measurements are the features in the time domain (, , , and ), and the features in the frequency domain are generated with all IMU buffered samples. When all 36 features (4 in the time domain and 32 in the frequency domain) are generated, they are used as input for the RF.

If a standstill is recognized, all change rates (velocities, accelerations and rotation rates) are set to zero. Only the position and orientation are carried forward from the previous time step. Otherwise, if the vehicle moves, its state is estimated as is detailed later.

2.2. Horizontation of IMU Measurements

The horizontation is understood as the transformation of the IMU measurements, as if the and planes were parallel, and the axis had the same orientation and direction as the axis. The horizontation is required mainly because most vehicle motion models found in the literature depict the movement of cars from a bird’s-eye perspective. Also, it is not a practical solution to physically maintain the and planes parallel when the vehicles are in motion. The horizontation requires tracking of the LTP axes relative to the LCP, so that the IMU measurements can be projected on the tracked LTP axes. This process varies depending on whether the vehicle is standing still or not. Both cases are detailed in what follows.

With exception of the engine vibrations, there are no live loads present in a vehicle during a standstill. Therefore, the gravitational pull G is the only force sensed by the IMU. This is given at standstill by

| (5) |

By using the gravitational pull as reference, the roll and pitch angles are calculated as follows

| (6) |

| (7) |

Then, the easiest manner to obtain an initial value of the yaw angle , is to equip the vehicle with a sensor that measures the Course Over Ground (COG), such as a SatNav receiver, and to drive the vehicle on a straight line. This is so because driving on a straight line results in .

Two rotations are necessary to transform the LTP to the LCP: one rotation around an arbitrary axis and one rotation around the axis. The first rotation aligns the axis with the axis. The second rotation aligns the axis with the axis. The two parameters of the first rotation are the arbitrary axis and the rotation angle . They are expressed as follows

| (8) |

| (9) |

The rotation matrix that aligns the axis with the axis is then given by:

| (10) |

| (11) |

| (12) |

The Equation (10) is known as the “Rodriguez’ Formula”, and its step-by-step derivation can be found in [52]. A graphical representation of a rotation around an arbitrary axis is shown in Figure 2. It should be noted that Equation (10) can present a singularity if and , because in that case as well. However, this would mean that the axis and the axis are aligned. Hence, , where is the identity matrix.

Figure 2.

Shown is a rotation around an arbitrary axis to align the axis with the axis. The axes of the LTP are shown in green, the axes of the LCP are shown in magenta, and the arbitrary axis and the rotation angle with grey.

The matrix that tracks the LTP with respect to the LCP is then given by

| (13) |

Once the vehicle pose at standstill is determined, it must be updated as the vehicle moves. The update is done primarily with the gyroscopes because even when cruising in a straight line, random forces act on road vehicles. Some sources of these forces could be road irregularities, bumps, road joints or the wind. These forces are sensed by the IMU, which complicates the update of with the accelerometers. Therefore, the composition of rotations is used instead. This is detailed in what follows.

With the instantaneous rotation rates , and around the , , and axes respectively, the instantaneous composition of rotations is expressed as follows

| (14) |

| (15) |

Next, the instantaneous composition of rotations is integrated as if it was a vector and added to . The step-by-step derivation of this procedure is shown below.

| (16) |

| (17) |

| (18) |

| (19) |

| (20) |

| (21) |

where . Equation (21) is used each time that a new IMU measurement is available to keep updating .

To add rotations as vectors is an approximation that is valid only for rotations that tend to zero [53]. This is because is not an orthonormal matrix. Therefore, must be adjusted to make its axes perpendicular to each other. The procedure is shown in the following.

| (22) |

| (23) |

| (24) |

| (25) |

| (26) |

The next step is to project on the LTP the acceleration vector and the composition of rotations measured by the IMU. This is done by using the tracker matrix as follows

| (27) |

| (28) |

where is the acceleration vector in LTP at time instance , is the composition of rotations in LTP at time instance , , and are the accelerations at time instance along the , and axes accordingly; and , and are the rotations at time instance around the , and axes correspondingly.

The acceleration vector and composition of rotations are then projected on the -plane. The magnitude and direction of the acceleration over ground are calculated as follows

| (29) |

| (30) |

The magnitude and direction of the composition of rotations over ground are given by

| (31) |

| (32) |

Once the accelerations along and rotations around the LTP axes are known, they are projected on the projection on the -plane of the longitudinal axis of the vehicle, i.e., the acceleration vector and composition of rotations are projected on a vector located on the -plane and with orientation. Therefore,

| (33) |

| (34) |

where and are called “horizontal accelerations”. The measurement vector of the Extended Kalman Filter (EKF) that is designed in this research work (Section 2.3) includes , and as measurement variables. The process from Equation (14) to Equation (34) is repeated with each new inertial measurement while the vehicle is in motion to keep updating the values of the measurement vector of the EKF.

2.3. Statistical Filtering

The next step is to estimate the vehicle state. This is done by using a motion model to predict the vehicle motion and by using exteroceptive sensors to get feedback.

If one assumes Gauss-Markov stochastic processes, the most computationally efficient method to estimate the optimum state of moving objects is the Kalman Filter. The Kalman Filter fuses the predicted vehicle motion with observed measurements. Its detailed derivation can be found in [15], and the specifics for the present research work are given in what follows.

The measurement vector is defined in the present work as

| (35) |

where are the coordinates of in LTP, is the yaw angle of the vehicle, is the velocity over ground of the vehicle, and is the sideslip angle of the vehicle. The used measurement noise covariance matrix and state vector are defined as follows

| (36) |

| (37) |

where are the coordinates of in LTP, is the yaw angle, is the yaw rate, is the velocity over ground, is the first estimation of the vehicle sideslip angle, is the rate of change of the vehicle sideslip angle, is the second estimation of the vehicle sideslip angle, and and are the vehicle horizontal accelerations. Two sideslip estimators are used to improve the prediction of the vehicle motion. The first sideslip estimator ( and ) is based on the balance of moments of inertia and provides a better performance for the estimation of the vehicle velocity. The second sideslip estimator () is based on a geometrical model under the assumption of tractive driving, and gives a better performance for the position estimation.

With a distance along the longitudinal axis of the vehicle from to the rear transaxle, and additive system noise , the used state equations are given by

| (38) |

| (39) |

From and , one deducts that as the vehicle drives slower than , the sideslip estimators lead to a mathematical indetermination. To solve this, when , then , and are set to zero. Therefore, no sideslip is considered for the velocity or position estimation. However, given the low velocities where the indetermination happens, the integration error is negligible.

2.4. Drift Recognition

The geometrical model from which is derived, assumes tractive driving. Hence, the performance of the motion model to predict the vehicle motion decays during a drift. By recognizing a drift, it is possible to (i) identify when the performance of the motion model decays, and (ii) take measures such as adjusting the system noise, or switching to other motion models. Therefore, an objective of this research work is to create a drift detector. Other approaches achieve this with multiple SatNav receivers or with specific sensors, such as the Correvit. A drift is recognized in this work by checking the consistency between state variables to reduce the dependence of INSs on external sensors.

The tire longitudinal and lateral slips are a consequence of the forces that act on the tires as well as their mechanical properties. As such, both slips indicate how much force can the tires transmit to the ground. When classified by the tire slips, the driving state of a vehicle can be classified as either “tractive” or “non-tractive” [54]. The tractive driving is characterized by high lateral and longitudinal tire tractive forces and by tire slips usually less than 10 sideslip and less than 10% longitudinal slip. The non-tractive driving is characterized by low tire tractive forces and by high slips that are usually greater than 10 sideslip and greater than 10% longitudinal slip.

Hence, the first pair of state variables to compare to detect a drift, are both sideslip estimators. During tractive driving, . However, as the vehicle starts drifting, the difference between both gets bigger because is derived under the assumption of tractive driving. Therefore, the difference between and is a strong indicator that shows whether the vehicle is drifting or not.

The second pair of variables that are used for the drift recognition are the estimated lateral acceleration and the expected lateral acceleration . The latter results from assuming a tire sideslip of . This would mean that the path of a turning vehicle describes an arch with constant radius, so that . Therefore, estimates the lateral acceleration in reality, and describes how the lateral acceleration should ideally be. During tractive driving, . And as the vehicle starts drifting, the difference between and becomes statistically significant.

In the present work, it is said that the vehicle is drifting if and . These thresholds are determined after comparing the corresponding state variables as measured during drifting tests, and as estimated during tractive driving on open roads.

2.5. Outlier Detection

The next step is to fuse the predicted vehicle motion with measurements from external sensors (correction data). However, the sensor information could be corrupted, which would affect negatively the estimation of the vehicle state. To avoid this, the state vector is used as reference to filter out flawed sensor measurements. This is because, by definition, the state vector represents the last known optimal state of the vehicle, and because, assuming that both the motion model and the sensors depict reality with 100% fidelity, the measurement vector is equal to the predicted vehicle state.

The consistency check is done with two consecutive sensor measurements made at time instances and , which are compared to the corresponding predicted state variable. Therefore, the difference between two consecutive velocity measurements could be expressed as follows

| (40) |

and to consider the inaccuracies of the sensor and the motion model, this can be rewritten as:

| (41) |

Since the purpose is to filter outliers, an additional margin is given by means of a factor . Also, only the absolute difference is taken to consider both positive and negative accelerations of the vehicle. Therefore, the measured velocity at time instance is used as correction data if

| (42) |

The value of is determined after analyzing hours of real-world data, where the behavior of the SatNav measurements during regular operation is compared to the behavior of the measurements during periods of time where the multi-path effect is present.

Since during tractive driving, the COG measured by the SatNav receivers can be used as correction data for when . Analogue to the velocity measurement, the measured COG at time instance is used as correction data if

| (43) |

Analogue to the velocity and the COG, the SatNav location measurement at time instance is used as correction data if

| (44) |

The same method to detect outliers can be applied for and if necessary.

Some sensors can compute quality indicators for the own measurements, such as a dilution of precision or a standard deviation. The problem is that sometimes the sensors do not recognize when they miscalculate their quality measures, as is the case with the standard deviation of SatNav receivers.

To address this, the quality measure is modelled as a PT1 element to dampen it over time. Therefore, let and be an element of at time instances and , and be the values corresponding to and that the sensor calculates, a saturation parameter, and a timer at time instances and . The update of the standard deviation is then modelled as follows

| (45) |

| (46) |

The saturation parameter is optimized for a SatNav receiver by analyzing its measurements with and without the multi-path effect, and can be optimized for other sensors as required.

From Equations (45) and (46), it can be deducted that the strategy is to quickly shift the Kalman gain towards the motion model if the quality of the sensor measurements decays, and to gradually shift the gain towards the sensor if the quality of its measurements continuously improve.

2.6. LiDAR-Based Positioning Method

As stated above, there are various situations where no SatNav is available, but where highly precise correction data is required. A highly precise source of correction data is generated in this work by means of a Velodyne LiDAR HDL-32E [55], and the method is detailed in what follows.

The first step is to identify references or “markers” with the LiDAR. Since recognizing markers by their shape is computationally intensive, they are identified by their reflectivity. It should be noted that the point cloud resolution decreases (i) as the distance dvelo between the LiDAR and the measured object increases, and (ii) as the rotational velocity ωvelo of the LiDAR head increases. The Euclidean distance dhor,velo between two horizontally adjacent points as a function of dvelo and ωvelo is given by

| (47) |

where is the time required for one firing cycle (a single shot of all lasers for a single LiDAR azimuth). From Equation (47), the relation between , and the point cloud resolution can be deducted. This relation can be very relevant as the vehicle velocity increases.

The chosen LiDAR can measure the NIST calibrated reflectivity [56], which ranges from 0 for a lost reflection to 255 for a reflection with no loses. This allows differentiation of highly reflective objects from the rest of the world, but not to identify the highly reflective objects individually.

Once only the points with high reflectivity remain, they are clustered using a time threshold of . Therefore, the points measured within one another, are clustered together. Hence, all points that are within a clustering distance from each other are clustered together, so that

| (48) |

The value of is important when defining , and the spacing between markers.

The next step is to determine a single set of (x,y) coordinates for the cluster. For this work, the motion of the vehicle is limited to the -plane. Therefore, the i-th cluster point is defined as

| (49) |

and the i-th cluster of LiDAR measurements is then defined as

| (50) |

where and are respectively the range and azimuth angle of the i-th measured point, and is the number of points of the cluster.

The coordinates that express the measured position in LCP of the i-th cluster (marker) are then calculated by means of the mid-range arithmetic mean, so that

| (51) |

The mid-range arithmetic is applied as well to , and the timestamp of the clustered measurements. This allows one to obtain the distance , the azimuth and the time instance at which the i-th marker is seen. Next, the true location in LTP of the markers must be known. This is defined for the i-th marker as follows

| (52) |

The true location in LTP of all markers is stored in the marker map, which is assumed to be available beforehand. This map can be generated, for example, by means of a tachymeter or by stitching together LiDAR measurements of the test area. The marker map is then defined as follows

| (53) |

where is the total number markers, and the first row is a unique identifier for each marker.

In order to identify which marker is being measured, an approximate position and an approximate orientation of the vehicle in LTP is required. This approximate position can be, for example, , or initialization values, and is defined as follows

| (54) |

The positioning error is then defined as follows

| (55) |

were is the true position of the vehicle in LTP. Since the location of the vehicle is tracked by means of an EKF, during tractive driving it holds that

| (56) |

The approximate orientation can be or an initialization value, and during tractive driving it holds that

| (57) |

were is the true orientation of the vehicle in LTP. The orientation error is defined as follows

| (58) |

So as to prevent ambiguities in the marker identification, and are constrained as follows

| (59) |

where is a vector with the Euclidean distance between all possible marker combinations. The markers do not have to be placed in specific patterns, as long as the constraint of Equation (59) holds. The measured position in LTP of the i-th marker is then given by

| (60) |

The element of closest to is then the measured marker.

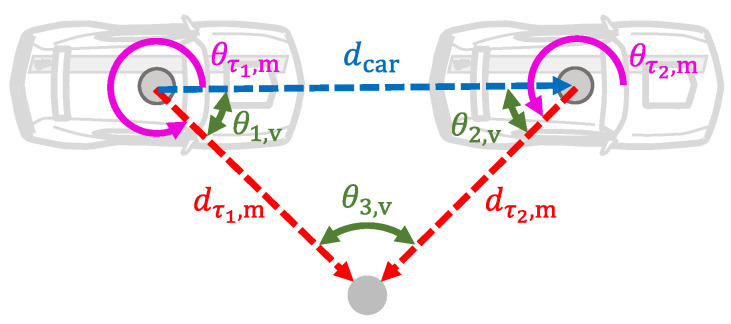

Once the markers can be individually identified, the measurement variables are calculated. The first one is the velocity over ground . To calculate it, it is required that two LiDAR measurements (consecutive or not) point to the same marker. So, let and be the measurements in LCP of one marker done at time instances and accordingly, so that

| (61) |

| (62) |

From and , the geometry of a cone is constructed as shown in Figure 3.

Figure 3.

Shown is a graphical depiction of the cone geometry used for the velocity calculation from two LiDAR measurements that point to the same marker.

Since the sum of the internal angles of a triangle is , the internal angles of the constructed geometry are calculated as follows

| (63) |

| (64) |

| (65) |

The vehicle displacement between the time instances and is calculated by means of the cosine law. In addition, since the LiDAR measurements have a timestamp, the measured velocity over ground of the vehicle between and is calculated as follows

| (66) |

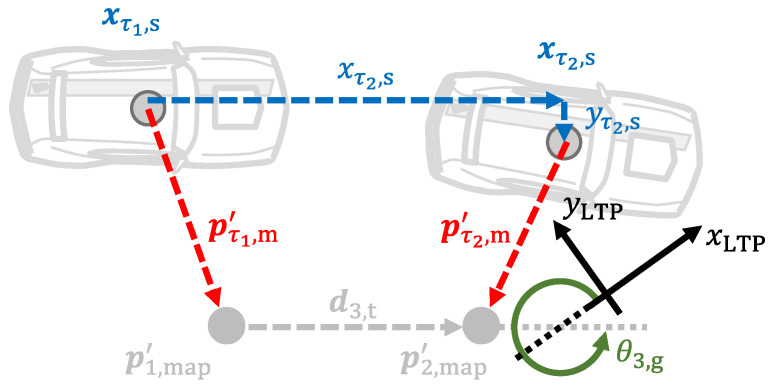

Nevertheless, the cone geometry is not completely defined as it can rotate around the marker. Therefore, it cannot be used to calculate the vehicle location. Instead, both LiDAR measurements must point to different markers. Also, to completely define the geometry shown in Figure 4, is expressed in the LCP at time instance as follows

| (67) |

Figure 4.

Shown is a graphical depiction of the geometry used for calculating the vehicle location.

Next, assuming that and point to and respectively, the angular offset from the LTP to the LCP at time instance is given by

| (68) |

Afterwards, the marker measurements in LCP at time instance are rotated as follows

| (69) |

| (70) |

Finally, the linear offsets from the LTP to the LCP at time instance can be estimated by comparing and with the true marker location and from the marker library. Since both measurements have the same accuracy, the average of both offsets is calculated as follows

| (71) |

The location and orientation measurement variables at time instances and are then given by

| (72) |

| (73) |

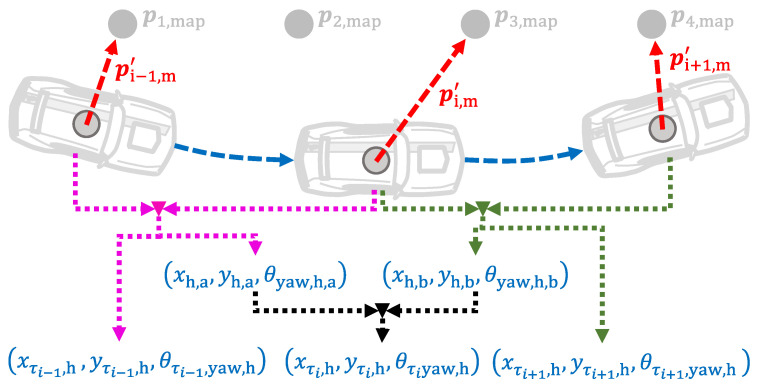

As can be deducted from the previous equations, the measurement variables for two consecutive time instances are calculated at each iteration, i.e., once the LiDAR measurement from the time instance is acquired, the vehicle pose for the time instances and is calculated. When the LiDAR measurement from the time instance is acquired, the vehicle pose for the time instances and is calculated; and so on. Since this creates an overlap of two pose measurements per time instance, both are averaged. Therefore, let and be the measured location of the vehicle at the i-th time instance that is calculated with the LiDAR measurements from the time instances to . The measurement variables for the vehicle location at the i-th time instance are then given by

| (74) |

Analogously, let and be the measured orientation of the vehicle at the i-th time instance that is calculated with the LiDAR measurements from the time instances to . The measurement variable for the vehicle orientation at the i-th time instance is then given by

| (75) |

As can be deducted from Equations (74) and (75), to average the measurement variables that are obtained using different pairs of LiDAR measurements aids to compensate LiDAR measurement errors, and creates a time-smoothing effect for the measurement vector. A graphical depiction of this process is shown in Figure 5.

Figure 5.

Shown is a graphical depiction of the generation of the measurement variables for the vehicle pose, as computed from LiDAR measurements.

To ensure that the measured object is a marker and not any other reflective object, outliers are filtered out. For this, the LiDAR measurements are translated to the LTP to compare them with the true marker position from the marker library. Therefore, the locations and in LTP of the LiDAR measurements and made at the i-th and -th time instance are expressed as follows

| (76) |

| (77) |

Assuming that and are closest to the i-th and -th markers respectively, their measurement errors and are given by

| (78) |

The previous because, as shown in Equations (71) and (75), the angular and linear offsets are estimated with both LiDAR measurements.

A distance threshold is then used to separate outliers according to the following criteria:

| (79) |

If an outlier is detected, both measurements are discarded.

From what is detailed above, the LbPM can be used only in places with known markers, or an extra effort is needed to place and measure markers in new areas. However, traffic signs have a fixed position and are highly reflective as well. Therefore, they too can be used as markers for Simultaneous Localization and Mapping (SLAM) instead of having a marker library a priori.

Ongoing work of the present research focuses on evaluating the adequacy of the LbPM for SLAM purposes. A critical aspect to evaluate an LbPM-based SLAM, is to identify the sources of error individually. Since the vehicle motion prediction between LiDAR measurements can be approximated as circular movement, the LbPM does not depend on the motion model of the EKF. Hence, it is possible to analyze the motion model with no correction data and the LbPM separately. However, the recursiveness of the prediction-correction process of the EKF and localization-mapping process of the SLAM complicate the isolation of the sources of error when the EKF and the LbPM are combined.

To study separately the sources of error, the mapping performance of the LbPM-based SLAM is inspected under two circumstances: (i) with position, velocity and COG correction data; and (ii) with only velocity correction data. The first variant excludes all possible LbPM feedback errors. The second variant excludes only the velocity feedback from the LbPM as a source of error. The presented method is based on [57,58,59,60], and the specifics applicable to this work are detailed in what follows.

First, some measurement variables are considered instead as inputs to perform the prediction of vehicle state. An input vector and the control covariance matrix are then defined as follows

| (80) |

| (81) |

With this, the vehicle state process covariance matrix can be updated as follows

| (82) |

where is the Jacobian matrix of , derived after the control vector .

Next, a measurement vector is defined as

| (83) |

where and are respectively the range and azimuth angle of the i-th cluster.

The task of the observation model is to calculate the estimated measurement , for a given vehicle state and one specific marker . For this, two cases can be distinguished. If the current marker library is empty, then the marker is added to it with the location derived from and . If the marker library is not empty, then an association check is required to compute the probability that the current observation corresponds to an existing marker. For this, the Mahalanobis Distance [61] Individual Compatibility (IC) check is performed for each marker in the library. The one yielding the minimum Mahalanobis Distance is the marker from the library with the highest probability to be the observed one. For this, the innovation term is calculated as follows

| (84) |

The md is then given by

| (85) |

with being the corresponding covariance matrix for the innovation term . Finally, the marker from the library is associated with the observation according to the following criteria

| (86) |

| (87) |

This is because a threshold means a probability of that the sensor measurement and the estimated measurement refer to the same marker [62].

3. Results

To validate the performance of the From methods that are detailed above, a series of tests are designed and performed. The From methods are tested with several hours of real-world data. In what follows, the testing details, the evaluation metrics and the most relevant results are presented.

3.1. Standstill Recognition

The performance of the standstill recognition is measured in terms of its classification performance according to Table 1, as well as its robustness against false positives. The four most relevant tests and their results are detailed in what follows.

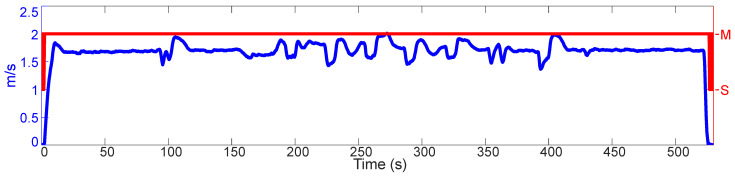

The results of the 1st test are shown on the Figure 6. Here, a 3rd generation Smart ForTwo electric drive is placed on a test track. The INS is mounted in the trunk of the vehicle. The vehicle is turned on, the front tires are set to point straight ahead, and the gear selector placed on “D”. Some seconds after the data recording starts, the brake pedal is released. The car is driven on the test track for approximately 530 s. The accelerator pedal is not touched at any point, and the brake pedal is used only at the beginning to let the car roll and at the end to stop it. The steering wheel is used only to realign the vehicle towards paths that allow prolonged straight-line driving.

Figure 6.

Shown is the RF output (red, S = standstill, M = motion) and the velocity over ground of the vehicle (blue). Vehicle: 3rd Gen. Smart ForTwo. Motorization: Electric Drive. Power source: Electricity.

What makes this test special is the combination of (i) the low driving velocity, (ii) the drivetrain of the vehicle (electrical motor and single gear reduction transmission), and (iii) the prolonged driving moments without rotations.

As can be seen, the RF is able to recognize that the vehicle is in motion, even when driving several seconds constantly at walking velocity on a straight line: (17.87–92.53 s) and (434.80–522.00 s). It is seen as well that the RF has no false positives.

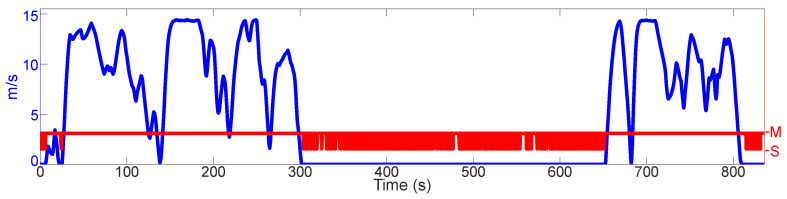

The results of the 2nd test are shown on the Figure 7. Here, a 5th generation Audi A4 3.0 L TDI with an S-Tronic 7-gear transmission is driven randomly in a city. The INS is mounted in the trunk of the vehicle. On three occasions, the gear selector is placed on “N”, and the car is allowed to roll. On one of those occasions (22.26 s), the vehicle does come to a brief stop. On the other two occasions (138.10 s and 682.20 s), the car reaches very low velocities ( and respectively), but it does not come to a full stop. A prolonged standstill (302.10–652.00 s) is also included. During this period of time, the gear selector is kept in “S”.

Figure 7.

Shown is the RF output (red, S = standstill, M = motion) and the velocity over ground of the vehicle (blue). Vehicle: 5th Gen. Audi A4. Motorization: 6 cylinder, 3.0 L TDI. Power source: Diesel.

This test shows (i) three rolling instances, and (ii) a prolonged standstill. Diesel engines are known to produce more vibrations than their gasoline counterparts. Also, when the gear selector is placed on “N”, much less vibrations are transmitted to the vehicle chassis because the transmission is not engaged, and because the engine idles. Therefore, the IMU signals when the vehicle rolls closely resemble those when the car is standing still, especially at low velocities. Contrarily, when the gear selector is on “S” instead of “D”, the vibrations transmitted to the chassis increase notably.

As can be seen, in the first rolling instance (22.26 s) the RF is able to recognize the standstill first when the vehicle does come to a stop and not before. Also, the RF can recognize that the vehicle is still in motion at the other two rolling instances (138.10 s and 682.20 s), despite the velocity over ground almost reaching zero. The RF is also mostly able to recognize that the vehicle is standing still (302.10–652.00 s), despite the increased vibrations provoked by selecting “S”. After 720.20 s of true standstill, the estimated total displacement of the vehicle is 0.008 m (8 mm) and the estimated total rotation of the vehicle is 0.011 rad (0.6282 deg). It is seen as well that the RF has no false positives.

The results of the 3rd test are shown on the Figure 8. Here, a Suzuki GSX-R750 K2 is driven on a test track. The INS is mounted by means of a metal plate directly on the chassis of the vehicle. On four occasions (251.80 s, 327.60 s, 391.20 s and 457.10 s), the “N” gear is selected, and the vehicle can roll to a full stop. Various instances of several seconds of standstill are included as well. In two of those instances (43.88–53.75 s and 94.08–108.20 s), the vehicle was let to rest on its side stand, with the motor idling and the gear “N” selected.

Figure 8.

Shown is the RF output (red, S = standstill, M = motion) and the velocity over ground of the vehicle (blue). Vehicle: Suzuki GSX-R750 K2. Motorization: 4 cylinder, 749 cc. Power source: Gasoline.

What makes this test special are (i) the chassis-drivetrain configuration, and (ii) the rolling instances. By design, the engine mounts of motorcycles are not designed to dampen the engine vibrations as well as the engine mounts of cars. Thus, these vibrations are transmitted in a much more direct manner to the motorcycle chassis. Also, given that the INS is mounted on the chassis with no dampening, it senses the engine vibrations in a much more direct manner. Thus, the INS signals under these conditions, in combination with the rolling instances, echo the signals that are present at standstill.

As can be seen, on two of the occasions where the motorcycle can roll (251.80 s and 327.60 s), false positives appear. On the other two occasions (391.20 s and 457.10 s), the RF detects a standstill first when the vehicle comes to a stop. It is seen as well that the RF has no false positives under regular driving conditions (driving always on gear).

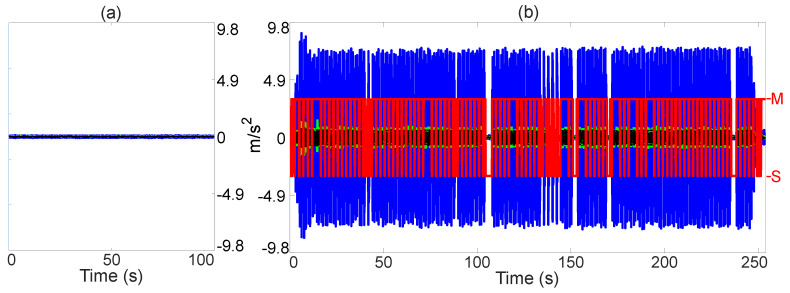

The results of the 4th test are shown on the Figure 9. Here, a 2nd generation Audi Q7 is parked on a test track with the engine idling and the gear “P” selected. The INS is mounted in the trunk of the vehicle. The volume of the sound system is set to the maximum and music with high bass level is played for 254 s, to induce very strong vibrations for prolonged periods of time. The objective is to test if the RF can recognize the standstill regardless of the induced vibrations.

Figure 9.

Shown are the vehicle accelerations along the (blue), (green) and (black) axes. (a) Shows the vehicle accelerations of the RF training dataset with the label “Standstill”. (b) Shows the vehicle accelerations while the music is playing and the RF output (red, S = standstill, M = motion). Vehicle: 2nd Gen. Audi Q7. Motorization: 6 cylinder, 3.0 L TFSI. Power source: Gasoline.

What makes this test special is the constant induction of vibrations which by magnitude is many times bigger than that of when the vehicle is standing still.

As can be seen, the output of the RF toggles much more than in the other tests. However, it is often still able to correctly detect a standstill. The maximum period of time where the vehicle state is wrongly classified as “motion” is 4.20 s, between 190.3 s and 194.50 s. In this test, after 254.01 s of true standstill, the estimated total displacement of the vehicle is 0.12 m (12.12 cm) and the estimated total rotation of the vehicle is 0.018 rad (1.08 deg).

3.2. Horizontation of IMU Measurements

The performance of this module is measured in terms of its capability to describe the pose (roll, pitch and yaw angles) of a vehicle. The motorcycle from the 3rd test of Section 3.1 is used because bigger roll angles can be achieved with it than with a car. The motorcycle is driven randomly on a test track, including a U-turn (772.70–780.00 s), a slalom (783.30–791.00 s), and “8” figures (791.00–843.70 s).

What makes this test special is that it serves as a practical demonstration of the mathematical methods detailed in Section 2.2. The results of the horizontation of the IMU measurements are shown on the Figure 10. As can be seen, the practical implementation of the mathematical methods is able to describe the vehicle pose.

Figure 10.

Shown are the roll (blue), pitch (green) and yaw (magenta) angles. Vehicle: Suzuki GSX-R750 K2. Motorization: 4 cylinder, 749 cc. Power source: Gasoline.

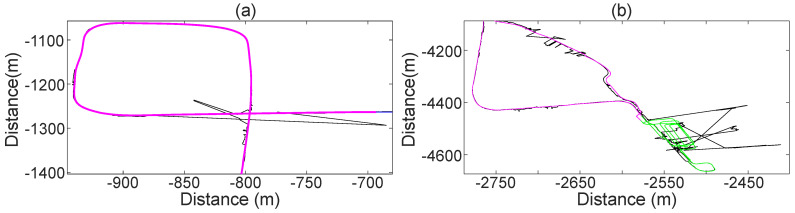

3.3. Statistical Filtering

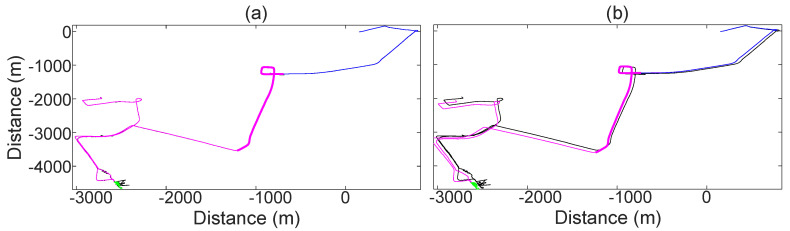

The results of the statistical filtering are shown on the Figure 11. The performance of this module is measured in terms of its ability to (i) fuse the vehicle path (as estimated with the motion model) with correction data, (ii) estimate the vehicle path despite SatNav shortages or with corrupted SatNav measurements, and (iii) estimate the vehicle path solely by means of the motion model (with no correction data). For this, a vehicle is driven in various types of roads for 1904.15 s. The test starts at a country road (blue), followed by an express way (magenta, thick), then in-city driving (magenta, thin) and a parking structure (green). A standstill instance of 40.00 s inside the parking structure is included as well. The average velocity of the vehicle during the test, including the 40.00 s standstill inside the parking structure, is .

Figure 11.

Shown is the vehicle position as measured by a SatNav receiver (black) and as estimated by means of statistical filtering (blue, magenta and green). (a) Shows the statistical filtering when SatNav correction data is used. (b) Shows the statistical filtering with no correction data.

What makes this test special is (i) the inclusion of various driving conditions, (ii) the inclusion of extended periods of time without or with corrupted SatNav correction data (parking structure), and (iii) the dead-reckoning navigation.

As can be seen, the statistical filtering is able to fuse the estimation of the motion model with the SatNav measurements. It can also be seen that even in total absence of SatNav correction data, the motion model is able to accurately estimate the vehicle path. The test shown in Figure 11 is a representative case of the average accuracy of the motion model because it includes various driving situations. At the end of the test, the cumulative deviation of the position estimated by the motion model from the position measured by the SatNav receiver is 130.00 m, which accounts for a deviation of 245.79 m per driven hour at an average velocity of .

3.4. Outlier Detection

The performance of this module is measured in terms of its ability to recognize corrupted correction data, even when the sensors suggest otherwise. For this, two segments of the results of Section 3.3 are highlighted.

What makes this test special are the extended periods of time without or with corrupted correction data. As can be seen, the outlier detection recognizes the faulty correction data, thus dynamically adapting the Kalman gains accordingly. This greatly improves the estimation of the vehicle state. Some relevant results of the outlier detection are shown on the Figure 12.

Figure 12.

Shown is the vehicle position as measured by a SatNav receiver (black) and as estimated by means of statistical filtering (blue, magenta and green). (a) Shows the dataset section of a bridge underpass. (b) Shows the dataset section of a parking structure.

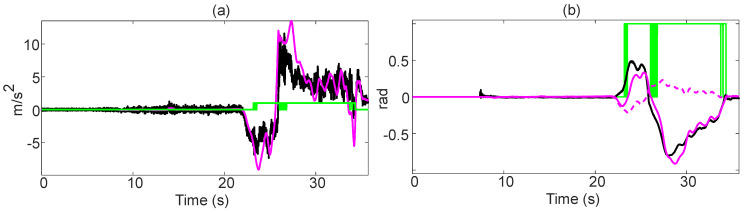

3.5. Drift Recognition

A representative result of the drift recognition is shown on the Figure 13. The performance of this module is measured in terms of its ability to recognize that the vehicle is drifting. For this, a 3rd generation BMW M5 is driven 51 times on a test track, alternating between tractive and non-tractive driving. The test vehicle is equipped with an INS and a Correvit S-Motion. No correction data is used in these tests to test the accuracy of the motion model under these conditions.

Figure 13.

Shown are (a) the drift bit (green), the estimated (black) and expected (magenta) lateral acceleration, (b) the drift bit (green), the measured (black) and estimated (magenta solid, magenta dashed) sideslips. Drift bit = 1 means drifting.

The relevance of this test lies in (i) the extended periods of non-tractive driving, and (ii) the exclusion of correction data. As can be seen, when the vehicle enters the first curve, the module recognizes that the vehicle is not drifting. It is only when the sideslip starts to abruptly change (22.98 s) that the module starts to detect a drift. As the vehicle remains on a steady state drift (23.54–26.05 s), the drift bit stabilizes at “drifting”. During the drift transition (26.05–26.83 s), when there are moments of tractive driving, the drift bit toggles between tractive and non-tractive driving. Once the transition is finished, and the vehicle enters again into a steady state drift, the drift bit does not toggle any more. As the vehicle ends the drift (34.31 s) the drift bit toggles for a few milliseconds before stabilizing at tractive driving.

The average deviation of the final position as estimated by the motion model when compared to the position measured by the SatNav receiver for all 51 tests is ≈24.47 s, what accounts for a deviation of 2465.30 m per driven hour at . However, it should be noted that (i) the average duration of these tests is ≈35 s, (ii) there is tractive and non-tractive driving in each test, (iii) around the first 20 s of each test are solely straight-line driving, and (iv) there is a full reset at the beginning of every test.

3.6. LiDAR-Based Positioning Method

The accuracy results of the LbPM are shown on the Table 2. The performance of this module is measured in terms of its accuracy to measure the vehicle state. For this, a vehicle is equipped with an INS with RTK and a LiDAR. An array of reflective markers is placed on a test track, and the marker library is generated by measuring the markers with a SatNav receiver with RTK. Two maneuvers (a slalom and a drive-by) are driven with various velocities ranging from and up to . The outputs from the LbPM are then compared to those of the INS with RTK. It should be noted that the INS serves as an accurate and objective reference, but no correction data is used for the LbPM.

Table 2.

Accuracy results for the proposed LbPM. Shown are the maneuvers, maneuverer velocity, mean deviation from the reference and Std. dev. of the corresponding errors.

| Manoeuvrers | Positioning Accuracy | Orientation Accuracy | Velocity Accuracy | |||

|---|---|---|---|---|---|---|

| Drive-by | Mean (m) | Std. dev. (m) | Mean (deg) |

Std. dev.

(deg) |

Mean | Std. dev. |

| 5 | 0.04 | 0.02 | 0.73 | 0.25 | 0.06 | 0.08 |

| 10 | 0.03 | 0.02 | 0.19 | 0.20 | 0.08 | 0.10 |

| 15 | 0.03 | 0.02 | 0.26 | 0.19 | 0.07 | 0.09 |

| 20 | 0.03 | 0.02 | 0.37 | 0.23 | 0.08 | 0.09 |

| 25 | 0.04 | 0.02 | 0.58 | 0.23 | 0.08 | 0.10 |

| 30 | 0.06 | 0.02 | 0.51 | 0.22 | 0.08 | 0.10 |

| 35 | 0.07 | 0.03 | 0.44 | 0.25 | 0.08 | 0.11 |

| 40 | 0.08 | 0.03 | 0.41 | 0.26 | 0.11 | 0.13 |

| Slalom | Mean (m) | Std. dev. (m) | Mean (deg) |

Std. dev.

(deg) |

Mean | Std. dev. |

| 5 | 0.04 | 0.02 | 0.24 | 0.29 | 0.08 | 0.11 |

| 10 | 0.04 | 0.02 | 0.40 | 0.29 | 0.09 | 0.12 |

| 20 | 0.04 | 0.02 | 0.32 | 0.36 | 0.14 | 0.17 |

| 30 | 0.05 | 0.02 | 0.36 | 0.40 | 0.18 | 0.24 |

| 40 | 0.10 | 0.02 | 0.53 | 0.43 | 0.18 | 0.22 |

What makes this test special are (i) the exclusion of correction data, and (ii) the validation with both maneuvers and various velocities. As can be seen, the mean accuracy of the LbPM is almost the same as that of the INS with RTK for all the state variables that the LbPM can measure.

3.7. LbPM Adequacy for SLAM

The adequacy of the LbPM for SLAM is measured in terms of its accuracy to generate the marker library. For this, the same test setup from Section 3.6 is used. Two tests are performed. In the first test, the position, velocity and orientation of an INS with RTK is used as correction data. In the second test, only the velocity from the INS with RTK is used as correction data. In both tests, the location of the markers is computed with the estimated vehicle state and the LiDAR measurements. The observed location of the markers is then compared to their true position from the library.

The relevance of these tests lies in that (i) it allows identify some sources of error, and (ii) it allows evaluation of the identified sources of error individually. The results are presented in Table 3. As can be seen, the marker library that is generated when the INS-RTK correction data is present, closely resembles the marker library that is generated with the RTK SatNav receiver. As expected, the marker library that is generated with only velocity correction data is less accurate. However, this inaccuracy is still smaller than that of SatNav with no correction data.

Table 3.

Results of the mapping accuracy. Shown are the maneuvers, driving velocity, mean deviation from the observed to the true marker location, std. dev. of the errors and the maximum deviation. The columns (a) show the results when using position, velocity and orientation correction data. The columns (b) show the results when using only velocity correction data.

| Manoeuvre | Mapping Accuracy | Manoeuvre | Mapping Accuracy | ||||

|---|---|---|---|---|---|---|---|

| Drive-by | Mean (m) | Std. dev. (m) |

Max (m) | Slalom | Mean (m) | Std. dev. (m) |

Max (m) |

| (a)/(b) | (a)/(b) | (a)/(b) | (a)/(b) | (a)/(b) | (a)/(b) | ||

| 5 | 0.11/0.90 | 0.04/0.51 | 0.19/1.56 | 5 | 0.33/0.93 | 0.24/0.51 | 0.81/1.62 |

| 10 | 0.11/0.13 | 0.04/0.06 | 0.19/0.22 | 10 | 0.08/0.27 | 0.03/0.11 | 0.14/0.42 |

| 15 | 0.12/0.18 | 0.05/0.07 | 0.22/0.34 | 15 | 0.08/0.53 | 0.03/0.25 | 0.16/1.04 |

| 20 | 0.14/0.29 | 0.06/0.12 | 0.28/0.48 | 20 | 0.08/0.78 | 0.03/0.56 | 0.14/1.70 |

| 25 | 0.17/0.11 | 0.08/0.06 | 0.32/0.39 | 25 | 0.10/0.30 | 0.05/0.14 | 0.23/0.64 |

| 30 | 0.14/0.16 | 0.06/0.09 | 0.28/0.44 | 30 | 0.08/0.67 | 0.04/0.36 | 0.21/1.53 |

| 35 | 0.10/0.20 | 0.05/0.15 | 0.20/0.69 | 35 | 0.11/0.96 | 0.06/0.52 | 0.25/1.96 |

| 40 | 0.10/0.38 | 0.05/0.19 | 0.23/0.77 | 40 | 0.11/0.26 | 0.06/0.15 | 0.25/0.64 |

3.8. Runtime

The runtime results of the presented methodology are shown on Table 4. These values are obtained by executing the Matlab code of each module for 1.1+ million cycles on an Intel i7-6820HQ CPU, and by using the Matlab Profiler to measure the runtime of each module. Given that the IMU horizontation, the outlier and the drift detectors are embedded in the statistical filtering module, the runtime of these four modules is considered to be a single one.

Table 4.

Shown is the median and the std. dev. of the runtime of the modules presented above.

| Module | Median (μs) | Std. dev. (μs) |

|---|---|---|

| Standstill classifier | 123 | 50 |

| Statistical filtering | 403 | 98 |

| LbPM-point clustering | 43 | 20 |

| LbPM-velocity estimation | 11 | 14 |

| LbPM-position estimation | 42 | 54 |

The relevance of this test lies in that it gives a baseline to estimate the possibility of using the proposed methodology in real-time applications. As can be seen, the median runtime of all modules is always in the microsecond area. Even in a worst-case scenario (median plus 3σ, the runtime for the complete methodology is ≈1.33 ms, which remains under a typical IMU sampling time of 10 ms.

4. Discussion

As stated in Section 1, the focus of this research is to generate a reference state for ground vehicles, while reducing the dependency of the INSs on the SatNav. This allows the INSs to bridge SatNav outages for much longer periods of time, or even to function with no SatNav at all. Specifically, for the automotive industry, there are various use-cases that justify the use of SatNav-deprived INSs, such as the navigation in tunnels, underpasses or parking structures. A highly precise and prolonged navigation in places with no SatNav, such as testing halls, is also very relevant for the automotive research. As shown in Section 3, the methods developed in this research work address the aspects where the INSs profit the most.

Starting with the standstill recognition, it is shown that it is possible to classify whether a vehicle is moving or standing still by using only machine learning techniques and IMU measurements. The fact that no additional sensor is required, clearly implies important advantages, such as (i) reduced costs, (ii) less testing complexity, (iii) simplified information processing, and (iv) stand-alone functioning.

The proposed method uses the same features in the frequency domain, regardless of the vehicle. However, a first glance at the Laplace transformation of the features in the time domain, suggests that the choice of frequencies can be further refined if one considers them on a vehicle-specific basis. This could eventually lead to an improvement in the standstill recognition. Therefore, future work could include a vehicle-specific analysis of the features in the frequency domain. The use of the on-board vehicle sensors could also help refine and automate the data labeling.

As for the horizontation of INS measurements, modern INSs do use correction data (typically from the SatNav) to refine the IMU measurements. However, it is shown that the pose of the vehicle can be accurately described with the raw IMU measurements. This implies independence from the SatNav. The inclusion of the detailed mathematics to horizontate IMU measurements, implies an aid to scientists working on similar topics or trying to replicate the results presented here.

One challenging aspect of the horizontation of the IMU measurements is to detect the IMU mounting pose in the vehicle, i.e., it is possible to estimate the pose of the IMU with respect to the gravity vector and the true north of the Earth. However, it is not an easy task to relate the pitch and roll of the IMU with that of the vehicle because the IMU could be mounted in such a manner that its -plane is not parallel to the -plane of the vehicle. Knowing the offsets between both planes could be highly valuable to recognize certain driving situations, such as driving inside parking structures, wheelies or stoppies. Since the acquisition of these offsets on a vehicle-to-vehicle basis is a complicated and time-consuming task, future work could include the investigation of automated methods that allow estimation of these offsets.

The KF is a computationally efficient algorithm for fusing data. However, the accuracy of its output is limited by the accuracy of the information to fuse. Therefore, it is important to refine the sources of information as much as possible before using them as inputs for the KF. In this work, the EKF fuses the state vector estimated by the motion model with the measurements of the vehicle state made with external sensors. Therefore, a lot of effort is put on refining the motion model for tractive-driving applications. As shown above, the proposed motion model can accurately estimate the vehicle state for extended periods of time with no correction data, which implies a high confidence on the vehicle state, even when navigating by dead reckoning.

Given that the motion model is designed to perform best for tractive driving, its performance is reduced during non-tractive driving. Also, given that the drifting tests are designed to test the drift recognition, they are not long enough to objectively determine the accuracy of the motion model during a drift. Therefore, future work could include a deeper investigation of the accuracy of the motion model during non-tractive driving. This could help to estimate adequate values for the system noise, and so improve the performance of the KF during drifting.

As for the outlier detector, it further helps in refining the sources of information before they are fed to the KF. Its effect is especially noticeable when the sensors deliver quality metrics for their own measurements, as is the case of the standard deviation for the SatNav. As shown above, to include a smart outlier detector implies a very useful consistency check of the sensor measurements, thus avoiding fusing information that would negatively affect the estimation of the vehicle state.

This detector uses the latest state vector because it is the last known vehicle state. However, as time passes, the predicted state without correction data might differ so much from the true state that the constraints shown in Section 2.5 filter out meaningful sensor measurements. Future work could include the combination of the system noise with the state vector to better adapt the outlier detector.

Yet another step that is taken to improve the KF is the drift detector. Given that the motion model is designed to function best in tractive driving, it is very useful to differentiate whether the vehicle is drifting or not. As shown above, the drift recognition can make this distinction. This implies that the time instance to make changes in the KF is known. This can be, for example, to adjust the system noise accordingly or to use a more adequate motion model.

The drift recognition here presented is based on the thresholds of two features: the magnitude of the sideslip and the difference between the estimated and expected lateral acceleration. Future work on this method could include the analysis of a bigger dataset that includes vehicles with different drivetrains (front, rear and all-wheel drive). This could help to gain a better understanding on the ideal thresholds for vehicles according to their drivetrain.

One crucial module to reduce the dependency of INSs on the SatNav is the LbPM. The results clearly show that the accuracy of the vehicle state as measured by this method closely resembles that of an INS with RTK correction data. Considering the refresh rate of the LiDAR and the state variables that can be measured by means of the LbPM, this could already be enough for an accurate vehicle indoor navigation. Future work on this module could include the implementation of the method on dedicated hardware to analyze the computing requirements for a real-time implementation.

The results on Table 3, suggest that the LbPM is adequate for SLAM. Given that the LbPM is capable of delivering RTK-like correction data, it could enable the generation of an accurate marker library in unknown environments. It is not clear whether or not a lane-accurate indoor navigation could be possible in places with a reduced marker density. Future work on this module could include adapting the LbPM algorithm for SLAM, and implementing it on dedicated hardware for real-world testing. For this purpose, tailored-made hardware solutions, such as [63,64], could aid in the navigation of complex environments, as can be parking structures. The use of lightweight artificial intelligence techniques, as shown in [65,66] could aid in the implementation of the standstill recognition in hardware with limited computational resources, as are embedded systems. Finally, the sensor fusion with on-board sensors in the vehicles could improve the dead-reckoning navigation in indoor environments.

Regarding the methodology runtime, it should be noted that even with high-level programming code, such as a Matlab, its runtime never exceeded 2 ms. This implies that it is possible to implement the methodology in real-time applications. Future work could include the implementation using a more efficient programming language, such as C++, and the use of dedicated hardware.

5. Conclusions

The objective of generating a reference vehicle state for proper testing and validation of automated driving functions is achieved in a prototypical manner by developing novel methods and by adapting existing ones to the problems at hand. This while reducing the dependency of INSs on external sensors, such as the SatNav. The testing results demonstrate that the proposed methods greatly improve the accuracy of the estimated vehicle state. The measured runtime suggests the possibility of real-time implementation of the proposed methodology.

Abbreviations

The following abbreviations are used in this manuscript:

| SatNav | Satellite Navigation |

| IMU(s) | Inertial Measurement Unit(s) |

| INS(s) | Inertial Navigation System(s) |

| RTK | Real-Time Kinematic |

| LbPM | LiDAR-based Positioning Method |

| RF | Random Forest |

| DFT | Discrete Fourier Transform |

| RPM | Revolutions Per Minute |

| COG | Course Over Ground |

| EKF | Extended Kalman Filter |

| GPS | Global Positioning System |

Supplementary Materials

The following are available online at https://www.mdpi.com/1424-8220/21/4/1131/s1.

Author Contributions

E.S.M. contributed with most of the presented methodology, its implementation and its validation. J.D. contributed with the methodology of the LiDAR-based SLAM. B.H. contributed with the conceptualization of the methodology. A.G.H. contributed with the supervision of the methodology. M.B. contributed with the conceptualization and supervision of the methodology, the funding acquisition and the project administration. All authors contributed in the writing, review and editing. Andrés García Higuera is a policy analyst in the European Parliamentary Research Service (EPRS), the internal research service and think-tank of the European Parliament. He is writing in a personal capacity and any views expressed do not represent an official position of the Parliament. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC was funded by the Federal Ministry of Education and Research of Germany (Bundesministerium für Bildung und Forschung) in the framework of FH-Impuls, project number 03FH7I02IA.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Datasets of the IMU signals and SatNav measurements of the results shown are available within the article as Supplementary Materials. The data presented in this study are available within the article as Supplementary Materials.

Conflicts of Interest