Abstract

Gait patterns result from the complex kinematics that enable human bipedal locomotion, and they can reveal a lot about a person’s state and health. Analysing them helps researchers gain new insights into the course of diseases, and helps physicians track recovery after injuries. When a person’s walking is disturbed in any way, the disturbance can show up in their gait patterns. This paper describes an experimental setup for capturing gait patterns with a capacitive sensor floor, which detects the time and position of foot contacts on the floor. With this setup, a dataset was recorded in which 42 participants walked over the sensor floor in different modes, inter alia at normal pace, with closed eyes, and while performing a dual-task. A recurrent neural network based on Long Short-Term Memory units was trained and evaluated on the classification task of recognising the walking mode solely from the floor sensor data. Furthermore, participants were asked to perform the Unilateral Heel-Rise Test, and their gait was recorded before and after the test. Another instance of the neural network was trained to predict the number of repetitions participants were able to complete in the test. As the results of the classification tasks are promising, the combination of this sensor floor and the recurrent neural network architecture appears to be a good system for further investigation towards applications in health and care.

Keywords: gait patterns, gait analysis, machine learning, feature learning, time series analysis, recurrent neural network, artificial neural network, sensor floor, SensFloor, long short-term memory, unilateral heel-rise test, dual-task

1. Introduction

Evaluating gait patterns is an essential resource in diagnosing neurological conditions or orthopaedic problems. Gait patterns play an important role in monitoring the development of diseases and in guiding clinical and therapeutic decisions [1], and they can be used to track recovery after an injury. By identifying the properties of a gait pattern that deviate from normative values, a precisely targeted therapy can be chosen as an intervention towards a more normal gait. Gait patterns can be captured with many kinds of sensors, either by measuring motion, such as accelerations and rotations, or by tracking the locations of limbs or footfalls. The latter can be done with sensors installed below the floor that deliver the time and location of steps: the sensor floor model used in this study, SensFloor®, achieves this by detecting changes in the electric capacitance on a grid of sensor fields. The sensor floor is easy to install and blends into the environment, as it is hidden below common flooring types. SensFloor allows collecting the most intensively researched parameters in gait analysis, such as cadence, step width and step length, or the timing of the stance and swing phases of human gait [2]. These parameters can then be used to identify asymmetries or be compared with tables of normative values for a person’s physique. Alternatively, gait can be analysed by formulating a task of interest as a classification or regression problem and training a machine learning model, which is the approach we chose for this project. This is useful when working with sensor data, as patterns can be found directly in the data stream without the detour of calculating intermediate, semantically meaningful or descriptive parameters or features.
In a pilot study [3], it was shown that the data generated by the sensor floor is suitable for training an artificial neural network to recognise whether persons have a high risk of falling. The study described here evaluated whether this sensor technology is also capable of recognising very subtle changes in walking patterns. For this, data from a young and healthy cohort was collected and examined, which was expected to show only very small differences in gait patterns, both between participants and in test-retest comparisons of each individual. To induce changes in the gait patterns, participants had to fulfil additional requirements while walking, such as wearing a blindfold or performing a dual-task like spelling backwards [4,5]. These tasks were chosen because they were expected to generate the very small variations in gait patterns needed for the system evaluation. Detecting these subtle changes is a step towards future applications such as detecting changes in gait caused by neurological or orthopaedic diseases. The main contribution of this paper lies in the methodological setup, which combines the floor sensor hardware for recording gait patterns, the processing of its raw data with its unique properties, and the application of machine learning models to the analysis tasks on this specific kind of data. A trained system that automatically delivers relevant hints or predicts parameters of high clinical interest could save a lot of time and work in everyday clinical practice, as it could help identify the patients who may benefit from targeted interventions.

2. Related Work

2.1. Gait Patterns, Interventions and the Unilateral Heel-Rise Test

The study protocol included different walking speeds as well as walking challenges. These conditions were derived from findings in gait analysis research which suggest that these walking modes influence individuals’ gait parameters. It is well known that the stance and swing phases of human gait vary with gait speed: slow walking increases double limb support, while fast walking increases single limb support [2]. Further, it has been demonstrated in young healthy adults as well as in older adults that visual impairments lead to changes in spatial and temporal gait parameters [6,7]; therefore, one of our conditions was to let participants walk with closed eyes. Moreover, dual-tasking, which generally combines a motor and a cognitive task, is common in activities of daily living [8]. The combination of walking with a mental tracking task, like spelling backwards (the task we chose), is often used to evaluate the effect on spatiotemporal gait parameters in older and younger adults [5,9,10,11]. Finally, power and endurance of the calf muscles, especially the M. triceps surae, are essential for human gait, balance, and mobility in everyday activities [2]. The importance of calf muscle strength for static and dynamic balance has been identified in several studies [12,13,14]. A standard test of muscle strength is manual muscle testing, with the examiner providing the resistance. Due to the short lever arm of the ankle plantar flexors (M. triceps surae), this technique can lead to ceiling effects. Therefore, a standing Unilateral Heel-Rise test (UHR test), which uses body weight as the resistance, has been used instead [15]. The UHR test has been used in combination with sensor analysis before [16]. In the current study, adults without lower-limb lesions performed as many unilateral heel-rise repetitions as possible. The UHR test procedure and the criteria for its application are based on previous examinations [15,17].
It has not yet been examined whether and how calf muscle endurance and strength affect spatiotemporal gait parameters in younger healthy adults.

2.2. Sensors for Gait and Behaviour Analysis

Depending on what one is interested in, different sensors can be used to collect different aspects of gait patterns. The first sensor used in gait analysis, as early as the 19th century, was the camera [18,19]. In modern form, it is still in use, as cameras are widely available and image processing algorithms keep advancing [20]. Camera images can be processed either directly, by extracting limb positions and joint angles, or by learning features with machine learning algorithms. Cameras are also often used for motion capture with infrared-reflective markers, which are tracked in three dimensions with very high precision and sample rate. A depth dimension can be added to the camera image with RGB-D (Red-Green-Blue-Depth) cameras like the Microsoft Kinect®, which has, for example, been used for gait analysis in classification problems in patients with Parkinson’s disease [21,22] or Multiple Sclerosis [23]. Further research using the Kinect sensor was conducted on gait analysis of children with ataxia [24] or cerebral palsy [25].

Another very common type of sensor used in gait analysis is the wearable Inertial Measurement Unit (IMU). IMUs are easy to handle and gather motion data such as linear accelerations and rotation rates, as well as absolute heading via magnetometers and altitude changes via barometers, typically with three-axis sensors and very high precision. In gait recording setups, they are attached to the limbs of the person being recorded. By varying the number and position of the IMUs, the data gathering can easily be focused on the aspects of gait relevant for a certain purpose.

A different, very direct way of measuring the timing and position of feet on the floor is to put the sensing elements onto or into the floor. Several measuring techniques are suitable for this. Typically, these systems either use force or pressure sensors, or exploit the fact that humans, with their high proportion of water in the body, influence electric fields and the electric capacitance. A common system for gait analysis is the GAITRite®, a pressure-sensitive walkway with a very high spatial and temporal resolution. It is extensively used in gait research, and its validity and reliability have been demonstrated in several papers [26,27,28,29,30]. Other sensor floor projects that rely on measuring force are described, for instance, in [31] using pressure sensors, and in [32] using a piezoelectric polymer. A completely different class of floor sensors measures electric field properties instead of force or pressure. The first type of these floor sensors measures the impedance of electric field couplings for an array of sensor plates, which is also called near field imaging [33]. A second type measures the electric capacitance, like the ELSI® Smart Floor by Mari Mils [34,35], or SensFloor® by Future-Shape [3,36,37,38,39,40,41,42,43]. The latter was used for the data acquisition here and is described in more detail later on.

Each class of sensors has its own advantages. On the one hand, pressure-sensitive floors directly deliver information about the forces applied to the floor. Given a high enough spatial resolution, one can even obtain the pressure distribution under the foot, which is a useful gait parameter in itself and can give hints about malpositioning of the feet or a disturbed foot roll-over. This is hard to achieve with contactless measurement systems that measure capacitances or perturbations of the electric field, as they react to a combination of the covered area and the distance to the sensing units, but not necessarily to force. Contactless, forceless sensors, on the other hand, can be used under nearly all kinds of flooring, as long as it is not conductive. This is not the case for pressure sensors, which must be used either with no flooring on top or with a soft layer that propagates the forces adequately. Sensors that measure electric properties are therefore suitable for larger areas like whole rooms and building floors, and they can be built to be more robust: there is no mechanical wear, and they are protected and shielded by the flooring layer, which is often itself engineered to last up to several decades. Generally speaking, floor sensors are more unobtrusive than other sensors. The anonymous, privacy-preserving way of tracking people with a sensor floor, especially compared to camera-based approaches, opens up possibilities such as tracking customers in stores [44]. When using cameras, the environment usually has to be controlled for lighting and obstacles in the line of sight, and there are often many cables running, which makes cameras unsuitable for some use cases, especially for day-to-day practical use in a doctor’s office or hospital.
Furthermore, cameras produce highly sensitive video data of individuals, which can hardly be anonymised. This raises questions of data privacy that have to be handled appropriately. IMUs have to be attached to the patient, which may be experienced as burdensome or uncomfortable and takes time that might not be available in hospital routines. However, both camera and IMU sensors are very convenient and useful for research, where there are fewer concerns about the practicality and robustness of the setup. Pressure sensor floors are convenient to use, but take up a lot of space that cannot be used otherwise. Moreover, they can easily be damaged, for example when a very heavy hospital bed is rolled over them. Capacitance-based sensor floors offer the same level of convenience in daily use and do not take up space, but come at the cost of significant changes to the premises on installation, as they have to be placed below the flooring. In view of practical applications, they seem to be the most promising sensor for many use cases, provided that the necessary construction work at installation time is tolerable.

2.3. Recurrent Neural Networks for Time Series Analysis

Machine learning models, and more specifically artificial neural networks, are a class of algorithms that have been successfully applied to a wide variety of classification and regression tasks. The different neural network architectures have in common that they are usually trained in a supervised manner, meaning that they are presented with inputs and correct outputs (targets) in a training phase. During training, internal weights are adjusted to minimise the error between the network output and the true output, using backpropagation of errors [45,46]. In this way, the network builds up an internal model of the relation between inputs and outputs. For time series data, such as those produced by the sensor floor used in this project, Recurrent Neural Networks (RNNs) have proven to be especially powerful [47]. In contrast to feedforward neural networks, recurrent neural networks include internal feedback connections which carry neuron activations from previously seen inputs and internal states. This gives recurrent neural networks a memory of past inputs and activations, which is why they are very useful for learning on time series data. They show very good results, for instance, in audio analysis applications like speech recognition [48,49,50] and in handwriting recognition [51]. A very common variant of RNNs is the Long Short-Term Memory (LSTM) model [46]. The LSTM cell combines internal input, output and forget gates with adjustable weights. In general terms, the input gate controls how much new information is written into the LSTM cell at a time step, while the output gate controls how much information is forwarded to subsequent layers of the network. The forget gate controls how much and which information from previous time steps is kept or dropped (forgotten).
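To make the gate description concrete, the following is a minimal NumPy sketch of one time step of a standard LSTM cell with input, forget and output gates. The weight layout (all gate blocks stacked in one matrix) and the function names are illustrative assumptions, not the configuration used in this study.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One time step of a standard LSTM cell with input, forget and output gates.

    Shapes (hypothetical layout): x (D,), h_prev and c_prev (H,),
    W (4H, D), U (4H, H), b (4H,), with the gate blocks stacked in the
    order input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information enters the cell
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell state is kept
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell state is passed on
    g = np.tanh(z[3 * H:4 * H])  # candidate values proposed for the cell state
    c = f * c_prev + i * g       # new cell state (the long-term memory)
    h = o * np.tanh(c)           # new hidden state, fed to the next step and layer
    return h, c


def lstm_forward(xs, W, U, b):
    """Run the cell over a sequence xs of shape (T, D); return the last hidden state."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h


# Example: 10 time steps of 5-dimensional input, 3 hidden units, random weights.
rng = np.random.default_rng(0)
D, H = 5, 3
W, U, b = rng.normal(size=(4 * H, D)), rng.normal(size=(4 * H, H)), np.zeros(4 * H)
h_last = lstm_forward(rng.normal(size=(10, D)), W, U, b)
```

The cell state `c` is only ever scaled by the forget gate and incremented by the gated candidate, which is the additive path that makes LSTMs less prone to vanishing gradients than basic RNNs.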
LSTM networks can be trained by backpropagation of errors, and they are less prone to problems like vanishing gradients, which often affect more basic recurrent architectures. Their special structure enables fine-grained self-control over which values to remember during sequence learning. This is also the architecture we used here, followed by some densely connected layers, i.e. a Multi-Layer Perceptron [52,53]. A Multi-Layer Perceptron consists of several layers of neurons between the input and output layer. All outputs of the neurons in one layer are connected to all inputs of the neurons in the next layer, which is why these are also called densely connected layers. The connections carry weights that are adjusted in the training phase by backpropagation of errors. Generally, the major strength of artificial neural networks in regression and classification problems is that they find relevant features in the input patterns autonomously during training. Furthermore, once a good architecture is found for a certain type of input signal, it can be reused for similar problems by training a new instance with other target labels. In the current study, for example, the same network architecture was trained with different walking modes as targets.
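A minimal NumPy sketch of densely connected layers on top of the recurrent part is shown below. It assumes ReLU hidden layers and a softmax output over walking-mode classes; the layer sizes, activation functions and function names are hypothetical, as the exact network configuration is not specified in this section.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    e = np.exp(z - np.max(z))  # shift by the maximum for numerical stability
    return e / e.sum()


def dense_head(h, layers):
    """Densely connected layers applied to an LSTM output vector h.

    layers is a list of (weights, bias) pairs. Every unit of one layer feeds
    every unit of the next. Hidden layers use ReLU (an assumption); the last
    layer uses softmax to turn scores into class probabilities.
    """
    a = h
    for k, (W, b) in enumerate(layers):
        z = W @ a + b
        a = softmax(z) if k == len(layers) - 1 else relu(z)
    return a


# Example: 3-dim LSTM output -> 8 hidden units -> 3 walking-mode classes.
rng = np.random.default_rng(1)
layers = [(rng.normal(size=(8, 3)), np.zeros(8)),
          (rng.normal(size=(3, 8)), np.zeros(3))]
probs = dense_head(rng.normal(size=3), layers)
predicted_mode = int(np.argmax(probs))
```

In a classifier like the one described, `h` would be the final hidden state of the LSTM after the whole sequence of floor vectors has been processed, and the index of the largest output probability would be the predicted walking mode. Retraining the same structure with other target labels only changes the size of the last layer.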

3. Methods

3.1. Capacitive Floor Sensor

The floor sensor used for data acquisition in this project is called SensFloor® and is developed and produced by Future-Shape GmbH [37,38]. It is based on measuring the electric capacitance. The sensors can be installed in all indoor environments under common flooring materials, which enables many types of applications. Most commonly, the system is used in elderly care facilities for fall detection [40], but also for ambient assisted living at home [42]. Various approaches exist to extract gait parameters from the sensor data, for instance an automatic step detection algorithm [36]. The SensFloor base material is a three-layered composite, with a thin aluminium foil at the bottom, a 3 mm thick polyester fleece in the middle, and a thin top layer of polyester fleece which is metal-coated and thereby electrically conductive. The floor sensor is organised as a grid of independently operating modules. Each module has a microcontroller board at its center, which is connected to power supply lines and eight triangular sensor fields. Both the power supply lines and the sensor fields are created by cutting the conductive top layer of the base material and removing the material in between. In this way, the top layer works similarly to a printed circuit board. To cover the floor of a room, the modules are placed next to each other, and the power supply lines of adjacent modules are electrically connected with textile strips of the same material as the top layer.

The geometric advantage of a triangular grid of sensor fields is that it doubles the number of sensitive areas compared to a rectangular grid with the same edge length. Since the walls of most rooms meet at right angles, it is most convenient to give the whole module a rectangular outline. This way, the rectangular modules can be placed next to each other, aligned with the walls, to cover the whole room. By choosing a triangular shape for the sensor fields inside the module, eight instead of four sensor fields can be connected to every microcontroller board, making better use of the microcontroller’s capabilities and increasing the spatial resolution. The installation is typically powered by a single 12 V power supply unit, which can be connected at any position along the edges of the room. A schematic of how SensFloor is composed, and a photo of a real arrangement of modules before the final floor covering was laid, are shown in Figure 1. To accommodate peculiarities of the ground plan such as columns or non-rectangular corners, the modules can even be cut into better fitting shapes (as long as the microcontroller circuit board remains undamaged). SensFloor comes in three standard shapes which translate into three different spatial resolutions. In the Low Resolution type, every module is a rectangle of 1 m × 0.5 m (sensor field area of 0.0625 m²), resulting in a spatial resolution of 16 sensor triangles per square meter. High Resolution modules are squares of 0.5 m × 0.5 m (sensor field area of 0.031 m²), giving 32 sensors per square meter, and Gait Resolution is the highest resolution: 0.38 m × 0.38 m (sensor field area of 0.018 m²), with approximately 55 sensors per square meter. For special applications, other resolutions and shapes can be produced by cutting the top layer accordingly. Each microcontroller measures the electric capacitance of the eight sensor fields connected to it.
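The resolution figures follow from simple arithmetic: each module carries eight triangular fields, so the field area is the module area divided by eight, and the field density is its reciprocal. A short Python check (the dictionary and function names are ours, for illustration only):

```python
FIELDS_PER_MODULE = 8

# Module outlines in metres, as listed in the text.
MODULE_SIZES_M = {
    "Low Resolution": (1.0, 0.5),
    "High Resolution": (0.5, 0.5),
    "Gait Resolution": (0.38, 0.38),
}


def field_area_and_density(width_m, height_m, fields=FIELDS_PER_MODULE):
    """Return (area of one sensor field in m², number of sensor fields per m²)."""
    field_area = width_m * height_m / fields
    return field_area, 1.0 / field_area


for name, (w, h) in MODULE_SIZES_M.items():
    area, density = field_area_and_density(w, h)
    print(f"{name}: {area:.4f} m² per field, {density:.1f} fields per m²")
```

This yields 0.0625, 0.03125 and about 0.018 m² per field, i.e. 16, 32 and about 55.4 sensor fields per square meter.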
When someone steps on a sensor field, the foot acts as the second plate of a capacitor, increasing the measured capacitance. If both feet happen to touch the same sensor field, the capacitance increases further as a result of the larger covered area. The capacitance is measured at a sample rate of 10 Hz. However, the system does not report the measurements at a fixed rate. Instead, consecutive capacitance measurements of each sensor field are compared, and a sensor message is generated only if they differ by a certain amount for at least one sensor field of a module. This behaviour is called event-based and has the advantage that less redundant information is generated at a very early stage of the sensor data processing pipeline. Sensor messages are sent out by the modules over radio in the Industrial, Scientific, and Medical (ISM) band at 868 MHz or 920 MHz (depending on the region and jurisdiction). A central transceiver collects the wireless sensor messages in a connectionless mode. As the radio range is quite large for this indoor use case (approximately 20 m), usually only a single transceiver is needed per room; for very large rooms, multiple transceivers can be used. Due to the event-based nature of the sensor system, the data rate depends more on the number of people on the floor and how active they are (walking or standing) than on the floor area covered by sensors. This makes it possible to install the sensor in rooms or buildings of up to several hundred square meters. In the scope of behaviour and gait analysis, SensFloor was previously used for identifying persons in a sensor fusion setup together with wearable accelerometers [39]. It was also shown that it is possible to discern whether a cat or a human walks over the floor [41].
Using a multi-layer perceptron with a feature extraction preprocessing step, it was possible to distinguish between humans with a low or high risk of falling due to unstable gait [3], or to (roughly) estimate a walking person’s age [43].
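The event-based reporting rule can be sketched as follows. This is a hypothetical illustration, not the SensFloor firmware or message format: the `min_change` threshold, the dictionary-based message layout and the function names are assumptions; only the 10 Hz sampling, the eight fields per module, and the rule "send a message if at least one field changed by a certain amount" come from the description above.

```python
from typing import List, Optional, Sequence

FIELDS = 8          # sensor fields per module
SAMPLE_RATE_HZ = 10


def maybe_emit(module_id: int, previous: Sequence[float], current: Sequence[float],
               min_change: float) -> Optional[dict]:
    """Return a message if any field changed by at least min_change, else None.

    The message carries the values of all eight fields, not only the changed one.
    """
    if any(abs(c - p) >= min_change for p, c in zip(previous, current)):
        return {"module_id": module_id, "capacitances": list(current)}
    return None


def event_stream(module_id: int, samples: List[Sequence[float]], min_change: float) -> List[dict]:
    """Turn fixed-rate samples of one module into event-based messages."""
    messages = []
    for k in range(1, len(samples)):
        msg = maybe_emit(module_id, samples[k - 1], samples[k], min_change)
        if msg is not None:
            msg["t"] = k / SAMPLE_RATE_HZ  # sample index converted to seconds
            messages.append(msg)
    return messages


# A foot lands on field 2 at sample 2 and leaves at sample 4; nothing else changes.
idle = [0.0] * FIELDS
step = [0.0, 0.0, 50.0, 0.0, 0.0, 0.0, 0.0, 0.0]
msgs = event_stream(module_id=7, samples=[idle, idle, step, step, idle], min_change=10.0)
```

Five fixed-rate samples produce only two messages here (foot down and foot up), which illustrates why the data rate depends on the activity on the floor rather than on the covered area.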

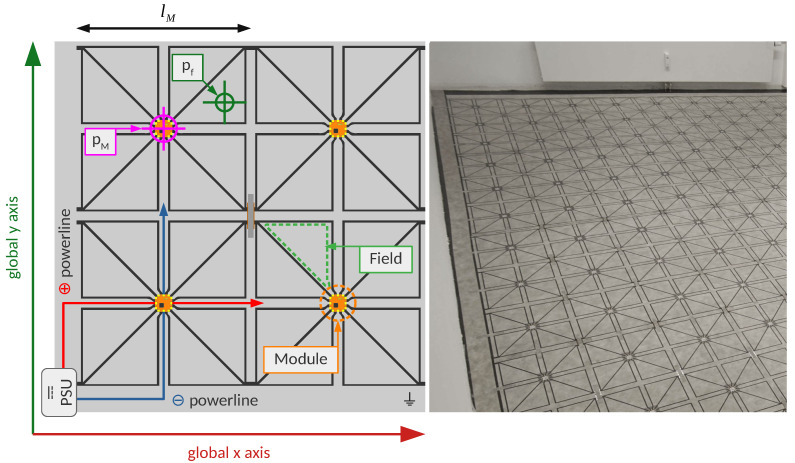

Figure 1.

Left side: Schematic of SensFloor. The bottom left module shows the power transmission paths (PSU = Power Supply Unit). The top left module shows the coordinates of a module center and of a sensor field centroid. The module edge length is typically 0.38 m for gait analysis. The electric capacitance is measured on the sensor fields, which are shaped as triangles. Right side: SensFloor sensor modules in the study lab. This photo was taken during the installation of the sensors; afterwards, a carpet was laid on top of the modules, concealing them completely.

3.2. From SensFloor Messages to the State of Electric Capacitances

The sensor floor is built up of modules that operate independently. Every module is attached to eight sensor fields on which the electric capacitance is measured (see Figure 1). If one or more of the field capacitances change by a certain amount compared to the previous measurement of that field, a message is sent out via radio. The message contains the unique id of the module and the current capacitance values of all eight fields, indexed by $j$. On the receiver side, these messages appear as a stream over time which can be recorded. The capacitances communicated by a received message remain valid until a new message from the same module is received. For this reason, past messages need to be remembered until they are superseded by a new message concerning the same sensor fields. This is done with a data structure that is updated with every arriving sensor message, called the sensor state $S_t$ (of electric capacitances at time $t$). From the module id contained in the message and the ordered numbering $j$ of the sensor fields of a module, a new id can be generated which uniquely identifies a single sensor field; this is called the field id $f$. For each of the $n$ sensor fields in a sensor floor installation, there is exactly one entry in the sensor state at any time. Each entry contains the field id $f$, the position $\mathbf{p}_f$ of the field centroid in 2D floor coordinates, the time $\tau_f$ of the last measurement of this field (as time since the start of the recording), and the measured electric capacitance $c_f$ within the measurement range. Figure 1 shows where the field centroid is located in a SensFloor setup.
With $l$ as the length of the module edge, and approximating the sensor fields as right-angled triangles, the eight field centroid positions can be obtained from the module center position (known at installation time) by adding or subtracting $l/3$ along one axis and $l/6$ along the other (the centroid of a right-angled triangle lies one third of each leg away from the right-angle vertex). At the start of any recording, the initial state $S_0$ is initialised with all capacitances and measurement times set to zero.
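The centroid rule and the initial state can be written out as a short Python sketch. It assumes that the eight triangles are formed by the module’s two diagonals and two midlines, so that each field is a right-angled triangle spanning the module center, an edge midpoint and a corner; the ordering of the fields (the index j) and the `(module_id, j)` field-id scheme are hypothetical.

```python
from itertools import product


def field_centroids(center_x, center_y, l):
    """Approximate centroids of the eight triangular sensor fields of one module.

    Each field is treated as a right-angled triangle spanning the module center,
    an edge midpoint and a corner, so its centroid is offset from the module
    center by l/3 along one axis and l/6 along the other.
    """
    signs = list(product((1, -1), repeat=2))
    offsets = [(sx * l / 3, sy * l / 6) for sx, sy in signs]
    offsets += [(sx * l / 6, sy * l / 3) for sx, sy in signs]
    return [(center_x + dx, center_y + dy) for dx, dy in offsets]


def initial_state(module_centers, l):
    """S_0: one entry per sensor field with capacitance and time set to zero.

    module_centers maps a module id to its (x, y) center, known at installation.
    The (module_id, j) field id is a hypothetical scheme.
    """
    state = {}
    for module_id, (cx, cy) in module_centers.items():
        for j, pos in enumerate(field_centroids(cx, cy, l)):
            state[(module_id, j)] = {"pos": pos, "t": 0.0, "c": 0.0}
    return state


# Two adjacent Gait Resolution modules (edge length 0.38 m).
s0 = initial_state({1: (0.19, 0.19), 2: (0.57, 0.19)}, l=0.38)
```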

To obtain the new state $S_t$ from the previous state when a new message arrives at time $t$, it is convenient to treat a SensFloor message as a set of updates of individual sensor fields. The state is updated by replacing the capacitances and update times of the sensor fields contained in the message.
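A minimal sketch of this update, keeping the state as a dictionary keyed by a hypothetical `(module_id, j)` field id, with entries holding the centroid position, last update time and capacitance. The message layout is likewise an assumption for illustration.

```python
def update_state(state, message, t):
    """Return S_t from the previous state and a message received at time t.

    Only the eight fields of the reporting module are overwritten; every other
    entry keeps its last reported capacitance and update time. The previous
    state is left unchanged.
    """
    new_state = dict(state)
    module_id = message["module_id"]
    for j, c in enumerate(message["capacitances"]):
        key = (module_id, j)                # hypothetical field-id scheme
        entry = dict(new_state[key])        # copy, so the old state is not mutated
        entry["c"], entry["t"] = c, t
        new_state[key] = entry
    return new_state


# Two modules with eight fields each, all idle at the start of the recording.
s0 = {(m, j): {"pos": (float(m), float(j)), "t": 0.0, "c": 0.0}
      for m in (1, 2) for j in range(8)}
s1 = update_state(s0, {"module_id": 1, "capacitances": [0, 0, 40, 0, 0, 0, 0, 0]}, t=0.3)
```

Returning a new dictionary instead of mutating in place keeps every intermediate state available, which matches the view of the recording as a time series of states.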

The sensor state can be interpreted as the state of a discrete-time dynamical system in which the incoming messages are the only input. The sensor state is similar to a camera image, as it is a snapshot of the capacitances at one point in time. Processing the time series of these states makes it possible to track persons moving on the floor, or to extract foot positions for calculating gait parameters. Here, we use the time series of states directly as input to an artificial neural network, after applying a geometric transformation and resampling.

3.3. Transformation to Local Coordinates

The sensor floor delivers data as a time series of capacitance measurements in a two-dimensional coordinate system aligned with the floor. The sensor can be installed in arbitrary dimensions and shapes, following the ground plan of a building, and can extend over very large floor areas. It is therefore not useful to process the sensor data in the global x/y coordinates it is delivered in, as this would hardly generalise to other shapes and dimensions. Furthermore, the properties of gait generally depend neither on where a person is walking nor on the walking direction; they are translation- and rotation-invariant. For these reasons, it seems worthwhile to look only at the sensor activations in the close vicinity of the walking person: this region contains all the information necessary to extract gait patterns, and restricting the input to it greatly reduces the input dimensionality by ignoring all sensor fields that are too far away to register the person’s footfalls anyway. The preprocessing step transforms the sensor activations of the sensor state into a local coordinate system defined by the position and walking direction of the person, and removes all sensor activations that are too far from the tracked position to be relevant. This process is shown graphically in Figure 2.

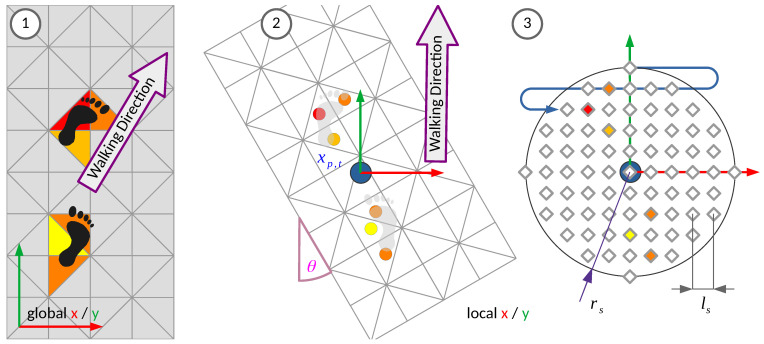

Figure 2.

Transformation of the capacitance measurements into the local coordinate system of the tracked person, and resampling. 1. Sensor activations in global coordinates, with examples of how a foot on the floor influences the measured capacitances (grey = unoccupied, yellow to red = level of electric capacitance). 2. The sensor state is translated to the tracked position and rotated into the walking direction by the walking angle. 3. The sensor activations are resampled to the grid shown by the diamonds, which is defined by the sampling resolution and the radius. The sample point closest to a transformed sensor field centroid takes that field’s capacitance value. Then, the grid is reshaped into vector form as shown by the meandering blue arrow.

In our recordings, only the person being recorded was supposed to be in the region of interest. In a real scenario, a more sophisticated tracker should be used which can also separate multiple persons walking on one floor area. With only one person on the floor, their position can be tracked by taking the weighted mean of the positions of all active sensor fields, where “active” means that the capacitance is above a certain threshold. Filtering with this threshold yields the sparse representation $\hat{S}_t$ of the sensor state. The threshold capacitance can be chosen arbitrarily; in the current study, it was set to a fixed fraction of the measuring range, based on previous observations of sensor fluctuations, to account for noise. The position vectors of the remaining sensor fields in the sparse state are weighted (multiplied) by their capacitances, summed, and normalised by the total capacitance of the sparse state. This approach is similar to calculating the center of gravity or center of mass of a particle system:

$$\mathbf{p}_t = \frac{\sum_{f \in \hat{S}_t} c_f \, \mathbf{p}_f}{\sum_{f \in \hat{S}_t} c_f},$$

where $c_f$ and $\mathbf{p}_f$ denote the capacitance and the centroid position of sensor field $f$.

The walking direction is determined by the vector from the first to the last tracked position, i.e. using the first and the last state captured during a recording. From this vector, the average walking angle relative to the global y axis is derived and used to rotate the sensor field positions into the local coordinate system of the walking person.
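The position tracking and the walking-angle computation can be sketched in Python as below. The sketch assumes the state is a dictionary mapping field ids to entries with a centroid position `pos` and a capacitance `c`; the function names are hypothetical, and the angle convention (measured from the global +y axis, positive towards +x, computed with `math.atan2(dx, dy)`) is an assumption, since the sign convention is not stated here.

```python
import math


def tracked_position(state, threshold):
    """Capacitance-weighted mean of the positions of all active fields.

    state maps field ids to dicts with a centroid "pos" (x, y) and a
    capacitance "c"; fields at or below threshold are ignored (sparse state).
    Returns None if no field is active.
    """
    active = [e for e in state.values() if e["c"] > threshold]
    total = sum(e["c"] for e in active)
    if not active or total <= 0:
        return None
    x = sum(e["c"] * e["pos"][0] for e in active) / total
    y = sum(e["c"] * e["pos"][1] for e in active) / total
    return x, y


def walking_angle(first_pos, last_pos):
    """Angle of the first-to-last displacement, measured from the global +y axis
    (positive towards +x). The sign convention is an assumption."""
    dx = last_pos[0] - first_pos[0]
    dy = last_pos[1] - first_pos[1]
    return math.atan2(dx, dy)


state = {
    "a": {"pos": (0.0, 0.0), "c": 1.0},
    "b": {"pos": (4.0, 0.0), "c": 3.0},
    "noise": {"pos": (9.0, 9.0), "c": 0.5},   # below the threshold, ignored
}
p = tracked_position(state, threshold=0.8)    # -> (3.0, 0.0)
```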

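All entries in the sparse state that are farther than a radius $r$ from the tracked person’s position are removed. Usually, no such entries should exist, but they can occur if a capacitance reading exceeds the threshold due to higher than normal noise (e.g., if someone other than the recorded person accidentally steps into the recording area). Combining the translation to the tracked position, the rotation into the walking direction, and the removal of all field entries that are too far away, the sparse state in local coordinates follows as:

$$\hat{S}^{\mathrm{loc}}_t = \left\{ \left(f,\; R(\varphi)\,(\mathbf{p}_f - \mathbf{p}_t),\; \tau_f,\; c_f\right) \;\middle|\; f \in \hat{S}_t,\; \lVert \mathbf{p}_f - \mathbf{p}_t \rVert \le r \right\},$$

where $\hat{S}_t$ is the sparse state, $\mathbf{p}_t$ is the tracked position, $\mathbf{p}_f$, $\tau_f$ and $c_f$ are the centroid position, last update time and capacitance of field $f$, and $R(\varphi)$ is the 2D rotation by the walking angle $\varphi$ that aligns the walking direction with the local y axis.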
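A Python sketch of this transformation follows. It assumes the walking angle `phi` is measured from the global +y axis towards +x, so that the walking direction is (sin phi, cos phi); rotating each offset counterclockwise by `phi` then maps that direction onto the local +y axis. The function name, the `(x, y, capacitance)` output tuples and the example values are hypothetical.

```python
import math


def to_local(state, position, phi, radius, threshold):
    """Sparse state in the walker's local frame, as (x, y, capacitance) tuples.

    Assumes phi is measured from the global +y axis towards +x, so the walking
    direction is (sin phi, cos phi). Rotating each offset counterclockwise by phi
    maps that direction onto the local +y axis.
    """
    px, py = position
    cos_p, sin_p = math.cos(phi), math.sin(phi)
    local = []
    for entry in state.values():
        if entry["c"] <= threshold:                           # keep only active fields
            continue
        dx, dy = entry["pos"][0] - px, entry["pos"][1] - py   # translate
        if math.hypot(dx, dy) > radius:                       # drop fields outside the radius
            continue
        x_loc = cos_p * dx - sin_p * dy                       # rotate counterclockwise by phi
        y_loc = sin_p * dx + cos_p * dy
        local.append((x_loc, y_loc, entry["c"]))
    return local


# Walking along global +x (phi = pi/2): a field 1 m ahead ends up on the local +y axis.
state = {
    "ahead": {"pos": (3.0, 2.0), "c": 5.0},
    "far": {"pos": (10.0, 10.0), "c": 5.0},    # outside the radius
    "idle": {"pos": (2.5, 2.0), "c": 0.0},     # below the threshold
}
local = to_local(state, position=(2.0, 2.0), phi=math.pi / 2, radius=1.5, threshold=0.8)
```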

As a final step, the field positions of the local sparse state are resampled to a local 2D Cartesian grid with a fixed positional resolution, again limited to the same radius around the tracked position. Each grid point has an associated capacitance value. The grid is comparable to a receptive field of the artificial neural network.

For every field contained in the local sparse state, the capacitance value of the grid point closest to the field centroid is set to that field’s capacitance. This resampling step is necessary because the field capacitances (at one time step) are eventually reshaped into a 1D vector which corresponds to the array of input neurons of the artificial neural network. The association is fixed, so any given grid position in the local coordinate system is always connected to the same input neuron. The whole transformation and resampling procedure is shown with example data in Figure 2. The task of associating input vector entries that are geometrically close to each other, and therefore have a similar meaning for gait pattern analysis, is left to the artificial neural network. The capacitance value of each grid point is used as the activation value of the respective input neuron. Resampling the sensor activations into the local context of the participant’s position and walking direction results in a reduced set of positions and activations in the person’s vicinity and local coordinate system. It is a sparse view of the whole state of sensor activations that contains all the information relevant for gait analysis. This set can be unrolled into a vector of activations by discarding the positions and collecting the capacitances in any fixed order, as shown in Figure 2 by the meandering blue arrow. By applying the state update, transformation and resampling every time a message arrives, one obtains a time series of vectors that is well suited as input to time series machine learning algorithms like the Long Short-Term Memory based artificial neural network used here.
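Nearest-grid-point resampling and flattening can be sketched as follows. The sketch assumes a square grid spanning [-radius, radius] on both axes with a given spacing, and reads the rows in the meandering (left-to-right, then right-to-left) order suggested by Figure 2; the grid shape, the overwrite rule when two fields map to the same grid point, and the function name are assumptions, since the paper only requires that each grid point keeps a fixed vector index.

```python
def resample_and_flatten(local_fields, resolution, radius):
    """Nearest-grid-point resampling of local fields, flattened to one vector.

    local_fields: iterable of (x, y, capacitance) in the walker's local frame.
    The grid is square, spans [-radius, radius] on both axes with the given
    spacing, and every grid point keeps a fixed index in the output vector.
    Rows are read in a meandering order (left-to-right, then right-to-left).
    """
    n = int(round(2 * radius / resolution)) + 1       # grid points per axis
    grid = [[0.0] * n for _ in range(n)]              # grid[row][col], row = y
    for x, y, c in local_fields:
        col = int(round((x + radius) / resolution))   # nearest grid column
        row = int(round((y + radius) / resolution))   # nearest grid row
        if 0 <= row < n and 0 <= col < n:
            grid[row][col] = c                        # later fields overwrite earlier ones
    vector = []
    for r, values in enumerate(grid):
        vector.extend(values if r % 2 == 0 else reversed(values))
    return vector


vec = resample_and_flatten([(0.0, 0.0, 5.0), (0.2, -0.6, 7.0)], resolution=0.2, radius=0.6)
```

Every call returns a vector of the same length (49 entries for these parameters), so consecutive calls produce the fixed-size time series of input vectors that the recurrent network expects.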

3.4. Data Collection

The data recordings were carried out in the APPS Lab (Assessment of Physiological and Psychological Signals Lab) at the Institute of Medical Informatics, University of Lübeck. In this lab, a room with a floor area of 24 m² is fully equipped with the SensFloor capacitive sensor system. For this installation, the Gait Resolution variant of SensFloor was used, with a module side length of 0.38 m. The sensors cover the whole area of the room except for a negligible, slim (10 cm) non-sensitive zone along the border of the room, which is needed for the power supply of the sensors. Figure 1 shows the system before the final floor covering is installed on top. Once the installation is completed, the room looks like any other room, as the sensor system is no longer visible. In addition, Inertial Measurement Units (IMUs) delivered movement data for the gait pattern recordings. For this, four devices of the LPMS-B2 series from LP-RESEARCH Inc. were used. This model combines several sensors, such as an accelerometer, gyroscope, magnetometer and barometer, and can thereby provide a comprehensive measurement of the device's motion in all three dimensions. The IMU data was sampled at a fixed rate of 50 Hz. Although the IMU data is not part of the current analysis, it will be the focus of a later examination. Both the IMU and SensFloor data were collected and recorded on a central small-form-factor Intel NUC computer running Ubuntu 18.04 with ROS Melodic (Robot Operating System) [54] on top.

By using ROS and its included data handling tools, it was ensured that all data was recorded in a time-synchronised manner, although it was gathered from multiple sources. The IMUs were connected to the recording computer via Bluetooth, and the SensFloor Data Transceiver transmitted its data over an Ethernet network connection. Before the start of the recording, the IMUs were attached to the participants at the following positions: two on the outward-facing side of the ankles, one at the lower abdomen (e.g., at belt buckle height), and one at the sternum.

As part of this project, recording software with a graphical interface was developed. The software ran on the recording computer and handled the connections to the sensors as well as the starting and stopping of recordings. Over a wireless network, it offered a graphical user interface, running in the web browser, to the person supervising the recordings. This interface showed the current connection state of all sensors, so that the supervisor could see whether it was fine to proceed with a recording. A free text field was available for entering a participant ID, which was associated with the sensor recordings and also noted on the case report form of that participant. The web interface also showed a recording ID, which increased automatically after every recording. The supervisor noted the recording ID together with the walking mode performed by the participant in the respective recording. Finally, the interface had buttons for starting and stopping the recording and for resetting the connection to the sensors in case of problems.

Participants started in the corridor outside the room, walked into the room and in a straight line towards the windowsill opposite the door. There, they turned on the spot, walked back and left the room again through the door. This procedure was repeated five times per participant and walking mode.
The starting point was placed outside the room so that the first few steps were not captured by the sensors and could thereby easily be disregarded. This is useful because gait patterns typically look different while accelerating from standing still than after taking a few steps. For the same reason, the steps captured by the sensors right before and right after the turning point inside the room were excluded. The participants were asked to walk in five different modes in the following order: “walk at your normal pace”, “walk faster than normal”, “walk slower than normal”, “walk with your eyes closed” and “walk while spelling a word backwards (dual-task)”. For the dual-task mode, the instructor told the participant a random word from a list of 100 words. After that, the participants performed the Unilateral Heel-Rise (UHR) test and then had their walk recorded again, which we call the “post UHR” recording. An example of a recorded walk is shown in Figure 3. In total, 42 participants (age 19 to 31, M = 25, SD = 3.1) were recorded for the dataset. Summed over all walking modes, they performed 2506 walks, each either from door to window or from window to door. All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki, and the protocol was approved by the Ethics Committee of the University of Lübeck on 4 June 2020, file reference 20-214.

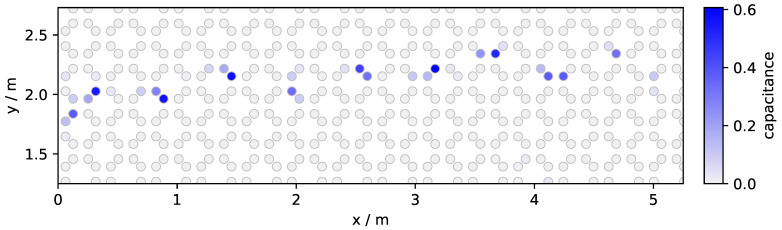

Figure 3.

Example data of a person walking on the sensor floor. The plot shows, for every sensor field, the maximum capacitance measured during a single recording. The circles are the centroids of the sensor fields; clusters of centroids with a high maximum capacitance correspond to steps (footfalls).

3.5. Data Analysis

All recordings were preprocessed by transforming the sensor activations into the local context, thereby building a time series of 1D vectors. The time series of every recording was further split into multiple time series of equal length by moving a time window of 30 steps over the whole recording in increments of one step. These were then used to train artificial neural networks. The architecture that worked best in our case was a stack of one Long Short-Term Memory (LSTM) layer directly after the input layer, followed by four dense layers. The LSTM layer had an output size of 20, and the dense layers had 20 neurons each. The dense layer neurons were Rectified Linear Units (ReLU), whose activation function is the identity for inputs above zero and outputs zero for inputs less than or equal to zero. The goals of the study were formulated as classification and regression tasks. The output layer consisted of two neurons with a softmax activation function for the classification tasks, and of one ReLU neuron for the regression tasks. The mean squared error was used as error metric and loss function for the regression tasks, and the binary cross-entropy for the classification tasks. The artificial neural network was trained for a maximum of 60 epochs, with early stopping if the validation error did not improve within a patience of 12 epochs. The implementation used the TensorFlow library (v2.3.0) in Python (v3.8.5) [55]. To assess the robustness of the model and of the whole training and evaluation process, every run was repeated multiple times to ensure that the results were not incidental. Furthermore, the random seed for the network operations was not fixed but chosen randomly every time. All networks were evaluated by leave-one-out cross-validation (LOOCV).
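Two procedural details of the paragraph above, the sliding window and the early stopping rule, can be sketched in plain Python with NumPy. Assumptions: one "step" is taken to be one row of the preprocessed time series (one state update), and the early stopping function merely replays a given sequence of validation losses to show when training would stop. The authors used TensorFlow, where the `tf.keras.callbacks.EarlyStopping` callback provides this behaviour in practice; the function names below are hypothetical.

```python
import numpy as np


def sliding_windows(series, window=30, stride=1):
    """Cut a (timesteps, features) series into overlapping windows of
    `window` consecutive steps, shifted by `stride` steps each time."""
    series = np.asarray(series)
    count = series.shape[0] - window + 1
    if count <= 0:  # recording shorter than one window
        return np.empty((0, window) + series.shape[1:], dtype=series.dtype)
    return np.stack([series[i:i + window] for i in range(0, count, stride)])


def early_stopping_epoch(val_losses, patience=12, max_epochs=60):
    """Replay a sequence of validation losses and return the epoch (1-based)
    after which training stops: once the loss has not improved for `patience`
    consecutive epochs, or at `max_epochs` at the latest."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return min(len(val_losses), max_epochs)
```

A recording of 35 steps thus yields 6 overlapping windows of 30 steps. If the validation loss stops improving after epoch 5, training ends at epoch 17, i.e. after 12 epochs without improvement.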
For the idiosyncratic analysis, one single walk was left out for the test set, one walk was left out for the validation set, and the rest was used for training, as the goal was to detect intrapersonal differences. For the generalised analysis, all recordings of one participant were left out for the test set and all recordings of another participant for the validation set, as the goal there was to train the neural networks on patterns common to all participants. The split into an idiosyncratic and a generalised analysis was made to cover both possibilities: (1) that the walking modes are reflected in the gait patterns on a very individual level, and (2) that there is a common effect on the gait patterns across participants. In summary, neural networks were trained in three different experiment designs:
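The two cross-validation schemes can be sketched as index generators that yield (train, validation, test) index lists. This is an illustrative sketch, not the authors' code; in particular, how the validation walk or validation participant was chosen is not stated, so taking the next one in cyclic order is an assumption. Both functions need at least three walks or participants, and their names are hypothetical.

```python
from typing import Dict, Iterator, List, Sequence, Tuple

Split = Tuple[List[int], List[int], List[int]]  # (train, validation, test) indices


def idiosyncratic_splits(n_walks: int) -> Iterator[Split]:
    """Within one participant: every walk is the test set once, and the
    following walk (cyclically, an assumption) is held out for validation."""
    for test in range(n_walks):
        val = (test + 1) % n_walks
        train = [i for i in range(n_walks) if i not in (test, val)]
        yield train, [val], [test]


def generalised_splits(participant_of: Sequence[str]) -> Iterator[Split]:
    """Across participants: all walks of one participant form the test set,
    all walks of the next participant (cyclically) the validation set."""
    participants = sorted(set(participant_of))
    for k, test_p in enumerate(participants):
        val_p = participants[(k + 1) % len(participants)]
        split: Dict[str, List[int]] = {"train": [], "val": [], "test": []}
        for idx, p in enumerate(participant_of):
            key = "test" if p == test_p else "val" if p == val_p else "train"
            split[key].append(idx)
        yield split["train"], split["val"], split["test"]
```

Splitting at the level of whole walks (or whole participants) before cutting the sliding windows keeps overlapping windows of the same walk out of different sets, which would otherwise leak information from the training data into the test data.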

Predicting the Walking Mode—Idiosyncratic: In the first experiment, one artificial neural network was trained for every individual participant and interference mode (except for the different walking speeds, which were beyond the scope of this paper). The goal was to find out whether there are intrapersonal differences between the walking patterns of the different modes that the network can learn. The task was set up as binary classification between the walking patterns of the normal mode and those of either the “closed-eyes”, “dual-task” or “post UHR” mode.

Predicting the Walking Mode—Generalised: For this experiment, the machine learning model was trained on the walk data of all participants. The goal was to evaluate whether the walk at normal pace can be distinguished from the interference modes in a generalised manner, i.e., whether there is a change in pattern that is common to all participants.

Predicting the UHR Repetitions: The goal of the last experiment was to predict the results a participant would achieve on the Unilateral Heel-Rise Test as a regression task. The neural network was again trained to achieve generalisation across participants. One network was trained for each leg, left and right.
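The network architecture described in Section 3.5 can be illustrated with a NumPy forward pass. This is a sketch with random, untrained weights that only shows the data flow and tensor shapes; it is not the authors' TensorFlow model and omits training entirely. In Keras, a corresponding stack would presumably be built from `tf.keras.layers.LSTM(20)` followed by `tf.keras.layers.Dense(20, activation="relu")` layers and an output layer. `n_inputs` stands for the length of the resampled input vector, and the gate ordering inside the LSTM is an arbitrary implementation choice.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def relu(z):
    # Identity for positive inputs, zero otherwise.
    return np.maximum(z, 0.0)


def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def init_params(n_inputs, n_units=20, n_dense=4, n_outputs=2):
    """Random weights for one LSTM layer, `n_dense` ReLU layers and an output layer."""
    return {
        "W": rng.normal(0, 0.1, (n_inputs, 4 * n_units)),  # input -> 4 gates
        "U": rng.normal(0, 0.1, (n_units, 4 * n_units)),   # hidden -> 4 gates
        "b": np.zeros(4 * n_units),
        "dense": [(rng.normal(0, 0.1, (n_units, n_units)), np.zeros(n_units))
                  for _ in range(n_dense)],
        "out": (rng.normal(0, 0.1, (n_units, n_outputs)), np.zeros(n_outputs)),
    }


def forward(p, x, task="classification"):
    """x has shape (batch, timesteps, features); returns class probabilities
    (task='classification') or a non-negative value per window ('regression')."""
    batch, timesteps, _ = x.shape
    n_units = p["U"].shape[0]
    h = np.zeros((batch, n_units))  # hidden state
    c = np.zeros((batch, n_units))  # cell state
    for t in range(timesteps):
        z = x[:, t] @ p["W"] + h @ p["U"] + p["b"]
        i, f, o, g = np.split(z, 4, axis=1)  # input, forget, output gates, candidate
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    a = h  # only the last hidden state is passed to the dense layers
    for W, b in p["dense"]:
        a = relu(a @ W + b)
    W, b = p["out"]
    logits = a @ W + b
    return softmax(logits) if task == "classification" else relu(logits)
```

With `n_outputs=2` and softmax, each window yields two class probabilities summing to one (normal vs. interference mode); with `n_outputs=1` and `task="regression"`, the ReLU output keeps the predicted number of repetitions non-negative.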

4. Results

4.1. Results for Predicting the Walking Mode—Idiosyncratic

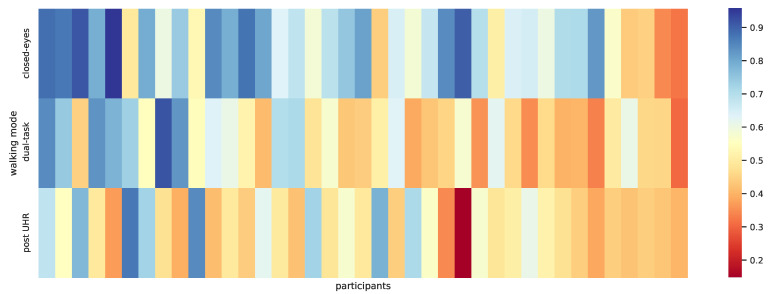

For the closed-eyes walking task, the intrapersonal, idiosyncratic classification accuracies of the participants varied between 0.32 and 0.96 (M = 0.68, SD = 0.16). For the dual-task recordings, the accuracies ranged between 0.30 and 0.92 (M = 0.54, SD = 0.16), and for the post UHR walk between 0.15 and 0.87 (M = 0.52, SD = 0.15). Accuracy is calculated as the number of correct classifications divided by the number of all predictions; random guessing therefore corresponds to an expected value of 0.5. These results are presented graphically in Figure 4.

Figure 4.

Classification accuracies of the individual participants when classifying between different walking modes. Every column is one participant. The walking modes are: normal vs. closed-eyes, normal vs. dual-task, and normal vs. post UHR. The columns are sorted from left to right by the participant's mean accuracy over all three modes.

4.2. Results for Predicting the Walking Mode—Generalised

The network that was trained to generalise over walking patterns common to the whole cohort produced the classification results shown in Table 1. With the interference mode as the positive outcome, Precision (also known as positive predictive value) is the share of true positive predictions among all positive predictions. Recall (or sensitivity) is the number of true positive predictions divided by the number of all positive data points. The F1 score is the harmonic mean of Precision and Recall. For the first two comparisons, Precision, Recall and F1 score happen to have the same value.

Table 1.

Results for generalised classification.

| Mode | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| normal vs. closed-eyes | 0.77 | 0.80 | 0.80 | 0.80 |
| normal vs. dual-task | 0.56 | 0.58 | 0.58 | 0.58 |
| normal vs. post UHR | 0.50 | 0.48 | 0.46 | 0.47 |
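The metrics in Table 1 follow from the counts of true positives, false positives and false negatives, with the interference mode as the positive class. The short plain-Python sketch below (the function name is hypothetical) also shows why Precision, Recall and F1 score coincide for the first two comparisons: the harmonic mean of two equal values is that same value. As a check on the third row, 2 · 0.48 · 0.46 / (0.48 + 0.46) ≈ 0.47.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 score, with `positive` marking the
    interference mode and any other label the normal walk."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1
```

For instance, labels `[0, 0, 1, 1, 1]` predicted as `[0, 1, 1, 1, 0]` give an accuracy of 0.6 and Precision, Recall and F1 score of 2/3 each.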

4.3. Results for Predicting the UHR Repetitions

The mean maximum number of heel rises performed in this cohort was 25.7 (SD = 7.8, minimum 10, maximum 45 repetitions) for the right leg and 24.3 (SD = 7.9, minimum 13, maximum 45 repetitions) for the left leg. When predicting the UHR test results from the “normal” gait recording, the artificial neural network achieved a root mean square error (RMSE) of 11.7 repetitions. The true and predicted values did not correlate (Pearson coefficient of −0.02). The high error and the lack of correlation are a limitation of the current study.
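RMSE and the Pearson coefficient can be computed as sketched below (NumPy, hypothetical helper names). A useful reference point is a model that always predicts the cohort mean: its RMSE equals the population standard deviation of the true values, i.e. close to the reported SDs of 7.8 and 7.9 repetitions, so the reported RMSE of 11.7 lies above this trivial baseline. Pearson's r is undefined for a constant prediction, which is why the baseline is compared via RMSE only.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean square error between true and predicted repetitions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient between true and predicted values."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])


def mean_baseline_rmse(y_true):
    """RMSE of always predicting the sample mean, which equals the
    population standard deviation of y_true."""
    y_true = np.asarray(y_true, dtype=float)
    return rmse(y_true, np.full_like(y_true, y_true.mean()))
```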

5. Discussion

5.1. Summary

In this project, we aimed to evaluate whether SensFloor data can be used with a recurrent neural network architecture to learn subtle differences in gait. The sensor floor used here delivers its data as a time series of capacitance measurements in two dimensions. For time series data, recurrent neural network architectures such as Long Short-Term Memory (LSTM) units often work especially well. This was also the case in this project, in which we trained an LSTM-based neural network on the sensor data. For the dataset, we recorded participants in different walking modes. First, the participants were told to walk at their normal pace, then faster than normal, followed by slower than normal. Then, the participants kept their eyes closed while their gait was recorded again. As another intervention, the participants carried out a dual-task walk, in which they were asked to spell words backwards while walking and again being recorded. Subsequently, the participants performed the Unilateral Heel-Rise (UHR) test and were then recorded again walking at their normal pace, unimpeded. The intention of having the participants walk in these different modes was to artificially introduce variation into the gait patterns by interfering with their normal walking. Consequently, the first goal of training the neural networks was to have them learn the differences between the normal walking patterns and the artificially disturbed walking patterns or the walk immediately after the UHR test. The second goal was to predict the number of heel-rise repetitions a participant could do. The UHR test is a feasible measure of the strength of the muscles that move the foot, and therefore a surrogate marker for the gait patterns. The results show that it is in principle possible to distinguish between the different walking modes.
Overall, the idiosyncratic analysis, in which the walking mode was classified after training the neural network intraindividually on the data of one person at a time, showed the best results, while the generalised predictions were only satisfactory for distinguishing between a normal and a closed-eyes walk. In the current study, the sensor floor data did not allow a reliable prediction of the number of repetitions in the UHR test.

5.2. Interpretation

As a first important result, we found that a neural network can distinguish between different walking modes, and that this works best with person-dependent analyses. For some persons, it worked very well (up to 96% accuracy), while it did not work at all for others (<50% accuracy, which is not better than guessing). We attribute this outcome to personal differences in walking and coordination: for some persons, walking with closed eyes or spelling words backwards while walking might simply not be much of an interference. Speculatively, such differences could stem, for example, from age, athleticism, fatigue, or the ability to multitask. This would also explain the promising but not perfect results of the generalised classification. If the differences between walking patterns are strongly person-dependent, the artificial neural network is presented with many similar input examples that carry different target labels, namely those from persons who are not challenged by walking with closed eyes or by dual-tasking. The generalised walking mode classification worked best for distinguishing between the closed-eyes and normal walk, and worse for distinguishing between the dual-task and normal walking modes. It seems that the closed-eyes mode introduces the greatest disturbance to gait coordination, resulting in the greatest deviation of the walking patterns from the normal walk. This is in line with previous work showing that visual impairments also affect the gait of young and healthy adults [7].

It was not possible for the artificial neural network to learn differences between the walks before and after the UHR test in the generalised classification, nor was it possible to predict the number of repetitions. This does not imply that performing the UHR test has no effect; rather, limitations of the sensor, sample or analysis might render the distinction impossible, for example because the differences are too subtle to be captured at the relatively low resolution of the floor sensor.

5.3. Limitations

In general, some limitations arise from the choice of sensor floor. For gait analysis, the GAITRite system, for example, has a higher resolution in space as well as in time. So, if one aims solely for the best performance on the classification and regression goals, another sensor might work better. However, the SensFloor system is suitable for other areas of application. As it is very robust, it is reasonable to use it as part of everyday medical routine. It was this prospect of actual usefulness in a clinical setting that led us to examine what information can be extracted from data generated by this kind of sensor. As participants do not really perceive the floor as a medical device or a piece of technology at all, the recording does not feel like an examination situation; we therefore expect a more natural gait than in other settings.

The processing scheme chosen for the data analysis introduces some limitations as well. Although we aimed for minimal loss of data by avoiding preprocessing steps as much as possible, resampling the floor sensor data onto a grid in the local view of the walking participant discretises the field positions and thereby introduces a small positional error. However, the resulting time series of vectors is a convenient input format for a wide range of machine learning algorithms. We chose the LSTM-based architecture because it fits the time series nature of the sensor data well.

5.4. Implications and Outlook

The actuation of the calf muscles is a key factor during the stance phase of human gait, and the heel-rise test is a common test to assess calf muscle endurance and strength. In a reliability study of the UHR test [56] with 40 healthy adults over 18 years of age and without current ankle injury or chronic ankle pain, a mean of 23 plantarflexion repetitions with a standard deviation of 13.3 repetitions was reported as clinical reference. In our cohort, the mean number of heel rises was slightly higher, but comparable, with 25.7 (SD = 7.8, right leg) and 24.3 (SD = 6.9, left leg). Thus, the UHR results of our cohort appear comparable to this clinical reference. Still, we analysed a young and healthy cohort with relatively high mean numbers of plantarflexion repetitions. It is not yet known whether there are no differences in gait parameters before and after the UHR test or whether these differences are too small for the sensor floor to detect. Further studies must evaluate whether this method could predict repetitions in a cohort of older adults, 65 years and above, with poorer functional abilities, lower muscle endurance of the M. triceps surae and more asymmetries in gait parameters. In addition, further research in a young healthy cohort is needed, using another test protocol such as calf muscle training over weeks or months, to enable the differentiation between pre- and post-test using SensFloor data. Finally, it could be worthwhile to examine whether gait patterns vary enough between participants to distinguish between individuals.

The recorded dataset also contains measurements from Inertial Measurement Units. This data has not yet been evaluated and is not part of this study. Other gait analysis studies [57] showed that IMU data contains a lot of information, especially about aspects that the floor sensor cannot cover, such as joint movements and angles. However, whenever the processing of IMU data involves integration, drift errors accumulate. This is not the case with SensFloor, which has a fixed global reference frame for its capacitance measurements. The sensor floor data is therefore a good complement to the IMUs, as it delivers measurements at absolute positions that do not drift over time. Working towards a sensor fusion approach that combines the best properties of both sensors appears very promising.
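The drift argument can be made concrete with a small numerical example: a constant accelerometer bias b, integrated twice, produces a position error of about ½·b·t², which grows quadratically with time. The sketch below uses naive Euler integration for a stationary sensor sampled at the 50 Hz IMU rate of this study; the bias value and the function name are hypothetical, and real IMU pipelines use more elaborate processing.

```python
def double_integrate(accelerations, dt):
    """Naive Euler double integration of 1D acceleration samples to position."""
    velocity = 0.0
    position = 0.0
    for a in accelerations:
        velocity += a * dt
        position += velocity * dt
    return position


# A stationary sensor whose accelerometer reports a small constant bias.
BIAS = 0.01        # m/s^2, hypothetical bias
RATE_HZ = 50       # sampling rate of the IMUs in this study
DURATION_S = 10.0  # seconds of integration
samples = [BIAS] * int(RATE_HZ * DURATION_S)
drift = double_integrate(samples, 1.0 / RATE_HZ)  # position error in metres
```

With a bias of only 0.01 m/s², the position estimate drifts by roughly 0.5 m after 10 s and roughly 2 m after 20 s, whereas a position measured by the floor has no such accumulation.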

6. Conclusions

We gained insights into people's walking patterns with a sensor floor that measures the electric capacitance on a discrete grid of triangular sensor fields over time. For the dataset, we recorded participants in different walking modes that were expected to induce changes in the walking patterns. It was shown that the sensor data generally contains enough information to detect walking challenges with a machine learning approach. We aimed for minimal loss of data in the processing chain by learning features directly from the raw data stream after only minimal geometric transformation and resampling. The described approach is promising because it can easily be transferred to other applications and goals in gait analysis using this sensor. A highly relevant goal for follow-up research concerns a cohort of elderly people, who are often prone to falls and in whom higher gait variance can be expected, as frailty is more prevalent. This age group could benefit greatly from better availability of gait analysis. The floor sensor is a viable gait pattern recording sensor that is suitable for everyday practical use and thereby opens up a wide variety of applications. Given further development of the proposed algorithms, the unobtrusiveness and ease of use of the sensor floor are a major advantage in practical settings, where it could meaningfully support physiotherapeutic diagnostics and revolutionise the assessment of gait patterns.

Acknowledgments

We thank our colleagues and students from the Institute of Health Sciences, Minettchen Herchenröder, Tabea Bendfeldt, Pia Wertz and Yuting Qin, for their great work in recruiting and recording participants. We also want to express our gratitude to our colleagues from the Institute of Medical Informatics, Adeel Muhammad Nisaar, Philip Gouverneur and Frédéric Li, for their great on-site technical support with the recording setup, and to Kerstin Lüdtke, Christl Lauterbach and Caroline Zygar-Hoffmann for helpful comments on the manuscript.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| APPS Lab | Assessment of Physiological and Psychological Signals Lab |
| IMU | Inertial Measurement Unit |
| ISM | Industrial, Scientific and Medical Band |
| LSTM | Long Short-Term Memory |
| LOOCV | Leave-One-Out Cross-Validation |
| PSU | Power Supply Unit |
| ReLU | Rectified Linear Unit |
| RNN | Recurrent Neural Network |
| RGB-D | Red, Green, Blue, Depth |
| ROS | Robot Operating System |
| SD | Standard Deviation |
| UHR | Unilateral Heel-Rise Test |

Author Contributions

Conceptualization, R.H., H.B., A.S. and M.G.; methodology, R.H., H.B. and M.G.; software, R.H.; validation, R.H. and H.B.; formal analysis, R.H.; investigation, R.H. and H.B.; resources, M.G.; data curation, R.H. and H.B.; writing—original draft preparation, R.H. and H.B.; writing—review and editing, A.S. and M.G.; visualization, R.H.; supervision, A.S. and M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Ethics Committee of University of Lübeck (protocol code 20-214 and date of approval 4 June 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study will be openly available in the future in osf.io at DOI 10.17605/OSF.IO/XMSU2 after further analyses have been conducted by the authors.

Conflicts of Interest

R.H. and A.S. are employees of the company SensProtect GmbH. SensProtect is active in research and development on the SensFloor sensor system. H.B. and M.G. declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Shumway-Cook A., Woollacott M.H. Motor Control: Translating Research into Clinical Practice. Lippincott Williams & Wilkins; Philadelphia, PA, USA: 2017. [Google Scholar]

- 2.Götz-Neumann K. Gehen Verstehen: Ganganalyse in der Physiotherapie. Georg Thieme Verlag; Stuttgart, Germany: 2006. [Google Scholar]

- 3.Hoffmann R., Lauterbach C., Techmer A., Conradt J., Steinhage A. Recognising Gait Patterns of People in Risk of Falling with a Multi-Layer Perceptron. In: Piętka E., Badura P., Kawa J., Wieclawek W., editors. Conference of Information Technologies in Biomedicine. Springer International Publishing; Cham, Switzerland: 2016. pp. 87–97. [DOI] [Google Scholar]

- 4.Beurskens R., Steinberg F., Gutmann F., Wolff W., Granacher U. Neural Correlates of Dual-Task Walking: Effects of Cognitive versus Motor Interference in Young Adults. Neural Plast. 2016;2016 doi: 10.1155/2016/8032180. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Kimura N., van Deursen R. The Effect of Visual Dual-Tasking Interference on Walking in Healthy Young Adults. Gait Posture. 2020;79:80–85. doi: 10.1016/j.gaitpost.2020.04.018. [DOI] [PubMed] [Google Scholar]

- 6.Helbostad J.L., Vereijken B., Hesseberg K., Sletvold O. Altered Vision Destabilizes Gait in Older Persons. Gait Posture. 2009;30:233–238. doi: 10.1016/j.gaitpost.2009.05.004. [DOI] [PubMed] [Google Scholar]

- 7.Kanzler C.M., Barth J., Klucken J., Eskofier B.M. Inertial Sensor Based Gait Analysis Discriminates Subjects with and without Visual Impairment Caused by Simulated Macular Degeneration; Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Orlando, FL, USA. 16–20 August 2016; pp. 4979–4982. [DOI] [PubMed] [Google Scholar]

- 8.McIsaac T.L., Lamberg E.M., Muratori L.M. Building a Framework for a Dual Task Taxonomy. BioMed Res. Int. 2015;2015:591475. doi: 10.1155/2015/591475. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bonetti L.V., Hassan S.A., Kasawara K.T., Reid W.D. The Effect of Mental Tracking Task on Spatiotemporal Gait Parameters in Healthy Younger and Middle- and Older Aged Participants during Dual Tasking. Exp. Brain Res. 2019;237:3123–3132. doi: 10.1007/s00221-019-05659-z. [DOI] [PubMed] [Google Scholar]

- 10.Hollman J.H., Childs K.B., McNeil M.L., Mueller A.C., Quilter C.M., Youdas J.W. Number of Strides Required for Reliable Measurements of Pace, Rhythm and Variability Parameters of Gait during Normal and Dual Task Walking in Older Individuals. Gait Posture. 2010;32:23–28. doi: 10.1016/j.gaitpost.2010.02.017. [DOI] [PubMed] [Google Scholar]

- 11.Hollman J.H., Kovash F.M., Kubik J.J., Linbo R.A. Age-Related Differences in Spatiotemporal Markers of Gait Stability during Dual Task Walking. Gait Posture. 2007;26:113–119. doi: 10.1016/j.gaitpost.2006.08.005. [DOI] [PubMed] [Google Scholar]

- 12.Bok S.K., Lee T.H., Lee S.S. The Effects of Changes of Ankle Strength and Range of Motion According to Aging on Balance. Ann. Rehabil. Med. 2013 doi: 10.5535/arm.2013.37.1.10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Hashish R., Samarawickrame S.D., Wang M.Y., Yu S.S.Y., Salem G.J. The Association between Unilateral Heel-Rise Performance with Static and Dynamic Balance in Community Dwelling Older Adults. Geriatr. Nurs. 2015;36:30–34. doi: 10.1016/j.gerinurse.2014.09.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Maritz C.A., Silbernagel K.G. A Prospective Cohort Study on the Effect of a Balance Training Program, Including Calf Muscle Strengthening, in Community-Dwelling Older Adults. J. Geriatr. Phys. Ther. 2016;39:125–131. doi: 10.1519/JPT.0000000000000059. [DOI] [PubMed] [Google Scholar]

- 15.Lunsford B.R., Perry J. The Standing Heel-Rise Test for Ankle Plantar Flexion: Criterion for Normal. Phys. Ther. 1995;75:694–698. doi: 10.1093/ptj/75.8.694. [DOI] [PubMed] [Google Scholar]

- 16.Pires I.M., Ponciano V., Garcia N.M., Zdravevski E. Analysis of the Results of Heel-Rise Test with Sensors: A Systematic Review. Electronics. 2020;9:1154. doi: 10.3390/electronics9071154. [DOI] [Google Scholar]

- 17.Jan M.H., Chai H.M., Lin Y.F., Lin J.C.H., Tsai L.Y., Ou Y.C., Lin D.H. Effects of Age and Sex on the Results of an Ankle Plantar-Flexor Manual Muscle Test. Phys. Ther. 2005;85:1078–1084. doi: 10.1093/ptj/85.10.1078. [DOI] [PubMed] [Google Scholar]

- 18.Marey E.J. La Méthode Graphique dans les Sciences Expérimentales et Particulièrement en Physiologie et en Médecine. G. Masson; Paris, France: 1878. [Google Scholar]

- 19.Marey E.J. Movement. Arno Press; New York, NY, USA: 1972. [Google Scholar]

- 20.Khan M.H., Farid M.S., Grzegorzek M. A Non-Linear View Transformations Model for Cross-View Gait Recognition. Neurocomputing. 2020;402:100–111. doi: 10.1016/j.neucom.2020.03.101. [DOI] [Google Scholar]

- 21.Rocha A.P., Choupina H., Fernandes J.M., Rosas M.J., Vaz R., Cunha J.P.S. Parkinson’s Disease Assessment Based on Gait Analysis Using an Innovative RGB-D Camera System; Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Chicago, IL, USA. 26–30 August 2014; pp. 3126–3129. [DOI] [PubMed] [Google Scholar]

- 22.Muñoz B., Castaño-Pino Y.J., Paredes J.D.A., Navarro A. Automated Gait Analysis Using a Kinect Camera and Wavelets; Proceedings of the 2018 IEEE 20th International Conference on E-Health Networking, Applications and Services (Healthcom); Ostrava, Czech Republic. 17–20 September 2018; pp. 1–5. [DOI] [Google Scholar]

- 23.Grobelny A., Behrens J.R., Mertens S., Otte K., Mansow-Model S., Krüger T., Gusho E., Bellmann-Strobl J., Paul F., Brandt A.U., et al. Maximum Walking Speed in Multiple Sclerosis Assessed with Visual Perceptive Computing. PLoS ONE. 2017;12:e0189281. doi: 10.1371/journal.pone.0189281. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Summa S., Tartarisco G., Favetta M., Buzachis A., Romano A., Bernava G.M., Sancesario A., Vasco G., Pioggia G., Petrarca M., et al. Validation of Low-Cost System for Gait Assessment in Children with Ataxia. Comput. Methods Progr. Biomed. 2020;196:105705. doi: 10.1016/j.cmpb.2020.105705. [DOI] [PubMed] [Google Scholar]

- 25.Ma Y., Mithraratne K., Wilson N.C., Wang X., Ma Y., Zhang Y. The Validity and Reliability of a Kinect V2-Based Gait Analysis System for Children with Cerebral Palsy. Sensors. 2019;19:1660. doi: 10.3390/s19071660. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.McDonough A.L., Batavia M., Chen F.C., Kwon S., Ziai J. The Validity and Reliability of the GAITRite System’s Measurements: A Preliminary Evaluation. Arch. Phys. Med. Rehabil. 2001;82:419–425. doi: 10.1053/apmr.2001.19778. [DOI] [PubMed] [Google Scholar]

- 27.Webster K.E., Wittwer J.E., Feller J.A. Validity of the GAITRite Walkway System for the Measurement of Averaged and Individual Step Parameters of Gait. Gait Posture. 2005;22:317–321. doi: 10.1016/j.gaitpost.2004.10.005. [DOI] [PubMed] [Google Scholar]

- 28.van Uden C.J., Besser M.P. Test-Retest Reliability of Temporal and Spatial Gait Characteristics Measured with an Instrumented Walkway System (GAITRite) BMC Musculoskelet. Disord. 2004;5:13. doi: 10.1186/1471-2474-5-13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Menz H.B., Latt M.D., Tiedemann A., Mun San Kwan M., Lord S.R. Reliability of the GAITRite Walkway System for the Quantification of Temporo-Spatial Parameters of Gait in Young and Older People. Gait Posture. 2004;20:20–25. doi: 10.1016/S0966-6362(03)00068-7. [DOI] [PubMed] [Google Scholar]

- 30.Bilney B., Morris M., Webster K. Concurrent Related Validity of the GAITRite Walkway System for Quantification of the Spatial and Temporal Parameters of Gait. Gait Posture. 2003;17:68–74. doi: 10.1016/S0966-6362(02)00053-X.

- 31.Tanaka O., Ryu T., Hayashida A., Moshnyaga V.G., Hashimoto K. A Smart Carpet Design for Monitoring People with Dementia. In: Selvaraj H., Zydek D., Chmaj G., editors. Progress in Systems Engineering. Springer International Publishing; Cham, Switzerland: 2015. pp. 653–659. Advances in Intelligent Systems and Computing.

- 32.Serra R., Knittel D., Di Croce P., Peres R. Activity Recognition With Smart Polymer Floor Sensor: Application to Human Footstep Recognition. IEEE Sens. J. 2016;16:5757–5775. doi: 10.1109/JSEN.2016.2554360.

- 33.Rimminen H., Linnavuo M., Sepponen R. Human Tracking Using Near Field Imaging; Proceedings of the 2008 Second International Conference on Pervasive Computing Technologies for Healthcare; Tampere, Finland. 30 January–1 February 2008; pp. 148–151.

- 34.Ropponen A., Rimminen H., Sepponen R. Robust System for Indoor Localisation and Identification for the Health Care Environment. Wirel. Pers. Commun. 2011;59:57–71. doi: 10.1007/s11277-010-0189-z.

- 35.Beevi F.H.A., Wagner S., Hallerstede S., Pedersen C.F. Data Quality Oriented Taxonomy of Ambient Assisted Living Systems; Proceedings of the IET International Conference on Technologies for Active and Assisted Living (TechAAL); London, UK. 5 November 2015; p. 6.

- 36.Bagarotti R., Zini E.M., Salvi E., Sacchi L., Quaglini S., Lanzola G. An Algorithm for Estimating Gait Parameters Through a Commercial Sensorized Carpet; Proceedings of the 2018 IEEE 4th International Forum on Research and Technology for Society and Industry (RTSI); Palermo, Italy. 10–13 September 2018; Palermo, Italy: IEEE; 2018. pp. 1–6.

- 37.Steinhage A., Lauterbach C. Handbook of Research on Ambient Intelligence and Smart Environments: Trends and Perspectives. Information Science Reference; Hershey, PA, USA: 2011. SensFloor and NaviFloor: Large-Area Sensor Systems Beneath Your Feet.

- 38.Lauterbach C., Steinhage A., Techmer A. A Large-Area Sensor System Underneath the Floor for Ambient Assisted Living Applications. In: Mukhopadhyay S.C., Postolache O.A., editors. Pervasive and Mobile Sensing and Computing for Healthcare: Technological and Social Issues. Springer; Berlin/Heidelberg, Germany: 2013. pp. 69–87. Smart Sensors, Measurement and Instrumentation.

- 39.Sousa M., Techmer A., Steinhage A., Lauterbach C., Lukowicz P. Human Tracking and Identification Using a Sensitive Floor and Wearable Accelerometers; Proceedings of the 2013 IEEE International Conference on Pervasive Computing and Communications (PerCom); San Diego, CA, USA. 18–22 March 2013; pp. 166–171.

- 40.Steinhage A., Lauterbach C. SensFloor and NaviFloor: Robotics Applications for a Large-Area Sensor System. Int. J. Intell. Mechatron. Robot. (IJIMR) 2013;3. doi: 10.4018/ijimr.2013070104.

- 41.Hoffmann R., Steinhage A., Lauterbach C. C5.4—Increasing the Reliability of Applications in AAL by Distinguishing Moving Persons from Pets by Means of a Sensor Floor. Proc. Sens. 2015:436–440. doi: 10.5162/sensor/C5.4.

- 42.Lauterbach C., Steinhage A., Techmer A., Sousa M., Hoffmann R. AAL Functions for Home Care and Security: A Sensor Floor Supports Residents and Carers. Curr. Dir. Biomed. Eng. 2018;4:127–129. doi: 10.1515/cdbme-2018-0032.

- 43.Hoffmann R., Lauterbach C., Conradt J., Steinhage A. Estimating a Person’s Age from Walking over a Sensor Floor. Comput. Biol. Med. 2018;95:271–276. doi: 10.1016/j.compbiomed.2017.11.003.

- 44.Steinhage A., Lauterbach C., Techmer A., Hoffmann R., Sousa M. Handbook of Research on Investigations in Artificial Life Research and Development. Engineering Science Reference; Hershey, PA, USA: 2018. Innovative Features and Applications Provided by a Large-Area Sensor Floor.

- 45.Rumelhart D.E., Hinton G.E., Williams R.J. Learning Representations by Back-Propagating Errors. Nature. 1986;323:533–536. doi: 10.1038/323533a0.

- 46.Hochreiter S., Schmidhuber J. Long Short-Term Memory. Neural Comput. 1997;9:1735–1780. doi: 10.1162/neco.1997.9.8.1735.

- 47.Lipton Z.C., Berkowitz J., Elkan C. A Critical Review of Recurrent Neural Networks for Sequence Learning. arXiv. 2015:1506.00019.

- 48.Sak H., Senior A., Rao K., Beaufays F. Fast and Accurate Recurrent Neural Network Acoustic Models for Speech Recognition. arXiv. 2015:1507.06947.

- 49.Sak H., Senior A., Beaufays F. Long Short-Term Memory Based Recurrent Neural Network Architectures for Large Vocabulary Speech Recognition. arXiv. 2014:1402.1128.

- 50.Graves A., Mohamed A.R., Hinton G. Speech Recognition with Deep Recurrent Neural Networks. arXiv. 2013:1303.5778.

- 51.Graves A., Schmidhuber J. Offline Handwriting Recognition with Multidimensional Recurrent Neural Networks. In: Koller D., Schuurmans D., Bengio Y., Bottou L., editors. Advances in Neural Information Processing Systems 21. Curran Associates Inc.; Red Hook, NY, USA: 2009. pp. 545–552.

- 52.Rosenblatt F. Principles of Neurodynamics: Perceptrons and the Theory of Brain Mechanisms. Am. J. Psychol. 1963;76:705–707. doi: 10.2307/1419730.

- 53.Fukumizu K. Active Learning in Multilayer Perceptrons; Proceedings of the Advances in Neural Information Processing Systems; Denver, CO, USA. 27–30 November 1995; p. 7.

- 54.Quigley M., Conley K., Gerkey B., Faust J., Foote T., Leibs J., Wheeler R., Ng A.Y. ROS: An Open-Source Robot Operating System; Proceedings of the ICRA Workshop on Open Source Software; Kobe, Japan. 12–17 May 2009; p. 5.

- 55.Abadi M., Barham P., Chen J., Chen Z., Davis A., Dean J., Devin M., Ghemawat S., Irving G., Isard M., et al. TensorFlow: A System for Large-Scale Machine Learning. arXiv. 2016:1605.08695.

- 56.Sman A.D., Hiller C.E., Imer A., Ocsing A., Burns J., Refshauge K.M. Design and Reliability of a Novel Heel Rise Test Measuring Device for Plantarflexion Endurance. BioMed Res. Int. 2014;2014:391646. doi: 10.1155/2014/391646.

- 57.Washabaugh E.P., Kalyanaraman T., Adamczyk P.G., Claflin E.S., Krishnan C. Validity and Repeatability of Inertial Measurement Units for Measuring Gait Parameters. Gait Posture. 2017;55:87–93. doi: 10.1016/j.gaitpost.2017.04.013.

Data Availability Statement

The data presented in this study will be made openly available on OSF (osf.io) at DOI 10.17605/OSF.IO/XMSU2 once the authors have completed further analyses.