Abstract

Background: Systems Medicine is a novel approach to medicine: an interdisciplinary field that considers the human body as a system, composed of multiple parts and of complex relationships at multiple levels, and further integrated into an environment. Exploring Systems Medicine implies understanding and combining concepts coming from diametrically different fields, including medicine, biology, statistics, modeling and simulation, and data science. Such heterogeneity leads to semantic issues, which may slow down implementation and fruitful interaction between these highly diverse fields.

Methods: In this review, we collect and explain more than 100 terms related to Systems Medicine. These include both modeling and data science terms and basic systems medicine terms, along with some synthetic definitions, examples of applications, and lists of relevant references.

Results: This glossary aims at being a first aid kit for the Systems Medicine researcher facing an unfamiliar term, where he/she can get a first understanding of it and, more importantly, examples and references for digging into the topic.

Keywords: systems medicine, multiscale modeling, multiscale data science

Introduction

Although death has always been the end of every human's life, mankind has always tried to delay it as much as possible. It is, thus, not surprising that one of the most ancient forms of science, if not the first, has been medicine, starting with documents going back to ancient Egypt and Greece.1 In the previous century, technical advances (from vaccines to genome sequencing) brought about a revolution in medicine and allowed a substantial reduction in mortality rates. However, this trend is now experiencing diminishing returns: New drugs are nowadays being developed less frequently and at a higher cost; they are beneficial to smaller subsets of the population, and consequently have less impact on life expectancy. In parallel, mankind has recently witnessed an Information Technology (IT) revolution, in which data are gathered and processed at unprecedented rates, giving birth to applications that would have appeared as science fiction as recently as 20 years ago. Following the theory of Kondratiev waves,2 which postulates the existence of waves of 40–60 years with high sectoral growth, could it be that the next wave will have medicine at its focus, specifically through the merging of both revolutions?

Such merging is actually taking the form of the so-called Systems Medicine, an interdisciplinary field of study that looks at the human body as a system, composed of interacting parts, and further integrated into an environment.3,4 It considers that these complex relationships exist on multiple levels, and that they have to be understood in light of a patient's genomics, behavior, and environment. The analysis of a disease then starts with real data, coming from a large number of patients (to ensure that the natural variability is taken into account) and covering all aspects of them, from genetics to the environment. Machine-learning and mathematical models are then developed, aimed at finding the most efficient way of disrupting the disease in a specific patient.

Even after this oversimplified description, it is clear that systems medicine requires skills and knowledge not considered in standard medical curricula, or alternatively the collaboration between researchers of different backgrounds. The revolutionary idea behind systems medicine is, thus, responsible for its main drawback: the need for understanding and combining concepts coming from diametrically different fields, including statistics, modeling and simulation, and data science.5 The researcher wanting to enter this world will face an additional problem: Although a large number of books and papers can be found on, for example, data-mining concepts, these are usually not written with a medical practitioner in mind. Not just the required background, but even the basic terminology can become a major barrier.

This review addresses the semantic issues this implies, which may slow down implementation and fruitful interaction between these highly diverse fields, by providing the first version of the Systems Medicine Dictionary.* Specifically, the practitioner coming from medicine will find in it a large number of modeling and data science terms, along with some synthetic (although comprehensive) definitions and a list of relevant references. Similarly, a researcher with a background in modeling and data will here find an explanation of the basic systems medicine terms. It is worth noting that these definitions are not exhaustive, as both their selection and the corresponding content have been guided by the personal view of the authors. In addition, some terms described here represent fields of research on their own, whose characterization can hardly be contained in a monographic book. This work, thus, represents the first aid kit for the systems medicine researchers facing an unfamiliar term. They will here get a first understanding of it; and, more importantly, examples and references for digging into the topic.

Science, in general, and medicine, in particular, can benefit from approaches that are different from what was done earlier, as these can have multiplicative effects on knowledge and understanding in general; this may lead to new insights and ideas for new hypotheses, and eventually to breakthroughs unattainable via the old and tested ways of thinking and acting. In turn, this requires crossing discipline boundaries and provides new angles on old information. We expect this glossary to be especially useful to the younger readership, for example, PhD students and early career researchers, as they are in a better position to break away from old conventions while significantly boosting their careers.

Concepts from Systems Medicine, Modeling, and Data Science

All terms are included here in alphabetical order, and they are further listed in Table 1. Table 2 also reports a list of the acronyms that appear in the text, and the corresponding meaning. Finally, underlined words, for example, agent-based modeling (ABM), refer to terms that are defined here.

Table 1.

List of the terms described here

| | | |
| --- | --- | --- |
| Agent-based modeling | Artificial neural networks | Bayesian filtering |
| Bayesian networks | Bayesian smoothing | Bayesian statistics |
| Biofluid mechanics | Bioheat transfer | Biological networks |
| Biomaterials | Biomechanics | Cellular automata |
| Clinical decision support systems | Clustering | Complex networks |
| Complex systems | Computational drug repurposing | Constraints |
| Context awareness systems | Correlation networks | CRISP-DM |
| Cross-validation | Data analysis software | Data fusion and data integration |
| Data mining | Decision Tree | Decision support systems |
| Deep learning | Digital Health | Digital Twin |
| Dissipative particle dynamics | Erdős–Rényi model | Exposome |
| FAIR principles | Feature selection | Finite element method |
| Finite volume method | Frequentist statistics | Functional networks |
| Gene set enrichment analysis | Granger causality | Graph embedding |
| Hidden conditional random fields | Imputation | In silico modeling |
| Integrative analysis | Interactome | Internet of things |
| Lattice Boltzmann method | Machine learning | Mediation analysis |
| Medical informatics | metaboAnalyst | Metabolomics |
| Model robustness | Model verification and validation | Morphometric similarity networks |
| Multiphysics systems | Multilayer networks | Multiscale biomolecular simulations |
| Multiscale modeling | Network analysis software | networkAnalyst |
| Network medicine | Null models | Nvidia Clara |
| Object-oriented modeling | Ontologies | Parameter estimation |
| Parameter identifiability | Parameter sensitivity analysis and uncertainty quantification | Permutation test |
| Phase transition | Physiome | Precision medicine |
| Probabilistic risk analysis | Quantitative systems pharmacology | Random forest |
| Random graphs | Scale-free networks | Simulated annealing |
| Small-world network | Smoothed-particle hydrodynamics | Solid–fluid interaction |
| Statistical bioinformatics | Statistical networks | Support vector machine |
| Surrogate model | Systems biology | Systems bioinformatics |
| Systems dynamics | Systems engineering | Systems medicine |
| System of systems | Standards | Structural covariance networks |
| Time-evolving networks | Time-scale separation | Variation partitioning |
| Virtual physiological human | | |

CRISP-DM, Cross-Industry Standard Process for Data Mining; FAIR, Findability, Accessibility, Interoperability, and Reusability.

Table 2.

List and explanation of the acronyms used throughout the review

| Acronym | Meaning |
| --- | --- |
| 2SSP | Two-stage stochastic programming |
| AAL | Ambient-assisted living |
| ABM | Agent-based modeling |
| AI | Artificial intelligence |
| ANN | Artificial neural networks |
| BI | Business intelligence |
| BIC | Bayesian information criterion |
| BPPV | Benign paroxysmal positional vertigo |
| CA | Cellular automata |
| CDSS | Clinical decision support system |
| CFD | Computational fluid dynamics |
| DDA | Drug–disease association |
| DDI | Drug–drug interaction |
| DPD | Dissipative particle dynamics |
| DSS | Decision support system |
| DT | Decision tree |
| EEG | Electro-encephalography |
| FBA | Flux balance analysis |
| FEA | Finite element analysis |
| FEM | Finite element method |
| fMRI | Functional magnetic resonance imaging |
| FVM | Finite volume method |
| GCN | Gene co-expression network |
| GRN | Gene-regulatory network |
| GSEA | Gene set enrichment analysis |
| HCRF | Hidden conditional random fields |
| HMS | Health care monitoring system |
| HSH | Health smart homes |
| ICT | Information and communication technologies |
| IoMT | Internet of medical things |
| IoT | Internet of things |
| IT | Information technology |
| LB | Lattice Boltzmann |
| LDL | Low-density lipoprotein |
| MEG | Magneto-encephalography |
| MFA | Metabolic flux analysis |
| MICE | Multiple imputation by chained equations |
| MMS | Multiscale modeling and simulation |
| MSC | Multiscale computing |
| NLP | Natural language processing |
| PaaS | Platform as a service |
| PCA | Principal-component analysis |
| PIN | Protein interaction network |
| PK/PD | Pharmacokinetic/pharmacodynamic |
| PPI | Protein–protein interaction |
| PRA | Probabilistic risk analysis |
| QM/MM | Quantum mechanical and molecular mechanical |
| QSP | Quantitative systems pharmacology |
| RF | Random forest |
| RFE | Recursive feature elimination |
| RSM | Response surface models |
| SA | Simulated annealing |
| SDK | Software development kit |
| SPH | Smoothed-particle hydrodynamics |
| TF | Transcription factor |
| t-SNE | t-Distributed stochastic neighbor embedding |
| UPR | Unfolded protein response |

Agent-based modeling

ABM (also known as individual-based modeling, multi-agent systems, and multi-agent autonomous systems) is a modeling/simulation paradigm that is especially suited for studying complex systems, that is, systems composed of a large number of heterogeneous interacting entities, each having many degrees of freedom. Broadly defined, this discrete mathematical modeling paradigm represents a physical or biological system through entities (called agents) with defined properties and behavioral rules, and then simulates them in a computer to reproduce the real phenomena and to perform what-if analyses.6 Agents thus have to be understood as autonomous entities, each with an internal state representing its knowledge about the environment, and rules (or algorithms) to interact with other agents. This broad definition can encompass anything from simple particles to autonomous software with learning capabilities. To illustrate, agents range from “helper” agents for web retrieval7,8 and robotic agents that explore inhospitable environments,9 up to lymphocytes in a simulation of an immune system reaction.10–12 Roughly speaking, an entity is an “agent” if it is distinguishable from its environment by some kind of spatial, temporal, or functional attribute: An agent must be identifiable. In addition, agents can be identified on the basis of four basic properties: autonomy, that is, the behavior of each agent is not guided by rules defined at a higher tier; social ability, that is, their capacity to interact with other agents; reactivity, in that they react to perceived changes in the environment; and pro-activeness, that is, the ability to take the initiative. Moreover, it is conceptually important to define what the agent “environment” in an ABM is. This can be implicitly embedded in the behavioral rules or be explicitly represented as a different “modeled object” with a well-defined set of characteristics that influence the agent's behavior.

An ABM simulation may start from simple agents, locally interacting with simple rules of behavior, responding to perceived environmental cues and trying to achieve a local goal. However, the simplicity of the composing elements does not imply simplicity of the overall dynamics. From this simple configuration, a synergy may emerge that leads to a higher-level whole with much more intricate behavior than the component agents (holism, meaning all, entire, total).
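As an illustration of simple agents with local rules, the following minimal sketch simulates a contagion among agents moving on a ring of cells. Everything in it (the `Agent` class, the ring topology, the parameter values, and the absence of recovery) is an assumption made for the example, not a model taken from the cited literature.

```python
import random


class Agent:
    """One individual: a position on a ring of cells and an infection state."""

    def __init__(self, position, infected=False):
        self.position = position
        self.infected = infected


def step(agents, n_cells, p_transmit, rng):
    # Rule 1 (autonomy): each agent moves one cell left, right, or stays put.
    for agent in agents:
        agent.position = (agent.position + rng.choice((-1, 0, 1))) % n_cells
    # Rule 2 (local interaction): a susceptible agent sharing a cell with an
    # infected agent becomes infected with probability p_transmit.
    infected_cells = {a.position for a in agents if a.infected}
    for agent in agents:
        if not agent.infected and agent.position in infected_cells:
            if rng.random() < p_transmit:
                agent.infected = True


def run(n_agents=200, n_cells=50, n_steps=100, p_transmit=0.3, seed=1):
    rng = random.Random(seed)
    agents = [Agent(rng.randrange(n_cells)) for _ in range(n_agents)]
    agents[0].infected = True  # a single initial case
    history = []
    for _ in range(n_steps):
        step(agents, n_cells, p_transmit, rng)
        history.append(sum(a.infected for a in agents))
    return history


history = run()  # number of infected agents after each step
```

The epidemic curve stored in `history` is not written into any rule; it arises from the repeated local interactions, which is the sense in which the global dynamics are emergent.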

Although the first examples of agent-based models were developed in the late 1940s, only the advent of computers could really show their modeling power. Early examples include the von Neumann machine, a theoretical machine capable of reproduction,13 that is, of producing an identical copy of itself by following a set of instructions. This idea was then improved by Ulam,14 who suggested that the machines be built on paper, as collections of cells on a grid. This inspired von Neumann to create the first of the models later termed cellular automata (CA). Building on top of these, John Conway constructed the well-known “Game of Life,” a simple set of rules that allow evolving a virtual world in the form of a two-dimensional checkerboard, and which has become a paradigmatic example of the emergence of order in nature. How do systems self-organize and spontaneously achieve a higher-ordered state? These and other questions were addressed in depth at the first workshop on Artificial Life (ALife), held in the late 1980s in Santa Fe. This workshop shaped the ALife field of research,15 in which agent-based models are the main form of modeling and simulation.
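To make the “Game of Life” concrete, the sketch below implements one generation using the standard rules (a dead cell with exactly three live neighbors becomes alive; a live cell with two or three live neighbors survives). The set-based representation and the function name `life_step` are choices made for this example only.

```python
from collections import Counter


def life_step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (row, col) coordinates of live cells on an
    unbounded two-dimensional grid.
    """
    # Count, for every cell adjacent to a live cell, its live neighbours.
    neighbour_counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth with exactly 3 neighbours; survival with 2 or 3.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# A "blinker": three cells in a row that oscillate with period two.
blinker = {(1, 0), (1, 1), (1, 2)}
after_one = life_step(blinker)    # the row becomes a column
after_two = life_step(after_one)  # and returns to the original row
```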

ABM has proved very successful in theoretical biology. In this research domain, ABM is emerging as the modeling paradigm best able to accommodate the need to represent more than one level of space-time description, thus fitting the multiscale specification. Beyond the aforementioned works on the immune system, examples include cancer modeling16,17 and epidemic prediction.18,19 For further discussions and examples, the reader may refer to An et al.20

Artificial neural networks

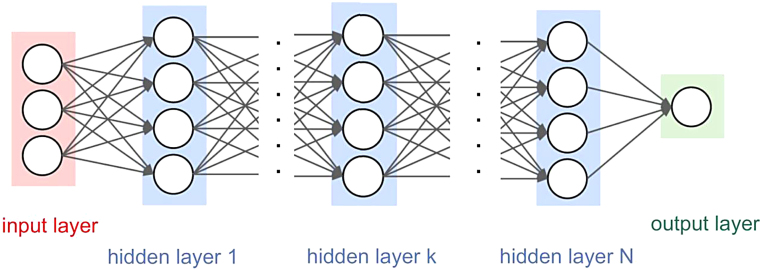

Artificial neural networks (ANN) are inspired by the neural networks that exist in mammalian brains.21 They represent a programming paradigm that helps a computer to process complex information and to learn from observational data. The network itself consists of connected units or nodes called artificial neurons (based on neurons in a biological brain) that are organized in layers. The first layer is called the input layer and is connected to the input signals. The input layer is followed by one or more hidden layers, all the way to the output layer, which is connected to the output signals. Analogous to the synapses in a biological brain, signals are transmitted from one neuron to another. The output of an artificial neuron is computed by applying a nonlinear function (the activation function) to the weighted sum of its inputs plus a bias. Weights increase or decrease the strength of the signal at a connection, and biases act as thresholds that shift the point at which the activation function is triggered; both are adjusted during the learning process. Mathematically, the output of a neuron with inputs $x_i$, weights $w_i$, bias $b$, and activation function $f$ can be represented as $y = f\left(\sum_i w_i x_i + b\right)$ (Fig. 1):

FIG. 1.

Graphical representation of ANN. ANN, artificial neural network.
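The layered computation described in this section can be sketched in a few lines. The example below is a minimal illustration, assuming a sigmoid activation function and arbitrary, hand-picked weights; the function names and the network layout are hypothetical.

```python
import math


def sigmoid(z):
    """A common nonlinear activation function (an assumption of this sketch)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Output of one artificial neuron: activation(weighted sum + bias)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def forward(x, network):
    """Propagate x through the network, one layer after another."""
    for weight_rows, biases in network:
        x = layer(x, weight_rows, biases)
    return x


# Two inputs -> hidden layer with two neurons -> output layer with one neuron.
network = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.2]),                    # output layer
]
output = forward([1.0, 2.0], network)
```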

For an ANN to learn from the provided data, it needs a large amount of information to be used as a training set. During the training period, the ANN's output is compared with the human-provided description of what should be observed (called the target). If they are the same, the weights are retained; in case of an incorrect classification, the weights are adjusted.22 In the end, an unknown signal (not used in the training set) is used as the input, and we expect the network to correctly predict the output (this ability is called generalization). As an example, for classifying images as containing a dog or a cat, the training set would be thousands of images already labeled as dog or cat images. After the training, the ANN should be able to classify future images based on the trained model.
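The compare-and-adjust loop can be illustrated with the smallest possible network: a single sigmoid neuron trained by gradient descent. This is a sketch under stated assumptions (the toy logical-OR dataset, the learning rate, and the number of epochs are all arbitrary choices), and real applications rely on dedicated libraries and far larger training sets.

```python
import math


def predict(x, w, b):
    """Output of a single sigmoid neuron."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, targets, lr=0.5, epochs=2000):
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            # Difference between the output and the target; for a sigmoid
            # neuron with cross-entropy loss this is the gradient w.r.t. z.
            error = predict(x, w, b) - t
            # Adjust weights and bias in the direction that reduces the error.
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
            b -= lr * error
    return w, b


# Toy training set: the logical OR of two binary inputs.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 1, 1, 1]
w, b = train(samples, targets)
labels = [round(predict(x, w, b)) for x in samples]
```

Because all four possible inputs are used for training, this toy example shows only the weight-adjustment mechanism; assessing generalization would require inputs held out from the training set.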

Although ANNs were originally aimed at solving specific biology problems, over time their application extended to a wide spectrum of tasks, including systems medicine through genomics, drug repurposing, or personalized medicine. Not surprisingly, many reviews are available. For instance, Awwalu et al. investigated the adequacy of using ANN, among other artificial intelligence (AI) algorithms, in solving personalized medicine and precision medicine problems.23 Ching et al. developed an ANN framework called Cox-nnet to predict patient prognosis from high-throughput transcriptomics data.24 Bica et al. introduced a novel neural network architecture for exploring and integrating modalities in omics datasets, especially in cases where a limited number of training examples was available.25 Deep neural networks have also been used to learn an embedding that substantially denoises expression data, making replicates of the same compound more similar.26 Donner et al. used ANNs to identify drugs with shared therapeutic and biological targets, even for compounds with structural dissimilarity, revealing functional relationships between compounds and taking a step toward drug repurposing based on expression data.26

Bayesian filtering

Bayesian filtering is a class of methods that allow estimating the current state, that is, the value of the variable(s) of interest, based on noisy measurements of the current and previous states. For instance, the spread of infectious diseases can be modeled with the help of Bayesian filters, where the time-varying variables are, for example, estimates of the number of susceptible, infected, recovered, and dead individuals at the current and some previous time points.27 For more information, see Särkkä.28
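The best-known Bayesian filter for linear models with Gaussian noise is the Kalman filter. The sketch below is a minimal scalar version, assuming that the hidden state follows a random walk and is observed directly with additive noise; the function name, the model, and the numerical values are illustrative assumptions.

```python
def kalman_filter_1d(measurements, q, r, m0=0.0, p0=1.0):
    """Scalar Kalman filter for a random-walk state observed with noise.

    q: process-noise variance, r: measurement-noise variance,
    m0, p0: mean and variance of the prior belief about the state.
    """
    m, p = m0, p0
    means, variances = [], []
    for y in measurements:
        # Prediction: the state is assumed to follow x_k = x_{k-1} + noise,
        # so the mean is unchanged and the uncertainty grows by q.
        p = p + q
        # Update: blend the prediction with the new measurement y.
        k = p / (p + r)        # Kalman gain, between 0 and 1
        m = m + k * (y - m)
        p = (1.0 - k) * p
        means.append(m)
        variances.append(p)
    return means, variances


measurements = [1.2, 0.9, 1.1, 1.0, 0.8, 1.05]
means, variances = kalman_filter_1d(measurements, q=0.01, r=0.25)
```

Each entry of `means` uses only the measurements available up to that time point, which is what distinguishes filtering from smoothing.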

Bayesian networks

Bayesian networks (also known as Bayes networks, belief networks, Bayes/Bayesian models, and probabilistic directed acyclic graphical models) are a type of directed graphical model (i.e., a graph expressing the conditional dependencies between variables) that combines graph theory and probability theory (see also the Bayesian Statistics section). They provide a formalism designed to address problems involving uncertainty and complexity. The Bayesian network approach can be seen as both a statistical and an AI-like knowledge-representation formalism. It is a useful tool for describing mechanisms involving stochasticity, cohort heterogeneity, and knowledge gaps, which are common features of medical problems, and has been utilized for diagnosis, treatment selection, and prognosis29 as well as for analyzing probabilistic cause–effect relationships (i.e., estimating the likelihood that a set of factors contributes to an observation, e.g., the relationship between symptoms and potential underlying mechanisms). Bayesian networks are constructed as directed acyclic graphs, where nodes represent unique variables that have a finite set of mutually exclusive states, whereas edges represent conditional dependence and the absence of edges conditional independence.30 For each variable $A$ with parents $B_1, \ldots, B_n$, there is a conditional probability table $P(A \mid B_1, \ldots, B_n)$.30 Importantly, Bayesian networks satisfy the local Markov property, meaning that each node is conditionally independent of its nondescendants given its parents. This characteristic permits a simplification of joint distributions within the model, allowing for efficient computation. In the simplest approach, a Bayesian network is specified by using expert knowledge; in the case of complex interactions, the network structure and parameters need to be learned from data.
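The simplification of the joint distribution that follows from the local Markov property can be written explicitly. In the notation assumed here, $X_1, \ldots, X_n$ are the variables of the network and $\mathrm{pa}(X_i)$ denotes the parents of $X_i$ in the graph; the three-variable case is a hypothetical network in which a disease $D$ is the only parent of a symptom $S$ and of a test result $T$.

```latex
P(X_1, \ldots, X_n) = \prod_{i=1}^{n} P\bigl(X_i \mid \mathrm{pa}(X_i)\bigr),
\qquad \text{e.g.} \qquad
P(D, S, T) = P(D)\, P(S \mid D)\, P(T \mid D).
```

For binary variables, the factorized form of this small example needs five parameters (one for $D$, two each for $S$ and $T$) instead of the seven required by an unrestricted joint table, and the saving grows rapidly with the number of variables.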

Inference and learning in Bayesian networks

Given probability tables of the variables in a Bayesian network and conditional independencies, joint probability distributions can be calculated and utilized to infer information within the network and for structural learning. This approach can be used for different probabilistic inference methods, for example, for estimating the distribution of subsets of unobserved variables given observed variables (so-called evidence variables). Further, Bayesian networks can be utilized to express causal relationships and combine domain knowledge with data, and, importantly, can thus be used for probabilistic parameter estimation.
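As a minimal sketch of inference from evidence variables, the example below computes a posterior probability by enumerating the joint distribution of a three-node network. The network (a disease that influences a symptom and a test result) and all probability values are invented for illustration; exhaustive enumeration is feasible only for very small networks.

```python
from itertools import product

# Hypothetical network: disease -> symptom, disease -> test.
p_disease = {True: 0.01, False: 0.99}
p_symptom_given_disease = {True: 0.90, False: 0.10}  # P(symptom=True | disease)
p_test_given_disease = {True: 0.95, False: 0.05}     # P(test=True | disease)


def bernoulli(p_true, value):
    """Probability of `value` for a binary variable with P(True) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(disease, symptom, test):
    """Joint probability as the product of the conditional probability tables."""
    return (p_disease[disease]
            * bernoulli(p_symptom_given_disease[disease], symptom)
            * bernoulli(p_test_given_disease[disease], test))


def posterior_disease(evidence):
    """P(disease=True | evidence), summing the joint over unobserved variables."""
    totals = {True: 0.0, False: 0.0}
    for disease, symptom, test in product((True, False), repeat=3):
        assignment = {"symptom": symptom, "test": test}
        if all(assignment[name] == value for name, value in evidence.items()):
            totals[disease] += joint(disease, symptom, test)
    return totals[True] / (totals[True] + totals[False])


p_symptom_only = posterior_disease({"symptom": True})
p_both = posterior_disease({"symptom": True, "test": True})
```

With these invented numbers, observing the symptom alone raises the probability of disease from 1% to about 8%, and a positive test in addition raises it to about 63%.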

Examples of the use of Bayesian networks in medicine include the diagnosis and prediction of disease trajectory,31–33 health care planning,34,35 and molecular data analysis.36 Although Bayesian networks are a popular and successful option for modeling in the medical domain, they should be used with caution in complex problems with multiple feedback loops and closed-loop conditions.

Most relevant limitations

Bayesian networks commonly rely on prior knowledge/belief for construction and inference; thus, the quality and usefulness of a respective network is directly dependent on the usefulness and reliability of this prior knowledge. In the case of expert-constructed networks, it may further be challenging to translate this knowledge into probability distributions. Bayesian networks are constructed as acyclic graphs and thus do not support the implementation of feedback-loops,37 although this may be addressed by using dynamic Bayesian networks.38 Bayesian networks have limited ability to deal with continuous variables, a limitation most commonly addressed by discretizing these variables, which, in turn, has tradeoffs.39 Lastly, Bayesian learning and inference can become very computationally expensive, to the point that a network becomes impossible to compute and the search space needs to be reduced by using different heuristics.40,41

Bayesian smoothing

Bayesian smoothing is a class of methods for reconstructing previous state(s), given noisy measurements of the current and previous states. Brain imaging is an example of an area that can take advantage of Bayesian filters and smoothers relying on sensor measurements of different values.28
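One widely used smoother for linear Gaussian models is the Rauch–Tung–Striebel (RTS) smoother, which runs a Kalman filter forward in time and then a correction pass backward. The sketch below is a scalar version, assuming a random-walk state observed with additive noise; the function name, the model, and the numerical values are illustrative assumptions.

```python
def rts_smoother_1d(measurements, q, r, m0=0.0, p0=1.0):
    """Kalman filter followed by a Rauch-Tung-Striebel backward pass.

    Model assumed: x_k = x_{k-1} + process noise (variance q),
                   y_k = x_k + measurement noise (variance r).
    """
    # Forward pass: store predicted and filtered means and variances.
    m, p = m0, p0
    pred_m, pred_p, filt_m, filt_p = [], [], [], []
    for y in measurements:
        m_pred, p_pred = m, p + q
        k = p_pred / (p_pred + r)
        m = m_pred + k * (y - m_pred)
        p = (1.0 - k) * p_pred
        pred_m.append(m_pred)
        pred_p.append(p_pred)
        filt_m.append(m)
        filt_p.append(p)
    # Backward pass: revise each past estimate using later measurements.
    smooth_m, smooth_p = filt_m[:], filt_p[:]
    for i in range(len(measurements) - 2, -1, -1):
        g = filt_p[i] / pred_p[i + 1]  # smoother gain
        smooth_m[i] = filt_m[i] + g * (smooth_m[i + 1] - pred_m[i + 1])
        smooth_p[i] = filt_p[i] + g * g * (smooth_p[i + 1] - pred_p[i + 1])
    return filt_m, filt_p, smooth_m, smooth_p


measurements = [1.2, 0.9, 1.1, 1.0, 0.8, 1.05]
filt_m, filt_p, smooth_m, smooth_p = rts_smoother_1d(measurements, q=0.01, r=0.25)
```

The smoothed variances in `smooth_p` never exceed the filtered ones in `filt_p`, reflecting that estimates of past states improve once later measurements are taken into account; at the final time point the two coincide.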

Bayesian statistics

Bayesian statistics is based on the Bayesian interpretation of probability, in which probability expresses a degree of belief in an event, as opposed to a fixed value based on frequency (see the Frequentist Statistics section).

The basic framework of Bayesian analysis is quite straightforward. Prior distributions are associated with parameters of interest to represent our initial beliefs about them, for example, based on objective evidence, subjective judgment, or a combination of both. Evidence provided by further data is summarized by a likelihood function, and the normalized product of the prior and the likelihood forms a posterior distribution. This posterior distribution contains all the currently available information about the model parameters. Note that this is different from the standard frequentist approach, and that the two methods do not always give the same answers; this fuels an ongoing debate between proponents of both approaches.42–44 At the same time, the use of a Bayesian approach can yield results that go beyond what is obtainable through a frequentist perspective.45–47 In what follows, the most important points of disagreement between Bayesians and frequentists are discussed: prior distributions, sequential analysis, and confidence intervals.
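The prior-to-posterior update is easiest to see in a conjugate case, where the normalized product of prior and likelihood has a closed form. The sketch below assumes a Beta prior on a response probability and binomial data; the prior parameters and the trial counts are invented for illustration.

```python
def beta_binomial_update(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing binomial data.

    A Beta(alpha, beta) prior combined with a binomial likelihood gives a
    Beta(alpha + successes, beta + failures) posterior.
    """
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    return alpha / (alpha + beta)


# Weakly informative prior centred on 0.5 (hypothetical choice).
prior = (2.0, 2.0)
# Hypothetical data: 14 responders and 6 non-responders.
posterior = beta_binomial_update(*prior, successes=14, failures=6)

prior_mean = beta_mean(*prior)
posterior_mean = beta_mean(*posterior)

# Analysing the same data in two batches yields the same posterior.
interim = beta_binomial_update(*prior, successes=5, failures=5)
sequential = beta_binomial_update(*interim, successes=9, failures=1)
```

Here the posterior mean moves from the prior value of 0.5 toward the observed proportion of 0.7, and the more informative the prior (the larger its parameters), the smaller that movement is for the same data.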

The (subjective) choice of prior distribution

The specification of the prior distribution is a matter of ongoing concern for those contemplating the use of Bayesian methods in medical research.48 It is not without reason that frequentists object to this concept: Any conclusions drawn from the posterior distribution will be impacted by this choice. If the prior distribution is informative, that is, already carries strong evidence for certain values of unknown parameters, then new data might have no significant impact at all (which is not a bad thing if our prior distribution reflects the truth). Many authors have worked on formalizing the selection of the prior distribution,49–52 and they all have made suggestions regarding the elicitation and quantification of prior opinions of clinicians. However, it is still a very difficult task. Even minor mistakes in the prior elicitation can propagate to significant errors in the posterior inferences. The subjectivity in the elicitation of expert opinions is the main critique of the Bayesian approach. Actually, in very complex problems, such elicitation might even be impossible for many parameters. The Bayesian response is the use of uninformative priors, which have a claim to objectivity.53 In fact, there is a strong movement toward objective uninformative priors in the Bayesian community.

This struggle to develop an objective Bayesian framework has produced many different approaches for devising objective prior distributions. The most famous of these is the Jeffreys-rule prior.54 Reference priors55,56 are a refinement of the Jeffreys-rule priors for higher dimensional problems and have proven to be remarkably successful from both Bayesian and non-Bayesian perspectives. Maximum entropy priors57 are another well-known type of noninformative prior, although they often also reflect certain informative features of the system being analyzed. Invariance priors, as mentioned earlier, matching priors,58 and admissible priors59 are other approaches being extensively studied today. Methods for selecting a prior distribution from this vast universe of possible distributions are discussed in Kass and Wasserman.60 Caution is advised when considering a noninformative distribution. Sensitivity analysis should always be performed, because in small sample cases, a noninformative prior distribution can still influence the posterior results.61 On the other hand, arbitrariness is not unfamiliar to frequentist practice either.

Sequential analysis

The Bayesian approach includes a generally accepted stopping rule principle: Once the data have been observed, the reasons for stopping the experiment should have no effect on the evidence reported about unknown model parameters. Frequentist practice, on the other hand, is different. If there are to be interim analyses during a clinical trial, with the option of stopping the trial early should the data look convincing, frequentists feel that it is mandatory to adjust the allowed error probability (downward) to account for the multiple analyses.42

Stopping rules are especially important in clinical trials, and Bayesians picked up on this theme as early as 1992, with four seminal papers on colorectal cancer clinical trials.62–66 Currently, Bayesian stopping rules are being used in all phases of trials (see Ashby46 for a complete review). In fact, the increasing use of Bayesian statistical methods in clinical research is supported by their capacity to adapt to information that is gathered during a trial, potentially allowing for smaller yet more informative trials, and for patients to receive better treatment.67

Confidence intervals

The concept of confidence intervals is purely frequentist. However, the way it is (wrongly) interpreted is Bayesian. A confidence interval represents the precision of a parameter estimate as the size of an interval of values that necessarily includes the estimate itself. A correct understanding of the concept is as follows: If new data were to be repeatedly sampled, the same analysis carried out, and a series of 95% confidence intervals calculated, 19 out of 20 of such intervals would, in the long run, include the true value of the quantity being estimated.68 However, many researchers mistakenly interpret a single such interval as having a 0.95 probability of containing the true parameter. If one were truly Bayesian from the beginning of the analysis, a Bayesian credible interval69 would be interpreted exactly as the probability that the unknown parameter is contained in it. In fact, for certain prior distributions, Bayesian credible intervals coincide with the confidence intervals; only the interpretation is different.
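The long-run interpretation of a confidence interval can be checked by simulation. The sketch below repeatedly draws samples from a normal distribution with a known standard deviation, builds a 95% interval for the mean each time, and counts how often the interval contains the true value; the distribution, sample size, and number of repetitions are arbitrary assumptions.

```python
import math
import random


def coverage(true_mean=5.0, sigma=2.0, n=30, n_experiments=2000, seed=0):
    """Fraction of 95% confidence intervals that contain the true mean."""
    rng = random.Random(seed)
    # Half-width of the 95% interval for the mean when sigma is known.
    half_width = 1.96 * sigma / math.sqrt(n)
    hits = 0
    for _ in range(n_experiments):
        sample_mean = sum(rng.gauss(true_mean, sigma) for _ in range(n)) / n
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / n_experiments


fraction = coverage()  # expected to be close to 0.95
```

The statement "95%" therefore describes the procedure across repeated experiments; any single interval either contains the true mean or does not.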

The interplay of Bayesian and frequentist analysis

Currently, there is a trend of using notions from one type of approach to support analysis in the other. Of the many topics, several should be mentioned in this brief note: empirical Bayesian analysis, where the prior distribution is estimated from the data70; approximate model selection methods, such as BIC,71 similar to the usage of the Akaike information criterion; and robust Bayesian analysis,72 which recognizes the impossibility of a complete subjective specification of the model and prior distribution. From the viewpoint of frequentist theory, the most convincing argument in favor of the Bayesian approach is that it intersects widely with the three notions of classical optimality, namely, minimaxity, admissibility, and equivariance.73

Biofluid mechanics

Biofluid mechanics is the application of the principles of fluid mechanics to the motion of biofluids inside and around living organisms and cells.74 The main applications of biofluid dynamics are the study of the circulatory system, with the blood flow inside vessels of various sizes; the study of the respiratory system, with the air flow inside the lungs; and the lubrication of synovial joints.75 The study of biofluid dynamics has enabled many therapeutic applications, such as artificial heart valves,76 stents, and, in the future, artificial lungs.77 Biofluid dynamics can be studied with simulations and experiments. Computational fluid dynamics simulations can be used to better understand the flow phenomena of biofluids inside the complex geometry of vessels. Biofluid dynamics can also be studied with in vivo experiments, using noninvasive medical imaging methods such as Doppler ultrasound and magnetic resonance imaging (MRI), invasive methods such as angiography, and more straightforward methods such as the pressure cuff used to measure blood pressure.78

Bioheat transfer

Bioheat transfer concerns the rate of heat transfer between a biological system and its environment. The main difference between heat transfer in biological and nonbiological systems is blood perfusion through the extended vascular network, which directly affects the local temperature of the living tissue.79 The main research subjects of bioheat transfer are the thermal interaction between the vasculature and tissue, tissue thermal parameter estimation,80 human thermal comfort, thermoregulation, and the safety of heat transfer to living tissue from microwave, ultrasound, or laser exposure, whether environmental or for therapeutic applications.81 Because biochemical processes are governed by local temperature, bioheat transfer also plays a major role in the rate of these processes.
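The role of blood perfusion can be made explicit with the classical Pennes bioheat equation, a widely used model that is given here only as an illustration and is not discussed in the references of this entry. In the notation assumed here, $T$ is the tissue temperature, $\rho$, $c$, and $k$ are the tissue density, specific heat, and thermal conductivity, $\omega_b$ is the blood perfusion rate, $\rho_b$ and $c_b$ are the density and specific heat of blood, $T_a$ is the arterial blood temperature, and $q_m$ is the metabolic heat generation.

```latex
\rho c \,\frac{\partial T}{\partial t}
  = \nabla \cdot \left( k \,\nabla T \right)
  + \omega_b \,\rho_b c_b \left( T_a - T \right)
  + q_m
```

Without the perfusion term, the equation reduces to ordinary heat conduction with a source; the perfusion term pulls the local tissue temperature toward that of arterial blood.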

Biological networks

The concept of complex networks represents a powerful tool for the representation and analysis of complex systems, and especially for describing their internal interaction structure. Recently, the so-called network biology approach82 has been fruitfully applied in many different biological areas, from gene regulation, to protein–protein interactions (PPIs), to neural signals,83 and has finally reached clinical applications: Network medicine is today at the forefront of modern quantitative approaches in medical sciences.84 Here, with no claim of exhaustiveness, we list the main types of biological networks.

PPI networks

PPIs are physical contacts, stable or transitory, between two or more proteins created by electrostatic forces between the so-called protein surfaces, that is, the “exposed” regions of the three-dimensional structures of folded proteins. These contacts underlie most biological functions, for instance signal transduction, cell metabolism, membrane transport, and muscle contraction. It is, thus, clear that analyzing how proteins interact with each other is essential to understand cellular processes in healthy and in pathological conditions. Sets of proteins and their interactions are generally referred to as protein interaction networks (PINs), mathematically represented by undirected graphs. The specific analyses performed on PINs depend on the overall goal of the study; to illustrate, one may try to identify the most prominent element for a given function (e.g., gene target prioritization),85 or the set of lethal proteins in a cell.86 Methods for the detection of protein interactions encompass experimental (e.g., yeast two-hybrid, mass spectrometry) and in silico (ortholog-based) approaches.87,88

Gene-regulatory networks

Gene-regulatory networks (GRNs) are networks of causative and regulative interactions (biochemical processes such as reactions, transformations, interactions, activations, inhibitions: the links) between transcription factors (TFs) and downstream genes (the nodes), represented with directed graphs and inferred from gene expression data.

Methods to infer GRNs are based on information-theoretic criteria, co-expression metrics, or regression approaches, among others. For example, mutual information (MI) is often used: a dimensionless metric that quantifies how much knowing one random variable tells us about another. An MI of zero indicates that the two variables are completely independent; conversely, MI >0 implies that they are dependent, as knowing one of them is equivalent to (partially) knowing the other. Thus, if MI >0 for the expression of two genes, we can infer that one of them is (partially, at least) driving the other.89 Note that MI is symmetric, so it does not by itself indicate which of the two genes is the driver.
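As an illustration of the MI criterion, the following minimal sketch estimates MI from two discretized expression profiles by simple frequency counting. The gene names and the binary "low/high" expression values are hypothetical, and the plug-in estimator used here is only one of several possible choices; with real, finite data the estimate is rarely exactly zero, so a threshold or significance test is normally applied.

```python
import math
from collections import Counter

def mutual_information(x, y):
    """Plug-in estimate of the mutual information (in bits) between two
    equally long sequences of discrete values."""
    if len(x) != len(y):
        raise ValueError("sequences must have the same length")
    n = len(x)
    count_x = Counter(x)
    count_y = Counter(y)
    count_xy = Counter(zip(x, y))
    mi = 0.0
    for (a, b), c in count_xy.items():
        p_xy = c / n                      # joint frequency of the pair (a, b)
        p_x = count_x[a] / n              # marginal frequency of a
        p_y = count_y[b] / n              # marginal frequency of b
        mi += p_xy * math.log2(p_xy / (p_x * p_y))
    return mi

# Hypothetical discretized expression levels (0 = low, 1 = high) in 8 samples.
gene_a = [0, 0, 0, 0, 1, 1, 1, 1]
gene_b = [0, 0, 0, 0, 1, 1, 1, 1]   # follows gene_a exactly
gene_c = [0, 0, 1, 1, 0, 0, 1, 1]   # every combination with gene_a is equally frequent

print(mutual_information(gene_a, gene_b))  # high: knowing one gene determines the other
print(mutual_information(gene_a, gene_c))  # zero: the two profiles are independent
```

Because the estimate is symmetric in its two arguments, an edge obtained in this way is undirected; assigning a direction requires additional information.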

Though created in an indirect way, inferred GRNs aim at representing real physical, directed, and quantitatively determined interaction events, both between genes and between genes and their products. The final aim is the discovery of key functional relationships between RNA expression and chemotherapeutic susceptibility.90 Recently, data from single-cell gene expression have become mature and have been approached by using partial information decomposition to detect putative functional associations and to formulate systematic hypotheses.91,92

Validation of GRNs has traditionally been performed in two ways. On the one hand, one can resort to “gold standards,” that is, sets of interactions that have been validated; on the other hand, one can observe the biological system under study in vitro, by inducing a perturbation and by observing whether the real and predicted effects coincide.93,94

Gene co-expression networks

Gene co-expression networks (GCNs) are essentially RNA transcript–RNA transcript association networks: Nodes of the network correspond to genes, which are pairwise connected when an appreciable transcript co-expression association between them exists. Networks are calculated by estimating a similarity score from expression data and by applying a significance threshold; the result is usually an undirected graph. Reconstructing GCNs involves normalization, the calculation of co-expression correlations (e.g., Pearson's or Spearman's correlation measures), and the estimation of significance and relevance. Graphical Gaussian Models (e.g., “concentration graph” or “covariance selection” models) are also used, along with edge removal based on gene triplets analysis (e.g., the ARACNE tool), regression methods, and Bayesian networks.95
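The basic reconstruction recipe (similarity score followed by thresholding) can be sketched as follows. This is a minimal illustration under stated assumptions: the gene names and expression values are invented, Pearson's correlation is used as the similarity score, and a fixed cutoff on the absolute correlation stands in for a proper significance test.

```python
import math
from itertools import combinations

def pearson(x, y):
    """Pearson correlation between two equally long numeric sequences.
    Assumes neither sequence is constant (nonzero variance)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def coexpression_network(expression, threshold):
    """Return the undirected edges linking genes whose absolute
    correlation reaches the (assumed) cutoff."""
    edges = set()
    for g1, g2 in combinations(sorted(expression), 2):
        if abs(pearson(expression[g1], expression[g2])) >= threshold:
            edges.add((g1, g2))
    return edges

# Hypothetical normalized expression values of three genes in five samples.
expression = {
    "geneA": [1.0, 2.0, 3.0, 4.0, 5.0],
    "geneB": [2.1, 3.9, 6.2, 8.1, 9.8],   # rises together with geneA
    "geneC": [5.0, 1.0, 4.0, 2.0, 3.0],   # no clear relation to the others
}
edges = coexpression_network(expression, threshold=0.9)
print(edges)
```

With these illustrative values, only the pair geneA and geneB exceeds the cutoff, so the resulting graph contains a single undirected edge.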

Signaling networks

Signaling pathways are cascades of molecular/chemical interactions and modifications that carry signals from cell membrane receptors to the nucleus to arrange proper biological responses to stimuli, on human or microbial levels. The process of reconstructing signaling networks has typically been based on gene knockout techniques, which are effective in describing cascades in a linear or branched manner. Nevertheless, recent screens suggest a switch from such cascades to networks with complex interdependencies and feedbacks,96 which require methods that are able to infer aspects and features of signaling processes from high-throughput -omic data in a faster and systemic way. In general, such inference problems can be reduced to the definition of suitable optimal connected subgraphs of a network originally defined by the available data; examples include the Steiner tree approaches (based on the shortest total lengths of paths of interacting proteins), linear programming, and maximum-likelihood (e.g., tagging proteins as activators or repressors to explain the maximum number of observed gene knockouts). Alternatives include the use of a probabilistic network, for example, network flow optimization (Bayesian weighting schemes for underlying PPI networks coupled with other -omics data), network propagation (gene prioritization function that scores the strength-of-association of proteins with a given disease), or information flow analysis (based on the identification of proteins dominant in the communication of biological information across the network).97,98

Metabolic networks

Metabolic network reconstruction generally refers to the process of annotating genes and metabolites to determine the metabolic network's elements, relationships, structure, and dynamics.83 It can be performed at the human, microbial, and joint co-metabolic levels. It is usually possible to infer the enzymatic function of individual proteins, or to reconstruct larger (or even whole) metabolic networks. Techniques such as metabolic flux analysis (MFA) and its improvements (e.g., isotopically nonstationary MFA), and flux balance analysis have become widely used for predicting the concurrent fluxes of multiple reactions. Recently, computational approaches coupling MFA with mass spectrometry have also been implemented. Single-enzyme function prediction can be carried out by resorting to machine learning, especially when the enzyme does not show significant similarity to existing proteins; or to “annotation transfer” approaches, based on the use of reference databases or orthologs to tag specific DNA sequences. Comparative pathway prediction methods use established functional annotations to check for the existence of new reactions, whereas explorative pathway prediction techniques (not using existing annotations) can be graph-theoretic (e.g., by weighting paths of metabolite connectivity) or constraint-based (e.g., elementary mode analysis), or both.99,100

TF networks

When talking about disease and transformation from health to disease, we cannot avoid the TF networks that were enabled by technological advances, such as genome-wide large-scale analyses, genome editing, single-cell analyses, live-cell imaging, etc. Enhancer locations and target genes are keys to TF network models.101 The original definition of enhancers is that they represent functional DNA sequences that can activate (enhance) the rate of transcription from a heterologous promoter, independent of their location and orientation.102 Determining the function of enhancers and whether TFs bind to them was accelerated by the CRISPR/Cas9 and other genome-editing technologies, as well as by the data collected within the large-scale efforts, such as the Human Epigenome, ENCODE, etc. If we combine the experimental evidence of TFs binding to specific promoter or enhancer DNA elements, at specific genomic loci, we can construct TF network models and maps, to predict biological behavior in silico and further guide experimental research. In principle, the TF network models are simple, consisting of subnetworks with nodes (genes and proteins) and edges that link the TFs to their functional targets. More complex models can, nevertheless, be used, for instance integrating Boolean and Bayesian approaches—see Brent101 for a review.

The TFs work predominantly in a tissue-specific manner to define the cell phenotypes. For a maximal output, different TFs usually cooperate and synergize, to modulate changes in gene expression.103 A TF network map is a graph showing which TFs directly regulate a gene by binding to one of its promoter or enhancer elements. A TF network map includes the basic biochemical knowledge, similarly to the metabolic network map. It links the TFs with target genes, taking into account the proper physiological or pathophysiological conditions and signals (endogenous and external), as well as the context of time (development, aging, circadian, etc.). Several approaches have been developed to model and/or graphically represent the TF networks, such as Petri nets104 and the ARACNE algorithm, which has recently been upgraded to also suit single-cell gene expression data.105 NetProphet 2.0106 is another algorithm for TF network mapping, which aims to identify TF targets as accurately as possible. Another representation of TF networks consists of maps that are built directly from transcriptome data by applying enrichment procedures. These maps show whether the expression of individual TFs is related. For example, the KEGG pathways107 and the TRANSFAC database were used for functional enrichment studies.108 Gene sets containing more than five elements were constructed and tested for enrichment by using the PGSEA package, and the TFs were merged based on their ID irrespective of their binding sites. In this manner, the TF enrichment analyses confirmed an increased unfolded protein response and metabolic decline after depleting one of the genes from cholesterol synthesis in the liver.109

Biomaterials

A biomaterial is a synthetic material that is used to replace part of a living system or to function in intimate contact with living tissue.110,111 Although there are different definitions of a biomaterial, the Clemson University Advisory Board for Biomaterials has officially defined a biomaterial as “a systemically and pharmacologically inert substance designed for implantation within or incorporation with living systems.” A biomaterial must be distinguished from a biological material (e.g., bone matrix or tooth enamel), which is produced by a biological system. Artificial materials that are simply in contact with the skin (e.g., hearing aids and wearable artificial limbs) should also be differentiated; they are not biomaterials, since the skin acts as a barrier with the external world. The main applications of biomaterials include assisting in healing, improving function, correcting abnormalities, and replacing a body part that has lost function due to disease or trauma. Advances in many fields, including surgery, have permitted materials to be used in more cases and with a wider scope.112,113

Biomechanics

Biomechanics is the application of classical mechanics to the study of biological systems. Laws of physics for statics, kinematics, dynamics, continuum mechanics, and tribology are applied to the study of biological systems, from a single cell to whole human bodies.114 Biomechanics studies employ both experiments and numerical simulations. Experiments in biomechanics are performed both in vitro and in vivo. Common experiments include measurements of the kinematics and dynamics of human motion (gait analysis),115,116 soft tissue deformation and impact studies (tension-compression tests, impact tests, three-point bending tests),117 and electromyography for neuromuscular control,118 but also experiments at the microscopic level, with dynamic loading of cells using microscopic cantilever setups.119 Simulation of biomechanical systems has allowed the testing of conditions that would be dangerous to test with human participants or biological tissue. Applications include vehicle safety, with simulated crash tests using active human body models; the study of biological systems with complex geometries whose deformation response cannot be measured experimentally, such as brain deformation during head impacts; and parametric studies that are faster and easier to perform. However, when using a simulation model, it is important to consider the range of parameters for which the model is valid.

Cellular automata

Cellular automata (CA) are abstract computational systems, discrete in both space and time, that have been applied as general models of complexity and as more specific representations of nonlinear dynamics in a variety of scientific fields. The CA are composed of a finite (countable) set of homogeneous and simple units, called atoms or cells. These cells have an internal state that can take a finite set of values, and that is updated at each time step through functions or dynamical transition rules, generally as a function of the states of cells in the local neighborhood. It should be mentioned that CA are abstract, meaning that they can be specified in purely mathematical terms and implemented by physical structures. Since CA are computational systems, they can compute functions and solve algorithmic problems, and they can display complex emergent behavior. Because of that, they are attracting a growing number of researchers from the cognitive and natural sciences interested in pattern formation and complexity in an abstract setting.120 The CA have also been applied to some medical problems, as, for instance, image segmentation121,122 or infection modeling.123–125
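To make the definition concrete, the sketch below implements a one-dimensional, two-state ("elementary") cellular automaton, in which each cell is updated from its own state and that of its two nearest neighbors. The choice of rule 90, the periodic boundary, and the lattice size are illustrative assumptions rather than anything prescribed by the applications cited above.

```python
def step(cells, rule):
    """Apply one synchronous update of an elementary cellular automaton.

    `cells` is a list of 0/1 states; `rule` is the Wolfram rule number
    (0-255), whose binary digits give the new state for each of the eight
    possible (left, center, right) neighborhoods. Boundaries are periodic.
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[(i - 1) % n]
        center = cells[i]
        right = cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right   # neighborhood as a 3-bit number
        new_cells.append((rule >> index) & 1)          # look up the corresponding rule bit
    return new_cells

def run(cells, rule, steps):
    """Return the list of successive configurations, starting with `cells`."""
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

# A single active cell in the middle of a small lattice.
initial = [0, 0, 0, 1, 0, 0, 0]
history = run(initial, rule=90, steps=3)
for row in history:
    print("".join("#" if c else "." for c in row))
```

Under rule 90 each cell becomes the exclusive-or of its two neighbors, so even this very simple local rule produces a nested triangular pattern when run on a larger lattice.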

Clinical decision support systems

Clinical decision making involves clinicians making decisions about patient diagnosis and treatment.126 Clinical decision making has traditionally been determined largely by human expertise. As of now, clinicians still make the final decisions by weighing the available evidence, for example, from clinical data records.

Various statistical and mathematical methods,127 and knowledge-based approaches using dictionary-defined knowledge (e.g., with “if-then” rules)128 have now been used to aid clinical decision making, resulting in more quantitative, standardized, accurate, and objective decisions. This has led to the development of medical or clinical decision support systems (CDSSs), often in the form of computer software or health technology, aiding human experts with interpretation, diagnosis, and treatment.129

The rise of AI, particularly machine learning, has led to another form of CDSSs that is “non-knowledge-based.” Some of these approaches, for example, deep-learning algorithms, have been claimed to outperform human experts in the diagnosis of specific illnesses.130 However, the limited interpretability or explainability of the results of such approaches hinders their use in practice.131 It should be noted that CDSSs are still not widely adopted by users, perhaps partially due to a general lack of engagement from clinicians, physicians, or health specialists.132

Clustering

In data mining, clustering denotes any problem involving the division of data into groups (clusters), such that each cluster contains similar records (according to some similarity measure) and dissimilar records are assigned to different clusters. It is a form of unsupervised learning, as no a priori information about the structure of the groups is used. An alternative definition of clustering is proposed in Ref.133: “partition a given data set in groups, called clusters, so that the points belonging to a cluster are more similar to each other than the rest of the items belonging to other clusters.”

Although consensus on a unique classification of clustering algorithms has not been achieved, it is customary to divide such algorithms according to their underlying hypothesis134:

Hierarchical-based. Hierarchical clustering combines instances of the data set to form successive clusters, resulting in a tree structure called a dendrogram. Clusters are equal to individual instances in the lowest level of the tree, and upper levels of the tree are aggregations of the nodes below. Agglomerative and divisive clustering can be distinguished, depending on whether each observation starts in its own cluster or all observations start in a single cluster containing the complete set.

Partitions-based. As opposed to the previous group, partitions-based methods start from the complete data set and divide it into different disjoint subsets. Given a desired number of clusters, the process is based on assigning instances to different clusters and iteratively improving the division, until an acceptable solution is reached. Note that partitions-based methods are different from divisive hierarchical methods because, first, they require predefining the number of clusters; and second, because of their iterative nature. The well-known K-means algorithm,135 possibly the most commonly used clustering algorithm,136,137 belongs to this class.

Density-based. Whereas the previously described algorithms assess the similarity of instances through a distance measure, density-based algorithms rely on density measures; clusters are thus formed by groups of instances that form a high-density region within the feature space. This presents the advantage of a lower sensitivity to noise and outliers. Among the most used algorithms belonging to this family, DBSCAN138 is worth mentioning.

Probability-based. Probability-based clustering combines characteristics of both partitions-based and density-based approaches. The most important of these clustering approaches are mixture models,139 which are probabilistic models used to model heterogeneity and represent the presence of subpopulations (latent subgroups) in an overall population. The probabilistic component makes them a useful approach for complex (especially multimodal) data, and they can be used to obtain statistical inferences about the properties of latent subgroups without any a priori information about these subgroups. In practice, this is achieved by using Expectation-Maximization algorithms.140 Important advantages are the flexibility with regard to choosing subgroup distributions and the possibility of obtaining “soft” stratification.
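Of the four families above, the partitions-based one is the easiest to illustrate in a few lines. The following minimal sketch of the K-means iteration (assign each instance to the nearest centroid, recompute the centroids, repeat until the assignment stops changing) uses invented two-dimensional points; taking the first k points as initial centroids is a simplifying assumption, as practical implementations use random or more careful initializations.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equally long tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, k, max_iter=100):
    """Minimal K-means on a list of numeric tuples.

    Returns (labels, centroids). Initial centroids are simply the first
    k points (an assumption made to keep the example deterministic).
    """
    centroids = [tuple(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:      # converged: the partition no longer changes
            break
        labels = new_labels
        # Update step: each centroid becomes the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:               # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return labels, centroids

# Hypothetical two-dimensional records forming two well-separated groups.
points = [(1.0, 1.0), (5.0, 5.0), (1.2, 0.8), (5.2, 4.9), (0.8, 1.1), (4.8, 5.1)]
labels, centroids = kmeans(points, k=2)
print(labels)
print(centroids)
```

The number of clusters k must be supplied in advance, which is precisely the feature that distinguishes this family from divisive hierarchical methods.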

Complex networks

Born at the intersection of physics, mathematics, and statistics, the theory of complex networks has proven to be a powerful tool for the analysis of complex systems. Networks are mathematical objects composed of nodes, pairwise connected by links.141–143 Their flexibility, and indeed their success, resides in the fact that the identity of those elements is not defined a priori; for instance, networks can be used to represent anything from people and their social connections,144 to market stocks and their correlations or co-ownership,145 to genes and their co-regulation.146 In all cases, networks allow reducing such complex systems into simple structures of interactions, which can easily be studied by means of mathematical (algebraic) tools, while removing all unnecessary details.

The simplest way of reconstructing networks, and indeed the first one from a historical perspective, is to directly map each element composing a system to a node, and to map explicit relationships between elements to links. Consider the example of a gene co-regulation network: Nodes would represent genes, with pairs of them being connected when it is known (e.g., from direct biological experiments) that one of the two genes is regulating the other. Once the full network is reconstructed, its structure can be studied through a broad set of existing topological metrics,147 designed to numerically quantify specific structural features, and by using these metrics as input to data-mining models.148
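As a minimal sketch of this direct reconstruction, the code below builds a network from a list of known pairwise relationships and computes two common topological metrics, the node degree and the local clustering coefficient. The gene identifiers and links are hypothetical, and the links are treated as undirected for simplicity, although regulation is in general a directed relationship.

```python
from collections import defaultdict
from itertools import combinations

def build_network(edges):
    """Build an undirected adjacency structure from (node, node) pairs."""
    adjacency = defaultdict(set)
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)
    return adjacency

def degree(adjacency, node):
    """Number of links attached to a node."""
    return len(adjacency[node])

def clustering_coefficient(adjacency, node):
    """Fraction of pairs of neighbors of `node` that are themselves linked
    (defined here as 0.0 for nodes with fewer than two neighbors)."""
    neighbours = adjacency[node]
    k = len(neighbours)
    if k < 2:
        return 0.0
    linked = sum(1 for a, b in combinations(neighbours, 2) if b in adjacency[a])
    return 2.0 * linked / (k * (k - 1))

# Hypothetical experimentally known regulatory relationships.
edges = [("g1", "g2"), ("g1", "g3"), ("g2", "g3"), ("g3", "g4")]
network = build_network(edges)

for gene in sorted(network):
    print(gene, degree(network, gene), clustering_coefficient(network, gene))
```

Per-node values such as these can then be collected into feature vectors and passed to data-mining models.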

In spite of the interesting results that could be obtained through this simple understanding of networks, it was soon apparent that many real-world systems needed more detailed descriptions. Specifically, it is worth noting that a simple network reconstruction implies three hidden assumptions: that links are constant through time; that nodes are connected by just one type of relationship; and that relationships are explicit. Breaking these three hypotheses gave birth, respectively, to time-evolving, multilayer and functional networks.

Complex systems

Systems composed of a large number of elements that interact with one another in a nonlinear way. As opposed to simpler systems, these interactions are essential to understand the behavior of the complete system, and in some cases, they can even be more relevant than the individual elements.149–151 Due to this, the study of complex systems goes beyond the reductionist paradigm, where understanding is based on splitting a system into smaller subsystems that are simpler to understand. In other words, although the reductionistic approach works bottom-up, the systems view required to understand complex systems is a top-down one. Complex systems display two important properties. The first is nonlinear behavior, and thus tools originating in nonlinear analysis have been used in this domain; to illustrate, the analysis of time series describing the dynamics of complex systems often resorts to metrics of complexity,152 fractal dimension,153 sample entropy,154 and other types of entropies155 to quantify irregularity, or to detrended fluctuation analysis to quantify long-range correlations.156 The second is emergence, which refers to behaviors that may unexpectedly emerge, leading to order or disorder, and that cannot be explained by the dynamics of the system's units alone. Adaptation is also considered one of the qualities of complex systems, and it is a property that can be observed in the biomedical domain.157

Computational drug repurposing

Drug repurposing or repositioning is the detection of novel indications for existing drugs, to treat new diseases.158 A major advantage of the drug repurposing strategy is that it involves approved compounds that have passed the toxicological safety screening process and have a known pharmacokinetic (PK) profile: Repositioned drugs can, hence, enter directly into clinical Phase II, making the clinical phase process much faster than for newly developed drugs, and thus more cost-effective. Computational drug repurposing approaches aim at optimizing and accelerating the drug repurposing procedures, also providing means for candidate drug prioritization. Computational drug repurposing methods include the following: Structure-based virtual screening (molecular docking), Ligand-based methods (Pharmacophore model, Quantitative structure–activity relationship, and Reverse docking methods),159 Transcriptomic-based methods,160 genome-wide association study (GWAS) methods,161 Literature-based discovery methods,162 and Network-based, Multisource data integration and Machine-Learning approaches.163

Constraints

In mathematics, constraints are conditions that must be fulfilled by some parameters (or solutions) of a model, to make the latter realistic. In the case of mathematical modeling of complex biological systems, different constraints can be implemented for parameters, such as the value range of variables, limitations on the sum of parameters, transition speed, and other types of information. To illustrate, the angle of joints in the human arm cannot take any value, but must comply with some physical limitations.164 There are (i) general constraints that are true for any system (mass conservation, energy balance); (ii) organism-level constraints, that is, consistent limitations for all experimental and environmental conditions for a particular organism (range of viable metabolite concentrations, homeostatic constraint); and (iii) experiment-level constraints, that is, environmental condition-dependent constraints for particular organisms (biomass composition, cellular resources).165

Context awareness systems

Context awareness systems address complex environments in terms of location, identity, components, and relations. Context refers to the information that describes an entity (person, location, object).166 The study of such complex environments has been made possible by the availability of Wireless Sensor Networks technologies, which allow heterogeneous sensors, distributed in a physical environment, to share their measurements. Still, these technologies do not protect from problems such as cross-domain sensing and coupling of sensors; to preserve performance and reliability, the data fusion has to be performed with caution.167 Context awareness systems have an important role in the design of health care monitoring systems, health smart homes, and ambient assisted living, which facilitate the acquisition of both ambient and medical data from sensors. Such systems also may include reasoning capabilities consisting of data processing and analysis as well as knowledge extraction.168

Correlation networks

Functional complex networks created by considering the correlation between the dynamics of pairs of nodes.

Cross-industry standard process for data mining

CRISP-DM stands for cross-industry standard process for data mining, an industrial group that proposed a methodology for organizing the data analysis process in six standard steps.169,170 Since then, the term CRISP-DM has been used to indicate both the group itself and the methodology. The six steps are:

Business (or Problem) understanding: initial understanding of the objectives and requirements of the analysis to be performed; these are expressed as a data mining problem, and should include a preliminary roadmap or execution plan.

Data understanding: In this second phase, data are collected and a first analysis is executed, to become familiar with them; identify quality problems; discover initial insights and formulate initial hypotheses; and identify relevant data subsets.

Data preparation: Data received by the researchers are seldom ready to be processed; on the contrary, they usually require an initial preparation. This covers all of the activities required to construct the final data set, from selecting those data that are really relevant, to data cleaning and pre-processing. This is one of the most important steps of the whole process, as the success of the final analysis strongly depends on it; and is responsible for most of the time and resources consumed in a data analysis project, as data preparation is usually performed iteratively and without a fixed recipe. See Refs.171–173 for a review of techniques and the motivations for data preparation.

Modeling: phase in which data-mining algorithms are applied and parameters are calibrated to optimal values. Some algorithms covered in this review are ANNs, decision trees (DTs), random forests (RFs), and support vector machines (SVMs). Although each one of these models has specific requirements on the format of input data, and is built on top of hypotheses about the patterns to be detected, in practice multiple algorithms are suitable for any given problem. In these situations, multiple models are optimized and compared; the models reaching the highest performance are passed to the next phase for a final evaluation.

Evaluation: Model evaluation cannot be understood only from a data-mining perspective, for example, in terms of the achieved classification score; a business perspective should also be taken into account. Only when all relevant questions have been addressed, can one then move to the deployment of the extracted knowledge.

Deployment: When all of the information about the business problems has been gathered, the information and knowledge then have to be organized and presented.

Cross-validation

In data analysis, cross-validation (also known as rotation estimation and out-of-sample testing) refers to any technique used to validate a data-mining model, that is, to quantify how it will generalize to an independent data set, by re-using a single data set. The initial data set is divided into multiple subsets, which are used in turn to train or validate the model; this guarantees that, within each round, the same data are never used in both tasks.174
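One common instance of this idea is k-fold cross-validation, sketched below under simple assumptions: the records are identified by their index, the folds are contiguous, and no shuffling is performed (in practice, records are usually shuffled or stratified first). The function name and the sample sizes are illustrative.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds.

    Every record is used for validation exactly once; fold sizes differ
    by at most one when n_samples is not a multiple of k.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

# Ten hypothetical records split into three folds.
folds = list(k_fold_indices(10, 3))
for train, validation in folds:
    # A model would be fitted on `train` and scored on `validation` here.
    print("train:", train, "validation:", validation)
```

The k validation scores obtained in this way are typically averaged to estimate how the model would perform on unseen data.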

Data analysis software

With the widespread adoption of data-based solutions in many real-world scenarios, it is not surprising to find a large number of analytic solutions, ranging from cloud pipelines to commercial and freeware software, and originating both from research activities and from commercial development. The most important are listed here, classified according to their underlying structure into cloud, noncloud, and hybrid tools.

Noncloud (or local) solutions

Commercial and freeware software tools for data analysis are designed to work on a local (or at least, noncloud) environment. In this category, one can find:

KNIME175 (www.knime.com);

SPSS Modeler176 (www.ibm.com/products/spss-modeler);

RapidMiner177 (rapidminer.com);

Alteryx (www.alteryx.com).

These software platforms usually have a broad focus, allowing users to process any (or most) kinds of data; and they allow models to be constructed by connecting modules in a graphical interface.

Cloud-based solutions

Also known as Platform as a Service, these are solutions based on full cloud environments, and on the creation of web-based pipelines in which data are fed, processed, and returned to the user in a completely automatic way. The most notable solutions include:

Google's ML Engine (cloud.google.com/ml-engine);

Amazon's SageMaker (aws.amazon.com/sagemaker);

Microsoft's Azure (studio.azureml.net).

This approach presents two advantages: complete scalability and a simplified user experience. At the same time, these solutions usually provide a limited spectrum of possible analyses; for instance, Google ML Engine completely relies on TensorFlow algorithms.178

Hybrid solutions

These solutions position themselves in between the two families previously described. Although they are designed for cloud deployment, they can easily be installed in a local infrastructure; and they shift the focus toward an intuitive representation of the results and simplified user experience. Among others, these include:

Sisense (www.sisense.com);

Looker (looker.com);

Zoho Analytics (www.zoho.com/analytics);

Tableau (www.tableau.com).

They usually allow data to be summarized on high-level dashboards, with specific applications including business analytics179 or website usage tracking. They, nevertheless, do not provide the analytical flexibility required by systems medicine applications.

Data fusion and data integration

Data fusion is the process of integrating multiple data sources to produce more consistent, accurate, or useful information than that provided by a single data source, whereas data integration refers to the merging of heterogeneous data, obtained from different methods or sources, to produce meaningful and valuable information. In the field of systems/personalized medicine, progress has been made regarding data integration, with large sets of comprehensive tools and methods (e.g., Bayesian or network-based methods), especially for multi-omics processing.180

Data mining

General term used to describe the process of discovering patterns in data sets through the use of statistical and mathematical algorithms. Its definition overlaps with that of machine learning; and the term is also used to denote the modeling step of the CRISP-DM process.

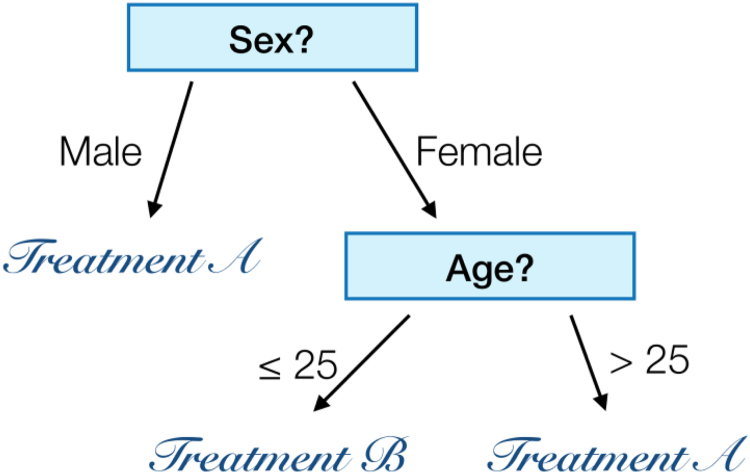

Decision tree

In data mining, DTs denote classification algorithms that rely on comprehensive tree structures, and that classify records by sorting them based on attribute values. Each node in a DT represents an attribute in an instance to be classified, whereas each branch represents a value that the attribute can take—see Figure 2 for a simple graphical representation. The DTs can be generalized to target continuous values, in which case they are usually referred to as regression trees.

FIG. 2.

Example of a simple decision tree model, trained to choose between two treatments as a function of the age and sex of the patient.

Let us denote by D the set of training instances that reach a node. The general procedure to build the tree is:

If all the instances of D belong to the same class, then the node is a leaf node.

Otherwise, use an attribute to split the set D into smaller subsets. These subsets will then feed subsequent nodes, by applying this procedure recursively until a stop condition is met.
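The recursive procedure above can be sketched in a few lines of Python. This is a simplified illustration, not any of the published algorithms: it assumes categorical attributes only, picks the split with the lowest weighted Gini impurity, and stops when a node is pure or no attributes remain. The function names and the toy data (age group and sex, loosely echoing Figure 2) are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(rows, labels, attributes):
    """Recursively build a tree over categorical attributes.

    rows: list of dicts mapping attribute name -> value.
    Returns a class label (leaf) or a tuple (attribute, {value: subtree}).
    """
    # Leaf node: all the instances of D belong to the same class.
    if len(set(labels)) == 1:
        return labels[0]
    # Assumed stop condition: no attribute left, return the majority class.
    if not attributes:
        return Counter(labels).most_common(1)[0][0]

    def split_impurity(attr):
        # Weighted Gini impurity of the subsets produced by splitting on attr.
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[attr], []).append(label)
        return sum(len(g) / len(labels) * gini(g) for g in groups.values())

    best = min(attributes, key=split_impurity)
    remaining = [a for a in attributes if a != best]
    branches = {}
    for value in {row[best] for row in rows}:
        subset = [(r, l) for r, l in zip(rows, labels) if r[best] == value]
        sub_rows, sub_labels = zip(*subset)
        branches[value] = build_tree(list(sub_rows), list(sub_labels), remaining)
    return (best, branches)


def classify(tree, row):
    """Follow the branches matching the row's values down to a leaf."""
    while isinstance(tree, tuple):
        attr, branches = tree
        tree = branches[row[attr]]
    return tree


# Hypothetical training set: choose treatment "A" or "B" from age and sex.
rows = [
    {"age": "young", "sex": "F"},
    {"age": "young", "sex": "M"},
    {"age": "old", "sex": "F"},
    {"age": "old", "sex": "M"},
]
labels = ["A", "A", "A", "B"]
tree = build_tree(rows, labels, ["age", "sex"])
print(tree)
```

Note that `classify` raises a `KeyError` for attribute values never seen during training; a production implementation would need a fallback such as the majority class of the node.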

The main differences between the many implementations of DTs available in the literature reside in the criteria used to decide the splitting point. Among others, the Gini index is used in CART,181 SLIQ,182 and SPRINT183; information gain is used in ID3184 and in the well-known C4.5.185
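To make the two splitting criteria concrete, the following sketch computes the Gini index and the information gain of a candidate split. It uses the textbook definitions of both quantities; the function names and the toy labels are illustrative assumptions and do not reproduce the implementation details of the cited algorithms.

```python
import math
from collections import Counter


def gini_index(labels):
    """Gini index: probability that two random draws have different classes."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy (in bits) of the class distribution."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, subsets):
    """Entropy reduction obtained by splitting `parent` into `subsets`."""
    n = len(parent)
    return entropy(parent) - sum(len(s) / n * entropy(s) for s in subsets)


# Hypothetical node with four instances of each class.
parent = ["A"] * 4 + ["B"] * 4
weak_split = [["A", "A", "A", "B"], ["A", "B", "B", "B"]]
perfect_split = [["A"] * 4, ["B"] * 4]

print(gini_index(parent))
print(information_gain(parent, weak_split))
print(information_gain(parent, perfect_split))
```

Both criteria reward splits that produce purer subsets; they differ in how strongly they penalize mixed subsets, which can lead to different trees on the same data.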

The main advantages of DTs are their simplicity, both in the software implementation and in the interpretation of results, and their capacity to handle both numerical and categorical variables, thus requiring little data preparation. This has fostered their use in medical applications, as reviewed, for instance, in Refs.186,187 They nevertheless tend to achieve lower predictive performance than more complex models. The concept of DT further underpins the RF classification algorithm.

Decision support systems

Decision support systems (DSSs) are information systems, that is, systems designed to collect, process, and make available information, focused on supporting different types of decisions.188 DSSs typically deal with business and management challenges; can be completely customized by including multiple user interfaces and flexible architectures; and implement Optimization/Mathematical Programming tools for solution strategy and reporting. DSSs are able to provide a complete view of the activities and flows within large and complex real production systems, integrating the supply of raw materials, the production phases, the distribution of products, and their recovery within sustainable and closed-loop supply chains. DSSs in the form of standardized, enterprise-wide information systems have been widely implemented in multiple sectors, including industry supply chains (e.g., pharmaceutical, manufacturing, agri-food189) and health care services (e.g., CDSSs126–130).

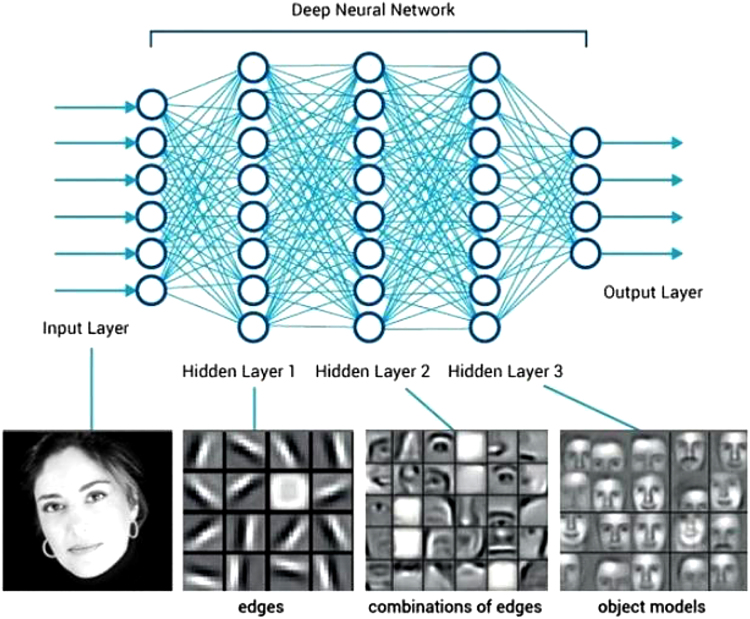

Deep learning

Artificial neural networks (ANNs), which form the basis of deep learning, were developed in the 1940s as a model for the human brain.190 Although this model attracted the interest of researchers in earlier periods, it made a significant leap in learning and classification with the development of deep learning systems based on the layered learning structure of the human brain. One of the main reasons for this is that the computational infrastructure needed to satisfactorily operate these complex structures, which contain hundreds of layers and thousands of neurons, has only appeared in the past decade.

Deep-learning systems are mainly defined by the fact that each important feature of the phenomenon to be learned is automatically recognized by the algorithm and each group of features is learned by a separate artificial neural layer.191 For example, in an image recognition system developed for human face recognition, different elements of the face, such as lines, eyes, mouths, and the general outline of the face, are learned by different layers. Deep learning-based methods have greatly improved performance in computer vision and natural language processing, and they are integrated into many of the technologies currently used (Fig. 3).

FIG. 3.

Deep-learning system developed for human face recognition. Source: https://www.quora.com/What-do-you-think-of-Deep-Learning-2

Digital Health

The term “Digital Health” (or d-Health) denotes the massive and ubiquitous use of information and communication technologies in the health, health care, and medicine fields.192 Digital Health covers the range of technologies used in health and medicine, from genome sequencing, including that of the microbes living in human organs such as the gut and the skin, to the use of smartphones for supporting online telemonitoring (exposome level). The main goals of Digital Health are to improve the follow-up and engagement of health care customers, in parallel with the optimization of resources and costs by health organizations and providers. As a part of the fourth digital revolution, Digital Health uses the internet of things (IoT) and business intelligence (BI) to deliver personalized health care and medicine services. Digital Health is also shifting health care from a paternalistic medicine, wherein physicians define and decide how to treat the patient, to a patient-centered one. Patient-centered, in the Digital Health context, means that electronic tools, both hardware and software, enhance the experience and engagement of health care customers by providing them with decision support tools for achieving better health outcomes, and by considering their way of life and constraints.193,194 Nevertheless, Digital Health reduces direct human–human interactions and thus may induce a dehumanization of health care. Within Digital Health, one subtopic has to be highlighted: the development of methods for improving the experience, engagement, and interactions of health care customers, practitioners, and other caregivers (such as the patient's family members), by considering the digital environment as another kind of point of care, similar to clinics, pharmacies, and hospitals.

One limitation to a dynamic and fast development of Digital Health lies in local regulations, whose objective is to keep health-related data and information confidential and safe, and to ensure data availability and integrity only for relevant individuals (patients and, when relevant, their relatives; professionals; and specific organizations). Digital Health is a full component of the Systems Medicine paradigm, as it allows a dynamic view of individuals from the nano-level (e.g., gene expression as a response to an environmental change) to the mega-level (e.g., population interactions and reactions, such as discussions on social networks, in response to an epidemic announcement).

Digital Twin

The concept of Digital Twin is a bridge between the virtual world and the physical world, where the latter can consist of a living system (i.e., an animal or a plant, an individual or a population) or a cyber-physical system (e.g., a biological process, a drug production line, a health monitoring service). A Digital Twin is a virtual or, more accurately, a computational representation of a real-world object.195 This kind of “duplicate” allows models to be designed, implemented, and tested in a virtual environment before, or instead of, performing these operations in a real-world context. From a Systems Medicine perspective, the Digital Twin allows building models of living systems (from the cell components level to the world population level, and thus from biological to epidemiological models) by using sociodemographic, biological, clinical, and communicational data collected by health care customers and caregivers (see Medical Informatics section) and/or generated by IoT objects (see the Digital Health section).196,197

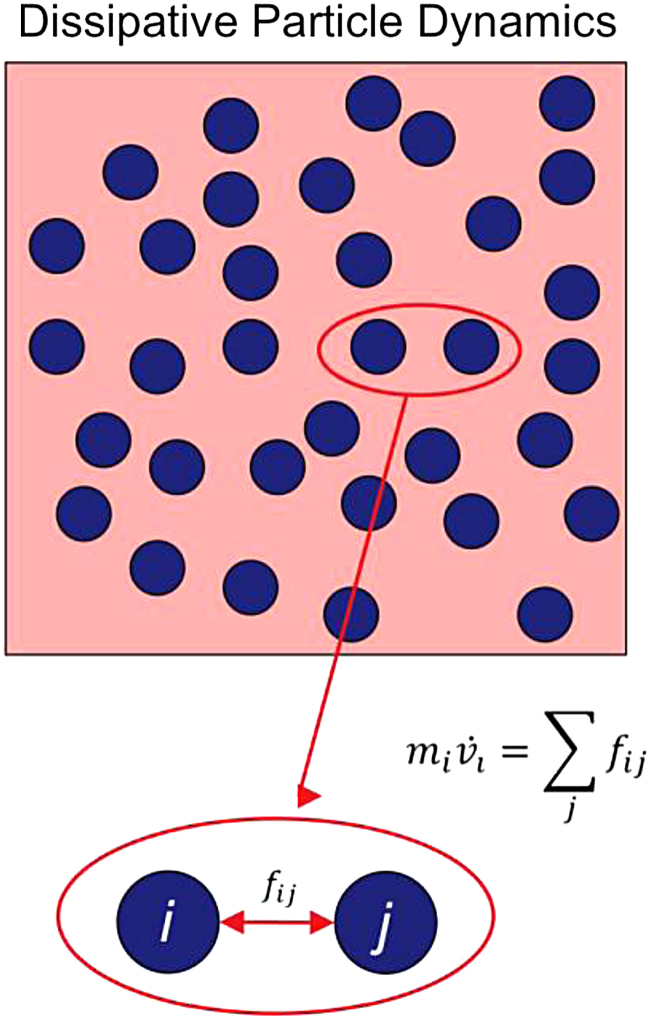

Dissipative particle dynamics

Dissipative particle dynamics (DPD) is a stochastic simulation technique used to study dynamical and rheological properties of fluids, both simple and complex. It involves a set of particles, representing clustered molecules or fluid regions, moving in a continuous space and at discrete time steps. This meso-scale approach disregards all atomistic details that are not considered relevant to the processes addressed. Internal degrees of freedom of particles are replaced by simplified pairwise dissipative and random forces, to conserve momentum locally and ensure a correct hydrodynamic behavior.
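As an illustration of the pairwise forces mentioned above, the following sketch evaluates the force between two DPD particles. It assumes a commonly used formulation (soft linear repulsion, dissipative weight equal to the square of the random weight, and noise amplitude tied to friction through the fluctuation-dissipation relation); the text does not specify these expressions, and the parameter values and function name are illustrative assumptions.

```python
import math
import random


def dpd_pair_force(r_i, r_j, v_i, v_j, a=25.0, gamma=4.5, kT=1.0,
                   r_c=1.0, dt=0.01, rng=random):
    """Force on particle i due to particle j; the force on j is its negative.

    a: repulsion strength, gamma: friction, kT: thermal energy,
    r_c: cutoff radius, dt: time step (all values are assumptions).
    """
    dx = [p - q for p, q in zip(r_i, r_j)]
    r = math.sqrt(sum(d * d for d in dx))
    if r >= r_c or r == 0.0:
        return [0.0] * len(dx)
    e = [d / r for d in dx]               # unit vector pointing from j to i
    w = 1.0 - r / r_c                     # random weight; dissipative weight is w**2
    v_rel = sum(ei * (vi - vj) for ei, vi, vj in zip(e, v_i, v_j))
    sigma = math.sqrt(2.0 * gamma * kT)   # fluctuation-dissipation relation
    conservative = a * w                  # soft repulsion
    dissipative = -gamma * w * w * v_rel  # friction on the relative velocity
    stochastic = sigma * w * rng.gauss(0.0, 1.0) / math.sqrt(dt)
    magnitude = conservative + dissipative + stochastic
    return [magnitude * ei for ei in e]


# One random number is drawn per pair, so applying the opposite force to the
# second particle conserves momentum locally.
random.seed(1)
f_i = dpd_pair_force((0.5, 0.0, 0.0), (0.0, 0.0, 0.0),
                     (1.0, 0.0, 0.0), (0.0, 0.0, 0.0))
f_j = [-c for c in f_i]
print(f_i, f_j)
```

Because all three contributions act along the line joining the two particles and are applied with opposite signs to each of them, the pair exchanges momentum without creating any, which is what ensures the correct hydrodynamic behavior.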

This technique facilitates the simulation of the statics and dynamics of complex fluids and soft matter systems. Its main drawback is the high computing power it requires, although this has been mitigated by combining the technique with high-performance computing.198

Among others, DPD can be used for modeling the transport of low-density lipoproteins (LDLs) through the arterial wall and analyzing plaque formation, where the force of attraction of oxidized LDL molecules to the wall is modeled in the DPD solution as a spring force with an experimentally determined coefficient199; for creating semicircular canal models with simplified geometry, showing the behavior of the fluid inside the canal, cupula deformation, and movement of otoconia particles to analyze benign paroxysmal positional vertigo200; or for modeling self-healing materials used for corrosion analysis and protection (Fig. 4).201

FIG. 4.

Schematic representation of a DPD model. DPD, dissipative particle dynamics.

Erdős–Rényi model

The Erdős–Rényi model is used to construct random graphs in which every edge, or link, exists with the same probability, independently of all the others. The model is usually denoted as $G(n, p)$, with n being the number of nodes and p the probability for any link to be present. Therefore, the model starts with n nodes, and each possible edge is included with probability p, independently of every other edge.
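The construction just described translates almost literally into code. The sketch below is a minimal, quadratic-time illustration; the function name `erdos_renyi` and the example parameters are assumptions made for this example.

```python
import random
from itertools import combinations


def erdos_renyi(n, p, seed=None):
    """Return the edge list of a random graph on nodes 0..n-1.

    Each of the n*(n-1)/2 possible edges is included independently
    with probability p.
    """
    rng = random.Random(seed)
    return [(i, j) for i, j in combinations(range(n), 2) if rng.random() < p]


# Hypothetical usage: the mean degree should be close to p * (n - 1).
n, p = 100, 0.05
edges = erdos_renyi(n, p, seed=42)
mean_degree = 2 * len(edges) / n
print(len(edges), mean_degree)
```

For large sparse graphs, dedicated libraries provide faster generators that avoid visiting every pair of nodes.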

The simplicity of this random network model makes it an ideal candidate for acting as null model in the normalization of network properties, although special care is required when the underlying real network is connected by construction, or has any other fixed characteristic.202

This simplicity also makes it possible to calculate analytically the expected characteristics of the graph as a function of n and p. Note that all these results are of a statistical nature: They hold with a probability that tends to one as n grows, so that counterexamples can always be found for finite n. Among others, the most well-known ones include203:

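If $np < 1$, then the graph will almost surely have no connected components of size larger than $O(\log n)$.

If $np = 1$, then the graph will almost surely have a largest component whose size is of order $n^{2/3}$.

If $p < (1 - \epsilon) \ln n / n$ for some $\epsilon > 0$, then the graph will almost surely be disconnected, that is, it will contain isolated nodes.

Conversely, if $p > (1 + \epsilon) \ln n / n$, then the graph will almost surely be connected.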
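These regimes can be explored numerically. The sketch below samples one graph on each side of the two classical thresholds, $np = 1$ and $p = \ln n / n$, and reports the size of the largest connected component. The helper names, the graph size, and the specific values of p are illustrative assumptions; since the results are probabilistic, a single finite sample may deviate from the asymptotic statements.

```python
import math
import random
from itertools import combinations


def largest_component(n, edges):
    """Size of the largest connected component, computed with union-find."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i, j in edges:
        parent[find(i)] = find(j)
    sizes = {}
    for node in range(n):
        root = find(node)
        sizes[root] = sizes.get(root, 0) + 1
    return max(sizes.values())


def sample_graph(n, p, rng):
    """Include each possible edge independently with probability p."""
    return [(i, j) for i, j in combinations(range(n), 2) if rng.random() < p]


rng = random.Random(0)
n = 500
threshold = math.log(n) / n
regimes = [
    ("np < 1", 0.5 / n),
    ("np = 1", 1.0 / n),
    ("p below ln(n)/n", 0.5 * threshold),
    ("p above ln(n)/n", 2.0 * threshold),
]
for label, p in regimes:
    size = largest_component(n, sample_graph(n, p, rng))
    print(f"{label}: largest component has {size} of {n} nodes")
```

Repeating the experiment over many samples and increasing n shows the sharpening of the transitions that the analytical results describe.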

Exposome

The exposome is a systems approach to the study of disease that takes into account the interaction of internal biological mechanisms with the environment, in other words, the interplay of genetic, epigenetic, and environmental factors. The concept was first introduced by Wild in 2005, and it encompasses both exogenous and endogenous components.204 A series of technological advances can be regarded as enabling technologies in this highly ambitious paradigm, including sensor networks that monitor air quality and make the data available, big data research, and progress in microbiome analysis and metabolomics.

The study of endocrine disruptors and their role in pregnancy is one of the examples of this approach.205,206 Other work relates to cancer, and chronic diseases at large, involving pollutants, metabolism, inflammation, and diet. There are large initiatives worldwide aiming at creating synergies and building knowledge in this new field of research, as, for instance: https://www.projecthelix.eu/, https://humanexposomeproject.com/, http://metasub.org/

Findability, Accessibility, Interoperability, and Reusability principles

In an open-science approach, which aims at making scientific research, data, and dissemination accessible, four principles for scientific data management and stewardship were defined by the Force11 working group (https://www.force11.org/): Findability, Accessibility, Interoperability, and Reusability (FAIR).207 The principles apply not only to data but also to algorithms, tools, and workflows. These objectives are now becoming expectations of funding agencies and publishers, regarding the use of contemporary data resources, tools, vocabularies, and infrastructures to assist research discovery and reuse by third parties.

Feature selection

In data analysis, the process of feature selection consists of applying algorithms designed to select a subset of features, from the original data set, for subsequent analysis. All other features are ideally irrelevant for the problem at hand, and they are thus disregarded.

Feature selection yields two main benefits. On one hand, even when the studied data set is not of a large size, it can help in data understanding, reducing training times, and improving prediction performance. On the other hand, feature selection is essential when the features outnumber the instances. To illustrate, domains such as gene and protein expression, chemistry or text classification are characterized by the limited availability of instances to train models—for example, a few patients and control subjects, a few complete textual records, etc. Refs.208,209 extensively review methods for feature selection.
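As a minimal sketch of selecting a subset of features when they outnumber the instances, the example below ranks features by the absolute value of their Pearson correlation with the class label and keeps the top k. This scoring rule, the function names, and the toy data are assumptions chosen only for illustration; the reviews cited above cover many alternative methods.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient; returns 0.0 for constant inputs."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def select_features(X, y, k):
    """Return the indices of the k features most correlated with y."""
    n_features = len(X[0])
    scores = [abs(pearson([row[j] for row in X], y)) for j in range(n_features)]
    ranked = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:k])


# Hypothetical data set: 4 instances (rows) described by 5 features (columns).
X = [
    [1, 5, 3, 9, 1],
    [2, 5, 1, 8, 3],
    [8, 5, 3, 2, 2],
    [9, 5, 1, 1, 4],
]
y = [0, 0, 1, 1]
selected = select_features(X, y, k=2)
print(selected)
```

Scoring each feature in isolation is fast, but it ignores interactions between features, so features that are only informative in combination may be discarded.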

Feature selection methods are usually classified in three different families:

Filters select subsets of variables, according to some rules, as a preprocessing step; in other words, this selection is not made taking into account the subsequent classification. One of the most relevant examples is the recursive feature elimination, based on iteratively constructing a classification model and removing features with low weights (i.e., of low relevance)—note that the classification model used here is independent from any subsequent classification. When features are added, instead of being eliminated, the result is a forward strategy.

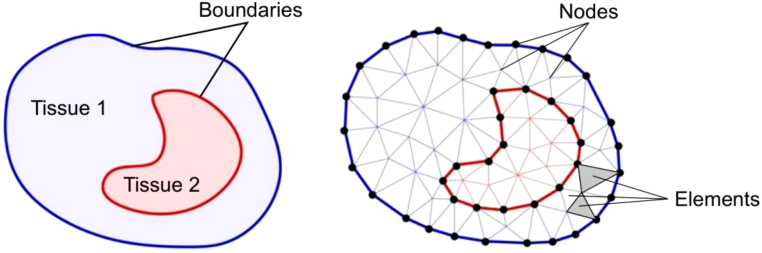

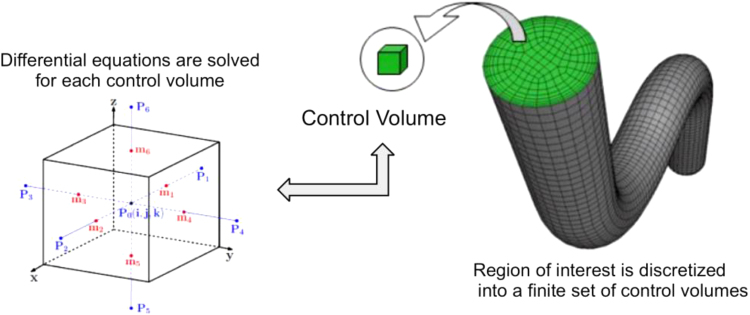

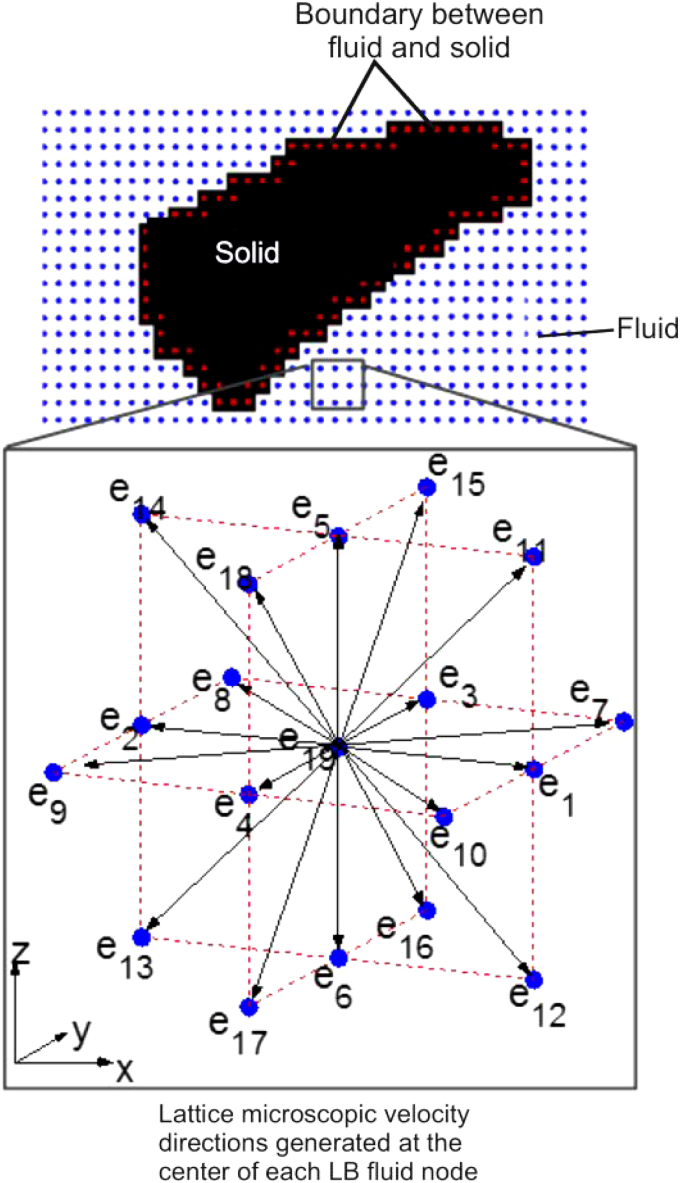

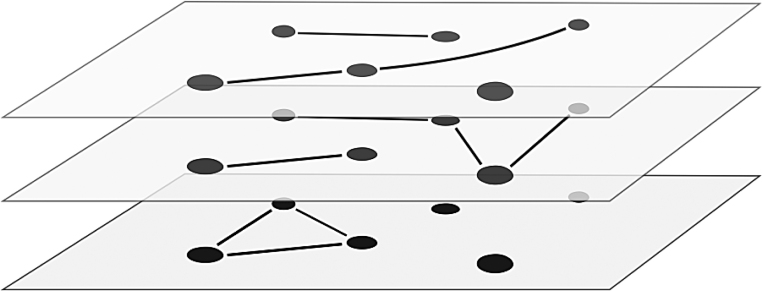

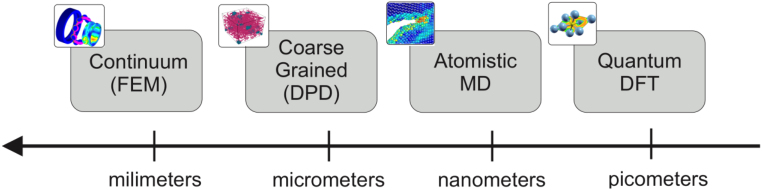

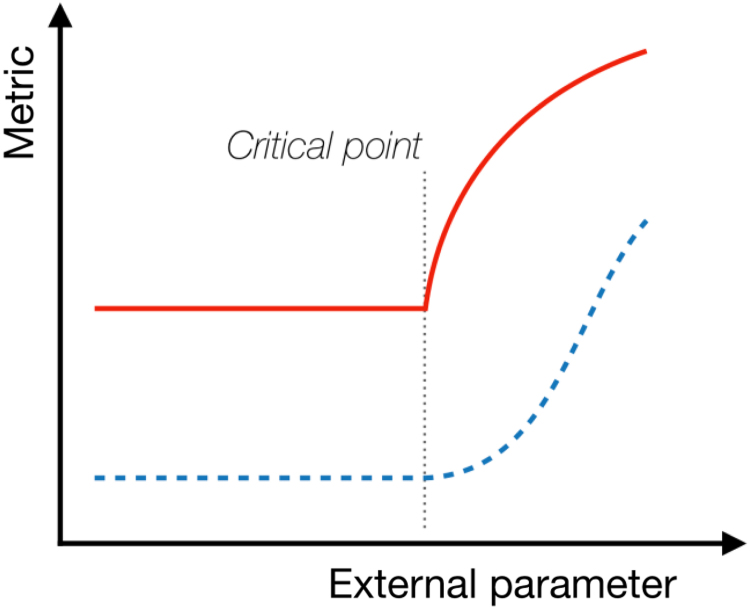

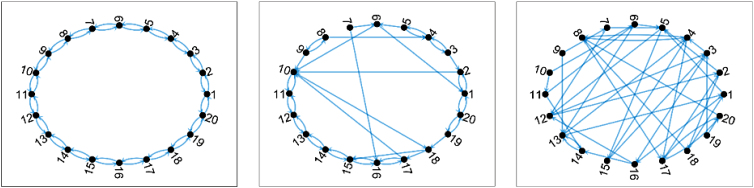

Wrappers assess subsets of features according to their usefulness to the subsequent classification problem. When the number of variables is small, this is done by evaluating all possible variable combinations; when this is not computationally feasible, a search heuristic is implemented instead. Note that here the machine-learning algorithm is taken as a black box, that is, it is only used to evaluate the features' predictive power. Wrappers can be computationally expensive and carry a risk of overfitting the model,210 in which case coarse search strategies may be applied.