Abstract

Background

Virtual reality (VR) simulators are playing an increasingly prominent role in orthopaedic training and education. Face validity, the degree to which a simulator accurately represents reality, underpins the value of a VR simulator as a learning tool for trainees. Despite the importance of tactile feedback in arthroscopy, there is a paucity of evidence regarding the role of haptics in the realism of VR arthroscopy simulators.

Purpose

To assess the difference in face validity between two high-fidelity VR simulators employing passive and active haptic feedback technology, respectively.

Method

Thirty-eight participants were recruited and divided into intermediate and expert groups based on orthopaedic training grade. Each participant completed a 14-point diagnostic knee arthroscopy VR module on both the active haptic Simbionix ARTHRO Mentor and the passive haptic VirtaMed ArthroS simulators, and then completed a validated simulator face validity questionnaire.

Results

The ARTHRO Mentor active haptic system failed to achieve face validity, with mean scores for external appearance (6.61), intra-articular appearance (4.78) and instrumentation (4.36) all falling below the acceptable threshold (≥7.0). The ArthroS passive haptic simulator achieved satisfactory scores in all domains (external appearance 8.42, intra-articular appearance 7.65, instrumentation 7.21) and was rated significantly (p < 0.001) more realistic than the ARTHRO Mentor in every domain. For questions on the realism of haptic feedback from intra-articular structures such as the menisci and ACL/PCL, 61% of participants gave scores ≥7.0 for the ArthroS vs. 12% for the ARTHRO Mentor. There was no significant difference in perceived face validity between the intermediate and expert groups for either simulator (p > 0.05).

Conclusion

Current active haptic technology, which employs motors to simulate tactile feedback, fails to demonstrate sufficient face validity or to match the sophistication of passive haptic systems in high-fidelity arthroscopy simulators. Textured rubber phantoms that mirror the anatomy and haptic properties of the knee joint provide a significantly more realistic training experience for both intermediate and expert arthroscopists.

Keywords: Arthroscopy, Simulation, Training, Virtual reality

1. Introduction

Surgical education has changed dramatically over the last century; with the advent of innovative technology and novel surgical techniques, the demands of training have only increased. However, restrictions on trainees' working hours have reduced operative learning opportunities, with up to 95% of surgical trainees in the United Kingdom failing to meet their required minimum clinical exposure.1, 2, 3 In orthopaedics, arthroscopy is one of the most commonly performed surgical procedures, yet it is technically challenging and requires substantial training to achieve satisfactory proficiency.4,5 The learning curve for arthroscopy is particularly steep at the outset, and inexperienced surgeons exhibit demonstrably longer operating times and higher complication rates than experienced arthroscopists.6, 7, 8

As a result, the use of virtual reality (VR) simulators to complement and enhance traditional surgical education has been increasingly explored, with VR arthroscopy simulators offering a novel and safe means of practising arthroscopy in a virtual rather than in vivo environment.9 The validity of a surgical simulator as an educational tool depends on both construct and face validity: the former describes whether the simulator's performance metrics genuinely reflect arthroscopic skill (for example, by distinguishing experienced from inexperienced operators), whilst the latter measures the degree of realism the simulator provides compared with a 'real-life' arthroscopy.

Whilst face validity is an inherently subjective measure, it strongly influences trainees' perception of a simulator's educational value and hence their engagement with the tool.10 If the aim of VR simulation in the surgical curriculum is to facilitate realistic training through guided, repeated practice in a safe virtual environment, then a simulator's face validity is a key consideration for assessors and trainees alike when deciding how best to leverage this technology to improve surgical outcomes.11

Early endoscopic VR simulators utilised 'active haptic' feedback, in which a small motor within the instrument provides vibrational tactile feedback in response to virtual instrument contact in the on-screen simulation. Compared with the high-fidelity sensory feedback of arthroscopy in vivo or on cadaveric specimens, active haptic feedback is crude and judged to be unrealistic by most orthopaedic trainees.12 The VirtaMed ArthroS™ (Zürich, Switzerland) simulator uniquely employs 'passive haptic' feedback technology, whereby the tactile feedback transmitted through the instruments results from physical contact with structures inside the joint model, whilst the action is accurately conveyed in the on-screen simulation. Passive haptic feedback is thought to resemble more closely the high-fidelity tactile sensation encountered during in vivo arthroscopy.

Construct validity has been established for both simulators in simulated knee, shoulder and hip environments by numerous studies and is well documented in the literature.13, 14, 15, 16, 17 Since both simulators demonstrate comparable construct validity, the difference in realism between them is likely to be the primary consideration when educators decide which simulator will provide the greatest educational value for trainees.

The purpose of this study was to determine the difference in face validity between active and passive haptic knee arthroscopy simulators, with a view to informing and improving arthroscopy simulation provision for orthopaedic trainees. Because the educational value of a surgical simulator is intrinsically linked to its perceived realism, this study also highlights the role of haptics in VR arthroscopy simulators.

2. Methods

2.1. Recruitment

Orthopaedic surgeons of all grades were invited to participate in this study. Participants were recruited by email through four regional teaching programmes covering five urban teaching hospitals and 13 district general hospitals. The study protocol was approved by our local Institutional Review Board (IRB), and all participants provided informed consent.

Thirty-eight participants completed the study; in ascending order of experience, these were orthopaedic registrars, fellows and orthopaedic consultants. We excluded novices with no prior arthroscopy experience, since they lack the first-hand reference needed to judge simulator realism and their ratings would therefore be invalid. This is in keeping with similar studies that evaluated the face validity of arthroscopy simulators using this questionnaire.18

A pre-study questionnaire was used to assess previous arthroscopic experience, with operative numbers extracted from e-logbook (Pan Surgical Electronic Logbook for the United Kingdom & Ireland) records. The numbers of diagnostic arthroscopies and simple arthroscopic procedures performed were recorded. Participants were then stratified into intermediates and experts based on their postgraduate year (PGY): intermediates (orthopaedic residents in training) were PGY 4–8, and experts (post-board-certification fellows or attending/consultant surgeons) were all PGY >8 (Table 1).

Table 1.

Demographic data for recruited participants.

| Participant Characteristics | Intermediate | Expert |
|---|---|---|
| Participants (n) | 25 | 13 |
| Age (years, mean ± SD) | 33 ± 4.9 | 38.3 ± 2.9 |
| Mean number of knee arthroscopies performed (n ± SD) | 146.8 ± 116.9 | 465.4 ± 290.1 |
| Mean number of shoulder arthroscopies performed (n ± SD) | 30.0 ± 24.3 | 73.8 ± 131.5 |
| Right-hand dominant participants (%) | 96.4 | 100 |
| Participants with previous simulator experience (%) | 46.4 | 61.5 |
| Participants who play video games (%) | 28.6 | 15.4 |

SD, standard deviation.

3. Simulator equipment

The VirtaMed ArthroS™ (Zürich, Switzerland) simulator consists of a touch-screen display monitor and a Windows PC with several interchangeable modules, including the VR FAST shell, knee, shoulder, hip and ACL reconstruction modules. The rubber and plastic intra-articular structures of the knee module (ACL, femoral/tibial cartilage, menisci) are textured to closely resemble and reproduce the tactile feedback of a real knee joint (Fig. 1). The simulator uses a passive haptic feedback system and arthroscopic instruments produced by Karl Storz (Tuttlingen, Germany). The physical interaction between the instruments and the intra-articular structures within the joint module is translated in real time into a VR image. Pressure sensors within the instruments detect excessive force and display this on screen, giving the user real-time feedback and analysis, e.g. alerting the user when chondral surfaces have been damaged whilst simultaneously reporting the percentage of the affected surface area.

Fig. 1.

VirtaMed ArthroS™ arthroscopy simulator (https://www.virtamed.com/en/aoa/).

The Simbionix ARTHRO Mentor™ (3D Systems, Littleton, USA) simulator features a display monitor, two robotic arms housing the instrumentation and a fully articulating knee joint model, alongside interchangeable polyurethane hip and shoulder modules (Fig. 2). This simulator provides active haptic feedback: a tactile vibration or sensation of resistance, generated by servo motors connected to the handheld instrumentation, in response to virtual actions on the screen. There are no physical intra-articular structures within the phantom knee joint.

Fig. 2.

3D Systems ARTHRO Mentor™ arthroscopy simulator (https://www.3dsystems.com/medical-simulators/simbionix-arthro-mentor).
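Active haptic simulators of this kind generally compute a force from the virtual collision and then drive the motors to render it. As a purely illustrative sketch, the Python below implements one textbook approach, penalty-based (virtual spring) force rendering against a single virtual sphere: when the instrument tip penetrates the surface by a depth d, the commanded force is k·d along the outward surface normal. The stiffness value, the sphere geometry and the function name are hypothetical, and nothing here describes the ARTHRO Mentor's proprietary algorithms.

```python
import numpy as np

# Hypothetical stiffness for illustration only; not an ARTHRO Mentor parameter.
STIFFNESS_N_PER_MM = 0.5  # virtual spring constant k (N/mm)


def penalty_force(tip_mm, centre_mm, radius_mm, k=STIFFNESS_N_PER_MM):
    """Force (N) pushing an instrument tip back out of a virtual sphere.

    Penalty-based rendering: if the tip lies inside the sphere by depth d,
    return k * d along the outward surface normal; otherwise return zero.
    """
    tip = np.asarray(tip_mm, dtype=float)
    centre = np.asarray(centre_mm, dtype=float)
    offset = tip - centre
    distance = np.linalg.norm(offset)
    depth = radius_mm - distance
    if depth <= 0 or distance == 0:
        # No contact, or tip exactly at the centre where the normal is undefined.
        return np.zeros(3)
    normal = offset / distance  # unit vector from centre towards the tip
    return k * depth * normal
```

For example, under the assumed stiffness a tip 1 mm inside a 10 mm sphere along the x-axis, `penalty_force([9, 0, 0], [0, 0, 0], 10.0)`, receives a 0.5 N push back along +x.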

Both simulators provide each participant with a login which allows tracking of performance and access to a database of instructional videos on arthroscopy.

4. Diagnostic knee arthroscopy

Each participant was randomly allocated to start on either the Simbionix ARTHRO Mentor™ (active) or the VirtaMed ArthroS™ (passive); after completing the first simulator assessment and post-arthroscopy questionnaire, the participant performed the same task on the other simulator.

All participants completed a 14-point diagnostic knee arthroscopy using the knee modules of the VirtaMed ArthroS and the Simbionix ARTHRO Mentor (Table 2). Participants completed the arthroscopy simulation three times on each simulator, giving them ample time and opportunity to familiarise themselves with each machine and scrutinise it closely. The task required a detailed inspection of the joint, including manipulation of the knee position (varus/valgus stress, flexion/extension) and use of a 30-degree arthroscope. Each intra-articular structure had to be fully visualised before the participant was permitted to progress through the arthroscopy.

Table 2.

14-point diagnostic knee arthroscopy task flow.

| Task Order | Visualisation task |
|---|---|
| 1 | Patella surface |
| 2 | Supra-patella pouch |
| 3 | Popliteus insertion |
| 4 | Trochlear groove |
| 5 | Medial recessus |
| 6 | Medial meniscus - anterior horn |
| 7 | Medial meniscus - intermediate |
| 8 | Medial meniscus - posterior horn |
| 9 | Posterior cruciate ligament |
| 10 | Anterior cruciate ligament - distal |
| 11 | Anterior cruciate ligament - proximal |
| 12 | Lateral meniscus - posterior horn |
| 13 | Lateral meniscus - intermediate |
| 14 | Lateral meniscus - anterior horn |
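The task flow in Table 2 has a fixed order, and visualisation was required before progression. Assuming that progression was gated step by step in the listed order (the paper does not describe the simulators' internal logic), the rule can be sketched as a sequential checklist. The class and method names are hypothetical.

```python
# Hypothetical sketch of the Table 2 task flow as a gated sequential checklist.
TASK_FLOW = [
    "Patella surface",
    "Supra-patella pouch",
    "Popliteus insertion",
    "Trochlear groove",
    "Medial recessus",
    "Medial meniscus - anterior horn",
    "Medial meniscus - intermediate",
    "Medial meniscus - posterior horn",
    "Posterior cruciate ligament",
    "Anterior cruciate ligament - distal",
    "Anterior cruciate ligament - proximal",
    "Lateral meniscus - posterior horn",
    "Lateral meniscus - intermediate",
    "Lateral meniscus - anterior horn",
]


class DiagnosticChecklist:
    """A step is credited only when it is the current (next required) step."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.completed = 0  # number of steps visualised so far

    @property
    def current_step(self):
        """Name of the next structure to visualise, or None when finished."""
        if self.completed < len(self.steps):
            return self.steps[self.completed]
        return None

    def mark_visualised(self, structure):
        """Credit a visualisation; return False if it is out of order or the task is over."""
        if self.current_step is None or structure != self.current_step:
            return False
        self.completed += 1
        return True

    def finished(self):
        return self.completed == len(self.steps)
```

Under this reading, trying to credit the trochlear groove before the patella surface has been visualised is simply refused.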

All participants were individually supervised by the principal investigator (KV) and a maximum time limit of 20 min was set for the completion of each simulator-based arthroscopy.

5. Evaluation

To assess face validity (the degree to which the simulator represents reality), each participant completed a validated post-arthroscopy questionnaire of 15 questions evaluating their perception of the simulator's outer appearance, the realism of the intra-articular joint and the instrumentation (Table 3). An additional four questions on user-friendliness were also administered as part of the questionnaire (Table 4). Each question was answered on a 10-point numerical scale, where a score of "1" denotes complete unrealism and a score of "10" denotes complete or 'life-like' realism.18,19 In line with previous studies, a mean score of ≥7.0 was required to declare satisfactory face validity.18
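One reading of this scoring rule, sketched in Python (the language reported for the study's analysis): each participant's domain score is the mean of that domain's questions, a domain reaches face validity when the group mean is ≥7.0, and the "percentage of participants giving a sufficient score" is the share whose own domain mean is ≥7.0. The question-to-domain mapping follows Tables 3, 4 and 6; the function names and array layout are assumptions, not the authors' analysis code.

```python
import numpy as np

# Question-to-domain mapping, numbered 1-19 as in Table 6.
DOMAINS = {
    "Outer appearance": range(1, 4),             # questions 1-3
    "Intra-articular appearance": range(4, 10),  # questions 4-9
    "Instrumentation": range(10, 16),            # questions 10-15
    "User-friendliness": range(16, 20),          # questions 16-19
}
THRESHOLD = 7.0  # mean score required for satisfactory face validity


def domain_means(responses):
    """Per-participant mean score in each domain.

    responses: array-like of shape (n_participants, 19), scores on the 1-10 scale.
    """
    r = np.asarray(responses, dtype=float)
    return {name: r[:, [q - 1 for q in qs]].mean(axis=1) for name, qs in DOMAINS.items()}


def summarise(responses):
    """For each domain: (group mean, % of participants >= threshold, meets threshold?)."""
    summary = {}
    for name, per_participant in domain_means(responses).items():
        group_mean = float(per_participant.mean())
        pct_sufficient = 100.0 * float(np.mean(per_participant >= THRESHOLD))
        summary[name] = (group_mean, pct_sufficient, group_mean >= THRESHOLD)
    return summary
```

Note that a domain can pass on the group mean while a sizeable minority of participants still score it below 7.0, which is why the Results report both figures.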

Table 3.

Participant post-arthroscopy questionnaire (Face Validity).

| Face Validity Aspect | Question |
|---|---|
| Outer appearance | 1. To what extent do you think the outer appearance of the knee simulator represents reality? |
| | 2. Is it clear which joint you are operating on? |
| | 3. Is it clear which portals are being used? |
| Intra-articular joint | 4. How realistic is intra-articular anatomy? |
| | 5. How realistic is the texture of the structures? |
| | 6. How realistic is the colour of the structures? |
| | 7. How realistic is the size of the structures? |
| | 8. How realistic is the size of the intra-articular joint space? |
| | 9. How realistic is the arthroscopic image? |
| Instruments | 10. How realistic do the instruments look? |
| | 11. How realistic is the motion of the instruments? |
| | 12. How realistic does the cartilage feel when you are probing (femoral condyle, tibial plateau)? |
| | 13. How realistic do the intra-articular ligaments feel (ACL, PCL)? |
| | 14. How realistic does the meniscus feel? |
| | 15. How realistic is the feedback from the intra-articular structures? |

Table 4.

Participant post-arthroscopy questionnaire (User-friendliness).

| User-friendliness Question |
|---|
| 1. How clear are the instructions to start an exercise on the simulator? |
| 2. How clear is the presentation of your performance on the simulator? |
| 3. Is it clear how you can improve your performance? |
| 4. How motivating is the way the results are presented? |

In an effort to focus participants’ attention and improve their rating accuracy, all participants were given a copy of the questionnaire prior to performing the arthroscopy so that they could familiarise themselves with the questions and domains to be assessed.

6. Statistical analysis

All statistical analyses were conducted in Python (version 3.7.4, Python Software Foundation, Delaware, United States) using NumPy (version 1.16.5) and SciPy (version 1.4.1). Normality of the data distribution was assessed using the Kolmogorov-Smirnov test. Differences in face validity and user-friendliness between simulators were assessed using Welch's (unequal variances) t-test. Significance was set at p ≤ 0.05.
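A minimal sketch of these two tests with SciPy's `scipy.stats.kstest` and `scipy.stats.ttest_ind(..., equal_var=False)`, the latter being SciPy's Welch t-test. The function name, the choice to test normality against a normal distribution with each sample's own mean and SD, and the return layout are assumptions, since the paper does not report those details.

```python
import numpy as np
from scipy import stats


def compare_groups(a, b, alpha=0.05):
    """Normality check plus Welch's t-test for two samples of domain scores."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)

    # Kolmogorov-Smirnov test of each sample against a normal distribution with
    # the sample's own mean and SD. Estimating these from the data makes the
    # p-value conservative; the Lilliefors variant corrects for this.
    ks_p = [
        stats.kstest(x, "norm", args=(x.mean(), x.std(ddof=1))).pvalue
        for x in (a, b)
    ]

    # Welch's t-test: two-sample t-test that does not assume equal variances.
    result = stats.ttest_ind(a, b, equal_var=False)
    return {
        "ks_p": ks_p,
        "t": float(result.statistic),
        "p": float(result.pvalue),
        "significant": bool(result.pvalue <= alpha),
    }
```

The same function applies unchanged to the intermediate-vs-expert comparisons; its p values can then be passed to a multiple-comparison correction.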

We used the Holm-Bonferroni method to adjust the level of significance required for a positive test, to account for the number of tests performed (family-wise alpha = 0.05).20
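The Holm-Bonferroni step-down procedure fits in a few lines of plain Python: sort the m p values in ascending order, compare the k-th smallest (counting from k = 0) with alpha / (m − k), and stop at the first comparison that fails. This is a generic sketch of the published procedure, not the authors' code; the function name is hypothetical.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return a list of booleans, True where the null hypothesis is rejected.

    Step-down procedure: visit p values from smallest to largest, rejecting
    while p <= alpha / (m - k); at the first failure, that hypothesis and all
    larger p values are retained.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for k, i in enumerate(order):
        if p_values[i] <= alpha / (m - k):
            reject[i] = True
        else:
            break
    return reject
```

Applied to the four passive-simulator intermediate-vs-expert p values in Table 5 (0.670, 0.186, 0.071, 0.155), it rejects none, consistent with the reported absence of significant differences.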

7. Results

7.1. Demographics

Thirty-eight participants were recruited and consented to this study: 13 experts and 25 intermediates (Table 1).

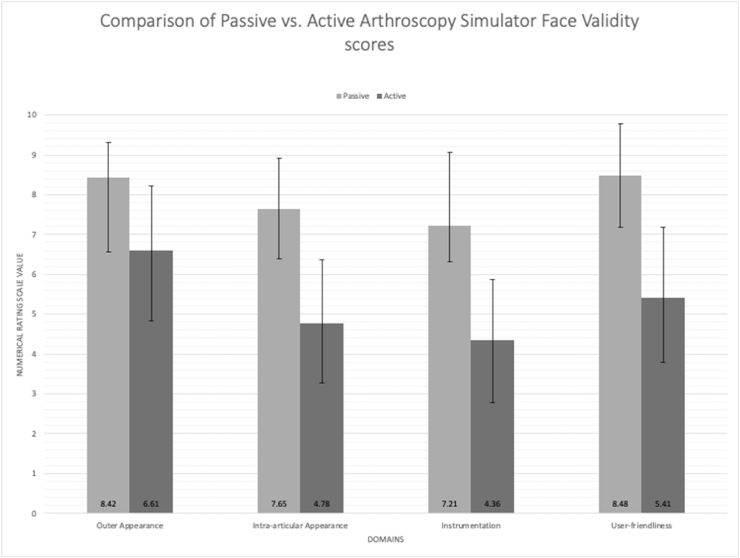

8. Main results - passive vs active

The results indicate that the ArthroS VR simulator demonstrates satisfactory (≥7.0) face validity in all assessed domains, whilst the ARTHRO Mentor failed to achieve acceptable realism in any domain when judged by a range of orthopaedic surgeons. This supports our hypothesis that passive haptic systems provide a more realistic training experience for surgeons than relatively low-fidelity active haptic systems. In addition, the ArthroS scored significantly (p < 0.001) higher than the ARTHRO Mentor in every face validity domain, suggesting a clear preference amongst our study participants.

The mean combined face validity scores for 'outer appearance' were 8.42 (±0.89) and 6.61 (±1.61) for the passive and active simulators, respectively (Fig. 3). In this domain, 95% of participants gave the passive simulator a score ≥7.0 (indicating sufficient realism), compared with 47% for the active simulator (Table 5).

Fig. 3.

Comparative mean results of the passive and active arthroscopy simulator questionnaire scores. Error bars denote standard deviation. All pairwise comparisons shown below are significantly different (p < 0.001).

Table 5.

Mean domain-based scores for face validity by experience and simulator.

| Domain | Passive haptic: Intermediates | Passive haptic: Experts | Passive haptic: p (Intermediate vs Expert) | Passive haptic: Combined | Active haptic: Intermediates | Active haptic: Experts | Active haptic: p (Intermediate vs Expert) | Active haptic: Combined | p (Combined passive vs combined active) |
|---|---|---|---|---|---|---|---|---|---|
| Outer appearance | 8.37 ± 0.87 | 8.51 ± 0.98 | 0.670 | 8.42 ± 0.89 | 6.49 ± 1.58 | 6.82 ± 1.76 | 0.579 | 6.61 ± 1.61 | <0.001 |
| Intra-articular appearance | 7.48 ± 1.42 | 7.99 ± 0.88 | 0.186 | 7.65 ± 1.26 | 4.67 ± 1.70 | 4.97 ± 1.46 | 0.573 | 4.78 ± 1.59 | <0.001 |
| Instrumentation | 6.91 ± 1.91 | 7.79 ± 1.03 | 0.071 | 7.21 ± 1.68 | 4.15 ± 1.73 | 4.76 ± 0.95 | 0.247 | 4.36 ± 1.50 | <0.001 |
| User-friendliness | 8.28 ± 1.34 | 8.87 ± 1.08 | 0.155 | 8.48 ± 1.30 | 5.33 ± 1.64 | 5.52 ± 2.08 | 0.778 | 5.41 ± 1.77 | <0.001 |

Values are mean ± standard deviation; p values are from Welch's t-test.

The intra-articular appearance domain scored a combined mean of 7.65 (±1.26) and 4.78 (±1.59) for the passive and active simulators, respectively (Fig. 3). In this domain, 76% of participants gave the passive simulator a score ≥7.0, compared with 11% for the active simulator.

Mean combined face validity scores for the instrumentation domain were 7.21 (±1.68) and 4.36 (±1.50) for the passive and active simulators, respectively (Fig. 3). A sufficient combined mean score was given by 55% of participants for the passive simulator, versus 8% for the active simulator. None of the individual questions pertaining to haptic feedback (questions 12–15) achieved a mean score ≥7.0 for either the passive (range of means: 6.58–6.89) or the active (range of means: 3.74–4.19) simulator (Table 5, Table 6).

Table 6.

Combined (intermediate + expert) mean scores for individual questions.

| Question | Active haptic simulator: mean ± SD | Passive haptic simulator: mean ± SD | Active haptic simulator: % giving a satisfactory score (≥7) | Passive haptic simulator: % giving a satisfactory score (≥7) |
|---|---|---|---|---|
| Outer appearance | | | | |
| 1. To what extent do you think the outer appearance of the knee simulator represents reality? | 5.95 ± 1.82 | 7.79 ± 1.09 | 32 | 84 |
| 2. Is it clear which joint you are operating on? | 7.00 ± 2.19 | 9.08 ± 1.10 | 58 | 100 |
| 3. Is it clear which portals are being used? | 6.87 ± 2.34 | 8.39 ± 1.46 | 55 | 89 |
| Intra-articular appearance | | | | |
| 4. How realistic is the intra-articular anatomy? | 4.92 ± 2.12 | 8.00 ± 1.38 | 21 | 89 |
| 5. How realistic is the texture of the structures? | 4.34 ± 2.03 | 6.74 ± 2.27 | 16 | 66 |
| 6. How realistic is the colour of the structures? | 4.66 ± 2.00 | 7.63 ± 1.48 | 21 | 87 |
| 7. How realistic is the size of the structures? | 5.32 ± 1.66 | 8.11 ± 1.61 | 26 | 92 |
| 8. How realistic is the size of the intra-articular joint space? | 4.92 ± 2.01 | 7.58 ± 1.57 | 26 | 82 |
| 9. How realistic is the arthroscopic image? | 4.50 ± 1.80 | 7.87 ± 1.47 | 13 | 87 |
| Instrumentation | | | | |
| 10. How realistic do the instruments look? | 5.42 ± 2.04 | 8.21 ± 1.34 | 34 | 84 |
| 11. How realistic is the motion of the instruments? | 4.79 ± 1.76 | 7.92 ± 6.58 | 13 | 87 |
| 12. How realistic does the cartilage feel when you are probing (femoral condyle, tibial plateau)? | 4.18 ± 2.10 | 6.58 ± 2.39 | 11 | 63 |
| 13. How realistic do the intra-articular ligaments feel (ACL, PCL)? | 4.11 ± 2.00 | 6.89 ± 2.18 | 16 | 58 |
| 14. How realistic does the meniscus feel? | 3.74 ± 1.67 | 6.79 ± 2.30 | 8 | 55 |
| 15. How realistic is the feedback from the intra-articular structures? | 3.89 ± 2.01 | 6.87 ± 2.22 | 11 | 66 |
| User-friendliness | | | | |
| 16. How clear are the instructions to start an exercise on the simulator? | 5.87 ± 1.88 | 8.42 ± 1.50 | 32 | 89 |
| 17. How clear is the presentation of your performance on the simulator? | 5.24 ± 1.81 | 8.74 ± 1.37 | 24 | 87 |
| 18. Is it clear how you can improve your performance? | 5.13 ± 2.17 | 8.29 ± 1.37 | 29 | 87 |
| 19. How motivating is the way the results are presented? | 5.34 ± 1.91 | 8.47 ± 1.33 | 24 | 89 |

User-friendliness domain scores were 8.48 (±1.30) for the passive simulator and 5.41 (±1.77) for the active simulator (Fig. 3). In this domain, 87% of participants gave the passive simulator a sufficient combined mean score, compared with 24% for the active simulator (Table 5).

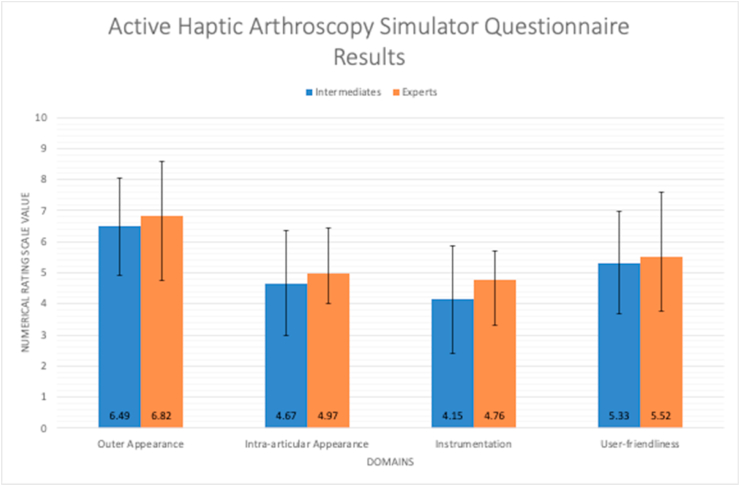

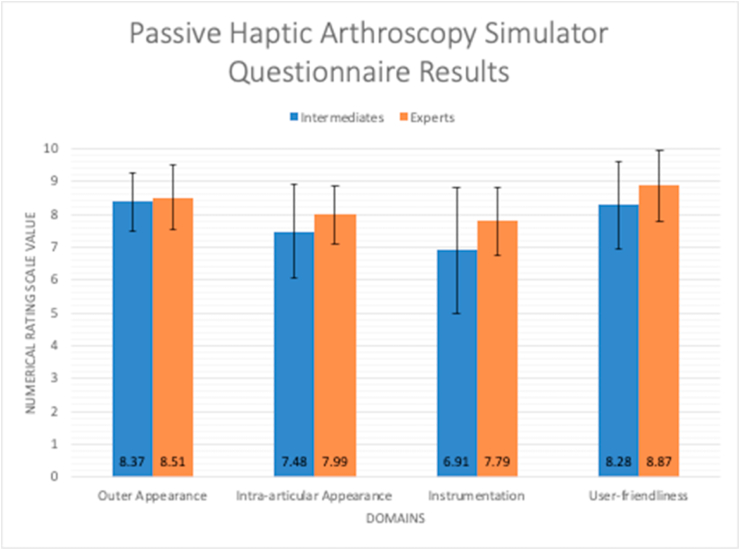

9. Intermediates vs experts

On average, the expert group rated both simulators more highly than the intermediate group in every domain; however, none of these differences was statistically significant (p > 0.05) (Fig. 4, Fig. 5; Table 5).

Fig. 4.

Comparative mean scores of the active haptic arthroscopy simulator questionnaire for intermediates and experts. Error bars denote standard deviation. None of the pairwise comparisons below were significantly different (p > 0.05).

Fig. 5.

Comparative mean scores of the passive haptic arthroscopy simulator questionnaire for intermediates and experts. Error bars denote standard deviation. None of the pairwise comparisons below were significantly different (p > 0.05).

10. Discussion

The most important finding of this study is that surgeons prefer passive over active haptic feedback systems in virtual reality arthroscopy simulators. This corroborates and extends the findings of several other studies that have demonstrated partial face validity of the VirtaMed ArthroS in both simulated knee and shoulder settings.17,19,21

The results of our study extend those of Martin et al. (2016), who evaluated face validity scores for three arthroscopy simulators (ArthroS, ARTHRO Mentor, ArthroSim) using the same questionnaire but found no statistically significant difference between the ArthroS and ARTHRO Mentor in any domain. Consistent with our findings, Martin et al. reported that >70% of participants gave sufficient mean face validity scores for the ArthroS; and whilst the ARTHRO Mentor failed to achieve comparably favourable ratings, its scores were nevertheless considerably more positive than in our study. This discrepancy may be explained by the inclusion of novices in Martin et al.'s study, as well as its markedly smaller sample size.

Whilst the passive haptic simulator achieved an overall combined score of 7.21 (±1.68) for the instrumentation domain, all of the metrics explicitly tied to haptic feedback from cartilage and soft-tissue structures (questions 12–15) achieved mean scores just below 7.0 (Table 6). Therefore, whilst the tactile feedback from intra-articular structures provided by the ArthroS lies on the cusp of sufficient realism, it remains unsatisfactory according to the arbitrary cut-off set by the questionnaire, in keeping with the findings of similar studies.16,17 Nevertheless, passive haptic feedback was judged superior to active haptic feedback mechanisms in the context of VR arthroscopy, which suggests that it may enhance the educational value of the simulator and, in turn, the operative precision of trainees in the operating room.22

VR simulators employing active haptic systems rely on a complex interface of positional servo motors and haptic vibration transducers to alert the user when they 'touch' a solid virtual surface within the simulation, e.g. scratching a meniscus with the instrument. However, despite significant technological advances, this type of haptic feedback remains crude: it cannot imitate the full incremental range of force feedback, nor can it recreate the intricate topology of the knee joint to a satisfactory level.23 Our results indicate that tactile feedback provided by a physical counterpart to the virtual environment, in the form of a phantom knee joint, is judged to be more realistic than the servo-generated force feedback, driven by complex collision algorithms, that active haptic systems employ. This appreciable difference in realism is likely due to sophisticated space-warping technology, which accurately conveys physical contact in the virtual simulation when the user-guided instrument touches the knee model in the real world.24 This is in effect a mixed reality system, which benefits from the confluence of the physical and virtual worlds to provide a much higher degree of immersion for the user and is therefore perceived as more realistic.
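Space warping is described here only at a high level. To illustrate the underlying idea, that a tracked position on the physical phantom must be mapped onto the corresponding location in the virtual anatomy, the sketch below fits a single least-squares affine map from corresponding landmark pairs (at least four non-coplanar points) and applies it to an instrument-tip position. This is a deliberately crude, hypothetical stand-in: the function names and landmark data are assumptions, a full space-warping scheme would typically require a smooth non-rigid deformation, and this is not VirtaMed's algorithm.

```python
import numpy as np


def fit_affine(physical_pts, virtual_pts):
    """Least-squares affine map A (3x4) such that virtual ≈ A @ [x, y, z, 1]."""
    P = np.asarray(physical_pts, dtype=float)
    V = np.asarray(virtual_pts, dtype=float)
    # Homogeneous coordinates: a column of ones carries the translation term.
    P_h = np.hstack([P, np.ones((P.shape[0], 1))])
    # Solve P_h @ X ≈ V for X (4x3) in the least-squares sense.
    X, *_ = np.linalg.lstsq(P_h, V, rcond=None)
    return X.T  # 3x4


def warp_point(A, physical_tip):
    """Map a tracked physical instrument-tip position into the virtual model."""
    p = np.append(np.asarray(physical_tip, dtype=float), 1.0)
    return A @ p
```

Here `physical_pts` would be fiducial points digitised on the phantom and `virtual_pts` the matching vertices on the virtual knee model.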

Although it would be reasonable to assume that perception of realism might differ with level of experience, we did not find any statistically significant differences between the face validity scores of the intermediate and expert groups. Whilst the expert group had performed many more arthroscopies prior to the study than the intermediate group, it stands to reason that both groups had a fundamentally similar appreciation of simulator verisimilitude (what a 'real' arthroscopy should feel like) despite a quantitative difference in arthroscopy experience. It should be noted, however, that the majority of participants in the intermediate group had completed >100 arthroscopies (mean: 146.8; Table 1), which would place them in the expert bracket according to the criteria used by similar studies.14, 15, 16,18 This also means that early-stage trainees may have been underrepresented in our sample. Nevertheless, on average both our intermediate and expert groups were very experienced arthroscopists, which lends further credence to our results, since our primary outcome measure, simulator face validity, inherently requires prior arthroscopy experience to assess.

11. Limitations

The primary limitation of this study was its reliance on inherently subjective evaluations of simulator realism, by candidates with varying levels of arthroscopy experience, to determine face validity. Whilst we used a validated questionnaire that has been used extensively in VR arthroscopy simulator research,18,19,25 participants may not have interpreted the questionnaire as intended, with most raters avoiding the extremes of the Likert scale and tending to agree with the statements provided. In addition, although we aimed to recruit a balanced proportion of arthroscopists from different hospitals and career stages, recruitment bias cannot be excluded: participation was voluntary, and most participants were interested in the application of VR simulators in surgical education, which may have skewed their ratings in favour of simulators. Furthermore, some participants were not simulator-naive and had previous experience with arthroscopy simulators (61.5% of experts vs. 46.4% of intermediates), which may have tempered their expectations of simulator realism. Despite these potential distortions, our study still showed a significant (p < 0.001) difference in face validity scores between the two simulators.

Due to time and resource limitations, this study only compared face validity for a simulated diagnostic knee arthroscopy; other training modules were not assessed, so our conclusions may not be generalisable to other simulator tasks.

Finally, the nature of arthroscopy simulator research limits the recruitment of large numbers of participants for practical reasons, and our study is no exception. A pragmatic approach was taken; nevertheless, one of the key limitations of our study is the relatively small number of experts compared with intermediates. However, although this study is limited by its sample size, to our knowledge it has the largest sample of any published study directly comparing face validity between different high-fidelity VR arthroscopy simulators.18,19

12. Future research

At present, there is no commercially available VR arthroscopy simulator that allows training in portal placement, a key component of any arthroscopic or laparoscopic operation and one that inherently relies on the surgeon's appreciation of haptic feedback. Chae et al. have developed a novel cable-driven active haptic simulator to address this gap; however, the construct and face validity of this simulator remain unclear.26

13. Conclusion

Current active haptic technology, which employs motors to simulate tactile feedback, fails to demonstrate sufficient face validity or to match the sophistication of passive haptic systems in high-fidelity arthroscopy simulators. Textured rubber and plastic phantoms that mirror the anatomy and haptic properties of the knee joint, when coupled with advanced motion-sensing technology, provide a significantly more realistic training experience for both intermediate and expert arthroscopists. This supports the case for implementing passive haptic technology in VR simulators for orthopaedic trainees looking to develop their knee arthroscopy skills.

References

- 1.Bota Collaborators, Rashid M.S. An audit of clinical training exposure amongst junior doctors working in Trauma & Orthopaedic Surgery in 101 hospitals in the United Kingdom. BMC Med Educ. 2018;18:1. doi: 10.1186/s12909-017-1038-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Gmc . 2019. The State of Medical Education and Practice in the UK. [Google Scholar]

- 3.Wilson T., Sahu A., Johnson D.S., Turner P.G. The effect of trainee involvement on procedure and list times: a statistical analysis with discussion of current issues affecting orthopaedic training in UK. Surgeon. 2010;8:15–19. doi: 10.1016/j.surge.2009.10.013. [DOI] [PubMed] [Google Scholar]

- 4.Kim S., Bosque J., Meehan J.P., Jamali A., Marder R. Increase in outpatient knee arthroscopy in the United States: a comparison of national surveys of ambulatory surgery, 1996 and 2006. J Bone Joint Surg Am. 2011;93:994–1000. doi: 10.2106/JBJS.I.01618. [DOI] [PubMed] [Google Scholar]

- 5.Irani J.L., Mello M.M., Ashley S.W., Whang E.E., Zinner M.J., Breen E. Surgical residents’ perceptions of the effects of the ACGME duty hour requirements 1 year after implementation. Surgery. 2005;138:246–253. doi: 10.1016/j.surg.2005.06.010. [DOI] [PubMed] [Google Scholar]

- 6.Konan S., Rhee S.-J., Haddad F.S. Hip arthroscopy: analysis of a single surgeon’s learning experience. J Bone Joint Surg Am. 2011;93(Suppl 2):52–56. doi: 10.2106/JBJS.J.01587. [DOI] [PubMed] [Google Scholar]

- 7.Nawabi D.H., Mehta N., Chamberlin P. Learning curve for hip arthroscopy steeper than expected. J Hip Preserv Surg. 2016;3 doi: 10.1093/jhps/hnw030.007. [DOI] [Google Scholar]

- 8.Farnworth L.R., Lemay D.E., Wooldridge T. A comparison of operative times in arthroscopic ACL reconstruction between orthopaedic faculty and residents: the financial impact of orthopaedic surgical training in the operating room. Iowa Orthop J. 2001;21:31–35. [PMC free article] [PubMed] [Google Scholar]

- 9.Marcheix P.-S., Vergnenegre G., Dalmay F., Mabit C., Charissoux J.-L. Learning the skills needed to perform shoulder arthroscopy by simulation. Orthop Traumatol Surg Res. 2017;103:483–488. doi: 10.1016/j.otsr.2017.02.008. [DOI] [PubMed] [Google Scholar]

- 10.Karahan M., Kerkhoffs G.M.M.J., Randelli P., Tuijthof G.J.M., editors. Effective Training of Arthroscopic Skills. Springer; Berlin, Heidelberg: 2015. [Google Scholar]

- 11. Cannon W.D., Nicandri G.T., Reinig K., Mevis H., Wittstein J. Evaluation of skill level between trainees and community orthopaedic surgeons using a virtual reality arthroscopic knee simulator. J Bone Joint Surg Am. 2014;96:e57. doi: 10.2106/JBJS.M.00779.

- 12. Mabrey J.D., Gillogly S.D., Kasser J.R. Virtual reality simulation of arthroscopy of the knee. Arthroscopy. 2002;18:E28. doi: 10.1053/jars.2002.33790.

- 13. Jacobsen M.E., Andersen M.J., Hansen C.O., Konge L. Testing basic competency in knee arthroscopy using a virtual reality simulator: exploring validity and reliability. J Bone Joint Surg Am. 2015;97:775–781. doi: 10.2106/JBJS.N.00747.

- 14. Khanduja V., Lawrence J.E., Audenaert E. Testing the construct validity of a virtual reality hip arthroscopy simulator. Arthroscopy. 2017;33:566–571. doi: 10.1016/j.arthro.2016.09.028.

- 15. Rahm S., Germann M., Hingsammer A., Wieser K., Gerber C. Validation of a virtual reality-based simulator for shoulder arthroscopy. Knee Surg Sports Traumatol Arthrosc. 2016;24:1730–1737. doi: 10.1007/s00167-016-4022-4.

- 16. Fucentese S.F., Rahm S., Wieser K., Spillmann J., Harders M., Koch P.P. Evaluation of a virtual-reality-based simulator using passive haptic feedback for knee arthroscopy. Knee Surg Sports Traumatol Arthrosc. 2015;23:1077–1085. doi: 10.1007/s00167-014-2888-6.

- 17. Garfjeld Roberts P., Guyver P., Baldwin M. Validation of the updated ArthroS simulator: face and construct validity of a passive haptic virtual reality simulator with novel performance metrics. Knee Surg Sports Traumatol Arthrosc. 2017;25:616–625. doi: 10.1007/s00167-016-4114-1.

- 18. Tuijthof G.J.M., Visser P., Sierevelt I.N., Van Dijk C.N., Kerkhoffs G.M.M.J. Does perception of usefulness of arthroscopic simulators differ with levels of experience? Clin Orthop Relat Res. 2011;469:1701–1708. doi: 10.1007/s11999-011-1797-y.

- 19. Martin K.D., Akoh C.C., Amendola A., Phisitkul P. Comparison of three virtual reality arthroscopic simulators as part of an orthopedic residency educational curriculum. Iowa Orthop J. 2016;36:20–25.

- 20. Holm S. A simple sequentially rejective multiple test procedure. Scand J Stat. 1979;6:65–70.

- 21. Stunt J.J., Kerkhoffs G.M.M.J., van Dijk C.N., Tuijthof G.J.M. Validation of the ArthroS virtual reality simulator for arthroscopic skills. Knee Surg Sports Traumatol Arthrosc. 2015;23:3436–3442. doi: 10.1007/s00167-014-3101-7.

- 22. Panait L., Akkary E., Bell R.L., Roberts K.E., Dudrick S.J., Duffy A.J. The role of haptic feedback in laparoscopic simulation training. J Surg Res. 2009;156:312–316. doi: 10.1016/j.jss.2009.04.018.

- 23. Kusins J.R., Strelzow J.A., LeBel M.-E., Ferreira L.M. Development of a vibration haptic simulator for shoulder arthroplasty. Int J Comput Assist Radiol Surg. 2018;13:1049–1062. doi: 10.1007/s11548-018-1734-6.

- 24. Spillmann J., Tuchschmid S., Harders M. Adaptive space warping to enhance passive haptics in an arthroscopy surgical simulator. IEEE Trans Vis Comput Graph. 2013;19:626–633. doi: 10.1109/TVCG.2013.23.

- 25. Bartlett J.D., Lawrence J.E., Khanduja V. Virtual reality hip arthroscopy simulator demonstrates sufficient face validity. Knee Surg Sports Traumatol Arthrosc. 2019;27:3162–3167. doi: 10.1007/s00167-018-5038-8.

- 26. Chae S., Jung S.-W., Park H.-S. In vivo biomechanical measurement and haptic simulation of portal placement procedure in shoulder arthroscopic surgery. PLoS One. 2018;13:e0193736. doi: 10.1371/journal.pone.0193736.