Abstract

This research aimed to demonstrate innovation in an evaluation model, the Description-Input-Verification-Action-Yack-Analysis-Nominate-Actualization (DIVAYANA) model, for evaluating the implementation of information technology-based learning at ICT vocational schools. The model can be used to determine the priority of recommendations given to policy-makers so that they can make decisions that optimize the implementation of information technology-based learning at ICT vocational schools. This was development research whose stages followed the Borg and Gall design, limited to five stages: research and information collecting, planning, develop preliminary form of product, preliminary field test, and main product revision. The subjects involved in the preliminary field test of the DIVAYANA model were 14 teachers from several ICT vocational schools in Bali, selected by purposive sampling. This technique was used to make it easier to find subjects who understand and can think critically about the evaluation model. The research was carried out at ICT vocational schools in five districts in Bali province in order to obtain valid evidence that the DIVAYANA evaluation model is suitable for evaluating the information technology-based learning process at the vocational school level. Data in the preliminary field test were collected with questionnaires and analyzed using quantitative descriptive analysis. The effectiveness level of the DIVAYANA model was 88.571%, so the model can be categorized as an effective evaluation model for information technology-based learning at ICT vocational schools.

Keywords: DIVAYANA, Evaluation model, Information technology-based learning


1. Introduction

The paradigms, approaches, models, and strategies used in the educational process shift with the times and with technological development (Singh and Mishra, 2017; Kanwar et al., 2019; Sharma, 2019). The change currently taking place in education is a shift from conventional learning towards digital learning. Several researchers (Ramadhani et al., 2019; Li, 2016; Ghavifekr and Rosdy, 2015; Sadeghi, 2019) support this view, stating in principle that globalization is driving the shift of educational paradigms from a conventional era to an open, digital-based era.

The shift is marked by the massive use of information technology to support digital-based learning at all levels of education, from elementary, junior high, and senior high schools to universities. The information technology-based learning models now widely used at these levels include e-learning and blended learning. Several researchers (Atef and Medhat, 2015; Dziuban et al., 2018; Adams et al., 2018; Sohrabi et al., 2019; Harahap et al., 2019; Keržič et al., 2019) support this statement, agreeing in principle that e-learning and blended learning are learning models that utilize ICT to improve the learning process and raise the quality of education.

Although e-learning and blended learning are said to be familiar at various levels of education, in practice their use is not yet optimal. Several researchers (Medina, 2018; Bralić and Divjak, 2018; Keskin and Yurdugül, 2019) stated that, although information technology-based learning has advantages over conventional learning that does not utilize ICT, it is not accepted in every educational area, which indicates that its implementation is not yet optimal.

Based on that evidence, the implementation of information technology-based learning models (particularly e-learning and blended learning) in schools and higher education institutions needs to be evaluated thoroughly. Many evaluation models in the field of education can be used for this purpose, including Context-Input-Process-Product (CIPP), countenance, formative-summative, and Center for the Study of Evaluation-University of California in Los Angeles (CSE-UCLA).

Among these evaluation models, the CSE-UCLA model has an advantage over the others: it includes a program implementation component. However, like the other evaluation models, the CSE-UCLA model also has weaknesses. The weaknesses of each model mentioned above are evidenced by several studies that used those models to evaluate ICT-based learning.

Thurab-Nkhosi (2019) showed that the CIPP model can be used to evaluate the development of blended learning. The strength of the CIPP model in that research was that the context, input, process, and product components could be used as measures of success in developing blended learning. Its weakness was that it could not provide appropriate recommendations for prioritizing improvements to the evaluated aspects of blended learning. Thanabalan et al. (2015) used the Countenance model to evaluate digital modules, in particular a digital story pedagogical module. The strength of the Countenance model in that research was that the description matrix and the consideration matrix served as the basis for evaluating the module. Its weakness was that it did not provide recommendations for prioritizing improvements to the evaluated aspects of the module. Perera-Diltz and Moe (2014) used the formative-summative model to evaluate the implementation of online education. The strength of the formative-summative model in that research was that it provided measuring criteria both during and after the learning process. Its weakness was that it did not specify the evaluation aspects used to evaluate the online learning environment. Suyasa et al. (2018) used the CSE-UCLA model to evaluate the blended learning program at SMA Negeri 1 Ubud. The strength of the CSE-UCLA model in that research was that it included an evaluation component at the socialization stage of the blended learning program. Its weakness was that it did not show the process of determining the best recommendation for the priority aspects of improvement in the evaluation of blended learning. Given the weaknesses of each of these evaluation models, it can be stated that they cannot yet provide appropriate and specific recommendations as solutions to the findings or problems encountered in the implementation of information technology-based learning.

In addition to studies of evaluation models in education, several studies have presented decision support system methods that can be used to determine the best recommendations in evaluation activities. Alqahtani and Rajkhan (2020) used the TOPSIS (Technique for Order Preference by Similarity to Ideal Solution) and AHP (Analytic Hierarchy Process) methods to identify the critical success factors for implementing e-learning during COVID-19. The weakness of their research was that it did not clearly show how the weights given jointly by the decision-makers to the success criteria for e-learning implementation were calculated.

Mohammed et al. (2018) used the AHP method to determine weighted rankings of evaluation criteria and the TOPSIS method to rank several alternative e-learning approaches. The weakness of their research was that it did not show how the weights given to the evaluation criteria by decision-makers, based on mutual agreement or focus group discussion, were normalized. İnce et al. (2017) combined the AHP and TOPSIS methods to determine the best performance in evaluating learning objects. The weakness of their research was that it did not show a detailed calculation of the criteria weights obtained through deliberation or collective agreement among decision-makers.

Based on the weaknesses described above and the supporting evidence, a new evaluation model can be developed to address the difficulty of choosing an appropriate model for evaluating the implementation of IT-based learning, namely the DIVAYANA model. With this model, a definite and accurate recommendation can be determined through a calculation process that adopts a decision support system, a branch of artificial intelligence.

Several previous studies form the background for this research. Their similarities to, differences from, and weaknesses relative to this research are summarized in Table 1.

Table 1.

Research results, similarities, differences, and weaknesses from previous studies.

| Research | Results | Similarities | Differences | Weaknesses |

|---|---|---|---|---|

| Prihaswati et al. (2017) | The research showed the use of the CSE-UCLA model, which focuses on three components (system assessment, program planning, and program implementation), to evaluate a program. | The system assessment component, which explains the existence of the program, has the same basic principle as the description component in the DIVAYANA model. The program planning component, which explains the resource inputs needed to implement a program, has the same basic principle as the input component in the DIVAYANA model. | The research does not show an evaluation component equipped with a process for calculating priority recommendations, such as the nominate component of the DIVAYANA model. | The research did not show the calculation process for determining priority recommendations that would facilitate decision making in providing alternative improvements to the evaluated program. |

| Gondikit (2018) | The research showed that a description matrix and a judgment matrix were used to evaluate programs. | The description matrix explains the existence of the evaluated program, and the judgment matrix explains the evaluation success standards used as a reference to facilitate decision making. The function of the description matrix is represented by the description component of the DIVAYANA model, while the function of the judgment matrix is represented by the verification component, which also shows the evaluation success standards. | The research has no component for calculating the priority of recommendations, unlike the DIVAYANA model. | The research did not show priority recommendations ordered from the highest to the lowest level that would facilitate decision making to optimize the program being evaluated. |

| Agustina and Mukhtaruddin (2019) | The research showed the CIPP evaluation components, consisting of context, input, process, and product. | The context, input, process, and product components have the same function as the description, input, action, and actualization components in the DIVAYANA model. | The research does not have verification, yack, analysis, and nominate components like those of the DIVAYANA model. | The research did not show a process for determining priority recommendations that would make it easier for stakeholders to make the best decision. |

| Harjanti et al. (2019) | The research showed a responsive model that focused on the response of the audience to a program. | Attention to audience response is also needed in the actualization component of the DIVAYANA model to measure the impact of implementing the priority recommendations given by evaluators. | The research does not show the specific components that the responsive model uses in conducting the evaluation, while the DIVAYANA model shows all the components used in conducting the evaluation. | The research did not show evaluation components specifically used to determine priority recommendations for improving the program being evaluated. |

Given the general problems and limitations found in the previous studies underlying this research, further research is necessary. The researchers are therefore interested in developing the DIVAYANA model as an evaluation model for information technology-based learning, with a case study in several ICT vocational schools in Bali. The research question is: “How is the DIVAYANA model developed for use as an evaluation model of information technology-based learning at ICT vocational schools?”

2. Method

This research used a development approach whose stages refer to the Borg and Gall design. The Borg and Gall design consists of ten stages: 1) research and information collecting, 2) planning, 3) develop preliminary form of product, 4) preliminary field test, 5) main product revision, 6) main field test, 7) operational product revision, 8) operational field testing, 9) final product revision, and 10) dissemination and implementation.

This research carried out only the first five stages of the Borg and Gall design, from research and information collecting to main product revision. The reason is that the research lasted only one year and the object developed was limited to the conceptual design and simulation of the DIVAYANA model; it has not yet produced a physical product in the form of a computer application applied in the field on a large scale. The activities carried out in the five stages of the Borg and Gall design for the development of the DIVAYANA model are explained as follows.

1) Research and Information Collecting

This stage serves as the preliminary study. At this stage, the DIVAYANA model is assessed and the data needed for the DIVAYANA model simulation are described.

2) Planning

At this stage, the research activities are planned. The simulation data needed to simulate how the DIVAYANA model works are prepared.

3) Develop Preliminary Form of Product

At this stage, the DIVAYANA model design is created. The design is adapted to the characteristics of the object being evaluated.

4) Preliminary Field Test

At this stage, a limited trial of the DIVAYANA model is carried out. In addition to this trial, a simulation of the use of the DIVAYANA model is also carried out.

5) Main Product Revision

At this stage, the model design is improved based on the results of the preliminary field test. Whether or not the model design is revised is determined by the results of the effectiveness test of the DIVAYANA model.

The preliminary field test of the DIVAYANA evaluation model required a sample of teachers who use this evaluation model. The sample was selected by purposive sampling, which is appropriate for obtaining accurate information from parties who have knowledge of and experience with the evaluation model being tested. The sample consisted of 14 teachers from several ICT vocational schools in Bali province.

The research was carried out in several ICT vocational schools in Bali province spread across five districts: SMK N 2 Tabanan (Tabanan Regency), SMK Werdhi Sila Kumara (Gianyar Regency), SMK N 3 Singaraja (Buleleng Regency), SMK TI Udayana (Badung Regency), and SMK Negeri 1 Denpasar (Denpasar City). The schools used as research locations were also selected by purposive sampling. The research was conducted in ICT vocational schools spread across five districts in order to show clearly and correctly that the DIVAYANA evaluation model is effective as an evaluation model for information technology learning at the vocational school level in Bali province.

The instrument used in the preliminary field test of the DIVAYANA evaluation model was a questionnaire consisting of 15 questions about the effectiveness of the model. The instrument was validated by analyzing its content validity, that is, the validity determined by how representative the instrument items are. The content validity analysis was carried out through expert testing with the Gregory formula, shown as follows (Retnawati, 2016; Sugihartini et al., 2019).

$$\text{Content validity} = \frac{D}{A + B + C + D} \tag{1}$$

Notes:

A = cell that shows disagreement between the two evaluators

B and C = cell that shows a different point of view between evaluators

D = cell that shows valid agreement between the two evaluators

The category of the content validation result for the instrument assessed by experts is determined using the classification of validity proposed by Guilford (Katemba and Samuel, 2017; Fazlina, 2018; Ardayati and Herlina, 2020), as follows.

0.80 < rxy ≤ 1.00 : very high validity (very good)

0.60 < rxy ≤ 0.80 : high validity (good)

0.40 < rxy ≤ 0.60 : moderate validity (enough)

0.20 < rxy ≤ 0.40 : low validity (less)

0.00 < rxy ≤ 0.20 : very low validity (poor)

rxy ≤ 0.00 : Invalid
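The content validity check can be illustrated with a minimal Python sketch. It assumes the usual form of the Gregory coefficient, D divided by the sum of the four cross-tabulation cells, and maps the result to the Guilford categories listed above. The function names and the cell counts in the example are hypothetical and are not data from this study.

```python
def gregory_content_validity(a: int, b: int, c: int, d: int) -> float:
    """Return the content validity coefficient D / (A + B + C + D).

    a, b, c, d are the item counts in the four cells of the
    two-evaluator cross-tabulation described in the notes above.
    """
    total = a + b + c + d
    if total <= 0:
        raise ValueError("the cross-tabulation must contain at least one item")
    return d / total


def guilford_category(r: float) -> str:
    """Map a validity coefficient to the Guilford category."""
    if r > 0.80:
        return "very high validity (very good)"
    if r > 0.60:
        return "high validity (good)"
    if r > 0.40:
        return "moderate validity (enough)"
    if r > 0.20:
        return "low validity (less)"
    if r > 0.00:
        return "very low validity (poor)"
    return "invalid"


# Hypothetical cross-tabulation for a 15-item questionnaire (not study data).
coefficient = gregory_content_validity(a=0, b=1, c=1, d=13)
print(f"{coefficient:.3f} -> {guilford_category(coefficient)}")
```

Each boundary value falls into the lower category (for example, 0.80 is classified as high rather than very high), which matches the strict lower bounds in the classification.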

The quantitative data from the preliminary field test of the DIVAYANA model were analyzed with a quantitative descriptive technique using descriptive percentage calculations. This technique determines the effectiveness level of the DIVAYANA evaluation model. The formula used for the descriptive percentage calculation is as follows (Fazlina, 2018).

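$$\text{Percentage} = \frac{\sum (\text{answer} \times \text{weight of each choice})}{n \times \text{highest weight}} \times 100\% \tag{2}$$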

Notes:

∑ = Total

n = Number of all questionnaire items

To interpret the results and make decisions about the effectiveness level, the percentage is converted to an achievement level on a five-point scale. The conversion is shown in Table 2 (Mantasiah et al., 2018; Fikri et al., 2018; Prima and Lestari, 2019).

Table 2.

Conversion of achievement levels on the five-point scale.

| Achievement Levels (%) | Qualification | Information |

|---|---|---|

| 90–100 | Excellent | Not revised |

| 80–89 | Good | Not revised |

| 65–79 | Moderate | Revised |

| 55–64 | Less | Revised |

| 0–54 | Poor | Revised |
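The percentage calculation and the conversion in Table 2 can be sketched in Python as follows. This is a minimal illustration under two assumptions that are not stated in the text: the percentage is taken as the total score divided by the maximum possible score (number of items times a highest weight of 5), and the lower bounds in Table 2 are treated as thresholds so that non-integer percentages such as 89.5 still map to a level. The function names and the example scores are hypothetical.

```python
from typing import Sequence, Tuple

# (lower bound in %, qualification, information), taken from Table 2.
# Using the lower bounds as thresholds is an assumption of this sketch.
FIVE_SCALE = [
    (90.0, "Excellent", "Not revised"),
    (80.0, "Good", "Not revised"),
    (65.0, "Moderate", "Revised"),
    (55.0, "Less", "Revised"),
    (0.0, "Poor", "Revised"),
]


def effectiveness_percentage(scores: Sequence[int], highest_weight: int = 5) -> float:
    """Total score as a percentage of the maximum possible score."""
    if not scores:
        raise ValueError("at least one questionnaire score is required")
    return 100 * sum(scores) / (len(scores) * highest_weight)


def achievement_level(percentage: float) -> Tuple[str, str]:
    """Return (qualification, information) for a percentage per Table 2."""
    if not 0 <= percentage <= 100:
        raise ValueError("percentage must be between 0 and 100")
    for lower_bound, qualification, information in FIVE_SCALE:
        if percentage >= lower_bound:
            return qualification, information
    raise AssertionError("unreachable: the last lower bound is 0")


# Hypothetical answers to five items on a 1-5 scale (not study data).
example = effectiveness_percentage([5, 4, 5, 4, 4])
print(example, achievement_level(example))

# The effectiveness level reported for the DIVAYANA model.
print(achievement_level(88.571))
```

With these thresholds, the reported effectiveness level of 88.571% falls in the Good level, for which Table 2 indicates that no revision is needed.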

3. Results

Following the Borg and Gall development stages carried out in this 2020 research, five main results can be reported. They are explained as follows.

3.1. Results at the stage of research and information collecting

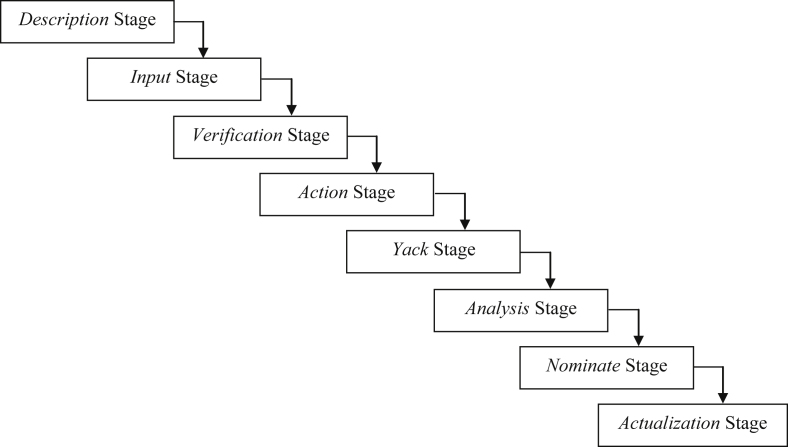

This stage produced an overview of the DIVAYANA evaluation model. The DIVAYANA model is an innovative evaluation model created by Dewa Gede Hendra Divayana (2020a, 2020b). It can be used to evaluate information technology-based education services, information technology-based learning processes, information technology education policies, and other general matters in information technology-based education. The name DIVAYANA is an acronym formed from the initial letters of the following words: Description, Input, Verification, Action, Yack, Analysis, Nominate, and Actualization. The main purpose of this evaluation model is to rank, from the highest to the lowest priority, the recommendations given by decision-makers to solve problems found in the field. The strength of this model, which other educational evaluation models lack, is a calculation process using the DIVAYANA formula, which applies a concept from artificial intelligence to rank the recommendations from the highest to the lowest priority. The stages of the model are shown in Figure 1 (Divayana, 2020a, 2020b).

Figure 1.

Stages of DIVAYANA model.

1) Description Stage

At this stage, a description is made regarding the existence of the object being evaluated and the problems found in the field related to the object being evaluated. The object being evaluated means policies, platforms, models, strategies, and other matters related to the ICT-based learning process.

2) Input Stage

At this stage, the inputs that support the existence of the object being evaluated are explained. In addition, input is given in the form of alternative solutions to the problems found in the description stage.

3) Verification Stage

At this stage, the suitability between the problem-solving alternatives offered and the existing problems is checked. A standard that determines the success of the evaluation is needed as a reference to obtain good and clear verification results. The result of this stage is a set of problem-solving alternatives that have been verified against the reference standards for the success of the evaluation.

4) Action Stage

At this stage, a field trial is carried out on the alternative solutions that have been verified. The testing involves experts and evaluators in order to determine the success of the process at this stage.

5) Yack Stage

At this stage, a discussion involving several experts and evaluators is held to obtain qualitative data through arguments and clarifications, which can later reinforce the quantitative data obtained from the tests conducted at the action stage. In addition, the average weights given by experts and evaluators to the evaluation success criteria are repaired at this stage.

6) Analysis Stage

At this stage, the quantitative and qualitative data obtained from the action and yack stages are analyzed. The quantitative data are analyzed with a quantitative descriptive technique using descriptive percentage calculations to determine the effectiveness of each solution alternative. The qualitative data are analyzed using data triangulation, by cross-checking the results of the quantitative data analysis against data obtained from interviews, observations, and documentation.

7) Nominate Stage

At this stage, the priority ranking of the recommendations, from the highest to the lowest level, is calculated using the effectiveness of each solution alternative obtained from the analysis stage. The ranking is calculated with the DIVAYANA formula, which consists of three equations: Eq. (3) determines the repair of weight average, Eq. (4) determines the Vector-D value as a normalization value, and Eq. (5) determines the Vector-R value as a ranking value (Divayana, 2020a, 2020b).

| (3) |

Notes:

= Repair of Weight Average

= The weight average is given by each decision maker (experts and evaluators) through focus group discussion

| (4) |

Where i = 1,2,3,...,n; and should be the value of 1.

Notes:

D = Vector-D

= Assessment score of each criterion

m = the total number of decision-makers (experts and evaluators)

After the Vector-D value is obtained, the Vector-R calculation is performed to determine the ranking. The Vector-R calculation uses Eq. (5).

| (5) |

Notes:

R = Vector-R

D = Vector-D

8) Actualization Stage

At this stage, the highest-priority recommendations obtained in the Nominate stage are internalized and implemented in the wider environment. If the research time is long enough, the impact arising from the implementation of the recommendations can also be evaluated at this stage.

3.2. Results at the planning stage

The use of the DIVAYANA model requires quantitative and qualitative data about the objects to be evaluated. In this 2020 research, how the DIVAYANA evaluation model works is shown through simulations using simulation data. Careful planning is therefore needed to prepare the simulation data so that the workings of the DIVAYANA model can be understood more easily. As an example, the simulation data prepared here relate to the evaluation of the implementation of blended learning at ICT vocational schools. The complete data can be seen in Tables 3, 4, 5, 6, 7, 8, and 9.

Table 3.

Simulation data about the causes of the implementation of blended learning at ICT vocational schools.

| Causes Codes | Causes of Blended Learning Implementation |

|---|---|

| CS1 | Government policy |

| CS2 | Vision, mission, and objectives of the school |

| CS3 | School regulations |

| CS4 | School community support |

| CS5 | Adequate funding support |

| CS6 | The suitable platform |

| CS7 | Adequate supporting infrastructure |

| CS8 | Adequate human resources |

| CS9 | Adequate material content |

Table 4.

Simulation data about problems/constraints in the implementation of blended learning at ICT vocational schools.

| Problems Codes | Problems/Constraints in Implementation of Blended Learning |

|---|---|

| P1 | Unclear school regulation in the implementation and management of blended learning |

| P2 | Budget limitations |

| P3 | The low ability of developers/managers team of blended learning |

| P4 | The low ability of teachers and students in operating computers and the internet |

| P5 | The low interest of teachers to carry out the learning process or discussion through the blended learning platform |

| P6 | The low interest of students to learn independently through a blended learning platform |

| P7 | Limited supporting facilities and infrastructure |

| P8 | The low amount and quality of content material available in blended learning |

Table 5.

Simulation data about problem solving alternatives.

| Alternatives Codes | Alternatives of Problem Solving |

|---|---|

| A1 | School regulation readiness |

| A2 | Budget readiness |

| A3 | The ability readiness of blended learning developers/managers team |

| A4 | The readiness of teacher and student in operating the computers and the internet |

| A5 | Encouragement of teachers' interest to use a blended learning platform in the learning process or discussion with students |

| A6 | The encouragement of student independence to learn independently |

| A7 | The readiness of supporting facilities and infrastructure |

| A8 | Optimizing the amount and quality of material content |

Table 6.

The success standards of evaluation.

| Standard Codes | Success Standards | Percentage of Effectiveness |

|---|---|---|

| S1 | Availability of government policies regarding blended learning | ≥95% |

| S2 | The availability of vision, mission, and school goals that support the implementation of blended learning | ≥95% |

| S3 | Availability of school regulations | ≥95% |

| S4 | Availability of school community support | ≥85% |

| S5 | Availability of adequate funds | ≥85% |

| S6 | Availability of the right platform | ≥90% |

| S7 | Availability of supporting infrastructure for blended learning | ≥88% |

| S8 | Availability of adequate human resources | ≥88% |

| S9 | Availability of adequate material content | ≥88% |

Table 7.

Simulation data about the conformity check between alternatives and success standards of evaluation.

| Alternatives | Standards | Suitable | Unsuitable |

|---|---|---|---|

| A1. School regulation readiness | S3. Availability of school regulations | √ | |

| A2. Budget Readiness | S5. Availability of adequate funds | √ | |

| A3. The ability readiness of blended learning developers/managers team | S8. Availability of adequate human resources | √ | |

| A4. Teacher and student readiness in computer and internet operations | S8. Availability of adequate human resources | √ | |

| A5. Encourage teachers' interest to use a blended learning platform | S8. Availability of adequate human resources | √ | |

| A6. Encourage students' independence to study independently | S8. Availability of adequate human resources | √ | |

| A7. Supporting facilities and infrastructure readiness | S7. Availability of supporting infrastructure for blended learning | √ | |

| A8. Optimize the amount and quality of material content | S9. Availability of adequate material content | √ | |

Table 8.

Simulation data about the recapitulation of field trial results for the implementation of blended learning at ICT vocational schools.

| Alternatives | Average of Percentage |

|---|---|

| A1. School regulation readiness | 88.29 |

| A2. Budget Readiness | 73.71 |

| A3. The ability readiness of blended learning developers/managers team | 83.14 |

| A4. Teacher and student readiness in computer and internet operations | 81.71 |

| A5. Encourage teachers' interest to use a blended learning platform | 78.29 |

| A6. Encourage students' independence to study independently | 80.29 |

| A7. Supporting facilities and infrastructure readiness | 75.14 |

| A8. Optimize the amount and quality of material content | 78.57 |
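To make the simulation data easier to read, the field trial averages in Table 8 can be compared with the success standards in Table 6 through the alternative-to-standard pairing in Table 7. The Python sketch below is only an illustrative comparison: it is not the DIVAYANA formula of Eqs. (3) to (5), and the function name and the idea of reporting a simple gap in percentage points are assumptions made for this example.

```python
# Minimum effectiveness (%) per success standard, from Table 6
# (only the standards that are paired with an alternative in Table 7).
STANDARD_MIN = {"S3": 95.0, "S5": 85.0, "S7": 88.0, "S8": 88.0, "S9": 88.0}

# Alternative -> success standard pairing, from Table 7.
ALTERNATIVE_STANDARD = {
    "A1": "S3", "A2": "S5", "A3": "S8", "A4": "S8",
    "A5": "S8", "A6": "S8", "A7": "S7", "A8": "S9",
}

# Average field trial percentage per alternative, from Table 8.
FIELD_TRIAL = {
    "A1": 88.29, "A2": 73.71, "A3": 83.14, "A4": 81.71,
    "A5": 78.29, "A6": 80.29, "A7": 75.14, "A8": 78.57,
}


def gap_to_standard() -> dict:
    """Return standard minus field trial result, in percentage points."""
    return {
        alternative: round(STANDARD_MIN[ALTERNATIVE_STANDARD[alternative]] - percent, 2)
        for alternative, percent in FIELD_TRIAL.items()
    }


gaps = gap_to_standard()
# List the alternatives from the largest to the smallest gap.
for alternative, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    status = "meets standard" if gap <= 0 else "below standard"
    print(f"{alternative}: gap {gap:.2f} points ({status})")
```

In this simulation data every alternative falls below its paired standard, with A7 showing the largest gap. This simple ordering is not necessarily the priority ranking produced at the Nominate stage, which also takes the weights from the decision-makers into account.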

Table 9.

Simulation data about arguments agreed on by experts and evaluators in focus group discussion activities.

| Experts/Evaluators | Arguments |

|---|---|

| Expert-1 | In general, the readiness of school regulations to support the implementation of blended learning in ICT vocational schools is good and adequate. |

| Expert-2 | In general, the availability of budget/funding for the implementation of blended learning at ICT vocational schools is still relatively sufficient. |

| Expert-3 | The ability of the developer team to implement blended learning in ICT vocational schools is generally good. |

| Expert-4 | In general, teachers and students can operate the internet and computers. |

| Evaluator-1 | In general, there are sufficient activities that are capable of encouraging teachers' interest in using a blended learning platform. |

| Evaluator-2 | In general, some activities can encourage students to learn independently properly. |

| Evaluator-3 | In general, the readiness of facilities and supporting infrastructure for blended learning is still considered sufficient. |

| Evaluator-4 | In general, the amount and quality of material content are still considered sufficient. |

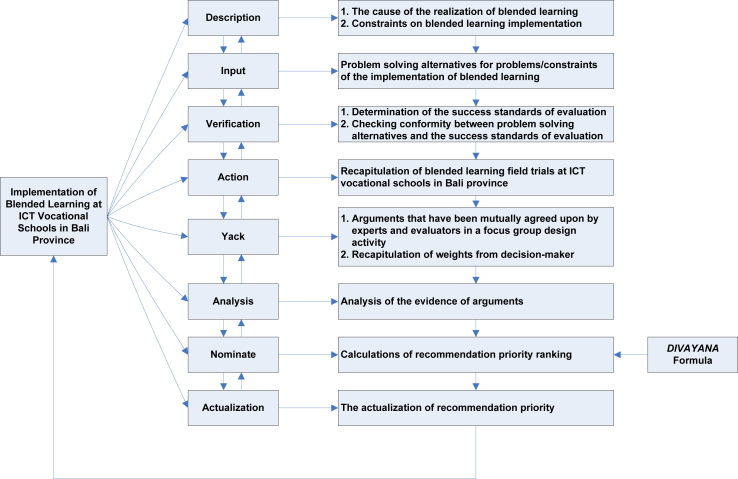

3.3. Results at the stage of developing a preliminary form of the product

The result at this stage was the DIVAYANA model design used to evaluate information technology-based learning (example: blended learning at ICT vocational schools in Bali). The design can be seen in Figure 2.

Figure 2.

Design of DIVAYANA model as an evaluation model for IT-Based learning implementation (Example: Blended learning at ICT Vocational Schools in Bali).

3.4. Results at the stage of preliminary field test

The results at this stage were simulations of how the DIVAYANA model is used to evaluate the implementation of information technology-based learning at ICT vocational schools in Bali province. The complete simulation can be explained as follows.

The evaluation process was simulated with the DIVAYANA model using the dummy data prepared in advance at the planning stage. The DIVAYANA evaluation runs from the description stage to the actualization stage.

1) Description Stage

At this stage, the causes for the emergence of information technology-based learning are explained, together with the indicators that become obstacles to its implementation at ICT vocational schools. The data on the causes and constraints of implementing information technology-based learning (for example, blended learning at ICT vocational schools in Bali province) were shown previously in Tables 3 and 4.

2) Input Stage

At this stage, several alternatives are determined that can serve as solutions to the problems shown previously in Table 4. The problem-solving alternatives were shown previously in Table 5.

3) Verification Stage

At this stage, the standards/criteria for the success of evaluating the implementation of blended learning at ICT vocational schools in Bali province are determined. The list of standards is derived from the list of causes for the implementation of blended learning shown in Table 3. The complete evaluation success standards were shown previously in Table 6. This stage also checks the suitability between the problem-solving alternatives and the evaluation standards. The results of these checks were shown previously in Table 7.

4) Action Stage

At this stage, the average percentage of the field trial results is determined for each alternative solution verified at the verification stage. The recapitulation of the field trial on the implementation of blended learning, carried out in five schools spread across five districts in Bali province, was shown previously in Table 8. The complete calculations behind the recapitulation can be seen in Appendix A. The questionnaire statements used to obtain the data recapitulated in Table 8 must be valid. The content validation process for the questionnaire statements can be seen in Appendix C to Appendix F.

5) Yack Stage

At this stage, focus group design activities are carried out to obtain opinions/arguments agreed upon by the experts and the evaluators. Some of the mutually agreed arguments were shown previously in Table 9. In addition to the argument data in Table 9, weight data given by the decision-makers (experts and evaluators) to each evaluation success criterion are also needed. The recapitulation of the weights given to each criterion is shown in Table 10. The complete process of calculating the weight recapitulation can be seen in Appendix G.

Table 10.

The recapitulation of weights from decision makers.

| Criteria Codes | Repair of Weight Average (WYack) |

|---|---|

| C1 | 0.114 |

| C2 | 0.118 |

| C3 | 0.111 |

| C4 | 0.111 |

| C5 | 0.092 |

| C6 | 0.118 |

| C7 | 0.111 |

| C8 | 0.111 |

| C9 | 0.114 |

| ΣWYack | 1 |

6) Analysis Stage

The field trial data in Table 8 support the arguments listed in Table 9. Expert-1 stated that, in general, the readiness of school regulations to support the implementation of blended learning in ICT vocational schools in Bali is good. This is reinforced by the effectiveness percentage of school regulation readiness in Table 8, which was 88.29% and can therefore be categorized as good. Expert-2 stated that the budget available for implementing blended learning is sufficient. This is reinforced by the effectiveness percentage of budget availability, which was 73.71% and can be categorized as moderate. Expert-3's argument regarding the ability of the developer/manager team to realize blended learning is reinforced by an effectiveness percentage of 83.14%, which can be categorized as good. Expert-4's argument regarding the ability of teachers and students to operate the internet and computers is reinforced by an effectiveness percentage of 81.71%, which can be categorized as good.

Evaluator-1's argument that there are sufficient activities capable of encouraging teachers' interest in using a blended learning platform is reinforced by an effectiveness percentage of 78.29%, which can be categorized as moderate. Evaluator-2's argument that some activities can encourage students to learn independently is reinforced by an effectiveness percentage of 80.29%, which can be categorized as good. Evaluator-3's argument about the readiness of facilities and infrastructure to support blended learning is reinforced by an effectiveness percentage of 75.14%, which can be categorized as moderate. Evaluator-4's argument regarding the amount and quality of the material content used in blended learning is reinforced by an effectiveness percentage of 78.57%, which can be categorized as moderate.
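The categorization applied in this analysis can be sketched in code. The sketch is illustrative only: the 80%–89% band for the good category is the one stated in this paper, while the remaining cut-offs, the category names outside "good" and "moderate", and the function name `categorize` are assumptions, because the five-scale achievement level table (Table 2) is not reproduced in this section.

```python
# Illustrative sketch of mapping an effectiveness percentage to a category.
# Only the "good" band (80%-89%) is stated in the paper; every other
# threshold below is an ASSUMPTION made for illustration.
def categorize(percentage: float) -> str:
    if percentage >= 90:
        return "very good"  # assumed band
    if percentage >= 80:
        return "good"       # band stated in the paper (80%-89%)
    if percentage >= 65:
        return "moderate"   # lower bound assumed
    return "poor"           # assumed band


# Average percentages from Table 8.
table8 = {
    "A1": 88.29, "A2": 73.71, "A3": 83.14, "A4": 81.71,
    "A5": 78.29, "A6": 80.29, "A7": 75.14, "A8": 78.57,
}

categories = {alt: categorize(pct) for alt, pct in table8.items()}
for alt, category in categories.items():
    print(alt, table8[alt], category)
```

Under these assumed thresholds, the categories produced for A1 to A8 agree with the good/moderate labels given in the analysis above.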

7) Nominate Stage

At this stage, the recommendation priorities are ranked from the highest to the lowest level using the DIVAYANA formula. The data used in the DIVAYANA formula are the average effectiveness levels of each problem-solving alternative obtained from the analysis stage. The initial data used in the calculation can be seen in Table 11.

Table 11.

Preliminary data for DIVAYANA formula calculations.

| Alternatives | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 |

|---|---|---|---|---|---|---|---|---|---|

| A1 | **88.29** | 11.71 | 11.71 | 11.71 | 11.71 | 11.71 | 11.71 | 11.71 | 11.71 |

| A2 | 26.29 | 26.29 | **73.71** | 26.29 | 26.29 | 26.29 | 26.29 | 26.29 | 26.29 |

| A3 | 16.86 | 16.86 | 16.86 | 16.86 | 16.86 | 16.86 | 16.86 | **83.14** | 16.86 |

| A4 | 18.29 | 18.29 | 18.29 | 18.29 | 18.29 | 18.29 | 18.29 | **81.71** | 18.29 |

| A5 | 21.71 | 21.71 | 21.71 | 21.71 | 21.71 | 21.71 | 21.71 | **78.29** | 21.71 |

| A6 | 19.71 | 19.71 | 19.71 | 19.71 | 19.71 | 19.71 | 19.71 | **80.29** | 19.71 |

| A7 | 24.86 | 24.86 | 24.86 | 24.86 | 24.86 | 24.86 | **75.14** | 24.86 | 24.86 |

| A8 | 21.43 | 21.43 | 21.43 | 21.43 | 21.43 | 21.43 | 21.43 | **78.57** | 21.43 |

The average effectiveness score of each alternative (taken from Table 8) is placed in the bold cell where the alternative meets its corresponding criterion (as shown earlier in Table 7). The remaining cells in the same row hold the result of subtracting that bold value from 100. For example, the value of 11.71 in the cell where A1 meets C2 is obtained by calculating 100 − 88.29. Likewise, the value of 21.43 in the cell where A8 meets C7 is obtained by calculating 100 − 78.57.
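The construction of one row of Table 11 can be sketched as follows. This is a minimal illustration, not part of the DIVAYANA formula itself: the helper name `build_row` is hypothetical, and the matched criterion passed for each alternative simply follows the column in which the average appears in Table 11 as printed.

```python
# Sketch of how one row of Table 11 is filled in.
# `build_row` is a hypothetical helper introduced for illustration.
CRITERIA = [f"C{i}" for i in range(1, 10)]  # C1 .. C9


def build_row(average: float, matched_criterion: str) -> list[float]:
    """Place the average in the matched criterion column and
    100 - average (rounded to two decimals) in every other column."""
    complement = round(100 - average, 2)
    return [average if c == matched_criterion else complement for c in CRITERIA]


# Averages from Table 8; column positions as printed in Table 11.
row_a1 = build_row(88.29, "C1")
row_a8 = build_row(78.57, "C8")

print(dict(zip(CRITERIA, row_a1)))
print(dict(zip(CRITERIA, row_a8)))
```

The two worked examples in the text correspond to `row_a1[1]` (A1 at C2, 100 − 88.29) and `row_a8[6]` (A8 at C7, 100 − 78.57).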

Based on the data shown in Tables 10 and 11, the Vector-D calculation can be performed using the DIVAYANA formula. The recapitulation of the Vector-D calculation results can be seen in Table 12. The complete Vector-D calculation process is given in Appendix H.

Table 12.

Recapitulation of the results of Vector-D calculation.

| Vector-D | Values |

|---|---|

| D1 | 1.84 |

| D2 | 3.69 |

| D3 | 2.52 |

| D4 | 2.70 |

| D5 | 3.13 |

| D6 | 2.88 |

| D7 | 3.51 |

| D8 | 3.09 |

After the Vector-D values were obtained, a calculation was performed to obtain the Vector-R values used for ranking. The recapitulation of the Vector-R calculation results can be seen in Table 13. The complete Vector-R calculation process is given in Appendix I.

Table 13.

Recapitulation of the results of Vector-R calculation.

| Vector-R | Values |

|---|---|

| R1 | 0.079 |

| R2 | 0.158 |

| R3 | 0.108 |

| R4 | 0.116 |

| R5 | 0.134 |

| R6 | 0.123 |

| R7 | 0.150 |

| R8 | 0.132 |

From the results of Vector-R, the ranking recapitulation of problem-solving alternatives can be arranged from the highest level to the lowest. The highest level is used as the first rank, while the lowest level is the last rank. The complete recapitulations can be seen in Table 14.
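The step from Vector-D to a ranking can be sketched as follows. The complete Vector-R calculation is in Appendix I and is not reproduced here, so the normalization below is an assumption: it divides each Vector-D value by the sum of all Vector-D values, which is numerically consistent with the values printed in Table 13 but should not be read as the authoritative definition.

```python
# Sketch: derive Vector-R from Vector-D and rank the alternatives.
# ASSUMPTION: R_i = D_i / sum(D). This reproduces Table 13 to three
# decimals, but the authoritative calculation is in Appendix I.
vector_d = {
    "A1": 1.84, "A2": 3.69, "A3": 2.52, "A4": 2.70,
    "A5": 3.13, "A6": 2.88, "A7": 3.51, "A8": 3.09,
}  # values from Table 12

total_d = sum(vector_d.values())
vector_r = {alt: round(d / total_d, 3) for alt, d in vector_d.items()}

# Rank from the highest Vector-R value (first priority) to the lowest.
ranking = sorted(vector_r, key=vector_r.get, reverse=True)

for rank, alt in enumerate(ranking, start=1):
    print(rank, alt, vector_r[alt])
```

Sorting in descending order puts A2 first and A1 last, the same order as the ranking recapitulation.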

Table 14.

| Rank | Alternatives | R-Vector Values |

|---|---|---|

| I | A2 | 0.158 |

| II | A7 | 0.150 |

| III | A5 | 0.134 |

| IV | A8 | 0.132 |

| V | A6 | 0.123 |

| VI | A4 | 0.116 |

| VII | A3 | 0.108 |

| VIII | A1 | 0.079 |

8) Actualization Stage

Based on the recapitulation in Table 14, several recommendations can be made and actualized from the first priority to the last. The first-priority recommendation, which needs to be followed up quickly, is to prepare an adequate budget for implementing blended learning. The last-priority recommendation also needs continuous follow-up: preparing appropriate school regulations for the implementation of blended learning, which will be adjusted in the future in line with technological advancement.

Besides showing the simulation of how the DIVAYANA model works, this stage also produced the results of a preliminary field test to determine the effectiveness percentage of the DIVAYANA model as an evaluation model for the information technology-based learning process at ICT Vocational Schools in Bali province. The full preliminary field test results can be seen in Table 15.

Table 15.

Preliminary field test toward the use of the DIVAYANA model as an evaluation model of the information technology-based learning process at ICT Vocational Schools in Bali province.

| Respondents | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | Item 6 | Item 7 | Item 8 | Item 9 | Item 10 | Item 11 | Item 12 | Item 13 | Item 14 | Item 15 | ∑ | Effectiveness Percentage (%) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Respondent-1 | 5 | 4 | 4 | 4 | 5 | 5 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 5 | 4 | 64 | 85.333 |

| Respondent-2 | 4 | 5 | 4 | 5 | 4 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 71 | 94.667 |

| Respondent-3 | 5 | 4 | 5 | 4 | 5 | 5 | 5 | 4 | 4 | 5 | 4 | 5 | 5 | 4 | 4 | 68 | 90.667 |

| Respondent-4 | 5 | 4 | 4 | 4 | 4 | 4 | 5 | 5 | 5 | 5 | 4 | 5 | 4 | 5 | 5 | 68 | 90.667 |

| Respondent-5 | 5 | 5 | 5 | 4 | 5 | 4 | 4 | 4 | 4 | 4 | 5 | 4 | 5 | 4 | 4 | 66 | 88.000 |

| Respondent-6 | 5 | 4 | 4 | 5 | 4 | 4 | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 5 | 70 | 93.333 |

| Respondent-7 | 4 | 5 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 5 | 5 | 4 | 4 | 4 | 4 | 63 | 84.000 |

| Respondent-8 | 5 | 4 | 4 | 4 | 5 | 5 | 5 | 4 | 5 | 4 | 4 | 4 | 4 | 4 | 4 | 65 | 86.667 |

| Respondent-9 | 4 | 4 | 4 | 4 | 5 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 61 | 81.333 |

| Respondent-10 | 4 | 4 | 5 | 4 | 4 | 5 | 4 | 5 | 5 | 4 | 4 | 4 | 4 | 4 | 5 | 65 | 86.667 |

| Respondent-11 | 5 | 5 | 4 | 5 | 5 | 4 | 5 | 4 | 5 | 5 | 5 | 4 | 4 | 4 | 4 | 68 | 90.667 |

| Respondent-12 | 5 | 4 | 4 | 4 | 4 | 5 | 4 | 5 | 5 | 5 | 4 | 4 | 4 | 4 | 4 | 65 | 86.667 |

| Respondent-13 | 5 | 4 | 5 | 4 | 5 | 4 | 4 | 5 | 4 | 4 | 5 | 4 | 5 | 5 | 5 | 68 | 90.667 |

| Respondent-14 | 5 | 5 | 4 | 5 | 5 | 4 | 4 | 4 | 5 | 5 | 4 | 5 | 5 | 4 | 4 | 68 | 90.667 |

| Average | | | | | | | | | | | | | | | | | 88.571 |
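The percentages in Table 15 can be reproduced with a short calculation. The sketch below assumes that each of the 15 items is scored on a scale with a maximum of 5, so that a respondent's effectiveness percentage is the item total divided by 75; this assumption is consistent with the printed values (for example, 64 gives 85.333%), and the names `MAX_SCORE` and `effectiveness` are illustrative.

```python
# Sketch: effectiveness percentage per respondent and overall average.
# ASSUMPTION: each item has a maximum score of 5 (15 items -> maximum 75).
MAX_SCORE = 5

scores = {  # item scores from Table 15
    "Respondent-1":  [5, 4, 4, 4, 5, 5, 4, 4, 4, 4, 4, 4, 4, 5, 4],
    "Respondent-2":  [4, 5, 4, 5, 4, 5, 5, 5, 5, 5, 5, 5, 4, 5, 5],
    "Respondent-3":  [5, 4, 5, 4, 5, 5, 5, 4, 4, 5, 4, 5, 5, 4, 4],
    "Respondent-4":  [5, 4, 4, 4, 4, 4, 5, 5, 5, 5, 4, 5, 4, 5, 5],
    "Respondent-5":  [5, 5, 5, 4, 5, 4, 4, 4, 4, 4, 5, 4, 5, 4, 4],
    "Respondent-6":  [5, 4, 4, 5, 4, 4, 5, 5, 5, 5, 4, 5, 5, 5, 5],
    "Respondent-7":  [4, 5, 4, 4, 4, 4, 4, 4, 4, 5, 5, 4, 4, 4, 4],
    "Respondent-8":  [5, 4, 4, 4, 5, 5, 5, 4, 5, 4, 4, 4, 4, 4, 4],
    "Respondent-9":  [4, 4, 4, 4, 5, 4, 4, 4, 4, 4, 4, 4, 4, 4, 4],
    "Respondent-10": [4, 4, 5, 4, 4, 5, 4, 5, 5, 4, 4, 4, 4, 4, 5],
    "Respondent-11": [5, 5, 4, 5, 5, 4, 5, 4, 5, 5, 5, 4, 4, 4, 4],
    "Respondent-12": [5, 4, 4, 4, 4, 5, 4, 5, 5, 5, 4, 4, 4, 4, 4],
    "Respondent-13": [5, 4, 5, 4, 5, 4, 4, 5, 4, 4, 5, 4, 5, 5, 5],
    "Respondent-14": [5, 5, 4, 5, 5, 4, 4, 4, 5, 5, 4, 5, 5, 4, 4],
}


def effectiveness(item_scores: list[int]) -> float:
    """Total score as a percentage of the maximum attainable score."""
    return sum(item_scores) / (len(item_scores) * MAX_SCORE) * 100


percentages = {name: round(effectiveness(s), 3) for name, s in scores.items()}
average = round(sum(effectiveness(s) for s in scores.values()) / len(scores), 3)

for name, pct in percentages.items():
    print(name, sum(scores[name]), pct)
print("Average", average)
```

The item scores listed for Respondent-14 sum to 68, which matches the 90.667% reported for that respondent, and the overall average evaluates to 88.571%.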

In the preliminary field test stage, respondents assessed the DIVAYANA model qualitatively as well as quantitatively. The qualitative assessment took the form of suggestions for the DIVAYANA model. The complete suggestions given by the respondents can be seen in Table 16.

Table 16.

Suggestions given by respondents in the preliminary field test.

| No | Respondents | Suggestions |

|---|---|---|

| 1 | Respondent-1 | - |

| 2 | Respondent-2 | It is necessary to present the DIVAYANA formula into the DIVAYANA model design |

| 3 | Respondent-3 | It is necessary to add a title label to differentiate between evaluation components and evaluation activities |

| 4 | Respondent-4 | - |

| 5 | Respondent-5 | - |

| 6 | Respondent-6 | - |

| 7 | Respondent-7 | - |

| 8 | Respondent-8 | It is necessary to insert the DIVAYANA formula into the DIVAYANA model design |

| 9 | Respondent-9 | - |

| 10 | Respondent-10 | - |

| 11 | Respondent-11 | It is necessary to insert a title to indicate the evaluation components and evaluation activities |

| 12 | Respondent-12 | - |

| 13 | Respondent-13 | Give different colors to differentiate between evaluation components and evaluation activities |

| 14 | Respondent-14 | - |

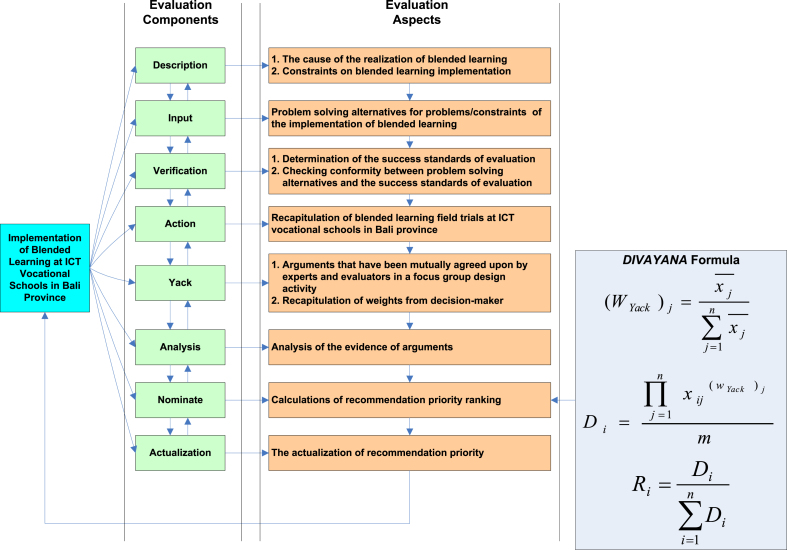

3.5. Results at the stage of main product revision

Judging from the average effectiveness percentage shown in Table 15, the DIVAYANA model design was generally good and needed no major revisions. However, some of the suggestions in Table 16 called for minor improvements to the design. The revised DIVAYANA model design can be seen in Figure 3.

Figure 3.

Revision of DIVAYANA Model Design as an evaluation model for IT-Based Learning Implementation (Example: Blended Learning at ICT Vocational Schools in Bali).

4. Discussion

The simulation data shown in Tables 3, 4, 5, 6, 7, 8, and 9 were dummy data made by the authors. The dummy data were created to speed up the simulation of how the DIVAYANA model works and to let readers focus on understanding the stages and calculation process of the model. The calculation process for obtaining the average percentages in Table 8 can be seen in full in Appendix A. Appendix B to Appendix F show the content validation process of the statement items used to obtain the results in Table 8.

The DIVAYANA model design in Figure 2 shows the object of evaluation, which is one form of information technology-based learning, namely blended learning applied at ICT vocational schools. The evaluation object was evaluated using the DIVAYANA model, which has eight evaluation components: Description, Input, Verification, Action, Yack, Analysis, Nominate, and Actualization. The activities in the Description component determine the indicators of the causes for realizing blended learning and the obstacles in implementing it. The activity in the Input component determines alternative solutions to the problems/constraints in implementing blended learning. The activities in the Verification component are: 1) determining the evaluation success standards, and 2) checking the suitability between the problem-solving alternatives and the evaluation success standards. The activity in the Action component recapitulates the field trials on the blended learning implementation. The activities in the Yack component are: 1) determining the arguments mutually agreed upon by experts and evaluators in focus group design activities, and 2) recapitulating the weights given by decision makers. The activity in the Analysis component verifies the arguments obtained previously in the Yack component. The activity in the Nominate component calculates the recommendation priority ranking using the DIVAYANA formula. The activity in the Actualization component actualizes/applies the priority recommendations to improve blended learning at ICT vocational schools.

The preliminary field test results in Table 15 show that the DIVAYANA model was effective in evaluating information technology-based learning at ICT vocational schools in the Bali province case study. This is evidenced by comparing the average effectiveness percentage in Table 15 with the five-scale achievement level conversion in Table 2. The effectiveness percentage of the DIVAYANA model (88.571%) falls in the good category because it lies in the 80%–89% range of the five-scale achievement level table.

Referring to Table 15, 15 valid questions were used in the preliminary field test of the DIVAYANA model. Questions 1 to 10 concerned the availability of facilities in each component: (1) in the description component, a facility to show the indicators of the causes behind the emergence of the evaluated object (information technology-based learning); (2) in the description component, a facility to show the indicators of constraints in implementing information technology-based learning; (3) in the input component, a facility to show the alternative problem-solving indicators; (4) in the verification component, a facility to show the evaluation standard indicators; (5) in the verification component, a facility to show the suitability check between the alternatives and the evaluation success standards; (6) in the action component, a facility to show the recapitulation of field trial results for the implementation of information technology-based learning; (7) in the Yack component, a facility to show the arguments mutually agreed upon by experts and evaluators in focus group design activities; (8) in the Yack component, a facility to show the recapitulation of decision-maker weights; (9) in the nominate component, a facility to show the process of calculating the recommendation priority ranking using the DIVAYANA formula; and (10) in the actualization component, a facility to show the actualization of the highest-priority recommendations in the implementation of information technology-based learning. Questions 11 to 15 concerned (11) the ease of implementing all stages of the DIVAYANA model evaluation, (12) the ease of the DIVAYANA formula calculation process, (13) the accuracy of the calculation results of the DIVAYANA formula, (14) the accuracy of the process of determining priority recommendations, and (15) the appearance of the DIVAYANA model design. The validation of the question contents and their full interpretation can be seen in Appendix J to Appendix N. These appendices present the questions from before they were judged through to the final 15 items used in the initial trials of the DIVAYANA model.

The main finding of this study is an effective DIVAYANA evaluation model for evaluating the implementation of information technology-based learning at ICT vocational schools. This was shown by the results of the trials conducted by experts, evaluators, and stakeholders regarding the effectiveness of the DIVAYANA model. The finding is important because it has a positive impact: it helps solve difficulties in the weight calculation process, where the weights are given jointly by the experts/decision-makers. Furthermore, the DIVAYANA model can also resolve the difficulty of determining priority recommendations in evaluations of information technology-based learning.

This is evidenced by the results of this study, which answer the limitations previously found in Gondikit's research and in Agustina and Mukhtaruddin's research by showing the priority recommendations ranked by level, as seen in full in Table 14. This study also answers the limitations of Prihaswati et al.'s research and Harjanti et al.'s research by showing the calculation process that uses the DIVAYANA formula to determine the priority of problem-solving alternatives, from the highest priority to the lowest, as a recommendation to facilitate decision making. The complete calculation process was shown in the results at the nominate stage of this study. This research further answers the constraints of Alqahtani and Rajkhan's research, Mohammed et al.'s research, and İnce et al.'s research by showing the process of calculating the criteria weights from the experts/decision-makers, which can be seen in full in Appendix G.

The weaknesses of previous studies shown in Table 1 reveal gaps between this research and previous studies. The gaps concern the absence of an accurate process for determining priority recommendations, which makes it difficult for decision-makers to make optimal decisions. This research answers the gaps by presenting the DIVAYANA model, which provides a process for determining priority recommendations based on calculation with the DIVAYANA formula. The contribution of this research is therefore to answer these gaps.

Compared with other educational evaluation models, the DIVAYANA evaluation model has an advantage in determining priority recommendations as the result of an evaluation activity. This is evidenced by the research of Suparman and Sangadji (2019) on the use of the CIPP model, which can only show improvement recommendations for evaluation aspects in the context, input, process, and product components. Their results did not show priority recommendations for the aspects that need special attention. This limitation can be avoided by performing the calculation that determines priority recommendations according to the DIVAYANA model stages.

The research of Herwin et al. (2020) concerned the use of the countenance evaluation model, which was only able to show the evaluation results in the description matrix and the consideration matrix of the evaluated object. Its limitation is its inability to show priority aspects of the recommendations for improvement in the consideration matrix. This limitation can be avoided by using the DIVAYANA evaluation model, which displays the recommendations by priority, ranging from low-priority to high-priority aspects.

The research of Rahman et al. (2018) concerned the use of the discrepancy evaluation model, which is only able to show the inequalities that occur in an evaluation activity. Their results did not indicate a solution in the form of the most suitable recommendation to overcome the inequality. The DIVAYANA model can therefore be used to avoid the limitations in the research of Rahman et al. It provides solutions in the form of appropriate recommendations to overcome imbalances that occur in the field by referring to calculation results compared with predetermined evaluation standards.

The priority recommendations obtained through the DIVAYANA model were determined from the calculation results of the DIVAYANA formula. These priority recommendations are very important to optimize aspects that really need more attention for improvement/enhancement.

The results of this research need to be understood and can be used by educational evaluators, especially those in informatics engineering education who conduct research on or evaluation of information technology-based learning processes. To understand the results of this study, educational evaluators should carry out direct trials and carefully implement the eight stages of the DIVAYANA evaluation model using valid data.

In general, the DIVAYANA model makes it easier for educational evaluators to determine the priority recommendations given to decision makers to optimize the implementation of information technology-based learning. Technically, the model makes it easier for evaluators to carry out an accurate mathematical calculation in determining priority recommendations based on the equality of weight values given by decision makers. In particular, with reference to the results of this study, the DIVAYANA model can in practice provide accurate evaluation results by showing priority recommendations in the form of aspects that need attention for improvement/refinement in the implementation of blended learning at ICT vocational schools.

Although this research offers a novelty in the form of a new educational evaluation model and answers the limitations of other evaluation studies, it also has limitations. The limitations specific to this study include: 1) no evaluation application has yet been built on the DIVAYANA model, which so far exists only as a design, 2) the DIVAYANA model simulation was performed only with dummy data and has not used large amounts of real field data, 3) only five stages of Borg and Gall's development model were used because of the limited time for conducting the research.

5. Conclusions

This research has provided an overview of the DIVAYANA model, which can be used properly and effectively as an evaluation model for the implementation of IT-based learning (especially blended learning) at ICT vocational schools in Bali province. The end result is a priority recommendation that makes it easier for decision makers to make the right decisions. The use of the DIVAYANA evaluation model is not limited to evaluating the IT-based learning process at ICT vocational schools in Bali; it can also be used in all evaluation fields and in all countries. This is because the DIVAYANA evaluation model focuses on determining the priority of recommendations for improving or enhancing the aspects used in an evaluation. The DIVAYANA evaluation model also does not prescribe a fixed, permanent standard for evaluation instruments. Evaluators can develop their own instruments according to their needs in evaluating a particular object. Future work to overcome the limitations of this research includes: 1) evaluating the implementation of IT-based learning with a more complex scope and large amounts of real data, 2) conducting further research on the development of an evaluation application based on the DIVAYANA model, 3) conducting further research that covers the remaining five stages of Borg and Gall's development model not carried out in this research.

Declarations

Author contribution statement

Dewa Gede Hendra Divayana: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

P. Wayan Arta Suyasa, Ni Ketut Widiartini: Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Funding statement

This work was supported by The Institute of Research and Community Services, Universitas Pendidikan Ganesha (760/UN48.16/LT/2020).

Data availability statement

The data that has been used is confidential.

Declaration of interests statement

The authors declare no conflict of interest.

Additional information

No additional information is available for this paper.

Appendix A. Supplementary data

The following is the supplementary data related to this article:

References

- Adams D., Sumintono B., Mohamed A., Noor N.S.M. E-learning readiness among students of diverse backgrounds in A leading Malaysian Higher Education Institution. Int. Malays. J. Learn. Instruct. 2018;15(2):227–256. http://mjli.uum.edu.my/images/vol.15no.2/227-256new.pdf [Google Scholar]

- Agustina N.Q., Mukhtaruddin F. The CIPP model-based evaluation on integrated English Learning (IEL) Program at Language Center. Engl. Lang. Teach. Educat. J. (ELTEJ) 2019;2(1):22–31. [Google Scholar]

- Alqahtani A.Y., Rajkhan A.A. E-Learning critical success factors during the COVID-19 pandemic: a comprehensive analysis of E-learning managerial perspectives. Educ. Sci. 2020;10(216):1–16. [Google Scholar]

- Ardayati, Herlina Teaching reading comprehension by using herringbone technique to the eighth grade students of SMP Negeri 11 Lubuklinggau. J. Engl. Educat. Lit. Ling. 2020;3(1):79–85. [Google Scholar]

- Atef H., Medhat M. Blended learning possibilities in enhancing education, training and development in developing countries: a case study in graphic design courses. TEM J. 2015;4(4):358–365. https://www.temjournal.com/content/44/07/TemJournalNovember2015_358_365.pdf [Google Scholar]

- Bralić A., Divjak B. Integrating MOOCs in traditionally taught courses: achieving learning outcomes with blended learning. Int. J. Educat. Technol. Higher Educat. 2018;15(2):1–16. [Google Scholar]

- Divayana D.G.H. 2020. DIVAYANA Evaluation Model. Jakarta: Ministry of Law and Human Rights of the Republic of Indonesia.https://drive.google.com/file/d/1pnXUgVS7s0NHIcg6YOJDQ_URxWNREFwq/view?usp=sharing Copyright Number: 000197532. [Google Scholar]

- Divayana D.G.H. Utilization of DIVAYANA formula in evaluating of suitable platforms for online learning in the social distancing. Int. J. Interact. Mobile Technol. 2020;14(20):50–75. [Google Scholar]

- Dziuban C., Graham C.R., Moskal P.D., Norberg A., Sicilia N. Blended learning: the new normal and emerging technologies. Int. J. Educat. Technol. Higher Educat. 2018;15(3):1–16. [Google Scholar]

- Fazlina A. An analysis of college entrance test. Engl. Educ. J. 2018;9(2):192–215. http://jurnal.unsyiah.ac.id/EEJ/article/view/11528 [Google Scholar]

- Fikri H., Madona A.S., Morelent Y. The practicality and effectiveness of interactive multimedia in Indonesian language learning at the 5th grade of elementary school. J. Soc. Sci. Res. 2018;2:531–539. Special Issue. [Google Scholar]

- Ghavifekr S., Rosdy W.A.W. Teaching and learning with technology: effectiveness of ICT integration in schools. Int. J. Res. Educat. Sci. 2015;1(2):175–191. https://www.ijres.net/index.php/ijres/article/view/79 [Google Scholar]

- Gondikit T.J. The evaluation of post PT3 program using stake’s countenance model. Malays. J. Soc. Sci. Hum. (MJ-SSH) 2018;3(4):109–118. [Google Scholar]

- Harahap F., Nasution N.E.A., Manurung B. The effect of blended learning on student’s learning achievement and science process skills in plant tissue culture course. Int. J. InStruct. 2019;12(1):521–538. http://www.e-iji.net/dosyalar/iji_2019_1_34.pdf [Google Scholar]

- Harjanti R., Supriyati Y., Rahayu W. Evaluation of learning programs at elementary school level of “Sekolah Alam Indonesia (SAI)”: evaluative research using countenance stake’s model. Am. J. Educ. Res. 2019;7(2):125–132. [Google Scholar]

- Herwin, Jabar C.S.A., Senen A., Wuryandani W. The evaluation of learning services during the COVID-19 pandemic. Univ. J. Educat. Res. 2020;8(11B):5926–5933. [Google Scholar]

- İnce M., Yiğit T., Işık A.H. AHP-TOPSIS method for learning object metadata evaluation. Int. J. Informat. Educat. Technol. 2017;7(12):884–887. [Google Scholar]

- Kanwar A., Balasubramanian, Carr A. Changing the TVET paradigm: new models for lifelong learning. Int. J. Train. Res. 2019;17:54–68. [Google Scholar]

- Katemba C.V., Samuel Improving student’s reading comprehension ability using Jigsaw 1 technique. Acuity: J. Engl. Lang. Pedagogy Lit. Cult. 2017;2(2):82–102. [Google Scholar]

- Kerzˇič D., Tomazˇevič N., Aristovnik A., Umek L. Exploring critical factors of the perceived usefulness of blended learning for higher education students. PloS One. 2019;14(11):1–18. doi: 10.1371/journal.pone.0223767. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keskin S., Yurdugül H. Factors affecting students’ preferences for online and blended learning: motivational vs cognitive. Eur. J. Open Dist. E Learn. 2019;22(2):71–85. [Google Scholar]

- Li Y.W. Transforming conventional teaching classroom to learner-centred teaching classroom using multimedia-mediated learning module. Int. J. Informat. Educat. Technol. 2016;6(2):105–112. [Google Scholar]

- Mantasiah R., Yusri, Jufri The development of grammar teaching material using error and contrastive analysis (A linguistic approach in foreign language teaching) TESOL Int. J. 2018;13(3):2–13. https://files.eric.ed.gov/fulltext/EJ1247441.pdf [Google Scholar]

- Medina L.C. Blended learning: deficits and prospects in higher education. Australas. J. Educ. Technol. 2018;34(1):42–56. [Google Scholar]

- Mohammed H.J., Kasim M.M., Shaharanee I.N. Evaluation of E-learning approaches using AHP-TOPSIS technique. J. Telecommun. Electron. Comput. Eng. 2018;10(1-10):7–10. https://journal.utem.edu.my/index.php/jtec/article/view/3783 [Google Scholar]

- Perera-Diltz D.M., Moe J.L. Formative and summative assessment in online education. J. Res. Innovat. Teach. 2014;7(1):130–142. https://digitalcommons.odu.edu/chs_pubs/37 [Google Scholar]

- Prihaswati M., Purnomo E.A., Sukestiyarno, Mulyono . The 3rd International Seminar on Education and Technology, Semarang, Indonesia, May 2017. 2017. UCLA method: the character education evaluation on basic mathematics learning in higher education; pp. 55–60.https://jurnal.unimus.ac.id/index.php/psn12012010/article/view/2752 [Google Scholar]

- Prima E., Lestari P.I. International Conference on Fundamental and Applied Research, Bali, Indonesia, October 2019. 2019. The implementation of token economy to improve the responsibility of early childhood’s behavior; pp. 191–197.https://jurnal.undhirabali.ac.id/index.php/icfar/article/view/969 [Google Scholar]

- Rahman H.A., Affandi H.M., Matore M.E.M. Evaluating school support plan: a proposed conceptual framework using discrepancy evaluation model. Int. J. Educat. Psychol. Couns. 2018;3(17):49–56. http://www.ijepc.com/PDF/IJEPC-2018-17-09-05.pdf [Google Scholar]

- Ramadhani R., Umam R., Abdurrahman A., Syazali M. The effect of flipped-problem based learning model integrated with LMS-google classroom for senior high school students. J. Educat. Gifted Young Sci. 2019;7(2):137–158. [Google Scholar]

- Retnawati Proving content validity of self-regulated learning scale (the comparison of Aiken Index and Expanded Gregory Index) Res. Evaluat. Educat. 2016;2(2):155–164. [Google Scholar]

- Sadeghi M. A shift from classroom to distance learning: advantages and limitations. Int. J. Res. Engl. Educat. 2019;4(1):80–88. http://ijreeonline.com/article-1-132-en.html [Google Scholar]

- Sharma P. Digital revolution of education 4.0. Int. J. Eng. Adv. Technol. 2019;9(2):3558–3564. https://www.ijeat.org/wp-content/uploads/papers/v9i2/A1293109119.pdf [Google Scholar]

- Singh B., Mishra P. Process of teaching and learning: a paradigm shift. Int. J. Educ. 2017;7:31–38. http://ijoe.vidyapublications.com/Issues/Vol7/06-Vol7.pdf [Google Scholar]

- Sohrabi B., Vanani I.R., Iraj H. The evolution of E-learning practices at the University of Tehran: a case study. Knowl. Manag. E-Learn. 2019;11(1):20–37. [Google Scholar]

- Sugihartini N., Sindu G.P., Dewi K.S., Zakariah M., Sudira P. Vol. 394. 2019. Improving teaching ability with eight teaching skills; pp. 306–310. (3rd International Conference on Innovative Research across Disciplines (ICIRAD 2019), Advances in Social Science, Education and Humanities Research). [Google Scholar]

- Suparman E., Sangadji K. Evaluation of learning programs with the CIPP Model in College A Theoretical Review. Int. J. Educat. Informat. Technol. Others. 2019;2(1):120–127. [Google Scholar]

- Suyasa P.W.A., Kurniawan P.S., Ariawan I.P.W., Sugandini W., Adnyawati N.D.M.S., Budhyani I.D.A.M., Divayana D.G.H. Empowerment of CSE-UCLA model based on Glickman Quadrant Aided by Visual application to evaluate the blended learning program on SMA Negeri 1 Ubud. J. Theor. Appl. Inf. Technol. 2018;96(18):6203–6219. http://www.jatit.org/volumes/Vol96No18/26Vol96No18.pdf [Google Scholar]

- Thanabalan T.V., Siraj S., Alias N. Evaluation of a digital story pedagogical module for the indigenous learners using the stake countenance model. TOJET - Turkish Online J. Educ. Technol. 2015;14(2):63–72. http://www.tojet.net/articles/v14i2/1429.pdf [Google Scholar]

- Thurab-Nkhosi D. The evaluation of A blended faculty development course using the CIPP framework. Int. J. Educ. Dev. using Inf. Commun. Technol. (IJEDICT) 2019;15(1):245–254. http://ijedict.dec.uwi.edu/viewarticle.php?id=2539 [Google Scholar]
