Abstract

This paper presents an underwater high-precision line laser three-dimensional (3D) scanning (LLS) system with rotary scanning mode, which is composed of a low illumination underwater camera and a green line laser projector. The underwater 3D data acquisition can be realized in the range of field of view of 50° (vertical) × 360° (horizontal). We compensate the refraction of the 3D reconstruction system to reduce the angle error caused by the refraction of light on different media surfaces and reduce the impact of refraction on the image quality. In order to verify the reconstruction effect of the 3D reconstruction system and the effectiveness of the refraction compensation algorithm, we conducted error experiments on a standard sphere. The results show that the system’s underwater reconstruction error is less than 0.6 mm within the working distance of 140 mm~2500 mm, which meets the design requirements. It can provide reference for the development of low-cost underwater 3D laser scanning system.

Keywords: 3D reconstruction, underwater laser scanning, laser sensor, high precision

1. Introduction

In recent years, three-dimensional (3D) terrain data and scene reconstruction technology have been gradually applied to underwater imaging applications. Due to the disadvantages of traditional acoustic detection, such as it being easily affected by underwater noise, non-intuitive imaging, and poor visualization effect, optical 3D reconstruction technology gradually plays its advantages in underwater operations. For example, in terms of underwater engineering construction [1], laser 3D reconstruction technology can provide more accurate and visualized 3D site surveys for underwater construction projects, used to check the structure of subsea instruments and the wear status of pipelines. In military applications [2], laser 3D reconstruction technology can be used to detect and monitor the underwater targets, providing real-time data support for underwater military rescue. In terms of marine scientific research, laser 3D reconstruction technology can explore seabed resources and map seabed topography [3,4]. In addition, it can also be applied to biological survey [5,6], archaeology [7,8,9,10], sea bottom topography description, etc. [11,12].

Linear laser 3D reconstruction is an active optical measurement technology that belongs to structured light 3D reconstruction technology. The basic principle is that the structured light projector projects a controllable light strip onto the surface of the object to be measured, the image is obtained by the image sensor (such as camera), and the 3D coordinates of the object are calculated by the triangulation through the geometric relationship of the system [13]. Also, there are light spot and light beam laser scanning technology [14]. The light spot type is to scan the object point by point; however, the disadvantage is that image acquisition and post-processing are time-consuming and difficult to complete real-time measurement. Light plane type is to project a two-dimensional plane to an object, and the measurement speed is the fastest. It is usually used to project grating stripes, which is usually used in the air. The linear laser scanning sensor system is composed of a low-light underwater camera, a green line laser projector, and a scanning turntable. The calibration of the system includes the calibration of camera parameters and the calibration of system structure parameters. Through calibration, we can obtain the conversion relationship between the two-dimensional pixel coordinate system and the three-dimensional camera coordinate system, and then obtain the equation of the laser stripe on the plane target in the three-dimensional space. A series of solving and fitting algorithms can finally obtain the plane equation of the light plane in the camera coordinate system. In the early stage of linear laser sensor calibration, the mechanical method was proposed first and widely used. Later, the idea based on the cross ratio invariant method was developed to get rid of the shackles of precision platform in principle. A certain number of high precision calibration points can be obtained by using the specially designed stereo target to complete the calibration [15,16,17,18]. Other methods, such as hidden point, three-point perspective model, active vision, and binocular stereo vision, have certain applications on some specific occasions, greatly enriching the calibration method of the linear structured light sensor [19,20,21,22,23,24].

Michael Bleier et al. developed a laser scanning system with the wavelength of 525 nm to scan the objects in the water tank statically and dynamically. From the obtained point cloud data, the reconstruction errors of the two state systems are less than 5 cm [25]. Jules S. Jaffe [26] invented a line laser scanning imaging system for underwater robots, which effectively reduces backscatter and volume scattering by using large-scale camera splitting, scanning, or pulse systems. Yang Yu et al. [27] studied a multi-channel RGB laser scanning system and proposed a high-resolution underwater true-color three-dimensional reconstruction method with three primary color lasers as the active light source, which can target objects including human faces. Scanning and reconstruction of the millimeter level are performed while restoring the true color texture information of the target. Xu Wangbo et al. [28] designed and implemented an underwater object measurement system based on multi-line structured light. When the measurement distance is 2.45 m, the average error reaches 1.6443 mm, which has high measurement accuracy. At present, many research institutions have done a lot of work on image, point cloud processing, and water scattering correction, and good experimental results have been obtained. However, in the process of underwater experiments, the influence of refraction caused by different media surfaces on the viewing angle error and imaging quality still needs more attention [29].

In order to realize the high-precision 3D reconstruction of underwater target and better utilize the advantages of the optical 3D reconstruction system in underwater operations, this paper designs and develops an underwater high-precision 3D reconstruction system based on rotary scanning, and proposes a refraction compensation method for the system. This article will describe in detail the calibration principle and system structure involved in the reconstruction process in the second and third sections. The specific experimental process will be introduced in Section 4. Then, we will perform error analysis on the standard sphere with known radius, compare the reconstruction errors of the system in two different environments of air and water, and calculate the reconstruction accuracy of underwater objects before and after using the algorithm. The specific work contents are as follows.

2. Determination of System Parameters and Establishment of Light Plane

In the reconstruction of laser three-dimensional scanning, the conversion of the coordinate system and a series of calibration algorithms are involved, including the calibration of the internal and external parameters of the camera, and the determination of the laser plane equation of the system. Through calibration, the two-dimensional pixel coordinates obtained by the camera can be converted into three-dimensional point cloud coordinates. In the process of obtaining the point cloud, we added the refraction correction algorithm of the waterproof device to improve the system reconstruction accuracy.

2.1. Coordinate System Conversion

The calibration process of the system involves the conversion between image coordinate system (including pixel coordinate system and physical coordinate system), camera coordinate system, and world coordinate system. As shown in Figure 1, plane ABC is the laser plane, and is the camera focal length. We set as the world coordinate system, as the camera coordinate system, as the pixel coordinate system, and as the physical coordinate system.

Figure 1.

Geometric relations in coordinate system transformation.

The relationship between a point in the world coordinate system and a point in the camera coordinate system is as follows:

| (1) |

where and are the rotation matrix and translation matrix of the camera coordinate system relative to the world coordinate system.

According to the triangle similarity principle, the relationship between the point in the camera coordinate system and in the image coordinate system can be obtained

| (2) |

The conversion relationship between the point in the image coordinate system and the point in the camera coordinate system is shown in the following formula:

| (3) |

where and represent the physical size of unit pixel in x-axis and y-axis, respectively. To sum up, the conversion relationship of points from the pixel coordinate system to the world coordinate system can be obtained:

| (4) |

2.2. Camera Calibration

Camera calibration can be divided into traditional camera calibration method and camera self-calibration method [30,31]. The methods that are commonly used include the traditional Tsai calibration method and Zhang Zhengyou calibration method, which is between traditional and self-calibration methods. In this paper, the method we used is the Zhang Zhengyou calibration method. By taking photos of the calibration plate in different directions, two groups of parameters of the camera are directly obtained, which are the external parameters of spatial transformation and the internal parameters of the camera itself. Using the external and internal parameters, the corresponding relationship between the pixel coordinates of the image and the three-dimensional coordinates in the space can be established, that is, the three-dimensional space information can be obtained from the two-dimensional image [32,33]. The Zhang Zhengyou calibration method does not need to know the movement mode of the calibration plate, which avoids high equipment demand and complicated operation, and has higher accuracy and better robustness than the self-calibration method [34,35].

In the process of camera calibration, it is necessary to extract the corner coordinates of the checkerboard plane target. The most commonly used feature detection algorithms in the field of computer vision are Harris, SIFT, SURF, FAST, BRIEF, ORB, etc. The SIFT (Scale Invariant Feature Transform) algorithm proposed by David G. Lowe is based on the feature point extraction of the DoG (Difference of Gaussian) pyramid scale space [36]. The advantages are stable features and rotation invariance and the disadvantage is that the ability to extract feature points for smooth-edge targets is weak. The SURF (Speeded Up Robust Feature) algorithm is an improvement of the SIFT algorithm proposed by David Lowe in 1999 [37], which improves the execution efficiency of the algorithm and provides the possibility for the application of the algorithm in real-time computer vision systems. ORB (Oriented FAST and Rotated BRIEF) is a fast algorithm for feature point extraction and description [38,39]. It was written by Ethan Rublee, Vincent Rabaud, et al. in 2011 entitled “ORB: An Efficient Alternative to SIFT or SURF;” they proposed using the FAST algorithm for feature point extraction, and using the BRIEF algorithm to describe feature points. By combining the advantages of the FAST [40,41] and BRIEF algorithm [42,43] for fast feature point detection and simple description, and improving on them, it solves the complexity of SIFT calculation and the lack of rotation invariance, scale invariance, noise sensitivity, and other shortcomings.

This paper uses the classic Harris corner detection algorithm, which has the advantage of simple calculation and can easily recognize the gray level changes and translational rotation changes of flat images. Experimental verification shows that the Harris algorithm is suitable for the underwater rotating scanning system proposed in this paper, and can extract limited feature points on a planar target. In addition, corner points can also be accurately detected under noise interference, which has high stability and robustness. The basic idea is that the recognition of the human eye corner is usually completed in a local small area or small window [44]. If the small window with this feature is moved in all directions, the gray level of the area in the window changes greatly, and then it is considered that the corner is encountered in the window. The general steps are as follows:

Firstly, calculate the image matrix , and each pixel of the image is filtered by using the horizontal and numerical difference operators to get the values of and , and then the four elements in are obtained:

| (5) |

Then, the four elements of are filtered by Gaussian smoothing to get a new The discrete two-dimensional mean Gaussian function is:

| (6) |

Use to calculate the amount of corners corresponding to each pixel Cim:

| (7) |

When a point satisfies that Cim is greater than the threshold and is a local maximum in a neighborhood, it can be considered as a corner.

Some of the extraction results are shown in Figure 2, and the pixel coordinates of some corner points of the calibration board are shown in Table 1.

Figure 2.

Some corners extracted by Harris algorithm.

Table 1.

Pixel coordinates of some corners of calibration board.

| Index | u(pix.) | v(pix.) |

|---|---|---|

| 1 | 1148.465576 | 1012.713867 |

| 2 | 800.38644746 | 1893.737305 |

| 3 | 497.802887 | 1882.058228 |

| 4 | 193.6052246 | 1868.337646 |

| 5 | 497.802887 | 1882.058228 |

| 6 | 264.0452271 | 363.3004456 |

2.3. Calibration of System Structure Parameters

The calibration of sensor structure parameters is the position equation of the laser plane relative to camera. The main calibration methods include the mechanical adjustment method [45], filament scattering method [46,47], and cross ratio invariant method [48]. From the angle of target, it can be divided into the three-dimensional target, plane target, one-dimensional target, and no target [49,50,51,52]. In this paper, a new method of line laser calibration is used. By combining the linear equation formed by the optical center and the point on the light strip and the plane equation of the target in the camera coordinate system, the equation of the line in the camera coordinate system can be obtained. Finally, an equation that describes the plane in the camera coordinate system can be obtained by using the least square method. Let the plane equation be . The specific algorithm flow is shown in Figure 3.

Figure 3.

Flow chart of laser plane equation calibration.

2.4. Refraction Compensation of Underwater 3D Reconstruction System

When working underwater, the instruments are sealed in a waterproof device, so the underwater target and the laser scanning system are separated in media with different refractive indices. When the camera photographs the underwater object, the light passes through the interface of the water-plane glass waterproof cover and the glass waterproof cover-air. After being refracted twice, the object is finally being imaged on the image surface of the camera. According to the points on the image plane of the camera, we perform reverse calculations on the refraction light path to find the intersection point. This intersection point is the actual image point of the measured object after removing the effect of refraction [53,54,55,56]. Since the light is refracted twice at the waterproof cover, and the thickness of the waterproof cover is thin (negligible), the imaging process in the water is simplified as shown in Figure 4, where the plane of the waterproof cover is parallel to the imaging plane of the camera.

Figure 4.

Refraction caused by watertight devices.

In view of the above situation, this section will compensate the system from two aspects. They are the offset compensation of the imaging point on the CCD object surface and the laser plane at the sealing glass.

2.4.1. Refraction Compensation of Light Plane

As shown in Figure 4, the light plane A in the air is refracted by the glass surface to obtain the underwater light plane B. is the laser projection angle, is the angle between the light plane A and the normal, is the is the angle between the normal to A and the normal to C, the is the angle between the light plane B and the normal C, and is the angle between the normal B and the normal C.

Suppose the normal vector of the refractive surface is , the normal vector of the light plane A is , the normal vector of the light plane B is , and the relative refractive index of water and air is . From the law of refraction, we can get that:

| (8) |

where is the refractive index of air and is the refractive index of water.

| (9) |

Since the normal vector of the light plane B is a unit vector, then:

| (10) |

According to (6), it can be concluded that:

| (11) |

| (12) |

According to the simultaneous Formulas (11) and (12), it can be concluded that:

| (13) |

The coordinates of intersection point D between light plane A and light plane B and glass surface are, where H is the distance from camera optical center to glass surface, and the equation of light plane B is as follows:

| (14) |

Substituting the coordinate of point d into (11), we can get the following results:

| (15) |

After simplification, we can get the following conclusions:

| (16) |

In conclusion, the laser plane equation after refraction can be calculated:

| A’x + B’y + C’z + D’ = 0. | (17) |

2.4.2. CCD Image Coordinate Refraction Compensation

It can be seen from the Figure 4 that the imaging point of the underwater target on the image plane is . If it is in the air, the direct imaging point is . There is a relationship between and :

| (18) |

For each measured point, refraction correction can be realized as long as it is obtained.

| (19) |

and because:

| (20) |

| (21) |

where is the focal length of the camera, point is known; when a condition is satisfied , the results are as follows:

| (22) |

Substituting the results of refraction compensation into the camera calibration program and coordinate conversion formula, the compensated three-dimensional coordinates can be obtained.

3. Rotary Scanning System

Linear laser 3D rotation system mainly includes optical system and mechanical structure. The optical system is composed of laser, camera, and LED, as it shown in Figure 5.

Figure 5.

Mechanical model of the rotating scanning system. (a) Optical system; (b) System mechanical structure with variable included angle; (c) Rotating stage; (d) The overall model of the system.

The distance between the camera and the laser is 250 mm, and they are, respectively, installed in two independent watertight chambers, fixed on both sides of the support frame to maintain their relative position. The turntable is controlled by the host computer, and the system can scan at a fixed position within the range of 50° × 360°. The specific model of the system is in Figure 5. Some parameters of camera, laser and system performance are shown in Table 2:

Table 2.

Mechanical model of rotary scanning system.

| Performance | |

|---|---|

| Measuring Method | Triangulation |

| Scan Range | Minimum: 0.14 m | Maximum: 2.5 m |

| Field of View | 360°Pan 90°Tilt |

| Operating Temperature | -10℃–40℃ |

| Pan and Roll Accuracy | 0.1° |

| Pitch and Roll Accuracy | ±0.25° |

| Laser | |

| Wavelength | 520 nm |

| Electric Current | ≤300 mA |

| Power Supply | 50 mW |

| Camera | |

| Model | WP-UT530M |

| Pixel Size | 4.84.8 μm |

| Resolution | 2592×2048 |

| Frame Rate | 60 FPS |

| Exposure Time | 16 μs–1 s |

| Spectral Range | 380–650 nm |

4. Performance Tests

4.1. Three-Dimensional Reconstruction in Air

We choose a checkerboard with a side length of 25 mm as the calibration target. By changing the angle of the rotation and tilt of the target, 12 pictures of the checkerboard in different poses under the camera coordinate system can be obtained. Through Zhang Zhengyou’s calibration method, the camera’s internal parameters and calibration results include external parameters and distortion coefficients. The whole calibration process includes the original image acquisition, chessboard corner extraction, reprojection error, and other steps. The result is shown in Figure 6.

Figure 6.

Camera calibration process in the air and some corner points extraction results. (a) Twelve Calibration boards with different attitude; (b) Corner extraction results of image.

We put the fixture in the air for a scanning experiment. Through the coordinate transformation and the solution of the light plane equation, we can finally get the three-dimensional point cloud coordinates of the fixture. After noise reduction, the final three-dimensional point cloud of the fixture is shown in the Figure 7b.

Figure 7.

Three-dimensional (3D) reconstruction point cloud in air. (a) Measured object in the air; (b) Point cloud of object.

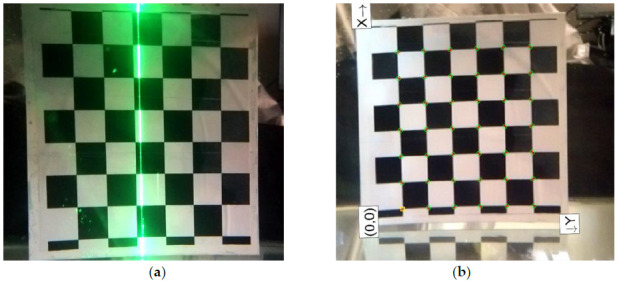

4.2. 3D Reconstruction of Underwater Target

From the above experimental results, the system can complete the 3D point cloud coordinate extraction of the water target, and reconstruct the characteristics of the object, which verifies the feasibility of the system in the air. The next step is to put the scanning system in water and calibrate it again. It is known that light will refract on passing through different media, and underwater calibration can better reduce the influence of laser light refraction on the reconstruction accuracy, and can better scan the conch in the water to verify the reconstruction effect of the underwater system. First, we calibrate the chessboard target underwater, as shown in Figure 8.

Figure 8.

Underwater chessboard calibration. (a) Checkerboard calibration; (b) Corner extraction of underwater chessboard.

After calibration, the internal parameter matrix of the camera is as follows:

| (23) |

Other related parameters include camera focal length, principal point, image size, radial distortion, and tangential distortion, as shown in the Table 3 below:

Table 3.

Some parameters of the system.

| Parameter | Result (Pix.) |

|---|---|

| Focal Length | [ ] |

| Principal Point | [ ] |

| Image Size | [2048 2592] |

| Radial Distortion | [−0.1380 15.7017] |

| Tangential Distortion | [0 0] |

After getting the calibration results of the system structure parameters, we fit the laser plane projected by the laser. Finally, the laser plane equation is obtained as follows:

| (24) |

The parameters corresponding to the light plane equation in Section 2.3 are: A = 0.019092; B = 0.128103; C = 1; D = 1498.888393.

We selected the conch as the scanning object, as shown in the Figure 9a. The 3D point cloud information of a single frame image can be obtained by converting the two-dimensional image data into three-dimensional point cloud coordinates through system parameter calibration. According to the motion mode of the turntable, the three-dimensional point cloud data can be spliced. Finally, the laser scanning results are obtained. The three-dimensional point cloud image of conch is as follows:

Figure 9.

3D reconstruction results of underwater conch. (a) Conch placed in the water; (b) 3D point cloud of conch.

4.3. Error Analysis

In order to measure the accuracy of the system, a standard ball with a radius of 20.01 mm is scanned and reconstructed in the range of 500 mm to 1200 mm. The two-dimensional coordinates of the scanned object surface are obtained by using the laser stripe center extraction algorithm, and then the three-dimensional point cloud coordinates of the standard sphere are obtained by using the coordinate transformation formula. The standard sphere radius can be obtained by calculation, and the fringe extraction result is shown in Figure 10.

Figure 10.

Measurement value and error of standard ball at different distances. (a) Reconstruction of standard sphere in air; (b) Extraction of laser stripe center in air; (c) Reconstruction of standard sphere in water; (d) Stripe center extraction of underwater standard sphere.

The reconstruction results in air and underwater are shown in Figure 11. The average measurement error of the standard sphere in the air is less than 0.16 mm, and the average error after refraction corrected in water is less than 0.6 mm. In addition, we can also conclude that as the measurement distance increases, the error gradually increases.

Figure 11.

Relationship between the measured value of standard error sphere radius and the measured distance. (a) Reconstruction error results in water and air; (b) Reconstruction of standard ball diameter at different distances.

In order to verify the effectiveness of the underwater refraction correction algorithm, we use different methods to carry out underwater measurement experiments, for example, we select a standard ball with a radius of 20.01 mm as the measured object, select 12 different positions in the field of view for measurement, and calculate the measurement radius of the standard ball without refraction correction algorithm and with refraction correction algorithm, respectively. is the underwater measurement radius without the refraction correction algorithm, and is the measurement radius with the refraction correction. The detailed measurement results are shown in Table 4:

Table 4.

Measurement result and error of standard ball.

| Distance(mm) | R(mm) | Error(mm) | ||

|---|---|---|---|---|

| 500 | 24.541 | 4.531 | 20.117 | 0.107 |

| 550 | 24.995 | 4.985 | 20.091 | 0.081 |

| 600 | 25.336 | 5.326 | 20.195 | 0.185 |

| 650 | 25.365 | 5.355 | 20.233 | 0.223 |

| 700 | 24.379 | 4.369 | 20.295 | 0.285 |

| 750 | 25.52 | 5.51 | 19.742 | 0.268 |

| 800 | 25.993 | 5.983 | 20.347 | 0.337 |

| 850 | 26.238 | 6.228 | 19.646 | 0.364 |

| 900 | 26.862 | 6.852 | 20.468 | 0.458 |

| 950 | 25.999 | 5.989 | 20.503 | 0.493 |

| 1000 | 27.109 | 7.099 | 20.583 | 0.573 |

| 1200 | 27.022 | 7.012 | 19.414 | 0.596 |

| Average | 25.780 | 5.770 | 20.136 | 0.331 |

| Max | 27.109 | 7.099 | 20.583 | 0.596 |

It can be seen from Table 4 that the measurement error of standard ball is within 7.1 mm without refraction correction, and the maximum measurement error is 7.099 mm. The average radius of standard sphere is 25.780 mm. After adding refraction correction, the maximum error of reconstructed standard sphere radius is 0.596 mm, and the error range is within 0.6 mm. It can be seen that the reconstruction accuracy of the system has been significantly improved after adding the refraction correction algorithm. It is proved that the algorithm proposed in this paper is accurate and effective.

5. Conclusions

In this paper, an underwater laser 3D scanning system based on rotation scanning is proposed, which is composed of line laser, underwater camera and turntable, realizing rotation scanning in the range of 50 ° (vertical) × 360 ° (horizontal). In order to achieve high-precision and high-resolution reconstruction results, this paper proposes a refraction correction algorithm for watertight devices, carries out three-dimensional reconstruction experiments on underwater targets, and obtains three-dimensional point clouds. Finally, in order to verify the feasibility of the system and the effectiveness of the algorithm, we use a standard ball with a radius of 20.01 mm for error analysis, showing that the underwater 3D reconstruction error of the system is less than 0.6 mm, which further proves that the line laser scanning system with low cost and simple structure can replace the expensive professional optical depth sensor and provide a new reference for underwater 3D laser reconstruction technology.

Acknowledgments

The authors would like to appreciate the technical editor and anonymous reviewers for their constructive comments and suggestions on this study.

Author Contributions

Conceptualization, Q.X. and Q.S.; methodology, Q.X. and Q.S.; software, H.B.; validation, Q.S.; writing—original draft preparation, Q.S.; writing—review and editing, F.W., B.Y. and Q.L.; project administration, Q.X.; funding acquisition, Q.X. All authors modified and approved the final submitted materials. All authors have read and agreed to the published version of the manuscript.

Funding

National Defense Scientific Research Joint Cultivation Project (202065004); Jilin Scientific and Technological Development Program (20190302083GX); Key deployment project of the Marine Science Research Center of the Chinese Academy of Sciences (COMS2019J04); National Key Research and Development Program of China (2019YFC1408300, 2019YFC1408301); National Natural Science Foundation of China-Shandong Joint Fund (U2006209); Natural Science Foundation of China (52001295).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Coiras E., Petillot Y., Lane D.M. Multiresolution 3-D reconstruction from side-scan sonar images. IEEE Trans. Image Process. 2007;16:382–390. doi: 10.1109/TIP.2006.888337. [DOI] [PubMed] [Google Scholar]

- 2.He X., Tong N., Hu X. High-resolution imaging and 3-D reconstruction of precession targets by exploiting sparse apertures [J] IEEE Trans. Aerosp. Electron. Syst. 2017;53:1212–1220. doi: 10.1109/TAES.2017.2668058. [DOI] [Google Scholar]

- 3.Lirman D., Gracias N., Gintert B., Gleason A.C., Deangelo G., Dick M., Martinez E., Reid R.P. Damage and recovery assessment of vessel grounding injuries on coral reef habitats by use of georeferenced landscape video mosaics. Limnol. Oceanogr. Methods. 2010;8:88–97. doi: 10.4319/lom.2010.8.0088. [DOI] [Google Scholar]

- 4.Schjølberg I., Gjersvik T.B., Transeth A.A., Utne I.B. Next generation subsea inspection, Maintenance and Repair Operations. IF AC PapersOnLine. 2016;49:434–439. [Google Scholar]

- 5.Gomes L., Bellon O.R.P., Silva L. 3D reconstruction methods for digital preservation of cultural heritage: A survey. Pattern Recognit. Lett. 2014;50:3–14. doi: 10.1016/j.patrec.2014.03.023. [DOI] [Google Scholar]

- 6.Tetlow S., Allwood R.L. The use of a laser stripe illuminator for enhanced underwater viewing. Proc. SPIE. 1994;2258:547–555. [Google Scholar]

- 7.Meline A., Triboulet J., Jouvencel B. Comparative study of two 3D reconstruction methods for underwater archaeology; Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems; Algarve, Portugal. 7–12 October 2012; pp. 740–745. [Google Scholar]

- 8.Eric M., Kovacic R., Berginc G., Pugelj M., Stopinsek Z., Solina F. The impact of the latest 3D technologies on the documentation of underwater heritage sites; Proceedings of the IEEE Digital Heritage International Congress; Marseille, France. 28 October–1 November 2013; pp. 281–288. [Google Scholar]

- 9.Bruno F., Gallo A., Muzzupappa M., Daviddde Petriaggi B., Caputo P. 3D documentation and monitoring of the experimental cleaning operations in the underwater archaeological site of Baia (Italy); Proceedings of the IEEE Digital Heritage International Congress; Marseille, France. 28 October–1 November 2013; pp. 105–112. [Google Scholar]

- 10.Skarlatos D. Project iMARECULTURE: advanced VR, iMmersive serious games and augmented REality as tools to raise awareness and access to European underwater CULTURal heritagE; Proceedings of the Euro-Mediterranean Conference; Nicosia, Cyprus. 31 October–5 November 2016. [Google Scholar]

- 11.Johnson-Roberson M., Bryson M., Friedman A., Pizarro O., Troni G., Ozog P., Henderson J.C. High-resolution underwater robotic vision-based mapping and three-dimensional reconstruction for archaeology. J. Field Robot. 2017;34:625–643. doi: 10.1002/rob.21658. [DOI] [Google Scholar]

- 12.Ulrik J., Skjetne R. Real-time 3D Reconstruction of Underwater Sea-ice Topography by Observations from a Mobile Robot in the Arctic. Ifac Proc. Vol. 2013;46:310–315. [Google Scholar]

- 13.Norström C. Underwater 3-D imaging with laser triangulation. [(accessed on 12 February 2021)]; Available online: https://www.diva-portal.org/smash/get/diva2:21659/FULLTEXT01.pdf.

- 14.Usamentiaga R., Molleda J., Garcia D.F. Fast and robust laser stripe extraction for 3D reconstruction in industrial environments. Mach. Vis. Appl. 2012;23:179–196. doi: 10.1007/s00138-010-0288-6. [DOI] [Google Scholar]

- 15.Bruno F., Bianco G., Muzzupappa M., Barone S., Razionale A. Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS J. Photogramm Remote Sens. 2011;66:508–518. [Google Scholar]

- 16.Bianco G., Gallo A., Bruno F., Muzzupappa M. A comparative analysis between active and passive techniques for underwater 3D reconstruction of close-range objects. Sensors. 2013;13:11007–11031. doi: 10.3390/s130811007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Salvi J., Fernandez S., Pribanic T., Llado X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 2010;43:2666–2680. doi: 10.1016/j.patcog.2010.03.004. [DOI] [Google Scholar]

- 18.Bradski G., Kaehler A. Camera Model and Calibration. In: Kaiqi W., editor. Learning OpenCV. 1st ed. Tsinghua University Press; Beijing, China: 2009. pp. 427–432. [Google Scholar]

- 19.Miguel C., Palomer A., Forest J., Ridao P. State of the art of underwater active optical 3D Scanners. Sensors. 2019;19:51–61. doi: 10.3390/s19235161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Zhang G.J., Wei Z.Z. A novel calibration approach to structured light 3D vision inspection. Opt. Laser Technol. 2002;34:373–380. doi: 10.1016/S0030-3992(02)00031-2. [DOI] [Google Scholar]

- 21.Ben-Hamadou A. Flexible calibration of structured-light systems projecting point patterns. Comput. Vis. Image Underst. 2013;117:1468–1481. doi: 10.1016/j.cviu.2013.06.002. [DOI] [Google Scholar]

- 22.Yang R.Q., Sheng C., Chen Y.Z. Flexible and accurate implementation of a binocular structured light system. Opt. Lasers Eng. 2008;46:373–379. doi: 10.1016/j.optlaseng.2007.12.008. [DOI] [Google Scholar]

- 23.Ya Q.L.I. Underwater dense stereo matching based on depth constraint. Acta Photonica Sinica. 2017;46:715001. [Google Scholar]

- 24.Zhuang S.F. A standard expression of underwater binocular vision for stereo matching. Meas. Sci. Technol. 2020;31:115012. doi: 10.1088/1361-6501/ab94fd. [DOI] [Google Scholar]

- 25.Bleier M., Lucht J.V.D., Nüchter A. Scout3D-an underwater laser scanning system for mobile mapping. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2019;42:13–18. doi: 10.5194/isprs-archives-XLII-2-W18-13-2019. [DOI] [Google Scholar]

- 26.Jaffe J. Development of a Laser Line Scan Lidar Imaging System for AUV Use. [(accessed on 12 February 2021)]; Available online: https://agris.fao.org/agris-search/search.do?recordID=AV2012084496.

- 27.Yang Y. Research on Underwater Multi-Channel True Color 3D Reconstruction and Color Reduction Method. Ocean University of China; Qingdao, China: 2014. [Google Scholar]

- 28.Xu W.B. Underwater Geomorphology Detection Based on Structured Light. North China University of Water Resources and Hydropower; Zhengzhou, China: 2018. [Google Scholar]

- 29.Shortis M.R. Calibration techniques for accurate measurements by underwater camera systems. Sensors. 2015;15:30810–30827. doi: 10.3390/s151229831. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Usamentiaga R., Garcia D.F. Multi-camera calibration for accurate geometric measurements in industrial environments. Measurement. 2019;134:45–358. doi: 10.1016/j.measurement.2018.10.087. [DOI] [Google Scholar]

- 31.Agrafiotis P., Drakonakis G.I., Skarlatos D. Latest Developments in Reality-Based 3D Surveying and Modelling. 2018. Underwater Image Enhancement before Three-Dimensional (3D) Reconstruction and Orthoimage Production Steps: Is It Worth? [Google Scholar]

- 32.Zhang Z.Y. Camera Calibration with One-Dimensional Objects. IEEE Trans. Pattern Anal. Mach. Intell. 2004;7:892–899. doi: 10.1109/TPAMI.2004.21. [DOI] [PubMed] [Google Scholar]

- 33.Zhang Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2002;11:1330–1334. doi: 10.1109/34.888718. [DOI] [Google Scholar]

- 34.Penna M. Camera calibration: a quick and easy way to detection the scale factor. IEEE Trans. Pattern Anal. Mach. Intell. 1991;13:1240–1245. doi: 10.1109/34.107007. [DOI] [Google Scholar]

- 35.Rufli M., Scaramuzza D., Siegwart R. Automatic detection of checkerboards on blurred and distorted images in Intelligent Robotsand Systems; Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems; Nice, France. 22–26 September 2008; pp. 3121–3126. [Google Scholar]

- 36.Lowe D.G. Object recognition from local scale-invariant features; Proceedings of the Seventh IEEE International Conference on Computer Vision; Kerkyra, Greece. 20–27 September 1999; pp. 1150–1157. [Google Scholar]

- 37.Bay H. Speeded-up robust features (SURF) Comput. Vis. image Underst. 2008;110:346–359. doi: 10.1016/j.cviu.2007.09.014. [DOI] [Google Scholar]

- 38.Rublee E. ORB: An efficient alternative to SIFT or SURF; Proceedings of the 2011 International conference on computer vision; Barcelona, Spain. 6–13 November 2011; pp. 2564–2571. [Google Scholar]

- 39.Makantasis K. In the wild image retrieval and clustering for 3D cultural heritage landmarks reconstruction. Multimed. Tools Appl. 2016;75:3593–3629. doi: 10.1007/s11042-014-2191-z. [DOI] [Google Scholar]

- 40.Tuzel O., Fatih P., Peter M. Region covariance: A fast descriptor for detection and classification; Proceedings of the European conference on computer vision; Berlin/Heidelberg, Germany. 7–13 May 2006; pp. 589–600. [Google Scholar]

- 41.Tola E., Vincent L., P F. A fast local descriptor for dense matching; Proceedings of the 2008 IEEE conference on computer vision and pattern recognition; Anchorage, AK, USA. 22–24 October 2008; pp. 1–8. [Google Scholar]

- 42.Calonder M. BRIEF: Computing a local binary descriptor very fast. IEEE Trans. pattern Anal. Mach. Intell. 2011;34:1281–1298. doi: 10.1109/TPAMI.2011.222. [DOI] [PubMed] [Google Scholar]

- 43.Calonder M. Brief: Binary robust independent elementary features; Proceedings of the European conference on computer vision; Berlin/Heidelberg, Germany. 5–11 November 2010; pp. 778–792. [Google Scholar]

- 44.Harris C., Stephens M. A combined corner and edge detector; Proceedings of the Alvey vision conference; Manchester, UK. 15–17 September 1988. [Google Scholar]

- 45.Zhang M., Li D.H. An on-site calibration technique for line structured light 3D scanner; Proceedings of the 2009 Asia-Pacific Conference on Computational Intelligence and Industrial Applications (PACIIA); Wuhan, China. 28–29 November 2009; pp. 30–33. [Google Scholar]

- 46.Dewar R. Self-generated targets for spatial calibration of structured light optical sectioning sensors with respect to an external coordinate system; Proceedings of the Robots and Vision’88 Conf. Proceedings; Detroit, MI, USA. 5–9 June 1988; pp. 5–13. [Google Scholar]

- 47.James K.W. Noncontact machine vision metrology within a CAD coordinate system; Proceedings of the Autofact’88Conf.Proceedings; Chicago, IL, USA. 10–16 December 1988; pp. 9–17. [Google Scholar]

- 48.Zhang Y.B., Lu R.S. Calibration method of linear structured light vision measurement system. Meas. Syst. World. 2003;8:10–13. [Google Scholar]

- 49.Ying J.G., Li Y.J., Ye S.H. Fast calibration method of line structured light sensor based on coplanar calibration reference. China Mech. Eng. 2006;17:183–186. [Google Scholar]

- 50.Liu Z., Zhang G.J., Wei Z.Z., Jiang J. A field calibration method for high precision line structured light vision sensor. Acta Optica Sinica. 2009;29:3124–3128. [Google Scholar]

- 51.Zhou F.Q., Cai F.H. Calibration of structured light vision sensor based on one dimensional target. Chin. J. Mech. Eng. 2010;46:8–12. doi: 10.3901/JME.2010.18.007. [DOI] [Google Scholar]

- 52.Chen T.F., Ma Z., Wu X. Light plane in structured light sensor based on active vision calibration line. Opt. Precis. Eng. 2012;20:257–263. [Google Scholar]

- 53.Yau T., Gong M.L., Yang Y.H. Underwater camera calibration using wavelength triangulation; Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition; Portland, OR, USA. 23–28 June 2013; pp. 2499–2506. [Google Scholar]

- 54.Agrawal A., Ramalingam S., Taguchi Y. A theory of multi-layer flat refractive geometry; Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI; New York, NY, USA. 16–21 June 2012; pp. 3346–3353. [Google Scholar]

- 55.Treibitz T., Schechner Y., Kunz C., Singh H. Flat refractive geometry. IEEE Trans. Pattern Anal. Mach. Intell. 2012;34:51–65. doi: 10.1109/TPAMI.2011.105. [DOI] [PubMed] [Google Scholar]

- 56.Xie Z.X., Yu J.S., Chi S.K., Li J.P., Li M.H. Underwater calibration and measurement of nonparallel binocular vision system. Acta Optica Sinica. 2019;39:195–204. [Google Scholar]