Abstract

Artificial intelligence, which has been actively applied in a broad range of industries in recent years, is an active area of interest for many researchers. Dentistry is no exception to this trend, and the applications of artificial intelligence are particularly promising in the field of oral and maxillofacial (OMF) radiology. Recent research on artificial intelligence in OMF radiology has mainly used convolutional neural networks, which can perform image classification, detection, segmentation, registration, generation, and refinement. Artificial intelligence systems in this field have been developed for the purposes of radiographic diagnosis, image analysis, forensic dentistry, and image quality improvement. Tremendous amounts of data are needed to achieve good results, and the involvement of OMF radiologists is essential for making accurate and consistent data sets, which is a time-consuming task. For artificial intelligence to be widely used in actual clinical practice in the future, many problems remain to be solved, such as building large, finely labeled open data sets, understanding the judgment criteria of artificial intelligence, and countering DICOM hacking threats that use artificial intelligence. If these problems are solved as artificial intelligence develops, it is expected to play an important role in the development of automatic diagnosis systems, the establishment of treatment plans, and the fabrication of treatment tools. OMF radiologists, as professionals who thoroughly understand the characteristics of radiographic images, will play a very important role in the development of artificial intelligence applications in this field.

Keywords: Artificial Intelligence, Radiology, Dentistry

Introduction

Artificial intelligence was previously felt to be a far-off, fantastic dream for the distant future, but has gradually begun to develop into a real-world phenomenon in a wide range of industries, including the dental/medical field. In particular, artificial intelligence has recently been applied to radiographic images in the field of dentistry, especially oral and maxillofacial (OMF) radiology.

Artificial intelligence was born at a summer workshop held at Dartmouth in 1956, and had the potential to open up several major avenues of research, including neural networks, natural language processing, theory of computation, and other topics of interest.1 However, despite the optimism of the founders of this field, artificial intelligence encountered numerous challenges. The “artificial intelligence winter,” a period of reduced funding and interest in artificial intelligence, took place in 1974–1980 and 1987–1993; this downturn was partially due to the tension between unrealistically high expectations from artificial intelligence systems and the limitations of that era in terms of data accessibility and the computing power required to solve complex problems.2

In the late 1990s, artificial intelligence experienced a resurgence after Deep Blue (IBM’s expert system) beat the world chess champion, Garry Kasparov.3 In the early 21st century, powerful artificial intelligence systems such as Watson4 and AlphaGo5 shocked the world by going beyond human abilities in fields that were firmly believed to be unique to humans. Recently, with the advent of deep learning, autonomous cars have begun to operate on the streets, and an artificial intelligence system passed the Turing test, implying that it had intelligence.6

Artificial intelligence is quietly permeating every aspect of our lives in the form of various conveniences such as artificial intelligence-based speakers, content recommendation systems, and so on. The rise of deep learning also offers fascinating perspectives for the automation of dental and medical image analysis. Major advances have been achieved in all areas of artificial intelligence, such as medical image analysis, data mining, robotic systems, and natural language processing.3

However, controversy has also arisen over how far a diagnosis made by artificial intelligence can be trusted and what the basis for its judgment is. For the development of artificial intelligence, it is necessary to consider the concept of explainable artificial intelligence and the necessity of human intervention. In addition, it is urgent to consider how to prevent malicious use of artificial intelligence in diagnosis.

Machine learning and deep learning

Machine learning is a subset of artificial intelligence. More specifically, machine learning refers to the scientific study of computer models that improve their performance by learning from experience without using explicit instructions. Therefore, a machine learning algorithm requires sample data to build a model for making predictions or decisions.7

Machine learning is classified into the broad categories of supervised, unsupervised, and reinforcement learning. Supervised learning involves learning labels for each input, and mainly deals with problems of classification and regression. For supervised learning of diagnostic images, expert radiologists must carry out labeling or annotation. Unsupervised learning involves systems that learn unlabeled data by themselves, and deals with questions such as clustering and distribution estimation. Reinforcement learning algorithms learn from positive or negative feedback in a dynamic environment and are used in various fields of robotics, video games, and computer vision.7

Deep learning is a type of machine learning that emerged from neural network research in the 1980s. Insofar as it involves learning models that use data, deep learning shares a common strategy with conventional machine learning, but deep learning systems are distinct from conventional machine learning models due to their ability to derive high-level abstractions and complex features from big data through a combination of several nonlinear transformations using artificial neural networks.8

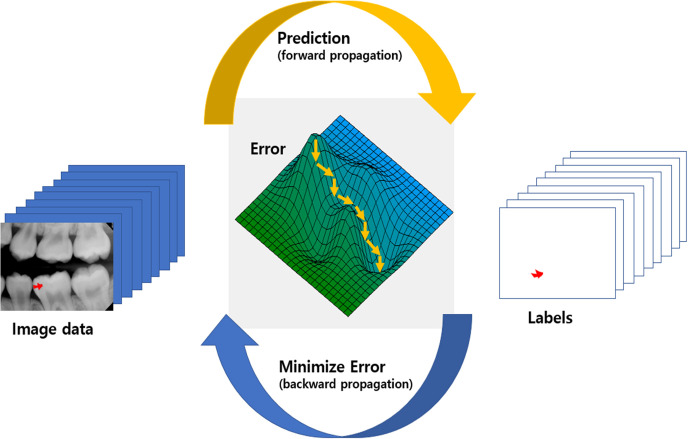

Since LeCun et al9 introduced a deep neural network in 1989 that self-learned from data using a backpropagation algorithm, deep learning has evolved explosively owing to solutions to the overfitting problem,10 in which the predictions correspond too closely to the training set, the use of high-performance graphics processing units, and the increasing availability of big data. Convolutional neural networks (CNNs), a deep learning architecture that has contributed to recent advances in artificial intelligence, are most commonly applied to analyzing large and complex images. Figure 1 shows a schematic view of deep learning training for caries segmentation in a periapical radiograph. A deep neural network learns from data by lowering the error between its predictions and the ground truth labels over repeated steps. Error minimization occurs gradually, using the derivative of the error computed on a minibatch (a partition of the data set).

Figure 1.

Schematic view of deep learning training for caries segmentation in a periapical X-ray. Over repeated steps of prediction (forward propagation) and error minimization (backward propagation), the deep learning network learns from data by lowering the error between its predictions and the ground truth labels. Error minimization occurs gradually (small downhill arrows) using the derivative of the error on a partition of the data set, which is called a minibatch.
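The training loop described above can be illustrated with a deliberately small sketch. It is not a CNN and does not process radiographs: as an assumption made purely for illustration, the "network" is a single linear unit fitted to toy data, and the names `minibatches`, `train`, and `mean_squared_error` are hypothetical. What it does share with Figure 1 is the cycle of forward propagation, error measurement against ground truth, and a small downhill step computed from the derivative of the error on each minibatch.

```python
import random


def minibatches(samples, batch_size, rng):
    """Shuffle the samples and yield them in partitions (minibatches)."""
    shuffled = list(samples)
    rng.shuffle(shuffled)
    for start in range(0, len(shuffled), batch_size):
        yield shuffled[start:start + batch_size]


def mean_squared_error(samples, w, b):
    """Average squared difference between predictions and ground truth."""
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)


def train(samples, epochs=200, batch_size=4, learning_rate=0.05, seed=0):
    """Fit y = w * x + b by minibatch gradient descent (toy stand-in for a network)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for batch in minibatches(samples, batch_size, rng):
            # Forward propagation: predict and compare with the ground truth.
            errors = [w * x + b - y for x, y in batch]
            # Backward propagation: derivative of the minibatch mean squared error.
            grad_w = 2 * sum(e * x for e, (x, _) in zip(errors, batch)) / len(batch)
            grad_b = 2 * sum(errors) / len(batch)
            # One small downhill step.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Toy ground truth generated from y = 2x + 1 (an assumption for illustration).
data = [(i / 10, 2 * (i / 10) + 1) for i in range(-10, 11)]
error_before = mean_squared_error(data, 0.0, 0.0)
w, b = train(data)
error_after = mean_squared_error(data, w, b)
```

In a real deep learning framework the derivative is obtained automatically for millions of parameters, but the update rule applied to each parameter has the same form as the two lines that adjust `w` and `b` here.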

CNNs have also been used as a main component of networks in the field of OMF radiology.11 Recently, generative adversarial networks (GANs) were introduced; these models generate new data that mimic the original data, and consist of two networks that are trained by competing against each other in a game.12 Improved GAN models using CNNs13 have been applied to radiographic images.14,15

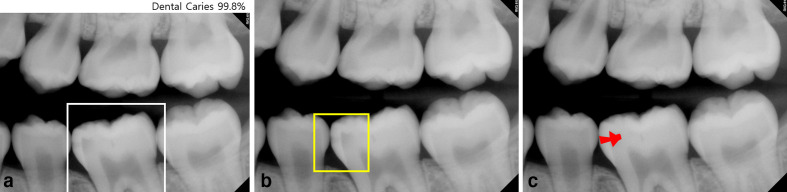

CNNs in the radiology field can be used for classification, detection, and segmentation (Figure 2). Classification tasks range widely, from determining the presence or absence of a disease to identifying the type of malignancy.16 With advances in computing power, deeper and more complex CNN models have been introduced to solve classification problems in radiographic image analysis. Detection is performed to identify and localize regions with lesions or certain anatomical structures in radiographic image analysis.17 CNNs for detection tasks are fundamentally similar to those used for classification tasks. However, several layers with additional functions, such as region proposal or regression, have been added to CNNs for disease detection. Segmentation has been used to delineate various anatomical structures or lesions in images obtained using various modalities, including plain radiography, CT, MR, and ultrasound.18

Figure 2.

a. Dental caries is present in the rectangular box on the image (classification). b. Dental caries is detected in the square box (detection). c. Dental caries is segmented on the image (segmentation).
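The difference between the three tasks is largely a difference in what the output looks like. The following sketch illustrates only the output formats, under a strong simplifying assumption: instead of running a CNN on pixel intensities, it starts from a small hypothetical ground truth mask (1 = caries pixel) and derives the three kinds of answer from it. The function names are illustrative and do not come from any library.

```python
def classify(mask):
    """Classification: a single label for the whole image (lesion present or not)."""
    return any(any(row) for row in mask)


def detect(mask):
    """Detection: a bounding box (top, left, bottom, right), or None if no lesion."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


def segment_area(mask):
    """Segmentation: the per-pixel mask itself; here summarized as its pixel count."""
    return sum(sum(row) for row in mask)


# Hypothetical 5 x 6 mask standing in for a labeled periapical radiograph.
mask = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

has_caries = classify(mask)    # True
caries_box = detect(mask)      # (1, 1, 3, 3)
caries_pixels = segment_area(mask)  # 6
```

The amount of labeling effort grows in the same order: one label per image for classification, one box per lesion for detection, and an outline of every lesion for segmentation.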

Preparation of data sets for artificial intelligence

Collecting and labeling data

In practice, it is very costly to apply artificial intelligence for automated interpretation. Massive-scale learning to improve accuracy requires a large amount of data, which must be processed. Experts must devote their time and effort to make the data suitable for effective learning.

Artificial intelligence studies necessitate data curation, which refers to the process of organizing and integrating data collected from various sources. The data curation process of radiographic images includes data anonymization, checking the representativeness of the data, standardization of the data format, minimization of noise in the data, segmentation of the region of interest, and annotation.19

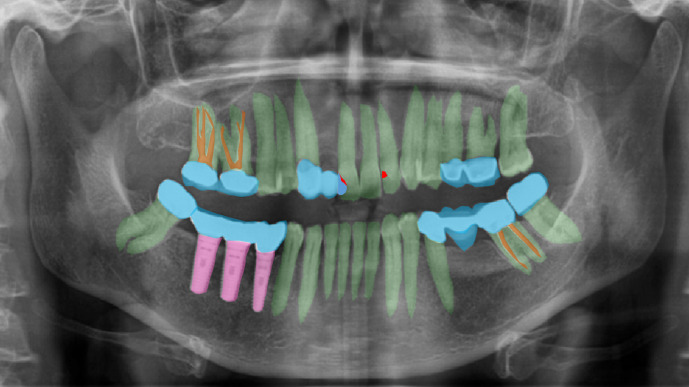

Ground truth denotes reliable values that can be used as a reference, and in deep learning, it refers to correct labels that are generated by experts.19 High accuracy is essential for applications of artificial intelligence using deep learning, and in turn it is necessary to have a high-quality data set with accurate labels. There are two ways to make labels. One way is for a radiologist to make annotations through image review, and the other is to use information from radiology reports. The former method requires considerable time and effort, and there may be differences among readers. In two-dimensional (2D) radiographic images such as periapical and panoramic radiographs, this difference between observers may be more severe, because structures overlap in 2D images and it is often difficult to clearly distinguish and outline three-dimensional (3D) anatomical structures (Figure 3). Using the latter method, it may be necessary to re-test the correctness of the labels. The choice of the method depends on the task.

When radiologists create labels, some points should be considered. First, in order to create massive quantities of data, it is generally necessary for a team consisting of several radiologists to label the images, and the standards between radiologists may differ during this process. Therefore, establishing a consensus for labeling is important for ensuring an adequate ground truth. Using an intra- or inter-class correlation coefficient is a practical way of checking agreement between radiologists. Second, the format of the labels and the labeling tool should reflect the task content and the deep learning model that is being used. For instance, one model might require an exact marking line to indicate the margin of the lesion, while another model might just need simple marking to indicate the presence of the lesion. To establish the type of label, radiologists should communicate with the engineers who develop the code for deep learning applications.

Figure 3.

Example of complex labeling of a dental panoramic radiograph. In two-dimensional radiographs where structures overlap, it is often difficult to clearly distinguish and outline three-dimensional anatomical structures. Green: teeth, pink: implant, sky blue: prosthodontics, brown: endodontic filling, red: dental caries.
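Agreement between readers can be checked numerically before labels are accepted as ground truth. The text above refers to intra- and inter-class coefficients; the sketch below swaps in a different but related statistic, Cohen's kappa, because it is a standard chance-corrected agreement measure for categorical labels from two readers and fits in a few lines. The reader labels are invented for illustration, and `cohens_kappa` is a hypothetical helper rather than a library call.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two readers' categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both readers must label the same non-empty set of images")
    n = len(labels_a)
    # Proportion of images on which the two readers agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance from each reader's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both readers used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Invented labels from two readers for the same ten radiographs.
reader_a = ["caries", "caries", "sound", "sound", "caries",
            "sound", "sound", "caries", "sound", "sound"]
reader_b = ["caries", "sound", "sound", "sound", "caries",
            "sound", "caries", "caries", "sound", "sound"]

kappa = cohens_kappa(reader_a, reader_b)  # about 0.58
```

Here the readers agree on 8 of 10 images, but because 52% agreement would be expected by chance from their label frequencies, kappa is only about 0.58. For continuous measurements or more than two readers, an intraclass correlation coefficient would be the more appropriate choice.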

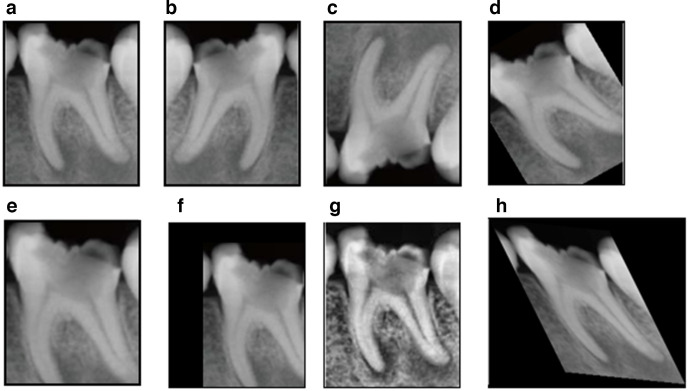

Acquiring an extremely large data set of high-quality data is ideal for optimizing the performance of a deep learning application. However, obtaining high-quality labeled data is costly and time-consuming, and it might not be possible to obtain a sufficient number of case samples for some studies. This problem can be solved by using data augmentation, which involves altering the data set in a way that changes the data representation while keeping the label the same. Possible transformations include cropping, flipping, rotation, translation, zooming, skewing, elastic deformation, and modifying the contrast or resolution (Figure 4).20 When using data augmentation, care should be taken that the augmentation process does not remove the region of interest, which can degrade the performance of the deep learning model. Recently, as synthetic data augmentation using GANs has become more sophisticated, studies have begun to address the data scarcity issue in various problems related to classification, detection, and segmentation.21

Figure 4.

Data augmentation example of a periapical X-ray. a. Original image. b, c. Flip. d. Rotation. e. Zoom. f. Translation. g. Contrast adjustment. h. Elastic deformation. Note that the apical lesion is removed in d, e, and f, which can degrade the performance of a periapical lesion detection model.
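The caveat about losing the region of interest can be enforced in code. The sketch below is one possible approach, not a method taken from the cited studies: it applies the same transformation to an image and to its lesion mask, then rejects the augmented sample if too little of the lesion remains. Images are represented as plain nested lists, and `translate`, `roi_fraction_kept`, `augment`, and the `min_kept` threshold are all assumptions made for illustration.

```python
def flip_horizontal(grid):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in grid]


def translate(grid, dx, dy, fill=0):
    """Shift a 2D grid by dx columns and dy rows, padding exposed pixels with fill."""
    height, width = len(grid), len(grid[0])
    out = [[fill] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            nr, nc = r + dy, c + dx
            if 0 <= nr < height and 0 <= nc < width:
                out[nr][nc] = grid[r][c]
    return out


def roi_fraction_kept(mask_before, mask_after):
    """Fraction of labeled lesion pixels that survive the transformation."""
    before = sum(map(sum, mask_before))
    after = sum(map(sum, mask_after))
    return after / before if before else 1.0


def augment(image, mask, transform, min_kept=0.9):
    """Transform image and mask together; return None if the lesion is lost."""
    new_image, new_mask = transform(image), transform(mask)
    if roi_fraction_kept(mask, new_mask) < min_kept:
        return None
    return new_image, new_mask


# Toy 4 x 4 "radiograph" with an apical lesion labeled in the bottom row.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
mask = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 1, 1, 0]]

flipped = augment(image, mask, flip_horizontal)
shifted_up = augment(image, mask, lambda g: translate(g, 0, -1))
shifted_down = augment(image, mask, lambda g: translate(g, 0, 1))  # lesion leaves the frame
```

In this toy case the flip and the upward shift are accepted, while the downward shift pushes the lesion out of the frame and is rejected, which mirrors the situation shown in panels d, e, and f of Figure 4.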

Dividing data for the learning process

Labeled data are typically divided into training, validation, and test data sets. The training data set is used to train and adjust the parameters of the learning model. The validation data are used to monitor the performance of the model during training and to search for the best model. The test data are used to evaluate the final performance of the model. It is crucial to avoid any overlap between training/validation and test data sets, and the required size of the data set for each step depends on the nature and the complexity of the task.
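A simple way to guarantee that the three sets do not overlap is to assign whole groups of images, rather than individual images, to a single set. The sketch below assumes, as an illustration not stated in the text, that each image carries a patient identifier and that several images may come from the same patient; it then splits by patient so that no patient appears in more than one set. The function name, the 15% fractions, and the record format are hypothetical.

```python
import random


def split_by_patient(records, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Split (patient_id, image_id) records so that no patient spans two sets."""
    patients = sorted({patient_id for patient_id, _ in records})
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    n_val = max(1, round(len(patients) * val_fraction))
    test_ids = set(patients[:n_test])
    val_ids = set(patients[n_test:n_test + n_val])
    splits = {"train": [], "validation": [], "test": []}
    for patient_id, image_id in records:
        if patient_id in test_ids:
            splits["test"].append((patient_id, image_id))
        elif patient_id in val_ids:
            splits["validation"].append((patient_id, image_id))
        else:
            splits["train"].append((patient_id, image_id))
    return splits


# Invented data set: 20 patients with two radiographs each.
records = [(f"patient_{p:02d}", f"image_{p:02d}_{i}")
           for p in range(20) for i in range(2)]
splits = split_by_patient(records)
```

Fixing the random seed makes the split reproducible, so the test set can stay untouched across experiments.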

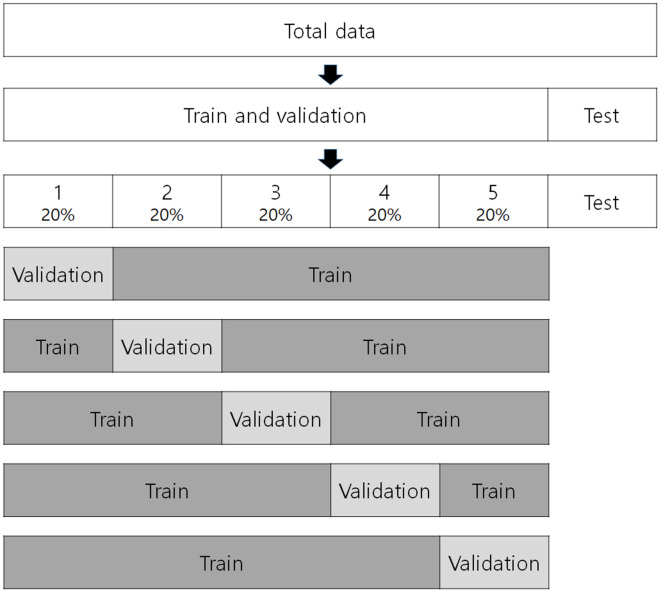

When the data set is small, cross-validation can be conducted in the training phase to estimate how well the trained model generalizes.22 In k-fold cross-validation, the training data set is divided into k equal subsets (Figure 5). One subset is held out as the validation set, and the other k-1 subsets are used as the cross-validation training set. The model is then trained using the training set, and the performance of the model is measured with the validation set. This process is repeated a total of k times, changing the validation set each time. The final performance is the average of the k measured performances.23 In some studies, the validation set is omitted, and the data are separated into training and test data sets.

Figure 5.

An example of fivefold cross-validation. The total data are separated into training, validation, and test data sets for a study on artificial intelligence.
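The k-fold procedure can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the "model" is a one-parameter threshold classifier on invented numeric data, standing in for a deep learning model, and `k_fold_indices`, `cross_validate`, `fit_threshold`, and `accuracy` are hypothetical names. The data passed in are assumed to be the training portion only, with the test set already held out.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    start = 0
    for fold in range(k):
        # Spread any remainder over the first folds so sizes differ by at most one.
        size = n_samples // k + (1 if fold < n_samples % k else 0)
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


def cross_validate(samples, k, fit, score):
    """Train k times, each time validating on a different fold; average the scores."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(samples), k):
        model = fit([samples[i] for i in train_idx])
        scores.append(score(model, [samples[i] for i in val_idx]))
    return sum(scores) / k, scores


def fit_threshold(train):
    """Toy model: threshold halfway between the two class means."""
    mean0 = sum(v for v, y in train if y == 0) / sum(1 for _, y in train if y == 0)
    mean1 = sum(v for v, y in train if y == 1) / sum(1 for _, y in train if y == 1)
    return (mean0 + mean1) / 2


def accuracy(threshold, validation):
    """Fraction of validation samples classified correctly."""
    return sum((v > threshold) == bool(y) for v, y in validation) / len(validation)


# Invented data: values 0-9, labeled 1 when the value is 5 or more.
samples = [(v, int(v >= 5)) for v in range(10)]
mean_score, fold_scores = cross_validate(samples, 5, fit_threshold, accuracy)
```

In practice the samples would be shuffled (or stratified by label) before folding; that step is omitted here to keep the fold assignment easy to follow.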

Artificial intelligence in OMF radiology

Artificial intelligence is being studied in relation to various topics in the field of OMF radiology. Hwang et al11 reviewed English-language artificial intelligence studies in the dental field using the PubMed, Scopus, and IEEE Xplore databases. Of the 25 articles that they found, 12 were related to teeth, 2 were related to dental tissues, and 2 were related to osteoporosis. According to their results, CNN was used as a main network component. The number of published papers and training data sets tended to increase over time in various fields of dentistry.

Schwendicke et al24 classified 36 articles published from 2015 to 2019 and identified the types of images. The studies focused on general dentistry (n = 15 studies), cariology (n = 5), endodontics (n = 2), periodontology (n = 3), orthodontics (n = 3), dental radiology (n = 2), forensic dentistry (n = 2) and general medicine (n = 4). The most commonly analyzed image type was panoramic radiographs (n = 11), followed by periapical radiographs (n = 8) and cone-beam CT or conventional CT (n = 6). The sizes of the data sets ranged from 10 to 5166 images (mean, 1053).

Hung et al25 investigated the current clinical applications and diagnostic performance of artificial intelligence in dental and maxillofacial radiology in an analysis of 50 studies. In their review, the included studies used periapical radiographs (n = 6), panoramic radiographs (n = 14), both intraoral and panoramic radiographs (n = 1), cephalometric radiographs (n = 10), cone beam CT (CBCT) images (n = 14), intraoral photographs (n = 1), intraoral fluorescent images (n = 1), or undescribed dental X-ray images (n = 1) to develop their artificial intelligence models, and 3D images (CR/MRI) and cephalometric radiographs to test the performance of available computer-aided diagnostic software (n = 2). Most studies focused on artificial intelligence applications for automated localization of cephalometric landmarks, diagnosis of osteoporosis, classification/segmentation of maxillofacial cysts and/or tumors, and identification of periodontitis/periapical disease. The performance of artificial intelligence models varied across different algorithms. Table 1 summarizes the dental applications and imaging modalities of deep learning reported in these systematic reviews.

Table 1.

Dental applications and imaging modalities of deep learning in systematic reviews.

| Author (year) | Applications | Imaging modalities |
| --- | --- | --- |
| Hwang et al. (2019)11 | Tooth related 12 | Intraoral 6 |
| N = 25 | Dental plaque 3 | Panoramic 6 |
| | Gingiva or periodontium 2 | Cephalometric 1 |
| | Osteoporosis 2 | CBCT 4 |
| | Others 5 | CT 1 |
| | | Others 7 |
| Schwendicke et al. (2019)24 | General dentistry 15 | Intraoral 11 |
| N = 36 | Cariology 5 | Panoramic 10 |
| | Endodontics 2 | Cephalometric 2 |
| | Periodontology 3 | Panoramic and CBCT 1 |
| | Orthodontics 3 | CBCT 5 |
| | Dental radiology 2 | CT 1 |
| | Forensic dentistry 2 | Others 6 |
| | General medicine 4 | |
| Hung et al. (2020)25 | Cephalometric landmarks 19 | Periapical 6 |
| N = 50 | Diagnosis of osteoporosis 9 | Intraoral and panoramic 1 |
| | Maxillofacial cyst and/or tumors 6 | Panoramic 14 |
| | Alveolar bone resorption 3 | Cephalometric 10 |
| | Periapical diseases 3 | CBCT 14 |
| | Multiple dental diseases 2 | Others 5 |
| | Tooth types 2 | |
| | Others 6 | |

CBCT, cone-beam computed tomography; CT, computed tomography; N, number of reviewed articles.

Nagi et al26 reviewed the clinical applications and performance of intelligence systems in dental and maxillofacial radiology. They described the basic concepts of artificial intelligence, machine learning, deep learning, training of neural networks, learning program and algorithms, and fuzzy logic. They also suggested future prospects of artificial intelligence in radiomics, imaging biobanks, and hybrid intelligence.

Radiographic diagnosis

In the field of OMF radiology, studies have been conducted on artificial intelligence for the diagnosis of a broad range of diseases, including dental caries, periodontal disease, osteosclerosis, odontogenic cysts and tumors, and diseases of the maxillary sinus or temporomandibular joints.

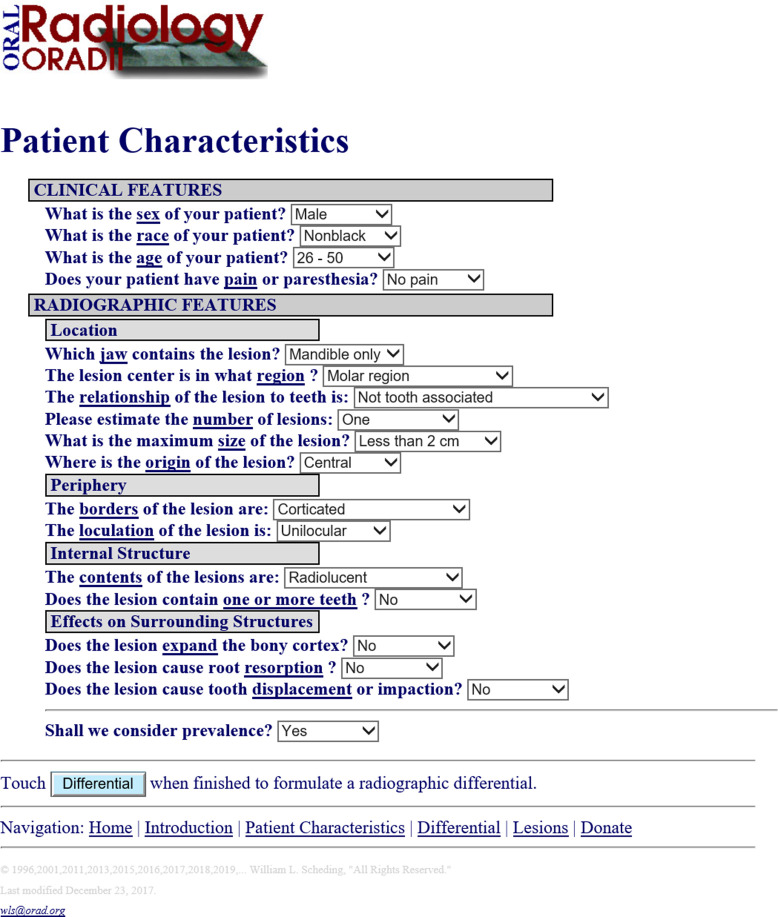

In the 1990s, an early type of artificial intelligence was developed by White at UCLA, who released a system named “ORAD” in 1995. This system has been upgraded to ORAD II (http://www.orad.org/cgi-bin/orad/index.pl), which provides differential diagnoses of OMF diseases (Figure 6).27 When using the system, a user enters a patient’s clinical and radiographic features, and a list of differential diagnoses is then provided. This system reflects an early step in the application of artificial intelligence for diagnostic purposes.

Figure 6.

ORAD II web interface27 at http://www.orad.org/cgi-bin/orad/patient.pl?eval=true&now=1596450628.

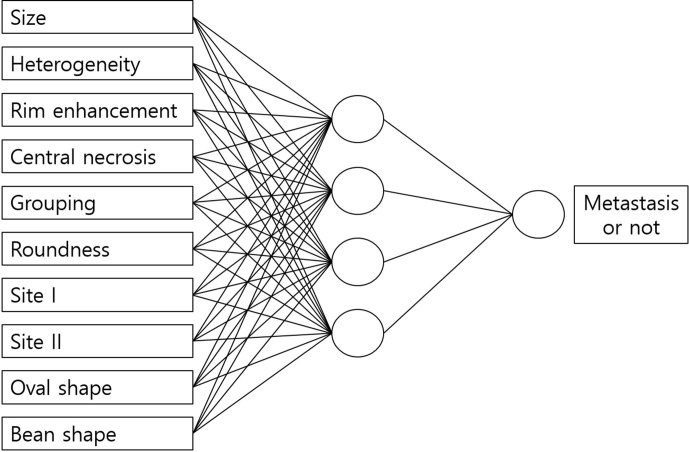

In 1999, Park et al28 studied an artificial neural network system that evaluated cervical lymph node metastasis of oral squamous cell carcinoma on MR images, and they concluded that the artificial neural network system was more useful than any single MR imaging criterion. In their study, the imaging features of lymph node metastasis were determined and entered into the system by the observer (Figure 7).

Figure 7.

An example of an artificial neural network, modified from the study by Park et al.28

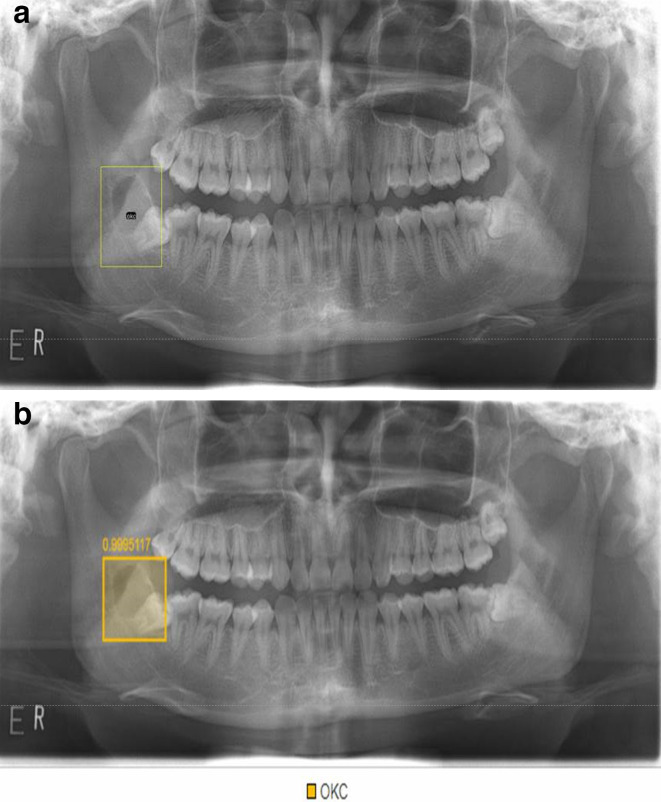

Studies have investigated the use of deep learning for the diagnosis of dental caries,29,30 periodontal disease,31,32 vertical root fracture,33 periapical pathosis,34 and cysts and tumors of the jaw bone (Figure 8).35 Chang et al36 recently developed an automatic method for staging periodontitis on dental panoramic radiographs using a deep learning hybrid method. Their artificial intelligence system, which combined a deep learning architecture and the conventional computer-assisted diagnosis (CAD) approach, demonstrated high accuracy and excellent reliability in the automatic diagnosis of periodontal bone loss and staging of periodontitis according to the amount of alveolar bone loss.

Figure 8.

a. Odontogenic keratocyst (OKC) is labelled at the right posterior mandible on a panoramic radiograph. b. The lesion is automatically detected using deep learning.

An important consideration for artificial intelligence studies is that the learning procedure requires refined data based on accurate readings from OMF radiologists. However, the diagnostic accuracy of dental caries varies depending on the observers’ capability, because there are differences among observers in terms of experience and skill. Therefore, the overall ability of artificial intelligence systems depends on the accuracy of the annotation data. For this reason, researchers studying automatic readings using artificial intelligence must use refined data (i.e. readings from experienced OMF radiologists) to obtain reasonable results.

Radiographic analysis

Image analysis using artificial intelligence in dentistry has been applied to various tasks, such as tooth segmentation or localization, bone quality (osteoporosis) assessment, bone age assessment using hand-wrist radiographs, and cephalometric landmark localization.11,25 Deep learning systems using CNN structures have been applied in the dental field, and a system that used 3D CBCT images as well as 2D images was developed.11

Deep learning has been used to detect and classify teeth on CBCT images as well as panoramic images.37,38 When teeth are classified using such systems, automated CAD outputs can help dentists make clinical decisions and reduce their charting time by automatically filling digital patient records.37,39

Panoramic radiographs provide important information for diagnosing osteopenia and osteoporosis,40 and osteoporosis in postmenopausal females was determined using the reduction in mandibular cortical width (MCW) and the degree of erosion of the mandibular lower cortex.41,42 Using MCW and mandibular cortical erosion findings of panoramic radiographs, artificial intelligence studies were conducted to diagnose osteoporosis.43,44 It is expected that artificial intelligence models will be clinically applied to diagnose osteopenia and osteoporosis. Lee et al45 evaluated the diagnostic performance of a deep convolutional neural network (DCNN)-based CAD system in the detection of osteoporosis on panoramic radiographs, and they concluded that a DCNN-based CAD system could provide information to dentists for the early detection of osteoporosis.

According to Dallora et al,46 most bone age assessment studies using machine learning implemented automatic systems for bone age assessment by setting regions of interest in the hands and wrists on radiographs.47,48 Deep learning models were able to estimate bone age with accuracy similar to that of a professional radiologist,47,48 and increased efficiency by reducing reading time while maintaining diagnostic accuracy.48,49 Shin et al50 evaluated the clinical efficacy of a TW3-based fully automated bone age assessment system using 13 regions of interest on hand-wrist radiographs of healthy children and adolescents in Korea. They used a VGGNet-BA CNN to classify the skeletal maturity level of a region of interest, and they demonstrated that this bone age assessment system could be effectively used for TW3-based bone age assessments using hand-wrist radiographs of children and adolescents aged 7–15 years in Korea.

It is often necessary to determine which dental implants have been placed in a patient. Sukegawa et al51 performed a study on a deep neural network for classifying dental implant systems. Five deep CNN models were evaluated, and they concluded that the finely tuned VGG16 showed the best results for classifying dental implant systems.

Cephalometric analyses have been performed using several artificial intelligence models. Early systems for automatic cephalometric analysis were not accurate enough for clinical use.52 Accuracy was then improved by developing new algorithms. In recent years, researchers have actively explored 3D cephalometric landmark analysis using CBCT images,53,54 and the reliability of the mid-sagittal plane landmarks was found to be higher than that of the bilateral landmarks.55

Forensic dentistry

Forensic dentistry is widely used for age estimation, sex determination, and identification of unknown people because human dentition follows a predictable developmental sequence. Studies have investigated the evidence that can be derived from the teeth or surrounding bony structures on dental radiographs. However, dental radiographs are well known for their poor image quality, with problems including blurring, low contrast exposure, and in some cases superimposition and distortion. Therefore, finding any relevant evidence using clinical procedures is very labor-intensive, while forensic methods involving measurements of the teeth could be affected by observers’ subjective judgment.56 Many researchers have tried to develop automated methods to overcome these difficulties, and some recent studies have explored the applicability of artificial intelligence–based methods using dental radiography for forensic odontology.

For postmortem identification using dental radiography, Nassar and Ammar57 introduced an automated dental identification system based on individual CNNs. They used periapical and bitewing images. In 2018, Zhang et al58 proposed a label tree with a cascade network based on deep learning techniques for tooth recognition, and reported that this method performed much better than a single network.

Age estimation techniques using teeth are based on developmental and degenerative changes of teeth or their supporting structures in the oral cavity. Dental radiology-based methods are beneficial as non-invasive and accurate alternatives to sectioning or extracting teeth to analyze their structure, and they can therefore be used for both living and deceased individuals. Generally, automated age estimation involves several processes including image preprocessing, segmentation, extraction of features, and classification, but the data obtained can be affected by observers. In contrast, deep learning algorithms do not require such steps; therefore, automatic methods using deep learning have been receiving more attention than other artificial intelligence algorithms. In 2017, De Tobel et al59 proposed an automated method to evaluate the degree of third molar development on 400 dental panoramic radiographs. The region of interest of the third molar was manually set by observers and several machine learning algorithms were applied. Farhadian et al60 performed a study on artificial neural networks for dental age estimation using the pulp-to-tooth ratio in canines, and they suggested the possibility of developing a new tool using neural networks to predict age based on dental findings. Vila-Blanco et al61 proposed DASNET, which added a second CNN path to predict sex and used sex-specific features. They reported that this algorithm was significantly more accurate for age prediction than manual age estimation, especially in young subjects with developing dentitions. Mualla et al62 showed that a transfer learning method was effective for age estimation. They used AlexNet and ResNet-101 for image feature extraction and a decision tree, linear discriminant, k-nearest neighbor, and support vector machine for classification.

Only one study has focused on forensic sex determination using dental panoramic radiographs, and even that study was conducted based on mandibular morphometric measurements that were manually obtained by observers.63

Although radiographic findings of dental structures, including teeth, play an important role in forensic science, analyzing them is very time-consuming and measurements are affected by the subjective judgment of observers. Artificial intelligence is a promising method for solving this problem and enabling objective decision-making. Although artificial intelligence has mainly been studied for age estimation, future innovations in other domains will be highly useful.

Image quality improvement

Most medical images are prone to noise in the image acquisition and transmission process;64 in particular, the geometry of CBCT makes it vulnerable to noise.65 Image denoising has been attempted using machine learning techniques such as sparse-based or filtering methods, but limitations include the need for manual parameter setting or the inapplicability of a specific method to other situations.66 Deep learning has recently shown promise for overcoming these drawbacks owing to its flexible architecture. In particular, CNN and GAN architectures have been applied to deal with noise reduction and image deblocking.67–69 An important application of image denoising technology is image quality improvement for low-dose CT. Although low-dose CT is desirable for treatment planning and simulation to reduce patient exposure, the use of low-dose CT inevitably increases noise. As deep learning has been applied to low-dose CT in recent research, denoising studies combining deep learning with machine learning technology are actively being conducted to leverage the effects of synergy.70

Motion artifacts caused by patient or organ movements are an important issue that may influence diagnostic accuracy. Studies using CNNs are being conducted to improve the quality of blurred images.71

Artificial intelligence has also been used to reduce metal artifacts in images. High-attenuation materials, such as dental crowns and implants, lead to severe scattering, photon starvation, and beam hardening. These phenomena cause dark and bright streak artifacts to appear in reconstructed CT and CBCT images, potentially reducing diagnostic accuracy.72 Although conventional metal artifact reduction (MAR) has been proposed to replace artifacts using the surrounding data by interpolation,73 clinical limitations have been reported.74 Recently, MAR using deep learning has been studied to recover information in the artifact area. In this research, image deblocking techniques using CNN or GAN have been used to reconstruct images after removing the metal part.75

Considerations in artificial intelligence

As presented above, several recent studies have been conducted in the field of OMF radiology with promising results, and artificial intelligence research into various topics continues to be actively carried out. Many factors should be considered for artificial intelligence research to achieve the desired results.

First, the development of automatic interpretation systems using artificial intelligence requires large amounts of data, necessitating a proper augmentation process for increasing the quantity of data. In addition, training data sets should have minimal error and high levels of accuracy and consistency. Otherwise, the learning process of the artificial intelligence system will not be appropriate. Therefore, it is essential for OMF radiologists with sufficient experience to participate in these efforts. It is also necessary to develop adequate annotation, labeling, and drawing tools to perform these tasks accurately and efficiently. In addition, a large-scale data repository containing finely labeled data annotated by OMF radiologists is needed. At present, it is difficult to develop deep learning algorithms that can be applied to clinical practice in dentistry through fragmentary studies using small-scale data.

A sufficient amount of training data is necessary for satisfactory learning. The amount of training data required is known to be proportional to the complexity of the data, but no method has yet been established for measuring data complexity and calculating how much data is sufficient.76 When constructing a training data set, OMF radiologists must annotate the target disease or anatomical structure in each image. This is a very difficult and time-consuming process, so it would be more efficient to minimize the size of training data sets while maintaining reasonable results. Future research is expected to clarify how much data is sufficient for specific types of dental images, using big data gathered in the large-scale repository mentioned above.

The performance of an artificial intelligence system depends not only on sufficient training data with minimal errors, but also on the network architecture and on configuration choices such as the layers, functions, filters, initializers, optimizers, and learning rate.77 These should be configured by an expert with extensive experience in developing artificial intelligence systems; the engineers’ experience and ability are therefore also important for developing high-performance systems.

The proper interpretation of radiographic images is essential, but there are too few OMF radiologists worldwide with adequate expertise to interpret the radiographic images taken at all dental clinics. Given this situation, artificial intelligence is expected to be highly helpful in supporting OMF radiologists so that they can handle more images in the same amount of time. For example, an AI system can mark and classify suspicious areas in advance. However, the results of artificial intelligence readings are not inherently fully reliable, since the output of the system depends on the training data and on adequate selection and training of the model. Therefore, a final interpretation by OMF radiologists is still considered necessary.

The complex judgment process of artificial intelligence is difficult for humans to understand intuitively, and is often compared to a black box. This opacity leads to a problem of reliability: when the artificial intelligence makes a wrong judgment, it is difficult to understand why, and its judgment logic therefore cannot be easily corrected.

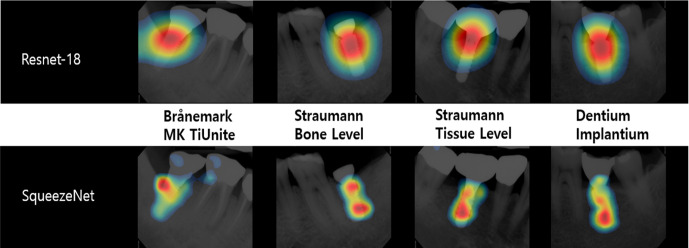

Recently, class activation mapping (CAM), gradient-weighted CAM (Grad-CAM), and guided backpropagation have been used to investigate and explain the predictions of CNNs. CAM can help to assess the reliability of an AI judgment, as it shows whether the CNN based its judgment on the features that radiologists value.78 Figure 9 shows an example of CAM from Kim et al’s study,79 which can help radiologists to evaluate the reliability of a trained deep learning network. To move toward explainable artificial intelligence, further development and study of these technologies are needed.

Figure 9.

Example of the class activation maps of the five classification networks for four implant fixture types. When a network classifies the fixture type, it bases its decision on specific parts of the fixture.
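The core computation of CAM can be sketched in a few lines. In a network that ends with global average pooling followed by a linear layer, the map for a class is the sum of the final convolutional feature maps, each weighted by that class's weight in the linear layer. The sketch below assumes that the feature maps and class weights have already been extracted from a trained network; the function name and the toy numbers are hypothetical, and clipping negative values before normalization is a display choice made here rather than part of the definition. Grad-CAM differs in that it derives the channel weights from gradients.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps, clipped at zero and rescaled to [0, 1].

    feature_maps  : list of K maps, each a list of H rows with W floats
    class_weights : list of K floats (linear-layer weights for one class)
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, w in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += w * fmap[y][x]
    # Keep only positive evidence, then normalize for display as a heat map.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy example: two 2 x 2 feature maps and the weights of one class.
features = [
    [[0.0, 1.0], [2.0, 0.0]],  # channel 0
    [[1.0, 0.0], [0.0, 4.0]],  # channel 1
]
weights = [1.0, -0.5]
cam = class_activation_map(features, weights)
```

In practice the resulting low-resolution map is typically upsampled to the size of the radiograph and overlaid on it, so that a radiologist can see which regions contributed most to the predicted class.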

In addition, preparation for DICOM image hacking using deep learning is necessary for the safe use of AI in clinical practice. There have been reports that deep learning can be used to inject images of cancer into DICOM images or to remove them, which can cause serious problems in patient treatment and safety. As most (99%) radiologists perceived these altered images as real, it is urgent to strengthen the security of DICOM systems as well as hospital system security.80
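One possible building block for such safeguards is an integrity check that detects whether a stored image file has been altered. The sketch below computes a keyed hash (HMAC-SHA256) of the file bytes when an image is archived and verifies it before the image is read. This is only an illustration under stated assumptions: the function names, the key handling, and the byte strings are hypothetical, and it is neither a mechanism described in the cited report nor a replacement for the security features of the DICOM standard.

```python
import hashlib
import hmac


def sign_image(file_bytes, secret_key):
    """Return a hex HMAC-SHA256 tag for the raw bytes of an image file."""
    return hmac.new(secret_key, file_bytes, hashlib.sha256).hexdigest()


def is_unaltered(file_bytes, secret_key, expected_tag):
    """Compare the bytes against a previously stored tag in constant time."""
    return hmac.compare_digest(sign_image(file_bytes, secret_key), expected_tag)


# Hypothetical key; in practice it would be stored outside the image archive.
key = b"example-key-kept-outside-the-image-archive"
original = b"stand-in for the bytes of a DICOM file"
tag = sign_image(original, key)

# Any change to the stored bytes invalidates the tag.
tampered = original.replace(b"DICOM", b"DIC0M")
```

A check of this kind only detects changes made after the tag was created, so it would need to be applied as early as possible in the imaging workflow and combined with access control on the key.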

Radiographic imaging is very useful in the dental field, both for diagnostic purposes and to ensure proper treatment. In implant dentistry, orthodontics, and oral surgery, radiographic images are quantitatively analyzed as part of treatment planning, and artificial intelligence is of considerable value in these fields. OMF radiologists, as professionals who understand the basic principles and characteristics of radiographic imaging and can read radiographs and interpret them in terms of various diseases, will continue to play an important role in artificial intelligence-related research, and OMF radiology is one of the most promising areas for the further development of dentistry.

REFERENCES

- 1. McCarthy J, Minsky ML, Rochester N, Shannon CE. A proposal for the Dartmouth summer research project on artificial intelligence, August 31, 1955. AI Mag 2006; 27: 12.

- 2. Crevier D. AI: the tumultuous history of the search for artificial intelligence. New York, NY: Basic Books; 1993.

- 3. Campbell M, Hoane AJ, Hsu F-H. Deep Blue. Artif Intell 2002; 134(1-2): 57–83. doi: 10.1016/S0004-3702(01)00129-1

- 4. Russell SJ, Norvig P. Artificial intelligence: a modern approach. 4th ed. New Jersey: Pearson; 2020.

- 5. Ferrucci D, Levas A, Bagchi S, Gondek D, Mueller ET. Watson: beyond Jeopardy! Artif Intell 2013; 199-200: 93–105. doi: 10.1016/j.artint.2012.06.009

- 6. Wang FY, Zhang JJ, Zheng X, Wang X, Yuan Y, Dai X, et al. Where does AlphaGo go: from Church-Turing thesis to AlphaGo thesis and beyond. IEEE/CAA J Automatica Sinica 2016; 3: 113–20.

- 7. Bishop CM. Pattern recognition and machine learning. New York: Springer; 2006.

- 8. Najafabadi MM, Villanustre F, Khoshgoftaar TM, Seliya N, Wald R, Muharemagic E. Deep learning applications and challenges in big data analytics. J Big Data 2015; 2: 1. doi: 10.1186/s40537-014-0007-7

- 9. LeCun Y, Boser B, Denker JS, Henderson D, Howard RE, Hubbard W, et al. Backpropagation applied to handwritten ZIP code recognition. Neural Comput 1989; 1: 541–51. doi: 10.1162/neco.1989.1.4.541

- 10. Hinton GE. Learning multiple layers of representation. Trends Cogn Sci 2007; 11: 428–34. doi: 10.1016/j.tics.2007.09.004

- 11. Hwang J-J, Jung Y-H, Cho B-H, Heo M-S. An overview of deep learning in the field of dentistry. Imaging Sci Dent 2019; 49: 1–7. doi: 10.5624/isd.2019.49.1.1

- 12. Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial nets. In: Ghahramani Z, Welling M, Cortes C, Lawrence ND, Weinberger KQ, editors. Advances in Neural Information Processing Systems 27 (NIPS 2014). San Diego: Neural Information Processing Systems Foundation; 2014. p. 2672–80.

- 13. Radford A, Metz L, Chintala S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv [Internet]. 2015. Available from: https://arxiv.org/abs/1511.06434 [cited 2020 Jul 20].

- 14. Costa P, Galdran A, Meyer MI, Niemeijer M, Abramoff M, Mendonca AM, et al. End-to-end adversarial retinal image synthesis. IEEE Trans Med Imaging 2018; 37: 781–91. doi: 10.1109/TMI.2017.2759102

- 15. Hwang JJ, Azernikov S, Efros AA, Yu SX. Learning beyond human expertise with generative models for dental restorations. arXiv [Internet]. 2018. Available from: https://arxiv.org/abs/1804.00064 [cited 2020 Jul 20].

- 16. Kim M, Yun J, Cho Y, Shin K, Jang R, Bae H-J, et al. Deep learning in medical imaging. Neurospine 2019; 16: 657–68. doi: 10.14245/ns.1938396.198

- 17. Ker J, Wang L, Rao J, Lim T. Deep learning applications in medical image analysis. IEEE Access 2018; 6: 9375–89. doi: 10.1109/ACCESS.2017.2788044

- 18. Hesamian MH, Jia W, He X, Kennedy P. Deep learning techniques for medical image segmentation: achievements and challenges. J Digit Imaging 2019; 32: 582–96. doi: 10.1007/s10278-019-00227-x

- 19. Do S, Song KD, Chung JW. Basics of deep learning: a radiologist's guide to understanding published radiology articles on deep learning. Korean J Radiol 2020; 21: 33–41. doi: 10.3348/kjr.2019.0312

- 20. Roth HR, Lu L, Liu J, Yao J, Seff A, Cherry K, et al. Improving computer-aided detection using convolutional neural networks and random view aggregation. IEEE Trans Med Imaging 2016; 35: 1170–81. doi: 10.1109/TMI.2015.2482920

- 21. Frid-Adar M, Diamant I, Klang E, Amitai M, Goldberger J, Greenspan H. GAN-based synthetic medical image augmentation for increased CNN performance in liver lesion classification. Neurocomputing 2018; 321: 321–31. doi: 10.1016/j.neucom.2018.09.013

- 22. Montagnon E, Cerny M, Cadrin-Chênevert A, Hamilton V, Derennes T, Ilinca A, et al. Deep learning workflow in radiology: a primer. Insights Imaging 2020; 11: 22. doi: 10.1186/s13244-019-0832-5

- 23. Rogers W, Thulasi Seetha S, Refaee TAG, Lieverse RIY, Granzier RWY, Ibrahim A, et al. Radiomics: from qualitative to quantitative imaging. Br J Radiol 2020; 93: 20190948. doi: 10.1259/bjr.20190948

- 24. Schwendicke F, Golla T, Dreher M, Krois J. Convolutional neural networks for dental image diagnostics: a scoping review. J Dent 2019; 91: 103226. doi: 10.1016/j.jdent.2019.103226

- 25. Hung K, Montalvao C, Tanaka R, Kawai T, Bornstein MM. The use and performance of artificial intelligence applications in dental and maxillofacial radiology: a systematic review. Dentomaxillofac Radiol 2020; 49: 20190107. doi: 10.1259/dmfr.20190107

- 26. Nagi R, Aravinda K, Rakesh N, Gupta R, Pal A, Mann AK. Clinical applications and performance of intelligent systems in dental and maxillofacial radiology: a review. Imaging Sci Dent 2020; 50: 81–92. doi: 10.5624/isd.2020.50.2.81

- 27. Orad.org [Internet]. Los Angeles: Oral Radiology ORAD II; c2019. Available from: http://www.orad.org/cgi-bin/orad/patient.pl [cited 2020 May 1].

- 28. Park SW, Heo MS, Lee SS, Choi SC, Park TW, You DS. Artificial neural network system in evaluating cervical lymph node metastasis of squamous cell carcinoma. Korean J Oral Maxillofac Radiol 1999; 29: 149–59.

- 29. Casalegno F, Newton T, Daher R, Abdelaziz M, Lodi-Rizzini A, Schürmann F, et al. Caries detection with near-infrared transillumination using deep learning. J Dent Res 2019; 98: 1227–33. doi: 10.1177/0022034519871884

- 30. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent 2018; 77: 106–11. doi: 10.1016/j.jdent.2018.07.015

- 31. Thanathornwong B, Suebnukarn S. Automatic detection of periodontal compromised teeth in digital panoramic radiographs using faster regional convolutional neural networks. Imaging Sci Dent 2020; 50: 169–74. doi: 10.5624/isd.2020.50.2.169

- 32. Kim J, Lee H-S, Song I-S, Jung K-H. DeNTNet: deep neural transfer network for the detection of periodontal bone loss using panoramic dental radiographs. Sci Rep 2019; 9: 17615. doi: 10.1038/s41598-019-53758-2

- 33. Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, et al. Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol; in press. doi: 10.1007/s11282-019-00409-x

- 34. Orhan K, Bayrakdar IS, Ezhov M, Kravtsov A, Özyürek T. Evaluation of artificial intelligence for detecting periapical pathosis on cone-beam computed tomography scans. Int Endod J 2020; 53: 680–9. doi: 10.1111/iej.13265

- 35. Kwon O, Yong TH, Kang SR, Kim JE, Huh KH, Heo MS, et al. Automatic diagnosis for cysts and tumors of both jaws on panoramic radiographs using a deep convolution neural network. Dentomaxillofac Radiol.

- 36. Chang H-J, Lee S-J, Yong T-H, Shin N-Y, Jang B-G, Kim J-E, et al. Deep learning hybrid method to automatically diagnose periodontal bone loss and stage periodontitis. Sci Rep 2020; 10: 7531. doi: 10.1038/s41598-020-64509-z

- 37. Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol 2019; 48: 20180051. doi: 10.1259/dmfr.20180051

- 38. Miki Y, Muramatsu C, Hayashi T, Zhou X, Hara T, Katsumata A, et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Comput Biol Med 2017; 80: 24–9. doi: 10.1016/j.compbiomed.2016.11.003

- 39. Doi K. Computer-aided diagnosis in medical imaging: historical review, current status and future potential. Comput Med Imaging Graph 2007; 31(4-5): 198–211. doi: 10.1016/j.compmedimag.2007.02.002

- 40. Kwon A-Y, Huh K-H, Yi W-J, Lee S-S, Choi S-C, Heo M-S. Is the panoramic mandibular index useful for bone quality evaluation? Imaging Sci Dent 2017; 47: 87–92. doi: 10.5624/isd.2017.47.2.87

- 41. Taguchi A, Tsuda M, Ohtsuka M, Kodama I, Sanada M, Nakamoto T, et al. Use of dental panoramic radiographs in identifying younger postmenopausal women with osteoporosis. Osteoporos Int 2006; 17: 387–94. doi: 10.1007/s00198-005-2029-7

- 42. Johari Khatoonabad M, Aghamohammadzade N, Taghilu H, Esmaeili F, Jabbari Khamnei H. Relationship among panoramic radiography findings, biochemical markers of bone turnover and hip BMD in the diagnosis of postmenopausal osteoporosis. Iran J Radiol 2011; 8: 23–8.

- 43. Kavitha MS, An S-Y, An C-H, Huh K-H, Yi W-J, Heo M-S, et al. Texture analysis of mandibular cortical bone on digital dental panoramic radiographs for the diagnosis of osteoporosis in Korean women. Oral Surg Oral Med Oral Pathol Oral Radiol 2015; 119: 346–56. doi: 10.1016/j.oooo.2014.11.009

- 44. Hwang JJ, Lee J-H, Han S-S, Kim YH, Jeong H-G, Choi YJ, et al. Strut analysis for osteoporosis detection model using dental panoramic radiography. Dentomaxillofac Radiol 2017; 46: 20170006. doi: 10.1259/dmfr.20170006

- 45. Lee J-S, Adhikari S, Liu L, Jeong H-G, Kim H, Yoon S-J. Osteoporosis detection in panoramic radiographs using a deep convolutional neural network-based computer-assisted diagnosis system: a preliminary study. Dentomaxillofac Radiol 2019; 48: 20170344. doi: 10.1259/dmfr.20170344

- 46. Dallora AL, Anderberg P, Kvist O, Mendes E, Diaz Ruiz S, Sanmartin Berglund J. Bone age assessment with various machine learning techniques: a systematic literature review and meta-analysis. PLoS One 2019; 14: e0220242. doi: 10.1371/journal.pone.0220242

- 47. Larson DB, Chen MC, Lungren MP, Halabi SS, Stence NV, Langlotz CP. Performance of a deep-learning neural network model in assessing skeletal maturity on pediatric hand radiographs. Radiology 2018; 287: 313–22. doi: 10.1148/radiol.2017170236

- 48. Kim JR, Shim WH, Yoon HM, Hong SH, Lee JS, Cho YA, et al. Computerized bone age estimation using deep learning based program: evaluation of the accuracy and efficiency. AJR Am J Roentgenol 2017; 209: 1374–80. doi: 10.2214/AJR.17.18224

- 49. Booz C, Yel I, Wichmann JL, Boettger S, Al Kamali A, Albrecht MH, et al. Artificial intelligence in bone age assessment: accuracy and efficiency of a novel fully automated algorithm compared to the Greulich-Pyle method. Eur Radiol Exp 2020; 4: 6. doi: 10.1186/s41747-019-0139-9

- 50. Shin NY, Lee BD, Kang JH, Kim HR, Oh DH, Lee BI, et al. Evaluation of the clinical efficacy of a TW3-based fully automated bone age assessment system using deep neural networks. Imaging Sci Dent.

- 51. Sukegawa S, Yoshii K, Hara T, Yamashita K, Nakano K, Yamamoto N, et al. Deep neural networks for dental implant system classification. Biomolecules 2020; 10: 984. doi: 10.3390/biom10070984

- 52. Leonardi R, Giordano D, Maiorana F, Spampinato C. Automatic cephalometric analysis. Angle Orthod 2008; 78: 145–51. doi: 10.2319/120506-491.1

- 53. Neelapu BC, Kharbanda OP, Sardana V, Gupta A, Vasamsetti S, Balachandran R, et al. Automatic localization of three-dimensional cephalometric landmarks on CBCT images by extracting symmetry features of the skull. Dentomaxillofac Radiol 2018; 47: 20170054. doi: 10.1259/dmfr.20170054

- 54. Vernucci RA, Aghazada H, Gardini K, Fegatelli DA, Barbato E, Galluccio G, et al. Use of an anatomical mid-sagittal plane for 3-dimensional cephalometry: a preliminary study. Imaging Sci Dent 2019; 49: 159–69. doi: 10.5624/isd.2019.49.2.159

- 55. Sam A, Currie K, Oh H, Flores-Mir C, Lagravére-Vich M. Reliability of different three-dimensional cephalometric landmarks in cone-beam computed tomography: a systematic review. Angle Orthod 2019; 89: 317–32. doi: 10.2319/042018-302.1

- 56. Saleh SA, Ibrahim H. Mathematical equations for homomorphic filtering in frequency domain: a literature survey. Int Proc Comput Info Knowl Manag 2012; 45: 74–7.

- 57. Nassar DEM, Ammar HH. A neural network system for matching dental radiographs. Pattern Recognit 2007; 40: 65–79. doi: 10.1016/j.patcog.2006.04.046

- 58. Zhang K, Wu J, Chen H, Lyu P. An effective teeth recognition method using label tree with cascade network structure. Comput Med Imaging Graph 2018; 68: 61–70. doi: 10.1016/j.compmedimag.2018.07.001

- 59. De Tobel J, Radesh P, Vandermeulen D, Thevissen PW. An automated technique to stage lower third molar development on panoramic radiographs for age estimation: a pilot study. J Forensic Odontostomatol 2017; 35: 42–54.

- 60. Farhadian M, Salemi F, Saati S, Nafisi N. Dental age estimation using the pulp-to-tooth ratio in canines by neural networks. Imaging Sci Dent 2019; 49: 19–26. doi: 10.5624/isd.2019.49.1.19

- 61. Vila-Blanco N, Carreira MJ, Varas-Quintana P, Balsa-Castro C, Tomas I. Deep neural networks for chronological age estimation from OPG images. IEEE Trans Med Imaging 2020.

- 62. Mualla N, Houssein E, Hassan M. Dental age estimation based on X-ray images. Comput Mater Continua 2019; 61: 591–605.

- 63. Patil V, Vineetha R, Vatsa S, Shetty DK, Raju A, Naik N, et al. Artificial neural network for gender determination using mandibular morphometric parameters: a comparative retrospective study. Cogent Engineering 2020; 7: 1723783. doi: 10.1080/23311916.2020.1723783

- 64. Peña-Cantillana F, Diaz-Pernil D, Berciano A, Gutierrez-Naranjo A. A parallel implementation of the thresholding problem by using tissue-like P systems. In: Berciano A, Díaz-Pernil D, Kropatsch W, Molina-Abril H, Real P, editors. Computer Analysis of Images and Patterns: Proceedings of 14th International Conference CAIP 2011; 2011; Seville, Spain. Berlin, Heidelberg: Springer-Verlag; 2011. p. 277–84.

- 65. Pauwels R, Araki K, Siewerdsen JH, Thongvigitmanee SS. Technical aspects of dental CBCT: state of the art. Dentomaxillofac Radiol 2015; 44: 20140224. doi: 10.1259/dmfr.20140224

- 66. Tian C, Fei L, Zheng W, Xu Y, Zuo W, Lin C-W. Deep learning on image denoising: an overview. arXiv [Internet]. 2019. Available from: https://ui.adsabs.harvard.edu/abs/2019arXiv191213171T [cited 2020 Jul 20].

- 67. Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans Image Process 2017; 26: 3142–55. doi: 10.1109/TIP.2017.2662206

- 68. Zhang K, Zuo W, Zhang L. FFDNet: toward a fast and flexible solution for CNN-based image denoising. IEEE Trans Image Process; in press.

- 69. Chen J, Chao H, Yang M. Image blind denoising with generative adversarial network based noise modeling. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2018 Jun 18-23.

- 70. Chen H, Zhang Y, Kalra MK, Lin F, Chen Y, Liao P. Low-dose CT with a residual encoder-decoder convolutional neural network (RED-CNN). arXiv [Internet]. 2017. Available from: https://ui.adsabs.harvard.edu/abs/2017arXiv170200288C [cited 2020 Jul 20].

- 71. Park J, Hwang D, Kim KY, Kang SK, Kim YK, Lee JS. Computed tomography super-resolution using deep convolutional neural network. Phys Med Biol 2018; 63: 145011. doi: 10.1088/1361-6560/aacdd4

- 72. De Man B, Nuyts J, Dupont P, Marchal G, Suetens P. Metal streak artifacts in X-ray computed tomography: a simulation study. IEEE Trans Nucl Sci 1999; 46: 691–6. doi: 10.1109/23.775600

- 73. Huang JY, Kerns JR, Nute JL, Liu X, Balter PA, Stingo FC, et al. An evaluation of three commercially available metal artifact reduction methods for CT imaging. Phys Med Biol 2015; 60: 1047–67. doi: 10.1088/0031-9155/60/3/1047

- 74. Dalili Kajan Z, Taramsari M, Khosravi Fard N, Khaksari F, Moghasem Hamidi F. The efficacy of metal artifact reduction mode in cone-beam computed tomography images on diagnostic accuracy of root fractures in teeth with intracanal posts. Iran Endod J 2018; 13: 47–53. doi: 10.22037/iej.v13i1.17352

- 75. Zhang Y, Yu H. Convolutional neural network based metal artifact reduction in X-ray computed tomography. IEEE Trans Med Imaging 2018; 37: 1370–81. doi: 10.1109/TMI.2018.2823083

- 76. Li L, Abu-Mostafa YS. Data complexity in machine learning [Internet]. Computer Science Technical Report CaltechCSTR:2006.004, California Institute of Technology. 2006. Available from: https://authors.library.caltech.edu/27081/1/dcomplex.pdf [cited 2020 Aug 21].

- 77. Gu J, Wang Z, Kuen J, Ma L, Shahroudy A, Shuai B, et al. Recent advances in convolutional neural networks. Pattern Recognit 2018; 77: 354–77. doi: 10.1016/j.patcog.2017.10.013

- 78. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis 2020; 128: 336–59. doi: 10.1007/s11263-019-01228-7

- 79. Kim J-E, Nam N-E, Shim J-S, Jung Y-H, Cho B-H, Hwang JJ. Transfer learning via deep neural networks for implant fixture system classification using periapical radiographs. J Clin Med 2020; 9: 1117. doi: 10.3390/jcm9041117

- 80. Desjardins B, Mirsky Y, Ortiz MP, Glozman Z, Tarbox L, Horn R, et al. DICOM images have been hacked! Now what? AJR Am J Roentgenol 2020; 214: 727–35. doi: 10.2214/AJR.19.21958