Significance

Infants become attuned to the sounds of their native language(s) before they even speak. Hypotheses about what is being learned by infants have traditionally driven researchers’ attempts to understand this surprising phenomenon. Here, we propose to start, instead, from hypotheses about how infants might learn. To implement this mechanism-driven approach, we introduce a quantitative modeling framework based on large-scale simulation of the learning process on realistic input. It allows learning mechanisms to be systematically linked to testable predictions regarding infants’ attunement to their native language(s). Through this framework, we obtain evidence for an account of infants’ attunement that challenges established theories about what infants are learning.

Keywords: phonetic learning, language acquisition, computational modeling

Abstract

Before they even speak, infants become attuned to the sounds of the language(s) they hear, processing native phonetic contrasts more easily than nonnative ones. For example, between 6 to 8 mo and 10 to 12 mo, infants learning American English get better at distinguishing English [ɹ] and [l], as in “rock” vs. “lock,” relative to infants learning Japanese. Influential accounts of this early phonetic learning phenomenon initially proposed that infants group sounds into native vowel- and consonant-like phonetic categories—like [ɹ] and [l] in English—through a statistical clustering mechanism dubbed “distributional learning.” The feasibility of this mechanism for learning phonetic categories has been challenged, however. Here, we demonstrate that a distributional learning algorithm operating on naturalistic speech can predict early phonetic learning, as observed in Japanese and American English infants, suggesting that infants might learn through distributional learning after all. We further show, however, that, contrary to the original distributional learning proposal, our model learns units too brief and too fine-grained acoustically to correspond to phonetic categories. This challenges the influential idea that what infants learn are phonetic categories. More broadly, our work introduces a mechanism-driven approach to the study of early phonetic learning, together with a quantitative modeling framework that can handle realistic input. This allows accounts of early phonetic learning to be linked to concrete, systematic predictions regarding infants’ attunement.

Adults have difficulties perceiving consonants and vowels of foreign languages accurately (1). For example, native Japanese listeners often confuse American English [ɹ] and [l] (as in “rock” vs. “lock”) (2, 3), and native American English listeners often confuse French [u] and [y] (as in “roue,” wheel, vs. “rue,” street) (4). This phenomenon is pervasive (5) and persistent: Even extensive, dedicated training can fail to eradicate these difficulties (6–8). The main proposed explanations for this effect revolve around the idea that adult speech perception involves a “native filter”: an automatic, involuntary, and not very plastic mapping of each incoming sound, foreign or not, onto native phonetic categories—i.e., the vowels and consonants of the native language (9–13). American English [ɹ] and [l], for example, would be confused by Japanese listeners because their productions can be seen as possible realizations of the same Japanese consonant, giving rise to similar percepts after passing through the “native Japanese filter.”

Surprisingly, these patterns of perceptual confusion arise very early during language acquisition. Infants learning American English distinguish [ɹ] and [l] more easily than infants learning Japanese before they even utter their first word (14). Dozens of other instances of such early phonetic learning have been documented, whereby cross-linguistic confusion patterns matching those of adults emerge during the first year of life (15–17). These observations naturally led to the assumption that the same mechanism thought to be responsible for adults’ perception might be at work in infants—i.e., foreign sounds are being mapped onto native phonetic categories. This assumption—which we will refer to as the phonetic category hypothesis—is at the core of the most influential theoretical accounts of early phonetic learning (9, 18–21).

The notion of phonetic category plays an important role throughout the paper, and so requires further definition. It has been used in the literature exclusively to refer to vowel- or consonant-like units. What that means varies to some extent between authors, but there are at least two constant, defining characteristics (22). First, phonetic categories have the characteristic size/duration of a vowel or consonant, i.e., the size of a phoneme, the “smallest distinctive unit within the structure of a given language” (1, 23). This can be contrasted with larger units like syllables or words and smaller units like speech segments corresponding to a single period of vocal fold vibration in a vowel. Second, phonetic categories—although they may be less abstract than phonemes* —retain a degree of abstractness and never refer to a single acoustic exemplar. For example, we would expect a given vowel or consonant in the middle of a word repeated multiple times by the same speaker to be consistently realized as the same phonetic category, despite some acoustic variation across repetitions. Finally, an added characteristic in the context of early phonetic learning is that phonetic categories are defined relative to a language. What might count as exemplars from separate phonetic categories for one language might belong to the same category in another.

The phonetic category hypothesis—that infants learn to process speech in terms of the phonetic categories of their native language—raises a question. How can infants learn about these phonetic categories so early? The most influential proposal in the literature has been that infants form phonetic categories by grouping the sounds they hear on the basis of how they are distributed in a universal (i.e., language-independent) perceptual space, a statistical clustering process dubbed “distributional learning” (24–27).

Serious concerns have been raised regarding the feasibility of this proposal, however (28, 29). Existing phonetic category accounts of early phonetic learning assume that speech is being represented phonetic segment by phonetic segment—i.e., for each vowel and consonant separately—along a set of language-independent phonetic dimensions (9, 19, 20).† Whether it is possible for infants to form such a representation in a way that would enable distributional learning of phonetic categories is questionable, for at least two reasons. First, there is a lack of acoustic–phonetic invariance (30–32): There is not a simple mapping from speech in an arbitrary language to an underlying set of universal phonetic dimensions that could act as reliable cues to phonetic categories. Second, phonetic category segmentation—finding reliable language-independent cues to boundaries between phonetic segments (i.e., individual vowels and consonants)—is a hard problem (30). It is clear that finding a solution to these problems for a given language is ultimately feasible, as literate adults readily solve them for their native language. Assuming that infants are able to solve them from birth in a language-universal fashion is a much stronger hypothesis, however, with little empirical support.

Evidence from modeling studies reinforces these concerns. Initial modeling work investigating the feasibility of learning phonetic categories through distributional learning sidestepped the lack-of-invariance and phonetic category segmentation problems by focusing on drastically simplified learning conditions (33–38), but subsequent studies considering more realistic variability have failed to learn phonetic categories accurately (29, 39–43) (SI Appendix, Discussion 1).

These results have largely been interpreted as a challenge to the idea that distributional learning is how infants learn phonetic categories. Additional learning mechanisms tapping into other sources of information plausibly available to infants have been proposed (26, 28, 29, 39–44), but existing feasibility results for such complementary mechanisms still assume that the phonetic category segmentation problem has somehow been solved and do not consider the full variability of natural speech (29, 36, 39–43, 45). Attempts to extend them to more realistic learning conditions have failed (46, 47) (SI Appendix, Discussion 1).

Here, we propose a different interpretation for the observed difficulty in forming phonetic categories through distributional learning: It might indicate that what infants learn are not phonetic categories. We are not aware of empirical results establishing that infants learn phonetic categories, and, indeed, the phonetic category hypothesis is not universally accepted. Some of the earliest accounts of early phonetic learning were based on syllable-level categories and/or on continuous representations without any explicit category representations‡ (48–51). Although they appear to have largely fallen out of favor, we know of no empirical findings refuting them.

We present evidence in favor of this alternative interpretation, first by showing that a distributional learning mechanism applied to raw, unsegmented, unlabeled continuous speech signal predicts early phonetic learning as observed in American English and Japanese-learning infants—thereby providing a realistic proof of feasibility for the proposed account of early phonetic learning. We then show that the speech units learned through this mechanism are too brief and too acoustically variable to correspond to phonetic categories.

We rely on two key innovations. First, whereas previous studies followed an outcome-driven approach to the study of early phonetic learning—starting from assumptions about what was learned, before seeking plausible mechanisms to learn it—we adopt a mechanism-driven approach—focusing first on the question of how infants might plausibly learn from realistic input, and seeking to characterize what was learned only a posteriori. Second, we introduce a quantitative modeling framework suitable to implement this approach at scale using realistic input. This involves explicitly simulating both the ecological learning process taking place at home and the assessment of infants’ discrimination abilities in the laboratory.

Beyond the immediate results, the framework we introduce provides a feasible way of linking accounts of early phonetic learning to systematic predictions regarding the empirical phenomenon they seek to explain—i.e., the observed cross-linguistic differences in infants’ phonetic discrimination.

Approach

We start from a possible learning mechanism. We simulate the learning process in infants by implementing this mechanism computationally and training it on naturalistic speech recordings in a target language—either Japanese or American English. This yields a candidate model for the early phonetic knowledge of, say, a Japanese infant. Next, we assess the model’s ability to discriminate phonetic contrasts of American English and Japanese—for example, American English [ɹ] vs. [l]—by simulating a discrimination task using speech stimuli corresponding to this contrast. We test whether the predicted discrimination patterns agree with the available empirical record on cross-linguistic differences between American English- and Japanese-learning infants. Finally, we investigate whether what has been learned by the model corresponds to the phonetic categories of the model’s “native” language (i.e., its training language).

To identify a promising learning mechanism, we build on recent advances in the field of machine learning and, more specifically, in unsupervised representation learning for speech technology, which have established that, given only raw, untranscribed, unsegmented speech recordings, it is possible to learn representations that accurately discriminate the phonetic categories of a language (52–69). The learning algorithms considered have been argued to be particularly relevant for modeling how infants learn in general, and learn language in particular (70). Among available learning algorithms, we select the one at the core of the winning entries in the Zerospeech 2015 and 2017 international competitions in unsupervised speech-representation learning (57, 58, 68). Remarkably, it is based on a Gaussian mixture clustering mechanism—illustrated in Fig. 1A—that can straightforwardly be interpreted as a form of distributional learning (24, 26). A different input representation to the Gaussian mixture is used than in previously proposed implementations of distributional learning, however (29, 33, 35, 37–39, 41). Simple descriptors of the shape of the speech signal’s short-term auditory spectrum sampled at regular points in time (every 10 ms) (71) are used instead of traditional phonetic measurements obtained separately for each vowel and consonant, such as formant frequencies or harmonic amplitudes.§ This type of input representation only assumes basic auditory abilities from infants, which are known to be fully operational shortly after birth (74), and has been proposed previously as a potential way to get around both the lack-of-invariance and the phonetic category segmentation problems in the context of adult word recognition (30). A second difference from previous implementations of distributional learning is in the output representation. 
Test stimuli are represented as sequences of posterior probability vectors (posteriorgrams) over Gaussian components in the mixture (Fig. 1B), rather than simply being assigned to the most likely Gaussian component. These continuous representations have been shown to support accurate discrimination of native phonetic categories in the Zerospeech challenges.

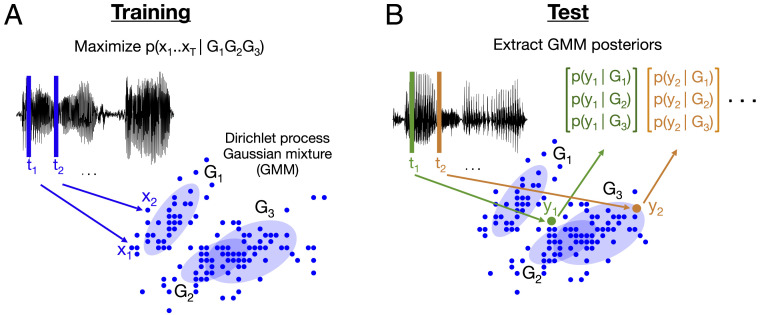

Fig. 1.

Gaussian mixture model training and representation extraction, illustrated for a model with three Gaussian components. In practice, the number of Gaussian components is learned from the data and is much higher. (A) Model training: The learning algorithm extracts moderate-dimensional (d = 39) descriptors of the local shape of the signal spectrum at time points regularly sampled every 10 ms (speech frames). These descriptors are then considered as having been generated by a mixture of Gaussian probability distributions, and parameters for this mixture that assign high probability to the observed descriptors are learned. (B) Model test: The sequence of spectral-shape descriptors for a test stimulus (possibly in a language different from the training language) is extracted, and the model representation for that stimulus is obtained as the sequence of posterior probability vectors resulting from mapping each descriptor to its probability of having been generated by each of the Gaussian components in the learned mixture.
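The representation-extraction step of Fig. 1B can be sketched in a few lines. This is a minimal illustration and not the authors' implementation: it assumes diagonal covariances, a toy three-component mixture, and two-dimensional descriptors instead of d = 39, and all function names and parameter values are hypothetical.

```python
import math

def log_gaussian_diag(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at frame x (assumption: diagonal)."""
    return -0.5 * sum(
        math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v
        for xi, m, v in zip(x, mean, var)
    )

def posteriorgram(frames, weights, means, variances):
    """Map each frame to its posterior probabilities over the mixture components."""
    result = []
    for x in frames:
        log_joint = [
            math.log(w) + log_gaussian_diag(x, m, v)
            for w, m, v in zip(weights, means, variances)
        ]
        top = max(log_joint)  # subtract the max before exponentiating, for stability
        unnorm = [math.exp(lj - top) for lj in log_joint]
        total = sum(unnorm)
        result.append([u / total for u in unnorm])
    return result

# Hypothetical toy mixture: 3 components over 2-dimensional descriptors.
weights = [0.5, 0.3, 0.2]
means = [[0.0, 0.0], [3.0, 3.0], [-3.0, 3.0]]
variances = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]

# Three frames, one every 10 ms, each lying close to a different component.
frames = [[0.1, -0.2], [2.9, 3.1], [-3.2, 2.8]]
post = posteriorgram(frames, weights, means, variances)
```

Each row of `post` is a probability vector over components, so the stimulus keeps graded information about every component instead of a single hard label.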

To simulate the infants’ learning process, we expose the selected learning algorithm to a realistic model of the linguistic input to the child, in the form of raw, unsegmented, untranscribed, multispeaker continuous speech signal in a target language (either Japanese or American English). We select recordings of adult speech made with near-field, high-quality microphones in two speech registers, which cover the range of articulatory clarity that infants may encounter. On one end of the range, we use spontaneous adult-directed speech, and on the other, we use read speech; these two speaking registers are crossed with the language factor (English or Japanese), resulting in four corpora, each split into a training set and a test set (Table 1). We would have liked to use recordings made in infants’ naturalistic environments, but no such dataset of sufficient audio quality was available for this study. It is unclear whether or how using infant-directed speech would impact results: The issue of whether infant-directed speech is beneficial for phonetic learning has been debated, with arguments in both directions (75–82). We train a separate model for each of the four training sets, allowing us to check that our results hold across different speech registers and recording conditions. We also train separate models on 10 subsets of each training set for several choices of subset sizes, allowing us to assess the effects of varying the amount of input data and the variability due to the choice of training data for a given input size.

Table 1.

Language, speech register, duration, and number of speakers of training and test sets for our four corpora of speech recordings

| Corpus | Language | Reg. | Duration (train) | Duration (test) | No. of speakers (train) | No. of speakers (test) |
| --- | --- | --- | --- | --- | --- | --- |
| R-Eng (83) | Am. English | Read | 19h30 | 9h39 | 96 | 47 |
| R-Jap (84) | Japanese | Read | 19h33 | 9h40 | 96 | 47 |
| Sp-Eng (85) | Am. English | Spont. | 9h13 | 9h01 | 20 | 20 |
| Sp-Jap (86) | Japanese | Spont. | 9h11 | 8h57 | 20 | 20 |

Am., American; reg., register; spont., spontaneous.

We next evaluate whether the trained “Japanese native” and “American-English native” models correctly predict early phonetic learning, as observed in Japanese-learning and American English-learning infants, respectively, and whether they make novel predictions regarding the differences in speech-discrimination abilities between these two populations. Because we do not assume that the outcome of infants’ learning is adult-like knowledge, we can only rely on infant data for evaluation. The absence of specific assumptions a priori about what is going to be learned and the sparsity of empirical data on infant discrimination make this challenging. The algorithm we consider outputs complex, high-dimensional representations (Fig. 1B) that are not easy to link to concrete predictions regarding infant discrimination abilities. Traditional signal-detection theory models of discrimination tasks (87) cannot handle high-dimensional perceptual representations, while more elaborate (Bayesian) probabilistic models (88) have too many free parameters given the scarcity of available data from infant experiments. We rely, instead, on the machine ABX approach that we previously developed (89, 90). It consists of a simple model of a discrimination task, which can handle any representation format, as long as the user can supply a reasonable measure of (dis)similarity between representations (89, 90). This is not a detailed model of infants’ performance in a specific experiment, but, rather, a simple and effectively parameterless way to systematically link the complex speech representations produced by our models to predicted discrimination patterns. For each trained model and each phonetic contrast of interest, we obtain an “ABX error rate,” such that 0% and 50% error indicate perfect and chance-level discrimination, respectively. 
This allows us to evaluate the qualitative match between the model’s discrimination abilities and the available empirical record in infants (see SI Appendix, Discussion 3 for an extended discussion of our approach to interpreting the simulated discrimination errors and relating them to empirical observations, including why it would not be meaningful to seek a quantitative match at this point).
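The logic of an ABX error rate can be sketched as follows. This is a hedged illustration rather than the machine ABX implementation of refs. 89 and 90: the dynamic-time-warping dissimilarity, the Euclidean frame distance, the symmetrization over the two categories, and the scoring of ties as half an error are all assumptions made here, and the toy tokens are invented.

```python
import math
from itertools import permutations

def euclidean(u, v):
    """Frame-level distance (an assumption; any dissimilarity could be plugged in)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def dtw(seq_a, seq_b, frame_dist=euclidean):
    """Cumulative cost of the best monotonic alignment between two frame sequences."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

def directed_error(same, other, dissimilarity):
    """Share of (A, B, X) triplets, with A and X from `same`, where X is closer to B."""
    errors, total = 0.0, 0
    for a, x in permutations(same, 2):  # A and X are distinct tokens of one category
        for b in other:
            d_ax, d_bx = dissimilarity(a, x), dissimilarity(b, x)
            if d_ax > d_bx:
                errors += 1.0
            elif d_ax == d_bx:
                errors += 0.5  # a tie is scored as a coin flip
            total += 1
    return errors / total

def abx_error_rate(cat_a, cat_b, dissimilarity=dtw):
    """Average the error over both choices of the category that X belongs to."""
    return 0.5 * (directed_error(cat_a, cat_b, dissimilarity)
                  + directed_error(cat_b, cat_a, dissimilarity))

# Invented tokens: each is a short sequence of 2-dimensional posterior vectors.
tokens_r = [[[0.9, 0.1], [0.8, 0.2]], [[0.85, 0.15], [0.9, 0.1], [0.8, 0.2]]]
tokens_l = [[[0.1, 0.9], [0.2, 0.8]], [[0.15, 0.85], [0.1, 0.9]]]
error = abx_error_rate(tokens_r, tokens_l)
```

With well-separated toy categories the error is 0, and a representation that cannot tell the two categories apart yields 0.5, matching the two reference points of the scale.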

Finally, we investigate whether the learned Gaussian components correspond to phonetic categories. We first compare the number of Gaussians in a learned mixture to the number of phonemes in the training language (category number test): Although a phonetic category can be more concrete than a phoneme, the number of phonetic categories documented in typical linguistic analyses remains on the same order of magnitude as the number of phonemes. We then administer two diagnostic tests based on the two defining characteristics identified above that any representation corresponding to phonetic categories should pass.¶ The first characteristic is size/duration: A phonetic category is a phoneme-sized unit (i.e., the size of a vowel or a consonant). Our duration test probes this by measuring the average duration of activation of the learned Gaussian components (a component is taken to be “active” when its posterior probability is higher than all other components), and comparing this to the average duration of activation of units in a baseline system trained to recognize phonemes with explicit supervision. The second characteristic is abstractness: Although phonetic categories can depend on phonetic context∥ and on nonlinguistic properties of the speech signal—e.g., the speaker’s gender—at a minimum, the central phone in the same word repeated several times by the same speaker is expected to be consistently realized as the same phonetic category. Our acoustic (in)variance test probes this by counting the number of distinct representations needed by our model to represent 10 occurrences of the central frame of the central phone of the same word either repeated by the same speaker (within-speaker condition) or by different speakers (across-speaker condition). We use a generous correction to handle possible misalignment (Materials and Methods). 
The last two tests can be related to the phonetic category segmentation and lack-of-invariance problems: Solving the phonetic category segmentation problem involves finding units that would pass the duration test, while solving the lack-of-invariance problem involves finding units that would pass the acoustic (in)variance test. Given the laxity in the use of the concept of phonetic category in the literature, some might be tempted to challenge that even these diagnostic tests can be relied on. If they cannot, however, it is not clear to us how phonetic category accounts of early phonetic learning should be understood as scientifically refutable claims.
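The duration test described above can be sketched as a run-length computation over the winning component of each frame. This is a minimal sketch under stated assumptions: frames are 10 ms apart as in Fig. 1, the function name and toy posteriorgram are hypothetical, and the comparison against the supervised baseline is not reproduced.

```python
from itertools import groupby

FRAME_SHIFT_MS = 10  # one frame every 10 ms, as in Fig. 1

def mean_activation_durations(posteriorgram):
    """Mean duration (ms) of uninterrupted activation for each unit that is ever active."""
    # A unit is "active" on a frame when its posterior is higher than all others.
    winners = [max(range(len(p)), key=p.__getitem__) for p in posteriorgram]
    runs = {}
    for unit, run in groupby(winners):
        runs.setdefault(unit, []).append(sum(1 for _ in run) * FRAME_SHIFT_MS)
    return {unit: sum(d) / len(d) for unit, d in runs.items()}

# Invented posteriorgram whose winning units are 0, 0, 0, 1, 1, 0, 2.
toy = (
    [[0.8, 0.1, 0.1]] * 3
    + [[0.1, 0.8, 0.1]] * 2
    + [[0.8, 0.1, 0.1]]
    + [[0.1, 0.1, 0.8]]
)
per_unit = mean_activation_durations(toy)
mean_over_units = sum(per_unit.values()) / len(per_unit)
```

Here unit 0 is active for runs of 30 ms and 10 ms (mean 20 ms), unit 1 for 20 ms, and unit 2 for 10 ms; averaging over units gives the kind of summary statistic that is then compared with phoneme durations.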

Results

Overall Discrimination.

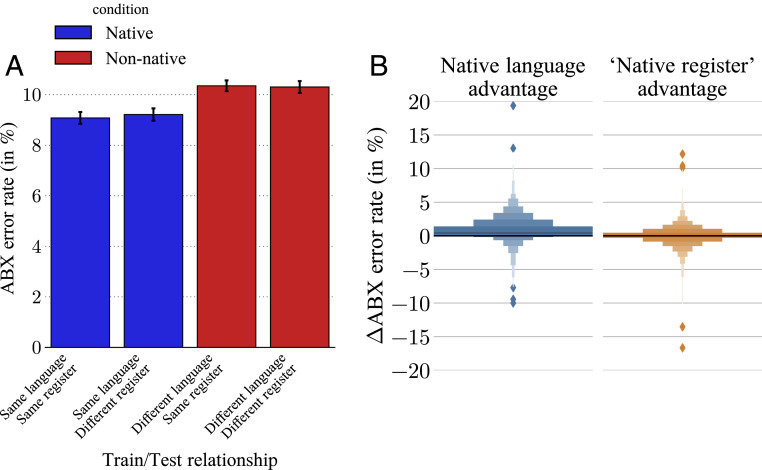

After having trained a separate model for each of the four possible combinations of language and register, we tested whether the models’ overall discrimination abilities, like those of infants (15–17), are specific to their “native” (i.e., training) language. Specifically, for each corpus, we looked at overall discrimination errors averaged over all consonant and vowel contrasts available in a held-out test set from that corpus (Table 1). We tested each of the two American English-trained and each of the two Japanese-trained models on each of four test sets, yielding a total of 4 × 4 discrimination errors. We tabulated the average errors in terms of four conditions, depending on the relation between the test set and the training background of the model: native vs. nonnative contrasts and same vs. different register. The results are reported in Fig. 2 (see also SI Appendix, Figs. S1 and S4 for nontabulated results). Fig. 2A shows that discrimination performance is higher, on average, in matched-language conditions (in blue) than in mismatched-language conditions (in red). In contrast, register mismatch has no discernible impact on discrimination performance. A comparison with a supervised phoneme-recognizer baseline (SI Appendix, Fig. S3) shows a similar pattern of results, but with a larger absolute cross-linguistic difference. If we interpret this supervised baseline as a proxy to the adult state, then our model suggests that infants’ phonetic representations, while already language-specific, remain “immature”.** Fig. 2B shows the robustness of these results, with 81.7% of the 1,295 distinct phonetic contrasts tested proving easier to discriminate on the basis of representations from a model trained on the matching language. Taken together, these results suggest that, similar to infants, our models acquire language-specific representations, and that these representations generalize across register.

Fig. 2.

(A) Average ABX error rates over all consonant and vowel contrasts obtained with our models as a function of the match between the training-set and test-set language and register. Error bars correspond to plus and minus one SD of the errors across resampling of the test-stimuli speakers. The native (blue) conditions, with training and test in the same language, show fewer discrimination errors than the nonnative (red) conditions, whereas there is little difference in error rate within the native and within the nonnative conditions. This shows that the models learned native-language-specific representations that generalize across register. (B) Letter-value representation (94) of the distribution of native advantages across all tested phonetic contrasts (pooled over both languages). The native-language advantage is the increase in discrimination error for a contrast of language L1 between an “L1-native” model and a model trained on the other language for the same training register. The “native register” advantage is the increase in error for a contrast of register R1 between an “R1-native” model and a model trained on the other register for the same training language. A native language advantage is observed across contrasts (positive advantage for 81.7% of all contrasts), and there is a weaker native register advantage (positive advantage for of all contrasts).
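The native-advantage statistic in Fig. 2B amounts to a per-contrast difference of error rates followed by a count of positive differences. The sketch below illustrates the arithmetic only; the contrast labels and error values are invented for the example and are not results from the paper.

```python
def native_advantage(native_error, nonnative_error):
    """Increase in ABX error when moving from the native to the nonnative model."""
    return nonnative_error - native_error

def share_positive(advantages):
    """Fraction of contrasts for which the native model makes fewer errors."""
    return sum(1 for a in advantages if a > 0) / len(advantages)

# Hypothetical error rates per contrast: (native model, nonnative model).
toy_errors = {
    "contrast_1": (0.10, 0.30),
    "contrast_2": (0.08, 0.07),
    "contrast_3": (0.12, 0.15),
    "contrast_4": (0.09, 0.14),
}
advantages = [native_advantage(n, o) for n, o in toy_errors.values()]
share = share_positive(advantages)
```

In this toy case three of the four contrasts show a positive advantage, so `share` is 0.75; the same computation over all tested contrasts yields the percentage reported for the native-language advantage.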

American English [ɹ]–[l] Discrimination.

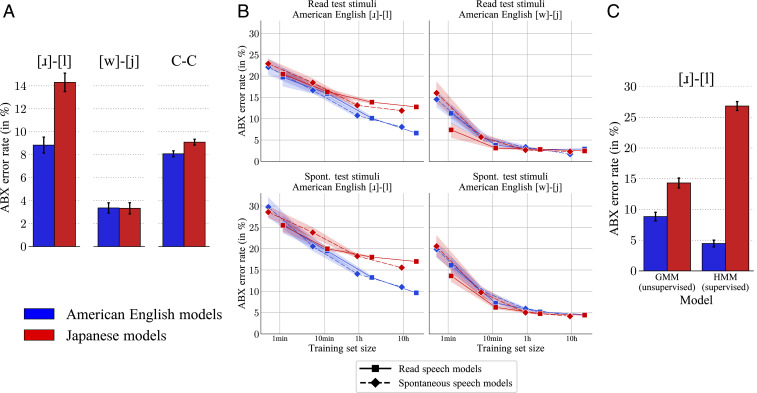

Next, we focus on the specific case of American English [ɹ]–[l] discrimination, for which Japanese adults show a well-documented deficit (2, 3) and which has been studied empirically in American English and Japanese infants (14). While 6- to 8-mo-old infants from American English- and Japanese-language backgrounds performed similarly in discriminating this contrast, 10- to 12-mo-old American English infants outperformed their Japanese peers. We compare the discrimination errors obtained with each of our four models for American English [ɹ]–[l] and for two controls: the American English [w]–[j] contrast (as in “wet” vs. “yet”), for which we do not expect a gap in performance between American English and Japanese natives (95), and the average error over all of the other consonant contrasts of American English. For each contrast and for each of the four models, we averaged discrimination errors obtained on each of the two American English held-out test sets, yielding 3 × 4 discrimination errors. We further averaged over models with the same native language to obtain 3 × 2 discrimination errors. The results are shown in Fig. 3 (see also SI Appendix, Figs. S2 and S6 for untabulated results and a test confirming our results with the synthetic stimuli used in the original infant experiment, respectively). In Fig. 3A, we see that, similar to 10- to 12-mo-old infants, American English native models (in blue) greatly outperform Japanese native models (in red) in discriminating American English [ɹ]–[l]. Here, again, a supervised phoneme-recognizer baseline yields a similar pattern of results, but with larger cross-linguistic differences (Fig. 3C; see also SI Appendix, Fig. S5), again suggesting that the representations learned by the unsupervised models—like those of infants—remain somewhat “immature.” In Fig. 
3B, we see results obtained by training 10 different models on 10 different subsets of the training set of each corpus, varying the sizes of the subsets (see Materials and Methods for more details). It reveals that 1 h of input is sufficient for the divergence between the Japanese and English models to emerge robustly and that this divergence increases with exposure to the native language. While it is difficult to interpret this trajectory relative to absolute quantities of data or discrimination scores, the fact that the cross-linguistic difference increases with more data mirrors the empirical findings from infants (see also an extended discussion of our approach to interpreting the simulated discrimination errors and relating them to empirical data in SI Appendix, Discussion 3).

Fig. 3.

(A) ABX error rates for the American English [ɹ]–[l] contrast and two controls: American English [w]–[j] and average over all American English consonant contrasts (C–C). Error rates are reported for two conditions: average over models trained on American English and average over models trained on Japanese. Error bars correspond to plus and minus one SD of the errors across resampling of the test-stimuli speakers. Similar to infants, the Japanese native models exhibit a specific deficit for American English [ɹ]–[l] discrimination compared to the American English models. (B) The robustness of the effect observed in A to changes in the training stimuli and their dependence on the amount of input are assessed by training separate models on independent subsets of the training data of each corpus of varying duration (Materials and Methods). For each selected duration (except when using the full training set), 10 independent subsets are selected, and 10 independent models are trained. We report mean discrimination errors for American English [ɹ]–[l] and [w]–[j] as a function of the amount of input data, with error bands indicating plus or minus one SD. The results show that a deficit in American English [ɹ]–[l] discrimination for Japanese-native models robustly emerges with as little as 1 h of training data. (C) To give a sense of scale, we compare the cross-linguistic difference obtained with the unsupervised Gaussian mixture models (GMM) on American English [ɹ]–[l] (Left) to the one obtained with supervised phoneme-recognizer baselines (hidden Markov model, HMM; Right). The larger cross-linguistic difference obtained with the supervised baselines suggests that the representations learned by our unsupervised models, similar to those observed in infants, remain somewhat immature.

Nature of the Learned Representations.

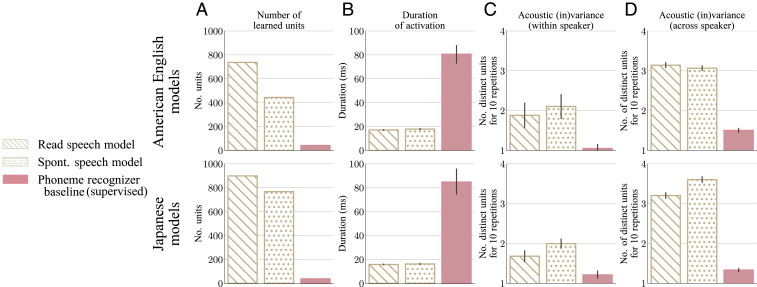

Finally, we considered the nature of the learned representations and tested whether what has been learned can be understood in terms of phonetic categories. Results are reported in Fig. 4 (see also SI Appendix, Fig. S7 for comparisons with a different supervised baseline). First, looking at the category number criterion in Fig. 4A, we see that our models learned more than 10 times as many categories as the number of phonemes in the corresponding languages. Even allowing for notions of phonetic categories more granular than phonemes, we are not aware of any phonetic analysis ever reporting that many allophones in these languages. Second, looking at the duration criterion in Fig. 4B, the learned Gaussian units appear to be activated, on average, for about a quarter the duration of a phoneme. This is shorter than any linguistically identified unit. It shows that the phonetic category segmentation problem has not been solved. Next, looking at the acoustic (in)variance criterion in Fig. 4 C and D—for the within- and across-speakers conditions, respectively—we see that our models require, on average, around two distinct representations to represent 10 tokens of the same phonetic category without speaker variability and three distinct representations across different speakers. The supervised phoneme-recognizer baseline establishes that our results cannot be explained by defective test stimuli. Instead, this result shows that the learned units are finer-grained than phonetic categories along the spectral axis and that the lack-of-invariance problem has not been solved. Based on these tests, we can conclude that the learned units do not correspond to phonetic categories in any meaningful sense of the term.

Fig. 4.

Diagnostic test results for our four unsupervised Gaussian mixture models (in beige) and phoneme-recognizer baselines trained with explicit supervision (in pink). (Upper) American English native models. (Lower) Japanese native models. Models are tested on read speech in their native language. (A) Number of units learned by the models. Gaussian mixtures discover 10 to 20 times more categories than there are phonemes in the training language, exceeding any reasonable count for phonetic categories. (B) Average duration of activation of the learned units. The average duration of activation of each unit is computed, and the average and SD of the resulting distribution over units are shown. Learned Gaussian units get activated, on average, for about a quarter of the duration of a phoneme. They are, thus, much too “short” to correspond to phonetic categories. (C) Average number of distinct representations for the central frame of the central phone for 10 repetitions of the same word by the same speaker, corrected for possible misalignment. The number of distinct representations is computed for each word type with sufficient repetitions in the test set, and the average and SD of the resulting distribution over word types are shown. The phoneme-recognizer baseline reliably identifies the 10 tokens as exemplars from a common phonetic category, whereas our Gaussian mixture models typically maintain on the order of two distinct representations, indicating representations too fine-grained to be phonetic categories. (D) As in C, but with repetitions of the same word by 10 speakers, showing that the learned Gaussian units are not speaker-independent. Spont., spontaneous.
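The count reported in panels C and D can be approximated by taking the winning unit at the central frame of each token and counting how many different units appear across the 10 tokens. This is a simplified sketch: it treats a "representation" as the dominant unit of one frame, it omits the misalignment correction described in Materials and Methods, and the function names and toy tokens are hypothetical.

```python
def central_unit(posteriorgram):
    """Index of the most probable unit at the central frame of one token."""
    mid = posteriorgram[len(posteriorgram) // 2]
    return max(range(len(mid)), key=mid.__getitem__)

def n_distinct_representations(tokens):
    """Number of different winning units across the central frames of all tokens."""
    return len({central_unit(token) for token in tokens})

# Two invented token shapes over 3 units; each token has 3 frames.
token_a = [[0.2, 0.8, 0.0], [0.9, 0.1, 0.0], [0.3, 0.7, 0.0]]  # central winner: unit 0
token_b = [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1], [0.9, 0.1, 0.0]]  # central winner: unit 1

# Ten repetitions of the "same word": seven like token_a, three like token_b.
tokens = [token_a] * 7 + [token_b] * 3
n_distinct = n_distinct_representations(tokens)
```

A representation behaving like a phonetic category would give a count of 1 for such a set of repetitions; the toy set gives 2, the order of magnitude reported for the Gaussian mixture models in the within-speaker condition.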

Discussion

Through explicit simulation of the learning process under realistic learning conditions, we showed that several aspects of early phonetic learning, as observed in American English and Japanese infants, can be correctly predicted through a distributional learning (i.e., clustering) mechanism applied to simple spectrogram-like auditory features sampled at regular time intervals. This contrasts with previous attempts to show the feasibility of potential mechanisms for early phonetic learning, which only considered highly simplified learning conditions and/or failed (26, 28, 29, 33–44, 46–48). We further showed that the learned speech units are too brief and too acoustically variable to correspond to the vowel- and consonant-like phonetic categories posited in earlier accounts of early phonetic learning.

Distributional learning has been an influential hypothesis in language acquisition for over a decade (24, 26, 27). Previous modeling results questioning the feasibility of learning phonetic categories through distributional learning have traditionally been interpreted as challenging the learning mechanism (26, 28, 29, 39–44), but we have instead suggested that such results may be better interpreted as challenging the idea that phonetic categories are the outcome of early phonetic learning. Supporting this view, we showed that when the requirement to learn phonetic categories is abandoned, distributional learning on its own can be sufficient to explain early phonetic learning under realistic learning conditions—using unsegmented, untranscribed speech signal as input. Our results are still compatible with the idea that mechanisms tapping into other relevant sources of information might complement distributional learning—an idea supported by evidence that infants learn from some of these sources in the laboratory (96–102)—but they suggest that those other sources of information may not play a role as crucial as previously thought (26). Our findings also join recent accounts of “word segmentation” (103) and the “language familiarity effect” (104) in questioning whether we might have been overattributing linguistic knowledge to preverbal infants across the board.

An Account of Early Phonetic Learning without Phonetic Categories.

Our results suggest an account of phonetic learning that substantially differs from existing ones. Whereas previous proposals have been primarily motivated through an outcome-driven perspective—starting from assumptions about what it is about language that is learned—the motivation for the proposed account comes from a mechanism-driven perspective—starting from assumptions about how learning might proceed from the infant’s input. This contrast is readily apparent in the choice of the initial speech representation, upon which the early phonetic learning process operates (the input representation). Previous accounts assumed speech to be represented innately through a set of universal (i.e., language-independent) phonetic feature detectors (9, 18–21, 48–51). The influential phonetic category accounts, furthermore, assumed these features to be available phonetic segment by phonetic segment (i.e., for each vowel and consonant separately) (9, 18–21). While these assumptions are attractive from an outcome-driven perspective—they connect transparently to phonological theories in linguistics and theories of adult speech perception that assume a decomposition of speech into phoneme-sized segments defined in terms of abstract phonological features—from a mechanism-driven perspective, both assumptions are difficult to reconcile with the continuous speech signal that infants hear. The lack of acoustic–phonetic invariance problem challenges the idea of phonetic feature detectors, and the phonetic category segmentation problem challenges the idea that the relevant features are segment-based (30–32). The proposed account does not assume either problem to be solved by infants at birth. Instead, it relies on basic auditory abilities that are available to neonates (74), using simple auditory descriptors of the speech spectrum obtained regularly along the time axis. 
This type of spectrogram-like representation is effective in speech-technology applications (71) and can be seen as the output of a simple model of the peripheral auditory system (ref. 90, chap. 3), which is fully operational shortly after birth (74). Such representations have also been proposed before as an effective way to get around both the lack-of-invariance and the phonetic category segmentation problems in the context of adult word recognition (30) and can outperform representations based on traditional phonetic measurements (like formant frequencies) as predictors of adult speech perception (105–109).

While the input representation is different, the learning mechanism in the proposed account—distributional learning—is similar to what had originally been proposed in phonetic category accounts. Infants’ abilities, both in the laboratory (24, 27) and in ecological conditions (25), are consistent with such a learning mechanism. Moreover, when applied to the input representation considered in this paper, distributional learning is adaptive in that it yields speech representations that can support remarkably accurate discrimination of the phonetic categories of the training language, outperforming a number of alternatives that have been proposed for unsupervised speech representation learning (57, 58, 68).

As a consequence of our mechanism-driven approach, what has been learned needs to be determined a posteriori based on the outcomes of learning simulations. The speech units learned under the proposed account accurately model infants’ discrimination, but are too brief and acoustically variable to correspond to phonetic categories, failing, in particular, to provide a solution to the lack-of-invariance and phonetic category segmentation problems (30). Such brief units do not correspond to any identified linguistic unit (22) (see SI Appendix, Discussion 4 for a discussion of possible reasons why the language-acquisition process might involve the learning by infants of a representation with no established linguistic interpretation and a discussion of the biological and psychological plausibility of the learned representation), and it will be interesting to try to further understand their nature. However, since there is no guarantee that a simple characterization exists, we leave this issue for future work.

Phonetic categories are often assumed as precursors in accounts of phenomena occurring later in the course of language acquisition. Our account does not necessarily conflict with this view, as phonetic categories may be learned later in development, before phonological acquisition. Alternatively, the influential PRIMIR account of early language acquisition (“a developmental framework for Processing Rich Information from Multi-dimensional Interactive Representations”, ref. 20) proposes that infants learn in parallel about the phonetics, word forms, and phonology of their native language, but do not develop abstract phonemic representations until well into their second year of life. Although PRIMIR explicitly assumes phonetic learning to be phonetic category learning, other aspects of their proposed framework do not depend on that assumption, and our framework may be able to stand in for the phonetic learning process they assume.

To sum up, we introduced and motivated an account of early phonetic learning (according to which infants learn through distributional learning, but do not learn phonetic categories), and we showed that this account is feasible under realistic learning conditions, which cannot be said of any other account at this time. Importantly, this does not constitute decisive evidence for our account over alternatives. Our primary focus has been on modeling cross-linguistic differences in the perception of one contrast, [ɹ]–[l]; further work is necessary to determine to what extent our results extend to other contrasts and languages (110). Furthermore, the absence of a feasibility proof does not amount to a proof of infeasibility. While we have preliminary evidence that simply forcing the model to learn fewer categories is unlikely to be sufficient (SI Appendix, Figs. S9 and S10), recently proposed partial solutions to the phonetic category segmentation problem (e.g., refs. 111–113) and to the lack-of-invariance problem (114) (see also SI Appendix, Discussion 2 regarding the choice of model initialization) might, for example, yet lead to a feasible phonetic category-based account. In addition, a number of other representation learning algorithms proposed in the context of unsupervised speech technologies and building on recent developments in the field of machine learning have yet to be investigated (52–69). They might provide concrete implementations of previously proposed accounts of early phonetic learning or suggest new ones altogether. This leaves us with a large space of appealing theoretical possibilities, making it premature to commit to a particular account. Candidate accounts should instead be evaluated on their ability to predict empirical data on early phonetic learning, which brings us to the second main contribution of this article.

Toward Predictive Theories of Early Phonetic Learning.

Almost since the original empirical observation of early phonetic learning (115), a number of theoretical accounts of the phenomenon have coexisted (9, 19, 48, 49). This theoretical underdetermination has typically been thought to result from the scarcity of empirical data from infant experiments. We argue instead that the main limiting factor on our understanding of early phonetic learning might have been the lack—on the theory side—of a practical method to link proposed accounts of phonetic learning with concrete, systematic predictions regarding the empirical discrimination data they seek to explain. Establishing such a systematic link has been challenging due to the necessity of dealing with the actual speech signal, with all its associated complexity. The modeling framework we introduce provides a practical and scalable way to overcome these challenges and obtain the desired link for phonetic learning theories—a major methodological advance, given the fundamental epistemological importance of linking explanandum and explanans in scientific theories (116).

Our mechanism-driven approach to obtaining predictions—which can be applied to any phonetic learning model implemented in our framework—consists first of explicitly simulating the early phonetic learning process as it happens outside of the laboratory, which results in a trained model capable of mapping any speech input to a model representation for that input. The measurement of infants’ perceptual abilities in laboratory settings—including their discrimination of any phonetic contrast—can then be simulated on the basis of the model’s representations of the relevant experimental stimuli. Finally, phonetic contrasts for which a significant cross-linguistic difference is robustly predicted can be identified through a careful statistical analysis of the simulated discrimination judgments (SI Appendix, Materials and Methods 4). As an illustration of how such predictions can be generated, we report specific predictions made by our distributional learning model in SI Appendix, Table S1 (see also SI Appendix, Discussion 5).

Although explicit simulations of the phonetic learning process have been carried out before (29, 33–43, 45, 48, 72, 73), those have typically been evaluated based on whether they learned phonetic categories, and have not been directly used to make predictions regarding infants’ discrimination abilities. An outcome-driven approach to making predictions regarding discrimination has typically been adopted instead, starting from the assumption that phonetic categories are the outcome of learning. To the best of our knowledge, this has never resulted in the kind of systematic predictions we report here, however (see SI Appendix, Discussion 6 for a discussion of the limits of previous approaches and of the key innovations underlying the success of our framework).

Our framework readily generates empirically testable predictions regarding infants’ discrimination, yet further computational modeling is called for before we return to experiments. Indeed, existing data—collected over more than three decades of research (5, 15–17)—might already suffice to distinguish between different learning mechanisms. To make that determination, and to decide which contrasts would be most useful to test next, in case more data are needed, many more learning mechanisms and training/test language pairs will need to be studied. Even for a specified learning mechanism and training/test datasets, multiple implementations should ideally be compared (e.g., testing different parameter settings for the input representations or the clustering algorithm), as implementational choices that weren’t initially considered to be important might, nevertheless, have an effect on the resulting predictions and, thus, need to be included in our theories. Conversely, features of the model that may seem important a priori (e.g., the type of clustering algorithm used) might turn out to have little effect on the learning outcomes in practice.

Cognitive science has not traditionally made use of such large-scale modeling, but recent advances in computing power, large datasets, and machine-learning algorithms make this approach more feasible than ever before (70). Together with ongoing efforts in the field to collect empirical data on a large scale—such as large-scale recordings of infants’ learning environments at home (117) and large-scale assessment of infants’ learning outcomes (118, 119)—our modeling approach opens the path toward a much deeper understanding of early language acquisition.

Materials and Methods

Datasets.

We used speech recordings from four corpora: two corpora of read news articles—a subset of the Wall Street Journal corpus of American English (83) (WSJ) and the Globalphone corpus of Japanese (84) (GPJ)—and two corpora of spontaneous speech—the Buckeye corpus of American English (85) (BUC) and a subset of the corpus of spontaneous Japanese (86) (CSJ). As we are primarily interested in the effect of training language on discrimination abilities, we sought to remove possibly confounding differences between the two read corpora and between the two spontaneous corpora. Specifically, we randomly sampled subcorpora while matching total duration, number, and gender of speakers and amount of speech per speaker. We made no effort to match corpora within a language, as the differences (for example, in the total duration and number of speakers) only serve to reinforce the generality of any result holding true for both registers. Each of the sampled subsets was further randomly divided into a training and a test set (Table 1), satisfying three conditions: The test set lasts approximately 10 h; no speaker is present in both the training and test set; and the training and test sets for the two read corpora, and separately for the two spontaneous corpora, remain matched on overall duration, number of speakers of each gender, and distribution of duration per speaker of each gender. To carry out analyses taking into account the effect of input size and of the choice of input data, we further divided each training set in 10 with each 1/10th subset containing an equal proportion of the speech samples from each speaker in the original training set. We then divided each of the 1/10th subsets in 10 again following the same procedure and selected the first subset to obtain 10 1/100th subsets. Finally, we iterated the procedure one more time to obtain 10 1/1,000th subsets. See SI Appendix, Materials and Methods 1 for additional information.
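The nested subsetting described above can be illustrated with a small sketch. It is not the code used in the study: the function names are hypothetical, utterances are represented as `(speaker, utterance_id)` pairs, and shares are balanced by utterance count rather than by speech duration, which is a simplifying assumption.

```python
import random
from collections import defaultdict

def split_per_speaker(utterances, n_parts=10, seed=0):
    """Split (speaker, utterance_id) pairs into n_parts subsets so that each
    subset receives an (almost) equal share of every speaker's utterances.
    Balancing by count instead of duration is an illustrative assumption."""
    rng = random.Random(seed)
    by_speaker = defaultdict(list)
    for speaker, utt in utterances:
        by_speaker[speaker].append(utt)
    parts = [[] for _ in range(n_parts)]
    for speaker, utts in sorted(by_speaker.items()):
        rng.shuffle(utts)
        # Deal this speaker's utterances round-robin across the subsets.
        for i, utt in enumerate(utts):
            parts[i % n_parts].append((speaker, utt))
    return parts

def nested_subsets(utterances, n_parts=10, depth=3, seed=0):
    """Return [tenths, hundredths, thousandths]. Each level after the first
    splits every subset of the previous level again and keeps its first part."""
    levels = [split_per_speaker(utterances, n_parts, seed)]
    for level in range(1, depth):
        levels.append([split_per_speaker(part, n_parts, seed + level)[0]
                       for part in levels[-1]])
    return levels
```

With two speakers contributing 1,000 utterances each, this yields ten subsets of 200, ten of 20, and ten of 2 utterances, each keeping the two speakers in equal proportion.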

Signal Processing, Models, and Inference.

The raw speech signal was decomposed into a sequence of overlapping 25-ms-long frames sampled every 10 ms, and moderate-dimensional (d = 39) descriptors of the spectral shape of each frame were then extracted, describing how energy in the signal spreads across different frequency channels. The descriptors comprised 13 mel-frequency cepstral coefficients with their first and second time derivatives. These coefficients correspond approximately to the principal components of spectral slices in a log-spectrogram of the signal, where the spectrogram frequency channels were selected on a mel-frequency scale (linear for lower frequency and logarithmic for higher frequencies, matching the frequency selectivity of the human ear).
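To make the frame bookkeeping concrete, the sketch below computes where 25-ms frames sampled every 10 ms start in a signal, and how 13 static coefficients grow to 39 dimensions once first and second time derivatives are appended. It does not compute mel-frequency cepstral coefficients; the function names and the simple central-difference derivative are assumptions made for illustration, and the derivative formula actually used in the study is not specified here.

```python
def frame_starts(n_samples, sample_rate, win_ms=25, hop_ms=10):
    """Start indices (in samples) of all full analysis frames in the signal."""
    win = sample_rate * win_ms // 1000
    hop = sample_rate * hop_ms // 1000
    if n_samples < win:
        return []
    return list(range(0, n_samples - win + 1, hop))

def add_deltas(frames):
    """Append first and second time differences to each static vector.
    Central differences with edge padding are an illustrative choice.
    Expects a non-empty list of equal-length lists of floats."""
    def diff(seq):
        padded = [seq[0]] + seq + [seq[-1]]
        return [[(b - a) / 2 for a, b in zip(padded[t], padded[t + 2])]
                for t in range(len(seq))]
    d1 = diff(frames)
    d2 = diff(d1)
    return [s + a + b for s, a, b in zip(frames, d1, d2)]
```

For one second of audio at an assumed 16-kHz sampling rate, `frame_starts(16000, 16000)` gives 98 frames of 400 samples each, and `add_deltas` turns each 13-dimensional static vector into a 39-dimensional descriptor.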

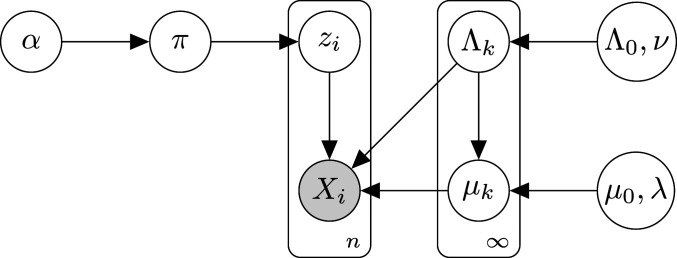

For each corpus, the set of all spectral-shape descriptors for the corpus’ training set was modeled as a large independent and identically distributed sample from a probabilistic generative model. The generative model is a Gaussian mixture model with no restrictions on the form of covariance matrices and with a Dirichlet process prior over its parameters with normal-inverse-Wishart base measure. The generative model is depicted as a graphical model in plate notation in Fig. 5, where $n$ is the number of input descriptors, $X = (x_1, x_2, \ldots, x_n)$ are the random variables from which the observed descriptors are assumed to be sampled, and the other elements are latent variables and hyperparameters. The depicted variables have the following conditional distributions:

$$
\begin{aligned}
x_i \mid z_i, (\mu_1, \mu_2, \ldots), (\Lambda_1, \Lambda_2, \ldots) &\sim \mathcal{N}\left(\mu_{z_i}, \Lambda_{z_i}^{-1}\right), \\
\mu_k \mid \Lambda_k, \mu_0, \lambda &\sim \mathcal{N}\left(\mu_0, (\lambda \Lambda_k)^{-1}\right), \\
\Lambda_k \mid \Lambda_0, \nu &\sim \mathcal{W}(\Lambda_0, \nu), \\
z_i \mid \pi &\sim \mathrm{Multi}(\pi), \\
\pi \mid \alpha &\sim \mathrm{SB}(\alpha),
\end{aligned}
$$

for any $1 \le i \le n$, for any $k \in \{1, 2, \ldots\}$, with $\mathcal{N}$ the multivariate Gaussian distribution, $\mathcal{W}$ the Wishart distribution, Multi the generalization of the usual multinomial probability distribution to an infinite discrete support, and SB the mixing weights generating distribution from the stick-breaking representation of Dirichlet processes (120). Mixture parameters with high posterior probability given the observed input feature vectors and the prior were found by using an efficient parallel Markov chain Monte Carlo sampler (121). Following previous work (60, 65), model initialization was performed by partitioning training points uniformly at random into 10 clusters, and the hyperparameters were set as follows: $\alpha$ to 1, $\mu_0$ to the average of all input feature vectors, $\lambda$ to 1, $\Lambda_0$ to the inverse of the covariance of all input feature vectors, and $\nu$ to 42 (i.e., the spectral shape descriptors dimension plus 3). We additionally trained a model on each of the 10 1/10th, 1/100th, and 1/1,000th training subsets of each of the four corpora, following the same procedure.
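The stick-breaking construction behind the mixing weights can be illustrated with a short sketch. It draws only a finite prefix of the infinite weight sequence, which is a truncation introduced here for illustration; the function name and the name `alpha` for the concentration hyperparameter are likewise assumptions, and this is not the parallel sampler used for inference in the study.

```python
import random

def stick_breaking_weights(alpha, n_components, seed=0):
    """Draw the first n_components mixing weights of a stick-breaking process.

    Each weight is a Beta(1, alpha) fraction of the stick left over by the
    previous components. The returned remainder is the total mass of all
    later (untruncated) components.
    """
    rng = random.Random(seed)
    weights, remaining = [], 1.0
    for _ in range(n_components):
        fraction = rng.betavariate(1.0, alpha)
        weights.append(remaining * fraction)
        remaining *= 1.0 - fraction
    return weights, remaining
```

With a concentration of 1, as in the hyperparameter setting described above, most of the mass falls on the first few components, but the number of components with non-negligible weight is not fixed in advance and can grow with the amount of data.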

Fig. 5.

Generative Gaussian mixture model with Dirichlet process prior with normal-inverse-Wishart base measure, represented as a graphical model in plate notation based on the stick-breaking construction of Dirichlet processes.

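Given a trained Gaussian mixture with $K$ components, mixing weights $(\pi_1, \pi_2, \ldots, \pi_K)$, means $(\mu_1, \mu_2, \ldots, \mu_K)$, and covariance matrices $(\Sigma_1, \Sigma_2, \ldots, \Sigma_K)$, we extracted a test stimulus representation from the sequence $(x_1, x_2, \ldots, x_m)$ of spectral-shape descriptors for that stimulus, as the sequence of posterior probability vectors $(p_1, p_2, \ldots, p_m)$, where for any frame $i$, $1 \le i \le m$, $p_i = (p_{i1}, p_{i2}, \ldots, p_{iK})$, with, for any $1 \le k \le K$:

$$
p_{ik} = \frac{\pi_k \, \mathcal{N}(x_i \mid \mu_k, \Sigma_k)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(x_i \mid \mu_j, \Sigma_j)}.
$$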

As a baseline, we also trained a phoneme recognizer on the training set of each corpus, with explicit supervision (i.e., phonemic transcriptions of the training stimuli). We extracted frame-level posterior probabilities at two granularity levels: actual phonemes—the phoneme-recognizer baseline—and individual states of the contextual hidden Markov models—the ASR phone-state baseline. See SI Appendix, Materials and Methods 2 for additional information.
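The posterior-probability representation described above for the Gaussian mixture models can be sketched in a few lines. To stay dependency-free, the sketch assumes diagonal covariance matrices, whereas the models in the study place no restriction on the covariances; the function names are hypothetical.

```python
import math

def log_gauss_diag(x, mean, var):
    """Log-density of a Gaussian with diagonal covariance.
    Diagonal covariance is a simplifying assumption of this sketch."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))

def posteriors(frames, weights, means, variances):
    """Map each frame to its vector of component posterior probabilities."""
    out = []
    for x in frames:
        logs = [math.log(w) + log_gauss_diag(x, m, v)
                for w, m, v in zip(weights, means, variances)]
        top = max(logs)  # subtract the maximum for numerical stability
        unnorm = [math.exp(value - top) for value in logs]
        total = sum(unnorm)
        out.append([u / total for u in unnorm])
    return out
```

Each input frame is thus replaced by a vector with one entry per mixture component, summing to one; a frame lying midway between two equally weighted components with equal variances receives a posterior of 0.5 for each.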

Discrimination Tests.

Discriminability between model representations for phonetic contrasts of interest was assessed by using machine ABX discrimination errors (89, 90). Discrimination was assessed in context, defined as the preceding and following sound and the identity of the speaker. For example, discrimination of American English [u] vs. [i] was assessed in each available context independently, yielding—for instance—a separate discrimination-error rate for test stimuli in [b]_[t] phonetic context, as in “boot” vs. “beet,” as spoken by a specified speaker. Other possible factors of variability, such as word boundaries or syllable position, were not controlled. For each model, each test corpus, and each phonemic contrast in that test corpus (as specified by the corpus’ phonemic transcriptions), we obtained a discrimination error for each context in which the contrasted phonemes occurred at least twice in the test corpus’ test set. To avoid combinatorial explosion in the number of ABX triplets to be considered, a randomly selected subset of five occurrences was used to compute discrimination errors when a phoneme occurred more than five times in a given context. An aggregated ABX error rate was obtained for each combination of model, test corpus, and phonemic contrast, by averaging the context-specific error rates over speakers and phonetic contexts, in that order.
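The two-stage averaging described above (over speakers first, then over phonetic contexts) can be sketched as follows. The dictionary layout and the function name are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

def aggregate_abx(errors):
    """errors maps (preceding, following, speaker) to a context-specific
    ABX error rate. Average over speakers within each phonetic context,
    then average the resulting values over phonetic contexts."""
    by_context = defaultdict(list)
    for (prev, nxt, _speaker), err in errors.items():
        by_context[(prev, nxt)].append(err)
    return mean(mean(errs) for errs in by_context.values())
```

Averaging in this order gives each phonetic context the same weight in the aggregate, regardless of how many speakers happened to produce it in the test set.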

Model representations were extracted for the whole test sets, and the part corresponding to a specific occurrence of a phonetic category was then obtained by selecting representation frames centered on time points located between the start and end times for that occurrence, as specified by the test set’s forced aligned phonemic transcriptions. Given model representations $R_A$ and $R_B$ for $n_A$ tokens of phonetic category $A$ and $n_B$ tokens of phonetic category $B$, the nonsymmetrized machine ABX discrimination error between $A$ and $B$ was then estimated as the proportion of representation triplets $a$, $b$, $x$, with $a$ and $x$ taken from $R_A$ and $b$ taken from $R_B$, such that $x$ is closer to $b$ than to $a$, i.e.,

$$
\hat{e}(A, B) = \frac{1}{n_A (n_A - 1) \, n_B} \sum_{a \in R_A} \sum_{b \in R_B} \sum_{x \in R_A \setminus \{a\}} \mathbb{1}_{d(b, x) < d(a, x)},
$$

where $\mathbb{1}$ is the indicator function returning one when its predicate is true and zero otherwise, and $d$ is a dissimilarity function taking a pair of model representations as input and returning a real number (with higher values indicating more dissimilar representations). The (symmetric) machine ABX discrimination error between $A$ and $B$ was then obtained as:

$$
\hat{e}_{\mathrm{sym}}(A, B) = \frac{\hat{e}(A, B) + \hat{e}(B, A)}{2}.
$$

As realizations of phonetic categories vary in duration, we need a dissimilarity function that can handle model representations with variable length. This was done, following established practice (12, 13, 55, 57, 68), by measuring the average dissimilarity along a time alignment of the two representations obtained through dynamic time warping (122), where the dissimilarity between model representations for individual frames was measured with the symmetrized Kullback–Leibler divergence for posterior probability vectors and with the angular distance for spectral shape descriptors.
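A minimal sketch of the machine ABX error with a dynamic-time-warping dissimilarity is given below. It is not the implementation used in the study. The function names are hypothetical, the alignment is normalized by the length of the minimum-cost path, ties between distances are counted as correct, and the symmetrized Kullback–Leibler divergence is taken without a factor of one half; all of these are assumptions of the sketch.

```python
import math

def sym_kl(p, q, eps=1e-12):
    """Symmetrized Kullback-Leibler divergence, KL(p||q) + KL(q||p).
    eps guards against log(0); its value is an illustrative assumption."""
    return sum((pi - qi) * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q))

def dtw_average(u, v, frame_d):
    """Average frame dissimilarity along a minimum-cost DTW alignment."""
    n, m = len(u), len(v)
    # cost[i][j], steps[i][j]: total cost and length of the best path to (i, j)
    cost = [[math.inf] * m for _ in range(n)]
    steps = [[0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = frame_d(u[i], v[j])
            if i == 0 and j == 0:
                cost[i][j], steps[i][j] = d, 1
                continue
            candidates = []
            if i > 0:
                candidates.append((cost[i - 1][j], steps[i - 1][j]))
            if j > 0:
                candidates.append((cost[i][j - 1], steps[i][j - 1]))
            if i > 0 and j > 0:
                candidates.append((cost[i - 1][j - 1], steps[i - 1][j - 1]))
            best_cost, best_steps = min(candidates)
            cost[i][j], steps[i][j] = best_cost + d, best_steps + 1
    return cost[-1][-1] / steps[-1][-1]

def abx_error(A, B, d):
    """Proportion of triplets (a, b, x), with a and x distinct tokens of A
    and b a token of B, for which x is closer to b than to a."""
    wrong = total = 0
    for i, a in enumerate(A):
        for k, x in enumerate(A):
            if i == k:
                continue
            for b in B:
                total += 1
                wrong += d(b, x) < d(a, x)
    return wrong / total

def abx_error_symmetric(A, B, d):
    """Average of the two nonsymmetrized errors."""
    return 0.5 * (abx_error(A, B, d) + abx_error(B, A, d))
```

A typical call would pass lists of posterior-vector sequences for the two categories together with `lambda u, v: dtw_average(u, v, sym_kl)` as the dissimilarity. Each category needs at least two tokens, since `a` and `x` must be distinct.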

Analysis of Learned Representations.

Learned units were taken to be the Gaussian components for the Gaussian mixture models, the phoneme models for the phoneme-recognizer baseline, and the phone-state models for the ASR phone-state baseline. Since experimental studies of phonetic categories are typically performed with citation form stimuli, we studied how each model represents stimuli from the matched-language read speech corpus’ test set.

To study average durations of activation, we excluded any utterance-initial or utterance-final silence from the analysis, as well as any utterance for which utterance-medial silence was detected during the forced alignment. The average duration of activation for a given unit was computed by averaging over all episodes in the test utterances during which that unit becomes dominant, i.e., has the highest posterior probability among all units. Each of these episodes was defined as a continuous sequence of speech frames, during which the unit remains dominant without interruptions, with duration equal to that number of speech frames times 10 ms.
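The duration measure described above amounts to a run-length computation over the sequence of dominant units. The sketch below assumes that silences and excluded utterances have already been removed and that each utterance is given as a list of dominant-unit indices, one per 10-ms frame; the function name is hypothetical.

```python
from collections import defaultdict
from itertools import groupby

def average_activation_ms(dominant_units, frame_shift_ms=10):
    """dominant_units: list of utterances, each a list holding the index of
    the dominant unit at every frame. Returns a dict mapping each unit to
    the average duration (in ms) of its uninterrupted dominance episodes."""
    episodes = defaultdict(list)
    for utterance in dominant_units:
        for unit, run in groupby(utterance):
            episodes[unit].append(sum(1 for _ in run) * frame_shift_ms)
    return {unit: sum(d) / len(d) for unit, d in episodes.items()}
```

For example, a unit that is dominant for three consecutive frames and later for a single frame has two episodes of 30 ms and 10 ms, and thus an average duration of activation of 20 ms.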

The acoustic (in)variance of the learned units was probed by looking at multiple repetitions of a single word and testing whether the dominant unit at the central frame of the central phone of the word remained the same for all repetitions. Specifically, we counted the number of distinct dominant units occurring at the central frame of the central phone for 10 repetitions of the same word. To compensate for possible misalignment of the central phones’ central frames (e.g., due to slightly different time courses in the acoustic realization of the phonetic segment and/or small errors in the forced alignment), we allowed the dominant unit at the central frame to be replaced by any unit that is dominant at some point within the previous or following 46 ms (thus covering a 92-ms slice of time corresponding to the average duration of a phoneme in our read-speech test sets), provided it could bring down the overall count of distinct dominant units for the 10 occurrences. We considered two conditions: In the within-speaker condition, the test stimuli were uttered 10 times by the same speaker; in the across-speaker condition, they were uttered once each by 10 different speakers. See SI Appendix, Materials and Methods 3 for more information on the misalignment correction and the stimulus-selection procedure.
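One possible reading of the misalignment correction is sketched below: each repetition contributes the set of units that are dominant near its central frame, and the corrected count is the smallest number of units that covers every repetition. This interpretation, the exhaustive search, the function names, and the window expressed in frames are all assumptions; the actual procedure is described in SI Appendix, Materials and Methods 3.

```python
from itertools import combinations

def candidate_units(dominant, center, half_width=4):
    """Units dominant at some frame within half_width frames of the center.
    A half-width of 4 frames (40 ms at a 10-ms shift) is an assumed stand-in
    for the 46-ms window."""
    lo = max(0, center - half_width)
    hi = min(len(dominant), center + half_width + 1)
    return set(dominant[lo:hi])

def min_distinct_units(candidate_sets):
    """Smallest number of units such that every repetition has at least one
    of them among its candidates. Exhaustive search, which stops early when
    the answer is small."""
    universe = sorted(set().union(*candidate_sets))
    for size in range(1, len(universe) + 1):
        for chosen in combinations(universe, size):
            if all(cands & set(chosen) for cands in candidate_sets):
                return size
    return 0
```

Under this reading, a result of 1 means that a single unit can account for all repetitions once small timing differences are tolerated, while larger values indicate that repetitions of the same phone are spread over several learned units.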

Data and Code Availability.

The datasets analyzed in this study are publicly available from the commercial vendors and research institutions holding their copyrights (83–86). Datasets generated during the course of the study that do not include proprietary information are available at https://osf.io/d2fpb/. Code to reproduce the results is available at https://github.com/Thomas-Schatz/perceptual-tuning-pnas.

Acknowledgments

We thank the editor, anonymous reviewers, and Yevgen Matusevych for helpful comments on the manuscript. The contributions of X.-N.C. and E.D. at Cognitive Machine Learning were supported by the Agence Nationale pour la Recherche Grants ANR-17-EURE-0017 Frontcog, ANR-10-IDEX-0001-02 PSL*, and ANR-19-P3IA-0001 PRAIRIE 3IA Institute; a grant from Facebook AI Research; and a grant from the Canadian Institute for Advanced Research (Learning in Machine and Brains). T.S. and N.H.F. were supported by NSF Grant BCS-1734245, and S.G. was supported by Economic and Social Research Council Grant ES/R006660/1 and James S. McDonnell Foundation Grant 220020374.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

*For example, the same phoneme might be realized as different phonetic categories depending on the preceding and following sounds or on characteristics of the speaker.

†In some accounts, the phonetic dimensions are assumed to be “acoustic” (9)—e.g., formant frequencies—in others, they are “articulatory” (19)—e.g., the degree of vocal tract opening at a constriction—and some accounts remain noncommittal (20).

‡Note that the claims in all of the relevant theoretical accounts are for the formation of explicit representations, in the sense that they are assumed to be available for manipulation by downstream cognitive processes at later developmental stages (see, e.g., ref. 20). Thus, even if one might be tempted to say that phonetic categories are implicitly present in some sense in a representation—for example, in a continuous representation exhibiting sharp increases in discriminability across phonetic category boundaries (48)—unless a plausible mechanism by which downstream cognitive processes could explicitly read out phonetic categories from that representation is provided, together with evidence that infants actually use this mechanism, this would not be sufficient to support the early phonetic category acquisition hypothesis.

§There was a previous attempt to model infant phonetic learning from such spectrogram-like auditory representations of continuous speech (72, 73), but it did not combine this modeling approach with a suitable evaluation methodology.

¶This provides necessary but not sufficient conditions for “phonetic categoriness,” but since we will see that the representations learned in our simulations already fail these tests, more fine-grained assessments will not be required.

∥For example, in the American English word “top,” the phoneme /t/ is realized as an aspirated consonant [tʰ] (i.e., there is a slight delay before the vocal folds start to vibrate after the consonant), whereas in the word “stop,” it is realized as a regular voiceless consonant [t], which might be considered to correspond to a different phonetic category than [tʰ].

**This is compatible with empirical evidence that phonetic learning continues into childhood well beyond the first year (see refs. 91–93, for example).

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2001844118/-/DCSupplemental.

References

- 1. Sapir E., An Introduction to the Study of Speech (Harcourt, Brace, New York, 1921).

- 2. Goto H., Auditory perception by normal Japanese adults of the sounds “L” and “R”. Neuropsychologia 9, 317–323 (1971).

- 3. Miyawaki K., et al., An effect of linguistic experience: The discrimination of [r] and [l] by native speakers of Japanese and English. Percept. Psychophys. 18, 331–340 (1975).

- 4. Strange W., Levy E. S., Law F. F., Cross-language categorization of French and German vowels by naïve American listeners. J. Acoust. Soc. Am. 126, 1461–1476 (2009).

- 5. Strange W., Speech Perception and Linguistic Experience: Issues in Cross-Language Research (York Press, Timonium, MD, 1995).

- 6. Logan J. S., Lively S. E., Pisoni D. B., Training Japanese listeners to identify English /r/ and /l/: A first report. J. Acoust. Soc. Am. 89, 874–886 (1991).

- 7. Iverson P., Hazan V., Bannister K., Phonetic training with acoustic cue manipulations: A comparison of methods for teaching English /r/-/l/ to Japanese adults. J. Acoust. Soc. Am. 118, 3267–3278 (2005).

- 8. Levy E. S., Strange W., Perception of French vowels by American English adults with and without French language experience. J. Phonetics 36, 141–157 (2008).

- 9. Kuhl P. K., Williams K. A., Lacerda F., Stevens K. N., Lindblom B., Linguistic experience alters phonetic perception in infants by 6 months of age. Science 255, 606–608 (1992).

- 10. Flege J. E., “Second language speech learning: Theory, findings, and problems” in Speech Perception and Linguistic Experience: Issues in Cross-Language Research, W. Strange, Ed. (York Press, Timonium, MD, 1995), pp. 233–277.

- 11. Best C. T., “A direct realist view of cross-language speech perception” in Speech Perception and Linguistic Experience: Issues in Cross-Language Research, W. Strange, Ed. (York Press, Timonium, MD, 1995), pp. 171–206.

- 12. Schatz T., Bach F., Dupoux E., Evaluating automatic speech recognition systems as quantitative models of cross-lingual phonetic category perception. J. Acoust. Soc. Am. 143, EL372–EL378 (2018).

- 13. Schatz T., Feldman N. H., “Neural network vs. HMM speech recognition systems as models of human cross-linguistic phonetic perception” in CCN ’18: Proceedings of the Conference on Cognitive Computational Neuroscience, 10.32470/CCN.2018.1240-0 (2018).

- 14. Kuhl P. K., et al., Infants show a facilitation effect for native language phonetic perception between 6 and 12 months. Dev. Sci. 9, F13–F21 (2006).

- 15. Werker J. F., Tees R. C., Influences on infant speech processing: Toward a new synthesis. Annu. Rev. Psychol. 50, 509–535 (1999).

- 16. Gervain J., Mehler J., Speech perception and language acquisition in the first year of life. Annu. Rev. Psychol. 61, 191–218 (2010).

- 17. Tsuji S., Cristia A., Perceptual attunement in vowels: A meta-analysis. Dev. Psychobiol. 56, 179–191 (2014).

- 18. Kuhl P. K., “Innate predispositions and the effects of experience in speech perception: The native language magnet theory” in Developmental Neurocognition: Speech and Face Processing in the First Year of Life, B. DeBoysson-Bardies, S. de Schonen, P. Jusczyk, P. MacNeilage, J. Morton, Eds. (Nato Science Series D, Springer Netherlands, Dordrecht, Netherlands, 1993), vol. 69, pp. 259–274.

- 19. Best C. T., et al., “The emergence of native-language phonological influences in infants: A perceptual assimilation model” in The Development of Speech Perception: The Transition from Speech Sounds to Spoken Words, Goodman J. C., Nusbaum H. C., Eds. (MIT Press, Cambridge, MA, 1994), pp. 167–224.

- 20. Werker J. F., Curtin S., PRIMIR: A developmental framework of infant speech processing. Lang. Learn. Dev. 1, 197–234 (2005).

- 21. Kuhl P. K., et al., Phonetic learning as a pathway to language: New data and native language magnet theory expanded (NLM-e). Phil. Trans. Biol. Sci. 363, 979–1000 (2007).

- 22. Kazanina N., Bowers J. S., Idsardi W., Phonemes: Lexical access and beyond. Psychon. Bull. Rev. 25, 560–585 (2018).

- 23. Trubetzkoy N. S., Principles of Phonology (University of California Press, Berkeley, CA, 1969).

- 24. Maye J., Werker J. F., Gerken L., Infant sensitivity to distributional information can affect phonetic discrimination. Cognition 82, B101–B111 (2002).

- 25. Cristia A., Fine-grained variation in caregivers’ /s/ predicts their infants’ /s/ category. J. Acoust. Soc. Am. 129, 3271–3280 (2011).

- 26. Werker J. F., Yeung H. H., Yoshida K. A., How do infants become experts at native-speech perception? Curr. Dir. Psychol. Sci. 21, 221–226 (2012).

- 27. Cristia A., Can infants learn phonology in the lab? A meta-analytic answer. Cognition 170, 312–327 (2018).

- 28. Swingley D., Contributions of infant word learning to language development. Phil. Trans. Biol. Sci. 364, 3617–3632 (2009).

- 29. Feldman N. H., Griffiths T. L., Goldwater S., Morgan J. L., A role for the developing lexicon in phonetic category acquisition. Psychol. Rev. 120, 751–778 (2013).

- 30.Klatt D. H., Speech perception: A model of acoustic-phonetic analysis and lexical access. J. Phon. 7, 279–312 (1979). [Google Scholar]

- 31.Shankweiler D., Strange W., Verbrugge R., “Speech and the problem of perceptual constancy” in Perceiving, Acting and Knowing: Toward an Ecological Psychology, Shaw R., Bransford J., Eds. (Lawrence Erlbaum Associates, Hillsdale, New Jersey, 1977), pp. 315–345. [Google Scholar]

- 32.Appelbaum I., “The lack of invariance problem and the goal of speech perception” in ICSLP ’96: Proceedings of the Fourth International Conference on Spoken Language Processing, Bunnell H. T., Idsardi W., Eds. (IEEE, Piscataway, NJ, 1996), pp. 1541–1544. [Google Scholar]

- 33.De Boer B., Kuhl P. K., Investigating the role of infant-directed speech with a computer model. Acoust Res. Lett. Online 4, 129–134 (2003). [Google Scholar]

- 34.Coen M. H., “Self-supervised acquisition of vowels in American English” in AAAI’06 Proceedings of the 21st National Conference on Artificial Intelligence, Cohn A., Ed. (AAAI Press, Palo Alto, CA, 2006), vol. 2, pp. 1451–1456. [Google Scholar]

- 35.Vallabha G. K., McClelland J. L., Pons F., Werker J. F., Amano S., Unsupervised learning of vowel categories from infant-directed speech. Proc. Natl. Acad. Sci. U.S.A. 104, 13273–13278 (2007). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Gauthier B., Shi R., Xu Y., Learning phonetic categories by tracking movements. Cognition 103, 80–106 (2007). [DOI] [PubMed] [Google Scholar]

- 37.McMurray B., Aslin R. N., Toscano J. C., Statistical learning of phonetic categories: Insights from a computational approach. Dev. Sci. 12, 369–378 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Jones C., Meakins F., Muawiyath S., Learning vowel categories from maternal speech in Gurindji Kriol. Lang. Learn. 62, 1052–1078 (2012). [Google Scholar]

- 39.Adriaans F., Swingley D., “Distributional learning of vowel categories is supported by prosody in infant-directed speech” in COGSCI ’12: Proceedings of the 34th Annual Meeting of the Cognitive Science Society, Miyake N., Peebles D., Cooper R. P., Eds. (Cognitive Science Society, Austin, TX, 2012), pp. 72–77. [Google Scholar]

- 40.Dillon B., Dunbar E., Idsardi W., A single-stage approach to learning phonological categories: Insights from Inuktitut. Cognit. Sci. 37, 344–377 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Frank S., Feldman N., Goldwater S., “Weak semantic context helps phonetic learning in a model of infant language acquisition” in ACL ’14: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, Long Papers, K. Toutanova, H. Wu, Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2014), pp. 1073–1083.

- 42.Adriaans F., Swingley D., Prosodic exaggeration within infant-directed speech: Consequences for vowel learnability. J. Acoust. Soc. Am. 141, 3070–3078 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Adriaans F., Effects of consonantal context on the learnability of vowel categories from infant-directed speech. J. Acoust. Soc. Am. 144, EL20–EL25 (2018). [DOI] [PubMed] [Google Scholar]

- 44.Räsänen O., Computational modeling of phonetic and lexical learning in early language acquisition: Existing models and future directions. Speech Commun. 54, 975–997 (2012). [Google Scholar]

- 45.Rasilo H., Räsänen O., Laine U. K., Feedback and imitation by a caregiver guides a virtual infant to learn native phonemes and the skill of speech inversion. Speech Commun. 55, 909–931 (2013). [Google Scholar]

- 46.Bion R. A. H., Miyazawa K., Kikuchi H., Mazuka R., Learning phonemic vowel length from naturalistic recordings of Japanese infant-directed speech. PloS One 8, e51594 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Antetomaso S., et al. , Modeling Phonetic Category Learning from Natural Acoustic Data (Cascadilla Press, Somerville, MA, 2017). [Google Scholar]

- 48.Guenther F. H., Gjaja M. N., The perceptual magnet effect as an emergent property of neural map formation. J. Acoust. Soc. Am. 100, 1111–1121 (1996). [DOI] [PubMed] [Google Scholar]

- 49.Jusczyk P. W., “Developing Phonological Categories from the Speech Signal” in Phonological Development: Models, Research, Implications, Ferguson C. A., Menn L., Stoel-Gammon C., Eds. (York Press, Timonium, MD, 1992), pp. 17–64. [Google Scholar]

- 50.Jusczyk P. W., From general to language-specific capacities: The WRAPSA model of how speech perception develops. J. Phonetics 21, 3–28 (1993). [Google Scholar]

- 51.Jusczyk P., The Discovery of Spoken Language (MIT Press, Cambridge, MA, 1997). [Google Scholar]

- 52.Varadarajan B., Khudanpur S., Dupoux E., “Unsupervised learning of acoustic sub-word units” in ACL ’08: HLT: Proceedings of the 46th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Short papers, J. D. Moore, S. Teufel, J. Allan, S. Furui, Eds. (Association for Computational Linguistics, Stroudsburg, PA, 2008), pp. 165–168.

- 53.Park A. S., Glass J. R., Unsupervised pattern discovery in speech. IEEE Trans. Audio Speech Lang. Process. 16, 186–197 (2008). [Google Scholar]

- 54.Lee Cy., Glass J., “A nonparametric Bayesian approach to acoustic model discovery” in ACL ’12: Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics, Long Papers, Li H., Lin C.-Y., Osborne M., Lee G. G., Park J.C., Eds. (Association for Computational Linguistics, 121 Stroudsburg, PA, 2012), vol. 1, pp. 40–49.

- 55.Jansen A., et al. , “A summary of the 2012 JHU CLSP workshop on zero resource speech technologies and models of early language acquisition” in ICASSP ’13: Proceedings of the 2013 IEEE International Conference on Acoustics, Speech, and Signal Processing (Institute of Electrical and Electronics Engineers, Piscataway, NJ, 2013), pp. 8111–8115.

- 56.Synnaeve G., Schatz T., Dupoux E., “Phonetics embedding learning with side information” in SLT ’14: Proceedings of the 2014 IEEE Spoken Language Technology Workshop (Institute of Electrical and Electronics Engineers, Piscataway, NJ, 2014), pp. 106–111.

- 57.Versteegh M., et al. , “The zero resource speech challenge 2015” in INTERSPEECH’15: Proceedings of the 16th Annual Conference of the International Speech Communication Association (International Speech Communication Association, Baixas, France, 2015), pp. 3169–3173.

- 58.Versteegh M., Anguera X., Jansen A., Dupoux E., The Zero Resource Speech Challenge 2015: Proposed approaches and results. Procedia Comput. Sci. 81, 67–72 (2016). [Google Scholar]

- 59.Ondel L., Burget L., Černockỳ J., Variational inference for acoustic unit discovery. Procedia Comput. Sci. 81, 80–86 (2016). [Google Scholar]

- 60.Chen H., Leung C. C., Xie L., Ma B., Li H., “Parallel inference of Dirichlet process Gaussian mixture models for unsupervised acoustic modeling: A feasibility study” in INTERSPEECH ’15: Proceedings of the 16th Annual Conference of the International Speech Communication Association (International Speech Communication Association, Baixas, France, 2015), pp. 3189–3193.

- 61.Thiolliere R., Dunbar E., Synnaeve G., Versteegh M., Dupoux E., “A hybrid dynamic time warping-deep neural network architecture for unsupervised acoustic modeling” in INTERSPEECH ’15: Proceedings of the 16th Annual Conference of the International Speech Communication Association (International Speech Communication Association, Baixas, France, 2015), pp. 3179–3183.

- 62.Kamper H., Elsner M., Jansen A., Goldwater S., “Unsupervised neural network based feature extraction using weak top-down constraints” in ICASSP ’15: Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (Institute of Electrical and Electronics Engineers, Piscataway, NJ, 2015), pp. 5818–5822.

- 63.Renshaw D., Kamper H., Jansen A., Goldwater S., “A comparison of neural network methods for unsupervised representation learning on the Zero Resource Speech Challenge” in INTERSPEECH ’15: Proceedings of the 16th Annual Conference of the International Speech Communication Association (International Speech Communication Association, Baixas, France, 2015), pp. 3200–3203.

- 64.Zeghidour N., Synnaeve G., Versteegh M., Dupoux E., “A deep scattering spectrum—deep Siamese network pipeline for unsupervised acoustic modeling” in ICASSP ’16: Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (Institute of Electrical and Electronics Engineers, Piscataway, NJ, 2016), pp. 4965–4969.

- 65.Heck M., Sakti S., Nakamura S., Unsupervised linear discriminant analysis for supporting DPGMM clustering in the zero resource scenario. Procedia Comput. Sci. 81, 73–79 (2016). [Google Scholar]

- 66.Heck M., Sakti S., Nakamura S., “Feature optimized DPGMM clustering for unsupervised subword modeling: A contribution to Zerospeech 2017” in ASRU ’17: Proceedings of the 2017 IEEE Automatic Speech Recognition and Understanding Workshop (Institute of Electrical and Electronics Engineers, Piscataway, NJ, 2017), pp. 740–746. [Google Scholar]

- 67.Hsu W. N., Zhang Y., Glass J., “Unsupervised learning of disentangled and interpretable representations from sequential data” in NIPS ’17: Proceedings of the 31st International Conference on Neural Information Processing Systems, U. von Luxburg et al. (Curran Associates, Red Hook, NY, 2017), pp. 1876–1887.

- 68.Dunbar E., et al. , “The Zero Resource Speech Challenge 2017” in ASRU ’17: Proceedings of the 2017 IEEE Automatic Speech Recognition and Understanding Workshop (Institute of Electrical and Electronics Engineers, Piscataway, NJ, 2017), pp. 320–330.

- 69.Chorowski J., Weiss R. J., Bengio S., van den Oord A., Unsupervised speech representation learning using WaveNet autoencoders. arXiv [Preprint] (2019) https://arxiv.org/abs/1901.08810 (accessed 13 January 2021).

- 70.Dupoux E., Cognitive science in the era of artificial intelligence: A roadmap for reverse-engineering the infant language-learner. Cognition 173, 43–59 (2018). [DOI] [PubMed] [Google Scholar]

- 71.Mermelstein P., Distance measures for speech recognition, psychological and instrumental. Pattern Recognit. Artif. Intell. 116, 91–103 (1976). [Google Scholar]

- 72.Miyazawa K., Kikuchi H., Mazuka R., “Unsupervised learning of vowels from continuous speech based on self-organized phoneme acquisition model” in INTERSPEECH ’10: Proceedings of the 11th Annual Conference of the International Speech Communication Association, T. Kobayashi, K. Hirose, S. Nakamura, Eds. (International Speech Communication Association, Baixas, France, 2010), pp. 2914–2917.

- 73.Miyazawa K., Miura H., Kikuchi H., Mazuka R., “The multi timescale phoneme acquisition model of the self-organizing based on the dynamic features” in INTERSPEECH ’11: Proceedings of the 12th Annual Conference of the International Speech Communication Association, P. Cosi, R. De Mori, G. Di Fabbrizio, R. Pieraccini, Eds. (International Speech Communication Association, Baixas, France, 2011), pp. 749–752.

- 74.Saffran J. R., Werker J. F., Werner L. A., “The infant’s auditory world: Hearing, speech, and the beginnings of language” in Handbook of Child Psychology: Cognition, Perception, and Language, D. Kuhn, R. S. Siegler, W. Damon, R. M. Lerner, Eds. (Wiley, New York, 2006), pp. 58–108. [Google Scholar]

- 75.Kuhl P. K., et al. , Cross-language analysis of phonetic units in language addressed to infants. Science 277, 684–686 (1997). [DOI] [PubMed] [Google Scholar]

- 76.Fernald A., Speech to infants as hyperspeech: Knowledge-driven processes in early word recognition. Phonetica 57, 242–254 (2000). [DOI] [PubMed] [Google Scholar]