Abstract

The free energy principle (FEP) in the neurosciences stipulates that all viable agents induce and minimize informational free energy in the brain to fit their environmental niche. In this study, we continue our effort to make the FEP a more physically principled formalism by implementing free energy minimization based on the principle of least action. We build a Bayesian mechanics (BM) by extending the formulation reported in an earlier publication (Kim in Neural Comput 30:2616–2659, 2018, 10.1162/neco_a_01115) from passive perception to active inference. The BM is a neural implementation of variational Bayes under the FEP in continuous time. The resulting BM takes the form of effective Hamilton’s equations of motion subject to a control signal arising from the brain’s prediction errors at the proprioceptive level. To demonstrate the utility of our approach, we adopt a simple agent-based model and present a concrete numerical illustration of the brain performing recognition dynamics by integrating the BM in neural phase space. Furthermore, we recapitulate the major theoretical architectures in the FEP by comparing our approach with common state-space formulations.

Keywords: Free energy principle, Bayesian mechanics, Continuous state-space model, Neural phase space, Motor signal, Limit cycles

Introduction

The free energy principle (FEP) in the neurosciences holds that all viable organisms cognize and behave in the natural world by calling forth probabilistic models in their neural system, the brain, in a manner that ensures their adaptive fitness (Friston 2010a). The neurobiological mechanism that endows an organism’s brain (the neural observer) with this ability is theoretically framed as an inequality that weighs two information-theoretic measures: surprisal and informational free energy (IFE) (see, for a review, Buckley et al. 2017). The surprisal measures the atypicality of an environmental niche, and the IFE is an upper bound on the surprisal. The inequality enables a cognitive agent to minimize the IFE, as a variational objective function, instead of the intractable surprisal, thereby minimizing the surprisal indirectly.1 The minimization corresponds to inferring the external causes of afferent sensory data, which are encoded as a probability density at the sensory interface, e.g., sensory organs. The brain neurophysically performs the Bayesian computation of minimizing the induced variational IFE; this is termed recognition dynamics (RD), which, under the Laplace approximation (Friston et al. 2007), emulates the predictive coding scheme of message processing or recognition (Rao and Ballard 1999; Bogacz 2017). Neuronal self-organization in vitro under the FEP has recently been studied at the level of single-neuron responses (Isomura et al. 2015; Isomura and Friston 2018). Owing to its power to explain the perception, learning, and behavior of living organisms within a single framework, the FEP has been suggested as a promising unified biological principle (Friston 2010a, 2013; Colombo and Wright 2018; Ramstead et al. 2018).

The neurophysical mechanisms of abductive inference in the brain are yet to be understood; therefore, researchers mostly rely on information-theoretic concepts (Elfwing et al. 2016; Ramstead et al. 2019; Kuzma 2019; Shimazaki 2019; Kiefer 2020; Sanders et al. 2020). The FEP facilitates dynamic causal models in the brain’s generalized-state space (Friston 2008b; Friston et al. 2010b), which pose a mixed discrete–continuous Bayesian filtering problem (Jazwinski 1970; Balaji and Friston 2011). In this work, we consider that the brain confronts a continuous influx of stochastic sensations and conducts the Bayesian inversion of inferring external causes in continuous state representations. Biological phenomena are naturally continuous spatiotemporal events; accordingly, we suggest that continuous-state approaches to cognition and behavior are better suited than discrete-state descriptions for studying perceptual computation in the brain.

Recently, we carefully evaluated the FEP while clarifying the technical assumptions that underlie its continuous state-space formulation (Buckley et al. 2017). A full account of the discrete-state formulation, complementary to ours, can be found in (Da Costa et al. 2020a). In a subsequent paper (Kim 2018), we reported a different variational scheme that the Bayesian brain may utilize in conducting inference. In particular, by postulating that “surprisal” plays the role of a Lagrangian in theoretical mechanics (Landau and Lifshitz 1976; Sengupta et al. 2016), we worked out a plausible computational implementation of the FEP utilizing the principle of least action. We argued that, although the FEP relies on Bayesian abductive computation, it must be formulated in conformity with the physical principles and laws governing the matter that constitutes the brain. To this end, we proposed that any process theory of the FEP ought to be based on the full implication of the inequality (Kim 2018)

$$\int dt\,\{-\ln p(\varphi)\} \le \int dt\, F[q(\vartheta), p(\vartheta,\varphi)] \qquad (1)$$

where $\varphi$ and $\vartheta$ collectively denote the sensory inputs and environmental hidden states, respectively. The integrand on the left-hand side (LHS) of the preceding equation is the aforementioned surprisal, which measures the “self-information” contained in the sensory density (Cover and Thomas 2006), and the integrand on the right-hand side (RHS) is the variational IFE, defined as

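$$F[q(\vartheta), p(\vartheta,\varphi)] \equiv \int d\vartheta\, q(\vartheta)\,\ln\frac{q(\vartheta)}{p(\vartheta,\varphi)} \qquad (2)$$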

which encapsulates the recognition (R-) density $q(\vartheta)$ and the generative (G-) density $p(\vartheta,\varphi)$ (Buckley et al. 2017). While the G-density represents the brain’s belief (or assumption) about sensory generation and hidden environmental dynamics, the R-density is the brain’s current estimate of the environmental cause of the sensory perturbation. Together, the G- and R-densities induce the variational IFE when receptors at the brain–environment interface are excited by sensory perturbations.
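The bound behind Eqs. (1) and (2) can be checked numerically at a single instant; integrating the pointwise inequality over time gives Eq. (1). The sketch below assumes a hypothetical one-dimensional Gaussian model (prior $p(\vartheta) = N(m_0, s_0)$, likelihood $p(\varphi|\vartheta) = N(\vartheta, s_1)$) and a Gaussian R-density $q(\vartheta) = N(m, s)$, for which F has a closed form. It illustrates that F never falls below the surprisal $-\ln p(\varphi)$ and touches it exactly when q equals the true posterior.

```python
import math

def gaussian_free_energy(m, s, phi, m0, s0, s1):
    """F[q, p] for q(theta) = N(m, s) under the hypothetical toy model
    p(theta) = N(m0, s0), p(phi | theta) = N(theta, s1); all s are variances."""
    neg_entropy = -0.5 * math.log(2 * math.pi * math.e * s)                          # E_q[ln q]
    prior_term = 0.5 * math.log(2 * math.pi * s0) + ((m - m0) ** 2 + s) / (2 * s0)  # E_q[-ln p(theta)]
    like_term = 0.5 * math.log(2 * math.pi * s1) + ((phi - m) ** 2 + s) / (2 * s1)  # E_q[-ln p(phi|theta)]
    return neg_entropy + prior_term + like_term

def surprisal(phi, m0, s0, s1):
    """-ln p(phi) for the marginal p(phi) = N(m0, s0 + s1)."""
    v = s0 + s1
    return 0.5 * math.log(2 * math.pi * v) + (phi - m0) ** 2 / (2 * v)

phi, m0, s0, s1 = 1.3, 0.0, 2.0, 0.5      # hypothetical numbers
s_post = 1.0 / (1.0 / s0 + 1.0 / s1)      # exact Gaussian posterior variance
m_post = s_post * (m0 / s0 + phi / s1)    # exact Gaussian posterior mean

print(surprisal(phi, m0, s0, s1))
print(gaussian_free_energy(m_post, s_post, phi, m0, s0, s1))  # bound is tight here
print(gaussian_free_energy(0.0, 1.0, phi, m0, s0, s1))        # strictly larger
```

The gap between F and the surprisal is the Kullback–Leibler divergence between q and the exact posterior, which is why the bound is tight only at the posterior.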

According to Eq. (1), the FEP articulates that the brain minimizes the upper bound of the sensory uncertainty, which is a long-term (time-integrated) surprisal. We identify this bound as an informational action (IA) within the scope of the mechanical principle of least action (Landau and Lifshitz 1976). Then, complying with the revised FEP, we formulate the Bayesian mechanics (BM) that executes the RD in the brain. The RD neurophysically performs the computation that minimizes the IA when the neural observer encounters continuous streams of sensory data. The advantage of our formulation is that the brain and environmental states are specified using only bare continuous variables and their first-order derivatives (velocities or, equivalently, momenta). The momentum variables represent prediction errors, which, in the language of predictive coding, quantify the discrepancy between an observed input and the cognitive agent’s top-down belief about it (Huang and Rao 2011; de Gardelle et al. 2013; Kozunov et al. 2020).

The goal of this work is to extend our previous study to include the agent’s motor control, which acts on the environment to alter sensory inputs.2 Previously, by utilizing the principle of least action, we focused on formulating perceptual dynamics for the passive inference of static sensory inputs (Kim 2018), without incorporating motor control for the active perception of nonstationary sensory streams. Here, we apply our approach to the problem of active inference derived from the FEP (Friston et al. 2009, 2010c, 2011a), which proposes that organisms can minimize the IFE by altering sensory observations when the outcome of perceptual inference alone is not in accordance with the internal representation of the environment (Buckley et al. 2017). Living systems are endowed with the ability to adjust their sensations via proprioceptive feedback, which is attributed to an inherited trait of all motile animals embodied in the reflex pathways (Tuthill and Azim 2018). In this respect, motor control is considered an inference of the causes of motor signals encoded as prediction errors at proprioceptors, and motor inference is realized at the spinal level by classical reflex arcs (Friston 2011b; Adams et al. 2013). In our formulation, the obtained BM contains time-dependent driving terms that arise from sensory prediction errors and act as control (motor) signals. Accordingly, the BM bears resemblance to the deterministic control derived from Pontryagin’s maximum principle in optimal control theory (Todorov 2007). In this work, we consider the agent’s locomotion for action inference only implicitly: our formulation focuses on implementing the control signal (or commands) at the neural level of description, not at the behavioral level of biological locomotion. Accordingly, the additional minimization of the IA that would infer optimal control is not explicitly handled and is left for future work.
Other systematic approaches have sought to relate the active inference formalism to existing control theories (Baltieri and Buckley 2019; Millidge et al. 2020a; Da Costa et al. 2020c).

Technically, a variation in the IA yields the BM that computes the Bayesian inversion, given as a set of coupled differential equations for the brain variables and their conjugate momenta. The brain variables are ascribed to the brain’s representation of the environmental states, and their conjugate momenta are the combined prediction errors of the sensory data and of the rate of the state representations. The neural computation of active inference corresponds to integrating the BM, subject to nonautonomous motor signals. The solution yields optimal trajectories in the perceptual phase space that accumulate a minimum of IFE over continuous time, i.e., a stationary value of the IA. Our IA is identical to the “free action” defined in the Bayesian filtering schemes (Friston et al. 2008c). When the minimization of free action is formulated in the generalized filtering scheme (Friston et al. 2010b), the two approaches are akin in that both take the Laplace-encoded IFE as a mechanical Lagrangian. The difference lies in their mathematical realization of the minimization: our approach applies the principle of least action of classical mechanics, whereas generalized filtering uses gradient descent in the generalized state space, where generalized states are interpreted as a solenoidal gradient flow in a nonequilibrium steady state.

The remainder of this paper is organized as follows. In Sect. 2, we unravel some of the theoretical details in the formulation of the FEP. In Sect. 3, we formulate the BM of the sensorimotor cycle by utilizing the principle of least action. Then, in Sect. 4, we present a parsimonious model for a concrete manifestation of our formulation. Finally, in Sect. 5, we provide the concluding remarks.

Recapitulation of technical developments

Here, we recapitulate theoretical architectures in the continuous-state formulation under the FEP while discussing technical features that distinguish our formulation from prevailing state-space approaches.

Perspective on generalized states

The Bayesian filtering formalism of the FEP adopts the concept of the generalized motion of a dynamical object by defining its mechanical state beyond position and velocity (momentum). The generalized states of motion are generated by recursively taking time derivatives of the bare states. A point in the hyperspace defined by the generalized states is interpreted as an instantaneous trajectory. This notion provides an essential theoretical basis for ensuring an equilibrium solution of the RD in the conventional formulation of the FEP (Kim 2018); it is commonly employed by researchers (Parr and Friston 2018; Baltieri and Buckley 2019).
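To make the notion of generalized coordinates concrete, here is a minimal sketch assuming a hypothetical smooth signal $x(t) = \sin t$ whose derivatives are known in closed form. The vector $(x, x', x'', \dots)$, truncated at a chosen embedding order, encodes a local (instantaneous) trajectory through the Taylor series $x(t+h) \approx \sum_n x^{(n)}(t)\,h^n/n!$; the reconstruction error shrinks as the order grows.

```python
import math

def generalized_coordinates(derivs, t, order):
    """Return [x(t), x'(t), ..., x^(order)(t)] from a list of derivative functions."""
    return [derivs[n](t) for n in range(order + 1)]

def local_trajectory(gen_coords, h):
    """Taylor reconstruction x(t + h) ~ sum_n x^(n)(t) h^n / n! from generalized coordinates."""
    return sum(c * h ** n / math.factorial(n) for n, c in enumerate(gen_coords))

# Hypothetical signal x(t) = sin(t): its derivatives cycle sin, cos, -sin, -cos
derivs = [math.sin, math.cos, lambda t: -math.sin(t), lambda t: -math.cos(t)] * 2
t, h = 0.7, 0.3
for order in (1, 3, 6):                      # embedding (truncation) orders
    x_tilde = generalized_coordinates(derivs, t, order)
    print(order, abs(local_trajectory(x_tilde, h) - math.sin(t + h)))
```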

The motivation behind the generalized coordinates of motion is to describe noise correlations in the generative processes beyond white noise (the Wiener process), and thus to provide a more detailed specification of the dynamical states (Friston 2008a, b; Friston et al. 2010b). This formulation is underpinned by the mathematical theory of quasi-Markovian processes, which describes stochastic dynamics with colored-noise correlations by a finite-dimensional Markovian equation in a state space extended with auxiliary variables (Pavliotis 2014). State-space augmentation in terms of generalized coordinates may be considered a special realization of the Pavliotis formalism. The state-extension procedure adopts specific approximations, such as the local linearization procedure developed in nonlinear time-series analysis (Ozaki 1992).
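A minimal sketch of state-space augmentation in the quasi-Markovian spirit, assuming a hypothetical scalar state x driven by Ornstein–Uhlenbeck (exponentially correlated) noise $\eta$. Promoting $\eta$ to an auxiliary variable makes the pair $(x, \eta)$ Markovian and driven only by white noise; note that, unlike a generalized coordinate, $\eta$ is not a time derivative of x. The parameters tau, D, and the linear drift are illustrative assumptions.

```python
import numpy as np

def simulate_augmented(tau=1.0, D=1.0, dt=1e-2, n_steps=200_000, seed=0):
    """Euler-Maruyama integration of the augmented (Markovian) system
        dx   = (-x + eta) dt                        # x feels colored noise eta
        deta = -(eta / tau) dt + (sqrt(2 D) / tau) dW
    Only the pair (x, eta) is Markovian; x on its own is not."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n_steps)
    eta = np.zeros(n_steps)
    dW = rng.normal(0.0, np.sqrt(dt), size=n_steps - 1)
    for k in range(n_steps - 1):
        x[k + 1] = x[k] + (-x[k] + eta[k]) * dt
        eta[k + 1] = eta[k] - (eta[k] / tau) * dt + (np.sqrt(2.0 * D) / tau) * dW[k]
    return x, eta

def autocorr(y, lag):
    """Sample autocorrelation of a 1-D series at an integer lag."""
    y = y - y.mean()
    return np.dot(y[:-lag], y[lag:]) / np.dot(y, y)

x, eta = simulate_augmented()
# The auxiliary noise has correlation time tau: corr at lag tau / dt is close to exp(-1)
print(autocorr(eta, 100), np.exp(-1.0))
```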

From the physics perspective, higher-order states possess a different dynamical status from Newtonian mechanical states, which are specified only by position (bare order) and velocity (first order). A change in the Newtonian states is caused by a force that specifies the acceleration (second order) (Landau and Lifshitz 1976). Although no “generalized forces” cause the jerk (third order), snap (fourth order), and so on, the jerk can be measured phenomenologically by observing a change in acceleration. This relegates all higher-order states to the kinematic level. Another open question is whether update equations in terms of generalized coordinates are equivalent to Pavliotis’ quasi-Markovian description: the auxiliary variables in Pavliotis’ analysis are not generated by recursive temporal derivatives of a bare state. The generalized phase space considered in (Kerr and Graham 2000) is likewise spanned by canonical displacement and momentum variables. A further in-depth analysis is required.

Our formulation does not employ generalized states; instead, it follows the normative rules for specifying generative models (Kim 2018). The derived BM performs the brain’s Bayesian inference in terms of only the bare brain variable and its conjugate momentum in phase space, not in an extended state space. Accordingly, our formulation is restricted to white noise in the generative processes; however, it provides a natural approach to determining the equilibrium solutions of the BM (see Sect. 4). For general brain models described by many brain variables, the brain’s BM can be set up in a multidimensional phase space, which is distinct from the state-space augmentation in the generalized-coordinate formulation (see Sect. 2.3).

Continuous state implementation of recognition dynamics (RD)

The conventional FEP employs the gradient-descent minimization of the variational IFE by the brain’s internal states. To incorporate the time-varying feature of sensory inputs, the method distinguishes the path of a mode and the mode of a path in the generalized state space (Friston 2008b; Friston et al. 2008c, 2010b). This theoretical construct intuitively considers the nonequilibrium dynamics of generalized brain states as drift–diffusion flows that locally conserve the ensemble density in the hyperspace of the generalized states (Friston et al. 2012b; Friston 2019).

Mathematically, the gradient-descent formulation rests on the general idea of fast and efficient convergence, and it can be refined by incorporating the Riemannian metric of information geometry (Amari 1998; Surace et al. 2020); this idea has been applied to the FEP (Sengupta and Friston 2017; Da Costa et al. 2020b).

In our proposed formulation, we replace the gradient-descent scheme with the standard mechanical formulation of the least action principle (Kim 2018). A disadvantage is that we incorporate only Gaussian white noise in the generative processes of the sensory data and environmental dynamics (see Sect. 2.3). The resulting RD, described by effective Hamiltonian mechanics, yields optimal trajectories rather than single fixed points in the canonical state (phase) space; these trajectories provide an estimate of the minimum sensory uncertainty, i.e., the average surprisal over a finite temporal horizon. The phase space comprises the positions (predictions) and momenta (prediction errors) of the brain’s representations of the causal environment.

Our implementation of the minimization procedure is an alternative to the gradient descent algorithms in the FEP. A crucial difference between the two approaches is that while the gradient descent scheme searches for an instantaneous trajectory representing a local minimum on the IFE landscape in the multidimensional generalized state space, our theory determines an optimal trajectory minimizing the continuous-time integral of the IFE in two-dimensional phase space for a single variable problem.

Treatment of noise correlations

The FEP requires the brain’s internal model of the G-density, $p(\vartheta,\varphi) = p(\varphi|\vartheta)\,p(\vartheta)$, encapsulating the likelihood $p(\varphi|\vartheta)$ and the prior $p(\vartheta)$. The likelihood density is determined by the random fluctuations in the expected sensory-data generation, and the prior density is determined by those in the believed environmental dynamics. The brain encounters sensory signals on timescales that are often shorter than the correlation time of the random processes (Friston 2008a); accordingly, the noises generally constitute non-Markovian stochastic processes with intrinsic temporal correlations that go beyond ideal white-noise stochasticity. Conventional formulations (Friston 2008b; Friston et al. 2010b) assume that colored noises are analytic (i.e., differentiable), which allows correlations between distinct dynamical orders of the continuous states. In practice, to obtain closed dynamics for a finite number of variables, the recursive equations of motion for the generalized states must be truncated at an arbitrary embedding order.

Our formulation considers the BM in the brain within the standard Newtonian (Hamiltonian) construct; the drawback is that our theory does not explore the nature of temporal correlations in the assumed Gaussian noises of the generative processes. Accordingly, our generative models assume white noise, describing Wiener processes. Delta-correlated white noise is mathematically singular and must be smoothed to describe fast biophysical processes. There are approaches in stochastic theory that formulate non-Markovian processes with colored noise without resorting to generalized states of motion (van Kampen 1981; Fox 1987; Risken 1989; Moon and Wettlaufer 2014); these are not discussed here.

Instead, we discuss an approach that extends the phase-space dimension for white-noise processes. At the level of the Hodgkin–Huxley description of biophysical brain dynamics, the membrane potential, gating variables, and ionic concentrations are the relevant coarse-grained brain variables (Hille 2001). Thus, if one employs fluctuating Hodgkin–Huxley models with Gaussian white noise as neurophysically plausible generative models (Kim 2018), one can proceed with our Lagrangian (equivalently, Hamiltonian) approach to formulate the RD in an extended phase space. Such a state-space augmentation differs from, and is an alternative to, augmentation in terms of generalized coordinates of motion (see Sect. 2.1), although it accommodates only delta-correlated noise.

Lagrangian formulation of Bayesian mechanics (BM)

The (classical) “action” is defined as an ordinary time-integral of the Lagrangian for an arbitrary trajectory (Landau and Lifshitz 1976). Our formulation of the BM proposes the Laplace-encoded IFE—an upper bound on the sensory surprisal—as an informational Lagrangian and hypothesizes the time-integral of the IFE—an upper bound on the sensory Shannon uncertainty—as an informational action (IA). By applying the principle of least action, we minimize the IA to find a tight bound for the sensory uncertainty and derive the BM that performs the brain’s Bayesian inference of the external cause of sensory data. In turn, we cast the working BM in our formulation as effective Hamilton’s equations of motion in terms of position and momentum in phase space.

Meanwhile, the BM described in (Friston 2019) intuitively adopts the idea of Feynman’s path integral formulation (Feynman and Hibbs 2005). Feynman’s path integral formulation extends the idea of the classical action to quantum dynamics and provides a way to determine the “propagator,” which specifies the transition probability between initial and final states. The propagator is defined as a functional integral of the exponentiated action, summing over all possible trajectories that connect the initial and final states. The description in (Friston 2019) identifies the propagator with the probability density over neural states, thereby making the connection to the Bayesian FEP. In this manner, the surprisal may be identified as the negative log of the steady-state density in nonequilibrium ensemble dynamics (Parr et al. 2020), which is governed by a Fokker–Planck equation. The generalized Bayesian filtering scheme (Friston et al. 2008c, 2010b) provides a continuous-state formulation of minimizing the surprisal, and it delivers the BM in terms of the generalized coordinates of motion using the concept of gradient flow.

In technical detail, the Lagrangian presented in (Friston 2019), which is the integrand of the classical action, contains two terms: a quadratic term arising from the state equation and a term involving the state derivative (the divergence, in three dimensions) of the force that appears in the Langevin-type state equation. The former term is included in our Lagrangian, together with an additional quadratic term from the observation equation. The latter, which is known to arise from the Stratonovich convention (Seifert 2012; Cugliandolo and Lecomte 2017), is absent from our Lagrangian.
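The contrast can be made explicit with the standard Onsager–Machlup expressions (textbook results, not part of the source formulation) for a one-dimensional Langevin equation $\dot x = f(x) + \xi(t)$ with additive noise $\langle \xi(t)\xi(t') \rangle = 2D\,\delta(t-t')$. The path-integral Lagrangian depends on the time-discretization convention:

```latex
% Pre-point (Ito) discretization: the Jacobian of the noise-to-path map is 1
L_{\text{Ito}}(x, \dot x) = \frac{\big(\dot x - f(x)\big)^2}{4D}

% Mid-point (Stratonovich) discretization: the Jacobian adds a divergence-type term
L_{\text{Strat}}(x, \dot x) = \frac{\big(\dot x - f(x)\big)^2}{4D} + \frac{1}{2}\,\frac{\partial f(x)}{\partial x}
```

Assuming that, in the uncorrelated case, the informational Lagrangian reduces to $\frac{1}{2\sigma_w}(\dot\mu - f(\mu))^2 + \frac{1}{2\sigma_z}(\varphi - g(\mu))^2$, its state term coincides with the Itô-type expression when $\sigma_w = 2D$, while the $\frac{1}{2}\partial f/\partial x$ term, the one-dimensional analog of the divergence term, has no counterpart.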

Closure of the sensorimotor loop in active inference

The conventional FEP facilitates gradient descent minimization for the mechanistic implementation of active inference, which makes the motor-control dynamics available in the brain’s RD (Friston et al. 2009, 2010c, 2011a). The gradient-descent scheme is mathematically expressed as

$$\dot a = -\frac{\partial F}{\partial a} \qquad (3)$$

where a denotes an agent’s motor variable and F represents the Laplace-encoded IFE, expressed in terms of the biophysical brain variables (Buckley et al. 2017). An agent’s capability of subjecting sensory inputs to motor control is considered a functional dependence in the environmental generative processes (Friston et al. 2009). According to Eq. (3), an agent performs the minimization by effectuating the sensory data and obtains the best result for motor inference when $\partial F/\partial a = 0$, where the condition $\partial^2 F/\partial a^2 > 0$ must be met. Because $\partial F/\partial a$ produces terms proportional to the sensory prediction errors, fulfilling motor inference is equivalent to suppressing proprioceptive errors. Thus, motor control attempts to minimize prediction errors, while prediction errors convey motor signals for the control dynamics; this forms a sensorimotor loop. Some subtle questions arise here regarding the dynamical status of the motor-control variable a. Eq. (3) evidently treats a as a dynamical state; however, the corresponding equation of motion governing its dynamics is not given in the environmental processes. Instead, a mechanism of motor control that vicariously alters the sensory-data generation is presumed (Friston et al. 2009). In addition, motor variables are represented as the active states of the brain, e.g., motor-neuron activities in the ventral horn of the spinal cord (Friston et al. 2010c); however, they are treated differently from other hidden-state representations. Recall that the internal state representations are expressed as generalized states, whereas the active states are not.
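A minimal sketch of the gradient-descent scheme of Eq. (3), $\dot a = -\partial F/\partial a$, assuming a hypothetical proprioceptive mapping $\varphi(a) = a$ and a fixed top-down prediction $g(\mu) = \mu$, so that only the sensory term of F depends on a: $F = (a - \mu)^2/(2\sigma_z)$. Here $\partial F/\partial a$ is proportional to the proprioceptive prediction error, and integrating the flow drives the action until that error vanishes; the curvature $1/\sigma_z > 0$ guarantees a minimum.

```python
def free_energy(a, mu, sigma_z):
    """Sensory part of the IFE, assuming the hypothetical mapping phi(a) = a and prediction g(mu) = mu."""
    return (a - mu) ** 2 / (2.0 * sigma_z)

def dF_da(a, mu, sigma_z):
    """dF/da = (dphi/da) * (phi - g(mu)) / sigma_z with dphi/da = 1:
    proportional to the proprioceptive prediction error."""
    return (a - mu) / sigma_z

def descend(a0, mu, sigma_z=0.5, dt=0.01, n_steps=2000):
    """Forward-Euler integration of da/dt = -dF/da."""
    a = a0
    for _ in range(n_steps):
        a -= dt * dF_da(a, mu, sigma_z)
    return a

mu = 1.5                          # hypothetical expected proprioceptive state
a_final = descend(a0=-2.0, mu=mu)
print(a_final, free_energy(a_final, mu, 0.5))
```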

In the following, we pose a semi-active inference problem that does not explicitly address optimal motor control (motor inference) in the RD but encompasses the motor-control signal as a time-dependent driving term arising from nonstationary prediction errors in the sensory-data cause.

Closed-loop dynamics of perception and motor control

The brain is not divided into sensory and motor systems. Instead, it is one inference machine that performs the closed-loop dynamics of perception and motor control. Here, we develop a framework of active inference within the scope of the least action principle by employing the Laplace-encoded IFE as an informational Lagrangian.

The environmental states undergo deterministic or stochastic dynamics, obeying physical laws and principles. We do not explicitly consider their equations of motion here because they are hidden from the brain’s perspective; i.e., the brain, as a neural observer, has no direct epistemic access to them. Similarly, sensory data are physically generated by an externally hidden process at a sensory receptor, which constitutes the brain–environment interface. However, to emphasize the effect of an agent’s motor control a on sensory generation, we model the generative process of sensory data as an instantaneous mapping

$$\varphi = h(\vartheta, a) + z_h \qquad (4)$$

where $h(\vartheta, a)$ denotes the linear or nonlinear map of input generation and $z_h$ represents the noise involved. Note that the agent’s motor control a is explicitly included in the generative map. However, the neural observer is not aware of how the sensory streams are effectuated by the agent’s motion in the environment (Friston et al. 2010c).

The FEP circumvents this epistemic difficulty by hypothesizing a formal homology between the external physical processes and the corresponding internal models foreseen by the neural observer (Friston et al. 2010c). Upon receiving the sensory-data influx, the brain launches an R-density to infer the external causes via variational Bayes. The R-density is the probabilistic representation of the environment, whose sufficient statistics are assumed to be encoded by neurophysical brain variables, e.g., neuronal activity or synaptic efficacy. When a fixed-form Gaussian density is assumed for the R-density, the so-called Laplace approximation, only the first-order sufficient statistic, i.e., the mean $\mu$, is needed to specify the IFE effectively (Buckley et al. 2017). The brain continually updates the R-density using its internal dynamics, described here by a Langevin-type equation

$$\dot\mu = f(\mu) + w \qquad (5)$$

where $f(\mu)$ represents the brain’s belief regarding the external dynamics, encoded by a neurophysical driving mechanism of the brain variables $\mu$, and w is random noise. The sensory perturbations at the receptors are predicted by the neural observer via the instantaneous mapping

$$\varphi = g(\mu) + z \qquad (6)$$

where the belief $g(\mu)$ is encoded by the internal variables $\mu$, and z is the associated noise. Our sensory generative model provides a mechanism for sampling sensory data using the brain’s active states a, which represent the external motor control embedded in Eq. (4). Note that Eq. (4) describes the environmental process that generates the sensory inputs $\varphi$, whereas its homolog, Eq. (6), prescribes the brain’s prior belief about $\varphi$, which can be altered by the active states a. The instantaneous state of the brain $\mu$, specified by Eq. (5), selects a particular R-density as the brain seeks the true posterior (the goal of perceptual inference). Motor control fulfills the prior expectations by modifying sensory generation via active-state effectuation at the proprioceptors.
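The brain’s internal generative model can be simulated forward to see what it “expects.” The sketch below assumes Eqs. (5) and (6) read $\dot\mu = f(\mu) + w$ and $\varphi = g(\mu) + z$, with white noise of intensity $\sigma_w$ (Euler–Maruyama) and instantaneous Gaussian readout noise of variance $\sigma_z$. The linear f and g and all numbers are hypothetical, and w and z are drawn independently here, i.e., the correlation introduced below is omitted.

```python
import numpy as np

def simulate_brain_model(f, g, sigma_w, sigma_z, mu0=0.0, dt=1e-2, n_steps=200_000, seed=1):
    """Euler-Maruyama for d(mu) = f(mu) dt + sqrt(sigma_w) dW (white noise w),
    followed by the instantaneous readout phi = g(mu) + z, z ~ N(0, sigma_z)."""
    rng = np.random.default_rng(seed)
    mu = np.empty(n_steps)
    mu[0] = mu0
    dW = rng.normal(0.0, np.sqrt(dt), size=n_steps - 1)
    for k in range(n_steps - 1):
        mu[k + 1] = mu[k] + f(mu[k]) * dt + np.sqrt(sigma_w) * dW[k]
    phi = g(mu) + rng.normal(0.0, np.sqrt(sigma_z), size=n_steps)
    return mu, phi

GAMMA, MU_PRIOR, THETA = 1.0, 0.5, 2.0   # hypothetical parameters
mu, phi = simulate_brain_model(
    f=lambda m: -GAMMA * (m - MU_PRIOR),  # relaxation toward a prior expectation
    g=lambda m: THETA * m,                # linear sensory prediction
    sigma_w=0.5,
    sigma_z=0.1,
)
# Linear model: stationary mean MU_PRIOR, variance sigma_w / (2 GAMMA) = 0.25
print(mu.mean(), mu.var(), (phi - THETA * mu).var())
```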

Through the Laplace approximation (Buckley et al. 2017), the G-density is encoded in the brain as $p(\varphi,\mu)$, where the sensory stimuli $\varphi$ are predicted by the neural observer via Eq. (6). Here, we argue that although the physical sensory-recording process is conditionally independent of the brain’s internal dynamics, the brain states must be neurophysically involved in computing the sensory prediction. In other words, from the physics perspective, the sensory perturbation at the interface is a source that excites the neuronal activity $\mu$. This observation renders Eqs. (5) and (6) dynamically coupled rather than conditionally independent. We incorporate this dependence into our formulation by introducing a statistical coupling, via the covariance, between the likelihood and the prior that together furnish the Laplace-encoded G-density.

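For simplicity, we consider stationary Gaussian processes for the bivariate variable Z, written as a column vector

$$Z = \begin{pmatrix} Z_1 \\ Z_2 \end{pmatrix},$$

where $Z_1 \equiv \dot\mu - f(\mu)$ and $Z_2 \equiv \varphi - g(\mu)$, and we specify the Laplace-encoded G-density as

$$p(\varphi,\mu) = \frac{1}{\sqrt{(2\pi)^2|\Sigma|}}\exp\left[-\frac{1}{2}Z^{\mathsf T}\Sigma^{-1}Z\right] \qquad (7)$$

where $|\Sigma|$ and $\Sigma^{-1}$ are the determinant and the inverse of the covariance matrix $\Sigma$, respectively, and $Z^{\mathsf T}$ is the transpose of Z. The covariance matrix is given by

$$\Sigma = \begin{pmatrix} \sigma_w & \sigma_{wz} \\ \sigma_{wz} & \sigma_z \end{pmatrix},$$

where the stationary variances ($\sigma_i$, $i = w, z$) and the transient covariance $\sigma_{wz}$ are defined, respectively, as

$$\sigma_w \equiv \langle w^2 \rangle, \qquad \sigma_z \equiv \langle z^2 \rangle, \qquad \sigma_{wz}(t) \equiv \langle w(t)\,z(t) \rangle.$$

With the prescribed internal model of the brain for the G-density, the Laplace-encoded IFE can be specified as $F = -\ln p(\varphi,\mu)$ (for details, see Buckley et al. 2017). It then follows that

$$F = \frac{1}{2(1-\rho^2)}\left[\frac{Z_1^2}{\sigma_w} + \frac{Z_2^2}{\sigma_z} - \frac{2\rho\,Z_1 Z_2}{\sqrt{\sigma_w\sigma_z}}\right] + \frac{1}{2}\ln\left[(2\pi)^2\sigma_w\sigma_z(1-\rho^2)\right] \qquad (8)$$

where $\rho$ denotes the correlation function, defined as the normalized covariance

$$\rho(t) \equiv \frac{\sigma_{wz}(t)}{\sqrt{\sigma_w\sigma_z}} \qquad (9)$$

Furthermore, we introduce the notation

$$m_w \equiv \frac{1}{\sigma_w(1-\rho^2)}, \qquad m_z \equiv \frac{1}{\sigma_z(1-\rho^2)} \qquad (10)$$

which are the precisions of the conventional FEP, rescaled by the correlation.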
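As a numerical sanity check, the sketch below assumes Eqs. (7)–(10) take the standard bivariate-Gaussian form with $Z = (\dot\mu - f(\mu),\ \varphi - g(\mu))^{\mathsf T}$, covariance entries $\sigma_w$, $\sigma_z$, $\sigma_{wz}$, $\rho = \sigma_{wz}/\sqrt{\sigma_w\sigma_z}$, and $m_i = 1/[\sigma_i(1-\rho^2)]$. It compares $-\ln N(Z; 0, \Sigma)$ computed in matrix form with the expanded expression in terms of $\rho$, $m_w$, and $m_z$; the numerical values are hypothetical.

```python
import numpy as np

def neg_log_gaussian(Z, Sigma):
    """-ln N(Z; 0, Sigma) evaluated directly in matrix form."""
    _, logdet = np.linalg.slogdet(Sigma)
    quad = Z @ np.linalg.solve(Sigma, Z)
    return 0.5 * quad + 0.5 * (len(Z) * np.log(2.0 * np.pi) + logdet)

def expanded_ife(Z1, Z2, sigma_w, sigma_z, sigma_wz):
    """Same quantity written with rho and the correlation-scaled precisions m_w, m_z."""
    rho = sigma_wz / np.sqrt(sigma_w * sigma_z)
    m_w = 1.0 / (sigma_w * (1.0 - rho ** 2))
    m_z = 1.0 / (sigma_z * (1.0 - rho ** 2))
    quad = 0.5 * m_w * Z1 ** 2 + 0.5 * m_z * Z2 ** 2 - rho * np.sqrt(m_w * m_z) * Z1 * Z2
    log_norm = 0.5 * np.log((2.0 * np.pi) ** 2 * sigma_w * sigma_z * (1.0 - rho ** 2))
    return quad + log_norm

sigma_w, sigma_z, sigma_wz = 0.8, 0.3, 0.2          # hypothetical values with |rho| < 1
Sigma = np.array([[sigma_w, sigma_wz], [sigma_wz, sigma_z]])
Z = np.array([0.4, -1.1])                           # (mu_dot - f(mu), phi - g(mu))
print(neg_log_gaussian(Z, Sigma), expanded_ife(Z[0], Z[1], sigma_w, sigma_z, sigma_wz))
```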

Next, as proposed in (Kim 2018), we identify F as an informational Lagrangian L within the scope of the principle of least action, and we define

$$L(\mu,\dot\mu;\varphi) \equiv \frac{m_w}{2}\big(\dot\mu - f(\mu)\big)^2 + \frac{m_z}{2}\big(\varphi - g(\mu)\big)^2 - \rho\sqrt{m_w m_z}\,\big(\dot\mu - f(\mu)\big)\big(\varphi - g(\mu)\big) \qquad (11)$$

which is viewed as a function of $\mu$ and $\dot\mu$ for given sensory inputs $\varphi$, i.e., $L = L(\mu,\dot\mu;\varphi)$. Note that we dropped the last term in Eq. (8) when translating F into L because it can be expressed as a total time-derivative term, which does not affect the resulting equations of motion (Landau and Lifshitz 1976). Then, the theoretical action S that serves as the variational objective functional under the revised FEP is set up as

$$S[\mu] = \int_{t_i}^{t_f} dt\, L(\mu,\dot\mu;\varphi) \qquad (12)$$

The Euler–Lagrange equation of motion, which determines the trajectory $\mu(t)$ for a given initial condition $\mu(t_i)$, is derived by minimizing the action, $\delta S = 0$.
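The remark that dropping a purely time-dependent term leaves the equations of motion unchanged can be checked on a discretized action. The sketch assumes the quadratic Lagrangian $L = \frac{m_w}{2}(\dot\mu - f)^2 + \frac{m_z}{2}(\varphi - g)^2 - \rho\sqrt{m_w m_z}(\dot\mu - f)(\varphi - g)$ with hypothetical f, g, constants, sensory stream, and trial paths. Adding a term $c(t)$ that depends on time only shifts the action of every path by the same amount, $\int c(t)\,dt$, so the comparison between paths, and hence the stationary path, is unaffected.

```python
import numpy as np

def lagrangian(mu, mu_dot, phi, f, g, m_w, m_z, rho):
    """Assumed quadratic informational Lagrangian with sensory-state correlation rho."""
    e_state = mu_dot - f(mu)     # error in the predicted rate of change
    e_sens = phi - g(mu)         # sensory prediction error
    return (0.5 * m_w * e_state ** 2 + 0.5 * m_z * e_sens ** 2
            - rho * np.sqrt(m_w * m_z) * e_state * e_sens)

def action(t, mu, phi, time_only_term=None, **params):
    """Discretized action S = int L dt (finite-difference velocity, trapezoidal rule)."""
    mu_dot = np.gradient(mu, t)
    L = lagrangian(mu, mu_dot, phi, **params)
    if time_only_term is not None:
        L = L + time_only_term(t)    # contribution that does not depend on the path
    return np.sum(0.5 * (L[1:] + L[:-1]) * np.diff(t))

def c_of_t(s):
    """Hypothetical term that depends on time only."""
    return 0.5 * np.log(2.0 + np.sin(s))

t = np.linspace(0.0, 5.0, 2001)
phi = np.cos(t)                                    # hypothetical sensory stream
params = dict(f=lambda m: -m, g=lambda m: m, m_w=2.0, m_z=1.0, rho=0.3)
path_a, path_b = np.cos(t), np.exp(-t)             # two trial trajectories

gap_plain = action(t, path_a, phi, **params) - action(t, path_b, phi, **params)
gap_extra = action(t, path_a, phi, c_of_t, **params) - action(t, path_b, phi, c_of_t, **params)
print(gap_plain, gap_extra)   # equal up to rounding: the ranking of paths is unchanged
```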

Equivalently, the equations of motion can be considered in terms of the position $\mu$ and its conjugate momentum p, instead of the position $\mu$ and velocity $\dot\mu$. We use the terms position and velocity as metaphors for the dynamical variables $\mu$ and $\dot\mu$, respectively. For this, we convert the Lagrangian L into the Hamiltonian H by performing a Legendre transformation

$$H = p\,\dot\mu - L,$$

where p denotes the canonical momentum conjugate to $\mu$, which is calculated from L as

$$p \equiv \frac{\partial L}{\partial\dot\mu} = m_w\big(\dot\mu - f(\mu)\big) - \rho\sqrt{m_w m_z}\,\big(\varphi - g(\mu)\big) \qquad (13)$$

After some manipulation, the functional form of H can be obtained explicitly as

$$H(\mu,p;\varphi) = T(\mu,p;\varphi) + V(\mu;\varphi) \qquad (14)$$

where we have indicated its dependence on the sensory influx $\varphi$. The terms T and V on the RHS are defined as

$$T(\mu,p;\varphi) \equiv \frac{p^2}{2m_w} + p\left[f(\mu) + \rho\sqrt{\frac{m_z}{m_w}}\,\big(\varphi - g(\mu)\big)\right] \qquad (15)$$

$$V(\mu;\varphi) \equiv -\frac{m_z}{2}\,(1-\rho^2)\,\big(\varphi - g(\mu)\big)^2 \qquad (16)$$

Here, T and V represent the kinetic and potential energies, respectively, which define the informational Hamiltonian of the brain. Similarly, $m_w$ and $m_z$ represent neural inertial masses, again as a metaphor. Unlike in standard mechanics, the second term of the kinetic energy depends on both the linear momentum and the position.
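The Legendre transformation can be verified numerically. The sketch below assumes L has the quadratic form $\frac{m_w}{2}(\dot\mu - f)^2 + \frac{m_z}{2}(\varphi - g)^2 - \rho\sqrt{m_w m_z}(\dot\mu - f)(\varphi - g)$ with hypothetical $f(\mu) = -\mu$, $g(\mu) = 2\mu$, and constants $m_w$, $m_z$, $\rho$. It checks that the analytic momentum matches a finite-difference $\partial L/\partial\dot\mu$ and that $p\dot\mu - L$ equals $T + V$ with $T = p^2/(2m_w) + p[f + \rho\sqrt{m_z/m_w}(\varphi - g)]$ and $V = -\frac{m_z}{2}(1-\rho^2)(\varphi - g)^2$.

```python
import numpy as np

M_W, M_Z, RHO = 1.5, 0.7, 0.4     # hypothetical constants

def f(mu):
    return -mu                     # hypothetical state dynamics

def g(mu):
    return 2.0 * mu                # hypothetical sensory mapping

def lagrangian(mu, mu_dot, phi):
    e_x, e_s = mu_dot - f(mu), phi - g(mu)
    return 0.5 * M_W * e_x ** 2 + 0.5 * M_Z * e_s ** 2 - RHO * np.sqrt(M_W * M_Z) * e_x * e_s

def momentum(mu, mu_dot, phi):
    """Analytic p = dL/d(mu_dot)."""
    return M_W * (mu_dot - f(mu)) - RHO * np.sqrt(M_W * M_Z) * (phi - g(mu))

def hamiltonian_T_plus_V(mu, p, phi):
    e_s = phi - g(mu)
    T = p ** 2 / (2.0 * M_W) + p * (f(mu) + RHO * np.sqrt(M_Z / M_W) * e_s)
    V = -0.5 * M_Z * (1.0 - RHO ** 2) * e_s ** 2
    return T + V

mu, mu_dot, phi = 0.3, -0.8, 1.1
p = momentum(mu, mu_dot, phi)
h = 1e-6
p_fd = (lagrangian(mu, mu_dot + h, phi) - lagrangian(mu, mu_dot - h, phi)) / (2 * h)
H_legendre = p * mu_dot - lagrangian(mu, mu_dot, phi)
print(p, p_fd)
print(H_legendre, hamiltonian_T_plus_V(mu, p, phi))
```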

We generate Hamilton’s equations of motion, which are equivalent to the Lagrange equation, using

$$\dot\mu = \frac{\partial H}{\partial p}, \qquad \dot p = -\frac{\partial H}{\partial \mu}.$$

As described below, Hamilton’s equations are better suited for our purposes because they specify the RD as coupled first-order differential equations of the brain state and its conjugate momentum p. In contrast, the Lagrange equation is a second-order differential equation of the state variable (Landau and Lifshitz 1976). The results are

| 17 |

| 18 |

where parameters , , and have been, respectively, defined for notational convenience as

| 19 |

where denotes the tuning parameter to spawn stability. In Eqs. (17) and (18), we defined the notation as

| 20 |

It measures the discrepancy between the sensory input, which the agent can adjust through its motor control a, and the top-down neural prediction, weighted by the corresponding neural inertial mass.
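To see the phase-space picture at work, the sketch below integrates Hamilton’s equations generated by the Hamiltonian $H = p^2/(2m_w) + p[f(\mu) + \rho\sqrt{m_z/m_w}(\varphi - g(\mu))] - \frac{m_z}{2}(1-\rho^2)(\varphi - g(\mu))^2$ for a hypothetical linear model $f(\mu) = -\gamma\mu$, $g(\mu) = \theta\mu$. These equations are derived directly from that H with general f and g; they are not a transcription of the specific parameterization in Eqs. (17)–(20). With a constant input the system is autonomous and H is conserved along the trajectory; a time-varying $\varphi(t)$ enters as a nonautonomous driving term. Setting RHO = 0 removes the sensory–state coupling K.

```python
import numpy as np

# Hypothetical linear model and noise parameters (not the paper's Sect. 4 model)
GAMMA, THETA = 1.0, 1.0                 # f(mu) = -GAMMA * mu, g(mu) = THETA * mu
SIGMA_W, SIGMA_Z, RHO = 1.0, 0.5, 0.3
M_W = 1.0 / (SIGMA_W * (1.0 - RHO ** 2))
M_Z = 1.0 / (SIGMA_Z * (1.0 - RHO ** 2))
K = RHO * np.sqrt(M_Z / M_W)            # sensory-state coupling; vanishes when RHO = 0

def f(mu):
    return -GAMMA * mu

def df(mu):
    return -GAMMA

def g(mu):
    return THETA * mu

def dg(mu):
    return THETA

def hamiltonian(mu, p, phi):
    e = phi - g(mu)
    return p ** 2 / (2.0 * M_W) + p * (f(mu) + K * e) - 0.5 * M_Z * (1.0 - RHO ** 2) * e ** 2

def rhs(t, y, phi_of_t):
    """Hamilton's equations: mu_dot = dH/dp, p_dot = -dH/dmu."""
    mu, p = y
    e = phi_of_t(t) - g(mu)             # sensory prediction error drives the dynamics
    mu_dot = p / M_W + f(mu) + K * e
    p_dot = -p * df(mu) + K * p * dg(mu) - M_Z * (1.0 - RHO ** 2) * dg(mu) * e
    return np.array([mu_dot, p_dot])

def rk4(y0, phi_of_t, t_end=2.0, dt=1e-3):
    """Classical fourth-order Runge-Kutta integration of the phase-space flow."""
    n = int(round(t_end / dt))
    ys = np.empty((n + 1, 2))
    ys[0] = y0
    t = 0.0
    for k in range(n):
        y = ys[k]
        k1 = rhs(t, y, phi_of_t)
        k2 = rhs(t + dt / 2, y + dt / 2 * k1, phi_of_t)
        k3 = rhs(t + dt / 2, y + dt / 2 * k2, phi_of_t)
        k4 = rhs(t + dt, y + dt * k3, phi_of_t)
        ys[k + 1] = y + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
    return ys

y0 = np.array([0.0, 0.1])
static = rk4(y0, lambda t: 0.8)                       # stationary input: H is conserved
H_static = hamiltonian(static[:, 0], static[:, 1], 0.8)
print(H_static[0], H_static[-1])

driven = rk4(y0, lambda t: 0.8 * np.cos(2.0 * t))     # nonstationary input acts as a drive
t_grid = np.linspace(0.0, 2.0, driven.shape[0])
H_driven = hamiltonian(driven[:, 0], driven[:, 1], 0.8 * np.cos(2.0 * t_grid))
print(H_driven[0], H_driven[-1])                      # generally not conserved
```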

Below, we appraise the BM prescribed by Eqs. (17) and (18) and note some significant aspects:

(i) The derived RD suggests that both the brain activities $\mu$ and their conjugate momenta p are dynamic variables. The instantaneous values of $\mu$ and p correspond to a point in the brain’s perceptual phase space, and the continuous solution over a temporal horizon forms an optimal trajectory that minimizes the theoretical action, which represents sensory uncertainty.

(ii) The canonical momentum p defined in Eq. (13) can be rewritten as $p = m_w\{[\dot\mu - f(\mu)] - \rho\sqrt{\sigma_w/\sigma_z}\,[\varphi - g(\mu)]\}$. Accordingly, when the normalized correlation $\rho$ is nonvanishing, the momentum quantifies the combined errors in predicting the changing states and the sensory stimuli. Prediction errors propagate through the brain by obeying the coupled dynamics of Eqs. (17) and (18).

- (iii)

Equations (17) and (18) are the highlights of our formulation, which prescribe the brain’s BM of semi-actively inferring the external causes of sensory inputs under the revised FEP. Note that the motor variable a is not explicitly included in our derived RD; instead, it implicitly induces nonautonomous sensory inputs in the motor signal . The motor signal appears as a time-dependent driving force; accordingly, our Hamiltonian formulation bears a resemblance to the motor-control dynamics described by the Hamilton–Jacobi–Bellman (HJB) equation in the control theory (Todorov 2007). If one regards the Lagrangian Eq. (11) as a negative cost rate and the canonical momentum p as a costate, our IA is equivalent to the total cost function that generates the continuous-state HJB equations. In optimal control theory, the associated Hamiltonian function is further minimized with respect to the control signal, which we do not explicitly consider in this work. In our formulation, the motor signals are produced by the discrepancy between the sensory streams and those predicted by the brain. The nonstationary data are presented to a sensorimotor receptor, whose field position in the environment is specified by the agent’s locomotive motion. The neural observer continuously integrates the BM subject to a motor signal to perform the sensory-uncertainty minimization, thereby closing the perception and motion control within a reflex arc. When we neglect the correlation between the sensory prediction modeled by Eq. (6) and the internal dynamics of predicting the neuronal state modeled by Eq. (5), we can recover the RD reported in the previous publication (Kim 2018), which demonstrates the consistency of our formulation.

In the present treatment, we consider only a single brain variable ; accordingly, the ensuing BM specified by Eqs. (17) and (18) is described in a two-dimensional phase space. The formulation can be extended to the general case of a multivariate brain by following the same line of work proposed in Kim (2018). Under the independent-particle approximation, the multivariate Lagrangian takes the form

| 23 |

where denotes a row vector of N brain states that respond to multiple sensory inputs in a general manner. Note that our proposed multivariate formulation differs from the state-space augmentation using higher-order states [see Sect. 2.1]. In our case, the multiple brain states may be, for instance, the membrane potential, gating variables, and ionic concentrations, which can be viewed as fluctuating variables on a coarse-grained time scale, driven by Gaussian white noise [see Sect. 2.3].

Furthermore, our formulation can be extended to the hierarchical brain in a straightforward manner, as in Kim (2018), which adopts the bidirectional information flow of descending predictions and ascending prediction errors (Markov and Kennedy 2013; Michalareas et al. 2016). Note that in the ensuing formulation, both descending predictions and ascending prediction errors constitute dynamical states governed by the closed-loop RD in the functional architecture of the brain’s sensorimotor system. This feature contrasts with the conventional implementation of the FEP, which treats the backward prediction (belief propagation) as neural dynamics and the forward prediction error as instantaneous message passing without causal dynamics (Friston 2010a; Buckley et al. 2017).

Simple Bayesian-agent model: implicit motor control

In this section, we numerically demonstrate the utility of our formulation using an agent-based model adapted from a previous publication (Buckley et al. 2017). Unlike the previous model, the current one does not employ generalized states and their motions; instead, the RD is specified using only the position and its conjugate momentum p for incoming sensory data . Environmental objects evoking an agent’s sensations can be either static or time dependent, and in turn, the time dependence can be either stationary (not moving on average) or nonstationary. According to the framework of active inference, the inference of static properties corresponds to passive perception without motor control a. Meanwhile, the inference of time-varying properties corresponds to the agent’s active perception of proprioceptive sensations, in which motor signals are discharged via classic reflex arcs.

In the present simulation, the external hidden state is a point property, e.g., temperature or a salient visual feature, which varies with the field point x. As the simplest environmental map, we consider and assume that the sensory influx at the corresponding receptor is given by

| 24 |

where denotes the random fluctuation. The external property, e.g., temperature, is assumed to display a spatial profile as

where denotes the value at the field origin, and the desired environmental niche is situated at , where . The biological agent that senses temperature is allowed to navigate through a one-dimensional environment by exploiting the hidden property. The agent initiates its motion from x(0), where the temperature does not accord with the desired value. In this case, the agent must fulfill its allostasis at the cost of biochemical energy by exploiting the environment based on

| 25 |

where a(t) denotes a motor variable, e.g., the agent’s velocity. The nonstationary sensory data arrive at the receptor subject to noise ; their time dependence is caused by the agent’s own motion, i.e., , which is assumed to be hidden from the agent’s brain in the current model. With the prescribed sensorimotor control, the rate of change of the noise-averaged sensory data is related to the control variable as

The neural observer is not aware of how sensory inputs at the proprioceptor are affected by the motor reflex control of the agent. In the case of saccadic motor control (Friston et al. 2012b), an agent may stand at a field point without changing its position; however, sampling the salient visual features of the environment through a fast eye movement a(t) makes the visual input nonstationary, i.e., .
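The sensory stream of Fig. 1 can be mimicked in a few lines. The sketch below is a minimal illustration under explicit assumptions: the spatial profile theta0 / (x**2 + 1), the motor variable a(t) = -(x0/tau) exp(-t/tau), and all numerical values (x0, tau, theta0, noise_sd, dt) are hypothetical choices of ours, because the corresponding expressions are not legible in this version of the text. Only the structure follows Eqs. (24) and (25): the receptor samples the hidden property at the agent's position plus Gaussian noise, and the position obeys dx/dt = a(t), consistent with a(t) being the agent's velocity.

```python
import math
import random


def hidden_property(x, theta0=20.0):
    """Hypothetical spatial profile of the hidden property (e.g., temperature).

    It peaks at theta0 at the field origin x = 0 and decays away from it.
    """
    return theta0 / (x * x + 1.0)


def motor_variable(t, x0, tau):
    """Hypothetical latent motor variable a(t).

    With dx/dt = a(t), this choice gives x(t) = x0 * exp(-t / tau),
    so the agent drifts from x0 toward the origin.
    """
    return -(x0 / tau) * math.exp(-t / tau)


def simulate_sensory_stream(x0=2.0, tau=5.0, dt=0.01, t_end=50.0,
                            noise_sd=0.5, seed=0):
    """Return (times, positions, sensory_inputs) for the toy agent."""
    rng = random.Random(seed)
    n_steps = int(round(t_end / dt))
    x = x0
    times, positions, inputs = [], [], []
    for k in range(n_steps + 1):
        t = k * dt
        times.append(t)
        positions.append(x)
        # Sensory sample: hidden property at the receptor position plus
        # Gaussian noise (the role of the random fluctuation in Eq. (24)).
        inputs.append(hidden_property(x) + rng.gauss(0.0, noise_sd))
        # Forward-Euler step of the locomotion dx/dt = a(t) (cf. Eq. (25)).
        x += motor_variable(t, x0, tau) * dt
    return times, positions, inputs
```

Calling `simulate_sensory_stream()` returns the time grid, the agent's trajectory, and the noisy inputs: the agent starts at x0 = 2, where the hypothetical property equals 4, and drifts toward the origin, where the property approaches theta0 = 20. As in the text, the motor variable is used only to generate the data; a neural observer built on top of this stream would see nothing but the inputs.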

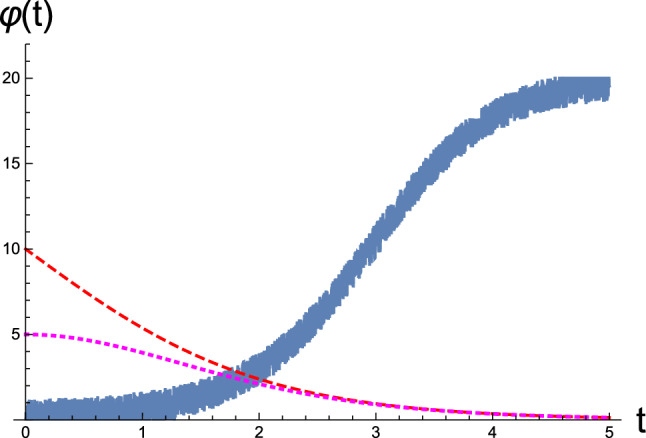

In Fig. 1, we depict streams of sensory data at the agent’s receptor as a function of time. For this simulation, the latent motor variable in Eq. (25) is considered as

which renders the agent’s position in the environment as with . For simplicity, we assume that this control is hardwired in the agent’s reflex pathway over evolutionary and developmental time scales. The figure shows that the agent, initially located at , senses an undesirable stimulus ; accordingly, it reacts by using motor control to find an acceptable ambient niche. For this illustration, we assumed the environmental property at the origin to be . After a period of , the agent finds itself at the origin , where the environmental state is marked by the value .

Fig. 1.

The influx of stochastic sensory data (blue curve) is generated by the environmental process in Eq. (24) and instantly enters the sensory receptor located at field point x(t). The dashed curve represents the agent’s position x(t) as a function of time, with its movement starting from . The dotted curve represents the magnitude of the latent motor variable a(t) that controls the agent’s location. [All curves are in arbitrary units.]

Having prescribed the nonstationary sensory data, we now set up the BM to be integrated by applying Eqs. (17) and (18) to the generative models below. We assume that the agent has already learned an optimal generative model; therefore, the agent retains prior expectations regarding the observations and dynamics. Here, for the demonstration, we consider the learned generative model in its simplest linear form

| 26 |

| 27 |

Note that the motor control a is not included in the generative model, and the desired sensory data , e.g., temperature, appear as the brain’s prior belief of the hidden state. Accordingly, Eqs. (17) and (18) are reduced to a coupled set of differential equations for the brain variable and its conjugate momentum p as

| 28 |

| 29 |

Parameters , , and are proportional to the correlation ; see Eq. (19). Hence, they become zero when the neural response to the sensory inputs is uncorrelated with neural dynamics, which is not the case in general. Time-dependent driving terms appearing on the RHS of both equations, namely Eqs. (28) and (29), include the sensorimotor signal given in Eq. (20). The motor variable a, which drives the nonstationary inputs , is unknown to the neural observer in our implementation.

In the following, for a compact mathematical description, we denote the brain’s perceptual state as a column vector

Vector represents the brain’s current expectation and the associated prediction error p with respect to the sensory causes, as encoded by neuronal activity in response to a sensory influx. Therefore, in terms of perceptual vector , Eqs. (28) and (29) are expressed as

| 30 |

where relaxation matrix is defined as

| 31 |

and source vector encompassing the sensory influx is defined as

| 32 |

Except in pathological cases, the steady-state (or equilibrium) solution of Eq. (30) is uniquely obtained as

| 33 |
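For a static input, the fixed point of Eq. (33) is the solution of a 2x2 linear system. The helper below is a sketch that assumes Eq. (30) has the form dX/dt = -R X + S, with R the relaxation matrix and S a constant source vector; this sign convention is our assumption. It uses Cramer's rule and treats a singular R as the pathological case with no unique fixed point, which is our reading of that phrase. The name `fixed_point` is hypothetical.

```python
def fixed_point(R, S, tol=1e-12):
    """Fixed point X* of dX/dt = -R X + S for a 2x2 matrix R and constant S.

    The sign convention is an assumption; setting dX/dt = 0 gives R X* = S.
    """
    (r11, r12), (r21, r22) = R
    det = r11 * r22 - r12 * r21
    if abs(det) < tol:
        # Singular R: no unique steady state exists.
        raise ValueError("singular relaxation matrix: no unique fixed point")
    s1, s2 = S
    # Cramer's rule for the 2x2 system R X* = S.
    return ((r22 * s1 - r12 * s2) / det, (r11 * s2 - r21 * s1) / det)
```

For example, `fixed_point(((0.0, 1.0), (-4.0, 0.0)), (2.0, 8.0))` gives (-2.0, 2.0), which indeed satisfies R X* = S.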

We find it informative to consider the general solution of Eq. (30) with respect to the fixed point by setting

To this end, we seek time-dependent solutions for the shifted measure as follows

where . It is straightforward to integrate the above inhomogeneous differential equation to obtain a formal solution, which is given by

| 34 |

Note that becomes zero identically for static sensory inputs; therefore, the relaxation admits simple homogeneous dynamics. In contrast, for time-varying sensory inputs, the inhomogeneous dynamics driven by the source term is expected to be predominant. However, on time scales longer than the sensory-influx saturation time , it can be shown that ; for instance, in Fig. 1. Therefore, for such a time scale, the inhomogeneous contribution in the relaxation diminishes even for time-varying sensory inputs, and the homogeneous contribution is dominant for further time-development. The ensuing homogeneous relaxation can be expressed in terms of eigenvalues and eigenvectors of the relaxation matrix as

| 35 |

where expansion coefficients are fixed by initial conditions . The initial conditions represent a spontaneous or resting cognitive state. In Eq. (35), eigenvalues and eigenvectors are determined by the secular equation

| 36 |

Then, the solution for the linear RD Eq. (30) is given by

| 37 |

which is exact for perceptual inference and valid for active inference on timescales .
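The eigen-expansion behind Eqs. (35) to (37) can be checked numerically for any 2x2 system. The sketch below assumes that the homogeneous part is written as d(xi)/dt = A xi, where A stands for the relaxation matrix up to the sign convention of Eq. (30) (for instance, A = -R if Eq. (30) reads dX/dt = -R X + S). The eigenvalues come from the secular equation, and the expansion coefficients are fixed by the initial condition xi(0), as stated for Eq. (35). The function names are hypothetical.

```python
import cmath


def eig2(A, tol=1e-12):
    """Eigenvalues and eigenvectors of a real 2x2 matrix via the secular equation.

    Solves det(A - lam*I) = 0, i.e., lam**2 - tr(A)*lam + det(A) = 0.
    Requires two distinct eigenvalues.
    """
    (a11, a12), (a21, a22) = A
    half_tr = 0.5 * (a11 + a22)
    det = a11 * a22 - a12 * a21
    root = cmath.sqrt(half_tr * half_tr - det)
    if abs(root) < tol:
        raise ValueError("repeated eigenvalue: the expansion needs a Jordan form")
    lams = (half_tr + root, half_tr - root)
    vecs = []
    for lam in lams:
        if abs(a12) > tol:
            vecs.append((a12, lam - a11))
        elif abs(a21) > tol:
            vecs.append((lam - a22, a21))
        else:  # diagonal matrix: the eigenvectors are the unit vectors
            vecs.append((1, 0) if abs(lam - a11) < abs(lam - a22) else (0, 1))
    return lams, vecs


def homogeneous_relaxation(A, xi0, t):
    """xi(t) = sum_i c_i exp(lam_i t) v_i, solving d(xi)/dt = A xi, xi(0) = xi0."""
    (l1, l2), (v1, v2) = eig2(A)
    det_v = v1[0] * v2[1] - v2[0] * v1[1]
    # Expansion coefficients fixed by the initial condition (Cramer's rule).
    c1 = (xi0[0] * v2[1] - v2[0] * xi0[1]) / det_v
    c2 = (v1[0] * xi0[1] - xi0[0] * v1[1]) / det_v
    e1, e2 = c1 * cmath.exp(l1 * t), c2 * cmath.exp(l2 * t)
    # For real A and real xi0 the imaginary parts cancel up to round-off.
    return ((e1 * v1[0] + e2 * v2[0]).real, (e1 * v1[1] + e2 * v2[1]).real)
```

For the traceless matrix A = [[0, 1], [-4, 0]], the eigenvalues are +2i and -2i, and `homogeneous_relaxation(A, (1.0, 0.0), t)` returns (cos 2t, -2 sin 2t), a closed orbit with angular frequency 2. Adding the fixed point back gives the full solution in the sense of Eq. (37).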

Before presenting the numerical outcome, we first inspect the nature of the fixed points by analyzing the eigenvalues of the relaxation matrix given in Eq. (31). First, the trace of is zero; because the trace equals the sum of the eigenvalues, the two eigenvalues are negatives of each other, i.e., . Second, the determinant of can be calculated as

Therefore, if the correlation , it can be deduced that both eigenvalues are real. This is because , which yields using the first result. Thus, the two eigenvalues are real and have opposite signs, which makes the fixed point a saddle; therefore, for , the solution is unstable. In contrast, when the correlation is retained, can be positive for a suitable choice of statistical parameters, namely , , and . In the latter case, the condition renders for both . Accordingly, and , which have opposite signs, are purely imaginary, which makes the fixed point a center (Strogatz 2015). If we define , the long-time solution of RD with respect to is expressed as

which specifies a limit cycle with angular frequency . Thus, according to our formulation, the correlation is not a subsidiary but a crucial component of the brain’s RD. Below, we consider numerical illustrations with finite correlation.
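The trace and determinant argument above can be condensed into a small test. The function below is an illustrative sketch (its name and tolerance are hypothetical) for any real, traceless 2x2 matrix: zero trace forces the eigenvalues to be negatives of each other, so their product, the determinant, equals minus the square of either eigenvalue. Because a 2x2 matrix and its negative have the same determinant and both have zero trace, the classification does not depend on the sign convention used in Eq. (30).

```python
import math


def classify_traceless(A, tol=1e-12):
    """Classify the fixed point of d(xi)/dt = A xi for a traceless real 2x2 A.

    tr(A) = 0 forces lam2 = -lam1, so det(A) = lam1 * lam2 = -lam1**2.
    det > 0: lam = +/- i*omega (center); det < 0: real pair of opposite sign (saddle).
    """
    (a11, a12), (a21, a22) = A
    if abs(a11 + a22) > tol:
        raise ValueError("matrix is not traceless")
    det = a11 * a22 - a12 * a21
    if det > tol:
        return "center", math.sqrt(det)   # angular frequency omega
    if det < -tol:
        return "saddle", math.sqrt(-det)  # growth rate along the unstable direction
    return "degenerate", 0.0
```

For example, [[0, 1], [-4, 0]] is classified as a center with angular frequency 2, whereas [[1, 0], [0, -1]] is a saddle, the unstable case obtained above when the correlation vanishes.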

We explored a wide range of parameters in numerically solving Eqs. (28) and (29) and found that there exists only a narrow window of the statistical parameters , , and within which a stable trajectory, and hence a successful inference, is allowed. This finding implies that the agent’s brain must learn and hardwire this narrow parameter range over evolutionary and developmental timescales; namely, the generative models are conditioned on an individual biological agent. We denote the instantaneous cognitive state as for notational convenience.

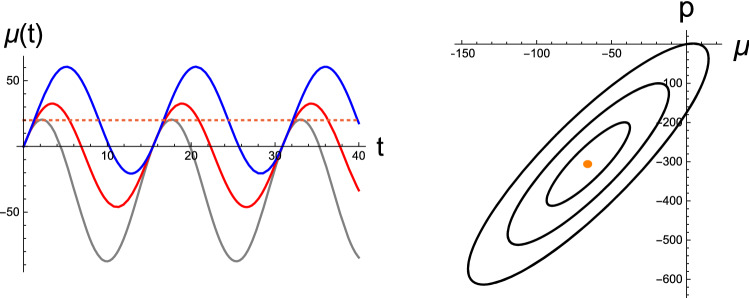

In Fig. 2, we depict the numerical outcome from the perceptual inference of static sensory inputs. To obtain the results, we select a particular set of statistical parameters as

which specify the neural inertial masses

and the coefficients that enter the RD, namely

In Fig. 2Left, we depict the brain variable as a function of time, which represents the cognitive expectation of a registered sensory input under the generative model [Eq. (26)] for three values, namely . For all illustrations, the agent’s prior belief with regard to the sensory input is set as

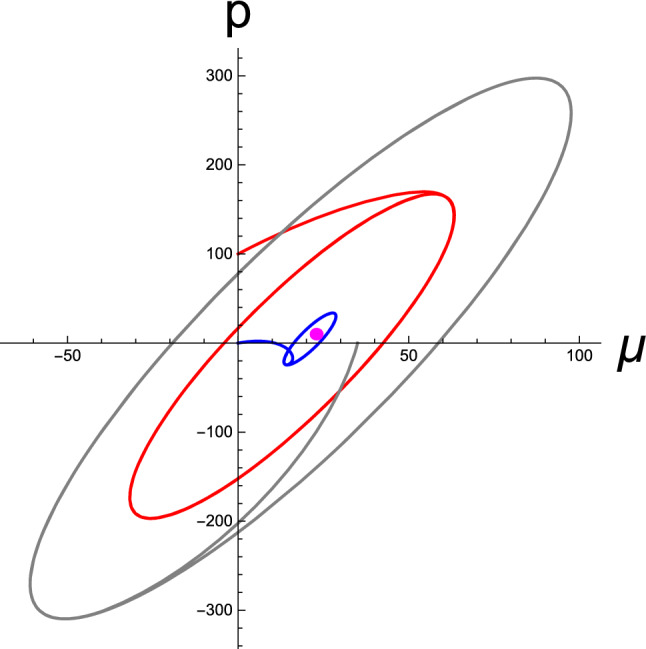

which is indicated by the horizontal dotted line. The blue curve represents the case in which the sensory data are in line with the belief. The RD of the perceptual inference delivers an exact output , where and are the perceptual outcome of the sensory cause and its prediction error, respectively. Note that and correspond to the temporal averages of and p(t), respectively. The other two inferences do not reproduce the correct answer. Figure 2Right corresponds to the case of a single sensory datum , which the standing agent senses at the field point . The ensuing trajectories from all three initial spontaneous states form limit cycles in the state space spanned by and p. We numerically determined the fixed point to be and the two eigenvalues of the relaxation matrix to be , which are purely imaginary and have opposite signs. Again, the perceptual outcome does not accord with the sensory input; it deviates significantly.

Fig. 2.

Perceptual inference of static sensory data: (Left) Oscillatory brain variables in time t developed from a common spontaneous state in response to sensory inputs (gray), 15 (red), and 20 (blue). The horizontal dotted line indicates the agent’s prior belief regarding the sensory input. (Right) Limit cycles in the perceptual state space from an input for three initial conditions ; the state space is spanned by the continuous brain state and its conjugate momentum p. The common fixed point is indicated by an orange bullet at the center of the orbits, which predicts the sensory cause incorrectly. [All curves are in arbitrary units.]

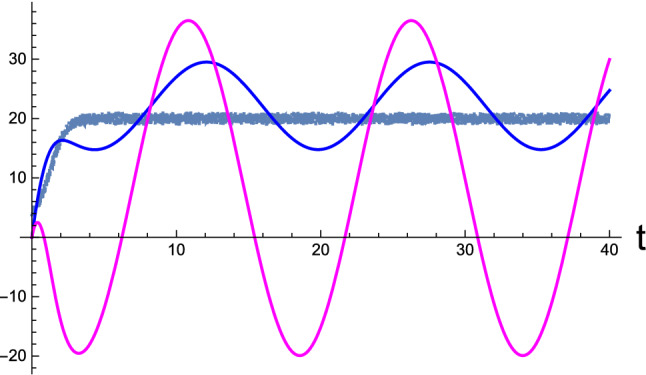

Next, in Fig. 3, we depict the results for active inference, which were calculated using the same generative parameters used in Fig. 2. The agent is initially situated at , where it senses the sensory influx , which does not match the desired value . Therefore, the agent reacts to identify a comfortable environmental niche matching its prior belief, which generates nonstationary sensory inputs at the receptors (Fig. 1). The brain variable initially undergoes a transient period at . The RD commences from the resting condition and then develops a stationary evolution. Furthermore, we numerically confirmed that the brain’s stationary prediction , which is the brain’s perceptual outcome of the sensory cause, is close to but not in line with the prior belief . The stationary value is estimated to be approximately 8.0, which is the average of the stationary oscillation of prediction error p(t).

Fig. 3.

Active perception: Time-development of the perceptual state inferring the external causes of sensory inputs altered by the agent’s motor control. Blue and magenta curves depict the brain activity and corresponding momentum p(t), respectively. In addition, the noisy curve indicates the nonstationary sensory inputs entering the sensory receptor at instant t. For numerical illustration, we used . [All curves are in arbitrary units.]

In Fig. 4, the trajectory corresponding to that in Fig. 3 is shown in blue in the perceptual state space spanned by and p, together with two other time developments from different initial conditions. All data were calculated using the same generative parameters and sensory inputs as in Fig. 3. Regardless of the initial conditions, after each transient period, the trajectories approach stationary limit cycles about a common fixed point, as in the case of static sensory inputs in Fig. 2. The fixed point and the stationary frequency of the limit cycles are not affected by the initial conditions; they are determined solely by the generative parameters , , and and the prior belief for a given sensory input [Eqs. (33) and (36)]. In addition, we numerically observed that the precise location of the fixed point is stochastic, reflecting the noise in the nonstationary sensory influx .

Fig. 4.

Active inference: Temporal development of trajectories rendering stationary limit cycles in the perceptual phase space, spanned by continuous neural state and its conjugate momentum p variables. Data were obtained from the same statistical parameters used in Fig. 2. The blue, red, and gray curves correspond to the three initial conditions, , (0, 100), and (35, 0), respectively. The angular frequency of the limit cycles is the magnitude of the imaginary eigenvalues of the relaxation matrix given in Eq. (31). The common fixed point is indicated by a magenta bullet at the center of the orbits. [All curves are in arbitrary units.]

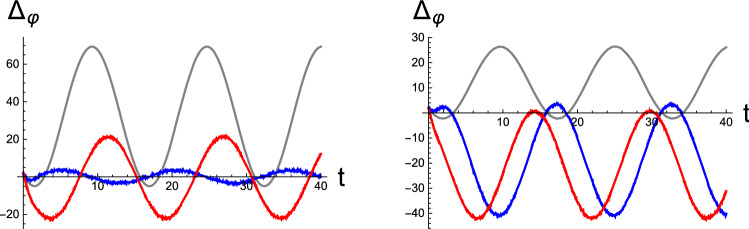

In the framework of active inference, motor behavior is attributed to the inference of the causes of proprioceptive sensations (Adams et al. 2013), and in turn, the prediction errors convey the motor signals in the closed-loop dynamics of perception and motor control. In Fig. 5, we depict the sensorimotor signals that appear as time-dependent driving terms in Eqs. (28) and (29). In both panels, the agent is assumed to be initially situated such that it senses the sensory data . After an initial transient period, the motor signals in Fig. 5Left exhibit a stationary oscillation about a zero average, implying the successful fulfillment of the active inference of the nonstationary sensory influx matching the desired belief . The amplitude of the motor signal shown by the blue curve is smaller than that shown by the red curve, which is also reflected in the sizes of the corresponding limit cycles in Fig. 4. The prediction-error signal from plain perception (gray curve) also oscillates, owing to the stationary time dependence of the brain variable . It swings with a large amplitude because of the significant discrepancy between the static sensory input and its prior belief . In Fig. 5Right, we repeated the calculation with another value: . In this case, the prior belief regarding the sensory input does not accord with the stationary sensory streams; therefore, the blue and red signals for active inference oscillate about negatively shifted averages rather than zero. In contrast to Fig. 5Left, the error-signal amplitude for the static input is reduced because the difference between the sensory data and the prior belief decreases.

Fig. 5.

Motor signals as a function of time t evoked by the discrepancy between the nonstationary sensory stream and its top-down prediction [Eq. (20)]. Here, we set the prior belief (Left) and (Right). The blue and red curves represent the results from the initial condition and (0, 100), respectively. The gray curves represent the corresponding signals from the plain perception of the static sensory input. All data were obtained by setting the statistical parameters as . [All curves are in arbitrary units.]

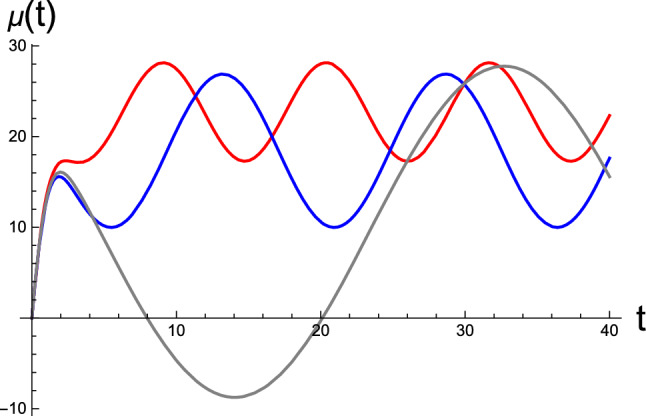

Next, we consider the role of the correlation in the brain’s RD, whose value is limited by the constraint . To this end, we select three values of for fixed variances and and integrate the RD for active inference. In Fig. 6, we present the resulting time evolution of the brain states for the initial condition ; the conjugate momentum variables are not shown. The notable features are the changes in the fixed point and in the amplitude of the stationary oscillation as the correlation varies. The average value of in the periodic oscillation corresponds to the perceptual outcome of the sensory data in the stationary limit. We remark that for all numerical data presented in this work, we selected only negative values for , because our numerical inspection revealed that positive correlations do not yield stable solutions.

Fig. 6.

Time evolution of the brain variable . Here, we vary the correlation for fixed variances and . The red, blue, and gray curves correspond to , , and , respectively. For all data, the agent is environmentally situated at , where it senses the transient sensory inputs induced by the motor reflexes at the proprioceptive level. The agent’s initial cognitive state is assumed to be , and the prior belief is set as . [All curves are in arbitrary units.]
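The constraint on the correlation invoked in the discussion of Fig. 6 is not legible in this version. We assume here that it is the standard requirement that the 2x2 covariance matrix of the two generative noises be positive definite, which for a symmetric 2x2 matrix reduces to positive variances and a squared covariance smaller than the product of the variances. The sketch below, with hypothetical names `var_w`, `var_z`, and `cov`, checks this condition and returns the normalized correlation.

```python
import math


def correlation_is_admissible(var_w, var_z, cov):
    """True if [[var_w, cov], [cov, var_z]] is a positive-definite covariance.

    For a symmetric 2x2 matrix this holds iff both variances are positive
    and cov**2 < var_w * var_z (assumed form of the constraint).
    """
    return var_w > 0 and var_z > 0 and cov * cov < var_w * var_z


def normalized_correlation(var_w, var_z, cov):
    """Correlation coefficient rho = cov / sqrt(var_w * var_z), with |rho| < 1."""
    if not correlation_is_admissible(var_w, var_z, cov):
        raise ValueError("inadmissible noise covariance")
    return cov / math.sqrt(var_w * var_z)
```

For example, with variances 1 and 4, any covariance strictly between -2 and 2 is admissible; the negative covariances used in this work then correspond to normalized correlations between -1 and 0.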

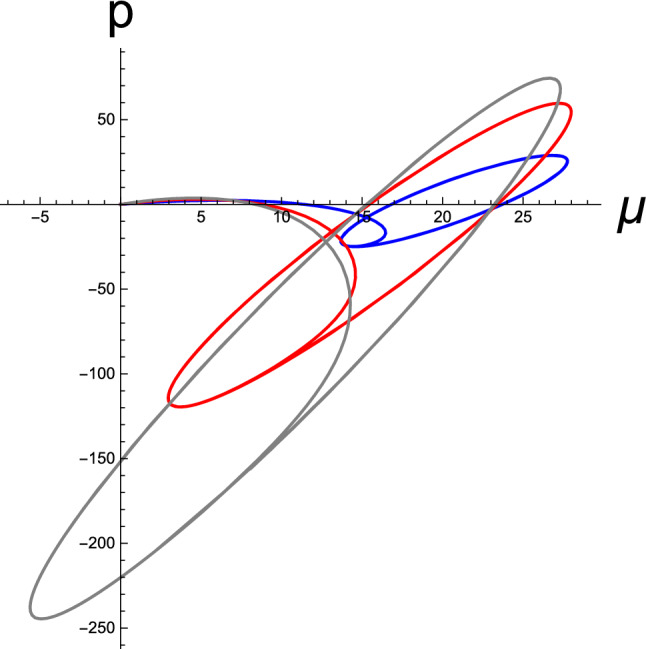

In Fig. 7, as the final numerical illustration, we show the temporal buildup of the limit cycles in the perceptual phase space; this time, however, we fix while varying and . To generate the red, blue, and gray curves, the tuning parameter was selected as , respectively. The resulting fixed points (not shown) are located approximately at the center of each limit cycle. As in Fig. 6, the positions of the fixed points and the amplitudes of oscillation are altered by variations in the statistical parameters. Evidently, different sets of the parameters , , and , which are the learning parameters encoded by the brain, result in distinct BMs of active inference.

Fig. 7.

Limit cycles in the perceptual phase space spanned by the brain state and its conjugate momentum p. Here, we considered several sets of and for a fixed . The red, blue, and gray curves were obtained from , , and , respectively. For all data, the agent’s initial cognitive state is assumed to be , and the prior belief is set as . The agent is environmentally situated at , where it senses the transient sensory inputs induced by the motor reflexes at the proprioceptive level. [All curves are in arbitrary units.]

Here, we summarize the major findings from the application of our formulation to a simple nonstationary model. The brain’s BM, i.e., Eqs. (28) and (29), employs linear generative models given in Eqs. (26) and (27).

- (i) The steady-state solutions of the RD turn out to be centers about which stationary limit cycles (periodic oscillations) form as attractors (Friston and Ao 2012a) in the perceptual phase space; these constitute the brain’s nonequilibrium resting states.

- (ii) The nonequilibrium stationarity stems from the pair of purely imaginary eigenvalues with opposite signs of the relaxation matrix given by Eq. (31); their common magnitude specifies the angular frequency of the periodic trajectory.

- (iii) The centers are determined by the generative parameters and the prior belief for a given sensory input [Eq. (33)]; they represent the outcome of active inference and the entailed prediction error.

- (iv) The theoretical assumption of statistical dependence between the two generative noises, which describe the brain’s expectation of the external dynamics and of sensory generation, is crucial for ensuring a stationary solution. Furthermore, in our numerical experience, a negative covariance was necessary to obtain stable solutions with the current model.

Concluding remarks

In the present study, we continued our effort to make the FEP a more physically principled formalism based on our previous publication (Kim 2018). We implemented the FEP within the scope of the principle of least action by casting the minimization scheme into the BM described by the effective Hamilton’s equations in the neural phase space. In the first part, we deconstructed some of the theoretical details embedded in the formulation of the FEP while comparing our approach with other prevailing approaches. In the second part, we demonstrated our proposed continuous-state RD in the Bayesian brain using a simple model that is biologically relevant to sensorimotor regulation, such as motor reflex arcs or saccadic eye movements. In our theory, the time integral of the induced IFE in the brain, not the instantaneous variational IFE, serves as the objective function. In other words, our minimization scheme searches for the tight bound on the sensory uncertainty (average surprisal), not on the instantaneous sensory surprisal.

To present the novel aspects of our formulation, this study focused on the perceptual inference of nonstationary sensory influx at the interface. The nonstationary sensory inputs were assumed to be unknown or contingent to the neural observer without explicitly engaging in motor-inference dynamics in the BM. Instead, we considered that the motor signals are triggered by the discrepancies between the sensory inputs at the proprioceptive level and their top-down predictions. They appeared as nonautonomous source terms in the derived BM, thus completing the sensorimotor dynamics via reflex arcs or oculomotor dynamics of sampling visual stimuli. This closed-loop dynamics contrasts with the gradient-descent implementation, which involves the double optimization of the top-down belief propagation and the motor inference in message-passing algorithms. In our present formulation, the sensorimotor inference was not included; however, a mechanism of motor inference can be included explicitly by considering a Langevin equation for a sensorimotor state. This procedure extends the probabilistic generative model by accommodating the prior density for motor planning for active perception, which is similar to what was done in Bogacz (2020).

By integrating the Bayesian equations of motion for the considered parsimonious model, we demonstrated transient limit cycles in the neural phase space, which numerically illustrate the brain’s perceptual trajectories performing active perception of the causes of nonstationary sensory stimuli. Moreover, we showed that the ensuing trajectories and fixed points are affected by the values of the learning parameters (both diagonal and off-diagonal elements of the covariance matrix) and by the prior belief regarding sensory data. The idea of exploring the effect of the noise covariance stemmed purely from theoretical insight, without supporting empirical evidence; it allowed us to obtain stable solutions in the perceptual and motor-control dynamics. We did not attempt to explicate in detail the effect of the neural inertial masses (precisions) and the correlation (noise covariance) on the numerically observed limit cycles, because the presented model permits stable solutions only within a narrow window of statistical parameters. In neuroscience, it is commonly recognized that neural system dynamics implement cognitive processes influencing psychiatric states (Durstewitz et al. 2020). We hope that the key features of our demonstration will motivate and guide further investigations on more realistic generative models with neurobiological and psychological implications.

Finally, we mention the recent research efforts on synthesizing perception, motor control, and decision making within the FEP (Friston et al. 2015, 2017; Biehl et al. 2018; Parr and Friston 2019; van de Laar and de Vries 2019; Tschantz et al. 2020; Da Costa et al. 2020a). The underlying idea of these studies is rooted in machine learning (Sutton and Barto 1998) and in intuition from nonequilibrium thermodynamics (Parr et al. 2020; Friston 2019), and they attempt to widen the scope of active inference by incorporating prior beliefs regarding behavioral policies. This new trend supplements the instantaneous IFE with the expected IFE of future time steps, and it formulates adaptive decision-making processes in action-oriented models. The assimilation of this feature needs to be studied in depth (Millidge et al. 2020b; Tschantz et al. 2020). We are currently considering a formulation of motor inference together with the assimilation of extended IFEs within the scope of the least action principle.

Funding

Not applicable.

Compliance with ethical standards

Conflict of interest

Not applicable.

Footnotes

Free energy (FE) is a notion developed by Hermann von Helmholtz in thermodynamics; it is a physical energy measured in joules. The FE in the FEP is an information-theoretic measure defined in terms of probabilities, which serves as an objective function for variational Bayesian inference. Accordingly, we call it variational IFE in our formulation.

In this work, we use the term control instead of the frequently used term “action” to mean the motion of a living agent’s effectors (muscles) acting on the environment. This is done to avoid any confusion with the term action appearing in the nomenclature of “the principle of least action”.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Adams RA, Shipp S, Friston KJ. Predictions not commands: active inference in the motor system. Brain Struct Funct. 2013;218:611–643. doi: 10.1007/s00429-012-0475-5.

- Amari S. Natural gradient works efficiently in learning. Neural Comput. 1998;10(2):251–276. doi: 10.1162/089976698300017746.

- Balaji B, Friston K. Bayesian state estimation using generalized coordinates. Proc SPIE 8050, Signal Processing, Sensor Fusion, and Target Recognition XX. 2011;80501Y. doi: 10.1117/12.883513.

- Baltieri M, Buckley CL. PID control as a process of active inference with linear generative models. Entropy. 2019;21:257. doi: 10.3390/e21030257.

- Biehl M, Guckelsberger C, Salge C, Smith SC, Polani D. Expanding the active inference landscape: more intrinsic motivations in the perception-action loop. Front Neurorobot. 2018;12:45. doi: 10.3389/fnbot.2018.00045.

- Bogacz R. A tutorial on the free-energy framework for modelling perception and learning. J Math Psychol. 2017;76(B):198–211. doi: 10.1016/j.jmp.2015.11.003.

- Bogacz R. Dopamine role in learning and action inference. eLife. 2020;9:e53262. doi: 10.7554/eLife.53262.

- Buckley CL, Kim CS, McGregor S, Seth AK. The free energy principle for action and perception: a mathematical review. J Math Psychol. 2017;81:55–79. doi: 10.1016/j.jmp.2017.09.004.

- Colombo M, Wright C. First principles in the life sciences: the free-energy principle, organicism, and mechanism. Synthese. 2018. doi: 10.1007/s11229-018-01932-w.

- Cover T, Thomas JA. Elements of information theory. 2nd ed. Hoboken: Wiley; 2006.

- Cugliandolo LF, Lecomte V. Rules of calculus in the path integral representation of white noise Langevin equations: the Onsager–Machlup approach. J Phys A Math Theor. 2017;50:345001. doi: 10.1088/1751-8121/aa7dd6.

- Da Costa L, Parr T, Sajid N, Veselic S, Neacsu V, Friston K. Active inference on discrete state-spaces: a synthesis. J Math Psychol. 2020;99:102447. doi: 10.1016/j.jmp.2020.102447.

- Da Costa L, Parr T, Sengupta B, Friston K. Natural selection finds natural gradient. arXiv:2001.08028 [q-bio]. 2020b.

- Da Costa L, Sajid N, Parr T, Friston K, Smith R. The relationship between dynamic programming and active inference: the discrete, finite-horizon case. arXiv:2009.08111v3 [cs.AI]. 2020c.

- de Gardelle V, Waszczuk M, Egner T, Summerfield C. Concurrent repetition enhancement and suppression responses in extrastriate visual cortex. Cereb Cortex. 2013;23(9):2235–2244. doi: 10.1093/cercor/bhs211.

- Durstewitz D, Huys Q, Koppe G. Psychiatric illnesses as disorders of network dynamics. Biol Psychiatry Cogn Neurosci Neuroimaging. 2020. doi: 10.1016/j.bpsc.2020.01.001.

- Elfwing S, Uchibe E, Doya K. From free energy to expected energy: improving energy-based value function approximation in reinforcement learning. Neural Netw. 2016;84:17–27. doi: 10.1016/j.neunet.2016.07.013.

- Feynman RP, Hibbs AR. Quantum mechanics and path integrals. Emended ed. Mineola: Dover Publications; 2005.

- Fox RF. Stochastic calculus in physics. J Stat Phys. 1987;46:1145–1157. doi: 10.1007/BF01011160.

- Friston K, Mattout J, Trujillo-Barreto N, Ashburner J, Penny W. Variational free energy and the Laplace approximation. NeuroImage. 2007;34(1):220–234. doi: 10.1016/j.neuroimage.2006.08.035.

- Friston K. Hierarchical models in the brain. PLoS Comput Biol. 2008;4(11):e1000211. doi: 10.1371/journal.pcbi.1000211.

- Friston KJ. Variational filtering. Neuroimage. 2008;41:747–766. doi: 10.1016/j.neuroimage.2008.03.017.

- Friston KJ, Trujillo-Barreto N, Daunizeau J. DEM: a variational treatment of dynamic systems. Neuroimage. 2008;41(3):849–885. doi: 10.1016/j.neuroimage.2008.02.054.

- Friston KJ, Daunizeau J, Kiebel SJ. Reinforcement learning or active inference? PLoS ONE. 2009;4(7):e6421. doi: 10.1371/journal.pone.0006421.

- Friston K. The free-energy principle: a unified brain theory? Nat Rev Neurosci. 2010;11:127–138. doi: 10.1038/nrn2787.

- Friston K, Stephan K, Li B, Daunizeau J. Generalized filtering. Math Probl Eng. 2010;2010:621670. doi: 10.1155/2010/621670.

- Friston KJ, Daunizeau J, Kilner J, Kiebel SJ. Action and behavior: a free-energy formulation. Biol Cybern. 2010;102(3):227–260. doi: 10.1007/s00422-010-0364-z.

- Friston K, Mattout J, Kilner J. Action understanding and active inference. Biol Cybern. 2011;104:137–160. doi: 10.1007/s00422-011-0424-z.

- Friston K. What is optimal about motor control? Neuron. 2011;72(3):488–498. doi: 10.1016/j.neuron.2011.10.018.

- Friston K, Ao P. Free energy, value, and attractors. Comput Math Methods Med. 2012;2012:937860. doi: 10.1155/2012/937860.

- Friston K, Adams R, Perrinet L, Breakspear M. Perceptions as hypotheses: saccades as experiments. Front Psychol. 2012;3:151. doi: 10.3389/fpsyg.2012.00151.

- Friston K. Life as we know it. J R Soc Interface. 2013;10:20130475. doi: 10.1098/rsif.2013.0475.

- Friston K, Rigoli F, Ognibene D, Mathys C, Fitzgerald T, Pezzulo G. Active inference and epistemic value. Cogn Neurosci. 2015;6:187–214. doi: 10.1080/17588928.2015.1020053.

- Friston KJ, Parr T, de Vries B. The graphical brain: belief propagation and active inference. Netw Neurosci. 2017;1(4):381–414. doi: 10.1162/NETN_a_00018.

- Friston K. A free energy principle for a particular physics. arXiv:1906.10184 [q-bio]. 2019.

- Hille B. Ion channels of excitable membranes. 3rd ed. Sunderland: Sinauer Associates; 2001.

- Huang Y, Rao RPN. Predictive coding. WIREs Cogn Sci. 2011;2:580–593. doi: 10.1002/wcs.142.

- Isomura T, Kotani K, Jimbo Y. Cultured cortical neurons can perform blind source separation according to the free-energy principle. PLoS Comput Biol. 2015;11(12):e1004643. doi: 10.1371/journal.pcbi.1004643.

- Isomura T, Friston K. In vitro neural networks minimise variational free energy. Sci Rep. 2018;8:16926. doi: 10.1038/s41598-018-35221-w.

- Jazwinski AH. Stochastic processes and filtering theory. New York: Academic Press; 1970.

- Kerr W, Graham A. Generalized phase space version of Langevin equations and associated Fokker–Planck equations. Eur Phys J B. 2000;15:305–311. doi: 10.1007/s100510051129.

- Kiefer AB. Psychophysical identity and free energy. J R Soc Interface. 2020;17:20200370. doi: 10.1098/rsif.2020.0370.

- Kim CS. Recognition dynamics in the brain under the free energy principle. Neural Comput. 2018;30:2616–2659. doi: 10.1162/neco_a_01115.

- Kozunov VV, West TO, Nikolaeva AY, Stroganova TA, Friston KJ. Object recognition is enabled by an experience-dependent appraisal of visual features in the brain’s value system. Neuroimage. 2020;221:117143. doi: 10.1016/j.neuroimage.2020.117143.

- Kuzma S. Energy-information coupling during integrative cognitive processes. J Theor Biol. 2019;469:180–186. doi: 10.1016/j.jtbi.2019.03.005.

- Landau LD, Lifshitz EM. Mechanics. 3rd ed. Course of theoretical physics, vol 1. Amsterdam: Elsevier; 1976.

- Markov NT, Kennedy H. The importance of being hierarchical. Curr Opin Neurobiol. 2013;23(2):187–194. doi: 10.1016/j.conb.2012.12.008.

- Michalareas G, Vezoli J, van Pelt S, Schoffelen JM, Kennedy H, Fries P. Alpha–beta and gamma rhythms subserve feedback and feedforward influences among human visual cortical areas. Neuron. 2016;89(2):384–397. doi: 10.1016/j.neuron.2015.12.018.

- Millidge B, Tschantz A, Seth AK, Buckley CL. On the relationship between active inference and control as inference. arXiv:2006.12964v3 [cs.AI]. 2020a.

- Millidge B, Tschantz A, Buckley CL. Whence the expected free energy? arXiv:2004.08128 [cs.AI]. 2020b.

- Moon W, Wettlaufer J. On the interpretation of Stratonovich calculus. New J Phys. 2014;16:055017. doi: 10.1088/1367-2630/16/5/055017.

- Ozaki T. A bridge between nonlinear time series models and nonlinear stochastic dynamical systems: a local linearization approach. Stat Sin. 1992;2:113–135.

- Parr T, Friston KJ. Active inference and the anatomy of oculomotion. Neuropsychologia. 2018;111:334–343. doi: 10.1016/j.neuropsychologia.2018.01.041.

- Parr T, Friston KJ. Generalised free energy and active inference. Biol Cybern. 2019;113:495–513. doi: 10.1007/s00422-019-00805-w.

- Parr T, Da Costa L, Friston K. Markov blankets, information geometry and stochastic thermodynamics. Phil Trans R Soc A. 2020. doi: 10.1098/rsta.2019.0159.

- Pavliotis GA. Stochastic processes and applications: diffusion processes, the Fokker–Planck and Langevin equations. New York: Springer; 2014.

- Ramstead MJD, Badcock PB, Friston KJ. Answering Schrödinger’s question: a free-energy formulation. Phys Life Rev. 2018;24:1–16. doi: 10.1016/j.plrev.2017.09.001.

- Ramstead MJD, Constant A, Badcock PB, Friston KJ. Variational ecology and the physics of sentient systems. Phys Life Rev. 2019;31:188–205. doi: 10.1016/j.plrev.2018.12.002.

- Rao RPN, Ballard DH. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci. 1999;2(1):79–87. doi: 10.1038/4580.

- Risken H. The Fokker–Planck equation. 2nd ed. Berlin: Springer; 1989.

- Sanders H, Wilson MA, Gershman SJ. Hippocampal remapping as hidden state inference. eLife. 2020;9:e51140. doi: 10.7554/eLife.51140.

- Seifert U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep Prog Phys. 2012;75:126001. doi: 10.1088/0034-4885/75/12/126001.

- Sengupta B, Tozzi A, Cooray GK, Douglas PK, Friston KJ. Towards a neuronal gauge theory. PLoS Biol. 2016;14(3):e1002400. doi: 10.1371/journal.pbio.1002400.

- Sengupta B, Friston K. Approximate Bayesian inference as a gauge theory. arXiv:1705.06614v2 [q-bio.NC]. 2017.

- Shimazaki H. The principles of adaptation in organisms and machines I: machine learning, information theory, and thermodynamics. arXiv:1902.11233. 2019.

- Strogatz SH. Nonlinear dynamics and chaos: with applications to physics, biology, chemistry, and engineering. 2nd ed. Studies in nonlinearity. Cambridge: Westview Press; 2015.

- Surace SC, Pfister JP, Gerstner W, Brea J. On the choice of metric in gradient-based theories of brain function. PLoS Comput Biol. 2020;16(4):e1007640. doi: 10.1371/journal.pcbi.1007640.

- Sutton RS, Barto AG. Reinforcement learning: an introduction. Cambridge: The MIT Press; 1998.

- Todorov E. Optimal control theory. In: Bayesian brain: probabilistic approaches to neural coding. Cambridge: The MIT Press; 2007. pp. 269–298.

- Tschantz A, Seth AK, Buckley CL. Learning action-oriented models through active inference. PLoS Comput Biol. 2020;16(4):e1007805. doi: 10.1371/journal.pcbi.1007805.

- Tuthill JC, Azim E. Proprioception. Curr Biol. 2018;28(5):R194–R203. doi: 10.1016/j.cub.2018.01.064.

- van Kampen NG. Itô versus Stratonovich. J Stat Phys. 1981;24:175–187. doi: 10.1007/BF01007642.

- van de Laar TW, de Vries B. Simulating active inference processes by message passing. Front Robot AI. 2019;6:20. doi: 10.3389/frobt.2019.00020.