Abstract

School-based surveys provide a useful method for gathering data from youth. Existing literature offers many examples of data collection through school-based surveys, and a smaller body of work describes methodological approaches or general recommendations for health promotion professionals seeking to conduct school-based data collection. Much less is available on real-life logistical challenges (e.g., minimizing disruption to the school day) and corresponding solutions. In this manuscript, we address that gap by offering practical considerations for administering school-based surveys. The protocol and practical considerations outlined here are based on a survey conducted with 11,681 students from seven large, urban public high schools in the southeastern United States. We outline our protocol for implementing a survey administered to all students school-wide, and we describe six types of key challenges faced in conducting it: consent procedures, scheduling, locating students within the schools, teacher failure to administer the survey, improper administration of the survey, and minimizing disruption. For each challenge, we offer key lessons learned and associated recommendations for successfully implementing school-based surveys, and we provide relevant tools for practitioners planning to conduct their own surveys in schools.

Keywords: Child/Adolescent Health, Evaluation Methods, School Health, Surveys

INTRODUCTION

In public health, school-based surveys provide a useful method for gathering data from youth. Health professionals have used school-based surveys to measure a variety of outcomes, including youth physical activity levels, nutritional intake, sexual risk behaviors, and substance use (Anatale & Kelly, 2015; Brener et al., 2013; dos Santos, Hardman, Barros, Santos, & Barros, 2015; Hamilton et al., 2015; Ieorger, Henry, Chen, Cigularov, & Tomazic, 2015; Lowry, Michael, Demissie, Kann, & Galuska, 2015). School-based surveys are used by both domestic and international health promotion professionals for surveillance and evaluation/assessment purposes, and they currently underpin several key data sets on the health behaviors of youth in the United States (Aten, Siegel, & Roghmann, 1996; Centers for Disease Control and Prevention (CDC), 2013, 2016; dos Santos et al., 2015; Kristjansson, Sigfusson, Sigfusdottir, & Allegrante, 2013; Lowry et al., 2015; Nathanson, McCormick, Kemple, & Sypek, 2013; Oregon Health Authority, n.d.; University of Michigan, 2014; WestEd, 2015). The school setting offers the benefits of reaching large numbers of youth at one time, allowing anonymity for sensitive questions, and accessing populations that are often hard to reach (Smit, Zwart, Spruit, Monshouwer, & Ameijden, 2002).

BACKGROUND

Practitioners seeking to use school-based surveys for data collection will find a robust literature offering general approaches and considerations. Existing literature includes descriptions of methodological approaches (Centers for Disease Control and Prevention (CDC), 2013; Yusoff et al., 2014) and considerations such as building support and buy-in among schools (e.g., identifying key points of contact, clarifying an educational benefit to the survey, offering incentives such as school-specific data reports or resources) and maximizing validity (Kristjansson et al., 2013; Madge et al., 2011; Testa & Coleman, 2006). The literature also explores various consent procedures (Dent, Sussman, & Stacy, 1997; Eaton, Lowry, Brener, Grunbaum, & Kann, 2004; Esbensen, Miller, Taylor, He, & Freng, 1999; Ji, Pokorny, & Jason, 2004; White, Morris, Hill, & Bradnock, 2007) and administration modes (Brener et al., 2006; Denniston et al., 2010; Eaton et al., 2010; Raghupathy & Hahn-Smith, 2013; Wyrick & Bond, 2011). Despite this wealth of relevant content, detailed descriptions of real-life logistical challenges (e.g., minimizing disruption to the school day) and corresponding solutions are largely absent from the literature. Even with appropriate administration modes, buy-in from school staff, proper incentives, and methods designed to ensure the security and validity of the data, many additional factors can thwart data collection efforts; the literature offers little guidance on the logistical challenges practitioners face when collecting data in schools or on how to avoid common pitfalls.

Purpose

The purpose of this paper is to help fill that methods-related gap in the literature with practical considerations for school-based survey administration. This paper is intended as a practice-oriented guide for health promotion professionals using school-based surveys to inform and evaluate programs. It assumes readers are familiar with commonly recommended strategies for school-based data collection and are ready to consider administration logistics. For this reason, we share a detailed description of our survey distribution and collection protocol, as well as lessons learned and recommendations for successful data collection.

STRATEGIES: SURVEY PROTOCOL

This paper describes the administration of a school-wide survey to evaluate a school-centered HIV prevention project. The project focused specifically on reaching a group of youth at disproportionate risk for HIV and other STDs: black and Latino adolescent sexual-minority males (Centers for Disease Control and Prevention, 2015). Intervention activities were designed to reach all youth in the schools while including messages or components intended to reach and resonate with the subgroup at highest risk for HIV and STDs. To design an evaluation that could support analyses of the subgroup of interest while protecting their confidentiality, and that could also provide findings for the broader student population, we conducted a census of students in the seven high schools participating in the intervention. In total, we surveyed 11,681 youth; based on district enrollment records at the time of data collection, this represented a response rate of 79.5% of all enrolled students.
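The response rate reported above is the ratio of completed surveys to enrolled students. The Python sketch below makes that arithmetic explicit. The function names are hypothetical, and the enrollment figure it derives (about 14,693 students) is back-calculated from the reported 11,681 surveys and 79.5% rate rather than taken from district records; because 79.5% is itself rounded, true enrollment could differ by several students in either direction.

```python
def response_rate(completed: int, enrolled: int) -> float:
    """Share of enrolled students who returned a survey."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    if not 0 <= completed <= enrolled:
        raise ValueError("completed must be between 0 and enrolled")
    return completed / enrolled


def implied_enrollment(completed: int, reported_rate: float) -> int:
    """Back-calculate enrollment from a reported (rounded) rate.

    Illustrative only: the result is approximate because the rate is rounded.
    """
    return round(completed / reported_rate)


if __name__ == "__main__":
    # Reported figures: 11,681 completed surveys at a 79.5% response rate.
    enrolled = implied_enrollment(11_681, 0.795)
    print(enrolled)                                  # 14693
    print(f"{response_rate(11_681, enrolled):.1%}")  # 79.5%
```

In practice, the denominator should come from district enrollment records for the data collection period, as in the original evaluation, not from a back-calculation.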

Pre-administration Protocol

After securing human subjects and administrative approvals, our team began pre-administration preparations, a key component of any school-based data collection. We hired a local point of contact at the district level to coordinate data collection activities and identified school-level contacts to facilitate preparations among school staff. In this pre-administration phase, three key tasks required attention: teacher/proctor trainings, consent form distribution, and survey packet preparation.

Teacher/proctor trainings.

Our protocol used classroom teachers to proctor the self-administered student survey. To ensure protocols were followed, we conducted in-person trainings in each school for teachers overseeing survey administration 1–5 weeks prior to data collection. Training dates and times were selected by school administrators to ensure alignment with their professional development and staff meeting schedules. Trainings gave teachers detailed information about the survey administration process and parental consent procedures. In addition, an online module allowed on-demand viewing by staff unable to attend in-person trainings.

Consent form distribution.

Teachers were given passive parental consent forms with study information to send home with students once, at least two weeks before the survey; the form included English, Spanish, and Haitian Creole translations. Parents who did not want their children to take the survey were asked to sign the form and indicate they did not consent to participation. Teachers collected any returned consent forms and provided them to the evaluation team. On the day of data collection, teachers distributed decoy booklets instead of surveys to students whose parents had indicated they did not consent to their participation. Decoy booklets were multi-page scannable booklets with a front and back cover identical to the survey but with blank pages instead of questions on the inside. A student receiving one of these booklets could hold it at his/her desk without peers knowing whether he/she had simply chosen not to participate or had not been given parental permission to participate. No teachers reported challenges in using the decoy booklets.

Survey packet preparation.

Prior to survey administration, we prepared an administration packet for each classroom containing scripts for the proctor, survey booklets, decoy booklets, pencils, and a classroom information form. These packets were prepared using classroom-level rosters provided by each school-level contact. The survey administration materials were placed in a manila envelope with a label on top listing the school name, teacher name, class period, and number of students enrolled in that class. Survey packets were delivered to teacher mailboxes at least 1 day prior to survey administration.
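Because each envelope label is derived directly from the classroom-level rosters, label generation is easy to automate. The minimal Python sketch below assumes each roster row carries the four fields printed on the label; the `RosterClass` record, the function names, and the sample rows are hypothetical, and sorting packets by teacher is our assumption about what eases mailbox delivery, not a step described in the original protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RosterClass:
    """One class section from a school-provided roster (hypothetical fields)."""
    school: str
    teacher: str
    period: int
    enrolled: int


def packet_label(c: RosterClass) -> str:
    """Envelope label text: school, teacher, class period, and enrollment."""
    return (
        f"School: {c.school}\n"
        f"Teacher: {c.teacher}\n"
        f"Period: {c.period}\n"
        f"Students enrolled: {c.enrolled}"
    )


def build_labels(roster: list[RosterClass]) -> list[str]:
    """Return labels ordered by school, teacher, then period, so each
    teacher's packets are grouped together for mailbox delivery."""
    ordered = sorted(roster, key=lambda c: (c.school, c.teacher, c.period))
    return [packet_label(c) for c in ordered]


if __name__ == "__main__":
    roster = [
        RosterClass("School A", "Teacher 2", 3, 28),
        RosterClass("School A", "Teacher 1", 1, 30),
        RosterClass("School A", "Teacher 1", 4, 25),
    ]
    for label in build_labels(roster):
        print(label, end="\n\n")
```

The enrollment count on each label also gives the number of survey booklets to place in that envelope, which helps when assembling packets in bulk.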

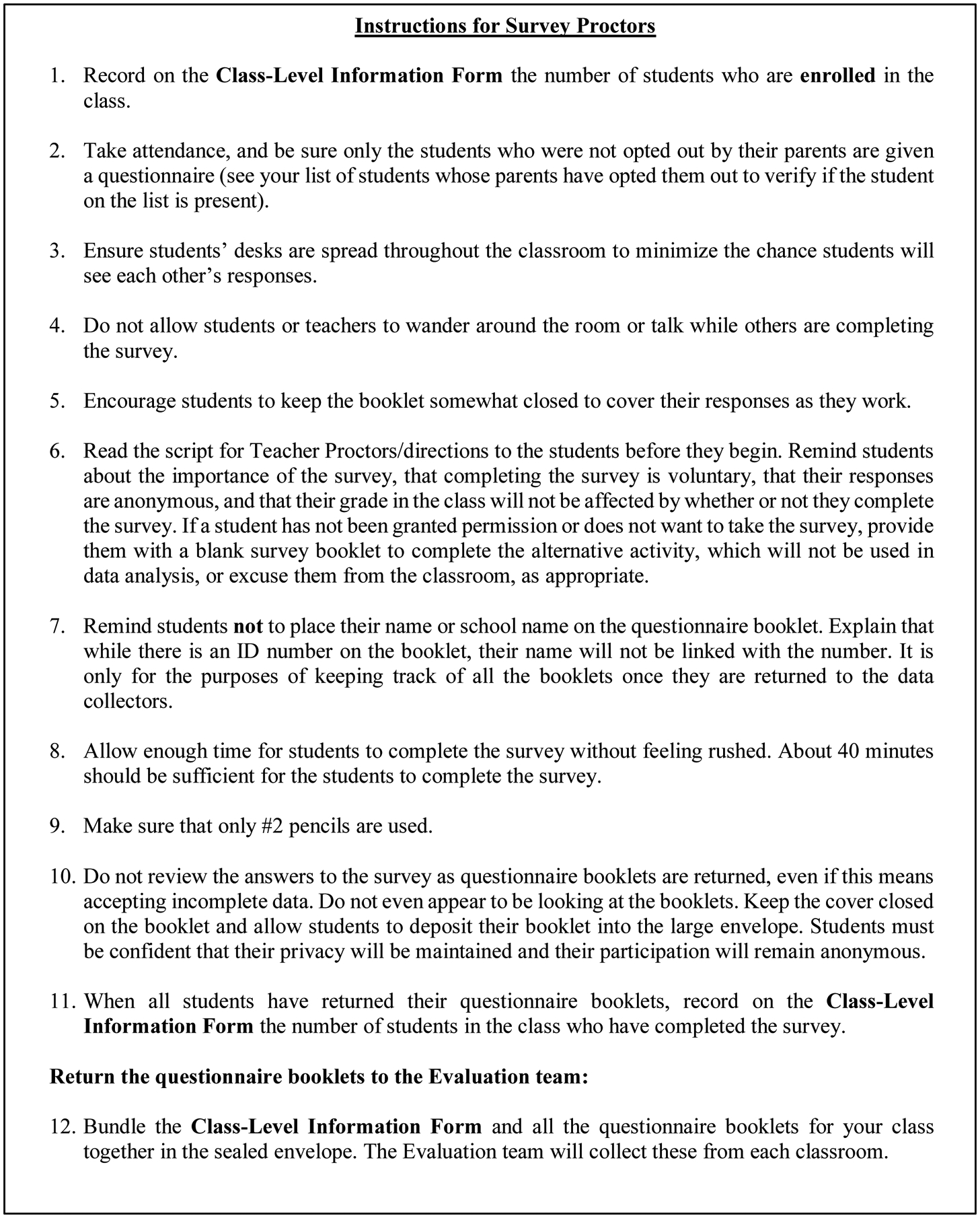

Administration Protocol

We used a 12-step protocol (see Figure 1) for teachers to distribute and collect students’ surveys. On data collection day, the evaluation team was dispersed across campus to ensure teachers had necessary supplies and to answer last-minute questions. We asked each teacher/proctor to record information on a class-level information form. This included the number of students who were enrolled in the class, the number of students present during survey administration, and the number of students whose parents requested they not complete the survey. Teachers were asked to distribute surveys and read the script to students. The script provided an overview of the survey and required student assent language. In addition, students were reminded the surveys were anonymous and they should not write their names on the surveys. As students finished, they placed surveys directly into a large manila envelope. Teachers were reminded not to look at the surveys or even appear to be looking at them. At the end of the class period, evaluation team members went to each classroom to collect envelopes of completed surveys, the class-level information forms, and unused materials.

Figure 1.

Example Protocol for Survey Distribution and Collection: Instructions for Survey Proctors.

Post-administration Processing Protocol

After collecting survey packets, the team immediately gathered in a designated location in the school (e.g., teacher’s lounge, break room) to complete initial post-administration processing. This processing involved ensuring all surveys and completed class-level information forms had been collected from each class on the administration rosters. Materials were boxed and boxes were numbered (e.g., box 1 of 6) before being transported to a secure off-campus location for further processing and final preparation for shipping to the survey scanning facility.
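The accounting step above amounts to comparing the classes on the administration rosters with the packets actually collected. The Python sketch below illustrates one way to express that comparison; the class identifiers, the `ReturnedPacket` record, and the three follow-up categories are hypothetical, and the paper does not describe any software being used for this step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReturnedPacket:
    """Materials collected from one classroom (hypothetical record)."""
    class_id: str
    has_surveys: bool     # envelope of completed surveys came back
    has_info_form: bool   # class-level information form came back


def reconcile(roster_ids: set[str], packets: list[ReturnedPacket]) -> dict[str, list[str]]:
    """Compare collected packets with the administration rosters.

    missing:    classes on the roster with no packet collected
    incomplete: rostered classes whose packet lacks surveys or the form
    unexpected: packets whose class id is not on the roster
    """
    returned = {p.class_id: p for p in packets}
    returned_ids = set(returned)
    return {
        "missing": sorted(roster_ids - returned_ids),
        "incomplete": sorted(
            cid
            for cid, p in returned.items()
            if cid in roster_ids and not (p.has_surveys and p.has_info_form)
        ),
        "unexpected": sorted(returned_ids - roster_ids),
    }


if __name__ == "__main__":
    roster = {"A-101-P3", "A-102-P3", "A-103-P3"}
    packets = [
        ReturnedPacket("A-101-P3", True, True),
        ReturnedPacket("A-102-P3", True, False),
    ]
    print(reconcile(roster, packets))
    # {'missing': ['A-103-P3'], 'incomplete': ['A-102-P3'], 'unexpected': []}
```

Any class appearing under "missing" or "incomplete" corresponds to a teacher the team would need to revisit before materials are boxed.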

STRATEGIES: CHALLENGES AND SOLUTIONS

Based on our experience, we identified six types of challenges most problematic for our school-based survey administration; these included challenges related to consent procedures, scheduling, locating students within the schools, teacher failure to administer the survey, improper administration of the survey, and minimizing disruption. In the next section, we briefly describe each challenge and offer suggestions for addressing that particular challenge. A compilation of these challenges and solutions is provided in Table 1.

Table 1.

Summary of Challenges and Associated Recommendations for Addressing the Challenges.

| Challenge | Recommendation for Addressing the Challenge |
|---|---|
| Lack of clear understanding, and proper use, of parental permission procedures | Provide more detailed information and emphasis on this in teacher/proctor training; label the passive parental permission form as an “opt-out” form |
| Inability to reach all students due to less than ideal scheduling | When setting the dates and times for data collection, ask specifically about activities or circumstances that could affect student attendance, such as early release days, designated study days/periods prior to final exams, senior skip days, planned fire or emergency drills, and field trips (see Figure 2 for a detailed checklist) |
| Not knowing the physical location of all eligible students who are in the school | Ask school staff in advance to inform you of situations in which students would not be in the classrooms designated on the rosters; create alternate plans, such as alternate survey locations, for administering the survey to these students |
| Teacher failure to administer the survey | Provide an online, archived version of the training for teachers/staff who cannot be trained in person; remind staff to view the required training in the days leading up to the survey; train additional district or school staff (or external data collectors, depending on protocol restrictions) to serve as back-up proctors |
| Teachers improperly administering the survey | Clarify in training why adults should not read surveys to students, and provide specific examples of situations in which teachers might be tempted to, but should not, read the surveys to students; offer decoy (blank) survey booklets to students who do not qualify, want, or have permission to take the survey |
| Disruption during immediate post-survey processing | Include the classroom information sheet (or any other critical information required from teachers) on the outside of the materials packet for expedited processing |

Proper Use of Consent Procedures

Challenge.

Appropriate use of consent procedures is critical in any research activity, but in school-based surveys, where participants are typically minors, consent processes (particularly parental permission processes) also introduce opportunities for misunderstanding, which can decrease participation rates. In our evaluation, both teachers and parents misunderstood aspects of the consent process. As previously described, our protocol employed passive parental permission, which meant parents needed to sign the form only if they did not want their children to participate. Unfortunately, a number of parents and teachers appeared to believe signatures were required for participation. In some cases, parents signed forms but did not check the box indicating they withheld consent. Additionally, a few teachers did not administer the survey because they had not received signed forms, even though any student who did not return a signed form should have been given an opportunity to take the survey; this affected less than 1% of the students enrolled at the time of the survey.

Solution.

Labeling the forms as “consent forms” may have created this confusion. To address this, we plan to label passive parental permission forms as “opt-out” forms in future data collections. Although evaluators commonly use “consent” language, parents may have interpreted this label as a request for permission to participate. Furthermore, additional teacher training is needed to ensure teachers understand that students do not need a returned form to take the survey; a signed passive parental permission form withdraws a student from participation rather than permitting it.

Scheduling

Challenge.

Scheduling can be an enormous challenge for any school-based intervention or evaluation. Having anticipated this from our experience in schools, our team worked with school staff to ensure we avoided known conflicts. We collected data in the fall rather than in the spring semester, when standardized testing compounds scheduling challenges, and we consulted school staff and district calendars to plan around dates such as advanced placement testing days, holiday breaks, and teacher professional development days. However, despite our best efforts, we still encountered unexpected schedule-related challenges.

Several of these challenges stemmed from events that were known to school staff but not considered when setting the data collection dates; these could have been avoided. At one school, we learned upon arrival that the school was having an “early release day.” This had not been communicated during the planning process, and it was not a day on which we would have expected an early release (such as the day before a holiday or break). Because of the early release, class periods were shortened, affecting both the time students had to complete the survey and our start time (e.g., third period usually started at 10:00 but now started at 9:20). To compound the problem, an evaluation team member saw students arrive in the parking lot and then leave campus upon hearing it was an early release day. Consistent with that anecdotal observation, school staff acknowledged that absence rates that day were higher than usual.

Other schools had different schedule-related challenges. One school had a planned field trip that resulted in an entire group of students (in this case, the Greek club) being absent during the survey administration. Another school had a planned fire drill immediately before our survey administration, which could have disrupted data collection. More generally, teachers and administrators told us that Mondays, Fridays, and first-period classes tended to have lower attendance.

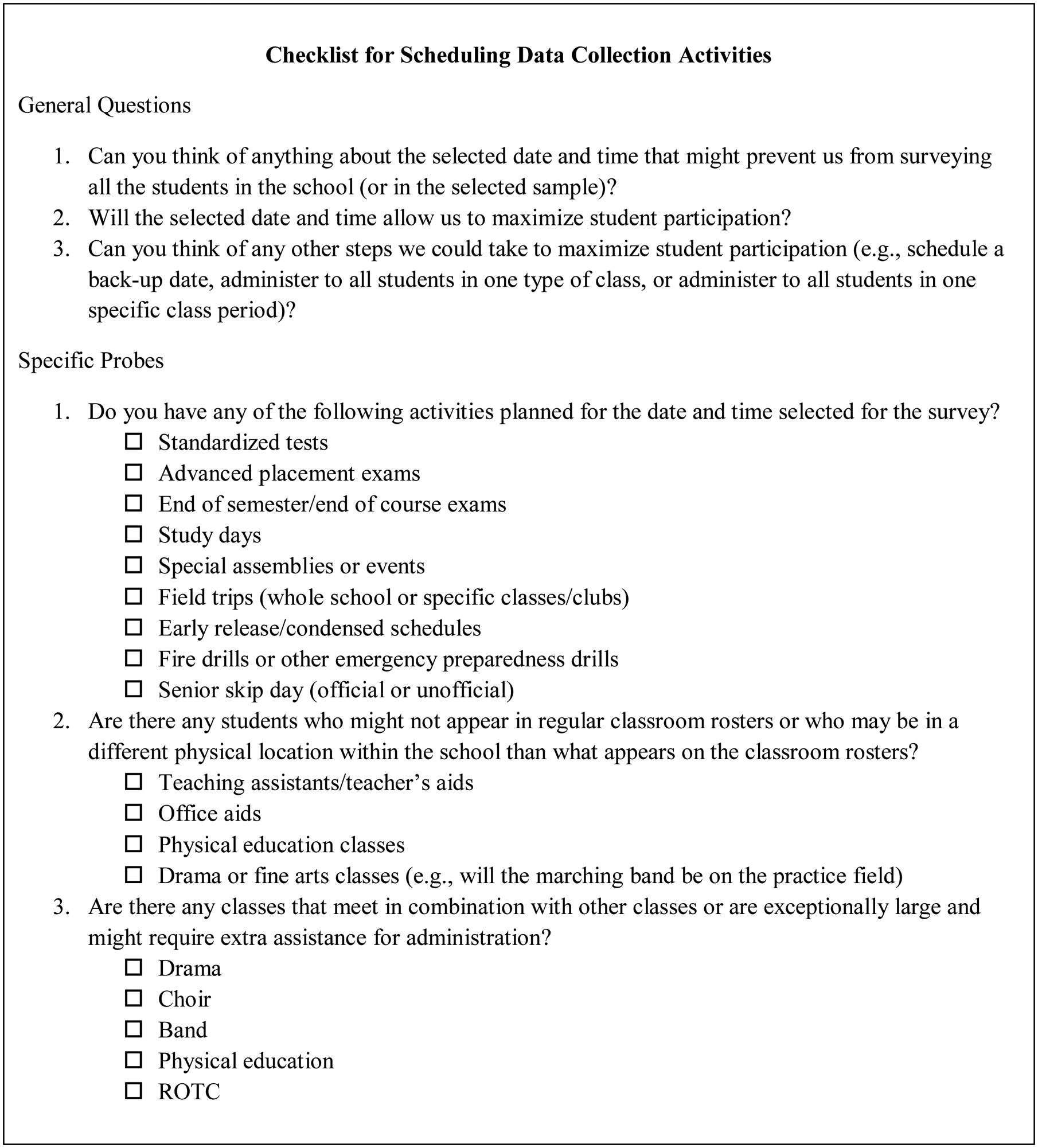

Solution.

In the future, we will ask more detailed questions about potential scheduling conflicts. We have compiled a checklist that prompts us to ask not only broadly about events that might prevent us from reaching all students on the selected dates and times, but also specifically about activities such as early release days, designated study days/periods prior to final exams, senior skip days, planned fire or emergency drills, and field trips (see Figure 2).

Figure 2.

Checklist for Scheduling Data Collection Activities

Finding Students within the School

Challenge.

We intended to survey all students enrolled in mainstream classes. Although we received rosters of all students with their classroom assignments, we noticed during data collection that some groups of students were in campus locations not indicated on the rosters. In one school, for example, we observed students sitting in the guidance counselor’s office during the survey; we later discovered those students were “aides” for that counselor. On the roster, these “aides” appeared to be assigned to a specific classroom, but when we arrived at that room to collect survey materials, the classroom was empty.

Solution.

In the future, we will discuss this type of situation with staff in advance. If we know of students assigned as “aides” or who will not be in their designated location for other reasons, we can plan for reaching them; for example, those students could report to an alternate location to complete the survey.

Teacher Failure to Administer Survey

Challenge.

A few teachers failed to administer the survey during the scheduled class period. Some of those teachers had not attended our teacher/proctor training on survey administration, so they simply did not administer the survey. In other cases, substitute teachers chose not to administer the survey because they had not received any training or information about it. One teacher reported that he had forgotten to distribute consent forms and therefore planned to administer the survey on another date, which our protocol did not allow. These failures to administer the survey lowered our response rate.

Solution.

Fortunately, we have a few suggestions for reducing this type of administration failure in the future. In upcoming data collections, we will remind school staff of the survey a few days in advance and encourage those who missed the in-person training to complete the online training. In addition, we plan to train a small pool of district staff members to serve as stand-in proctors for teachers who did not attend a proctor training and for substitute teachers.

Improper Administration of the Survey

Challenge.

After data collection, we discovered that a few teachers had improperly administered the survey. Our protocol specified that the survey was to be self-administered by the student and therefore should not be administered in classrooms of students who had special needs or did not speak English fluently. In one instance, an evaluation team member discovered a teacher translating the survey for her English-as-a-second-language students. In another instance, a team member observed an adult aide for a student with special needs (in a mainstream classroom) completing the student’s survey: not simply reading or discussing the survey with the student, but actually answering it himself. According to our protocol, these students should not have received the survey, and adult administration of the survey was not allowed. In both instances, the improperly administered surveys were destroyed.

This does, however, raise an important question about which students should take surveys. We elected to provide surveys only in English, partly for consistency with the protocol of the Youth Risk Behavior Survey, a large-scale, nationally representative school-based survey on which we based much of our process, and partly because the students were accustomed to using English for both learning and testing. The number of students excluded by the English-language requirement was relatively small, but it warrants consideration of translated versions of the survey for future administrations. One thing we knew for certain was that, given the very personal nature of our questions (about sexual activity, sexual orientation, sexual identity, bullying, etc.), we did not want students in a position where an adult was reading the survey to them individually and helping select responses. This could inhibit students from answering honestly and put them in an uncomfortable, and possibly risky, situation of disclosing personal and sensitive information.

Solution.

In the future, we will continue limiting data collections to mainstream classrooms, but will work much harder to clarify why adults should not read the survey to students. We will talk specifically with teachers about the risks to the students in such situations. In addition, we will provide this as part of a brief, bulleted overview of the process that will be provided to staff on the day of the survey. We will continue to offer decoy survey booklets to students who do not take the survey for any reason (e.g., parents opt them out, they choose not to participate, or they are not eligible because they cannot participate independently).

Minimizing Disruption

Our aim is always to collect the highest quality data with the least disruption to the flow of the school day. For us, minimizing disruption meant attempting to be in and out of the school as quickly as possible. We think about our time in the school as three phases: (1) preparation, (2) administration, and (3) immediate post-survey processing.

Preparation challenge.

Upon arriving at each school for data collection, we quickly positioned team members around the campus for the most efficient dissemination and collection of survey-related materials. Given that some schools were very large, with thousands of students on the campus, the logistics were complicated. Our biggest challenges in the preparation phase were primarily related to learning and adapting to the layout of the school. Once we arrived, we divided survey administration responsibilities between team members and assigned each person to specific buildings or classrooms for which they would carry out administration-related responsibilities.

Solution.

To reduce time in the school for preparation, it helps to receive a campus map or tour in advance. Although we had some maps before arrival, several were outdated, so before trusting the map completely, it was necessary to have someone familiar with campus verify its accuracy. It is most helpful when maps include specific classroom numbers so that team members can be assigned at the room level as necessary; this is particularly important when some buildings (e.g., auditoriums) have only one class and others have dozens. The best way to maximize use of the team is to determine classroom-level positioning prior to survey administration.

Administration challenge.

In the administration phase, evaluation team responsibilities occurred primarily at the beginning and end of the survey. Although teachers already had survey material packets which included surveys, decoy booklets, and pencils for each class, the team was available to provide extra materials as necessary. After the survey, team members went to each classroom to collect survey materials. We attempted to collect materials by the start of the following class period so that only one class was interrupted for data collection. Unfortunately, large campuses, mixed readiness of teachers to return materials, and complex layouts of some schools resulted in this process running into the next class period for a small number of teachers.

Solution.

After survey administration at the first school, we improved efficiency by establishing a process where teachers called the main office or a designated number if they needed additional materials. A team member stationed at or near the phone would then contact the evaluation team member stationed closest to that teacher. We used cellphones to communicate within the team, but that created its own challenges because of limited cellular service in some areas or buildings. As we were able to work around those glitches, this overall approach worked well, but alternate methods for communication, such as walkie-talkies, might work even better.

In addition, advance provision of classroom-level maps allowed us to divide survey packet collection responsibilities more evenly. Once a team member collected his/her own materials, he/she would assist another team member who had not finished. This sped up the collection process and reduced the risk of disrupting an additional class period.

Post-survey processing challenge.

In post-survey processing, we accounted for all materials (completed surveys, decoy booklets, and completed classroom information forms) for every class. At the initial schools, we often found incomplete classroom information forms or discovered that forms were missing altogether, and we had to revisit teachers to retrieve the remaining materials. We became more efficient over time, but at the first school, post-survey processing occupied our team, which had filled the teachers’ lounge, for more than an hour. Although this did not interrupt classroom time for most teachers, we still wanted to reduce our intrusion on the normal routine and physical space of the school.

Solution.

As we gained experience in data collection, we became more efficient in immediate post-survey processing. To save time, we changed our administration materials after the first few schools, printing the classroom information sheet on a large sticker affixed to the front of each survey packet envelope. This allowed us to see at a glance whether the numbers for class enrollment, absences, and parental opt-outs had been provided, and it substantially shortened processing time.
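Moving the classroom information sheet onto the envelope turns the completeness check into a quick visual scan of a few numbers. The same check can be written in a few lines of code, for instance if counts are later keyed into a tracking spreadsheet. In the Python sketch below, the `ClassInfoSheet` record, its field names, and the consistency rules (e.g., students present cannot exceed enrollment) are hypothetical assumptions rather than rules stated in the original protocol; absences are derived as enrolled minus present, using the counts recorded on the class-level information form.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ClassInfoSheet:
    """Counts a teacher records on the classroom information sticker.

    None means the field was left blank (hypothetical representation).
    """
    enrolled: Optional[int]
    present: Optional[int]
    opt_outs: Optional[int]


def sheet_problems(sheet: ClassInfoSheet) -> list[str]:
    """Return problems that would send a team member back to the teacher."""
    problems = [
        f"{name} missing"
        for name in ("enrolled", "present", "opt_outs")
        if getattr(sheet, name) is None
    ]
    if problems:
        return problems  # consistency checks need every field filled in
    if min(sheet.enrolled, sheet.present, sheet.opt_outs) < 0:
        problems.append("negative count")
    if sheet.present > sheet.enrolled:
        problems.append("present exceeds enrolled")
    if sheet.opt_outs > sheet.enrolled:
        problems.append("opt_outs exceeds enrolled")
    return problems


def absences(sheet: ClassInfoSheet) -> int:
    """Absences implied by a complete sheet: enrolled minus present."""
    return sheet.enrolled - sheet.present
```

For example, `sheet_problems(ClassInfoSheet(30, None, 2))` flags the blank attendance count, which is exactly the kind of gap that previously required a return trip to the classroom.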

CONCLUSIONS

School-based surveys provide an excellent way to gather critical data from students, but they come with a number of challenges. Our experience revealed challenges that were both systematic (e.g., scheduling, post-survey processing) and sporadic (e.g., specific teachers failing to proctor the survey) in nature. Although many of our challenges were more pronounced because our survey was conducted school-wide, we believe they illustrate important considerations and possible solutions for any health promotion professional conducting school-based surveys of students. With a clearer understanding of these challenges, health promotion professionals can adopt our proposed solutions to maximize response rates and the quality and representativeness of their data.

ACKNOWLEDGEMENTS

This evaluation was supported by contract number 200-2009-30503 and task order number 200-2014-F-59670 from the Centers for Disease Control and Prevention, Division of Adolescent and School Health. The authors would like to thank the students and staff of the participating school district for their cooperation in this data collection.

Footnotes

HUMAN SUBJECTS APPROVAL STATEMENT

The evaluation described in this manuscript was reviewed and approved by ICF International’s Institutional Review Board for the Protection of Human Subjects and the Research Review Office of the participating school district.

DISCLAIMER

The findings and conclusions in the manuscript are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

Contributor Information

Catherine N. Rasberry, Division of Adolescent and School Health, Centers for Disease Control and Prevention, 1600 Clifton Road NE, Mailstop E-75, Atlanta, GA 30329-4027.

India Rose, ICF International, 3 Corporate Square, Suite 370, Atlanta, Georgia 30329.

Elizabeth Kroupa, ICF International, 3 Corporate Square, Suite 370, Atlanta, Georgia 30329.

Andrew Hebert, ICF International (former), ICF International, 3 Corporate Square, Suite 370, Atlanta, Georgia 30329.

Amanda Geller, ICF International, 3 Corporate Square, Suite 370, Atlanta, Georgia 30329.

Elana Morris, Division of HIV Prevention, Centers for Disease Control and Prevention, 1600 Clifton Road NE, Mailstop E-40, Atlanta, GA 30329-4027.

Catherine A. Lesesne, ICF International, 3 Corporate Square, Suite 370, Atlanta, Georgia 30329.

REFERENCES

- Anatale K, & Kelly S (2015). Factors influencing adolescent girls’ sexual behavior: A secondary analysis of the 2011 Youth Risk Behavior Survey. Issues in Mental Health Nursing, 36, 217–221. doi: 10.3109/01612840.2014.963902

- Aten MJ, Siegel DM, & Roghmann KJ (1996). Use of health services by urban youth: A school-based survey to assess differences by grade level, gender, and risk behavior. Journal of Adolescent Health, 19(4), 258–266. doi: 10.1016/S1054-139X(96)00029-8

- Brener ND, Eaton DK, Kann L, Grunbaum JA, Gross LA, Kyle TM, & Ross JG (2006). The association of survey setting and mode with self-reported health risk behaviors among high school students. Public Opinion Quarterly, 70(3), 354–374. doi: 10.1093/poq/nfl003

- Brener ND, Eaton DK, Kann LK, McManus TS, Lee SM, Scanlon KS, … O’Toole TP (2013). Behaviors related to physical activity and nutrition among U.S. high school students. Journal of Adolescent Health, 53(4), 539–546. doi: 10.1016/j.jadohealth.2013.05.006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Centers for Disease Control and Prevention (CDC). (2013). Methodology of the Youth Risk Behavior Surveillance System - 2013. Morbidity and Mortality Weekly Report, 62(1), 1–20. [PubMed] [Google Scholar]

- Centers for Disease Control and Prevention (CDC). (2015). The young men who have sex with men (YMSM) project: Reducing the risk of HIV/STD infection. Retrieved from http://www.cdc.gov/healthyyouth/disparities/ymsm/

- Centers for Disease Control and Prevention (CDC). (2016, April 20). Global School-based Student Health Survey (GSHS). Retrieved November 19, 2016 from http://www.cdc.gov/gshs/background/

- Denniston MM, Brener ND, Kann L, Eaton DK, McManus T, Kyle TM, … Ross JG (2010). Comparison of paper-and-pencil versus Web administration of the Youth Risk Behavior Survey (YRBS): Participation, data quality, and perceived privacy and anonymity. Computers in Human Behavior, 26(5), 1054–1060. [Google Scholar]

- Dent CW, Sussman SY, & Stacy AW (1997). The impact of written consent policy on estimates from a school-based drug use survey. Evaluation Review, 21(6), 698–712.

- dos Santos SJ, Hardman CM, Barros SSH, Santos C. d. F. B. F., & Barros M. V. G. d. (2015). Association between physical activity, participation in physical education classes, and social isolation in adolescents. Jornal de Pediatria, 91(6), 543–550. doi: 10.1016/j.jped.2015.01.008

- Eaton DK, Brener ND, Kann L, Denniston MM, McManus T, Kyle TM, … Ross JG (2010). Comparison of paper-and-pencil versus Web administration of the Youth Risk Behavior Survey (YRBS): Risk behavior prevalence estimates. Evaluation Review, 34(2), 137–153. doi: 10.1177/0193841X10362491

- Eaton DK, Lowry R, Brener ND, Grunbaum JA, & Kann L (2004). Passive versus active parental permission in school-based survey research: Does the type of permission affect prevalence estimates of risk behaviors? Evaluation Review, 28(6), 564–577. doi: 10.1177/0193841X04265651

- Esbensen F-A, Miller MH, Taylor TJ, He N, & Freng A (1999). Differential attrition rates and active parental consent. Evaluation Review, 23(3), 316–335.

- Hamilton HA, Ferrence R, Boak A, O’Connor S, Mann RE, Schwartz R, & Adlaf EM (2015). Waterpipe use among high school students in Ontario: Demographic and substance use correlates. Canadian Journal of Public Health, 106(3), e121–e126. doi: 10.17269/cjph.106.4764

- Ieorger M, Henry KL, Chen PY, Cigularov KP, & Tomazic RG (2015). Beyond same-sex attraction: Gender-variant-based victimization is associated with suicidal behavior and substance use for other-sex attracted adolescents. PLoS ONE, 10(6), 1–16. doi: 10.1371/journal.pone.0129976

- Ji PY, Pokorny SB, & Jason LA (2004). Factors influencing middle and high schools’ active parental consent return rates. Evaluation Review, 28(6), 578–591.

- Kristjansson AL, Sigfusson J, Sigfusdottir ID, & Allegrante JP (2013). Data collection procedures for school-based surveys among adolescents: The Youth in Europe Study. Journal of School Health, 83(9), 662–667.

- Lowry R, Michael S, Demissie Z, Kann L, & Galuska DA (2015). Associations of physical activity and sedentary behaviors with dietary behaviors among US high school students. Journal of Obesity. doi: 10.1155/2015/876524

- Madge N, Hemming PJ, Goodman A, Goodman S, Stenson K, & Webster C (2011). Conducting large-scale surveys in secondary schools: The case of the Youth on Religion (YOR) project. Children and Society, 26, 417–429. doi: 10.1111/j.1099-0860.2011.00364.x

- Nathanson L, McCormick M, Kemple JJ, & Sypek L (2013). Strengthening Assessments of School Climate: Lessons from the NYC School Survey. Retrieved November 20, 2016 from New York: http://files.eric.ed.gov/fulltext/ED543180.pdf

- Oregon Health Authority. (n.d.). Oregon Healthy Teens Survey. Retrieved July 2, 2015 from https://public.health.oregon.gov/BirthDeathCertificates/Surveys/OregonHealthyTeens/Pages/index.aspx

- Raghupathy S, & Hahn-Smith S (2013). The effect of survey mode on high school risk behavior data: A comparison between web and paper-based surveys. Current Issues in Education, 16(2), 1–9.

- Smit F, Zwart WD, Spruit I, Monshouwer K, & Ameijden EV (2002). Monitoring substance use in adolescents: School survey or household survey? Drugs: Education, Prevention and Policy, 9(3), 267–274.

- Testa AC, & Coleman LM (2006). Accessing research participants in schools: A case study of a UK adolescent sexual health survey. Health Education Research, 21(4), 518–526. doi: 10.1093/her/cyh078

- University of Michigan. (2014, December 16). Monitoring the Future: A continuing study of American youth. Retrieved April 22, 2015 from http://www.monitoringthefuture.org/

- WestEd. (2016). California Healthy Kids Survey. Retrieved November 21, 2016 from https://chks.wested.org/

- White DA, Morris AJ, Hill KB, & Bradnock G (2007). Consent and school-based surveys. British Dental Journal, 202(12), 715–717. doi: 10.1038/bdj.2007.532

- Wyrick DL, & Bond L (2011). Reducing sensitive survey response bias in research on adolescents: A comparison of web-based and paper-and-pencil administration. American Journal of Health Promotion, 25(5), 349–352. doi: 10.4278/ajhp.080611-ARB-90

- Yusoff F, Saari R, Naidu BM, Ahmad NA, Omar A, & Aris T (2014). Methodology of the National School-based Health Survey in Malaysia, 2012. Asia-Pacific Journal of Public Health, 26(5S), 9S–17S. doi: 10.1177/1010539514542424