Big data may be the future of medicine, but it is also its past. In 1964, the inventor Vladimir Zworykin warned that medical data were accumulating at a pace exceeding physicians’ cognitive capacity. Not only was the amount of information available in hospital records and medical literature “far too large to be encompassed by the memory of any single man,” Zworykin declared, “but the conventional techniques of abstracting, summarizing, and indexing cannot provide the physician with the needed knowledge in a form readily accessible in his practice.” Fortunately, the digital computer — which had shrunk from the room-sized ENIAC of the 1940s to the refrigerator-sized IBM mainframe of the 1950s to the “minicomputers” of the 1960s — could deploy algorithmic search techniques at superhuman speeds. “It is thus quite reasonable,” he concluded, “to think of electronic memories as effective supplements and extension of the human memory of the physician.”1

Half a century ago, physicians and engineers shared a dream that computers wielding ultravast memories and ultrafast processing times could deduce diagnoses, store medical records, and circulate information.2 Though today’s world of precision medicine, neural nets, and wearable technologies involves very different objects, networks, and users than the world of “mainframe medicine” did, many fundamental problems remain unchanged — and not all challenges of digital medicine can be resolved by new technologies alone.3

Electronic diagnosis

In the late 1950s, physicians at Cornell Medical School and Mt. Sinai Hospital and engineers at IBM created a mechanical diagnostic aid inspired by the diagnostic slide rule of British physician F.A. Nash, in which a series of cardboard strips representing symptoms and signs could be lined up to produce a differential diagnosis.4 Replacing Nash’s slide rule with a deck of punch cards containing details from the clinical history, physical examination, peripheral blood smear, and bone marrow biopsy, the team tried to build a machine that could calculate all hematologic diagnoses.
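The alignment principle behind Nash’s strips can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not a description of Nash’s actual apparatus or of the Cornell–IBM punch cards: each strip is represented as the set of diseases it marks, and lining strips up is modeled as counting how many of the chosen strips mark each disease. The table `STRIPS`, its symptom and disease entries, and the function `differential` are all hypothetical.

```python
from collections import Counter

# Hypothetical symptom "strips": each strip lists the diseases it is marked for.
# Illustrative entries only; not taken from Nash's apparatus or the Cornell-IBM cards.
STRIPS: dict[str, set[str]] = {
    "fatigue": {"iron-deficiency anemia", "pernicious anemia", "leukemia"},
    "pallor": {"iron-deficiency anemia", "pernicious anemia", "leukemia"},
    "glossitis": {"iron-deficiency anemia", "pernicious anemia"},
    "paresthesia": {"pernicious anemia"},
}


def differential(symptoms: list[str]) -> list[tuple[str, int]]:
    """Model "lining up" the chosen strips: count how many strips mark each
    disease and return the diseases ranked from most to fewest marks."""
    marks = Counter()
    for symptom in symptoms:
        marks.update(STRIPS[symptom])  # one mark per disease on this strip
    return marks.most_common()


findings = ["fatigue", "pallor", "glossitis", "paresthesia"]
for disease, count in differential(findings):
    print(f"{disease}: marked on {count} of {len(findings)} strips")
```

With this invented table, pernicious anemia ranks first (marked on all four strips), followed by iron-deficiency anemia (three) and leukemia (two). A simple tally like this treats every finding as equally informative and ignores how common each disease is.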

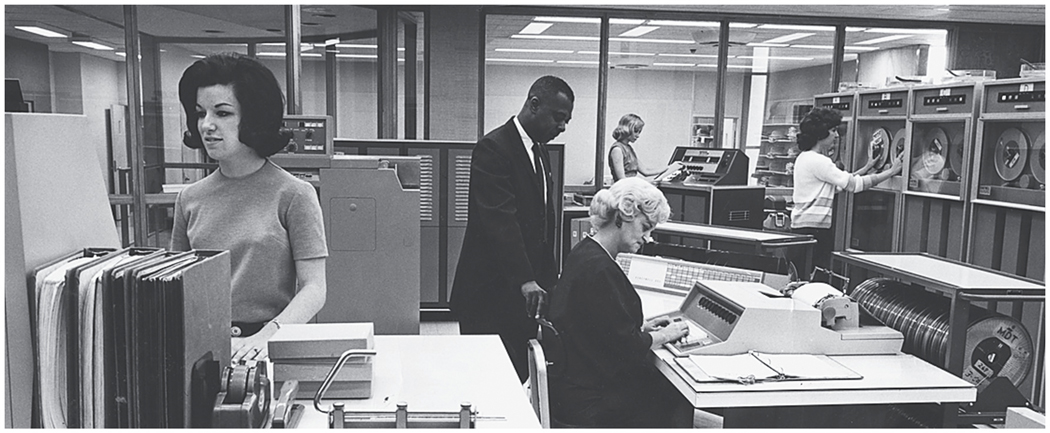

It was a short step from punch cards to the new IBM digital computer, which the Cornell team saw as a vehicle for more open-ended algorithmic machine learning: information gathered in one analysis could automatically feed back and change the conditions of future analyses. Presenting their work at the first IBM Medical Symposium in 1959, hematologist B.J. Davis claimed that computers could use “raw data,” rather than textbooks, to diagnose disease. After being fed hematologic diagnostic data, their IBM 704 (Fig. 1) lumped and split the symptoms and signs into clusters. Reassuringly, the resulting diagnoses largely matched those in textbooks.5 Davis and his colleagues saw a future for machine learning in medicine: using a series of feedback loops, machines could organize and modify their own tables of disease on the basis of new data, perhaps more accurately than physicians could.6

Figure 1.

IBM 704 Computer.

Another Cornell team, led by psychiatrist Keeve Brodman, developed a computerized diagnostic system tested on nearly 6000 outpatients.7 By 1959, Brodman believed that “the making of correct diagnostic interpretations of symptoms can be a process in all aspects logical and so completely defined that it can be carried out by a machine.”8 At Georgetown and the University of Rochester, Robert Ledley and Lee Lusted used computational systems to introduce Bayesian logic into clinical diagnosis. Others followed suit, working to develop Bayesian systems that could diagnose problems ranging from congenital heart disease to causes of acute abdominal pain.9,10
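To make concrete what “Bayesian logic” means for diagnosis, here is a minimal Python sketch. It is not a reconstruction of Ledley and Lusted’s method or of any historical program. It assumes, as a simplification, that findings are conditionally independent given the disease (the so-called naive Bayes assumption) and that the candidate diagnoses are exhaustive and mutually exclusive. The function name `posterior`, the two diagnoses, and every probability are hypothetical, chosen only for illustration.

```python
from math import prod


def posterior(priors: dict[str, float],
              likelihoods: dict[str, dict[str, float]],
              findings: list[str]) -> dict[str, float]:
    """Return P(disease | findings) for each candidate disease.

    Bayes' theorem under a naive-Bayes simplification: findings are assumed
    conditionally independent given the disease, so P(findings | disease) is
    the product of the per-finding likelihoods. The candidate diseases are
    assumed to be exhaustive and mutually exclusive.
    """
    unnormalized = {
        disease: priors[disease] * prod(likelihoods[disease][f] for f in findings)
        for disease in priors
    }
    evidence = sum(unnormalized.values())  # P(findings), summed over diseases
    return {disease: p / evidence for disease, p in unnormalized.items()}


# Hypothetical numbers for illustration only; not from any historical system.
priors = {"appendicitis": 0.3, "nonspecific abdominal pain": 0.7}
likelihoods = {
    "appendicitis": {"right lower quadrant pain": 0.8, "fever": 0.6},
    "nonspecific abdominal pain": {"right lower quadrant pain": 0.2, "fever": 0.1},
}

post = posterior(priors, likelihoods, ["right lower quadrant pain", "fever"])
for disease, p in sorted(post.items(), key=lambda item: item[1], reverse=True):
    print(f"{disease}: {p:.2f}")
```

With these invented numbers, a patient with both findings moves from a 30% prior probability of appendicitis to roughly 91%. The independence assumption keeps the arithmetic tractable, but real clinical findings are often correlated, which can make such estimates overconfident.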

Researchers aiming to teach diagnosis to these “electronic brains” were divided over whether computers should replicate the diagnostic practices of master physicians’ “nonelectronic brains” or follow distinct logical pathways of their own. “There is no reason to make the machine act in the same way the human brain does,” IBM’s Robert Taylor suggested, “any more than to construct a car with legs to move from place to place.”11,12 Yet the prospect of a diagnostic process opaque to the human mind was deeply unsettling to many people.

Attempts to automate diagnostic reasoning also implicitly assigned value to different kinds of medical work, separating monotonous, mechanizable processes from those that were complex and inextricably human. Advocates argued that medical computing could ease the growing physician shortage and allow doctors to focus more on human interaction.13 Critics feared that computers would disrupt the personal bonds between doctors and patients,15,16 a worry Zworykin characterized as “the misconception that we were trying to replace the doctor by cold, hard, calculating machine.”14 Even as some physicians hailed the computer’s rote nature as a solution to errors arising from being “too human,” others warned that the computer would become an additional source of error, whether owing to bugs in its code or to human biases underlying that code.17

As Medical World News explained in 1967, “Diagnosis requires judgment, and a computer must rely on its human programmers to supply that judgment. The ‘electronic brain’ is no brain at all. It has been called ‘an idiot machine,’ capable of generating errors at the same fabulous rate at which it generates correct answers.”18 Today, there are similar fears that machine learning might harden errors and biases into rhythms of care instead of eliminating lapses in human judgment.19 Perhaps the most sobering appraisals of early computerized diagnostic systems came from engineers. By 1969, the Cornell team conceded that the most machine learning could promise was an algorithm for hematologic diagnoses (a relatively easy diagnostic area to conceive as a logical tree) that matched existing textbooks. Ironically, even some of the greatest successes of 1960s diagnostic computing, such as automated interpretation of electrocardiograms, have become so naturalized that they are no longer thought of as computer diagnosis.

Pioneers of computerized diagnosis ran up against the inherent complexities of clinical medicine. Zworykin’s team, for example, grew exasperated by uncertainty and medical heterogeneity. In seeking raw data for their system by reviewing the medical literature, they encountered a messy world of information in which researchers and clinicians reported data according to their own preferences, particularities, and biases. Perhaps more concerning, if medical experts themselves often disagreed about correct diagnoses, how could one know when the computer was right? Developers found it difficult to define gold standards against which to evaluate their systems’ accuracy.21 Such challenges persist today, both in designing adequate evaluation studies and in generating diagnostic “ground truth” for machine-learning algorithms.22 Some failings of early computer diagnosis stemmed from the technological and informational limitations of the time, but many fundamental challenges identified back then have yet to be overcome.

Electronic records

Already by the late 1950s, digital enthusiasts in the American Hospital Association and elsewhere promised that the computer would be more efficient than paper for storing and retrieving clinical information. As mainframes entered hospitals first in billing and accounting offices and then in clinical laboratories, computers’ role in forming a “total hospital information system” seemed both appealing and inevitable.

In 1958, 40 IBM mainframes were installed in U.S. medical schools, and it seemed as if all the elements for uptake of electronic medical records would soon be in place. A group of physicians and engineers at Tulane boasted by 1960 that they had generated a complete patient record on magnetic tape, storing hundreds of patients’ records on a single reel.23 Once coded in magnetic form, the electronic record turned patients’ experience into vast troves of digital data, which could be searched using a protocol called the Medical PROBE.24 The projected value to treating physicians and clinical investigators was immense, permitting “easier and more effective utilization” of medical records and turning “man-months” of coding case histories into “machine-minutes.”25,26 IBM’s Emanuel Piore argued in 1960 that a network of electronic records would soon spread between clinics to link doctors and patients nationwide, and early pilot studies suggested that this vision might soon be realized.27 The boldest of these was the Hospital Computer Project of Massachusetts General Hospital (MGH), launched in 1962 as a partnership involving the National Institutes of Health (NIH), the American Hospital Association, MGH, and the computer applications firm Bolt, Beranek, and Newman (BBN). The effort was spearheaded by BBN’s Jordan Baruch, who sought to build better interfaces to attain “doctor–machine symbiosis.”28,29

Baruch designed a time-sharing computer system to generate an “on-line” electronic medical record connecting dial-up terminals throughout MGH with a mainframe across the river in Cambridge. Though the physician and computer scientist G. Octo Barnett was enthusiastic when he was appointed to the MGH side of the project in 1964, he soon grew concerned that his BBN colleagues prioritized the conceptual basis of their computer network over the pragmatic use of computers in the hospital’s day-to-day functioning. Systems broke down under the strain of patient care; the electronic medical record was offline for hours at a time. “Demonstration programs, however impressive, and promises for the future, however grandiose,” Barnett later noted, “could not substitute for the reality that all our computer programs could only function in the hothouse atmosphere of parallel operation for several days to several weeks and operated by our own research staff.”30

In 1968, Barnett lamented the “awesome gap between the hopes or claims and the realities of the situation” and declared the fully automated hospital “as much a mirage today as it was ten years ago.”31 The project was called off, and though Barnett went on to build an influential medical computing lab at MGH, the BBN–MGH collaboration was widely perceived as a failure. Beyond technological limitations, Barnett blamed a lack of mutual understanding among physicians and engineers, hospitals and computer firms, and the multiple stakeholders required to produce institutional change at a hospital. (At BBN, meanwhile, the demonstration of the online computer network was considered a success, and it helped the company win a contract from the Advanced Research Projects Agency, later renamed DARPA, to build ARPANET, forerunner of the Internet.32) As hospitals retreated from physician-designed “total information systems,” the introduction of computers into patient care became piecemeal, more successful with billing, admissions, and clinical laboratories than with written patient records.

Even as electronic health records (EHRs) have eclipsed paper records in U.S. health care in recent years, studies document lingering clinician unease with the integration of computer systems into the clinical world. The promise of producing new, life-saving forms of health data has yet to be fully realized,33 yet the EHR has altered doctor–patient relationships, increased the amount of time clinicians spend documenting their efforts, and been identified as a leading source of physician burnout. Today, as in the past, medical records serve many functions beyond registering patient information.34 But early EHRs were designed with billing offices, laboratories, and physicians in mind; patients’ ability to access their own medical information was not a concern for the first (and several subsequent) generations of technology. Although records can now be shared across sites and regions that use the same EHR system, widespread interoperability of data remains elusive, as does portability of records for patients.35

Electronic information

Perhaps the most successful efforts to digitize medicine were achieved in a place typically considered a citadel of paper: the library. Here, big data was seen first as crisis, and only later as opportunity. NIH director James Shannon pointed out in 1956 that physicians could not keep up with the exponential growth of published medical research.36 Computers presented the most promising solution.37

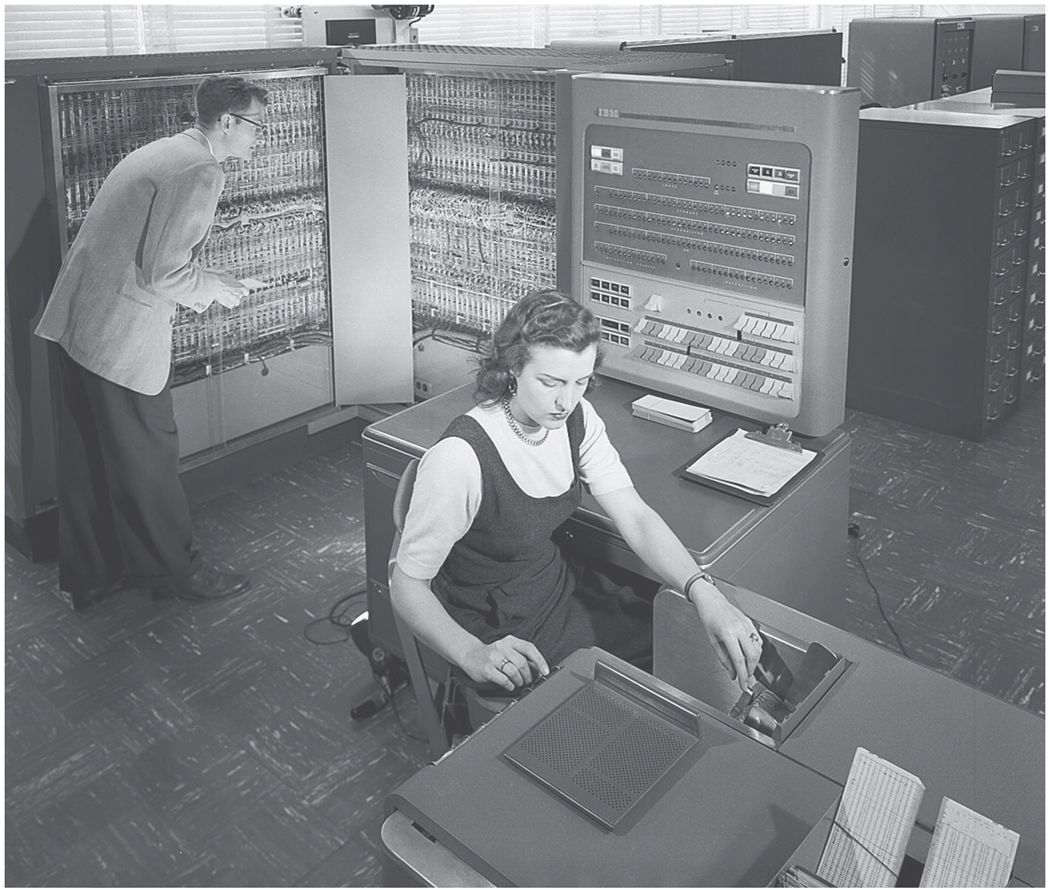

The National Library of Medicine (NLM) sought to use digital computers to automate the Index Medicus, the paper inventory that had been tracking medical knowledge since the Civil War. Starting in 1958, the NLM Index Mechanization Project placed photographs of text on IBM punch cards, and by 1960 the project could sort and store 1 million cards in 2 weeks. By January 1964, all 1963 journal material from the paper Index Medicus had been transferred by Flexowriter to punched paper tape for input into a new Honeywell 800 computer system (Fig. 2). The resulting Medical Literature Analysis and Retrieval System (MEDLARS) was the first large-scale project to provide electronic bibliographic access and copies of documents by computer.38

Figure 2.

Honeywell Computer of MEDLARS I.

MEDLARS sparked questions about computers’ ability to substitute for human medical information specialists. In a study of how effectively MEDLARS captured the complexity of the medical literature,39 F.W. Lancaster found that the system searched with 58% recall and 50% precision, about as effective as a human indexer. But his study also raised concern about how humans could properly evaluate computers’ work, when the whole point of involving computers was that the data were too vast for human labor to process.40
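Lancaster’s two measures have standard definitions: recall is the share of all relevant documents that a search retrieves, and precision is the share of retrieved documents that are relevant. The short Python sketch below computes both for a single search. The function name `recall_precision` and the document counts are hypothetical, chosen only so that the arithmetic reproduces the 58% and 50% figures; they are not drawn from Lancaster’s data.

```python
def recall_precision(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (recall, precision) for one search.

    recall    = relevant documents retrieved / all relevant documents
    precision = relevant documents retrieved / all documents retrieved
    """
    hits = retrieved & relevant
    recall = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    return recall, precision


# Hypothetical search: 100 relevant citations exist (doc0..doc99); the system
# returns 116 citations (doc42..doc157), of which 58 are relevant.
relevant = {f"doc{i}" for i in range(100)}
retrieved = {f"doc{i}" for i in range(42, 158)}
r, p = recall_precision(retrieved, relevant)
print(f"recall={r:.0%} precision={p:.0%}")
```

Computing recall requires knowing every relevant document in the collection, which is precisely the judgment that becomes hard for humans to make once a collection is too large to review by hand.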

The continuing growth of medical information soon surpassed even MEDLARS’ collating ability. By the mid-1960s, the Honeywell machinery was obsolete: MEDLARS searches were supposed to take no more than 2 days, but the average request took 2 weeks to fill.41 By the time its faster successor, MEDLARS II, was implemented in the early 1970s, expanded teletype access to medical literature enabled the planning of MEDLINE, forerunner of today’s universally accessible online index of medical literature, PubMed.

It is an irony well known to medical librarians that their own efforts in digitizing medical information helped produce a world in which physicians can complete training without ever entering a medical library. By the close of the 20th century, MEDLINE had become a gold standard for online bibliographic services in medical and nonmedical fields. Yet today, researchers searching the clinical literature often conflate PubMed with the sum total of medical knowledge, without realizing that its contents originally dated back only to 1963, when MEDLARS was established. This historical artifact has lasting effects, most gravely manifest in the 2001 death of Ellen Roche, a healthy volunteer in a trial of inhaled hexamethonium. Roche’s death might have been prevented had the investigators located published evidence of the drug’s adverse effects — evidence widely circulated in the 1950s.42

Conclusions

In the past half-century, the social and cultural context for digital medicine has changed as much as the technologies themselves. The shift from paternalistic to egalitarian approaches to care, the move from a private-practice orientation toward absorption into larger health systems, and changing understandings of privacy in “digital native” generations contribute to vastly different economic and political conditions for implementing digital medicine. Yet the promise of big data in medicine today recalls the optimism that accompanied the computer’s introduction into medicine 50 years ago. At every scale of “big data,” computers have offered elegant technological fixes to social, professional, and informational challenges, while introducing new problems.43 Then as now, computers both offered a means to transcend the human mind’s limitations in a world of expanding information and generated concern about entrusting life-and-death matters to unseen algorithms.

Exploring the history of these three intersecting domains (diagnostic algorithms, electronic medical records, and medical informatics) can help us think more carefully about the origins, complexities, and contingencies of the data that drive clinical practice and medical research. Zworykin and his contemporaries did not stumble upon fully formed medical data that were ready for use; they created these data through historically contingent processes. The challenges and decisions they faced in collecting, managing, cleaning, curating, and interpreting medical information in silico endure 50 years later. If today we talk about big data as part of the natural or built environment, we miss the continuing roles that engineers, physicians, patients, and others play in building, renovating, and inhabiting this digital architecture.

We also miss the continuity of relevant problems and questions for medicine. What happens when human intelligence cannot comprehend the pathways of computer decisions?44 How does computerization of medical information change physicians’ roles or replicate the foibles of human diagnostic reasoning? What unintended displacements and transformations will computerized medicine produce next? These are not new questions, nor are they resolved by new technology.

Zworykin’s 1960 prediction that all electronic medical records for all humans on Earth could be stored in a memory unit of 10¹³ bits may seem comical now that a single PET scan can require 10⁸ bits.45 Yet as the scale of big-data medicine has changed, many of the hopes, fears, and challenges have not.

Acknowledgments

Grant funding: NLM G13 (Dr. Greene),

Footnotes

Disclosure forms provided by the authors are available at NEJM.org.

Conflicts of Interest: None.

References

- 1. Zworykin VK. New frontiers in medical electronics: electronic aids for medical diagnosis. Paper delivered at the 39th Anniversary Congress, Pan-American Medical Association, February 19, 1964, p. 3. Hagley Museum & Library, Zworykin Vladimir K. Papers (VZP), 1908–1981, David Sarnoff Research Center records (Accession 2464.09), box M&A 78, folder 59.

- 2. November JA. Biomedical computing: digitizing life in the United States. Baltimore, MD: Johns Hopkins University Press, 2012.

- 3. Yu KH, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng 2018;2:719–731.

- 4. Nash FA. Differential diagnosis: an apparatus to assist the logical faculties. Lancet 1954;263:874–875, p. 874.

- 5. Davis BJ. The application of computers to clinical medical data (including machine demonstration). In: Proceedings of the 1st IBM Medical Symposium. Endicott, NY: IBM, 1959:179–184, p. 185.

- 6. Lipkin M. Correlation of data with a digital computer in the differential diagnosis of hematological diseases. IRE T Med Electron 1960;ME-7:243–246, p. 246.

- 7. Brodman K, van Woerkom AJ. Computer-aided diagnostic screening for 100 common diseases. JAMA 1966;197:901–905.

- 8. Brodman K, van Woerkom AJ, Erdmann AJ Jr, Goldstein LS. Interpretation of symptoms with a data-processing machine. AMA Arch Intern Med 1959;103:116–122, p. 116.

- 9. Warner HR, Toronto AF, Veasy LG. Experience with Bayes’ theorem for computer diagnosis of congenital heart disease. Ann NY Acad Sci 1964;115:558–567.

- 10. de Dombal FT, Leaper DJ, Staniland JR, McCann AP, Horrocks JC. Computer-aided diagnosis of acute abdominal pain. BMJ 1972;2:9–13.

- 11. Ledley RS. Using electronic computers in medical diagnosis. IRE T Med Electron 1960;ME-7:274–280, pp. 279–280.

- 12. Taylor R, comments on Ledley RS. Using electronic computers in medical diagnosis. IRE T Med Electron 1960;ME-7:274–280, pp. 279–280.

- 13. Bane F. Physicians for a growing America. Washington, DC: US Government Printing Office, 1959.

- 14. Zworykin VK. Welcome—introduction to the conference. IRE T Med Electron 1960;ME-7:239.

- 15. Brodman K to Wander A, September 27, 1967. Medical Center Archives of New York Presbyterian/Weill Cornell, The Keeve Brodman, MD (1906–1979) Papers, box 4, folder 2.

- 16. “Computers programmed to sort routine symptoms,” n.d. Medical Center Archives of New York Presbyterian/Weill Cornell, The Ralph Engle, MD (1920–2000) Papers, box 8, folder 2.

- 17. McTernan E, Crocker D. Push-button medicine is no pipe dream! Hospital Physician, January 1969; 85.

- 18. Medicine faces the computer revolution: electronic ‘brains’ are heralding a new epoch of improved diagnosis, timelier treatment, and far less medical paper work. Medical World News, July 14, 1967; 46–55, on p. 47.

- 19. Mullainathan S, Obermeyer Z. Does machine learning automate moral hazard and error? Am Econ Rev 2017;107:476–480.

- 20. Progress Report: Electronic Data Processing in Hematology, Grant No. AM-06857–03, U.S. Public Health Services, National Institutes of Health, January 1963, p. 2. Medical Center Archives of New York Presbyterian/Weill Cornell, The Ralph Engle, MD (1920–2000) Papers, box 14, folder 11.

- 21. Buchanan BG, Shortliffe EH. The problem of evaluation. In: Buchanan BG, Shortliffe EH, eds. Rule-based expert systems: the MYCIN experiments of the Stanford Heuristic Programming Project. Reading, MA: Addison-Wesley, 1984, pp. 571–588.

- 22. Mullainathan S, Obermeyer Z. Does machine learning automate moral hazard and error? Am Econ Rev 2017;107:476–480.

- 23. Schenthal JE. Clinical concepts in the application of large scale electronic data processing. In: Proceedings of the 2nd IBM Medical Symposium. Endicott, NY: IBM, 1960:391–399, pp. 398–399.

- 24. Schenthal JE, Sweeney JW, Nettleton WJ. Clinical application of large-scale electronic data processing apparatus I: new concepts in clinical use of the electronic digital computer. JAMA 1960;173:6–11.

- 25. Schenthal JE, Sweeney JW, Nettleton WJ, Yoder RD. Clinical applications of electronic data processing apparatus III: system for processing medical records. JAMA 1963;186:101–105, p. 101.

- 26. Schenthal JE, Sweeney JW, Nettleton WJ. Clinical application of electronic data processing apparatus II: new methodology in clinical record storage. JAMA 1961;178:267–270, p. 270.

- 27. Piore ER. Welcoming address. In: Proceedings of 1st IBM Medical Symposium and 2nd IBM Medical Symposium. Poughkeepsie, NY: IBM, 1960:231–236, pp. 234–235.

- 28. Baruch JJ. Doctor-machine symbiosis. IRE T Med Electron 1960;ME-7:290–293.

- 29. Theodore DM. Towards a new hospital: architecture, medicine, and computation, 1960–1975 (Ph.D. dissertation). Cambridge, MA: Harvard University, 2014.

- 30. Barnett GO. History of the development of medical information systems at the Laboratory of Computer Science at Massachusetts General Hospital. In: Blum BI, Duncan K, eds. A history of medical informatics. New York, NY: ACM Press, 1990:141–156.

- 31. Barnett GO. Automated hospital revolution overdue. Medical World News, May 17, 1968; 54–55.

- 32. Stevens H. Life out of sequence: a data-driven history of bioinformatics. Chicago, IL and London, UK: University of Chicago Press, 2013.

- 33. Banerjee A, Mathew D, Rouane K. Using patient data for patients’ benefit. BMJ 2017;358:j4413.

- 34. Berg M, Bowker G. The multiple bodies of the medical record: toward a sociology of an artifact. Sociol Quart 1997;38:513–537.

- 35. Pronovost P, Johns MME, Palmer S, et al. Procuring Interoperability: Achieving High-Quality, Connected, and Person-Centered Care. Washington, DC: National Academy of Medicine, 2018. (https://nam.edu/procuring-interoperability-achieving-high-quality-connected-and-person-centered-care/).

- 36. Shannon JA, Kidd CV. Medical research in perspective. Science 1956;124:1185–1190.

- 37. Ledley RS. Digital electronic computers in biomedical sciences. Science 1959;130:1225–1234, p. 1230.

- 38. Dee CR. The development of the Medical Literature Analysis and Retrieval System (MEDLARS). J Med Libr Assoc 2007;95:416–425.

- 39. Lancaster FW. MEDLARS: report on the evaluation of its operating efficiency. Am Doc 1969;20:119–142.

- 40. Cummings MM. US House. Departments of Labor and Health, Education, and Welfare and Related Agencies Appropriations for 1967. Part 3: National Institutes of Health. Subcommittee of the Committee on Appropriations. House, Hearing 1966 Mar 6:784–806, as cited in Dee CR. The development of the Medical Literature Analysis and Retrieval System (MEDLARS). J Med Libr Assoc 2007;95:416–425.

- 41. Dee CR. The development of the Medical Literature Analysis and Retrieval System (MEDLARS). J Med Libr Assoc 2007;95:416–425.

- 42. Savulescu J, Spriggs M. The hexamethonium study and the death of a normal volunteer in research. J Med Ethics 2002;28:332.

- 43. Obermeyer Z, Lee T. Lost in thought—the limits of the human mind and the future of medicine. N Engl J Med 2017;377:1209–1211.

- 44. Kuang C. A mind of its own: can AI be taught to explain itself? New York Times Magazine, November 26, 2017; 46–51.

- 45. Zworykin V. A rapid access multi-memory unit for medical data processing. Undated ms., 1960. VZP, box M&A 78, folder 11, p. 5.