Abstract

The presence of missing entries in data often creates challenges for pattern recognition algorithms. Traditional algorithms for clustering data assume that all the feature values are known for every data point. We propose a method to cluster data in the presence of missing information. Unlike conventional clustering techniques where every feature is known for each point, our algorithm can handle cases where a few feature values are unknown for every point. For this more challenging problem, we provide theoretical guarantees for clustering using a fusion penalty based optimization problem. Furthermore, we propose an algorithm to solve a relaxation of this problem using saturating non-convex fusion penalties. It is observed that this algorithm produces solutions that degrade gradually with an increase in the fraction of missing feature values. We demonstrate the utility of the proposed method using a simulated dataset, the Wine dataset and also an under-sampled cardiac MRI dataset. It is shown that the proposed method is a promising clustering technique for datasets with large fractions of missing entries.

1. Introduction

Clustering is an exploratory data analysis technique that is widely used to discover natural groupings in large datasets, where no labeled or pre-classified samples are available a priori. Specifically, it assigns an object to a group if it is similar to other objects within the group, while being dissimilar to objects in other groups. Example applications include analysis of gene expression data, image segmentation, identification of lexemes in handwritten text, search result grouping and recommender systems [1]. A wide variety of clustering methods have been introduced over the years; see [2] for a review of classical methods. However, there is no consensus on a particular clustering technique that works well for all tasks, and there are pros and cons to most existing algorithms. The common clustering techniques such as k-means [3], k-medians [4] and spectral clustering [5] are implemented using Lloyd’s algorithm, which solves a non-convex problem and is thus sensitive to initialization. Recently, linear programming and semi-definite programming based convex relaxations of the k-means and k-medians algorithms have been introduced [6] to overcome the dependence on initialization. Unlike Lloyd’s algorithm, these relaxations can provide a certificate of optimality. However, all of the above-mentioned techniques require a priori knowledge of the desired number of clusters. Hierarchical clustering methods [7], which produce easily interpretable and visualizable clustering results for a varying number of clusters, have been introduced to overcome this challenge. A drawback of [7] is its sensitivity to the initial guess and to perturbations in the data. The more recent convex clustering technique (also known as sum-of-norms clustering) [8] retains the advantages of hierarchical clustering, while being invariant to initialization and producing a unique clustering path. Theoretical guarantees for successful clustering using the convex clustering technique are also available [9].

Most of the above clustering algorithms cannot be directly applied to real-life datasets, where a large fraction of samples are missing. For example, gene expression data often contains missing entries due to image corruption, fabrication errors or contaminants [10], rendering gene cluster analysis difficult. Likewise, large databases used by recommender systems (e.g Netflix) usually have a huge amount of missing data, which makes pattern discovery challenging [11]. The presence of missing responses in surveys [12] and failing imaging sensors in astronomy [13] are reported to make the analysis in these applications challenging. Several approaches were introduced to extend clustering to missing-data applications. For example, a partially observed dataset can be converted to a fully observed one using either deletion or imputation [14]. Deletion involves removal of variables with missing entries, while imputation tries to estimate the missing values and then performs clustering on the completed dataset. An extension of the weighted sum-of-norms algorithm (originally introduced for fully sampled data [8]) has been proposed where the weights are estimated from the data points by using some imputation techniques on the missing entries [15]. Kernel-based methods for clustering have also been extended to deal with missing entries by replacing Euclidean distances with partial distances [16], [17]. A majorize minimize algorithm was introduced to solve for the cluster-centres and cluster memberships in [18], which offers proven reduction in cost with iteration. In [19] and [20] the data points are assumed to lie on a mixture of K distributions, where K is known. The algorithms then alternate between the maximum likelihood estimation of the distribution parameters and the missing entries. A challenge with these algorithms is the lack of theoretical guarantees for successful clustering in the presence of missing entries. 
In contrast, there has been a lot of work in recent years on matrix completion for different data models. Algorithms along with theoretical guarantees have been proposed for low-rank matrix completion [21] and subspace clustering from data with missing entries [22], [23]. However, these algorithms and their theoretical guarantees cannot be trivially extended to the problem of clustering in the presence of missing entries.

The main focus of this paper is to introduce an algorithm for the clustering of data with missing entries and to theoretically analyze the conditions needed for perfect clustering in the presence of missing data. The proposed algorithm is inspired by the sum-of-norms clustering technique [8]; it is formulated as an optimization problem, where an auxiliary variable assigned to each data point is an estimate of the centre of the cluster to which that point belongs. A fusion penalty is used to enforce equality between many of these auxiliary variables. Since we have experimentally observed that non-convex fusion penalties provide superior clustering performance, we focus on the analysis of clustering using a fusion penalty in the presence of missing entries, for an arbitrary number of clusters. The analysis reveals that perfect clustering is guaranteed with high probability, provided the number of measured entries (probability of sampling) is high enough; the required number of measured entries depends on several parameters including intra-cluster variance and inter-cluster distance. We observe that the required number of entries is critically dependent on coherence, which is a measure of the concentration of inter cluster differences in the feature space. Specifically, if the clustering of the points is determined only by a very small subset of all the available features, then the clustering becomes quite unstable if those particular feature values are unknown for some points. Other factors which influence the clustering technique are the number of features, number of clusters and total number of points. We also extend the theoretical analysis to the case without missing entries. The analysis in this setting shows improved bounds when a uniform random distribution of points in their respective clusters is considered, compared to the worst case analysis considered in the missing-data setting. 
We expect that improved bounds can also be derived for the case with missing data when a uniform random distribution is considered.

We also propose an algorithm to solve a relaxation of the above penalty based clustering problem, using non-convex saturating fusion penalties. The algorithm is demonstrated on a simulated dataset with different fractions of missing entries and cluster separations. We observe that the algorithm is stable with changing fractions of missing entries, and the clustering performance degrades gradually with an increase in the number of missing entries. We also demonstrate the algorithm on clustering of the Wine dataset [24] and reconstruction of a dynamic cardiac MRI dataset from few Fourier measurements.

2. Clustering using fusion penalty

2.1. Background

We consider the clustering of points drawn from one of K distinct clusters C1, C2,…, CK. We denote the centers of the clusters by c1, c2,…, cK. For simplicity, we assume that there are M points in each of the clusters. The individual points in the kth cluster are modelled as:

| $z_k(m) = c_k + n_k(m), \quad m = 1, \dots, M, \quad k = 1, \dots, K$ | (1) |

Here, nk(m) is the noise or the variation of zk(m) from the cluster center ck. The set of input points {xi} is obtained as a random permutation of the points {zk(m)}. The objective of a clustering algorithm is to estimate the cluster label of each input point.
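The data model above can be simulated with a few lines of Python. This is a minimal sketch, not the authors' code: the function name `make_clusters` is hypothetical, and the noise is assumed to be uniform in a cube of side `eps` around each centre (the model itself does not fix a noise distribution at this point).

```python
import numpy as np

def make_clusters(centres, M, eps, seed=0):
    """Draw M points per cluster as z_k(m) = c_k + n_k(m), then shuffle them."""
    rng = np.random.default_rng(seed)
    K, P = centres.shape
    # Assumption: each noise entry is uniform in [-eps/2, eps/2].
    noise = rng.uniform(-eps / 2, eps / 2, size=(K, M, P))
    Z = centres[:, None, :] + noise           # shape (K, M, P)
    labels = np.repeat(np.arange(K), M)       # ground-truth cluster of each z_k(m)
    perm = rng.permutation(K * M)             # random permutation of the points
    X = Z.reshape(K * M, P)[perm].T           # columns of X are the points x_i
    return X, labels[perm]

centres = np.array([[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]])   # K = 2, P = 3
X, labels = make_clusters(centres, M=4, eps=1.0)
```

The returned `labels` are only available in simulation; a clustering algorithm sees `X` alone.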

The sum-of-norms (SON) method is a recently proposed convex clustering algorithm [8]. Here, a surrogate variable ui is introduced for each point xi, which is an estimate of the centre of the cluster to which xi belongs. As an example, let K = 2 and M = 5. Without loss of generality, let us assume that x1, x2,..., x5 belong to C1 and x6, x7,..., x10 belong to C2. Then, we expect to arrive at the solution: u1 = u2 = ... = u5 = c1 and u6 = u7 = ... = u10 = c2. In order to find the optimal {ui}, the following optimization problem is solved:

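| $\{u_i^*\} = \arg\min_{\{u_i\}} \sum_{i=1}^{KM} \|x_i - u_i\|_2^2 + \lambda \sum_{i=1}^{KM} \sum_{j=1}^{KM} \|u_i - u_j\|_p$ | (2) |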

The fusion penalty can be enforced using different norms, out of which the ℓ1, ℓ2 and ℓ∞ norms have been used in the literature [8]. The use of sparsity-promoting fusion penalties encourages sparse differences ui − uj, which facilitates the clustering of the points {ui}. For an appropriately chosen λ, the ui’s corresponding to xi’s from the same cluster converge to the same point. The main benefit of this convex scheme over classical clustering algorithms is the convergence of the algorithm to the global minimum.

The above optimization problem can be solved efficiently using the Alternating Direction Method of Multipliers (ADMM) algorithm and the Alternating Minimization Algorithm (AMA) [25]. Truncated ℓ1 and ℓ2 norms have also been used recently in the fusion penalty, resulting in non-convex optimization problems [26]. It has been shown that these penalties provide superior performance to the traditional convex penalties. Convergence to a local minimum using an iterative algorithm has also been guaranteed in the non-convex setting.

The sum-of-norms algorithm has also been used as a visualization and exploratory tool to discover patterns in datasets [15]. Clusterpath diagrams are a common way to visualize the data. This involves plotting the solution path as a function of the regularization parameter λ. For a very small value of λ, the solution is given by ui = xi for all i, i.e. each point forms its individual cluster. For a very large value of λ, the solution is given by ui = uj for all i and j, i.e. every point belongs to the same cluster. For intermediate values of λ, more interesting behaviour is seen as various {ui} merge and reveal the cluster structure of the data.
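The two extremes of the clusterpath can be checked numerically by evaluating the SON cost at the corresponding candidate solutions. The sketch below assumes the cost is a squared-error data term plus λ times the sum of ‖ui − uj‖p over all ordered pairs; the function name `son_objective` and the toy data are illustrative only.

```python
import numpy as np

def son_objective(X, U, lam, p=2):
    """Data-fit term plus lam times the sum of pairwise l_p fusion penalties."""
    fit = np.sum((X - U) ** 2)
    diff = U[:, :, None] - U[:, None, :]                 # (P, N, N) pairwise differences
    fusion = np.sum(np.linalg.norm(diff, ord=p, axis=0)) # sum over ordered pairs (i, j)
    return fit + lam * fusion

X = np.array([[0.0, 2.0],
              [0.0, 0.0]])                               # two points: (0, 0) and (2, 0)
U_separate = X.copy()                                    # every point is its own cluster
U_merged = np.repeat(X.mean(axis=1, keepdims=True), 2, axis=1)  # one shared centre

cost_separate = son_objective(X, U_separate, lam=0.5)
cost_merged = son_objective(X, U_merged, lam=0.5)
```

For this toy example the two candidates tie at λ = 0.5: smaller λ favours the separate solution and larger λ favours the merged one.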

In this paper, we extend the algorithm to account for missing entries in the data. We present theoretical guarantees for clustering with and without missing entries using an ℓ2,0 fusion penalty. Next, we approximate the ℓ2,0 penalty by non-convex saturating penalties, and solve the resulting relaxed optimization problem using an iterative reweighted least squares (IRLS) strategy [27]. The proposed algorithm is shown to perform clustering correctly in the presence of large fractions of missing entries.

2.2. Central Assumptions

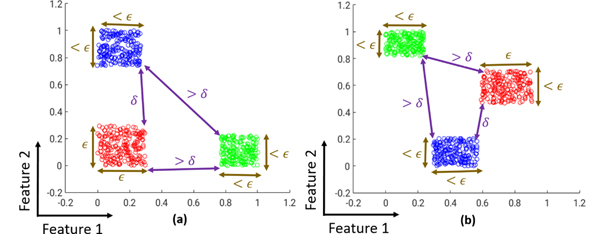

We make the following assumptions (illustrated in Fig 1), which are key to the successful clustering of the points:

Fig. 1:

Central Assumptions: (a) and (b) illustrate different instances where points belonging to ℝ² are to be separated into 3 different clusters (denoted by the colours red, green and blue). Assumptions A.1 and A.2, related to cluster separation and cluster size respectively, are illustrated in both (a) and (b). The importance of assumption A.3, related to feature concentration, can also be appreciated by comparing (a) and (b). In (a), points in the red and blue clusters cannot be distinguished solely on the basis of feature 1, while the red and green clusters cannot be distinguished solely on the basis of feature 2. Thus, it is difficult to correctly cluster these points if either of the feature values is unknown. In (b), due to low coherence (as assumed in A.3), this problem does not arise.

A.1: Cluster separation: Points from different clusters are separated by δ > 0 in the ℓ2 sense, i.e.:

| $\min_{m,n} \|z_k(m) - z_l(n)\|_2 \ge \delta, \quad \forall\, k \ne l$ | (3) |

We require δ > 0 for the clusters to be non-overlapping. A high δ corresponds to well separated clusters.

A.2: Cluster size: The maximum separation of points within any cluster in the ℓ∞ sense is ϵ ≥ 0, i.e.:

| $\max_{k} \max_{m,n} \|z_k(m) - z_k(n)\|_\infty = \epsilon$ | (4) |

Thus, the kth cluster is contained within a cube of size ϵ, with center ck.

A.3: Feature concentration: The coherence of a vector y ∈ ℝ^P is defined as [21]:

| $\mu(y) = \dfrac{P\,\|y\|_\infty^2}{\|y\|_2^2}$ | (5) |

By definition: 1 ≤ μ(y) ≤ P. Intuitively, a vector with a high coherence has a few large values and several small ones. Specifically, if μ(y) = P, then y has only 1 non-zero value. In contrast, if μ(y) = 1, then all the entries of y are equal. We bound the coherence of the difference between points from different clusters as:

| $\mu\big(z_k(m) - z_l(n)\big) \le \mu_0, \quad \forall\, k \ne l, \ \forall\, m, n$ | (6) |

μ0 is indicative of the difficulty of the clustering problem in the presence of missing data. If μ0 = P, then two clusters differ only in a single feature, suggesting that it is difficult to assign the correct cluster to a point if this feature is not sampled. The best case scenario is μ0 = 1, when all the features are equally important. In general, cluster recovery from missing data becomes challenging with increasing μ0.
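The two extreme cases of coherence can be verified directly. The sketch below assumes the usual definition μ(y) = P‖y‖∞² / ‖y‖₂², which is consistent with the properties 1 ≤ μ(y) ≤ P stated above; the function name `coherence` is illustrative.

```python
import numpy as np

def coherence(y):
    """mu(y) = P * max|y_j|^2 / sum_j y_j^2 for a non-zero vector y of length P."""
    y = np.asarray(y, dtype=float)
    return y.size * np.max(np.abs(y)) ** 2 / np.sum(y ** 2)

spiky = coherence([0.0, 0.0, 3.0, 0.0])   # difference concentrated in one feature
flat = coherence([2.0, -2.0, 2.0, 2.0])   # difference spread evenly over all features
```

Here `spiky` equals P = 4 and `flat` equals 1, matching the worst and best cases described for μ0.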

The quantity κ = ϵ√P/δ is a measure of the difficulty of the clustering problem. Small values of κ suggest large inter-cluster separation compared to the cluster size; the recovery of such well-defined clusters is expected to be easier than the case with large κ values. Note that the ℓ2 norm is used in the definition of δ, while the ℓ∞ norm is used to define ϵ. If δ = ϵ√P, then κ = 1; this value of κ is of special importance since κ < 1 is a requirement for successful recovery in our main results.
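For a labelled (simulated) dataset, the quantities δ, ϵ and κ can be computed directly from pairwise distances. This is a sketch under the assumption that κ = ϵ√P/δ, with δ the smallest inter-cluster ℓ2 distance and ϵ the largest intra-cluster ℓ∞ distance; the function name is hypothetical.

```python
import numpy as np

def separation_parameters(X, labels):
    """Return (delta, eps, kappa) for points stored as the columns of X."""
    P, N = X.shape
    diff = X[:, :, None] - X[:, None, :]             # pairwise differences, (P, N, N)
    d2 = np.sqrt(np.sum(diff ** 2, axis=0))          # pairwise l2 distances
    dinf = np.max(np.abs(diff), axis=0)              # pairwise l-infinity distances
    same = labels[:, None] == labels[None, :]
    delta = d2[~same].min()                          # A.1: smallest inter-cluster distance
    eps = dinf[same].max()                           # A.2: largest intra-cluster distance
    kappa = eps * np.sqrt(P) / delta                 # assumed definition of kappa
    return delta, eps, kappa

X = np.array([[0.0, 1.0, 10.0, 11.0],
              [0.0, 1.0, 10.0, 11.0]])
labels = np.array([0, 0, 1, 1])
delta, eps, kappa = separation_parameters(X, labels)
```

In this example κ is well below 1, so the clusters fall in the regime covered by the main results.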

We study the problem of clustering the points {xi} in the presence of entries missing uniformly at random. We arrange the points {xi} as columns of a matrix X. The rows of the matrix are referred to as features. We assume that each entry of X is observed with probability p0. The entries measured in the ith column are denoted by:

| $y_i = S_i x_i$ | (7) |

where Si is the sampling matrix, formed by selecting rows of the identity matrix. We consider solving the following optimization problem to obtain the cluster memberships from data with missing entries:

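| $\{u_i^*\} = \arg\min_{\{u_i\}} \sum_{i=1}^{KM} \sum_{j=1}^{KM} \|u_i - u_j\|_{2,0} \quad \text{s.t.} \quad \|S_i (x_i - u_i)\|_\infty \le \dfrac{\epsilon}{2}, \ \forall\, i$ | (8) |

We use the above constrained formulation rather than the unconstrained formulation in (2) to avoid the dependence on λ. The ℓ2,0 norm is defined as:

| $\|x\|_{2,0} = \begin{cases} 0, & \text{if } \|x\|_2 = 0 \\ 1, & \text{otherwise} \end{cases}$ | (9) |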
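To make the constrained problem concrete, the sketch below evaluates a candidate solution: it draws a sampling pattern, checks the data-consistency constraint on the observed entries, and counts the fusion cost. It assumes the constraint has the form |xi − ui| ≤ ϵ/2 on every observed entry and that the cost counts ordered pairs with ui ≠ uj; all function names are illustrative.

```python
import numpy as np

def sample_mask(shape, p0, seed=0):
    """Boolean mask: each entry of X is observed independently with probability p0."""
    rng = np.random.default_rng(seed)
    return rng.random(shape) < p0

def is_feasible(U, X, mask, eps):
    # Assumed constraint: |x_i - u_i| <= eps / 2 on every observed entry.
    return bool(np.all(np.abs(X - U)[mask] <= eps / 2))

def fusion_cost_l20(U, tol=1e-12):
    """Number of ordered pairs (i, j) whose centre estimates differ."""
    diff = U[:, :, None] - U[:, None, :]
    dist = np.sqrt(np.sum(diff ** 2, axis=0))
    return int(np.sum(dist > tol))

centres = np.array([[0.0, 4.0], [0.0, 4.0], [0.0, 4.0]])    # P = 3, K = 2
labels = np.array([0, 0, 0, 1, 1, 1])                        # M = 3
X = centres[:, labels] + np.array([[0.1, -0.1, 0.0, 0.1, 0.0, -0.1]])
mask = sample_mask(X.shape, p0=0.7)
U_true = centres[:, labels]                                  # ground-truth centres
```

Both `U_true` and the trivial choice `U = X` satisfy the constraint, but the ground truth has the lower cost (2·M·M = 18 ordered pairs versus 30), which is why the fusion cost favours merged solutions.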

Similar to the SON scheme (2), we expect that all ui’s that correspond to xi in the same cluster are equal, while ui’s from different clusters are not equal. We consider the cluster recovery to be successful when there are no misclassifications. We claim that the above algorithm can successfully recover the clusters with high probability when:

- The clusters are well separated (i.e., low κ).

- The sampling probability p0 is sufficiently high.

- The coherence μ0 is small.

Before moving on to a formal statement and proof of this result, we consider a simple special case to illustrate the approach. In order to aid the reader in following the results, all the important symbols used in the paper have been summarized in Table 1.

TABLE 1:

Notations used

| Symbol | Description |
| --- | --- |
| K | Number of clusters |
| M | Number of points in each cluster |
| P | Number of features for each point |
| Ci | The ith cluster |
| ci | Centre of Ci |
| zi(m) | mth point in Ci |
| {xi} | Random permutation of the KM points {zk(m)} for k = 1,…, K and m = 1,…, M |
| Si | Sampling matrix for xi |
| X | Matrix formed by arranging {xi} as columns, such that the ith column is xi |
| p0 | Probability of sampling each entry in X |
| δ | Parameter related to cluster separation defined in (3) |
| ϵ | Parameter related to cluster size defined in (4) |
| κ | Defined as ϵ√P/δ |
| μ0 | Parameter related to coherence defined in (6) |
| γ0 | Defined in (16) |
| δ0 | Defined in (17) |
| β0 | Defined in (18) |
| η0 | Defined in (19) |
| η0,approx | Upper bound for η0 for the case of 2 clusters, defined in (21) |
| c | Parameter related to cluster centre separation defined in (27) |
| κ′ | Defined in (26) |
| β1 | Defined in (28) |
| η1 | Probability of failure of Theorem 2.7 |

2.3. Noiseless Clusters with Missing Entries

We consider the simple case where all the points belonging to the same cluster are identical. Thus every cluster is “noiseless”, and we have: ϵ = 0 and hence κ = 0. The optimization problem (8) now reduces to:

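| $\{u_i^*\} = \arg\min_{\{u_i\}} \sum_{i=1}^{KM} \sum_{j=1}^{KM} \|u_i - u_j\|_{2,0} \quad \text{s.t.} \quad S_i x_i = S_i u_i, \ \forall\, i$ | (10) |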

Next, we state a few results for this special case in order to provide some intuition about the problem. The results are not stated with mathematical rigour and are not accompanied by proofs. In the next sub-section, when we consider the general case, we will provide lemmas and theorems (with proofs in the appendix), which generalize the results stated here. Specifically, Lemmas 2.1, 2.2, 2.3 and Theorem 2.4 generalize Results 2.1, 2.2, 2.3 and 2.4 respectively.

We will first consider the data consistency constraint in (10) and determine possible feasible solutions. We observe that all the points in any specified cluster can share a centre without violating the data consistency constraint:

Result 2.1.

Consider any two points x1 and x2 from the same cluster. A solution u exists for the following equations:

| $S_i x_i = S_i u, \quad i = 1, 2$ | (11) |

with probability 1.

The proof for the above result is trivial in this special case, since all points in the same cluster are the same. We now consider two points from different clusters.

Result 2.2.

Consider two points x1 and x2 from different clusters. A solution u exists for the following equations:

| $S_i x_i = S_i u, \quad i = 1, 2$ | (12) |

with low probability, when the sampling probability p0 is high and coherence μ0 is low.

Let I1 and I2 denote the index sets of the features that are sampled (not missing) in x1 and x2 respectively, so that S1 and S2 select the entries indexed by I1 and I2. We observe that (12) can be satisfied if and only if:

| $x_1(j) = x_2(j), \quad \forall\, j \in I_1 \cap I_2$ | (13) |

which implies that the features of x1 and x2 are the same on the index set I1 ∩ I2. If the probability of sampling p0 is sufficiently high, then the number of samples at commonly observed locations:

| $q = |I_1 \cap I_2|$ | (14) |

will be high, with high probability. If the coherence μ0 defined in assumption A.3 is low, then with high probability the vector x1 − x2 does not have q entries that are equal to 0. In other words, the cluster memberships are not determined by only a few features. Thus, for a small value of μ0 and a high p0, we can ensure that (13) occurs with very low probability. We now generalize the above result to obtain the following:

Result 2.3.

Assume that {x1, x2,…, xM} is a set of M points chosen randomly from multiple clusters (not all are from the same cluster). A solution u exists for the following equations:

| $S_i x_i = S_i u, \quad i = 1, \dots, M$ | (15) |

with low probability, when the sampling probability p0 is high and coherence μ0 is low.
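In the noiseless case, the feasibility question in Results 2.2 and 2.3 has a simple computational form: a shared u exists exactly when, for every feature, all observed values across the chosen points agree. The sketch below checks this and also simulates the number of commonly observed locations for a pair of points, which is binomial with mean p0²·P under uniform random sampling. The function name and the parameter values are illustrative.

```python
import numpy as np

def can_share_centre(X, mask):
    """Noiseless case: does a single u satisfy S_i x_i = S_i u for every column i?"""
    for row, seen in zip(X, mask):
        vals = row[seen]
        if vals.size and vals.max() != vals.min():   # observed values must agree
            return False
    return True

# Two points from different clusters that differ only in the second feature.
X = np.array([[1.0, 1.0],
              [2.0, 5.0],
              [3.0, 3.0]])
hidden = np.array([[True, True], [True, False], [False, True]])
full = np.ones_like(hidden)

# Number of commonly observed locations q for random pairs of points.
rng = np.random.default_rng(0)
P, p0 = 50, 0.6
masks = rng.random((2000, 2, P)) < p0
q = np.sum(masks[:, 0] & masks[:, 1], axis=1)
print(q.mean(), p0 ** 2 * P)    # empirical mean of q next to p0^2 * P
```

With the `hidden` pattern the distinguishing feature is unobserved in one point, so the two points can wrongly share a centre; with full sampling they cannot. This is the high-coherence failure mode that the bound on μ0 rules out.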

The key message of the above result is that large mis-classified clusters are highly unlikely. We will show that all feasible solutions containing small mis-classified clusters are associated with higher cost than the correct solution. Thus, we can conclude that the algorithm recovers the ground truth solution with high probability, as summarized by the following result.

Result 2.4.

The optimization problem (10) results in the ground-truth clustering with a high probability if the sampling probability p0 is high and the coherence μ0 is low.

2.4. Noisy Clusters with Missing Entries

We will now consider the general case of noisy clusters with missing entries, and will determine the conditions required for (8) to yield successful recovery of clusters. The reasoning behind the proof in the general case is similar to that for the special case discussed in the previous sub-section. Before proceeding to the statement of the lemmas and theorems, we define the following quantities:

- γ0, an upper bound for the probability that two points have fewer than the required number of commonly observed locations:

| (16) |

- δ0, an upper bound for the probability that two points from different clusters can yield the same u without violating the constraints in (8), given that they have at least the required number of commonly observed locations:

| (17) |

- β0, an upper bound for the probability that two points from different clusters can yield the same u without violating the constraints in (8):

| $\beta_0 = 1 - (1 - \gamma_0)(1 - \delta_0)$ | (18) |

- η0, an upper bound for the failure probability of (8):

| (19) |

where is the set of all sets of positive integers {mj} such that: and . Here, the function counts the number of non-zero elements in a set. For example, if K = 2 then contains all sets of 2 positive integers {m1, m2}, such that m1 + m2 = M. Thus, (19) reduces to:

| (20) |

We note that the expression for η0 is quite involved. Hence, to provide some intuition, we simplify this expression for the special case where there are only two clusters. Under the assumption that , it can be shown that η0 is upper-bounded as:

| (21) |

The above upper bound, denoted by η0,approx, is derived in Appendix F.

We now state the results for clustering with missing entries in the general noisy case. The following two lemmas are generalizations of Results 2.1 and 2.2 to the noisy case.

Lemma 2.1.

Consider any two points x1 and x2 from the same cluster. A solution u exists for the following equations:

| (22) |

with probability 1.

The proof of this lemma is in Appendix A.

Lemma 2.2.

Consider any two points x1 and x2 from different clusters, and assume that κ < 1. A solution u exists for the following equations:

| (23) |

with probability less than β0.

The proof of this lemma is in Appendix C. We note that β0 decreases with a decrease in κ. A small ϵ implies less variability within clusters and a large δ implies well-separated clusters, together resulting in a low value of κ. Both these characteristics are desirable for clustering and result in a low value of β0. This lemma also demonstrates that the coherence assumption is important in ensuring that the sampled entries are sufficient to distinguish between a pair of points from different clusters. As a result, β0 decreases with a decrease in the value of μ0. As expected, we also observe that β0 decreases with an increase in p0.

The above result can be generalized to consider a large number of points from multiple clusters. If we choose M points such that not all of them belong to the same cluster, then it can be shown that with high probability, they cannot share the same u without violating the constraints in (8). This idea (a generalization of Result 2.3) is expressed in the following lemma:

Lemma 2.3.

Assume that {x1, x2,…, xM} is a set of M points chosen randomly from multiple clusters (not all are from the same cluster). If κ < 1, a solution u does not exist for the following equations:

| (24) |

with probability exceeding 1 − η0.

The proof of this lemma is in Appendix D. We note here, that for a low value of β0 and a high value of M (number of points in each cluster), we will arrive at a very low value of η0. Using Lemmas 2.1, 2.2 and 2.3, we now move on to our main result which is a generalization of Result 2.4:

Theorem 2.4.

If κ < 1, the solution to the optimization problem (8) is identical to the ground-truth clustering with probability exceeding 1 − η0.

The proof of the above theorem is in Appendix E. The reasoning follows from Lemma 2.3. It is shown in the proof that all solutions with cluster sizes smaller than M are associated with a higher cost than the ground-truth solution.

2.5. Clusters without Missing Entries

We now study the case where there are no missing entries. In this special case, optimization problem (8) reduces to:

| (25) |

We have the following theorem guaranteeing successful recovery for clusters without missing entries:

Theorem 2.5.

If κ < 1, the solution to the optimization problem (25) is identical to the ground-truth clustering.

The proof for the above theorem is in Appendix G. We note that the above result does not consider any particular distribution of the points in each cluster. Instead, if we consider that the points in each cluster are sampled from certain particular probability distributions such as the uniform random distribution, then a larger κ is sufficient to ensure success with high probability. In the general case where no such distribution is assumed, we cannot make a probabilistic argument, and a smaller κ is required. We now consider a special case, where the entries of the noise nk(m) are zero-mean uniform random variables on [−ϵ/2, ϵ/2]. Thus, the points within each cluster are uniformly distributed in a cube of side ϵ. We note that δ is now a random variable, and thus instead of using the constant κ (as in previous lemmas), we define the following constant:

| (26) |

where c is defined as the minimum separation between the centres of any 2 clusters in the dataset:

| (27) |

We also define the following quantity:

| (28) |

We arrive at the following result for two points in different clusters:

Lemma 2.6.

Let , If the points in each cluster follow a uniform random distribution, then for two points x1 and x2 belonging to different clusters, a solution u exists for the following equations:

| (29) |

with probability less than β1.

The proof for the above lemma is in Appendix H. This implies that for , two points from different clusters cannot be misclassified to a single cluster with high probability. As η0 is expressed in terms of β0 in (19), we can also express η1 in terms of β1. We get the following guarantee for perfect clustering:

Theorem 2.7.

If the points in each cluster follow a uniform random distribution and , then the solution to the optimization problem (25) is identical to the ground-truth clustering with probability exceeding 1 − η1.

Note that . Thus, the above result allows for values κ > 1. Our results show that if we do not consider the distribution of the points, then we arrive at the bound κ < 1 with and without missing entries, as seen from Theorems 2.4 and 2.5 respectively. A uniform random distribution can also be assumed in the case of missing entries. Similar to Theorem 2.7, we expect an improved bound for the case with missing entries as well.

3. Relaxation of the penalty

3.1. Constrained formulation

We propose to solve a relaxation of the optimization problem (8), which is more computationally feasible. The relaxed problem is given by:

| (30) |

where ϕ is a function approximating the norm. Some examples of such functions are:

norm: , for some 0 < p < 1.

H1 penalty: .

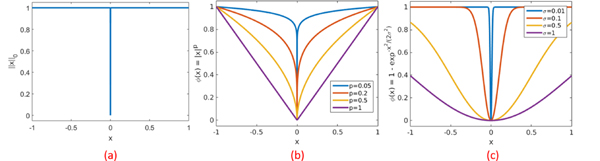

These functions approximate the penalty more accurately for lower values of p and σ, as illustrated in Fig 2. We reformulate the problem using a majorize-minimize strategy. Specifically, by majorizing the penalty ϕ using a quadratic surrogate functional, we obtain:

| (31) |

where , and d is a constant. For the two penalties considered here, we obtain the weights as:

norm: . The infinitesimally small α term is introduced to deal with situations where x = 0. For non-zero x, we get the expression .

H1 penalty: .
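The majorization step can be checked numerically. The sketch below assumes specific forms for the two penalties, written as functions of the squared distance t = ‖x‖₂²: a smoothed ℓp penalty (t + α)^(p/2) and an H1 penalty 1 − exp(−t / (2σ²)). Both are concave in t, so the tangent at t0 gives a surrogate of the form w·t + d, with weight w equal to the derivative at t0. These forms and all names are assumptions made for illustration, not necessarily the exact expressions used in the paper.

```python
import numpy as np

def lp_penalty(t, p, alpha=1e-8):
    return (t + alpha) ** (p / 2)

def lp_weight(t, p, alpha=1e-8):
    return (p / 2) * (t + alpha) ** (p / 2 - 1)       # derivative of lp_penalty in t

def h1_penalty(t, sigma):
    return 1.0 - np.exp(-t / (2 * sigma ** 2))

def h1_weight(t, sigma):
    return np.exp(-t / (2 * sigma ** 2)) / (2 * sigma ** 2)   # derivative of h1_penalty

def surrogate(t, t0, penalty, weight, **kw):
    """Majorizer w(t0) * t + d that touches the penalty at t = t0."""
    w = weight(t0, **kw)
    d = penalty(t0, **kw) - w * t0
    return w * t + d

t = np.linspace(0.0, 25.0, 501)     # grid of squared distances
t0 = 1.3                            # current iterate
gap_h1 = surrogate(t, t0, h1_penalty, h1_weight, sigma=0.5) - h1_penalty(t, sigma=0.5)
gap_lp = surrogate(t, t0, lp_penalty, lp_weight, p=0.1) - lp_penalty(t, p=0.1)
```

Both gaps are non-negative on the whole grid and vanish at t0, which is the property that makes each IRLS iteration non-increasing in the cost.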

We can now state the majorize-minimize formulation for problem (30) as:

| (32) |

where the constant d has been ignored. In order to solve problem (32), we alternate between two sub-problems till convergence. At the nth iteration, these sub-problems are given by:

| (33) |

| (34) |

Fig. 2:

Different penalty functions ϕ. (a) The ℓ0 norm (b) The ℓp penalty function, which is non-convex for 0 < p < 1 and convex for p = 1 (c) The H1 penalty function. The ℓp and H1 penalties closely approximate the ℓ0 norm for low values of p and σ respectively.

3.2. Unconstrained formulation

For larger datasets, it might be computationally intensive to solve the constrained problem. In this case, we propose to solve the following unconstrained problem:

| (35) |

As before, we can state the majorize-minimize formulation for problem (35) as:

| (36) |

In order to solve the problem (36), we alternate between two sub-problems till convergence. The 1st sub-problem is the same as (33). The 2nd sub-problem is given by:

| (37) |
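The alternation between a weight update and a quadratic sub-problem can be sketched as follows. This is an illustrative implementation under stated assumptions, not the authors' code: the unconstrained cost is taken to be the squared error on observed entries plus λ times the sum of H1 penalties over ordered pairs, the weights are the derivatives of the H1 penalty with respect to the squared distance, the initial weights come from zero-filled data, and a tiny ridge term keeps the linear systems invertible. All function names and parameter values are hypothetical.

```python
import numpy as np

def pairwise_sq_dist(U):
    g = U.T @ U
    n = np.diag(g)
    return np.maximum(n[:, None] + n[None, :] - 2 * g, 0.0)

def h1_weights(T, sigma):
    W = np.exp(-T / (2 * sigma ** 2)) / (2 * sigma ** 2)
    np.fill_diagonal(W, 0.0)
    return W

def objective(U, X, mask, lam, sigma):
    fit = np.sum(mask * (X - U) ** 2)                  # only observed entries count
    pen = np.sum(1.0 - np.exp(-pairwise_sq_dist(U) / (2 * sigma ** 2)))
    return fit + lam * pen

def irls_cluster(X, mask, lam, sigma, W0, n_iter=30, ridge=1e-10):
    P, N = X.shape
    W, U, history = W0, np.zeros((P, N)), []
    for _ in range(n_iter):
        L = np.diag(W.sum(axis=1)) - W                 # graph Laplacian of the weights
        for f in range(P):                             # sub-problem decouples per feature
            A = np.diag(mask[f].astype(float)) + 2 * lam * L + ridge * np.eye(N)
            U[f] = np.linalg.solve(A, mask[f] * X[f])  # normal equations for row f
        history.append(objective(U, X, mask, lam, sigma))
        W = h1_weights(pairwise_sq_dist(U), sigma)     # weight update from current U
    return U, history

rng = np.random.default_rng(0)
centres = np.array([[0.0] * 5, [3.0] * 5]).T           # P = 5 features, K = 2 clusters
labels = np.repeat([0, 1], 10)                         # M = 10 points per cluster
X = centres[:, labels] + rng.uniform(-0.25, 0.25, size=(5, 20))
mask = rng.random(X.shape) < 0.8                       # about 20% missing entries
W0 = h1_weights(pairwise_sq_dist(np.where(mask, X, 0.0)), sigma=1.0)
U, history = irls_cluster(X, mask, lam=1.0, sigma=1.0, W0=W0)
```

Because each weight update defines a majorizer of the cost at the current iterate, the values in `history` should not increase from one iteration to the next (up to the small ridge term). Zero-filled initial weights are used here only for brevity.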

3.3. Comparison of penalties

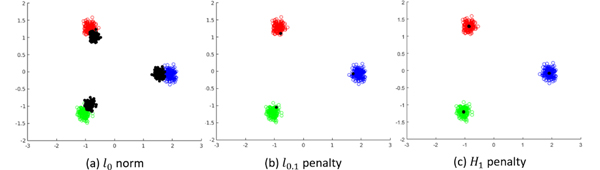

We compare the performance of different penalties when used as a surrogate for the ℓ0 norm. For this purpose, we use a simulated dataset with points belonging to 3 well-separated clusters, with 200 points in each cluster. For this particular experiment, we considered x1, x2,..., x200 ∈ C1, x201, x202,..., x400 ∈ C2 and x401, x402,..., x600 ∈ C3. We do not consider the presence of missing entries for this experiment. We solve problem (35) to cluster the points using the ℓ1, ℓp (for p = 0.1) and H1 (for σ = 0.5) penalties. The results are shown in Fig 3. Only for the purpose of visualization, we take a PCA of the data matrix and retain the 2 most significant principal components to get a matrix of two-dimensional points. These points are plotted in the figure, with red, blue and green representing points from different clusters. We similarly obtain the 2 most significant components of the estimated centres and plot the resulting points in black. In (b) and (c), we note that the estimated centres of points from the same cluster coincide. Thus, the ℓp penalty and the H1 penalty are able to correctly cluster the points. This behaviour is not seen in (a). Thus, it is concluded that the convex ℓ1 penalty is unable to cluster the points.

Fig. 3:

Comparison of different penalties. We show here the 2 most significant principal components of the solutions obtained using the IRLS algorithm. (a) It can be seen that the ℓ1 penalty is unable to cluster the points even though the clusters are well-separated. (b) The ℓp penalty is able to cluster the points correctly. However, the cluster-centres are not correctly estimated. (c) The H1 penalty correctly clusters the points and also gives a good estimate of the centres.

The cluster-centres estimated using the ℓp penalty are inaccurate. The H1 penalty out-performs the other two penalties and accurately estimates the cluster-centres. We can explain this behaviour intuitively by observing the plots in Fig 2. The ℓ1 norm penalizes differences between all pairs of points. The ℓp semi-norm penalizes differences between points that are close. Due to the saturating nature of the penalty, it does not heavily penalize differences between points that are further away. The same is true for the H1 penalty. However, we note that the H1 penalty saturates to 1 very quickly, similar to the ℓ0 norm. This behaviour is missing for the ℓp penalty. For this reason, it is seen that the ℓp penalty also penalizes inter-cluster distances (unlike the H1 penalty), and shrinks the distance between the estimated centres of different clusters.

3.4. Initialization Strategies

Our experiments emphasize the need for a good initialization of the weights wij for convergence to the correct cluster centre estimates. This dependence on the initial value arises from the non-convexity of the optimization problem. We consider two different strategies for initializing the weights:

Partial Distances: Consider a pair of points x1, x2 observed by sampling matrices and respectively. Let the set of common indices be . We define the partial distance as , where xiω represents the set of entries of xi restricted to the index set ω. Instead of the actual distances which are not available, the partial distances can be used for computing the weights.

- Imputation Methods: The weights can be computed from estimates , where:

Here m is a constant vector, specific to the imputation technique. The zero-filling technique corresponds to m = 0. Better estimation techniques can be derived where the jth row of m can be set to the mean of all measured values in the jth row of X.(38)

We will observe experimentally that for a good approximation of the initial weights W(0), we get the correct clustering. Conversely, the clustering fails for a bad initial guess. Our experiments demonstrate the superiority of a partial distance based initialization strategy over a zero-filled initialization.
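The two initialization strategies can be sketched as follows. The partial distance is assumed here to be rescaled by √(P/q), where q is the number of commonly observed features, so that it is comparable to a full distance; this rescaling, the function names and the handling of the q = 0 case are assumptions for illustration.

```python
import numpy as np

def partial_distance(x1, x2, m1, m2):
    """Distance computed on the features observed in both points."""
    common = m1 & m2
    q = int(common.sum())
    if q == 0:
        return np.nan                      # no commonly observed feature
    # Assumed rescaling by sqrt(P / q).
    return np.sqrt(x1.size / q) * np.linalg.norm(x1[common] - x2[common])

def impute(X, mask, fill="mean"):
    """Replace missing entries of X by a constant vector m (zeros or row means)."""
    if fill == "zero":
        m = np.zeros(X.shape[0])
    else:
        counts = np.maximum(mask.sum(axis=1), 1)
        m = np.where(mask, X, 0.0).sum(axis=1) / counts   # mean of measured values per row
    return np.where(mask, X, m[:, None])

x1 = np.array([1.0, 2.0, 3.0, 4.0])
x2 = np.array([1.0, 0.0, 0.0, 4.0])
m1 = np.array([True, True, True, False])
m2 = np.array([True, True, False, True])
d12 = partial_distance(x1, x2, m1, m2)

X = np.array([[1.0, 2.0, 9.0], [4.0, 9.0, 6.0]])
mask = np.array([[True, True, False], [True, False, True]])
X_mean = impute(X, mask, fill="mean")
X_zero = impute(X, mask, fill="zero")
```

Either the partial distances or the distances between imputed points can then be passed to the weight formula to obtain W(0).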

4. Results

We study the theoretical guarantees of Theorem 2.4 under different settings. We also test the proposed algorithm on simulated and real datasets. The simulations are used to study how the performance of the algorithm changes with parameters such as the fraction of missing entries and the number of points to be clustered. We also study the effect of different initialization techniques on the algorithm performance. We demonstrate the algorithm on the publicly available Wine dataset [24], and use the algorithm to reconstruct a dataset of under-sampled cardiac MR images.

4.1. Study of Theoretical Guarantees

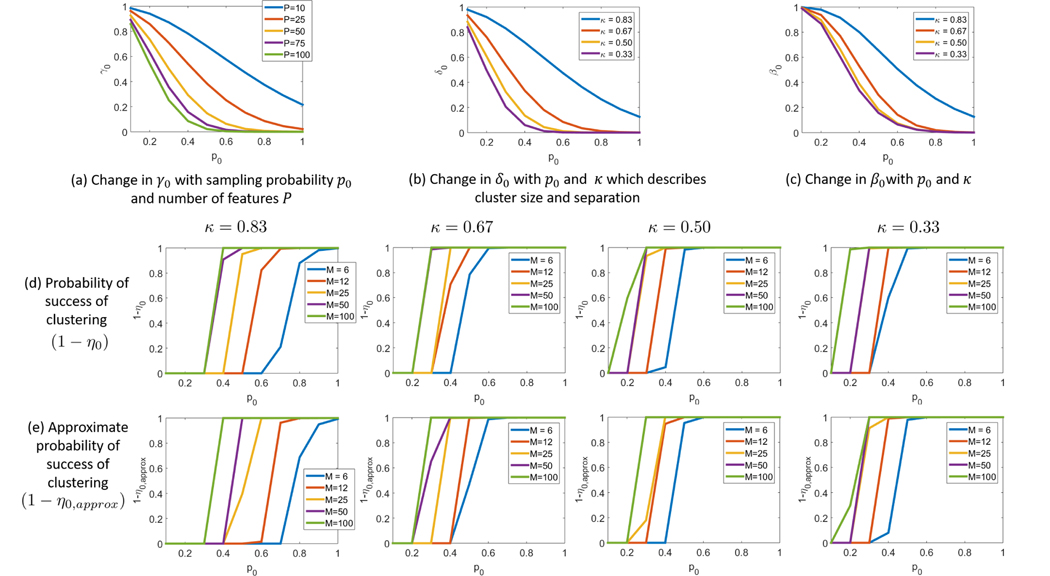

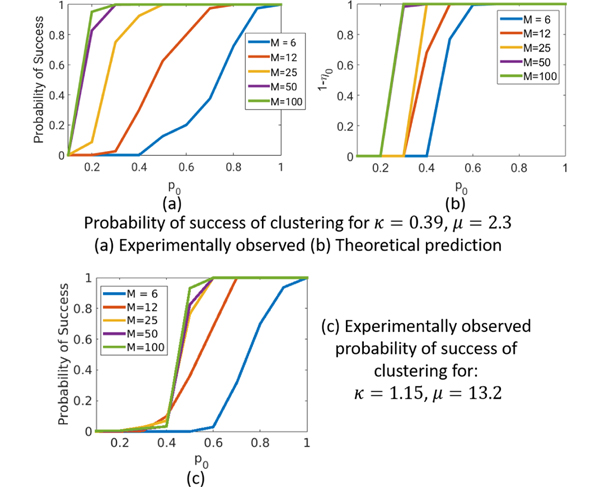

We observe the behaviour of the quantities γ0, δ0, β0, η0 and η0,approx (defined in section 2.4) as a function of parameters p0, P, κ and M. Fig 4 shows a few plots that illustrate the change in these quantities as the different parameters are varied. γ0 is an upper bound for the probability that a pair of points have entries observed at common locations. In Fig 4 (a), the change in γ0 is shown as a function of p0 for different values of P. In subsequent plots, we fix P = 50 and μ0 = 1.5. δ0 is an upper bound for the probability that a pair of points from different clusters can share a common centre, given that entries are observed at common locations. In Fig 4 (b), the change in δ0 is shown as a function of p0 for different values of κ. In Fig 4 (c), the behaviour of β0 = 1 − (1 − γ0)(1 − δ0) is shown, which is the probability mentioned in Lemma 2.2.

Fig. 4:

Study of Theoretical Guarantees. The quantities γ0, δ0 and β0 defined in Section 2.4 are studied in (a), (b) and (c) respectively. In (b) and (c), P = 50 and μ0 = 1.5 are assumed. β0 gives the probability that 2 points from different clusters can share a centre. As expected, this value decreases with increase in p0 and decrease in κ. Considering K = 2 clusters, a lower bound for the probability of successful clustering (1 − η0) using the proposed algorithm is shown in (d) for different values of κ. The approximate values (1 − η0,approx) computed using (21) are shown in (e).

We consider the two-cluster setting (i.e. K = 2) for subsequent plots. η0 is the probability of failure of the clustering algorithm (8). In Fig 4 (d) and (e), plots are shown for (1 − η0) and (1 − η0,approx) as a function of p0 for different values of κ and M. Here, η0,approx is an upper bound for η0 computed using (21). As expected, the probability of success of the clustering algorithm increases with increase in p0 and M and decrease in κ.

4.2. Clustering of Simulated Data

We simulated datasets with K = 2 disjoint clusters in with a varying number of points per cluster (M = 6, 12, 25, 50, 100). The points in each cluster follow a uniform random distribution. We study the probability of success of the H1 penalty based constrained clustering algorithm (with partial-distance based initialization) as a function of κ, M and p0. For each set of parameters, the experiment was repeated 20 times to estimate the probability of success of the algorithm. Across these 20 trials, the cluster-centres remain the same, while the points sampled from these clusters and the locations of the missing entries differ. Fig 5 (a) shows the result for datasets with κ = 0.39 and μ0 = 2.3. The theoretical guarantees for successfully clustering the dataset are shown in Fig 5 (b). Note that the theoretical guarantees do not assume that the points are taken from a uniform random distribution. Also, the theoretical bounds assume that we are solving the original problem using a norm, whereas the experimental results were generated for the H1 penalty. Our theoretical guarantees hold for κ < 1. However, we demonstrate in Fig 5 (c) that even for the more challenging case where κ = 1.15 and μ0 = 13.2, our clustering algorithm is successful. Note that we do not have theoretical guarantees for this case. However, by assuming a uniform random distribution on the points, we expect that we can get better theoretical guarantees (similar to Theorem 2.7 for the case without missing entries).
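The trial protocol described above (fixed centres, fresh points and fresh missing-entry locations in every trial) can be sketched as a small harness. Everything named here is hypothetical: `simulate_trial`, `success_probability` and `same_partition` are illustrative helpers, the uniform distribution is assumed to be over a cube around each centre, and `nearest_centre` is an oracle stand-in that uses the true centres. It is not the H1 penalty algorithm, which would be plugged in as `cluster_fn`.

```python
import numpy as np

def simulate_trial(centres, M, half_width, p0, rng):
    """Draw M points per cluster uniformly from a cube around each centre
    (an assumed shape), then observe each entry with probability p0."""
    K, P = centres.shape
    X = np.concatenate([c + rng.uniform(-half_width, half_width, size=(M, P))
                        for c in centres]).T        # features x points
    labels = np.repeat(np.arange(K), M)
    mask = rng.random(X.shape) < p0                 # True = observed
    return X, mask, labels

def same_partition(a, b):
    """True if label vectors a and b group the points identically."""
    return np.array_equal(a[:, None] == a[None, :], b[:, None] == b[None, :])

def success_probability(cluster_fn, centres, M, half_width, p0, trials=20, seed=0):
    """Fraction of trials in which cluster_fn recovers the true grouping."""
    rng = np.random.default_rng(seed)
    wins = 0
    for _ in range(trials):
        X, mask, labels = simulate_trial(centres, M, half_width, p0, rng)
        wins += same_partition(cluster_fn(X, mask), labels)
    return wins / trials

def nearest_centre(centres):
    """Oracle stand-in (NOT the proposed algorithm): assign each point to the
    known centre that is closest on the point's observed entries."""
    def cluster_fn(X, mask):
        diff = X[None, :, :] - centres[:, :, None]  # shape (K, P, N)
        d = np.where(mask[None], diff ** 2, 0.0).sum(axis=1)
        return d.argmin(axis=0)
    return cluster_fn
```

Sweeping `p0` and `M` with this harness and recording the returned fraction gives an empirical success surface of the kind plotted in Fig 5 (a) and (c), for whichever clustering routine is supplied.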

Fig. 5:

Experimental results for probability of success. Guarantees are shown for a simulated dataset with K = 2 clusters. The clustering was performed using (32) with an H1 penalty and partial distance based initialization. For (a) and (b) it is assumed that κ = 0.39 and μ0 = 2.3. (a) shows the experimentally obtained probability of success of clustering for clusters with points from a uniform random distribution. (b) shows the theoretical lower bound for the probability of success. (c) shows the experimentally obtained probability of success for a more challenging dataset with κ = 1.15 and μ0 = 13.2. Note that we do not have theoretical guarantees for this case, since our analysis assumes that κ < 1.

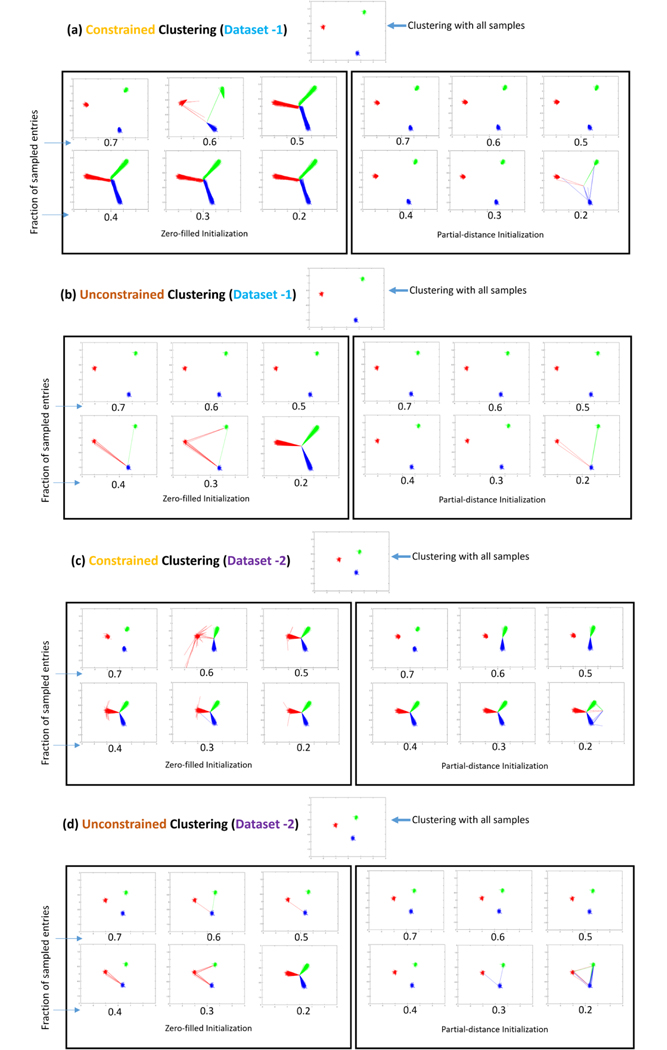

Clustering results with K = 3 simulated clusters are shown in Fig 6. We simulated Dataset-1 with K = 3 disjoint clusters in and M = 200 points in each cluster. To generate this dataset, 3 cluster centres in were chosen from a uniform random distribution. The distances between the 3 pairs of cluster-centres are 3.5, 2.8 and 3.3 units respectively. For each of these 3 cluster centres, 200 noisy instances were generated by adding zero-mean white Gaussian noise of variance 0.1. The dataset was sub-sampled with varying fractions of observed entries (p0 = 1, 0.9, 0.8,..., 0.3, 0.2). The locations of the missing entries were chosen uniformly at random from the full data matrix. We also generated Dataset-2 by halving the distance between the cluster centres, while keeping the intra-cluster variance fixed.

We test both the constrained (30) and unconstrained (35) formulations of our algorithm on these datasets. Both the proposed initialization techniques for the IRLS algorithm (i.e. zero-filling and partial-distance) are also tested here. Since the points lie in , we take a PCA of the points and their estimated centres (similar to Fig 3) and plot the 2 most significant components. The 3 colours distinguish the points according to their ground-truth clusters. Each point xi is joined to its centre estimate by a line. As expected, we observe that the clustering algorithms are more stable with fewer missing entries. We also note that the results are quite sensitive to the initialization technique. We observe that the partial distance based initialization technique out-performs the zero-filled initialization. The unconstrained algorithm with partial distance-based initialization shows superior performance to the alternative schemes. Thus, we use this scheme for subsequent experiments on real datasets.
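A dataset of this kind, its sub-sampling and the 2-component PCA view can be sketched as below. This is an illustrative reconstruction under stated assumptions: the ambient dimension (`P=50` here), the range from which centres are drawn, and all function names are placeholders, so the resulting centre distances will not match the 3.5, 2.8 and 3.3 units reported above. Rows are points in this sketch.

```python
import numpy as np

def make_dataset(K=3, M=200, P=50, noise_var=0.1, seed=0):
    """K centres drawn uniformly (range assumed to be [0, 1]), M noisy copies each."""
    rng = np.random.default_rng(seed)
    centres = rng.uniform(0.0, 1.0, size=(K, P))
    labels = np.repeat(np.arange(K), M)
    noise = rng.normal(0.0, np.sqrt(noise_var), size=(K * M, P))
    return centres[labels] + noise, labels, centres

def subsample(shape, p0, rng):
    """Boolean mask (True = observed) with missing locations chosen uniformly
    at random from the full data matrix; p0 is the observed fraction."""
    n_total = int(np.prod(shape))
    n_missing = int(round((1.0 - p0) * n_total))
    mask = np.ones(n_total, dtype=bool)
    mask[rng.choice(n_total, size=n_missing, replace=False)] = False
    return mask.reshape(shape)

def halve_separation(centres):
    """Shrink centres towards their mean so every pairwise distance is halved."""
    mean = centres.mean(axis=0)
    return mean + 0.5 * (centres - mean)

def pca_2d(points):
    """Project the rows of `points` onto their 2 most significant components."""
    centred = points - points.mean(axis=0)
    _, _, Vt = np.linalg.svd(centred, full_matrices=False)
    return centred @ Vt[:2].T
```

To reproduce the style of Fig 6, the points and their centre estimates would be stacked into one array before calling `pca_2d`, so that both are projected onto the same two components.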

Fig. 6:

Clustering results in simulated datasets. The H1 penalty is used to cluster two datasets with varying fractions of missing entries. Both the constrained and unconstrained formulation results are presented with different initialization techniques (zero-filled and partial-distance based). We show here the 2 most significant principal components of the solutions. The original points {xi} are connected to their cluster centre estimates {ui} by lines. Inter-cluster distances in Dataset-2 are half of those in Dataset-1, while intra-cluster distances remain the same. Consequently, the clustering of Dataset-1 remains accurate at higher fractions of missing entries than that of Dataset-2. For the unconstrained clustering formulation with partial-distance based initialization, the cluster centre estimates are relatively stable with varying fractions of missing entries.

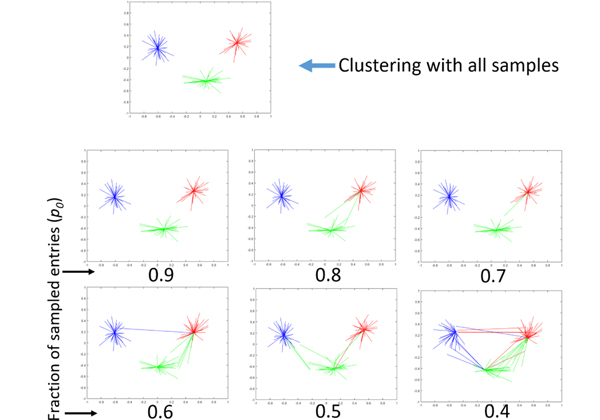

4.3. Clustering of Wine Dataset

We apply the clustering algorithm to the Wine dataset [24]. The data consists of the results of a chemical analysis of wines from 3 different cultivars. Each data point has P = 13 features. The 3 clusters have 59, 71 and 48 points respectively, resulting in a total of 178 data points. We created a dataset without outliers by retaining only M = 40 points per cluster, resulting in a total of 120 data points. We under-sampled these datasets using uniform random sampling with different fractions of missing entries. The results are displayed in Fig 7 using the PCA technique as explained in the previous sub-section. It is seen that the clustering is quite stable and degrades gradually with increasing fractions of missing entries.

Fig. 7:

Clustering on Wine dataset. The H1 penalty is used to cluster the Wine datasets with varying fractions of missing entries.

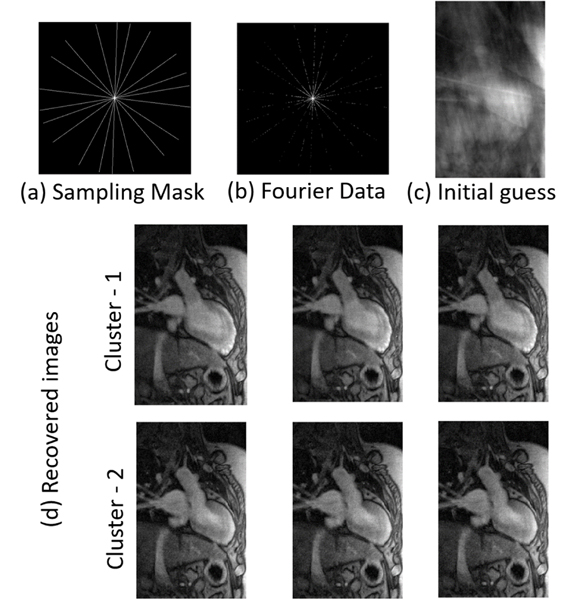

4.4. Cardiac MR Image Reconstruction

We apply the proposed algorithm to the reconstruction of a cardiac MR image time series. In MRI, samples are collected in the Fourier domain. However, due to the slow nature of the acquisition, only a small fraction of the Fourier samples can be acquired in each time frame. The goal of image reconstruction is to recover the image series from the incomplete Fourier observations. In the case of cardiac MRI, the different images in the time series appear in clusters determined by the cardiac and respiratory phase. Thus, the proposed algorithm can be applied to the image reconstruction problem.

The cardiac data was acquired on a Siemens Aera MRI scanner at the University of Iowa. The subject was asked to breathe freely, and 10 radial lines of Fourier data were acquired to reconstruct each image frame. Fourier data corresponding to 1000 frames was acquired and the image series was reconstructed using the proposed unconstrained algorithm. We performed spectral clustering [5] on the reconstructed images to form 20 clusters. A few reconstructed frames belonging to 2 different clusters are illustrated in Fig 8. The images displayed have minimal artefacts and are of diagnostic quality.

Fig. 8:

Cardiac MRI reconstruction results. The images were reconstructed from highly under-sampled Fourier data using the unconstrained formulation. A sampling mask for 1 particular frame is shown in (a), along with the Fourier data for that frame in (b). The missing Fourier entries were filled with zeros and an inverse Fourier Transform was taken to get the corrupted image in (c). The clustering algorithm was applied to this data and the resulting images were clustered into 20 clusters using spectral clustering. (d) shows some reconstructed images from 2 different clusters.
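The zero-filled step described for panels (a) to (c) can be sketched as follows. This is a simplified stand-in: true radial acquisitions sample off the Cartesian grid and are usually handled with gridding or a non-uniform FFT, whereas this sketch rasterizes the spokes onto the grid. The function names and the angle spacing are assumptions, and the clustering-based reconstruction itself is not shown.

```python
import numpy as np

def radial_mask(n, n_lines, angle_offset=0.0):
    """Boolean n x n mask with n_lines spokes through the centre of k-space
    (centre at index n // 2, i.e. an fftshift-ed layout)."""
    mask = np.zeros((n, n), dtype=bool)
    r = np.arange(-(n // 2), n - n // 2)             # signed radius samples
    for theta in angle_offset + np.pi * np.arange(n_lines) / n_lines:
        rows = np.clip(np.round(n // 2 + r * np.sin(theta)).astype(int), 0, n - 1)
        cols = np.clip(np.round(n // 2 + r * np.cos(theta)).astype(int), 0, n - 1)
        mask[rows, cols] = True
    return mask

def zero_filled_recon(image, mask):
    """Keep only the masked Fourier samples, set the rest to zero, and invert."""
    kspace = np.fft.fftshift(np.fft.fft2(image))     # centred Fourier data
    kspace_us = np.where(mask, kspace, 0.0)          # missing entries -> 0
    corrupted = np.fft.ifft2(np.fft.ifftshift(kspace_us))
    return corrupted, kspace_us
```

With 10 spokes on a 64 x 64 grid, at most 640 of the 4096 Fourier locations are retained, which gives a feel for how aggressive the under-sampling per frame is; rotating `angle_offset` from frame to frame would give each frame a different sampling pattern.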

5. Discussion

We have proposed a technique to cluster points when some of the feature values of all the points are unknown. We theoretically studied the performance of an algorithm that minimizes an fusion penalty subject to certain constraints relating to consistency with the known features. We concluded that under favourable clustering conditions, such as well-separated clusters with low intra-cluster variance, the proposed method performs the correct clustering even in the presence of missing entries. However, since the problem is NP-hard, we propose to use other penalties that approximate the norm. We observe experimentally that the H1 penalty is a good surrogate for the norm. This non-convex saturating penalty is shown to perform better in the clustering task than previously used convex norms and penalties. We describe an IRLS based strategy to solve the relaxed problem using the surrogate penalty.

Our theoretical analysis reveals the various factors that determine whether the points will be clustered correctly in the presence of missing entries. It is obvious that the performance degrades with the decrease in the fraction of sampled entries (p0). Moreover, it is shown that the difference between points from different clusters should have low coherence (μ0). This means that the expected clustering should not be dependent on only a few features of the points. Intuitively, if the points in different clusters can be distinguished by only 1 or 2 features, then a point missing these particular feature values cannot be clustered correctly. Moreover, we note that a high number of points per cluster (M), high number of features (P) and a low number of clusters (K) make the data less sensitive to missing entries. Finally, well-separated clusters with low intra-cluster variance (resulting in low values of κ) are desirable for correct clustering.

Our experimental results show great promise for the proposed technique. In particular, for the simulated data, we note that the cluster-centre estimates degrade gradually with increase in the fraction of missing entries. Depending on the characteristics of the data such as number of points and cluster separation distance, the clustering algorithm fails at some particular fraction of missing entries. We also show the importance of a good initialization for the IRLS algorithm, and our proposed initialization technique using partial distances is shown to work very well.

The proposed algorithm performs well on the MR image reconstruction task, resulting in images with minimal artefacts and diagnostic quality. It is to be noted that the MRI images are reconstructed satisfactorily from very few Fourier samples. In this case the fraction of observed samples is around 5%. However, we see that the simulated datasets and the Wine datasets cannot be clustered at such a high fraction of missing samples. The fundamental difference between the MRI dataset and the other datasets is the coherence μ0. For the MRI data, we acquire Fourier samples. Since we know that the low frequency samples are important for image reconstruction, the MRI scanner acquires more low frequency samples. This is a case where high coherence is helpful in clustering. However, for the simulated and Wine data, we do not know a priori which features are more important. In any case the sampling pattern is random, and as predicted by theory, it is more useful to have low coherence. The conclusion is that if the sampling pattern is within our control, it is useful to have high coherence if the relative importance of the different features is known a priori. If this is unknown, then random sampling is preferred and it is useful to have low coherence. Our future work will focus on deriving guarantees for the case of high μ0 when the locations of the important features are known with some confidence, and the sampling pattern can be adapted accordingly.

Our theory assumes well-separated clusters and does not consider the presence of any outliers. Theoretical and experimental analysis for the clustering performance in the presence of outliers needs to be investigated. Improving the algorithm performance in the presence of outliers is a direction for future work. Moreover, we have shown improved bounds for the clustering success in the absence of missing entries when the points within a cluster are assumed to follow a uniform random distribution. We expect this trend to also hold for the case with missing entries. This case will be analyzed in future work.

6. Conclusion

We propose a clustering technique for data in the presence of missing entries. We prove theoretically that a constrained norm minimization problem recovers the clustering correctly even in the presence of missing entries. An efficient algorithm that solves a relaxation of the above problem is presented next. It is demonstrated that the cluster centre estimates obtained using the proposed algorithm degrade gradually with an increase in the number of missing entries. The algorithm is also used to cluster the Wine dataset and reconstruct MRI images from under-sampled Fourier data. The presented theory and results demonstrate the utility of the proposed algorithm in clustering data when some of the feature values of the data are unknown.

APPENDIX A. Proof of Lemma 2.1

Proof. Since x1 and x2 are in the same cluster, . For all the points in this particular cluster, let the pth feature be bounded as: . Then we can construct a vector u, such that . Now, since , the following condition will be satisfied for this particular choice of u:

| (39) |

From this, it follows trivially that the following will also hold:

| (40) |

□

APPENDIX B. Lemma B.1

Lemma B.1.

Consider any pair of points observed by sampling matrices and , respectively. We assume the set of common indices to be of size . Then, for some , the following result holds true regarding the partial distance :

| (41) |

Proof. We use some ideas for bounding partial distances from Lemma 3 of [22]. We rewrite the partial distance as the sum of q variables drawn uniformly at random from . By replacing a particular variable in the summation by another one, the value of the sum changes by at most . Applying McDiarmid’s Inequality, we get:

| (42) |

From our assumptions, we have . We also have by (6). We now substitute , where . Using the results above, we simplify expression (42) as:

| (43) |

□

APPENDIX C. Proof of Lemma 2.2

Proof. We will use proof by contradiction. Specifically, we consider two points x1 and x2 belonging to different clusters and assume that there exists a point u that satisfies:

| (44) |

We now show that the above assumption is violated with high probability. Following the notation of Lemma B.1, we denote the difference between the vectors by y = x1 − x2 and the partial distances by:

| (45) |

Using (44) and applying triangle inequality, we obtain , which translates to , where is the number of commonly observed locations. We need to show that with high probability, the partial distances satisfy:

| (46) |

which will contradict (44). We first focus on finding a lower bound for q. Using the Chernoff bound and setting , we have:

| (47) |

where . Thus, we can assume that with high probability.

Using Lemma B.1, we have the following result for the partial distances:

| (48) |

Since x1 and x2 are in different clusters, we have . We will now determine the value of t for which the above upper bound will equal the RHS of (46):

| (49) |

or equivalently:

| (50) |

Since t > 0, we require κ < 1, where . Using the above, we get the following bound if we assume that :

| (51) |

We now obtain the following probability bound for any :

| (52) |

Combining (47) and (52), the probability for (44) to hold is ≤ 1 − (1 − γ0)(1 − δ0) = β0. □
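The final combination step can be spelled out as follows. The event names E1 and E2 are introduced here for illustration only, and the reading of (47) and (52) as bounds with failure probabilities γ0 and δ0 (the latter conditional on enough common locations) is an interpretation of the surrounding text rather than a restatement of the missing displays.

```latex
\begin{align*}
E_1 &:= \{\text{$q$ meets the lower bound assumed after (47)}\}, & \Pr(E_1) &\ge 1-\gamma_0,\\
E_2 &:= \{\text{the partial distances satisfy (46)}\}, & \Pr(E_2 \mid E_1) &\ge 1-\delta_0,\\
\Pr(E_1 \cap E_2) &= \Pr(E_1)\,\Pr(E_2 \mid E_1) \ge (1-\gamma_0)(1-\delta_0),\\
\Pr\big(\text{(44) holds}\big) &\le 1-\Pr(E_1 \cap E_2) \le 1-(1-\gamma_0)(1-\delta_0) = \beta_0 .
\end{align*}
```

The last line uses the fact that (44) is contradicted whenever both E1 and E2 occur, so (44) can hold only on the complement of their intersection.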

APPENDIX D. Proof of Lemma 2.3

Proof. We construct a graph where each point xi is represented by a node. Lemma 2.1 implies that a pair of points belonging to the same cluster can yield the same u in a feasible solution with probability 1. Hence, we will assume that there exists an edge between two nodes from the same cluster with probability 1. Lemma 2.2 indicates that a pair of points belonging to different clusters can yield the same u in a feasible solution with a low probability of β0. We will assume that there exists an edge between two nodes from different clusters with probability β0. We will now evaluate the probability that there exists a fully-connected sub-graph of size M, where all the nodes have not been taken from the same cluster. We will follow a methodology similar to [28], which gives an expression for the probability distribution of the maximal clique (i.e. largest fully connected sub-graph) size in a random graph. Unlike the proof in [28], in our graph every edge is not present with equal probability.

We define the following random variables:

t := Size of the largest fully connected sub-graph containing nodes from more than 1 cluster

n := Number of M membered complete sub-graphs containing nodes from more than 1 cluster

Our graph can have an M membered clique iff n is non-zero. Thus, we have:

| (53) |

Since the distribution of n is restricted only to the non-negative integers, it can be seen that:

| (54) |

Combining the above 2 results, we get:

| (55) |

Let us consider the formation of a particular clique of size M using m1, m2,...,mK nodes from clusters C1, C2,...,CK respectively such that , and at least 2 of the variables {mj} are non-zero. The number of ways to choose such a collection of nodes is: . In order to form a solution {mj}, we need inter-cluster edges to be present. We recall that each of these edges is present with probability β0. Thus, the probability that such a collection of nodes forms a clique is . This gives the following result:

| (56) |

where is the set of all sets of positive integers {mj} such that: and . Here, the function counts the number of non-zero elements in a set. Thus, we have:

| (57) |

This proves that with probability ≥ 1 − η0, a set of points of cardinality ≥ M not all belonging to the same cluster cannot all have equal cluster-centre estimates. □

APPENDIX E. Proof of Theorem 2.4

Proof. Lemma 2.1 indicates that each original cluster of size M is fully connected with probability 1, while Lemma 2.3 shows that the size of misclassified clusters cannot exceed M − 1 with very high probability. These results enable us to re-express the optimization problem (8) as a simpler maximization problem. We will then show that with high probability, any feasible solution other than the ground-truth solution results in a cost higher than the ground-truth solution.

Let a candidate solution have k groups of sizes M1, M2,...,Mk respectively. The centre estimates for all points within a group are equal. These are different from the centre estimates of other groups. Without loss of generality, we will assume that at most K of these groups each have points belonging to only a single ground-truth cluster, i.e. they are “pure”. The rest of the clusters in the candidate solution are “mixed” clusters. If we have a candidate solution with greater than K pure clusters, then they can always be merged to form K pure clusters; the merged solution will always result in a lower cost.

The objective function in (8) can thus be rewritten as:

| (58) |

Since we assume that the first K clusters are pure, they have a size 0 ≤ Mi ≤ M, i = 1,...,K. The remaining clusters are mixed and have size ≤ M − 1 with probability ≥ 1 − η0. Hence, we have the constraints 0 ≤ Mi ≤ (M − 1), i = K + 1,...,k. We also have a constraint on the total number of points, i.e. . Thus, the problem (8) can be rewritten as the constrained optimization problem:

| (59) |

Note that we cannot have k < K, with probability ≥ 1 − η0, since that involves a solution with cluster size > M. We can evaluate the best solution for each possible value of k in the range K ≤ k ≤ MK. Then we can compare these solutions to get the solution with the highest cost. We note that the feasible region is a polyhedron and the objective function is convex. Thus, for each value of k, we only need to check the cost at the vertices of the polyhedron formed by the constraints, since the cost at all other points in the feasible region will be lower. The vertex points are formed by picking k − 1 out of the k box constraints and setting Mi to be equal to one of the 2 possible extremal values. We note that all the vertex points have either K or K + 1 non-zero values. As a simple example, if we choose M = 10 and K = 4, then the vertex points of the polyhedron (corresponding to different solutions {Mi}) are given by all possible permutations of the following:

(10,10,10,10,0,0...0): 4 clusters

(10,10,10,0,1,9,0...0): 5 clusters

(10,10,0,0,2,9,9,0...0): 5 clusters

(10,0,0,0,3,9,9,9,0...0): 5 clusters

(0,0,0,0,4,9,9,9,9,0...0): 5 clusters

In the general case the vertices are given by permutations of the following:

(M, M,...,M, 0, 0...0): K clusters

(M, M,...,0, 0, 1, M − 1, 0...0): K + 1 clusters

(M, M,...,0, 0, 2, M − 1, M − 1...0): K + 1 clusters

…

(0, 0,...0, K, M − 1, M − 1...M − 1, 0): K + 1 clusters

Now, it is easily checked that the 1st candidate solution in the list (which is also the ground-truth solution) has the maximum cost. Mixed clusters with size > M − 1 cannot be formed with probability ≥ 1 − η0. Thus, with the same probability, the solution to the optimization problem (8) is identical to the ground-truth clustering. This concludes the proof of the theorem. □
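The "easily checked" comparison can be illustrated numerically for the M = 10, K = 4 example. The objective in (58) and (59) is not reproduced in this text, so this sketch assumes that the maximization problem scores a candidate by the sum of squared group sizes (a convex function of the sizes, which grows as more pairs of points share a centre estimate). That objective and both function names are assumptions of the sketch.

```python
def merge_score(sizes):
    """Assumed objective: sum of squared group sizes (larger is better)."""
    return sum(m * m for m in sizes)

def vertex_family(M, K):
    """Non-zero entries of the vertex patterns listed above, one per pattern:
    K - j pure clusters of size M, one pure cluster of size j, j mixed clusters
    of size M - 1 (j = 0 gives the ground-truth solution)."""
    yield (M,) * K
    for j in range(1, K + 1):
        yield (M,) * (K - j) + (j,) + (M - 1,) * j

M, K = 10, 4
vertices = list(vertex_family(M, K))
scores = [merge_score(v) for v in vertices]
for v, s in zip(vertices, scores):
    print(v, "total points:", sum(v), "score:", s)
```

Under this assumed objective, the score of pattern j falls short of the ground-truth score by j(2M − 1 − j), which is positive for 1 ≤ j ≤ K whenever K < 2M − 1, so the pure solution (M, M,...,M) attains the maximum.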

APPENDIX F. Upper Bound for η0 in the 2-cluster case

Proof. We introduce the following notation:

F(i) = i(M − i)log β0, for i ∈ [1, M − 1].

G(i) = 2[log Γ(M + 1) − log Γ(i + 1) − log Γ (M − i + 1)], for i ∈ [1, M − 1] where Γ is the Gamma function.

We note that both the functions F and G are symmetric about , and have unique minimum and maximum respectively for . We will show that the maximum for the function F + G is achieved at the points i = 1, M − 1. We note that:

| (60) |

where Ψ is the digamma function, defined as the log derivative of the Γ function. We now use the expansion:

| (61) |

Substituting, we get:

| (62) |

We also have:

| (63) |

Adding, we get:

| (64) |

Now, in order to ensure that , we have to arrive at conditions such that:

| (65) |

Since the RHS is monotonically increasing in the interval the above condition reduces to:

| (66) |

Under the above condition, for all :

| (67) |

Thus, the function F + G reaches its maxima at the extremal points given by i = 1, M − 1. For positive integer values of i, i.e. i ∈ {1,2,...,M − 1}:

| (68) |

Thus, the function also reaches its maxima at i = 1, M − 1. This maximum value is given by: . This gives the following upper bound for η0:

| (69) |

□
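The behaviour of F + G can be checked numerically with the definitions given at the start of this appendix. The sketch below assumes, following the clique-counting argument of Appendix D specialized to K = 2, that η0 equals the sum of exp(F(i) + G(i)) over i = 1,...,M − 1. That identification, the function names, and the crude bound of (M − 1) times the largest term are assumptions of this sketch and need not coincide with expression (69).

```python
from math import exp, lgamma, log

def F(i, M, beta0):
    """F(i) = i (M - i) log(beta0); requires 0 < beta0 <= 1."""
    return i * (M - i) * log(beta0)

def G(i, M):
    """G(i) = 2 [log Gamma(M+1) - log Gamma(i+1) - log Gamma(M-i+1)]."""
    return 2.0 * (lgamma(M + 1) - lgamma(i + 1) - lgamma(M - i + 1))

def eta0_two_clusters(M, beta0):
    """Assumed form of eta0 for K = 2: sum over i of C(M, i)^2 * beta0^(i (M - i))."""
    return sum(exp(F(i, M, beta0) + G(i, M)) for i in range(1, M))

def eta0_crude_bound(M, beta0):
    """(M - 1) times the largest summand; a generic bound, labelled as such."""
    peak = max(F(i, M, beta0) + G(i, M) for i in range(1, M))
    return (M - 1) * exp(peak)

def argmax_F_plus_G(M, beta0):
    """Integer i in {1, ..., M - 1} at which F + G is largest (first such i)."""
    return max(range(1, M), key=lambda i: F(i, M, beta0) + G(i, M))
```

For small β0 the largest summand sits at the end points i = 1 and i = M − 1, in line with the argument above; for β0 close to 1 the binomial term G dominates and the peak moves towards the middle, which is why a condition such as (66) is needed.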

APPENDIX G. Proof of Theorem 2.5

Proof. We consider any two points x1 and x2 that are in different clusters. Let us assume that there exists some u satisfying the data consistency constraint:

| (70) |

Using the triangle inequality, we have and consequently, . However, if we have a large inter-cluster separation , then this is not possible.

Thus, if , then points in different clusters cannot be misclassified to a single cluster. Among all feasible solutions, clearly the solution to problem (25) with the minimum cost is the one where all points in the same cluster merge to the same u. Thus, κ < 1 ensures that we will have the correct clustering. □

APPENDIX H. Proof of Lemma 2.6

Proof. The idea is similar to that in Theorem 2.5. We will show that two points x1 and x2 that are in different clusters satisfy with high probability, which implies that (29) is violated.

Let points in C1 and C2 follow uniform random distributions in with centres c1 and c2 respectively. The expected distance between x1 ∈ C1 and x2 ∈ C2 is given by:

| (71) |

where and are the pth features of ci and xi respectively, and . Let , for i = 1,2,...,P. Using McDiarmid’s inequality:

| (72) |

Let . Then we have:

| (73) |

We note that the RHS above is a decreasing function of c12. Thus, we consider some c ≤ c12, such that c is the minimum distance between any 2 cluster centres in the dataset. We then have the following bound:

| (74) |

To ensure t > 0, we require: , or equivalently, .

We now get the probability bound:

| (75) |

Thus, (29) is violated with probability exceeding 1 − β1. □

References

- [1] Saxena A, Prasad M, Gupta A, Bharill N, Patel OP, Tiwari A, Er MJ, Ding W, and Lin C-T, “A review of clustering techniques and developments,” Neurocomputing, 2017.

- [2] Jain AK, Murty MN, and Flynn PJ, “Data clustering: a review,” ACM computing surveys (CSUR), vol. 31, no. 3, pp. 264–323, 1999.

- [3] MacQueen J et al., “Some methods for classification and analysis of multivariate observations,” in Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, vol. 1, no. 14. Oakland, CA, USA, 1967, pp. 281–297.

- [4] Bradley PS, Mangasarian OL, and Street WN, “Clustering via concave minimization,” in Advances in neural information processing systems, 1997, pp. 368–374.

- [5] Ng AY, Jordan MI, Weiss Y et al., “On spectral clustering: Analysis and an algorithm,” in NIPS, vol. 14, no. 2, 2001, pp. 849–856.

- [6] Awasthi P, Bandeira AS, Charikar M, Krishnaswamy R, Villar S, and Ward R, “Relax, no need to round: Integrality of clustering formulations,” in Proceedings of the 2015 Conference on Innovations in Theoretical Computer Science. ACM, 2015, pp. 191–200.

- [7] Ward JH Jr, “Hierarchical grouping to optimize an objective function,” Journal of the American statistical association, vol. 58, no. 301, pp. 236–244, 1963.

- [8] Hocking TD, Joulin A, Bach F, and Vert J-P, “Clusterpath: an algorithm for clustering using convex fusion penalties,” in 28th international conference on machine learning, 2011, p. 1.

- [9] Zhu C, Xu H, Leng C, and Yan S, “Convex optimization procedure for clustering: Theoretical revisit,” in Advances in Neural Information Processing Systems, 2014, pp. 1619–1627.

- [10] De Souto MC, Jaskowiak PA, and Costa IG, “Impact of missing data imputation methods on gene expression clustering and classification,” BMC bioinformatics, vol. 16, no. 1, p. 64, 2015.

- [11] Bell RM, Koren Y, and Volinsky C, “The bellkor 2008 solution to the netflix prize,” Statistics Research Department at AT&T Research, 2008.

- [12] Brick JM and Kalton G, “Handling missing data in survey research,” Statistical methods in medical research, vol. 5, no. 3, pp. 215–238, 1996.

- [13] Wagstaff KL and Laidler VG, “Making the most of missing values: Object clustering with partial data in astronomy,” in Astronomical Data Analysis Software and Systems XIV, vol. 347, 2005, p. 172.

- [14] Dixon JK, “Pattern recognition with partly missing data,” IEEE Transactions on Systems, Man, and Cybernetics, vol. 9, no. 10, pp. 617–621, 1979.

- [15] Chen GK, Chi EC, Ranola JMO, and Lange K, “Convex clustering: An attractive alternative to hierarchical clustering,” PLoS Comput Biol, vol. 11, no. 5, p. e1004228, 2015.

- [16] Hathaway RJ and Bezdek JC, “Fuzzy c-means clustering of incomplete data,” IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 31, no. 5, pp. 735–744, 2001.

- [17] Sarkar M and Leong T-Y, “Fuzzy k-means clustering with missing values,” in Proceedings of the AMIA Symposium. American Medical Informatics Association, 2001, p. 588.

- [18] Chi JT, Chi EC, and Baraniuk RG, “k-pod: A method for k-means clustering of missing data,” The American Statistician, vol. 70, no. 1, pp. 91–99, 2016.

- [19] Hunt L and Jorgensen M, “Mixture model clustering for mixed data with missing information,” Computational Statistics & Data Analysis, vol. 41, no. 3, pp. 429–440, 2003.

- [20] Lin TI, Lee JC, and Ho HJ, “On fast supervised learning for normal mixture models with missing information,” Pattern Recognition, vol. 39, no. 6, pp. 1177–1187, 2006.

- [21] Candès EJ and Recht B, “Exact matrix completion via convex optimization,” Foundations of Computational mathematics, vol. 9, no. 6, p. 717, 2009.

- [22] Eriksson B, Balzano L, and Nowak RD, “High-rank matrix completion and subspace clustering with missing data,” CoRR, vol. abs/1112.5629, 2011. [Online]. Available: http://arxiv.org/abs/1112.5629

- [23] Elhamifar E, “High-rank matrix completion and clustering under self-expressive models,” in Advances in Neural Information Processing Systems, 2016, pp. 73–81.

- [24] Lichman M, “UCI machine learning repository,” 2013. [Online]. Available: http://archive.ics.uci.edu/ml

- [25] Chi EC and Lange K, “Splitting methods for convex clustering,” Journal of Computational and Graphical Statistics, vol. 24, no. 4, pp. 994–1013, 2015.

- [26] Pan W, Shen X, and Liu B, “Cluster analysis: unsupervised learning via supervised learning with a non-convex penalty,” Journal of Machine Learning Research, vol. 14, no. 1, pp. 1865–1889, 2013.

- [27] Chartrand R and Yin W, “Iteratively reweighted algorithms for compressive sensing,” in Acoustics, speech and signal processing, 2008. ICASSP 2008. IEEE international conference on. IEEE, 2008, pp. 3869–3872.

- [28] Matula DW, The largest clique size in a random graph. Department of Computer Science, Southern Methodist University, 1976.