Abstract

Objective

Teleneuropsychology (TNP) has been shown to be a valid assessment method compared with in-person neuropsychological evaluations. Interest in delivering TNP directly to patients’ homes has arisen in response to the coronavirus disease 2019 (COVID-19) pandemic. However, prior research has typically involved patients tested in clinical settings, and the validity of in-home TNP testing has not yet been established. The present study aims to explore the validity and clinical utility of in-home TNP testing in a mixed clinical sample in the wake of COVID-19.

Methods

Test profiles for 111 in-home TNP patients were retrospectively compared with those of 120 patients who completed in-person evaluations. The TNP test battery consisted of tests measuring attention/processing speed, verbal memory, naming, verbal fluency, and visuoconstruction. TNP scores of cognitively normal (CN) patients were compared with those of patients with neurocognitive disorders (NCD), and score profiles were examined among suspected diagnostic groups of Alzheimer’s disease (AD), Parkinson’s disease (PD), and vascular disease (VaD).

Results

TNP test scores did not significantly differ from in-person testing across all tests except the Hopkins Verbal Learning Test-Revised Discrimination Index. Within the TNP group, significant differences between the CN and NCD groups were found for all tests, and the memory and semantic fluency tests yielded large effect sizes (d ≥ 0.8). Score profiles among the AD, PD, and VaD groups were explored.

Conclusions

These findings support the validity of in-home TNP testing compared with in-person neuropsychological testing. Practice considerations, limitations, and future directions are discussed.

Keywords: Neuropsychological assessment, Health care, Telehealth, Validity, Technology

Introduction

In response to the coronavirus disease 2019 (COVID-19) global health crisis, health and public officials across the United States urged citizens to stay at home to help reduce the spread of the virus (Schuchat, 2020). In response, health care systems limited in-person services and procedures to decrease viral transmission and conserve hospital resources, such as personal protective equipment. The rapid onset of this public health crisis led to a significant increase in the utilization of telehealth services across most health care settings in the United States (Galewitz, 2020). The delivery of clinical neuropsychological services using teleconferencing video technology (i.e., teleneuropsychology [TNP]) is a growing practice area that is generally well accepted by patients (Parikh et al., 2013). However, TNP was not a mainstay in professional practice discourse until the onset of the COVID-19 pandemic. Adoption of TNP was accelerated by updates to the reimbursement for these services implemented by the Centers for Medicare and Medicaid Services (2020) and numerous private insurers. In addition, many states relaxed their licensing statutes through emergency orders allowing for clinicians to evaluate patients across state lines (American Psychological Association Services Inc., 2020). Although these steps have improved the feasibility of delivering TNP, clinicians have recently expressed concern regarding best practice guidelines and recommendations for successfully implementing TNP into clinical practice (Marra, Hoelzle, Davis, & Schwartz, 2020b).

Practice guidelines for implementing TNP have existed for the last two decades. An early paper from Schopp, Johnstone, and Merrell (2000) explored the effectiveness of clinical interviews conducted using videoconferencing technology; implications for reducing health care costs, ethical considerations, and ways to encourage clinicians to adopt new technology were also investigated. Grosch, Gottlieb, and Cullum (2011) explored and developed guidelines to address ethical issues specific to TNP, including informed consent, privacy and confidentiality, competence, test standardization and security, licensing and billing issues, technological matters, care for special populations, and staffing concerns. More recently, the Inter-Organizational Practice Committee, a committee of the chairs of the major professional neuropsychological organizations, disseminated guidelines and best practices for TNP (Bilder et al., 2020). Furthermore, two academic medical center-based neuropsychology groups have recently published their own TNP guidelines while highlighting a practice model for TNP testing (Hewitt & Loring, 2020; Marra, Hamlet, Bauer, & Bowers, 2020a). Although these updated guidelines have surely been helpful to clinicians in developing TNP practice models since the onset of COVID-19, neither model included empirical data. Despite these resources guiding clinicians in implementing TNP practice, concerns regarding the validity and clinical utility of TNP testing persist (American Academy of Clinical Neuropsychology, 2020).

Researchers have investigated the validity of TNP testing primarily by comparing scores from TNP and in-person testing methods. A meta-analysis (Brearly et al., 2017) of 12 TNP studies found that verbally administered tests, such as list learning, Digit Span (DS), and verbal fluency, were generally unaffected by the method of administration. However, TNP versions of tests involving visual and motor components (i.e., picture naming and Clock Drawing Tests [CDT]) were less supported. Although these results are promising, it is notable that the TNP testing in all studies included in the meta-analysis involved patients or participants undergoing TNP testing in either a controlled clinic or laboratory setting. A recent study from Barcellos and colleagues (2020) involved in-home TNP testing with patients with multiple sclerosis. The authors’ analyses showed that tests of verbal memory and processing speed could be administered remotely to patients in their homes. Nevertheless, there remains a paucity of evidence for the validity of in-home TNP testing; thus, the clinical utility of this testing method has yet to be fully established.

Perhaps reflecting the limited research base, the practical adoption of TNP testing prior to the COVID-19 pandemic was limited, with one survey of neuropsychologists showing that only 11% of respondents previously utilized TNP testing (Chapman et al., 2020). Even after the COVID-19 pandemic began, most clinicians were reluctant or unable to adapt their model of service delivery to accommodate TNP. A survey (Marra et al., 2020b) of neuropsychologists conducted in March 2020 found that just over half of respondents were using TNP for intake and feedback purposes, but not for test administration. Another survey of neuropsychologists by the American Academy of Clinical Neuropsychology Board of Directors (2020) found that only 1 in 10 neuropsychologists were utilizing or planned to utilize TNP services at all, and of those respondents using TNP, only about one-third were using TNP to conduct testing. Furthermore, almost one in three survey respondents expressed concern about test validity and reliability issues with TNP. These concerns involving test validity, test security, and technological difficulties were echoed in the international professional community (Hammers, Stolwyk, Harder, & Cullum, 2020).

In response to COVID-19 social distancing and stay-at-home guidelines, our neuropsychology clinic developed a TNP model that involved all major evaluation elements (i.e., interviews, testing, and feedback) being conducted with patients in their own homes. This in-home TNP model was developed following a review of the extant TNP literature (Brearly et al., 2017) and the practice guidelines disseminated by professional neuropsychological organizations and academic groups (Bilder et al., 2020; Grosch et al., 2011; Marra et al., 2020a). The development of this TNP model was bolstered by our health care system’s expeditious implementation of the technological infrastructure necessary for delivering system-wide telehealth services, including video visit software (Zoom, Inc.), scheduling procedures, and electronic medical record documentation and billing guidelines. Although a potential benefit of this in-home TNP model was to increase access to patient care, especially during the COVID-19 stay-at-home orders, in-home delivery of TNP services was mostly uncharted territory warranting investigation.

The goal of the present study was to examine the validity of an in-home TNP model in a mixed clinical, adult outpatient sample in the wake of the COVID-19 public health crisis. The two main research questions were as follows: (1) Is in-home TNP testing a valid method for delivering neuropsychological services compared with in-person testing? and (2) Does testing using in-home TNP provide clinical utility in distinguishing patient groups? It was predicted that the neuropsychological test scores would not differ significantly based on the testing method. Based on this predicted outcome, it was also hypothesized that TNP testing would provide clinically useful neuropsychological data pertaining to level and pattern of cognitive impairment.

Methods

Participants

This study was approved by the University of Kansas Medical Center Institutional Review Board. A retrospective medical record review was conducted for a series of consecutive patients referred for evaluation in an academic medical center-based adult outpatient neuropsychology clinic located in a first-ring suburb outside a medium-sized, midwestern city. Patients were categorized into two groups based on the testing method, either TNP or in-person neuropsychology (IPNP). A total of 154 telehealth patients were seen over the study period (January–June 2020). Of those, 36 patients were not administered TNP testing and were rescheduled for in-person testing (11 patients due to clinician decision, 10 patients due to technical difficulties/internet connection, and 15 patients due to other reasons/no reason given). An additional four TNP patients were excluded due to test engagement/effort concerns, two patients were not seen due to state statutory/license limitations, and one patient chose not to complete testing due to personal preference. The final TNP sample consisted of 111 patients who were included in analyses.

The average age of the TNP group was 58.91 (standard deviation [SD] = 14.30, range = 19–89) and average years of education was 15.14 (SD = 2.62, range = 9–20). The TNP group consisted of 66 women (59.5%), and 94 patients self-identified as Caucasian (84.7%), 11 as African American (9.9%), four as Asian American (3.6%), and two patients identified as Latinx/Hispanic (1.8%). The second subgroup consisted of patients who completed an in-person evaluation using the IPNP service model. The patients in this subgroup were selected randomly from the larger pool of patients evaluated in clinic in the first 6 months of 2020. The sample size of 120 was chosen for this subgroup to keep the size similar to the TNP group. To account for possible selection bias due to the stay-at-home guidelines implemented following the onset of the COVID-19 pandemic in March 2020, half of the IPNP group (n = 60) consisted of patients with evaluations conducted prior to the pandemic and half (n = 60) consisted of patients evaluated after the TNP model was introduced. The IPNP group’s average age was 61.65 (SD = 16.90, range = 18–90), education in years was 14.68 (SD = 2.89, range = 6–20), and the group included 61 women (50.8%). Of the IPNP patients, 99 identified as Caucasian (82.5%), 16 as African American (13.3%), two as Asian American (1.7%), and three patients self-identified as Latinx/Hispanic (2.5%). Demographics were compared between the two IPNP subgroups (i.e., those who completed testing before and after the TNP testing model was implemented); these groups did not statistically differ in age, education, sex, or race/ethnicity.

To evaluate the clinical utility of in-home TNP testing, patients were assigned a diagnostic label based on their level of impairment using the Diagnostic and Statistical Manual, 5th edition (American Psychiatric Association, 2013) diagnostic criteria for mild neurocognitive disorder (MiNCD) and major neurocognitive disorder (MaNCD). These designations were assigned separately by two fellowship-trained clinical neuropsychologists, with at least one of the raters in each pair having obtained board certification in clinical neuropsychology. In cases in which the designations were not matched, a third clinician acted as a “tiebreaker” and the modal designation was assigned. Patients who were deemed cognitively healthy and did not meet criteria for a neurocognitive disorder were designated as cognitively normal (CN).

The TNP subgroup consisted of 52 patients categorized as CN, 46 patients categorized as MiNCD, and 13 patients categorized as MaNCD. Of the MiNCD patients, the primary diagnoses were vascular disease/stroke (VaD; n = 12), Alzheimer’s disease (AD; n = 11), Parkinson’s disease (PD; n = 9), multiple sclerosis (MS; n = 4), epilepsy (n = 3), brain injury (n = 2), cancer (n = 2), frontotemporal dementia (FTD; n = 2), and other (n = 2). Of the MaNCD patients, the primary diagnoses were AD (n = 8), VaD (n = 2), PD (n = 1), FTD (n = 1), and MS (n = 1). The most common diagnosis in the CN group was no suspected diagnosis (ND, n = 27), followed by psychiatric disorder (n = 9), pain disorder (n = 4), sleep disorder (n = 3), PD (n = 2), stroke (n = 2), MS (n = 1), epilepsy (n = 1), cancer (n = 1), brain injury (n = 1), and other (n = 1).

Procedures

The TNP evaluations involved neuropsychologists conducting interviews and providing feedback, with trained psychometrists administering the TNP tests. All testing was administered by the examiner using a computer located in an adult outpatient clinic. Patients connected to a secure and Health Insurance Portability and Accountability Act (HIPAA)-compliant video meeting using a secure Zoom URL sent directly to their password-protected patient portal (MyChart®). Once patients provided informed consent to participate in the visit, the neuropsychologist typically conducted a clinical interview. In cases in which TNP testing was not indicated, the clinician referred the patient either for in-person testing at a later date or to other medical specialties. Following the interview, the neuropsychological test battery was administered to the patient. The majority of TNP patients were in their own homes, whereas some were in the home of a close family member or friend. Prior to the start of testing, patients were instructed to eliminate distractions from their immediate surroundings and to ensure that no other person was nearby during testing, and they were encouraged to provide their best effort and engagement during the testing session. Following the session, the test results were reviewed by the clinician who then provided the patient feedback. The average duration of testing involved in the TNP evaluations was 55.21 min (SD = 14.48, range = 27–119). The IPNP evaluations were typically conducted in the same order as the TNP visits, with all elements of the IPNP evaluation conducted during the same clinic visit.

Due to the retrospective chart review study design, the neuropsychological tests included in the IPNP battery were administered based upon the preference of the clinician at the time of the evaluation. The TNP test battery was designed to provide an abbreviated cognitive profile and measure the same cognitive constructs as a recently validated TNP battery (Wadsworth et al., 2018). The tests that were not identical were considered to be similar in the construct being measured (e.g., different letters on phonemic fluency trials). The TNP and IPNP test batteries mostly overlapped, and the standardized instructions and scoring methodology from the Calibrated Neuropsychological Normative System (Schretlen, Testa, & Pearlson, 2010) were used for the following tests: Wechsler Adult Intelligence Scale, 3rd edition, DS (Wechsler, 1997); Calibrated Ideational Fluency Assessment (CIFA; Schretlen & Vannorsdall, 2010)—Verbal Fluency S Words, P Words, Animals, and Supermarket Items trials; Boston Naming Test 30-item short form (BNT-30; Goodglass & Kaplan, 1983); Hopkins Verbal Learning Test-Revised (HVLT-R; Benedict, Schretlen, Groninger, & Brand, 1998); and the Geriatric Depression Scale 15-item short form (Yesavage & Sheikh, 1986). The TNP test battery contained the following additional tests: Mini-Mental State Examination (MMSE; Folstein, Robins, & Helzer, 1983), Oral Trail Making Test Parts A and B (OTMT; Ricker, Axelrod, & Houtler, 1996), and CDT (Schretlen et al., 2010). Tests administered in the IPNP test battery, but not the TNP battery, were not included in these analyses.

Statistical Analyses

Analyses of data were performed using JASP version 0.13.1.0 (JASP Team, 2020), a free, open-source statistical test software that has been recognized for its functionality and user-friendly interface (Love et al., 2015). Descriptive statistics were compiled for the total sample including demographic variables (age, education, sex, and race/ethnicity) and the neuropsychological test variables (raw scores). Independent samples t-tests were used to compare the demographic and neuropsychological variables between the TNP and IPNP groups. Cohen’s d was utilized to compute effect sizes for the mean difference comparisons. Of note, the MMSE and CDT were not included in this initial set of analyses due to an insufficient number of scores for patients in the IPNP group. Additional independent samples t-tests were conducted to compare the demographic and neuropsychological variables among the TNP group based on level of impairment. Due to the limited number of MaNCD patients compared with the CN and MiNCD subgroups, the MaNCD patients were combined with the MiNCD subgroup for the final set of analyses (henceforth, referred to as NCD subgroup). Standardized scores (Z-scores) were computed for the TNP test variables among the clinical subgroups (AD, PD, and VaD) using the ND clinical subgroup as the control group of reference. Analysis of variance (ANOVA) was used to compare the neuropsychological test profiles among the clinical subgroups to the ND subgroup. Bonferroni post hoc correction was employed to allow for multiple comparisons.
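As an illustration of the effect-size computation described above, the following minimal sketch recomputes Cohen’s d and the independent samples t statistic from group summary statistics. It assumes the pooled-SD form of Cohen’s d and Student’s equal-variance t-test; the text does not state which variants were used in JASP, and the function names are hypothetical. The input values are the HVLT-R Discrimination Index summary statistics reported in Table 1, with the groups ordered IPNP minus TNP to match the sign of the reported t value.

```python
import math


def pooled_sd(n1, sd1, n2, sd2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def cohens_d(n1, m1, sd1, n2, m2, sd2):
    """Cohen's d using the pooled SD (assumed variant)."""
    return (m1 - m2) / pooled_sd(n1, sd1, n2, sd2)


def student_t(n1, m1, sd1, n2, m2, sd2):
    """Equal-variance independent samples t statistic (assumed variant)."""
    se = pooled_sd(n1, sd1, n2, sd2) * math.sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / se


# (n, mean, SD) for the HVLT-R Discrimination Index, as reported in Table 1
ipnp = (116, 8.07, 3.37)
tnp = (111, 9.04, 2.70)

d = cohens_d(*ipnp, *tnp)
t = student_t(*ipnp, *tnp)
df = ipnp[0] + tnp[0] - 2  # degrees of freedom for the equal-variance test

print(f"t({df}) = {t:.3f}, |d| = {abs(d):.2f}")
```

Under these assumptions the sketch reproduces the reported effect size of 0.32 and yields a t value close to the reported −2.378; small discrepancies are expected because the tabled means and SDs are rounded.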

Results

Descriptives for the demographic and neuropsychological variables are presented in Table 1 along with the group comparison analysis. The TNP and IPNP groups did not differ statistically on any demographic factor, including age (t(229) = 1.325, p = .187), education (t(229) = −1.289, p = .199), sex (χ²(1, 231) = 1.733, p = .188), and race/ethnicity (χ²(3, 231) = 1.574, p = .665). Furthermore, years of education among the race/ethnicity groups did not differ for the TNP group (χ²(30, 111) = 26.667, p = .641) or the IPNP group (χ²(39, 120) = 37.744, p = .527). The TNP and IPNP group means across all neuropsychological test scores were not statistically different, with the exception of the HVLT-R Discrimination Index score (t(225) = −2.378, p = .018, d = 0.32).
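The chi-squared comparison of sex between the testing method groups can be rechecked from the reported counts (66 of 111 TNP patients and 61 of 120 IPNP patients were women). The sketch below is an illustration only: it assumes a Pearson chi-squared test without continuity correction, which the text does not specify, and the function name is hypothetical. For one degree of freedom, the upper-tail p-value can be written with the complementary error function, so no external libraries are needed.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-squared test (no continuity correction) for [[a, b], [c, d]]."""
    observed = ((a, b), (c, d))
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: TNP, IPNP; columns: women, men (counts taken from the Participants section)
chi2, p = chi_square_2x2(66, 111 - 66, 61, 120 - 61)
print(f"chi2(1) = {chi2:.3f}, p = {p:.3f}")
```

Under these assumptions the result agrees with the reported values for sex (1.733, p = .188).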

Table 1.

Testing method group comparisons for demographic and neuropsychological variables

| Variable | TNP n | TNP Mean | TNP SD | IPNP n | IPNP Mean | IPNP SD | t | d |
|---|---|---|---|---|---|---|---|---|
| Age | 111 | 58.91 | 14.30 | 120 | 61.65 | 16.90 | 1.325 | 0.17 |
| Education | 111 | 15.14 | 2.62 | 120 | 14.68 | 2.89 | −1.289 | 0.17 |
| Sex (% female) | 66 | 59.46 | | 61 | 50.83 | | 1.733† | |
| Ethnicity (%) | | | | | | | 1.574† | |
| Caucasian | 94 | 84.69 | | 99 | 82.50 | | | |
| African American | 11 | 9.91 | | 16 | 13.33 | | | |
| Asian American | 4 | 3.60 | | 2 | 1.67 | | | |
| Latinx | 2 | 1.80 | | 3 | 2.50 | | | |
| MMSE | 73 | 27.60 | 2.72 | | | | | |
| OTMT Part A | 107 | 8.65 | 2.80 | | | | | |
| OTMT Part B | 102 | 45.94 | 23.79 | | | | | |
| DS Forward | 111 | 6.04 | 1.24 | 117 | 6.25 | 1.36 | 1.224 | 0.16 |
| DS Backward | 111 | 4.48 | 1.21 | 117 | 4.35 | 1.22 | −0.788 | 0.10 |
| CIFA S Words | 107 | 11.28 | 4.19 | 117 | 12.08 | 5.20 | 1.255 | 0.17 |
| CIFA P Words | 90 | 10.82 | 4.30 | 116 | 11.91 | 5.40 | 1.570 | 0.22 |
| CIFA Animals | 107 | 16.66 | 5.86 | 117 | 15.74 | 6.00 | −1.160 | 0.16 |
| CIFA Supermarket Items | 74 | 21.50 | 8.85 | 109 | 19.89 | 8.28 | −1.255 | 0.19 |
| BNT-30 | 108 | 28.00 | 3.01 | 114 | 27.56 | 3.59 | −0.984 | 0.13 |
| CDT | 93 | 4.31 | 0.97 | | | | | |
| HVLT-R Total 1–3 | 111 | 21.40 | 6.31 | 117 | 20.67 | 6.98 | −0.827 | 0.11 |
| HVLT-R Delayed Recall | 111 | 6.16 | 4.02 | 117 | 5.31 | 4.32 | −1.543 | 0.20 |
| HVLT-R Percent Retention | 111 | 64.82 | 37.93 | 117 | 57.23 | 42.55 | −1.418 | 0.19 |
| HVLT-R Discrimination Index | 111 | 9.04 | 2.70 | 116 | 8.07 | 3.37 | −2.378* | 0.32 |
| GDS-15 | 110 | 4.66 | 3.67 | 84 | 3.85 | 3.67 | −1.54 | 0.22 |

Notes: N = 231; t = independent samples t-test; d = Cohen’s d; † = Chi-squared test; *p < .05; TNP = teleneuropsychology; IPNP = in-person neuropsychology; MMSE = Mini-Mental State Examination; OTMT = Oral Trail Making Test; DS = Digit Span; CIFA = Calibrated Ideational Fluency Assessment; BNT-30 = Boston Naming Test 30-item; CDT = Clock Drawing Test; HVLT-R = Hopkins Verbal Learning Test-Revised; GDS-15 = Geriatric Depression Scale 15-item.

Within the TNP group, the CN subgroup was significantly younger than the NCD subgroup (t(109) = −3.778, p < .001), but the subgroups did not significantly differ in education level (t(109) = 1.571, p = .299) or race/ethnicity group makeup (χ²(3, 111) = 1.037, p = .792). Females comprised a significantly greater proportion of the CN subgroup compared with the NCD subgroup (56.0% vs. 43.9%, respectively; χ²(1, 111) = 5.550, p = .018). There were several significant test score differences between the level of impairment subgroups. The tests with large effect sizes (d > 0.8) included HVLT-R Delayed Recall (t(109) = 7.423, p < .001, d = 1.41), HVLT-R Percent Retention (t(109) = 6.481, p < .001, d = 1.23), CIFA-Supermarket Items (t(72) = 5.244, p < .001, d = 1.22), HVLT-R Total Trials 1–3 (t(109) = 6.189, p < .001, d = 1.18), HVLT-R Discrimination Index (t(109) = 5.773, p < .001, d = 1.10), CIFA-Animals (t(105) = 5.255, p < .001, d = 1.02), and CIFA-P Words (t(88) = 3.899, p < .001, d = 0.82). See Table 2 for the group comparisons analysis between the CN and the NCD subgroups.

Table 2.

Demographic and neuropsychological test scores compared between TNP level of impairment subgroups

| Variable | CN N | CN Mean | CN SD | NCD N | NCD Mean | NCD SD | t | d |
|---|---|---|---|---|---|---|---|---|
| MMSE | 29 | 28.79 | 1.01 | 44 | 26.82 | 3.19 | 3.225** | 0.77 |
| OTMT Part A | 51 | 7.71 | 1.67 | 56 | 9.50 | 3.31 | −3.489*** | 0.68 |
| OTMT Part B | 50 | 39.76 | 19.95 | 52 | 51.87 | 25.80 | −2.644** | 0.52 |
| DS Forward | 52 | 6.44 | 1.32 | 59 | 5.68 | 1.06 | 3.384*** | 0.64 |
| DS Backward | 52 | 4.94 | 1.09 | 59 | 4.07 | 1.17 | 4.049*** | 0.77 |
| CIFA S Words | 51 | 12.41 | 3.68 | 56 | 10.25 | 4.38 | 2.749** | 0.53 |
| CIFA P Words | 44 | 12.50 | 4.22 | 46 | 9.22 | 3.76 | 3.899*** | 0.82 |
| CIFA Animals | 51 | 19.45 | 5.63 | 56 | 14.13 | 4.85 | 5.255*** | 1.02 |
| CIFA Supermarket Items | 38 | 26.00 | 8.89 | 36 | 16.75 | 5.90 | 5.244*** | 1.22 |
| BNT-30 | 51 | 28.86 | 1.74 | 57 | 27.23 | 3.64 | 2.920** | 0.56 |
| CDT | 44 | 4.55 | 0.66 | 49 | 4.10 | 1.14 | 2.257* | 0.47 |
| HVLT-R Total 1–3 | 52 | 24.81 | 5.12 | 59 | 18.39 | 5.73 | 6.189*** | 1.18 |
| HVLT-R Delayed Recall | 52 | 8.64 | 2.42 | 59 | 3.98 | 3.91 | 7.423*** | 1.41 |
| HVLT-R Percent Retention | 52 | 86.03 | 17.38 | 59 | 46.12 | 41.28 | 6.481*** | 1.23 |
| HVLT-R Discrimination Index | 52 | 10.42 | 1.46 | 59 | 7.81 | 2.96 | 5.773*** | 1.10 |
| GDS-15 | 51 | 5.33 | 3.84 | 59 | 4.09 | 3.45 | 1.797 | 0.34 |

Notes: N = 111; *p < .05; **p < .01; ***p < .001; t = independent samples t-test; d = Cohen’s d; CN = cognitively normal; NCD = neurocognitive disorder; MMSE = Mini-Mental State Examination; OTMT = Oral Trail Making Test; DS = Digit Span; CIFA = Calibrated Ideational Fluency Assessment; BNT-30 = Boston Naming Test 30-item; CDT = Clock Drawing Test; HVLT-R = Hopkins Verbal Learning Test-Revised; GDS-15 = Geriatric Depression Scale 15-item.

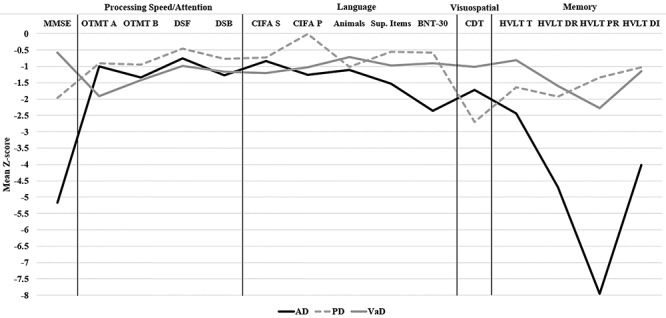

Visual inspection of Fig. 1 revealed several notable findings. Almost all score means were at least half an SD below the CN group mean with the exception of DS Forward and CIFA-P Words for the PD group. Multiple standardized test score discrepancies were present among the three clinical subgroups. To further explore these discrepancies, ANOVAs were conducted among the clinical and ND subgroups for each test raw score. The most notable differences between clinical subgroups and the ND subgroup were evident with OTMT A for the VaD subgroup (F(3, 68) = 4.274, p = .008, d = 0.97), CDT for the PD subgroup (F(3, 58) = 3.711, p = .017, d = 1.61), and MMSE (F(3, 46) = 7.763, p < .001, d = 1.66), CIFA-Supermarket Items (F(3, 46) = 7.763, p < .001, d = 1.66), BNT-30 (F(3, 69) = 3.804, p = .01, d = 0.88), and HVLT-R scores for the AD subgroup (HVLT-R Total 1–3: F(3, 70) = 20.405, p < .001, d = 2.59; HVLT-R Delayed Recall: F(3, 70) = 36.001, p < .001, d = 4.24; HVLT-R Percent Retention: F(3, 70) = 29.441, p < .001, d = 3.51; HVLT-R Discrimination Index: F(3, 70) = 16.696, p < .001, d = 2.03).

Fig. 1.

Teleneuropsychological test profiles among clinical subgroups. Note: MMSE = Mini-Mental State Examination; OTMT = Oral Trail Making Test; DSF = Digit Span Forward; DSB = Digit Span Backward; CIFA = Calibrated Ideational Fluency Assessment; S = S Words; P = P Words; BNT-30 = Boston Naming Test 30-item short form; CDT = Clock Drawing Test; HVLT = Hopkins Verbal Learning Test; T = Trials 1–3; DR = Delayed Recall; PR = Percent Retention; DI = Discrimination Index; AD = Alzheimer’s disease; PD = Parkinson’s disease; VaD = vascular disease.
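The standardized profiles described in this section express each subgroup mean as a Z-score relative to a reference group. Because the ND subgroup means and SDs are not reported in the text, the following sketch uses the CN and NCD summary statistics from Table 2 purely as stand-in values; it is not a reconstruction of Fig. 1. The function name, the dictionary layout, and the sign flip for timed tests (so that negative values always indicate worse performance) are assumptions made for illustration.

```python
def z_score(group_mean, ref_mean, ref_sd, higher_is_worse=False):
    """Standardize a group mean against a reference group's mean and SD.

    When higher raw scores indicate worse performance (e.g., completion
    time), the sign is flipped so that negative Z always means worse.
    The sign convention is an assumption, not taken from the source.
    """
    z = (group_mean - ref_mean) / ref_sd
    return -z if higher_is_worse else z


# test name: (reference mean, reference SD, comparison mean, higher_is_worse)
# Stand-in values from Table 2 (CN as reference, NCD as comparison group).
table2_values = {
    "HVLT-R Delayed Recall": (8.64, 2.42, 3.98, False),
    "CIFA Animals": (19.45, 5.63, 14.13, False),
    "OTMT Part A": (7.71, 1.67, 9.50, True),
}

profile = {
    test: z_score(group_mean, ref_mean, ref_sd, higher_is_worse)
    for test, (ref_mean, ref_sd, group_mean, higher_is_worse) in table2_values.items()
}

for test, z in profile.items():
    print(f"{test}: Z = {z:.2f}")
```

With these stand-in values, delayed recall falls almost two reference SDs below the reference mean, whereas semantic fluency and oral trail making fall roughly one SD below, which mirrors the pattern of larger effect sizes for memory measures in Table 2.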

Discussion

Neuropsychologists’ interest in implementing TNP in clinical practice has grown rapidly since the start of the COVID-19 pandemic (Chapman et al., 2020). Although TNP testing has previously been shown to be valid and reliable when administered to patients in a satellite clinical or laboratory setting (Brearly et al., 2017), the validity of in-home TNP testing had not been thoroughly explored. The purpose of this study was to compare patient data from an in-home TNP model implemented in the wake of the COVID-19 pandemic to data from patients administered traditional neuropsychological tests in person. It was found that TNP testing is sensitive to changes seen in patients with mild cognitive deficits, and that TNP testing has the potential to provide clinically useful information for different patient groups based upon level and pattern of cognitive impairment. These findings support the validity of TNP testing compared with traditional in-person testing as a viable option for remotely assessing patients’ neurocognitive functioning.

Several neuropsychological tests produced similar scores when administered to patients through in-home TNP versus traditional in-person testing. Cognitive tests measuring auditory attention, generative verbal fluency, confrontation naming, and verbal episodic memory did not statistically differ among groups based on the testing method. These findings align with prior research that TNP testing is a valid alternative for in-person neuropsychological testing (Brearly et al., 2017). An exception was noted in the analyses for a single test score (HVLT-R Discrimination Index) for which, although statistically different between the groups, the effect size was small (d = 0.32). Further analyses showed some TNP tests to be sensitive to mild cognitive deficits in a mixed clinical population. The tests that produced large effect sizes (d > 0.80) were three verbal fluency trials (CIFA Animals, Supermarket Items, and P Words) and all four memory scores (HVLT-R Total Learning Score, Delayed Recall, Percent Retention, and Discrimination Index).

Results showed that in-home TNP testing has the potential to produce differing cognitive profiles depending on the suspected cognitive disorder. For example, TNP patients with an amnestic syndrome due to suspected AD produced a profile similar to that which would be expected based on traditional neuropsychological testing. That is, these patients performed more poorly on tests of memory and language compared with the other clinical subgroups. The PD subgroup, a patient population in which visuospatial deficits are commonly seen, performed significantly worse than the other clinical subgroups on the CDT. Lastly, patients in the VaD subgroup performed poorest on the OTMT Part A trial, a subtest measuring oral output speed, which may be seen in patients with subcortically based cognitive impairment such as VaD. These findings contribute to the growing literature that TNP testing can provide a clinically useful estimate of these cognitive domains for patients with cognitive impairment related to neurological and psychiatric disorders.

The TNP battery in the present study was designed to be similar to a test battery validated with in-clinic TNP and traditional in-person testing methods (Wadsworth et al., 2018). The results of the present study were comparable in that scores on most tests, including CDT, DS, BNT, HVLT-R Total and Delayed Recall scores, and phonemic verbal fluency, did not differ significantly between the testing methods. A significant difference was found by Wadsworth and colleagues (2018) for the Animals semantic verbal fluency test, but this difference was not found in the present study. Notably, Wadsworth and colleagues (2018) did not report the HVLT-R Discrimination Index scores, so the present study findings could not be compared with the prior results.

Similar to the Brearly and colleagues (2017) results, the visual-based tests in the present study (BNT-30 and CDT) tended to poorly discriminate the cognitively healthy patients from those with mild cognitive deficits. One possible explanation for this is the restriction of range in scores produced by these tests, particularly the CDT. For example, the CDT is scored on a 0–5 point scale, and almost 95% of patients in the total TNP sample scored between 3 and 5. This could also explain the modest effect size (d = 0.47) for the CDT in detecting mild cognitive deficits in the NCD subgroup. Nevertheless, it was shown in follow-up analyses (Fig. 1) that the visual-based tests showed some differences among the clinical subgroups, supporting the inclusion of these tests in the TNP test battery. A similar test-related limitation of the present study is the absence of an estimate of premorbid intellectual functioning. In clinical practice, test-based methods of estimating premorbid functioning commonly involve using measures of verbal comprehension or a test of single word reading. Other estimates involve formulae using demographic information, such as education, in addition to test scores (Smith-Seemiller et al., 1997). Such formula-based methods were developed due to the relationship between education and intelligence (Crawford, 1992). Although research examining the validity of TNP administration of premorbid functioning tests is limited, Kirkwood, Peck, and Bennie (2000) found that a test of single word reading showed more consistency than a brief measure of intelligence. Due to the significant clinical utility in the interpretation of these tests among various patient populations, the validity of their usage in TNP testing, and in-home TNP in particular, should be a direction of future research.

The usage of a retrospective clinical sample is a major limitation of the present study. The lack of a controlled experimental design similar to previous TNP studies (Wadsworth et al., 2018) limits the internal validity of this study. Although important clinical information including level of impairment and suspected diagnosis was extracted from patient charts, additional clinical information, such as medical history to examine comorbidities, was not included in these analyses. Such clinical information could provide a more accurate classification of the suspected diagnosis for the clinical subgroups. As previous TNP validity studies have often utilized within-subjects, repeated measures designs, this is an area for recommended future research. Such a hypothetical study would involve testing the same group across more than one setting, including a home-based setting, an off-site clinical setting (i.e., satellite clinic), or a traditional in-person setting. Such an approach could determine which aspects of a patient’s home setting, such as environmental distractions or the presence of a third party, could contribute to variability in test performance. In the present study, environmental noises and distractions were noticed occasionally by psychometrists; however, none of these instances was significant enough to invalidate the obtained test data or cause a discontinuation of the testing session.

Technological issues, a logistical limitation of telehealth practice in general, were found to be an obstacle for some patients in completing their full TNP visit, including testing (10 patients or 6.5% of the total TNP sample). These instances usually resulted in patients being scheduled for a follow-up visit to complete testing in person. One possible reason for this limitation is the nature of the referral population. Generally, adult patients referred for neuropsychological testing tend to be at the older end of the age spectrum (the mean age for the total sample of the present study was 60.33 years). Although a recent survey of broadband access in older adults shows that the adoption of broadband internet is increasing, it remains at just over 50% of adults aged 65 and older in the United States (Anderson & Perrin, 2017). Furthermore, poor internet quality or lack of broadband internet access has been cited as a potential additional barrier to health care access in rural populations (O’Dowd, 2018), which has implications for whether some patients would be able to participate in an in-home TNP visit at all. Neuropsychologists who choose to incorporate TNP services into their practice will inevitably experience these issues if they work with older patients and should develop alternative routes for completing the evaluation when technical difficulties prevent the completion of the visit or testing.

In the present study, even among patients who were unable to complete testing during their telehealth visit (11 patients, 7.1%), most participated in the initial clinical interview via telehealth. Thus, TNP has the potential to enhance the initial patient triaging process and offers a potential avenue for future clinical research. New patients can first be screened with TNP to provide clinicians with more clinically useful information regarding a patient’s cognitive and functional status. This information could then assist the decision-making process and determine next steps for patients. This process would likely be more convenient for patients for whom a full, in-person visit is not necessary and could improve access to care by freeing up appointments for patients who are most in need of an in-person evaluation. Furthermore, in the interest of efficiency and improving clinical care, TNP has been shown to be a less expensive alternative to in-person neuropsychological assessment, thus reducing the health care burden associated with these services (Schopp et al., 2000).

Another direction for future research in this area would be to investigate patient and caregiver attitudes toward in-home TNP testing. Due to the clinical nature of the present study, no formal survey or assessment of attitudes could be obtained. Previous survey research has explored attitudes of patients who utilized general medical and mental telehealth services (Grubaugh, Cain, Elhai, Patrick, & Frueh, 2008; Swinton, Robinson, & Bischoff, 2009). Patient preferences and level of proficiency with technology have been shown to predict willingness to participate in telehealth services in certain patient populations (Viers et al., 2015). There has yet to be an investigation of patient preferences regarding comfort level and ease of participating in neuropsychological services through video-based telehealth technology when these services are delivered directly to patients in their homes. Equipping clinicians with a better understanding of which patient factors contribute to willingness to participate in TNP evaluations could help improve clinic scheduling outcomes, increase visit volume and clinic efficiency, and improve overall patient access to care as a result.

The implementation of in-home TNP testing has the potential to transform clinical neuropsychology practice by extending the reach of clinicians beyond the walls of the clinic or hospital. Furthermore, TNP can increase access to health care resources for patients living great distances from the clinic or practitioner. In the present study, there were multiple patients who resided several hours from our clinic. These patients, for whom commuting to the clinic was not personally feasible, often candidly mentioned the opportunity to participate in a TNP evaluation. In addition, due to temporary emergency orders that relaxed licensure statutes, some patients were able to be tested while residing in a different state altogether. Although TNP has the potential to connect patients with health care services across great distances, some patient groups may be less likely to have access to reliable internet service. For example, a recent study of internet usage in patients with diabetes and hypertension found that African American and Hispanic/Latinx patients were less likely to have access to the internet compared with Caucasian patients (Jain et al., 2021). Although there are ongoing efforts to close this “racial digital divide” (Ravindran, 2020), clinicians who utilize TNP should be aware of the possible technological limitations that might be present in certain racial and ethnic groups.

In conclusion, the present study shows that in-home TNP testing is a valid and clinically useful approach for obtaining a limited neuropsychological profile, and the findings extend the limited data that have been reported by other groups to date (Barcellos et al., 2020). These findings show that a cognitive test battery including tests of attention, processing speed, language, visuospatial processing, and memory can be administered using in-home TNP testing. These data also show that TNP testing has the potential to identify cognitive impairment in patients and possibly provide supportive evidence for the etiology of the cognitive impairment based on the test profile. Clinicians who may be hesitant to initiate in-home TNP testing may find these data compelling and seek to implement this test administration method in their own practice. In-home TNP testing has the potential to expand access to neuropsychological services, especially for patients for whom in-person testing is not indicated or feasible, such as those with mobility or transportation limitations, and for patients who live a great distance from the clinic or provider. Furthermore, patients whose clinical presentation suggests that brief cognitive screening is more appropriate than a lengthy in-person evaluation could benefit from in-home TNP testing. Finally, during the ongoing COVID-19 public health crisis, in-home TNP testing can offer patients an alternative to in-person visits, especially for patients in at-risk groups who are unable or do not wish to travel due to health precautions or social distancing guidelines.

Contributor Information

Adam C Parks, Department of Neurology, University of Kansas Medical Center, Kansas City, KS, USA.

Jensen Davis, Department of Psychology, University of Missouri–Kansas City, Kansas City, MO, USA.

Carrie D Spresser, Department of Neurology, University of Kansas Medical Center, Kansas City, KS, USA.

Ioan Stroescu, Department of Neurology, University of Kansas Medical Center, Kansas City, KS, USA.

Eric Ecklund-Johnson, Department of Neurology, University of Kansas Medical Center, Kansas City, KS, USA.

Funding

None declared.

Conflict of Interest

None declared.

References

- American Academy of Clinical Neuropsychology. (2020, April). AACN COVID-19 Survey Responses. https://theaacn.org/wp-content/uploads/2020/04/AACN-COVID-19-Survey-Responses.pdf (23 September 2020, date last accessed).

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Washington, DC: American Psychiatric Publishing, Inc. doi: 10.1176/appi.books.9780890425596.

- American Psychological Association Services Inc. (2020). Telehealth Guidance by State During COVID-19. https://www.apaservices.org/practice/clinic/covid-19-telehealth-state-summary (23 September 2020, date last accessed).

- Anderson, M., & Perrin, A. (2017). Tech Adoption Climbs Among Older Adults. Pew Research Center. https://www.pewresearch.org/internet/2017/05/17/tech-adoption-climbs-among-older-adults/ (23 September 2020, date last accessed).

- Barcellos, L. F., Horton, M., Shao, X., Bellesis, K. H., Chinn, T., Waubant, E. et al. (2020). A validation study for remote testing of cognitive function in multiple sclerosis. Multiple Sclerosis Journal, 1–4. doi: 10.1177/1352458520937385.

- Benedict, R. H., Schretlen, D., Groninger, L., & Brandt, J. (1998). Hopkins Verbal Learning Test—Revised: Normative data and analysis of inter-form and test-retest reliability. The Clinical Neuropsychologist, 12(1), 43–55.

- Bilder, R. M., Postal, K. S., Barisa, M., Aase, D. M., Cullum, C. M., Gillaspy, S. R. et al. (2020). Inter Organizational Practice Committee recommendations/guidance for teleneuropsychology in response to the COVID-19 pandemic. Archives of Clinical Neuropsychology, 35(6), 647–659. doi: 10.1093/arclin/acaa046.

- Brearly, T. W., Shura, R. D., Martindale, S. L., Lazowski, R. A., Luxton, D. D., Shenal, B. V. et al. (2017). Neuropsychological test administration by videoconference: A systematic review and meta-analysis. Neuropsychology Review, 27(2), 174–186. doi: 10.1007/s11065-017-9349-1.

- Centers for Medicare & Medicaid Services. (2020). Physicians and Other Clinicians: CMS Flexibilities to Fight COVID-19. https://www.cms.gov/files/document/covid-19-physicians-and-practitioners.pdf (23 September 2020, date last accessed).

- Chapman, J. E., Ponsford, J., Bagot, K. L., Cadilhac, D. A., Gardner, B., & Stolwyk, R. J. (2020). The use of videoconferencing in clinical neuropsychology practice: A mixed methods evaluation of neuropsychologists’ experiences and views. Australian Psychologist, 55, 618–633. doi: 10.1111/ap.12471.

- Crawford, J. R. (1992). Current and premorbid intelligence measures in neuropsychological assessment. In Crawford, J. R., Parker, D. M., & McKinlay, W. W. (Eds.), A handbook of neuropsychological assessment (pp. 21–49). Hove, UK: Psychology Press.

- Folstein, M. F., Robins, L. N., & Helzer, J. E. (1983). The Mini-Mental State Examination. Archives of General Psychiatry, 40(7), 812.

- Galewitz, P. (2020). Telemedicine Surges, Fueled by Coronavirus Fears and Shift in Payment Rules. Kaiser Health News. https://khn.org/news/telemedicine-surges-fueled-by-coronavirus-fears-and-shift-in-payment-rules/ (23 September 2020, date last accessed).

- Goodglass, H., & Kaplan, E. (1983). Boston diagnostic examination for aphasia. Philadelphia, PA: Lea & Febiger.

- Grosch, M. C., Gottlieb, M. C., & Cullum, C. M. (2011). Initial practice recommendations for teleneuropsychology. The Clinical Neuropsychologist, 25(7), 1119–1133. doi: 10.1080/13854046.2011.609840.

- Grubaugh, A. L., Cain, G. D., Elhai, J. D., Patrick, S. L., & Frueh, B. C. (2008). Attitudes toward medical and mental health care delivered via telehealth applications among rural and urban primary care patients. The Journal of Nervous and Mental Disease, 196(2), 166–170. doi: 10.1097/NMD.0b013e318162aa2d.

- Hammers, D. B., Stolwyk, R., Harder, L., & Cullum, C. M. (2020). A survey of international clinical teleneuropsychology service provision prior to and in the context of COVID-19. The Clinical Neuropsychologist, 34(7–8), 1267–1283. doi: 10.1080/13854046.2020.1810323.

- Hewitt, K. C., & Loring, D. W. (2020). Emory University telehealth neuropsychology development and implementation in response to the COVID-19 pandemic. The Clinical Neuropsychologist, 34(7–8), 1352–1366. doi: 10.1080/13854046.2020.1791960.

- Jain, V., Al Rifai, M., Lee, M. T., Kalra, A., Petersen, L. A., Vaughan, E. M. et al. (2021). Racial and geographic disparities in internet use in the U.S. among patients with hypertension or diabetes: Implications for telehealth in the era of coronavirus disease 2019. Diabetes Care, 44(1), e15–e17. doi: 10.2337/dc20-2016.

- JASP Team. (2020). JASP (Version 0.13.1.0). http://www.jasp-stats.org/ (23 September 2020, date last accessed).

- Kirkwood, K. T., Peck, D. F., & Bennie, L. (2000). The consistency of neuropsychological assessments performed via telecommunication and face to face. Journal of Telemedicine and Telecare, 6(3), 147–151. doi: 10.1258/1357633001935239.

- Love, J., Selker, R., Verhagen, J., Marsman, M., Gronau, Q. F., Jamil, T. et al. (2015). Software to sharpen your stats. APS Observer, 28(3), 27–29.

- Marra, D. E., Hamlet, K. M., Bauer, R. M., & Bowers, D. (2020a). Validity of teleneuropsychology for older adults in response to COVID-19: A systematic and critical review. The Clinical Neuropsychologist, 34(7–8), 1411–1452. doi: 10.1080/13854046.2020.1769192.

- Marra, D. E., Hoelzle, J. B., Davis, J. J., & Schwartz, E. S. (2020b). Initial changes in neuropsychologists clinical practice during the COVID-19 pandemic: A survey study. The Clinical Neuropsychologist, 34(7–8), 1251–1266. doi: 10.1080/13854046.2020.1800098.

- O’Dowd, E. (2018). Lack of Broadband Access can Hinder Rural Telehealth Programs. HITInfrastructure. https://hitinfrastructure.com/news/lack-of-broadband-access-can-hinder-rural-telehealth-programs (23 September 2020, date last accessed).

- Parikh, M., Grosch, M. C., Graham, L. L., Hynan, L. S., Weiner, M., Shore, J. H. et al. (2013). Consumer acceptability of brief videoconference-based neuropsychological assessment in older individuals with and without cognitive impairment. The Clinical Neuropsychologist, 27(5), 808–817. doi: 10.1080/13854046.2013.791723.

- Ravindran, S. (2020). America’s Racial Gap & Big Tech’s Closing Window. Deutsche Bank Research. https://www.dbresearch.com/PROD/RPS_EN-PROD/PROD0000000000511664/America%27s_Racial_Gap_%26_Big_Tech%27s_Closing_Window.pdf (16 November 2020, date last accessed).

- Ricker, J. H., Axelrod, B. N., & Houtler, B. D. (1996). Clinical validation of the Oral Trail Making Test. Neuropsychiatry, Neuropsychology, and Behavioral Neurology, 9(1), 50–53.

- Schopp, L., Johnstone, B., & Merrell, D. (2000). Telehealth and neuropsychological assessment: New opportunities for psychologists. Professional Psychology: Research and Practice, 31(2), 179–183. doi: 10.1037/0735-7028.31.2.179.

- Schretlen, D. J., Testa, S. M., & Pearlson, G. D. (2010). CNNS, Calibrated Neuropsychological Normative System: Professional manual. Lutz, FL: Psychological Assessment Resources.

- Schretlen, D. J., & Vannorsdall, T. D. (2010). CIFA, Calibrated Ideational Fluency Assessment: Professional manual. Lutz, FL: Psychological Assessment Resources.

- Schuchat, A. (2020). Public Health Response to the Initiation and Spread of Pandemic COVID-19 in the United States. Centers for Disease Control. https://www.cdc.gov/mmwr/volumes/69/wr/mm6918e2.htm (23 September 2020, date last accessed).

- Smith-Seemiller, L., Franzen, M. D., Burgess, E. J., & Prieto, L. R. (1997). Neuropsychologists’ practice patterns in assessing premorbid intelligence. Archives of Clinical Neuropsychology, 12(8), 739–744.

- Swinton, J. J., Robinson, W. D., & Bischoff, R. J. (2009). Telehealth and rural depression: Physician and patient perspectives. Families, Systems & Health, 27(2), 172.

- Viers, B. R., Pruthi, S., Rivera, M. E., O’Neil, D. A., Gardner, M. R., Jenkins, S. M. et al. (2015). Are patients willing to engage in telemedicine for their care: A survey of preuse perceptions and acceptance of remote video visits in a urological patient population. Urology, 85(6), 1233–1239. doi: 10.1016/j.urology.2014.12.064.

- Wadsworth, H. E., Dhima, K., Womack, K. B., Hart, J., Weiner, M. F., Hynan, L. S. et al. (2018). Validity of teleneuropsychological assessment in older patients with cognitive disorders. Archives of Clinical Neuropsychology, 33(8), 1040–1045. doi: 10.1093/arclin/acx140.

- Wechsler, D. (1997). WAIS-3, WMS-3: Wechsler Adult Intelligence Scale, Wechsler Memory Scale: Technical manual. San Antonio, TX: Psychological Corporation.

- Yesavage, J. A., & Sheikh, J. I. (1986). 9/Geriatric Depression Scale (GDS). Clinical Gerontologist, 5(1–2), 165–173. doi: 10.1300/J018v05n01_09.