Abstract

Purpose:

The objective of this study was to identify and validate smartphone-based visual acuity (VA) apps that can be used in a teleophthalmology portal.

Methods:

The study was conducted in three phases: a survey to investigate whether the SmartOptometry App was easy to download, understand and use for testing (phase I); an in-clinic comparison of VA measured in random order with four tools, namely COMPlog, Reduced Snellen near vision chart, Peek Acuity (distance VA) and SmartOptometry (near VA) (phase II); and a repeatability study in which VA was measured again with these four tools (phase III). The study recruited employees of our institute and adhered to strict COVID-19 testing protocols.

Results:

The phase I survey (n = 40) showed that 90% of participants used Android phones, 60% reported that the instructions were clear, and all users were able to self-assess their near VA with the SmartOptometry App. Phase II (n = 68) revealed that Peek Acuity was comparable to COMPlog VA (P = 0.31); however, SmartOptometry differed significantly (within 2 logMAR lines) from the Reduced Snellen near vision test, particularly for young (n = 44, P = 0.004) and emmetropic (n = 16, P = 0.04) participants. All four tests were found to be repeatable in phase III (n = 10), with a coefficient of repeatability ≤0.14.

Conclusion:

Smartphone-based apps were easy to download and can be used for checking patients' distance and near visual acuity. The effects of age and refractive error should be considered when interpreting the results. Further studies with patients in real-world settings are required to identify potential benefits and the challenges that remain to be solved.

Keywords: Smartphone, tele-ophthalmology, tele-optometry, vision testing, visual acuity Apps

The need for telemedicine has become evident with the ongoing coronavirus disease (COVID-19) pandemic. The disease is highly contagious and has affected almost the entire world.[1] Social distancing, quarantine, and minimal face-to-face interaction between people became imperative to limit its spread. This has made it challenging to provide eye care, since an eye examination requires the examiner to be in close contact with the patient. It has also been reported that head and neck surgeons, ENT surgeons, dental surgeons, and eye care professionals are at higher risk of COVID-19 transmission.[2] In fact, the very first case of COVID-19 was reported by an ophthalmologist.[3,4]

To control the pandemic, the governments of many countries, including India, enforced a lockdown, shutting down public transport to restrict the mobility of people except for emergency medical needs. People also voluntarily avoided crowded places and skipped visits to medical facilities except in emergencies. Hospitals chose to limit the number of patients examined and attended only to emergencies, postponing elective procedures. All this led to an increase in telephonic consultations for both follow-up advice and new complaints across all specialties, including ophthalmology.[5] While this enabled limited patient-physician interaction, there is still a need for improved technologies to provide effective virtual consultation and tele-eye health services.

Telemedicine is not new, having evolved well before the present pandemic in an attempt to address the care gap of various medical specialties in rural areas.[6,7,8,9] Teleophthalmology and teleoptometry services provide an alternative approach in rural areas for the diagnosis of diseases such as diabetic retinopathy and glaucoma, and for rehabilitative services for the visually impaired.[10,11,12,13,14,15,16] Telemedicine also saves money, time and travel for the patient. In the COVID-19 era, telemedicine adds a dimension of safety for both the patient and the practitioner. Thus, it is imperative that teleophthalmology services evolve beyond tele-counseling and triaging to include more objective measures of eye examination.

Visual acuity is central to the assessment of a patient's eye health, for follow-up and new patients alike. Various online visual acuity testing tools are available. However, validation of these tools against standard clinical testing is lacking.[17] Hence, there is an immediate need to validate tools, particularly Apps (applications) that are already available for devices such as tabs (tablets) or smartphones and are freely downloadable. The purpose of this study is two-fold: first, to describe the strict protocols undertaken during the pandemic to minimize the risk of infection transmission; second, to validate the readily available visual acuity Apps.

Methods

A prospective study was conducted during the national COVID-19 lockdown period (April to May 2020). The Institutional Review Board of our institute approved the study through an expedited online review process. The study protocol adhered to the tenets of the Declaration of Helsinki. Study participants were employees of the institute, who were recruited if willing, with informed written consent. The study was conducted in 3 phases. In the first phase, an online survey was conducted to assess the use of the SmartOptometry App (SmartOptometry, v. 3.4. full, Idrija, Slovenia). In the second phase, two Apps, SmartOptometry and Peek Acuity (Ver. 3.5.13, London, UK), were validated against in-clinic measurements. In the third phase, the repeatability of the measurements was assessed. The SmartOptometry application measures near visual acuity at 40 cm and Peek Acuity[18] measures distance visual acuity at 2 meters.

Phase I - Pilot survey

The primary objectives were to investigate: (1) the ease of installing the SmartOptometry application, (2) whether the instructions were clear and (3) whether a person could self-measure their acuity. For this, an e-mail invitation was sent to all the staff and faculty (both clinicians and non-clinicians) of our institute, requesting them to install and evaluate the SmartOptometry application. This application is freely available online (http://www.smart-optometry.com) for both Android and iOS platforms. Electronic consent was taken in the Google survey form. Instructions for downloading the application and choosing the acuity test were shown pictorially. The link itself contained instructions for using the app, and participants could also take the test in the comfort of their home. Participants were requested to test only one eye of their choice, without their spectacle correction. Post-testing questions covered the following: visual acuity measured, eye tested, platform used (Android or iOS), age, gender, and ease of installation and clarity of instructions in the App, each on a scale of 1 to 5 (5 being very easy or very clear). They were also asked to identify themselves as clinicians or non-clinicians.

Phase II - Validation of applications

An in-person study was conducted to validate the Apps against clinical tests. For near acuity, the Reduced Snellen near vision chart with Tumbling E (Near Vision Test Book, India) was compared with SmartOptometry in the “Visual Acuity+” mode. For distance, the COMPlog chart (COMPlog clinical vision measurement systems Ltd, London, UK) projecting Tumbling E with single line acuity was compared with Peek Acuity. Since Peek Acuity uses only the Tumbling E optotype, this optotype was chosen uniformly across all tests. The smallest optotype shown in the Apps is 20/20 (6/6 or logMAR 0). Prior to testing, refractive error was measured with an autorefractometer (UnicosURK-800F, Republic of Korea). Only one eye (randomly chosen) was tested per participant. Unaided acuity was measured in order to assess the measurement variability for different refractive errors. Retinoscopy and subjective refraction were avoided to limit the interaction with participants. Spectacle prescription, if any, was measured with an automated lensometer (Topcon CL 300, UK).

The order of testing was randomized for each participant. SmartOptometry presents 5 optotypes per line; COMPlog was set up in a similar way. Peek Acuity presents a single optotype enclosed within a square to simulate the crowding effect.[18] All Apps have inbuilt stopping criteria. Essentially, the criterion is to stop at 3 or more mistakes and to consider the previous line as the visual acuity. The same criteria were followed for the standard clinical tests as well. In COMPlog, however, 1 or 2 letters missed or read on a line were also taken into account to improve precision.
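The line-based stopping rule described above can be sketched as follows. This is a minimal illustration, not the Apps' actual implementation: the function name `line_acuity`, the input layout and the example responses are assumptions made for this sketch.

```python
def line_acuity(lines, max_errors=2):
    """Return the logMAR of the last line passed before the stopping rule fires.

    `lines` is a sequence of (logmar, n_errors) pairs ordered from the largest
    optotypes to the smallest. Testing stops at the first line with 3 or more
    mistakes (i.e. more than `max_errors`), and the previous line is taken as
    the visual acuity. Returns None if even the first line is failed.
    """
    acuity = None
    for logmar, n_errors in lines:
        if n_errors > max_errors:
            break  # stopping criterion reached: keep the previous line
        acuity = logmar
    return acuity


# Hypothetical responses: mistakes made on each 5-optotype line.
responses = [(0.3, 0), (0.2, 1), (0.1, 2), (0.0, 3)]
print(line_acuity(responses))
```

With these hypothetical responses the participant makes 3 mistakes on the logMAR 0.0 line, so the previous line (logMAR 0.1) is recorded.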

Participants were informed about the study via email. No specific inclusion or exclusion criteria were applied for recruitment. During the lockdown, the institute worked in a staggered fashion, with staff split into Teams A, B and C. The two authors (PNS & MT) involved in this study phase were in Team A. Hence, only Team A employees were recruited. The non-clinical employees were tested first, after which the clinical employees were recruited. This order was adopted to limit the exposure of the non-clinical employees, who did not come in contact with patients visiting our institute, to potential contagion.

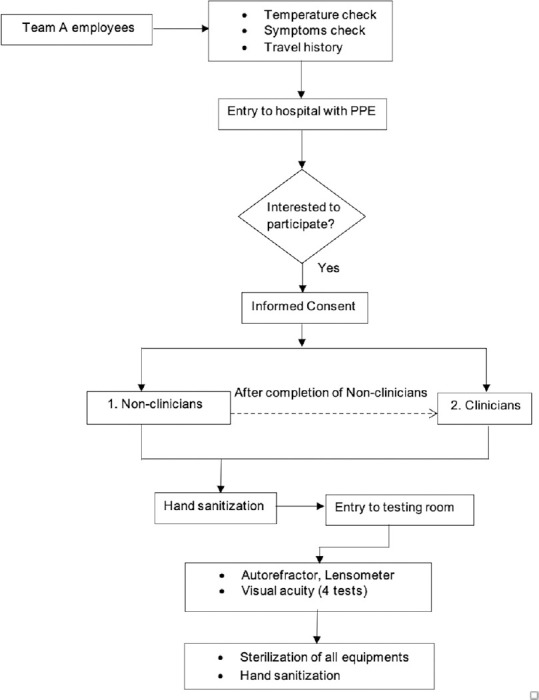

All employees underwent a temperature check at the main entrance. Safety precautions, such as sanitizing the participant's hands and ensuring that the participant wore a mask, were strictly followed [Fig. 1]. The examiner wore an N95 mask, head cap, gloves, and face shield as personal protective equipment [Fig. 2]. The examiner sterilized her hands with alcohol-based sanitizer before testing each participant. All the instruments, including the Tab, the examination chair unit, and the computer and its accessories, were sterilized after each test. The examiner washed her hands with soap after testing every 5 participants, or as and when needed.

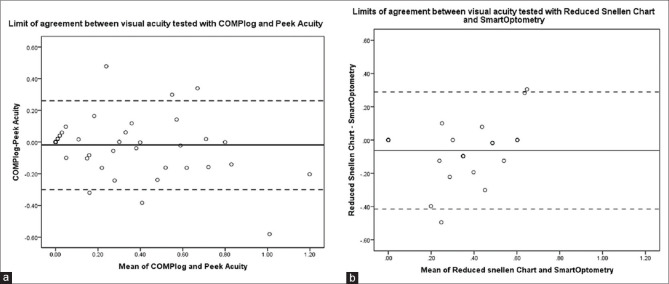

Figure 1. Study flowchart

Figure 2. Examiner wearing personal protective equipment testing a participant's distance visual acuity with the visual acuity App

An Android Tablet (800 × 1280, Honor MediaPad T3 10, Model AGS-L09, Android version 7.0) was used for the Apps. Calibration instructions for Peek Acuity were followed. A sticker was placed on the floor to mark the test distance of 2 meters for Peek Acuity. For near vision testing, a 40 cm thread was attached to the Tab to measure the viewing distance to a point just in front of the participant's forehead, without touching the participant. The Tab was kept at 100% brightness. As the test involved identifying the direction of the optotype, participants were encouraged to point with their hand to minimize verbal responses. Based on the direction pointed, the examiner swiped the touch screen for the Apps. If the gloved hand did not register the swipe, the examiner removed the glove. Only one examiner (MT) was involved in data collection. Engagement with participants was kept to a minimum, and the testing time ranged from 7 to 15 minutes.

Phase III - Test-retest repeatability

Test-retest repeatability was assessed for all 4 tests using aided visual acuity. A subset of participants from Phase II took part, wearing their spectacle correction if any. A break of at least 2 days was given before the second measurement. The random order used in the first visit was followed for the second visit. The precautions and measurement protocol were the same as in Phase II.

Data analysis

Visual acuity was the outcome measure. SmartOptometry displayed VA in decimal units and Peek Acuity in logMAR, whereas VA with COMPlog and the near vision chart was recorded as a Snellen fraction. All measured values were converted to logMAR units for analysis. SPSS software (SPSS Inc., ver. 16.0, Chicago, USA) was used for statistical analysis. Normality of the data was checked with Q-Q plots and the Shapiro-Wilk test. Depending on the normality of the data, a parametric (paired t-test) or non-parametric (Wilcoxon signed-rank test) test was chosen. Bland-Altman plots were made to assess agreement and repeatability.
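The unit conversion can be illustrated with the standard relations logMAR = log10(1 / decimal VA) and logMAR = log10(denominator / numerator) for a Snellen fraction. The sketch below is illustrative only; the function names are hypothetical and the study's own conversion procedure is not described beyond the statement above.

```python
import math


def decimal_to_logmar(decimal_va):
    """Convert decimal visual acuity (e.g. 1.0, 0.5) to logMAR."""
    if decimal_va <= 0:
        raise ValueError("decimal acuity must be positive")
    return math.log10(1.0 / decimal_va)


def snellen_to_logmar(numerator, denominator):
    """Convert a Snellen fraction (e.g. 6/12 or 20/40) to logMAR."""
    if numerator <= 0 or denominator <= 0:
        raise ValueError("Snellen terms must be positive")
    return math.log10(denominator / numerator)


# 6/6 and decimal 1.0 both correspond to logMAR 0;
# 6/12 and decimal 0.5 both correspond to about 0.3 logMAR.
for label, value in [
    ("decimal 1.0", decimal_to_logmar(1.0)),
    ("decimal 0.5", decimal_to_logmar(0.5)),
    ("Snellen 6/6", snellen_to_logmar(6, 6)),
    ("Snellen 6/12", snellen_to_logmar(6, 12)),
]:
    print(f"{label}: {value:.2f} logMAR")
```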

Results

Phase I

There were 51 views of the online survey, and 40 participants (22 males and 18 females) completed it. The mean age ± standard deviation (SD) of all the participants was 34 ± 11 years (range 22–69 years). Half of the respondents (n = 20) were non-clinicians. The majority (n = 36, 90%) of the participants used the Android platform and 75% tested their right eye. Overall, 67.5% reported that installing the application was 'very easy' and 60% reported that the instructions were 'very clear'. More clinicians (100%) than non-clinicians (85%) reported the instructions to be 'very easy'; however, this difference was not statistically significant (Fisher's exact test, P = 0.11). Participants, including non-clinical staff (n = 19), were able to report their VA after the test.

Phase II

A total of 68 participants (39 males) were recruited. The mean ± SD age of the participants was 36 ± 11 years (range 20–60 years). The Wilcoxon signed-rank test showed no significant difference (P = 0.315) in distance acuity between Peek Acuity and COMPlog. A significant difference (P = 0.002) was observed in near acuity between SmartOptometry and the Reduced Snellen near vision chart. The overall data, along with the effects of age and refractive error, are shown in Table 1.

Table 1. Overall median logMAR visual acuity with range (minimum, maximum) for COMPlog, SmartOptometry, Peek Acuity and Reduced Snellen near vision chart

| Participants | Median age in years [Min, Max] | Charts | Median visual acuity in logMAR [Min, Max] | P |
|---|---|---|---|---|
| All (n = 68) | 31 [20, 60] | COMPlog | 0.01 [0.00, 1.09] | 0.31 |
| | | Peek Acuity | 0.00 [0.00, 1.30] | |
| | | SmartOptometry | 0.00 [0.00, 1.00] | 0.002* |
| | | Reduced Snellen near vision | 0.00 [0.00, 0.80] | |
| Young (n = 44) | 27 [20, 36] | COMPlog | 0.00 [0.00, 1.09] | 0.98 |
| | | Peek Acuity | 0.00 [0.00, 1.30] | |
| | | SmartOptometry | 0.00 [0.00, 1.00] | 0.004* |
| | | Reduced Snellen near vision | 0.00 [0.00, 0.78] | |
| Presbyopia (n = 24) | 50 [37, 60] | COMPlog | 0.10 [0.00, 0.83] | 0.17 |
| | | Peek Acuity | 0.15 [0.00, 1.30] | |
| | | SmartOptometry | 0.39 [0.00, 0.60] | 0.007 |
| | | Reduced Snellen near vision | 0.30 [0.00, 0.80] | |
| Myopia (n = 29) | 29 [20, 56] | COMPlog | 0.36 [0.00, 1.09] | 0.90 |
| | | Peek Acuity | 0.00 [0.00, 1.30] | |
| | | SmartOptometry | 0.30 [0.00, 1.00] | 0.74 |
| | | Reduced Snellen near vision | 0.00 [0.00, 0.80] | |
| Hyperopia (n = 16) | 50 [22, 60] | COMPlog | 0.06 [0.00, 0.58] | 0.74 |
| | | Peek Acuity | 0.10 [0.00, 0.60] | |
| | | SmartOptometry | 0.40 [0.00, 0.60] | 0.11 |
| | | Reduced Snellen near vision | 0.30 [0.00, 0.78] | |
| Emmetropia (n = 16) | 29 [21, 47] | COMPlog | 0.00 [0.00, 0.04] | 0.71 |
| | | Peek Acuity | 0.00 [0.00, 0.10] | |
| | | SmartOptometry | 0.00 [0.00, 0.40] | 0.04* |
| | | Reduced Snellen near vision | 0.00 [0.00, 0.18] | |

*indicates statistically significant P-values (P < 0.05)

Effect of age

Participants were classified into a young group (n = 44, age <37 years) and a presbyopic group (n = 24, age 37 years and above). Distance acuity remained comparable (P > 0.2) between the two tests (COMPlog and Peek Acuity) in both age groups. However, near acuity was comparable between SmartOptometry and the Reduced Snellen near vision chart only in the presbyopic age group (P = 0.075) and not in the younger age group (P = 0.004).

Effect of refractive error

Participants were further classified based on their refractive error into myopia (spherical equivalent (SE) more negative than -0.50 DS, n = 29), emmetropia (SE from -0.50 DS to +0.50 DS, n = 23) and hyperopia (SE greater than +0.50 DS, n = 16). Distance acuity was comparable between the two tests (Peek Acuity and COMPlog) for all refractive error groups. However, a significant difference (P = 0.04) between SmartOptometry and the Reduced Snellen near vision chart was observed only for the emmetropia group.
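The grouping rule can be written compactly as below. This is a sketch only: it assumes the conventional definition of spherical equivalent (sphere plus half the cylinder), which is not spelled out in the text, and the function names are hypothetical.

```python
def spherical_equivalent(sphere, cylinder):
    """Spherical equivalent in dioptres: sphere + cylinder / 2 (assumed definition)."""
    return sphere + cylinder / 2.0


def classify_refraction(se, cutoff=0.50):
    """Classify by spherical equivalent using the +/-0.50 DS cut-offs given above."""
    if se < -cutoff:
        return "myopia"
    if se > cutoff:
        return "hyperopia"
    return "emmetropia"


# Hypothetical autorefractor readings (sphere, cylinder) in dioptres.
for sphere, cylinder in [(-1.00, -0.50), (0.25, -0.50), (1.25, -0.50)]:
    se = spherical_equivalent(sphere, cylinder)
    print(f"SE {se:+.2f} DS -> {classify_refraction(se)}")
```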

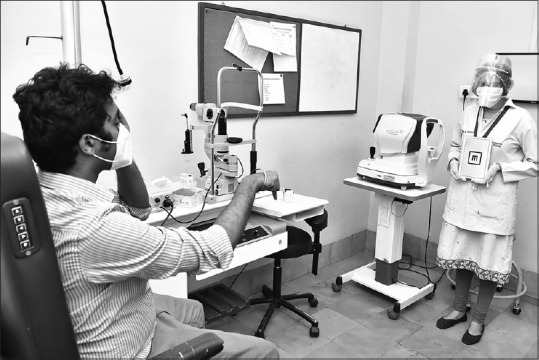

Bland-Altman plots for limits of agreement are shown for all participants in Fig. 3a for distance acuity, and for participants in the presbyopic age group in Fig. 3b for near acuity. Peek Acuity showed good agreement with COMPlog. On average, Peek Acuity underestimated acuity by 1 letter compared to COMPlog, with the 95% confidence interval for this difference being up to 3 letters. On the other hand, SmartOptometry on average underestimated acuity by 3 letters compared to the Reduced Snellen near vision chart, with a much wider 95% confidence interval for this difference of up to 2 lines.

Figure 3. Bland-Altman plot for limits of agreement between visual acuity tested with COMPlog and Peek Acuity (a) and between visual acuity tested with SmartOptometry and Reduced Snellen near vision chart (b)

Phase III

For repeatability of visual acuity measurements, 15 participants from phase II were enrolled. Of the 15, 5 participants dropped out for the second visit, resulting in only 10 participants (5 females). The mean ± SD age of all participants was 26 ± 4 years and the refractive error was -1.96 ± 3.1 D.

Table 2 shows the mean difference between the two acuity measurements along with the limits of agreement and the coefficient of repeatability (CoR). The Reduced Snellen near vision chart is not shown, since all the participants had 0 logMAR on both days. The higher the CoR and the wider the limits of agreement, the worse the repeatability. Visual acuity tested with COMPlog was repeatable within 2 letters (CoR = 0.04 logMAR), Peek Acuity was repeatable within approximately 2 letters (CoR = 0.03 logMAR), the Reduced Snellen near vision chart was 100% repeatable (CoR = 0.0 logMAR) and SmartOptometry was repeatable within 7 letters (CoR = 0.14 logMAR).
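The quantities discussed above can be computed as in the following sketch. It assumes the common Bland-Altman definitions (limits of agreement = mean difference ± 1.96 × SD of the differences, and CoR = 1.96 × SD of the differences); the text does not state which formula was used. The conversion to letters assumes 0.02 logMAR per letter, which follows from 5 optotypes per 0.1 logMAR line. The acuity values and function names are hypothetical.

```python
from statistics import mean, stdev


def bland_altman(first, second):
    """Bias, SD, 95% limits of agreement and CoR for paired measurements."""
    diffs = [a - b for a, b in zip(first, second)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return {
        "bias": bias,
        "sd": sd,
        "limits": (bias - 1.96 * sd, bias + 1.96 * sd),
        "cor": 1.96 * sd,  # assumed definition of coefficient of repeatability
    }


def logmar_to_letters(logmar, letter_value=0.02):
    """Express a logMAR difference as a number of letters (assumed 0.02 per letter)."""
    return logmar / letter_value


# Hypothetical test and retest logMAR values for four participants.
visit_1 = [0.0, 0.1, 0.2, 0.0]
visit_2 = [0.0, 0.1, 0.1, 0.1]
result = bland_altman(visit_1, visit_2)
print(f"bias = {result['bias']:.3f}, CoR = {result['cor']:.3f} logMAR")
print(f"CoR of 0.14 logMAR is about {logmar_to_letters(0.14):.0f} letters")
```

Under these assumptions, a CoR of 0.04 logMAR corresponds to 2 letters and 0.14 logMAR to 7 letters, matching the letter counts quoted for COMPlog and SmartOptometry.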

Table 2. Mean difference ± standard deviation (SD) of visual acuity (logMAR) measured on two different days with COMPlog, Peek Acuity and SmartOptometry

| Charts | Mean difference ± SD | 95% CI of mean difference | 95% CI of limit of agreement | Coefficient of repeatability |
|---|---|---|---|---|
| COMPlog | -0.006 ± 0.02 | -0.03 to -0.01 | -0.04 to 0.03 | 0.04 |
| Peek Acuity | -0.01 ± 0.03 | -0.02 to 0.08 | -0.07 to 0.05 | 0.03 |
| SmartOptometry | 0.03 ± 0.07 | -0.02 to 0.01 | -0.10 to 0.16 | 0.14 |

Reduced Snellen near vision chart is not tabulated since all the participants had 0 logMAR acuity on both days, giving a coefficient of repeatability of 0 (i.e., 100% repeatable)

Discussion

In the post-COVID-19 era, tele-eye health services must shift from simply expanding accessibility to also ensuring the safety of all stakeholders. As we move towards this change, it becomes important to look at readily available resources that can be accessed by a larger group of patients.

In this effort, we designed safety protocols for acuity testing [Figs. 1 and 2] and conducted a study to compare smartphone-based apps that measure visual acuity. Validation in our study is defined by how close the measured acuity values are to those of the existing standard clinical tests. This is the first step before making these apps available, with instructions for patients, in a teleophthalmology/teleoptometry/tele-eye health portal.

In phase I, we found that the majority (90%) of the participants used the Android platform. Both Apps assessed in this study are available on the Android platform and can be downloaded from the Google Play store. All the participants were able to complete this step for the SmartOptometry app. The instructions for testing were also reasonably clear to both clinical and non-clinical staff. This could indicate that most patients who are familiar with their smart devices (phones/Tabs) will be able to manage this step. In this phase we used only the SmartOptometry App, which allows self-administration of the near vision test. Although these Apps were originally designed for eye care professionals, our study showed that non-clinical persons can also assess their own acuity.

We observed that Peek Acuity was comparable with COMPlog acuity, the standard test in our clinic. Using Peek Acuity at a distance of 2 meters will require a family member's help. While it can be assumed that Tumbling E acuity will be comparable to acuity with Sloan optotypes, it may be prudent to use the Tumbling E both during in-office visits and remotely. The Tumbling E is more universal, avoids language barriers, and can also be tried in young children.[19] Neither age nor refractive error significantly affected the distance visual acuity measurement by either method [Table 1].

Although near acuity measured with SmartOptometry differed statistically from that measured with the Reduced Snellen chart, the difference was within 2 lines, which is still clinically acceptable.[20] Age and refractive error influenced these measurements significantly [Table 1]. In younger adults (<37 years) and those with emmetropia, SmartOptometry tended to underestimate the visual acuity. One reason for this could be the 'pixelation' problem inherent to digital screens.[21] The Tab (1280 × 800) used in our study had 157.2 pixels per inch and a pixel size of 0.16 mm. At a viewing distance of 40 cm, one pixel would subtend a visual angle of about 1.4'. This is larger than the minimum angle of resolution (1') of the human eye. Thus, artifacts would appear when optotypes are drawn at sizes approaching the limit of the screen's resolution. These distorted optotypes can lead to response errors. This could be the reason why participants expected to have good acuity (young adults and emmetropes) showed a reduction with SmartOptometry. Therefore, SmartOptometry may agree with the Reduced Snellen visual acuity when a patient's visual acuity is poor, but not when it is actually good. This could imply that SmartOptometry can be used for following up patients with disease conditions that affect near visual acuity (e.g., age-related macular degeneration). A larger group of participants with a range of visual acuities should be included to validate this observation. In contrast, a study[22] comparing an iPhone app with a near chart found that the app overestimated the visual acuity, particularly for those with poor acuity. Better screen resolution and higher contrast of the display screen were considered to be the reasons in that study.[22] The limitations of the Reduced Snellen near vision chart, which are similar to the disadvantages of a Snellen acuity chart,[23] should also be kept in mind.
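The pixel arithmetic in this paragraph can be reproduced with the short calculation below, using the screen density (157.2 pixels per inch) and viewing distance (40 cm) stated above. The function names are hypothetical, and the final step assumes the standard optotype design in which a 20/20 optotype subtends 5' overall.

```python
import math

MM_PER_INCH = 25.4


def pixel_pitch_mm(pixels_per_inch):
    """Physical size of one pixel in millimetres."""
    return MM_PER_INCH / pixels_per_inch


def visual_angle_arcmin(size_mm, distance_mm):
    """Visual angle subtended by an object of size_mm at distance_mm, in arcminutes."""
    return math.degrees(math.atan(size_mm / distance_mm)) * 60.0


pitch = pixel_pitch_mm(157.2)               # about 0.16 mm
angle = visual_angle_arcmin(pitch, 400.0)   # about 1.4 arcmin at 40 cm

# Assumed: a 20/20 optotype subtends 5 arcmin overall (1 arcmin strokes).
pixels_per_optotype = 5.0 / angle

print(f"pixel pitch: {pitch:.2f} mm")
print(f"angle per pixel at 40 cm: {angle:.1f} arcmin")
print(f"pixels across a 20/20 optotype: {pixels_per_optotype:.1f}")
```

Under these assumptions, a 20/20 Tumbling E at 40 cm spans fewer than four pixels on this screen, so its five-unit grid of strokes and gaps cannot be rendered exactly, which is consistent with the pixelation explanation offered above.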

The test-retest repeatability was less than 1 line for COMPlog, Peek Acuity and the Reduced Snellen near vision chart. These measures were comparable to those of earlier studies, which also included Peek Acuity.[18,24] SmartOptometry's repeatability was within 2 lines. This is larger than the expected limit of repeatability, which is about 1 line for near acuity.[25] Again, these larger limits could be due to the artifacts described above. We checked the repeatability in participants wearing their habitual correction. This was done because patients visiting an eye hospital would be given the best refractive correction, and subsequent measurements in a tele-consult would be made with that habitual correction.

A limitation of our study is that we enrolled institute staff rather than patients, who could face real-life challenges in using these Apps. Although the majority of our participants were comfortable downloading and using these apps, this may not be so for patients who do not understand English. Our sample size was limited and, this being a pilot study, it was not calculated to detect a predefined difference in VA measurements between the various Apps. Another limitation is that we did not have participants with visual impairment, amblyopia, or ocular pathologies. The test-retest repeatability for such participants can be beyond one line. Laidlaw et al.[24] reported the test-retest repeatability of visual acuity tested with COMPlog in amblyopic children and in adults with or without ocular pathologies to be ±0.12 logMAR and ±0.10 logMAR, respectively. Visual acuity also depends on other factors, such as the chart used,[26,27] the resolution of the display screen, the age of the individual (older adults or children giving varying responses),[26,28] optical defocus,[29] ocular abnormalities[26] and the scoring method.[27]

Future studies with larger sample sizes are needed to validate our observations and to test the effectiveness of these apps in real-life tele-consultation scenarios involving patients from various walks of life and geographical locations (rural vs. urban), and speaking different native languages. Despite this, we hope that using the Tumbling E feature of the Peek Acuity app might help reduce these differences.

Conclusion

In conclusion, this study offers a first step towards validating readily available Apps against standard clinical measurements. Our study suggests that it is feasible and reasonably accurate to use these apps for estimating distance and near VA. These apps have the potential to play an important role in remote visual acuity testing.

Financial support and sponsorship

Hyderabad Eye Research Foundation.

Conflicts of interest

There are no conflicts of interest.

Acknowledgements

Pravin Krishna Vaddavalli – Support for implementation of the study.

References

- 1. World Health Organization. 2020. https://www.who.int/emergencies/diseases/novel-coronavirus-2019/events-as-they-happen. [Last accessed on 2020 Sep 18].

- 2. Kulcsar MA, Montenegro FL, Arap SS, Tavares MR, Kowalski LP. High risk of COVID-19 infection for head and neck surgeons. Int Arch Otorhinolaryngol. 2020;24:e129–30. doi: 10.1055/s-0040-1709725.

- 3. Petersen E, Hui D, Hamer DH, Blumberg L, Madoff LC, Pollack M, et al. Li Wenliang, a face to the frontline healthcare worker. The first doctor to notify the emergence of the SARS-CoV-2, (COVID-19), outbreak. Int J Infect Dis. 2020;93:205–7. doi: 10.1016/j.ijid.2020.02.052.

- 4. Parrish RK, 2nd, Stewart MW, Duncan Powers SL. Ophthalmologists are more than eye doctors-in memoriam Li Wenliang. Am J Ophthalmol. 2020;213:A1–2.

- 5. Das AV, Rani PK, Vaddavalli PK. Tele-consultations and electronic medical records driven remote patient care: Responding to the COVID-19 lockdown in India. Indian J Ophthalmol. 2020;68:1007–12. doi: 10.4103/ijo.IJO_1089_20.

- 6. Nagayoshi Y, Oshima S, Ogawa H. Clinical impact of telemedicine network system at Rural Hospitals without on-site cardiac surgery backup. Telemed J E Health. 2016;22:960–4. doi: 10.1089/tmj.2015.0225.

- 7. Kohler JE, Falcone RA, Jr, Fallat ME. Rural health, telemedicine and access for pediatric surgery. Curr Opin Pediatr. 2019;31:391–8. doi: 10.1097/MOP.0000000000000763.

- 8. Brignell M, Wootton R, Gray L. The application of telemedicine to geriatric medicine. Age Ageing. 2007;36:369–74. doi: 10.1093/ageing/afm045.

- 9. Loomba A, Vempati S, Davara N, Shravani M, Kammari P, Taneja M, et al. Use of a tablet attachment in teleophthalmology for real-time video transmission from rural vision centers in a three-tier eye care network in India: EyeSmart cyclops. Int J Telemed Appl. 2019;2019:5683085. doi: 10.1155/2019/5683085.

- 10. Tan IJ, Dobson LP, Bartnik S, Muir J, Turner AW. Real-time teleophthalmology versus face-to-face consultation: A systematic review. J Telemed Telecare. 2017;23:629–38. doi: 10.1177/1357633X16660640.

- 11. Kumari Rani P, Raman R, Manikandan M, Mahajan S, Paul PG, Sharma T. Patient satisfaction with tele-ophthalmology versus ophthalmologist-based screening in diabetic retinopathy. J Telemed Telecare. 2006;12:159–60. doi: 10.1258/135763306776738639.

- 12. Das T, Raman R, Ramasamy K, Rani PK. Telemedicine in diabetic retinopathy: Current status and future directions. Middle East Afr J Ophthalmol. 2015;22:174–8. doi: 10.4103/0974-9233.154391.

- 13. Kawaguchi A, Sharafeldin N, Sundaram A, Campbell S, Tennant M, Rudnisky C, et al. Tele-ophthalmology for age-related macular degeneration and diabetic retinopathy screening: A systematic review and meta-analysis. Telemed J E Health. 2018;24:301–8. doi: 10.1089/tmj.2017.0100.

- 14. Nagra M, Vianya-Estopa M, Wolffsohn JS. Could telehealth help eye care practitioners adapt contact lens services during the COVID-19 pandemic? Cont Lens Anterior Eye. 2020;43:204–7. doi: 10.1016/j.clae.2020.04.002.

- 15. Bittner AK, Yoshinaga P, Bowers A, Shepherd JD, Succar T, Ross NC. Feasibility of telerehabilitation for low vision: Satisfaction ratings by providers and patients. Optom Vis Sci. 2018;95:865–72. doi: 10.1097/OPX.0000000000001260.

- 16. Choudhari NS, Chandran P, Rao HL, Jonnadula GB, Addepalli UK, Senthil S, et al. LVPEI glaucoma epidemiology and molecular genetic study: Teleophthalmology screening for angle-closure disease in an underserved region. Eye (Lond). 2020;34:1399–405. doi: 10.1038/s41433-019-0666-x.

- 17. Saleem SM, Pasquale LR, Sidoti PA, Tsai JC. Virtual ophthalmology: Telemedicine in a COVID-19 era. Am J Ophthalmol. 2020;216:237–42. doi: 10.1016/j.ajo.2020.04.029.

- 18. Bastawrous A, Rono HK, Livingstone IA, Weiss HA, Jordan S, Kuper H, et al. Development and validation of a smartphone-based visual acuity test (peek acuity) for clinical practice and community-based fieldwork. JAMA Ophthalmol. 2015;133:930–7. doi: 10.1001/jamaophthalmol.2015.1468.

- 19. Rono HK, Bastawrous A, Macleod D, Wanjala E, Di Tanna GL, Weiss HA, et al. Smartphone-based screening for visual impairment in Kenyan school children: A cluster randomised controlled trial. Lancet Glob Health. 2018;6:e924–32. doi: 10.1016/S2214-109X(18)30244-4.

- 20. Lovie-Kitchin JE. Validity and reliability of visual acuity measurements. Ophthalmic Physiol Opt. 1988;8:363–70. doi: 10.1111/j.1475-1313.1988.tb01170.x.

- 21. Carkeet A, Lister LJ. Computer monitor pixellation and Sloan letter visual acuity measurement. Ophthalmic Physiol Opt. 2018;38:144–51. doi: 10.1111/opo.12434.

- 22. Tofigh S, Shortridge E, Elkeeb A, Godley BF. Effectiveness of a smartphone application for testing near visual acuity. Eye (Lond). 2015;29:1464–8. doi: 10.1038/eye.2015.138.

- 23. Currie Z, Bhan A, Pepper I. Reliability of snellen charts for testing visual acuity for driving: Prospective study and postal questionnaire. BMJ. 2000;321:990–2. doi: 10.1136/bmj.321.7267.990.

- 24. Laidlaw DAH, Tailor V, Shah N, Atamian S, Harcourt C. Validation of a computerised logMAR visual acuity measurement system (COMPlog): Comparison with ETDRS and the electronic ETDRS testing algorithm in adults and amblyopic children. Br J Ophthalmol. 2008;92:241–4. doi: 10.1136/bjo.2007.121715.

- 25. Beck RW, Moke PS, Turpin AH, Ferris FL, 3rd, SanGiovanni JP, Johnson CA, et al. A computerized method of visual acuity testing: Adaptation of the early treatment of diabetic retinopathy study testing protocol. Am J Ophthalmol. 2003;135:194–205. doi: 10.1016/s0002-9394(02)01825-1.

- 26. Dougherty BE, Flom RE, Bullimore MA. An evaluation of the Mars letter contrast sensitivity test. Optom Vis Sci. 2005;82:970–5. doi: 10.1097/01.opx.0000187844.27025.ea.

- 27. Hazel CA, Elliott DB. The dependency of logMAR visual acuity measurements on chart design and scoring rule. Optom Vis Sci. 2002;79:788–92. doi: 10.1097/00006324-200212000-00011.

- 28. Elliott DB, Yang K, Whitaker D. Visual acuity changes throughout adulthood in normal, healthy eyes: Seeing beyond 6/6. Optom Vis Sci. 1995;72:186–91. doi: 10.1097/00006324-199503000-00006.

- 29. Rosser DA, Murdoch IE, Cousens SN. The effect of optical defocus on the test–retest variability of visual acuity measurements. Invest Ophthalmol Vis Sci. 2004;45:1076–9. doi: 10.1167/iovs.03-1320.