Abstract

Background

Reliable information, which can only be derived from accurate data, is crucial to the success of the health system. Since encoded data on diagnoses and procedures are put to a broad range of uses, the accuracy of coding is imperative. Accuracy of coding with the International Classification of Diseases, 10th revision (ICD-10) is impeded by a manual coding process that is dependent on the medical records officers’ level of experience/knowledge of medical terminologies.

Aim statement

To improve the accuracy of ICD-10 coding of morbidity/mortality data at the general hospitals in Lagos State from 78.7% to ≥95% between March 2018 and September 2018.

Methods

A quality improvement (QI) design using the Plan–Do–Study–Act cycle framework was employed. The interventions comprised the introduction of an electronic diagnostic terminology software and training of 52 clinical coders from the 26 general hospitals. An end-of-training coding exercise compared the coding accuracy between the old method and the intervention. The outcome was continuously monitored and evaluated in a phased approach.

Results

Research conducted in the study setting yielded a baseline coding accuracy of 78.7%. The use of the difficult items (wrongly coded items) from the research for the end-of-training coding exercise accounted for a lower coding accuracy when compared with baseline. The difference in coding accuracy between manual coders (47.8%) and browser-assisted coders (54.9%) from the coding exercise was statistically significant. Overall average percentage coding accuracy at the hospitals over the 12-month monitoring and evaluation period was 91.3%.

Conclusion

This QI initiative introduced a stop-gap for improving data coding accuracy in the absence of automated coding and electronic health record. It provides evidence that the electronic diagnostic terminology tool does improve coding accuracy and with continuous use/practice should improve reliability and coding efficiency in resource-constrained settings.

Keywords: continuous quality improvement, data accuracy, PDSA, quality improvement, quality measurement

Introduction

Effective and efficient planning, monitoring (M) and evaluation (E) of health services depend on the availability of accurate and reliable data for health statistics.

The International Classification of Diseases (ICD) is the international diagnostic classification standard for reporting diseases/health conditions, and for clinical and research purposes. It is maintained and revised by the WHO. With its comprehensive list, organised in a hierarchical fashion, it supports the management of health information for evidence-based decision-making and allows sharing and comparison of health information between hospitals, regions, settings and countries. It also allows data comparisons in the same location across different time periods.1 In some middle-income and high-income countries, it is applied in their electronic health record (EHR) systems. However, in some middle-income and low-income countries, morbidity data are collected as paper-based health records through a number of manual processes leading up to the final collation on an area-specific tabular list adapted from the ICD-10 tabular list.2 In this setting, a tabular list of about 600 codes, selected on the basis of frequency of occurrence or public health importance of the diseases/health problems, is referred to as the Cumulative Medical Form. The coding of the medical diagnoses documented in patients’ paper-based medical records folders is carried out by the medical records officers (MROs)/clinical coders. The many steps in the process of coding a diagnosis introduce numerous opportunities for error.3 4 Studies have suggested an association between the validity of coding and coder characteristics such as employment and experience.5 6

In some cases, it is suspected that data quality may be more of a human challenge than a technology challenge.7 Training and retraining of health information managers (HIMs) are necessary, but there is also a place for the introduction of appropriate technology.2 While efforts to address the clinicians’ role in ensuring accurate data are ongoing, improving the accuracy of ICD-10 coding by clinical coders is the focus of this quality improvement (QI) initiative.

One of the factors on which the quality of coded clinical data depends is the operation of a performance improvement plan that ensures continuous QI. The data quality dimensions that have been identified in data governance include: validity, reliability, completeness and timeliness. The outcome measure of this intervention is validity (accuracy). The balancing measures, that is, the unintended consequences of the intervention8 (which may be positive or negative), will be reliability and timeliness (efficiency).

Validity refers to the extent to which coded data accurately reflect the patient’s diagnoses, that is, the extent to which it is correct. Reliability is depicted by the extent to which multiple coders assign the same codes to the same diagnoses/health problem. Timeliness refers to the extent to which the coded data are available within the time frames required for decision support, billing purposes and other uses.2

Quality assurance involves repeated cycles of quality assessment and QI.9 Healthcare organisations should continually search for ways to improve the coding process through computerisation or other appropriate methods available to them.2

From their assessment of current clinical coding practices and implementation of ICD-10, some Nigerian HIM researchers noted that the absence of automation and an insufficient number of clinical coders were major challenges confronting the clinical coding process. The benefits of automated coding in EHR include more speed, the potential for increased coding consistency, productivity and improved overall coding accuracy. Moreover, the quality of documentation in electronic records is better than that in paper-based records.10 The application of technology definitely has an impact on the coding process.2

One of the technological advances that may be applied as a stop-gap on the way to deployment of EHR in a system operating on paper-based health records is an electronic diagnostic terminology tool that provides the interface between clinicians’ choices of diagnostic terminologies and ICD-10’s diagnostic terms/codes. It is built like an encoder, a computer software program designed to assist coders in assigning appropriate clinical codes to words and phrases expressed in natural human language.11 The encoder was originally developed for paper-based health records with the principal purpose of ensuring accurate reporting for reimbursement. Encoders promote accuracy as well as consistency in the coding of diagnoses and procedures.12 Although encoders provide optimisation guidance, thus allowing the coder to choose the most accurate code for a health problem or procedure, they require user interaction. This initiative employed the ICD-10-ICPC2 Thesaurus electronic terminology software which was made available by one of the researchers.13 It offers the possibility of double coding with the ICD-10 (the national coding standard in most countries including Nigeria) and the International Classification of Primary Care (the ordering system of the domain of primary care and a related classification of the ICD-10 in WHO’s Family of International Classifications). It also consists of the thesaurus, a terminology interface. It is deployed in the form of a browser with a search bar for search texts.

The thesaurus is a systematic set of professionally used words, including terms and jargon in which each word is represented with possible synonyms and related words designating broader or narrower concepts. It may serve as a dictionary or as a translation from jargon to terminology. It enables the coder to review code selections, look-up tabular lists and various other automated notations that facilitate the choice of the most accurate code from the classification.12

This QI initiative focused on the accuracy (validity) of the ICD-10 coding process and the possibility of productive efficiency (timeliness)14 in a setting where there is a shortage of clinical coders.

It has often been said that you cannot improve what you cannot measure.7 In this data coding QI initiative, the accuracy of coded data was initially determined through an objective assessment that yielded a proportion that could be improved on, thus paving the way for the intervention. It addressed the question of whether an electronic diagnostic terminology tool improves the accuracy of manual coding compared with the traditional method of manual coding among non-clinician secondary coders in a setting that is yet to migrate to EHR.

Aim statement

To improve the accuracy of ICD-10 coding of morbidity/mortality data at the general hospitals in Lagos State from 78.7% to ≥95% between March 2018 and September 2018.

The subaim in relation to the experiment that was conducted at the training was to determine whether coding accuracy would be better with the electronic browsing tool than with the traditional manual method.

Methods

The detailed description of the methods is shown through the repeated Plan–Do–Study–Act (PDSA) cycles in online supplemental appendix 1. The PDSA cycles also show how the intervention was consolidated upon to sustain the outcome through various ideas such as the one presented in online supplemental appendix 3.

bmjoq-2020-000938supp001.pdf (88.7KB, pdf)

Study design

The study design was a QI initiative using the PDSA cycle framework. It entailed the establishment of a QI team, baseline assessment of the quality problem, planning and testing of interventions, continuous M and E with refinement to sustain the outcome.

At the training on the usage of the electronic diagnostic terminology tool (electronic ICD-10 browser), immediate assessment of the intervention was done through a two-group experiment that compared the accuracy between browser-assisted coders and manual coders.

Study setting

The study was set in Lagos State, Nigeria. The state contains the most populous city in Africa, with a projected population of 20.5 million, an annual population growth rate of 8% and an estimated population density of 4193 persons/km². The vast majority of the population depends on the public health sector for their healthcare needs. This sector is operated by the state government through the public health facilities under the State Ministry of Health (SMoH). Coming closely behind the well-known health system problem of limited resources in the face of competing needs is poor data quality, which has been identified as one of the impediments to the development of the health system. The Health Information Management Department of the state’s Health Service Commission, an arm of the SMoH, was responsible for the management of morbidity/mortality data across the 26 general hospitals in the state at the time of the study. Efforts to transition to EHRs have been slow and are at the pilot phase in one of the 26 secondary health facilities.

Study population and study participants

There were 26 general hospitals and 317 MROs/HIMs in Lagos State at the commencement of this initiative. The schedule of duty in each hospital enlists two MROs as clinical coders, making a total of 52 coders. Their minimum qualification was an ordinary national diploma in Health Information Management.

Evaluation plan

Outcome measure

Accuracy (the number of correctly coded diagnoses as a percentage of the total number of diagnoses to be coded). Accuracy was measured at two stages: at the stage of the training and at the stage of continuous M and E. First, the effect of the intervention was immediately tested at the training venue via an end-of-training coding exercise in which 52 coders were randomly assigned to the browser-assisted coding group or the manual coding group of 26 coders each at the point of registration for the training. The accuracy of coding was compared between the intervention group (browser-assisted coders who used the electronic diagnostic terminology tool) and the control group (the manual coders who coded the traditional way with the hard copies of the full volume of the ICD-10 tabular list and the alphabetical index). Accuracy of coding was determined by trained classification experts (authors 1 and 2) using the electronic diagnostic terminology tool.
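The accuracy measure defined above reduces to a simple proportion. The following is a minimal sketch rather than the tooling used in the study: the function name `coding_accuracy` and the example codes are hypothetical, and it assumes that each diagnosis has exactly one reference code supplied by the classification experts.

```python
def coding_accuracy(assigned, reference):
    """Percentage of diagnoses whose assigned code matches the reference code."""
    if len(assigned) != len(reference):
        raise ValueError("each diagnosis needs one assigned and one reference code")
    if not reference:
        raise ValueError("no diagnoses to score")
    # Compare codes case-insensitively and ignore surrounding whitespace.
    correct = sum(
        a.strip().upper() == r.strip().upper()
        for a, r in zip(assigned, reference)
    )
    return 100.0 * correct / len(reference)


# Hypothetical example: four diagnoses, three of them coded correctly.
reference = ["B54", "I10", "E11.9", "J45.9"]
assigned = ["B54", "I10", "E14.9", "J45.9"]
accuracy = coding_accuracy(assigned, reference)
print(f"{accuracy:.1f}%")
```

Exact string matching is the strictest possible scoring rule; whether partial credit was given for codes that were correct only at the three-character category level is not stated in the source.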

Second, average monthly accuracy across the hospitals in the first cluster of the phased approach to continuous M and E was determined from the analysis of their monthly submissions to the M and E officer of systematically selected samples of diagnoses that had been coded with the electronic diagnostic terminology tool. MROs from each hospital submitted a list comprising codes assigned to the most recent diagnoses from a sample of 20 systematically selected patients’ records folders out of the first 100 folders that were retrieved from the clinics on a set date every month. The lists of coded diagnoses data from the hospitals were assessed for accuracy by the M and E officer (one of the authors).
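The monthly sampling step can be sketched as follows. This is an illustration under stated assumptions: the source reports that 20 folders were systematically selected from the first 100 retrieved, but it does not give the sampling interval or how the starting point was chosen, so the fixed interval of 5 and the random start used here are assumptions. The function name `systematic_sample` and the folder labels are hypothetical.

```python
import random


def systematic_sample(items, sample_size, rng=None):
    """Pick every k-th item after a random start, where k = len(items) // sample_size."""
    rng = rng or random.Random()
    if sample_size <= 0 or sample_size > len(items):
        raise ValueError("sample_size must be between 1 and len(items)")
    interval = len(items) // sample_size
    start = rng.randrange(interval)  # assumed: random start within the first interval
    return [items[start + i * interval] for i in range(sample_size)]


# Hypothetical labels for the first 100 folders retrieved from the clinics.
folders = [f"folder-{n:03d}" for n in range(1, 101)]
sample = systematic_sample(folders, 20, random.Random(2018))
print(sample[:3])
```

Seeding the generator, as in the usage lines, makes a month's selection reproducible for audit.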

The phased approach to continuous M and E entailed the division of the 26 general hospitals into five geographical clusters according to the existing divisions of the State (Ikeja, Badagry, Ikorodu, Lagos Island and Epe divisions) followed by simple random selection of a cluster/division for each phase of M and E. The first cluster was Lagos Island, comprising the four general hospitals presented in this report. Every 9 months, all the hospitals in a new cluster will be onboarded until the fifth and last cluster is captured to complete the phased approach.
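One way to picture the phased roll-out is as a random ordering of the five divisions with a new phase starting every 9 months. The sketch below is hypothetical: the function name `phase_schedule` is not from the source, and shuffling the whole list once is used here as an equivalent of drawing one cluster at random, without replacement, at the start of each phase. In the actual initiative the first cluster drawn was Lagos Island; a random run of this code will generally produce a different order.

```python
import random

DIVISIONS = ["Ikeja", "Badagry", "Ikorodu", "Lagos Island", "Epe"]


def phase_schedule(divisions, months_per_phase=9, rng=None):
    """Return (phase number, division, start month offset) for a random cluster order."""
    rng = rng or random.Random()
    order = list(divisions)
    rng.shuffle(order)  # simple random ordering of the clusters
    return [
        (phase, division, (phase - 1) * months_per_phase)
        for phase, division in enumerate(order, start=1)
    ]


schedule = phase_schedule(DIVISIONS, rng=random.Random(1))
for phase, division, start_month in schedule:
    print(f"Phase {phase}: {division} onboarded at month {start_month}")
```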

Balancing measure (unintended positive or negative consequence of the intervention).8

Timeliness/speed of coding recorded in minutes (increased or reduced time taken to complete the coding task with the electronic coding tool at different stages of implementation) translating to the efficiency of the system. This was measured during the end-of-training coding exercise as the average time taken to complete the coding exercise either with the electronic tool or with the ICD-10 alphabetical index and tabular list.

Process measures (measures of the fidelity of the intervention by showing how consistently/reliably the intervention is applied).8

Consistency of monthly submissions of the monthly samples of diagnoses coded with the electronic diagnostic terminology tool to the M and E Officer.

Self-report of subsequent usage of the electronic coding tool and the experiences surrounding the continuous usage of the electronic coding tool within the hospitals after implementation.

Once monthly impromptu courtesy calls to the medical records departments by the M and E Officer to obtain feedback regarding the intervention, its usage and to address any issues arising.

The adoption and institutionalisation of the use of the electronic diagnostic terminology tool by the overall head of the medical records departments (who is also a member of this QI team) as a means of promoting uniformity of coding across the 26 hospitals.

Pre-intervention activities

Preliminary assessment of the accuracy of ICD-10 coded data: research involving the clinical coders in this study setting revealed an ICD-10 coding accuracy of 78.7%.15 The study entailed manual ICD-10 coding of a list of 220 diagnoses/health problems collated from clinic attendance registers (that comprised daily records of patients’ biodata and diagnoses) using a systematic sampling technique on entries from the previous 6 months.15 The items that were coded wrongly (the difficult items) are shown in online supplemental appendix 2. This QI initiative was motivated by the finding from that research.

Stakeholder analysis by the main researcher and meeting with the power-interest group.

Formation of the QI team, reflection on the QI problem and agreement on interventions, implementation, M and E, financial implication and sourcing of funds.

The QI team

The main researcher (first author) led the QI team, which included the overall head of Health Information Management department at the Health Service Commission, the assistant director of Medical Services and head of the Health Data and Statistics Committee (the last author), two doctors, and an information and communications technology officer. The impact of the QI team extends to all the hospitals because of the membership of the overall head of all 26 Health Information Management departments in the hospitals.

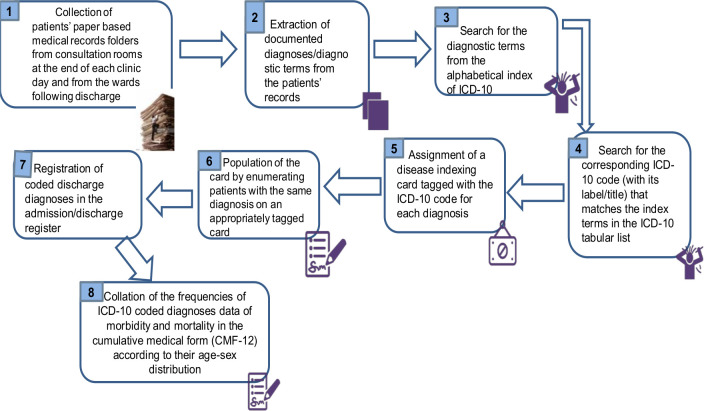

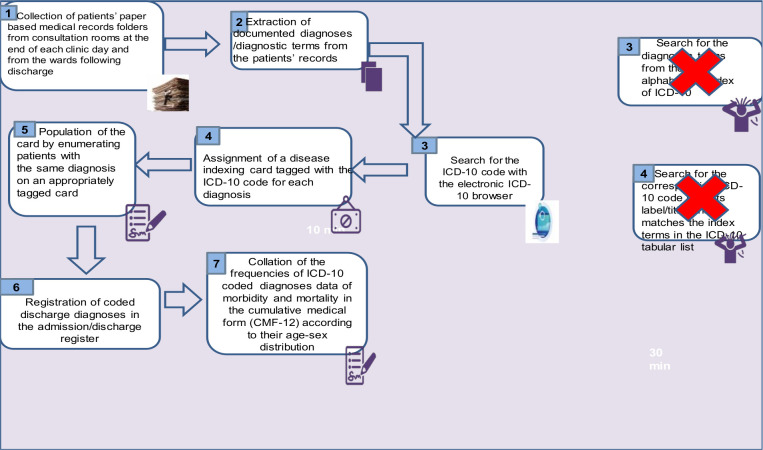

The process maps (figures 1 and 2) show the details of the state of clinical coding at the general hospitals in the first cluster before and after the intervention.

Figure 1.

The process map of diagnoses data coding by clinical coders at the general hospitals in Lagos before intervention. ICD-10, International Classification of Diseases, 10th revision.

Figure 2.

Post-intervention process map showing elimination of old steps 3 and 4 and introduction of electronic ICD-10 browser. ICD-10, International Classification of Diseases, 10th revision.

Immediate assessment of the intervention with the end-of-training coding exercise/experiment

The participants were randomly assigned to a browser-assisted coding group or a manual coding group of 26 coders each. They coded the same list of diagnoses/health problems individually. The browser-assisted coders used the ICD-10-ICPC2 Thesaurus while the manual coders coded the traditional way, using the hard copies of the ICD-10 tabular list and an alphabetical index. The accuracy and speed of coding were compared for both methods.

Materials/resources for the hands-on training on how to use the electronic medical terminology tool

Hard copies of the tabular list and alphabetical index of ICD-10.

ICD-10-ICPC2 Thesaurus CD-Rom13: the electronic diagnostic terminology software that was provided by one of its developers (the second author) and used with permission. It is an encyclopaedia of medical terms that has an automated codebook format type of encoder, incorporating all ICD-10 codes (≈14 000) and all ICPC2 codes. It is developed for use offline and well suited for paper-based medical records. It enables the coder to type the diagnosis/health problem into a search bar and review code selections, look-up tables and various other automated notations that facilitate the choice of the most accurate code.

A projector for the PowerPoint presentation.

Public address system.

Writing materials.

Computers with the encoding ICD-10-ICPC2 software.

Data analysis

Data analysis was done with the Microsoft Excel software and SPSS V.23.0. The unit of inference was the average percentage of accurate codes selected by the coders. The speed of coding was determined as the average number of minutes taken to complete the coding task.
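The source does not name the statistical test used to compare the two groups. As one possible illustration only, the sketch below applies a two-sided permutation test to the difference in mean per-coder accuracy. This is a swapped-in technique, not the authors' SPSS procedure, and the per-coder scores are invented placeholders, not study data. The function name `permutation_p_value` is hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_resamples=5000, seed=0):
    """Two-sided permutation test on the absolute difference in group means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # reassign coders to groups at random
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_resamples + 1)


# Invented per-coder accuracy percentages, for illustration only.
manual_scores = [40, 45, 50, 55, 48]
browser_scores = [52, 58, 60, 50, 55]
p_value = permutation_p_value(manual_scores, browser_scores)
print(f"p = {p_value:.3f}")
```

A permutation test is chosen here only because it needs no distributional assumptions and no third-party library; a t-test or a non-parametric rank test would be equally plausible choices for data of this kind.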

Patient and public involvement

The patients and the public were factored into the dissemination plans of this research but were not involved in the design, conduct and reporting.

Results

A total of 52 clinical coders with lengths of service ranging from 1 to 25 years and a median of 7 years participated in the training and the end-of-training coding exercise to compare coding accuracy between the old method and the intervention. Half were medical records departmental heads. The majority had undergraduate qualifications, higher national diplomas or BSc degrees (88.7%), and 73% used computers frequently.

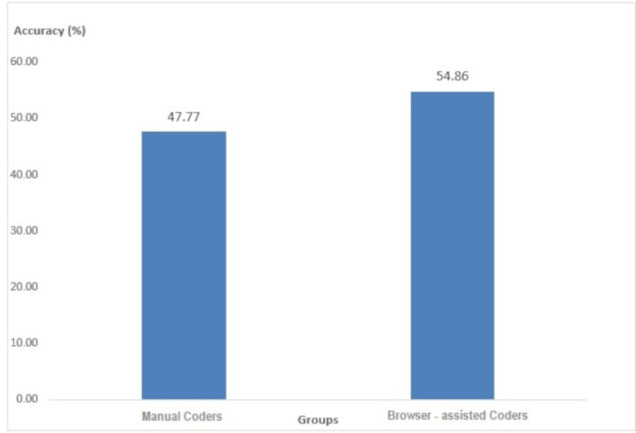

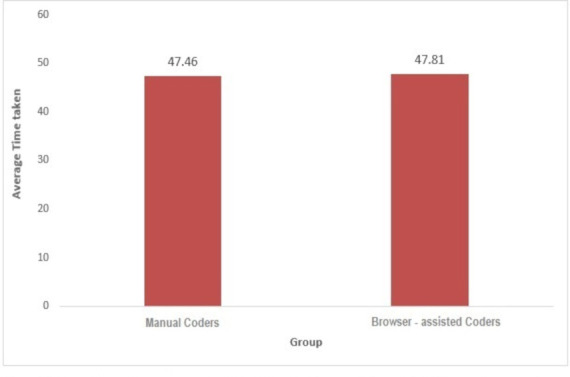

Figure 3 shows a higher coding accuracy (54.9%) from the intervention group. In figure 4, the speed of coding as demonstrated by the average time taken to code the same set of diagnoses was almost the same but for a narrow difference of 21 s between manual coders (47 min 28 s) and the browser-assisted coders (47 min 49 s). The difference in coding accuracy between the two groups of coders was statistically significant (p=0.02). However, this was not the case with the speed of coding (p=0.95).

Figure 3.

Comparison of average percentage coding accuracy between manual coders and browser-assisted coders from the end-of-training coding exercise.

Figure 4.

Comparison of the average time taken (minutes) to complete end-of-training coding exercise between manual and browser-assisted coders.

Results of continuous M and E of coding accuracy

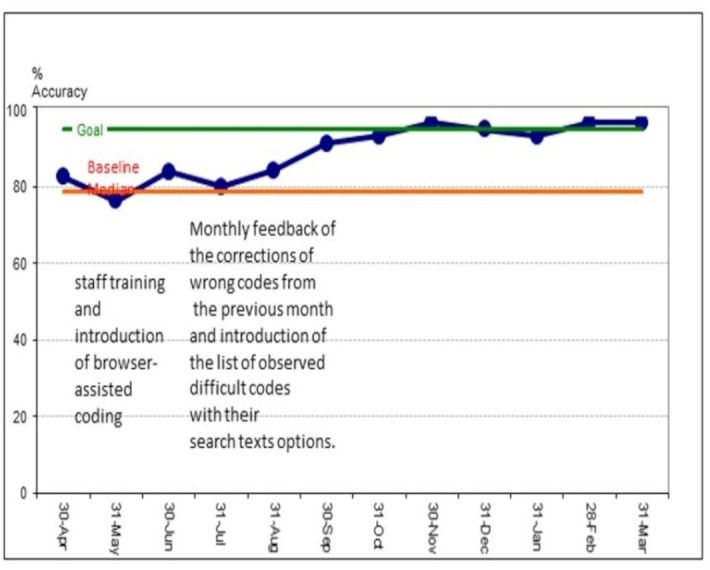

Figure 5 is a run chart of the average monthly percentage coding accuracy in the hospitals from April 2018 to March 2019 which ranged from 76.3% to 96.7%. By the target date of September 2018, the average percentage accuracy was 83.2% but this had improved to 91.3% by the 12th month of continuous M and E.

Figure 5.

Average monthly percentage coding accuracy in the hospitals from the period April 2018 to March 2019.
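The figures reported for the run chart can be related to the baseline and the target with simple arithmetic. The sketch below uses only values stated in this report (baseline 78.7%, target 95%, 83.2% at the target date and 91.3% at the 12th month); the function name `progress` is hypothetical.

```python
BASELINE = 78.7  # baseline coding accuracy (%)
TARGET = 95.0    # target coding accuracy (%)

reported = {
    "September 2018 (target date)": 83.2,
    "March 2019 (12th month)": 91.3,
}


def progress(value, baseline=BASELINE, target=TARGET):
    """Return (gain over baseline, remaining shortfall to target) in percentage points."""
    gain = round(value - baseline, 1)
    shortfall = round(max(target - value, 0.0), 1)
    return gain, shortfall


for label, value in reported.items():
    gain, shortfall = progress(value)
    print(f"{label}: +{gain} points over baseline, {shortfall} points short of target")
```

On these figures, accuracy was 4.5 percentage points above baseline at the target date and 12.6 points above baseline by the 12th month, leaving a gap of 3.7 points to the 95% target.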

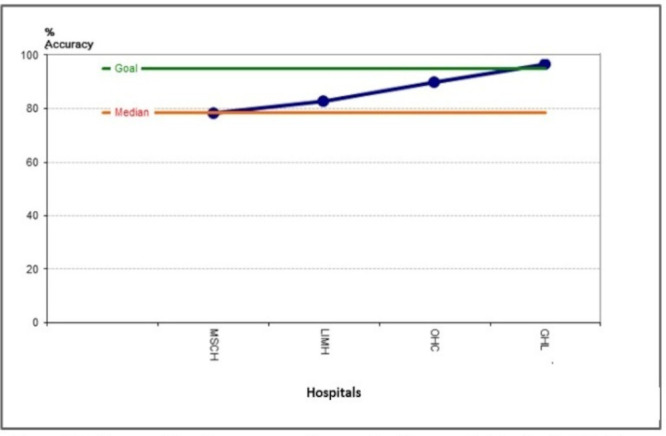

Figure 6 shows the average percentage coding accuracy from each of the four hospitals in the first randomly selected cluster/division after monthly M and E over a period of 12 months. All except one of the hospitals had exceeded baseline and one had reached the goal (96.7%).

Figure 6.

Average percentage coding accuracy from each of the hospitals in the first cluster of the phased monitoring and evaluation for the period April 2018–March 2019.

Retesting of accuracy for difficult items

Retesting of coding accuracy among the same set of coders for the same set of diagnoses that formed the end-of-training assessment revealed an improvement from 54.9% to 85% at 4 months post-deployment of the intervention.

Process measures

Monthly samples of diagnoses coded with the electronic diagnostic terminology tool were submitted consistently. Each sample was selected by systematic sampling of patients’ folders in the last week of every month.

Self-report of usage and challenges surrounding the use of the electronic diagnostic terminology tool: the respondents indicated that they had started using the software either on their personal computers or on the hospital computers. Half used it at least twice a week while others used it once a week. They all admitted that occasionally they coded manually with the ICD-10 book. The reasons adduced include power failure (25%), shortage of computers (12.5%) and preference for the old method (12.5%). Other problems identified were: a shortage of clinical coders and computers in the Health Information Management department, difficulty finding some terms used by the doctors on the software, incomplete or unclear diagnoses provided by the doctors. Respondents suggested periodic training/retraining on the usage of the software for both old and new staff and the recruitment of more staff.

However, the systematically selected samples for the monthly accuracy assessments were coded solely with the software to ensure that the process was valid for the purpose of testing the accuracy of the coded data from the electronic ICD-10 browser.

Discussion

The findings from this study demonstrate that ICD-10 coding accuracy can be improved with the use of the electronic diagnostic terminology software among non-clinician coders in a paper-based medical record setting. Although the desired accuracy of ≥95% was not reached within the stipulated time frame of 6 months, average percentage accuracy had improved to 91.3% by the 12th month of continuous M and E.

Comparison was restricted because we found no data from developing countries where a similar intervention was applied to paper-based records. Furthermore, available data from developed countries used fully automated encoding software with their EHR.

A review of literature focusing on assessment and improvement of data quality of trauma registries provided evidence regarding the weakness of most of these publications. Most publications dealing with the measurement of a dimension of data quality did not specify the methods used; likewise, most publications dealing with the improvement of data quality did not specify the dimension being targeted.16 This study focused primarily on the data quality dimensions of accuracy as well as timeliness/speed of coding because of the feasibility of measuring these in paper-based records.

From the immediate measurement of the outcome at the end of the training, coding accuracy was 7.1 percentage points higher among the browser-assisted coders than among coders who used the hard copies of the ICD-10 coding book. The difference in coding accuracy was statistically significant (p=0.021), indicating that the higher accuracy among the browser-assisted coders is unlikely to be explained by random chance alone. In this QI initiative, the immediate post-training comparison of coding accuracy between a contemporaneous control group who coded the traditional way and a test group who used the intervention enhanced the ability to determine whether the observed difference was a result of the intervention. According to Wong and Sullivan, this evaluative approach has greater strength compared with the aggregated before–after data approach employed in some QI initiatives.8
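The 7.1 figure above is the absolute gap between the two group means, which is worth distinguishing from the relative improvement over the manual method. A short worked calculation, using only the two accuracy values reported in this study (variable names are illustrative):

```python
MANUAL_ACCURACY = 47.8   # mean accuracy of manual coders (%)
BROWSER_ACCURACY = 54.9  # mean accuracy of browser-assisted coders (%)

# Absolute difference, in percentage points.
absolute_gap = round(BROWSER_ACCURACY - MANUAL_ACCURACY, 1)

# Relative improvement, as a percentage of the manual coders' accuracy.
relative_gain = round(
    100 * (BROWSER_ACCURACY - MANUAL_ACCURACY) / MANUAL_ACCURACY, 1
)

print(f"Absolute gap: {absolute_gap} percentage points")
print(f"Relative gain over manual coding: {relative_gain}%")
```

The absolute gap is 7.1 percentage points, which corresponds to a relative gain of about 14.9% over the manual coders' score.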

The speed of coding was almost the same between manual coders (47 min 28 s) and browser-assisted coders (47 min 49 s). This may be explained by the fact that the training was the first experience the coders had with the electronic diagnostic terminology tool, coupled with the fact that 75% of the participants who reported that they rarely used computers in their day-to-day activities had been randomly assigned to the browser-assisted coding group. Evidently, this did not detract from the higher accuracy recorded by this group. The speed of coding with the new method is also expected to improve with practice.

Overall average percentage coding accuracy at the hospitals over the 12-month M and E period was 91.3%. As expected, retesting with the difficult items 4 months post-intervention showed that the coding accuracy with the electronic diagnostic terminology tool (electronic ICD-10 browser) had improved remarkably from 54.9% to 85% for the same set of difficult items that was presented at the end-of-training coding experiment. The long duration between testing and retesting makes the improvement in the accuracy score less likely to be the effect of memory.

Similarly, a study involving six Veterans Administration medical centres described the effect of automated encoders on coding accuracy and speed when used by trained coding staff. It found that coding accuracy improved by 19.4% after the implementation of encoding software. The authors concluded that coding speed is affected by coding method (manual or automated). The effect of automated coding method on coding speed, however, depended on the system set-up, with some set-ups actually reducing the number of discharges coded per day.17

Conclusion

Clinical coding is an important practice in Health Information Management which provides valuable data for vital purposes such as healthcare quality evaluation, healthcare resource allocation, health services research, public health programming and medical billing.12 Although not comparable with the automated encoding software, an electronic diagnostic terminology tool offers the possibility of improving coding accuracy and is adaptable for this purpose in settings where paper-based medical records are operational.

Limitation

The self-report of usage of the software introduced a level of subjectivity into the process measurement. Paper-based records limited the possibility of measuring the data quality dimension of completeness because it is feasible to code mainly the principal diagnosis for each patient. However, this did not impede the measurement of the dimension of coded data accuracy which was the aim of this study. Although 4 months is a long period, the improvement in coding accuracy observed from retesting with the same set of difficult items may be due to memory.

Acknowledgments

We acknowledge the input and support of all the stakeholders at the Health Service Commission and the general hospitals, particularly Mrs Popoola, all medical records officers and all medical directors. We honour the faculty of Healthcare Leadership Academy (HLA), Africa for their invaluable technical input. We are grateful for Professor Bob Mash’s input and Dr Zelra Malan’s resourcefulness. We thank Mr Akeem Agoro for providing ICT support. We deeply appreciate the co-facilitators at the training: Dr Ihuoma Henshaw, Dr Sade Ogunnaike, Dr Tolu Onafeso, Dr Sam Amaiahan, Dr Folajimi Oyebola, Dr Ike Ajayi, Dr Cosmas Odoemena, Dr Grace Somefun and Dr Oluwatosin Agemo.

Footnotes

Correction notice: This article has been corrected since it was published. Contributors statement has been updated.

Contributors: OO contributed to the concept, design, definition of intellectual content, literature search, data acquisition, data analysis and manuscript preparation. KvB and OD were involved in the design, data collection and review of the final manuscript. KN was involved in the design, definition of intellectual content, data analysis, manuscript editing and review. AO was involved in the design, data collection and review of the final version of the manuscript. AO, OO, KN, KvB and OD are responsible for the overall content as guarantors.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient consent for publication: Not required.

Ethics approval: Ethical approval to conduct this study was obtained from the HREC of Lagos State University Teaching Hospital (reference number: LREC.06/10/1033) and informed consent was given by the medical records officers.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: All data relevant to the study are included in the article or uploaded as supplementary information.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

References

- 1. WHO. International classification of diseases. Available: http://www.who.int/classifications/icd/en/ [Accessed 25 Jan 2018].

- 2. Ayegbayo F. A modern approach to disease classification and clinical coding. Lagos: Constance-Praise, 2009.

- 3. O'Malley KJ, Cook KF, Price MD, et al. Measuring diagnoses: ICD code accuracy. Health Serv Res 2005;40:1620–39. doi:10.1111/j.1475-6773.2005.00444.x

- 4. Stausberg J, Lehmann N, Kaczmarek D, et al. Reliability of diagnoses coding with ICD-10. Int J Med Inform 2008;77:50–7. doi:10.1016/j.ijmedinf.2006.11.005

- 5. Hennessy DA, Quan H, Faris PD, et al. Do coder characteristics influence validity of ICD-10 hospital discharge data? BMC Health Serv Res 2010;10:99. doi:10.1186/1472-6963-10-99

- 6. Santos S, Murphy G, Baxter K, et al. Organisational factors affecting the quality of hospital clinical coding. HIM J 2008;37:25–37. doi:10.1177/183335830803700103

- 7. Nichols J. Data quality: strategies for improving healthcare data. Available: www.himss.org/news/data-quality-strategies-improving-healthcare-data [Accessed 19 Sep 2016].

- 8. Wong BM, Sullivan GM. How to write up your quality improvement initiatives for publication. J Grad Med Educ 2016;8:128–33. doi:10.4300/JGME-D-16-00086.1

- 9. Nicolay CR, Purkayastha S, Greenhalgh A, et al. Systematic review of the application of quality improvement methodologies from the manufacturing industry to surgical healthcare. Br J Surg 2012;99:324–35. doi:10.1002/bjs.7803

- 10. Taiwo Adeleke I, Adeleke IT, Ajayi OO. Current clinical coding practices and implementation of ICD-10 in Africa: a survey of Nigerian hospitals. AJHR 2015;3:38–46. doi:10.11648/j.ajhr.s.2015030101.16

- 11. Medori J, Fairon C. Machine learning and features selection for semi-automatic ICD-9-CM encoding. Proceedings of the NAACL HLT 2010 Second Louhi Workshop on Text and Data Mining of Health Documents, 2010:84–9.

- 12. Stanfill MH, Williams M, Fenton SH, et al. A systematic literature review of automated clinical coding and classification systems. J Am Med Inform Assoc 2010;17:646–51. doi:10.1136/jamia.2009.001024

- 13. Becker HW, Oskam SK, Okkes IM. ICPC2-ICD10 Thesaurus. A diagnostic terminology for semi-automatic double coding in Electronic Patient records. In: Okkes IM, Oskam SK, Lambert H, eds. ICPC in the Amsterdam transition project. Amsterdam: Academic Medical Center/University of Amsterdam Department of Family Medicine, 2005.

- 14. Kringos DS, Boerma WGW, Hutchinson A, et al. The breadth of primary care: a systematic literature review of its core dimensions. BMC Health Serv Res 2010;10:65. doi:10.1186/1472-6963-10-65

- 15. Olagundoye OA, Malan Z, Mash B, et al. Reliability measurement and ICD-10 validation of ICPC-2 for coding/classification of diagnoses/health problems in an African primary care setting. Fam Pract 2018;35:406–11. doi:10.1093/fampra/cmx132

- 16. O'Reilly GM, Gabbe B, Moore L, et al. Classifying, measuring and improving the quality of data in trauma registries: a review of the literature. Injury 2016;47:559–67. doi:10.1016/j.injury.2016.01.007

- 17. Lloyd SS, Layman E. The effects of automated encoders on coding accuracy and coding speed. Top Health Inf Manage 1997;17:72–9.
