Abstract

Facial expression is one of the main channels of everyday human communication. The reported study investigated the pattern of emotional facial expression in healthy older adults and in older adults with mild cognitive impairment (MCI) or Alzheimer's disease (AD). It focused on the mimicking of displayed emotional facial expressions in a sample of 25 older adults (healthy, MCI, and AD patients). The adequacy of each participant's facial expressions of six basic emotions was measured from Kinect 3D recordings and compared with that participant's own typical emotional facial expressions. The reactions were elicited by asking participants to mimic 49 still pictures of emotional facial expressions. No statistically significant differences in the frequency or adequacy of emotional facial expression were found between the healthy and MCI groups. A distinct pattern of emotional expression was observed in the AD group. Further investigation of older adults' facial expression patterns may reduce misunderstandings and improve the patients' quality of life.

Keywords: emotion, aging, cognitive impairment, Alzheimer's disease, mild cognitive impairment

1. Introduction

The facial expression of emotion is perceived as a natural way of communicating a person's inner states. It also enriches conversation without requiring additional verbal cues. Although it is generally regarded as a natural and automatic function, it has been observed to change in certain clinical conditions [1]–[4]. One such condition is Alzheimer's disease (AD) [5], together with its prodromal phase, mild cognitive impairment (MCI) [6]–[8]. Even though the general diagnostic criteria for both conditions emphasize cognitive disturbances [9], clinicians and caregivers report that altered facial emotional expression has a substantial impact on everyday caregiver-patient contact. Emotion dysregulation in everyday contact significantly affects the welfare of informal caregivers and is associated with a decreased quality of life of the patient's family system [10]–[13]. The present study was motivated by the authors' clinical experience. Its main goal was an initial observation of whether emotional facial mimicry in older adults is altered by coexisting, progressively increasing cognitive impairment due to dementia. The authors focused on exploring the potential relation between the level of cognitive functioning, verbal fluency, emotion perception, and the adequacy of facial mimicking of emotional stimuli. The study was approved by the Medical University of Lublin ethical committee on human research.

2. Materials and methods

2.1. Inclusion and exclusion criteria

For the purpose of the study, 65 older adult volunteers (45 women and 20 men) were invited to participate. All had normal or corrected-to-normal vision and hearing. The main inclusion criteria were: age between 70 and 90 years; signed informed consent for participation in the study; preserved language skills; fulfillment of the criteria for a cognitively healthy older adult, an MCI patient, or an AD patient according to [9]; and a confirmed medical diagnosis of AD (AD group only). The main exclusion criteria were: current or past neurological conditions (such as epilepsy, tumor, stroke, or traumatic brain injury) and psychiatric conditions (such as depression) affecting cognitive state.

2.2. Neuropsychological assessment

A two-stage neuropsychological assessment was performed to verify cognitive, emotional, and general health status. The first meeting was devoted to screening procedures (Mini Mental State Examination (MMSE), Clinical Dementia Rating scale, Global Deterioration Scale, 7-minute test, fluency tasks, Hachinski's scale, and a semi-structured interview). Participants who fulfilled the inclusion/exclusion criteria then underwent the second part of the assessment, the Polish version of the Right Hemisphere Language Battery (RHLB-PL). Subjects scoring below 10 points on the MMSE were excluded from further examination, as were subjects scoring more than 5 points on Hachinski's scale. Subjects who did not fulfill the inclusion/exclusion criteria were briefed on their current condition and thanked for their participation.

2.3. Statistical analysis

Subjects who fulfilled the criteria were invited to take part in the experiment on emotional facial expressions. The final group of participants consisted of 25 right-handed older adults [M = 81.75 years (range 73.58–89.66), SD = 4.17]: healthy older adults (6 women and 3 men), MCI patients (6 women and 1 man), and AD patients (6 women and 3 men). A detailed description of the groups is presented in Table 1 and Table 2. The normality of the distributions was verified with the Shapiro-Wilk W test. Normality was confirmed for age, MMSE, fluency (letter K, animals, and body parts), RHLB humor, and RHLB emotional prosody. Homogeneity of variance was verified with Levene's test. Depending on these results, further analysis was performed with one-way ANOVA, the Kruskal-Wallis test, the Mann-Whitney U test, or the Chi2 test.
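The rank-based tests named above can be sketched with the Python standard library alone. This is an illustrative reimplementation, not the statistical software used in the study, and it rests on stated assumptions: tied values receive average ranks, H uses the standard tie correction, and the smaller of the two U statistics is reported. P-values are omitted because they would require the chi-square distribution (k − 1 degrees of freedom) for H, or exact tables or a normal approximation for U. All function names are hypothetical.

```python
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..N, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with the usual correction for ties."""
    pooled = [x for g in groups for x in g]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    h, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        h += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n_total * (n_total + 1)) * h - 3 * (n_total + 1)
    # tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N)
    counts: Dict[float, int] = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    return h / (1 - ties / (n_total ** 3 - n_total))


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> float:
    """Smaller of the two Mann-Whitney U statistics (the value usually reported)."""
    ranks = average_ranks(list(a) + list(b))
    u_a = sum(ranks[:len(a)]) - len(a) * (len(a) + 1) / 2
    return min(u_a, len(a) * len(b) - u_a)
```

In a three-group design such as this one, H would be computed once across the healthy, MCI, and AD groups, and U would then be applied pairwise as the post hoc comparison.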

Table 1. Description of the sample.

| Variable | Group | M | SD | Test statistic |
|---|---|---|---|---|
| Age (years) | Healthy | 82.5 | 4.89 | F(2, 22) = 0.39; p = 0.68 |
| | MCI | 80.62 | 4.43 | |
| | AD | 81.88 | 3.42 | |
| Education (years) | Healthy | 12.67 | 3.16 | Chi2 = 1.65; p = 0.44 |
| | MCI | 10.57 | 3.41 | |
| | AD | 11.56 | 2.96 | |
| Hachinski's scale (points) | Healthy | 1.9 | 0.9 | Chi2 = 8.92; p = 0.01 |
| | MCI | 2.7 | 1.1 | |
| | AD | 3.6 | 1.0 | |
| Time since diagnosis (years) | MCI | 1.29 | 0.76 | U = 10.5; p = 0.02 |
| | AD | 3.11 | 1.9 | |

Note: MCI: mild cognitive impairment; AD: Alzheimer Disease; M: mean; SD: standard deviation.
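The one-way ANOVA for age in Table 1 can be recomputed from the group summaries, using the group sizes given in Section 2.3 (9 healthy, 7 MCI, and 9 AD participants). The sketch below assumes the reported SDs are sample SDs (n − 1 denominator). `anova_from_summary` is a hypothetical helper. With the age values it gives F ≈ 0.39 on (2, 22) degrees of freedom, and the size-weighted grand mean is 81.75 years, which matches the values reported above.

```python
from typing import Sequence, Tuple


def anova_from_summary(groups: Sequence[Tuple[int, float, float]]) -> Tuple[float, int, int]:
    """One-way ANOVA from (n, mean, SD) per group; returns (F, df_between, df_within)."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)  # SD assumed to be the sample SD
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Age (years): healthy, MCI, AD
age = [(9, 82.5, 4.89), (7, 80.62, 4.43), (9, 81.88, 3.42)]
f_age, df_b, df_w = anova_from_summary(age)
print(round(f_age, 2), df_b, df_w)  # 0.39 2 22
```

The same helper applies only to rows analyzed with ANOVA (F), not to rows reported as Chi2 or U.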

Table 2. Neuropsychological profile of the participants.

| Scale | Group | M | SD | Test statistic |
|---|---|---|---|---|
| MMSE | Healthy | 30.7 | 2.31 | F = 77.65; p = 0.001 |
| | MCI | 23.1 | 3.4 | |
| | AD | 15.8 | 1.9 | |
| Fluency-K | Healthy | 10.4 | 2.8 | F = 6.12; p = 0.008 |
| | MCI | 7.7 | 4.2 | |
| | AD | 4.4 | 3.9 | |
| Fluency-F | Healthy | 8.2 | 2.8 | Chi2 = 9.33; p = 0.009 |
| | MCI | 4.7 | 4.2 | |
| | AD | 2.3 | 3.1 | |
| Fluency-animals | Healthy | 15.5 | 4.4 | F = 17.22; p = 0.0001 |
| | MCI | 8.6 | 3.6 | |
| | AD | 5.2 | 3.2 | |
| Fluency-body parts | Healthy | 19.8 | 5.2 | F = 15.43; p = 0.0001 |
| | MCI | 13.1 | 3.9 | |
| | AD | 7.4 | 4.8 | |
| 7-minute test | Healthy | 0.1 | 0.33 | Chi2 = 19.82; p = 0.0001 |
| | MCI | 2.57 | 1.13 | |
| | AD | 3 | 0 | |
| RHLB-PL | | | | |
| Humor | Healthy | 6.2 | 2.4 | Chi2 = 5.08; p = 0.08 |
| | MCI | 3.9 | 1.7 | |
| | AD | 3.9 | 0.8 | |
| Lexical prosody | Healthy | 12.3 | 3.7 | Chi2 = 10.13; p = 0.006 |
| | MCI | 9.1 | 2.9 | |
| | AD | 5.6 | 4.1 | |
| Emotional prosody | Healthy | 10 | 2.9 | F = 9.97; p = 0.001 |
| | MCI | 8.1 | 2.5 | |
| | AD | 4.7 | 2.2 | |

Note: MCI: mild cognitive impairment; AD: Alzheimer Disease; M: mean; SD: standard deviation; MMSE: Mini Mental State Examination; RHLB-PL: Right Hemisphere Language Battery, Polish version.

The groups did not differ in age or education level but did differ on Hachinski's scale. Post hoc analysis showed a significant difference between the healthy and AD groups (U = 8.5; p = 0.004) but not between the healthy and MCI groups (U = 17.5; p = 0.18) or between the AD and MCI groups (U = 19; p = 0.17). The clinical groups also differed in time since the initial diagnosis.

The group assignment was supported by significant differences in the main screening scales (MMSE, 7-minute test). The groups differed significantly on all neuropsychological scales except the RHLB humor subscale (p = 0.08), and these differences correspond to the criteria for the healthy, MCI, and AD groups. Post hoc analysis showed significant differences between healthy participants and AD patients on all scales used. The differences between healthy controls and AD patients in the fluency tasks were: letter K (p = 0.006), animals (p < 0.001), body parts (p < 0.001), and letter F (U = 8; p = 0.004). The differences between healthy participants and AD patients on the RHLB subscales were: humor (U = 19; p = 0.05), lexical prosody (U = 7.5; p = 0.003), and emotional prosody (p = 0.001).

Significant differences between healthy participants and MCI patients were observed in animal fluency (p = 0.004) and body-part fluency (p = 0.03). Trend-level differences between these two groups were observed in letter F fluency, RHLB humor, and RHLB lexical prosody. The only significant difference between MCI and AD patients was in RHLB emotional prosody (p = 0.04); trend-level differences between these two groups were observed in body-part fluency and RHLB lexical prosody.

2.4. Facial emotions mimicking experiment layout

The subjects were presented with 83 pictures of human faces, cropped to show only the face of the actor. The actors were young and old Caucasian men and women without distinguishing facial features (e.g., glasses, mustaches, or beards). The pictures were taken from P. Ekman's Pictures of Facial Affect (POFA) set and supplemented with pictures specially prepared by the authors. The emotional content of the pictures was assessed by 11 psychologists, who were asked to name the emotion presented and rate its intensity as a percentage. Fleiss' kappa was calculated for each picture across the following categories: happy, sad, fear, disgust, surprise, and anger. Pictures with kappa higher than 0.6 were included in the further analysis: in total, the data obtained for 49 pictures (15 color and 34 black-and-white) were analyzed, and the data collected for the remaining pictures were discarded. The subjects were blind to this selection and were instructed to react to all 83 pictures “as well as they can”. Their task was to “Do as they do” (i.e., as the presented actors do). Most participants spontaneously named the emotion presented, and their labels were mostly adequate. Each part of the assessment took approximately 45 minutes.
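Fleiss' kappa for a panel of raters can be sketched as follows. The source does not specify how a per-picture kappa was derived, so this sketch returns both the overall kappa and each picture's observed agreement P_i (the share of rater pairs that agree on that picture). Applying the 0.6 cut-off to P_i, as in the usage lines, is an assumption. The rating counts and the function name are hypothetical.

```python
from typing import List, Sequence, Tuple


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> Tuple[float, List[float]]:
    """counts[i][j] = number of raters who assigned picture i to category j.
    Every row must sum to the same number of raters n.
    Returns (overall Fleiss' kappa, per-picture observed agreement P_i)."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("each picture must be rated by the same number of raters")
    n_cats = len(counts[0])
    # overall proportion of assignments that fall into each category
    p_j = [sum(row[j] for row in counts) / (n_items * n_raters) for j in range(n_cats)]
    # per-picture agreement: fraction of agreeing rater pairs
    p_i = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1)) for row in counts]
    p_bar = sum(p_i) / n_items      # mean observed agreement
    p_e = sum(p * p for p in p_j)   # agreement expected by chance
    return (p_bar - p_e) / (1 - p_e), p_i


# Hypothetical ratings by 11 psychologists; columns: happy, sad, fear, disgust, surprise, anger
ratings = [
    [11, 0, 0, 0, 0, 0],
    [0, 9, 2, 0, 0, 0],
    [0, 0, 4, 4, 3, 0],
]
kappa, agreement = fleiss_kappa(ratings)
selected = [i for i, p in enumerate(agreement) if p > 0.6]
print(selected)  # [0, 1]
```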

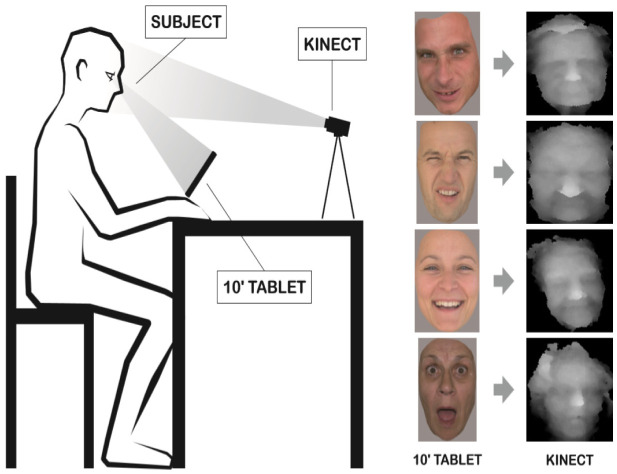

The subjects sat in a quiet room and were not disturbed during the experiment. The researcher was present for the whole experiment and, if necessary, redirected the participant's attention to the stimuli. Subjects were instructed to look at the tablet on which the pictures were presented. The Kinect sensor was placed behind the tablet at a distance of 1.5 m. The experimental setting is shown in Figure 1. Each picture was displayed for up to one minute, and a black background was shown for 15 seconds between stimuli. The onset of each stimulus was signaled by a cross in the middle of the screen. For healthy participants, data were collected during the first 10–15 seconds of picture display, which was sufficient to react to a single stimulus. For MCI/AD participants, data were collected for 10–15 seconds from the moment of stimulus recollection. The presentation could be shortened manually once the participant had reacted to the stimulus. If no reaction was recorded within 1 minute, the next stimulus was presented. During the 15-second interval between stimuli, many participants used the tablet as a mirror to check their appearance (fixing their hair or commenting on how they looked). Only two participants felt discomfort during the emotional stimulus presentation and withdrew from further participation. All other participants described the experiment as amusing and not intrusive.
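The presentation timing can be sketched as a simple schedule. Each picture stays on screen for at most 60 s, or for less when the researcher advances it manually, and is followed by a 15 s black screen. The helper name, the convention that the first onset is at t = 0, and the treatment of the fixation cross as part of the interval are assumptions made for illustration.

```python
from typing import List

MAX_DISPLAY_S = 60.0  # a picture is shown for at most one minute
ISI_S = 15.0          # black background between consecutive stimuli


def stimulus_onsets(display_durations: List[float]) -> List[float]:
    """Onset time (s) of each stimulus, given how long each one was actually shown."""
    onsets: List[float] = []
    t = 0.0
    for duration in display_durations:
        if not 0.0 < duration <= MAX_DISPLAY_S:
            raise ValueError(f"display duration {duration} s is outside (0, {MAX_DISPLAY_S}]")
        onsets.append(t)
        t += duration + ISI_S  # display time plus the inter-stimulus interval
    return onsets


# Example: the first picture timed out, the next two were advanced manually.
print(stimulus_onsets([60.0, 20.0, 35.0]))  # [0.0, 75.0, 110.0]
```

Onsets computed this way mark where a time-locked analysis window would begin for healthy participants. For MCI/AD participants, the source ties the window to the moment of stimulus recollection instead.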

Figure 1. Study layout with examples of stimuli (angry/disgust/happy/surprise) and their corresponding 3D recordings.

Data were collected throughout the experiment, which took 45 minutes on average. At the beginning, the subjects were asked to recall happy, sad, and annoying events and pleasant surprises in their lives. This was used to establish each participant's individual, personalized profile of emotional facial expression in the six basic emotions. A neutral facial expression was also recorded.

The subject's face was continuously mapped by the Kinect sensor. The subject's typical facial reactions representing the six basic emotions and the neutral expression were set as that subject's individual prototypical facial expressions. Facial emotional mimicry was measured with a specially designed algorithm. The algorithm compared the subject's facial reaction to each stimulus, registered within a 10–15-second time-locked recording, with the subject's own prototypical reaction in the corresponding emotional category. The exact algorithm is described in [14].
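The comparison with individual prototypes can be illustrated as nearest-prototype matching. This is not the algorithm of [14], which is not reproduced here. The Euclidean distance, the three-dimensional feature vectors (standing in for whatever face descriptors the sensor provides), and the function names are all assumptions. A reaction is scored as adequate when it lies closest to the subject's own prototype for the stimulus emotion.

```python
import math
from typing import Dict, Sequence


def nearest_prototype(features: Sequence[float], prototypes: Dict[str, Sequence[float]]) -> str:
    """Label of the subject's own prototype closest (Euclidean) to the observed features."""
    return min(prototypes, key=lambda label: math.dist(features, prototypes[label]))


def is_adequate(features: Sequence[float], prototypes: Dict[str, Sequence[float]],
                stimulus_emotion: str) -> bool:
    """Adequate if the reaction is closest to the subject's prototype for the stimulus emotion."""
    return nearest_prototype(features, prototypes) == stimulus_emotion


# Hypothetical face descriptors recorded for one subject during the recall phase.
prototypes = {"neutral": [0.0, 0.0, 0.0], "happy": [0.8, 0.1, 0.0], "sad": [-0.3, 0.6, 0.2]}
observed = [0.7, 0.2, 0.1]
print(nearest_prototype(observed, prototypes))   # happy
print(is_adequate(observed, prototypes, "sad"))  # False
```

Because the prototypes are the subject's own expressions, this kind of scoring does not penalize idiosyncratic but consistent ways of showing an emotion. That is the motivation the text gives for recording a personal profile first.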

2.5. Ethics approval of research

The research was conducted ethically in accordance with the World Medical Association Declaration of Helsinki. The subjects (or their guardians) gave written informed consent, and the study protocol was approved by the Medical University of Lublin ethical committee on human research.

3. Results

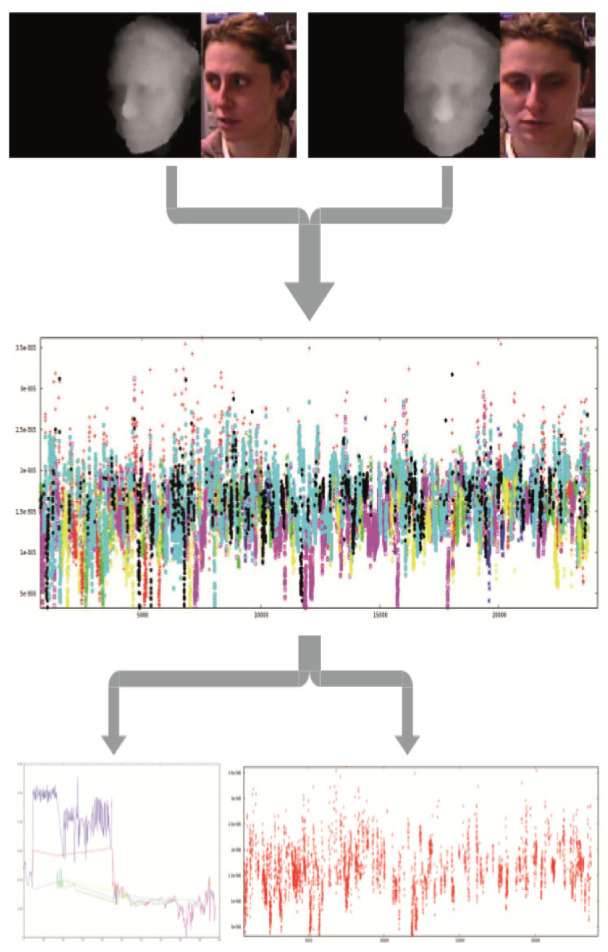

The final analysis was performed for 21 participants (seven in each group: healthy older adults, MCI patients, and AD patients). The data of 4 participants were discarded because of a high level of noise (N = 2) or withdrawal of consent for the experiment (N = 2). During the recording, 6,000–74,000 frames were collected per participant. Only the event-locked data collected in the 10–15 seconds of the most intense reaction to the 49 selected pictures were analyzed. An initial automatic assessment was performed. However, because reaction times differed between healthy older adults and MCI/AD patients, the peak emotional responses were selected manually for further analysis. The emotional adequacy of a participant's reactions is shown graphically in Figure 2. The selected frames of the participant's emotional facial reactions were assessed for similarity to the prototypical facial expressions.
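In the study, peak responses were selected manually. The automatic alternative that the text mentions can be sketched as choosing the highest-scoring frame inside the analysis window. The intensity score (for example, distance from the subject's neutral prototype), the 15 s default window, and the function name are hypothetical. The window start would be the picture onset for healthy participants and the moment of stimulus recollection for MCI/AD participants.

```python
from typing import List, Tuple

Frame = Tuple[float, float]  # (timestamp in s, hypothetical expression-intensity score)


def peak_frame(frames: List[Frame], window_start_s: float, window_s: float = 15.0) -> Frame:
    """Return the highest-scoring frame in [window_start_s, window_start_s + window_s)."""
    in_window = [f for f in frames if window_start_s <= f[0] < window_start_s + window_s]
    if not in_window:
        raise ValueError("no frames were recorded in the analysis window")
    return max(in_window, key=lambda f: f[1])


frames = [(0.0, 0.1), (5.0, 0.8), (12.0, 0.5), (20.0, 0.99)]
print(peak_frame(frames, 0.0))   # (5.0, 0.8)
print(peak_frame(frames, 10.0))  # (20.0, 0.99)
```

The second call shows why the window start matters. A later reaction, as is typical of the clinical groups, is missed entirely if the window is anchored to picture onset.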

Figure 2. Emotion mimicking data processing: 1. Data recording in three-part frames (stimulus/3D screenshot/RGB screenshot); 2. Facial emotion accuracy during the experiment (red: neutral, green: fear, navy blue: anger, pink: sad, yellow: disgust, blue: happy, black: surprise); 3. Facial emotional reaction to one stimulus (left) and the distribution of the happy facial emotional reaction during the whole experiment (right).

The participants' emotional reactions to all 49 stimuli were analyzed to determine whether any group favored a particular emotion. The groups did not show statistically significant differences in the frequency of the emotions expressed. The adequacy of the participants' facial expressions relative to the main emotion of the stimulus was similar in all groups. The reactions were then clustered by the sign of the stimulus emotion into responses to positive and negative stimuli; neutral stimuli were not included. Detailed information is presented in Table 3. The adequacy of emotional facial mimicking was analyzed in a mixed-model analysis of variance. No statistically significant differences were observed between the groups (F(2;18) = 0.02; p = 0.98). No main effect of stimulus sign was identified (F(1;18) = 1.85; p = 0.19), and there was no interaction between group and sign (F(2;18) = 0.44; p = 0.65). Mauchly's test did not confirm sphericity. Homogeneity of covariance matrices was confirmed with Box's M test. Further analysis for dependent samples was conducted to verify within-group differences between the adequacy of facial expressions in response to positive and negative stimuli. The normality of the distributions was confirmed with the Shapiro-Wilk W test. A negative Pearson correlation between the adequacy of facial expressions in response to positive and negative stimuli was found at the trend level (r = −0.41; p = 0.06).
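The clustering by sign and the per-sign adequacy percentage can be sketched as follows. The source does not list which emotions were treated as negative. The grouping below (happy as positive; fear, disgust, anger, sad, and surprise as negative) is therefore an assumption. Defining adequacy as the share of reactions whose recognized emotion matches the stimulus emotion is also an assumption. The trial data are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

# Assumed valence grouping (hypothetical); neutral stimuli are excluded as in the text.
POSITIVE = {"happy"}
NEGATIVE = {"fear", "disgust", "anger", "sad", "surprise"}  # placing surprise here is an assumption


def adequacy_by_sign(trials: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """trials: (stimulus emotion, emotion recognized in the subject's face).
    Returns the percentage of adequate reactions per sign; signs with no trials are omitted."""
    tallies: Dict[str, List[int]] = {"positive": [0, 0], "negative": [0, 0]}  # [adequate, total]
    for stimulus, expressed in trials:
        if stimulus in POSITIVE:
            sign = "positive"
        elif stimulus in NEGATIVE:
            sign = "negative"
        else:
            continue  # neutral stimuli are not included
        tallies[sign][1] += 1
        if expressed == stimulus:
            tallies[sign][0] += 1
    return {sign: 100.0 * hit / total for sign, (hit, total) in tallies.items() if total > 0}


trials = [("happy", "happy"), ("happy", "neutral"), ("sad", "sad"),
          ("anger", "disgust"), ("neutral", "neutral")]
print(adequacy_by_sign(trials))  # {'positive': 50.0, 'negative': 50.0}
```

One positive and one negative percentage per participant is the kind of paired measure that the within-group comparison and the correlation described above operate on.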

Table 3. Facial emotional mimicking adequacy among the groups.

| Emotion | Group | Mean frequency of emotional reaction | SD | Test (frequency) | Adequacy of emotional reaction (%) | SD | Test (adequacy) |
|---|---|---|---|---|---|---|---|
| Happy | Healthy | 11 | 7.1 | F = 0.27; p = 0.77 | 20.9 | 11.5 | F = 1.53; p = 0.24 |
| | MCI | 6.43 | 4.9 | | 16.5 | 16.3 | |
| | AD | 11.57 | 5.9 | | 20.9 | 10.6 | |
| Fear | Healthy | 8.86 | 3.8 | F = 0.5; p = 0.62 | 10.7 | 13.4 | F = 0.47; p = 0.63 |
| | MCI | 6.86 | 5 | | 17.9 | 18.9 | |
| | AD | 6.29 | 6.5 | | 10.7 | 13.4 | |
| Disgust | Healthy | 9 | 4.4 | F = 0.29; p = 0.75 | 17.9 | 17.5 | F = 0.084; p = 0.36 |
| | MCI | 5.42 | 4.7 | | 23.2 | 18.3 | |
| | AD | 9.14 | 6.7 | | 16.1 | 18.7 | |
| Anger | Healthy | 6.43 | 3.7 | F = 1.23; p = 0.32 | 12.5 | 14.4 | F = 0.16; p = 0.86 |
| | MCI | 5.86 | 4.1 | | 12.5 | 14.4 | |
| | AD | 5 | 6.3 | | 3.6 | 6.1 | |
| Surprise | Healthy | 4 | 3.2 | F = 0.91; p = 0.42 | 5.4 | 9.8 | Chi2 = 4.91; p = 0.086 |
| | MCI | 10 | 10.2 | | 19.6 | 25.9 | |
| | AD | 10 | 6 | | 16.1 | 22.5 | |
| Sad | Healthy | 6.71 | 4.6 | F = 0.22; p = 0.81 | 10.7 | 15.2 | F = 0.16; p = 0.86 |
| | MCI | 7.71 | 5.9 | | 12.5 | 14.4 | |
| | AD | 8.57 | 7.8 | | 19.6 | 25.9 | |
| Neutral | Healthy | 10.57 | 4.1 | F = 2; p = 0.16 | | | |
| | MCI | 13.86 | 9.1 | | | | |
| | AD | 6.71 | 5.9 | | | | |
| Emotions total | Healthy | | | | 14 | 3.8 | |
| | MCI | | | | 16.9 | 4.8 | |
| | AD | | | | 15.5 | 4.1 | |
| Negative emotions | Healthy | | | | 13.27 | 6.1 | |
| | MCI | | | | 16.33 | 11.3 | |
| | AD | | | | 12.76 | 5.8 | |
| Positive emotions | Healthy | | | | 20.88 | 11.5 | |
| | MCI | | | | 16.48 | 16.3 | |
| | AD | | | | 20.88 | 10.6 | |

Note: MCI: mild cognitive impairment; AD: Alzheimer Disease; SD: standard deviation.

Post hoc analysis revealed a significant correlation in the AD group (r = −0.81; p = 0.02); no statistically significant correlations were found in the remaining groups. These results suggest that all groups mimicked negative and positive emotions with similar frequency. Nevertheless, the AD group had marked difficulty flexibly adjusting to the sign of the presented stimuli: AD participants were able to represent adequately only one sign of emotional stimuli, either positive or negative.
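The reported correlations can be checked against the usual t statistic for a Pearson coefficient, t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. The sketch assumes that the overall correlation was computed over all 21 participants and the AD correlation over the 7 AD patients. Under that assumption, |t| ≈ 1.96 falls below the two-tailed 5% critical value of 2.093 for 19 df, which is consistent with p = 0.06. In contrast, |t| ≈ 3.09 exceeds the critical value of 2.571 for 5 df. The function names are hypothetical.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


t_all = r_to_t(-0.41, 21)  # about -1.96; critical |t| for df = 19 is 2.093
t_ad = r_to_t(-0.81, 7)    # about -3.09; critical |t| for df = 5 is 2.571
print(abs(t_all) > 2.093, abs(t_ad) > 2.571)  # False True
```

The comparison also shows how strongly the small AD sample depends on effect size: with only 5 degrees of freedom, a correlation weaker than about |r| = 0.75 would not reach the 5% level.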

4. Discussion

The obtained results partially correspond with current data on emotion recognition abilities in older adults [7],[15]–[18]. The observed adequacy of emotional facial expression may be related to the intensity of the emotional expressions presented; such a result has already been reported in MCI and AD patients [5]. Natural mimicking and emotional contagion of the presented emotions are less efficient in persons with cognitive disorders [1]–[8]. It has also been reported that the neutral facial expressions of older adults may be perceived as more negative than the person intended [19]. Facial emotional mimicking is an unconscious social glue connecting us with our conversational partners [20]. The underlying mechanisms are complex and are gradually being discovered [21]–[29]. It is also believed that the network responsible for the correct perception and performance of facial emotional reactions is fragile and may be altered by aversive individual experiences [30]–[34]. Participants in the current study were children during World War II, which may have left its mark on their ability to read facial expressions. In addition, the noticeable right-hemisphere deficits in the AD group may correspond with a lower ability to express and recall emotional content. A bias in responding to positive and negative emotional stimuli has also been observed in healthy young adults [35], and it increases with age [14]. These factors may have influenced the results of the current study, and they could reflect the typical pattern of emotional functioning of older persons with and without cognitive dysfunction.

The results reported here should be treated as preliminary findings. Further analyses with larger samples could reveal more significant and complex effects in all groups: healthy, MCI, and AD.

The technology for recording and analyzing dynamic changes in human emotional expression has its limitations [36]–[39], and further developments in this field are expected soon. Current lines of investigation cover physiological reactions measured by multiple sensors (Empatica E4) [40],[41]; behavioral reactions measured by vision sensors (Kinect) [42]; and the mapping of brain networks with deep learning systems [43]–[45]. Rapid progress in interdisciplinary research that combines existing solutions in novel ways may shed more light on human emotional functioning [39]. The study protocol presented here offers an initial glimpse of the future use of vision sensors in clinical settings. The observed level of adequacy in emotion mimicking may well be connected with the sensitivity of the sensors applied in the study.

All of the discussed studies used language to establish the sign and level of the emotion reported by the subjects, relying on self-report questionnaires and emotion labeling. The progression of AD and MCI is typically associated with a loss of verbal fluency and of the adequate use of the mother tongue. Therefore, the results of the cited studies can be obtained only in groups with relatively preserved language skills. To the authors' knowledge, the current study is one of few that used a nonverbal procedure to assess the recognition of emotions in other people's faces. Because the procedure resembles everyday human interaction, the subjects perceived it as pleasant and non-threatening. The reported results show a pattern similar to that described in recent studies, but they were obtained without specialized equipment (fMRI, EEG) and without the use of language. With further technological change, it may become possible to observe the dynamic alterations in human facial expression caused by the disease. As applications using the facial recognition embedded in smartphones become more widespread, self-diagnosis of possible cognitive or mood disorders may become more than just a vision of the future.

5. Conclusions

Observed changes in the demographic structure of societies worldwide and the growing number of older persons with dementia and mild cognitive impairment raise the question of self-diagnosis. The typical neuropsychological assessment performed by professionals covers only one aspect of everyday functioning, and the assessment of emotional functioning is currently based on observation and questionnaires. The data collected during such assessments are partial and subjective. A more objective tool that would enable measurement of the emotional mimicking process is therefore needed. Recent developments in mobile technology and vision capture algorithms give hope that an application for recognizing the adequacy of emotional mimicking can be developed for caregivers. Such an application could also lay the groundwork for the future development of rehabilitation of facial emotion recognition.

Acknowledgments

The study described herein was performed while Justyna Gerłowska was representing the Medical University of Lublin, Department of Neurology.

Footnotes

Author contributions: JG: substantial contribution to the conception and design of the work, data acquisition, analysis and interpretation of data for the work, drafting the work, and final approval of the version to be published; KD: substantial contribution to the design of the work, analysis and interpretation of data for the work, drafting the work, final approval of the version to be published, and study design; KR: substantial contribution to the conception and design of the work, critical revision of the work for important intellectual content, final approval of the version to be published, and data collection. All authors agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Conflict of interest: The authors declare no conflict of interest.

References

1. Gola KA, Shany-Ur T, Pressman P, et al. A neural network underlying intentional emotional facial expression in neurodegenerative disease. Neuroimage Clin. 2017;14:672–678. doi: 10.1016/j.nicl.2017.01.016.

2. Marshall CR, Hardy CJD, Russell LL, et al. Motor signatures of emotional reactivity in frontotemporal dementia. Sci Rep. 2018;8:1030. doi: 10.1038/s41598-018-19528-2.

3. Carr AR, Mendez MF. Affective Empathy in Behavioral Variant Frontotemporal Dementia: A Meta-Analysis. Front Neurol. 2018;9:417. doi: 10.3389/fneur.2018.00417.

4. Patel S, Oishi K, Wright A, et al. Right Hemisphere Regions Critical for Expression of Emotion Through Prosody. Front Neurol. 2018;9:224. doi: 10.3389/fneur.2018.00224.

5. Sapey-Triomphe L-A, Heckermann RA, Boublay N, et al. Neuroanatomical correlates of recognizing face expressions in mild stages of Alzheimer's disease. PLoS One. 2015;10:e0143586. doi: 10.1371/journal.pone.0143586.

6. Sturm VE, Yokoyama JS, Seeley WW, et al. Heightened emotional contagion in mild cognitive impairment and Alzheimer's disease is associated with temporal lobe degeneration. Proc Natl Acad Sci USA. 2013;110:9944–9949. doi: 10.1073/pnas.1301119110.

7. Virtanen M, Singh-Manoux A, Batty DG, et al. The level of cognitive function and recognition of emotions in older adults. PLoS One. 2017;12:e0185513. doi: 10.1371/journal.pone.0185513.

8. Savaskan E, Summermatter D, Schroeder C, et al. Memory deficits for facial identity in patients with amnestic mild cognitive impairment (MCI). PLoS One. 2018;13:e0195693. doi: 10.1371/journal.pone.0195693.

9. Rajan KB, Weuve J, Barnes LL, et al. The diagnosis of dementia due to Alzheimer's disease: Recommendations from the National Institute on Aging-Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimers Dement. 2019;15:1–7. doi: 10.1016/j.jalz.2011.03.005.

10. Raggi A, Tasca D, Panerai S, et al. The burden of distress and related coping processes in family caregivers of patients with Alzheimer's disease living in the community. J Neurol Sci. 2015;358:77–81. doi: 10.1016/j.jns.2015.08.024.

11. Raivio MM, Laakkonen M-L, Pitkälä KH. Psychological well-being of spousal caregivers of persons with Alzheimer's disease and associated factors. Eur Geriatr Med. 2015;6:128–133.

12. Ikeda C, Terada S, Oshima E, et al. Difference in determinants of caregiver burden between amnestic mild cognitive impairment and mild Alzheimer's disease. Psychiatry Res. 2015;226:242–246. doi: 10.1016/j.psychres.2014.12.055.

13. Hackett RA, Steptoe A, Cadar D, et al. Social engagement before and after dementia diagnosis in the English Longitudinal Study of Ageing. PLoS One. 2019;14:e0220195. doi: 10.1371/journal.pone.0220195.

14. Dmitruk K, Wójcik GM. Modelling 3D scene based on rapid face tracking and object recognition. Ann UMCS Inform. 2010;X:63–68.

15. Gonçalves AR, Fernandes C, Pasion R, et al. Emotion identification and aging: behavioral and neural age-related changes. Clin Neurophysiol. 2018;129:1020–1029. doi: 10.1016/j.clinph.2018.02.128.

16. Schmitt H, Kray J, Ferdinand NK. Does the Effort of Processing Potential Incentives Influence the Adaption of Context Updating in Older Adults? Front Psychol. 2017;8:1969. doi: 10.3389/fpsyg.2017.01969.

17. Arani A, Murphy MC, Glaser KJ, et al. Measuring the effects of aging and sex on regional brain stiffness with MR elastography in healthy older adults. Neuroimage. 2015;111:59–64. doi: 10.1016/j.neuroimage.2015.02.016.

18. Medaglia JD, Pasqualetti F, Hamilton RH, et al. Brain and cognitive reserve: Translation via network control theory. Neurosci Biobehav Rev. 2017;75:53–64. doi: 10.1016/j.neubiorev.2017.01.016.

19. Albohn DN, Adams RB Jr. Everyday beliefs about emotion perceptually derived from neutral facial appearance. Front Psychol. 2020;11:264. doi: 10.3389/fpsyg.2020.00264.

20. Jarick M, Kingstone A. The duality of gaze: eyes extract and signal social information during sustained cooperative and competitive dyadic gaze. Front Psychol. 2015;6:1423. doi: 10.3389/fpsyg.2015.01423.

21. Dampney R. Emotion and the Cardiovascular System: Postulated Role of Inputs From the Medial Prefrontal Cortex to the Dorsolateral Periaqueductal Gray. Front Neurosci. 2018;12:343. doi: 10.3389/fnins.2018.00343.

22. Lin H, Müller-Bardorff M, Gathmann B, et al. Stimulus arousal drives amygdalar responses to emotional expressions across sensory modalities. Sci Rep. 2020;10:1898. doi: 10.1038/s41598-020-58839-1.

23. Song J, Wei Y, Ke H. The effect of emotional information from eyes on empathy for pain: A subliminal ERP study. PLoS One. 2019;14:e0226211. doi: 10.1371/journal.pone.0226211.

24. Perusquía-Hernández M, Ayabe-Kanamura S, Suzuki K. Human perception and biosignal-based identification of posed and spontaneous smiles. PLoS One. 2019;14:e0226328. doi: 10.1371/journal.pone.0226328.

25. Kilpeläinen M, Salmela V. Perceived emotional expressions of composite faces. PLoS One. 2020;15:e0230039. doi: 10.1371/journal.pone.0230039.

26. Borgomaneri S, Bolloni C, Sessa P, et al. Blocking facial mimicry affects recognition of facial and body expressions. PLoS One. 2020;15:e0229364. doi: 10.1371/journal.pone.0229364.

27. Grueschow M, Jelezarova I, Westphal M, et al. Emotional conflict adaptation predicts intrusive memories. PLoS One. 2020;15:e0225573. doi: 10.1371/journal.pone.0225573.

28. Wirth BE, Wentura D. Furious snarling: Teeth-exposure and anxiety-related attentional bias towards angry faces. PLoS One. 2018;13:e0207695. doi: 10.1371/journal.pone.0207695.

29. Avenanti A. Blocking facial mimicry affects recognition of facial and body expressions. 2019. doi: 10.17605/OSF.IO/CSUD3.

30. Natu VS, Barnett MA, Hartley J, et al. Development of Neural Sensitivity to Face Identity Correlates with Perceptual Discriminability. J Neurosci. 2016;36:10893–10907. doi: 10.1523/JNEUROSCI.1886-16.2016.

31. Kungl MT, Bovenschen I, Spangler G. Early Adverse Caregiving Experiences and Preschoolers' Current Attachment Affect Brain Responses during Facial Familiarity Processing: An ERP Study. Front Psychol. 2017;8:2047. doi: 10.3389/fpsyg.2017.02047.

32. Reynolds GD, Roth KC. The Development of Attentional Biases for Faces in Infancy: A Developmental Systems Perspective. Front Psychol. 2018;9:222. doi: 10.3389/fpsyg.2018.00222.

33. Hartling C, Fan Y, Weigand A, et al. Interaction of HPA axis genetics and early life stress shapes emotion recognition in healthy adults. Psychoneuroendocrinology. 2019;99:28–37. doi: 10.1016/j.psyneuen.2018.08.030.

34. Ross P, Atkinson AP. Expanding Simulation Models of Emotional Understanding: The Case for Different Modalities, Body-State Simulation Prominence, and Developmental Trajectories. Front Psychol. 2020;11:309. doi: 10.3389/fpsyg.2020.00309.

35. Recio G, Wilhelm O, Sommer W, et al. Are event-related potentials to dynamic facial expressions of emotion related to individual differences in the accuracy of processing facial expressions and identity? Cogn Affect Behav Neurosci. 2017;17:364–380. doi: 10.3758/s13415-016-0484-6.

36. Nonis F, Dagnes N, Marcolin F, et al. 3D Approaches and challenges in facial expression recognition algorithms—a literature review. Appl Sci. 2019;9:3904.

37. Allaert B, Bilasco IM, Djeraba C. Micro and macro facial expression recognition using advanced Local Motion Patterns. IEEE Trans Affect Comput. 2019;PP:1.

38. Kulke L, Feyerabend D, Schacht A. A Comparison of the Affectiva iMotions Facial Expression Analysis Software With EMG for Identifying Facial Expressions of Emotion. Front Psychol. 2020;11:329. doi: 10.3389/fpsyg.2020.00329.

39. Colombo D, Fernández-Álvarez J, García Palacios A, et al. New Technologies for the Understanding, Assessment, and Intervention of Emotion Regulation. Front Psychol. 2019;10:1261. doi: 10.3389/fpsyg.2019.01261.

40. Bastiaansen M, Oosterholt M, Mitas O, et al. An Emotional Roller Coaster: Electrophysiological Evidence of Emotional Engagement during a Roller-Coaster Ride with Virtual Reality Add-On. J Hosp Tour Res. 2020.

41. Van Lier HG, Pieterse ME, Garde A, et al. A standardized validity assessment protocol for physiological signals from wearable technology: Methodological underpinnings and an application to the E4 biosensor. Behav Res Methods. 2020;52:607–629. doi: 10.3758/s13428-019-01263-9.

42. Mao Qr, Pan XY, Zhan YZ, et al. Using Kinect for real-time emotion recognition via facial expressions. Front Inform Tech El. 2015;16:272–282.

43. Ng HW, Nguyen VD, Vonikakis V, et al. Deep Learning for Emotion Recognition on Small Datasets using Transfer Learning. ACM Int Conf Multimodal Interact. 2015. pp. 443–449.

44. Liu W, Zheng WL, Lu BL. Emotion Recognition Using Multimodal Deep Learning. Neural Inf Process. 2016;9948:521–529.

45. Zhang TY, El Ali A, Wang C, et al. CorrNet: Fine-Grained Emotion Recognition for Video Watching Using Wearable Physiological Sensors. Sensors. 2021;21:52. doi: 10.3390/s21010052.