Abstract

Our ability to perceive meaningful action events involving objects, people, and other animate agents is characterized in part by an interplay of visual and auditory sensory processing and their cross-modal interactions. However, this multisensory ability can be altered or dysfunctional in some hearing and sighted individuals, and in some clinical populations. The present meta-analysis sought to test current hypotheses regarding neurobiological architectures that may mediate audio-visual multisensory processing. Reported coordinates from 82 neuroimaging studies (137 experiments) that revealed some form of audio-visual interaction in discrete brain regions were compiled, converted to a common coordinate space, and then organized along specific categorical dimensions to generate activation likelihood estimate (ALE) brain maps and various contrasts of those derived maps. The results revealed brain regions (cortical “hubs”) preferentially involved in multisensory processing along different stimulus category dimensions, including 1) living versus nonliving audio-visual events, 2) audio-visual events involving vocalizations versus actions by living sources, 3) emotionally valent events, and 4) dynamic-visual versus static-visual audio-visual stimuli. These meta-analysis results are discussed in the context of neurocomputational theories of semantic knowledge representations and perception, and the brain volumes of interest are available for download to facilitate data interpretation for future neuroimaging studies.

Keywords: categorical perception, embodied cognition, multisensory integration, neuroimaging, sensory-semantic categories

Introduction

The perception of different categories of visual (unisensory) object and action forms are known to differentially engage distinct brain regions or networks in neurotypical individuals, such as when observing or identifying faces, body parts, living things, houses, fruits and vegetables, and outdoor scenes, among other proposed categories (Martin et al. 1996; Tranel et al. 1997; Caramazza and Mahon 2003; Martin 2007). Distinct semantic categories of real world sound-producing (unisensory) events are also known or thought to recruit different brain networks, such as nonliving environmental and mechanical sounds (Lewis et al. 2012), nonvocal action events produced by nonhuman animal sources (Engel et al. 2009; Lewis, Talkington, et al. 2011), as well as the more commonly studied categories of living things (especially human conspecifics) and vocalizations (notably speech) (Dick et al. 2007; Saygin et al. 2010; Goll et al. 2011; Trumpp et al. 2013; Brefczynski-Lewis and Lewis 2017). Extending beyond unisensory category-specific percepts, the neurobiological representations of multisensory events are thought to develop based on complex combinations of sensory and sensory-motor information, with some dependence on differences with individual observers’ experiences throughout life, such as with handedness (Lewis et al. 2006). One may have varying experiences with, for instance, observing and hearing a construction worker hammering a nail, or feeling a warm purring gray boots breed cat on a sofa. Additionally, while watching television, or a smart phone device, one can readily accept the illusion that the synchronized audio (speakers) and video movements (the screen) are emanating from a single animate or object source, leading to stable, unified multisensory percepts. Psychological literature indicates that perception of multisensory events can manifest as well-defined category-specific objects and action representations that build on past experiences (Rosch 1973; Vygotsky 1978; McClelland and Rogers 2003; Miller et al. 2003; Martin 2007). However, the rules that may guide the organization of cortical network representations that mediate multisensory perception of real-world events, and whether any taxonomic organizations for such representations exist at a categorical level, remain unclear.

The ability to organize information to attain a sense of global coherence, meaningfulness, and possible intention behind every-day observable events may fail to fully or properly develop, as for some individuals with autism spectrum disorder (ASD) (Jolliffe and Baron-Cohen 2000; Happe and Frith 2006; Kouijzer et al. 2009; Powers et al. 2009; Marco et al. 2011; Pfeiffer et al. 2011, 2018; Ramot et al. 2017; Webster et al. 2020) and possibly for some individuals with various forms of schizophrenia (Straube et al. 2014; Cecere et al. 2016; Roa Romero et al. 2016; Vanes et al. 2016). Additionally, brain damage, such as with stroke, has been reported to lead to deficits in multisensory processing (Van der Stoep et al. 2019). Thus, further understanding the organization of the multisensory brain has been becoming a topic of increasing clinical relevance.

At some processing stages or levels, the central nervous system is presumably “prewired” to readily develop an organized architecture that can rapidly and efficiently extract meaningfulness from multisensory events. This includes audio-visual event encoding and decoding that enables a deeper understanding of one’s environment, thereby conferring a survival advantage through improvements in perceived threat detection and in social communication (Hewes 1973; Donald 1991; Rilling 2008; Robertson and Baron-Cohen 2017). An understanding of multisensory neuronal processing mechanisms, however, may in many ways be better understood through models of semantic knowledge processing rather than models of bottom-up signal processing, which is prevalent in unisensory fields of literature. One set of theories behind semantic knowledge representation includes distributed-only views, wherein auditory, visual, tactile, and other sensory-semantic systems are distributed neuroanatomically with additional task-dependent representations or convergence-zones in cortex that link knowledge (Damasio 1989a; Languis and Miller 1992; Damasio et al. 1996; Tranel et al. 1997; Ghazanfar and Schroeder 2006; Martin 2007). A distributed-plus-hub view further posits the existence of additional task-independent representations (or “hubs”) that support the interactive activation of representations in all modalities, and for all semantic categories (Patterson et al. 2007).

More recent neurocomputational theories of semantic knowledge learning entails a sensory-motor framework wherein action perception circuits (APCs) are formed through sensory experiences, which manifest as specific distributions across cortical areas (Pulvermuller 2013, 2018; Tomasello et al. 2017). In this construct, combinatorial knowledge is thought to become organized by connections and dynamics between APCs, and cognitive processes can be modeled forthright. Such models have helped to account for the common observation of cortical hubs or “connector hubs” for semantic processing (Damasio 1989b; Sporns et al. 2007; van den Heuvel and Sporns 2013), which may represent multimodal, supramodal, or amodal mechanisms for representing knowledge. From this connector hub theoretical perspective, it remains unclear whether or how different semantic categories of multisensory perceptual knowledge might be organized, potentially including semantic hubs that link, for instance, auditory and visual unisensory systems at a category level.

Here, we addressed the issue of global neuronal organizations that mediate different aspects of audio-visual categorical perception by using activation likelihood estimate (ALE) meta-analyses of a diverse range of published studies to date that reported audio-visual interactions of some sort in the human brain. We defined the term “interaction” to include measures of neuronal sensitivity to temporal and/or spatial correspondence, response facilitation or suppression, inverse effectiveness, an explicit comparison of information from different modalities that pertained to a distinct object, and cross-modal priming (Stein and Meredith 1990; Stein and Wallace 1996; Calvert and Lewis 2004). These interaction effects were assessed in neurotypical adults (predominantly, if not exclusively, right-handed) using hemodynamic blood flow measures (functional magnetic resonance imaging [fMRI], or positron emission tomography [PET]) or magnetoencephalography (MEG) methodologies as whole brain neuroimaging techniques.

The resulting descriptive compilations and analytic contrasts of audio-visual interaction sites across different categories of audio-visual stimuli were intended to meet three main goals: The first goal was to reveal a global set of brain regions (cortical and noncortical) with significantly high probability of cross-sensory interaction processing regardless of variations in methods, stimuli, tasks, and experimental paradigms. The second goal was to validate and refine earlier multisensory processing concepts borne out of image-based meta-analyses of audio-visual interaction sites (Lewis 2010) that used a subset of the paradigms included in the present study, but here taking advantage of coordinate-based meta-analyses and more rigorous statistical approaches now that additional audio-visual interaction studies have subsequently been published.

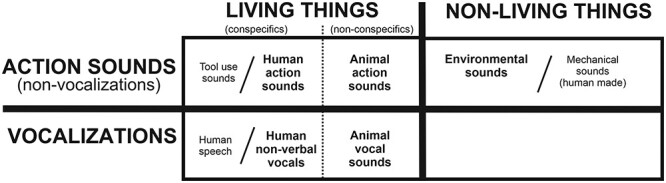

The third goal, as a special focus, was to test recent hypotheses regarding putative brain architectures mediating multisensory categorical perception that were derived from unisensory auditory object perception literature (Fig. 1), which encompassed theories to explain how real-world natural sounds are processed to be perceived as meaningful events to the observer (Brefczynski-Lewis and Lewis 2017). This hearing perception model entailed four proposed tenets that may shape brain organizations for processing real-world natural sounds, helping to explain “why” certain category-preferential representations appear in the human brain (and perhaps more generally in the brains of all mammals with hearing ability). These tenets for hearing perception included: 1) parallel hierarchical pathways process increasing information content, 2) metamodal operators guide sensory and multisensory processing network organizations, 3) natural sounds are embodied when possible, and 4) categorical perception emerges in neurotypical listeners.

Figure 1.

A taxonomic category model of the neurobiological organization of the human brain for processing and recognizing different acoustic–semantic categories of natural sounds (from Brefczynski-Lewis and Lewis 2017). Bold text in the boxed regions depict rudimentary sound categories, including living versus nonliving things and vocalizations versus nonvocal action sounds, which are categories being tested in the present audio-visual meta-analyses. Other subcategories are also indicated, including human speech, tool use sounds, and human-made machinery sounds. Vocal and instrumental music sounds/events are regarded as higher forms of communication, which rely on other networks and are thus outside the scope of the present study. Refer to text for other details.

After compiling the numerous multisensory human neuroimaging studies that employed different types of audio-visual stimuli, tasks, and imaging modalities, we sought to test three hypotheses relating to the above mentioned tenets and neurobiological model. The first two hypotheses effectively tested for support of the major taxonomic boundaries depicted in Figure 1: The first hypothesis being 1) that there will be a double-dissociation of brain systems for processing living versus nonliving audio-visual events, and the second hypothesis 2) that there will be a double-dissociation for processing vocalizations versus action audio-visual events produced by living things.

In the course of compiling neuroimaging literature, there was a clear divide between studies using static visual images (iconic representations) versus video with dynamic motion stimuli that corresponded with aspects of the auditory stimuli. The production of sound necessarily implies dynamic motion of some sort, which in many of the studies’ experimental paradigms also correlated with viewable object or agent movements. Thus, temporal and/or spatial intermodal invariant cues that physically correlate visual motion (“dynamic-visual”) with changes in acoustic energy are typically uniquely present in experimental paradigms using video (Stein and Meredith 1993; Lewkowicz 2000; Bulkin and Groh 2006). Conversely, static or iconic visual stimuli (“static-visual”) must be learned to be associated and semantically congruent with characteristic sounds, and with varying degrees of arbitrariness. Thus, a third hypothesis emerged 3) that the processing of audio-visual stimuli that entailed dynamic-visual motion stimuli versus static-visual stimuli will also reveal a double-dissociation of cortical processing pathways in the multisensory brain. The identification and characterization of any of these hypothesized neurobiological processing categories at a meta-analysis level would newly inform neurocognitive theories, specifying regions or network hubs where certain types of information may merge or in some way interact across sensory systems at a semantic category level. Thus, the resulting ALE maps are expected to facilitate the generation of new hypotheses regarding multisensory interaction and integration mechanisms in neurotypical individuals. They should also contribute to providing a foundation for ultimately understanding “why” multisensory processing networks develop the way they typically do, and why they may develop aberrantly, or fail to recover after brain injury, in certain clinical populations.

Materials and Methods

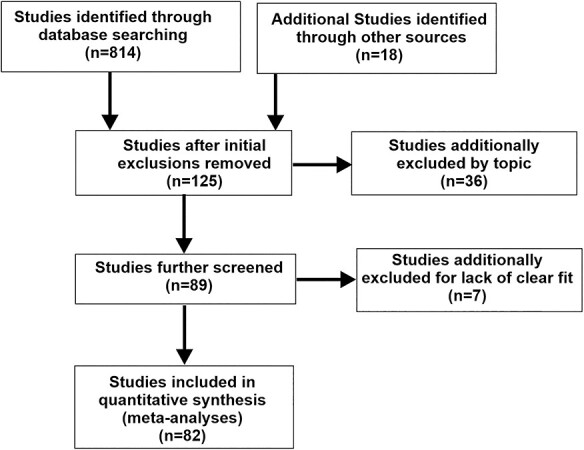

This work was performed in accordance with the PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions (Moher et al. 2009). Depicted in the PRISMA flow-chart (Fig. 2), original research studies were identified by PubMed and Google Scholar literature searches with keyword combinations “auditory + visual,” “audiovisual,” “multisensory,” and “fMRI” or “PET” or “MEG,” supplemented through studies identified through knowledge of the field published between 1999 through early 2020. Studies involving drug manipulations, patient populations, children, or nonhuman primates were excluded unless there was a neurotypical adult control group with separately reported outcomes. Of the included studies, reported coordinates for some paradigms had to be estimated from figures. Additionally, some studies did not use whole-brain imaging, but rather incorporated imaging to 50–60 mm slabs of axial brain slices so as to focus, for instance, on the thalamus or basal ganglia. These studies were included despite their being a potential violation of assumptions made by ALE analyses (see below) because the emphasis of the present study was to reveal proof of concept regarding differential audio-visual processing at a semantic category level. This yielded inclusion of 82 published fMRI, PET and MEG studies including audio-visual interaction(s) of some form (Table 1). The compiled coordinates, after converting to afni-TLRC coordinate system, derived from these studies are included in Appendix A, and correspond directly to Table 1.

Figure 2.

PRISMA table illustrating the flow of information through the different phases of the meta-analysis review.

Table 1.

List of all studies used in the subsequent subsets of audio-visual interaction site meta-analyses

| Study # | Experiment # | # Subjects | Multiple experiments | Left hem foci | Right hem foci | Number of foci | Congruent versus Incong | Living versus Nonliving | Emotional stimuli/task | Vocalizations versus Not | Dynamic (video) versus static | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 82 | 137 | First author | Year | Experimental code and abbreviated task | 1285 | 376 | 338 | 714 | Brief description of experimental paradigm | 2B | 2C | 2C | 2D | 2E | |

| 1 | 1 | Adams | 2002 | Expt 1 Table 3 A + V (aud coords only) | 12 | 5 | 1 | 6 | A and V commonly showing subordinate > basic object name verification (words with pictures or environmental sounds) | 1 | 2 | 2 | 2 | ||

| 2 | 2 | Alink | 2008 | Table 1c spheres move to drum sounds | 10 | 4 | 6 | 10 | Visual spheres and drum sounds moving: crossmodal dynamic capture versus conflicting motion | 1 | 2 | 2 | 1 | ||

| 3 | 3 | Balk | 2010 | Figure 2 asynchronous versus simultaneous | 14 | 2 | 1 | 3 | Natural asynchronous versus simultaneous AV speech synchrony (included both contrasts as interaction effects) | 1 | 1 | 1 | |||

| 4 | 4 | Baumann | 2007 | Table 1B coherent V + A versus A | 12 | 2 | 1 | 3 | Visual dots 16% coherent motion and in-phase acoustic noise > stationary acoustic sound | 1 | 2 | 2 | 2 | 1 | |

| 5 | Baumann | 2007 | Table 2B | pooled | 15 | 12 | 27 | Moving acoustic noise and visual dots 16% in-phase coherent > random dot motion | 1 | 2 | 2 | 2 | 1 | ||

| 5 | 6 | Baumgaertner | 2007 | Table 3 Action > nonact sentence+video | 19 | 3 | 0 | 3 | Conjunction spoken sentences (actions > nonactions) AND videos (actions > nonactions) | 1 | 1 | 1 | 1 | ||

| 6 | 7 | Beauchamp | 2004a | Figure 3J and K, Table 1 first 2 foci only | 26 | 2 | 0 | 2 | See photographs of tools, animals and hear corresponding sounds versus scrambled images and synthesized rippled sounds | 1 | 1 | 2 | 2 | 2 | |

| 7 | 8 | Beauchamp | 2004b | Expt 1 coordinates | 8 | 1 | 1 | 2 | High resolution version of 2004a study: AV tool videos versus unimodal (AV > A,V) | 1 | 1 | 2 | 2 | 1 | |

| 8 | 9 | Belardinelli | 2004 | Table 1 AV semantic congruence | 13 | 6 | 6 | 12 | Colored images of tools, animals, humans and semantically congruent versus incongruent sounds | 1 | 1 | 2 | 2 | ||

| 10 | Belardinelli | 2004 | Table 2 AV semantic incongruent | pooled | 2 | 3 | 5 | Colored images of tools, animals, humans and semantically incongruent versus congruent sounds | 2 | 0 | 0 | 0 | 0 | ||

| 9 | 11 | Biau | 2016 | Table 1A Interaction; speech synchronous | 17 | 8 | 0 | 8 | Hand gesture beats versus cartoon disk and speech interaction: synchronous versus asynchronous | 1 | 1 | 1 | 1 | ||

| 10 | 12 | Bischoff | 2007 | Table 2A only P < 0.05 included | 19 | 2 | 1 | 3 | Ventriloquism effect: gray disks and tones, synchronous (P < 0.05 corrected) | 1 | 2 | ||||

| 11 | 13 | Blank | 2013 | Figure 2 | 19 | 1 | 0 | 1 | Visual-speech recognition correlated with recognition performance | 1 | 1 | 1 | 1 | ||

| 12 | 14 | Bonath | 2013 | pg 116 congruent thalamus | 18 | 1 | 0 | 1 | Small checkerboards and tones: spatially congruent versus incongruent (thalamus) | 1 | 2 | 2 | 2 | ||

| 15 | Bonath | 2013 | pg 116 incongruent | pooled | 1 | 1 | 2 | Small checkerboards and tones: spatially incongruent versus congruent (thalamus) | 2 | 0 | 0 | 0 | 0 | ||

| 13 | 16 | Bonath | 2014 | Table 1A illusory versus not | 20 | 1 | 5 | 6 | Small checkerboards and tones: temporal > spatial congruence | 1 | 2 | 2 | 2 | ||

| 17 | Bonath | 2014 | Table 1B synchronous > no illusion | pooled | 3 | 0 | 3 | Small checkerboards and tones: spatial > temporal congruence | 1 | 2 | 2 | 2 | |||

| 14 | 18 | Bushara | 2001 | Table 1A (Fig. 2) AV-Control | 12 | 1 | 3 | 4 | Tones (100 ms) and colored circles synchrony: detect Auditory then Visual presentation versus Control | 1 | 2 | 2 | |||

| 19 | Bushara | 2001 | Table 1B (VA-C) five coords | pooled | 2 | 3 | 5 | Tones (100 ms) and colored circles synchrony: detect Visual then Auditory presentation versus Control | 1 | 2 | 2 | ||||

| 20 | Bushara | 2001 | Table 2A interact w/Rt Insula | pooled | 2 | 4 | 6 | Tones and colored circles: correlated functional connections with (and including) the right insula | 1 | 2 | 2 | ||||

| 15 | 21 | Bushara | 2003 | Table 2A collide > pass, strong A-V interact | 7 | 5 | 3 | 8 | Tone and two visual bars moving: tone synchrony induce perception they collide (AV interaction) versus pass by | 1 | 2 | 2 | 1 | ||

| 16 | 22 | Callan | 2014 | Table 5 AV-Audio (AV10-A10)-(AV6-A6) | 16 | 4 | 4 | 8 | Multisensory enhancement to visual speech in noise correlated with behavioral results | 1 | 1 | 1 | 1 | ||

| 23 | Callan | 2014 | Table 6 AV—Visual only | pooled | 1 | 1 | 2 | Multisensory enhancement to visual speech audio-visual versus visual only | 1 | 1 | 1 | 1 | |||

| 17 | 24 | Calvert | 1999 | Table 1 (Fig. 1) | 5 | 3 | 4 | 7 | View image of lower face and hear numbers 1 through 10 versus unimodal conditions (AV > Photos, Auditory) | 1 | 1 | 2 | 1 | 2 | |

| 18 | 25 | Calvert | 2000 | Figure 2 superadditive+subadditive AVspeech | 10 | 1 | 0 | 1 | Speech and lower face: supra-additive plus subadditive effects (AV-congruent > A,V > AV-incongruent) | 1 | 1 | 2 | 1 | 1 | |

| 26 | Calvert | 2000 | Table 1 supradditive AVspeech | pooled | 4 | 5 | 9 | Speech and lower face: supra-additive AV enhancement | 1 | 1 | 2 | 1 | 1 | ||

| 27 | Calvert | 2000 | Table 2 incongruent subadditive AVspeech | pooled | 3 | 3 | 6 | Speech and lower face: subadditive AV response to incongruent AV inputs | 2 | 0 | 0 | 0 | 0 | ||

| 19 | 28 | Calvert | 2001 | Table 2 superadditive and response depression | 10 | 4 | 11 | 15 | B/W visual checkerboard reversing and white noise bursts: Synchronous versus not; supradditive and response depression | 1 | 2 | 2 | |||

| 29 | Calvert | 2001 | Table 3A superadditive only | pooled | 6 | 4 | 10 | B/W visual checkerboard reversing and white noise bursts: Synchronous versus not; supradditive only | 1 | 2 | 2 | ||||

| 30 | Calvert | 2001 | Table 3B response depression only | pooled | 3 | 4 | 7 | B/W visual checkerboard reversing and white noise bursts: Synchronous versus not; response depression only | 1 | 2 | 2 | ||||

| 20 | 31 | Calvert | 2003 | Table 2A (Fig. 3 blue) | 8 | 13 | 8 | 21 | Speech and lower face: Moving dynamic speech (phonemes) versus stilled speech frames | 1 | 1 | 2 | 1 | 1 | |

| 21 | 32 | DeHaas | 2013 | Table 1A AVcong—Visual | 15 | 3 | 3 | 6 | Video clips of natural scenes (animals, humans): AV congruent versus Visual | 1 | 1 | 1 | |||

| 33 | DeHaas | 2013 | Table 1B V-AV incongruent | pooled | 2 | 0 | 2 | Video clips of natural scenes (animals, humans): Visual versus AV incongruent | 2 | 0 | 0 | 0 | 0 | ||

| 22 | 34 | Erickson | 2014 | Table 1A Congruent AV speech | 10 | 2 | 2 | 4 | McGurk effect (phonemes): congruent AV speech: AV > A and AV > V | 1 | 1 | 2 | 1 | 1 | |

| 35 | Erickson | 2014 | Table 1B McGurk speech | pooled | 2 | 0 | 2 | McGurk speech effect (phonemes) | 1 | 1 | 2 | 1 | 1 | ||

| 23 | 36 | Ethofer | 2013 | Table 1C emotion | 23 | 1 | 2 | 3 | Audiovisual emotional face-voice integration | 1 | 1 | 1 | 1 | 1 | |

| 24 | 37 | Gonzalo | 2000 | Table 1 AV > AVincon music and Chinese ideograms | 14 | 1 | 1 | 2 | Learn novel Kanji characters and musical chords, activity increases over time for consistent AV pairings | 1 | 2 | 2 | |||

| 38 | Gonzalo | 2000 | Table 2 inconsistent AV | pooled | 4 | 4 | 8 | Learn novel Kanji characters and musical chords, activity increases over time to inconsistent pairings | 2 | 0 | 0 | 0 | 0 | ||

| 39 | Gonzalo | 2000 | Table 3 AV consistent versus Aud | pooled | 1 | 1 | 2 | Learn novel Kanji characters and musical chords, learn consistent (vs. inconsistent) pairings versus auditory only | 1 | 2 | 2 | ||||

| 25 | 40 | Green | 2009 | Table 1 incongruent > congruent gesture-speech | 16 | 4 | 5 | 9 | Incongruent versus congruent gesture-speech | 2 | 1 | 1 | 1 | ||

| 41 | Green | 2009 | Table 4A Congruent gesture-speech > gesture or speech | pooled | 1 | 0 | 1 | Congruent gesture-speech versus gesture with unfamiliar speech and with familiar speech | 1 | 1 | 1 | 1 | |||

| 26 | 42 | Hagan | 2013 | Table 1 AV emotion, novel over time | 18 | 5 | 3 | 8 | Affective audio-visual speech: congruent AV emotion versus A, V; unique ROIs over time (MEG) | 1 | 1 | 1 | 1 | ||

| 43 | Hagan | 2013 | Table 2 AV emotion incongruent | pooled | 1 | 5 | 6 | Affective audio-visual speech: incongruent AV emotion versus A, V; unique ROIs over time (MEG) | 2 | 0 | 0 | 0 | 0 | ||

| 27 | 44 | Hasegawa | 2004 | Table 1A (well trained piano) AV induced by V-only | 26 | 12 | 6 | 18 | Piano playing: well trained pianists, mapping hand movements to sequences of sound | 1 | 1 | 2 | 1 | ||

| 28 | 45 | Hashimoto | 2004 | Table 1G (Fig. 4B, red) Learning Hangul letters to sounds | 12 | 2 | 1 | 3 | Unfamiliar Hangul letters and nonsense words, learn speech versus tone/noise pairings | 1 | 2 | 1 | 2 | ||

| 29 | 46 | He | 2015 | Table 3C AV speech foreign (left MTG focus) | 20 | 1 | 0 | 1 | Intrinsically meaningful gestures with German speech: Gesture-German > Gesture-Russian, German speech only | 1 | 1 | 1 | 1 | ||

| 30 | 47 | He | 2018 | Table 2 gestures and speech integration | 20 | 1 | 0 | 1 | Gesture-speech integration: Bimodal speech-gesture versus unimodal gesture with foreign speech and versus unimodal speech | 1 | 1 | 0 | 1 | 1 | |

| 31 | 48 | Hein | 2007 | Figure 2A AV incongruent | 18 | 0 | 2 | 2 | Familiar animal images and incorrect (incongruent) vocalizations (dog: meow) versus correct pairs | 2 | 0 | 0 | 0 | 0 | |

| 49 | Hein | 2007 | Figure 2B AV-artificial/nonliving | pooled | 0 | 1 | 1 | B/W images of artificial objects (“fribbles”) and animal vocalizations versus unimodal A, V | 1 | 2 | |||||

| 50 | Hein | 2007 | Figure 2C pSTS, pSTG, mSTG AV-cong | pooled | 0 | 3 | 3 | Familiar animal images and correct vocalizations (dog: woof-woof) | 1 | 1 | 1 | 2 | |||

| 51 | Hein | 2007 | Figure 3A incongruent | pooled | 4 | 0 | 4 | AV familiar incongruent versus unfamiliar artificial (red foci 1, 5, 6, 9) | 2 | 0 | 0 | 0 | 0 | ||

| 52 | Hein | 2007 | Figure 3B Foci 2, 3, 4 (blue) artificial/nonliving | pooled | 3 | 0 | 3 | Visual “Fribbles” and backward/underwater distorted animal sounds, learn pairings (blue foci 2,3,4) | 1 | 1 | 2 | ||||

| 53 | Hein | 2007 | Figure 3C congruent living (green) | pooled | 3 | 0 | 3 | Familiar congruent living versus artificial AV object features and animal sounds (green foci 7, 8, 10) | 1 | 1 | 2 | ||||

| 32 | 54 | Hocking | 2008 | pg 2444 verbal | 18 | 2 | 0 | 2 | (pSTS mask) Color photos, written names, auditory names, environmental sounds conceptually matched “amodal” | 1 | 1 | 2 | |||

| 55 | Hocking | 2008 | Table 3 incongruent simultaneous matching | pooled | 8 | 10 | 18 | Incongruent sequential AV pairs (e.g., see drum, hear bagpipes) versus congruent pairs | 2 | 0 | 0 | 0 | 0 | ||

| 33 | 56 | Hove | 2013 | pg 316 AV interaction putamen | 14 | 0 | 1 | 1 | Interaction between (beep > flash) versus (siren > moving bar); left putamen focus | 1 | 2 | ||||

| 34 | 57 | James | 2003 | Figure 2 | 12 | 0 | 1 | 1 | Activation by visual objects (“Greebles”) associated with auditory features (e.g., buzzes, screeches); (STG) | 1 | 2 | ||||

| 35 | 58 | James | 2011 | Table 1A bimodal (vs. scrambled) | 12 | 4 | 2 | 6 | Video of human manual actions (e.g., sawing): Auditory and Visual intact versus scrambled, AV event selectivity | 1 | 1 | 2 | 2 | 1 | |

| 36 | 59 | Jessen | 2015 | Table 1A emotion > neutral AV enhanced | 17 | 1 | 1 | 2 | Emotional multisensory whole body and voice expressions: AV emotion (anger and fear) > neutral expressions | 1 | 1 | 1 | 1 | 1 | |

| 60 | Jessen | 2015 | Table 1D fear > neutral AV enhanced | pooled | 2 | 1 | 3 | Emotional multisensory whole body and voice expressions: AV fear > neutral expressions | 1 | 1 | 1 | 1 | 1 | ||

| 37 | 61 | Jola | 2013 | Table 1C AVcondition dance | 12 | 3 | 3 | 6 | Viewing unfamiliar dance performance (tells a story by gesture) with versus without music: using intersubject correlation | 1 | 1 | 1 | 2 | 1 | |

| 38 | 62 | Kim | 2015 | Table 2A AV > C speech semantic match | 15 | 2 | 0 | 2 | Moving audio-visual speech perception versus white noise and unopened mouth movements | 1 | 1 | 1 | 1 | ||

| 39 | 63 | Kircher | 2009 | Figure 3B gesture related activation increase | 14 | 3 | 1 | 4 | Bimodal gesture-speech versus gesture and versus speech | 1 | 1 | 1 | 1 | ||

| 40 | 64 | Kreifelts | 2007 | Table 1 voice-face emotion | 24 | 1 | 2 | 3 | Facial expression and intonated spoken words, judge emotion expressed (AV > A,V; P < 0.05 only) | 1 | 1 | 1 | 1 | 1 | |

| 65 | Kreifelts | 2007 | Table 5 AV increase effective connectivity | pooled | 2 | 4 | 6 | Increased effectiveness connectivity with pSTS and thalamus during AV integration of nonverbal emotional information | 1 | 1 | 1 | 1 | 1 | ||

| 41 | 66 | Lewis | 2000 | Table 1 | 7 | 2 | 3 | 5 | Compare speed of tone sweeps to visual dot coherent motion: Bimodal versus unimodal | 1 | 2 | 2 | 1 | ||

| 42 | 67 | Matchin | 2014 | Table 1 AV > Aud only (McGurk) | 20 | 2 | 7 | 9 | McGurk audio-visual speech: AV > A only | 1 | 1 | 2 | 1 | 1 | |

| 68 | Matchin | 2014 | Table 2 AV > Video only | pooled | 9 | 6 | 15 | McGurk audio-visual speech: AV > V only | 1 | 1 | 2 | 1 | 1 | ||

| 69 | Matchin | 2014 | Table 3 MM > AV McGurk | pooled | 7 | 4 | 11 | McGurk Mismatch > AV speech integration | 2 | 0 | 0 | 0 | 0 | ||

| 43 | 70 | McNamara | 2008 | Table (BA44 and IPL) | 12 | 2 | 2 | 4 | Videos of meaningless hand gestures and synthetic tone sounds: Increases in functional connectivity with learning | 1 | 2 | 1 | |||

| 44 | 71 | Meyer | 2007 | Table 3 paired A + V versus null | 16 | 3 | 3 | 6 | Paired screen red flashes with phone ring: paired V (conditioned stimulus) and A (unconditioned) versus null events | 1 | 2 | 2 | |||

| 72 | Meyer | 2007 | Table 4 CS+, learned AV association with V-only | pooled | 4 | 6 | 10 | Paired screen flashes with phone ring: View flashes after postconditioned versus null events | 1 | 2 | 2 | ||||

| 45 | 73 | Muller | 2012 | Table S1 effective connectivity changes | 27 | 4 | 3 | 7 | Emotional facial expression (groaning, laughing) AV integration and gating of information | 1 | 1 | 1 | 1 | 2 | |

| 46 | 74 | Murase | 2008 | Figure 4 discordant > concordant AVinteraction | 28 | 1 | 0 | 1 | Audiovisual speech (syllables) showing activity to discordant versus concordant stimuli: left mid-STS | 2 | 1 | 2 | 1 | 1 | |

| 47 | 75 | Naghavi | 2007 | Figure 1C | 23 | 0 | 3 | 3 | B/W pictures (animals, tools, instruments, vehicles) and their sounds: Congruent versus Incongruent | 1 | 3 | 3 | 2 | ||

| 48 | 76 | Naghavi | 2011 | Figure 2A cong = incon | 30 | 1 | 0 | 1 | B/W drawings of objects (living and non) and natural sounds (barking, piano): congruent = incongruent encoding | 0 | 2 | ||||

| 77 | Naghavi | 2011 | Figure 2B congruent > incongruent | pooled | 0 | 1 | 1 | B/W drawings of objects (living and non) and natural sounds (barking, piano): congruent > incongruent encoding | 1 | 2 | |||||

| 78 | Naghavi | 2011 | Figure 2C incongruent > congruent | pooled | 1 | 1 | 2 | B/W drawings of objects (living and non) and natural sounds (barking, piano): incongruent > congruent encoding | 2 | 0 | 0 | 0 | 0 | ||

| 49 | 79 | Nath | 2012 | pg 784 | 14 | 1 | 0 | 1 | McGurk effect (phonemes): congruent AV speech correlated with behavioral percept | 1 | 1 | 2 | 1 | 1 | |

| 50 | 80 | Naumer | 2008 | Figure 2 Table 1A max contrast | 18 | 8 | 6 | 14 | Images of “Fribbles” and learned artificial sounds (underwater animal vocals): post training versus max contrast | 1 | 1 | 2 | |||

| 81 | Naumer | 2008 | Figure 3 Table 1B pre–post | pooled | 5 | 6 | 11 | Images of “Fribbles” and learned corresponding artificial sounds: Post- versus Pre-training session | 1 | 1 | 2 | ||||

| 82 | Naumer | 2008 | Figure 4 Table 2 | pooled | 1 | 1 | 2 | Learn of “Freebles” and distorted sounds as incongruent > congruent pairs | 2 | 0 | 0 | 0 | 0 | ||

| 51 | 83 | Naumer | 2011 | Figure 3C | 10 | 1 | 0 | 1 | Photographs of objects (living and non) and related natural sounds | 1 | 2 | ||||

| 52 | 84 | Noppeny | 2008 | Table 2 AV incongruent > congruent | 17 | 5 | 2 | 7 | Speech sound recognition through AV priming, environmental sounds and spoken words: Incongruent > congruent | 2 | 0 | 0 | 0 | 0 | |

| 85 | Noppeny | 2008 | Table 3 AV congruent sounds/words | pooled | 4 | 0 | 4 | Speech sound recognition through AV priming, environmental sounds and spoken words: Congruent > incongruent | 1 | 2 | |||||

| 53 | 86 | Ogawa | 2013a | Table 1 (pg 162 data) | 13 | 1 | 0 | 1 | AV congruency of pure tone and white dots moving on screen (area left V3A) | 1 | 2 | 2 | |||

| 54 | 87 | Ogawa | 2013b | Table 1 3D > 2D and surround > monaural effects | 16 | 3 | 4 | 7 | Cinematic 3D > 2D video and surround sound > monaural while watching a movie (“The Three Musketeers”) | 1 | 1 | 0 | 1 | ||

| 55 | 88 | Okada | 2013 | Table 1 AV > A | 20 | 5 | 4 | 9 | Video of AV > A speech only | 1 | 1 | 1 | 1 | ||

| 56 | 89 | Olson | 2002 | Table 1A synchronized AV > static Vis-only | 10 | 7 | 4 | 11 | Whole face video and heard words: Synchronized AV versus static V | 1 | 1 | 1 | 1 | ||

| 90 | Olson | 2002 | Table 1C synchronized AV > desynchronized AV speech | pooled | 2 | 0 | 2 | Whole face video and heard words: Synchronized versus desynchronized | 1 | 1 | 1 | 1 | |||

| 57 | 91 | Plank | 2012 | pg 803 AV congruent effect | 15 | 0 | 1 | 1 | AV spatially congruent > semantically matching images of natural objects and associated sounds (right STG) | 1 | 3 | 3 | 2 | ||

| 92 | Plank | 2012 | Table 2A spatially congruent-baseline | pooled | 5 | 5 | 10 | Images of natural objects and associated sounds, spatially congruent versus baseline | 1 | 3 | 3 | 2 | |||

| 58 | 93 | Raij | 2000 | Table 1B letters and speech sounds | 9 | 2 | 3 | 5 | Integration of visual letters and corresponding auditory phonetic expressions (MEG study) AV versus (A + V) | 1 | 2 | 1 | 2 | ||

| 59 | 94 | Regenbogen | 2017 | Table 2A degraded > clear Multisensory versus unimodal input | 29 | 5 | 6 | 11 | Degraded > clear AV versus both visual and auditory unimodal visual real-world object-in-action recognition | 1 | 0 | 2 | 1 | ||

| 60 | 95 | Robins | 2008 | Table 2 (Fig. 2) AV integration (AV > A and AV > V) | 10 | 2 | 1 | 3 | Face speaking sentences: angry, fearful, happy, neutral (AV > A,V) | 1 | 1 | 1 | |||

| 96 | Robins | 2008 | Table 4A (Fig. 5) AV integration and emotion | pooled | 1 | 4 | 5 | AV faces and spoken sentences expressing fear or neutral valence: AV integration (AV > A,V conditions) | 1 | 1 | 1 | 1 | |||

| 97 | Robins | 2008 | Table 4B emotion effects | pooled | 2 | 0 | 2 | AV faces and spoken sentences expressing fear or neutral valence: Emotional AV-fear > AV-neutral | 1 | 1 | 1 | 1 | 1 | ||

| 98 | Robins | 2008 | Table 4C (Fig. 5) fearful AV integration | pooled | 1 | 5 | 6 | AV faces and spoken sentences expressing fear or neutral valence: Fearful-only AV integration | 1 | 1 | 1 | 1 | 1 | ||

| 99 | Robins | 2008 | Table 4D AV-only emotion | pooled | 1 | 3 | 4 | AV faces and spoken sentences expressing fear or neutral valence: AV-only emotion | 1 | 1 | 1 | 1 | 1 | ||

| 61 | 100 | Scheef | 2009 | Table 1 cartoon jump + boing | 16 | 1 | 2 | 3 | Video of cartoon person jumping and “sonification” of a tone, learn correlated pairings: AV-V and AV-A conjunction | 1 | 1 | 2 | 2 | 1 | |

| 62 | 101 | Schmid | 2011 | Table 2E A effect V (Living and nonliving, pictures) | 12 | 3 | 4 | 7 | Environmental sounds and matching pictures: reduced activity by A | 1 | 3 | 3 | 2 | ||

| 102 | Schmid | 2011 | Table 2F V competition effect A (reduced activity by a visual object) | pooled | 2 | 2 | 4 | Environmental sounds and matching pictures: reduced activity by V | 1 | 3 | 3 | 2 | |||

| 103 | Schmid | 2011 | Table 2G AV crossmodal interaction × auditory attention | pooled | 2 | 3 | 5 | Environmental sounds and matching pictures: cross-modal interaction and auditory attention | 1 | 3 | 3 | 2 | |||

| 63 | 104 | Sekiyama | 2003 | Table 3 (fMRI nAV-AV) | 8 | 1 | 0 | 1 | AV speech, McGurk effect with phonemes (ba, da, ga) and noise modulation: noise-AV > AV (fMRI) | 1 | 1 | 2 | 1 | 1 | |

| 105 | Sekiyama | 2003 | Table 4 (PET nAV-AV) | pooled | 1 | 3 | 4 | AV speech, McGurk effect with phonemes (ba, da, ga) and noise modulation: noise-AV > AV (PET) | 1 | 1 | 2 | 1 | 1 | ||

| 64 | 106 | Sestieri | 2006 | Table 1 (Fig. 3), AV location match versus semantic | 10 | 2 | 5 | 7 | B/W images (animal, weapons) and environmental sounds: Match location > recognition | 1 | 1 | 2 | 2 | ||

| 107 | Sestieri | 2006 | Table 2 AV semantic recognition versus localization | pooled | 2 | 1 | 3 | B/W pictures and environmental sounds: congruent semantic recognition > localization task | 1 | 3 | 3 | 2 | |||

| 65 | 108 | Stevenson | 2009 | Table 1B AVtools > AVspeech | 11 | 1 | 1 | 2 | Hand tools in use video: inverse effectiveness (degraded AV tool > AV speech) | 1 | 1 | 2 | 3 | 1 | |

| 109 | Stevenson | 2009 | Table 1C (Fig. 8) AVspeech > AVtools | pooled | 1 | 1 | 2 | Face and speech video: inverse effectiveness (degraded AV speech > AV tool use) | 1 | 1 | 1 | 1 | |||

| 66 | 110 | Straube | 2011 | Table 3A and B iconic/metaphoric speech-gestures versus speech, gesture | 16 | 2 | 2 | 4 | Integration of Iconic and Metaphoric speech-gestures versus speech and gesture | 1 | 1 | 1 | 1 | ||

| 67 | 111 | Straube | 2014 | p939 Integration foci | 16 | 3 | 0 | 3 | Integration of iconic hand gesture-speech > unimodal speech and unimodal gesture (healthy control group) | 1 | 1 | 1 | 1 | ||

| 68 | 112 | Szycik | 2009 | Table 1 AV incongruent > AV congruent face+speech | 11 | 7 | 2 | 9 | Incongruent AV face-speech versus congruent AV face-speech | 2 | 1 | 1 | 1 | ||

| 69 | 113 | Tanabe | 2005 | Table 1A AV; A then V; not VA | 15 | 10 | 10 | 20 | Amorphous texture patterns and modulated white noises: Activation during learning delay period (AV) | 1 | 2 | 2 | 2 | 2 | |

| 114 | Tanabe | 2005 | Table 2A+2B (Fig. 5A) AV and VA | pooled | 5 | 6 | 11 | Amorphous texture patterns and modulated white noises: changes after feedback learning (AV and VA) | 1 | 2 | 2 | 2 | 2 | ||

| 115 | Tanabe | 2005 | Table 3A+3B (Fig. 6) AV and VA; delay period | pooled | 9 | 1 | 10 | Amorphous texture patterns and modulated white noises: sustained activity throughout learning (AV and VA) | 1 | 2 | 2 | 2 | 2 | ||

| 70 | 116 | Taylor | 2006 | pg 8240 AV incongruent | 15 | 1 | 0 | 1 | Color photos (V), environmental sounds (A), spoken words: Incongruent (living objects) | 2 | 0 | 0 | 0 | 0 | |

| 117 | Taylor | 2006 | Figure 1A and B, Figure 1C and D (living > nonliving) | pooled | 2 | 0 | 2 | Color photos (V), environmental sounds and spoken words (A): Cong AV versus Incong (living objects) | 1 | 2 | |||||

| 71 | 118 | Van Atteveldt | 2004 | Table 1A letters and speech sounds | 16 | 3 | 1 | 4 | Familiar letters and their speech sounds: Congruent versus not and Bimodal versus Unimodal | 1 | 1 | 2 | |||

| 72 | 119 | Van Atteveldt | 2007 | Table 2A+B (Fig. 2) | 12 | 3 | 2 | 5 | Single letters and their speech sounds (phonemes): Congruent > Incong; Passive perception, blocked and event-related design | 1 | 1 | 2 | |||

| 120 | Van Atteveldt | 2007 | Table 3 (Fig. 2) passive | pooled | 1 | 1 | 2 | Single letters and their speech sounds (phonemes): Congruent > Incong, active perception task | 1 | 1 | 2 | ||||

| 121 | Van Atteveldt | 2007 | Table 4 (Fig. 6) active condition, incongruent | pooled | 1 | 6 | 7 | Single letters and their speech sounds (phonemes): Incongruent > Congruent | 2 | 0 | 0 | 0 | 0 | ||

| 73 | 122 | Van Atteveldt | 2010 | Table 1B STS; specific adaptation congruent > incong | 16 | 3 | 1 | 4 | Letter and speech sound pairs (vowels, consonants): Specific adaptation effects | 1 | 1 | 2 | |||

| 74 | 123 | Van der Wyk | 2010 | Table 2 AV interaction effects oval/circles+speech/nonspeech | 16 | 3 | 3 | 6 | Geometric shape modulate with speech (sentences) | 1 | 1 | 1 | |||

| 75 | 124 | Von Kriegstein | 2006 | Figure 4B after > before voice-face | 14 | 0 | 4 | 4 | Face and object photos with voice and other sounds: Voice-Face association learning | 1 | 1 | 1 | 2 | ||

| 76 | 125 | Watkins | 2006 | Figure 4 illusory multisensory interaction | 11 | 0 | 2 | 2 | Two brief tone pips leads to illusion of two screen flashes (annulus with checkerboard) when only one flash present | 1 | 2 | 2 | |||

| 126 | Watkins | 2006 | Table 1 (A enhances V in general) | pooled | 5 | 3 | 8 | Single brief tone pip leads to illusion of single screen flash (annulus with checkerboard) when two flashes present | 1 | 2 | 2 | ||||

| 77 | 127 | Watkins | 2007 | Figure 3 2 flashes +1 beep illusion | 10 | 0 | 1 | 1 | Two visual flashes and single audio bleep leads to the illusion of a single flash | 1 | 2 | 2 | |||

| 78 | 128 | Watson | 2014a | Table 1A AV-adaptation effect (multimodal localizer) | 18 | 0 | 1 | 1 | Videos of emotional faces and voice: multisensory localizer | 1 | 1 | 1 | 1 | ||

| 129 | Watson | 2014a | Table 1C AV-adaptation effect, cross-modal adaptation effect | pooled | 0 | 1 | 1 | Videos of emotional faces and voice: crossmodal adaptation effects | 1 | 1 | 1 | 1 | 1 | ||

| 79 | 130 | Watson | 2014b | Table 1 AV > baseline (Living and nonliving) | 40 | 3 | 5 | 8 | Moving objects and videos of faces with corresponding sounds: AV > baseline | 1 | 1 | ||||

| 131 | Watson | 2014b | Table 4A integrative regions (Living and nonliving) | pooled | 2 | 2 | 4 | Moving objects and videos of faces with corresponding sounds: Integrative regions (AV > A,V) | 1 | 1 | |||||

| 132 | Watson | 2014b | Table 4B integrative regions (Living and nonliving) | pooled | 0 | 1 | 1 | Moving objects and videos of faces with corresponding sounds: People-selective integrative region | 1 | 1 | 1 | 1 | |||

| 80 | 133 | Werner | 2010 | Table 1 superadditive (AV-salience effect) | 21 | 0 | 3 | 3 | Categorize movies of actions with tools or musical instruments (degraded stimuli); AV interactions both tasks | 1 | 1 | 2 | 2 | 1 | |

| 134 | Werner | 2010 | Table 2 AV interactions predict behavior | pooled | 1 | 2 | 3 | Categorize movies of actions with tools or musical instruments; AV interactions predicted by behavior | 1 | 1 | 2 | 2 | 1 | ||

| 135 | Werner | 2010 | Table 3C superadditive AV due to task | pooled | 3 | 0 | 3 | Categorize movies of actions with tools or musical instruments; Subadditive AV to task | 1 | 1 | 2 | 2 | 1 | ||

| 81 | 136 | Willems | 2007 | Table 3C and D mismatch hand gestures and speech | 16 | 2 | 1 | 3 | Mismatch of hand gesture (no face) and speech versus correct | 2 | 1 | 1 | 1 | ||

| 82 | 137 | Wolf | 2014 | Table 1 face cartoons + phonemes | 16 | 1 | 1 | 2 | Drawing of faces with emotional expressions: Supramodal effects with emotional valence | 1 | 1 | 1 | 1 | 2 |

Notes: The first column denotes the 82 included studies, and the second column the 137 experimental paradigms of those studies. The next columns depict first author (alphabetically), the year, and abbreviated description of the data table (T) or figure (F) used, followed by number of subjects. The column labeled “Multiple experiments” indicates that the multiple experimental paradigms where subject numbers were pooled from that study for the meta-analysis, such as for the single study ALE meta-analysis depicted in Figure 3A (purple). The number of reported foci in the left and right hemispheres and their sum is also indicated. This is followed by a brief description of the experimental paradigm: B/W = black and white, A = audio, AV = audio-visual, V = visual, VA = visual-audio. The rightmost columns show the coding of experimental paradigms that appear in subsequent meta-analyses and Tables, with correspondence to the results illustrated in Figure 3: 0 = not used in contrast, 1 = included, 2 = included as the contrast condition, blank cell = uncertain of clear category membership and not used in that contrast condition. See text for other details.

Activation Likelihood Estimate Analyses

The ALE analysis consists of a coordinate-based, probabilistic meta-analytic technique for assessing the colocalization of reported activations across studies (Turkeltaub et al. 2002, 2012; Eickhoff et al. 2009, 2012, 2016; Laird et al. 2009, 2010; Muller et al. 2018). Whole-brain probability maps were initially created across all the reported foci in standardized stereotaxic space (Talairach “T88,” being converted from, for example, Montreal Neurological Institute “MNI” format) using GingerALE software (Brainmap GingerALE version 2.3.6; Research Imaging Institute; http://brainmap.org). This software was also used to create probability maps, where probabilities were modeled by 3D Gaussian density distributions that took into account sample size variability by adjusting the full-width half-max (FWHM) for each study (Eickhoff et al. 2009; Eickhoff et al. 2016). For each voxel, GingerALE estimated the cumulative probabilities that at least one study reported activation for that locus for a given experimental paradigm condition. Assuming and accounting for spatial uncertainty across reports, this voxel-wise procedure generated statistically thresheld ALE maps, wherein the resulting ALE values reflected the probability of reported activation at that locus. Using a random effects model, the values were tested against the null hypothesis that activation was independently distributed across all studies in a given meta-analysis.

To determine the likely spatial convergence of reported activations across studies, activation foci coordinates from experimental paradigms were transferred manually and compiled into one spreadsheet on two separate occasions by two different investigators (coauthors). To avoid (or minimize) the potential for errors (e.g., transformation from MNI to TAL, sign errors, duplicates, omissions, etc.) an intermediate stage of data entry involved logging all the coordinates and their transformations into one spreadsheet (Appendix A) where they were coded by Table/Figure and number of subjects (Table 1), facilitating inspection and verification relative to hard copy printouts of all included studies. A third set of files (text files) were then constructed from that spreadsheet of coordinates and entered as input files for the various meta-analyses using GingerALE software. This process enabled a check-sums of number of left and right hemisphere foci and the number of subjects for all of the meta-analyses reported herein. When creating single study data set analysis ALE maps, coordinates from experimental paradigms of a given study (using the same participants in each paradigm) were pooled together, thereby avoiding potential violations of assumed subject-independence across maps, which could negatively impact the validity of the meta-analytic results (Turkeltaub et al. 2012). After pooling, there were 1285 participants (Table 1, column 6). Some participants could conceivably have been recruited in more than one study (such as from the same laboratory). However, we had no means for assessing this and assumed that these were all unique individuals. All single study data set ALE maps were thresheld at P < 0.05 with a voxel-level family-wise error (FWE) rate correction for multiple comparisons (Muller et al. 2018) using 10 000 Monte Carlo threshold permutations. For all “contrast” ALE meta-analysis maps, cluster-level thresholds were derived using the single study corrected FWE datasets and then further thresheld for contrast at an uncorrected P < 0.05, and using 10 000 permutations. Minimum cluster sizes were used to further assess rigorousness of clusters, which are included in the tables and addressed in Results.

Guided by earlier meta-analyses of hearing perception and audio-visual interaction sites, several hypothesis driven contrasts were derived as addressed in the Introduction (Lewis 2010; Brefczynski-Lewis and Lewis 2017). A minimum of 17–20 studies was generally recommended to achieve sufficient statistical power to detect smaller effects and make sure that results were not driven by single experiments (Eickhoff et al. 2016; Muller et al. 2018). However, 2 of the 10 subsets of meta-analysis were performed despite there being relatively few numbers of studies (i.e., n = 13 in Table 9; n = 9 in Table 10), and thus their outcomes would presumably only reveal the larger effect sizes and merit future study. For visualization purposes, resulting maps were initially projected onto the N27 atlas brain using AFNI software (Cox 1996) to assess and interpret results, and onto the population-averaged, landmark-, and surface-based (PALS) atlas cortical surface models (in AFNI-Talairach space) using Caret software (http://brainmap.wustl.edu) for illustration of the main findings (Van Essen et al. 2001; Van Essen 2005).

Table 9.

Studies included in the Actions category for audio-visual interaction site meta-analyses

| Study # | Experiment # | # Subjects | Multiple experiments | Left hem foci | Right hem foci | Number of foci | ||||

|---|---|---|---|---|---|---|---|---|---|---|

| 13 | 19 | First author | Year | Experimental code and abbreviated task | 205 | 50 | 50 | 100 | Brief description of experimental paradigm | |

| 6 | 7 | Beauchamp | 2004a | Figure 3J and K, Table 1 first 2 foci only | 26 | 2 | 0 | 2 | See photographs of tools, animals and hear corresponding sounds versus scrambled images and synthesized rippled sounds | |

| 7 | 8 | Beauchamp | 2004b | Expt 1 coordinates | 8 | 1 | 1 | 2 | High-resolution version of 2004a study: AV tool videos versus unimodal (AV > A,V) | |

| 8 | 9 | Belardinelli | 2004 | Table 1 AV semantic congruence | 13 | 6 | 6 | 12 | Colored images of tools, animals, humans and semantically congruent versus incongruent sounds | |

| 27 | 44 | Hasegawa | 2004 | Table 1A (well-trained piano) AV induced by V-only | 26 | 12 | 6 | 18 | Piano playing: well trained pianists, mapping hand movements to sequences of sound | |

| 35 | 58 | James | 2011 | Table 1A bimodal (vs. scrambled) | 12 | 4 | 2 | 6 | Video of human manual actions (e.g., sawing): Auditory and Visual intact versus scrambled, AV event selectivity | |

| 37 | 61 | Jola | 2013 | Table 1C AVcondition dance | 12 | 3 | 3 | 6 | Viewing unfamiliar dance performance (tells a story by gesture) with versus without music: using intersubject correlation | |

| 47 | 75 | Naghavi | 2007 | Figure 1C | 23 | 0 | 3 | 3 | B/W pictures (animals, tools, instruments, vehicles) and their sounds: Cong versus Incong | |

| 57 | 91 | Plank | 2012 | pg 803 AV congruent effect | 15 | 0 | 1 | 1 | AV spatially congruent > semantically matching images of natural objects and associated sounds (right STG) | |

| 92 | Plank | 2012 | Table 2A spatially congruent-baseline | pooled | 5 | 5 | 10 | Images of natural objects and associated sounds, spatially congruent versus baseline | ||

| 61 | 100 | Scheef | 2009 | Table 1 cartoon jump + boing | 16 | 1 | 2 | 3 | Video of cartoon person jumping and “sonification” of a tone, learn correlated pairings: AV-V and AV-A conjunction | |

| 62 | 101 | Schmid | 2011 | Table 2E A effect V (Living and nonliving, pictures) | 12 | 3 | 4 | 7 | Environmental sounds and matching pictures: reduced activity by A | |

| 102 | Schmid | 2011 | Table 2F V competition effect A (reduced activity by a visual object) | pooled | 2 | 2 | 4 | Environmental sounds and matching pictures: reduced activity by V | ||

| 103 | Schmid | 2011 | Table 2G AV crossmodal interaction × auditory attention | pooled | 2 | 3 | 5 | Environmental sounds and matching pictures: cross-modal interaction and auditory attention | ||

| 64 | 106 | Sestieri | 2006 | Table 1 (Fig. 3), AV location match versus semantic | 10 | 2 | 5 | 7 | B/W images (animal, weapons) and environmental sounds: Match location > recognition | |

| 107 | Sestieri | 2006 | Table 2 AV semantic recognition versus localization | pooled | 2 | 1 | 3 | B/W pictures and environmental sounds: congruent semantic recognition > localization task | ||

| 65 | 108 | Stevenson | 2009 | Table 1B AVtools > AVspeech | 11 | 1 | 1 | 2 | Hand tools in use video: inverse effectiveness (degraded AV tool > AV speech) | |

| 80 | 133 | Werner | 2010 | Table 1 superadditive (AV-salience effect) | 21 | 0 | 3 | 3 | Categorize movies of actions with tools or musical instruments (degraded stimuli); AV interactions both tasks | |

| 134 | Werner | 2010 | Table 2 AV interactions predict behavior | pooled | 1 | 2 | 3 | Categorize movies of actions with tools or musical instruments; AV interactions predicted by behavior | ||

| 135 | Werner | 2010 | Table 3C superadditive AV due to task | pooled | 3 | 0 | 3 | Categorize movies of actions with tools or musical instruments; Subadditive AV to task |

Table 10.

Studies included in the Emotional audio-visual interaction site meta-analyses

| Study # | Experiment # | # Subjects | Multiple experiments | Left hem foci | Right hem foci | Number of foci | ||||

|---|---|---|---|---|---|---|---|---|---|---|

| 9 | 13 | First author | Year | Experimental code and abbreviated task | 160 | 24 | 29 | 53 | Brief description of experimental paradigm | |

| 23 | 36 | Ethofer | 2013 | Table 1C emotion | 23 | 1 | 2 | 3 | Audiovisual emotional face-voice integration | |

| 26 | 42 | Hagan | 2013 | Table 1 AV emotion, novel over time | 18 | 5 | 3 | 8 | Affective audio-visual speech: congruent AV emotion versus A, V; unique ROIs over time (MEG) | |

| 36 | 59 | Jessen | 2015 | Table 1A emotion > neutral AV enhanced | 17 | 1 | 1 | 2 | Emotional multisensory whole body and voice expressions: AV emotion (anger and fear) > neutral expressions | |

| 60 | Jessen | 2015 | Table 1D fear > neutral AV enhanced | pooled | 2 | 1 | 3 | Emotional multisensory whole body and voice expressions: AV fear > neutral expressions | ||

| 37 | 61 | Jola | 2013 | Table 1C AVcondition dance | 12 | 3 | 3 | 6 | Viewing unfamiliar dance performance (tells a story by gesture) with versus without music: using intersubject correlation | |

| 40 | 64 | Kreifelts | 2007 | Table 1 voice-face emotion | 24 | 1 | 2 | 3 | Facial expression and intonated spoken words, judge emotion expressed (AV > A,V; P < 0.05 only) | |

| 65 | Kreifelts | 2007 | Table 5 AV increase effective connectivity | pooled | 2 | 4 | 6 | Increased effectiveness connectivity with pSTS and thalamus during AV integration of nonverbal emotional information | ||

| 45 | 73 | Muller | 2012 | Supplementary Table 1 effective connectivity changes | 27 | 4 | 3 | 7 | Emotional facial expression (groaning, laughing) AV integration and gating of information | |

| 60 | 97 | Robins | 2008 | Table 4B emotion effects | 5 | 2 | 0 | 2 | AV faces and spoken sentences expressing fear or neutral valence: Emotional AV-fear > AV-neutral | |

| 98 | Robins | 2008 | Table 4C (Fig. 5) fearful AV integration | pooled | 1 | 5 | 6 | AV faces and spoken sentences expressing fear or neutral valence: Fearful-only AV integration | ||

| 99 | Robins | 2008 | Table 4D AV-only emotion | pooled | 1 | 3 | 4 | AV faces and spoken sentences expressing fear or neutral valence: AV-only emotion | ||

| 78 | 129 | Watson | 2014a | Table 1C AV-adaptation effect, cross-modal adaptation effect | 18 | 0 | 1 | 1 | Videos of emotional faces and voice: crossmodal adaptation effects | |

| 82 | 137 | Wolf | 2014 | Table 1 face cartoons + phonemes | 16 | 1 | 1 | 2 | Drawing of faces with emotional expressions: Supramodal effects with emotional valence |

Results

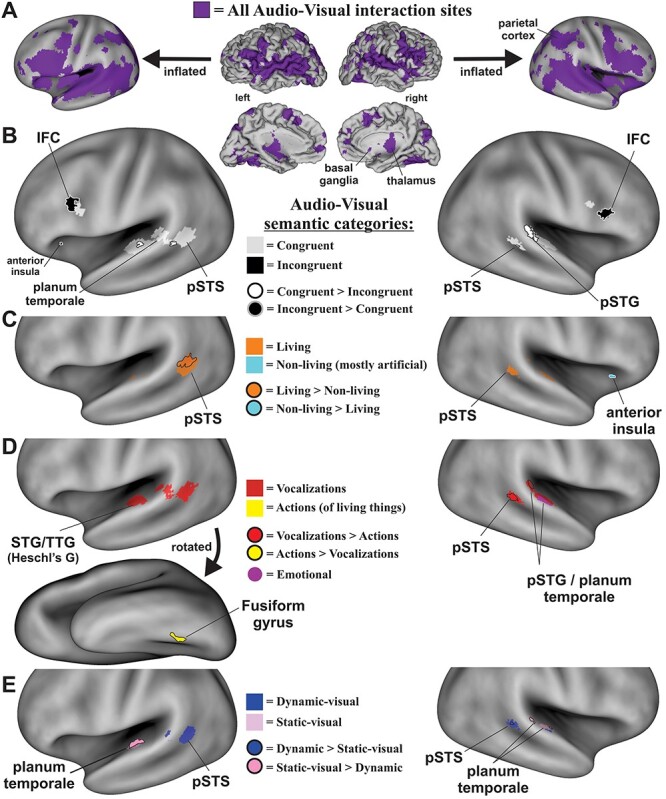

The database search for audio-visual experiments reporting interaction effects yielded 137 experimental paradigms from 82 published articles (Fig. 2; PRISMA flow-chart). Experiments revealing an effect of audio-visual stimuli (Table 1) included 1285 subjects (though see Materials and Methods) and 714 coordinate brain locations (376 left hemisphere, 338 right). ALE meta-analysis of all these reported foci (congruent plus incongruent audio-visual interaction effects) revealed a substantial expanse of activated brain regions (Fig. 3A, purple hues; projected onto both fiducial and inflated brain model images). Note that this unthresholded map revealed foci reported as demonstrating audio-visual interactions that were found to be significant in at least one of the original studies, thereby illustrating the substantial global expanse of reported brain territories involved in audio-visual interaction processing in general. This included subcortical in addition to cortical regions, such as the thalamus and basal ganglia (Fig. 3A insets), and cerebellum (not illustrated). However, subcortical regions are only approximately illustrated here since they did not survive threshold criteria imposed in the below single study and contrast ALE brain maps. Each study contained one or multiple experimental paradigms. For each experimental paradigm, several neurobiological subcategories of audio, visual, and/or audio-visual stimuli were identified. The subcategories are coded in Table 1 (far right columns) as either being excluded (0), included (1), included as a contrast condition (2), or deemed as uncertain for inclusion (blank cells) for use in different meta-analyses. Volumes resulting from the meta-analyses (depicted in Fig. 3) are available in Supplementary Material.

Figure 3.

ALE maps of audio-visual interaction sites. (A) Cortical maps derived from all studies (Table 1; purple hues, unthresholded) to illustrate global expanses of cortices involved. Data were projected onto the fiducial (lateral and medial views) and inflated (lateral views only) PALS atlas model of cortex. (B) Illustration of maps derived from single study congruent paradigms (white hues) plus superimposed maps of single study incongruent audio-visual paradigms (black). Outlined foci depict ROIs surviving after direct contrasts (e.g., congruent > incongruent). (C) ALE map revealing single study living (orange) contrasted with single study nonliving (cyan) categories of audio-visual paradigms and outlined foci that survived after direct contrasts. (D) ALE maps revealing audio-visual interactions involving single study vocalizations (red) versus single study action (mostly nonvocal) living source sounds (yellow), and outlined foci that survived after direct contrasts. A single study ALE map for paradigms using emotionally valent audio-visual stimuli, predominantly involving human vocalizations, is also illustrated (violet). (E) ALE maps showing ROIs preferentially recruited using single study dynamic-visual (blue hues) relative to single study static-visual (pink hues) audio-visual interaction foci, and outlined foci that survived after direct contrasts. All single study ALE maps were at corrected FWE P < 0.05, and subsequently derived contrast maps were at uncorrected P < 0.05. IFC = inferior frontal cortex, pSTS = posterior superior temporal sulcus, TTG = transverse temporal gyrus. Refer to text for other details.

Congruent versus Incongruent Audio-Visual Stimuli

The first set of meta-analyses examined reported activation foci specific to when audio-visual stimuli were perceived as congruent spatially, temporally, and/or semantically (Table 2; 79 studies, 117 experimental paradigms, 1235 subjects, 608 reported foci—see Table captions) versus those regions more strongly activated when the stimuli were perceived as incongruent (Table 3). Brain maps revealing activation when processing only congruent audio-visual pairings (congruent single study; corrected FWE P < 0.05) revealed several regions of interest (ROIs) (Fig. 3B, white hues; Table 4A coordinates), including the bilateral posterior superior temporal sulci (pSTS) that extended into the bilateral planum temporale and transverse temporal gyri (left > right), and the bilateral inferior frontal cortices (IFC). Brain maps revealing activation when processing incongruent audio-visual pairings (Fig. 3B, black hues; incongruent single study; corrected FWE P < 0.05; Table 4B) revealed bilateral IFC foci that were located immediately anterior to the IFC foci for congruent stimuli, plus a small left anterior insula focus.

Table 2.

Studies included in the Congruent category for audio-visual interaction site meta-analyses

| Study # | Experiment # | # Subjects | Multiple experiments | Left hem foci | Right hem foci | Number of foci | ||||

|---|---|---|---|---|---|---|---|---|---|---|

| 79 | 117 | First author | Year | Experimental code and abbreviated task | 1235 | 320 | 288 | 608 | Brief description of experimental paradigm | |

| 1 | 1 | Adams | 2002 | Expt 1 Table 3 A + V (aud coords only) | 12 | 5 | 1 | 6 | A and V commonly showing subordinate > basic object name verification (words with pictures or environmental sounds) | |

| 2 | 2 | Alink | 2008 | Table 1c spheres move to drum sounds | 10 | 4 | 6 | 10 | Visual spheres and drum sounds moving: crossmodal dynamic capture versus conflicting motion | |

| 3 | 3 | Balk | 2010 | Figure 2 asynchronous versus simultaneous | 14 | 2 | 1 | 3 | Natural asynchronous versus simultaneous AV speech synchrony (included both contrasts as interaction effects) | |

| 4 | 4 | Baumann | 2007 | Table 1B coherent V + A versus A | 12 | 2 | 1 | 3 | Visual dots 16% coherent motion and in-phase acoustic noise > stationary acoustic sound | |

| 5 | Baumann | 2007 | Table 2B | pooled | 15 | 12 | 27 | Moving acoustic noise and visual dots 16% in-phase coherent > random dot motion | ||

| 5 | 6 | Baumgaertner | 2007 | Table 3 Action > nonact sentence+video | 19 | 3 | 0 | 3 | Conjunction spoken sentences (actions > nonactions) AND videos (actions > nonactions) | |

| 6 | 7 | Beauchamp | 2004a | Figure 3J and K, Table 1 first 2 foci only | 26 | 2 | 0 | 2 | See photographs of tools, animals and hear corresponding sounds versus scrambled images and synthesized rippled sounds | |

| 7 | 8 | Beauchamp | 2004b | Expt 1 coordinates | 8 | 1 | 1 | 2 | High resolution version of 2004a study: AV tool videos versus unimodal (AV > A,V) | |

| 8 | 9 | Belardinelli | 2004 | Table 1 AV semantic congruence | 13 | 6 | 6 | 12 | Colored images of tools, animals, humans and semantically congruent versus incongruent sounds | |

| 9 | 11 | Biau | 2016 | Table 1A Interaction; speech synchronous | 17 | 8 | 0 | 8 | Hand gesture beats versus cartoon disk and speech interaction: synchronous versus asynchronous | |

| 10 | 12 | Bischoff | 2007 | Table 2A only P < 0.05 included | 19 | 2 | 1 | 3 | Ventriloquism effect: gray disks and tones, synchronous (P < 0.05 corrected) | |

| 11 | 13 | Blank | 2013 | Figure 2 | 19 | 1 | 0 | 1 | Visual-speech recognition correlated with recognition performance | |

| 12 | 14 | Bonath | 2013 | pg 116 congruent thalamus | 18 | 1 | 0 | 1 | Small checkerboards and tones: spatially congruent versus incongruent (thalamus) | |

| 13 | 16 | Bonath | 2014 | Table 1A illusory versus not | 20 | 1 | 5 | 6 | Small checkerboards and tones: temporal > spatial congruence | |

| 17 | Bonath | 2014 | Table 1B synchronous > no illusion | pooled | 3 | 0 | 3 | Small checkerboards and tones: spatial > temporal congruence | ||

| 14 | 18 | Bushara | 2001 | Table 1A (Fig. 2) AV-Control | 12 | 1 | 3 | 4 | Tones (100 ms) and colored circles synchrony: detect Auditory then Visual presentation versus Control | |

| 19 | Bushara | 2001 | Table 1B (VA-C) five coords | pooled | 2 | 3 | 5 | Tones (100 ms) and colored circles synchrony: detect Visual then Auditory presentation versus Control | ||

| 20 | Bushara | 2001 | Table 2A interact w/Rt Insula | pooled | 2 | 4 | 6 | Tones and colored circles: correlated functional connections with (and including) the right insula | ||

| 15 | 21 | Bushara | 2003 | Table 2A collide > pass, strong A-V interact | 7 | 5 | 3 | 8 | Tone and two visual bars moving: Tone synchrony induce perception they collide (AV interaction) versus pass by | |

| 16 | 22 | Callan | 2014 | Table 5 AV-Audio (AV10-A10)-(AV6-A6) | 16 | 4 | 4 | 8 | Multisensory enhancement to visual speech in noise correlated with behavioral results | |

| 23 | Callan | 2014 | Table 6 AV—Visual only | pooled | 1 | 1 | 2 | Multisensory enhancement to visual speech audio-visual versus visual only | ||

| 17 | 24 | Calvert | 1999 | Table 1 (Fig. 1) | 5 | 3 | 4 | 7 | View image of lower face and hear numbers 1 through 10 versus unimodal conditions (AV > Photos, Auditory) | |

| 18 | 25 | Calvert | 2000 | Figure 2 superadd+subadd AVspeech | 10 | 1 | 0 | 1 | Speech and lower face: supra-additive plus subadditive effects (AV-congruent > A,V > AV-incongruent) | |

| 26 | Calvert | 2000 | Table 1 supradd AVspeech | pooled | 4 | 5 | 9 | Speech and lower face: supra-additive AV enhancement | ||

| 19 | 28 | Calvert | 2001 | Table 2 superadditive and resp depression | 10 | 4 | 11 | 15 | B/W visual checkerboard reversing and white noise bursts: Synchronous versus not; supradditive and response depression | |

| 29 | Calvert | 2001 | Table 3A superadditive only | pooled | 6 | 4 | 10 | B/W visual checkerboard reversing and white noise bursts: Synchronous versus not; supradditive only | ||

| 30 | Calvert | 2001 | Table 3B response depression only | pooled | 3 | 4 | 7 | B/W visual checkerboard reversing and white noise bursts: Synchronous versus not; response depression only | ||

| 20 | 31 | Calvert | 2003 | Table 2A (Fig. 3 blue) | 8 | 13 | 8 | 21 | Speech and lower face: Moving dynamic speech (phonemes) versus stilled speech frames | |

| 21 | 32 | DeHaas | 2013 | Table 1A AVcong—Visual | 15 | 3 | 3 | 6 | Video clips of natural scenes (animals, humans): AV congruent versus Visual | |

| 22 | 34 | Erickson | 2014 | Table 1A Congruent AV speech | 10 | 2 | 2 | 4 | McGurk effect (phonemes): congruent AV speech: AV > A and AV > V | |

| 35 | Erickson | 2014 | Table 1B McGurk speech | pooled | 2 | 0 | 2 | McGurk speech effect (phonemes) | ||

| 23 | 36 | Ethofer | 2013 | Table 1C emotion | 23 | 1 | 2 | 3 | Audiovisual emotional face-voice integration | |

| 24 | 37 | Gonzalo | 2000 | Table 1 AV > AVincon music and Chinese ideograms | 14 | 1 | 1 | 2 | Learn novel Kanji characters and musical chords, activity increases over time for consistent AV pairings | |

| 39 | Gonzalo | 2000 | Table 3 AV consistent versus Aud | pooled | 1 | 1 | 2 | Learn novel Kanji characters and musical chords, learn consistent (vs inconsistent) pairings versus auditory only | ||

| 25 | 41 | Green | 2009 | Table 4A Congruent gesture-speech > gesture or speech | 16 | pooled | 1 | 0 | 1 | Congruent gesture-speech versus gesture with unfamiliar speech and with familiar speech |

| 26 | 42 | Hagan | 2013 | Table 1 AV emotion, novel over time | 18 | 5 | 3 | 8 | Affective audio-visual speech: congruent AV emotion versus A, V; unique ROIs over time (MEG) | |

| 27 | 44 | Hasegawa | 2004 | Table 1A (well trained piano) AV induced by V-only | 26 | 12 | 6 | 18 | Piano playing: well trained pianists, mapping hand movements to sequences of sound | |

| 28 | 45 | Hashimoto | 2004 | Table 1G (Fig 4B, red) Learning Hangul letters to sounds | 12 | 2 | 1 | 3 | Unfamiliar Hangul letters and nonsense words, learn speech versus tone/noise pairings | |

| 29 | 46 | He | 2015 | Table 3C AV speech foreign (left MTG focus) | 20 | 1 | 0 | 1 | Intrinsically meaningful gestures with German speech: Gesture-German > Gesture-Russian, German speech only | |

| 30 | 47 | He | 2018 | Table 2, GSI, left MTG, gestures and speech integration | 20 | 1 | 0 | 1 | Gesture-speech integration: Bimodal speech-gesture versus unimodal gesture with foreign speech and versus unimodal speech | |

| 31 | 49 | Hein | 2007 | Figure 2B AV-artificial/nonliving | 18 | 0 | 1 | 1 | B/W images of artificial objects (“fribbles”) and animal vocalizations versus unimodal A, V | |

| 50 | Hein | 2007 | Figure 2C pSTS, pSTG, mSTG AV-cong | pooled | 0 | 3 | 3 | Familiar animal images and correct vocalizations (dog: woof-woof) | ||

| 52 | Hein | 2007 | Figure 3B Foci 2, 3, 4 (blue) artificial/nonliving | pooled | 3 | 0 | 3 | Visual “Fribbles” and backward/underwater distorted animal sounds, learn pairings (blue foci 2,3,4) | ||

| 53 | Hein | 2007 | Figure 3C congruent living (green) | pooled | 3 | 0 | 3 | Familiar congruent living versus artificial AV object features and animal sounds (green foci 7, 8, 10) | ||

| 32 | 54 | Hocking | 2008 | pg 2444 verbal | 18 | 2 | 0 | 2 | (pSTS mask) Color photos, written names, auditory names, environmental sounds conceptually matched “amodal” | |

| 33 | 56 | Hove | 2013 | pg 316 AV interaction putamen | 14 | 0 | 1 | 1 | Interaction between (beep > flash) versus (siren > moving bar); left putamen focus | |

| 34 | 57 | James | 2003 | Figure 2 | 12 | 0 | 1 | 1 | Activation by visual objects (“Greebles”) associated with auditory features (e.g., buzzes, screeches); (STG) | |

| 35 | 58 | James | 2011 | Table 1A bimodal (vs. scrambled) | 12 | 4 | 2 | 6 | Video of human manual actions (e.g., sawing): Auditory and Visual intact versus scrambled, AV event selectivity | |

| 36 | 59 | Jessen | 2015 | Table 1A emotion > neutral AV enhanced | 17 | 1 | 1 | 2 | Emotional multisensory whole body and voice expressions: AV emotion (anger and fear) > neutral expressions | |

| 60 | Jessen | 2015 | Table 1D fear > neutral AV enhanced | pooled | 2 | 1 | 3 | Emotional multisensory whole body and voice expressions: AV fear > neutral expressions | ||

| 37 | 61 | Jola | 2013 | Table 1C AVcondition dance | 12 | 3 | 3 | 6 | Viewing unfamiliar dance performance (tells a story by gesture) with versus without music: using intersubject correlation | |

| 38 | 62 | Kim | 2015 | Table 2A AV > C speech semantic match | 15 | 2 | 0 | 2 | Moving audio-visual speech perception versus white noise and unopened mouth movements | |

| 39 | 63 | Kircher | 2009 | Figure 3B: gesture related activation increase | 14 | 3 | 1 | 4 | Bimodal gesture-speech versus gesture and versus speech | |

| 40 | 64 | Kreifelts | 2007 | Table 1 voice-face emotion | 24 | 1 | 2 | 3 | Facial expression and intonated spoken words, judge emotion expressed (AV > A,V; P < 0.05 only) | |

| 65 | Kreifelts | 2007 | Table 5 AV increase effective connectivity | pooled | 2 | 4 | 6 | Increased effectiveness connectivity with pSTS and thalamus during AV integration of nonverbal emotional information | ||

| 41 | 66 | Lewis | 2000 | Table 1 | 7 | 2 | 3 | 5 | Compare speed of tone sweeps to visual dot coherent motion: Bimodal versus unimodal | |

| 42 | 67 | Matchin | 2014 | Table 1 AV > Aud only (McGurk) | 20 | 2 | 7 | 9 | McGurk audio-visual speech: AV > A only | |

| 68 | Matchin | 2014 | Table 2 AV > Video only | pooled | 9 | 6 | 15 | McGurk audio-visual speech: AV > V only | ||

| 43 | 70 | McNamara | 2008 | Table (BA44 and IPL) | 12 | 2 | 2 | 4 | Videos of meaningless hand gestures and synthetic tone sounds: Increases in functional connectivity with learning | |

| 44 | 71 | Meyer | 2007 | Table 3 paired A + V versus null | 16 | 3 | 3 | 6 | Paired screen red flashes with phone ring: paired V (conditioned stimulus) and A (unconditioned) versus null events | |

| 72 | Meyer | 2007 | Table 4 CS+, learned AV association with V-only | pooled | 4 | 6 | 10 | Paired screen flashes with phone ring: View flashes after postconditioned versus null events | ||

| 45 | 73 | Muller | 2012 | Supplementary Table 1 effective connectivity changes | 27 | 4 | 3 | 7 | Emotional facial expression (groaning, laughing) AV integration and gating of information | |

| 47 | 75 | Naghavi | 2007 | Figure 1C | 23 | 0 | 3 | 3 | B/W pictures (animals, tools, instruments, vehicles) and their sounds: congruent versus incongruent | |

| 48 | 77 | Naghavi | 2011 | Figure 2B congruent > incongruent | 30 | 0 | 1 | 1 | B/W drawings of objects (living and non) and natural sounds (barking, piano): congruent > incongruent encoding | |

| 49 | 79 | Nath | 2012 | pg 784 | 14 | 1 | 0 | 1 | McGurk effect (phonemes): congruent AV speech correlated with behavioral percept | |

| 50 | 80 | Naumer | 2008 | Figure 2 Table 1A max contrast | 18 | 8 | 6 | 14 | Images of “Fribbles” and learned artificial sounds (underwater animal vocals): posttraining versus max contrast | |

| 81 | Naumer | 2008 | Figure 3 Table 1B pre–post | pooled | 5 | 6 | 11 | Images of “Fribbles” and learned corresponding artificial sounds: Post- versus pretraining session | ||

| 51 | 83 | Naumer | 2011 | Figure 3C | 10 | 1 | 0 | 1 | Photographs of objects (living and non) and related natural sounds | |

| 52 | 85 | Noppeny | 2008 | Table 3 AV congruent sounds/words | 17 | 4 | 0 | 4 | Speech sound recognition through AV priming, environmental sounds and spoken words: Congruent > incongruent | |

| 53 | 86 | Ogawa | 2013a | Table 1 (pg 162 data) | 13 | 1 | 0 | 1 | AV congruency of pure tone and white dots moving on screen (area left V3A) | |

| 54 | 87 | Ogawa | 2013b | Table 1 3D > 2D and surround > monaural effects | 16 | 3 | 4 | 7 | Cinematic 3D > 2D video and surround sound > monaural while watching a movie (“The Three Musketeers”) | |

| 55 | 88 | Okada | 2013 | Table 1 AV > A | 20 | 5 | 4 | 9 | Video of AV > A speech only | |

| 56 | 89 | Olson | 2002 | Table 1A synchronized AV > static Vis-only | 10 | 7 | 4 | 11 | Whole face video and heard words: Synchronized AV versus static V | |

| 90 | Olson | 2002 | Table 1C synchronized AV > desynchronized AV speech | pooled | 2 | 0 | 2 | Whole face video and heard words: Synchronized versus desynchronized | ||

| 57 | 91 | Plank | 2012 | pg 803 AV congruent effect | 15 | 0 | 1 | 1 | AV spatially congruent > semantically matching images of natural objects and associated sounds (right STG) | |

| 92 | Plank | 2012 | Table 2A spatially congruent-baseline | pooled | 5 | 5 | 10 | Images of natural objects and associated sounds, spatially congruent versus baseline | ||

| 58 | 93 | Raij | 2000 | Table 1B letters and speech sounds | 9 | 2 | 3 | 5 | Integration of visual letters and corresponding auditory phonetic expressions (MEG study) AV versus (A + V) | |

| 59 | 94 | Regenbogen | 2017 | Table 2A degraded > clear Multisensory versus unimodal input | 29 | 5 | 6 | 11 | Degraded > clear AV versus both visual and auditory unimodal visual real-world object-in-action recognition | |

| 60 | 95 | Robins | 2008 | Table 2 (Fig. 2) AV integration (AV > A and AV > V) | 10 | 2 | 1 | 3 | Face speaking sentences: angry, fearful, happy, neutral (AV > A,V) | |

| 96 | Robins | 2008 | Table 4A (Fig. 5) AV integration and emotion | 5 | 1 | 4 | 5 | AV faces and spoken sentences expressing fear or neutral valence: AV integration (AV > A,V conditions) | ||

| 97 | Robins | 2008 | Table 4B emotion effects | pooled | 2 | 0 | 2 | AV faces and spoken sentences expressing fear or neutral valence: Emotional AV-fear > AV-neutral | ||

| 98 | Robins | 2008 | Table 4C (Fig. 5) fearful AV integration | pooled | 1 | 5 | 6 | AV faces and spoken sentences expressing fear or neutral valence: Fearful-only AV integration | ||

| 99 | Robins | 2008 | Table 4D AV-only emotion | pooled | 1 | 3 | 4 | AV faces and spoken sentences expressing fear or neutral valence: AV-only emotion | ||

| 61 | 100 | Scheef | 2009 | Table 1 cartoon jump + boing | 16 | 1 | 2 | 3 | Video of cartoon person jumping and “sonification” of a tone, learn correlated pairings: AV-V and AV-A conjunction | |

| 62 | 101 | Schmid | 2011 | Table 2E A effect V (Living and nonliving, pictures) | 12 | 3 | 4 | 7 | Environmental sounds and matching pictures: reduced activity by A | |

| 102 | Schmid | 2011 | Table 2F V competition effect A (reduced activity by a visual object) | pooled | 2 | 2 | 4 | Environmental sounds and matching pictures: reduced activity by V | ||

| 103 | Schmid | 2011 | Table 2G AV crossmodal interaction x auditory attention | pooled | 2 | 3 | 5 | Environmental sounds and matching pictures: cross-modal interaction and auditory attention | ||

| 63 | 104 | Sekiyama | 2003 | Table 3 (fMRI nAV-AV) | 8 | 1 | 0 | 1 | AV speech, McGurk effect with phonemes (ba, da, ga) and noise modulation: noise-AV > AV (fMRI) | |

| 105 | Sekiyama | 2003 | Table 4 (PET nAV-AV) | pooled | 1 | 3 | 4 | AV speech, McGurk effect with phonemes (ba, da, ga) and noise modulation: noise-AV > AV (PET) | ||

| 64 | 106 | Sestieri | 2006 | Table 1 (Fig. 3), AV location match versus semantic | 10 | 2 | 5 | 7 | B/W images (animal, weapons) and environmental sounds: Match location > recognition | |

| 107 | Sestieri | 2006 | Table 2 AV semantic recognition versus localization | pooled | 2 | 1 | 3 | B/W pictures and environmental sounds: congruent semantic recognition > localization task | ||

| 65 | 108 | Stevenson | 2009 | Table 1B 2 AVtools > AVspeech | 11 | 1 | 1 | 2 | Hand tools in use video: inverse effectiveness (degraded AV tool > AV speech) | |

| 109 | Stevenson | 2009 | Table 1C (Fig. 8) AVspeech > AVtools | pooled | 1 | 1 | 2 | Face and speech video: inverse effectiveness (degraded AV speech > AV tool use) | ||

| 66 | 110 | Straube | 2011 | Table 3A and B iconic/metaphoric speech-gestures versus speech, gesture | 16 | 2 | 2 | 4 | Integration of Iconic and Metaphoric speech-gestures versus speech and gesture | |

| 67 | 111 | Straube | 2014 | p939 Integration foci | 16 | 3 | 0 | 3 | Integration of iconic hand gesture-speech > unimodal speech and unimodal gesture (healthy control group) | |

| 69 | 113 | Tanabe | 2005 | Table 1A AV; A then V; not VA | 15 | 10 | 10 | 20 | Amorphous texture patterns and modulated white noises: Activation during learning delay period (AV) | |

| 114 | Tanabe | 2005 | Table 2A+2B (Fig. 5a) AV and VA | pooled | 5 | 6 | 11 | Amorphous texture patterns and modulated white noises: changes after feedback learning (AV and VA) | ||

| 115 | Tanabe | 2005 | Table 3A+3B (Fig. 6) AV and VA; delay period | pooled | 9 | 1 | 10 | Amorphous texture patterns and modulated white noises: sustained activity throughout learning (AV and VA) | ||

| 70 | 117 | Taylor | 2006 | Figure 1A and B, Figure 1C and D (living > nonliving) | 15 | 2 | 0 | 2 | Color photos (V), environmental sounds and spoken words (A): Congruent AV versus Incongruent (living objects) | |

| 71 | 118 | Van Atteveldt | 2004 | Table 1a letters and speech sounds | 16 | 3 | 1 | 4 | Familiar letters and their speech sounds: Congruent versus not and Bimodal versus Unimodal | |

| 72 | 119 | Van Atteveldt | 2007 | Table 2A+B (Fig. 2) | 12 | 3 | 2 | 5 | Single letters and their speech sounds (phonemes): Congruent > Incong; Passive perception, blocked and event-related design | |

| 120 | Van Atteveldt | 2007 | Table 3 (Fig. 2) passive | pooled | 1 | 1 | 2 | Single letters and their speech sounds (phonemes): Congruent > Incong, active perception task | ||